Factors Influencing Generative AI Usage Intention in China: Extending the Acceptance–Avoidance Framework with Perceived AI Literacy

Abstract

1. Introduction

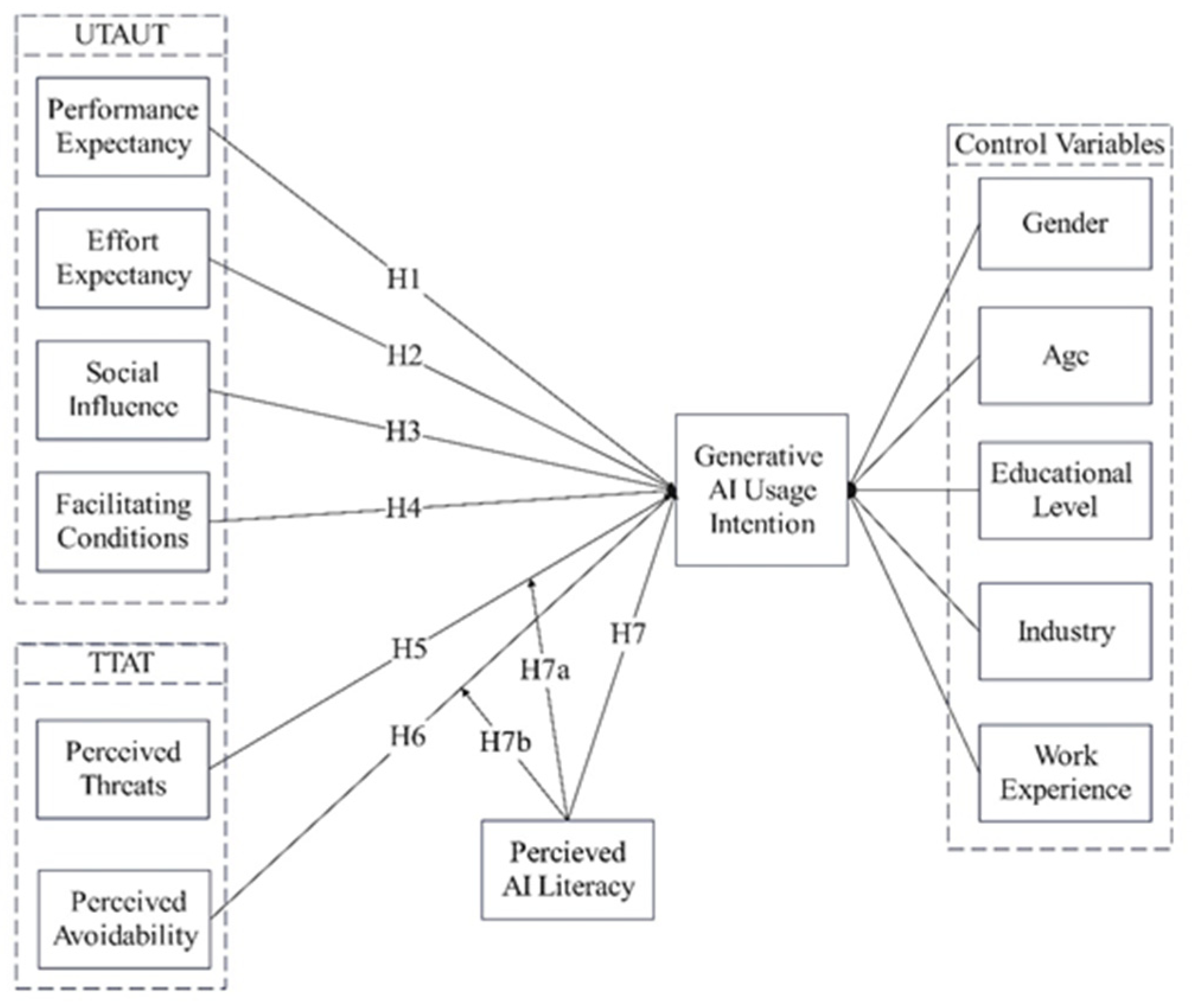

2. Theoretical Foundations and Hypothesis Development

2.1. UTAUT, TTAT, Perceived AI Literacy, and Generative AI Usage Intention

2.2. UTAUT and Generative AI Usage Intention

2.3. TTAT and Generative AI Usage Intention

2.4. Perceived AI Literacy and Generative AI Usage Intention

3. Methodology

3.1. Instrument Development

3.2. Data Collection

3.3. Data Analysis

4. Results

4.1. Respondent Profile

4.2. Non-Response Bias and Common-Method Bias

4.3. Measurement Model Evaluation

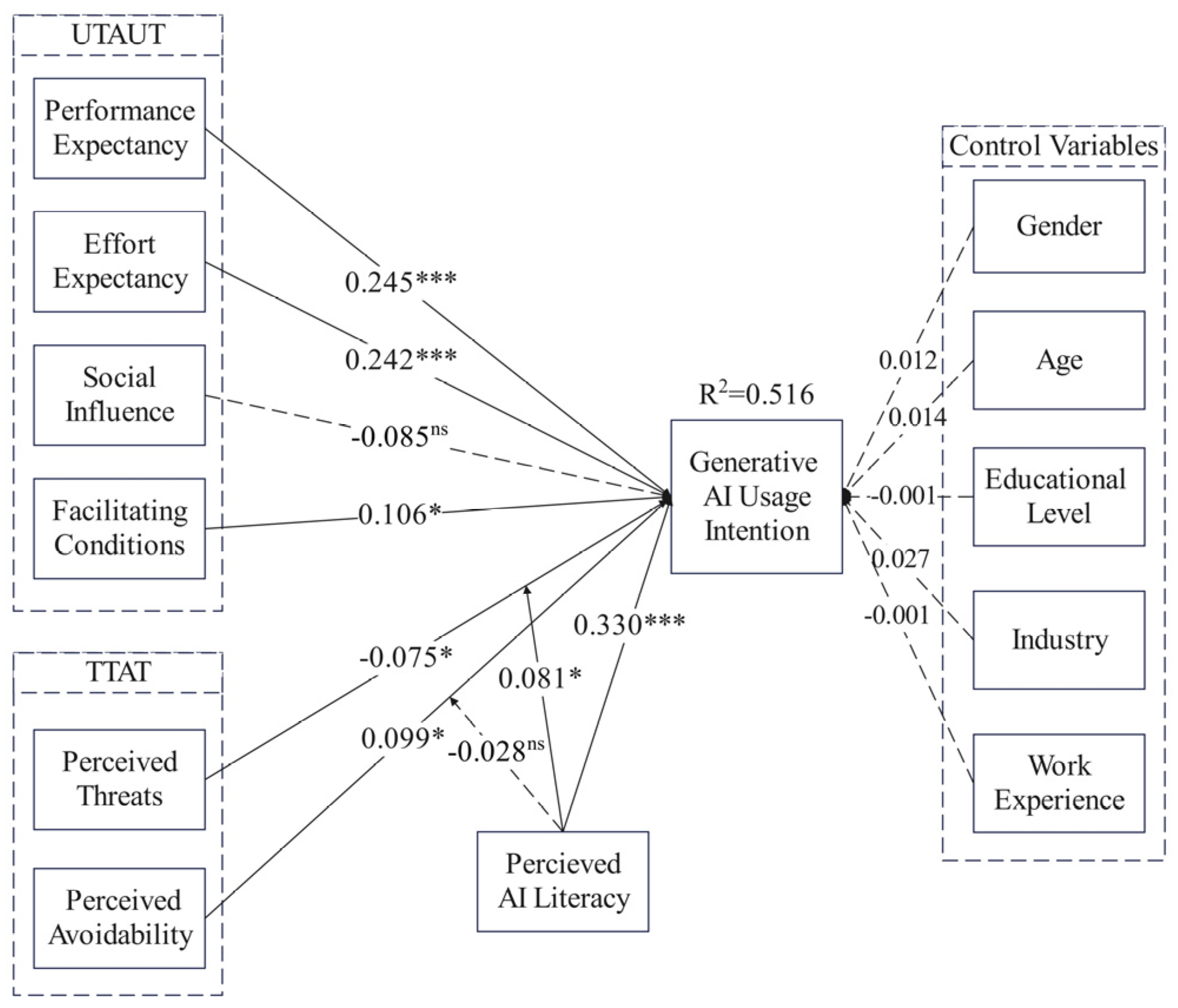

4.4. Structural Model Analysis

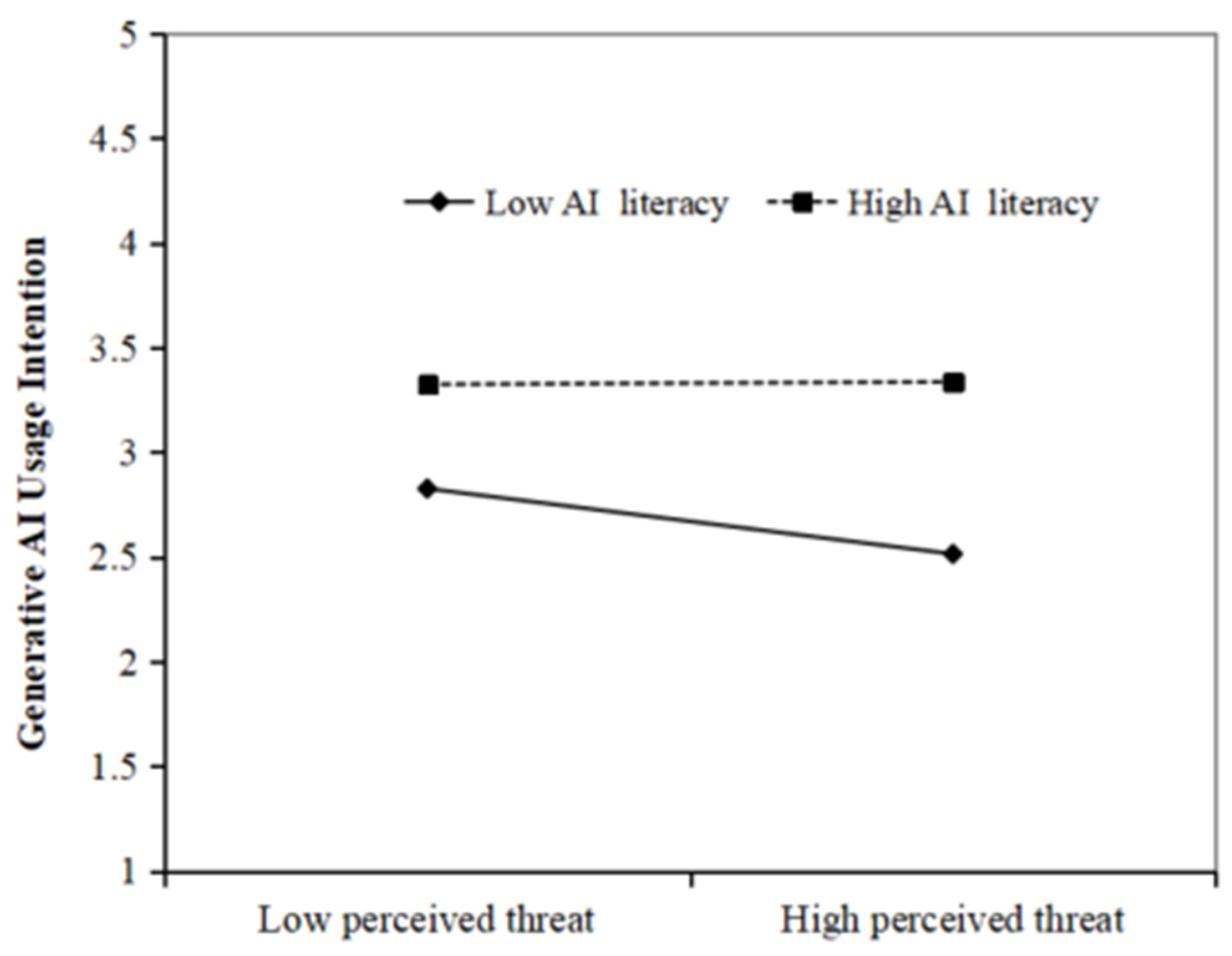

4.5. Moderation Test

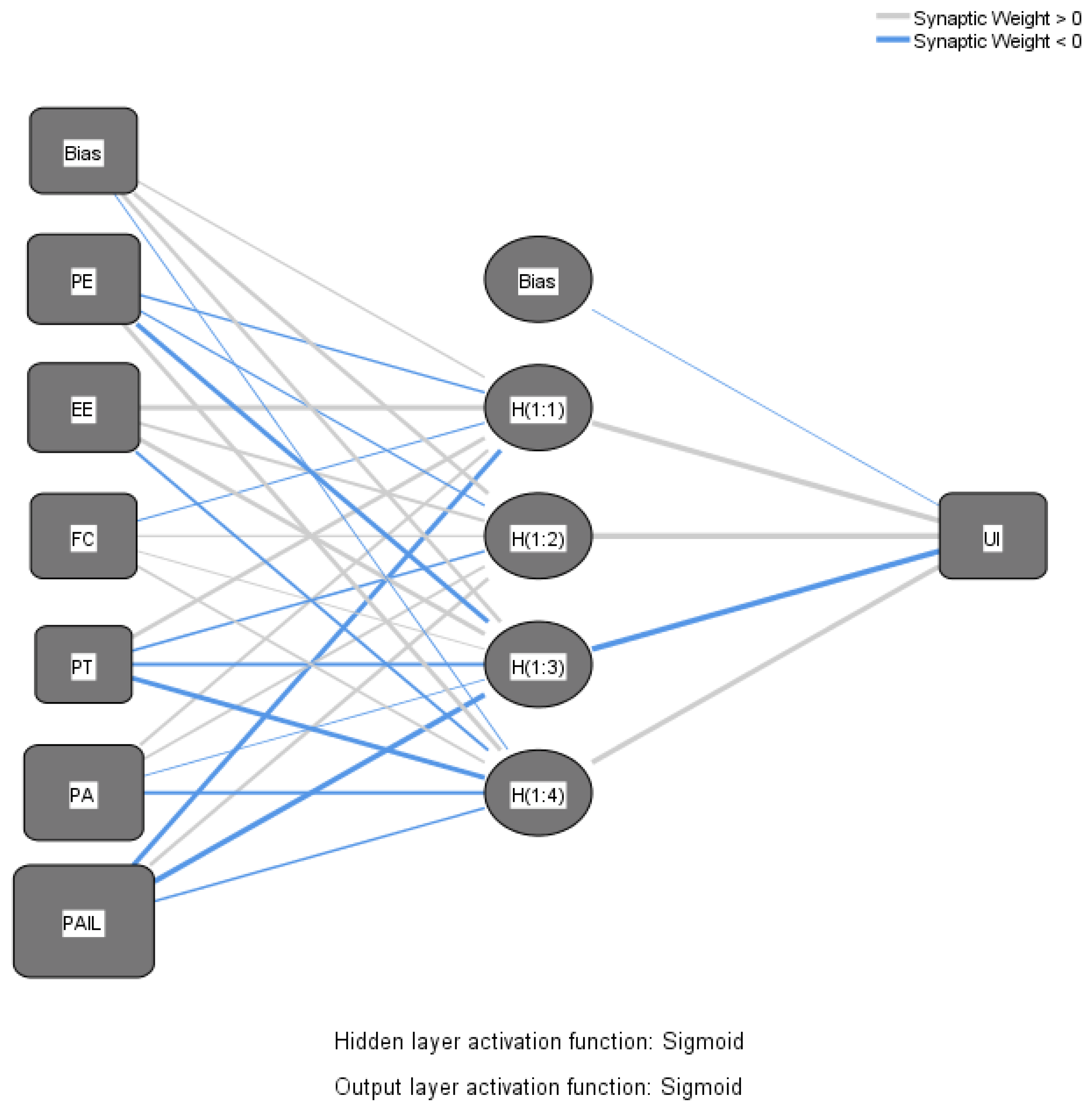

4.6. ANN Analysis

4.7. Discussion of the Results

5. Implications

5.1. Theoretical Implications

5.2. Managerial Implications

6. Limitations and Future Research

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Constructs | Items | Sources |
|---|---|---|
| Performance Expectancy (PE) | PE1: I find Generative AI useful in my daily life. | Venkatesh et al. [64] and Cao et al. [65] |
| | PE2: Using Generative AI increases my chances of achieving tasks that are important to me. | |
| | PE3: Using Generative AI helps me accomplish tasks more quickly. | |
| | PE4: Using Generative AI increases my productivity. | |
| Effort Expectancy (EE) | EE1: Learning how to use Generative AI is easy for me. | Venkatesh et al. [64] and Cao et al. [65] |
| | EE2: My interaction with Generative AI is clear and understandable. | |
| | EE3: I find Generative AI easy to use. | |
| | EE4: It is easy for me to become skillful at using Generative AI. | |
| Social Influence (SI) | SI1: People who are important to me think I should use Generative AI. | Venkatesh et al. [64] and Cao et al. [65] |
| | SI2: People who influence my behavior think I should use Generative AI. | |
| | SI3: People whose opinions I value prefer that I use Generative AI. | |
| Facilitating Conditions (FC) | FC1: I have the resources necessary to use Generative AI. | Venkatesh et al. [64] and Cao et al. [65] |
| | FC2: I have the knowledge necessary to understand Generative AI. | |
| | FC3: Generative AI is compatible with other technologies I use. | |
| | FC4: I can get help from others when I have difficulties using Generative AI. | |
| Perceived Threats (PT) | PT1: My fear of exposure to Generative AI’s risks is high. | Baabdullah et al. [56] and Liang and Xue [32] |
| | PT2: The extent of my anxiety about potential loss due to Generative AI’s risks is high. | |
| | PT3: The extent of my worry about Generative AI’s risks due to misuse is high. | |
| Perceived Avoidability (PA) | PA1: Taking everything into consideration (effectiveness of countermeasures, costs, and my confidence in employing countermeasures), the threat of Generative AI could be prevented. | Liang and Xue [32] |
| | PA2: Taking everything into consideration (effectiveness of countermeasures, costs, and my confidence in employing countermeasures), I could protect myself from the threat of Generative AI. | |
| | PA3: Taking everything into consideration (effectiveness of countermeasures, costs, and my confidence in employing countermeasures), the threat of Generative AI was avoidable. | |
| Perceived Artificial Intelligence Literacy (PAIL) | PAIL1: I understand the basic concepts of Generative artificial intelligence. | Grassini [67] |
| | PAIL2: I believe I can contribute to Generative AI projects. (dropped) | |
| | PAIL3: I can judge the pros and cons of Generative AI. | |
| | PAIL4: I keep up with the latest Generative AI trends. | |
| | PAIL5: I’m comfortable talking about Generative AI with others. | |
| | PAIL6: I can think of new ways to use existing Generative AI tools. | |
| Generative AI Usage Intention (UI) | UI1: I intend to use Generative AI in the future. | Venkatesh et al. [64] and Meng et al. [68] |
| | UI2: I plan to use Generative AI in future. | |
| | UI3: I predict I will use Generative AI in the future. | |

| Construct | Indicators | Substantive Factor Loading (Ra) | Ra² | Method Factor Loading (Rb) | Rb² |
|---|---|---|---|---|---|
| PE | PE→PE1 | 0.757 | 0.573 | 0.040 | 0.002 |
| | PE→PE2 | 0.704 | 0.496 | 0.113 | 0.013 |
| | PE→PE3 | 0.731 | 0.534 | −0.033 | 0.001 |
| | PE→PE4 | 0.846 | 0.716 | −0.131 | 0.017 |
| EE | EE→EE1 | 0.805 | 0.648 | −0.076 | 0.006 |
| | EE→EE2 | 0.817 | 0.667 | −0.095 | 0.009 |
| | EE→EE3 | 0.802 | 0.643 | 0.009 | 0.000 |
| | EE→EE4 | 0.661 | 0.437 | 0.162 | 0.026 |
| SI | SI→SI1 | 0.850 | 0.723 | 0.026 | 0.001 |
| | SI→SI2 | 0.847 | 0.717 | 0.004 | 0.000 |
| | SI→SI3 | 0.898 | 0.806 | −0.030 | 0.001 |
| FC | FC→FC1 | 0.714 | 0.510 | 0.037 | 0.001 |
| | FC→FC2 | 0.755 | 0.570 | −0.040 | 0.002 |
| | FC→FC3 | 0.750 | 0.563 | −0.016 | 0.000 |
| | FC→FC4 | 0.797 | 0.635 | 0.016 | 0.000 |
| PT | PT→PT1 | 0.820 | 0.672 | −0.013 | 0.000 |
| | PT→PT2 | 0.830 | 0.689 | 0.000 | 0.000 |
| | PT→PT3 | 0.799 | 0.638 | 0.012 | 0.000 |
| PA | PA→PA1 | 0.815 | 0.664 | 0.014 | 0.000 |
| | PA→PA2 | 0.760 | 0.578 | 0.061 | 0.004 |
| | PA→PA3 | 0.909 | 0.826 | −0.071 | 0.005 |
| PAIL | PAIL→PAIL1 | 0.853 | 0.728 | 0.027 | 0.001 |
| | PAIL→PAIL3 | 0.740 | 0.548 | 0.109 | 0.012 |
| | PAIL→PAIL4 | 0.916 | 0.839 | −0.031 | 0.001 |
| | PAIL→PAIL5 | 0.916 | 0.839 | −0.038 | 0.001 |
| | PAIL→PAIL6 | 0.775 | 0.601 | −0.071 | 0.005 |
| UI | UI→UI1 | 0.776 | 0.602 | 0.071 | 0.005 |
| | UI→UI2 | 0.774 | 0.599 | 0.074 | 0.005 |
| | UI→UI3 | 0.930 | 0.865 | −0.150 | 0.023 |
| Average | | | 0.653 | | 0.005 |
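
The Average row of the table above can be checked directly from the loadings: each Ra² (Rb²) entry is the squared substantive (method) loading, and the row reports the mean over the 29 retained indicators. The following is a minimal Python sketch that assumes only the values transcribed from the table; `LOADINGS` and `mean_explained_variance` are illustrative names, not part of the authors' analysis.

```python
# Substantive (Ra) and method (Rb) factor loadings transcribed from the table above.
LOADINGS = {
    "PE1": (0.757, 0.040), "PE2": (0.704, 0.113), "PE3": (0.731, -0.033), "PE4": (0.846, -0.131),
    "EE1": (0.805, -0.076), "EE2": (0.817, -0.095), "EE3": (0.802, 0.009), "EE4": (0.661, 0.162),
    "SI1": (0.850, 0.026), "SI2": (0.847, 0.004), "SI3": (0.898, -0.030),
    "FC1": (0.714, 0.037), "FC2": (0.755, -0.040), "FC3": (0.750, -0.016), "FC4": (0.797, 0.016),
    "PT1": (0.820, -0.013), "PT2": (0.830, 0.000), "PT3": (0.799, 0.012),
    "PA1": (0.815, 0.014), "PA2": (0.760, 0.061), "PA3": (0.909, -0.071),
    "PAIL1": (0.853, 0.027), "PAIL3": (0.740, 0.109), "PAIL4": (0.916, -0.031),
    "PAIL5": (0.916, -0.038), "PAIL6": (0.775, -0.071),
    "UI1": (0.776, 0.071), "UI2": (0.774, 0.074), "UI3": (0.930, -0.150),
}


def mean_explained_variance(loadings):
    """Return (mean Ra^2, mean Rb^2): the average share of each indicator's variance
    explained by its substantive construct and by the common method factor."""
    n = len(loadings)
    mean_ra2 = sum(ra ** 2 for ra, _ in loadings.values()) / n
    mean_rb2 = sum(rb ** 2 for _, rb in loadings.values()) / n
    return mean_ra2, mean_rb2


if __name__ == "__main__":
    ra2, rb2 = mean_explained_variance(LOADINGS)
    print(f"mean Ra^2 = {ra2:.3f}, mean Rb^2 = {rb2:.3f}, ratio = {ra2 / rb2:.0f}:1")
```

Run as a script, this prints `mean Ra^2 = 0.653, mean Rb^2 = 0.005, ratio = 134:1`. The two means match the Average row; the ratio is derived here for illustration and is not reported in the table.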

References

- Demir, K.A.; Döven, G.; Sezen, B. Industry 5.0 and Human-Robot Co-Working. Procedia Comput. Sci. 2019, 158, 688–695. [Google Scholar] [CrossRef]

- Rožanec, J.M.; Novalija, I.; Zajec, P.; Kenda, K.; Tavakoli Ghinani, H.; Suh, S.; Veliou, E.; Papamartzivanos, D.; Giannetsos, T.; Menesidou, S.A.; et al. Human-Centric Artificial Intelligence Architecture for Industry 5.0 Applications. Int. J. Prod. Res. 2023, 61, 6847–6872. [Google Scholar] [CrossRef]

- Rane, N. ChatGPT and Similar Generative Artificial Intelligence (AI) for Smart Industry: Role, Challenges and Opportunities for Industry 4.0, Industry 5.0 and Society 5.0. Chall. Oppor. Ind. 2023, 31, 10–17. [Google Scholar] [CrossRef]

- Akundi, A.; Euresti, D.; Luna, S.; Ankobiah, W.; Lopes, A.; Edinbarough, I. State of Industry 5.0—Analysis and Identification of Current Research Trends. Appl. Syst. Innov. 2022, 5, 27. [Google Scholar] [CrossRef]

- Nahavandi, S. Industry 5.0—A Human-Centric Solution. Sustainability 2019, 11, 4371. [Google Scholar] [CrossRef]

- Shakya, R.; Vadiee, F.; Khalil, M. A Showdown of ChatGPT vs DeepSeek in Solving Programming Tasks. In Proceedings of the 2025 International Conference on New Trends in Computing Sciences (ICTCS), Amman, Jordan, 16–18 April 2025; pp. 413–418. [Google Scholar]

- Singh, S.; Bansal, S.; Saddik, A.E.; Saini, M. From ChatGPT to DeepSeek AI: A Comprehensive Analysis of Evolution, Deviation, and Future Implications in AI-Language Models. arXiv 2025, arXiv:2504.03219. [Google Scholar]

- CNNIC. Generative Artificial Intelligence Application Development Report. Available online: https://www.cnnic.cn/NMediaFile/2025/0321/MAIN17425363970494TQVTVCI5P.pdf (accessed on 12 July 2025).

- Rawashdeh, A. The Consequences of Artificial Intelligence: An Investigation into the Impact of AI on Job Displacement in Accounting. J. Sci. Technol. Policy Manag. 2023, 16, 506–535. [Google Scholar] [CrossRef]

- Plikas, J.H.; Trakadas, P.; Kenourgios, D. Assessing the Ethical Implications of Artificial Intelligence (AI) and Machine Learning (ML) on Job Displacement Through Automation: A Critical Analysis of Their Impact on Society. In Frontiers of Artificial Intelligence, Ethics, and Multidisciplinary Applications; Farmanbar, M., Tzamtzi, M., Verma, A.K., Chakravorty, A., Eds.; Springer Nature: Singapore, 2024; pp. 313–325. [Google Scholar]

- Utomo, P.; Kurniasari, F.; Purnamaningsih, P. The Effects of Performance Expectancy, Effort Expectancy, Facilitating Condition, and Habit on Behavior Intention in Using Mobile Healthcare Application. Int. J. Community Serv. Engagem. 2021, 2, 183–197. [Google Scholar] [CrossRef]

- Zhou, T.; Lu, Y.; Wang, B. Integrating TTF and UTAUT to Explain Mobile Banking User Adoption. Comput. Hum. Behav. 2010, 26, 760–767. [Google Scholar] [CrossRef]

- Xia, Y.; Chen, Y. Driving Factors of Generative AI Adoption in New Product Development Teams from a UTAUT Perspective. Int. J. Hum.–Comput. Interact. 2025, 41, 6067–6088. [Google Scholar] [CrossRef]

- Yakubu, M.N.; David, N.; Abubakar, N.H. Students’ Behavioural Intention to Use Content Generative AI for Learning and Research: A UTAUT Theoretical Perspective. Educ. Inf. Technol. 2025; in press. [Google Scholar] [CrossRef]

- Liang, H.; Xue, Y. Understanding Security Behaviors in Personal Computer Usage: A Threat Avoidance Perspective. J. Assoc. Inf. Syst. 2010, 11, 394–413. [Google Scholar] [CrossRef]

- Ansari, M.F. A Quantitative Study of Risk Scores and the Effectiveness of AI-Based Cybersecurity Awareness Training Programs. Int. J. Smart Sens. Adhoc Netw. 2022, 3, 1. [Google Scholar] [CrossRef]

- Wang, C.; Wang, H.; Li, Y.; Dai, J.; Gu, X.; Yu, T. Factors Influencing University Students’ Behavioral Intention to Use Generative Artificial Intelligence: Integrating the Theory of Planned Behavior and AI Literacy. Int. J. Hum.–Comput. Interact. 2025, 41, 6649–6671. [Google Scholar] [CrossRef]

- Al-Abdullatif, A.M. Modeling Teachers’ Acceptance of Generative Artificial Intelligence Use in Higher Education: The Role of AI Literacy, Intelligent TPACK, and Perceived Trust. Educ. Sci. 2024, 14, 1209. [Google Scholar] [CrossRef]

- Mills, K.; Ruiz, P.; Lee, K.; Coenraad, M.; Fusco, J.; Roschelle, J.; Weisgrau, J. AI Literacy: A Framework to Understand, Evaluate, and Use Emerging Technology. Digital Promise 2024. [Google Scholar] [CrossRef]

- Long, D.; Magerko, B. What Is AI Literacy? Competencies and Design Considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–16. [Google Scholar]

- Ng, D.T.K.; Leung, J.K.L.; Chu, S.K.W.; Qiao, M.S. Conceptualizing AI Literacy: An Exploratory Review. Comput. Educ. Artif. Intell. 2021, 2, 100041. [Google Scholar] [CrossRef]

- Pan, L.; Luo, H.; Gu, Q. Incorporating AI Literacy and AI Anxiety Into TAM: Unraveling Chinese Scholars’ Behavioral Intentions Toward Adopting AI-Assisted Literature Reading. IEEE Access 2025, 13, 38952–38963. [Google Scholar] [CrossRef]

- WEF. The Future of Jobs Report 2023. Available online: https://www3.weforum.org/docs/WEF_Future_of_Jobs_2023.pdf (accessed on 12 July 2025).

- Zhang, B.; Dafoe, A. Artificial Intelligence: American Attitudes and Trends. SSRN 2019. [Google Scholar] [CrossRef]

- Ochieng, E.G.; Ominde, D.; Zuofa, T. Potential Application of Generative Artificial Intelligence and Machine Learning Algorithm in Oil and Gas Sector: Benefits and Future Prospects. Technol. Soc. 2024, 79, 102710. [Google Scholar] [CrossRef]

- Wang, S.; Zhang, H. Leveraging Generative Artificial Intelligence for Sustainable Business Model Innovation in Production Systems. Int. J. Prod. Res. 2025, 1–26. [Google Scholar] [CrossRef]

- Zhang, Q.; Zuo, J.; Yang, S. Research on the Impact of Generative Artificial Intelligence (GenAI) on Enterprise Innovation Performance: A Knowledge Management Perspective. J. Knowl. Manag. 2025; ahead-of-print. [Google Scholar] [CrossRef]

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Sharma, S.; Singh, G. Adoption of Artificial Intelligence in Higher Education: An Empirical Study of the UTAUT Model in Indian Universities. Int. J. Syst. Assur. Eng. Manag. 2024; in press. [Google Scholar] [CrossRef]

- Pramod, D.; Patil, K.P.; Kumar, D.; Singh, D.R.; Singh Dodiya, C.; Noble, D. Generative AI and Deep Fakes in Media Industry–An Innovation Resistance Theory Perspective. In Proceedings of the 2024 International Conference on Electrical Electronics and Computing Technologies (ICEECT), Greater Noida, India, 29–31 August 2024; Volume 1, pp. 1–5. [Google Scholar] [CrossRef]

- Singh, A.; Dwivedi, A.; Agrawal, D.; Singh, D. Identifying Issues in Adoption of AI Practices in Construction Supply Chains: Towards Managing Sustainability. Oper. Manag. Res. 2023, 16, 1667–1683. [Google Scholar] [CrossRef]

- Liang, H.; Xue, Y. Avoidance of Information Technology Threats: A Theoretical Perspective. MIS Q. 2009, 33, 71–90. [Google Scholar] [CrossRef]

- Domínguez Figaredo, D.; Stoyanovich, J. Responsible AI Literacy: A Stakeholder-First Approach. Big Data Soc. 2023, 10, 1–15. [Google Scholar] [CrossRef]

- Wang, B.; Rau, P.-L.P.; Yuan, T. Measuring User Competence in Using Artificial Intelligence: Validity and Reliability of Artificial Intelligence Literacy Scale. Behav. Inf. Technol. 2023, 42, 1324–1337. [Google Scholar] [CrossRef]

- Wilton, L.; Ip, S.; Sharma, M.; Fan, F. Where Is the AI? AI Literacy for Educators. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners’ and Doctoral Consortium; Rodrigo, M.M., Matsuda, N., Cristea, A.I., Dimitrova, V., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 180–188. [Google Scholar]

- Cho, S.L.; Jeong, S.C. A Study of Negative Factors Affecting Perceived Usefulness and Intention to Continue Using ChatGPT: Focusing on the Moderating Effect of AI Literacy. J. Inf. Syst. 2024, 33, 1–18. [Google Scholar] [CrossRef]

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478. [Google Scholar] [CrossRef]

- Du, L.; Lv, B. Factors Influencing Students’ Acceptance and Use Generative Artificial Intelligence in Elementary Education: An Expansion of the UTAUT Model. Educ. Inf. Technol. 2024, 29, 24715–24734. [Google Scholar] [CrossRef]

- Strzelecki, A.; ElArabawy, S. Investigation of the Moderation Effect of Gender and Study Level on the Acceptance and Use of Generative AI by Higher Education Students: Comparative Evidence from Poland and Egypt. Br. J. Educ. Technol. 2024, 55, 1209–1230. [Google Scholar] [CrossRef]

- Li, X.; Zappatore, M.; Li, T.; Zhang, W.; Tao, S.; Wei, X.; Zhou, X.; Guan, N.; Chan, A. GAI vs. Teacher Scoring: Which Is Better for Assessing Student Performance? IEEE Trans. Learn. Technol. 2025, 18, 569–580. [Google Scholar] [CrossRef]

- Jarrahi, M.H.; Askay, D.; Eshraghi, A.; Smith, P. Artificial Intelligence and Knowledge Management: A Partnership between Human and AI. Bus. Horiz. 2023, 66, 87–99. [Google Scholar] [CrossRef]

- Yang, Y. Exploring Factors Influencing L2 Learners’ Use of GAI-Assisted Writing Technology: Based on the UTAUT Model. Asia Pac. J. Educ. 2025, 23, 1–20. [Google Scholar] [CrossRef]

- Godoe, P.; Johansen, T.S. Understanding Adoption of New Technologies: Technology Readiness and Technology Acceptance as an Integrated Concept. J. Eur. Psychol. Stud. 2012, 3, 38–52. [Google Scholar] [CrossRef]

- Tahar, A.; Riyadh, H.A.; Sofyani, H.; Purnomo, W.E. Perceived Ease of Use, Perceived Usefulness, Perceived Security and Intention to Use E-Filing: The Role of Technology Readiness. J. Asian Financ. Econ. Bus. 2020, 7, 537–547. [Google Scholar] [CrossRef]

- Cabero-Almenara, J.; Palacios-Rodríguez, A.; Rojas Guzmán, H.; de los, Á.; Fernández-Scagliusi, V. Prediction of the Use of Generative Artificial Intelligence Through ChatGPT Among Costa Rican University Students: A PLS Model Based on UTAUT2. Appl. Sci. 2025, 15, 3363. [Google Scholar] [CrossRef]

- Goncalves, C.; Rouco, J.C.D. Proceedings of the International Conference on AI Research; Academic Conferences and Publishing Limited: Manchester, UK, 2024; ISBN 978-1-917204-28-6. [Google Scholar]

- Swargiary, K.; Roy, S.K. A Comprehensive Analysis of Influences on Higher Education Through Artificial Intelligence. SSRN 2024. [Google Scholar]

- Jo, H.; Bang, Y. Analyzing ChatGPT Adoption Drivers with the TOEK Framework. Sci. Rep. 2023, 13, 22606. [Google Scholar] [CrossRef] [PubMed]

- DiMaggio, P.J.; Powell, W.W. The Iron Cage Revisited: Institutional Isomorphism and Collective Rationality in Organizational Fields. Am. Sociol. Rev. 1983, 48, 147. [Google Scholar] [CrossRef]

- Kurniasari, F.; Tajul Urus, S.; Utomo, P.; Abd Hamid, N.; Jimmy, S.Y.; Othman, I.W. Determinant Factors of Adoption of Fintech Payment Services in Indonesia Using the UTAUT Approach. Asian-Pac. Manag. Account. J. 2022, 17, 97–125. [Google Scholar] [CrossRef]

- Wang, L.; Xiao, J. Research on Influencing Factors of Learners’ Intention of Online Learning Behaviour in Open Education Based on UTAUT Model. In Proceedings of the 10th International Conference on Education Technology and Computers, Tokyo, Japan, 26–28 October 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 92–98. [Google Scholar]

- Levina, N.; Lifshitz-Assa, H. Is AI Ground Truth Really True? The Dangers of Training and Evaluating AI Tools Based on Experts’ Know-What. MIS Q. 2021, 45, 1501–1526. [Google Scholar] [CrossRef]

- Bagchi, K.; Udo, G. An Analysis of the Growth of Computer and Internet Security Breaches. Commun. Assoc. Inf. Syst. 2003, 12, 46. [Google Scholar] [CrossRef]

- Liu, C.; Wang, N.; Liang, H. Motivating Information Security Policy Compliance: The Critical Role of Supervisor-Subordinate Guanxi and Organizational Commitment. Int. J. Inf. Manag. 2020, 54, 102152. [Google Scholar] [CrossRef]

- Herath, T.; Chen, R.; Wang, J.; Banjara, K.; Wilbur, J.; Rao, H.R. Security Services as Coping Mechanisms: An Investigation into User Intention to Adopt an Email Authentication Service. Inf. Syst. J. 2012, 24, 61–84. [Google Scholar] [CrossRef]

- Liang, H.; Xue, Y.; Pinsonneault, A.; Wu, Y. “Andy”. What Users Do Besides Problem-Focused Coping When Facing IT Security Threats: An Emotion-Focused Coping Perspective. MIS Q. 2019, 43, 373–394. [Google Scholar] [CrossRef]

- Pan, J.; Ding, S.; Wu, D.; Yang, S.; Yang, J. Exploring Behavioural Intentions toward Smart Healthcare Services among Medical Practitioners: A Technology Transfer Perspective. Int. J. Prod. Res. 2019, 57, 5801–5820. [Google Scholar] [CrossRef]

- Shiva, A.; Kushwaha, B.P.; Rishi, B. A Model Validation of Robo-Advisers for Stock Investment. Borsa Istanb. Rev. 2023, 23, 1458–1473. [Google Scholar] [CrossRef]

- Beaudry, A.; Pinsonneault, A. Understanding User Responses to Information Technology: A Coping Model of User Adaptation. MIS Q. 2005, 29, 493–524. [Google Scholar] [CrossRef]

- Almatrafi, O.; Johri, A.; Lee, H. A Systematic Review of AI Literacy Conceptualization, Constructs, and Implementation and Assessment Efforts (2019–2023). Comput. Educ. Open 2024, 6, 100173. [Google Scholar] [CrossRef]

- Bozkurt, A.; Sharma, R.C. Generative AI and Prompt Engineering: The Art of Whispering to Let the Genie Out of the Algorithmic World. Asian J. Distance Educ. 2023, 18, i–vii. [Google Scholar]

- Kenku, A.A.; Uzoigwe, T.L. Determinants of artificial intelligence anxiety: Impact of some psychological and organisational characteristics among staff of Federal Polytechnic Nasarawa, Nigeria. Afr. J. Psychol. Stud. Soc. Issues 2024, 27, 46–61. [Google Scholar]

- Al-Abdullatif, A.M.; Alsubaie, M.A. ChatGPT in Learning: Assessing Students’ Use Intentions through the Lens of Perceived Value and the Influence of AI Literacy. Behav. Sci. 2024, 14, 845. [Google Scholar] [CrossRef] [PubMed]

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178. [Google Scholar] [CrossRef]

- Cao, G.; Duan, Y.; Edwards, J.S.; Dwivedi, Y.K. Understanding Managers’ Attitudes and Behavioral Intentions towards Using Artificial Intelligence for Organizational Decision-Making. Technovation 2021, 106, 102312. [Google Scholar] [CrossRef]

- Baabdullah, A.M. The Precursors of AI Adoption in Business: Towards an Efficient Decision-Making and Functional Performance. Int. J. Inf. Manag. 2024, 75, 102745. [Google Scholar] [CrossRef]

- Grassini, S. A Psychometric Validation of the PAILQ-6: Perceived Artificial Intelligence Literacy Questionnaire. In Proceedings of the 13th Nordic Conference on Human-Computer Interaction, Uppsala, Sweden, 13–16 October 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 1–10. [Google Scholar]

- Meng, M.; Chenhui, L.; Zheng, H.; Xing, W. Consumer Usage of Mobile Visual Search in China: Extending UTAUT2 With Perceived Contextual Offer and Implementation Intention. J. Glob. Inf. Manag. 2024, 32, 1–29. [Google Scholar] [CrossRef]

- Andrews, J.E.; Ward, H.; Yoon, J. UTAUT as a Model for Understanding Intention to Adopt AI and Related Technologies among Librarians. J. Acad. Librariansh. 2021, 47, 102437. [Google Scholar] [CrossRef]

- Brislin, R.W. Translation and Content Analysis of Oral and Written Materials. In Handbook of Cross-Cultural Psychology; Triandis, H.C., Berry, J.W., Eds.; Allyn and Bacon: Boston, MA, USA, 1980; Volume 2, pp. 389–444. [Google Scholar]

- Hatlevik, O.E.; Throndsen, I.; Loi, M.; Gudmundsdottir, G.B. Students’ ICT Self-Efficacy and Computer and Information Literacy: Determinants and Relationships. Comput. Educ. 2018, 118, 107–119. [Google Scholar] [CrossRef]

- World Economic Forum; Accenture China. Blueprint to Action: China’s Path to AI-Powered Industry Transformation; Online White Paper; World Economic Forum: Geneva, Switzerland, 2025; Available online: https://www.weforum.org/publications/industries-in-the-intelligent-age-white-paper-series/china-transformation-of-industries/ (accessed on 12 July 2025).

- Hair, J.F.; Ringle, C.M.; Sarstedt, M. Editorial–Partial Least Squares: The Better Approach to Structural Equation Modeling? Long Range Plan. 2012, 45, 312–319. [Google Scholar] [CrossRef]

- Saraf, N.; Liang, H.; Xue, Y.; Hu, Q. How Does Organisational Absorptive Capacity Matter in the Assimilation of Enterprise Information Systems? Inf. Syst. J. 2013, 23, 245–267. [Google Scholar] [CrossRef]

- Ringle, C.M.; Wende, S.; Becker, J.-M. SmartPLS 4. Available online: https://www.smartpls.com/ (accessed on 13 July 2025).

- Guan, B.; Hsu, C. The Role of Abusive Supervision and Organizational Commitment on Employees’ Information Security Policy Noncompliance Intention. Internet Res. 2020, 30, 1383–1405. [Google Scholar] [CrossRef]

- Hsu, J.S.-C.; Shih, S.-P.; Hung, Y.W.; Lowry, P.B. The Role of Extra-Role Behaviors and Social Controls in Information Security Policy Effectiveness. Inf. Syst. Res. 2015, 26, 282–300. [Google Scholar] [CrossRef]

- Harman, H.H. Modern Factor Analysis; University of Chicago Press: Chicago, IL, USA, 1976; ISBN 978-0-226-31652-9. [Google Scholar]

- MacKenzie, S.B.; Podsakoff, P.M.; Podsakoff, N.P. Construct Measurement and Validation Procedures in MIS and Behavioral Research: Integrating New and Existing Techniques. MIS Q. 2011, 35, 293–334. [Google Scholar] [CrossRef]

- Bagozzi, R.P. Evaluating Structural Equation Models with Unobservable Variables and Measurement Error: A Comment. J. Mark. Res. 1981, 18, 375–381. [Google Scholar]

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A New Criterion for Assessing Discriminant Validity in Variance-Based Structural Equation Modeling. J. Acad. Mark. Sci. 2015, 43, 115–135. [Google Scholar] [CrossRef]

- Hu, L.; Bentler, P.M. Fit Indices in Covariance Structure Modeling: Sensitivity to Underparameterized Model Misspecification. Psychol. Methods 1998, 3, 424–453. [Google Scholar] [CrossRef]

- Yuan, Y.-P.; Tan, G.W.-H.; Ooi, K.-B. Does COVID-19 Pandemic Motivate Privacy Self-Disclosure in Mobile Fintech Transactions? A Privacy-Calculus-Based Dual-Stage SEM-ANN Analysis. IEEE Trans. Eng. Manag. 2024, 71, 2986–3000. [Google Scholar] [CrossRef]

- Cohen, J.; Cohen, P.; West, S.G.; Aiken, L.S. Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences, 3rd ed.; Routledge: New York, NY, USA, 2013; ISBN 978-0-203-77444-1. [Google Scholar]

- Chin, W.W.; Marcolin, B.L.; Newsted, P.R. A Partial Least Squares Latent Variable Modeling Approach for Measuring Interaction Effects: Results from a Monte Carlo Simulation Study and an Electronic-Mail Emotion/Adoption Study. Inf. Syst. Res. 2003, 14, 189–217. [Google Scholar] [CrossRef]

- Yuan, Y.-P.; Dwivedi, Y.K.; Tan, G.W.-H.; Cham, T.-H.; Ooi, K.-B.; Aw, E.C.-X.; Currie, W. Government Digital Transformation: Understanding the Role of Government Social Media. Gov. Inf. Q. 2023, 40, 101775. [Google Scholar] [CrossRef]

- Latif, S.; Zou, Z.; Idrees, Z.; Ahmad, J. A Novel Attack Detection Scheme for the Industrial Internet of Things Using a Lightweight Random Neural Network. IEEE Access 2020, 8, 89337–89350. [Google Scholar] [CrossRef]

- Arpaci, I.; Bahari, M. A Complementary SEM and Deep ANN Approach to Predict the Adoption of Cryptocurrencies from the Perspective of Cybersecurity. Comput. Hum. Behav. 2023, 143, 107678. [Google Scholar] [CrossRef]

- Leong, L.-Y.; Hew, J.-J.; Lee, V.-H.; Tan, G.W.-H.; Ooi, K.-B.; Rana, N.P. An SEM-ANN Analysis of the Impacts of Blockchain on Competitive Advantage. Ind. Manag. Data Syst. 2023, 123, 967–1004. [Google Scholar] [CrossRef]

- Svozil, D.; Kvasnicka, V.; Pospichal, J. Introduction to Multi-Layer Feed-Forward Neural Networks. Chemom. Intell. Lab. Syst. 1997, 39, 43–62. [Google Scholar] [CrossRef]

- Lau, A.J.; Tan, G.W.-H.; Loh, X.-M.; Leong, L.-Y.; Lee, V.-H.; Ooi, K.-B. On the Way: Hailing a Taxi with a Smartphone? A Hybrid SEM-Neural Network Approach. Mach. Learn. Appl. 2021, 4, 100034. [Google Scholar] [CrossRef]

- Leong, L.-Y.; Hew, T.-S.; Ooi, K.-B.; Chong, A.Y.-L. Predicting the Antecedents of Trust in Social Commerce–A Hybrid Structural Equation Modeling with Neural Network Approach. J. Bus. Res. 2020, 110, 24–40. [Google Scholar] [CrossRef]

- Al-Emran, M.; Al-Qaysi, N.; Al-Sharafi, M.A.; Khoshkam, M.; Foroughi, B.; Ghobakhloo, M. Role of Perceived Threats and Knowledge Management in Shaping Generative AI Use in Education and Its Impact on Social Sustainability. Int. J. Manag. Educ. 2025, 23, 101105. [Google Scholar] [CrossRef]

- Joo, K.; Kim, H.M.; Hwang, J. Application of the Extended Unified Theory of Acceptance and Use of Technology to a Robotic Golf Caddy: Health Consciousness as a Moderator. Appl. Sci. 2024, 14, 9915. [Google Scholar] [CrossRef]

- Hoai, N.T. Factors Affecting Students’ Intention to Use Artificial Intelligence in Learning: An Empirical Study in Vietnam. Edelweiss Appl. Sci. Technol. 2025, 9, 1533–1540. [Google Scholar] [CrossRef]

- Al-Maroof, R.S.; Alhumaid, K.; Akour, I.; Salloum, S. Factors That Affect E-Learning Platforms after the Spread of COVID-19: Post Acceptance Study. Data 2021, 6, 49. [Google Scholar] [CrossRef]

- Fan, C.; Hu, M.; Shangguan, Z.; Ye, C.; Yan, S.; Wang, M.Y. The Drivers of Employees’ Active Innovative Behaviour in Chinese High-Tech Enterprises. Sustainability 2021, 13, 6032. [Google Scholar] [CrossRef]

- Farh, J.-L.; Hackett, R.D.; Liang, J. Individual-Level Cultural Values as Moderators of Perceived Organizational Support–Employee Outcome Relationships in China: Comparing the Effects of Power Distance and Traditionality. Acad. Manag. J. 2007, 50, 715–729. [Google Scholar] [CrossRef]

| Demographics | Category | n | Percentage (%) |
|---|---|---|---|
| Gender | Male | 296 | 50.8 |
| | Female | 287 | 49.2 |
| Age | 20–29 | 194 | 33.3 |
| | 30–39 | 163 | 28.0 |
| | 40–49 | 172 | 29.5 |
| | 50–59 | 54 | 9.3 |
| Educational level | High school | 12 | 2.1 |
| | College | 31 | 5.3 |
| | Undergraduate | 388 | 66.6 |
| | Graduate | 148 | 25.4 |
| | Doctoral | 4 | 0.7 |
| Industry | Service | 89 | 15.3 |
| | Financial | 94 | 16.1 |
| | Education | 128 | 22.0 |
| | IT | 168 | 28.8 |
| | Manufacturing | 104 | 17.8 |
| Work experience | <5 years | 203 | 34.8 |
| | 5–10 years | 220 | 37.7 |
| | >10 years | 160 | 27.4 |
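
Each demographic block in the table above sums to the same 583 respondents, and each percentage is the count divided by that total, rounded to one decimal. A small consistency check in Python, with counts transcribed from the table; `PROFILE` and `percentages` are illustrative names.

```python
# Respondent counts transcribed from the table above.
PROFILE = {
    "Gender": {"Male": 296, "Female": 287},
    "Age": {"20–29": 194, "30–39": 163, "40–49": 172, "50–59": 54},
    "Educational level": {"High school": 12, "College": 31, "Undergraduate": 388,
                          "Graduate": 148, "Doctoral": 4},
    "Industry": {"Service": 89, "Financial": 94, "Education": 128, "IT": 168,
                 "Manufacturing": 104},
    "Work experience": {"<5 years": 203, "5–10 years": 220, ">10 years": 160},
}


def percentages(counts):
    """Return (total, {category: percentage of total, rounded to one decimal})."""
    total = sum(counts.values())
    return total, {name: round(100 * n / total, 1) for name, n in counts.items()}


if __name__ == "__main__":
    for group, counts in PROFILE.items():
        total, pct = percentages(counts)
        print(group, total, pct)
```

Every block totals 583, and the recomputed percentages agree with the Percentage column.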

| Constructs | Items | Loadings | Cronbach’s Alpha (α) | Composite Reliability (CR) | Average Variance Extracted (AVE) |
|---|---|---|---|---|---|
| Performance Expectancy (PE) | PE1 | 0.789 | 0.753 | 0.844 | 0.574 |
| | PE2 | 0.784 | | | |
| | PE3 | 0.726 | | | |
| | PE4 | 0.730 | | | |
| Effort Expectancy (EE) | EE1 | 0.755 | 0.773 | 0.854 | 0.595 |
| | EE2 | 0.750 | | | |
| | EE3 | 0.815 | | | |
| | EE4 | 0.763 | | | |
| Social Influence (SI) | SI1 | 0.875 | 0.832 | 0.899 | 0.748 |
| | SI2 | 0.863 | | | |
| | SI3 | 0.856 | | | |
| Facilitating Conditions (FC) | FC1 | 0.777 | 0.746 | 0.839 | 0.566 |
| | FC2 | 0.736 | | | |
| | FC3 | 0.704 | | | |
| | FC4 | 0.790 | | | |
| Perceived Threats (PT) | PT1 | 0.813 | 0.750 | 0.857 | 0.666 |
| | PT2 | 0.829 | | | |
| | PT3 | 0.807 | | | |
| Perceived Avoidability (PA) | PA1 | 0.835 | 0.772 | 0.868 | 0.687 |
| | PA2 | 0.810 | | | |
| | PA3 | 0.840 | | | |
| Perceived AI Literacy (PAIL) | PAIL1 | 0.882 | 0.895 | 0.923 | 0.708 |
| | PAIL3 | 0.817 | | | |
| | PAIL4 | 0.897 | | | |
| | PAIL5 | 0.886 | | | |
| | PAIL6 | 0.709 | | | |
| Generative AI Usage Intention (UI) | UI1 | 0.838 | 0.766 | 0.865 | 0.681 |
| | UI2 | 0.835 | | | |
| | UI3 | 0.801 | | | |
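
CR and AVE in the table above can be approximately recomputed from the standardized loadings, assuming the standard formulas for reflective constructs: AVE is the mean squared loading, and CR is (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The Python sketch below uses only the transcribed loadings; the function names are illustrative, and the 0.70 (CR) and 0.50 (AVE) thresholds mentioned in the comment are the conventional cut-offs, not values taken from this table.

```python
# Standardized outer loadings transcribed from the table above (PAIL2 was dropped).
LOADINGS = {
    "PE": [0.789, 0.784, 0.726, 0.730],
    "EE": [0.755, 0.750, 0.815, 0.763],
    "SI": [0.875, 0.863, 0.856],
    "FC": [0.777, 0.736, 0.704, 0.790],
    "PT": [0.813, 0.829, 0.807],
    "PA": [0.835, 0.810, 0.840],
    "PAIL": [0.882, 0.817, 0.897, 0.886, 0.709],
    "UI": [0.838, 0.835, 0.801],
}


def ave(loadings):
    """Average variance extracted: the mean squared standardized loading."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    taking each indicator's error variance as 1 - loading^2."""
    total = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error)


if __name__ == "__main__":
    for name, lams in LOADINGS.items():
        # Conventional cut-offs: CR > 0.70 and AVE > 0.50.
        print(f"{name:<5} CR = {composite_reliability(lams):.3f}  AVE = {ave(lams):.3f}")
```

The recomputed values agree with the table to within 0.001 (PE's CR, for instance, comes out at 0.843 against the reported 0.844). This small difference is presumably due to the loadings being shown at only three decimals. Cronbach's alpha cannot be reproduced this way because it depends on item variances and covariances, not loadings alone.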

| | PE | EE | SI | FC | PT | PA | PAIL | UI |
|---|---|---|---|---|---|---|---|---|
| PE | 0.758 | | | | | | | |
| EE | 0.404 | 0.771 | | | | | | |
| SI | 0.559 | 0.207 | 0.865 | | | | | |
| FC | 0.448 | 0.306 | 0.503 | 0.752 | | | | |
| PT | 0.172 | 0.255 | 0.291 | 0.313 | 0.816 | | | |
| PA | 0.508 | 0.376 | 0.466 | 0.391 | 0.175 | 0.829 | | |
| PAIL | 0.384 | 0.285 | 0.471 | 0.434 | 0.222 | 0.502 | 0.841 | |
| UI | 0.526 | 0.480 | 0.351 | 0.418 | 0.111 | 0.500 | 0.554 | 0.825 |
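
The diagonal of the matrix above equals the square root of each construct's AVE from the measurement-model table (for PE, √0.574 ≈ 0.758). The off-diagonal cells are inter-construct correlations. Under the Fornell–Larcker criterion, discriminant validity holds when each diagonal value exceeds every correlation in its row and column. The following is a sketch of that check using the table's values; all names are illustrative.

```python
import math

CONSTRUCTS = ["PE", "EE", "SI", "FC", "PT", "PA", "PAIL", "UI"]
# AVE values from the measurement-model table.
AVE = {"PE": 0.574, "EE": 0.595, "SI": 0.748, "FC": 0.566,
       "PT": 0.666, "PA": 0.687, "PAIL": 0.708, "UI": 0.681}
# Below-diagonal correlations from the table above; row k holds the correlations
# of CONSTRUCTS[k] with CONSTRUCTS[0..k-1].
LOWER = [
    [],
    [0.404],
    [0.559, 0.207],
    [0.448, 0.306, 0.503],
    [0.172, 0.255, 0.291, 0.313],
    [0.508, 0.376, 0.466, 0.391, 0.175],
    [0.384, 0.285, 0.471, 0.434, 0.222, 0.502],
    [0.526, 0.480, 0.351, 0.418, 0.111, 0.500, 0.554],
]


def correlation(a, b):
    """Look up the correlation between two distinct constructs."""
    i, j = CONSTRUCTS.index(a), CONSTRUCTS.index(b)
    return LOWER[max(i, j)][min(i, j)]


def diagonal():
    """Square root of each AVE, rounded to three decimals as on the diagonal."""
    return {c: round(math.sqrt(AVE[c]), 3) for c in CONSTRUCTS}


def fornell_larcker_violations():
    """Pairs whose |correlation| is not below the smaller of the two sqrt(AVE) values."""
    violations = []
    for k, a in enumerate(CONSTRUCTS):
        for b in CONSTRUCTS[k + 1:]:
            r = abs(correlation(a, b))
            if r >= min(math.sqrt(AVE[a]), math.sqrt(AVE[b])):
                violations.append((a, b, r))
    return violations


if __name__ == "__main__":
    print(diagonal())
    print(fornell_larcker_violations() or "no violations")
```

With these values, the largest correlation (0.559, PE with SI) stays well below the smallest diagonal value (0.752, FC), so no pair is flagged.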

| | PE | EE | SI | FC | PT | PA | PAIL | UI |
|---|---|---|---|---|---|---|---|---|
| PE | | | | | | | | |
| EE | 0.518 | | | | | | | |
| SI | 0.702 | 0.248 | | | | | | |
| FC | 0.595 | 0.391 | 0.647 | | | | | |
| PT | 0.225 | 0.334 | 0.371 | 0.420 | | | | |
| PA | 0.665 | 0.481 | 0.580 | 0.516 | 0.226 | | | |
| PAIL | 0.467 | 0.335 | 0.554 | 0.532 | 0.274 | 0.602 | | |
| UI | 0.682 | 0.622 | 0.429 | 0.539 | 0.148 | 0.641 | 0.657 | |
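
The heterotrait–monotrait ratio (HTMT) of Henseler et al., listed in the references, is defined for constructs i and j as follows: the mean correlation between the indicators of i and the indicators of j, divided by the geometric mean of the average within-construct indicator correlations of i and of j. Item-level correlations are not reported here. The sketch below therefore applies the definition to a small hypothetical correlation matrix (`TOY`), then checks the reported values against the conservative 0.85 cut-off proposed by Henseler et al. All names are illustrative.

```python
import math
from itertools import combinations
from statistics import mean


def htmt(corr, items_i, items_j):
    """Heterotrait-monotrait ratio of constructs i and j.

    corr    -- square item-correlation matrix (list of lists)
    items_i -- indices of construct i's indicators (at least two)
    items_j -- indices of construct j's indicators (at least two)
    """
    hetero = mean(corr[a][b] for a in items_i for b in items_j)
    mono_i = mean(corr[a][b] for a, b in combinations(items_i, 2))
    mono_j = mean(corr[a][b] for a, b in combinations(items_j, 2))
    return hetero / math.sqrt(mono_i * mono_j)


# Hypothetical 4-item correlation matrix (not data from this study):
# items 0-1 measure one construct, items 2-3 another.
TOY = [
    [1.0, 0.6, 0.3, 0.3],
    [0.6, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.6],
    [0.3, 0.3, 0.6, 1.0],
]

# Below-diagonal HTMT values transcribed from the table above, row by row.
REPORTED_HTMT = [
    0.518,
    0.702, 0.248,
    0.595, 0.391, 0.647,
    0.225, 0.334, 0.371, 0.420,
    0.665, 0.481, 0.580, 0.516, 0.226,
    0.467, 0.335, 0.554, 0.532, 0.274, 0.602,
    0.682, 0.622, 0.429, 0.539, 0.148, 0.641, 0.657,
]

if __name__ == "__main__":
    # For TOY: 0.3 / sqrt(0.6 * 0.6) = 0.5
    print(f"toy HTMT = {htmt(TOY, [0, 1], [2, 3]):.2f}")
    print(f"largest reported HTMT = {max(REPORTED_HTMT)}, below 0.85: {max(REPORTED_HTMT) < 0.85}")
```

The largest reported value (0.702, PE with SI) is below 0.85, so all 28 construct pairs pass the conservative criterion.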

| ANN | Training | Testing |
|---|---|---|
| 1 | 0.067 | 0.055 |
| 2 | 0.071 | 0.058 |
| 3 | 0.066 | 0.054 |
| 4 | 0.065 | 0.066 |
| 5 | 0.066 | 0.062 |
| 6 | 0.068 | 0.062 |
| 7 | 0.068 | 0.045 |
| 8 | 0.066 | 0.058 |
| 9 | 0.066 | 0.071 |
| 10 | 0.070 | 0.060 |
| Mean | 0.067 | 0.059 |
| Standard Deviation | 0.002 | 0.007 |
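
The Mean and Standard Deviation rows summarize the prediction errors of the ten networks. The table does not name the metric, although SEM–ANN studies of this kind usually report root-mean-square error. Both rows can be reproduced with Python's `statistics` module, as in the sketch below. Whether the sample (`stdev`) or population (`pstdev`) formula is used makes no difference at three decimals. The names are illustrative.

```python
from statistics import mean, pstdev, stdev

# Values transcribed from the table above (ten networks).
TRAINING = [0.067, 0.071, 0.066, 0.065, 0.066, 0.068, 0.068, 0.066, 0.066, 0.070]
TESTING = [0.055, 0.058, 0.054, 0.066, 0.062, 0.062, 0.045, 0.058, 0.071, 0.060]


def summarize(values):
    """Return (mean, sample SD, population SD) across the networks."""
    return mean(values), stdev(values), pstdev(values)


if __name__ == "__main__":
    for label, values in (("Training", TRAINING), ("Testing", TESTING)):
        m, s, p = summarize(values)
        print(f"{label}: mean = {m:.3f}, SD (sample) = {s:.3f}, SD (population) = {p:.3f}")
```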

| Network | PE | EE | FC | PT | PA | PAIL |
|---|---|---|---|---|---|---|
| 1 | 0.239 | 0.196 | 0.150 | 0.058 | 0.081 | 0.277 |
| 2 | 0.340 | 0.152 | 0.099 | 0.025 | 0.179 | 0.206 |
| 3 | 0.188 | 0.219 | 0.142 | 0.077 | 0.132 | 0.243 |
| 4 | 0.201 | 0.177 | 0.159 | 0.071 | 0.129 | 0.264 |
| 5 | 0.196 | 0.201 | 0.149 | 0.037 | 0.172 | 0.245 |
| 6 | 0.237 | 0.215 | 0.166 | 0.017 | 0.166 | 0.200 |
| 7 | 0.160 | 0.229 | 0.128 | 0.075 | 0.114 | 0.293 |
| 8 | 0.198 | 0.195 | 0.170 | 0.035 | 0.175 | 0.227 |
| 9 | 0.184 | 0.137 | 0.194 | 0.104 | 0.084 | 0.297 |
| 10 | 0.279 | 0.199 | 0.086 | 0.076 | 0.129 | 0.232 |
| Average Importance | 0.222 | 0.192 | 0.144 | 0.058 | 0.136 | 0.248 |
| Normalized Importance (%) | 89.45% | 77.29% | 58.09% | 23.15% | 54.79% | 100% |
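
In the table above, Average Importance is the mean of each predictor's importance across the ten networks. Normalized Importance divides each average by the largest one (PAIL's), so the top predictor scores 100%. The following sketch reproduces both rows from the per-network values; `IMPORTANCE` and `normalized_importance` are illustrative names.

```python
from statistics import mean

# Per-network importance values transcribed from the table above (networks 1-10).
IMPORTANCE = {
    "PE":   [0.239, 0.340, 0.188, 0.201, 0.196, 0.237, 0.160, 0.198, 0.184, 0.279],
    "EE":   [0.196, 0.152, 0.219, 0.177, 0.201, 0.215, 0.229, 0.195, 0.137, 0.199],
    "FC":   [0.150, 0.099, 0.142, 0.159, 0.149, 0.166, 0.128, 0.170, 0.194, 0.086],
    "PT":   [0.058, 0.025, 0.077, 0.071, 0.037, 0.017, 0.075, 0.035, 0.104, 0.076],
    "PA":   [0.081, 0.179, 0.132, 0.129, 0.172, 0.166, 0.114, 0.175, 0.084, 0.129],
    "PAIL": [0.277, 0.206, 0.243, 0.264, 0.245, 0.200, 0.293, 0.227, 0.297, 0.232],
}


def normalized_importance(importance):
    """Average each predictor over the networks, then scale so the largest average is 100%."""
    averages = {name: mean(values) for name, values in importance.items()}
    top = max(averages.values())
    return averages, {name: 100 * avg / top for name, avg in averages.items()}


if __name__ == "__main__":
    averages, normalized = normalized_importance(IMPORTANCE)
    for name in IMPORTANCE:
        print(f"{name:<5} average = {averages[name]:.4f}  normalized = {normalized[name]:.2f}%")
```

The recomputed averages (for example, 0.2222 for PE and 0.2484 for PAIL) round to the table's three-decimal values, and the normalized figures match the last row.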

| PLS Path | PLS-SEM Path Coefficient | Normalized Importance (%) | Ranking (PLS-SEM) | Ranking (ANN) | Remark |
|---|---|---|---|---|---|
| PE→UI | 0.245 | 89.45 | 2 | 2 | Match |
| EE→UI | 0.242 | 77.29 | 3 | 3 | Match |
| FC→UI | 0.106 | 58.09 | 4 | 4 | Match |
| PT→UI | −0.075 | 23.15 | 6 | 6 | Match |
| PA→UI | 0.099 | 54.79 | 5 | 5 | Match |
| PAIL→UI | 0.330 | 100.00 | 1 | 1 | Match |
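
The two Ranking columns in the table above order the predictors by the size of their PLS-SEM path coefficients and by their ANN normalized importance. The sketch below assumes ranking by absolute coefficient, so PT's negative path is compared by magnitude. With these values, the order is the same either way. All names are illustrative.

```python
# Values transcribed from the table above.
PLS_PATH = {"PE": 0.245, "EE": 0.242, "FC": 0.106, "PT": -0.075, "PA": 0.099, "PAIL": 0.330}
ANN_IMPORTANCE = {"PE": 89.45, "EE": 77.29, "FC": 58.09, "PT": 23.15, "PA": 54.79, "PAIL": 100.00}


def rank(scores):
    """Rank predictors from strongest (1) to weakest by absolute score."""
    ordered = sorted(scores, key=lambda name: abs(scores[name]), reverse=True)
    return {name: position for position, name in enumerate(ordered, start=1)}


def compare_rankings(pls, ann):
    """Pair each predictor's PLS-SEM and ANN ranks and flag whether they agree."""
    pls_rank, ann_rank = rank(pls), rank(ann)
    return {
        name: (pls_rank[name], ann_rank[name],
               "Match" if pls_rank[name] == ann_rank[name] else "Mismatch")
        for name in pls
    }


if __name__ == "__main__":
    for name, (p, a, remark) in compare_rankings(PLS_PATH, ANN_IMPORTANCE).items():
        print(f"{name}→UI: PLS-SEM rank {p}, ANN rank {a}, {remark}")
```

All six predictors receive the same rank under both methods, which reproduces the Remark column.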

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, C.; Yang, L.; Dong, X.; Li, X. Factors Influencing Generative AI Usage Intention in China: Extending the Acceptance–Avoidance Framework with Perceived AI Literacy. Systems 2025, 13, 639. https://doi.org/10.3390/systems13080639

Liu C, Yang L, Dong X, Li X. Factors Influencing Generative AI Usage Intention in China: Extending the Acceptance–Avoidance Framework with Perceived AI Literacy. Systems. 2025; 13(8):639. https://doi.org/10.3390/systems13080639

Chicago/Turabian Style: Liu, Chenhui, Libo Yang, Xinyu Dong, and Xiaocui Li. 2025. "Factors Influencing Generative AI Usage Intention in China: Extending the Acceptance–Avoidance Framework with Perceived AI Literacy" Systems 13, no. 8: 639. https://doi.org/10.3390/systems13080639

APA Style: Liu, C., Yang, L., Dong, X., & Li, X. (2025). Factors Influencing Generative AI Usage Intention in China: Extending the Acceptance–Avoidance Framework with Perceived AI Literacy. Systems, 13(8), 639. https://doi.org/10.3390/systems13080639