From Transformative Agency to AI Literacy: Profiling Slovenian Technical High School Students Through the Five Big Ideas Lens

Abstract

1. Introduction

1.1. Psychological Empowerment and AI Literacy in Education

1.1.1. Linking Empowerment Constructs to AI Literacy Outcomes

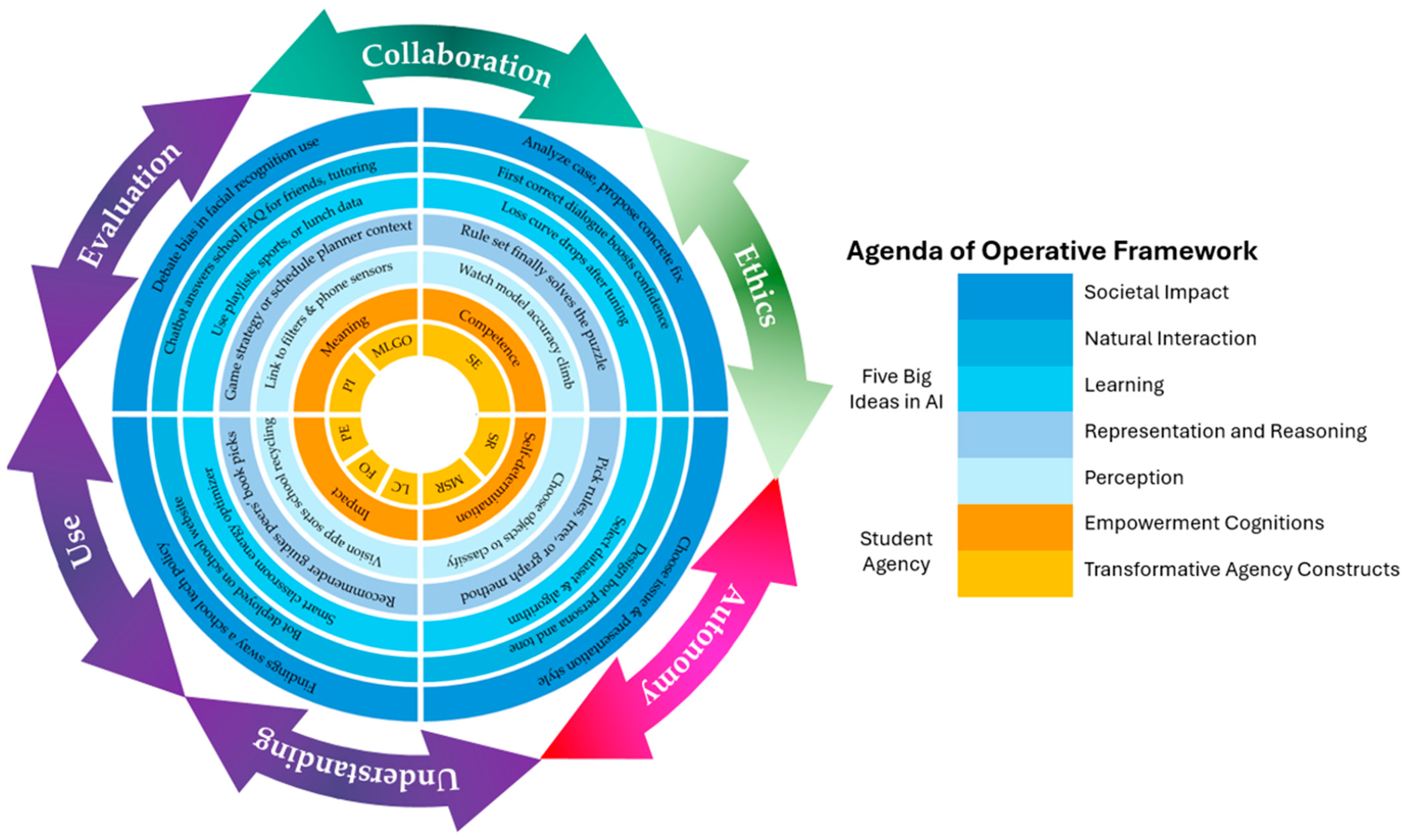

1.1.2. Links to the Five Big Ideas in AI Education

1.2. Aim and Research Questions of the Current Study

- RQ 1: What cluster profiles of transformative agency can be identified among Slovenian technical high school students?

- RQ 2: To what extent do overall AI literacy scores differ between students in the human service and industrial engineering tracks?

- RQ 3: How does membership in a given transformative agency profile predict students’ overall AI literacy scores?

- RQ 4: To what extent do the individual constructs of transformative agency predict students’ overall AI literacy scores, controlling for program track, study year, and sex?

2. Materials and Methods

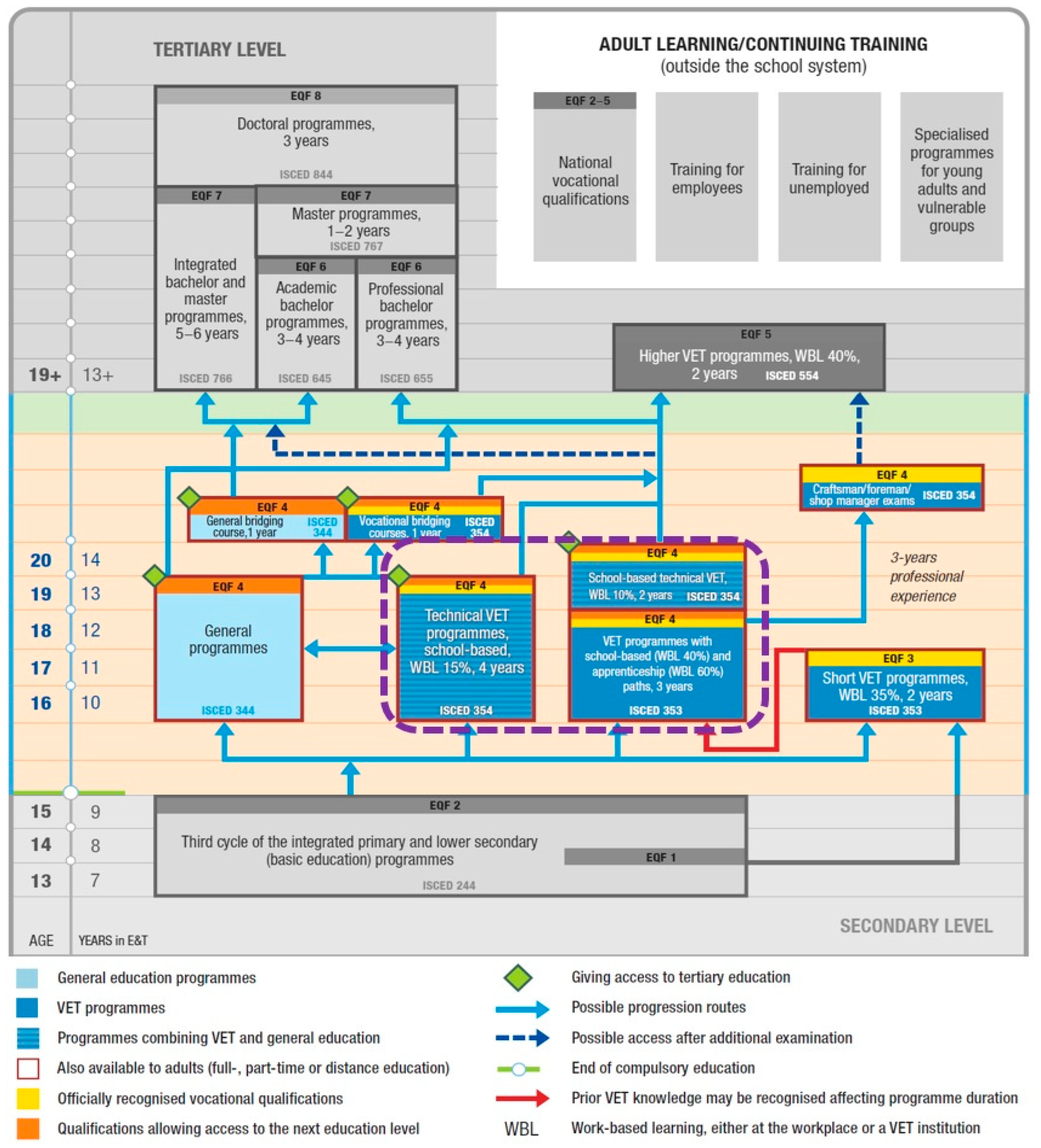

2.1. Participants and Setting

2.2. Data Collection

2.3. Instruments

2.3.1. AI Literacy Test

2.3.2. Student Agency Survey

2.4. Validation Procedures

2.4.1. IRT Calibration of AI Literacy Items

2.4.2. Convergent and Discriminant Validity of Student Agency Constructs

- Fornell–Larcker criterion: each construct’s square root of AVE was larger than its highest latent correlation.

- Heterotrait–monotrait ratio (HTMT): bootstrapped HTMT values ranged from 0.27 to 0.63, all below the conservative 0.85 cut-off [89], and none of the 95% CIs included 1.00.

- Cross-loadings: every indicator loaded more strongly on its designated construct than on any other.
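The first two checks above can be illustrated with a minimal Python sketch. It is not the authors' analysis pipeline: the function names, the two-construct setup, and every number in it are hypothetical values chosen for illustration, and the HTMT function uses absolute item correlations, which is one common variant of the ratio.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def fornell_larcker_ok(ave, latent_corr):
    """Fornell-Larcker check per construct.

    ave maps construct name -> AVE; latent_corr maps a frozenset of two
    construct names -> their latent correlation. A construct passes when
    sqrt(AVE) exceeds its largest absolute correlation with any other one.
    """
    result = {}
    for name, value in ave.items():
        others = [abs(r) for pair, r in latent_corr.items() if name in pair]
        result[name] = sqrt(value) > max(others)
    return result


def htmt(item_corr, items_a, items_b):
    """Heterotrait-monotrait ratio for two constructs.

    item_corr maps frozenset({item1, item2}) -> item-level correlation.
    """
    def r(x, y):
        return abs(item_corr[frozenset((x, y))])

    # Mean correlation between items of different constructs.
    hetero = mean(r(x, y) for x in items_a for y in items_b)
    # Mean correlation among items of the same construct.
    mono_a = mean(r(x, y) for x, y in combinations(items_a, 2))
    mono_b = mean(r(x, y) for x, y in combinations(items_b, 2))
    return hetero / sqrt(mono_a * mono_b)


# Hypothetical values, NOT taken from the study.
ave = {"SE": 0.62, "LC": 0.55}
latent_corr = {frozenset(("SE", "LC")): 0.48}
item_corr = {
    frozenset(("se1", "se2")): 0.64,
    frozenset(("lc1", "lc2")): 0.49,
    frozenset(("se1", "lc1")): 0.30,
    frozenset(("se1", "lc2")): 0.26,
    frozenset(("se2", "lc1")): 0.28,
    frozenset(("se2", "lc2")): 0.28,
}

fl_result = fornell_larcker_ok(ave, latent_corr)
htmt_value = htmt(item_corr, ["se1", "se2"], ["lc1", "lc2"])
print(fl_result, round(htmt_value, 2), htmt_value < 0.85)
```

In practice these quantities are usually read from the output of PLS-SEM software; the sketch only makes the arithmetic behind the criteria explicit.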

2.5. Data Analysis Strategy

- Measurement quality: loadings ≥ 0.70, composite reliability ρC ≥ 0.70, AVE ≥ 0.50, and HTMT < 0.85.

- Collinearity: inner variance inflation factor (VIF) ≤ 3.3.

- Structural coefficients: standardized β, 95% bias-corrected and accelerated bootstrap confidence interval (BCa CI), p, and f².

- Model fit: R², Q², and PLSpredict Root Mean Square Error (RMSE) vs. the Linear Regression Model (LM) benchmark.

- Interpretation: identifying which student agency constructs retain significance after controls.
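Two of the criteria listed above, the VIF threshold and the f² effect size, follow from simple formulas. The sketch below shows them under the standard textbook definitions; the function names and the R² inputs are hypothetical and do not come from the study.

```python
def vif(r2_j):
    """Variance inflation factor of predictor j.

    r2_j is the R-squared obtained when predictor j is regressed on all
    other predictors in the same structural equation.
    """
    return 1.0 / (1.0 - r2_j)


def f_squared(r2_included, r2_excluded):
    """Cohen's f-squared: change in R-squared when a predictor is dropped,
    scaled by the variance left unexplained by the full model."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def passes_collinearity(r2_j, threshold=3.3):
    """True when the predictor's VIF does not exceed the chosen threshold."""
    return vif(r2_j) <= threshold


# Hypothetical inputs for illustration only.
example_vif = vif(0.50)                # predictor shares half its variance
example_f2 = f_squared(0.30, 0.23)     # full-model R2 vs. R2 without the predictor
print(example_vif, round(example_f2, 3), passes_collinearity(0.50))
```

A VIF ceiling of 3.3 corresponds to a predictor whose R² on the remaining predictors is at most about 0.70.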

3. Results

3.1. Psychometric Properties of the Measures

3.1.1. AI Literacy Test

3.1.2. Student Agency Survey

3.2. Main Study Results

3.2.1. Analysis of Student Agency Constructs

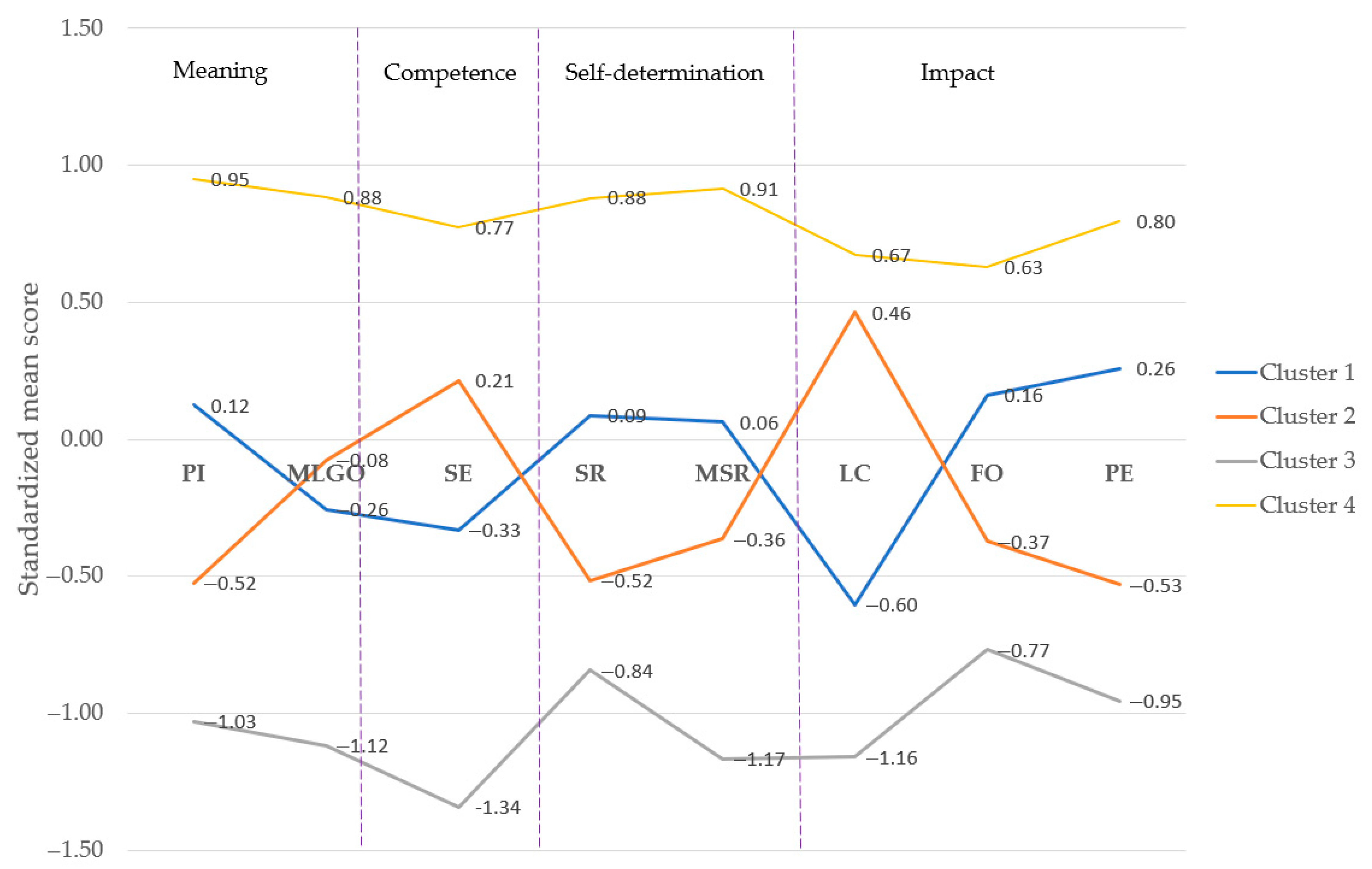

3.2.2. Cluster Profiles of Student Agency

- Low-Self-Belief Moderate (LSBM) (n = 121), characterized by average scores on most agency constructs but notably low self-efficacy and a more external locus of control. Competence is below average, while the scores for meaning and impact hover around the grand mean. LC is slightly external, in contrast to the more internal LC of Cluster 2.

- Confident–Low Drive (CLD) (n = 117), showing high self-efficacy and internal control yet reduced self-determination, perseverance of effort, and interest. The finding regarding impact is mixed: LC suggests these students feel some personal control, but FO and PE are low, signaling limited forward drive.

- Low Agency/Under-engaged (LA) (n = 68), with uniformly low levels across all constructs. Competence (SE) is the lowest-scoring construct. The scores for meaning and impact are subdued, yet SR and FO rise above the cluster’s baseline.

- Highly Agentic (HA) (n = 119), exhibiting high scores on every agency dimension. Meaning and self-determination are especially strong. Impact is solid but shows slightly lower LC and FO than the other dimensions.

3.2.3. AI Literacy Among Human Service and Industrial Engineering Technical Track Students

3.2.4. Student Agency Profiles in Relation to AI Literacy

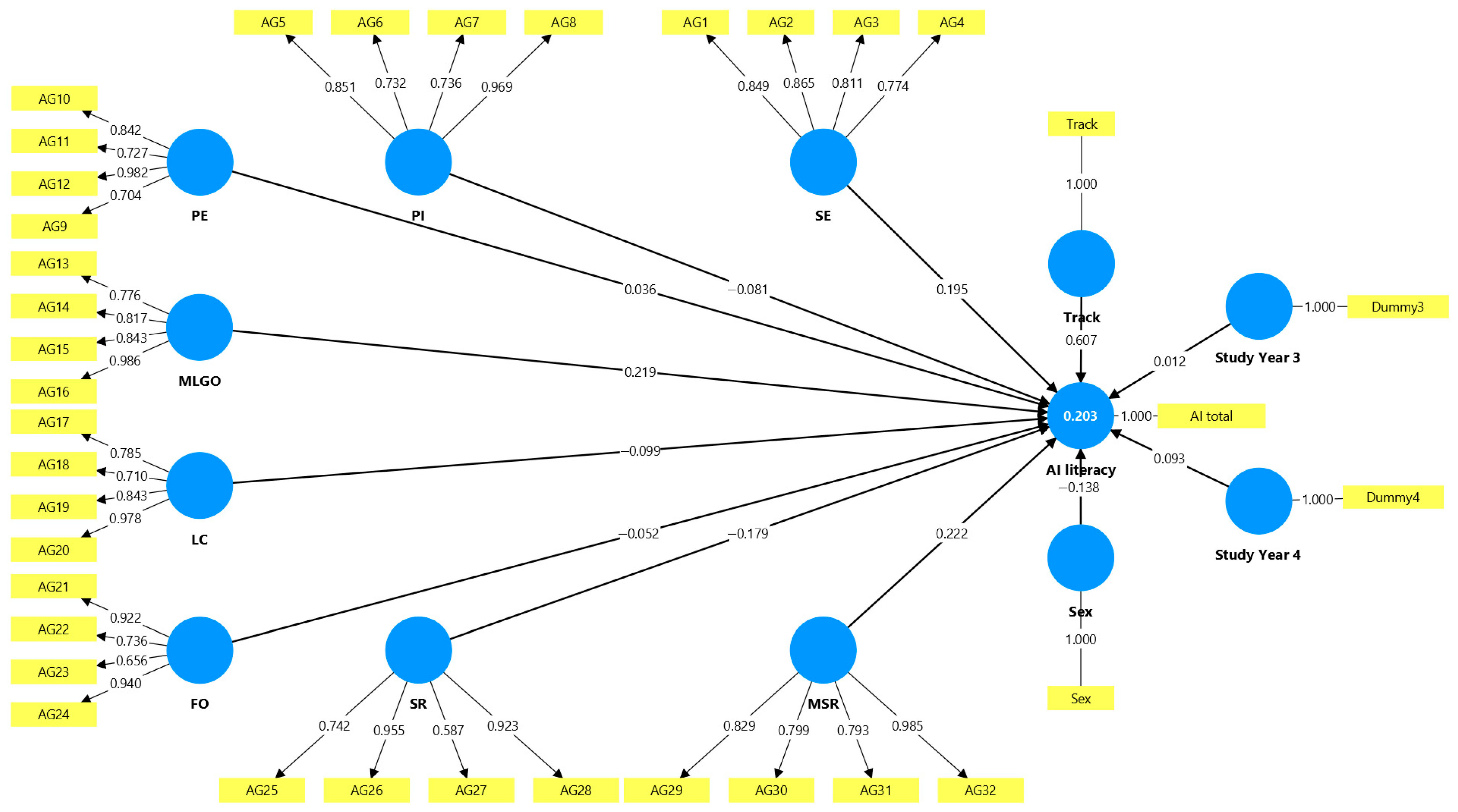

3.2.5. Predictive Power of Transformative Agency Constructs on AI Literacy

4. Discussion

4.1. Student Agency Profiles in VET Students

4.2. AI Literacy in the Human Service and Industrial Engineering Students

4.3. Student Agency Profiles in Relation to AI Literacy

4.4. Predictive Power of Student Transformative Agency Constructs on AI Literacy

4.5. Practical Implications for Curriculum, Pedagogy, and Teacher Education

- Cultivating Self-Efficacy in AI Learning: Instructors and instructional designers should incorporate practices that boost students’ confidence in working with AI. This can be accomplished by providing mastery experiences, using social persuasion to encourage learners’ efforts, and exposing students to role models. (1) Mastery experiences: Begin the term with hands-on, plug-and-play AI activities that prioritize success with minimal technical barriers. For instance, students could use tools like Teachable Machine or web-based platforms (such as Cognimates or Scratch AI) to create simple image recognition or text classification projects. This provides immediate, successful outcomes and establishes a foundation of “I can do this” feelings before more complex coding or theory is introduced [7,186,187]. (2) Social persuasion: Use structured peer feedback during labs or in online forums where students routinely praise each other’s troubleshooting abilities or creativity (e.g., “Noticing the data imbalance was a great catch!”). Instructors can reinforce this by sharing personalized video or written feedback that recognizes progress and persistence rather than just correctness [7,44]. (3) Role models: Regularly feature short talks (live or recorded) from professionals, ideally from underrepresented backgrounds, who apply AI in different contexts (e.g., health, arts, social sciences). Have them address their initial fears, their challenges, and the moment they first felt capable with AI, making the learning journey relatable and fostering a sense of belonging [7,188,189].

- Educational interventions should be strategically oriented towards rapidly building and affirming learners’ confidence with AI concepts and tools. This is especially salient in higher education contexts where time and curricular space for AI are limited, but the societal and workforce demand is urgent [123,190]. The finding that self-efficacy is the crucial catalyst for entry-level AI literacy opens new pathways for supporting students who may not initially self-identify as passionate coders or tech enthusiasts but display situational confidence through prior exposure or effective scaffolding. As echoed in global studies, varied learner groups benefit from tailored approaches, and ‘competence-first’ pathways can broaden access and reduce affective barriers for underrepresented populations [123,142]. As a warm-up, launch courses with activities such as experimenting with generative AI tools (for example, DALL·E for generating images from prompts or ChatGPT for creating a study companion), letting students see AI results quickly without yet coding [186,191]. These activities lower barriers, create rapid success experiences, and provide a springboard for deeper learning. Structure the first sessions as “AI Bootcamps” where learners complete a sequence of scaffolded challenges, such as customizing pre-built AI templates or analyzing simple datasets. This competence-first approach can be especially liberating for students without a strong tech background [7,186,192].

- While competence may suffice for foundational literacy, educators and policymakers can structure curricula with early intensive self-efficacy boosting components, followed by modules that cultivate deeper intrinsic motivation, critical thinking, and meaning making as students progress toward advanced, agentic engagement with AI [24,123,124]. Use the first weeks for exploration with engaging, application-focused AI tools (e.g., AI-driven music composition, image manipulation, or virtual medical diagnosis platforms [186,193]), ensuring initial encounters are positive and confidence-boosting before heavier theoretical material is introduced [7,186,191]. Next, implement assessments that start with low-stakes, growth-focused tasks (e.g., “Share a model you built and one thing you learned”) and gradually incorporate higher-order tasks (like critiquing algorithmic bias or debugging complex code), helping all students feel early success [7,191,192]. Finally, leverage adaptive, AI-powered platforms where tasks and feedback are tailored according to each student’s confidence and progress, letting students choose between “practice” and “challenge” tracks based on their self-assessed readiness [187,194,195].

- Encouraging a Mastery Goal Orientation: Given the positive role of mastery orientation, educational practice should shift the emphasis from performance outcomes to the learning process when it comes to AI. This means creating a classroom culture that values curiosity, improvement, and intellectual risk-taking over merely attaining the right answer or scoring high on a test. To foster intrinsic motivation and enduring learning, build a classroom climate focused on experimentation, curiosity, and growth over competition: (1) Structure rubrics to reward “evidence of revision,” “quality of reflection,” and “problem-solving strategies,” not just the correctness of code or final AI model performance [187,192]. (2) Require logs where students detail their decision processes, false starts, and what they learned from errors (e.g., “Describe two mistakes made while tuning your model and how you addressed them”). Collaborative reflection can occur on shared documents or forums [15,187]. (3) Schedule class sessions where students publicly share failed attempts or flawed AI models and discuss what these taught them about AI, normalizing and destigmatizing failure as a learning tool [191,192].

- Integrating Metacognitive Strategy Training: Our findings on MSR suggest that teaching students how to think about their own learning is especially beneficial in complex domains such as AI. Teaching students to evaluate and steer their own AI learning processes through structured metacognition can be organized as follows: (1) Make it routine for students to complete a post-mortem after each substantial project, detailing what worked, why the model may have failed, and the debugging strategies used. Provide prompts such as: “What variables most affected your results? How would you redesign your model based on outcomes?” [15,187]. (2) During live sessions, prompt students to narrate their reasoning as they develop or troubleshoot code. Optionally, have students record and replay these sessions for critical self-reflection or peer review [44,187]. (3) Pair students for reciprocal reviews that assess the reasoning process rather than the final product. Peers can highlight robust problem-solving or flag skipped assumptions, fostering awareness of diverse strategies [15,188,189].

- Addressing Negative Factors—Fostering Adaptive Agency: The negative associations we found (SR, LC) highlight areas where educators should be cautious and proactive. By explicitly teaching that control in AI contexts means self-directed inquiry, ethical engagement, and adaptive learning—rather than rigid mastery—educators can harness the motivational power of internal locus of control while fostering the epistemic and ethical dispositions required for high AI literacy. This alignment, supported by mastery goals, social participation, and reflected in both curriculum and climate, can decisively transform internal locus of control from a liability to a robust predictor of critical, responsible AI literacy in industrial engineering students [151,155,158,159,163,164].

- Teacher Education and Systemic Supports: To implement the above changes, teacher education programs and professional development workshops should incorporate the principles of transformative agency [196]. Practical actions for embedding transformative agency in teacher education and professional development include co-creation of curriculum and learning experiences; critical reflection and generativity practices; action research and practitioner inquiry; professional learning communities with an agency focus; mentorship models emphasizing agency and inclusion; and embedding transformative agency in AI integration [7,184,185,186,197,198,199].

4.6. Limitations of the Study and Future Research

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| Item | 1PL Bpar (SE) | 2PL Apar (SE) | 2PL Bpar (SE) | 3PL Apar (SE) | 3PL Bpar (SE) | 3PL Cpar (SE) |
|---|---|---|---|---|---|---|
| ai1 | 3.00 (0.21) | 0.99 (0.18) | 3.21 (0.49) | 2.07 (0.46) | 2.86 (0.28) | 0.04 (0.01) |
| ai2 | 0.89 (0.11) | 0.69 (0.12) | 1.28 (0.25) | 1.21 (0.28) | 1.61 (0.23) | 0.16 (0.04) |
| ai3 | 0.89 (0.11) | 0.40 (0.11) | 2.13 (0.59) | 1.63 (0.45) | 2.26 (0.31) | 0.25 (0.03) |
| ai4 | 1.20 (0.12) | 0.64 (0.12) | 1.85 (0.35) | 1.66 (0.41) | 2.04 (0.24) | 0.18 (0.03) |
| ai5 | −0.63 (0.11) | 0.55 (0.12) | −1.07 (0.27) | 0.76 (0.19) | −0.06 (0.45) | 0.25 (0.10) |
| ai6 | −0.12 (0.10) | 0.39 (0.10) | −0.24 (0.26) | 0.75 (0.35) | 1.26 (0.47) | 0.31 (0.10) |
| ai7 | 0.76 (0.11) | 0.54 (0.11) | 1.38 (0.33) | 1.31 (0.38) | 1.83 (0.27) | 0.23 (0.04) |
| ai8 | 1.80 (0.14) | 0.69 (0.13) | 2.60 (0.47) | 1.79 (0.48) | 2.41 (0.26) | 0.12 (0.02) |
| ai9 | 1.03 (0.11) | 0.49 (0.11) | 2.01 (0.47) | 1.60 (0.48) | 2.25 (0.30) | 0.22 (0.03) |
| ai10 | 2.26 (0.16) | 0.93 (0.16) | 2.55 (0.37) | 1.56 (0.36) | 2.48 (0.29) | 0.06 (0.02) |
| ai11 | 1.54 (0.13) | 0.48 (0.12) | 3.03 (0.73) | 2.03 (0.43) | 2.32 (0.24) | 0.16 (0.02) |
| ai12 | 0.95 (0.11) | 0.58 (0.11) | 1.61 (0.34) | 1.80 (0.46) | 1.88 (0.22) | 0.22 (0.03) |
| ai13 | 1.57 (0.13) | 0.64 (0.13) | 2.40 (0.45) | 1.30 (0.33) | 2.31 (0.32) | 0.12 (0.03) |
| ai14 | 1.11 (0.12) | 0.77 (0.13) | 1.45 (0.25) | 1.21 (0.29) | 1.79 (0.25) | 0.14 (0.04) |
| ai15 | 0.54 (0.11) | 0.54 (0.11) | 0.98 (0.26) | 1.11 (0.37) | 1.72 (0.30) | 0.24 (0.06) |
| ai16 | 1.32 (0.12) | 0.54 (0.12) | 2.37 (0.51) | 1.66 (0.49) | 2.33 (0.29) | 0.18 (0.03) |
| ai17 | 1.19 (0.12) | 0.49 (0.11) | 2.32 (0.54) | 1.67 (0.48) | 2.32 (0.29) | 0.20 (0.03) |
| ai18 | 5.73 (0.69) | 1.79 (0.49) | 4.41 (0.61) | 1.90 (0.51) | 4.14 (0.60) | 0.01 (0.00) |
| ai19 | 0.88 (0.11) | 0.41 (0.11) | 2.02 (0.55) | 2.09 (0.46) | 2.07 (0.22) | 0.26 (0.02) |
| ai20 | 0.79 (0.11) | 0.46 (0.11) | 1.66 (0.43) | 1.38 (0.46) | 2.15 (0.32) | 0.25 (0.04) |
| ai21 | 0.85 (0.11) | 0.53 (0.11) | 1.55 (0.36) | 1.72 (0.44) | 1.83 (0.22) | 0.23 (0.03) |
| ai22 | −0.31 (0.10) | 0.67 (0.12) | −0.44 (0.16) | 0.82 (0.18) | 0.23 (0.34) | 0.20 (0.09) |
| ai23 | 1.08 (0.12) | 0.47 (0.11) | 2.19 (0.53) | 1.84 (0.46) | 2.15 (0.25) | 0.22 (0.03) |
| ai24 | 0.03 (0.10) | 0.47 (0.11) | 0.10 (0.21) | 0.87 (0.35) | 1.31 (0.39) | 0.29 (0.09) |
| ai25 | 1.14 (0.12) | 0.48 (0.11) | 2.29 (0.55) | 1.54 (0.49) | 2.41 (0.33) | 0.21 (0.03) |
| ai26 | −0.76 (0.11) | 2.16 (0.21) | −0.55 (0.06) | 2.36 (0.25) | −0.40 (0.09) | 0.12 (0.05) |
| ai27 | 1.02 (0.11) | 0.48 (0.11) | 2.05 (0.49) | 0.97 (0.37) | 2.44 (0.44) | 0.19 (0.05) |
| ai28 | 0.48 (0.11) | 0.49 (0.11) | 0.97 (0.29) | 0.83 (0.33) | 1.89 (0.41) | 0.23 (0.08) |
| ai29 | −0.04 (0.10) | 1.03 (0.14) | −0.05 (0.10) | 1.36 (0.22) | 0.27 (0.15) | 0.13 (0.06) |
| ai30 | 1.23 (0.12) | 0.38 (0.11) | 3.06 (0.88) | 2.23 (0.42) | 2.29 (0.23) | 0.21 (0.02) |
| ai31 | 0.59 (0.11) | 0.73 (0.12) | 0.83 (0.19) | 1.21 (0.30) | 1.36 (0.22) | 0.19 (0.05) |
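To help read the parameter table, the following sketch evaluates an item response function for item ai26 using its tabulated 2PL and 3PL estimates. It assumes the common logistic form P(θ) = c + (1 − c) / (1 + exp(−a(θ − b))) with no additional scaling constant; the section does not state which parametrization the calibration software used, so this is an illustration rather than a reproduction of the authors' model.

```python
from math import exp


def p_correct(theta, a=1.0, b=0.0, c=0.0):
    """Probability of a correct response under the 3PL logistic model.

    a: discrimination, b: difficulty, c: pseudo-guessing (lower asymptote).
    With c = 0 this reduces to the 2PL model (assumed parametrization).
    """
    return c + (1.0 - c) / (1.0 + exp(-a * (theta - b)))


# Point estimates for item ai26, copied from the table above.
AI26_2PL = {"a": 2.16, "b": -0.55}
AI26_3PL = {"a": 2.36, "b": -0.40, "c": 0.12}

# At theta equal to the item difficulty, the 2PL probability is 0.5 and
# the 3PL probability is c + (1 - c) / 2.
p_2pl_at_b = p_correct(AI26_2PL["b"], **AI26_2PL)
p_3pl_at_b = p_correct(AI26_3PL["b"], **AI26_3PL)

# Response probabilities for a few ability levels under the 3PL estimates.
curve = [(theta, round(p_correct(theta, **AI26_3PL), 3)) for theta in (-2, -1, 0, 1, 2)]
print(p_2pl_at_b, p_3pl_at_b, curve)
```

Under this form, the Cpar column is the probability floor for very low ability, which is why an item with c = 0.12 crosses 0.56 rather than 0.50 at θ = b.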

| Item | 1PL S-X² | 1PL df | 1PL p | 2PL S-X² | 2PL df | 2PL p | 3PL S-X² | 3PL df | 3PL p |
|---|---|---|---|---|---|---|---|---|---|
| ai1 | 13.738 | 20 | 0.844 | 15.579 | 18 | 0.622 | 12.425 | 18 | 0.825 |
| ai2 | 26.513 | 19 | 0.117 | 26.745 | 18 | 0.084 | 29.533 | 18 | 0.042 |
| ai3 | 27.435 | 19 | 0.095 | 22.966 | 19 | 0.239 | 23.904 | 18 | 0.158 |
| ai4 | 14.144 | 19 | 0.775 | 14.773 | 19 | 0.737 | 15.030 | 19 | 0.721 |
| ai5 | 10.087 | 20 | 0.967 | 12.597 | 19 | 0.859 | 12.176 | 17 | 0.789 |
| ai6 | 23.733 | 19 | 0.207 | 15.393 | 18 | 0.635 | 16.981 | 19 | 0.591 |
| ai7 | 31.577 | 21 | 0.065 | 27.242 | 18 | 0.075 | 25.953 | 18 | 0.101 |
| ai8 | 12.570 | 20 | 0.895 | 13.935 | 19 | 0.788 | 10.800 | 18 | 0.903 |
| ai9 | 12.381 | 21 | 0.929 | 13.350 | 19 | 0.820 | 12.123 | 19 | 0.880 |
| ai10 | 24.464 | 19 | 0.179 | 24.662 | 18 | 0.135 | 22.187 | 19 | 0.275 |
| ai11 | 31.654 | 20 | 0.047 | 26.880 | 20 | 0.139 | 33.743 | 19 | 0.020 |
| ai12 | 20.501 | 20 | 0.427 | 16.813 | 19 | 0.603 | 17.635 | 17 | 0.412 |
| ai13 | 23.602 | 19 | 0.212 | 31.960 | 20 | 0.044 | 32.575 | 19 | 0.027 |
| ai14 | 25.273 | 20 | 0.191 | 25.972 | 19 | 0.131 | 25.467 | 17 | 0.085 |
| ai15 | 15.515 | 21 | 0.796 | 14.855 | 19 | 0.732 | 14.589 | 16 | 0.555 |
| ai16 | 7.384 | 20 | 0.995 | 7.603 | 20 | 0.994 | 6.569 | 17 | 0.989 |
| ai17 | 23.329 | 19 | 0.223 | 22.024 | 20 | 0.339 | 23.934 | 19 | 0.199 |
| ai18 | 4.747 | 21 | 1.000 | 2.297 | 18 | 1.000 | 3.710 | 17 | 1.000 |
| ai19 | 21.931 | 20 | 0.344 | 17.823 | 18 | 0.467 | 15.766 | 18 | 0.609 |
| ai20 | 22.406 | 20 | 0.319 | 22.274 | 20 | 0.326 | 20.772 | 17 | 0.237 |
| ai21 | 19.335 | 19 | 0.436 | 19.660 | 19 | 0.415 | 32.756 | 19 | 0.026 |
| ai22 | 12.537 | 19 | 0.861 | 14.938 | 20 | 0.780 | 13.578 | 18 | 0.756 |
| ai23 | 22.144 | 20 | 0.333 | 17.816 | 18 | 0.468 | 20.662 | 18 | 0.297 |
| ai24 | 16.049 | 20 | 0.714 | 14.098 | 18 | 0.723 | 16.635 | 19 | 0.615 |
| ai25 | 17.800 | 19 | 0.536 | 16.053 | 19 | 0.654 | 16.548 | 18 | 0.554 |
| ai26 | 53.670 | 18 | 0.000 | 15.749 | 18 | 0.610 | 18.446 | 19 | 0.493 |
| ai27 | 18.973 | 20 | 0.524 | 15.850 | 19 | 0.667 | 16.687 | 18 | 0.545 |
| ai28 | 29.157 | 20 | 0.085 | 26.963 | 19 | 0.106 | 26.625 | 19 | 0.114 |
| ai29 | 22.130 | 20 | 0.334 | 23.788 | 18 | 0.162 | 28.899 | 18 | 0.050 |
| ai30 | 31.062 | 19 | 0.040 | 27.408 | 19 | 0.096 | 25.541 | 18 | 0.111 |
| ai31 | 11.843 | 20 | 0.921 | 12.248 | 20 | 0.907 | 9.702 | 18 | 0.941 |
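The p column in the item-fit table is the upper-tail probability of a chi-square distribution evaluated at the S-X² statistic with the listed degrees of freedom. The sketch below recomputes it with the Python standard library only, using the closed-form series for integer degrees of freedom; the function name `chi2_sf` is an illustrative choice, and the authors most likely obtained these values directly from their IRT software.

```python
from math import erfc, exp, pi, sqrt


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variate.

    Uses the finite-series closed forms that hold for integer df >= 1.
    """
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) plus a finite series of half-integer powers.
    total = erfc(sqrt(half))
    term = sqrt(2.0 * x / pi) * exp(-half)  # first series term (r = 1)
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)  # step to the next term of the series
    return total


# Values copied from the 1PL columns of the table above.
p_ai1 = chi2_sf(13.738, 20)   # tabulated p = 0.844
p_ai26 = chi2_sf(53.670, 18)  # tabulated p = 0.000 (misfit under 1PL)
print(round(p_ai1, 3), p_ai26 < 0.001)
```

Read this way, item ai26 is the clearest misfit under the 1PL model, while its fit is acceptable once a discrimination parameter is estimated (2PL p = 0.610).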

References

- Allen, L.K.; Kendeou, P. ED-AI Lit: An Interdisciplinary Framework for AI Literacy in Education. Policy Insights Behav. Brain Sci. 2023, 11, 3–10. [Google Scholar] [CrossRef]

- Yang, Y.; Zhang, Y.; Sun, D.; He, W.; Wei, Y. Navigating the landscape of AI literacy education: Insights from a decade of research (2014–2024). Humanit. Soc. Sci. Commun. 2025, 12, 374. [Google Scholar] [CrossRef]

- Chiu, T.K.F.; Sanusi, I.T. Define, foster, and assess student and teacher AI literacy and competency for all: Current status and future research direction. Comput. Educ. Open 2024, 7, 100182. [Google Scholar] [CrossRef]

- Darvishi, A.; Khosravi, H.; Sadiq, S.; Gašević, D.; Siemens, G. Impact of AI assistance on student agency. Comput. Educ. 2024, 210, 104967. [Google Scholar] [CrossRef]

- Licardo, M.; Kranjec, E.; Lipovec, A.; Dolenc, K.; Arcet, B.; Flogie, A.; Plavčak, D.; Ivanuš Grmek, M.; Bednjički Rošer, B.; Sraka Petek, B.; et al. Generativna Umetna Inteligenca v Izobraževanju: Analiza Stanja v Primarnem, Sekundarnem in Terciarnem Izobraževanju; University of Maribor, University Press: Maribor, Slovenia, 2025; Available online: https://press.um.si/index.php/ump/catalog/view/950/1409/5110 (accessed on 10 May 2025).

- Garg, P.K. Overview of Artificial Intelligence. In Artificial Intelligence; Sharma, L., Garg, P.K., Eds.; Chapman and Hall/CRC: New York, NY, USA, 2021; pp. 3–18. [Google Scholar]

- Chiu, T.K.F.; Meng, H.M.; Chai, C.; King, I.; Wong, S.; Yam, Y. Creation and Evaluation of a Pretertiary Artificial Intelligence (AI) Curriculum. IEEE Trans. Educ. 2021, 65, 30–39. [Google Scholar] [CrossRef]

- Ali, S.; Kumar, V.; Breazeal, C. AI Audit: A Card Game to Reflect on Everyday AI Systems. arXiv 2023, arXiv:2305.17910. [Google Scholar] [CrossRef]

- Casal-Otero, L.; Catala, A.; Fernández-Morante, C.; Taboada, M.; Cebreiro, B.; Barro, S. AI literacy in K-12: A systematic literature review. Int. J. STEM Educ. 2023, 10, 1–17. [Google Scholar] [CrossRef]

- Grover, S.; Broll, B.; Babb, D. Cybersecurity Education in the Age of AI: Integrating AI Learning into Cybersecurity High School Curricula. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education, New York, NY, USA, 15–18 March 2023; Volume 1. [Google Scholar] [CrossRef]

- Borasi, R.; Miller, D.E.; Vaughan-Brogan, P.; DeAngelis, K.; Han, Y.J.; Mason, S. An AI Wishlist from School Leaders. Phi Delta Kappan 2024, 105, 48–51. [Google Scholar] [CrossRef]

- Wu, D.; Chen, M.; Chen, X.; Liu, X. Analyzing K-12 AI education: A large language model study of classroom instruction on learning theories, pedagogy, tools, and AI literacy. Comput. Educ. Artif. Intell. 2024, 7, 100295. [Google Scholar] [CrossRef]

- Vieriu, A.M.; Petrea, G. The Impact of Artificial Intelligence (AI) on Students’ Academic Development. Educ. Sci. 2025, 15, 343. [Google Scholar] [CrossRef]

- Hornberger, M.; Bewersdorff, A.; Schiff, D.S.; Nerdel, C. A multinational assessment of AI literacy among university students in Germany, the UK, and the US. Comput. Hum. Behav. 2025, 4, 100132. [Google Scholar] [CrossRef]

- Jia, X.-H.; Tu, J.-C. Towards a New Conceptual Model of AI-Enhanced Learning for College Students: The Roles of Artificial Intelligence Capabilities, General Self-Efficacy, Learning Motivation, and Critical Thinking Awareness. Systems 2024, 12, 74. [Google Scholar] [CrossRef]

- Stolpe, K.; Hallström, J. Artificial intelligence literacy for technology education. Comput. Educ. Open 2024, 6, 100159. [Google Scholar] [CrossRef]

- Sperling, K.; Stenberg, C.-J.; McGrath, C.; Åkerfeldt, A.; Heintz, F.; Stenliden, L. In search of artificial intelligence (AI) literacy in teacher education: A scoping review. Comput. Educ. Open 2024, 6, 100169. [Google Scholar] [CrossRef]

- Walter, Y. Embracing the future of Artificial Intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. Int. J. Educ. Technol. High. Educ. 2024, 21, 15. [Google Scholar] [CrossRef]

- Bewersdorff, A.; Hornberger, M.; Nerdel, C.; Schiff, D.S. AI advocates and cautious critics: How AI attitudes, AI interest, use of AI, and AI literacy build university students’ AI self-efficacy. Comp. Educ. Artif. Intell. 2025, 8, 100340. [Google Scholar] [CrossRef]

- Klemenčič, M. What is student agency? An ontological exploration in the context of research on student engagement. In Student Engagement in Europe: Society, Higher Education and Student Governance; Klemenčič, M., Bergan, S., Primožič, R., Eds.; Council of Europe Publishing: Strasbourg, France, 2015. [Google Scholar]

- Zhao, J.; Li, S.; Zhang, J. Understanding Teachers’ Adoption of AI Technologies: An Empirical Study from Chinese Middle Schools. Systems 2025, 13, 302. [Google Scholar] [CrossRef]

- GEN-UI. Available online: https://gen-ui.si/ (accessed on 25 April 2025).

- Long, D.; Magerko, B. What is AI literacy? Competencies and design considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13. [Google Scholar] [CrossRef]

- Ng, D.T.K.; Wu, W.; Leung, J.K.L.; Chiu, T.K.F.; Chu, S.K.W. Design and validation of the AI literacy questionnaire: The affective, behavioural, cognitive and ethical approach. Br. J. Educ. Technol. 2024, 55, 1082–1104. [Google Scholar] [CrossRef]

- Spreitzer, G.M. Psychological empowerment in the workplace: Dimensions, measurement, and validation. Acad. Manag. J. 1995, 38, 1442–1465. [Google Scholar] [CrossRef]

- Thomas, K.W.; Velthouse, B.A. Cognitive elements of empowerment: An “interpretive” model of intrinsic task motivation. Acad. Manag. Rev. 1990, 15, 666–681. [Google Scholar] [CrossRef]

- Kong, S.C.; Zhu, J.; Yang, Y.N. Developing and Validating a Scale of Empowerment in Using Artificial Intelligence for Problem-Solving for Senior Secondary and University Students. Comput. Educ. Artif. Intell. 2025, 8, 100359. [Google Scholar] [CrossRef]

- Kong, S.C.; Chiu, M.M.; Lai, M. A Study of Primary School Students’ Interest, Collaboration Attitude, and Programming Empowerment in CT Education. Comput. Educ. 2018, 127, 178–189. [Google Scholar] [CrossRef]

- Gibson, D.; Kovanovic, V.; Ifenthaler, D.; Dexter, S.; Feng, S. Learning Theories for Artificial Intelligence Promoting Learning Processes. Br. J. Educ. Technol. 2023, 54, 1125–1146. [Google Scholar] [CrossRef]

- Jääskelä, P.; Poikkeus, A.-M.; Häkkinen, P.; Vasalampi, K.; Rasku-Puttonen, H.; Tolvanen, A. Students’ Agency Profiles in Relation to Student-Perceived Teaching Practices in University Courses. Int. J. Educ. Res. 2020, 103, 101604. [Google Scholar] [CrossRef]

- Diseth, Å. Self-Efficacy, Goal Orientations and Learning Strategies as Mediators between Preceding and Subsequent Academic Achievement. Learn. Individ. Differ. 2011, 21, 191–195. [Google Scholar] [CrossRef]

- Domino, M.R. Self-Regulated Learning Skills in Computer Science: The State of the Field. Ph.D. Thesis, Virginia Tech, Blacksburg, VA, USA, 21 August 2024. [Google Scholar]

- Salas-Pilco, Z.; Yang, Y.; Zhang, Z. AI-Empowered Self-Regulated Learning in Higher Education: A Qualitative Systematic Review. Sci. Learn. 2024, 9, 21. [Google Scholar] [CrossRef]

- Chang, S.-H.; Yao, K.-C.; Chen, Y.-T.; Chung, C.-Y.; Huang, W.-L.; Ho, W.-S. Integrating Motivation Theory into the AIED Curriculum for Technical Education: Examining the Impact on Learning Outcomes and the Moderating Role of Computer Self-Efficacy. Information 2025, 16, 50. [Google Scholar] [CrossRef]

- Getenet, S.; Cantle, R.; Redmond, P.; Albion, P. Students’ digital technology attitude, literacy and self-efficacy in online learning. Int. J. Educ. Tech. High. Educ. 2024, 21, 3. [Google Scholar] [CrossRef]

- Sullivan, K. Achievement via Individual Determination (AVID) and Development of Student Agency. Ph.D. Thesis, University of North Carolina at Chapel Hill Graduate School, Chapel Hill, NC, USA, 26 May 2022. [Google Scholar] [CrossRef]

- Teachers Institute. Available online: https://teachers.institute/learning-learner-development/locus-of-control-learner-autonomy-achievement/ (accessed on 18 April 2025).

- Cui, H.; Bi, X.; Chen, W.; Gao, T.; Qing, Z.; Shi, K.; Ma, Y. Gratitude and academic engagement: Exploring the mediating effects of internal locus of control and subjective well-being. Front. Psychol. 2023, 14, 1287702. [Google Scholar] [CrossRef]

- Li, J.; Li, Y. The Role of Grit on Students’ Academic Success in Experiential Learning Context. Front. Psychol. 2021, 12, 774149. [Google Scholar] [CrossRef]

- Yilmaz, R.; Yilmaz, F.G.K. The Effect of Generative Artificial Intelligence (AI)-Based Tool Use on Students’ Computational Thinking Skills, Programming Self-Efficacy and Motivation. Comput. Educ. Artif. Intell. 2023, 4, 100147. [Google Scholar] [CrossRef]

- Sigurdson, N.; Petersen, A. An exploration of grit in a CS1 context. In Proceedings of the Koli Calling ’18: 18th Koli Calling International Conference on Computing Education Research, New York, NY, USA, 22–25 November 2018; Association for Computing Machinery: New York, NY, USA, 2018; Volume 23, pp. 1–5. [Google Scholar] [CrossRef]

- Dang, S.; Quach, S.; Roberts, R.E. Explanation of Time Perspectives in Adopting AI Service Robots under Different Service Settings. J. Retail. Consum. Serv. 2025, 82, 104109. [Google Scholar] [CrossRef]

- Southworth, J.; Migliaccio, K.; Glover, J.; Glover, J.N.; Reed, D.; McCarty, C.; Brendemuhl, J.; Thomas, A. Developing a Model for AI across the Curriculum: Transforming the Higher Education Landscape via Innovation in AI Literacy. Comput. Educ. Artif. Intell. 2023, 4, 100127. [Google Scholar] [CrossRef]

- Chiu, T.K.F.; Moorhouse, B.L.; Chai, C.S.; Ismailov, M. Teacher Support and Student Motivation to Learn with Artificial Intelligence (AI) Based Chatbot. Interact. Learn. Environ. 2023, 32, 3240–3256. [Google Scholar] [CrossRef]

- Wei, L. Artificial Intelligence in Language Instruction: Impact on English Learning Achievement, L2 Motivation, and Self-Regulated Learning. Front. Psychol. 2023, 14, 1261955. [Google Scholar] [CrossRef]

- Chang, D.H.; Lin, M.P.-C.; Hajian, S.; Wang, Q.Q. Educational Design Principles of Using AI Chatbot That Supports Self-Regulated Learning in Education: Goal Setting, Feedback, and Personalization. Sustainability 2023, 15, 12921. [Google Scholar] [CrossRef]

- Zimmerman, B.J. Investigating Self-Regulation and Motivation: Historical Background, Methodological Developments, and Future Prospects. Am. Educ. Res. J. 2008, 45, 166–183. [Google Scholar] [CrossRef]

- Molenaar, I.; Mooij, S.D.; Azevedo, R.; Bannert, M.; Järvelä, S.; Gašević, D. Measuring Self-Regulated Learning and the Role of AI: Five Years of Research Using Multimodal Multichannel Data. Comput. Hum. Behav. 2023, 139, 107540. [Google Scholar] [CrossRef]

- Deci, E.L.; Ryan, R.M. Self-Determination Theory: A Macrotheory of Human Motivation, Development, and Health. Can. Psychol. 2008, 49, 182–185. [Google Scholar] [CrossRef]

- Guo, J.; An, F.; Lu, Y. Relationship between Perceived Support and Learning Approaches: The Mediating Role of Perceived Classroom Mastery Goal Structure and Computer Self-Efficacy. Curr. Psychol. 2024, 43, 18561–18575. [Google Scholar] [CrossRef]

- Alt, D.; Weinberger, A.; Heinrichs, K.; Naamati-Schneider, L. The Role of Goal Orientations and Learning Approaches in Explaining Digital Concept Mapping Utilization in Problem-Based Learning. Curr. Psychol. 2023, 42, 14175–14190. [Google Scholar] [CrossRef]

- Pesonen, H.; Leinonen, J.; Haaranen, L.; Hellas, A. Exploring the interplay of achievement goals, self-efficacy, prior experience and course achievement. In Proceedings of the UKICER 23: 2023 Conference on United Kingdom & Ireland Computing Education Research, New York, NY, USA, 7–8 September 2023; Association for Computing Machinery: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- Wang, C.K.J.; Liu, W.C.; Kee, Y.H.; Chian, L.K. Competence, Autonomy, and Relatedness in the Classroom: Understanding Students’ Motivational Processes Using the Self-Determination Theory. Heliyon 2019, 5, e01983. [Google Scholar] [CrossRef] [PubMed]

- Martín-Núñez, J.L.; Ar, A.; Fernández, R.P.; Abbas, A.; Radovanovic, A. Does Intrinsic Motivation Mediate Perceived Artificial Intelligence (AI) Learning and Computational Thinking of Students During the COVID-19 Pandemic? Comput. Educ. Artif. Intell. 2023, 4, 100128.

- Dang, S.; Quach, S.; Roberts, R.E. How time fuels AI device adoption: A contextual model enriched by machine learning. Technol. Forecast. Soc. Change 2025, 212, 123975.

- UNESCO. AI Competency Framework for Students; UNESCO: Paris, France, 2024.

- Geitz, G.; Brinke, D.J.; Kirschner, P.A. Changing Learning Behaviour: Self-Efficacy and Goal Orientation in PBL Groups in Higher Education. Int. J. Educ. Res. 2016, 75, 146–158.

- Rončević Zubković, B.; Kolić-Vehovec, S. Perceptions of Contextual Achievement Goals: Contribution to High-School Students’ Achievement Goal Orientation, Strategy Use and Academic Achievement. Stud. Psychol. 2014, 56, 137–153.

- Won, S.; Anderman, E.M.; Zimmerman, R.S. Longitudinal Relations of Classroom Goal Structures to Students’ Motivation and Learning Outcomes in Health Education. J. Educ. Psychol. 2020, 112, 1003–1019.

- Honicke, T.; Broadbent, J.; Fuller-Tyszkiewicz, M. Learner Self-Efficacy, Goal Orientation, and Academic Achievement: Exploring Mediating and Moderating Relationships. High. Educ. Res. Dev. 2020, 39, 689–703.

- Touretzky, D.S.; McCune, C.G.; Martin, F.; Seehorn, D. Envisioning AI for K–12: What Should Every Child Know about AI? In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Menlo Park, CA, USA, 2019.

- Touretzky, D.S.; Gardner-McCune, C.; Seehorn, D. Machine Learning and the Five Big Ideas in AI. Int. J. Artif. Intell. Educ. 2023, 33, 233–266.

- Bećirović, S.; Polz, E.; Tinkel, I. Exploring Students’ AI Literacy and Its Effects on Their AI Output Quality, Self-Efficacy, and Academic Performance. Smart Learn. Environ. 2025, 12, 29.

- Ulfert-Blank, A.-S.; Schmidt, I. Assessing Digital Self-Efficacy: Review and Scale Development. Comput. Educ. 2022, 191, 104626.

- Fung, K.Y.; Fung, K.C.; Lui, T.L.R.; Sin, K.F.; Lee, L.H.; Qu, H.; Song, S. Exploring the Impact of Robot Interaction on Learning Engagement: A Comparative Study of Two Multi-Modal Robots. Smart Learn. Environ. 2025, 12, 12.

- Biagini, G. Assessing the Assessments: Toward a Multidimensional Approach to AI Literacy. Med. Educ. 2023, 20, 91–101.

- Kaup, C.; Brooks, E.A. Cultural–Historical Perspective on How Double Stimulation Triggers Expansive Learning: Implementing Computational Thinking in Mathematics. Des. Learn. 2022, 14, 151–164.

- Engeström, Y. Learning by Expanding: An Activity-Theoretical Approach to Developmental Research, 2nd ed.; Cambridge University Press: Cambridge, UK, 2015.

- Engeström, Y.; Sannino, A. From Mediated Actions to Heterogeneous Coalitions: Four Generations of Activity-Theoretical Studies of Work and Learning. Mind Cult. Act. 2021, 28, 4–23.

- Engeström, Y. Expansive Learning at Work: Toward an Activity Theoretical Reconceptualization. J. Educ. Work 2001, 14, 133–156.

- Engeström, Y.; Sannino, A.; Virkkunen, J. On the Methodological Demands of Formative Interventions. Mind Cult. Act. 2014, 21, 118–128.

- Batiibwe, M.S.K. Using Cultural–Historical Activity Theory to Understand How Emerging Technologies Can Mediate Teaching and Learning in a Mathematics Classroom: A Review of Literature. Res. Pract. Technol. Enhanc. Learn. 2019, 14, 12.

- Sannino, A. Transformative Agency as Warping: How Collectives Accomplish Change Amidst Uncertainty. Pedagog. Cult. Soc. 2022, 30, 9–33.

- Prihatmanto, A.S.; Sukoco, A.; Budiyon, A. Next Generation Smart System: 4-Layer Modern Organization and Activity Theory for a New Paradigm Perspective. Arch. Control. Sci. 2024, 34, 589–623.

- Gormley, G.J.; Kajamaa, A.; Conn, R.L.; O’Hare, S. Making the Invisible Visible: A Place for Utilizing Activity Theory within In Situ Simulation to Drive Healthcare Organizational Development? Adv. Simul. 2020, 5, 29.

- Lim, J.; Leinonen, T.; Lipponen, L. How can artificial intelligence be used in creative learning? Cultural-historical activity theory analysis in Finnish kindergarten. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners, Doctoral Consortium and Blue Sky, Proceedings of 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024; Olney, A.M., Chounta, I.-A., Liu, Z., Santos, O.C., Bittencourt, I.I., Eds.; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2024; Volume 2151, pp. 149–156.

- Xiang, X.; Wei, Y.; Lei, Y.; Li, W.; He, X. Impact of Psychological Empowerment on Job Satisfaction among Preschool Teachers: Mediating Role of Professional Identity. Humanit. Soc. Sci. Commun. 2024, 11, 1175.

- Ganduri, L.; Collier Reed, B. A Cultural-Historical Activity Theory Approach to Studying the Development of Students’ Digital Agency in Higher Education. In Proceedings of the European Society for Engineering Education (SEFI), Dublin, Ireland, 11–14 September 2023; pp. 477–487.

- Creswell, J.W.; Creswell, J.L. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches; SAGE Publications, Inc.: Thousand Oaks, CA, USA, 2023.

- Center Republike Slovenije za Poklicno Izobraževanje (CPI). Izobraževalni Programi. Available online: https://cpi.si/poklicno-izobrazevanje/izobrazevalni-programi/ (accessed on 15 May 2025).

- Ng, D.; Leung, J.; Chu, S.K.; Qiao, M.S. AI literacy: Definition, teaching, evaluation and ethical issues. Proc. Assoc. Inf. Sci. Technol. 2021, 58, 504–509.

- Ng, D.T.K.; Leung, J.K.L.; Chu, S.K.W.; Qiao, M.S. Conceptualizing AI Literacy: An Exploratory Review. Comput. Educ. Artif. Intell. 2021, 2, 100041.

- Hornberger, M.; Bewersdorff, A.; Nerdel, C. What Do University Students Know about Artificial Intelligence? Development and Validation of an AI Literacy Test. Comput. Educ. Artif. Intell. 2023, 5, 100165.

- Chen, Y.; Lin, L.; Zhu, X. Is Social Interaction a Catalyst for Digital Environments? Exploring Affordances of Synchronous Delivery in Online CLIL. Interact. Learn. Environ. 2024, 33, 2689–2702.

- Zeiser, K.; Scholz, C.; Cirks, V. Maximizing Student Agency: Implementing and Measuring Student-Centered Learning Practices; American Institutes for Research: Washington, DC, USA, 2018.

- Rupnik, D.; Avsec, S. Student Agency as an Enabler in Cultivating Sustainable Competencies for People-Oriented Technical Professions. Educ. Sci. 2025, 15, 469.

- Benjamini, Y.; Hochberg, Y. Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing. J. R. Stat. Soc. Ser. B 1995, 57, 289–300.

- Meyer, J.P. Applied Measurement with jMetrik; Routledge: New York, NY, USA, 2014.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A New Criterion for Assessing Discriminant Validity in Variance-Based Structural Equation Modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- George, D.; Mallery, P. IBM SPSS Statistics 29 Step by Step: A Simple Guide and Reference, 18th ed.; Routledge: New York, NY, USA, 2024.

- Mishra, P.; Pandey, C.M.; Singh, U.; Gupta, A.; Sahu, C.; Keshri, A. Descriptive Statistics and Normality Tests for Statistical Data. Ann. Card. Anaesth. 2019, 22, 67–72.

- LeBlanc, V.; Cox, M.A.A. Interpretation of the Point-Biserial Correlation Coefficient in the Context of a School Examination. Quant. Methods Psychol. 2017, 13, 46–56.

- Cohen, J.; Cohen, P.; West, S.G.; Aiken, L.S. Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences, 3rd ed.; Routledge: Mahwah, NJ, USA, 2003.

- Pituch, K.A.; Stevens, J.P. Applied Multivariate Statistics for the Social Sciences: Analyses with SAS and IBM’s SPSS, 6th ed.; Routledge: New York, NY, USA, 2015.

- Moosbrugger, H.; Kelava, A. Testtheorie und Fragebogenkonstruktion, 3rd ed.; Springer: Berlin, Germany, 2020.

- Boone, W.J.; Staver, J.R. Advances in Rasch Analyses in the Human Sciences; Springer: Cham, Switzerland, 2020.

- Denovan, A.; Dagnall, N.; Drinkwater, K.G.; Escolà-Gascón, Á. The Illusory Health Beliefs Scale: Preliminary Validation Using Exploratory Factor and Rasch Analysis. Front. Psychol. 2024, 15, 1408734.

- Crowe, M.; Maciver, D.; Rush, R.; Forsyth, K. Psychometric Evaluation of the ACHIEVE Assessment. Front. Pediatr. 2020, 8, 245.

- Orlando, M.; Thissen, D. Likelihood-Based Item-Fit Indices for Dichotomous Item Response Theory Models. Appl. Psychol. Meas. 2000, 24, 50–64.

- Linacre, J.M. A User’s Guide to Winsteps/Ministep: Rasch-Model Computer Programs. Program Manual 5.10.1; Winsteps.com: Chicago, IL, USA, 2025; Available online: https://www.winsteps.com/winman/copyright.htm (accessed on 25 May 2025).

- Cheung, G.W.; Cooper-Thomas, H.D.; Lau, R.S.; Wang, L.C. Reporting Reliability, Convergent and Discriminant Validity with Structural Equation Modeling: A Review and Best-Practice Recommendations. Asia Pac. J. Manag. 2024, 41, 745–783.

- Rönkkö, M.; Cho, E. An Updated Guideline for Assessing Discriminant Validity. Organ. Res. Methods 2022, 25, 6–14.

- Hair, J.F.; Babin, B.J.; Anderson, R.E.; Black, W.C. Multivariate Data Analysis, 8th ed.; Pearson Prentice: Upper Saddle River, NJ, USA, 2019.

- Kim, H.Y. Statistical Notes for Clinical Researchers: Assessing Normal Distribution (2) Using Skewness and Kurtosis. Restor. Dent. Endod. 2013, 38, 52–54.

- Barton, B.; Peat, J. Medical Statistics: A Guide to SPSS, Data Analysis and Clinical Appraisal, 2nd ed.; Wiley Blackwell, BMJ Books: Sydney, Australia, 2014.

- Schober, P.; Boer, C.; Schwarte, L.A. Correlation Coefficients: Appropriate Use and Interpretation. Anesth. Analg. 2018, 126, 1763–1768.

- Madhulatha, T.S. An Overview on Clustering Methods. IOSR J. Eng. 2012, 2, 719–725.

- Milligan, G.W.; Cooper, M.C. An Examination of Procedures for Determining the Number of Clusters in a Data Set. Psychometrika 1985, 50, 159–179.

- Eldridge, S.M.; Ashby, D.; Kerry, S. Sample Size for Cluster Randomized Trials: Effect of Coefficient of Variation of Cluster Size and Analysis Method. Int. J. Epidemiol. 2006, 35, 1292–1300.

- Coraggio, L.; Coretto, P. Selecting the Number of Clusters, Clustering Models, and Algorithms: A Unifying Approach Based on the Quadratic Discriminant Score. J. Multivar. Anal. 2023, 196, 105181.

- Roski, M.; Sebastian, R.; Ewerth, R.; Hoppe, A.; Nehring, A. Learning Analytics and the Universal Design for Learning (UDL): A Clustering Approach. Comput. Educ. 2024, 214, 105028.

- Rossbroich, J.; Durieux, J.; Wilderjans, T.F. Model Selection Strategies for Determining the Optimal Number of Overlapping Clusters in Additive Overlapping Partitional Clustering. J. Classif. 2022, 39, 264–301.

- Grimm, K.J.; Houpt, R.; Rodgers, D. Model Fit and Comparison in Finite Mixture Models: A Review and a Novel Approach. Front. Educ. 2021, 6, 613645.

- Nylund, K.L.; Asparouhov, T.; Muthén, B.O. Deciding on the Number of Classes in Latent Class Analysis and Growth Mixture Modeling: A Monte Carlo Simulation Study. Struct. Equ. Model. 2007, 14, 535–569.

- Hooker, J.N.; Marrett, R.; Wang, Q. Rigorizing the Use of the Coefficient of Variation to Diagnose Fracture Periodicity and Clustering. J. Struct. Geol. 2023, 168, 104830.

- University of Ljubljana, Faculty of Education. Available online: https://www.pef.uni-lj.si/ (accessed on 20 May 2025).

- Digital First Network. Available online: https://digitalfirstnetwork.eu/ (accessed on 20 April 2025).

- Hair, J.F.; Sarstedt, M.; Ringle, C.M.; Gudergan, S.P. Advanced Issues in Partial Least Squares Structural Equation Modeling, 2nd ed.; Sage Publications: Thousand Oaks, CA, USA, 2024.

- Godhe, A.; Lindström, B. Creating Multimodal Texts in Language Education—Negotiations at the Boundary. Res. Pract. Technol. Enhanc. Learn. 2014, 9, 165–188. Available online: https://rptel.apsce.net/index.php/RPTEL/article/view/2014-09010 (accessed on 5 May 2025).

- Bandura, A. Self-Efficacy: The Exercise of Control; W. H. Freeman/Times Books/Henry Holt & Co.: New York, NY, USA, 1997.

- Goller, M. Human Agency at Work: An Active Approach Towards Expertise Development; Springer: Wiesbaden, Germany, 2017.

- Chan, C. Holistic competencies and AI in education: A synergistic pathway. Australas. J. Educ. Technol. 2024, 40, 1–12.

- Chee, H.; Ahn, S.; Lee, J. A competency framework for AI literacy: Variations by different learner groups and an implied learning pathway. Br. J. Educ. Technol. 2024, 1–37, Early access. Full record is not available.

- Yuan, C.W.; Tsai, H.S.; Chen, Y.-T. Charting competence: A holistic scale for measuring proficiency in artificial intelligence literacy. J. Educ. Comput. Res. 2024, 62, 1455–1484.

- Heikkilä, M.; Iiskala, T.; Mikkilä-Erdmann, M. Voices of Student Teachers’ Professional Agency at the Intersection of Theory and Practice. Learn. Cult. Soc. Interact. 2020, 25, 100405.

- Yi, Y. Establishing the concept of AI literacy. Eur. J. Bioeth. 2021, 12, 131–144.

- Cedefop; Institute of the Republic of Slovenia for Vocational Education and Training (CPI). Vocational education and training in Europe—Slovenia: System description. In Cedefop; ReferNet. Vocational Education and Training in Europe: VET in Europe Database—Detailed VET System Descriptions; Publications Office: Luxembourg, 2024; Available online: https://www.cedefop.europa.eu/en/tools/vet-in-europe/systems/slovenia-u3 (accessed on 24 May 2025).

- van Dinther, M.; Dochy, F.; Segers, M.; Braeken, J. Student perceptions of assessment and student self-efficacy in competence-based education. Educ. Stud. 2014, 40, 330–351.

- Duchatelet, D.; Donche, V. Fostering self-efficacy and self-regulation in higher education: A matter of autonomy support or academic motivation? High. Educ. Res. Dev. 2019, 38, 733–747.

- Brooks, C.; Young, S.L. Are choice-making opportunities needed in the classroom? Using self-determination theory to consider student motivation and learner empowerment. Int. J. Teach. Learn. High. Educ. 2011, 23, 48–59. Available online: http://files.eric.ed.gov/fulltext/EJ938578.pdf (accessed on 10 April 2025).

- Cheng, W.; Nguyen, P.N.T. Academic motivations and the risk of not in employment, education or training: University and vocational college undergraduates comparison. Educ. Train. 2024, 66, 91–105.

- Chuang, Y.; Huang, T.; Lin, S.; Chen, B. The influence of motivation, self-efficacy, and fear of failure on the career adaptability of vocational school students: Moderated by meaning in life. Front. Psychol. 2022, 13, 958334.

- Dæhlen, M. Completion in vocational and academic upper secondary school: The importance of school motivation, self-efficacy, and individual characteristics. Eur. J. Educ. 2017, 52, 336–347.

- Jónsdóttir, H.H.; Blöndal, K.S. The choice of track matters: Academic self-concept and sense of purpose in vocational and academic tracks. Scand. J. Educ. Res. 2022, 67, 621–636.

- Fieger, P.; Foley, A. Perceived personal benefits from study as determinants of student satisfaction in Australian vocational education and training. Educ. Train. 2024, 66, 1293–1310.

- Stenalt, M.H.; Lassesen, B. Does student agency benefit student learning? A systematic review of higher education research. Assess. Eval. High. Educ. 2021, 47, 653–669.

- Nieminen, J.; Tuohilampi, L. ‘Finally studying for myself’—Examining student agency in summative and formative self-assessment models. Assess. Eval. High. Educ. 2020, 45, 1031–1045.

- Wang, T.; Cheng, E.C.K. An Investigation of Barriers to Hong Kong K-12 Schools Incorporating Artificial Intelligence in Education. Comput. Educ. Artif. Intell. 2021, 2, 100031.

- Alamäki, A.; Nyberg, C.; Kimberley, A.; Salonen, A.O. Artificial intelligence literacy in sustainable development: A learning experiment in higher education. Front. Educ. 2024, 9, 1343406.

- Gartner, S.; Krašna, M. Ethics of artificial intelligence in education. Rev. Elem. Educ. 2023, 16, 61–79.

- Wang, S.; Sun, Z. Roles of artificial intelligence experience, information redundancy, and familiarity in shaping active learning: Insights from intelligent personal assistants. Educ. Inf. Technol. 2025, 30, 2525–2546.

- Al-Abdullatif, A.; Alsubaie, M.A. ChatGPT in learning: Assessing students’ use intentions through the lens of perceived value and the influence of AI literacy. Behav. Sci. 2024, 14, 845.

- Lee, I.A.; Ali, S.; Zhang, H.; DiPaola, D.; Breazeal, C. Developing middle school students’ AI literacy. In Proceedings of the SIGCSE 21: Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, New York, NY, USA, 13–20 March 2021; pp. 1001–1007.

- Vanneste, B.S.; Puranam, P. Artificial intelligence, trust, and perceptions of agency. Acad. Manage. Rev. 2024, 49, amr-2022.

- Lin, P.-Y.; Chai, C.-S.; Jong, M.S.-Y.; Dai, Y.; Guo, Y.; Qin, J. Modeling the Structural Relationship among Primary Students’ Motivation to Learn Artificial Intelligence. Comput. Educ. Artif. Intell. 2021, 2, 100006.

- Vansteenkiste, M.; Simons, J.; Lens, W.; Sheldon, K.M.; Deci, E.L. Motivating Learning, Performance, and Persistence: The Synergistic Effects of Intrinsic Goals and Autonomy Support. J. Pers. Soc. Psychol. 2004, 87, 246–260.

- Bandura, A. Social Cognitive Theory: An Agentic Perspective. Annu. Rev. Psychol. 2001, 52, 1–26.

- Kong, S.-C.; Cheung, M.-Y.W.; Tsang, O. Developing an Artificial Intelligence Literacy Framework: Evaluation of a Literacy Course for Senior Secondary Students Using a Project-Based Learning Approach. Comput. Educ. Artif. Intell. 2024, 6, 100214.

- Frazier, L.D.; Schwartz, B.L.; Metcalfe, J. The MAPS Model of Self-Regulation: Integrating Metacognition, Agency, and Possible Selves. Metacognition Learn. 2021, 16, 297–318.

- Santokhie, S.; Lipps, G.E. Development and Validation of the Tertiary Student Locus of Control Scale. SAGE Open 2020, 10, 2158244019899061.

- Shepherd, S.; Owen, D.; Fitch, T.J.; Marshall, J.L. Locus of Control and Academic Achievement in High School Students. Psychol. Rep. 2006, 98, 318–322.

- Terzi, A.R.; Çetin, G.; Eser, A. The Relationship between Undergraduate Students’ Locus of Control and Epistemological Beliefs. Educ. Res. 2012, 3, 30–39. Available online: https://www.interesjournals.org/articles/the-relationship-between-undergraduate-studentslocus-of-control-and-epistemological-beliefs.pdf (accessed on 17 May 2025).

- Celik, I.; Sarıcam, H. The Relationships between Positive Thinking Skills, Academic Locus of Control and Grit in Adolescents. Univ. J. Educ. Res. 2018, 6, 392–398. Available online: http://files.eric.ed.gov/fulltext/EJ1171309.pdf (accessed on 24 April 2025).

- Selwyn, N. On the Limits of Artificial Intelligence (AI) in Education. Nord. Tidsskr. Pedagog. Og Krit. 2024, 10, 3–14.

- Anderson, A.; Hattie, J.; Hamilton, R. Locus of control, self-efficacy, and motivation in different schools: Is moderation the key to success? Educ. Psychol. 2005, 25, 517–535.

- Buluş, M. Goal orientations, locus of control and academic achievement in prospective teachers: An individual differences perspective. Educ. Sci. Theory Pract. 2011, 11, 540–546. Available online: https://files.eric.ed.gov/fulltext/EJ927364.pdf (accessed on 3 May 2025).

- Karaman, M.A.; Nelson, K.M.; Vela, J.C. The mediation effects of achievement motivation and locus of control between academic stress and life satisfaction in undergraduate students. Br. J. Guid. Couns. 2017, 46, 375–384.

- Yeşilyurt, E. Academic locus of control, tendencies towards academic dishonesty and test anxiety levels as the predictors of academic self-efficacy. Educ. Sci. Theory Pract. 2014, 14, 1945–1956. Available online: http://files.eric.ed.gov/fulltext/EJ1050425.pdf (accessed on 14 May 2025).

- Keith, T.; Pottebaum, S.M.; Eberhart, S.W. Effects of self-concept and locus of control on academic achievement: A large-sample path analysis. J. Psychoeduc. Assess. 1986, 4, 61–72.

- Maqsud, M. Relationships of locus of control to self-esteem, academic achievement, and prediction of performance among Nigerian secondary school pupils. Br. J. Educ. Psychol. 1983, 53, 215–221.

- Findley, M.J.; Cooper, H.M. Locus of Control and Academic Achievement: A Literature Review. J. Pers. Soc. Psychol. 1983, 44, 419–427.

- Purcell, D.; Cavanaugh, G.; Thomas-Purcell, K.; Caballero, J.; Waldrop, D.; Ayala, V.; Davenport, R.; Ownby, R. e-Health literacy scale, patient attitudes, medication adherence, and internal locus of control. HLRP Health Lit. Res. Pract. 2023, 7, e80–e88.

- Villa, E.A.; Sebastian, M.A. Achievement Motivation, Locus of Control and Study Habits as Predictors of Mathematics Achievement of New College Students. Int. Electron. J. Math. Educ. 2021, 16, em0661.

- Ng, D.T.K.; Su, J.; Leung, J.; Chu, S. Artificial Intelligence (AI) Literacy Education in Secondary Schools: A Review. Interact. Learn. Environ. 2023, 32, 6204–6224.

- Azevedo, R.; Cromley, J.G. Does Training on Self-Regulated Learning Facilitate Students’ Learning With Hypermedia? J. Educ. Psychol. 2004, 96, 523–535.

- Pruessner, L.; Barnow, S.; Holt, D.V.; Joormann, J.; Schulze, K. A cognitive control framework for understanding emotion regulation flexibility. Emotion 2020, 20, 21–29.

- Dane, E. Reconsidering the trade-off between expertise and flexibility: A cognitive entrenchment perspective. Acad. Manage. Rev. 2010, 35, 579–603.

- Credé, M.; Tynan, M.C.; Harms, P.D. Much Ado about Grit: A Meta-Analytic Synthesis of the Grit Literature. J. Pers. Soc. Psychol. 2017, 113, 492–511.

- Yang, Y.; Xia, N. Enhancing students’ metacognition via AI-driven educational support systems. Int. J. Emerg. Technol. Learn. 2023, 18, 133–148.

- Carolus, A.; Koch, M.; Straka, S.; Latoschik, M.; Wienrich, C. MAILS—Meta AI Literacy Scale: Development and testing of an AI literacy questionnaire based on well-founded competency models and psychological change- and meta-competencies. arXiv 2023, arXiv:2302.09319.

- Shiri, A. Artificial intelligence literacy: A proposed faceted taxonomy. Digit. Libr. Perspect. 2024, 40, 681–699.

- Taşkın, M. Artificial intelligence in personalized education: Enhancing learning outcomes through adaptive technologies and data-driven insights. Hum.-Comput. Interact. 2025, 8, 173.

- Hashim, S.; Omar, M.K.; Jalil, H.A.; Sharef, N.M. Trends on technologies and artificial intelligence in education for personalized learning: Systematic literature review. Int. J. Acad. Res. Prog. Educ. Dev. 2022, 11, 670–686.

- Strielkowski, W.; Grebennikova, V.; Lisovskiy, A.; Rakhimova, G.; Vasileva, T. AI-driven adaptive learning for sustainable educational transformation. Sustain. Dev. 2024, 33, 1921–1947.

- Zheng, L.; Niu, J.; Zhong, L.; Gyasi, J.F. The effectiveness of artificial intelligence on learning achievement and learning perception: A meta-analysis. Interact. Learn. Environ. 2021, 31, 5650–5664.

- Xu, Z.; Zhao, Y.; Zhang, B.; Liew, J.; Kogut, A. A meta-analysis of the efficacy of self-regulated learning interventions on academic achievement in online and blended environments in K–12 and higher education. Behav. Inf. Technol. 2022, 42, 2911–2931.

- Husman, J.; Lens, W. The Role of the Future in Student Motivation. Educ. Psychol. 1999, 34, 113–125.

- Dweck, C.S.; Leggett, E.L. A Social-Cognitive Approach to Motivation and Personality. Psychol. Rev. 1988, 95, 256–273.

- Ames, C.A.; Archer, J. Achievement goals in the classroom: Students’ learning strategies and motivation processes. J. Educ. Psychol. 1988, 80, 260–267.

- Baumeister, R.; Vohs, K. Self-regulation, ego depletion, and motivation. Soc. Pers. Psychol. Compass 2007, 1, 115–128.

- Hwang, H.S.; Zhu, L.; Cui, Q. Development and validation of a digital literacy scale in the artificial intelligence era for college students. KSII Trans. Internet Inf. Syst. 2023, 17, 2241–2258.

- Zhao, L.; Wu, X.; Luo, H. Developing AI literacy for primary and middle school teachers in China: Based on a structural equation modeling analysis. Sustainability 2022, 14, 14549.

- Relmasira, S.C.; Lai, Y.C.; Donaldson, J.P. Fostering AI Literacy in Elementary Science, Technology, Engineering, Art, and Mathematics (STEAM) Education in the Age of Generative AI. Sustainability 2023, 15, 13595.

- Kumar, P.C.; Cotter, K.; Cabrera, L.Y. Taking responsibility for meaning and mattering: An agential realist approach to generative AI and literacy. Read. Res. Q. 2024, 59, 570–578.

- Savec, V.F.; Jedrinović, S. The Role of AI Implementation in Higher Education in Achieving the Sustainable Development Goals: A Case Study from Slovenia. Sustainability 2025, 17, 183.

- Xu, Z. AI in education: Enhancing learning experiences and student outcomes. Appl. Comput. Eng. 2024, 51, 104–111.

- Druga, S.; Otero, N.; Ko, A.J. The landscape of teaching resources for AI education. In Proceedings of the ITiCSE 22: 27th ACM Conference on Innovation and Technology in Computer Science Education, New York, NY, USA, 8–13 July 2022; Volume 1, pp. 420–426.

- D’Mello, S.K.; Biddy, Q.L.; Breideband, T.; Bush, J.B.; Chang, M.; Cortez, A.; Flanigan, J.; Foltz, P.W.; Gorman, J.C.; Hirshfield, L.M.; et al. From learning optimization to learner flourishing: Reimagining AI in education at the Institute for Student-AI Teaming (iSAT). AI Mag. 2024, 45, 61–68.

- Long, D.; Teachey, A.; Magerko, B. Family learning talk in AI literacy learning activities. In Proceedings of the CHI 22: CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 29 April 2022–5 May 2022. Article 268.

- Ma, S.; Chen, Z. The development and validation of the Artificial Intelligence Literacy Scale for Chinese college students (AILS-CCS). IEEE Access 2024, 12, 146419–146429.

- Eager, B.; Brunton, R. Prompting higher education towards AI-augmented teaching and learning practice. J. Univ. Teach. Learn. Pract. 2023, 20, 1–19.

- Schleiss, J.; Laupichler, M.C.; Raupach, T.; Stober, S. AI course design planning framework: Developing domain-specific AI education courses. Educ. Sci. 2023, 13, 954.

- Sousa, M.; Mas, F.D.; Pesqueira, A.; Lemos, C.; Verde, J.M.; Cobianchi, L. The potential of AI in health higher education to increase the students’ learning outcomes. TEM J. 2021, 10, 1671–1679.

- Dogan, M.E.; Dogan, T.G.; Bozkurt, A. The use of artificial intelligence (AI) in online learning and distance education processes: A systematic review of empirical studies. Appl. Sci. 2023, 13, 3612.

- Funda, V.; Mbangeleli, N. Artificial intelligence (AI) as a tool to address academic challenges in South African higher education. Int. J. Learn. Teach. Educ. Res. 2024, 23, 99–114.

- Ribič, L.; Devetak, I.; Potočnik, R. Pre-Service Primary School Teachers’ Understanding of Biogeochemical Cycles of Elements. Educ. Sci. 2025, 15, 110.

- Li, L.; Ruppar, A. Conceptualizing teacher agency for inclusive education: A systematic and international review. Teach. Educ. Spec. Educ. 2020, 44, 42–59.

- Yang, S.; Han, J. Perspectives of transformative learning and professional agency: A native Chinese language teacher’s story of teacher identity transformation in Australia. Front. Psychol. 2022, 13, 894025.

- Shavard, G. Teacher agency in collaborative lesson planning: Stabilising or transforming professional practice? Teach. Teach. 2022, 28, 555–567.

- Klemen, T.; Devetak, I. Introduction of Hydrosphere Environmental Problems in Lower Secondary School Chemistry Lessons. Educ. Sci. 2025, 15, 111.

- Liu, K.; Ball, A. Critical reflection and generativity: Toward a framework of transformative teacher education for diverse learners. Rev. Res. Educ. 2019, 43, 105–168.

- Brevik, L.M.; Gudmundsdottir, G.; Lund, A.; Strømme, T. Transformative agency in teacher education: Fostering professional digital competence. Teach. Teach. Educ. 2019, 86, 102877.

- Cochran-Smith, M.; Craig, C.; Orland-Barak, L.; Cole, C.; Hill-Jackson, V. Agents, agency, and teacher education. J. Teach. Educ. 2022, 73, 445–448.

| Big Idea | Typical Learning Activities and Focal Concepts | Key Learner Outcomes Reported |
|---|---|---|
| 1. Perception (how computers sense the world) | Hands-on image-/sound-classification demos; sensor data investigations; pattern-recognition tasks tying perception to later ML work | Higher self-efficacy when students train simple classifiers. Greater perseverance through troubleshooting. Early understanding that “data quality → model quality”. |
| 2. Representation and Reasoning (how AI stores and manipulates knowledge) | Building decision trees, rule-based “expert” systems, concept maps; explaining why a model made a choice | Growth in metacognition (“Why did the AI infer X?”). More adaptive help seeking and iterative debugging. Strong link to mastery goal orientation → deeper conceptual transfer. |
| 3. Learning (machine learning from data and experience) | Training and tuning ML models; experimenting with hyperparameters; comparing training vs. test accuracy | Significant rise in students’ AI empowerment (confidence, interest, agency). Narrowing of gender gap in AI self-efficacy. Better grit/self-regulation predicts more successful model refinement. |
| 4. Natural Interaction (AI–human interfaces) | Programming conversational agents or voice-controlled robots; evaluating user experience of AI assistants | Boost in creative self-efficacy and ownership when learners set their own project goals. Enthusiastic exploration of multimodal interaction. Autonomy-supportive tasks foster innovative problem solving. |
| 5. Societal Impact (ethics, equity, future-of-work) | Case studies on algorithmic bias; AI-for-social-good design challenges; debating policy and privacy scenarios | Heightened sense of personal responsibility and internal locus of control. Deeper ethical reasoning when projects connect to students’ lived experience. Evidence that empowerment mindset → sustained civic engagement with AI issues. |

| Element | Role in the Activity System (CHAT) | Operative Framework | Operational Variables |
|---|---|---|---|
| Subject | Slovenian secondary technical students | Psychological empowerment theory | Four agency dimensions: meaning, competence, self-determination, impact |
| Mediating artifacts | AI tools, datasets, and classroom software | - | Contextual but not directly measured |
| Object/outcome | AI literacy to be mastered | AI4K12 Five Big Ideas | Perception, Representation and Reasoning, Learning, Natural Interaction, Societal Impact |
| Rules/community/division of labor | School track, sex, study year | - | Control variables |

| Competency [23] | Item | Item Label | Difficulty Index | Difficulty Index Corrected for Guessing | Discrimination Index |
|---|---|---|---|---|---|
| Recognizing AI | 1 | Typical applications | 0.061 | 0.000 | 0.281 |
| | 2 | Recognizing a chatbot | 0.306 | 0.075 | 0.255 |
| Interdisciplinarity | 3 | AI systems | 0.306 | 0.075 | 0.182 |
| | 4 | Interdisciplinary research fields | 0.249 | 0.000 | 0.248 |
| Understanding intelligence | 5 | Intelligence of AI 1 | 0.633 | 0.511 | 0.174 |
| | 6 | Intelligence of AI 2 | 0.522 | 0.363 | 0.157 |
| | 7 | Similarities of humans and AI | 0.332 | 0.109 | 0.209 |
| General vs. narrow | 8 | Weak and strong AI | 0.162 | 0.000 | 0.217 |
| | 9 | Capabilities of weak AI | 0.280 | 0.040 | 0.209 |
| AI’s strengths and weaknesses | 10 | Superiority of AI | 0.113 | 0.000 | 0.304 |
| | 11 | Superiority of humans | 0.198 | 0.000 | 0.204 |
| Representations | 12 | Knowledge representations 1 | 0.294 | 0.059 | 0.207 |
| | 13 | Knowledge representations 2 | 0.193 | 0.000 | 0.258 |
| Decision-making | 14 | Decision-making | 0.266 | 0.021 | 0.266 |
| | 15 | Optimization | 0.376 | 0.168 | 0.202 |
| | 16 | Supervised and unsupervised learning | 0.231 | 0.000 | 0.209 |
| Machine learning steps | 17 | Iterative process | 0.256 | 0.008 | 0.200 |
| | 18 | Steps in supervised learning | 0.005 | 0.000 | 0.309 |
| | 19 | Training and test data | 0.308 | 0.077 | 0.181 |
| Human role in AI | 20 | Human influence 1 | 0.325 | 0.100 | 0.192 |
| | 21 | Human influence 2 | 0.313 | 0.084 | 0.218 |
| Technical | 22 | Programmability | 0.565 | 0.420 | 0.190 |
| Data literacy | 23 | Visualization of data | 0.271 | 0.028 | 0.183 |
| Learning from data | 24 | Learning from data | 0.487 | 0.316 | 0.157 |
| | 25 | Learning from user data | 0.261 | 0.015 | 0.179 |
| Critical thinking | 26 | Representativeness of data | 0.661 | 0.548 | 0.353 |
| Ethics | 27 | Ethical principles | 0.282 | 0.043 | 0.149 |
| | 28 | Black box | 0.388 | 0.184 | 0.171 |
| | 29 | Societal challenges | 0.504 | 0.339 | 0.244 |
| | 30 | Risks of AI | 0.245 | 0.000 | 0.171 |
| | 31 | Legal challenges | 0.365 | 0.153 | 0.242 |
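
The relation between the two difficulty columns in the item table can be illustrated with a short sketch. It uses the standard correction-for-guessing rescaling, with negative results floored at zero. The chance level of 0.25 is an assumption inferred from the tabulated values (it reproduces them to three decimals); it is not stated in this section, and the function name is hypothetical.

```python
def correct_for_guessing(p: float, chance: float = 0.25) -> float:
    """Rescale a proportion-correct so that chance-level performance maps to 0.

    `chance` = 0.25 is an assumption inferred from the table, not a value
    given in the text. Results below zero are floored at 0.
    """
    corrected = (p - chance) / (1.0 - chance)
    return max(0.0, corrected)


# A few (item label, difficulty index) pairs copied from the table.
items = [
    ("Typical applications", 0.061),
    ("Recognizing a chatbot", 0.306),
    ("Intelligence of AI 1", 0.633),
    ("Representativeness of data", 0.661),
]

for label, p in items:
    print(f"{label}: raw = {p:.3f}, corrected = {correct_for_guessing(p):.3f}")
```

Under this assumption, any item answered correctly by fewer than a quarter of students receives a corrected index of 0.000, which matches the pattern of zeros in the table.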

| Student Agency Constructs | Outer loadings (λ) Range | Composite Reliability ρC | Cronbach’s α | ρA | AVE |
|---|---|---|---|---|---|
| Self-efficacy (SE) | 0.75–0.88 | 0.89 | 0.84 | 0.85 | 0.68 |
| Perseverance of interest (PI) | 0.69–0.95 | 0.90 | 0.85 | 0.88 | 0.69 |
| Perseverance of effort (PE) | 0.66–0.98 | 0.89 | 0.83 | 0.87 | 0.68 |
| Mastery learning goal orientation (MLGO) | 0.80–0.98 | 0.92 | 0.88 | 0.89 | 0.74 |
| Locus of control (LC) | 0.74–0.91 | 0.90 | 0.85 | 0.88 | 0.70 |
| Future orientation (FO) | 0.80–0.98 | 0.91 | 0.87 | 0.89 | 0.73 |
| Self-regulation (SR) | 0.78–0.97 | 0.91 | 0.88 | 0.89 | 0.73 |
| Metacognitive self-regulation (MSR) | 0.79–0.96 | 0.91 | 0.87 | 0.88 | 0.73 |

| Student Agency Constructs | SE | PI | PE | MLGO | LC | FO | SR | MSR |
|---|---|---|---|---|---|---|---|---|
| SE | 0.82 | | | | | | | |
| PI | 0.42 (0.49) | 0.83 | | | | | | |
| PE | 0.29 (0.34) | 0.55 (0.63) | 0.82 | | | | | |
| MLGO | 0.47 (0.55) | 0.41 (0.48) | 0.39 (0.44) | 0.86 | | | | |
| LC | 0.53 (0.63) | 0.31 (0.36) | 0.24 (0.27) | 0.38 (0.44) | 0.84 | | | |
| FO | 0.27 (0.31) | 0.25 (0.29) | 0.28 (0.32) | 0.32 (0.36) | 0.24 (0.27) | 0.85 | | |
| SR | 0.34 (0.39) | 0.50 (0.56) | 0.45 (0.39) | 0.39 (0.44) | 0.26 (0.30) | 0.38 (0.44) | 0.86 | |
| MSR | 0.46 (0.53) | 0.47 (0.54) | 0.46 (0.54) | 0.56 (0.64) | 0.39 (0.45) | 0.40 (0.46) | 0.49 (0.56) | 0.85 |
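
The Fornell–Larcker criterion in Section 2.4.2 compares each construct's square root of AVE with its highest latent correlation. The sketch below applies that comparison to the rounded values in the two tables above. The dictionaries and the function name are illustrative, and because AVE is rounded to two decimals, a recomputed square root can differ from the tabulated diagonal in the second decimal.

```python
import math

# AVE per construct (reliability table) and each construct's highest
# latent correlation (off-diagonal entries outside the parentheses).
ave = {"SE": 0.68, "PI": 0.69, "PE": 0.68, "MLGO": 0.74,
       "LC": 0.70, "FO": 0.73, "SR": 0.73, "MSR": 0.73}
max_corr = {"SE": 0.53, "PI": 0.55, "PE": 0.55, "MLGO": 0.56,
            "LC": 0.53, "FO": 0.40, "SR": 0.50, "MSR": 0.56}


def fornell_larcker(ave, max_corr):
    """Return {construct: (sqrt_ave, passes)}; passes means sqrt(AVE) > max r."""
    return {
        name: (math.sqrt(value), math.sqrt(value) > max_corr[name])
        for name, value in ave.items()
    }


results = fornell_larcker(ave, max_corr)
for name, (root, passes) in results.items():
    print(f"{name}: sqrt(AVE) = {root:.2f}, max r = {max_corr[name]:.2f}, pass = {passes}")
```

With these inputs every construct passes, which agrees with the statement in Section 2.4.2.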

| Student Agency Constructs | M | SD | 95% CI | Skewness | Kurtosis |
|---|---|---|---|---|---|
| SE | 4.51 | 0.98 | [4.40, 4.59] | −0.67 | 0.33 |
| PI | 3.83 | 0.97 | [3.83, 3.92] | −0.05 | −0.45 |
| PE | 3.71 | 1.04 | [3.61, 3.81] | 0.03 | −0.42 |
| MLGO | 4.24 | 1.08 | [4.13, 4.34] | −0.30 | −0.29 |
| LC | 4.55 | 0.99 | [4.45, 4.64] | −0.64 | 0.44 |
| FO | 3.73 | 1.16 | [3.62, 3.84] | −0.11 | −0.39 |
| SR | 3.86 | 1.10 | [3.75, 3.96] | −0.21 | −0.29 |
| MSR | 4.09 | 1.11 | [3.98, 4.19] | −0.23 | −0.42 |

| Student Agency Constructs | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| SE | 1.00 | | | | | | | |
| PI | 0.43 | 1.00 | | | | | | |
| PE | 0.29 | 0.53 | 1.00 | | | | | |
| MLGO | 0.48 | 0.42 | 0.39 | 1.00 | | | | |
| LC | 0.54 | 0.32 | 0.24 | 0.38 | 1.00 | | | |
| FO | 0.27 | 0.25 | 0.28 | 0.32 | 0.24 | 1.00 | | |
| SR | 0.34 | 0.49 | 0.45 | 0.39 | 0.28 | 0.38 | 1.00 | |
| MSR | 0.45 | 0.47 | 0.48 | 0.55 | 0.39 | 0.41 | 0.51 | 1.00 |

| Student Agency Constructs | Hierarchical Clustering: 1 (n = 204) | Hierarchical Clustering: 2 (n = 66) | Hierarchical Clustering: 3 (n = 42) | Hierarchical Clustering: 4 (n = 113) | k-Means Clustering: 1 (n = 121) | k-Means Clustering: 2 (n = 117) | k-Means Clustering: 3 (n = 68) | k-Means Clustering: 4 (n = 119) |
|---|---|---|---|---|---|---|---|---|
| SE | 4.45 (0.77) | 3.29 (0.96) | 4.55 (0.60) | 5.25 (0.66) | 4.20 (0.79) | 4.75 (0.58) | 3.28 (0.94) | 5.25 (0.65) |
| PI | 3.84 (0.72) | 2.78 (0.68) | 2.90 (0.61) | 4.77 (0.59) | 3.81 (0.75) | 3.47 (0.77) | 2.88 (0.67) | 4.75 (0.66) |
| PE | 3.81 (0.80) | 2.80 (0.84) | 2.55 (0.65) | 4.50 (0.87) | 3.85 (0.78) | 3.25 (0.85) | 2.78 (0.84) | 4.55 (0.87) |
| MLGO | 4.05 (0.79) | 2.98 (0.92) | 4.34 (0.85) | 5.27 (0.67) | 3.88 (0.71) | 4.33 (0.84) | 3.01 (0.92) | 5.22 (0.72) |
| LC | 4.41 (0.86) | 3.63 (1.08) | 4.96 (0.86) | 5.18 (0.68) | 4.14 (0.83) | 4.88 (0.71) | 3.55 (1.08) | 5.20 (0.63) |
| FO | 3.55 (1.05) | 2.91 (1.07) | 3.64 (0.76) | 4.59 (0.98) | 4.15 (0.73) | 3.06 (0.91) | 2.76 (0.94) | 4.52 (1.07) |
| SR | 3.88 (0.75) | 3.02 (0.99) | 2.46 (0.64) | 4.82 (0.86) | 4.02 (0.61) | 3.21 (0.97) | 2.91 (0.90) | 4.87 (0.77) |
| MSR | 4.02 (0.90) | 2.70 (0.76) | 4.08 (0.96) | 5.02 (0.73) | 3.99 (0.78) | 3.97 (0.92) | 2.68 (0.77) | 5.11 (0.69) |

| Number of Clusters | Silhouette Score | AIC | BIC |
|---|---|---|---|
| 2 | 0.56 | 1928.42 | 2058.09 |
| 3 | 0.53 | 1798.74 | 1993.24 |
| 4 | 0.51 | 1732.80 | 1992.13 |
| 5 | 0.45 | 1685.36 | 2009.53 |
| 6 | 0.38 | 1648.88 | 2037.88 |
| 7 | 0.33 | 1627.60 | 2081.43 |
| 8 | 0.26 | 1614.16 | 2132.82 |
| 9 | 0.23 | 1604.00 | 2187.50 |
| 10 | 0.22 | 1596.09 | 2244.42 |
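
The three criteria in the table above do not point to the same number of clusters, which a short sketch makes explicit. The values are copied from the table; the selection rules (maximum silhouette, minimum AIC, minimum BIC) are the usual conventions and are assumed here, not taken from the authors' stated decision rule.

```python
# k -> (silhouette, AIC, BIC), copied from the cluster-selection table.
fit = {
    2: (0.56, 1928.42, 2058.09),
    3: (0.53, 1798.74, 1993.24),
    4: (0.51, 1732.80, 1992.13),
    5: (0.45, 1685.36, 2009.53),
    6: (0.38, 1648.88, 2037.88),
    7: (0.33, 1627.60, 2081.43),
    8: (0.26, 1614.16, 2132.82),
    9: (0.23, 1604.00, 2187.50),
    10: (0.22, 1596.09, 2244.42),
}

# Conventional rules: higher silhouette is better, lower AIC/BIC is better.
best_by_silhouette = max(fit, key=lambda k: fit[k][0])
best_by_aic = min(fit, key=lambda k: fit[k][1])
best_by_bic = min(fit, key=lambda k: fit[k][2])

print("silhouette:", best_by_silhouette)
print("AIC:", best_by_aic)
print("BIC:", best_by_bic)
```

In this table BIC is lowest at four clusters, the same number of profiles reported in the clustering table, while AIC keeps decreasing up to ten clusters and the silhouette score is highest at two.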

| Track | n | M | SD | 95% CI | Skewness | Kurtosis | Md | IQR |
|---|---|---|---|---|---|---|---|---|
| Human service | 217 | 8.88 | 2.97 | [8.48, 9.27] | 1.16 | 2.91 | 9.00 | 3.50 |
| Industrial engineering | 208 | 10.70 | 5.01 | [10.01, 11.38] | 1.57 | 3.25 | 10.00 | 4.75 |

| Kruskal–Wallis/Pairwise Contrast | 3 vs. 1 | 2 vs. 3 | 2 vs. 1 | 4 vs. 3 | 4 vs. 1 | 4 vs. 2 |
|---|---|---|---|---|---|---|
| H-statistics | 15.27 | 69.30 | 54.20 | 73.55 | 58.30 | 4.25 |
| Adjusted p-value | 1 | 0.001 | 0.004 | 0.000 | 0.001 | 1 |
| Effect size ε2 * | 0.036 | 0.163 | 0.128 | 0.173 | 0.142 | 0.010 |
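
As a rough check on the effect-size row, the common epsilon-squared formula for rank-based tests, ε² = H / (n − 1), can be applied to the tabulated statistics. This is a sketch under two assumptions: that this is the formula behind the table (the footnote marked * is not reproduced here), and that n = 425, the sum of the two track sizes reported above. With these assumptions the formula reproduces most of the tabulated values to three decimals; the 4 vs. 1 contrast comes out slightly lower than the tabulated 0.142.

```python
def epsilon_squared(h: float, n: int) -> float:
    """Epsilon-squared effect size for a rank-based H statistic: H / (n - 1)."""
    return h / (n - 1)


N_TOTAL = 217 + 208  # assumed total sample size, from the track table

# Contrast -> H statistic, copied from the table.
h_stats = {
    "3 vs. 1": 15.27,
    "2 vs. 3": 69.30,
    "2 vs. 1": 54.20,
    "4 vs. 3": 73.55,
    "4 vs. 1": 58.30,
    "4 vs. 2": 4.25,
}

for contrast, h in h_stats.items():
    print(f"{contrast}: epsilon^2 = {epsilon_squared(h, N_TOTAL):.3f}")
```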

| Variable | Loadings (Min.–Max.) | Cronbach’s α | ρC | AVE | √AVE | Max. HTMT | Max. \|r\| |
|---|---|---|---|---|---|---|---|
| FO | [0.656–0.940] | 0.874 | 0.891 | 0.676 | 0.822 | 0.463 | 0.435 |
| LC | [0.710–0.986] | 0.854 | 0.901 | 0.697 | 0.835 | 0.450 | 0.535 |
| MLGO | [0.776–0.986] | 0.879 | 0.918 | 0.738 | 0.859 | 0.644 | 0.565 |
| MSR | [0.793–0.985] | 0.874 | 0.915 | 0.731 | 0.855 | 0.560 | 0.483 |
| PE | [0.704–0.982] | 0.835 | 0.891 | 0.675 | 0.822 | 0.636 | 0.340 |
| PI | [0.732–0.969] | 0.849 | 0.896 | 0.686 | 0.828 | 0.567 | 0.468 |
| SE | [0.774–0.865] | 0.844 | 0.895 | 0.681 | 0.825 | 0.630 | 0.340 |
| SR | [0.586–0.955] | 0.877 | 0.885 | 0.665 | 0.815 | 0.567 | 0.138 |

| Path | β | Bias | 2.5% | 97.5% | p-Value | Cohen f2 * |
|---|---|---|---|---|---|---|
| FO -> AI literacy | −0.052 | 0.010 | −0.204 | 0.044 | 0.393 | 0.002 |
| LC -> AI literacy | −0.099 | 0.017 | −0.220 | −0.022 | 0.043 | 0.008 |
| MLGO -> AI literacy | 0.219 | −0.007 | 0.096 | 0.350 | 0.001 | 0.036 |
| MSR -> AI literacy | 0.222 | −0.024 | 0.123 | 0.356 | 0.000 | 0.030 |
| PE -> AI literacy | 0.036 | −0.010 | −0.082 | 0.184 | 0.598 | 0.001 |
| PI -> AI literacy | −0.081 | 0.016 | −0.226 | 0.026 | 0.194 | 0.005 |
| SE -> AI literacy | 0.195 | −0.021 | 0.092 | 0.331 | 0.002 | 0.027 |
| Sex -> AI literacy | −0.138 | −0.013 | −0.328 | 0.069 | 0.175 | 0.002 |
| SR -> AI literacy | −0.179 | 0.028 | −0.351 | −0.080 | 0.014 | 0.026 |
| Study Year 3 -> AI literacy | 0.012 | −0.001 | −0.200 | 0.218 | 0.911 | 0.000 |
| Study Year 4 -> AI literacy | 0.093 | 0.002 | −0.105 | 0.294 | 0.366 | 0.001 |
| Track -> AI literacy | 0.607 | −0.012 | 0.411 | 0.830 | 0.000 | 0.042 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Avsec, S.; Rupnik, D. From Transformative Agency to AI Literacy: Profiling Slovenian Technical High School Students Through the Five Big Ideas Lens. Systems 2025, 13, 562. https://doi.org/10.3390/systems13070562
