How Do Ethical Factors Affect User Trust and Adoption Intentions of AI-Generated Content Tools? Evidence from a Risk-Trust Perspective

Abstract

1. Introduction

- Do the ethical issues associated with AIGC tools significantly affect users’ adoption intentions?

- Among various ethical factors, which specific dimensions exert a significant influence on user behavior?

- Do these ethical factors indirectly affect adoption intentions through the pathways of perceived risk and trust?

2. Literature Review

2.1. User Adoption of AI-Generated Content (AIGC) Tools

2.2. Categorization of AI Ethical Issues and User Perception

2.3. Perceived Risk (PR) and Trust (TR)

3. Research Methods

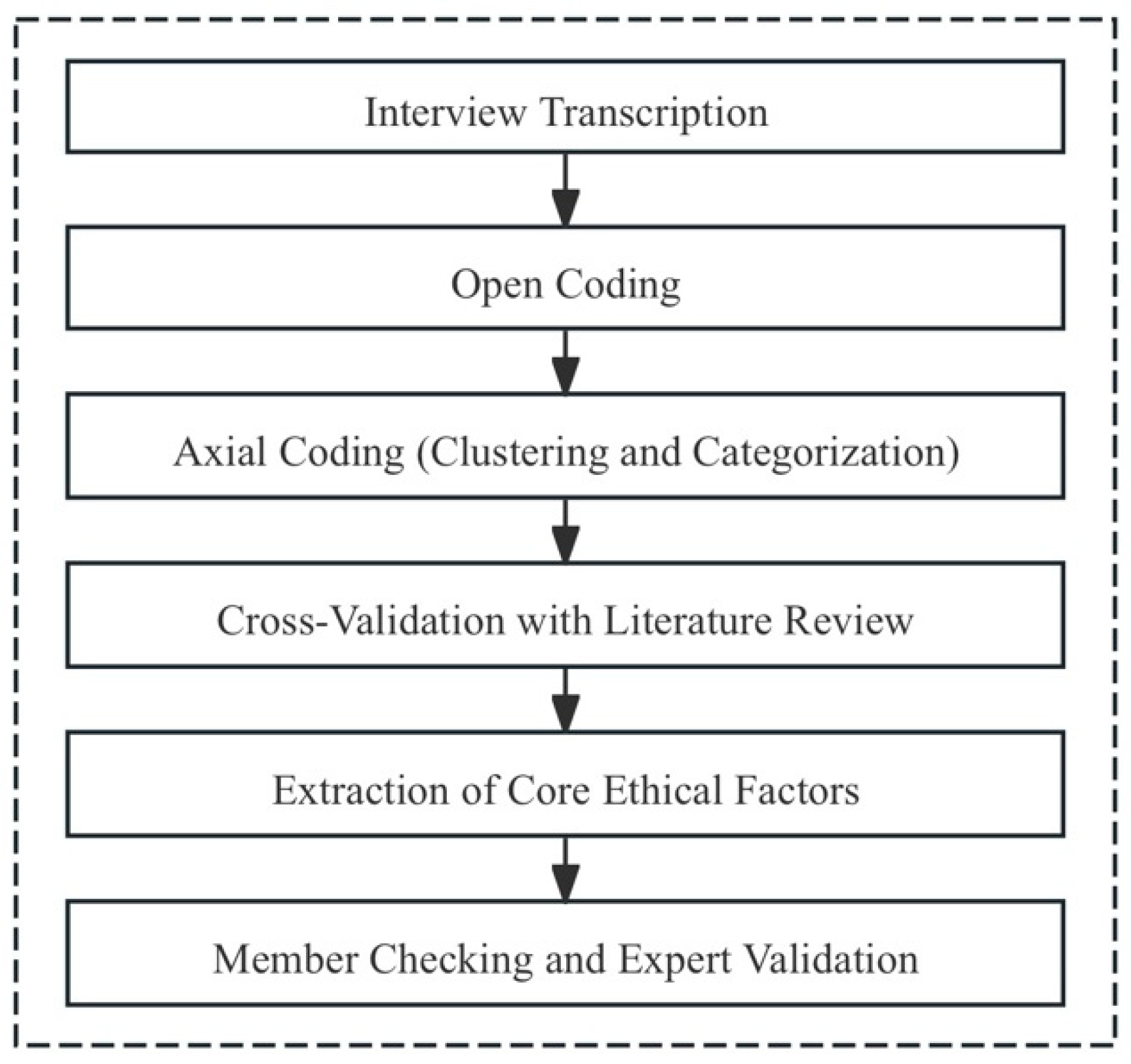

3.1. Overview of Research Design Process

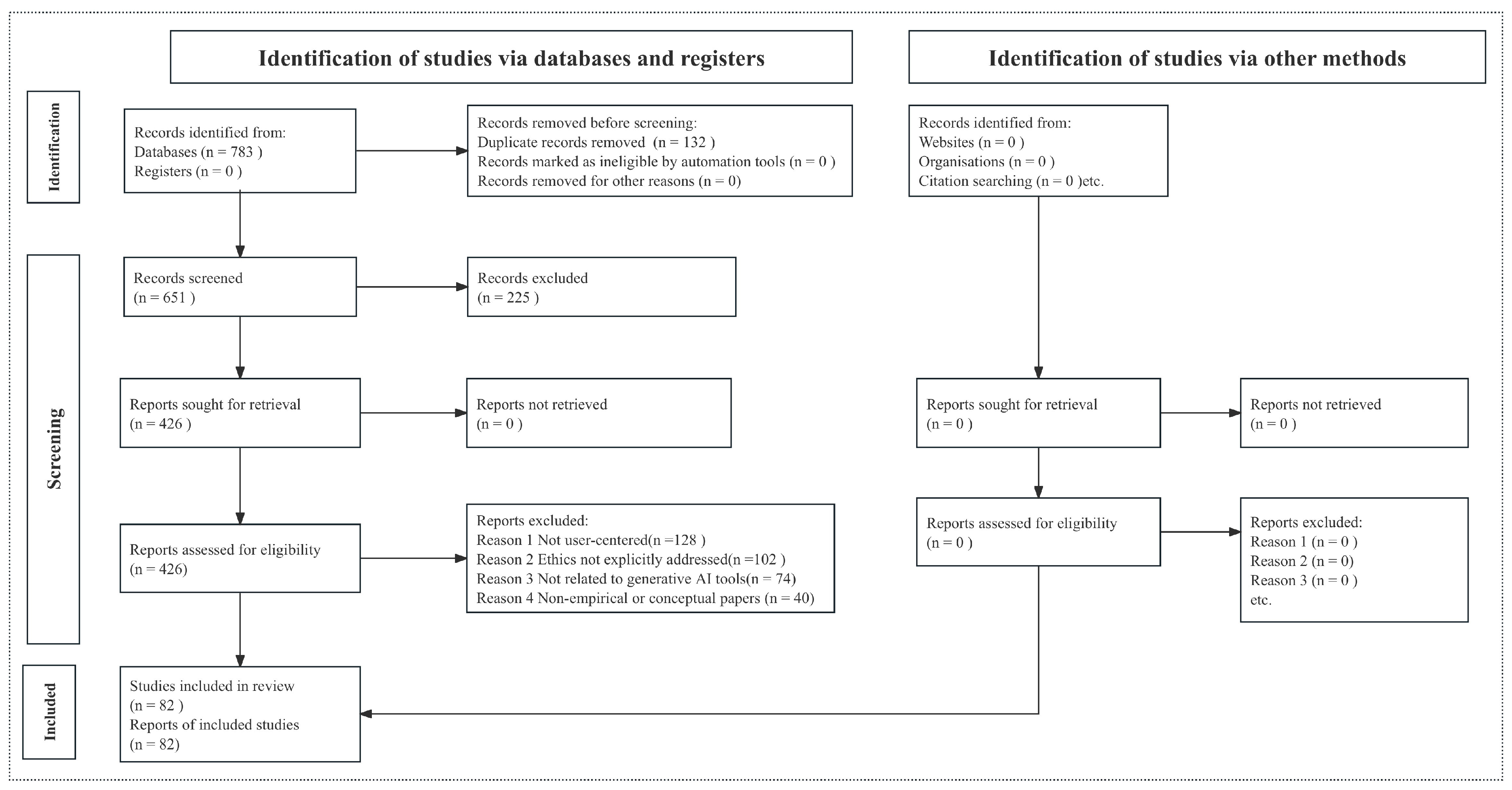

3.2. Systematic Literature Review (SLR)

3.3. Expert Interview Design and Implementation

4. Results

4.1. Phase I: Identification and Construction of Ethical Variables

4.1.1. Findings from Systematic Literature Review

4.1.2. Findings from Expert Interviews

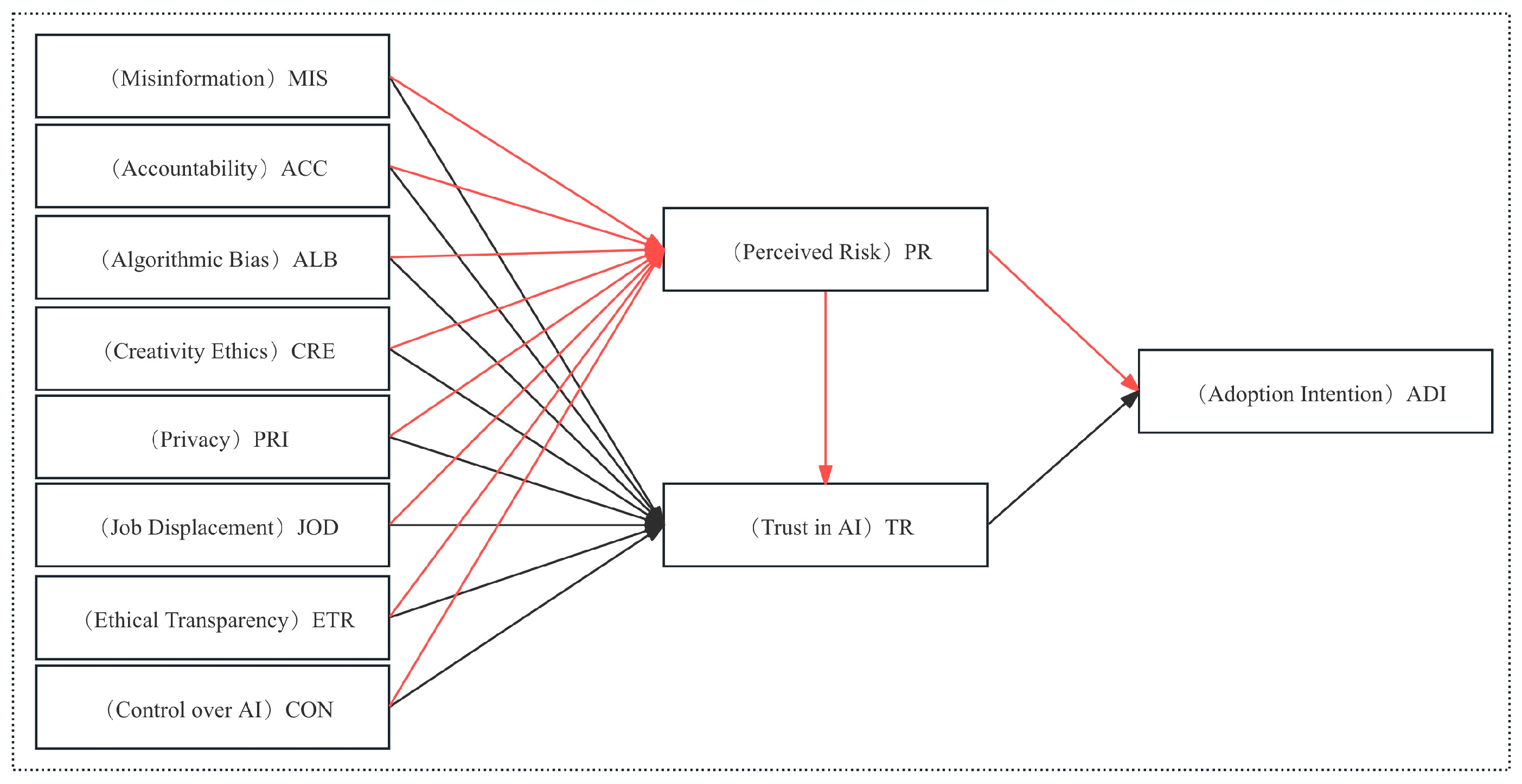

4.2. Hypotheses Development

4.2.1. Influence of Ethical Perception on Perceived Risk (PR)

4.2.2. Influence of Ethical Perception on Trust (TR)

4.2.3. The Effects of Mediating Variables on Adoption Intention (ADI)

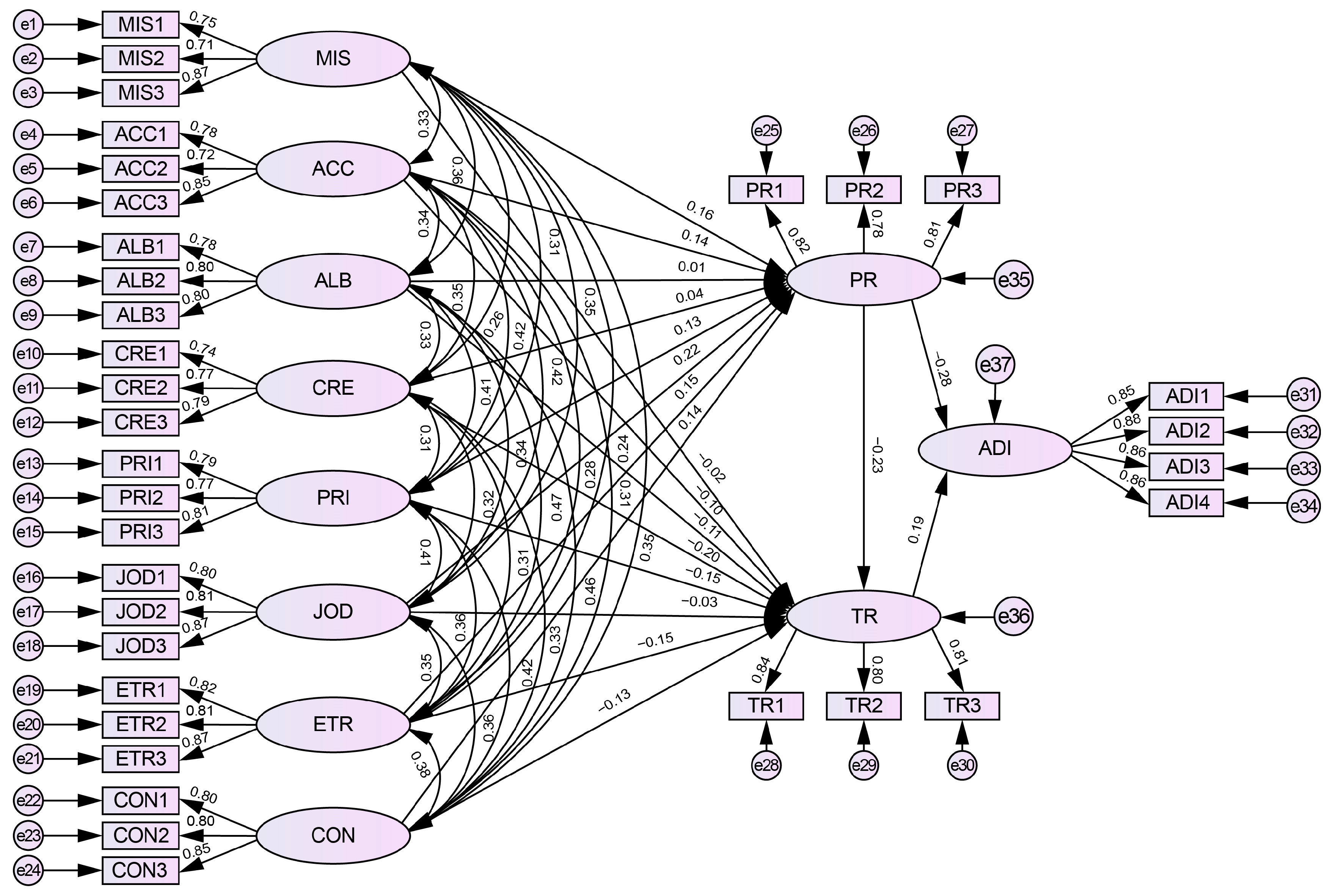

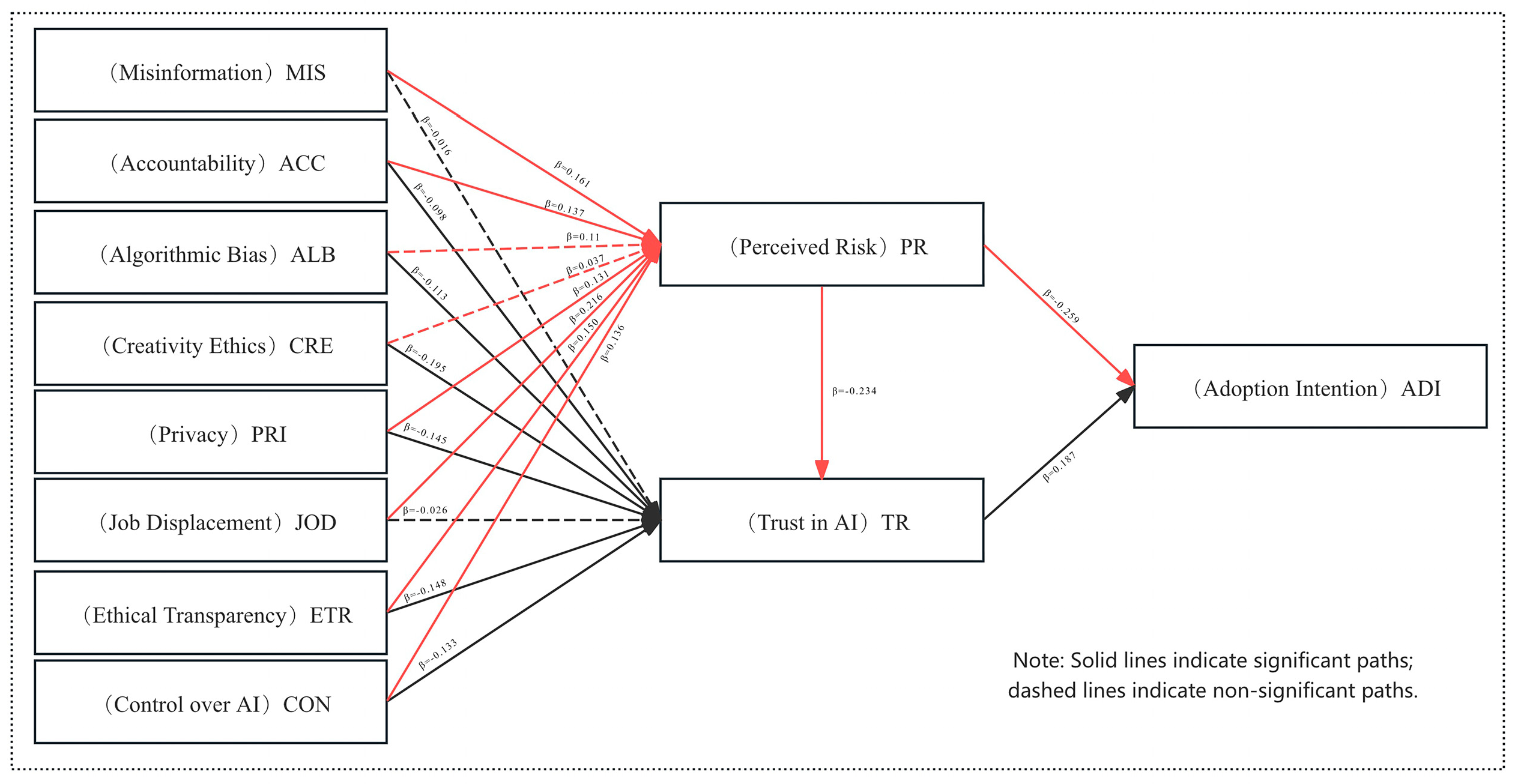

4.3. Research Model

4.4. Phase II: Model Testing and Empirical Analysis

4.4.1. Participants

4.4.2. Quantitative Analysis Results

5. Discussion

5.1. The Impact of Ethical Perceptions on Perceived Risk (PR)

5.2. The Impact of Ethical Perceptions on Trust (TR)

5.3. The Logical Relationship Between Trust–Risk Mechanism and Adoption Intention (ADI)

6. Contributions and Future Directions

6.1. Theoretical and Practical Contributions

6.2. Research Limitations and Future Research Directions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Quantitative Survey Questionnaire Items

| Variables | Items | Item Statement | References |
|---|---|---|---|
| Misinformation (MIS) | MIS1 | I am concerned that AI-generated content may not be truthful or accurate. | [8,45,77] |
| | MIS2 | I find it difficult to determine whether AI content has been fact-checked. | |
| | MIS3 | AI may produce misleading or deceptively realistic false information. | |
| Accountability (ACC) | ACC1 | I am worried about whether the platform will take responsibility for errors in AI-generated content. | [11,57] |
| | ACC2 | I am unclear about who should be held accountable when problems occur. | |
| | ACC3 | I believe AI platforms should clearly define responsibility attribution. | |
| Algorithmic Bias (ALB) | ALB1 | I am concerned that AI-generated content may contain gender, racial, or cultural bias. | [30,59,70] |
| | ALB2 | I think AI systems may make decisions based on unfair data. | |
| | ALB3 | Content generated or recommended by AI may reinforce stereotypes. | |
| Creativity Ethics (CRE) | CRE1 | I am concerned that AI may infringe upon others’ original works. | [22,49,50] |
| | CRE2 | It is difficult for me to determine whether AI-generated content constitutes plagiarism. | |
| | CRE3 | I feel confused about the ownership of AI-generated creations. | |
| Privacy (PRI) | PRI1 | I am concerned that the platform may store or analyze the content I input. | [22,24,27] |
| | PRI2 | I am unsure whether AI tools make use of my personal data. | |
| | PRI3 | I am worried that the platform has not clearly explained its data usage policies. | |
| Job Displacement (JOD) | JOD1 | I am worried that AI-generated tools may replace parts of my job. | [1,17,51] |
| | JOD2 | Using AI makes me concerned that my professional skills will be devalued. | |
| | JOD3 | The development of AIGC may lead to job loss for creative workers. | |
| Ethical Transparency (ETR) | ETR1 | I do not understand how AI-generated content is constructed. | [24,41] |
| | ETR2 | I feel that the platform has not adequately explained its technologies and data sources. | |
| | ETR3 | I hope AI platforms will be more transparent about their usage rules and limitations. | |
| Control over AI (CON) | CON1 | I find the output of AI difficult to predict at times. | [5,56,70] |
| | CON2 | Sometimes I feel I cannot effectively control the behavior of the AI. | |
| | CON3 | I would like to fine-tune the style or structure of AI-generated content more precisely. | |
| Perceived Risk (PR) | PR1 | Using AI tools makes me feel a certain degree of uncertainty. | [39,60] |
| | PR2 | I am concerned that AI outputs may cause negative consequences. | |
| | PR3 | I believe the potential risks associated with AI cannot be fully anticipated. | |
| Trust in AI (TR) | TR1 | I trust that the AI platform can reasonably manage its generated content. | [32,41] |
| | TR2 | I believe the AI platform is generally trustworthy. | |
| | TR3 | I trust these tools will not cause me harm or distress. | |
| Adoption Intention (ADI) | ADI1 | If conditions permit, I am willing to continue using AIGC tools. | [14,18,33] |
| | ADI2 | I am willing to recommend AI-generated tools to others. | |
| | ADI3 | I plan to use such AI tools more frequently in the future. | |
| | ADI4 | I will actively explore more ways to use AI-generated tools. | |

References

1. Chen, H.-C.; Chen, Z. Using ChatGPT and Midjourney to Generate Chinese Landscape Painting of Tang Poem ‘the Difficult Road to Shu’. Int. J. Soc. Sci. Artist. Innov. 2023, 3, 1–10.

2. Cao, Y.; Li, S.; Liu, Y.; Yan, Z.; Dai, Y.; Yu, P.S.; Sun, L. A Comprehensive Survey of AI-Generated Content (AIGC): A History of Generative AI from GAN to ChatGPT. arXiv 2023, arXiv:2303.04226. Available online: https://arxiv.org/abs/2303.04226 (accessed on 7 April 2025).

3. Gao, M.; Leong, W.Y. Research on the Application of AIGC in the Film Industry. J. Innov. Technol. 2024, 2024, 1–12.

4. Wu, F.; Hsiao, S.-W.; Lu, P. An AIGC-Empowered Methodology to Product Color Matching Design. Displays 2024, 81, 102623.

5. Wang, Y.; Pan, Y.; Yan, M.; Su, Z.; Luan, T.H. A Survey on ChatGPT: AI-Generated Contents, Challenges, and Solutions. IEEE Open J. Comput. Soc. 2023, 4, 280–302.

6. Song, F. Optimizing User Experience: AI-Generated Copywriting and Media Integration with ChatGPT on Xiaohongshu. Commun. Humanit. Res. 2023, 18, 215–221.

7. Murphy, C.; Thomas, F.P. Navigating the AI Revolution: This Journal’s Journey Continues. J. Spinal Cord Med. 2023, 46, 529–530.

8. Doyal, A.S.; Sender, D.; Nanda, M.; Serrano, R.A. Chat GPT and Artificial Intelligence in Medical Writing: Concerns and Ethical Considerations. Cureus 2023, 15, e43292.

9. Wang, C.; Liu, S.; Yang, H.; Guo, J.; Wu, Y.; Liu, J. Ethical Considerations of Using ChatGPT in Health Care. J. Med. Internet Res. 2023, 25, e48009.

10. Peng, L.; Zhao, B. Navigating the Ethical Landscape behind ChatGPT. Big Data Soc. 2024, 11, 20539517241237488.

11. Ghandour, A.; Woodford, B.J.; Abusaimeh, H. Ethical Considerations in the Use of ChatGPT: An Exploration through the Lens of Five Moral Dimensions. IEEE Access 2024, 12, 60682–60693.

12. Stahl, B.C.; Eke, D. The Ethics of ChatGPT—Exploring the Ethical Issues of an Emerging Technology. Int. J. Inf. Manag. 2024, 74, 102700.

13. Choudhury, A.; Elkefi, S.; Tounsi, A.; Statler, B.M. Exploring Factors Influencing User Perspective of ChatGPT as a Technology That Assists in Healthcare Decision Making: A Cross Sectional Survey Study. PLoS ONE 2024, 19, e0296151.

14. Jo, H. Decoding the ChatGPT Mystery: A Comprehensive Exploration of Factors Driving AI Language Model Adoption. Inf. Dev. 2023, 2666669231202764.

15. Balaskas, S.; Tsiantos, V.; Chatzifotiou, S.; Rigou, M. Determinants of ChatGPT Adoption Intention in Higher Education: Expanding on TAM with the Mediating Roles of Trust and Risk. Information 2025, 16, 82.

16. Chen, G.; Fan, J.; Azam, M. Exploring Artificial Intelligence (AI) Chatbots Adoption among Research Scholars Using Unified Theory of Acceptance and Use of Technology (UTAUT). J. Librariansh. Inf. Sci. 2024, 9610006241269189.

17. Shuhaiber, A.; Kuhail, M.A.; Salman, S. ChatGPT in Higher Education—A Student’s Perspective. Comput. Hum. Behav. Rep. 2025, 17, 100565.

18. Biloš, A.; Budimir, B. Understanding the Adoption Dynamics of ChatGPT among Generation Z: Insights from a Modified UTAUT2 Model. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 863–879.

19. Wang, S.-F.; Chen, C.-C. Exploring Designer Trust in Artificial Intelligence-Generated Content: TAM/TPB Model Study. Appl. Sci. 2024, 14, 6902.

20. Wang, C.; Chen, X.; Hu, Z.; Jin, S.; Gu, X. Deconstructing University Learners’ Adoption Intention towards AIGC Technology: A Mixed-Methods Study Using ChatGPT as an Example. J. Comput. Assist. Learn. 2025, 41, e13117.

21. Yu, H.; Dong, Y.; Wu, Q. User-Centric AIGC Products: Explainable Artificial Intelligence and AIGC Products. arXiv 2023, arXiv:2308.09877.

22. Boina, R.; Achanta, A. Balancing Language Brilliance with User Privacy: A Call for Ethical Data Handling in ChatGPT. Int. J. Sci. Res. 2023, 12, 440–443.

23. Smuha, N.A. The EU Approach to Ethics Guidelines for Trustworthy Artificial Intelligence. Comput. Law Rev. Int. 2019, 20, 97–106.

24. Li, Z. AI Ethics and Transparency in Operations Management: How Governance Mechanisms Can Reduce Data Bias and Privacy Risks. J. Appl. Econ. Policy Stud. 2024, 13, 89–93.

25. Lewis, D.; Hogan, L.; Filip, D.; Wall, P. Global Challenges in the Standardization of Ethics for Trustworthy AI. J. ICT Stand. 2020, 8, 123–150.

26. Qureshi, N.I.; Choudhuri, S.S.; Nagamani, Y.; Varma, R.A.; Shah, R. Ethical Considerations of AI in Financial Services: Privacy, Bias, and Algorithmic Transparency. In Proceedings of the 2024 International Conference on Knowledge Engineering and Communication Systems (ICKECS), Chikkaballapur, India, 18–19 April 2024; pp. 1–6.

27. Campbell, M.; Barthwal, A.; Joshi, S.; Shouli, A.; Shrestha, A.K. Investigation of the Privacy Concerns in AI Systems for Young Digital Citizens: A Comparative Stakeholder Analysis. In Proceedings of the 2025 IEEE 15th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2025; pp. 30–37.

28. Morante, G.; Viloria-Núñez, C.; Florez-Hamburger, J.; Capdevilla-Molinares, H. Proposal of an Ethical and Social Responsibility Framework for Sustainable Value Generation in AI. In Proceedings of the 2024 IEEE Technology and Engineering Management Society (TEMSCON LATAM), Panama, Panama, 18–19 July 2024; pp. 1–6.

29. Shrestha, A.K.; Joshi, S. Toward Ethical AI: A Qualitative Analysis of Stakeholder Perspectives. In Proceedings of the 2025 IEEE 15th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2025; pp. 22–29.

30. Yang, Q.; Lee, Y.-C. Ethical AI in Financial Inclusion: The Role of Algorithmic Fairness on User Satisfaction and Recommendation. Big Data Cogn. Comput. 2024, 8, 105.

31. Angerschmid, A.; Zhou, J.; Theuermann, K.; Chen, F.; Holzinger, A. Fairness and Explanation in AI-Informed Decision Making. Mach. Learn. Knowl. Extr. 2022, 4, 556–579.

32. Bhaskar, P.; Misra, P.; Chopra, G. Shall I Use ChatGPT? A Study on Perceived Trust and Perceived Risk towards ChatGPT Usage by Teachers at Higher Education Institutions. Int. J. Inf. Learn. Technol. 2024, 41, 428–447.

33. Zhou, T.; Lu, H. The Effect of Trust on User Adoption of AI-Generated Content. Electron. Libr. 2024, 43, 61–76.

34. Kenesei, Z.; Ásványi, K.; Kökény, L.; Jászberényi, M.; Miskolczi, M.; Gyulavári, T.; Syahrivar, J. Trust and Perceived Risk: How Different Manifestations Affect the Adoption of Autonomous Vehicles. Transp. Res. Part A Policy Pract. 2022, 164, 379–393.

35. Kumar, M.; Sharma, S.; Singh, J.B.; Dwivedi, Y.K. “Okay Google, What about My Privacy?”: User’s Privacy Perceptions and Acceptance of Voice Based Digital Assistants. Comput. Hum. Behav. 2021, 120, 106763.

36. Bawack, R.E.; Bonhoure, E.; Mallek, S. Why Would Consumers Risk Taking Purchase Recommendations from Voice Assistants? Inf. Technol. People 2024, 38, 1686–1711.

37. Rigotti, C.; Fosch-Villaronga, E. Fairness, AI & Recruitment. Comput. Law Secur. Rev. 2024, 53, 105966.

38. Haleem, A.; Javaid, M.; Khan, I.H. Current Status and Applications of Artificial Intelligence (AI) in Medical Field: An Overview. Curr. Med. Res. Pract. 2019, 9, 231–237.

39. Floridi, L.; Cowls, J.; Beltrametti, M.; Chatila, R.; Chazerand, P.; Dignum, V.; Luetge, C.; Madelin, R.; Pagallo, U.; Rossi, F.; et al. AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds Mach. 2018, 28, 689–707.

40. Al-kfairy, M.; Mustafa, D.; Kshetri, N.; Insiew, M.; Alfandi, O. Ethical Challenges and Solutions of Generative AI: An Interdisciplinary Perspective. Informatics 2024, 11, 58.

41. Law, R.; Ye, H.; Lei, S.S.I. Ethical Artificial Intelligence (AI): Principles and Practices. Int. J. Contemp. Hosp. Manag. 2024, 37, 279–295.

42. Shrestha, A.K.; Barthwal, A.; Campbell, M.; Shouli, A.; Syed, S.; Joshi, S.; Vassileva, J. Navigating AI to Unpack Youth Privacy Concerns: An In-Depth Exploration and Systematic Review. arXiv 2024, arXiv:2412.16369.

43. Stracke, C.M.; Chounta, I.A.; Holmes, W.; Tlili, A.; Bozkurt, A. A Standardised PRISMA-Based Protocol for Systematic Reviews of the Scientific Literature on Artificial Intelligence and Education (AI&ED). J. Appl. Learn. Teach. 2023, 6, 64–70.

44. Hamdan, Q.U.; Umar, W.; Hasan, M. Navigating Ethical Dilemmas of Generative AI in Medical Writing. J. Rawalpindi Med. Coll. 2024, 28, 363–364.

45. Li, F.; Yang, Y. Impact of Artificial Intelligence–Generated Content Labels on Perceived Accuracy, Message Credibility, and Sharing Intentions for Misinformation: Web-Based, Randomized, Controlled Experiment. JMIR Form. Res. 2024, 8, e60024.

46. Ozanne, M.; Bhandari, A.; Bazarova, N.N.; DiFranzo, D. Shall AI Moderators Be Made Visible? Perception of Accountability and Trust in Moderation Systems on Social Media Platforms. Big Data Soc. 2022, 9, 20539517221115666.

47. Wang, C.; Wang, K.; Bian, A.; Islam, R.; Keya, K.; Foulds, J.; Pan, S. User Acceptance of Gender Stereotypes in Automated Career Recommendations. arXiv 2021, arXiv:2106.07112.

48. Brauner, P.; Hick, A.; Philipsen, R.; Ziefle, M. What Does the Public Think about Artificial Intelligence?—A Criticality Map to Understand Bias in the Public Perception of AI. Front. Comput. Sci. 2023, 5, 1113903.

49. Guo, X.; Li, Y.; Peng, Y.; Wei, X. Copyleft for Alleviating AIGC Copyright Dilemma: What-If Analysis, Public Perception and Implications. arXiv 2024, arXiv:2402.12216.

50. Zhuang, L. AIGC (Artificial Intelligence Generated Content) Infringes the Copyright of Human Artists. Appl. Comput. Eng. 2024, 34, 31–39.

51. Wang, K.-H.; Lu, W.-C. AI-Induced Job Impact: Complementary or Substitution? Empirical Insights and Sustainable Technology Considerations. Sustain. Technol. Entrep. 2025, 4, 100085.

52. Liu, Y.; Meng, X.; Li, A. AI’s Ethical Implications: Job Displacement. Adv. Comput. Commun. 2023, 4, 138–142.

53. Shin, D. The Effects of Explainability and Causability on Perception, Trust, and Acceptance: Implications for Explainable AI. Int. J. Hum.-Comput. Stud. 2021, 146, 102551.

54. Kim, S.S.Y. Establishing Appropriate Trust in AI through Transparency and Explainability. In Proceedings of the CHI EA’24: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 11–16 May 2024; pp. 1–6.

55. Wamba-Taguimdje, S.-L.; Wamba, S.F.; Twinomurinzi, H. Why Should Users Take the Risk of Sustainable Use of Generative Artificial Intelligence Chatbots: An Exploration of ChatGPT’s Use. J. Glob. Inf. Manag. 2024, 32, 1–32.

56. Sieger, L.N.; Hermann, J.; Schomäcker, A.; Heindorf, S.; Meske, C.; Hey, C.-C.; Doğangün, A. User Involvement in Training Smart Home Agents: Increasing Perceived Control and Understanding. In Proceedings of the 10th International Conference on Human-Agent Interaction, Christchurch, New Zealand, 5–8 December 2022; pp. 76–85.

57. Aumüller, U.; Meyer, E. Trusting AI: Factors Influencing Willingness of Accountability for AI-Generated Content in the Workplace. In Human Factors and Systems Interaction; AHFE International: Orlando, FL, USA, 2024.

58. Chen, C.; Sundar, S.S. Is This AI Trained on Credible Data? The Effects of Labeling Quality and Performance Bias on User Trust. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–11.

59. Hou, T.-Y.; Tseng, Y.-C.; Yuan, C.W. Is This AI Sexist? The Effects of a Biased AI’s Anthropomorphic Appearance and Explainability on Users’ Bias Perceptions and Trust. Int. J. Inf. Manag. 2024, 76, 102775.

60. Aquilino, L.; Bisconti, P.; Marchetti, A. Trust in AI: Transparency, and Uncertainty Reduction. Development of a New Theoretical Framework. In Proceedings of the MULTITTRUST 2023 Multidisciplinary Perspectives on Human-AI Team Trust 2023, Gothenburg, Sweden, 4 December 2023.

61. Choudhury, A.; Asan, O.; Medow, J.E. Effect of Risk, Expectancy, and Trust on Clinicians’ Intent to Use an Artificial Intelligence System—Blood Utilization Calculator. Appl. Ergon. 2022, 101, 103708.

62. Marjerison, R.K.; Dong, H.; Kim, J.-M.; Zheng, H.; Zhang, Y.; Kuan, G. Understanding User Acceptance of AI-Driven Chatbots in China’s E-Commerce: The Roles of Perceived Authenticity, Usefulness, and Risk. Systems 2025, 13, 71.

63. Ashrafi, D.M.; Ahmed, S.; Shahid, T.S. Privacy or Trust: Understanding the Privacy Paradox in Users Intentions towards e-Pharmacy Adoption through the Lens of Privacy-Calculus Model. J. Sci. Technol. Policy Manag. 2024; ahead-of-print.

64. Ferketich, S. Internal Consistency Estimates of Reliability. Res. Nurs. Health 1990, 13, 437–440.

65. Sireci, S.G. The Construct of Content Validity. Soc. Indic. Res. 1998, 45, 83–117.

66. Bentler, P.M. Comparative Fit Indexes in Structural Models. Psychol. Bull. 1990, 107, 238–246.

67. Caporusso, N. Generative Artificial Intelligence and the Emergence of Creative Displacement Anxiety: Review. Res. Directs Psychol. Behav. 2023, 3, 9.

68. Molla, M.M. Artificial Intelligence (AI) and Fear of Job Displacement in Banks in Bangladesh. Int. J. Sci. Bus. 2024, 42, 1–18.

69. Liu, X.; Liu, Y. Developing and Validating a Scale of Artificial Intelligence Anxiety among Chinese EFL Teachers. Eur. J. Educ. 2025, 60, e12902.

70. Zhou, J.; Zhang, Y.; Luo, Q.; Parker, A.G.; De Choudhury, M. Synthetic Lies: Understanding AI-Generated Misinformation and Evaluating Algorithmic and Human Solutions. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–20.

71. Brauner, P.; Glawe, F.; Liehner, G.L.; Vervier, L.; Ziefle, M. AI Perceptions across Cultures: Similarities and Differences in Expectations, Risks, Benefits, Tradeoffs, and Value in Germany and China. arXiv 2024, arXiv:2412.13841.

72. Moon, D.; Ahn, S. A Study on Functional Requirements and Inspection Items for AI System Change Management and Model Improvement on the Web Platform. J. Web Eng. 2024, 23, 831–848.

73. Smith, H. Clinical AI: Opacity, Accountability, Responsibility and Liability. AI Soc. 2020, 36, 535–545.

74. Henriksen, A.; Enni, S.; Bechmann, A. Situated Accountability: Ethical Principles, Certification Standards, and Explanation Methods in Applied AI. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, Virtual, 19–21 May 2021; Association for Computing Machinery: New York, NY, USA, 2021.

75. Beckers, S. Moral Responsibility for AI Systems. Adv. Neural Inf. Process. Syst. 2023, 36, 4295–4308.

76. Kim, H. Investigating the Effects of Generative-AI Responses on User Experience after AI Hallucination. In Proceedings of the MBP 2024 Tokyo International Conference on Management & Business Practices, Tokyo, Japan, 18–19 January 2024; pp. 92–101.

77. Jia, H.; Appelman, A.; Wu, M.; Bien-Aimé, S. News Bylines and Perceived AI Authorship: Effects on Source and Message Credibility. Comput. Hum. Behav. Artif. Hum. 2024, 2, 100093.

78. Mazzi, F. Authorship in Artificial Intelligence-Generated Works: Exploring Originality in Text Prompts and Artificial Intelligence Outputs through Philosophical Foundations of Copyright and Collage Protection. J. World Intellect. Prop. 2024, 27, 410–427.

79. Ferrario, A. Design Publicity of Black Box Algorithms: A Support to the Epistemic and Ethical Justifications of Medical AI Systems. J. Med. Ethics 2022, 48, 492–494.

80. Chaudhary, G. Unveiling the Black Box: Bringing Algorithmic Transparency to AI. Masaryk Univ. J. Law Technol. 2024, 18, 93–122.

81. Thalpage, N. Unlocking the Black Box: Explainable Artificial Intelligence (XAI) for Trust and Transparency in AI Systems. J. Digit. Art Humanit. 2023, 4, 31–36.

82. Ijaiya, H. Harnessing AI for Data Privacy: Examining Risks, Opportunities and Strategic Future Directions. Int. J. Sci. Res. Arch. 2024, 13, 2878–2892.

83. Shin, D. User Perceptions of Algorithmic Decisions in the Personalized AI System: Perceptual Evaluation of Fairness, Accountability, Transparency, and Explainability. J. Broadcast. Electron. Media 2020, 64, 541–565.

84. Zhou, J.; Verma, S.; Mittal, M.; Chen, F. Understanding Relations between Perception of Fairness and Trust in Algorithmic Decision Making. In Proceedings of the 2021 8th International Conference on Behavioral and Social Computing (BESC), Doha, Qatar, 29–31 October 2021; pp. 1–5.

85. Lankton, N.K.; McKnight, D.H. What Does It Mean to Trust Facebook? Examining Technology and Interpersonal Trust Beliefs. SIGMIS Database 2011, 42, 32–54.

86. Muir, B.M. Trust in Automation: Part I. Theoretical Issues in the Study of Trust and Human Intervention in Automated Systems. Ergonomics 1994, 37, 1905–1922.

87. Featherman, M.S.; Pavlou, P.A. Predicting E-Services Adoption: A Perceived Risk Facets Perspective. Int. J. Hum.-Comput. Stud. 2003, 59, 451–474.

88. McKnight, D.H.; Carter, M.; Thatcher, J.B.; Clay, P.F. Trust in a Specific Technology: An Investigation of Its Components and Measures. ACM Trans. Manag. Inf. Syst. 2011, 2, 1–25.

| ID | Gender | Affiliation | Field of Research | Brief Description of AIGC-Related Experience |
|---|---|---|---|---|
| E01 | Male | Comprehensive University | AI Ethics | Principal investigator of a national AI ethics project; focuses on the societal impact of generative models |
| E02 | Female | AI Content Platform Company | Product Development | Participated in video generation module design and user testing for Runway-like platforms |
| E03 | Male | University | Human–Computer Interaction (HCI) | Published multiple papers on AIGC user interaction and experience |
| E04 | Male | Digital Creative Startup | Image Generation Product Design | Extensive use of Midjourney/Stable Diffusion for commercial content creation |
| E05 | Female | Social Research Institute | Sociology of Technology | Investigates how AI tools influence creative behavior among young users |
| E06 | Female | University | Digital Communication | Researches the impact of AIGC on information credibility and dissemination structures |
| E07 | Female | AI Ethics and Policy Think Tank | Public Policy | Authored several policy advisory reports on AI ethics and regulatory frameworks |
| E08 | Male | Media Industry | Video Content Editing | Hands-on experience with Runway for automated video editing; familiar with creator pain points |
| E09 | Female | University | Philosophy of Technology | Explores AI creativity, subjectivity, and algorithmic bias from a philosophical perspective |
| E10 | Male | AI Application Development Company | Dialogue System Development | Responsible for semantic control and safety mechanisms in AIGC text generation products |

| Ethical Dimension | Core Keywords | Number of Articles |
|---|---|---|
| Misinformation | misinformation, disinformation, factuality, fake content | 39 |
| Accountability | accountability, responsibility, liability | 41 |
| Algorithmic Bias | bias, discrimination, fairness | 47 |
| Creativity Ethics | copyright, IP, ownership, authorship | 29 |
| Privacy | privacy, data protection, user data | 54 |
| Job Displacement | job loss, creative replacement, automation threat | 31 |
| Ethical Transparency | transparency, explainability, black-box | 28 |
| Control over AI | control, autonomy, system unpredictability | 22 |

| Code | Ethical Dimension | User Concerns | Keywords in Literature | Conceptual Definition |
|---|---|---|---|---|
| V1 | (MIS) Misinformation | “Sometimes it makes things up with full confidence.” | misinformation, fake content, hallucination, deepfake | User concerns about the factual accuracy of AI-generated content and the potential for misleading or fabricated information. |
| | | “I can’t tell whether what it says is true or not.” | | |
| V2 | (ACC) Accountability | “If AI makes a mistake, who takes responsibility?” | accountability, liability, responsibility | User perception of unclear responsibility when AIGC produces harmful, inappropriate, or incorrect content. |
| | | “I’m just using it, I didn’t build it.” | | |
| V3 | (ALB) Algorithmic Bias | “Does it prefer white faces?” | bias, discrimination, fairness, training data | User awareness of unfair or biased outputs related to gender, race, culture, etc., caused by algorithmic training data or system design. |
| | | “The outputs are all stereotyped, no matter what I input.” | | |
| V4 | (CRE) Creativity Ethics | “Did it copy from my artwork?” | copyright, IP, authorship, originality | Ethical concerns about content ownership, originality, and the erosion of creative labor value in the age of generative AI. |
| | | “That image I made—can someone generate the same thing with AI?” | | |
| V5 | (PRI) Privacy | “Will the prompts I input be saved?” | data privacy, personal data, collection, consent | Concerns over how user data are collected, stored, and used, and whether consent and boundaries are clearly communicated. |
| | | “Are they using my data to train the model?” | | |
| V6 | (JOD) Job Displacement | “Why would clients hire me when AI can do the same?” | job loss, automation, creative replacement | Anxiety over the potential of AIGC to replace creative or professional roles, threatening job security. |
| | | “Will video editors be obsolete soon?” | | |
| V7 | (ETR) Ethical Transparency | “I don’t know what material it’s pulling from.” | transparency, explainability, black-box, usage disclosure | Perceived lack of moral and procedural clarity about how AIGC tools function, where data come from, and what boundaries are in place. |
| | | “It’s a black box—I can’t see what it’s doing.” | | |
| V8 | (CON) Control over AI | “I can’t tweak the output to match my intent.” | control, autonomy, intervention, unpredictability | A sense of limited user agency or unpredictability when interacting with AIGC tools, leading to concerns over lack of control. |
| | | “It sometimes just ignores my prompts.” | | |

| Variable | Category | Frequency | Percentage |
|---|---|---|---|
| Gender | Male | 309 | 53.09% |
| | Female | 273 | 46.91% |
| Age Group | Under 18 | 24 | 4.12% |
| | 18–25 | 178 | 30.58% |
| | 26–30 | 177 | 30.41% |
| | 31–40 | 127 | 21.82% |
| | Over 40 | 76 | 13.06% |
| Current Occupation | Student | 94 | 16.15% |
| | Teacher | 120 | 20.62% |
| | Media Professional | 109 | 18.73% |
| | Technology Industry | 86 | 14.78% |
| | Freelancer | 95 | 16.32% |
| | Other | 78 | 13.40% |
| Highest Educational Attainment | High school or below | 62 | 10.65% |
| | Associate degree | 179 | 30.76% |
| | Bachelor’s degree | 205 | 35.22% |
| | Master’s degree | 100 | 17.18% |
| | Doctorate or above | 36 | 6.19% |
| Average Weekly Usage Frequency of AIGC Tools | Rarely | 43 | 7.39% |
| | 1–2 times per week | 53 | 9.11% |
| | 3–5 times per week | 217 | 37.29% |
| | Almost daily | 175 | 30.07% |
| | Multiple times daily | 94 | 16.15% |
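
Each percentage in the demographic table is a category frequency divided by the total sample; the gender row implies 309 + 273 = 582 respondents. The following minimal Python sketch re-derives the percentages from the transcribed frequencies and checks that every variable accounts for the whole sample. The dictionary names are illustrative only.

```python
# Frequencies transcribed from the demographic table.
SAMPLE = {
    "Gender": {"Male": 309, "Female": 273},
    "Age Group": {"Under 18": 24, "18-25": 178, "26-30": 177, "31-40": 127, "Over 40": 76},
    "Current Occupation": {
        "Student": 94, "Teacher": 120, "Media Professional": 109,
        "Technology Industry": 86, "Freelancer": 95, "Other": 78,
    },
    "Highest Educational Attainment": {
        "High school or below": 62, "Associate degree": 179, "Bachelor's degree": 205,
        "Master's degree": 100, "Doctorate or above": 36,
    },
    "Average Weekly Usage Frequency": {
        "Rarely": 43, "1-2 times per week": 53, "3-5 times per week": 217,
        "Almost daily": 175, "Multiple times daily": 94,
    },
}


def percentages(counts: dict[str, int], n: int) -> dict[str, float]:
    """Share of each category in a sample of size n, in percent, rounded to two decimals."""
    return {category: round(100 * count / n, 2) for category, count in counts.items()}


# Total sample size, taken from the gender row (309 + 273).
N = sum(SAMPLE["Gender"].values())

for variable, counts in SAMPLE.items():
    # Each variable should partition the full sample.
    assert sum(counts.values()) == N, f"{variable} does not sum to {N}"
    print(variable, percentages(counts, N))
```

All five variables sum to the same total, and the recomputed shares match the reported percentages to two decimals.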

| Dimension | Number of Items | Cronbach’s Alpha |
|---|---|---|
| MIS | 3 | 0.820 |
| ACC | 3 | 0.824 |
| ALB | 3 | 0.835 |
| CRE | 3 | 0.813 |
| PRI | 3 | 0.833 |
| JOD | 3 | 0.866 |
| ETR | 3 | 0.868 |
| CON | 3 | 0.855 |
| PR | 3 | 0.846 |
| TR | 3 | 0.856 |
| ADI | 4 | 0.918 |
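
Cronbach’s alpha for a k-item scale is conventionally computed as alpha = k/(k − 1) × (1 − sum of item variances / variance of the summed score). Item-level responses are not reported here, so the minimal Python sketch below only illustrates that standard formula. The `demo` responses are invented for illustration and are not the study’s data.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; `items` holds one list of scores per item, aligned by respondent."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha is defined for two or more items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("every item needs one score per respondent")
    # Total (summed) score for each respondent across the k items.
    totals = [sum(col[i] for col in items) for i in range(n)]
    sum_item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


# Hypothetical 5-point Likert answers from six respondents to a three-item scale.
# These numbers are invented for illustration and are not the study's data.
demo = [
    [4, 5, 3, 4, 2, 5],
    [4, 4, 3, 5, 2, 4],
    [5, 5, 2, 4, 3, 5],
]
print(round(cronbach_alpha(demo), 3))
```

Sample variances are used throughout. Because the same convention applies to the item variances and to the total-score variance, the result would be unchanged with population variances.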

| Fit Index | Recommended Threshold | Observed Value | Evaluation Result |
|---|---|---|---|
| CMIN/DF | <3 | 2.197 | Excellent |
| GFI | >0.80 | 0.913 | Excellent |
| AGFI | >0.80 | 0.89 | Excellent |
| RMSEA | <0.08 | 0.045 | Excellent |
| NFI | >0.9 | 0.909 | Good |
| IFI | >0.9 | 0.948 | Excellent |
| TLI | >0.9 | 0.938 | Excellent |
| CFI | >0.9 | 0.948 | Excellent |
| PNFI | >0.5 | 0.765 | Excellent |
| PCFI | >0.5 | 0.798 | Excellent |
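
Two rows of the fit-index table are linked. RMSEA can be recovered from CMIN/DF and the sample size via the common form RMSEA = sqrt(max(χ²/df − 1, 0)/(N − 1)). The minimal Python sketch below assumes that form and takes N = 582 from the demographic table. It also applies the thresholds listed in the table to the observed values. The helper names are illustrative.

```python
import math


def rmsea_from_ratio(cmin_df: float, n: int) -> float:
    """RMSEA from the chi-square/df ratio, using sqrt(max(chi2/df - 1, 0) / (N - 1))."""
    return math.sqrt(max(cmin_df - 1.0, 0.0) / (n - 1))


# Recommended thresholds as listed in the fit-index table: (comparison, cut-off).
THRESHOLDS = {
    "CMIN/DF": ("<", 3.0), "GFI": (">", 0.80), "AGFI": (">", 0.80),
    "RMSEA": ("<", 0.08), "NFI": (">", 0.90), "IFI": (">", 0.90),
    "TLI": (">", 0.90), "CFI": (">", 0.90), "PNFI": (">", 0.50), "PCFI": (">", 0.50),
}

# Observed values from the same table.
OBSERVED = {
    "CMIN/DF": 2.197, "GFI": 0.913, "AGFI": 0.89, "RMSEA": 0.045, "NFI": 0.909,
    "IFI": 0.948, "TLI": 0.938, "CFI": 0.948, "PNFI": 0.765, "PCFI": 0.798,
}


def meets(index: str, value: float) -> bool:
    """True if `value` satisfies the recommended threshold for `index`."""
    comparison, cut_off = THRESHOLDS[index]
    return value < cut_off if comparison == "<" else value > cut_off


N = 582  # sample size from the demographic table
print(round(rmsea_from_ratio(OBSERVED["CMIN/DF"], N), 3))
print(all(meets(name, value) for name, value in OBSERVED.items()))
```

With CMIN/DF = 2.197 this yields 0.045, matching the reported RMSEA. Some software divides by N rather than N − 1, which gives the same value to three decimals at this sample size. The second fit-index table (CMIN/DF = 2.175) also rounds to 0.045.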

| Dimension | Observed Variable | Factor Loading | S.E. | C.R. | P | CR | AVE |
|---|---|---|---|---|---|---|---|
| MIS | MIS1 | 0.751 | | | | 0.824 | 0.611 |
| | MIS2 | 0.712 | 0.063 | 16.117 | *** | | |
| | MIS3 | 0.874 | 0.069 | 17.708 | *** | | |
| ACC | ACC1 | 0.785 | | | | 0.828 | 0.617 |
| | ACC2 | 0.716 | 0.057 | 16.681 | *** | | |
| | ACC3 | 0.849 | 0.058 | 18.625 | *** | | |
| ALB | ALB1 | 0.778 | | | | 0.835 | 0.629 |
| | ALB2 | 0.799 | 0.057 | 18.307 | *** | | |
| | ALB3 | 0.801 | 0.058 | 18.332 | *** | | |
| CRE | CRE1 | 0.744 | | | | 0.813 | 0.592 |
| | CRE2 | 0.769 | 0.064 | 16.157 | *** | | |
| | CRE3 | 0.795 | 0.068 | 16.386 | *** | | |
| PRI | PRI1 | 0.790 | | | | 0.833 | 0.625 |
| | PRI2 | 0.769 | 0.056 | 17.893 | *** | | |
| | PRI3 | 0.813 | 0.057 | 18.614 | *** | | |
| JOD | JOD1 | 0.805 | | | | 0.867 | 0.685 |
| | JOD2 | 0.810 | 0.050 | 20.569 | *** | | |
| | JOD3 | 0.867 | 0.048 | 21.690 | *** | | |
| ETR | ETR1 | 0.817 | | | | 0.869 | 0.689 |
| | ETR2 | 0.805 | 0.046 | 20.808 | *** | | |
| | ETR3 | 0.867 | 0.048 | 22.130 | *** | | |
| CON | CON1 | 0.802 | | | | 0.855 | 0.663 |
| | CON2 | 0.795 | 0.052 | 19.597 | *** | | |
| | CON3 | 0.845 | 0.052 | 20.530 | *** | | |
| PR | PR1 | 0.827 | | | | 0.845 | 0.645 |
| | PR2 | 0.774 | 0.049 | 19.473 | *** | | |
| | PR3 | 0.807 | 0.049 | 20.321 | *** | | |
| TR | TR1 | 0.836 | | | | 0.857 | 0.666 |
| | TR2 | 0.801 | 0.050 | 21.131 | *** | | |
| | TR3 | 0.811 | 0.047 | 21.420 | *** | | |
| ADI | ADI1 | 0.845 | | | | 0.918 | 0.738 |
| | ADI2 | 0.885 | 0.043 | 26.981 | *** | | |
| | ADI3 | 0.860 | 0.041 | 25.852 | *** | | |
| | ADI4 | 0.845 | 0.038 | 25.133 | *** | | |

Note: *** p < 0.001. S.E., C.R., and P are not reported for the first indicator of each dimension, whose loading serves as the fixed reference for model identification.
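CR and AVE follow from the standardized loadings via CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ²/k. The sketch below uses a hypothetical helper, `cr_and_ave`, to reproduce the MIS row from its three loadings; small last-digit differences can arise because the tabulated loadings are themselves rounded.

```python
from typing import Sequence, Tuple


def cr_and_ave(loadings: Sequence[float]) -> Tuple[float, float]:
    """Composite reliability and average variance extracted from standardized loadings."""
    sum_lam = sum(loadings)
    sum_sq = sum(lam * lam for lam in loadings)          # variance explained, summed over items
    error_var = sum(1 - lam * lam for lam in loadings)   # residual variance under standardization
    cr = sum_lam ** 2 / (sum_lam ** 2 + error_var)
    ave = sum_sq / len(loadings)
    return cr, ave


cr, ave = cr_and_ave([0.751, 0.712, 0.874])  # MIS1-MIS3 from the table above
print(f"CR = {cr:.3f}, AVE = {ave:.3f}")
```

For MIS this prints CR = 0.824 and AVE = 0.612; the table reports an AVE of 0.611, and the one-digit gap reflects the rounded inputs.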

| | MIS | ACC | ALB | CRE | PRI | JOD | ETR | CON | PR | TR | ADI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MIS | 0.782 | | | | | | | | | | |
| ACC | 0.332 | 0.785 | | | | | | | | | |
| ALB | 0.362 | 0.341 | 0.793 | | | | | | | | |
| CRE | 0.262 | 0.345 | 0.327 | 0.77 | | | | | | | |
| PRI | 0.311 | 0.419 | 0.406 | 0.306 | 0.791 | | | | | | |
| JOD | 0.352 | 0.423 | 0.339 | 0.317 | 0.410 | 0.828 | | | | | |
| ETR | 0.240 | 0.283 | 0.466 | 0.307 | 0.362 | 0.350 | 0.83 | | | | |
| CON | 0.346 | 0.307 | 0.456 | 0.326 | 0.422 | 0.359 | 0.378 | 0.814 | | | |
| PR | 0.424 | 0.439 | 0.386 | 0.329 | 0.454 | 0.504 | 0.417 | 0.444 | 0.803 | | |
| TR | −0.377 | −0.467 | −0.504 | −0.488 | −0.523 | −0.451 | −0.500 | −0.515 | −0.592 | 0.816 | |
| ADI | −0.118 | −0.173 | −0.196 | −0.189 | −0.227 | −0.167 | −0.235 | −0.169 | −0.377 | 0.341 | 0.859 |

Note: Diagonal entries are the square roots of each construct’s AVE; off-diagonal entries are inter-construct correlations.
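Under the Fornell–Larcker criterion, discriminant validity holds when each construct’s √AVE exceeds the absolute value of its correlations with every other construct. The sketch below checks the PR, TR, and ADI block, taking AVE values from the loading table and correlations from the matrix above; the function name and dictionary layout are illustrative assumptions.

```python
import math
from typing import Dict, Tuple


def fornell_larcker_ok(ave: Dict[str, float],
                       corr: Dict[Tuple[str, str], float]) -> bool:
    """True if, for every pair, |r| is below the square root of AVE of both constructs."""
    for (a, b), r in corr.items():
        # The tighter bound is the smaller of the two sqrt(AVE) values
        if abs(r) >= min(math.sqrt(ave[a]), math.sqrt(ave[b])):
            return False
    return True


ave = {"PR": 0.645, "TR": 0.666, "ADI": 0.738}
corr = {("PR", "TR"): -0.592, ("PR", "ADI"): -0.377, ("TR", "ADI"): 0.341}
print(fornell_larcker_ok(ave, corr))
```

The strongest correlation in this block, PR–TR at −0.592, stays below both √0.645 ≈ 0.803 and √0.666 ≈ 0.816, so the check passes. Extending the two dictionaries to all eleven constructs applies the same test to the full matrix.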

| Fit Index | Recommended Threshold | Observed Value | Evaluation Result |
|---|---|---|---|
| CMIN/DF | <3 | 2.175 | Excellent |
| GFI | >0.80 | 0.913 | Excellent |
| AGFI | >0.80 | 0.892 | Excellent |
| RMSEA | <0.08 | 0.045 | Excellent |
| NFI | >0.9 | 0.909 | Excellent |
| IFI | >0.9 | 0.948 | Excellent |
| TLI | >0.9 | 0.939 | Excellent |
| CFI | >0.9 | 0.948 | Excellent |
| PNFI | >0.5 | 0.778 | Excellent |
| PCFI | >0.5 | 0.811 | Excellent |

| Path | Path Coefficient | S.E. | C.R. | p |
|---|---|---|---|---|
| MIS → PR | 0.161 | 0.060 | 3.420 | <0.001 |
| ACC → PR | 0.137 | 0.059 | 2.738 | 0.006 |
| ALB → PR | 0.011 | 0.058 | 0.197 | 0.844 |
| CRE → PR | 0.037 | 0.055 | 0.792 | 0.429 |
| PRI → PR | 0.131 | 0.056 | 2.530 | 0.011 |
| JOD → PR | 0.216 | 0.052 | 4.322 | <0.001 |
| ETR → PR | 0.150 | 0.048 | 3.079 | 0.002 |
| CON → PR | 0.136 | 0.053 | 2.688 | 0.007 |
| MIS → TR | −0.016 | 0.053 | −0.382 | 0.702 |
| ACC → TR | −0.098 | 0.052 | −2.135 | 0.033 |
| ALB → TR | −0.113 | 0.051 | −2.339 | 0.019 |
| CRE → TR | −0.195 | 0.049 | −4.489 | <0.001 |
| PRI → TR | −0.145 | 0.050 | −3.057 | 0.002 |
| JOD → TR | −0.026 | 0.047 | −0.567 | 0.571 |
| ETR → TR | −0.148 | 0.043 | −3.294 | <0.001 |
| CON → TR | −0.133 | 0.047 | −2.864 | 0.004 |
| PR → TR | −0.234 | 0.050 | −4.503 | <0.001 |
| PR → ADI | −0.259 | 0.070 | −4.379 | <0.001 |
| TR → ADI | 0.187 | 0.071 | 3.204 | 0.001 |
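Two quantities can be cross-checked from the path table. First, treating each C.R. as a standard normal z gives the two-tailed p as erfc(|C.R.|/√2). The listed C.R. is not the listed coefficient divided by its S.E. (0.161/0.060 ≈ 2.68 against 3.420 for MIS → PR), which suggests the coefficients are standardized estimates while S.E. and C.R. come from the unstandardized solution; the sketch therefore works from C.R. alone. Second, because the table lists no direct path from any ethical factor to ADI, a factor’s indirect effect can be approximated with the product-of-coefficients (path-tracing) rule over three routes: factor → PR → ADI, factor → TR → ADI, and factor → PR → TR → ADI. This point estimate is an illustration, not the authors’ reported mediation analysis, and its significance would still need a bootstrap confidence interval that these values alone cannot provide. Both function names are hypothetical.

```python
import math


def two_tailed_p(critical_ratio: float) -> float:
    """Two-tailed p-value for a critical ratio treated as a standard normal z."""
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|))
    return math.erfc(abs(critical_ratio) / math.sqrt(2))


def total_indirect(to_pr: float, to_tr: float,
                   pr_to_tr: float, pr_to_adi: float, tr_to_adi: float) -> float:
    """Point estimate of one factor's indirect effect on ADI by path tracing."""
    via_pr = to_pr * pr_to_adi                # factor -> PR -> ADI
    via_tr = to_tr * tr_to_adi                # factor -> TR -> ADI
    via_pr_tr = to_pr * pr_to_tr * tr_to_adi  # factor -> PR -> TR -> ADI
    return via_pr + via_tr + via_pr_tr


print(round(two_tailed_p(-2.135), 3))  # ACC -> TR row
# MIS: MIS -> PR = 0.161, MIS -> TR = -0.016; shared paths PR -> TR, PR -> ADI, TR -> ADI
print(round(total_indirect(0.161, -0.016, -0.234, -0.259, 0.187), 3))
```

The first call returns 0.033, matching the ACC → TR row; the second gives about −0.052 as the combined indirect effect of MIS on ADI.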

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, T.; Tian, Y.; Chen, Y.; Huang, Y.; Pan, Y.; Jang, W. How Do Ethical Factors Affect User Trust and Adoption Intentions of AI-Generated Content Tools? Evidence from a Risk-Trust Perspective. Systems 2025, 13, 461. https://doi.org/10.3390/systems13060461


Chicago/Turabian Style: Yu, Tao, Yihuan Tian, Yihui Chen, Yang Huang, Younghwan Pan, and Wansok Jang. 2025. "How Do Ethical Factors Affect User Trust and Adoption Intentions of AI-Generated Content Tools? Evidence from a Risk-Trust Perspective" Systems 13, no. 6: 461. https://doi.org/10.3390/systems13060461

APA Style: Yu, T., Tian, Y., Chen, Y., Huang, Y., Pan, Y., & Jang, W. (2025). How Do Ethical Factors Affect User Trust and Adoption Intentions of AI-Generated Content Tools? Evidence from a Risk-Trust Perspective. Systems, 13(6), 461. https://doi.org/10.3390/systems13060461