Abstract

Recent advancements in artificial intelligence, particularly in generative technologies, have significantly redefined the design paradigm for community public cultural spaces, shifting from a traditionally designer-centric model to one that emphasizes multi-stakeholder co-creation. This paper focuses on the design of public cultural spaces at the community scale, proposing a generative dynamic workflow-based co-creation framework that integrates large language models (LLMs) with text-to-image technologies. The framework includes a natural dialogue-based needs-capturing module, a needs analysis module, and a needs expression text-to-image module. This study validates the proposed framework by developing a system prototype for renovating a public space in a student dormitory at Tongji University’s Jiading campus. The results show that the prototype demonstrates good usability and a relatively satisfactory capability in capturing user requirements. These findings indicate that this research helps address key limitations in traditional community design practices, such as limited resident participation, inefficient integration of diverse needs, and slow iteration processes.

1. Introduction

Co-creation has recently become a significant topic in urban planning and design, highlighting the value of multi-party participation and collective wisdom. Throughout this process, the needs of residents, community managers, business owners, and designers often intertwine, resulting in a diversified and complex landscape of needs [1]. How to effectively facilitate communication among these different stakeholders—especially regarding needs-expression channels for community public spaces and methods for integrating those needs into decision-making—has become pivotal to ensuring both the quality of co-creation design and a sense of public engagement [2].

In practice, the design of community public cultural spaces frequently suffers from low levels of resident participation. Key reasons include unclear pathways for involving residents in the design process, practical barriers to participation, and the absence of any guarantee that residents’ input will receive a timely response. Traditional design approaches typically rely on face-to-face meetings and surveys, which are inefficient and often fail to capture residents’ genuine needs. Some residents may not be able to clearly express their needs through conventional channels, or they may be hindered from participating widely due to constraints of time and location. Even when residents do participate, the designer-led nature of traditional approaches often prevents the efficient integration of multiple stakeholders’ needs [3]. The design process tends to be lengthy and intricate, making it difficult to iterate solutions swiftly in response to resident feedback. As a result, the final design often diverges significantly from residents’ expectations [4]. Consequently, improving the efficiency of need expression, promoting the effective integration of diverse needs, and accelerating the generation and iteration of design solutions remain critical challenges.

In recent years, large language models (LLMs), a cornerstone technology of generative artificial intelligence, have advanced rapidly [5]. Benefiting from large-scale data training and breakthroughs in deep learning, LLMs now possess robust language comprehension and generation capabilities, supporting a broad range of tasks—from natural language processing to cross-domain knowledge reasoning [6]. In the realm of community public space design, LLMs open up new possibilities for facilitating communication and collaboration among diverse stakeholders, particularly when it comes to integrating heterogeneous needs and automatically producing design solutions. By leveraging conversational interactions, LLMs can more accurately capture the needs of community members and generate visualized design concepts accordingly, thus providing highly precise decision support for community space design.

This paper proposes a needs co-creation framework for community public spaces based on a generative dynamic workflow, aiming to address the issues of low resident participation, inefficient needs consolidation, and delayed designer decision-making commonly observed in traditional design models. The framework comprises three main components. The first is the natural dialogue-based needs-capturing module. By leveraging the semantic understanding of conversational LLMs and employing a tree-structured survey process with proactive guidance strategies, the module captures the multidimensional needs of multiple stakeholders in the renovation of public cultural spaces. The second is the needs analysis module. By tokenizing and modeling the large volume of semi-structured data collected, it extracts design preferences and personalized requirements and transforms disparate information into a semantic, hierarchical data architecture, thereby establishing a foundation for generating design images. The third is the needs expression text-to-image module. This module uses LLMs to convert structured, co-created needs into coherent textual descriptions of the public space design and feeds them to a text-to-image model tuned for sketch design, bridging text and visuals in a way that reduces misinterpretation and enhances stakeholder consensus.

Finally, an experimental renovation of the male dormitory public cultural space at Tongji University’s Jiading campus was conducted to evaluate system usability and performance. A usability scale and interaction experience metrics were employed to assess the system’s ease of use, the accuracy of the needs capture, and the consistency of the co-creation design results, thereby verifying its effectiveness and potential for optimization in actual scenarios.

This research makes three primary contributions: (1) Generative dynamic workflow for rapid, multi-stakeholder input. Existing methods typically rely on sequential, designer-driven procedures, such as paper surveys and iterative face-to-face meetings, which are time-consuming and prone to information loss. By contrast, our generative dynamic workflow employs LLMs and text-to-image synthesis to translate multistakeholder needs directly into visualized design concepts within minutes, allowing various community members to participate directly in the design process of public cultural spaces. This shift from a designer-centric workflow to a resident-inclusive, AI-augmented one reduces the latency between idea capture and concept visualization, thereby increasing needs-gathering and communication efficiency by an order of magnitude. (2) Structured, multilevel needs analysis that overcomes data fragmentation. Conventional needs assessment protocols often yield disparate qualitative notes or spreadsheet fragments that require manual consolidation, leading to analytic inefficiency and imprecise decisions. Our approach introduces a hierarchical parsing mechanism that converts unstructured feedback into a standardized, multidimensional data schema. This structure overcomes the fragmentary nature of traditional needs research and substantially increases the standardization of needs information, thereby enhancing the practicality of subsequent design applications. (3) Real-time co-creation interactive system embedded in public social media platforms. Underpinned by the generative dynamic workflow, the system transforms residents’ design requirements into intuitive design sketches, significantly reducing both the technical and psychological barriers to expressing needs. This real-time interactive model accommodates multiple stakeholder groups and lowers the threshold for vulnerable communities to participate in digital governance. Consequently, it provides a highly efficient and feasible pathway for sustained, long-term engagement by various parties in the design of community public cultural spaces.

2. Literature Review

2.1. Generative Dialogue LLMs

The earliest AI dialog system can be traced back to ELIZA, developed in 1966 by Joseph Weizenbaum [7], which used a text interface for simple interactive conversations with users. Initially designed to simulate a psychotherapeutic dialogue scenario, it tested whether computers could exhibit human-like interactive capabilities. Subsequently, early AI dialog systems began to develop rudimentary Natural Language Processing (NLP) capacities, attempting to mimic human emotional and cognitive responses through scripted interactions. After 2000, as computer technology advanced rapidly and new interaction patterns emerged, conversational AI evolved from simple text exchanges to more complex task handling and multimodal interactions, enabling conversational agents to find wide applications in customer service, educational tutoring, and healthcare. In recent years, the rise of generative AI technologies—represented by LLMs such as ChatGPT—has further opened up new possibilities for AI dialog systems to handle complex tasks and achieve multiparty interaction.

Against this technological backdrop, researchers have begun exploring the introduction of AI dialog systems and conversational agents into community planning and multistakeholder collaboration, aiming to improve communication efficiency and decision-making quality among diverse stakeholders. For example, the Situate AI Guide proposed by Kawakami et al. [8] establishes a toolkit for collaborative design, supporting early-stage deliberations among multiple stakeholders in the public sector. Although primarily geared toward AI decision-making in public agencies, its cross-stakeholder communication and reflection mechanisms are equally insightful for community space design. Quan and Lee [9] developed a ChatGPT-assisted Participatory Planning Support System (PPSS) that uses personalized dialogs, tasks, and process coordination agents to provide end-to-end support, from problem definition and data analysis to design generation and evaluation. This system captures the needs of residents, businesses, and designers with high accuracy while employing deep generative models for rapid output of design proposals, thereby boosting public participation and design iteration efficiency. Its hypothetical “New Seoullo” case showcases significant potential for real-world application. Similarly, Zhou et al. [10] built an LLM-based multi-agent collaboration framework. Through role-playing and a fishbowl discussion mechanism, it enabled multiple rounds of dialog between residents and planners, effectively mitigating the information overload problem often caused by a large number of participants in traditional methods, thus substantially improving collaborative discussion and decision-making quality. On the other hand, research by Kawakami et al. [11] indicates that current algorithmic decision-support tools often fall short in interface design and the presentation of explanatory information, leading to user misunderstandings regarding risk scoring. Although their study focuses on the child welfare domain, their exploration of using dialog to enhance human–computer interaction and increase transparency can also inform community planning efforts.

In summary, multi-agent platforms based on AI dialog systems and conversational agents can partially address the challenges of limited participation in traditional processes by simulating multirole dialog to capture and integrate diverse demands in real time, thus driving iterative optimization of design proposals. However, challenges remain in terms of information interpretation, uncertainty in risk presentation, and role-based communication. Future research should further explore prompt design, knowledge integration, and interface interaction to achieve the best balance in human–machine collaborative decision-making.

2.2. Image-Generation AI in Spatial Design

Generative AI, particularly text-to-image (T2I) models, has made significant progress in deep learning and artificial intelligence-generated content (AIGC). Early approaches, such as Generative Adversarial Networks (GANs), laid the foundation for image synthesis but suffered from mode collapse [12]. Diffusion models (DMs) later emerged as a more stable and effective alternative, with Ho et al. refining the denoising diffusion probabilistic models (DDPMs) to significantly enhance image generation quality [13]. Pretrained text-to-image models such as Imagen [14], DALL·E 2 [15], and Stable Diffusion [16] further improved the alignment between text prompts and generated images, making AI-driven image synthesis more reliable and applicable across various domains. More recently, multimodal-guided approaches have enabled advanced image editing techniques, leveraging T2I models for applications such as style transfer, semantic manipulation, and controllable image generation [17]. Despite these advancements, challenges such as maintaining semantic consistency, improving generalization, and ensuring interpretability remain open research directions [18].

With the rapid advancement of generative AI, researchers have increasingly explored its applications beyond image generation, particularly in spatial design and decision-making. In urban planning, data-driven methods have been leveraged to optimize environmental sustainability, as seen in studies on climate-responsive urban planning, where AI-assisted generative models analyze meteorological data and urban morphology to mitigate heat island effects and improve livability [19]. Similarly, AI-driven structural design has demonstrated the potential to automate and optimize architectural layouts, enhancing both efficiency and precision [20]. In design research, Thoring et al. [21] proposed the concept of the “Augmented Designer”, emphasizing that AI should augment rather than replace human creativity, using generative techniques to facilitate ideation and innovation. Beyond architecture, AI-based decision-making frameworks have been applied in complex systems such as urban planning, education, and policy formulation, reinforcing the notion that AI serves as a tool to enhance rather than supplant human judgment [22]. Furthermore, generative AI has been explored in personalized decision-making processes, such as in tourism, where models like ChatGPT provide tailored recommendations and itinerary planning, significantly improving user experience [23]. These studies highlight the growing role of generative AI in augmenting decision-making across various domains, with potential applications in community planning and urban development.

It is worth emphasizing that while generative AI has made significant progress in image synthesis and spatial design, its application in community co-creation remains in its early stages. Community co-creation often involves collaboration among diverse stakeholders, integration of opinions in complex contexts, and the expression of creative ideas—all of which align closely with the strengths of generative AI in content generation and interactive engagement. Therefore, exploring the role of generative AI in supporting community co-creation is not only meaningful but also opens up new research directions for advancing human-AI collaborative innovation.

2.3. Collaborative Co-Creation in Community Public Spaces

In the co-creation process of community public cultural spaces, multistakeholder collaborative governance has become a key pathway to addressing design and participation challenges. Natarajan [24] proposes the theory of “social–spatial learning”, emphasizing that community members can gain a deeper understanding of space by sharing local experience and knowledge, thereby incorporating local wisdom into participatory planning. Tian and Wang [25] find that in intelligent community collaborative governance, factors such as collaborative entities, supporting facilities, and community culture determine the effectiveness of multiparty interaction. They also note that traditional face-to-face meetings and surveys fall short in effectively conveying community demands, while digital platforms and LLMs can facilitate real-time, multichannel communication and demand integration, accelerating the iterative optimization of design plans. Meanwhile, Qian [26], in analyzing the governance practices of suburban relocation communities in China, uncovers horizontal and vertical coordination mechanisms among residents’ committees, homeowners’ committees, and property management companies. Although the allocation of resources in these systems remains largely government-led, they provide empirical references for multiparty collaborative co-creation. Further, research by Grootjans et al. [27] points out that setting shared goals, ensuring transparent communication, and promoting face-to-face interaction are at the core of collaborative governance—elements that also apply to the design and management of public cultural spaces.

Collaborative co-creation in community public cultural spaces is becoming an important trend in urban planning; however, traditional participatory models still exhibit significant limitations—particularly with respect to ambiguous resident participation pathways, shallow engagement, and low influence on decision-making [28]. Often, residents are relegated to a passive, symbolic role in which planning decisions are predetermined by design experts, leaving residents to offer only limited feedback at later stages in the plan. This dynamic hinders the expression of residents’ own creativity and needs, thereby diminishing their sense of engagement and community identity [28].

In particular, traditional face-to-face consultations and surveys struggle to cover the needs of various age groups or more vulnerable populations, further constraining the overall impact of participatory processes [29]. Meanwhile, although older communities may possess abundant idle spaces, resident knowledge, and cultural resources, they lack effective mechanisms for developing and integrating these resources, limiting the potential for sustained community revitalization [30]. The swift evolution of generative AI is ushering in unprecedented modes of human–AI co-creation, empowering professionals to enrich their creative workflows. Gmeiner et al. [31] developed an evidence-based workflow that leverages learning-science principles to design and rigorously evaluate interface supports that help professionals acquire conceptual and procedural skills for effective human–AI co-creation with generative AI systems. Ford and Bryan-Kinns [32] surveyed 122 music-focused AI co-creation systems and proposed that, instead of merely lowering technical barriers, design should foreground “reflection-oriented” interfaces, such as latent-space visualizations and suggestive cross-genre prompts, to help novices critically examine and evolve their creative intentions while collaborating with generative models. Zamfirescu-Pereira et al. [33] introduced BotDesigner, a no-code iterative workflow that enables non-AI experts to co-create instructional chatbots with GPT-3 via prompt engineering, demonstrating how generative dynamic workflows can democratize human–AI co-creation in real-world design scenarios.

To address these issues, the application of new technologies has proven to be an effective solution. For instance, using augmented reality enables residents to engage in planning decisions in a deeper, more personalized manner, thereby enhancing both participation and satisfaction [29]. Design-driven social innovation models (such as living labs) can effectively reorganize community resources and resident needs, forming a resident demand-oriented and sustainable community entrepreneurship ecosystem [30].

In summary, collaborative co-creation involving multiple stakeholders more accurately reflects residents’ actual needs, promoting a deeper integration of public space design with community culture and local knowledge. This approach enables public cultural spaces to evolve toward greater intelligence, inclusivity, and participatory engagement, thereby offering both theoretical support and practical examples for constructing diverse, open, and equitable co-creation mechanisms.

3. Methods

In the current planning, design, and implementation of community public cultural spaces, a crucial challenge is how to efficiently integrate multiple stakeholders’ needs in order to enhance participation and decision-making efficiency among all parties involved. Traditional new construction and renovation projects for community public cultural spaces often rely on numerous face-to-face meetings and sample surveys—methods that are both time-consuming and labor-intensive, while still failing to encompass the genuine needs of every stakeholder in the community. As a result, it is not uncommon to see newly built or renovated public cultural spaces left unused or repurposed, largely due to the lack of comprehensive capture and effective coordination of residents’ diverse needs. With the recent explosion of generative artificial intelligence technologies, employing a generative dynamic workflow that integrates multiple models to achieve co-creation in community public cultural space design has demonstrated significant value.

This paper proposes a collaborative co-creation framework for community public space needs, built on a generative dynamic workflow, and applies it to real-world tasks through a community public cultural space co-creation system. The objective is to construct a structured, multiparty interaction framework that enables efficient co-creation, analysis, and expression in the collaborative design of community public spaces. Concretely, by implementing an intelligent, end-to-end design process of “needs co-creation—needs analysis—needs expression”, the system can conduct large-scale, efficient needs elicitation, precise analysis, and visual feedback for community users.

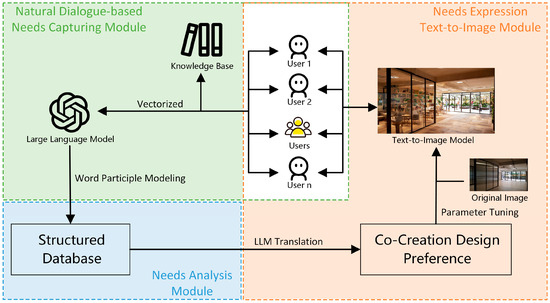

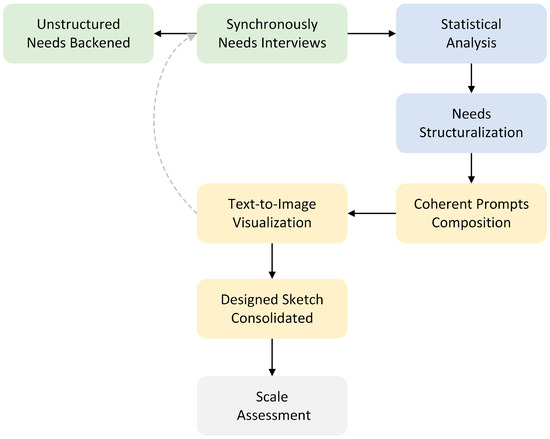

Functionally, the system primarily uses public social media platforms (e.g., WeChat accounts) as the front-end interface, while the back-end integrates multiple generative models and modules to form a highly extensible and interactive system for the co-creation of public cultural spaces. As shown in Figure 1, the core components include:

Figure 1. Overall System Framework.

- A natural dialogue-based needs capturing module. This module automatically extracts public space design requirements from residents’ natural language interactions. By leveraging a carefully orchestrated LLM mechanism that employs tree-structured dialogue scripts and proactive guidance, it captures multidimensional needs information in the process of constructing or renovating community public cultural spaces and stores it in semi-structured form, thereby simplifying the needs-gathering process and enhancing overall efficiency.

- A needs analysis module. This module processes the large volumes of semi-structured data obtained, performing tokenization and modeling to derive residents’ design preferences and their collective and personalized needs. In doing so, it transforms scattered and varied information into a semantic, hierarchical data architecture, laying a clear foundation for design image generation by the generative models.

- A needs expression text-to-image module. This module converts personalized, co-created needs into preliminary design sketches for community residents to reference. It invokes LLM interfaces to organize structured needs data into logically coherent textual descriptions and then feeds those descriptions into a text-to-image model whose parameters have been tested and optimized for community public cultural space design. The resulting sketches visually capture the consensus reached among multiple stakeholders during co-creation, which significantly enhances the efficiency of subsequent designer decision-making and refinement.

By seamlessly integrating natural language needs collection, structured data analysis, and design visualization, this collaborative co-creation framework for community public spaces offers systematic support for co-design and co-creation processes. Overall, the method expands the theoretical application of generative models in the realm of community co-creation and provides a robust technological foundation for collaborative participation among multiple stakeholders.

3.1. Natural Dialogue-Based Needs Capturing Module

The Natural Dialogue-Based Needs Capturing Module leverages a structured interaction framework built upon LLMs to capture the multidimensional design requirements of multiple stakeholders in the context of community public cultural space construction and renovation. By providing detailed, structured data, it supports collaborative co-creation in design.

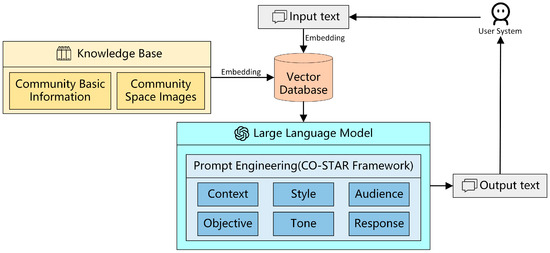

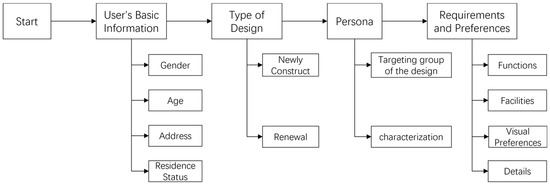

As shown in Figure 2, the module is primarily responsible for collecting and interacting with users during the initial stages of needs gathering [34]. The module incorporates a set of prompt engineering strategies based on ChatGPT-4’s multiturn conversational capabilities to ensure a comprehensive capture of user needs. A library of pre-designed conversation templates and guidance scripts was developed under the CO-STAR framework [35]. These templates include clearly defined conversational trees that guide users through multiple stages, from basic demographic data collection to detailed spatial feature specification. By programmatically refining typical designer research workflows, such as interview steps and needs assessments, into interactive prompts, the module constructs a tree-structured research workflow (see Figure 3). Specifically, once a user initiates a conversation, the system first collects basic resident information, including gender, age, location, and living conditions. To ensure user privacy, the system employs an anonymization process in which user identities are decoupled from their responses. During the initial interaction, no sensitive personally identifiable information is recorded. Instead, each user is assigned a randomly generated user ID, which is used throughout data collection for consistency and verification, so that all stored data remain anonymized and privacy is maintained throughout the system’s operation. Next, the system identifies whether the targeted public cultural space is a new project or a renovation. It then guides the user in describing the demographic profile of potential users (i.e., community groups and their characteristics). Finally, it prompts a discussion of detailed spatial features to collect more granular needs data. Throughout, residents are encouraged to express their usage habits, improvement suggestions, and anticipated design requirements for the public cultural space, while the system saves the unstructured needs data from these conversations as semi-structured information.

Figure 2. Framework of the Natural Dialogue-Based Needs-Capturing Module.

Figure 3. Tree-Structured Survey Process.
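The tree-structured survey described above can be sketched as a small walk over question nodes. This is a minimal illustration under stated assumptions, not the system's actual implementation: the names `SurveyNode`, `build_tree`, and `run_survey` are hypothetical, the extra `current_issues` branch for renovations is invented to show branching, and scripted answers stand in for a live LLM-driven conversation.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class SurveyNode:
    """One question in the survey tree (hypothetical structure)."""
    key: str                                      # field name stored in the record
    question: str                                 # text shown to the resident
    branches: dict = field(default_factory=dict)  # normalized answer -> next node
    default: "SurveyNode | None" = None           # next node when no branch matches


def build_tree() -> SurveyNode:
    # Stages follow the order in the text: profile, project type,
    # user groups, then detailed spatial features.
    features = SurveyNode("spatial_features", "Which spatial features matter most to you?")
    users = SurveyNode("user_groups", "Who will mainly use this space?", default=features)
    # Assumed extra branch: renovations first ask about existing problems.
    issues = SurveyNode("current_issues", "What does not work well today?", default=users)
    project = SurveyNode(
        "project_type",
        "Is this a new project or a renovation?",
        branches={"renovation": issues},
        default=users,
    )
    return SurveyNode(
        "profile",
        "Please share your gender, age, location, and living conditions.",
        default=project,
    )


def run_survey(root: SurveyNode, answers: list) -> dict:
    """Walk the tree with scripted answers; key the record by a random ID."""
    record = {"user_id": uuid.uuid4().hex}  # random ID, decoupled from identity
    node = root
    for answer in answers:
        if node is None:  # reached a leaf; ignore any extra answers
            break
        record[node.key] = answer
        node = node.branches.get(answer.strip().lower(), node.default)
    return record
```

In use, `run_survey(build_tree(), [...])` returns one dictionary per resident. Only the random `user_id` links a resident's answers together, which mirrors the anonymization step described in the text.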

By systematically designing the LLM prompts along the dimensions of contextual background, task objectives, dialogue style, emotional tone, target audience, and response format, stakeholders in community public cultural spaces can provide feedback on their needs through everyday conversational means (for example, via automated interactions on WeChat). This greatly streamlines the needs-gathering process. Additionally, during the interaction, if the initial prompt fails to capture certain types of needs (such as specific functional or aesthetic requirements), fallback strategies relying on adaptive re-prompting are activated, rephrasing the question and providing examples to elicit more precise responses. This helps ensure that even non-specific or vague feedback is eventually transformed into actionable, structured data.
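The six prompt dimensions and the re-prompting fallback can be illustrated with a short sketch. Everything here is an assumption made for illustration: `build_costar_prompt` and `ask_with_fallback` are hypothetical names, `ask` stands in for one conversational turn (for example, a WeChat message sent and a reply received), and `is_specific` is a placeholder for whatever check the system uses to decide that an answer is too vague.

```python
# The six CO-STAR sections, in the order listed in the text:
# context, objective, style, tone, audience, response format.
COSTAR_FIELDS = ("context", "objective", "style", "tone", "audience", "response")


def build_costar_prompt(**sections: str) -> str:
    """Assemble a system prompt with one labeled block per CO-STAR section."""
    missing = [name for name in COSTAR_FIELDS if name not in sections]
    if missing:
        raise ValueError(f"missing CO-STAR sections: {missing}")
    return "\n".join(f"# {name.upper()}\n{sections[name]}" for name in COSTAR_FIELDS)


def ask_with_fallback(ask, question, examples, is_specific, max_retries=2):
    """Ask once; while the answer is too vague, rephrase with examples and retry.

    Returns the last answer received, even if it is still vague after
    `max_retries` re-prompts.
    """
    answer = ask(question)
    for _ in range(max_retries):
        if is_specific(answer):
            break
        hint = "; ".join(examples)
        answer = ask(f"Could you be more specific? {question} For example: {hint}")
    return answer
```

Keeping the vagueness check as a passed-in function makes the fallback independent of any particular model or heuristic; a word-count threshold, a keyword match, or a second LLM call could all be plugged in.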

Finally, the LLMs consolidate the users’ semi-structured needs and store them in a database. Leveraging the model’s reasoning capabilities, the system performs semantic parsing on the interaction data, extracting unstructured text into semi-structured JSON data. Aside from a unique user identifier, all other data types are recorded as “text”. Table 1 illustrates the specific information included, such as functional requirements, emotional experiences, and spatial preferences. These measures help eliminate noise and improve data quality.

Table 1. Detailed Introduction to the JSON Data.
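As a rough illustration of the storage format, the sketch below builds one semi-structured record in which every field except the user identifier is recorded as text. The field names are assumptions inferred from the fields mentioned in the surrounding prose, not the actual schema of Table 1, and `make_record` and `to_json` are hypothetical helpers.

```python
import json
import uuid

# Assumed field names; the real schema is defined in Table 1.
TEXT_FIELDS = (
    "gender", "age", "group", "living_status", "space_type",
    "space_usage", "specific_requirements", "facilities",
    "environment", "lighting_preference",
)


def make_record(answers: dict, user_id: str = "") -> dict:
    """Build one record; every field other than the ID is coerced to text."""
    record = {"user_id": user_id or uuid.uuid4().hex}
    for name in TEXT_FIELDS:
        # Missing answers become empty strings so all records share one shape.
        record[name] = str(answers.get(name, "")).strip()
    return record


def to_json(record: dict) -> str:
    # ensure_ascii=False keeps Chinese text readable in the stored JSON.
    return json.dumps(record, ensure_ascii=False, indent=2)
```

Giving every record the same set of keys, with empty strings for unanswered questions, keeps the later tokenization step simple because it can iterate over a fixed list of fields.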

By effectively guiding the various design requirements of multiple stakeholders in the community and facilitating robust interaction among all parties, the Natural Dialogue-Based Needs Capturing Module not only ensures diversity in data collection—thus creating a solid data foundation for subsequent statistical analysis and generative design models—but also significantly enhances the efficiency of needs gathering and the convenience of participation. Supported by artificial intelligence tools, it promotes greater collaboration and co-creation in the design of public spaces. Breaking through the spatial and temporal limitations of traditional face-to-face meetings and designer-led needs-gathering markedly elevates residents’ engagement and the effectiveness of expressing their needs.

3.2. Needs Analysis Module

The Needs Analysis Module processes the semi-structured data gathered by the Natural Dialogue-Based Needs Capturing Module through tokenization and modeling. This step converts the raw, dispersed JSON data (sentences) into structured information (keywords) that the LLMs can organize into a form easily understood by text-to-image models, facilitating the generation of images that align with specific design requirements.

The raw JSON data recorded by the Natural Dialogue-Based Needs Capturing Module capture the multidimensional design preferences of each community resident regarding public cultural spaces. These data include user profile information (such as user ID, gender, age, group, and living status) as well as details related to space usage, individual requirement descriptions, space type, facility requirements, and environmental preferences. However, the text is stored in a partially unstructured JSON format, which can be redundant and inconsistent. Thus, before entering the analysis phase, the system performs detailed information extraction and semantic processing.

By applying the Jieba word segmentation algorithm to the collected semi-structured data, the system performs preliminary filtering and cleaning. Specifically, Jieba employs dynamic programming to find the most probable segmentation path through the candidate words in the text, enabling rapid keyword extraction and noise removal from the raw textual needs. The resulting terms are then marked and categorized according to the data commonly required to design public cultural spaces (e.g., spatial functions, decorative facilities, lighting needs, and specific user requirements).
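The dynamic-programming idea behind this kind of segmentation can be shown with a toy example. The sketch below is not Jieba's code: it uses a tiny hypothetical frequency dictionary and a simplified rule for unknown characters, and it only illustrates how the most probable path is chosen by working backward from the end of the sentence. In practice the system would call the library itself (for example, `jieba.lcut(text)`).

```python
import math

# Toy word frequencies (hypothetical); a real dictionary is far larger.
FREQ = {
    "公共": 50, "空间": 60, "公共空间": 30,  # public / space / public space
    "需要": 40,                              # need
    "自习": 20, "自习室": 25, "室": 5,       # self-study / study room / room
    "和": 80,                                # and
    "暖": 5, "光": 5, "暖光": 15,            # warm / light / warm light
    "照明": 30,                              # lighting
}
TOTAL = sum(FREQ.values())
MAX_LEN = max(len(word) for word in FREQ)


def segment(text: str) -> list:
    """Return the maximum-probability segmentation of `text`."""
    n = len(text)
    # best[i] = (best log-probability of text[i:], end index of its first word)
    best = [(0.0, n)] * (n + 1)
    for i in range(n - 1, -1, -1):  # fill the table from right to left
        candidates = []
        for j in range(i + 1, min(n, i + MAX_LEN) + 1):
            word = text[i:j]
            # Unknown single characters are allowed with a floor count of 1.
            if word in FREQ or j == i + 1:
                score = math.log(FREQ.get(word, 1) / TOTAL) + best[j][0]
                candidates.append((score, j))
        best[i] = max(candidates)
    words, i = [], 0
    while i < n:  # follow the stored end indices to read off the words
        j = best[i][1]
        words.append(text[i:j])
        i = j
    return words


print(segment("公共空间需要自习室和暖光照明"))
# ['公共空间', '需要', '自习室', '和', '暖光', '照明']
```

The example sentence reads "the public space needs a study room and warm lighting". Longer dictionary words win over their parts here because one moderately frequent word is more probable than the product of two or three shorter ones.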

In concrete terms, text from the following fields—space usage, specific requirements, facilities, environment, and lighting preference—is tokenized, with irrelevant terms removed. The refined output is then consolidated into structurally clear, semantically labeled phrases or tags, thereby reducing ambiguity and enhancing data parsability. Table 2 presents examples of the structured data fields along with representative values.

Table 2. Examples of Structured Data Fields and Descriptions.
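The filtering and labeling step can be sketched as follows, assuming the text has already been segmented into tokens. The stop-word list, the category lexicon, and the function name `tag_tokens` are all hypothetical, and the tokens are shown in English for readability; the actual categories and values are those listed in Table 2.

```python
# Hypothetical stop words and category lexicon, for illustration only.
STOP_WORDS = {"need", "and", "the", "a", "with", "hope"}
CATEGORY_LEXICON = {
    "spatial_function": {"study room", "lounge", "reading corner"},
    "facility": {"sofa", "bookshelf", "power outlet"},
    "lighting": {"warm light", "natural light"},
}


def tag_tokens(tokens: list) -> dict:
    """Drop stop words, then group the remaining tokens by category."""
    tagged = {}
    for token in tokens:
        if token in STOP_WORDS:
            continue
        # The first category whose lexicon contains the token wins;
        # anything unmatched is kept under "other" rather than discarded.
        category = next(
            (name for name, words in CATEGORY_LEXICON.items() if token in words),
            "other",
        )
        bucket = tagged.setdefault(category, [])
        if token not in bucket:  # de-duplicate while preserving order
            bucket.append(token)
    return tagged
```

Keeping unmatched tokens under an "other" label, instead of dropping them, preserves specific user requirements that no predefined category anticipated.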

By transforming unstructured data into a standardized format, the Needs Analysis Module effectively consolidates the diverse design preferences of multiple stakeholders within the community’s public cultural space. This process systematizes complex needs information into high-quality and interpretable semantic inputs, thereby laying a robust foundation for subsequent text-to-image modeling.

3.3. Needs Expression Text-to-Image Module

The Needs Expression Text-to-Image Module translates the structured, personalized design requirements processed by the Needs Analysis Module into intuitive design sketches, thus offering a visual communication platform for both community residents and designers. The module is built upon a text-to-image model that uses a Variational AutoEncoder (VAE), specifically the kolors-base-1.0-vae-fp16_kolors-base-1.0.safetensors model [36]. Within the module, the structured requirements for a given public cultural space are matched with existing site images stored in a knowledge base [37]. By calling the ChatGPT interface to refine the structured data, the system maps key design requirements (such as functional features, decorative style, and lighting preferences) into a format more readily understood by the text-to-image model, helping the model interpret these complex inputs.

To convert the structured needs data into textual prompts for the VAE-based model, the system employs a template-based approach. It concatenates different user requirements into a unified prompt by combining functional, aesthetic, and environmental attributes. For example, the prompt may be generated using a template such as: “A community space with {functional features} that includes {decorative style} elements, enhanced by {environmental conditions}, and illuminated under {lighting preference}”. This approach ensures that all aspects of user needs are explicitly stated. Additionally, negative prompts are utilized to exclude undesired features, ensuring that the generated image aligns more closely with the collective vision. For instance, if a subset of users explicitly rejects a particular decor style, the negative prompt component is automatically incorporated to mitigate its influence during image generation.
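A minimal sketch of this template-based approach is shown below. The template wording follows the example quoted above, but the dictionary keys, the sample values, and the way rejected styles are phrased in the negative prompt are assumptions made for illustration; the paper does not specify them.

```python
# Template wording follows the example quoted in the text; field names are hypothetical.
TEMPLATE = (
    "A community space with {functional_features} that includes "
    "{decorative_style} elements, enhanced by {environmental_conditions}, "
    "and illuminated under {lighting_preference}"
)

def build_prompts(needs, rejected_styles=()):
    """Return (positive_prompt, negative_prompt) for a text-to-image call."""
    positive = TEMPLATE.format(
        functional_features=" and ".join(needs["functional_features"]),
        decorative_style=needs["decorative_style"],
        environmental_conditions=needs["environmental_conditions"],
        lighting_preference=needs["lighting_preference"],
    )
    # Styles explicitly rejected by users go into the negative prompt.
    negative = ", ".join(f"{style} decor" for style in rejected_styles)
    return positive, negative

# Hypothetical consolidated needs for one space.
needs = {
    "functional_features": ["self-study desks", "a reading corner"],
    "decorative_style": "minimalist wooden",
    "environmental_conditions": "indoor plants",
    "lighting_preference": "warm natural light",
}
positive, negative = build_prompts(needs, rejected_styles=["industrial"])
print(positive)
print(negative)  # industrial decor
```

Keeping the positive and negative prompts as separate strings mirrors how most diffusion-based generators accept them as two distinct inputs.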

Images from the knowledge base are incorporated into the prompting process. These reference images are processed via an image embedding pipeline, where the textual prompt is combined with features extracted from the reference image using model-specific parameters (see Table 3). This method, often referred to as image prompt embedding, allows the text-to-image model to blend textual instructions with visual cues, thereby producing a design output that resonates with the real-world context of the public cultural space. Furthermore, optimal parameter settings and control strategies are applied to ensure the pre-trained generative model can better accommodate real-world community public cultural space design scenarios and multidimensional needs, all without requiring retraining [38]. Through multiple rounds of experimental feedback, a fine-tuned combination of model inference parameters was established to guarantee stable, high-quality outputs in design sketch generation. After the preliminary design sketches are produced, the system automatically sends them to each stakeholder. Further interaction and feedback enable iterative updates, ultimately forming a broadly accepted design proposal. The final design sketches are then transmitted in a structured image file format to the designer.

Table 3.

Example of Structured Data Fields and Descriptions.

Kolors is a text-to-image generative model built upon the Latent Diffusion Model (LDM) framework, with a classic U-Net serving as its core diffusion backbone and a bilingual General Language Model (GLM) encoding textual prompts, enhanced by fine-tuning on carefully filtered and high-aesthetic images [39]. During image generation, the model iteratively denoises from a purely noisy latent vector to the description-matched images via a VAE decoder through a cross-attention mechanism incorporating GLM-encoded textual embeddings, exhibiting strong performance in rendering Chinese characters, depicting multiple entities, accurately capturing semantic details, and producing aesthetically appealing images [39]. Further, the kolors-base-1.0-vae-fp16_kolors-base-1.0.safetensors model is a specialized checkpoint in half-precision format, which ensures an efficient process while preserving high-fidelity details, which is essential in generating nuanced design sketches that reflect the complex spatial, functional, and aesthetic requirements of public cultural spaces. By incorporating bilingual text embeddings via GLM, it supports complex prompts in both English and Chinese, maintaining robust semantic alignment and enabling a more inclusive co-creation process. Moreover, the half-precision design achieves an optimal balance between computational efficiency and model accuracy, making it well-suited for large-scale text-to-image generation tasks—especially in public cultural spaces with high user density.

By incorporating a text-to-image model and a feedback iteration mechanism [40], the Needs Expression Module efficiently converts structured textual needs into visual design sketches. This process bridges the informational gap between textual descriptions and visual representations [41], reducing the risk of misinterpretation that can arise from purely textual depictions, and provides a systematic, intuitive means of expression for community public cultural space co-creation. As a result, stakeholders are able to achieve initial consensus on aspects such as spatial layout, functional configurations, and visual style during the early design stages, thus significantly enhancing decision-making efficiency in collaborative design processes.

In sum, the generative dynamic workflow-based co-creation framework for community public cultural spaces proposed in this paper establishes an intelligent interactive workflow for gathering and analyzing the design needs of multiple stakeholders. It also harnesses a pre-trained text-to-image model with tuned inference parameters to transform these structured requirements into intuitive design sketches, thereby forming a complete closed loop. From an application standpoint, the Community Public Cultural Space Co-creation System built on this framework delivers three key benefits: (1) Efficiency: LLM-assisted needs gathering and semantic analysis shorten the initial research phase and reduce manpower costs. (2) Quality: Rapid prototyping and iterative feedback powered by the text-to-image model allow stakeholders to visualize and discuss design concepts in near real time, minimizing the risk of discovering misaligned goals only after final designs are completed. (3) Collaboration: The system establishes a co-creation ecosystem open to all participants via a public social media platform. This setup increases the depth and frequency of interactions between residents and designers, supporting a higher level of consensus.

4. Results and Discussion

This study takes the renovation of the public cultural space beneath the men’s dormitory at Tongji University’s Jiading Campus as an example. To ensure a diverse representation of stakeholders, a service experience survey of the co-creation system was conducted with eight subjects, reflecting a variety of age groups, educational backgrounds, and living conditions. Specifically, the participants included two undergraduate students (both aged 22), one living with family and the other in a shared-living arrangement; three master’s students (ages 20–30, 23, and 26), one living alone and two in shared-living arrangements; two doctoral students (ages 28 and 29) in shared-living arrangements; and one 47-year-old teacher living with family (also fulfilling an administrative role). By including these eight individuals, whose backgrounds span bachelor’s-, master’s-, and doctoral-level students as well as faculty and administrative staff, we aimed to capture a broad spectrum of perspectives relevant to the targeted public cultural space. They vary not only in academic status and professional identity (e.g., doctoral student and teacher) but also in living status (living alone, living with family, and shared living) and age (early twenties to late forties). This demographic diversity is important because the public cultural space beneath the men’s dormitory serves multiple purposes for students, staff, and visitors alike, and thus requires input from stakeholders who differ in their daily routines, spatial needs, and community engagement levels.

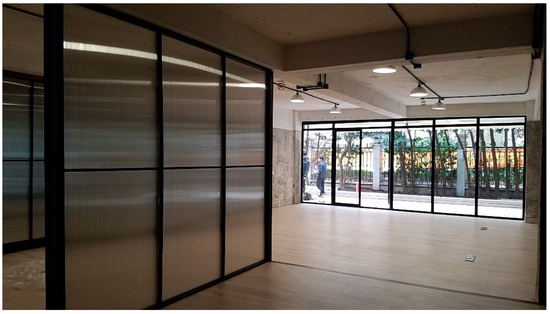

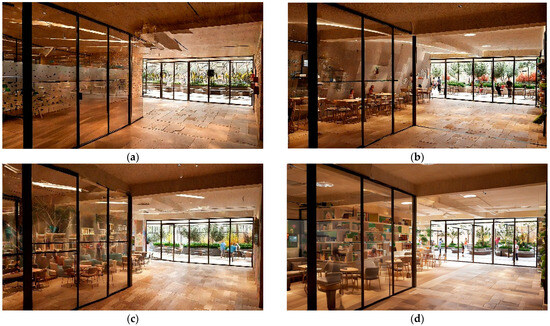

The experiment was divided into four stages: introducing the project background, conducting needs research, refining the needs results, and completing an activity questionnaire. Before the experiment began, the system’s database was preloaded with the renovation background information and on-site images of the public cultural space slated for improvement; a sample image is shown in Figure 4. By involving undergraduate, master’s, and doctoral students, along with a teacher-administrator, we ensured that the evaluation phase captured distinct usage scenarios (e.g., studying, research collaborations, leisure activities, and administrative oversight) and varying time schedules (e.g., daytime instruction vs. evening student gatherings). Accordingly, the sample encompasses core segments of the dormitory’s user base (residents of different ages, academic ranks, and family statuses) who collectively represent typical daily users and decision-making influences for campus facilities. Although the sample is limited to eight participants, this varied demographic composition provides an initial illustration of how the system responds to diverse stakeholder needs.

Figure 4.

Public Cultural Space of the Dormitory Area to Be Renovated.

The specific steps for the renovation research are as follows (see Figure 5). First, all participants were uniformly briefed on the background of the public cultural space renovation project and the process to be completed, so that they could better understand the project and articulate design requirements that reflect their own needs. Second, all participants simultaneously carried out the needs research for the public cultural space. After each participant finished the needs research process, the system conducted a statistical analysis of the unstructured needs data uploaded to the back end, identifying the needs shared by the majority of users and converting them into structured data. Next, the structured data were organized into coherent paragraphs to help the text-to-image model comprehend the various stakeholders’ design requirements for the public cultural space, thereby generating visual feedback that aligns more closely with real-world needs. After multiple iterations, the final needs were determined. Lastly, each participant was asked to complete a feedback questionnaire on the public cultural space renovation project. This questionnaire was used to conduct a System Usability Scale (SUS) test and measure the interactive experience of the resulting design scheme.

Figure 5.

Visualizations of the System’s Workflows.
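The step that identifies needs shared by the majority of users can be sketched as a simple vote count. The paper does not state the exact statistical rule, so the majority threshold, the field names, and the sample records below are assumptions for illustration only.

```python
from collections import Counter

def consolidate(records, min_share=0.5):
    """Keep, per field, the values requested by more than min_share of participants."""
    n = len(records)
    counts = {}
    for record in records:
        for field, values in record.items():
            # set() ensures each participant counts at most once per value.
            counts.setdefault(field, Counter()).update(set(values))
    return {
        field: sorted(v for v, c in counter.items() if c / n > min_share)
        for field, counter in counts.items()
    }

# Hypothetical structured needs from three participants.
records = [
    {"facilities": ["sofa", "bookshelf"], "lighting": ["warm"]},
    {"facilities": ["sofa", "vending machine"], "lighting": ["warm"]},
    {"facilities": ["sofa", "bookshelf"], "lighting": ["bright"]},
]
print(consolidate(records))
# {'facilities': ['bookshelf', 'sofa'], 'lighting': ['warm']}
```

A strict majority rule is the simplest choice; a weighted or clustering-based rule could be substituted without changing the surrounding workflow.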

In the questionnaire, this research employed a comprehensive survey that included two main parts: the usability test and the interactive experience assessment of the design scheme. These were designed to evaluate the co-creation system’s usability and the interaction experience of its design proposals in practical application. For the detailed content of the questionnaire, please refer to Table 4. The first part of the questionnaire referenced the usability scale, primarily using multiple dimensions—such as ease of use, learning cost, functional integration, and consistency—to quantitatively evaluate user experiences with the co-creation system. This assessment helps determine whether the system features simple and efficient operations. The second part of the survey focused on the interactive experience of residents after they had submitted their design needs, including the extent to which users felt their needs were understood, their expectations for text-to-image results, and the consistency between the generated design and their original needs. The goal was to assess the system’s effectiveness in capturing and responding to varied user needs. Analyzing these two sets of results enables the identification of weaknesses in process design, functional layout, and human-computer interaction, allowing targeted improvements in user guidance, reducing operational complexity, and refining both needs conversion and image-generation algorithms. Ultimately, such enhancements aim to improve the overall utility and user satisfaction of the co-creation system.

Table 4.

Introduction to the Scale Assessments.

All subjects were gathered on-site at the renovation area. After the project background was introduced, they collectively began the needs research for renovating the dormitory public cultural space, completing the research tasks within ten minutes and receiving the first version of the design plan two minutes later. Over the subsequent half hour, they continuously interacted with the community public cultural space co-creation system to ensure that their design requirements were fully understood.

After the questionnaire responses were collected, reliability testing and confidence interval evaluations were conducted. During the reliability and validity assessment, the initial Cronbach’s alpha was found to be 0.676. Further investigation revealed that in subject 2’s SUS responses, items 7 and 8, which are designed to measure the same underlying construct with reversed wording, yielded contradictory scores. Conceptually, once the reversed wording is taken into account, these two items should produce closely aligned ratings rather than opposing ones, suggesting that subject 2 may have misread or incorrectly recorded one of the items. After subject 2 was contacted to verify the data, the score for item 8 was changed from 5 to 1. A subsequent reliability analysis produced a revised Cronbach’s alpha of 0.787, indicating an acceptable level of internal consistency for the questionnaire. Although this value is below stricter thresholds (e.g., 0.80 or 0.90), it remains within an acceptable range for social science and educational research contexts, suggesting that the scale items are coherently aligned with the intended construct.
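For readers who wish to reproduce this kind of reliability check, the sketch below computes Cronbach’s alpha from a respondents-by-items score matrix using the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores). The toy matrix is hypothetical and is not the study’s data; negatively worded items are assumed to have been reverse-scored beforehand.

```python
from statistics import variance

def cronbach_alpha(scores):
    """scores: one row per respondent, one column per item (reverse-scored where needed)."""
    k = len(scores[0])
    # Sample variance of each item across respondents.
    item_variances = [variance(column) for column in zip(*scores)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)

toy = [[1, 2], [2, 1], [3, 3]]  # hypothetical: 3 respondents, 2 items
print(round(cronbach_alpha(toy), 3))  # 0.667
```

Because alpha depends on the ratio of item variances to total-score variance, a single mis-recorded rating in a small sample can shift it noticeably.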

Furthermore, Bartlett’s test of sphericity yielded a chi-square value of 406.218 (p < 0.001), allowing rejection of the null hypothesis that the scale items are uncorrelated at a stringent significance level (e.g., α = 0.01). This result confirms substantial inter-item correlations and indicates that the questionnaire data can reflect latent constructs or dimensions. Collectively, these findings highlight the importance of verifying anomalous responses, as even a single misread item can noticeably affect the psychometric properties of the scale.

The initial needs data and the final consolidated needs data from the experiment are shown in Table 5. The data for IDs 1–8 represent the needs information from various participating groups, while the data for ID 0 represent the finalized, consolidated needs.

Table 5.

Experimental Statistical Analysis Results.

Figure 6 shows the design sketches for the dormitory public cultural space, generated after multiple rounds of feedback and optimization with stakeholders, which ultimately yielded a satisfactory conceptual design for the renovation.

Figure 6.

Iteratively refined sketches of the renovated public cultural space in the dormitory area, optimized through multiple rounds of feedback: (a) the sketch generated in the first round; (b) the sketch generated after iterative feedback; (c) the sketch generated after optimization; (d) the sketch generated after several further rounds of iterative feedback and optimization.

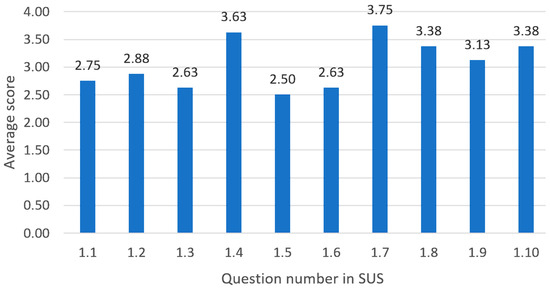

The average total score of the SUS scale for this experiment was 76.56, placing the system in the “Good” range for usability according to the SUS scoring criteria—indicating positive user perceptions regarding ease of use, functional integration, and overall satisfaction. To provide a more detailed look into how each individual item contributed to this overall rating, Figure 7 presents the average score per question on the SUS scale. On the x-axis, each item (1.1 through 1.10) corresponds to a specific dimension of usability (e.g., complexity, learnability, confidence), and the y-axis indicates the mean rating assigned by subjects, ranging from 1 (strongly disagree) to 5 (strongly agree).

Figure 7.

Average Scores Per Question on the SUS Scale.
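The SUS total discussed above is conventionally computed as follows: each odd-numbered item contributes its rating minus 1, each even-numbered item contributes 5 minus its rating, and the sum is multiplied by 2.5 to give a 0–100 score. The sketch below assumes the study followed this standard procedure; the sample ratings are hypothetical.

```python
def sus_score(responses):
    """Standard SUS scoring for one respondent: ten ratings on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item ratings")
    total = 0
    for item, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5

def mean_sus(all_responses):
    """Average SUS score over several respondents."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)

print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

Averaging the per-respondent scores with `mean_sus` yields the kind of overall figure reported for the experiment.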

Figure 7 shows that Question 1.7 received the highest average score (3.75), indicating that users considered the learning threshold of the Community Public Cultural Space Co-creation System to be low: stakeholders from diverse backgrounds could quickly and smoothly adopt its functions. In contrast, Question 1.5 had the lowest average score (2.5), suggesting that the cross-module integration of certain features, such as transitions between needs analysis and image generation, remains an area for improvement. The moderate ratings (3.13–3.63) on the other items further reveal how users perceive the system’s design choices, including functional integration, consistency, and intuitiveness. Because the system integrates multiple interconnected functional modules (needs research, needs analysis, and image generation), some delays can occur when jumping between modules, preventing the process from feeling entirely natural. Offering loading prompts and step-by-step guidance during a conversation could improve users’ patience and overall experience.

Additionally, the variance of the overall SUS score was 11.35, indicating that participants’ comprehension of and experience with the system’s features, interaction methods, and learning processes varied to some extent. This divergence in perceived usability may stem from differences in technical background or personal preferences regarding system workflows. Future refinements, such as step-by-step prompts or user personas that provide differentiated interaction styles for users with distinct technical backgrounds or needs preferences, could therefore narrow the gap in usage experiences. Taken together, Figure 7 illustrates both the system’s strengths (e.g., ease of learning) and the aspects that require further optimization (e.g., feature integration), thereby supporting the interpretation of the SUS total score and the claim that the system demonstrates “Good” usability.

The interactive experience assessment in this experiment focused on users’ experiences after receiving space design feedback, including the model’s understanding of user needs, participants’ anticipation of the text-to-image results, and the alignment between the resulting designs and user needs. Each of these three questions has a maximum score of 5, where higher scores represent a stronger level of agreement, and the variance indicates the degree of opinion divergence among participants.

As shown in Table 6, Question 2.2 not only achieved the highest average score (4.38) but also displayed the highest variance (0.70), suggesting that most participants highly anticipated seeing the public cultural space design outcomes produced by the text-to-image technology, although their individual perceptions differed considerably. This pattern indicates that the system’s co-creation design philosophy notably boosts users’ expectations for the system’s responses, thanks to the “Needs Co-creation–Needs Analysis–Needs Expression” value loop and the incorporation of intuitive visual feedback. On the other hand, some users might be relatively cautious about or less accepting of novel generative AI technologies, worrying that the generated sketches might fail to fully meet their expectations. Thus, promoting this system to certain user groups may require additional user education and onboarding effort.

Table 6.

Average Scores and Average Variances for the Second Part of the Survey.

Question 2.1 and Question 2.3 both have average scores around 3.3, slightly below the overall mean of 3.67 for Part Two. Moreover, Question 2.3 has the lowest variance (0.48), indicating that most participants shared the same assessment: the generated design sketches captured and reflected users’ actual design needs to a certain extent but did not reach a fully satisfactory level of understanding or implementation. This result implies that the analysis algorithms and knowledge graphs employed during the Needs Analysis stage have yet to reach optimal performance. Additionally, the tuning of the text-to-image model needs further enhancement to strengthen the correspondence between textual needs and generated images, ensuring that users’ design requirements are thoroughly addressed.

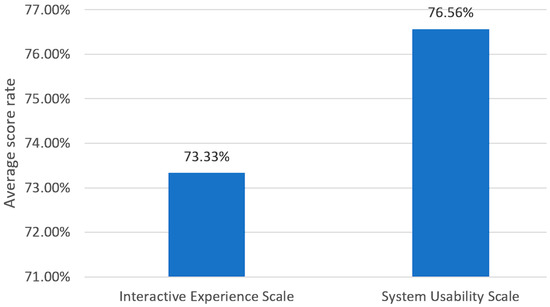

Figure 8 compares the average scores of the SUS (76.56%) and the Interactive Experience Scale (73.33%); the x-axis identifies the scale being measured (Usability vs. Interactive Experience), while the y-axis indicates the mean percentage score achieved on each scale. This difference suggests that, although participants generally found the Community Public Cultural Space Co-creation System user-friendly (i.e., high usability), there is slightly more room for improvement in areas related to interactive engagement. Users generally hold high expectations for the system’s visualization of design needs while also suggesting improvements regarding the integration of system functionality, the technical learning curve, and precise needs-to-design-image matching.

Figure 8.

Average Scores for System Usability Scale and Interactive Experience Scale.
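To place the 5-point Interactive Experience ratings on the same percentage axis as the SUS score, one plausible conversion is to divide the mean rating by the scale maximum. The sketch below assumes this is how the percentage was obtained, which the paper does not state explicitly; the value 11/3 is an assumed unrounded mean, used only to show how rounding could explain the small gap between 3.67 and 73.33%.

```python
def likert_mean_to_percent(mean_score, max_score=5):
    """Express a mean Likert rating as a percentage of the scale maximum."""
    return mean_score / max_score * 100

# The rounded Part Two mean reported in the text.
print(round(likert_mean_to_percent(3.67), 2))    # 73.4
# Assumed unrounded mean (11/3), shown only to illustrate the rounding effect.
print(round(likert_mean_to_percent(11 / 3), 2))  # 73.33
```

An alternative normalization, (mean − 1) / (max − 1), maps the lowest possible rating to 0% and would give a lower figure, so the choice of convention should be stated when comparing scales.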

In light of these findings, the system demonstrates a solid foundation for practical application, while functionality integration, the technical learning curve, and the precise matching of needs to design outputs remain the main targets for improvement. The variability in responses further highlights how diverse user backgrounds and preferences can influence perceptions of both usability and interactive experience.

The proposed AI agent-driven co-creation model offers a scalable and adaptive approach to participatory design, enabling efficient aggregation of resident input and real-time generation of spatial solutions. Its integration of natural language interaction, automated needs synthesis, and generative visualization supports continuous user engagement and iterative refinement. This framework holds promise for broader applications in community planning and public infrastructure design, particularly in contexts requiring high inclusivity and responsiveness. Beyond practical utility, it contributes to advancing socially intelligent design systems that promote civic participation, procedural equity, and resident-centered spatial governance.

Consequently, future enhancements should focus on tailoring feature entry points and guidance mechanisms to accommodate these differences. By refining multimodel collaboration, streamlining the unified interface design, and introducing more flexible feedback loops, the co-creation process for public cultural spaces can be significantly strengthened. Ultimately, this approach will drive higher user satisfaction and provide an efficient, intuitive, and sustainable solution for community-level design and planning.

5. Conclusions

This study proposes a co-creation framework based on a generative dynamic workflow, aiming to address issues of low resident participation, inefficient needs integration, and delayed designer decision-making in community public cultural space design. By integrating a needs-capturing module, a data analysis module, a visual scheme generation module, and an iterative feedback module, the framework realizes a closed-loop optimization from needs collection to the generation of visualized solutions. Through intelligent interactive methods, the framework lowers the threshold for residents to express their needs and enhances the efficiency of multistakeholder collaborative design while supporting dynamic optimization of the space design. Results from the eight-participant evaluation indicate good usability (mean SUS score of 76.56) and a relatively satisfactory capability in capturing user requirements, suggesting that the system can improve the responsiveness, accuracy, and democratization of public cultural space planning. Additionally, this study provides a systematic solution for the intelligent design of urban public spaces, extends the application scenarios of generative artificial intelligence in smart city governance, and offers new perspectives for optimizing community co-development and co-management models.

Despite these advantages, several limitations remain and warrant explicit discussion. First, the diversity of needs may pose challenges for system-level integration: specific requirements can be under-represented when particular user groups, especially minority or vulnerable populations, are insufficiently sampled. Second, because the system relies on self-reported feedback, it is susceptible to response bias (e.g., social-desirability effects or acquiescence), which can distort the observed distribution of needs. Third, the performance of data-driven generative models is bounded by the scope, balance, and cultural specificity of their training data; inadequate coverage or sampling bias can restrict both model accuracy and the ecological validity of generated results. Fourth, variations in age, digital literacy, and technical background create differential levels of user adaptability: some individuals may find the system difficult to use owing to its technological complexity, which could impede broader adoption. Finally, because the present study was conducted in a single university context, the system’s transferability to other cultural or urban settings remains untested; local norms, spatial conventions, and governance structures may necessitate substantial recalibration.

Future work should address these limitations along several lines. (1) Enhanced regional and group-specific segmentation: Refining the needs-analysis module to incorporate geospatial clustering and demographic weighting will improve the system’s ability to distinguish among diverse stakeholder groups and mitigate under-representation. (2) Bias-aware feedback mechanisms: Incorporating techniques such as inverse probability weighting, anomaly detection for inconsistent responses, and triangulation with objective usage metrics can reduce self-report bias and increase data reliability. (3) Cross-cultural data augmentation and model fine-tuning: Expanding the training corpus to include multicity, cross-cultural datasets—and employing domain-adaptation methods—will strengthen model generalizability across different urban contexts. (4) Adaptive user-interface personalization: Integrating in-depth persona analysis with a recommender engine can deliver role-specific tutorials, step-by-step prompts, and interface simplification for low-literacy users. (5) Multi-site longitudinal validation: Deploying the system in varied community settings (e.g., commercial districts and rural towns) and conducting longitudinal evaluations will clarify long-term usability, cultural adaptability, and governance implications, thereby enhancing the robustness and external validity of the framework.

Author Contributions

Conceptualization, C.L., M.Z., M.H., and Y.L.; methodology, C.L., M.Z., and M.H.; software, M.Z.; validation, C.L., M.Z., and M.H.; data curation, M.Z.; formal analysis, M.Z.; writing—original draft preparation, C.L., M.Z., M.H., X.W., and H.G.; writing—review and editing, P.C., C.W., and Y.L.; visualization, M.Z.; supervision, Y.L.; project administration, C.L.; funding acquisition, C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China under Grant 52308032, and in part by the Program of Interdisciplinary Cluster on Innovation Design and Intelligent Manufacturing of Tongji University.

Data Availability Statement

The data presented in this study are available on request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| LLMs | Large language models |
| NLP | Natural Language Processing |
| PPSS | Participatory Planning Support System |
| T2I | Text-to-Image |
| AIGC | Artificial Intelligence-Generated Content |
| GANs | Generative Adversarial Networks |
| DMs | Diffusion Models |
| DDPMs | Denoising Diffusion Probabilistic Models |
| VAE | Variational AutoEncoder |
| LDM | Latent Diffusion Model |
| GLM | General Language Model |
| SUS | System Usability Scale |

References

- Liao, J.; Liu, Y.; Feng, X. Approaches to Public Participation in Micro-renewal of Old Communities: A Case Study of Lane 580 Community, Zhengli Road, Yangpu Chuangzhi District in Shanghai. Landsc. Archit. 2020, 27, 92–98. [Google Scholar] [CrossRef]

- Hou, X. Landscape micro-renewal of community public space in beijing old city based on community building and multi-governance: Taking the micro gardens in beijing old city as the example. Chin. Landsc. Arch. 2019, 35, 23–27. [Google Scholar]

- Liu, Y.; Kou, H. Study on the strategy of micro-renewal and micro-governance by public participatory of shanghai community garden. Chin. Landsc. Arch. 2019, 35, 5–11. [Google Scholar]

- Purcell, A.T.; Gero, J.S. Drawings and the design process: A review of protocol studies in design and other disciplines and related research in cognitive psychology. Des. Stud. 1998, 19, 389–430. [Google Scholar] [CrossRef]

- Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A comprehensive overview of large language models. arXiv 2023, arXiv:2307.06435. [Google Scholar]

- Zhang, Z.; Liu, S.; Shen, Y.; Zhang, Y.; Hou, Z.; Wang, X.; Luo, J. iDesignGPT: Large language model agentic workflows boost engineering design. Res. Sq. 2025. [Google Scholar] [CrossRef]

- Schöbel, S.; Schmitt, A.; Benner, D.; Saqr, M.; Janson, A.; Leimeister, J.M. Charting the evolution and future of conversational agents: A research agenda along five waves and new frontiers. Inf. Syst. Front. 2024, 26, 729–754. [Google Scholar] [CrossRef]

- Kawakami, A.; Coston, A.; Zhu, H.; Heidari, H.; Holstein, K. The situate AI Guidebook: Co-designing a toolkit to support multi-stakeholder, early-stage deliberations around public sector AI proposals. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–22. [Google Scholar]

- Quan, S.J.; Lee, S. Enhancing participatory planning with ChatGPT-assisted planning support systems: A hypothetical case study in Seoul. Int. J. Urban Sci. 2025, 29, 89–122. [Google Scholar] [CrossRef]

- Zhou, Z.; Lin, Y.; Jin, D.; Li, Y. Large language model for participatory urban planning. arXiv 2024, arXiv:2402.17161. [Google Scholar]

- Kawakami, A.; Sivaraman, V.; Stapleton, L.; Cheng, H.-F.; Perer, A.; Wu, Z.S.; Zhu, H.; Holstein, K. “Why Do I Care What’s Similar?” Probing Challenges in AI-Assisted Child Welfare Decision-Making through Worker-AI Interface Design Concepts. In Proceedings of the 2022 ACM Designing Interactive Systems Conference, Online, 13–17 June 2022; pp. 454–470. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680. [Google Scholar]

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Saharia, C.; Chan, W.; Saxena, S.; Li, L.; Whang, J.; Denton, E.L.; Ghasemipour, K.; Gontijo Lopes, R.; Karagol Ayan, B.; Salimans, T. Photorealistic text-to-image diffusion models with deep language understanding. Adv. Neural Inf. Process. Syst. 2022, 35, 36479–36494.

- Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical text-conditional image generation with CLIP latents. arXiv 2022, arXiv:2204.06125.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Shuai, X.; Ding, H.; Ma, X.; Tu, R.; Jiang, Y.-G.; Tao, D. A survey of multimodal-guided image editing with text-to-image diffusion models. arXiv 2024, arXiv:2406.14555.

- Dhariwal, P.; Nichol, A. Diffusion models beat GANs on image synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

- Aydin, E.E.; Ortner, F.P.; Peng, S.; Yenardi, A.; Chen, Z.; Tay, J.Z. Climate-responsive urban planning through generative models: Sensitivity analysis of urban planning and design parameters for urban heat island in Singapore’s residential settlements. Sustain. Cities Soc. 2024, 114, 105779.

- Liao, W.; Lu, X.; Fei, Y.; Gu, Y.; Huang, Y. Generative AI design for building structures. Autom. Constr. 2024, 157, 105187.

- Thoring, K.; Huettemann, S.; Mueller, R.M. The augmented designer: A research agenda for generative AI-enabled design. Proc. Des. Soc. 2023, 3, 3345–3354.

- Bukar, U.A.; Sayeed, M.S.; Razak, S.F.A.; Yogarayan, S.; Sneesl, R. Decision-making framework for the utilization of generative artificial intelligence in education: A case study of ChatGPT. IEEE Access 2024, 12, 95368–95389.

- Wong, I.A.; Lian, Q.L.; Sun, D. Autonomous travel decision-making: An early glimpse into ChatGPT and generative AI. J. Hosp. Tour. Manag. 2023, 56, 253–263.

- Natarajan, L. Socio-spatial learning: A case study of community knowledge in participatory spatial planning. Prog. Plan. 2017, 111, 1–23.

- Tian, N.; Wang, W. Innovative Pathways for Collaborative Governance in Technology-Driven Smart Communities. Sustainability 2024, 17, 98.

- Qian, Z. Collaborative Neighbourhood Governance: Investigating Two Types of Resettlement Neighbourhoods in Suburban Shanghai, China. Urban Policy Res. 2023, 41, 434–451.

- Grootjans, S.J.; Stijnen, M.; Kroese, M.; Ruwaard, D.; Jansen, M. Collaborative governance at the start of an integrated community approach: A case study. BMC Public Health 2022, 22, 1013.

- Gearin, E.; Hurt, C.S. Making space: A new way for community engagement in the urban planning process. Sustainability 2024, 16, 2039.

- Hunter, M.G.; Soro, A.; Brown, R.A.; Harman, J.; Yigitcanlar, T. Augmenting community engagement in City 4.0: Considerations for digital agency in urban public space. Sustainability 2022, 14, 9803.

- Jiang, C.; Xiao, Y.; Cao, H. Co-creating for locality and sustainability: Design-driven community regeneration strategy in Shanghai’s old residential context. Sustainability 2020, 12, 2997.

- Gmeiner, F.; Conlin, J.L.; Tang, E.H.; Martelaro, N.; Holstein, K. An evidence-based workflow for studying and designing learning supports for human-AI co-creation. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–15.

- Ford, C.; Bryan-Kinns, N. Speculating on reflection and people’s music co-creation with AI. In Proceedings of the GenAICHI 2022, Online, 10 May 2022.

- Zamfirescu-Pereira, J.D.; Wong, R.Y.; Hartmann, B.; Yang, Q. Why Johnny can’t prompt: How non-AI experts try (and fail) to design LLM prompts. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–21.

- Jaakkola, E.; Alexander, M. The role of customer engagement behavior in value co-creation: A service system perspective. J. Serv. Res. 2014, 17, 247–261.

- Ding, W.; Wang, X.; Zhao, Z. CO-STAR: A collaborative prediction service for short-term trends on continuous spatio-temporal data. Future Gener. Comput. Syst. 2020, 102, 481–493.

- Huang, H.; He, R.; Sun, Z.; Tan, T. IntroVAE: Introspective variational autoencoders for photographic image synthesis. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; Volume 31.

- Gruber, T. Collective knowledge systems: Where the social web meets the semantic web. J. Web Semant. 2008, 6, 4–13.

- Grefenstette, J.J. Optimization of control parameters for genetic algorithms. IEEE Trans. Syst. Man Cybern. 1986, 16, 122–128.

- Team, K. Kolors: Effective training of diffusion model for photorealistic text-to-image synthesis. arXiv 2024. Available online: https://github.com/Kwai-Kolors/Kolors/blob/master/imgs/Kolors_paper.pdf (accessed on 23 April 2025).

- Wang, Z.; Huang, Y.; Song, D.; Ma, L.; Zhang, T. PromptCharm: Text-to-image generation through multi-modal prompting and refinement. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–21.

- Fu, K.; Wu, R.; Tang, Y.; Chen, Y.; Liu, B.; Lc, R. “Being Eroded, Piece by Piece”: Enhancing Engagement and Storytelling in Cultural Heritage Dissemination by Exhibiting GenAI Co-Creation Artifacts. In Proceedings of the 2024 ACM Designing Interactive Systems Conference, Copenhagen, Denmark, 1–5 July 2024; pp. 2833–2850.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).