1. Introduction

In recent years, the imbalance between the supply and demand for health services has worsened, leading to longer waiting lists and delays beyond clinically recommended periods. For example, by the end of 2019, over 27,000 patients in Portugal had been waiting for surgery for more than one year [1], while in Australia, the percentage of elective patients waiting over a year ranged between 1.7% and 2.8% from 2015 to 2020 [2]. The situation deteriorated further during the COVID-19 pandemic, with operating room case volumes in the United States decreasing by around 35% between March and July 2020 compared to the prior year [3]. Such disruptions, which affected most medical and diagnostic services, created significant backlogs, requiring hospitals to operate at 120% of historical throughput for ten months to recover just two months of additional surgical demand.

To mitigate this situation, decision-support tools have been proposed and deployed to support activities related to the delivery of health services. Aiming to reduce clinicians’ workload and increase the efficiency of administrative tasks, these tools have shown potential in predicting patients’ health trajectories, recommending treatments, guiding surgical care, monitoring patients, and supporting efforts to improve population health. However, as pointed out by [4], “innovations in medications and medical devices are required to undergo extensive evaluation, often including randomized clinical trials, to validate clinical effectiveness and safety.” Similar rigor should apply to decision-support tools, especially when integrated into complex healthcare systems where multiple stakeholders, dynamic interactions, and contextual constraints can lead to unintended consequences.

In this context of higher demand and longer waiting times, the need for Computer-Based Patient Prioritization Tools (PPT) has accelerated. PPTs aim to manage access to care by ranking patients equitably and rigorously based on clinical criteria so that those with urgent needs receive services before those with less urgent needs. While PPTs have been widely studied, few works have explored their real-world implementation and, more importantly, the constructs determining successful adoption in clinical practice [

5,

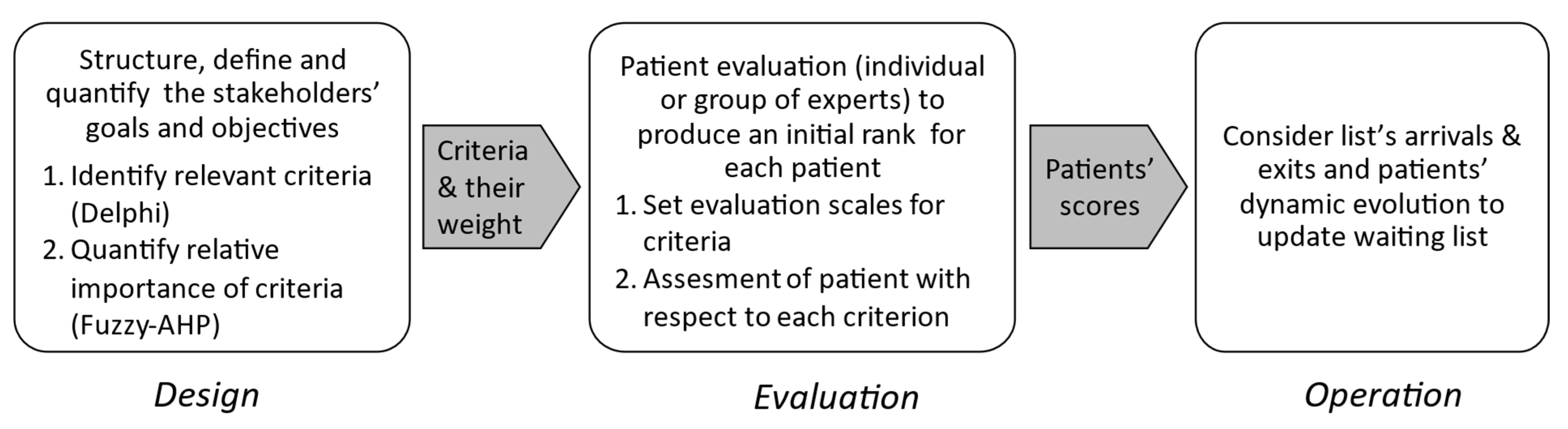

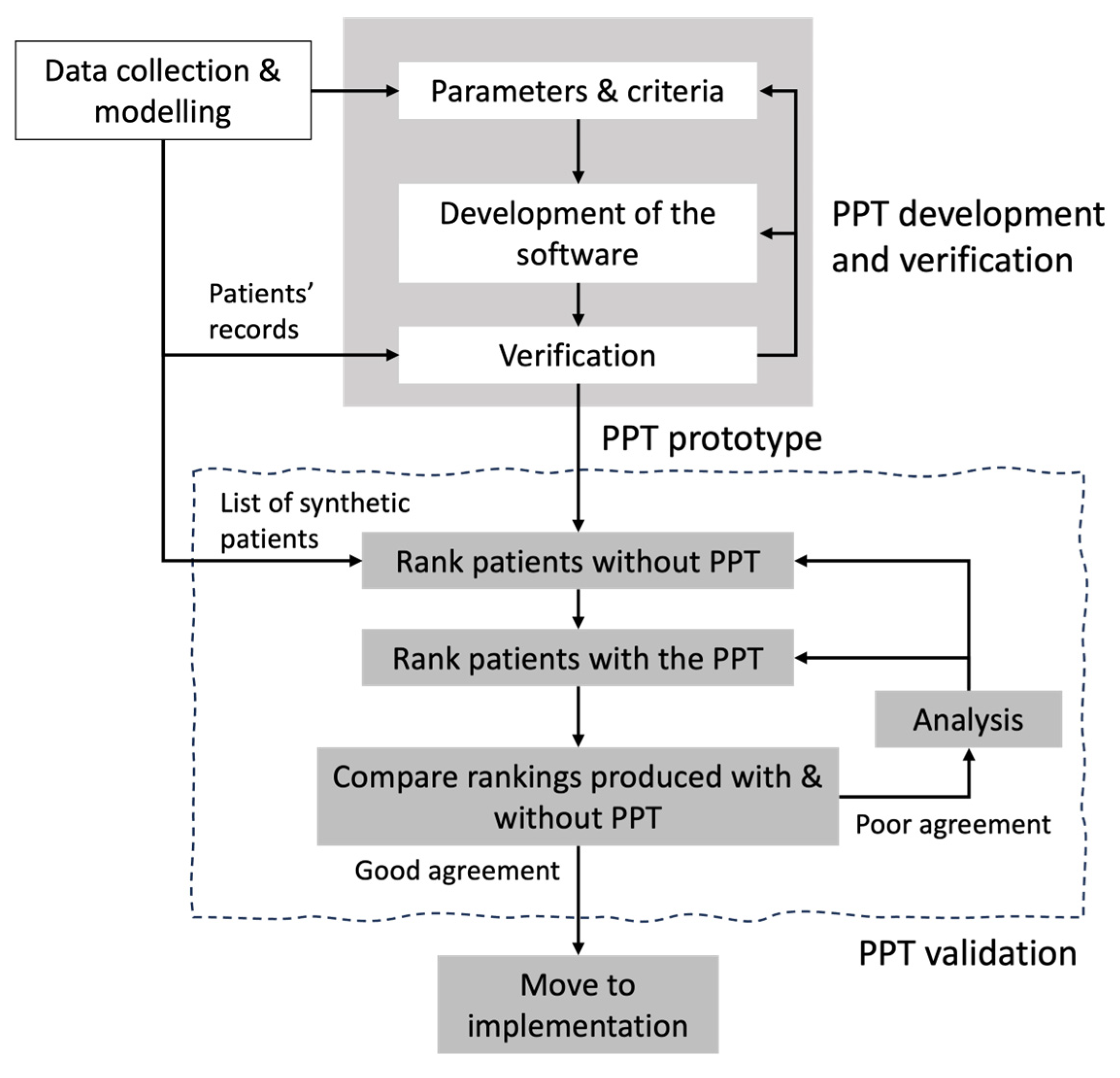

6]. The development of such tools follows an iterative life cycle—design, implementation, verification, validation, and deployment—where verification and validation (V&V) play a critical role in ensuring reliability, safety, and user trust [

7,

8].

Recent studies on the acceptability of new technologies in healthcare show that a substantial proportion of users remain hesitant toward AI-based solutions, mainly due to concerns about accuracy and security. This highlights the importance of robust verification and, even more specifically, validation processes before deployment in complex healthcare environments [9].

This paper presents and discusses the validation process of a PPT as part of its development life cycle. Validation follows the more technical, software-oriented verification process performed after the construction of the prototype, and it can be considered an early stage of implementation. Validation aims to demonstrate how the tool can deliver improved outcomes while avoiding unintended consequences when integrated into a complex healthcare system characterized by multiple stakeholders, interdependent processes, and resource constraints. Such complexity amplifies the risk of emergent behaviors and unintended effects, making rigorous validation essential before large-scale deployment. The PPT was designed to manage patients’ access to the urodynamic test (UT) in the urology service at the Hospital de Clínicas of the Federal University of Paraná, Brazil [10, 11]. The UT is an interactive diagnostic study of the lower urinary tract, essential for diagnosing conditions such as urinary incontinence and neurogenic bladder [12].

At the Hospital de Clínicas of the Federal University of Paraná, the waiting list for the UT has grown dramatically, exceeding 3000 patients with an average waiting time of three years. The list was managed manually using an Excel spreadsheet, with no formal method for selecting patients. In practice, prioritization depended on residents, who informed administrative staff which patients they believed should be scheduled for the exam. The absence of structured criteria created several issues: patients who had already undergone other treatments, older patients, and even deceased patients remained on the list because there was no routine process for updating or removing cases. Patients who returned to the clinic with worsening symptoms were usually prioritized, introducing a bias linked to the frequency of follow-up visits. Conversely, patients with urinary incontinence, particularly women, often remained on the list indefinitely, as their condition was perceived as non-urgent. This lack of standardization resulted in prolonged waiting times and inequities in access to care, highlighting the need for a systematic and transparent prioritization approach. These challenges justified the development of a PPT that, even if it did not reduce the average waiting time, could at least support managers in prioritizing patients based on clinical urgency, ensuring transparency, consistency, and fairness in the decision-making process [6].

This paper is structured as follows: the next section presents a literature review on PPT, followed by a methodological section that introduces the development of the PPT designed for managing the prioritization of access to UT, and the process proposed for its validation. Then, results are presented and discussed. Conclusions, further research avenues, and limitations of this work conclude the paper.

2. Literature Review on Patients’ Prioritization Tools

Over the past decades, waiting lists have expanded significantly due to a combination of structural and demographic pressures. Rising demand for healthcare services—driven by population aging, the growing prevalence of chronic diseases, and advances in medical technology—has outpaced the capacity of health systems to deliver timely care. At the same time, resource constraints, including workforce shortages and limited infrastructure, have exacerbated this imbalance, making waiting lists a persistent challenge worldwide.

In response, many countries have undertaken various strategies to reduce waiting times. These interventions typically target demand, supply, or both. On the demand side, cost-sharing mechanisms have been proposed to curb excessive utilization; however, such measures are widely regarded as inequitable and unpopular. On the supply side, persistent shortages of healthcare personnel and funding constraints remain significant barriers to expanding service capacity. Moreover, studies have reported only moderate success when dedicated funding envelopes were allocated to reduce specific waiting lists [13]. Beyond these measures, efforts have also focused on improving efficiency within existing resources. Drawing inspiration from lean thinking, particularly the principles of the Toyota Production System, many hospitals have adopted process improvement strategies over the past decade to streamline workflows and ultimately reduce delays.

In contrast to these approaches aimed at increasing capacity or curbing demand, patient prioritization seeks to optimize access by ensuring that those with the greatest need are treated first. The complexity of assessing, ranking, and managing patients on waiting lists has led to the development of PPTs. These tools are intended to assist decision-makers in determining which patients should be scheduled when demand exceeds available capacity. Typically, PPTs employ a weighted set of criteria, allowing each patient to be evaluated against predefined factors. The cumulative score derived from these criteria enables systematic ranking and supports resource allocation decisions [5, 14]. PPTs have attracted considerable attention for their potential to promote fairness, transparency, consistency, and efficiency, four foundational principles for managing waiting lists and ensuring equitable access to healthcare services [15, 16].
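The cumulative weighted score described above can be sketched as follows. This is a minimal illustration, assuming each criterion has already been converted to a normalized score in [0, 1] and that the weights sum to 1; the `Patient` type, the criterion names, and the weights are hypothetical and do not reproduce the criteria or weights of any tool cited here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    # Hypothetical criterion name -> normalized score in [0, 1]
    scores: dict[str, float]


def priority_score(patient: Patient, weights: dict[str, float]) -> float:
    """Cumulative score: sum of weight * criterion score (missing criteria count as 0)."""
    return sum(w * patient.scores.get(criterion, 0.0) for criterion, w in weights.items())


def rank_patients(patients: list[Patient], weights: dict[str, float]) -> list[str]:
    """Patient IDs from most to least urgent; ties keep their input order (stable sort)."""
    ordered = sorted(patients, key=lambda p: priority_score(p, weights), reverse=True)
    return [p.patient_id for p in ordered]


# Hypothetical weights for three generic criteria (they sum to 1)
weights = {"severity": 0.5, "pain": 0.3, "social_role": 0.2}
patients = [
    Patient("A", {"severity": 1.0, "pain": 0.2, "social_role": 0.0}),
    Patient("B", {"severity": 0.4, "pain": 1.0, "social_role": 1.0}),
    Patient("C", {"severity": 0.2, "pain": 0.2, "social_role": 0.5}),
]
print(rank_patients(patients, weights))  # ['B', 'A', 'C']
```

Because the weights are fixed, identical inputs always produce the same order, which is the consistency property these tools are valued for.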

The criteria used in PPTs vary depending on the clinical context but commonly include personal factors (e.g., age), social factors (e.g., ability to work), clinical indicators (e.g., quality of life, severity of condition), and other context-specific elements [17, 18]. This contextual dependency has led to a lack of standardization across tools, as highlighted in several studies [19, 20].

Selecting the appropriate criteria and determining their relative importance is one of the most challenging aspects, if not the most challenging, in the development of PPTs. The choice of criteria must reflect both clinical relevance and ethical considerations, yet there is no universal consensus on which factors should be prioritized. Studies have shown that criteria such as pain intensity, functional limitations, disease progression, and social roles are commonly used, but their inclusion and, even more so, the weight they receive vary significantly across tools and contexts [5, 14, 17]. This lack of standardization in criteria and objectives complicates comparisons between systems and may affect the fairness and transparency of prioritization decisions.

In recent years, ethical dimensions have been increasingly integrated into prioritization frameworks. Some authors argue that prioritization should not rely solely on clinical urgency but also consider equity, social vulnerability, and moral responsibility [21, 22]. Moreover, assigning weights to each criterion, a process often based on expert consensus, statistical modeling, or decision-making frameworks like the Analytic Hierarchy Process (AHP), introduces further complexity. The relative importance of criteria can be influenced by clinical urgency, patient-reported outcomes, and societal values, making the weighting process inherently subjective [23]. Furthermore, participatory modeling approaches involving stakeholders have been proposed to ensure legitimacy and inclusiveness in decision-making [21]. For instance, some tools rely on participatory approaches involving clinicians and patients to establish consensus, while others use simulation or AI-based models to optimize scoring systems. Despite these efforts, the challenge remains to balance clinical objectivity with ethical sensitivity, ensuring that prioritization tools are both effective and just.
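As an illustration of one weighting option mentioned above, AHP derives criterion weights from a matrix of pairwise comparisons. The sketch below uses the row geometric-mean approximation rather than Saaty's principal-eigenvector method (the two coincide for perfectly consistent matrices). The function name and example matrix are hypothetical, and nothing here implies that the PPT discussed in this paper obtained its weights this way.

```python
from __future__ import annotations

import math


def ahp_weights(pairwise: list[list[float]]) -> list[float]:
    """Approximate the AHP priority vector with the row geometric-mean method.

    pairwise[i][j] states how much more important criterion i is than
    criterion j (e.g., on Saaty's 1-9 scale), with pairwise[j][i] == 1 / pairwise[i][j].
    """
    n = len(pairwise)
    if any(len(row) != n for row in pairwise):
        raise ValueError("pairwise comparison matrix must be square")
    # Geometric mean of each row, then normalize so the weights sum to 1
    geo_means = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geo_means)
    return [g / total for g in geo_means]


# A perfectly consistent matrix built from target weights 0.5, 0.3, 0.2
target = [0.5, 0.3, 0.2]
matrix = [[wi / wj for wj in target] for wi in target]
print([round(w, 3) for w in ahp_weights(matrix)])  # [0.5, 0.3, 0.2]
```

Real expert judgments rarely yield a perfectly consistent matrix, which is why AHP practice also checks a consistency ratio before accepting the weights.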

Examples of scoring systems illustrate how PPTs operate in practice. The Western Canada Waiting List Project, for instance, developed tools for various specialties, including hip and knee arthroplasty, cataract surgery, and mental health services. These tools use point-count systems, where each criterion is assigned a numerical value, and the total score determines the patient’s priority level [15, 16, 24]. In orthopedic surgery, the WOMAC index evaluates pain, stiffness, and physical function, producing a score from 0 to 100. Patients are then categorized into urgency groups [5]. Similarly, the Obesity Surgery Score (OSS) incorporates BMI, obesity-related comorbidities, and functional limitations to identify high-risk patients and prioritize bariatric surgery [25].
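Point-count systems of this kind typically end by mapping a total score to an urgency group. A minimal sketch of that last step follows; the function name, cutoffs, and labels are hypothetical placeholders, not the thresholds used by the Western Canada tools, WOMAC-based categories, or the OSS.

```python
from __future__ import annotations

import bisect


def urgency_group(total_points: float, cutoffs: list[float], labels: list[str]) -> str:
    """Map a point-count total to an urgency label.

    cutoffs: ascending, inclusive lower bounds of every group except the first.
    labels:  one more entry than cutoffs, ordered from least to most urgent.
    """
    if len(labels) != len(cutoffs) + 1:
        raise ValueError("labels must have exactly one more entry than cutoffs")
    if any(a >= b for a, b in zip(cutoffs, cutoffs[1:])):
        raise ValueError("cutoffs must be strictly increasing")
    # bisect_right counts how many cutoffs are <= total_points
    return labels[bisect.bisect_right(cutoffs, total_points)]


# Hypothetical 0-100 scale split into three groups
cutoffs, labels = [40, 70], ["low", "medium", "high"]
for score in (25, 40, 69.5, 88):
    print(score, urgency_group(score, cutoffs, labels))
```

Using `bisect_right` makes each cutoff belong to the more urgent group (a score of exactly 40 is "medium" here); that boundary convention is itself a design choice a real tool would need to document.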

Efforts have also been made to develop generic tools applicable across elective procedures. One such tool evaluates patients based on clinical and functional impairment, expected benefit, and social role, encompassing subcriteria like disease severity, pain, progression rate, daily activity limitations, and caregiving responsibilities [20]. The Surgical Waiting List Info System (SWALIS) project in Italy exemplifies a national initiative to standardize prioritization across surgical services. It provides real-time data to monitor waiting lists and supports equitable and efficient resource management [26].

Despite the potential benefits of patient prioritization tools, their implementation in clinical settings is scarce and faces several barriers. Organizational resistance to change, limited staff training, and concerns about the accuracy and fairness of scoring systems can hinder adoption [6]. Healthcare professionals may be skeptical of algorithm-based decisions, especially when ethical considerations and patient preferences are not adequately addressed [21]. A critical barrier is the issue of user trust: clinicians and administrators may hesitate to rely on prioritization tools if they perceive them as opaque or unreliable. This lack of trust can significantly impede integration into routine practice. However, rigorous validation processes, including assessments of reliability, validity, and clinical relevance, can help mitigate this barrier by demonstrating the tool’s effectiveness and legitimacy [17, 18]. Technical challenges, such as integrating prioritization tools into existing electronic health record systems, also pose significant obstacles. Moreover, the lack of standardized protocols and the need for continuous validation of the tools across diverse clinical contexts complicate their widespread use [22]. Addressing these barriers requires participatory implementation strategies, ongoing evaluation, and institutional support to ensure that prioritization tools are effectively and ethically integrated into healthcare workflows.

Although PPTs have been widely discussed in the literature for their conceptual and methodological merits, evidence of rigorous validation and verification remains scarce. The reviewed studies emphasize theoretical frameworks or propose algorithmic models, and only a few of them conduct evaluations in real-world clinical environments. This gap raises concerns about the reliability, generalizability, and practical applicability of these tools. This paper proposes and illustrates a validation process for a patient prioritization tool, emphasizing that rigorous validation—through reliability testing, stakeholder engagement, and contextual adaptation—is key to fostering trust and facilitating successful implementation in clinical practice.

4. Results

This section reports the application of the proposed validation methodology to the PPT for managing access to urodynamic tests. The validation process followed these main steps. First, a synthetic dataset of patients was generated to simulate real clinical scenarios. Second, two experts independently evaluated each patient within the PPT according to the predefined criteria and scales established during the Design phase (see [10, 11]). Performing these evaluations directly in the PPT ensured the independence of each expert’s judgment while reinforcing the robustness of the results by incorporating multiple expert opinions (in our case, two). Third, the PPT computed a ranking of patients based on these evaluations and the weights previously assigned to each criterion, as described in [10, 11]. Fourth, each expert produced an individual ranking of the same patients according to their clinical judgment. The validation itself then begins: the concordance between the rankings generated by the PPT (using the experts’ evaluations) and those produced manually by the experts is evaluated, and discrepancies are analyzed collaboratively with the experts. If the discrepancies show that the criteria in the PPT are not aligned with the experts’ judgment, the PPT parameters (i.e., the weights granted to the criteria) may be adjusted. These steps are detailed in the following subsections.

Data collection for testing. The process began with the generation of ten synthetic patients by a nurse from the outpatient clinic of urinary dysfunction. The sample size was kept small to allow manual ranking by physicians while ensuring diversity in terms of pathologies, signs, and symptoms, an essential consideration in complex healthcare systems where patient heterogeneity significantly impacts prioritization.

Two complementary data sources were used: (i) existing clinical records, including the date of the patient’s first consultation, socio-economic characteristics, and previous test results; and (ii) patient responses to a questionnaire based on the uro-functional classification. Data collection spanned three weeks, as patients were encouraged to provide detailed narratives of their conditions.

To facilitate expert evaluation, a researcher transcribed the collected information into narrative-style patient histories, including names (fictional), age, gender, occupation, test results, and relevant clinical details. This format was chosen to mirror the physicians’ usual workflow, making the evaluation process as realistic as possible. An example of such a record is provided below.

“A female patient, 25 years old, neurogenic bladder due to congenital spinal cord injury, reports having a great loss in work activity due to the disease, has no dependents, lives with her mother. The patient retired due to disability. She reports a large amount of urine loss all the time. She waits in line for 14 months to perform Urodynamics. No renal disease, normal USG, creatinine of 0.3, and oxybutynin with significant improvement of symptoms. ICIQ = 21.”

Test of agreement—Round one. The ten patients’ records (P01 to P10) were submitted to two experts who individually ranked them. To minimize potential bias, the files were presented in random order, and the experts were instructed not to communicate during the evaluation. The first expert was the chief of the urodynamics service, and the second was a resident urologist. Both experts had participated in the Design step, contributing to the identification of the relevant criteria and the quantification of their relative importance. We will refer to the rankings produced manually by the urodynamics chief and by the resident as the Chief’s ranking and the Resident’s ranking, respectively.

Next, both experts assessed each patient according to the criteria and scales defined in the PPT (see step two, Evaluation, in the previous subsection). Based on these evaluations, the PPT generated two rankings: one using the Chief’s assessments and one using the Resident’s assessments. Patients were sorted by their computed scores, with higher scores indicating greater urgency. The two agreement metrics, the average Spearman footrule distance and the Spearman correlation coefficient, were computed between the Chief’s and the Resident’s manual rankings to assess inter-rater agreement, and between the two rankings associated with each expert (i.e., the manual ranking and the PPT ranking based on that expert’s assessments). Finally, we also computed both metrics between the two rankings produced using the PPT.
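The two agreement metrics can be computed as sketched below. Their exact definitions belong to the methodology, which is not part of this excerpt, so this sketch assumes that the average Spearman footrule distance is the sum of absolute position differences divided by the number of patients, and that rankings contain no ties, so Spearman’s coefficient reduces to 1 − 6Σd²/(n(n² − 1)). The function names and the four-patient example are hypothetical.

```python
from __future__ import annotations


def positions(ranking: list[str]) -> dict[str, int]:
    """Map each patient ID to its 1-based position in a prioritized list."""
    if len(set(ranking)) != len(ranking):
        raise ValueError("a ranking must not list the same patient twice")
    return {pid: i + 1 for i, pid in enumerate(ranking)}


def _paired_positions(a: list[str], b: list[str]) -> list[tuple[int, int]]:
    """Positions of each patient in list a and list b."""
    pa, pb = positions(a), positions(b)
    if pa.keys() != pb.keys():
        raise ValueError("both rankings must contain the same patients")
    return [(pa[p], pb[p]) for p in pa]


def avg_footrule(a: list[str], b: list[str]) -> float:
    """Average Spearman footrule: mean absolute position difference (0 = identical)."""
    pairs = _paired_positions(a, b)
    return sum(abs(x - y) for x, y in pairs) / len(pairs)


def spearman_rho(a: list[str], b: list[str]) -> float:
    """Spearman correlation for tie-free rankings: 1 - 6*sum(d^2) / (n(n^2 - 1))."""
    pairs = _paired_positions(a, b)
    n = len(pairs)
    d2 = sum((x - y) ** 2 for x, y in pairs)
    return 1 - 6 * d2 / (n * (n * n - 1))


manual = ["P01", "P02", "P03", "P04"]
tool = ["P02", "P01", "P03", "P04"]
print(avg_footrule(manual, tool), spearman_rho(manual, tool))
```

Both functions are symmetric in their two arguments. If SciPy is available, `scipy.stats.spearmanr` provides a library implementation of the correlation that also handles ties.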

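Table 1 reports the results. Each row gives the positions of patients P01 to P10 in one of the prioritized lists (the manual and the PPT-generated rankings). The right part of the table reports the values of the agreement metrics between pairs of lists; for instance, the average Spearman footrule distance between two lists is given in the row of one list, under the column header identifying the other. Notice that both metrics are symmetric: the value computed between lists A and B equals the value computed between lists B and A.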

The comparisons provide insights into the alignment between expert judgment and the tool’s logic, as well as the tool’s robustness when fed with different expert inputs.

Table 1 shows that the rankings produced by both experts are quite similar, with high agreement on both metrics. Although both experts work within the same service, slight differences in their interpretation of patient histories prevent a perfect match. In practice, it is unlikely that two experts will produce identical rankings. For this reason, we accept the footrule distance observed between the two experts’ rankings as a high agreement rate and adopt this value as a baseline for comparison. The two rankings produced by the PPT also exhibit good agreement, with distances slightly worse than our baseline. However, when comparing the rankings produced manually by the Chief and the Resident to those generated by the PPT from their own assessments, the differences are substantial: the footrule distances rise markedly, and the Spearman correlations deteriorate similarly (see Table 1).

In summary, there is a strong agreement between the rankings produced by the two experts, but significant divergence from the PPT-generated rankings. Since the two PPT rankings are relatively consistent, further investigation was required to identify the reasons for these discrepancies and to determine corrections to the PPT if needed.

Analysis round one. The two experts (the chief and the resident) participated in a meeting with the research team to discuss how they assessed and prioritized the patients in the test set, and to identify the factors explaining the differences between their rankings and those produced by the PPT.

We began by examining patients whose positions differed most between the manual and PPT-generated lists. This analysis revealed that patients with neurogenic bladder were assigned lower priority in the manual rankings compared to the PPT rankings. Neurogenic bladder is recognized as a primary risk factor for kidney complications, and the experts acknowledged its high importance in the prioritization process. They realized that this aspect—particularly creatinine levels—had been undervalued in their manual assessments, whereas the PPT had appropriately weighted it during evaluation.
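The first step of this analysis, finding the patients whose positions differ most between a manual list and a PPT-generated list, can be sketched as follows. The function name and the four-patient example are hypothetical and purely illustrative; they are not the study’s data.

```python
from __future__ import annotations


def largest_displacements(
    manual: list[str], tool: list[str], top: int = 3
) -> list[tuple[str, int]]:
    """Patients whose position differs most between a manual and a PPT ranking.

    Returns (patient ID, shift) pairs sorted by absolute shift, where
    shift = tool position - manual position. A negative shift means the tool
    places the patient closer to the top (more urgent) than the manual list.
    Equal shifts keep the order of the manual list (stable sort).
    """
    pos_manual = {p: i for i, p in enumerate(manual)}
    pos_tool = {p: i for i, p in enumerate(tool)}
    if pos_manual.keys() != pos_tool.keys():
        raise ValueError("both rankings must contain the same patients")
    shifts = [(p, pos_tool[p] - pos_manual[p]) for p in manual]
    shifts.sort(key=lambda item: abs(item[1]), reverse=True)
    return shifts[:top]


manual = ["P01", "P02", "P03", "P04"]
tool = ["P04", "P01", "P02", "P03"]
print(largest_displacements(manual, tool, top=2))  # [('P04', -3), ('P01', 1)]
```

Such a listing only points to where to look; explaining each shift still requires discussion with the experts, as in the analysis above.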

Test of agreement—Round two. Based on the results of the analysis, we did not find reason to modify the PPT. However, the experts were invited to reconsider their rankings using any methodology they deemed appropriate. In this second round, the experts decided to work together, adopting a two-step approach. First, they categorized patients into three priority groups—high, medium, and low—and then they ranked the patients within each group. In this revised classification, patients with neurogenic bladder were assigned to the high-priority category; those with Benign Prostatic Hyperplasia (BPH) to the medium-priority category; and patients with Urinary Incontinence Refractory (UIR) and other conditions to the low-priority category. Within each category, patients were ordered based on their risk of developing kidney disease.

The experts revisited the patient histories, prioritizing first according to kidney function parameters (creatinine). When renal function was similar between two patients, the underlying condition served as the secondary criterion. For example, between a patient with BPH and another with neurogenic bladder, both with similar renal function, the latter was prioritized due to the higher risk of sudden renal complications. In the end, the experts produced a new joint ranking, hereafter referred to as the joint expert ranking.
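The two-step strategy adopted in round two (assign a priority group by condition, then order patients within each group by renal risk) amounts to sorting on a composite key. The sketch below is a simplification under stated assumptions: the condition-to-group mapping mirrors the grouping described above, while the `Case` type, the use of a single creatinine value as the within-group criterion (higher value taken as higher renal risk), and the example values are hypothetical. Swapping the two key components would instead model the creatinine-first reading, with the condition as tie-breaker.

```python
from __future__ import annotations

from dataclasses import dataclass

# Condition -> priority group (0 = high); unlisted conditions fall into "low".
GROUP_BY_CONDITION = {"neurogenic bladder": 0, "BPH": 1, "UIR": 2}
DEFAULT_GROUP = 2


@dataclass
class Case:
    patient_id: str
    condition: str
    creatinine: float  # hypothetical within-group criterion; higher = higher renal risk


def two_step_ranking(cases: list[Case]) -> list[str]:
    """Step 1: group by condition. Step 2: within a group, highest creatinine first."""

    def sort_key(case: Case) -> tuple[int, float]:
        group = GROUP_BY_CONDITION.get(case.condition, DEFAULT_GROUP)
        return (group, -case.creatinine)

    return [c.patient_id for c in sorted(cases, key=sort_key)]


cases = [
    Case("A", "UIR", 1.5),
    Case("B", "neurogenic bladder", 0.8),
    Case("C", "BPH", 1.2),
    Case("D", "neurogenic bladder", 1.1),
]
print(two_step_ranking(cases))  # ['D', 'B', 'C', 'A']
```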

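Table 2 presents this ranking and the two agreement metrics (the average Spearman footrule distance and the Spearman correlation coefficient) computed between the joint expert ranking and each of the two rankings generated by the PPT in the first round, using the assessments of the Chief and the Resident, respectively.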

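The results indicate that the joint expert ranking is closer to the PPT rankings than the experts’ first-round manual rankings were. Indeed, it is closer to the PPT ranking based on the Chief’s assessments than to the one based on the Resident’s assessments, suggesting that the Resident’s assessments were less accurate. Nevertheless, the distances still reflect low agreement.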

Analysis round two. A closer look at the experts’ ranking showed that patient P06 was the main source of the discrepancy with the PPT ranking. We therefore analyzed P06’s history in more detail and discovered that P06 had been classified as having kidney disease, although the patient’s actual condition (a grade I renal cyst) was not clinically relevant. This can be considered an error or inaccuracy in the data. We corrected the assessment of P06 with respect to this criterion in the PPT, and a third round was launched.

Test of agreement—Round three. In this third round, we consider the joint expert ranking produced during round two and a new ranking produced by the two experts using the PPT, hereafter the updated PPT ranking. The updated PPT ranking and its agreement metrics with respect to the joint expert ranking are reported in Table 3.

The updated PPT ranking demonstrated substantial convergence with the experts’ ranking, with an average Spearman footrule distance of 1.2 and a Spearman correlation coefficient of 0.842, which confirms good agreement.

Analysis round three. It is noteworthy that patient P01, diagnosed with neurogenic bladder, was initially poorly prioritized by both the experts and the PPT. Although this patient had normal test results, annual follow-up was necessary to prevent kidney damage due to spinal disease. Consequently, the physicians decided to place this patient on a separate list for periodic examinations. The ranking of the PPT was manually adjusted to reflect this decision.

Final analysis. The final rankings, presented in Table 4, achieved an average Spearman footrule distance that matches our baseline and a Spearman correlation coefficient very close to 1. Importantly, both experts deemed the final PPT ranking as reasonable as their own, expressed confidence in the tool, and confirmed their willingness to adopt it in practice.

5. Discussion

The primary objective of the validation process was to ensure that the behavior of the PPT aligns with the prioritization logic defined by the experts during the design phase. In pursuing this goal, the process revealed certain inconsistencies between the agreed weighting framework and the experts’ individual decision-making practices. Although the experts had reached consensus on the relative importance of the different criteria, this agreement did not fully translate into their manual rankings. When prioritizing patients individually, each expert tended to deviate from the agreed weighting logic, suggesting that cognitive biases and contextual factors influence decision-making even when predefined priorities are clear. This reinforces the need for structured tools such as PPT to ensure consistency and adherence to the intended prioritization framework.

Specifically, the analysis of the initial discrepancies between manual and PPT rankings led the experts to conclude that they had not given neurogenic bladder sufficient relevance in their assessments, even though its importance was acknowledged during the design phase. The experts adapted their approach to ensure adequate consideration of neurogenic bladder, and the experiments showed that the distance between their second ranking and the PPT’s ranking was substantially reduced.

Applying all relevant criteria consistently across multiple patients also proved challenging. To mitigate this difficulty, the experts changed their ranking strategy: patients were first separated into priority groups and then sorted within each group. Using this approach, they produced a new ranking that was much closer to the PPT’s, particularly for high- and low-priority patients; medium-priority patients were ranked similarly in both.

Finally, discrepancies concerning patient P06 allowed the team to identify a data error. After correcting this error, the agreement between the manual and PPT rankings reached values that can be considered excellent.

The validation process provided valuable insights into the challenges and complexities associated with expert-based evaluation. By analyzing and comparing their own rankings with those generated by the PPT, the experts were prompted to reconsider not only the relative importance assigned to each criterion but also the relevance of certain criteria in practice. During this process, the experts recognized a potential gap between the importance they believed a criterion should have and the actual weight it received in their decision-making. This observation underscores the cognitive limitations inherent in complex, multicriteria decision contexts and highlights the value of systematic approaches such as PPT. Both experts acknowledged that maintaining stability and consistency in applying criterion weights is a key feature of the tool. Ultimately, the experts expressed strong confidence in the PPT’s results and endorsed its practical implementation, recognizing its potential to enhance transparency, fairness, and reliability in patient prioritization.

Beyond validating the PPT’s performance, the process itself provided a structured framework for expert reflection and iterative improvement. The application of the process involved several key steps: first, experts compared their initial manual rankings with the PPT-generated ranking, which highlighted discrepancies and prompted discussion about the interpretation of criteria. Second, adjustments were made to ensure that critical clinical factors, such as neurogenic bladder, were adequately weighted. Third, experts adopted a grouping strategy to simplify ranking, which improved alignment with the PPT and reduced cognitive load. These steps illustrate how the process not only validated the tool but also served as a learning mechanism, enabling experts to refine prioritization practices and uncover systemic weaknesses in referral pathways. This iterative approach demonstrates the practical value of combining quantitative methods with expert judgment to achieve more equitable and transparent decision-making.

When asked to summarize his impressions of the development of the PPT to help manage access to the urodynamic test, the chief of the urology service answered: “This research prompted a critical reassessment of the urodynamic examination process, from patient indication to service delivery. By engaging physicians in reflective analysis, the study exposed weaknesses in the referral and prioritization mechanisms, encouraging the health system to adopt a more structured and equitable approach. This included recognizing that time of arrival on the waiting list should not be the sole criterion for care allocation, as medical urgency and social context must also be considered.

“The findings led to concrete management improvements. The hospital redefined its patient flow, distinguishing those who truly required urodynamic testing from those who could benefit from alternative, conservative treatments such as physiotherapy or pessaries. This process fostered a more efficient and fair use of resources and deepened the organization’s understanding of equity, prioritization, and patient-centered care within the public health context.”

6. Conclusions

This paper illustrates and discusses the validation process designed to assess the extent to which a Computer-Based Patient Prioritization Tool (PPT), conceived to manage patients’ access to the urodynamic test in the urology service at the Hospital de Clínicas of the Federal University of Paraná, Brazil, can produce outcomes that meet experts’ requirements before its potential implementation. Validation plays a critical role in the development life cycle of decision-support tools, especially in complex healthcare systems where multiple stakeholders, interdependent processes, and resource constraints can lead to unintended consequences if tools are not rigorously tested.

The proposed validation process aimed to identify and explain differences between prioritization decisions made by experts and those generated by the PPT, ultimately assessing the level of trust that can be placed in the tool. Through a series of iterative tests, disagreements were quantified using the average Spearman footrule distance and the Spearman correlation coefficient. Initial results revealed strong agreement between the experts but significant divergence from the PPT. This process, however, uncovered both human and data-related issues: the experts adjusted their weighting of criteria, and a data error was corrected. After these refinements, the disagreement decreased substantially, reaching distances that indicate very good agreement between the rankings and attest to the tool’s excellent performance. On this test set, these results demonstrate that the PPT can reliably reproduce expert consensus and ensure consistency in prioritization decisions.

Beyond validating the PPT, this iterative process demonstrated its methodological value as a structured approach for aligning expert judgment with algorithmic logic and improving decision-support tools through feedback loops. From a practical perspective, the study contributed to enhancing transparency and fairness in patient prioritization, fostering user confidence, and prompting organizational changes in referral practices. These findings highlight the importance of structured validation in bridging the gap between research prototypes and real-world implementation in complex healthcare environments.

Despite the promising results, the validation process carried out has some limitations. First, the test set of 10 patients does not capture the full diversity of clinical profiles and scenarios. Larger and more heterogeneous samples are needed to confirm the tool’s robustness before practical implementation. Additionally, the patient descriptions used in the tests were synthesized from two sources—clinical records and patient questionnaires—and transcribed by a nurse into historical-like records, which may have homogenized and, potentially, introduced a bias in the information presented to experts. Future tests should involve real patient data to minimize potential bias introduced during test case preparation.

A second limitation concerns variability in expert opinions. The experiments involved two experts from the same service, whose rankings were highly consistent. However, other experts might produce more divergent rankings. Since the PPT design averages expert opinions, some experts may reject rankings that differ from their own, including those generated by the tool. This highlights the importance of internal communication and change management during implementation. Stakeholders must understand that PPT rankings will approximate expert consensus but will not replicate any individual expert’s decisions. Finally, communication strategies should emphasize the benefits of standardized criteria and evaluation as a means to achieve fairness and transparency in access to healthcare services.

Future work will focus on expanding the test set, involving multiple experts from different institutions, and conducting real-world pilot studies to assess the tool’s performance in a complex healthcare environment. These steps are essential to strengthen trust, ensure scalability, and support the transition from prototype to practice.