1. Introduction

With the global trend of population aging and the rising prevalence of sub-health issues, healthcare has emerged as a critical societal concern worldwide. Many countries, including China, face substantial challenges in the allocation of medical resources, extending beyond physical infrastructure to include human resources. These issues were starkly revealed during the COVID-19 pandemic, when severe staffing shortages forced healthcare systems to develop ethical frameworks for rationing scarce staff time to maximize population-level benefits [1].

China has established a three-tier medical service system in which primary hospitals provide basic medical services, secondary hospitals manage common diseases, and tertiary hospitals are responsible not only for the diagnosis and treatment of acute and critical diseases but also for medical education and research. However, Chinese patients have historically placed disproportionate trust in high-profile public hospitals and renowned specialists. This preference has led to an imbalanced utilization of health care resources, resulting in overcrowded tertiary hospitals while medical resources at secondary and primary hospitals remain underutilized. Indeed, pervasive trust in high-profile public hospitals and well-known specialists has also been documented in many other countries [2,3]. Consequently, it is essential to develop effective initiatives to improve overall utilization of medical resources.

Nowadays, online consultation platforms (OCPs), as a key component of the “Internet+” initiative, have become an integral part of China’s healthcare system. Most hospitals in China encourage physicians to register on OCPs to provide internet-based medical services, such as online consultations, thereby offering patients more convenient access to healthcare for common diseases [4,5]. According to data from Collaborative Research Network, the market size of China’s Internet-based healthcare industry reached 309.9 billion RMB in 2022. Prominent online medical platforms such as Haodf, Chunyu YiSheng, and 39 Health provide a wide range of services, including online diagnosis and appointment scheduling, thus offering patients more accessible and diversified medical service options.

Although, in theory, physicians registered on OCPs are sufficiently qualified to ensure the quality and comparability of consultation services within their respective specialties, a pattern mirroring offline settings persists on OCPs: well-known physicians from top-tier hospitals are disproportionately sought after, while capable specialists from lower-level hospitals and newly registered doctors remain underutilized. This reflects a broader systemic issue in which patient preferences are driven more by physician visibility and historical reputation than by competence alone.

Given the proven success of recommendation mechanisms in promoting high-quality products on online trading platforms, it is essential to develop an appropriate recommendation system to enhance patient-doctor matching efficiency on OCPs. Such a system should recommend underutilized physicians to patients with relevant treatment needs, thereby promoting a more balanced allocation of medical resources. Nevertheless, existing doctor recommendation mechanisms face a critical trilemma: balancing algorithmic efficiency, recommendation quality, and equitable resource allocation.

On the one hand, as the computational complexity of recommendation models rises, small- and medium-sized platforms with limited resources often struggle to bear the deployment and maintenance costs associated with high-performance computing infrastructure. On the other hand, current recommendation algorithms primarily focus on matching patients’ diagnostic needs with doctors’ professional expertise [6], while overlooking other patient preferences in physician selection, let alone the fairness of public medical resource allocation. This imbalance leads to a paradoxical situation: patients endure long waiting times for doctor responses, risking dissatisfaction or delayed treatment, while newly registered high-quality doctors remain underexposed and underutilized.

To address these challenges, this study proposes a lightweight doctor recommendation framework for OCPs, employing a multi-dimensional data fusion strategy. The framework integrates patients’ diverse preference dimensions with fairness in public medical resource allocation, thereby providing a practical and high-quality solution for small- and medium-sized OCPs.

2. Literature Review

2.1. Research on Factors Influencing Doctor Selection of Patients

Physicians’ professional competence is one of the core considerations in patients’ doctor-selection decisions; however, no unified standard currently exists for its evaluation. According to the literature, factors associated with doctors’ professional competence that significantly influence patients’ choices include professional title [7,8], years of practice [8], hospital level [9], and academic achievements [7,10], among others.

Research on online medical services has further identified additional factors shaping patients’ decision-making. These include doctors’ online reputation [11,12,13], the volume of online review texts [14,15], the diversity of services offered [11], and the pricing of consultation services [11]. Moreover, the level of online engagement between physicians and patients has been shown to significantly affect patients’ willingness to choose a particular doctor [16].

In addition, some studies highlight the role of patient-specific factors, such as geographic distance [17], condition severity [18,19], whether it is the patient’s first visit [13,19], and even patient gender [7], in influencing doctor selection.

In summary, extensive research has examined patients’ doctor selection behavior. A comprehensive analysis of existing findings suggests that the evaluation of physicians’ professional qualifications primarily involves factors such as professional title, hospital level, and online reputation. Nevertheless, patients also draw on historical textual information regarding doctors’ online performance and institutional background. These insights provide the foundation for constructing the patient doctor-selection priority evaluation indicator set in this study.

2.2. Doctor Recommendation

With the increasing adoption of OCPs, research on online doctor recommendation has become a prominent academic focus. Current studies indicate that online doctor recommendation algorithms primarily rely on content-based methods [20] and collaborative filtering techniques [21]. In recent years, multimodal fusion, deep learning techniques, and applications of large language models have become major directions in recommendation algorithm development [22].

Content-based doctor recommendation algorithms match patients’ consultation needs with doctors’ characteristics by using data such as consultation text or disease keyword tags [23], doctor reputation scores, and historical consultation data [24,25]. Among these factors, text representation techniques play a pivotal role in determining the efficiency and accuracy of content-based doctor recommendations. Existing studies show that embedding methods like Word2Vec are widely used to extract disease features from consultation texts [26], while LDA topic models are commonly applied for extracting doctor characteristics [27,28].

Collaborative filtering-based recommendation algorithms, by contrast, generate recommendations by identifying similarities between patients and doctors [29]. These methods can be enhanced by incorporating diverse features such as user demographics and aggregated review statistics to improve recommendation accuracy [30]. To address the cold-start problem for newly registered doctors, these algorithms primarily combine symptom similarity with social network analysis to optimize recommendation results [31,32].

In recent years, some studies have further enhanced recommendation robustness by integrating collaborative filtering with content-based methods [33]. Additionally, an emerging approach that defines doctor recommendation as an extreme multilabel classification task has proven effective in leveraging limited data and achieving significant results in cold-start scenarios [34]. Deep learning techniques have also become increasingly prevalent in doctor recommendation mechanisms. For example, Convolutional Neural Networks (CNNs) have been used to learn symptom-doctor matching features [35,36]; Graph Neural Networks (GNNs) have been employed to model multi-layer doctor–patient relationships [37]; attention mechanisms have been applied to improve the granularity of doctor–patient interaction modeling [38]; and knowledge graphs have been integrated with deep networks to improve recommendation quality and interpretability [39,40,41]. For medical tag clustering and classification, SBERT and OpenAI models have been shown to significantly outperform traditional FastText embeddings [42].

In summary, recent research has predominantly employed multimodal fusion approaches, integrating deep learning and large language models, to improve recommendation accuracy. Nevertheless, this field faces three major challenges that constitute critical research gaps:

- (1) Model Specificity vs. Generality: Although most studies employ general-purpose models such as Word2Vec and BERT for feature extraction, limited attention has been given to developing domain-specific and computationally efficient text representation models tailored to the medical consultation context.

- (2) Accuracy vs. Efficiency Trade-off: Despite their advantages in improving recommendation accuracy, deep learning and large language models incur substantial computational complexity, increasing system costs and reducing operational efficiency. This makes them less suitable for platforms under resource constraints.

- (3) Narrow Focus on Symptom Matching: Existing recommendation models tend to focus exclusively on aligning patient symptoms with doctors’ professional skills, often overlooking patients’ personalized preferences and the equitable distribution of public medical resources.

To address these challenges, this study proposes an online doctor recommendation algorithm for OCPs that emphasizes three key aspects: the selection of a lightweight yet efficient text representation model, the development of a comprehensive physician recommendation evaluation system, and the integration of both doctor–patient technical matching and the prioritization of underutilized medical service resources.

3. Problem Description

Haodf.com is a prominent Chinese online medical service platform that provides healthcare reference information to Chinese patients. Since the launch of its first website version in 2006, the platform has evolved into an influential outpatient information query system, accumulating substantial structured and unstructured medical data. The data involved in this study comprises two anonymized datasets obtained from the Haodf Online Consultation Platform (OCP), including physician information and patient information, collected in strict accordance with data privacy and compliance protocols.

As the study relies on pre-existing, de-identified data with no possibility of individual re-identification, it qualifies for exemption from institutional review board (IRB) approval under applicable ethical guidelines. Furthermore, all data-handling procedures adhere strictly to the platform’s terms of service and relevant data protection regulations, thereby ensuring the highest standards of research ethics and data security throughout the study.

As shown in Table 1, physician information typically includes basic attributes (e.g., name, title, hospital), professional background (e.g., specializations, publications), and service data (e.g., price, gift). Some of these attributes are provided in structured formats, whereas others appear as semi-structured text.

For patients potentially receiving physician recommendation services, the relevant data contains registration information and brief symptom descriptions. These are standardized into a set of patient characteristic indicators, as presented in Table 2, specifically including: Consultation ID, Gender, Age, and Consultation content. Symptom descriptions are typically unstructured data entered as free-text inputs.

Assuming a patient has registered on the Haodf.com platform, the primary workflow for recommending physicians capable of providing online consultation services proceeds as follows:

- (1) The patient inputs symptom descriptions as text into the platform search box.

- (2) The platform analyzes keywords extracted from the symptom description.

- (3) The system searches the backend database for physicians whose expertise matches the patient’s needs.

- (4) When multiple physicians are available, the platform determines recommendation priorities based on physician-patient matching.

- (5) The system generates and displays a ranked list of recommended physicians according to priority for patient selection.

The next section will provide a detailed description of the main steps involved in generating physician recommendations, including technical specifics of key processes.

4. Methodology

4.1. General Procedure of Algorithm

The doctor recommendation process on the OCPs primarily entails matching doctors to patients’ needs. According to the literature, patients’ trust in the perceived quality of medical service may be shaped by both the information provided by doctors and reviews from other patients with similar medical needs.

In this study, we propose a hybrid recommendation approach that integrates three strategies: (1) leveraging records from patients with similar requirements, (2) expanding the set of doctor candidates by including those with similar specialties, and (3) determining the recommendation order using an entropy weight-TOPSIS multi-criteria evaluation model.

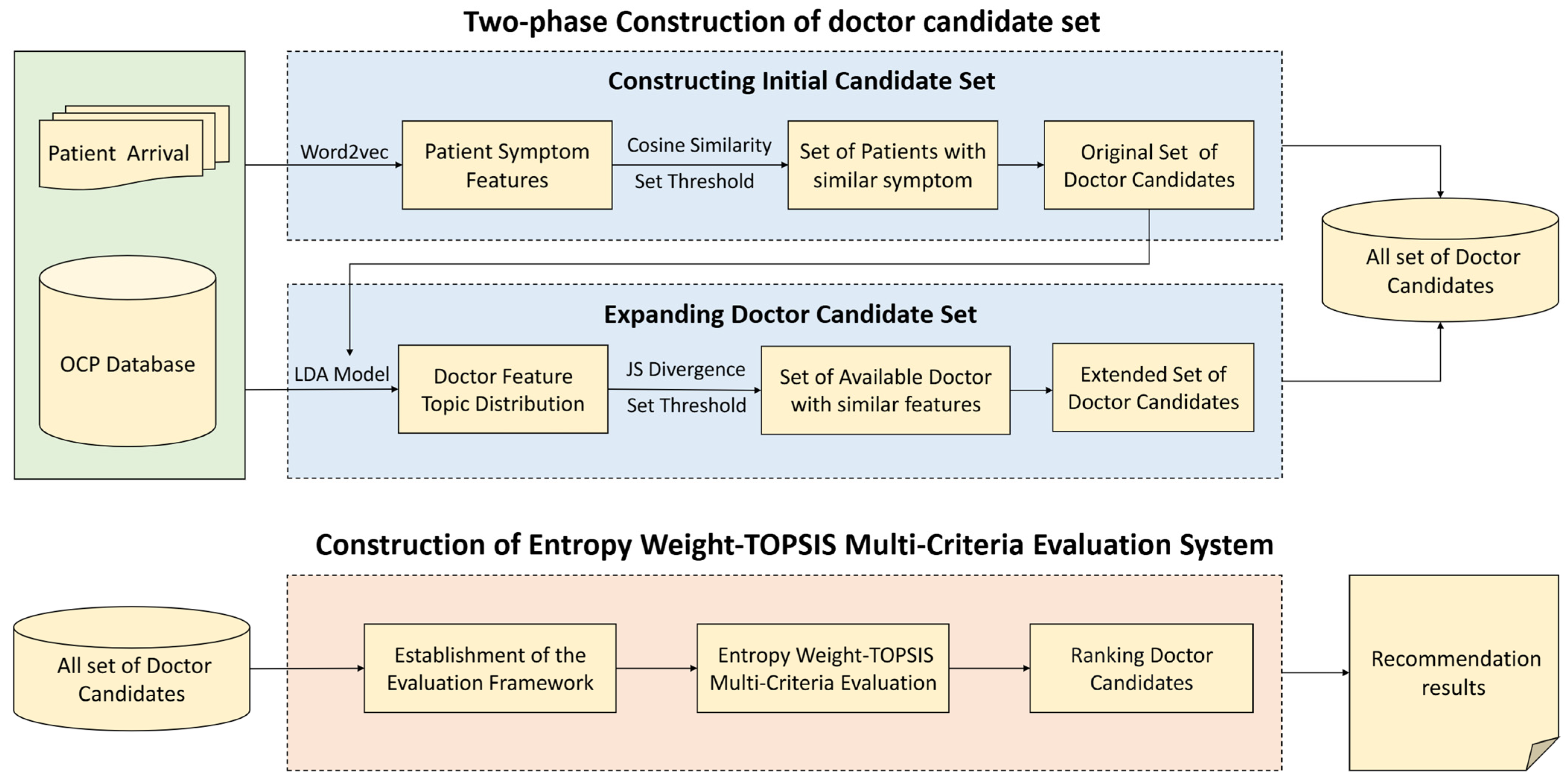

As illustrated in Figure 1, the general procedure of the proposed doctor recommendation model is as follows:

- (1) First, identify patients with similar consultation requirements by matching the consultation text recorded on the OCP with that of the target patient, thereby generating an initial set of doctor candidates.

- (2) Next, apply the LDA topic model to extract topic scores for each doctor based on their professional profiles.

- (3) Then, compute the Jensen–Shannon (JS) divergence between the topic distributions of all registered doctors and those in the initial candidate set, expanding the candidate pool to include physicians with a similar area of expertise.

- (4) Finally, assess the alignment between the target patient’s requirements and the profiles of doctors from the expanded candidate set using the entropy weight-TOPSIS multi-criteria evaluation model. Candidates are then ranked in descending order of recommendation priority, facilitating the patient’s final decision.

Subsequently, Section 4.2 and Section 4.3 will detail the technical specifics of constructing the physician candidate pool and implementing the multi-criteria evaluation model for physician recommendation priorities.

4.2. Two-Phase Construction of Doctor Candidate Set

4.2.1. Constructing Initial Candidate Set

The initial set of doctors is constructed by identifying doctors who have previously provided consultations to patients with needs similar to those of the target patient. The process for constructing the initial set of candidates is as follows:

First, a synonym dictionary, a domain-specific feature-word dictionary, and a stopword list are employed to standardize the consultation text provided by the target patient. This preprocessing step enhances the semantic representation of the text by enabling more accurate recognition and categorization of professional terms. It also reduces the dimensionality of the feature space, thereby improving data-processing efficiency and predictive accuracy, as well as strengthening the robustness of subsequent decision-making processes.

Second, one million medical Q&A texts collected from online resources are used to pre-train the Word2Vec model, a shallow neural network technique introduced by researchers at Google in 2013 for generating word embeddings [43]. For each disease keyword, its corresponding word embedding is obtained from the pre-trained model, after which a sentence-level vector representation is constructed for the consultation text submitted by the target patient.
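The construction of a sentence-level vector and the cosine comparison used in the subsequent step can be illustrated with a minimal sketch. It assumes, purely for illustration, that the sentence vector is the mean of the keyword embeddings (the aggregation rule is not specified here); the toy embeddings, the doctor identifiers, the threshold value, and the function names `sentence_vector`, `cosine_similarity`, and `initial_candidates` are all hypothetical.

```python
import numpy as np

def sentence_vector(tokens, embeddings, dim):
    """Average the embeddings of known keywords (assumed aggregation rule)."""
    vecs = [embeddings[t] for t in tokens if t in embeddings]
    return np.mean(vecs, axis=0) if vecs else np.zeros(dim)

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

def initial_candidates(target_vec, history, threshold):
    """Return doctors who treated a past patient whose text is similar enough.

    history: iterable of (patient_vector, doctor_id) pairs.
    """
    return {doc for vec, doc in history
            if cosine_similarity(target_vec, vec) > threshold}

# Toy 2-dimensional embeddings (hypothetical values, not from a trained model).
emb = {
    "cough": np.array([1.0, 0.0]),
    "fever": np.array([0.0, 1.0]),
    "nodule": np.array([-1.0, 0.0]),
}
target = sentence_vector(["cough", "fever"], emb, dim=2)
history = [
    (sentence_vector(["cough"], emb, 2), "doctor_A"),
    (sentence_vector(["nodule"], emb, 2), "doctor_B"),
    (sentence_vector(["fever", "cough"], emb, 2), "doctor_C"),
]
candidates = initial_candidates(target, history, threshold=0.7)
```

In this toy example only the doctors whose past patients described overlapping symptoms enter the candidate set; in practice the threshold would need to be tuned on platform data.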

Finally, the similarity between the text vectors obtained in the previous steps is evaluated using cosine similarity, $\mathrm{sim}(T_i, T_j)$, as calculated in Equation (1). Doctors who have previously treated patients whose consultation texts reach a similarity score above a predefined threshold relative to that of the target patient are selected as candidate doctors.

$$\mathrm{sim}(T_i, T_j) = \frac{T_i \cdot T_j}{\lVert T_i \rVert \, \lVert T_j \rVert} \tag{1}$$

where $T_i$ represents the consultation text of the target patient $i$, and $T_j$ denotes the consultation text of another patient $j$.

4.2.2. Expanding Doctor Candidate Set

The candidate set obtained through the process presented in Section 4.2.1 is expanded by including additional doctors who possess similar medical specialties.

First, data associated with other physicians eligible to provide consultations for the target patient are retrieved from the OCP. These data are then used to train the LDA topic model [45]. LDA is selected over newer methods such as BERTopic primarily because of its transparent probabilistic framework [44]. This approach facilitates the extraction of relevant disease-related topics and generates topic-word distributions for each topic [46].

Then, the JS divergence is employed to measure the similarity between doctors’ topic distributions [47]. The JS divergence is a symmetric variant of the KL divergence that, with base-2 logarithms, yields values within the range [0, 1], where smaller values indicate greater similarity between two topic distributions. The formula for calculating the JS divergence between two distributions $P$ and $Q$ is provided in Equation (2):

$$JS(P \,\|\, Q) = \frac{1}{2} KL(P \,\|\, M) + \frac{1}{2} KL(Q \,\|\, M), \quad M = \frac{1}{2}(P + Q) \tag{2}$$

To facilitate the calculation of similarity, the JS divergence is transformed into a similarity metric, with values also ranging over [0, 1], as shown in Equation (3):

$$\mathrm{Sim}(P, Q) = 1 - JS(P \,\|\, Q) \tag{3}$$

In this converted scale, higher values (closer to 1) indicate greater similarity.

Finally, the initial set of doctors, derived from the similar patient-based doctor recommendation algorithm, is extended. Similarity scores between each doctor’s topic distribution and those of others are computed. Doctors with similarity scores exceeding a predefined threshold are then included to construct the final extended doctor recommendation set.
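The expansion step can be sketched as follows. The sketch assumes base-2 logarithms (so that the JS divergence lies in [0, 1]), a similarity defined as one minus the divergence, and a rule that admits a doctor when the similarity to at least one initial candidate exceeds the threshold. The topic distributions, doctor identifiers, threshold, and function names are hypothetical.

```python
import numpy as np

def js_divergence(p, q):
    """Jensen-Shannon divergence with base-2 logs, bounded in [0, 1]."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p, q = p / p.sum(), q / q.sum()
    m = 0.5 * (p + q)

    def kl(a, b):
        mask = a > 0  # terms with a == 0 contribute nothing
        return float(np.sum(a[mask] * np.log2(a[mask] / b[mask])))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def topic_similarity(p, q):
    """Map the divergence to a similarity in [0, 1]; 1 means identical."""
    return 1.0 - js_divergence(p, q)

def expand_candidates(initial, topic_dist, threshold):
    """Add doctors whose topic profile is close to any initial candidate."""
    expanded = set(initial)
    for doc, dist in topic_dist.items():
        if doc in expanded:
            continue
        if any(topic_similarity(dist, topic_dist[seed]) > threshold
               for seed in initial):
            expanded.add(doc)
    return expanded

# Hypothetical doctor-topic distributions over three topics.
topics = {
    "doctor_A": [0.8, 0.1, 0.1],
    "doctor_D": [0.7, 0.2, 0.1],  # profile close to doctor_A
    "doctor_E": [0.0, 0.0, 1.0],  # different specialty
}
expanded = expand_candidates({"doctor_A"}, topics, threshold=0.9)
```

Because the comparison uses only profile text, a doctor without any consultation history can still enter the candidate pool, which is what makes this step useful for cold-start cases.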

4.3. Construction of Entropy Weight-TOPSIS Multi-Criteria Evaluation System

4.3.1. Establishment of the Evaluation Framework

Based on the operational characteristics of online healthcare platforms and a synthesis of existing literature on patients’ physician selection behavior, this study proposes a comprehensive physician evaluation system, as systematically detailed in Table 3. This integrated framework ensures both scientific rigor and practical utility in physician recommendation mechanisms.

- (1) Symptom-Physician Matching: This dimension utilizes advanced natural language processing techniques to computationally quantify the semantic alignment between patients’ symptomatic descriptions and physicians’ specialized expertise. By ensuring precise matching of medical conditions with relevant specialist knowledge, it establishes a foundational layer for clinically appropriate recommendations.

- (2) Clinical Expertise Qualification: Through objective assessment of medical qualifications including institutional accreditation levels, professional certification ranks, and scholarly research output, this dimension establishes a credibility baseline that reflects the hierarchical authority structure inherent in medical practice while ensuring clinical competence.

- (3) Patient-Centric Preference Integration: Incorporating non-clinical selection factors including service pricing sensitivity, patient-generated satisfaction indicators (e.g., virtual gifting), and consultation availability metrics, this dimension acknowledges the multidimensional nature of patient decision-making beyond purely medical considerations.

- (4) Equity-Driven Resource Allocation: Implementing algorithmic safeguards including newcomer promotion mechanisms and exposure balancing protocols, this dimension demonstrates potential to mitigate resource concentration among established physicians while promoting equitable distribution of patient access opportunities, thereby addressing systemic fairness requirements in healthcare delivery.

This integrated framework represents a significant advancement beyond conventional recommendation mechanisms that prioritize matching accuracy alone. By simultaneously addressing medical relevance, professional credibility, individual preference, and equity considerations, it establishes a new standard for ethically balanced and operationally effective physician recommendation mechanisms in online healthcare environments.

The data for the evaluation index system were sourced from the Haodf platform. Categorical variables, including virtual gifts received and academic achievements, underwent preprocessing that involved the removal of outliers and median imputation. For the other indicators (symptom matching degree, similar patient consultation volume, price competitiveness, patient consultation density, support for new physicians, and exposure fairness), specific values were computed based on the collected platform data.

First, the study employed the Word2Vec model and cosine similarity to compute the symptom matching degree between patients and physicians. A higher matching score indicates a stronger patient preference for selecting that physician. By setting a symptom matching threshold, the number of historically similar patients was counted—a greater number of similar past patients suggests richer experience in managing comparable conditions.

Second, the study constructed a price competitiveness indicator based on physicians’ consultation fees. This metric reflects the market attractiveness of a physician’s pricing relative to the average level of peers with the same professional title, calculated using Equation (4), where $p_i$ denotes the online consultation price of physician $i$ and $\bar{p}_i$ represents the mean consultation price of all physicians sharing the same title as physician $i$.

Patient service density is a key metric for measuring the balance between physicians’ service load and clinical experience. This study calculates physicians’ patient service density using their registration duration and total patient consultation volume, as shown in Equation (5):

$$SD_i = \frac{n_i}{y_i} \tag{5}$$

where $n_i$ represents the cumulative number of patients treated by physician $i$, and $y_i$ denotes physician $i$’s registration years.

Finally, to prevent top-performing physicians from monopolizing traffic and promote balanced allocation of medical resources, this study innovatively incorporates exposure fairness and new physician support indicators, ensuring reasonable exposure opportunities for newly registered physicians and primary healthcare workers, thereby improving overall healthcare service accessibility.

The exposure fairness indicator is a core mechanism for mitigating “popularity bias.” It applies inverse normalization to physicians’ daily visit counts, so that visit count, which would otherwise act as a cost criterion, enters the TOPSIS model as a benefit-type criterion. This means physicians with excessively high exposure are penalized, as their high visit counts are transformed into low scores on this criterion. The calculation formula is shown in Equation (6):

$$EF_j = \frac{\max(V) - v_j}{\max(V) - \min(V)} \tag{6}$$

where $v_j$ represents physician $j$’s daily visit count, and $V$ denotes the visit vector for all physicians.

The new doctor support indicator is designed to counteract the bias against new physicians by assigning them a higher weight. It applies inverse normalization to physicians’ registration years to create a benefit criterion in TOPSIS. A newer physician will have a higher score on this criterion, directly boosting their overall ranking. The formula is shown in Equation (7):

$$NS_j = \frac{\max(Y) - y_j}{\max(Y) - \min(Y)} \tag{7}$$

where $y_j$ represents physician $j$’s registration years, and $Y$ denotes the registration years of all physicians.
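The derived indicators of this subsection can be sketched in a few lines. The sketch assumes that “inverse normalization” means inverse min-max scaling and that service density is the cumulative patient count divided by registration years. The price competitiveness form used here, (peer mean price minus own price) divided by peer mean price, is only one plausible reading of the description and is an assumption, as are all function names and sample values.

```python
import numpy as np

def inverse_min_max(values):
    """Inverse min-max scaling: the smallest raw value maps to 1, the largest to 0."""
    v = np.asarray(values, dtype=float)
    span = v.max() - v.min()
    if span == 0:
        return np.ones_like(v)  # all physicians identical on this criterion
    return (v.max() - v) / span

def service_density(total_patients, registration_years):
    """Cumulative patients per registration year (years assumed > 0)."""
    return (np.asarray(total_patients, dtype=float)
            / np.asarray(registration_years, dtype=float))

def price_competitiveness(prices, titles):
    """ASSUMED form: relative discount against same-title peers' mean price."""
    prices = np.asarray(prices, dtype=float)
    titles = np.asarray(titles)
    out = np.empty_like(prices)
    for t in np.unique(titles):
        mask = titles == t
        mean_price = prices[mask].mean()  # assumed > 0
        out[mask] = (mean_price - prices[mask]) / mean_price
    return out

# Hypothetical values for three physicians.
exposure_fairness = inverse_min_max([100, 50, 0])       # daily visit counts
new_doctor_support = inverse_min_max([10.0, 5.0, 0.5])  # registration years
density = service_density([1000, 200, 30], [10.0, 5.0, 0.5])
price_score = price_competitiveness([100, 50, 30],
                                    ["chief", "chief", "attending"])
```

With these definitions every derived indicator is oriented so that a larger value is more desirable, which matches the benefit-type treatment used in the subsequent TOPSIS evaluation.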

4.3.2. Entropy Weight-TOPSIS Multi-Criteria Evaluation

The Technique for Order Preference by Similarity to an Ideal Solution (TOPSIS) is a multi-criteria decision-making (MCDM) method developed by Hwang and Yoon in 1981 [49]. Its core principle is to rank alternatives based on their relative proximity to an ideal solution. Specifically, TOPSIS identifies the optimal alternative as the one that is closest to the positive ideal solution (PIS), which maximizes all beneficial criteria and minimizes all cost criteria, and farthest from the negative ideal solution (NIS), which minimizes all beneficial criteria and maximizes all cost criteria. This method is widely recognized for its ability to provide a clear and intuitive ranking of alternatives by leveraging raw data and effectively handling multiple indicators, and has been extensively applied in various fields including healthcare management and supply chain optimization.

The TOPSIS method is thus well suited to decision-making problems involving multiple indicators and alternatives [50]. In this study, the overall evaluation result is derived from the integration of multiple key factors influencing a patient’s choice of doctor, including hospital level, professional title, gift value, total publications, symptom similarity, price competitiveness, service density, exposure fairness, new doctor support, and similar patient count [51].

This study utilizes the entropy-weighted TOPSIS framework to calculate a comprehensive “Physician-Patient Need Matching Degree,” which quantifies the overall alignment between physician capabilities and patient needs. This integrated metric synthesizes four dimensions: medical relevance, professional credibility, individual preference, and equity considerations. As detailed in Table 3, all evaluation indicators were normalized and converted into benefit-type criteria, where higher values indicate more desirable outcomes.

A key innovation is the introduction of two equity-focused indicators: “Exposure Fairness” suppresses the over-exposure of popular physicians by inversely normalizing visit counts, while “New Doctor Support” prioritizes recent registrants through inverse normalization of registration years. Both function as benefit criteria within the TOPSIS model, ensuring that recommendation outcomes balance precision with equitable resource allocation.

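The computational process begins with constructing the data matrix $X = (x_{ij})_{n \times m}$ using preprocessed feature variables, where the $i$th row represents the $i$th doctor and the $j$th column represents the $j$th feature variable. The data normalization is shown in Equation (8):

$$x'_{ij} = \frac{x_{ij} - \min_i x_{ij}}{\max_i x_{ij} - \min_i x_{ij}} \tag{8}$$

For each normalized value $x'_{ij}$, the corresponding scale value $p_{ij}$ was computed in Equation (9):

$$p_{ij} = \frac{x'_{ij}}{\sum_{i=1}^{n} x'_{ij}} \tag{9}$$

The entropy value $e_j$ and the corresponding entropy weight $w_j$ were calculated in Equations (10) and (11):

$$e_j = -k \sum_{i=1}^{n} p_{ij} \ln\left(p_{ij} + \varepsilon\right) \tag{10}$$

$$w_j = \frac{1 - e_j}{\sum_{l=1}^{m} \left(1 - e_l\right)} \tag{11}$$

where $k = 1/\ln n$, $\varepsilon$ is a very small constant introduced to avoid the log-zero problem and $m$ denotes the total number of features.

The formulas for the positive ideal solutions $Z^{+}$ and negative ideal solutions $Z^{-}$ are shown in Equations (12) and (13):

$$Z^{+} = \left(\max_i x'_{i1}, \max_i x'_{i2}, \ldots, \max_i x'_{im}\right) \tag{12}$$

$$Z^{-} = \left(\min_i x'_{i1}, \min_i x'_{i2}, \ldots, \min_i x'_{im}\right) \tag{13}$$

The distance from the doctor $i$ to the positive ideal solution $D_i^{+}$ and the distance from the doctor to the negative ideal solution $D_i^{-}$ are calculated in Equations (14)–(16), which are then utilized to compute the TOPSIS score $C_i$ for doctor $i$:

$$D_i^{+} = \sqrt{\sum_{j=1}^{m} w_j \left(Z_j^{+} - x'_{ij}\right)^2} \tag{14}$$

$$D_i^{-} = \sqrt{\sum_{j=1}^{m} w_j \left(x'_{ij} - Z_j^{-}\right)^2} \tag{15}$$

$$C_i = \frac{D_i^{-}}{D_i^{+} + D_i^{-}} \tag{16}$$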

The model outputs a relative closeness score between 0 and 1 for each physician, representing their comprehensive “Physician-Patient Need Matching Degree.” A higher score indicates superior performance across meeting specific medical needs, possessing appropriate qualifications, aligning with patient preferences, and promoting systemic fairness, thereby establishing a new standard that balances ethical considerations with operational effectiveness.
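A compact sketch of an entropy weight-TOPSIS computation is given below. It assumes that all criteria are already benefit-type, that min-max scaling is used for normalization, and that the entropy weights enter the Euclidean distances to the ideal solutions; other common variants weight the normalized matrix before locating the ideal solutions. The function name `entropy_topsis` and the sample matrix are hypothetical.

```python
import numpy as np

def entropy_topsis(X, eps=1e-12):
    """Return (closeness scores, entropy weights) for a doctors x criteria matrix.

    Assumes benefit-type criteria, at least two doctors, and at least one
    criterion that actually varies across doctors.
    """
    X = np.asarray(X, dtype=float)
    n, m = X.shape

    # Min-max normalization per criterion (constant columns become all zeros).
    span = X.max(axis=0) - X.min(axis=0)
    Z = (X - X.min(axis=0)) / np.where(span > 0, span, 1.0)

    # Column-wise proportions; a constant column is treated as uniform,
    # so it carries maximal entropy and (near) zero weight.
    col_sum = Z.sum(axis=0)
    P = np.where(col_sum > 0, Z / np.where(col_sum > 0, col_sum, 1.0), 1.0 / n)

    # Entropy per criterion and the resulting weights.
    k = 1.0 / np.log(n)
    e = -k * np.sum(P * np.log(P + eps), axis=0)
    d = 1.0 - e
    w = d / d.sum()

    # Ideal solutions and weighted distances.
    z_pos, z_neg = Z.max(axis=0), Z.min(axis=0)
    d_pos = np.sqrt(np.sum(w * (z_pos - Z) ** 2, axis=1))
    d_neg = np.sqrt(np.sum(w * (Z - z_neg) ** 2, axis=1))
    return d_neg / (d_pos + d_neg), w

# Hypothetical matrix: 3 doctors x 3 benefit-type criteria.
X = [[3.0, 0.9, 0.8],
     [2.0, 0.5, 0.5],
     [1.0, 0.1, 0.2]]
scores, weights = entropy_topsis(X)
ranking = np.argsort(-scores)  # doctor indices, best first
```

In this toy matrix the first doctor dominates on every criterion and therefore coincides with the positive ideal solution, while the last doctor coincides with the negative ideal solution.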

5. Experimental Results

5.1. Data Preparation and Parameter Setting

Numerical experiments were conducted using data from 567 doctors and 8082 patients in the “Respiratory and Critical Care Medicine” submodule of the Haodf OCP, recorded prior to 27 March 2024. The dataset comprised patient-doctor conversation records, patient-provided doctor ratings, and information on doctor specialties.

Data extraction from the targeted OCP was performed using the Beautiful Soup 4 library within the Python 3.8 environment. Invalid data removed from the experimental dataset include: (1) nonsensical or duplicate entries, and (2) records of independent doctors who provided insufficient information and had not launched online consultation services.

For text representation learning, this study utilized a specialized medical corpus containing 1.6 million medical consultation and symptom texts captured from the Haodf platform to pre-train the Word2Vec model following the data processing framework proposed in [52]. Before training, all medical texts were segmented using the Jieba Chinese text segmentation tool, combined with a custom medical terminology dictionary to improve segmentation accuracy. The model was trained with the Continuous Bag of Words (CBOW) architecture implemented in the Python Gensim library, with the following parameter configuration: vector dimension = 300, context window size = 5, minimum word count = 2, negative sampling size = 10.

To validate the accuracy of the trained Word2Vec model, following the methodology of Zhou et al. [31], five representative medical terms (“cough”, “pneumonia”, “chest tightness”, “fever”, and “nodule”) were selected for similarity evaluation. As shown in Table 4, the resulting word vectors demonstrated high-quality characteristics. For instance, the term “pneumonia” showed strong associations with terms like “viral pneumonia”, “lung infection”, and “white lung”, with similarity results aligning well with established medical knowledge, thereby providing a reliable foundation for subsequent analyses.

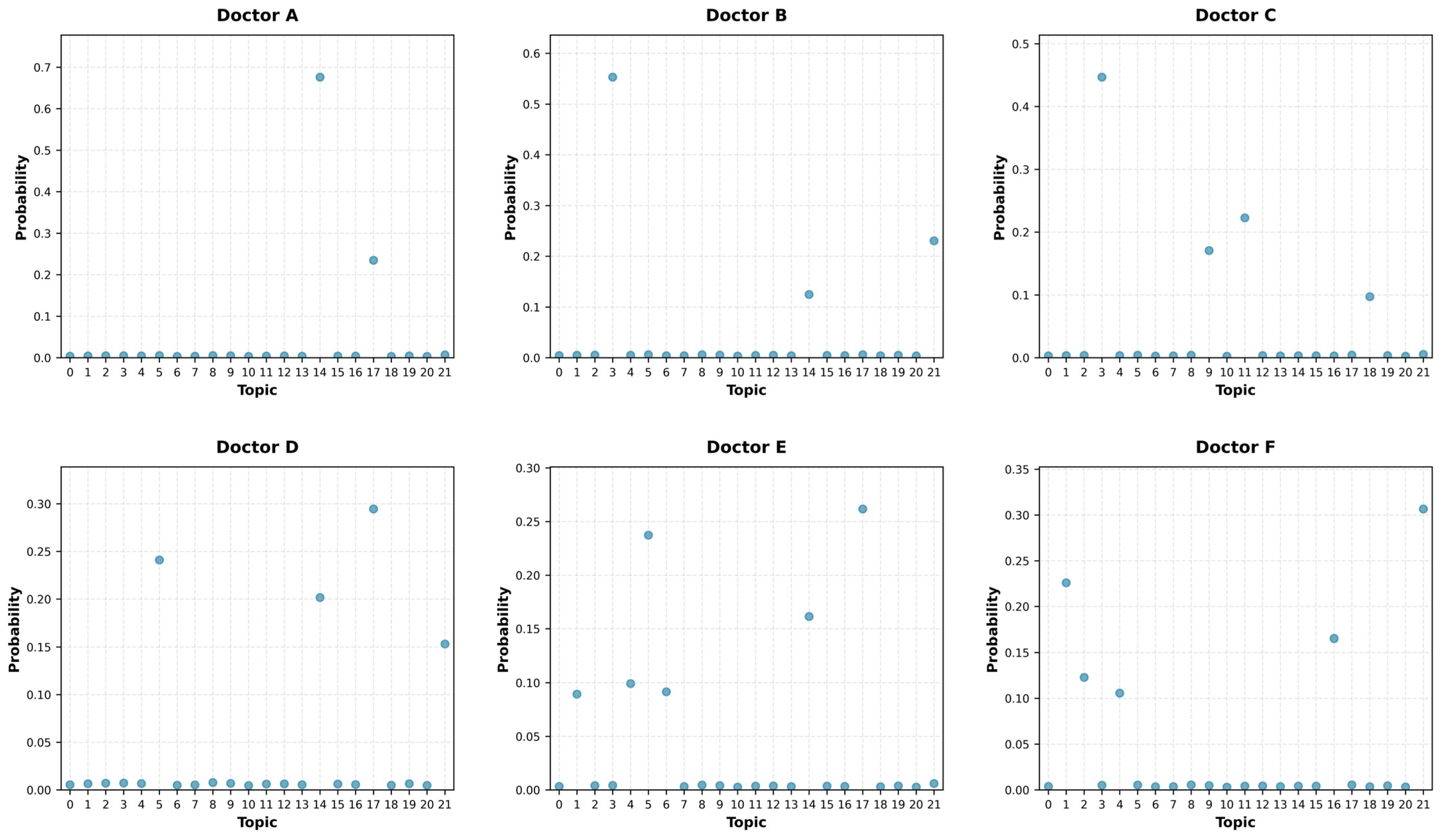

To address the physician cold-start problem, this study further introduced an LDA topic-based physician recommendation algorithm, which incorporates new physicians lacking historical consultation records into the candidate recommendation pool. Given that the respiratory medicine experimental data covers approximately 22 distinct disease categories, the number of topics (k) in the LDA model was correspondingly set to 22. The model was configured with asymmetric prior parameters (α = 1.0, β = 0.01) to reflect the varied topic distributions across medical specialties. After iterative training, we obtained the topic-word distributions for these 22 topics and the document-topic distribution for each doctor, as illustrated in Figure 2.

This study established a comprehensive multi-dimensional feature system incorporating ten clinically relevant evaluation metrics: hospital level, professional title, gift value, total publications, symptom similarity, price competitiveness, service density, exposure fairness, new doctor support, and similar patient count. The preprocessed feature variable data for representative physicians are detailed in Table 5, demonstrating the quantitative foundation for subsequent recommendation algorithms.

Based on the integrated framework comprising the trained Word2Vec model, LDA topic distributions, and comprehensive interaction data between 567 physicians and 8082 patients, this study conducted systematic numerical experiments to compare different text representation models and physician recommendation mechanisms. These experiments ultimately facilitated the development of an optimized lightweight recommendation algorithm specifically designed for OCPs.

5.2. Evaluation Metrics for Recommendation Results

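Unlike typical recommendation scenarios, where a user may be recommended multiple items, each patient in this study selects only one doctor, even though several suitable options may exist. Therefore, HR@K and NDCG@K are adopted to evaluate the effectiveness of the proposed recommendation algorithm [41,53,54]. The two metrics are defined in Equations (17)–(20):

$$\mathrm{HR@}K = \frac{1}{|U|} \sum_{u \in U} \mathbb{I}\left(d_u \in R_u^K\right) \tag{17}$$

where $U$ denotes the set of all patients in the test set and the indicator function $\mathbb{I}(\cdot)$ determines whether the physician $d_u$ consulted by patient $u$ appears in the Top-K recommendation list $R_u^K$. The primary objective is to evaluate whether the appropriate physician is included in the recommendation list.

$$\mathrm{DCG@}K = \sum_{i=1}^{K} \frac{rel_i}{\log_2(i+1)} \tag{18}$$

$$\mathrm{IDCG@}K = \sum_{i=1}^{K} \frac{rel_i^{*}}{\log_2(i+1)} \tag{19}$$

$$\mathrm{NDCG@}K = \frac{\mathrm{DCG@}K}{\mathrm{IDCG@}K} \tag{20}$$

where $rel_i \in \{0, 1\}$ indicates whether the doctor at position $i$ in the recommendation list is the correct person. $\mathrm{DCG@}K$ denotes the discounted cumulative gain of the relevance scores of all doctors in the recommendation list, $\mathrm{IDCG@}K$ represents the ideal (maximum) value of $\mathrm{DCG@}K$, obtained from the relevance values $rel_i^{*}$ under the ideal ordering, and $\mathrm{NDCG@}K$ measures the ranking quality of the recommendation list.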
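To make the two accuracy metrics concrete, the following is a minimal sketch of HR@K and NDCG@K for the single-target setting described above, where each patient has exactly one consulted physician. It assumes the standard binary-relevance form, in which the ideal DCG equals 1 because the best case places the correct physician first. The function names and the toy patient and physician ids are illustrative assumptions.

```python
import math

def hit_at_k(ranked, target, k):
    """1.0 if the consulted physician appears in the top-K list, else 0.0."""
    return 1.0 if target in ranked[:k] else 0.0

def ndcg_at_k(ranked, target, k):
    """NDCG@K with one relevant item: DCG = 1/log2(pos + 1), IDCG = 1."""
    for position, physician in enumerate(ranked[:k], start=1):
        if physician == target:
            return 1.0 / math.log2(position + 1)
    return 0.0

def evaluate(recommendations, ground_truth, k):
    """Average HR@K and NDCG@K over all test patients."""
    patients = list(ground_truth)
    hr = sum(hit_at_k(recommendations[p], ground_truth[p], k) for p in patients) / len(patients)
    ndcg = sum(ndcg_at_k(recommendations[p], ground_truth[p], k) for p in patients) / len(patients)
    return hr, ndcg

# Hypothetical ranked lists for three test patients and the physician each consulted.
recommendations = {
    "p1": ["d3", "d1", "d2"],  # correct physician at position 2
    "p2": ["d2", "d4", "d5"],  # correct physician at position 1
    "p3": ["d9", "d8", "d7"],  # correct physician missing
}
ground_truth = {"p1": "d1", "p2": "d2", "p3": "d1"}

hr, ndcg = evaluate(recommendations, ground_truth, k=3)
print(f"HR@3 = {hr:.3f}, NDCG@3 = {ndcg:.3f}")
```

HR@K only records whether the correct physician is present, whereas NDCG@K also rewards placing that physician nearer the top of the list.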

Additionally, this study constructs two recommendation fairness metrics, the Long-Tail Physician Ratio (LT@K) and the New Physician Ratio (ND@K), to evaluate the fairness performance of the recommendation system, as expressed in Equations (21) and (22). Long-tail physicians are defined as those whose daily visit count falls below the 20th percentile of the platform. The study measures the proportion of low-exposure physicians in the recommendation results by calculating the ratio of long-tail physicians within the complete physician set.

The New Physician Ratio calculates the proportion of physicians with less than one year of online consultation experience in the recommendation results. This indicator assesses how effectively the system supports emerging medical practitioners.
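The two fairness ratios can be illustrated with a short sketch. It assumes each ratio is the share of top-K slots occupied by the relevant physician group, averaged over test patients, and that the 20th percentile is taken by the nearest-rank method over all physicians' daily visit counts. Both choices are assumptions made for illustration, since the exact form of Equations (21) and (22) is not reproduced here. All ids and numbers are hypothetical.

```python
def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile for an integer pct in (0, 100]."""
    ordered = sorted(values)
    # ceil(pct * n / 100) computed with integer arithmetic
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]

def group_ratio_at_k(recommendations, group, k):
    """Mean share of the top-K slots filled by physicians belonging to `group`."""
    shares = [
        sum(1 for physician in ranked[:k] if physician in group) / k
        for ranked in recommendations.values()
    ]
    return sum(shares) / len(shares)

# Hypothetical platform statistics.
daily_visits = {"d1": 120, "d2": 80, "d3": 5, "d4": 40, "d5": 2,
                "d6": 60, "d7": 15, "d8": 200, "d9": 30, "d10": 9}
years_online = {"d1": 5, "d2": 3, "d3": 0.5, "d4": 2, "d5": 0.2,
                "d6": 4, "d7": 0.8, "d8": 6, "d9": 1.5, "d10": 2.5}

threshold = percentile_nearest_rank(daily_visits.values(), 20)
long_tail = {d for d, visits in daily_visits.items() if visits < threshold}
new_physicians = {d for d, years in years_online.items() if years < 1}

recommendations = {
    "p1": ["d1", "d5", "d3", "d8"],
    "p2": ["d2", "d4", "d7", "d6"],
}
lt_at_4 = group_ratio_at_k(recommendations, long_tail, k=4)
nd_at_4 = group_ratio_at_k(recommendations, new_physicians, k=4)
print(f"LT@4 = {lt_at_4:.3f}, ND@4 = {nd_at_4:.3f}")
```

Under these assumptions, a higher LT@K or ND@K means that low-exposure or newly registered physicians occupy a larger share of the recommended positions.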

5.3. Comparative Analysis and Selection of Text Representation Models

To construct a lightweight recommendation mechanism and identify the optimal text representation model, this study conducted systematic comparative experiments using a dataset comprising 8082 respiratory consultation records from 567 physicians. The experiment designated 100 patients as the test set, while the historical consultation records of the remaining 7982 patients served as the candidate pool, simulating a real-world recommendation scenario. The evaluation focused on two core dimensions: recommendation performance and computational efficiency. First, each text representation model converted the consultation texts of both test patients and historical patients into vector representations. Subsequently, textual similarity between each test patient and all historical patients was calculated, and their associated physicians were ranked based on these similarity scores. Finally, HR@K (Hit Rate) and NDCG@K (Normalized Discounted Cumulative Gain) metrics were employed to evaluate the accuracy and ranking quality of the top-K recommendations (K = 5, 10, 15, 20, 25, 30), while the total runtime for completing the entire matching task was strictly recorded to quantify the lightweight characteristics of the models.

Three representative text representation models were employed to assess their efficacy in capturing features relevant to respiratory medicine for physician recommendation tasks. The detailed parameter configurations and complexity analysis of each model are presented in Table 6.

Word2Vec: A classical static word embedding model that learns local contextual features through Continuous Bag-of-Words (CBOW) architecture [43]. Its lightweight characteristics and simple architecture provide an essential baseline for subsequent research. The model was specifically trained using respiratory medicine consultation texts collected from the Haodf platform. With theoretical computational complexity of O(L), it achieves high efficiency through simple word vector lookups and averaging operations.

DistilBioBERT: A biomedical domain pre-trained model derived from BERT architecture via knowledge distillation. While retaining approximately 93% of the original BioBERT performance [55], it significantly improves computational efficiency. However, its Transformer architecture still maintains O(L²·d) complexity due to the self-attention mechanism.

all-MiniLM-L6-v2: A general-domain efficient sentence embedding model that demonstrates strong performance in semantic similarity tasks [56]. Its balance of performance and efficiency makes it suitable for clinical deployment. Despite being a distilled model, it retains the O(L²·d) complexity characteristic of Transformer architectures.

In the experimental design, each text representation model was first used to convert the target patients’ consultation content and the historical medical records into vector representations. Cosine similarity was then computed to quantify symptom similarity, and the associated physicians were ranked by similarity score for recommendation. To quantitatively verify the “lightweight” feature, we additionally measured the time spent on text vectorization and on similarity calculation and ranking, recording the total time each model takes to process the data for 100 patients.
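The vectorize, compare, and rank procedure can be sketched as follows for the Word2Vec case, where a text vector is the average of its word vectors. The 2-dimensional toy embeddings, the tokens, and the rule that a physician is scored by their best-matching historical case are assumptions for illustration. In the study the embeddings come from the trained Word2Vec model, and the aggregation rule may differ.

```python
import math

def mean_vector(tokens, embeddings):
    """Average the vectors of in-vocabulary tokens; None if no token is known."""
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    if not vectors:
        return None
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def cosine(a, b):
    """Cosine similarity; returns 0.0 when either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def rank_physicians(query_tokens, history, embeddings):
    """Rank physicians by their most similar historical case (assumed rule)."""
    query = mean_vector(query_tokens, embeddings)
    best = {}
    if query is None:
        return []
    for physician, tokens in history:
        case = mean_vector(tokens, embeddings)
        if case is None:
            continue
        score = cosine(query, case)
        best[physician] = max(best.get(physician, -1.0), score)
    return sorted(best, key=best.get, reverse=True)

# Hypothetical toy embeddings and historical consultation records.
embeddings = {
    "cough": [1.0, 0.1], "phlegm": [0.9, 0.2],
    "asthma": [0.1, 1.0], "wheeze": [0.2, 0.9],
}
history = [
    ("doc_a", ["cough", "phlegm"]),
    ("doc_b", ["asthma", "wheeze"]),
    ("doc_a", ["wheeze"]),
]
ranking = rank_physicians(["cough"], history, embeddings)
print(ranking)
```

Because each text vector needs only lookups and one average, the cost grows linearly with text length, which is the O(L) behavior attributed to Word2Vec above.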

As shown in Table 7, the Word2Vec model specifically trained on medical consultation texts from the Haodf platform significantly outperformed comparative models across both HR@K and NDCG@K metrics, while demonstrating superior computational efficiency.

The efficiency analysis confirms that the theoretical complexity gap has a practical impact: Word2Vec’s inference is approximately 5.6 times faster than all-MiniLM-L6-v2 and 18 times faster than DistilBioBERT. This difference stems from the architectures themselves: Word2Vec completes text representation through simple word vector lookups and averaging operations, avoiding the expensive self-attention computations and inter-layer forward propagation of Transformer models.

Therefore, this study adopted the specialty-customized Word2Vec model to extract patient symptom features from historical medical visit data and to construct the initial doctor candidate pool. The choice rests on four technical considerations: the best computational efficiency among the compared models, the strongest recommendation performance, balanced resource consumption, and high feasibility of deployment. Together these make it a suitable basis for a lightweight recommendation mechanism.

5.4. Comparison Among Recommendation Schemes

This study conducted a systematic comparison of multiple algorithmic combinations within a unified experimental framework for the proposed physician recommendation system, aiming to identify the optimal approach by comprehensively evaluating both recommendation accuracy and resource allocation fairness. The experiment utilized the same dataset as described in Section 5.3, containing 8082 respiratory department consultation records completed by 567 physicians. In multiple experimental rounds, 100 patients were randomly selected as the test set, while the historical consultation records of the remaining 7982 patients served as the candidate pool, effectively simulating real-world recommendation scenarios for algorithm evaluation. All algorithms were trained and tested on identical data partitions and evaluated using four core metrics: HR@K (Hit Rate), NDCG@K (Normalized Discounted Cumulative Gain), LT@K (Long Tail Physician Ratio), and ND@K (New Doctor Ratio). The recommendation algorithms included in the comparative analysis are:

- (1) Recommendation Algorithm W2V: A content-based recommendation method utilizing the Word2Vec model. This approach employs Word2Vec-generated word vectors and cosine similarity to compute the similarity between new patients’ consultation texts and historical medical records, ranking physicians based on symptom similarity.

- (2) Recommendation Algorithm W2V-Threshold: Building upon the Word2Vec-based recommendation algorithm, this approach introduces a similarity threshold of 0.7 and retains only the physician-patient pairs whose similarity exceeds this value. Physicians are then ranked according to the number of qualified similar cases they have handled [48].

- (3) Recommendation Algorithm W2V-LDA: A hybrid physician recommendation algorithm that integrates patient-physician similarity and physician domain specialization. First, Word2Vec and cosine similarity are used to calculate the similarity between new patients and historical patients. Physicians linked to cases surpassing the predefined similarity threshold of 0.7 are included in the initial candidate pool. Next, the LDA model and JS divergence are applied to measure domain similarity among physicians, thereby expanding the candidate pool. Finally, physicians are comprehensively ranked by considering both the number of similar cases and the similarity between physician expertise and patient medical conditions [33].

- (4) Recommendation Algorithm W2V-LDA-TOPSIS: The hybrid physician recommendation algorithm developed in this study. Building upon the W2V-LDA hybrid model, this version incorporates physician competencies, patient needs, and the fairness of medical resource allocation. By introducing a multi-criteria evaluation system, an entropy-weighted TOPSIS evaluation model is constructed. This maintains recommendation quality while enhancing the comprehensiveness and equity of physician recommendations.
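The final ranking step of W2V-LDA-TOPSIS can be illustrated with a minimal sketch of entropy-weighted TOPSIS in its common textbook form. It assumes a non-negative decision matrix with at least two candidates, in which every criterion has already been preprocessed so that larger values are better. The three example criteria (symptom similarity, similar patient count, new doctor support) are taken from the feature list in Section 5.1, but the numbers and function names are hypothetical, and the authors' exact normalization may differ.

```python
import math

def entropy_weights(matrix):
    """Entropy weights: criteria whose values vary more across candidates get more weight."""
    n, m = len(matrix), len(matrix[0])
    diversities = []
    for j in range(m):
        column = [row[j] for row in matrix]
        total = sum(column)
        if total == 0:
            diversities.append(0.0)  # constant zero column carries no information
            continue
        probs = [x / total for x in column]
        entropy = -sum(p * math.log(p) for p in probs if p > 0) / math.log(n)
        diversities.append(1.0 - entropy)
    spread = sum(diversities)
    if spread == 0:
        return [1.0 / m] * m  # fall back to equal weights
    return [d / spread for d in diversities]

def topsis_scores(matrix, weights):
    """Relative closeness of each candidate to the ideal solution, in [0, 1]."""
    m = len(matrix[0])
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) or 1.0 for j in range(m)]
    weighted = [[weights[j] * row[j] / norms[j] for j in range(m)] for row in matrix]
    ideal = [max(row[j] for row in weighted) for j in range(m)]
    anti_ideal = [min(row[j] for row in weighted) for j in range(m)]
    scores = []
    for row in weighted:
        d_ideal = math.sqrt(sum((row[j] - ideal[j]) ** 2 for j in range(m)))
        d_anti = math.sqrt(sum((row[j] - anti_ideal[j]) ** 2 for j in range(m)))
        denom = d_ideal + d_anti
        scores.append(d_anti / denom if denom else 0.0)
    return scores

# Hypothetical candidates; columns: symptom similarity, similar patient count,
# new doctor support (1.0 for a newly registered physician).
candidates = ["doc_a", "doc_b", "doc_c"]
matrix = [
    [0.9, 12, 0.0],
    [0.8, 5, 1.0],
    [0.4, 2, 0.0],
]
weights = entropy_weights(matrix)
scores = topsis_scores(matrix, weights)
ranking = [c for _, c in sorted(zip(scores, candidates), reverse=True)]
print(weights, scores, ranking)
```

Because the weights are derived from the data rather than fixed by hand, a fairness criterion such as new doctor support gains influence whenever it separates the candidates strongly.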

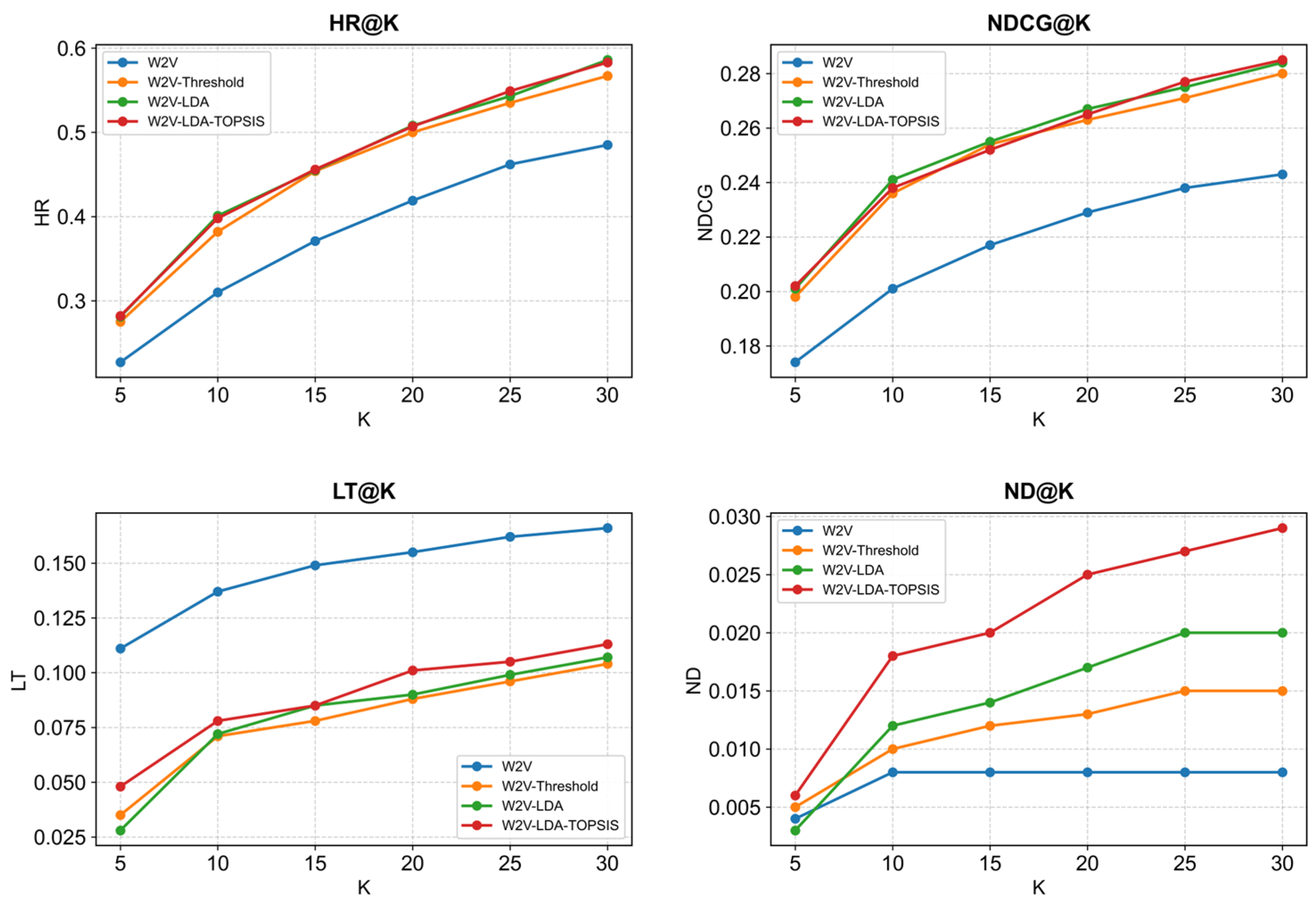

Figure 3 illustrates the performance trends across six recommendation positions (TOP5 to TOP30) for the four models, revealing consistent patterns in recommendation accuracy and fairness metrics.

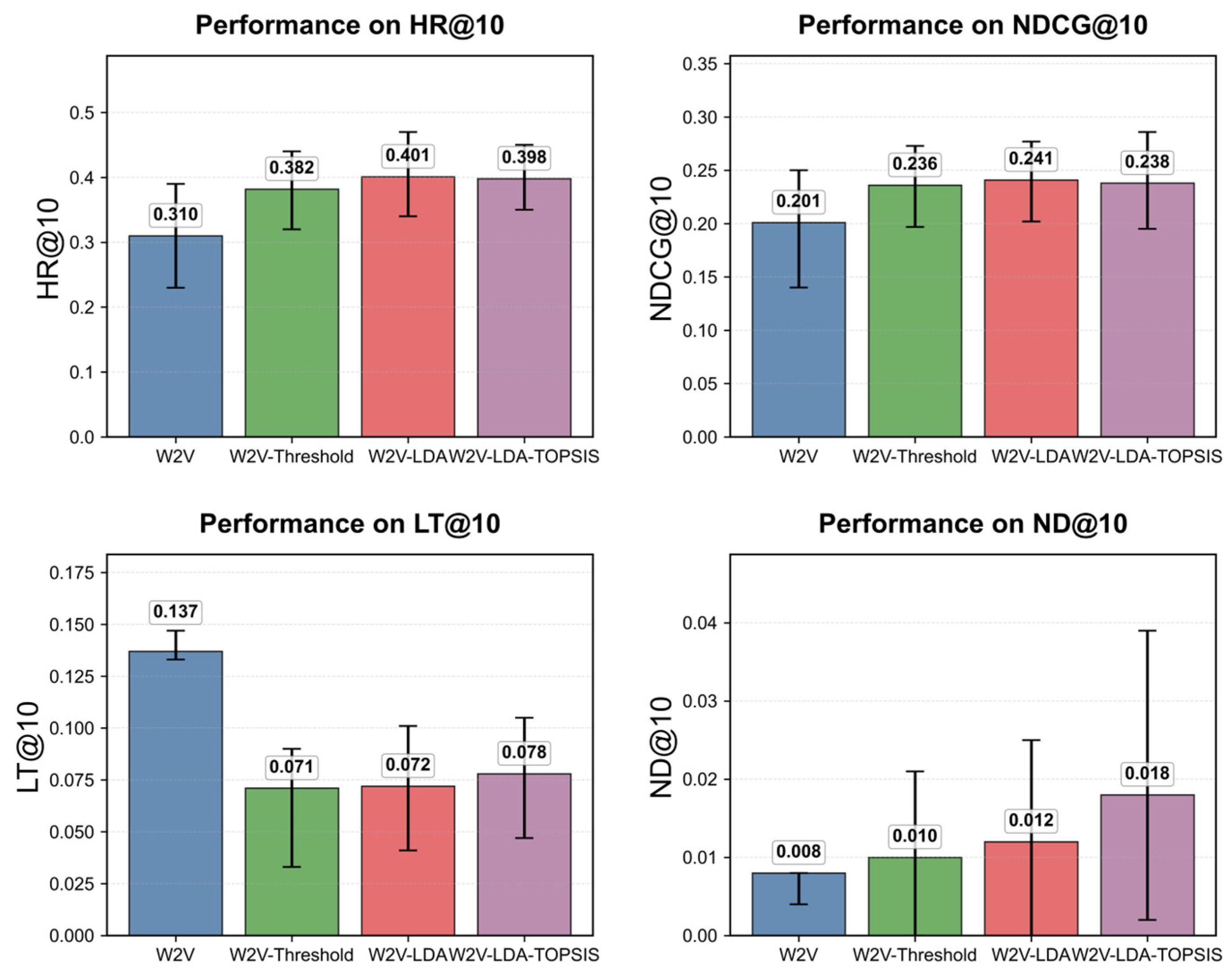

Figure 4 provides a detailed statistical comparison at the TOP-10 level, clearly displaying mean performance values with error bars indicating the full data range across multiple experimental runs.

As systematically summarized in Table 8, the statistical analysis demonstrates substantial performance improvements across all enhanced algorithms. All three improved models achieved statistically significant enhancements in HR@10 accuracy compared to the baseline W2V model, with improvements ranging from 24.8% to 29.4% (all p < 0.01). The W2V-LDA model exhibited the highest absolute improvement, increasing HR@10 from 0.310 to 0.401. While the W2V model showed the highest LT@10 performance (0.138), this reflected an over-reliance on long-tail physicians that compromised overall recommendation quality. All enhanced algorithms significantly reduced this imbalance, decreasing long-tail physician ratios by 45.4–48.8% (all p < 0.001) while maintaining or improving accuracy metrics.

Further analysis reveals important performance nuances. Although W2V-LDA achieved the strongest HR@10 improvement, W2V-LDA-TOPSIS demonstrated superior balance across evaluation criteria. This distinction becomes particularly evident in the TOP-20 to TOP-30 recommendation range, where the multi-criteria optimization framework of W2V-LDA-TOPSIS provided more consistent performance.

The experimental results confirm the effectiveness of expanding physician candidate pools through LDA technology. Both W2V-LDA and W2V-LDA-TOPSIS models successfully incorporated physicians with relevant symptom matching, thereby enhancing recommendation accuracy. Most notably, the W2V-LDA-TOPSIS model achieved exceptional performance in promoting physician diversity, demonstrating a 134.4% improvement in ND@10 (p < 0.05)—the only algorithm to show statistically significant enhancement in new doctor exposure. Through its integrated evaluation system, this model not only maintained strong recommendation quality (HR@10: 0.398, +28.4%) but also achieved optimal balance between accuracy and fairness objectives.

These findings compellingly demonstrate the limitations of approaches relying solely on historical consultation data. Such methods not only produce statistically inferior accuracy metrics but also severely constrain physician diversity in recommendations. This limitation disproportionately affects long-tail and newly registered physicians, who struggle to gain exposure due to insufficient historical records. The proposed W2V-LDA-TOPSIS model addresses these challenges by comprehensively considering recommendation accuracy, patient preferences, and resource allocation fairness. While delivering statistically significant accuracy improvements, it effectively enhances exposure opportunities for underrepresented physician groups, ultimately generating higher-quality ranking results that balance both accuracy and equity considerations.

5.5. Discussions

Based on the experimental findings, several key insights have emerged. In terms of text representation models, our results indicate that the Word2Vec model trained specifically on medical data performs exceptionally well in the specialized field of respiratory medicine, outperforming general-purpose pre-trained models on both HR@K and NDCG@K metrics. This finding highlights a critical requirement for recommendation mechanisms in specialized domains: accurately capturing domain-specific terminology and symptom features is more important than general semantic understanding capabilities. With its simple architecture and specialized training, Word2Vec demonstrates clear advantages in calculating medical text similarity, providing a practical solution for clinical environments with limited resources.

In our comparison of multiple algorithms, we observed an inherent trade-off between recommendation accuracy and fairness within the system. While the basic W2V model performs well in covering long-tail physicians, its overall recommendation quality remains limited. The W2V-Threshold model maintains good accuracy but falls short in representing both long-tail and newly registered physicians. Notably, the W2V-LDA-TOPSIS model achieves the best balance between accuracy metrics (HR@K, NDCG@K) and fairness metrics (LT@K, ND@K) by incorporating a multi-criteria decision-making approach. This demonstrates the effectiveness of combining physician expertise matching with fair resource allocation within a unified evaluation framework. These findings provide valuable guidance for online medical platforms, showing that optimizing single metrics is no longer sufficient for complex healthcare environments—comprehensive multi-dimensional evaluation systems are essential.

From a practical perspective, the hybrid recommendation framework we developed shows significant application value. By using LDA topic modeling to expand the candidate physician pool, we effectively address the cold-start problem for both new and long-tail physicians, providing technical support for balanced medical resource allocation. Additionally, the entropy-weighted TOPSIS evaluation system enables platforms to dynamically adjust their recommendation strategies based on changing resource supply and demand conditions. Particularly in environments where quality medical resources are relatively scarce, our framework demonstrates potential to increase the representation of new physicians while maintaining good recommendation accuracy, showing alignment between technical solutions and real-world needs.

It is important to acknowledge several limitations of this study that may affect the generalizability of our findings. First, our experimental validation was conducted exclusively within the respiratory medicine department, and the framework’s performance across other medical specialties remains to be verified. Second, the data source was limited to a single Chinese online consultation platform (Haodf.com), which may introduce platform-specific biases and cultural particularities in physician selection patterns. Third, the fairness evaluation indicators, while innovative, represent an initial approach to quantifying medical resource equity and could benefit from more sophisticated metrics. These limitations suggest that while our framework shows promise, its broader applicability requires further validation across diverse medical contexts and cultural settings.

Based on these considerations, future research should focus on three main directions: developing cross-departmental adaptation mechanisms to validate broader applicability, exploring the integration of large language models with lightweight architectures to enhance semantic understanding while preserving efficiency, and designing dynamic fairness adjustment mechanisms that respond to real-time supply and demand conditions. These research directions will collectively advance online medical recommendation mechanisms toward greater intelligence, fairness, and practical utility.

6. Conclusions

This study develops a hybrid lightweight recommendation framework to address the limitations of current OCPs in balancing recommendation accuracy, computational efficiency, and equitable resource allocation. Using respiratory medicine as a representative scenario, the framework systematically integrates multi-criteria evaluation into the physician recommendation mechanism: employing a domain-adapted Word2Vec model for initial physician screening from extensive consultation records, utilizing LDA topic modeling for intelligent candidate pool expansion to effectively address cold-start problems, and incorporating both patient preference indicators and fairness metrics into an entropy-weighted TOPSIS evaluation system, ultimately demonstrating potential for synergistic optimization of recommendation quality and resource allocation fairness.

This research makes three key contributions that directly address the challenges identified in the literature. First, it demonstrates that domain-specific Word2Vec training provides an effective balance between accuracy and efficiency, offering a practical solution to the challenge of developing specialized yet lightweight text representation models for medical domains. Second, the proposed framework maintains computational efficiency through its hybrid architecture, addressing the critical trade-off between model complexity and operational feasibility for resource-constrained platforms. Third, by integrating multi-dimensional evaluation criteria including fairness metrics, the system moves beyond narrow symptom matching to comprehensively address both patient preferences and equitable resource allocation—a significant advancement over existing approaches.

Beyond technical contributions, this research offers substantial practical value. For online medical platforms, adoption of this framework could enhance user satisfaction through improved matching while promoting more efficient utilization of medical resources—potentially increasing platform retention and creating competitive advantages in emerging healthcare markets. From a societal perspective, wider implementation could help alleviate the concentration of medical resources in top-tier institutions, improving healthcare accessibility, especially in underserved regions. Besides, the framework’s design principles show promising potential for adaptation beyond healthcare. Similar matching challenges exist in other professional service domains such as legal consultation, educational tutoring, and technical support platforms, where balancing expertise matching, client preferences, and provider exposure represents a common challenge. The lightweight architecture further enhances its suitability for small-to-medium platforms across various service sectors.

Looking forward, this research framework presents several promising directions for expansion: validating cross-departmental adaptability through extension to other medical specialties, exploring the integration of large language models with lightweight architectures to enhance semantic understanding while preserving computational efficiency, and developing dynamic fairness adjustment mechanisms capable of intelligently adapting to real-time supply-demand conditions. These advancements will collectively drive the evolution of online medical recommendation mechanisms toward greater precision, equity, and sustainability, ultimately contributing to universal accessibility of quality healthcare resources.

Author Contributions

Conceptualization, H.F. and S.L.; methodology, H.F.; software, S.L.; validation, S.L.; formal analysis, S.L.; investigation, S.L.; resources, H.F.; data curation, S.L.; writing—original draft preparation, S.L.; writing—review and editing, H.F.; visualization, S.L.; supervision, H.F.; project administration, H.F.; funding acquisition, H.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the School-level project “Research on Intelligent Recommendation of Medical Services on Online Consultation Platforms” at Shanghai University. The APC was funded by Shanghai University.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Butler, C.R.; Webster, L.B.; Diekema, D.S. Staffing Crisis Capacity: A Different Approach to Healthcare Resource Allocation for a Different Type of Scarce Resource. J. Med. Ethics 2024, 50, 647–649.

2. Saha, E.; Rathore, P. Case Mix-Based Resource Allocation Under Uncertainty in Hospitals: Physicians Being the Scarce Resource. Comput. Ind. Eng. 2022, 174, 108767.

3. Di Costanzo, C. Healthcare Resource Allocation and Priority-Setting: A European Challenge. Eur. J. Health Law 2020, 27, 93–114.

4. Xu, D.; Zhan, J.; Cheng, T.; Fu, H.; Yip, W. Understanding online dual practice of public hospital doctors in China: A mixed-methods study. Health Policy Plan. 2022, 37, 440–451.

5. Xu, D.; Huang, Y.; Tsuei, S.T.; Fu, H.; Yip, W. Factors influencing engagement in online dual practice by public hospital doctors in three large cities: A mixed-methods study in China. J. Glob. Health 2023, 13, 12.

6. Jiang, H.; Mi, Z.; Xu, W. Online Medical Consultation Service-Oriented Recommendations: Systematic Review. J. Med. Internet Res. 2024, 26, e46073.

7. Gong, Y.; Wang, H.; Xia, Q.; Zheng, L.; Shi, Y. Factors that determine a patient’s willingness to physician selection in online healthcare communities: A trust theory perspective. Technol. Soc. 2021, 64, 101510.

8. Liu, S.; Zhang, Y. Designing a doctor evaluation index system for an online medical platform based on the information system success model in China. Front. Public Health 2023, 11, 1126893.

9. Han, X.; Jiang, P.; Han, W.; Zhu, Q. Influencing factors of doctors’ online rating information characteristics: Based on social capital theory and social exchange theory. Inf. Resour. Manag. J. 2023, 13, 78–90.

10. Chen, Z.; Song, Q.; Wang, A.; Xie, D.; Qi, H. Study on the relationships between doctor characteristics and online consultation volume in the online medical community. Healthcare 2022, 10, 1551.

11. Cao, X.Y.; Liu, J.Q. Patient choice decision behavior in online medical community from the perspective of service diversity. J. Syst. Manag. 2021, 30, 76–87.

12. Wu, P.; Wang, L.; Jiang, J.; Yu, L. No pains no gains: Understanding the impacts of physician efforts in online reviews on outpatient appointment. Aslib J. Inform. Manag. 2024.

13. Alshammarie, F.F.; Alenazi, A.A.; Alhamazani, Y.S.; Almarzouk, L.H.; Alduheim, M.A.; Alanazi, W.S. Factors influencing the selection of a physician for dermatological consultation in Saudi Arabia: A national survey. Healthcare 2025, 13, 404.

14. Shan, W.; Wang, J.; Shi, X.; Evans, R.D. The impact of electronic word-of-mouth on patients’ choices in online health communities: A cross-media perspective. J. Bus. Res. 2024, 173, 114404.

15. Wang, H.; Liu, S.; Gao, B.; Aziz, A. How do recommendations influence patient satisfaction? Evidence from an online health community. Inf. Technol. People 2024, 38, 2381–2412.

16. Chen, Q.; Jin, J.; Zhang, T.; Yan, X. The effects of log-in behaviors and web reviews on patient consultation in online health communities: Longitudinal study. J. Med. Internet Res. 2021, 23, e25367.

17. Chen, Q.; Xu, D.; Fu, H.; Yip, W. Distance effects and home bias in patient choice on the internet: Evidence from an online healthcare platform in China. China Econ. Rev. 2022, 72, 101757.

18. Zhang, S.; Wang, J.N.; Chiu, Y.L.; Hsu, Y.T. Exploring types of information sources used when choosing doctors: Observational study in an online health care community. J. Med. Internet Res. 2020, 22, e20910.

19. Du, G.; Huang, L.; Xu, X. Medical recommendation model considering patient preference diversity with persistent use behavior. J. Syst. Manag. 2024, 33, 667–685.

20. Pazzani, M.J.; Billsus, D. Content-Based Recommendation Systems; Springer: Berlin/Heidelberg, Germany, 2007.

21. Su, X.Y.; Khoshgoftaar, T.M. A survey of collaborative filtering techniques. Adv. Artif. Intell. 2009, 2009, 421425.

22. Wang, H.; Yang, W.; Li, J.; Ou, J.; Song, Y.; Chen, Y. An improved heterogeneous graph convolutional network for job recommendation. Eng. Appl. Artif. Intell. 2023, 126, 107147.

23. Ju, C.; Zhang, S. Doctor recommendation model based on ontology characteristics and disease text mining perspective. BioMed Res. Int. 2021, 2021, 7431199.

24. Yang, Y.; Hu, J.; Liu, Y.; Chen, X. Doctor recommendation based on an intuitionistic normal cloud model considering patient preferences. Cogn. Comput. 2020, 12, 460–478.

25. Wei, J.; Yan, H.; Shao, X.; Zhao, L.; Han, L.; Yan, P.; Wang, S. A machine learning-based hybrid recommender framework for smart medical systems. PeerJ Comput. Sci. 2024, 10, e1880.

26. Ye, J.X.; Xiong, H.X.; Jiang, W.X. A physician recommendation algorithm integrating inquiries and decisions of patients. Data Anal. Knowl. Discov. 2020, 4, 153–164.

27. Meng, Q.Q.; Xiong, H.X. A doctor recommendation based on graph computing and LDA topic model. Int. J. Comput. Intell. Syst. 2021, 14, 808.

28. Lu, W.; Gao, P.; Zhai, Y.K. An adaptive recommendation method for telemedicine specialists with feedback adjustment. J. Syst. Manag. 2023, 32, 960–975.

29. Pan, Y.; Ni, X. Recommending online medical experts with labeled-LDA model. Data Anal. Knowl. Discov. 2020, 4, 34–43.

30. Sridevi, M.; Rajeshwara, R.R. Finding Right Doctors and Hospitals: A Personalized Health Recommender. Inf. Commun. Technol. Compet. Strateg. 2017, 40, 709–719.

31. Zhou, X.; Xiong, H.X.; Xiao, B. A physician recommendation algorithm based on the fusion of label and patient consultation text. Inf. Sci. 2023, 41, 145–154.

32. Sreenivasa, B.R.; Nirmala, C.R. Hybrid time centric recommendation model for e-commerce applications using behavioral traits of user. Inf. Technol. Manag. 2023, 24, 133–146.

33. Meng, Q.Q.; Xiong, H.X. A study of physician recommendations based on text information of online consultation. Inf. Sci. 2021, 39, 152–160.

34. Valdeira, F.; Racković, S.; Danalachi, V.; Han, Q.; Soares, C. Extreme Multilabel Classification for Specialist Doctor Recommendation with Implicit Feedback and Limited Patient Metadata. arXiv 2023, arXiv:2308.11022. Available online: https://arxiv.org/abs/2308.11022 (accessed on 21 August 2023).

35. Yan, Y.; Yu, G.; Yan, X. Online doctor recommendation with convolutional neural network and sparse inputs. Comput. Intell. Neurosci. 2020, 2020, 8826557.

36. Haque, P.; Pranta, S.B.; Zoha, S.A. Doctor recommendation based on patient syndrome using convolutional neural network. EDU J. Comput. Electr. Eng. 2021, 2, 30–36.

37. Mondal, S.; Basu, A.; Mukherjee, N. Building a trust-based doctor recommendation system on top of multilayer graph database. J. Biomed. Inform. 2020, 110, 103549.

38. Nie, H.; Cai, R. Online doctor recommendation system with attention mechanism. Data Anal. Knowl. Discov. 2023, 7, 138–148.

39. Rotmensch, M.; Halpern, Y.; Tlimat, A.; Horng, S.; Sontag, D. Learning a Health Knowledge Graph from Electronic Medical Records. Sci. Rep. 2017, 7, 5994.

40. Yuan, H.; Deng, W. Doctor recommendation on healthcare consultation platforms: An integrated framework of knowledge graph and deep learning. Internet Res. 2022, 32, 454–476.

41. Zhang, F.; Li, X. Knowledge-enhanced online doctor recommendation framework based on knowledge graph and joint learning. Inf. Sci. 2024, 662, 120268.

42. Zheng, Y.; Yan, Y.; Chen, S.; Cai, Y.; Ren, K.; Liu, Y. Integrating retrieval-augmented generation for enhanced personalized physician recommendations in web-based medical services: Model development study. Front. Public Health 2025, 13, 1501408.

43. Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. Adv. Neural Inf. Process. Syst. 2013, 26.

44. Ütük Bayılmış, O.; Orhan, S. Decoding Digital Labor: A Topic Modeling Analysis of Platform Work Experiences. Systems 2025, 13, 819.

45. Wu, X.; Wu, Z. Optimizing Innovation Decisions with Deep Learning: An Attention–Utility Enhanced IPA–Kano Framework for Customer-Centric Product Development. Systems 2025, 13, 684.

46. Song, G.; Wang, Y.; Chen, X.; Hu, H.; Liu, F. Evaluating User Engagement in Online News: A Deep Learning Approach Based on Attractiveness and Multiple Features. Systems 2024, 12, 274.

47. Guo, J.; Zhang, W.; Chen, J.; Li, Z.; Liu, Y. Improving the accuracy and diversity of personalized recommendation through a two-stage neighborhood selection. Inf. Technol. Manag. 2024, 26, 509–526.

48. Li, Y.Y.; Xiong, H.X.; Li, X.M. Recommending doctors online based on combined conditions. Data Anal. Knowl. Discov. 2020, 4, 130–142.

49. Hwang, C.-L.; Yoon, K. Multiple Attribute Decision Making: Methods and Applications—A State-of-the-Art Survey; Lecture Notes in Economics and Mathematical Systems; Springer: Berlin/Heidelberg, Germany, 1981.

50. Roszkowska, E. Multi-criteria decision making models by applying the Topsis method to crisp and interval data. Multicriteria Decis. Mak. 2011, 6, 200–230.

51. Shao, Q.; Liu, S.; Lin, J.; Liou, J.J.H.; Zhu, D. Green Supplier Evaluation in E-Commerce Systems: An Integrated Rough-Dombi BWM-TOPSIS Approach. Systems 2025, 13, 731.

52. Kim, Y.-S.; Chang, T.-W. Deep Learning-Based Freight Recommendation System for Freight Brokerage Platform. Systems 2024, 12, 477.

53. Akhadam, A.; Kbibchi, O.; Mekouar, L.; Iraqi, Y. A comparative evaluation of recommender systems tools. IEEE Access 2025, 13, 29493–29522.

54. Cai, W.; Yang, M.; Lin, L. An Inspiration Recommendation System for Automotive Styling Design Based on User Behavior Data and Group Preferences. Systems 2024, 12, 491.

55. Rohanian, O.; Nouriborji, M.; Kouchaki, S.; Clifton, D.A. On the effectiveness of compact biomedical transformers. Bioinformatics 2023, 39, btad103.

56. Colangelo, M.T.; Meleti, M.; Guizzardi, S.; Calciolari, E.; Galli, C. A comparative analysis of sentence transformer models for automated journal recommendation using PubMed metadata. Big Data Cogn. Comput. 2025, 9, 67.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).