1. Introduction

Digital Engineering (DE) is reshaping how industries design, develop, and manage complex systems. As an integrated approach that leverages digital models, data-driven decision-making, and advanced simulation techniques, DE has emerged as a transformative paradigm across many industries [1]. However, despite its growing significance, DE’s definition, scope, and implementation vary across industries and academic literature [2]. Since the release of the 2018 U.S. Department of Defense (DoD) Digital Engineering Strategy [3], DE has increasingly influenced government, industry, and academic practice. This research provides a comprehensive assessment of the current state of DE by systematically reviewing its core components, technologies, strategies, adoption challenges, industry applications, and the influence of the 2018 DoD DE Strategy.

Despite the growing body of work surrounding DE, relatively few systematic literature reviews (SLRs) have synthesized the field comprehensively. Existing reviews often focus on individual aspects and components, such as Model-Based Systems Engineering (MBSE) [4,5], digital twin applications in manufacturing [6,7,8,9,10], or digital thread [11,12]. Other studies explore DE within specific sectors, such as aerospace [13], construction [14], or manufacturing [15,16], but rarely examine its cross-industry diffusion or its alignment with national strategies such as the DoD’s DE Strategy framework. One of the most recent SLRs on DE sought to determine a conceptual DE framework to support DoD acquisition processes and noted that DE suffers from fragmented, unstandardized definitions [17]. Another review addresses DE’s current development, implementation, main challenges, and future directions [2]. Furthermore, another study investigated the current state of digitalization practices and identified a conceptual framework that satisfies the goals of the DoD DE Strategy [17]. While these reviews contribute valuable insights, they do not systematically assess the degree to which DE research aligns with the DoD’s five strategic goals, nor do they trace the evolution of terminology and best practices over time. This gap highlights the need for a structured SLR that not only catalogs DE tools and methods but also examines the motivations, challenges, and strategic influences shaping the field. This study advances the understanding of DE in three ways. First, it isolates DE as an explicit construct, distinguishing it from adjacent concepts such as MBSE, digital twins, and digital thread, and clarifies how authors define and bound the term in practice. Second, it combines a synthesis of components and benefits with an implementation lens that surfaces recurring barriers and enabling conditions reported across sectors. Third, by using the 2018 DoD Digital Engineering Strategy as an interpretive framework, it shows which aspects of DE practice are accumulating evidence and which remain largely aspirational, translating a high-level policy document into an empirically grounded research and practice agenda. Accordingly, this SLR is structured around three main research objectives:

Develop a shared understanding of DE concepts, methodologies, and implementation practices.

Identify existing studies, frameworks, challenges, and best practices related to DE knowledge and skills.

Evaluate the extent to which the five strategic goals outlined in the 2018 DoD Digital Engineering Strategy have influenced academic literature, technological advancements, and organizational practices in Digital Engineering.

As DE continues to expand, various frameworks, methodologies, and technologies have emerged, shaping its implementation across industries. Accordingly, the first objective explores how DE is defined in the literature, how its definition has evolved, which motivations for DE adoption the literature reports, and which key DE components are frequently discussed as essential enablers of DE. Organizations must navigate technical, organizational, workforce, financial, and regulatory barriers to implement DE strategies effectively. The second objective is therefore to categorize and quantify these challenges while identifying best practices that have enabled successful DE adoption across various sectors. The third objective is to assess the impact of the 2018 DoD Digital Engineering Strategy on academic research and industry practices, determining whether the Strategy has significantly shaped DE research, methodologies, and best practices. To address these objectives, the research is structured around five research questions (RQs):

RQ1: How is DE defined in the literature?

RQ2: What are the motivations and benefits, stated in the literature, to apply DE?

RQ3: What DE components (e.g., digital twins, MBSE, digital threads, authoritative source of truth) are most mentioned when talking about DE?

RQ4: What are the key challenges in implementing DE, and what solutions have been proposed?

RQ5: To what extent do academic publications explicitly reference or align with the five strategic goals of the 2018 DoD Digital Engineering Strategy?

By systematically reviewing the existing literature, this study seeks to clarify the current state of DE holistically and to highlight areas that require future research. The findings contribute to a deeper understanding of DE’s role in modern engineering practice and provide insights to guide future applications. The remainder of this paper is structured as follows. Section 2 provides background on current DE research. Section 3 describes the methodology of the systematic literature review. Section 4 presents the results, Section 5 discusses them, and Section 6 presents concluding thoughts.

2. Background

Technological advancement, increased system complexity, and growing demands for speed and agility have reshaped engineering practices across sectors [18]. Organizations such as the DoD have responded to these challenges by investing in research, digital innovation, and workforce development. The DoD has traditionally followed a linear, document-centric engineering approach in which much of the data used in activities and decisions is stored separately, in disjointed and static forms, across organizations, tools, and environments [19,20]. These legacy methods struggle to meet the demands posed by exponential technological growth, increasing complexity, and the need for rapid access to information. To modernize these practices, the DoD launched the DE initiative to support lifecycle activities and promote a culture of innovation, experimentation, and efficiency [3]. DE is defined as “an integrated digital approach that uses authoritative sources of systems’ data and models as a continuum across disciplines to support lifecycle activities from concept through disposal” [21]. This approach incorporates existing model-based concepts such as model-based engineering (MBE), MBSE, digital thread, and digital twin [20,21].

The 2018 DoD Digital Engineering Strategy outlines five strategic goals for DE that promote continuous engineering activities, centralized access to current and verified data, adoption of emerging tools, collaborative digital environments, and strategic workforce transition [20]. The first goal is to formalize the development, integration, and use of digital models, encouraging consistent modeling practices across the system lifecycle to replace traditional document-centric processes. The second goal focuses on providing an authoritative source of truth (ASoT), a centralized, validated repository of models and data that ensures all stakeholders work from the same accurate information. Third, the strategy calls for incorporating technological innovation, such as automation, model reuse, and digital collaboration, to improve engineering practice, accelerate development timelines, and enhance system quality. The fourth goal is to establish a supporting DE ecosystem, which involves building the necessary infrastructure, including tools, data environments, and standards, to enable widespread adoption of DE practices. Lastly, the strategy emphasizes transforming the culture and workforce, advocating training, education, and cultural shifts that equip engineers with the skills and mindset required to operate in a DE environment. These goals are not exclusive to defense; they are equally relevant to modern enterprises adopting new technologies while upskilling their workforce. Despite its promise, implementing DE at scale, whether in government or industry, comes with both technical and cultural challenges [22,23].

DE encompasses a suite of interrelated concepts that together improve the efficiency, traceability, and integration of complex systems throughout their lifecycle. Among the most critical of these are MBSE, digital twins, simulation, digital threads, and ASoT. MBSE is defined as the “formalized application of modeling to support system requirements, design, analysis, verification, and validation activities” throughout the system lifecycle [24,25]. It forms the methodological foundation of DE, replacing document-based systems engineering with model-centric practices that improve consistency and communication across engineering teams. Building on this foundation, the digital twin enhances real-time system visibility. A digital twin is a virtual representation of a physical asset, process, or system that mirrors its real-world counterpart’s behavior and communication, enabling real-time monitoring and predictive analysis [26]. Digital twins allow engineers to simulate and assess system behavior under varying conditions before physical deployment, reducing risk and accelerating decision-making. Supporting both MBSE and digital twins is the digital thread, which provides the connectivity and continuity needed across lifecycle stages [12]. To maintain consistency and data integrity within such a complex ecosystem, DE requires an ASoT, which ensures that models and data remain accurate and trustworthy across teams and disciplines [27].

3. Methodology

This research follows an SLR approach to ensure a holistic and unbiased assessment of the existing body of work on DE. An SLR is designed to minimize bias by adhering to a predefined search strategy, inclusion and exclusion criteria, and systematic procedures that allow for consistent identification, evaluation, and synthesis of relevant literature [

28]. The methodology for this review aligns with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) framework [

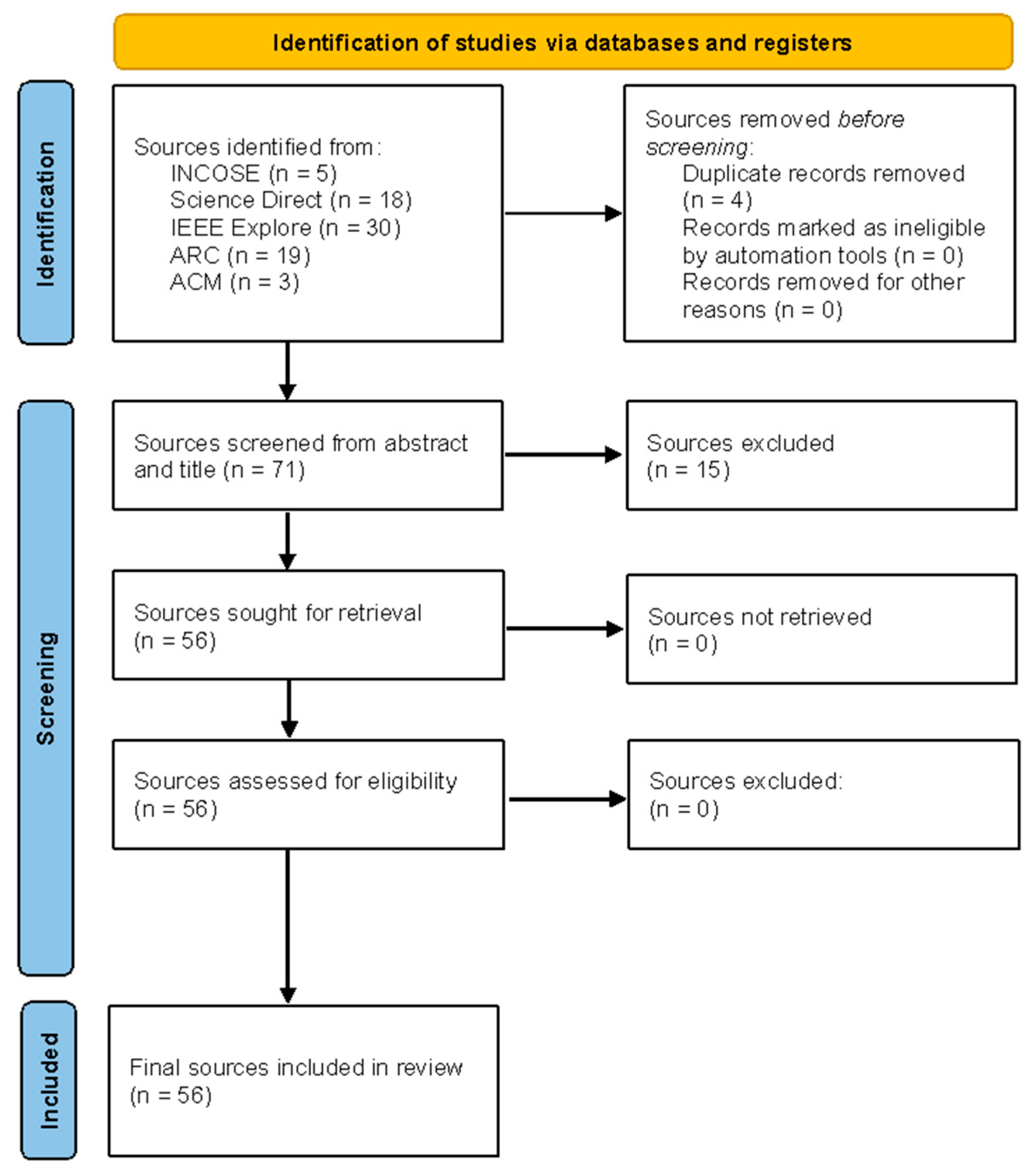

29,

30], which provides a widely recognized set of guidelines for transparent reporting. The structured SLR methodology developed for this research is summarized in

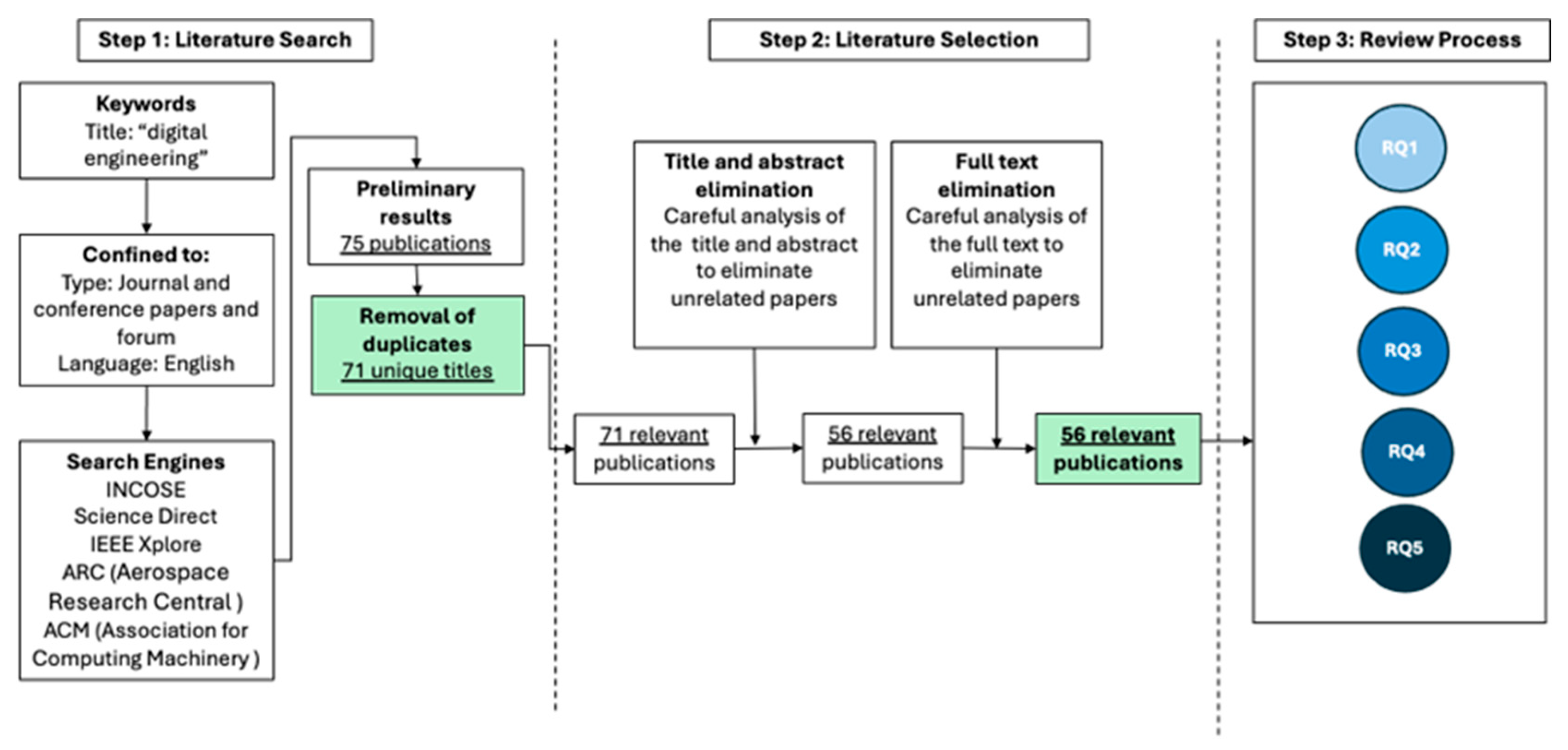

Figure 1, and the corresponding PRISMA flow diagram outlining the selection process is provided in

Figure 2; with a completed PRISMA checklist provided in the

Supplementary Materials. This SLR process and data extraction were conducted as part of the ISE 439/539 Digital Engineering course offered in Spring 2025 at the University of Alabama in Huntsville, aligning with the pedagogical strategy for integrating DE into industrial and systems engineering education [

31].

In step 1, a comprehensive literature search was conducted across five academic databases: IEEE Xplore, ScienceDirect, Aerospace Research Central (ARC), INCOSE, and the Association for Computing Machinery (ACM). These databases were selected for their broad coverage of engineering, systems engineering, aerospace, and computing research. The thoroughness of an SLR search depends largely on the search strings employed [32,33,34]. Therefore, to ensure conceptual clarity and comparability across studies, the core search string used in each database was the exact phrase “Digital Engineering,” applied to the article title field. This decision was intended to capture literature that frames DE as a central construct, rather than tangentially related concepts such as MBSE, digital twins, or digital threads, which, while integral to DE, could otherwise dilute the scope of the analysis. We acknowledge that this choice narrows the corpus and that future work could extend the search to title, abstract, and keyword fields to assess broader interpretations of DE. The database search was performed on 3 October 2024 and initially identified 75 publications. After duplicate removal, 71 unique titles remained. Only English-language journal articles, conference papers, and forum publications were considered.

In step 2, a two-stage screening was performed. First, titles and abstracts were analyzed for relevance. Second, full-text reviews were performed to confirm alignment with the inclusion criteria. Only publications explicitly presenting an application, framework, or implementation of DE were retained; conceptual discussions without practical application, literature reviews, surveys, and studies with only tangential reference to DE were excluded. Applying these criteria yielded a refined set of 56 relevant publications. The detailed dataset extracted from all studies included in this SLR is provided in the Supplementary Materials.
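The screening counts reported in steps 1 and 2 can be checked with simple arithmetic. This Python sketch uses only the figures stated in the text (75 identified, 71 after deduplication, 56 included); because the section does not report how many records were excluded at the title/abstract stage versus the full-text stage, the sketch derives only the combined exclusion count. The `PrismaFlow` class is a hypothetical helper, not part of any PRISMA tool.

```python
from dataclasses import dataclass

@dataclass
class PrismaFlow:
    identified: int    # records returned by the database search
    after_dedup: int   # unique records after duplicate removal
    included: int      # records retained after both screening stages

    @property
    def duplicates_removed(self) -> int:
        return self.identified - self.after_dedup

    @property
    def excluded_in_screening(self) -> int:
        # Title/abstract and full-text exclusions combined.
        return self.after_dedup - self.included

flow = PrismaFlow(identified=75, after_dedup=71, included=56)
print(flow.duplicates_removed, flow.excluded_in_screening)  # 4 15
```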

In step 3, the in-depth review was carried out and a structured data extraction process was applied. The systematic extraction and coding of study data were conducted by a team of 13 reviewers under instructor supervision. Each of the 56 papers was first reviewed independently by two reviewers, who extracted information using a standardized form that captured bibliographic details, study definitions, methods, and key findings. A third reviewer then performed a cross-check for each paper by reconciling discrepancies between the two initial reviews, completing any missing items, and entering a consolidated final record into the shared dataset. Extracted data were aligned with the defined research questions and included both quantitative and qualitative information, such as study objectives, key contributions, identified challenges, implementation approaches, reported benefits, and proposed solutions. Reviewer groups were subsequently assigned to individual research questions and used the reconciled dataset to conduct the analytic synthesis, including categorization, frequency counts, visualizations, and narrative results. Because coding was finalized through reconciliation and consensus rather than through independent application of a fixed codebook, formal inter-rater reliability statistics were not computed. We acknowledge this as a limitation and note that consensus-based reconciliation served as the primary mechanism for ensuring coding consistency.
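The dual-extraction and reconciliation step can be sketched as follows. This is a hypothetical Python illustration, not the review’s actual tooling: field names such as `definition_source` and `sector` are assumptions, and in the review the third reviewer resolved discrepancies by judgment, whereas the sketch only merges agreeing or one-sided fields and flags conflicting ones for that reviewer.

```python
from typing import Optional

Record = dict[str, Optional[str]]

def reconcile(a: Record, b: Record) -> tuple[Record, list[str]]:
    """Merge two independent extractions of one paper.

    Fields where the reviewers agree (case-insensitively), or where only one
    reviewer filled in a value, are merged automatically. Conflicting fields
    are left empty and returned for the third reviewer to resolve.
    """
    merged: Record = {}
    conflicts: list[str] = []
    for field in sorted(set(a) | set(b)):
        va, vb = a.get(field), b.get(field)
        if va is None or vb is None:
            merged[field] = va if va is not None else vb  # complete missing items
        elif va.strip().lower() == vb.strip().lower():
            merged[field] = va
        else:
            merged[field] = None  # discrepancy: needs consensus
            conflicts.append(field)
    return merged, conflicts

r1 = {"definition_source": "DoD Strategy", "case_study": "yes", "sector": None}
r2 = {"definition_source": "DAU Glossary", "case_study": "Yes", "sector": "defense"}
merged, conflicts = reconcile(r1, r2)
print(conflicts)  # ['definition_source']
```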

This review is constrained by the selection of five databases and the restriction to English-language publications, which may omit relevant works published in other outlets or languages. Additionally, as the database search was conducted in October 2024, emerging DE research published thereafter is not reflected in the present analysis. To maintain conceptual clarity and ensure that the selected studies explicitly addressed DE as a defined discipline, the search strategy limited results to papers containing the phrase “Digital Engineering” in the title. This approach helped differentiate DE-focused research from broader digital transformation or MBSE studies that only partially overlap with DE. However, this deliberate narrowing also constitutes a limitation, as it may bias the sample toward defense-oriented publications, where the term is more commonly used, and exclude other relevant works that contribute to DE principles under different terminologies. Another limitation of this review is that the analysis relied on frequency counts to identify recurring concepts and themes across the corpus. This approach treats all mentions equally and does not distinguish between brief references and detailed empirical examinations. We adopted this method to provide a descriptive overview suited to the heterogeneous nature of the publications, which span conceptual, methodological, and applied studies. Because the depth and purpose of discussions varied substantially across papers, implementing a weighted or depth-based scoring system would have required introducing subjective judgments beyond the scope of this review.

4. Results

Fifty-six publications underwent full-text assessment to address RQ1–RQ5.

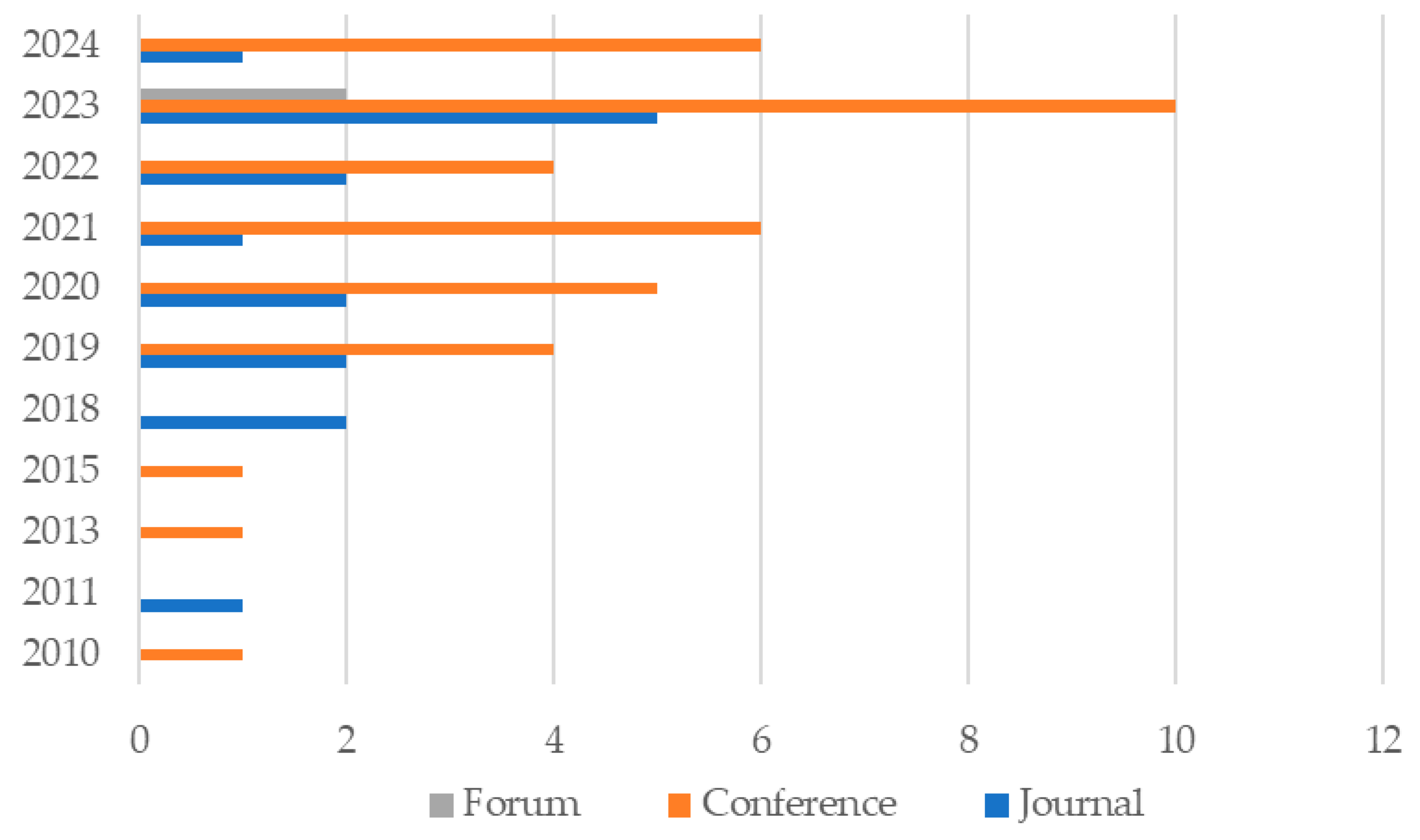

Figure 3 illustrates the distribution of publications over time, categorized by type (conference papers, journal articles, and forums). The graph reveals a sharp increase in conference papers beginning in 2019, immediately following the release of the 2018 DoD Digital Engineering Strategy, which suggests that the Strategy stimulated academic and professional interest in DE. Because the search specifically targeted papers with “Digital Engineering” in the title, this temporal shift is consistent with the Strategy having catalyzed scholarly engagement with the topic, although the timing alone does not establish causation.
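A tally of publications by year and type, split at the 2018 Strategy, can be sketched in Python as below. The records are hypothetical placeholders rather than the review’s dataset (Figure 3 holds the real distribution), and a before/after split of this kind describes timing only; it cannot by itself attribute the increase to the Strategy.

```python
from collections import Counter

# Hypothetical (year, publication type) pairs for illustration only.
pubs = [(2017, "journal"), (2019, "conference"), (2019, "conference"),
        (2020, "forum"), (2023, "conference"), (2023, "journal")]

by_year_type = Counter(pubs)  # counts per (year, type) cell
before = sum(n for (year, _), n in by_year_type.items() if year <= 2018)
after = sum(n for (year, _), n in by_year_type.items() if year > 2018)
print(by_year_type[(2019, "conference")], before, after)  # 2 1 5
```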

4.1. RQ1: DE Definitions

This section presents the findings related to RQ1: “How is DE defined in the literature?”. To better understand how DE is conceptualized across the literature, we analyzed the specific sources cited when definitions were provided. Of the 56 reviewed papers, only 23 offered an explicit definition of DE. The distribution of references is summarized in Table 1.

The analysis reveals that nearly 57% of all definitions (13 of 23) cited sources originating from the U.S. DoD, particularly the Digital Engineering Strategy and the Defense Acquisition University (DAU) Glossary. These definitions typically emphasize a lifecycle perspective of DE, integrating authoritative digital models and data across engineering disciplines. For example, the DoD Strategy defines DE as “An integrated digital approach that uses authoritative sources of system data and models as a continuum across disciplines to support lifecycle activities from concept through disposal” [20]. In contrast, the DAU Glossary [21] uses the definition from DoD Instruction 5000.97, Digital Engineering [20], which defines DE as “A means of using and integrating digital models and the underlying data to support the development, test and evaluation, and sustainment of a system”. This consistent referencing of DoD sources highlights their strong influence, especially in government, defense, and systems engineering literature, and indicates that the DoD has played a central role in shaping how DE is understood in academic and industry circles.

Meanwhile, 34.8% of papers (8 of 23) defined DE without citing any source, indicating that authors either formulated their own understanding or assumed the concept was well-known enough not to require citation. Table 2 summarizes the definitions found in the literature that provided no source. This lack of citation may reflect conceptual ambiguity or inconsistency across the field. Only two papers (8.7%) referenced other academic sources [35,36], which expand the view of DE to include workforce competencies and broad engineering applications, respectively.
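The RQ1 shares follow directly from the counts stated in the text: 13 DoD-sourced, 8 unsourced, and 2 other academic sources, out of 23 explicit definitions. A minimal Python check, with dictionary keys chosen here only as labels:

```python
# Counts of explicit DE definitions by type of cited source (from the text).
counts = {"DoD sources": 13, "no source cited": 8, "other academic sources": 2}
total = sum(counts.values())  # 23 papers with an explicit definition
shares = {k: round(100 * v / total, 1) for k, v in counts.items()}
print(total, shares)
```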

Model-Based Engineering is the dominant underlying concept, appearing in three of the eight unsourced definitions (including one duplicate). Several definitions articulate DE as an ecosystem of tools, data, and processes, highlighting its transformative effect on systems engineering and decision-making. Some definitions [37,39] take a conceptual or aspirational tone, focusing on cultural shifts and new ways of thinking rather than technical specifics. The absence of citations for these detailed definitions suggests that authors are contributing to the ongoing conceptualization of DE, potentially offering original definitions tailored to the context of their study.

4.2. RQ2: Motivations to Apply DE

This section presents the findings related to RQ2: “What are the motivations and benefits, stated in the literature, to apply DE?” and builds on an analysis of the case studies presented in the reviewed literature.

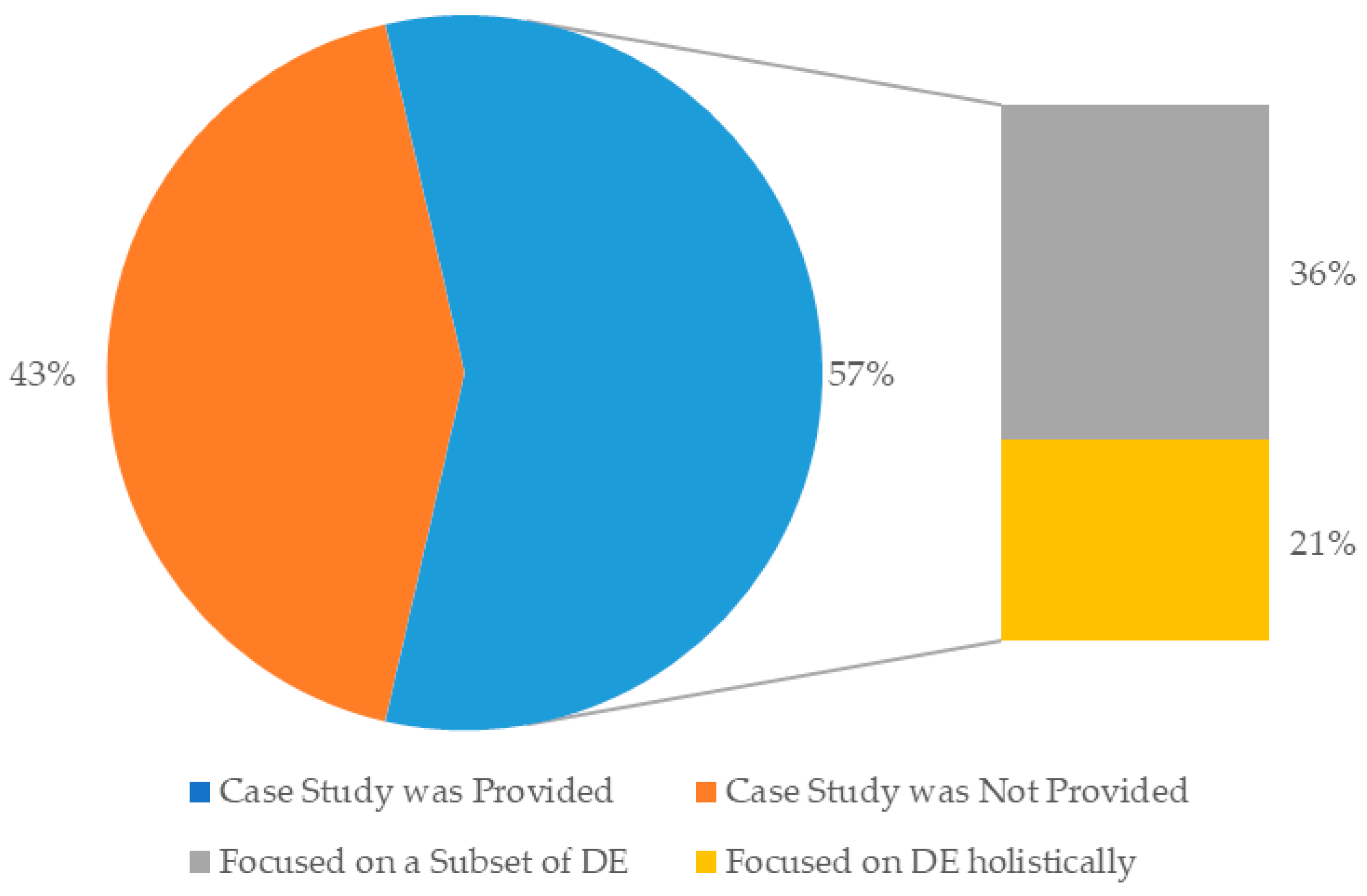

To understand the practical application of DE, we examined how case studies were presented across the 56 reviewed papers. For this review, a case study was defined as an applied example in which a DE framework, methodology, or toolset was implemented to produce results and inform conclusions. As shown in Figure 4, 32 of the 56 papers (57%) included such case studies, while 24 (43%) did not. Although more than half of the literature grounds its findings in practical applications, a significant portion remains primarily conceptual or theoretical, reflecting an ongoing need to strengthen empirical validation in the DE domain. Among the 32 case study papers, we further distinguished between “general DE” applications and those focusing on specific “subsets of DE”. General DE case studies (12 papers, 37.5%) approached DE holistically, often demonstrating organization-wide integration, cultural adoption, or lifecycle benefits without anchoring the analysis to one specific methodology. In contrast, the majority of case studies (20 papers, 62.5%) emphasized specific DE subsets, such as MBSE, digital threads, and digital twins. These papers highlighted the tangible advantages of subset adoption, such as improved traceability, real-time monitoring, and lifecycle data continuity. This indicates that while broad DE frameworks provide valuable conceptual direction, research interest has leaned toward showcasing the benefits of targeted DE technologies that can yield immediate organizational gains.
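The case-study proportions can be recomputed from the reported counts (56 papers, 32 with case studies, split into 12 general DE and 20 subset-focused). In this Python sketch the helper `pct` is a hypothetical name that rounds to one decimal place.

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal."""
    return round(100 * part / whole, 1)

total_papers, with_case = 56, 32
general_de, subset_de = 12, 20
assert general_de + subset_de == with_case  # the two groups partition the case studies

print(pct(with_case, total_papers), pct(total_papers - with_case, total_papers))  # 57.1 42.9
print(pct(general_de, with_case), pct(subset_de, with_case))  # 37.5 62.5
```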

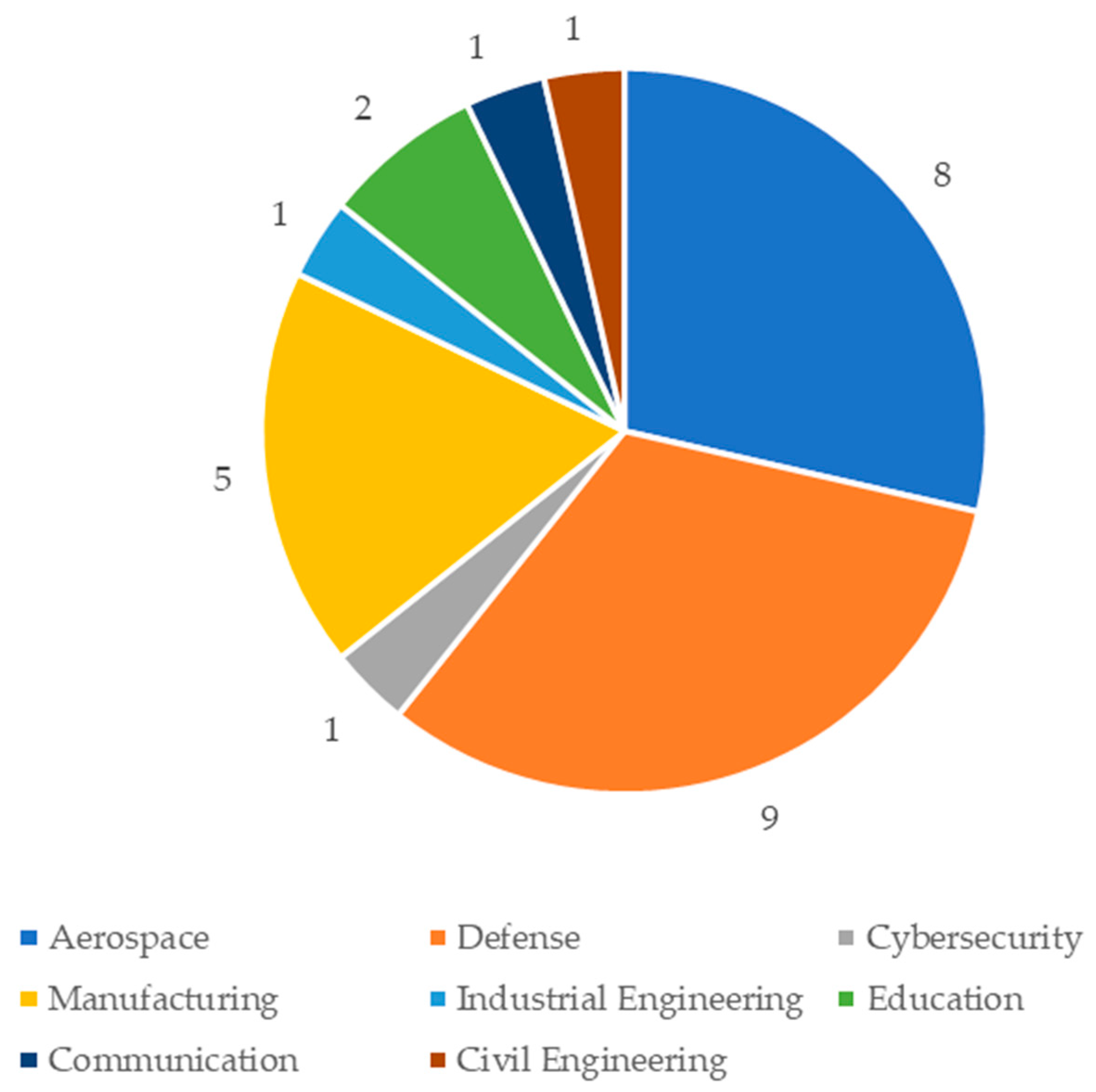

We further analyzed the industry context of the case studies, differentiating between those associated with general DE and DE subsets. As illustrated in Figure 5, the most frequently represented sectors were defense and aerospace, which reflects the origins of many DE frameworks and tools. The aerospace sector, including aviation, has embraced DE for its ability to enhance safety-critical system design and reduce development costs. Other industries, such as automotive, healthcare, and manufacturing, were represented to a lesser extent, suggesting that DE adoption outside defense and aerospace remains an emerging frontier.

Given the varying scope of DE adoption, we also categorized the specific subsets emphasized within the subset-focused case studies. These findings are summarized in Table 3. Notably, 35% of subset case studies centered on MBSE, highlighting the central use of modeling in DE. Other commonly cited subsets included ASoT, simulation, digital threads, and digital twins. Less frequently mentioned subsets included augmented reality, ontology-based modeling, and System of Systems (SoS)/End-to-End (E2E) modeling. The higher number of subset-focused studies indicates an ongoing preference for showcasing the impact of modular, implementable DE technologies rather than organization-wide DE transformations. This may reflect both the complexity of broad DE adoption and the tendency of researchers to focus on more bounded, measurable interventions.

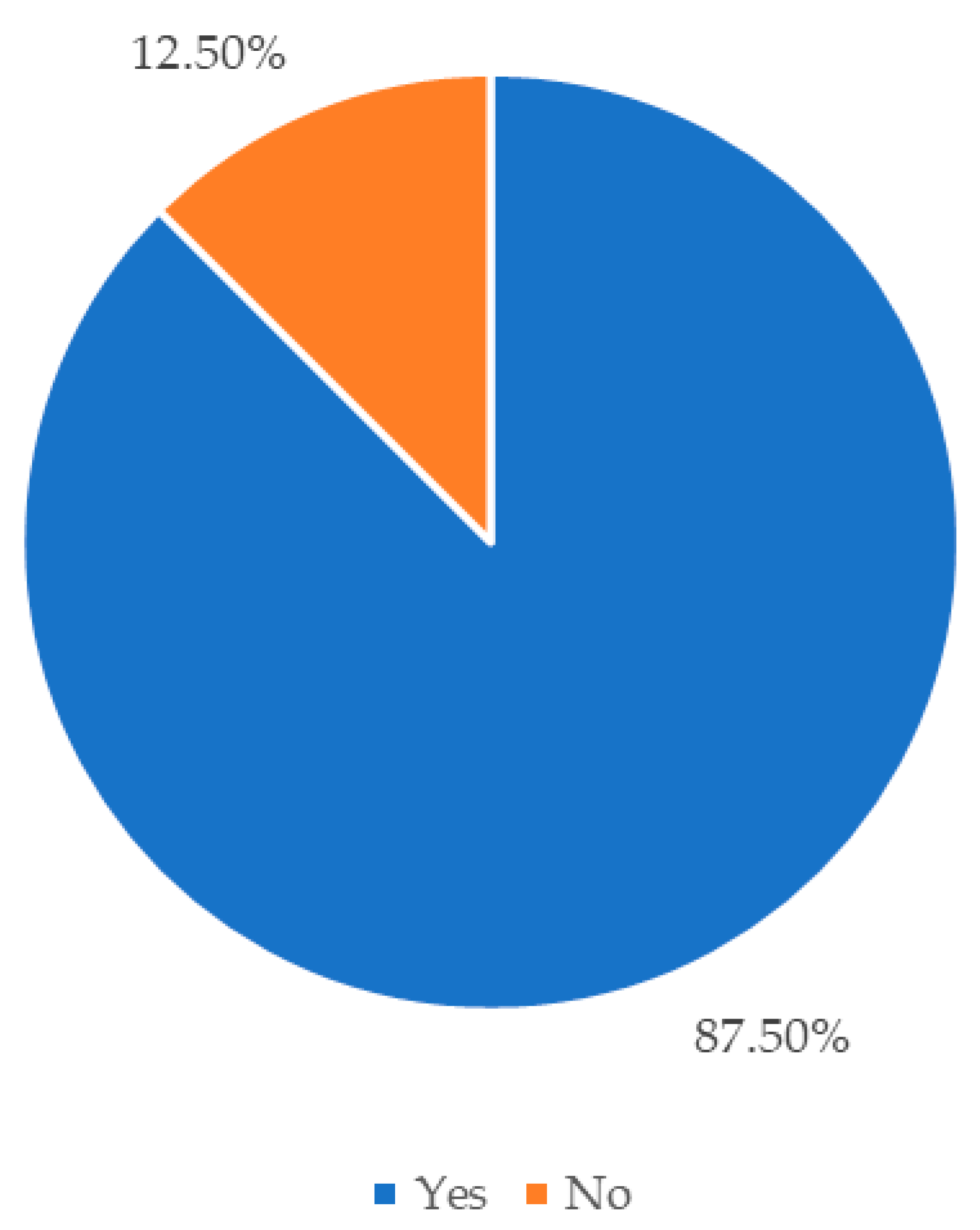

Among the 56 papers reviewed, 47 papers (87.5%) explicitly discussed motivations or benefits associated with adopting DE, as illustrated in Figure 6. These statements were identified throughout various sections of the papers, including introductions, discussions, and conclusions. The remaining 12.5% of papers made no mention of motivations or benefits for DE adoption.

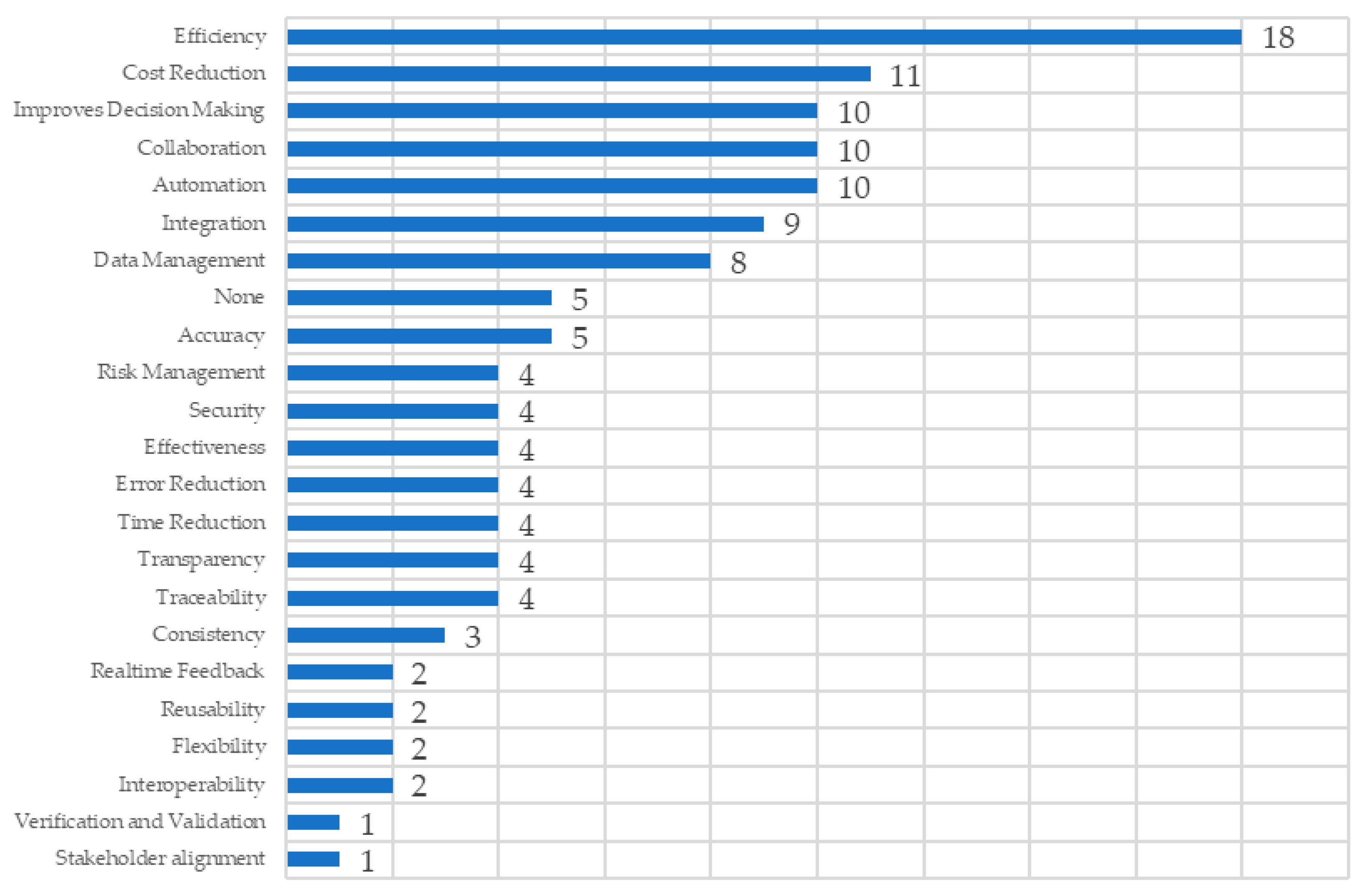

To better interpret the underlying drivers for DE adoption, a thematic categorization of the stated motivations was conducted. This analysis involved keyword searches and context reviews to ensure consistent classification. It is important to note that many papers cited multiple motivations; therefore, the frequencies presented represent non-exclusive counts across themes.

Figure 7 presents the frequency of each thematic category identified. The most commonly cited motivation was efficiency (17 papers), frequently tied to analytics-driven management and performance metrics (e.g., [1,40,42]), followed by integration (12 papers), often emphasizing interoperable data/model exchange and semantic pipelines (e.g., [35,44,61]), and cost reduction (11 papers), including explicit cost models and reductions in test and program expenses (e.g., [13,62,63]). Collaboration and improved decision-making were each cited in 10 papers (e.g., [56]). Less frequently mentioned categories included data management, automation, and accuracy, with validation and verification appearing in only one paper [37].
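The keyword-based thematic coding with non-exclusive counts can be illustrated as follows. The theme lexicon and the two sample texts are hypothetical; the review does not list its exact keywords, and it paired keyword hits with a manual context review, which this Python sketch omits. Each theme is counted at most once per paper, so theme totals can exceed the number of papers.

```python
from collections import Counter

# Hypothetical keyword stems per theme; not the review's actual lexicon.
THEMES = {
    "efficiency": ["efficien", "faster", "time savings"],
    "integration": ["integrat", "interoperab"],
    "cost reduction": ["cost", "afford"],
}

def tag_motivations(text: str) -> set[str]:
    """Return every theme whose keywords appear in the text (multi-label)."""
    lowered = text.lower()
    return {theme for theme, kws in THEMES.items() if any(k in lowered for k in kws)}

def count_themes(papers: dict[str, str]) -> Counter:
    """Count each theme at most once per paper, giving non-exclusive totals."""
    counts: Counter = Counter()
    for text in papers.values():
        counts.update(tag_motivations(text))
    return counts

papers = {
    "P1": "DE improves efficiency and reduces cost.",
    "P2": "Interoperable models enable integration; efficiency gains follow.",
}
print(count_themes(papers))
```

Substring matching is deliberately crude (for example, `cost` would also match `costume`), which is why a context check such as the one described above remains necessary.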

This dual analysis of case study content and motivations highlights that industries such as defense and aerospace not only lead in DE implementation but also articulate clear rationales for adoption. The dominance of MBSE as a DE subset reinforces the field’s reliance on models as central artifacts. These findings suggest that emphasizing specific DE subsets may enhance adoption by aligning digital strategies with industry-specific goals. Moreover, identifying motivation patterns helps stakeholders prioritize DE initiatives. For example, a defense organization aiming to increase efficiency can look to DE as a means of achieving this goal, since efficiency is the motivation most frequently cited in the existing literature. Categorizing case studies by industry, key motivators, and DE subset therefore clarifies both why DE is implemented and which subsets are most relevant and applicable, indicating where implementation efforts should focus; the prevalence of MBSE, for instance, underscores the central role of models in DE.

4.3. RQ3: Most Common DE Components

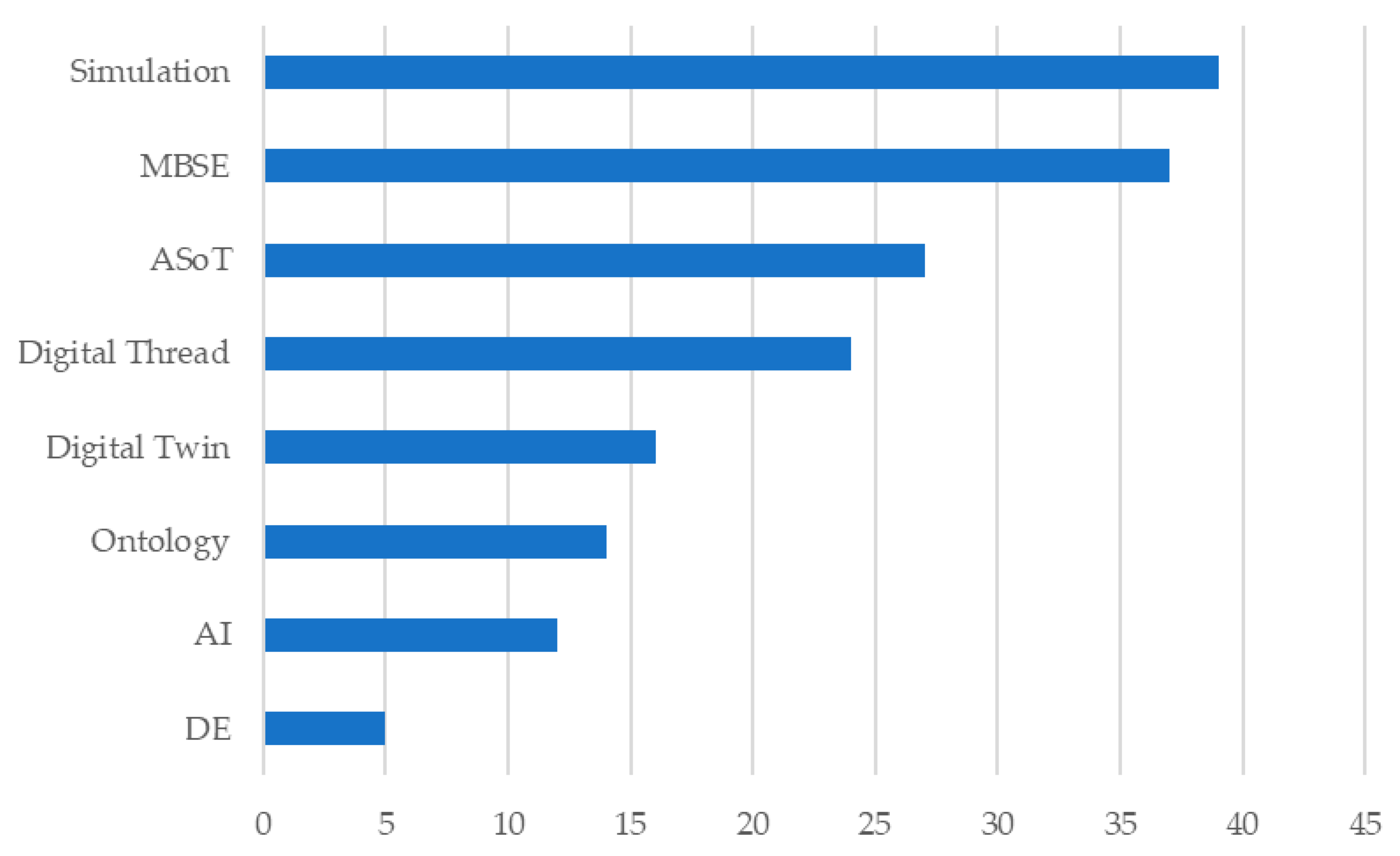

This section addresses RQ3: “What DE components (e.g., digital twins, MBSE, digital threads, authoritative source of truth) are most mentioned when talking about DE?”. We examine how specific DE components are discussed, adopted, and implemented in the literature, aiming to identify which components receive the most attention. Identifying heavily emphasized components can help future research explore root causes, whether due to ease of implementation, industry preference, or alignment with specific use cases. It also raises the question of whether some industries may be underutilizing the full spectrum of DE tools.

Figure 8 presents the frequency of DE component mentions across the reviewed papers. If a paper referenced multiple components, each was counted. The most frequently mentioned components were simulation (39) and MBSE (37), consistent with simulation curricula and road-mapping efforts that foreground simulation’s role in DE [36,63] and with SysML/MBSE practice in contemporary workflows [35,64]. Other notable mentions included ASoT (27) and digital thread (24), reflected in work on reusable and enterprise DE environments and hub/pathfinder infrastructures that emphasize authoritative shared data and End-to-End modeling [47,60,65]. Digital twin, ontology, and AI collectively matched simulation in total mentions, supported by twin-focused methodology [50], semantic/ontology pipelines for integration [44,66], and AI applications within DE workflows [48].

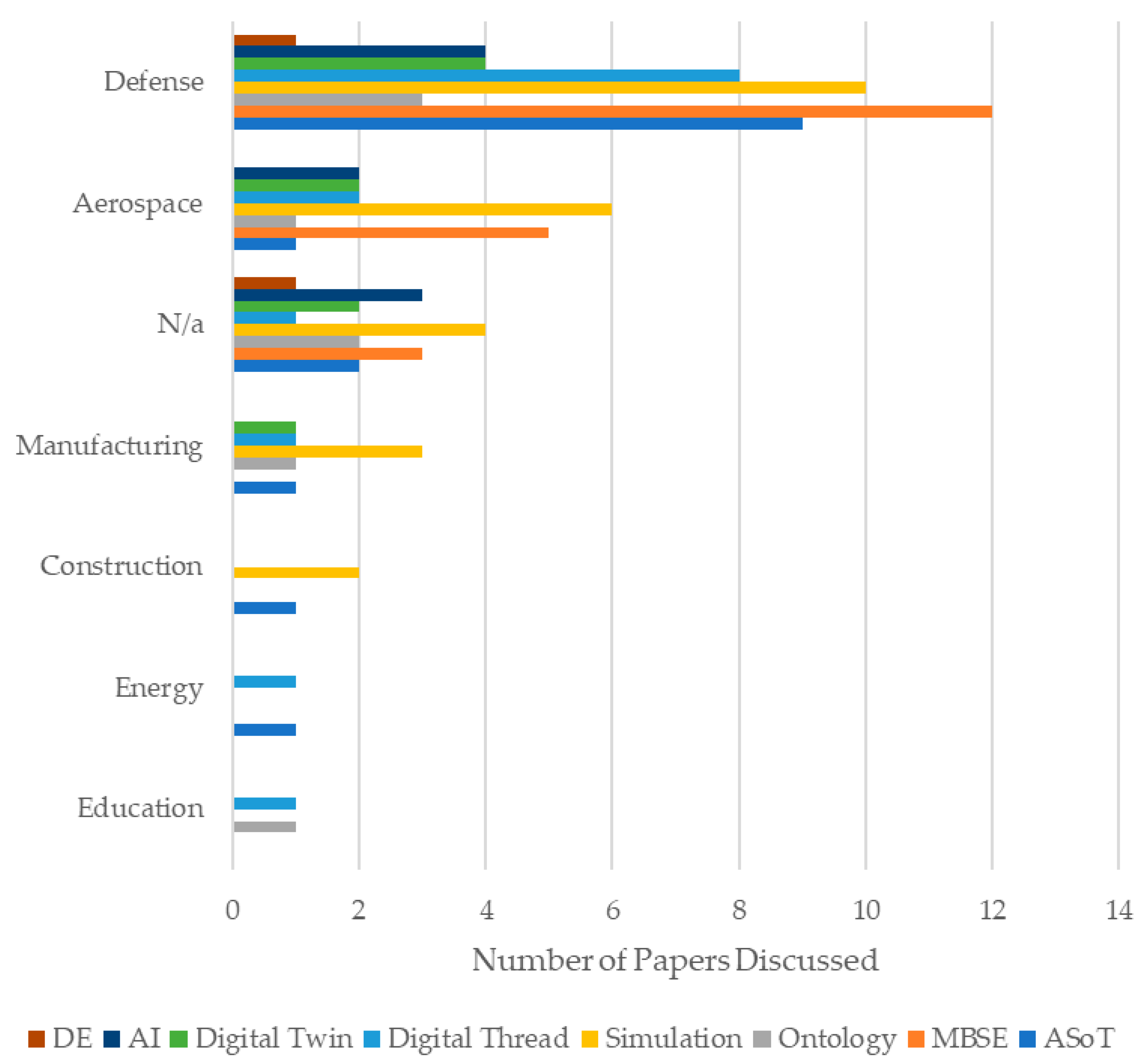

We also analyzed DE component mentions by industry (Figure 9). When a paper addressed multiple industries, all were included. The defense sector was the most represented across the dataset, with MBSE leading its DE mentions (12), followed by simulation (10), ASoT (9), and digital thread (8). The aerospace sector, often overlapping with defense, showed similar trends, particularly in MBSE and simulation. Simulation was frequently employed to analyze model-generated data, further reinforcing its importance in mission-critical domains. This overlap suggests that MBSE’s prominence may be driven in part by its established use in both aerospace and defense.
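Counting component mentions by industry, with multi-industry papers included under each of their industries, amounts to a cross-tabulation whose grand total can exceed the number of component mentions in the corpus. The coded records below are hypothetical, and the Python sketch is illustrative rather than the review’s analysis script.

```python
from collections import defaultdict

# Hypothetical coded papers: the industries each addresses and the DE components it mentions.
papers = [
    {"industries": ["defense", "aerospace"], "components": ["MBSE", "simulation"]},
    {"industries": ["defense"], "components": ["MBSE", "ASoT"]},
    {"industries": ["manufacturing"], "components": ["digital twin"]},
]

def crosstab(coded):
    """Count component mentions per industry; multi-industry papers count in each."""
    table = defaultdict(lambda: defaultdict(int))
    for paper in coded:
        for industry in set(paper["industries"]):
            for component in set(paper["components"]):
                table[industry][component] += 1
    return table

table = crosstab(papers)
print(table["defense"]["MBSE"], table["aerospace"]["MBSE"])  # 2 1
```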

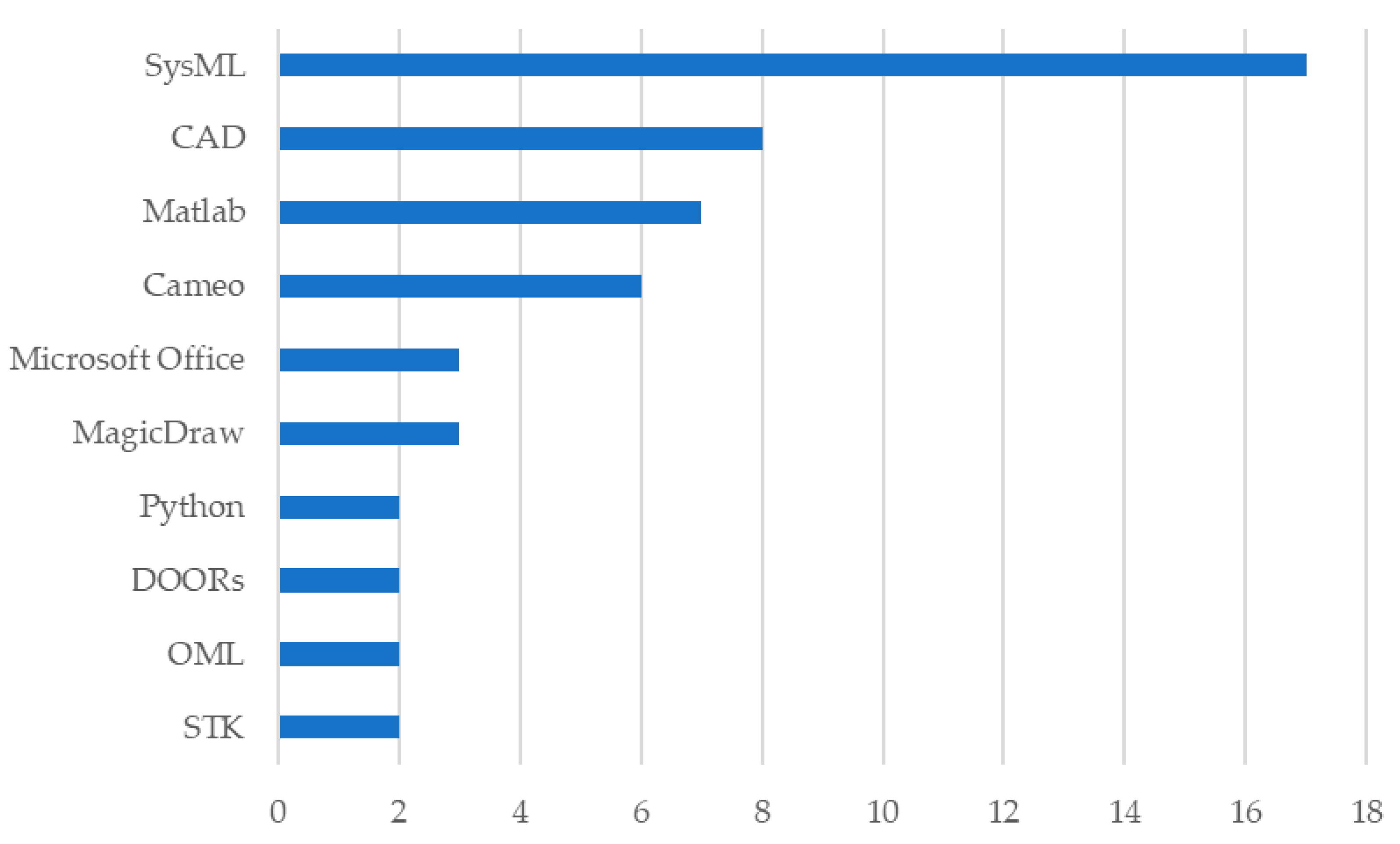

Figure 10 highlights the most frequently mentioned DE tools, with individual papers often contributing multiple mentions. In practice, SysML, Cameo Systems Modeler, MagicDraw, and DOORS can be grouped together as MBSE and requirements-management tools and languages. Taken together, they form the strongest and most consistently referenced set of tools, underscoring that MBSE is the most prominent DE subcomponent in terms of tool usage. This grouping explains why MBSE-related tools dominate the landscape, particularly in defense and aerospace, where systems modeling and requirements management are foundational.

Software with only one mention was excluded from the figure for clarity. The dominance of Cameo reflects its central role in DE toolchains across high-technology sectors.

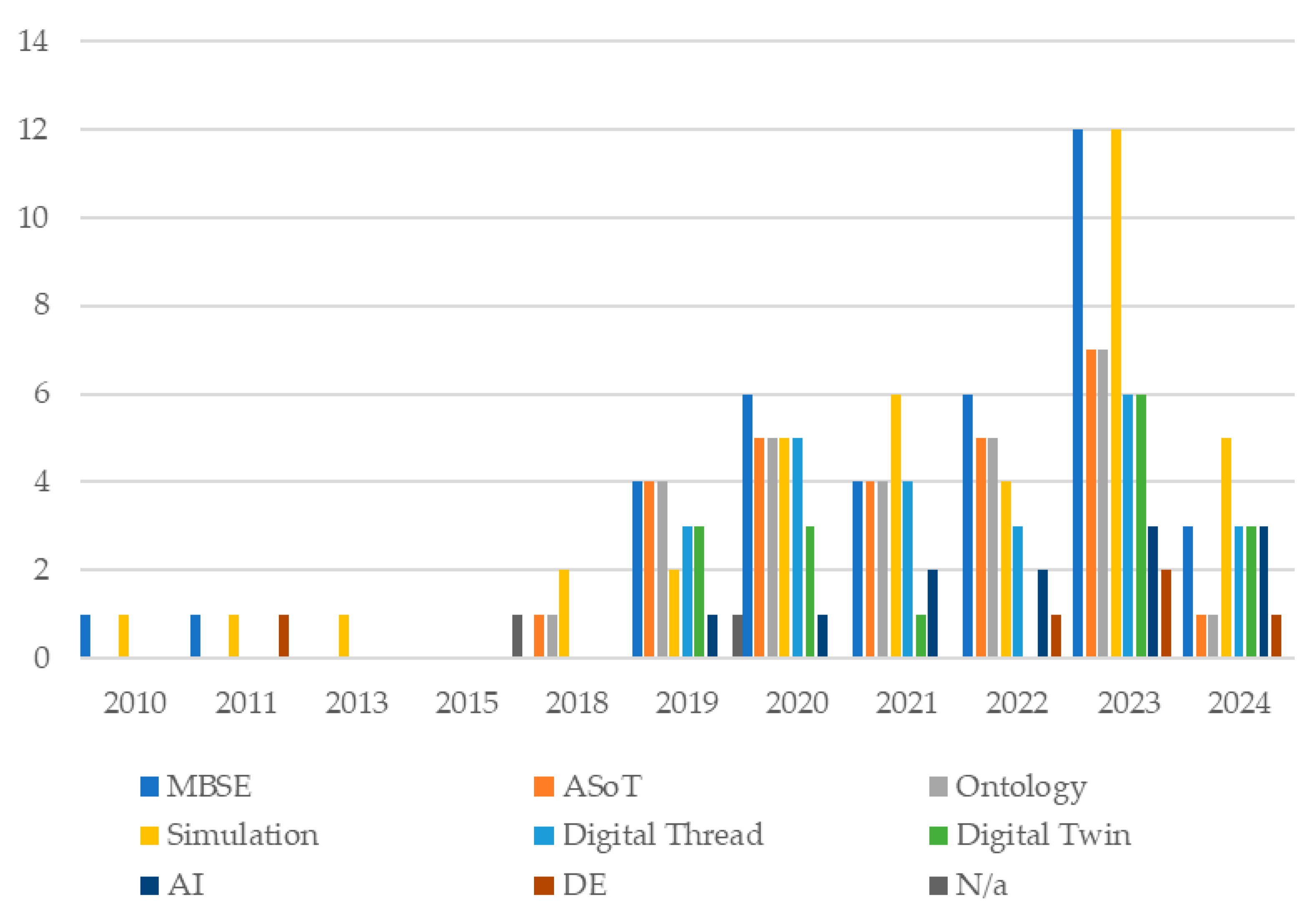

Figure 11 illustrates how DE component mentions have evolved over time. Each component was counted if mentioned in a paper, regardless of co-occurrence. A clear increase in mentions follows the release of the 2018 DoD Digital Engineering Strategy, suggesting a strong influence on scholarly discourse. Mentions of MBSE, ASoT, ontology, and digital threads continue to grow over the years, and the most notable growth occurred five years after the Strategy’s release, with a marked spike in MBSE and simulation mentions in 2023. Because papers were selected for having “Digital Engineering” in the title, this pattern suggests that the 2018 DoD Digital Engineering Strategy had its largest effect on the literature about five years after its release, although the 2023 spike must be interpreted in light of the overall number of papers published that year.

This trend coincides with a general increase in publications featuring “Digital Engineering” in their titles during that year. To better quantify the direct impact of the DoD Strategy, future work should compare this data with the number of papers that explicitly reference the 2018 Strategy document.
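One simple way to separate a genuine rise in attention from a rise in publication volume is to divide each year’s mentions by that year’s paper count. In the hypothetical Python sketch below (the numbers are invented for illustration, not taken from Figures 3 or 11), the raw MBSE count triples between 2021 and 2023 while the per-paper rate stays roughly flat.

```python
# Hypothetical per-year tallies for illustration only.
papers_per_year = {2021: 5, 2022: 6, 2023: 15}
mbse_mentions = {2021: 3, 2022: 4, 2023: 9}

def mention_rate(mentions: dict[int, int], papers: dict[int, int]) -> dict[int, float]:
    """Share of each year's papers that mention the component (0.0 if no papers)."""
    return {y: (mentions.get(y, 0) / n if n else 0.0) for y, n in papers.items()}

rates = mention_rate(mbse_mentions, papers_per_year)
print({y: round(r, 2) for y, r in rates.items()})  # {2021: 0.6, 2022: 0.67, 2023: 0.6}
```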

4.4. RQ4: Challenges and Solutions in Implementing DE

This section presents the results for RQ4: “What are the key challenges in implementing DE, and what solutions have been proposed?”. To systematize the analysis, a coding protocol was used to review each publication and classify reported implementation challenges into four core categories: Technical, Cultural, Financial, and Regulatory. A barrier was coded as present if the study’s narrative explicitly or implicitly mapped onto the definitions in Table 4.

The papers were then categorized across four main industrial sectors: defense, aerospace, manufacturing, and education, with an additional “general” category for papers that presented cross-industry frameworks or non-specific application domains. To ensure clarity, a set of inclusion criteria was developed to guide the categorization of papers into these sectors. These criteria were based on explicit sector application, institutional affiliation, or the contextual framing of the DE challenge in the paper. Some papers spanned multiple sectors, particularly those at the intersection of aerospace and defense; in those cases, papers were double counted to preserve the sectoral analysis. This sectoral aggregation provided a lens through which to view not only the prevalence of different barriers within each domain but also the context in which they would arise. For example, sectors like defense and aerospace had multiple overlapping barriers due to security requirements, highly regulated environments, and legacy infrastructure. Manufacturing and education had a different barrier profile due to operational agility, workforce training needs, and digital infrastructure maturity.

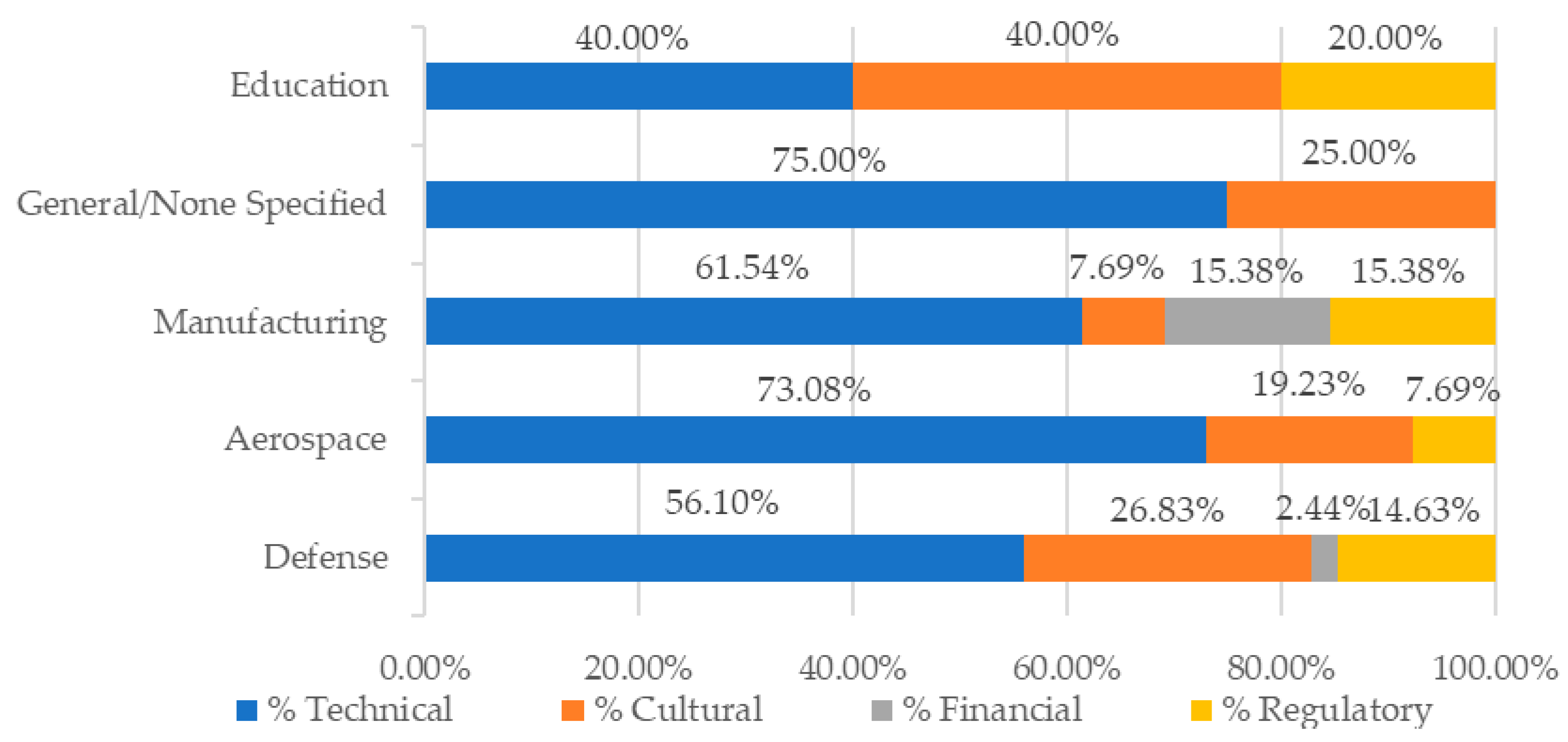

By presenting the data in this way we can see not only the frequency of each barrier category but also its prominence in each sector. This allows us to see how challenges like cultural resistance or regulatory complexity manifest differently depending on the institutional priorities and structural configuration of a sector. These patterns are key to designing sector-specific and implementation-ready mitigation strategies. The proportion of each barrier category by sector is shown in

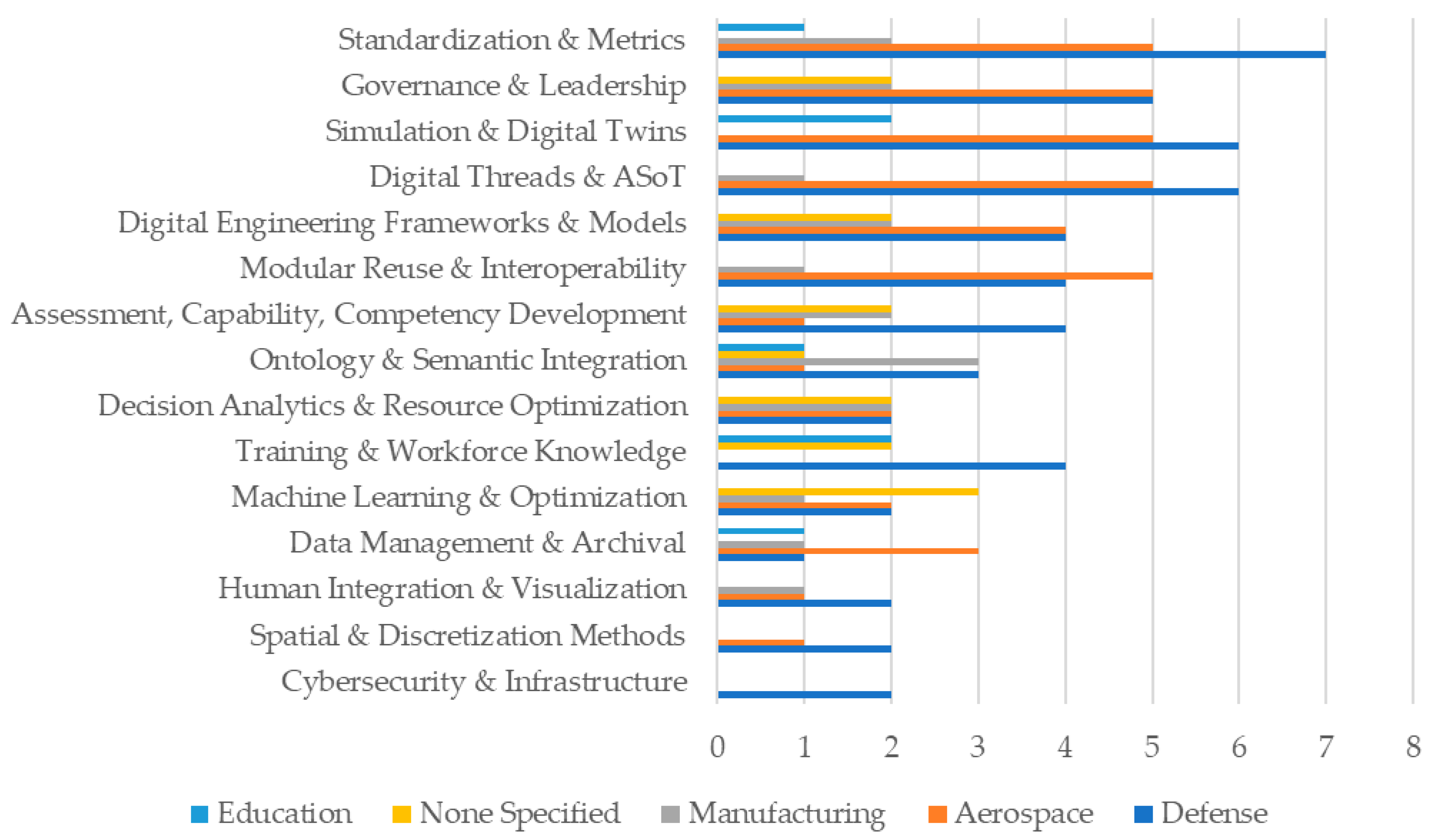

Figure 12.

To characterize solution strategies, proposed solutions were first tagged with keywords generated from term-frequency analysis, and each paper’s associated sectors were recorded. These keywords were then consolidated into a 15-category classification schema (Table 5), enabling frequency-based mapping of solution adoption across industries and clarifying which forms of solution each sector most often requires.
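The keyword-to-category consolidation can be sketched as a lookup followed by a frequency count. The mapping below is hypothetical and covers only three of the 15 categories in Table 5; counting each category at most once per paper is likewise an assumption about how “mentions” were tallied.

```python
from collections import Counter

# Hypothetical keyword-to-category mapping. The review's actual schema has
# 15 categories (Table 5); only three are sketched here.
KEYWORD_TO_CATEGORY = {
    "taxonomy": "Standardization and Metrics",
    "metrics": "Standardization and Metrics",
    "data interface": "Standardization and Metrics",
    "configuration control": "Governance Mechanisms",
    "leadership engagement": "Governance Mechanisms",
    "digital twin": "Simulation and Digital Twins",
    "simulation": "Simulation and Digital Twins",
}


def solution_frequencies(tagged_papers):
    """Count solution categories overall and per sector.

    Each category is counted at most once per paper, even if several of the
    paper's keywords map to it. Unmapped keywords are ignored.
    """
    overall = Counter()
    by_sector = {}
    for sector, keywords in tagged_papers:
        categories = {KEYWORD_TO_CATEGORY[k] for k in keywords if k in KEYWORD_TO_CATEGORY}
        overall.update(categories)
        by_sector.setdefault(sector, Counter()).update(categories)
    return overall, by_sector


# Hypothetical tagged papers: (sector, keywords from term-frequency analysis)
tagged = [
    ("defense", ["metrics", "configuration control"]),
    ("manufacturing", ["digital twin", "simulation"]),
    ("defense", ["taxonomy", "metrics", "ontology"]),
]
overall, by_sector = solution_frequencies(tagged)
print(overall.most_common())
```

Deduplicating per paper keeps a single paper with many related keywords from inflating one category, which matters when category frequencies are compared across sectors.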

Among these, Standardization and Metrics emerged as the most frequently cited solution category (15 mentions), highlighting its role as a foundational pillar of the Digital Engineering ecosystem. This solution type encompasses developing and adopting standardized metric taxonomies and data interfaces, as well as complying with domain-specific frameworks. The widespread citation of this category reflects a collective acknowledgment that interoperability, data consistency, and validation protocols are essential to DE integration. Without a shared vocabulary or set of metrics, collaboration across multidisciplinary teams becomes fractured, delaying system integration and increasing lifecycle costs.

Close behind, Governance Mechanisms (14 mentions) and Simulation and Digital Twins (13 mentions) were also highly cited. Governance Mechanisms emphasize structural oversight, leadership engagement, and quality assurance practices that facilitate model traceability, configuration control, and institutional readiness. Meanwhile, Simulation and Digital Twins offer real-time virtual feedback loops, enabling validation of system behavior under varying conditions before physical prototyping. These categories reflect a shared emphasis on codified infrastructure, process consistency, strategic oversight, and high-fidelity digital validation tools that together form the backbone of scalable, sustainable DE adoption strategies.

These trends are further visualized in Figure 13, which presents the frequency of each solution category across the reviewed literature. The bar chart reveals not only which strategies are most prominent but also the breadth of approaches being considered to support DE adoption.

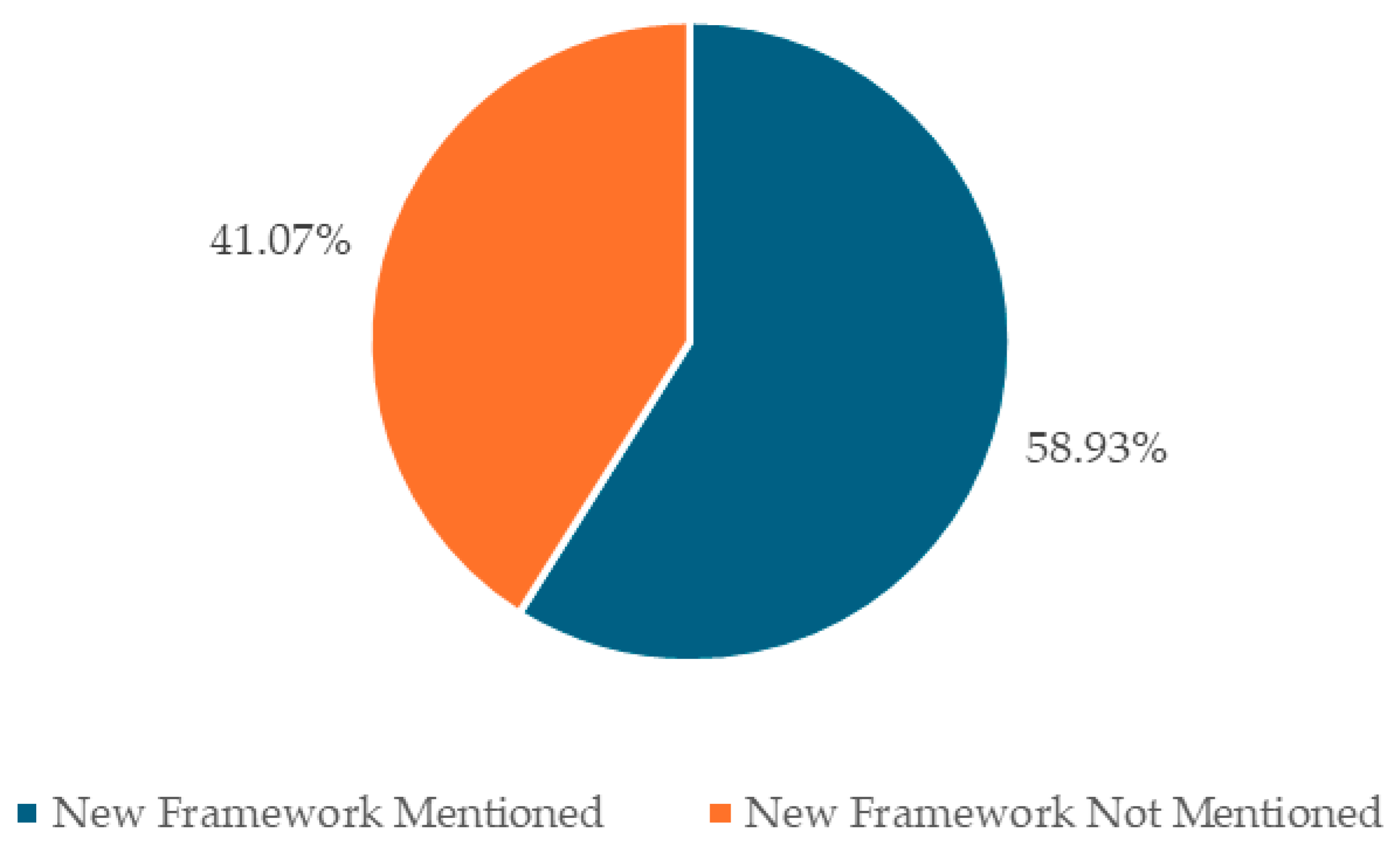

Each of the 56 papers was examined to determine whether it proposed a new methodology. For this analysis, a paper was considered to propose a new methodology if it introduced an original concept, framework, or structured process to advance DE in practice. As shown in Figure 14, 33 papers (58.9%) met this criterion, and the remaining 23 papers (41.1%) offered empirical results, discussed challenges and benefits, or evaluated existing tools without proposing a new methodology.

This finding indicates a robust level of conceptual development in the field, with over half of the literature reviewed contributing new approaches to DE implementation. The relatively high frequency of novel proposals reflects the field’s emergent and evolving nature, where frameworks are still being shaped to meet the unique demands of modern engineering systems. Even among papers that did not propose a methodology, many contributed valuable insights into recurring problems, adoption barriers, or sector-specific considerations, suggesting that the literature continues to play a critical role in identifying areas for improvement.

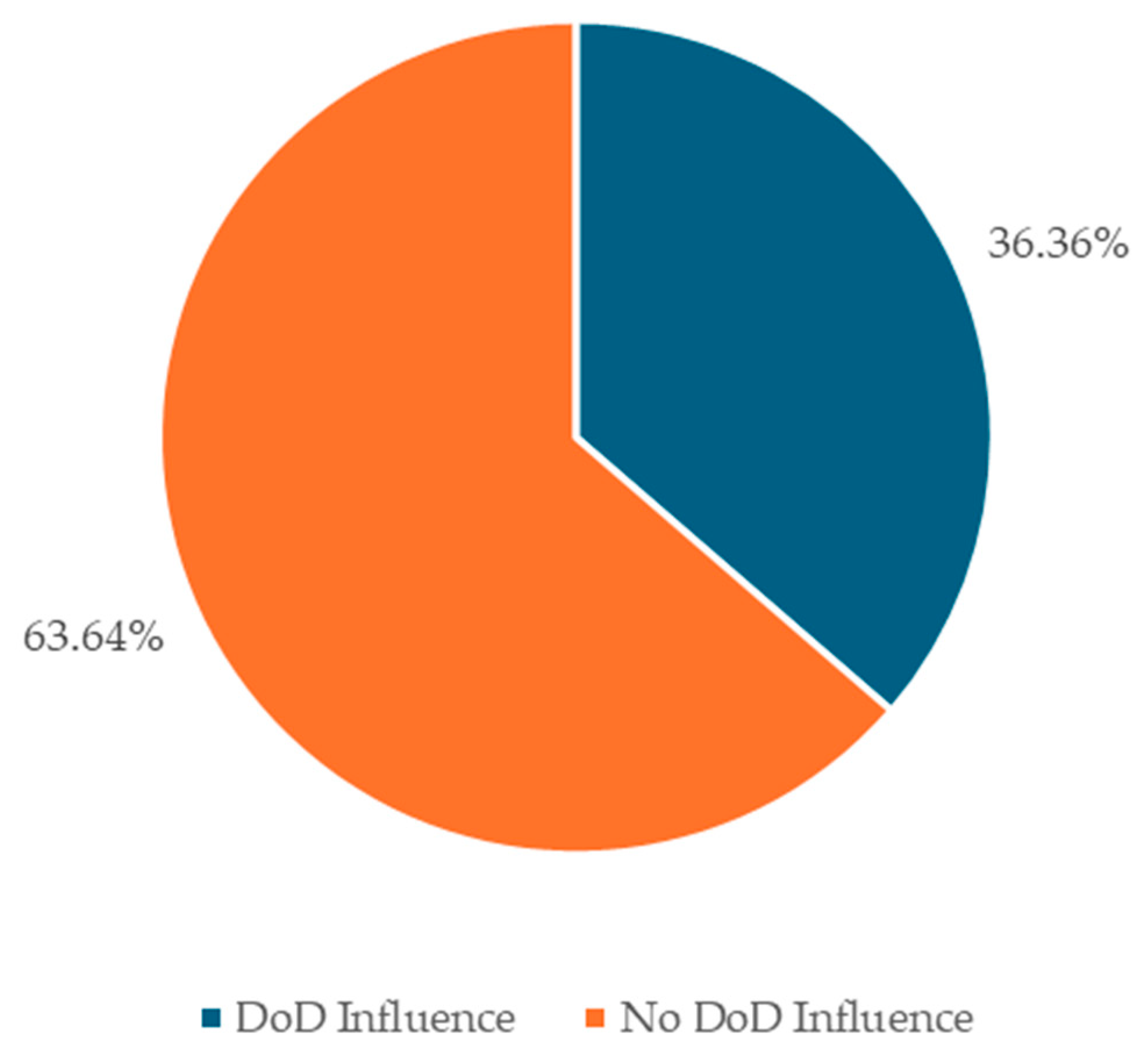

Given the DoD’s visible role in DE-related policy and programmatic initiatives, particularly through its 2018 Digital Engineering Strategy, this review also examined the extent to which the proposed methodologies may have been influenced by that document. Only explicit references to the strategy’s goals or focal areas were counted as indicators of influence. Although some papers may have incorporated principles aligned with the strategy, only those with clear, documented references were included in this analysis to ensure analytical rigor.

As shown in Figure 15, 12 of the 33 methodology papers (36.4%) explicitly cited the 2018 DoD Digital Engineering Strategy as a basis for their framework development [13, 27, 42, 43, 52, 63, 69, 70, 71, 73, 77, 81]. The remaining 21 papers (63.6%) either did not reference the strategy or pursued alternative conceptual directions. This suggests that the strategy has become an important reference point for part of the academic dialog, while many methodological contributions continue to develop independently of explicit DoD guidance.
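The percentages in this subsection rest on two different denominators: the 58.9%/41.1% split is taken over all 56 papers, whereas the 36.4%/63.6% split is taken over the 33 methodology papers only. A short sketch, using only the counts reported above, makes this explicit; the helper name `share` is illustrative.

```python
def share(part: int, whole: int) -> float:
    """Percentage of `whole`, rounded to one decimal place as in the text."""
    return round(100 * part / whole, 1)


# Counts reported in this section.
TOTAL_PAPERS = 56
METHODOLOGY_PAPERS = 33
CITING_STRATEGY = 12  # methodology papers explicitly citing the 2018 Strategy

shares = {
    # Denominator: all reviewed papers.
    "methodology": share(METHODOLOGY_PAPERS, TOTAL_PAPERS),
    "no_methodology": share(TOTAL_PAPERS - METHODOLOGY_PAPERS, TOTAL_PAPERS),
    # Denominator: methodology papers only.
    "cites_strategy": share(CITING_STRATEGY, METHODOLOGY_PAPERS),
    "no_strategy_citation": share(METHODOLOGY_PAPERS - CITING_STRATEGY, METHODOLOGY_PAPERS),
}
print(shares)
```

Measured against the full corpus instead, the same 12 papers would be about 21% of the 56, so the choice of denominator should always be stated alongside the percentage.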

Notably, four additional papers, despite not proposing a new methodology, still explicitly referenced the 2018 Strategy, indicating that its influence extends beyond conceptual contributions and informs broader discussions of DE implementation. These papers often used the strategy as a benchmark or motivation for research, even when their focus was evaluative or thematic rather than prescriptive.

Challenges were classified by sector and thematically coded across recurring categories, including technical, organizational, cultural, and process-specific issues. Solutions were then analyzed according to their frequency, thematic focus, and sectoral relevance.

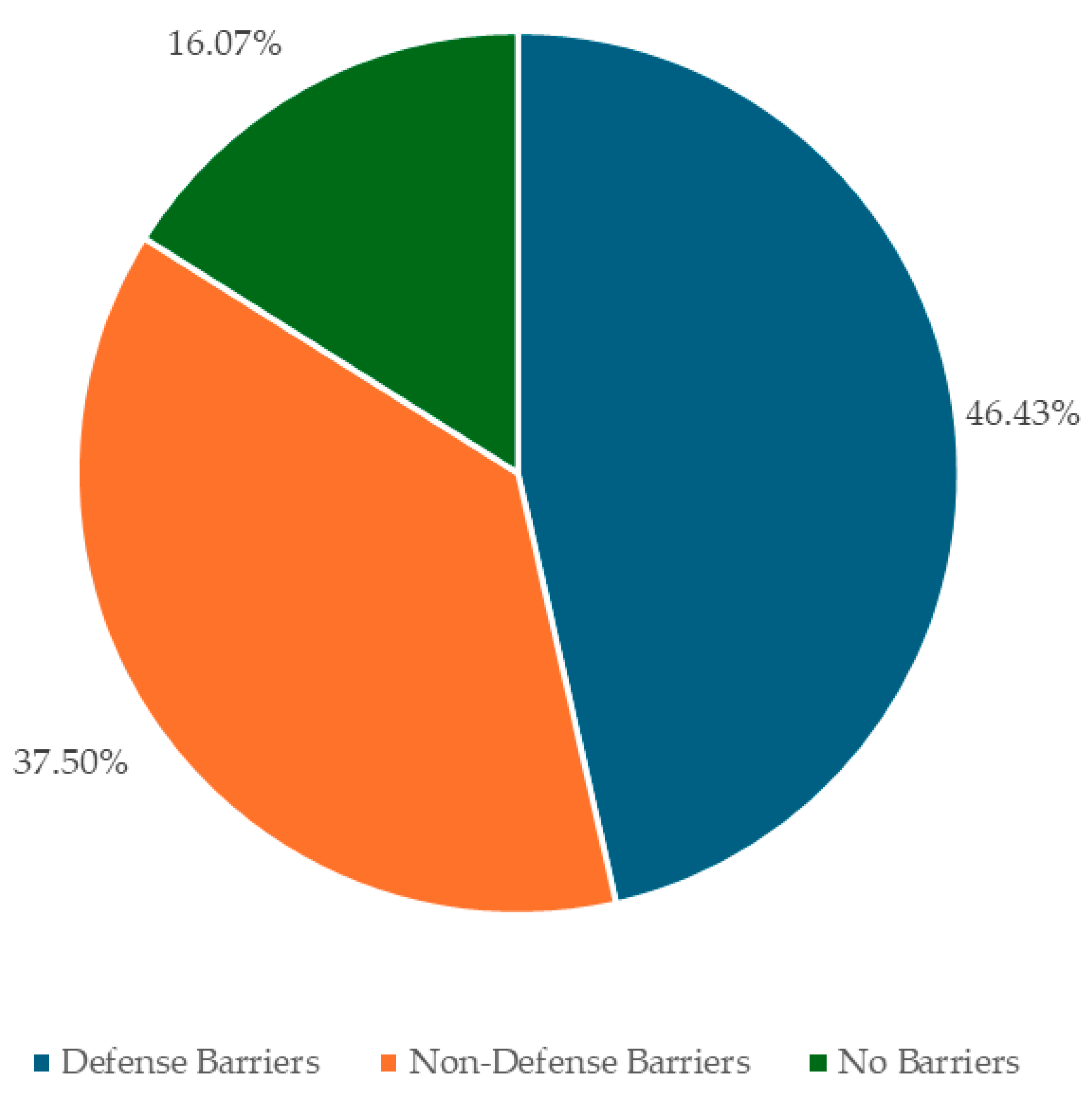

Of the 56 studies, 26 papers discussed barriers specific to the defense sector [1, 13, 27, 36, 39, 40, 41, 42, 43, 45, 52, 60, 63, 64, 65, 68, 69, 73, 74, 75, 76, 77, 81, 82, 83, 84], 21 addressed barriers in non-defense sectors [16, 37, 38, 44, 47, 48, 49, 50, 53, 54, 57, 58, 59, 61, 67, 70, 78, 79, 80, 85, 86], and 9 papers did not mention barriers at all [40, 41, 45, 52, 60, 68, 74, 76, 83]. Figure 16 shows this distribution.

Papers were categorized into sectors based on their stated focus. Defense papers were identified through direct references to DoD priorities or organizations, while non-defense papers addressed sectors such as aerospace, manufacturing, or education. Where a paper addressed multiple sectors, defense was given precedence so that each paper was counted only once; this differs from the four-sector analysis above, in which multi-sector papers were counted in each relevant sector. This binary classification clarifies how barriers differ between defense and non-defense contexts.
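The defense-precedence rule can be expressed as a small decision function. This is a sketch under stated assumptions: the indicator terms are hypothetical, and in the review the assignment was made by reading each paper’s stated focus rather than by keyword matching. Returning `None` for papers without barriers mirrors the three-way split (26 + 21 + 9 = 56) reported above.

```python
from typing import Iterable, Optional

# Hypothetical indicator terms for illustration; in the review, sector
# assignment was made by reading each paper's stated focus.
DEFENSE_INDICATORS = {"dod", "department of defense", "defense acquisition university"}


def assign_sector(focus_terms: Iterable[str], discusses_barriers: bool) -> Optional[str]:
    """Place a paper in exactly one group: 'defense', 'non-defense', or None.

    None marks papers that do not discuss barriers. Defense takes precedence,
    so a paper touching both defense and, e.g., aerospace is counted once.
    """
    if not discusses_barriers:
        return None
    terms = {t.strip().lower() for t in focus_terms}
    if terms & DEFENSE_INDICATORS:
        return "defense"
    return "non-defense"


print(assign_sector(["DoD", "aerospace"], True))
print(assign_sector(["manufacturing"], True))
```

Because the rule is ordered (barrier check first, then defense, then everything else), every paper lands in exactly one group, which is what keeps the defense and non-defense percentages below free of double counting.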

Barriers varied in frequency and type between sectors.

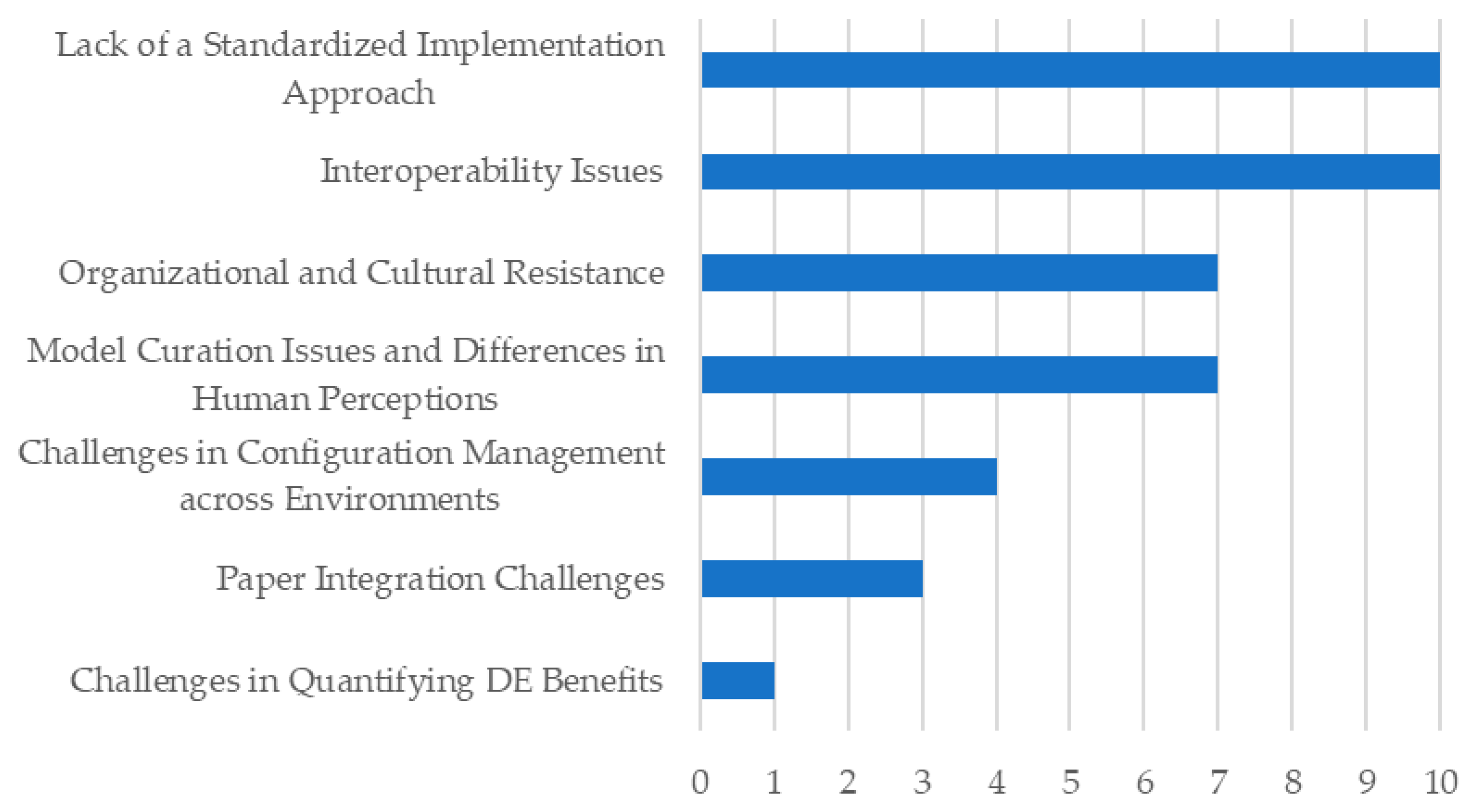

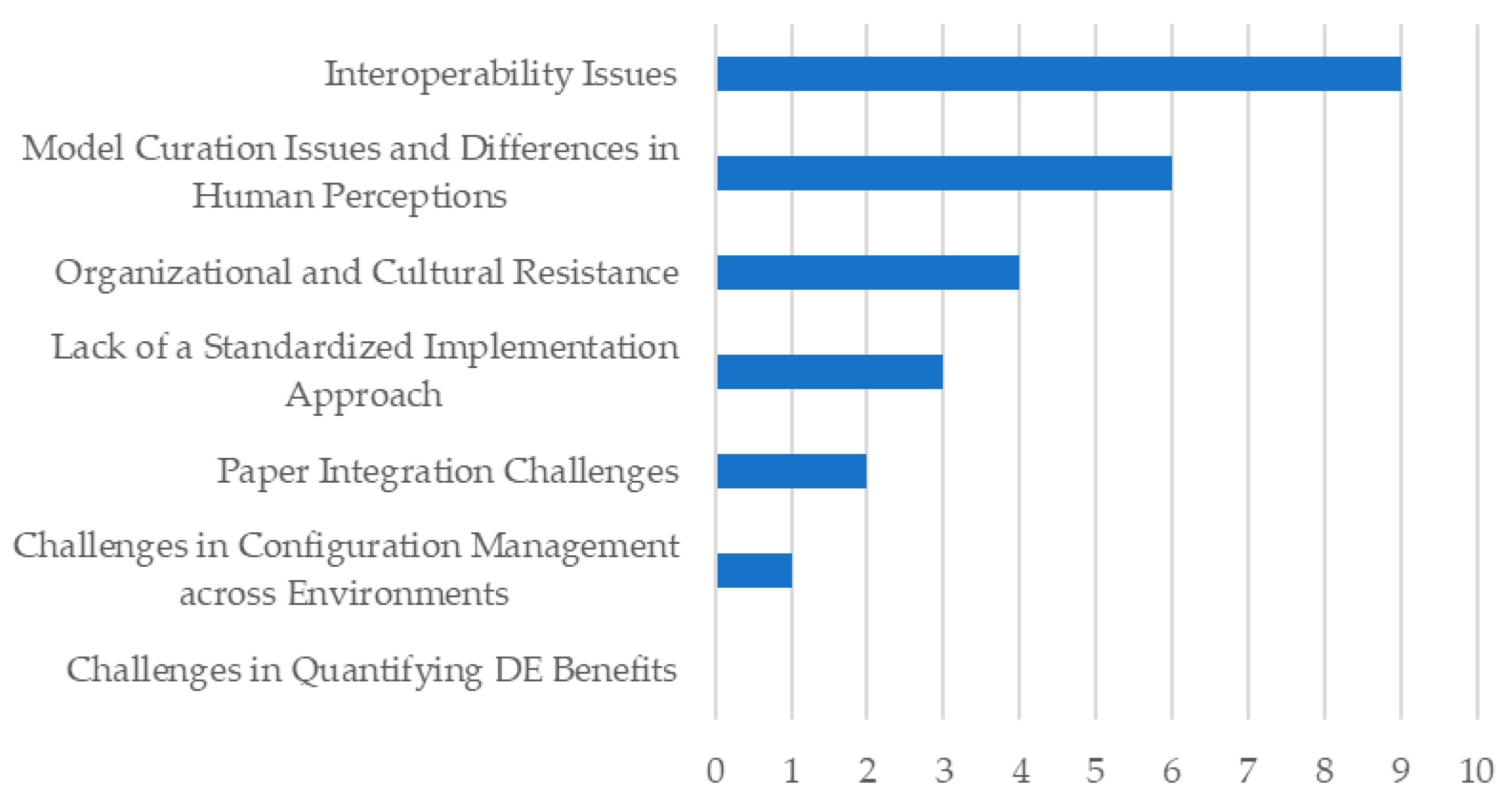

Figure 17 and

Figure 18 illustrate the most frequently cited barriers within each sector.

The most common challenge across both sectors was a lack of interoperability between tools, systems, and platforms. In the defense sector, this was mentioned in 10 of the 26 papers [27, 39, 60, 64, 68, 73, 75, 76, 82, 83], and in the non-defense sector in 9 of the 21 papers [13, 38, 48, 49, 53, 58, 59, 85, 86]. This is a significant problem given the reliance on legacy systems and the many software tools used across the lifecycle. Interoperability issues were often related to integrating different digital tools, managing proprietary data formats, and transferring models between platforms. Notably, interoperability was cited in 42.9% of non-defense papers and 38.5% of defense papers.

Another major barrier was cultural and organizational resistance to change, including inadequate training, a lack of leadership emphasis, and difficulty transitioning personnel from traditional workflows to digital environments. This was mentioned in 7 of the 26 defense papers [1, 36, 40, 43, 52, 63, 76] and 4 of the 21 non-defense papers [16, 61, 85, 86], corresponding to 26.9% of defense papers and 19.0% of non-defense papers. In these cases, the challenge was not the technology itself but the workforce’s readiness and skepticism about DE adoption. These papers argued that without sufficient cultural alignment and communication, even well-designed DE frameworks will fail.

A third common barrier was the lack of a standard approach to DE. This was mentioned in 10 defense papers (38.5%) [13, 36, 39, 40, 41, 42, 43, 69, 76, 81] and 3 non-defense papers (14.3%) [38, 67, 70]. Several papers noted that the lack of cross-industry consensus on DE best practices creates confusion during implementation. Because the underlying technologies evolve rapidly, approaches considered state of the art yesterday can become outdated today, making standardization even harder.
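The sector percentages for the three most common barriers follow from dividing each barrier count by the number of papers in the sector (26 defense, 21 non-defense). The sketch below reproduces them from the counts reported above. Because one paper can cite several barriers, these are paper-level prevalences and do not sum to 100% within a sector.

```python
SECTOR_SIZE = {"defense": 26, "non-defense": 21}

# Number of papers citing each barrier, as reported in this section.
BARRIER_COUNTS = {
    "interoperability": {"defense": 10, "non-defense": 9},
    "cultural resistance": {"defense": 7, "non-defense": 4},
    "lack of standard approach": {"defense": 10, "non-defense": 3},
}


def prevalence(counts, sizes):
    """Share (%) of a sector's papers that cite each barrier, to one decimal."""
    return {
        barrier: {s: round(100 * n / sizes[s], 1) for s, n in per_sector.items()}
        for barrier, per_sector in counts.items()
    }


for barrier, shares in prevalence(BARRIER_COUNTS, SECTOR_SIZE).items():
    print(f"{barrier:28s} defense {shares['defense']:5.1f}%  non-defense {shares['non-defense']:5.1f}%")
```

Normalizing by sector size rather than by raw counts is what allows the 9 non-defense interoperability papers to register as a higher share than the 10 defense papers.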

A smaller but still significant challenge was the difficulty of moving from paper-based documentation to fully digital environments. This paper-to-digital barrier was mentioned in three defense papers [13, 69, 81] and two non-defense papers [49, 58]. Moving from static documentation to model-based systems is not just a technological upgrade; it is a process change that requires retraining staff and addressing concerns about digital reliability. Several papers noted that clients or stakeholders often prefer traditional documents and may resist a change that feels unfamiliar or unnecessary.

Finally, challenges in model curation and configuration management were also reported. In the defense sector, four papers mentioned configuration management [40, 45, 57, 64] and seven mentioned model curation [27, 42, 60, 73, 77, 81, 84]. Among non-defense papers, configuration management was mentioned once [61] and model curation in six papers [16, 47, 50, 53, 70, 78]. These issues involved version control, data consistency, and resolving conflicting model interpretations across departments. The findings suggest that these issues are more acute in defense-related projects, which have long development timelines and complex organizational structures.

4.5. RQ5: Influence of the 2018 DoD DE Strategy

This section addresses RQ5: “To what extent do academic publications explicitly reference or align with the five strategic goals of the 2018 DoD Digital Engineering Strategy?”. Specifically, it examines how the DoD Strategy has influenced the development of research frameworks, methodologies, and best practices in DE. Investigating the extent to which academic publications refer to or align with the 2018 DoD Digital Engineering Strategy is important because the strategy represents one of the earliest and most comprehensive efforts to formalize the vision of DE, specifically targeting the DoD. By analyzing how the five strategic goals are reflected in scholarly work, we can assess whether academic research is supporting, extending, or diverging from national priorities. This alignment matters not only for advancing theory but also for ensuring that research outputs remain relevant to real-world implementation challenges, such as workforce transformation, technological innovation, and infrastructure development. Identifying which goals receive the most academic attention, and which remain underexplored, provides valuable insight into where research is reinforcing policy initiatives and where gaps exist that may hinder broader adoption of DE practices.

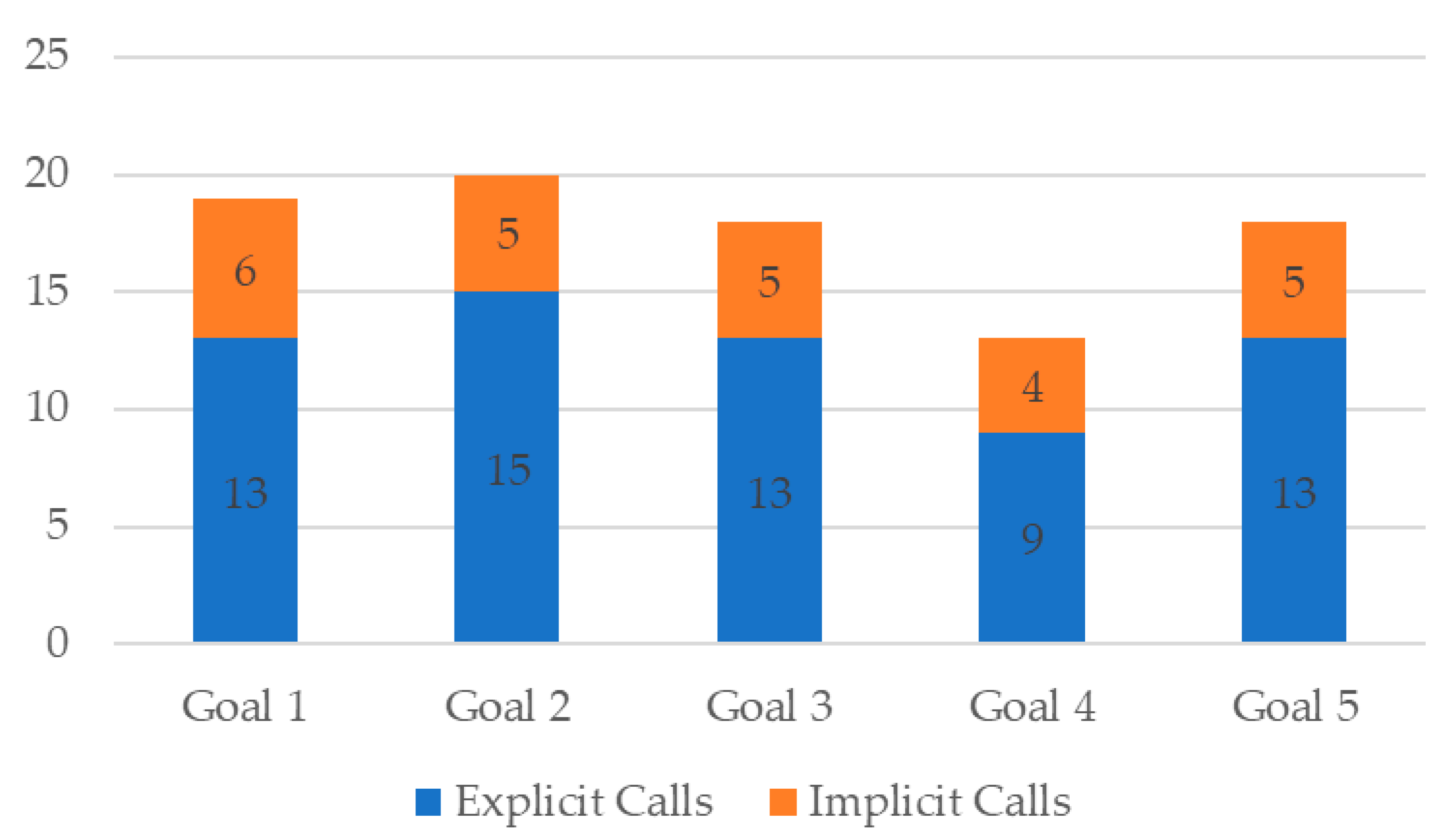

Out of the 56 reviewed papers, 20 (36%) were identified as explicitly or implicitly referring to the DoD DE Strategy. Among these, explicit references were most frequent for Goals 2 and 1, followed closely by Goals 3 and 5. In contrast, Goal 4 was the least represented.

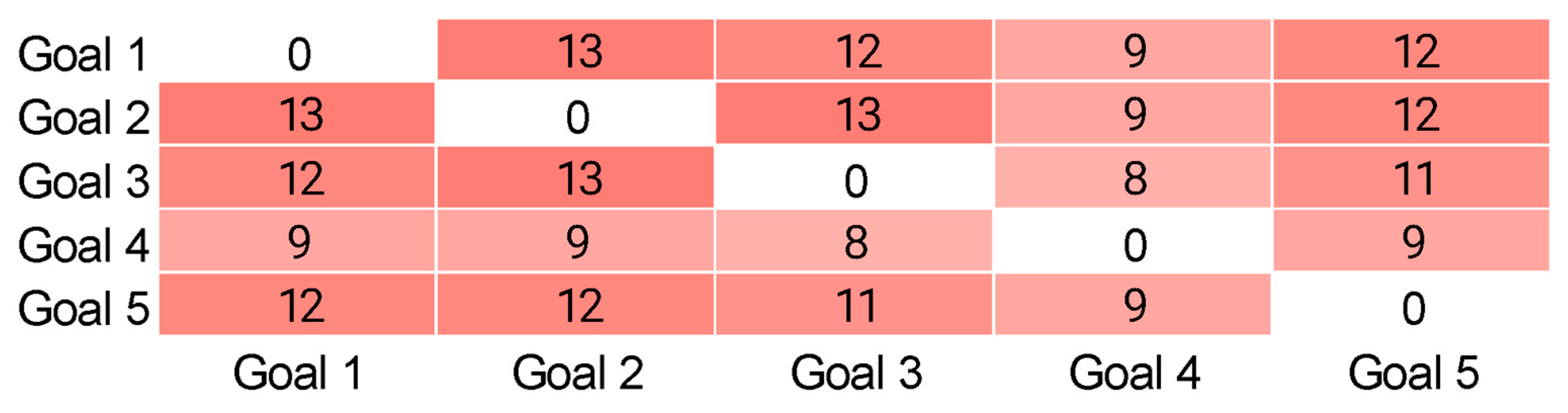

Figure 19 breaks down the explicit and implicit mentions of each goal. The distribution suggests that research has gravitated toward methodological and cultural dimensions of DE, areas where academia can directly contribute through conceptual development, case studies, and workforce training. The lower emphasis on infrastructure-related goals likely reflects barriers such as resource intensity, reliance on large-scale organizational investment, and limited accessibility of enterprise-level digital ecosystems within academic settings. Overall, the findings indicate that while the DoD Strategy has shaped research directions, particularly in advancing model-centric approaches and workforce transformation, its influence has been uneven across the five goals. This highlights both the alignment of academia with certain strategic priorities and the gaps where further engagement is needed.

Figure 20 presents a co-occurrence map of the five strategy goals, illustrating how frequently they appear together within the literature. A continuous color gradient encodes how often two goals appear together, ranging from low intensity (infrequent co-occurrence) to high intensity (frequent co-occurrence). The analysis highlights a particularly strong triad among Goals 1, 2, and 5, in which studies combine model-driven decision support with authoritative model and data backbones and with the workforce and culture needed to sustain them. This triad reflects an integrated view of DE: technical advances in modeling and data integration are seen as inseparable from the human and organizational capacity needed to enable them. Importantly, no evidence of trade-offs or conflicts between goals was identified, suggesting that the literature treats the DoD Strategy as a cohesive framework rather than a set of competing imperatives. Sector coverage is similarly concentrated: of the 20 papers that referenced the DoD Strategy, 18 are defense-focused and 2 generalize beyond defense. The dominance of MBSE is also striking: 18 of the 20 papers explicitly link their implementation of the DoD Strategy to MBSE, 1 uses MBE terminology without the MBSE label, and only 1 makes no reference to MBSE.

When broken down by goal:

Goal 1 aligns with MBSE, digital surrogates, and model curation and application, reflecting its centrality in formalizing modeling practices [13, 39, 42, 43, 52, 60, 63, 69, 70, 71, 73, 74, 76, 77, 81, 84].

Goal 2 extends into data integration, information standardization, and unified repositories, highlighting the infrastructural backbone required for effective DE [1, 13, 27, 36, 39, 42, 43, 52, 68, 69, 70, 71, 73, 76, 77, 81, 84].

Goal 3 is tied to model-based testing, digital surrogates, and advanced analytics [1, 13, 27, 39, 42, 43, 52, 58, 63, 69, 70, 71, 76, 77, 81, 84].

Goal 4 appears less frequently and is often expressed as toolchains and acquisition infrastructure [13, 39, 42, 43, 58, 60, 69, 70, 71, 73].

Goal 5 shows up in competency frameworks, curricula, and organizational change initiatives [1, 36, 39, 42, 43, 58, 60, 69, 70, 71, 76, 81, 84].
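A goal co-occurrence map of the kind shown in Figure 20 can be tabulated by intersecting the sets of papers linked to each goal. The sketch below uses the per-goal reference lists above as input; Figure 20 may rest on a different coding unit (for example, separating explicit from implicit mentions), so its values need not match this matrix exactly.

```python
from itertools import combinations

# Reference numbers listed above for each goal of the 2018 DoD DE Strategy.
GOAL_PAPERS = {
    1: {13, 39, 42, 43, 52, 60, 63, 69, 70, 71, 73, 74, 76, 77, 81, 84},
    2: {1, 13, 27, 36, 39, 42, 43, 52, 68, 69, 70, 71, 73, 76, 77, 81, 84},
    3: {1, 13, 27, 39, 42, 43, 52, 58, 63, 69, 70, 71, 76, 77, 81, 84},
    4: {13, 39, 42, 43, 58, 60, 69, 70, 71, 73},
    5: {1, 36, 39, 42, 43, 58, 60, 69, 70, 71, 76, 81, 84},
}


def cooccurrence(goal_papers):
    """Symmetric matrix: entry (i, j) = number of papers linked to both goals i and j.

    The diagonal holds the number of papers linked to each goal.
    """
    goals = sorted(goal_papers)
    matrix = {(g, g): len(goal_papers[g]) for g in goals}
    for a, b in combinations(goals, 2):
        n = len(goal_papers[a] & goal_papers[b])
        matrix[(a, b)] = matrix[(b, a)] = n
    return matrix


m = cooccurrence(GOAL_PAPERS)
for a in sorted(GOAL_PAPERS):
    print(a, [m[(a, b)] for b in sorted(GOAL_PAPERS)])
```

Raw intersection counts favor goals with long reference lists; dividing each off-diagonal entry by the smaller diagonal entry is one common way to compare pairs on a like-for-like basis.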

Overall, the co-occurrence patterns reveal that DE research aligns most strongly with methodological, data, and workforce dimensions of the DoD Strategy, while infrastructure-focused work remains relatively limited. This indicates that while academic efforts are reinforcing much of the DoD Strategy’s intent, certain goals, particularly Goal 4, may require greater attention to fully realize the integrated vision of DE.

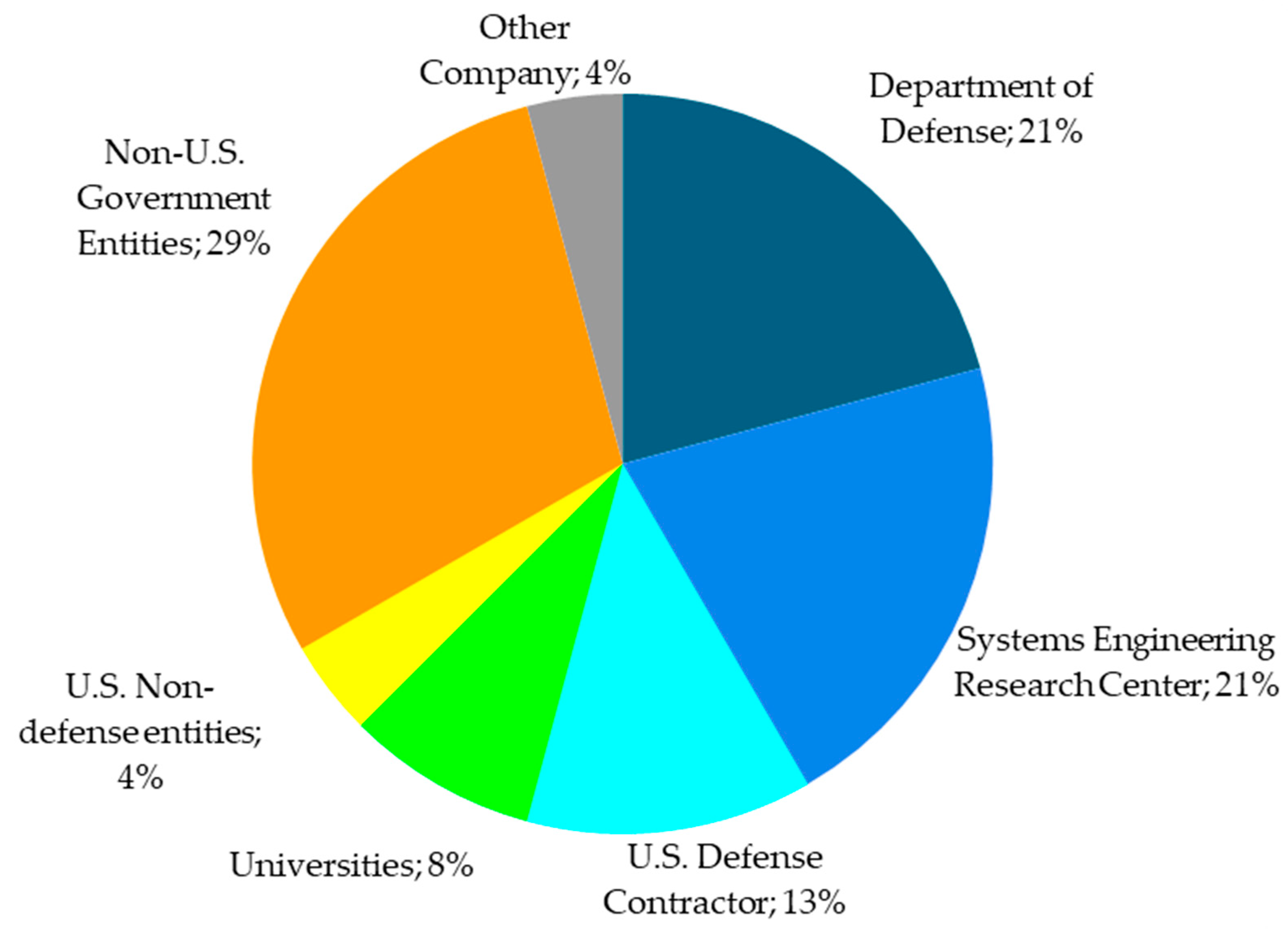

In addition to analyzing research themes and strategy alignment, it was also of interest to examine funding sources, as funding often shapes the scope, orientation, and applicability of research. Understanding who sponsors DE research provides insight into the drivers of methodological development and highlights the sectors most invested in operationalizing DE principles. Across the 56 reviewed papers, 43% reported funding support.

Figure 21 shows the overall distribution of these sources, with non-U.S. government entities (29%) representing the largest share, followed by the Department of Defense (21%), the Systems Engineering Research Center (21%), U.S. defense contractors (13%), universities (8%), and smaller contributions from U.S. non-defense entities (4%) and other companies (4%).

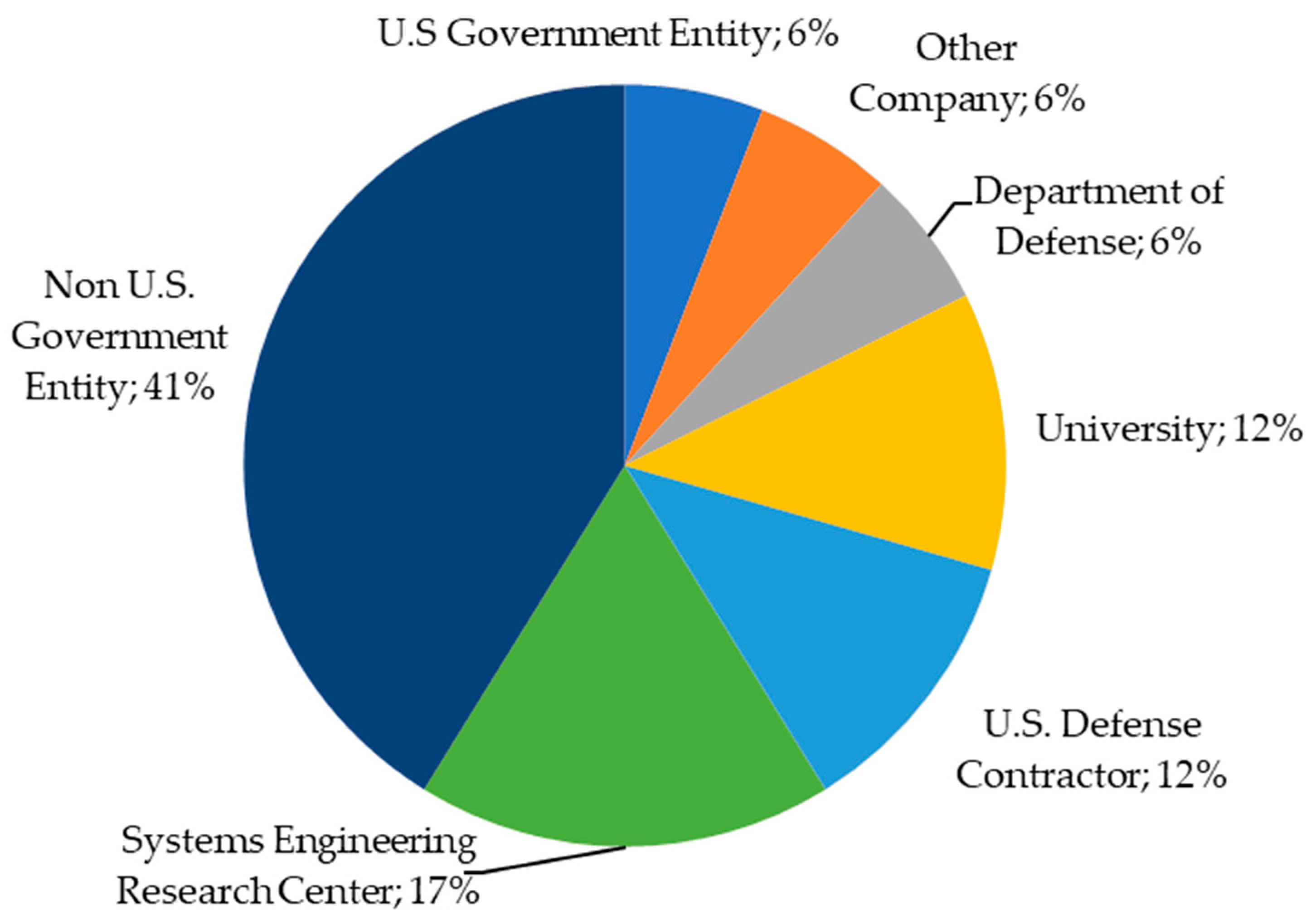

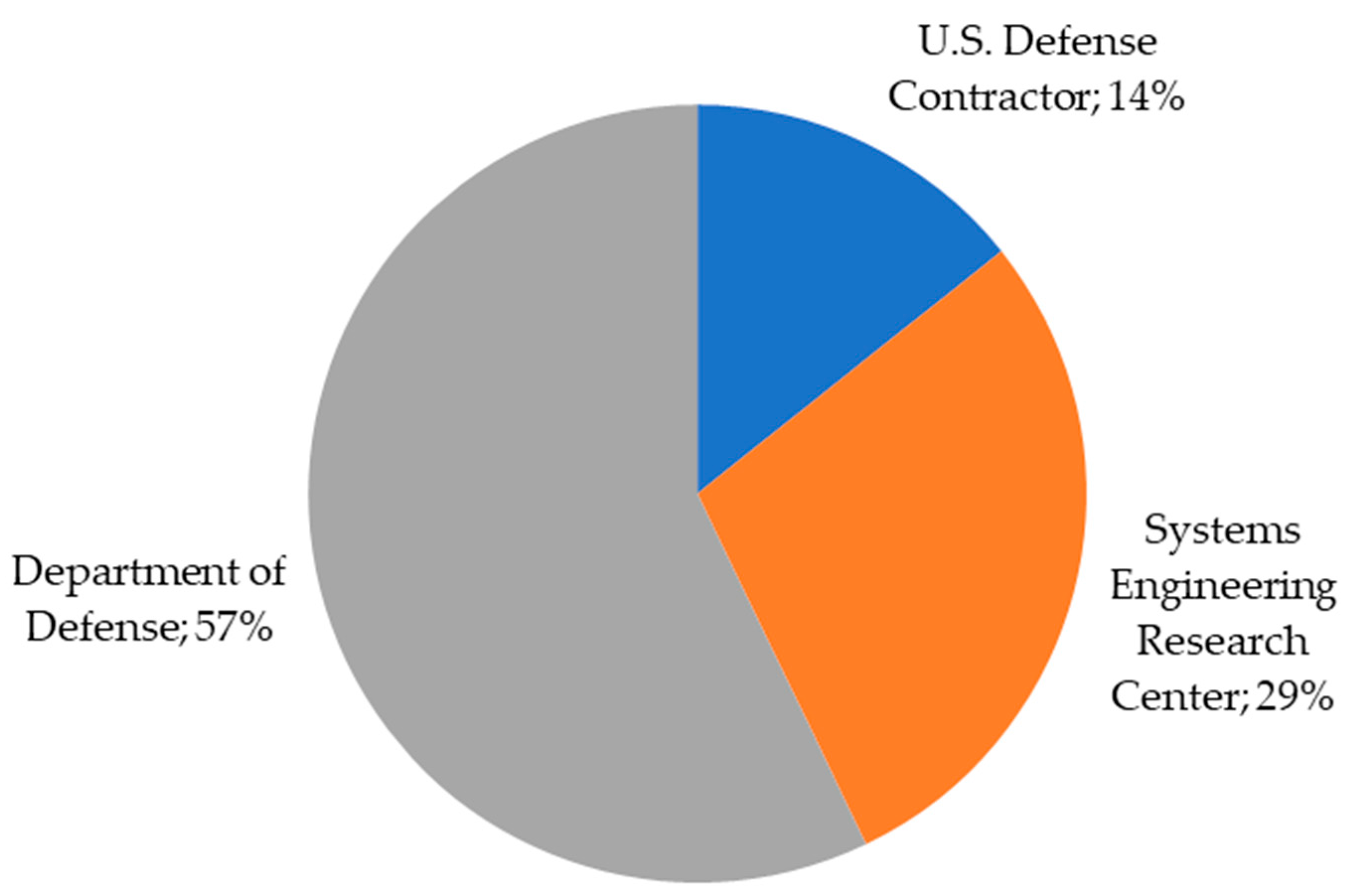

When stratifying by strategy mention, however, the funding landscape shifts noticeably. Among papers without explicit reference to the DoD DE Strategy, non-U.S. government sponsors are the largest source (41%) (Figure 22). In contrast, for papers that do reference the strategy, funding is concentrated almost entirely within defense-linked channels: DoD (57%) [36, 63, 76, 81], the Systems Engineering Research Center (SERC) (29%) [1, 77], and defense contractors (14%) [27] (Figure 23). Taken together, 86% of the funded strategy-referencing papers are directly tied to DoD or SERC funding, and 100% are defense-related when contractors are included. These patterns indicate a strong association between citing the DoD Strategy and defense-linked funding, although they do not by themselves demonstrate that the DoD Strategy caused these funding distributions.
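The strategy-referencing funding shares can be recomputed from the references cited in the preceding paragraph: four DoD-funded papers, two SERC-funded papers, and one contractor-funded paper, or seven funded papers in total. The sketch below shows that the 57%, 29%, 14%, and 86% figures are shares of these seven papers, not of all 20 strategy-referencing papers.

```python
from collections import Counter

# Funding source of each strategy-referencing paper that reported funding,
# keyed by the reference numbers cited in the paragraph above.
FUNDING = {
    36: "DoD", 63: "DoD", 76: "DoD", 81: "DoD",
    1: "SERC", 77: "SERC",
    27: "Defense contractor",
}


def funding_shares(funding):
    """Return (percentage share per source, number of funded papers)."""
    counts = Counter(funding.values())
    total = sum(counts.values())
    return {src: round(100 * n / total, 1) for src, n in counts.items()}, total


shares, n_funded = funding_shares(FUNDING)
# Combined DoD + SERC share, computed from counts to avoid summing rounded values.
dod_or_serc = round(100 * sum(s in {"DoD", "SERC"} for s in FUNDING.values()) / len(FUNDING), 1)
print(n_funded, shares, dod_or_serc)
```

Computing the combined share from the raw counts, rather than adding the already-rounded percentages, avoids compounding rounding error.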

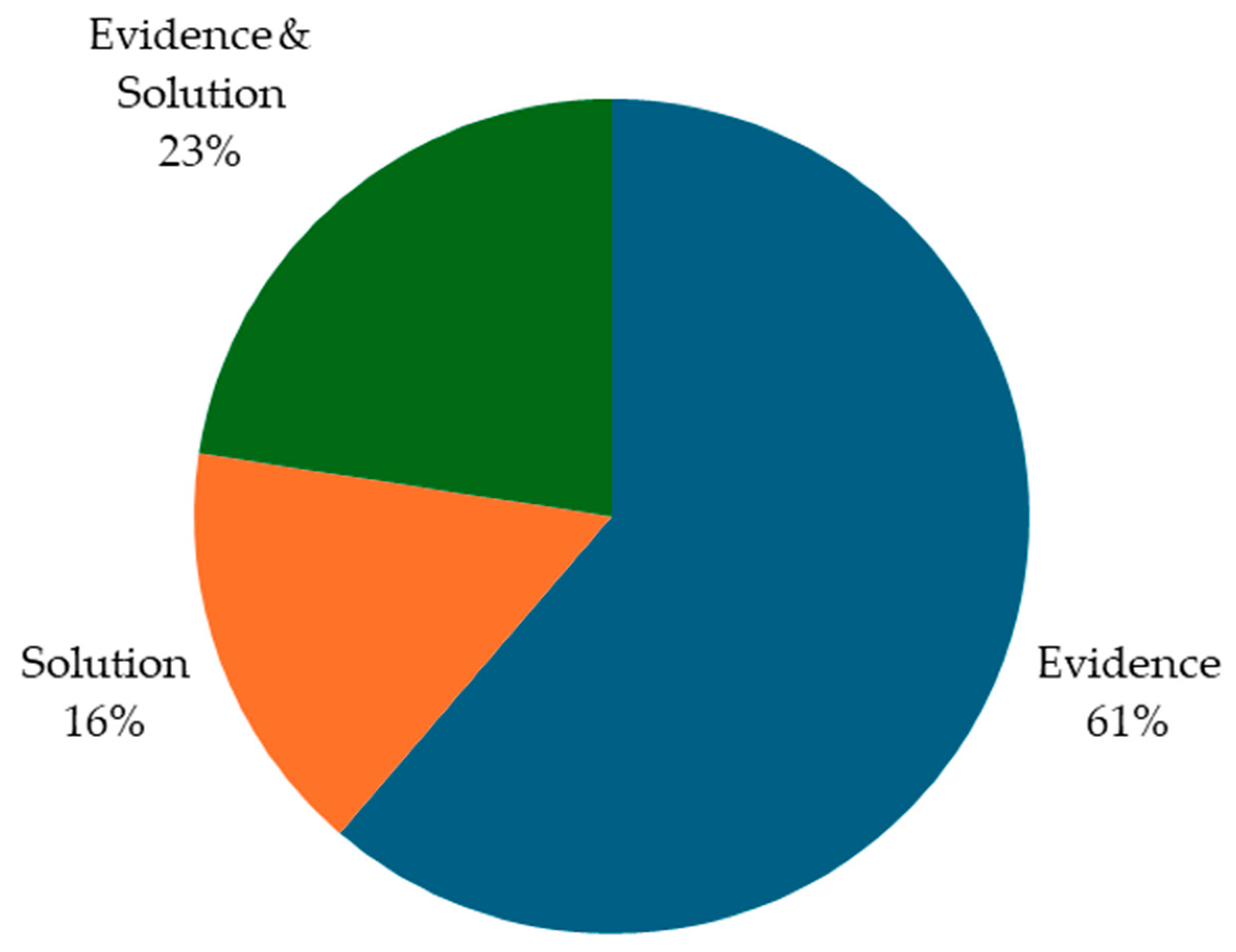

A final analytical lens considers how the DoD DE Strategy goals are used rhetorically versus operationally. Most papers that mention the strategy invoke the goals as evidence or background context to frame claims, while a smaller subset presents solution frameworks or methods that directly implement one or more goals. A residual group combines both approaches (Figure 24).

Evidence-oriented usage is seen in papers that cite the goals primarily to establish motivation or justification [27, 35, 36, 39, 41, 43, 52, 58, 67, 68, 69, 70, 71, 72, 76, 77, 81, 84, 85].

Solution-oriented usage appears in studies that develop architectures, processes, or methods aligned with specific goals [55, 56, 60, 64].

Hybrid usage is found in a small set of papers that both motivate with and operationalize the goals [13, 41, 42, 48, 73, 86].
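The three usage modes can be read as a decision rule over two coded attributes: whether a paper uses the goals as motivation and whether it operationalizes them. The sketch below is a hypothetical formalization (the function name and the example coding are illustrative, not the review’s instrument); its design point is that every paper maps to exactly one mode, so the evidence, solution, and hybrid groups are mutually exclusive by construction.

```python
from collections import Counter


def usage_mode(motivates: bool, operationalizes: bool) -> str:
    """Classify how a paper uses the Strategy goals (hypothetical decision rule)."""
    if motivates and operationalizes:
        return "hybrid"
    if operationalizes:
        return "solution"
    if motivates:
        return "evidence"
    return "none"


# Hypothetical coding results: paper id -> (motivates, operationalizes)
coded = {"A": (True, False), "B": (False, True), "C": (True, True), "D": (True, False)}
tally = Counter(usage_mode(m, o) for m, o in coded.values())
print(tally)
```

Coding the two attributes separately and deriving the mode afterward makes it straightforward to audit the classification, because any paper placed in two modes would indicate a coding error.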

Read alongside MBSE’s dominance and the limited emphasis on Goal 4, this distribution suggests an implementation gap. The DoD Strategy is widely cited to legitimize research directions, yet far fewer studies advance goal-driven architectures, toolchains, or infrastructure that operationalize the strategy in practice, particularly in the infrastructure and environment dimension [13, 39, 42, 67, 73]. In summary, MBSE remains the principal mechanism for aligning with Goals 1 and 2; Goal 5 is often framed as the organizational prerequisite for long-term adoption; and Goal 4 remains underdeveloped relative to its importance.

5. Discussion

Across the five research questions, several common themes emerge that characterize the current state of DE. Together, the findings illustrate a field strongly shaped by defense priorities, conceptually ambitious but operationally immature, and unevenly distributed across sectors. In interpreting these findings, it is important to recall that the corpus is intentionally restricted to studies that include the phrase “Digital Engineering” in the title. This focus made it possible to treat DE as an explicit construct and to analyze how authors define and bound the term in practice, but it also narrows the lens through which the field is viewed. Some organizations and sectors may implement practices consistent with DE principles under other labels, such as MBSE, digital transformation, or model-based digital thread, and those efforts are not captured here. As a result, the patterns reported in this review likely overrepresent DE-labeled, often defense-oriented work, and generalizations to the broader digitalization landscape should be made with caution.

The analysis of DE definitions demonstrates the heavy reliance on the U.S. DoD DE Strategy and Defense Acquisition University materials as primary definitional anchors. While these provide necessary structure and legitimacy to the discipline, they also confine DE’s conceptual scope within the defense and aerospace ecosystem. The lack of cited definitions in roughly one-third of the reviewed papers and the limited presence of cross-sector or international references indicate an opportunity for broader, consensus-driven frameworks. Expanding the definitional base to include non-defense and international perspectives is essential to establish a globally coherent understanding of DE.

The analysis of DE motivations and components shows that efficiency, integration, and cost reduction are the most frequently cited drivers, with MBSE and simulation emerging as the dominant technological enablers. Notably, DE is often framed almost synonymously with MBSE or simulation. This pattern suggests a prevailing misconception in the literature, treating DE as an extension or rebranding of model-based approaches rather than as a broader, integrative paradigm that connects data, models, people, and processes across the entire lifecycle. While MBSE and simulation are essential components, they represent only part of the DE ecosystem, which also includes data governance, digital threads, authoritative sources of truth, and organizational transformation. This conceptual narrowing highlights the need for the DE community to communicate more clearly that DE is a system-level strategy, not a single methodology or toolset.

Despite these conceptual limitations, the literature expresses strong confidence in DE’s transformative potential. However, much of the current work remains descriptive rather than evaluative, emphasizing anticipated benefits over measurable evidence. This underscores an urgent need for empirical research that quantifies DE’s impact on lifecycle cost, schedule adherence, and decision quality.

Implementation challenges are remarkably consistent across sectors, centered around interoperability limitations, organizational cultural resistance, and a lack of standardization. These issues manifest differently depending on context: defense and aerospace organizations struggle with legacy systems and compliance constraints, whereas manufacturing and education sectors emphasize agility, workforce training, and return on investment. Despite these barriers, the literature demonstrates a growing number of proposed frameworks, signaling an active and evolving research landscape. Yet, the persistent gap between conceptual innovation and operational realization suggests that DE remains in an early phase of adoption, defined more by its promise than by systematically validated results.

Further analysis suggests that the 2018 DoD Digital Engineering Strategy has become an important reference point in academic dialog, particularly in defense-oriented work, but its influence is neither universal nor uniform across the five strategic goals. Only 20 of the 56 papers reference the DoD Strategy, and 12 of the 33 methodology papers (36.4%) cite it as a basis for framework development. Where it is referenced, goals related to modeling practices (Goal 1), authoritative data and model management (Goal 2), and workforce transformation (Goal 5) dominate the literature, whereas infrastructure development (Goal 4) receives limited attention. This pattern indicates that academic research frequently uses the DoD Strategy to frame motivations and methodological choices in model-centric and workforce domains, while having less visibility into, or access to, the large-scale enterprise ecosystems needed to study infrastructure integration and digital continuity in depth.

From the perspective of Goal 4, the findings suggest that DE is still largely conceptualized as a modeling and data problem rather than as an infrastructure and acquisition integration problem. Very few studies describe how DE artifacts connect to enterprise software environments, contract deliverables, or configuration-controlled acquisition baselines. This highlights an implementation gap between the DoD Strategy’s infrastructure aspirations and the current research focus, and it underscores the need for more actionable guidance for organizations seeking to operationalize Goal 4.

Notably, the DoD Strategy goals are most often used as justification for research directions and less often as the backbone of end-to-end operations that connect environments, governance, model curation, cybersecurity, and workforce change. The near-term opportunity lies in publishing the “plumbing” of DE operationalization: defining service boundaries for the authoritative source of truth (ASoT), establishing model curation cadence and governance roles, and identifying minimal standardized outcome measures that enable cross-study comparison and benchmarking.

Sectoral differences and funding asymmetries further reinforce these patterns. Defense and aerospace studies focus on compliance, configuration management, and curated repositories supported by dedicated funding streams, whereas non-defense sectors prioritize interoperability and faster time-to-value within tighter capital constraints. When the DoD Strategy is cited, funding overwhelmingly originates from defense-linked sources, which appear to facilitate deeper investments in governance and infrastructure. The shared language and structure introduced through defense-oriented initiatives have likely advanced DE maturity, yet they also risk overfitting solutions to one sector’s governance and acquisition context.

Beyond its technical and methodological contributions, the findings of this review highlight the role of DE as a foundational enabler of digital transformation in engineering organizations. DE provides the model-centric, data-driven infrastructure that supports shifts in how engineering work is coordinated, communicated, and governed. By establishing authoritative digital artifacts, continuous data flows, and integrated lifecycle models, DE helps organizations transition from document-oriented processes toward collaborative, model-based practices. These technical shifts have meaningful organizational implications: they facilitate cross-functional alignment, enable faster decision-making, and promote a culture of transparency and traceability. As organizations adopt DE practices, they also confront necessary cultural and procedural changes, such as redefining roles, restructuring workflows, and developing new competencies around digital artifacts. In this way, DE functions not only as a set of tools but as the technical engine that accelerates and stabilizes broader digital transformation efforts. Recognizing DE’s dual role, as both a methodological framework and a catalyst for organizational change, provides a more comprehensive understanding of its impact and reinforces its strategic importance in contemporary engineering practice.

Taken together, the findings from RQ1–RQ5 point to two priorities for the DE community. First, broaden its reference points beyond defense, including sector-neutral standards, metrics, and exemplars, so that guidance transfers across industries. Second, shift from proposing frameworks to documenting operational implementations. Doing so would help close the gap between rhetoric and practice, show where DE works, how well, and under what conditions, and accelerate the field’s progression from concept-forward claims to evidence-based practice.

6. Conclusions and Future Research

This systematic literature review consolidates three decades of research on DE, tracing its evolution from conceptual foundations to emerging operational frameworks. By examining DE definitions, motivations, components, challenges, and how the 2018 U.S. DoD Digital Engineering Strategy is reflected in 56 peer-reviewed studies, this work provides an integrated understanding of how DE has evolved as both a discipline and a practice.

While DE is widely recognized as a transformative enabler of lifecycle integration, a noticeable portion of the literature approaches DE primarily through the lenses of MBSE and simulation. This focus is understandable given their foundational roles in model-centric engineering; however, it also reveals a tendency to narrow the interpretation of DE’s broader intent. In practice, DE encompasses far more than modeling: it integrates data management, digital threads, authoritative sources of truth, automation, and organizational change. Emphasizing this wider systems perspective can help the community move toward a more comprehensive and balanced understanding of DE as a unifying engineering paradigm rather than a collection of specific methods or tools.

The review also highlights the uneven distribution of DE maturity across sectors. Defense and aerospace continue to dominate, benefiting from structured governance frameworks and sustained funding, while manufacturing, education, and other domains are still in exploratory stages. This imbalance highlights the importance of developing cross-sector frameworks that adapt DE principles to different operational realities rather than replicating defense-specific solutions.

Across the corpus, empirical evaluations of DE in operational settings remain relatively scarce compared to conceptual frameworks, reference architectures, and position papers. As a result, many of the reported benefits of DE are supported more by reasoned argument than by systematic evidence. Future work should therefore move beyond proposing new frameworks and taxonomies toward studies that design, implement, and rigorously evaluate DE solutions in real programs. This includes comparative analyses against conventional practice and quantification of impacts on lifecycle cost, schedule performance, quality, and risk. Specifically, upcoming work should: (i) develop sector-neutral DE maturity models that account for differences in scale, risk tolerance, and digital infrastructure; (ii) advance interoperability standards and open data ecosystems, ensuring that DE implementations remain scalable and vendor agnostic; (iii) conduct empirical case studies of programs that attempt to embed DE assets into acquisition and sustainment processes, particularly in relation to enterprise toolchains and configuration-controlled baselines; (iv) develop and evaluate reference architectures and integration patterns that link MBSE environments, configuration management systems, and enterprise data services; (v) create methods and metrics for assessing integration maturity and digital continuity across the lifecycle; and (vi) invest in workforce and education initiatives that strengthen DE competencies across disciplines, bridging the gap between conceptual understanding and practical application.

By addressing these priorities, DE can evolve from a sector-specific strategy to a globally recognized engineering paradigm that unites digital models, authoritative data, and integrated decision-making across the full system lifecycle. Ultimately, realizing this vision will require collaboration across academia, industry, and government to align conceptual progress with operational maturity, transforming DE from a promising idea into a proven, trusted, and scalable practice.