1. Introduction

Maintaining consistency in complex systems is a continuous challenge that requires active coordination. In data-intensive systems, inconsistencies may arise in formats, resolutions, latencies, structures, access, and other aspects. When such hurdles arise, the losses due to integration delays or failures can be severe. Data silos have been blamed for delays and unnecessary barriers that negatively impact patients with rare diseases and slow drug development [1], as well as for disruptions of critical workflows [2]. In this work, we explore the potential of systematically leveraging generative artificial intelligence (AI) to mitigate such issues and expedite the fusion process to the point of demand. The goal is to ultimately enhance preparedness for emerging data silos and modalities, and to expedite statistical inference and experimentation through a streamlined data pipeline.

There are many technical and organizational explanations behind the emergence of a data silo. In this work, we focus on the multimodality aspect, as it is fundamental to the emergence and resolution of a new silo to handle different or emerging modalities, which in turn may pose the challenge of latent integration. Establishing a connection between inherently differing data can be less intuitive. However, recent advances in machine learning embeddings and diffusion algorithms [3,4] have led to novel methods for handling multimodality, encompassing both structured and unstructured data. For example, image-to-text captioning and mappings continue to improve, producing reliable results in domains such as robotics and image retrieval [5].

Here, we propose an iterative strategy for integrating newly emerging silos as they arise. Building on recent advances in multimodal processing, we demonstrate how such models can significantly accelerate integration, while also preventing delays and eliminating key obstacles to achieving an efficient real-time response. By following a principled system design, it becomes possible to establish and sustain a dynamic fusion layer that provides robust support for decision-making at the critical milestones of system evolution.

The proposed iterative process begins with the initialization of a unified metamodel upon the introduction of the first silo. At this stage, reasoning procedures infer the schema and establish normalization as a prerequisite to full integration. Once this foundation is prepared, the variables, entities, and relations of each newly introduced silo undergo an additional reasoning step to evaluate their alignment with the existing unified metamodel. The process identifies suitable candidates for mapping new elements; when alignment proves insufficient, the unified metamodel is extended to incorporate the necessary concepts. In cases involving the removal of a previously involved silo, the reasoning process assesses the implications of deleting or modifying the remaining structures. After each cycle, a revised version of the metamodel is generated and preserved, thereby documenting both its evolution and the progression of the unification process over time.

By exposing such a procedure to multimodal inference, reasoning over the features collected from silo databases can make derived knowledge more accessible through advanced query procedures. With intelligent augmentation of missing priors, the adaptive fusion utilizes previously learned architectures to compensate with quantifiable accuracy and transparent reasoning. The formal grounding to recognize the multiple layers allows for a combined neuro-symbolic approach to the inherent complexity in data growth. Executing complex queries can benefit from inference on multiple levels, starting from the schema and combining observed data with prior knowledge in generative models.

In the following sections, we discuss background on existing techniques for data integration and schema inference. We also discuss recent AI models that can be applied to achieve and enhance this goal. In Section 3, we describe the methods in which multimodality support can be leveraged. We provide the foundational mathematical formulation in Section 4. In Section 5, we provide a detailed description of the flow and outline the key implementation aspects. In Section 5.4, we demonstrate the feasibility of the results with examples using real data. Section 6 demonstrates the approach more empirically with additional results for validation and quantification of accuracy. Having shown how to maintain consistency in complex data systems in the face of silo emergence, we consider implications for the management of complex systems more generally. We discuss the problem within the context of a System of Systems (SoS), where siloed databases share SoS traits (such as independence, distribution, and emergent behavior) but also pose unique integration challenges.

2. Background

We present an overview of methods used in the existing literature for data integration and statistical inference. We then provide a brief background on the candidate AI models that have recently achieved substantial improvements in addressing multimodality.

2.1. Data Integration

There have been numerous techniques in the industry for handling large datasets with heterogeneous shapes and topologies, utilizing data virtualization and management tools. Data warehouses, lakes, buckets, and meshes have been widely used in practice to provide speed and efficiency in both construction and usage. Each product aims to provide specific benefits tailored to specific needs, according to well-established trade-offs. Some offer products to speed up business intelligence packages while maintaining stronger governance and compliance [6]. Others provide more flexible object storage with less restrictive techniques, such as open file formats [7]. Several approaches have emerged for handling table formats, columns, schemas, and typing. For example, Apache Iceberg [8] provides schema evolution for large analytics datasets in data lakes, while Snowflake Open Catalog [9] serves as a catalog to facilitate reading, writing, and other types of queries across different engines, thereby increasing interoperability. Different solutions enforce different rules. Some support auto-detection of features such as types and formats. Solutions are typically created to address specific emerging needs that can vary over time.

Fundamentally, these solutions employ a range of underlying technical, statistical, and algorithmic methods to manage large-scale operations and facilitate seamless growth. Probabilistic models provide type and schema inference using Bayesian networks [10]. Other forms use a logic-based system and constraint-solving based on observed data, leveraging satisfiability solvers [11]. Casting databases as graphs is also used to enable neural network methods [12] for signal propagation, thereby enabling learning and prediction. Matching entities and columns across different datasets [13,14] has been examined through techniques that quantify similarity and learn and compare distributions. A variety of logic-based inferences have been applied to establish mappings and examine semantic consistency across domains [15].

2.2. Applicable AI Models

Recent advances in various AI models can be leveraged to further facilitate the integration of data across systems, thereby enabling the construction of unified views. Transformer-based models with integrated attention mechanisms [4] can facilitate semantically rich reasoning by extracting features across diverse data topologies, including text and images. Embeddings of different data and system representations into a unified form (e.g., Word2Vec [16]) enable alignment, search, and quantification of similarity (e.g., cosine similarity for text [17]). Neural auto-encoders [18] can produce a variety of statistical checks and insights, assisting in restoring data quality and handling growth. Generative adversarial networks (GANs) [19] generate high-quality synthetic data that can be utilized in data augmentation. Diffusion models [3] have the potential to substantially extend existing data integration techniques and methodologies by enabling the seamless generation and synthesis of multimodal data. They can be used to structure various modalities into an organized textual description and to augment missing modalities, providing a consistent and unified view for users and decision-makers. When connected to live data streams, whether from social media feeds or security imagery, these models can mitigate barriers in interpretation and transformation.

In this work, we aim to leverage these advances for complex reasoning tasks involving segregated and heterogeneous data silos. Our goal is to achieve reliable data integration and more advanced knowledge extraction while accounting for variability in confidence thresholds from generative models. Some works have emerged to accommodate such variability and add verification accounts, such as claim verification [20] and retrieval-augmented generation [21,22]. In our proposed framework, we formalize a distinction between prior knowledge and latent data. We then demonstrate, using public datasets, the use of AI models for data integration and their reasoning capabilities in a controllable and transparent manner.

3. Methods

3.1. Ontological Schema Inference

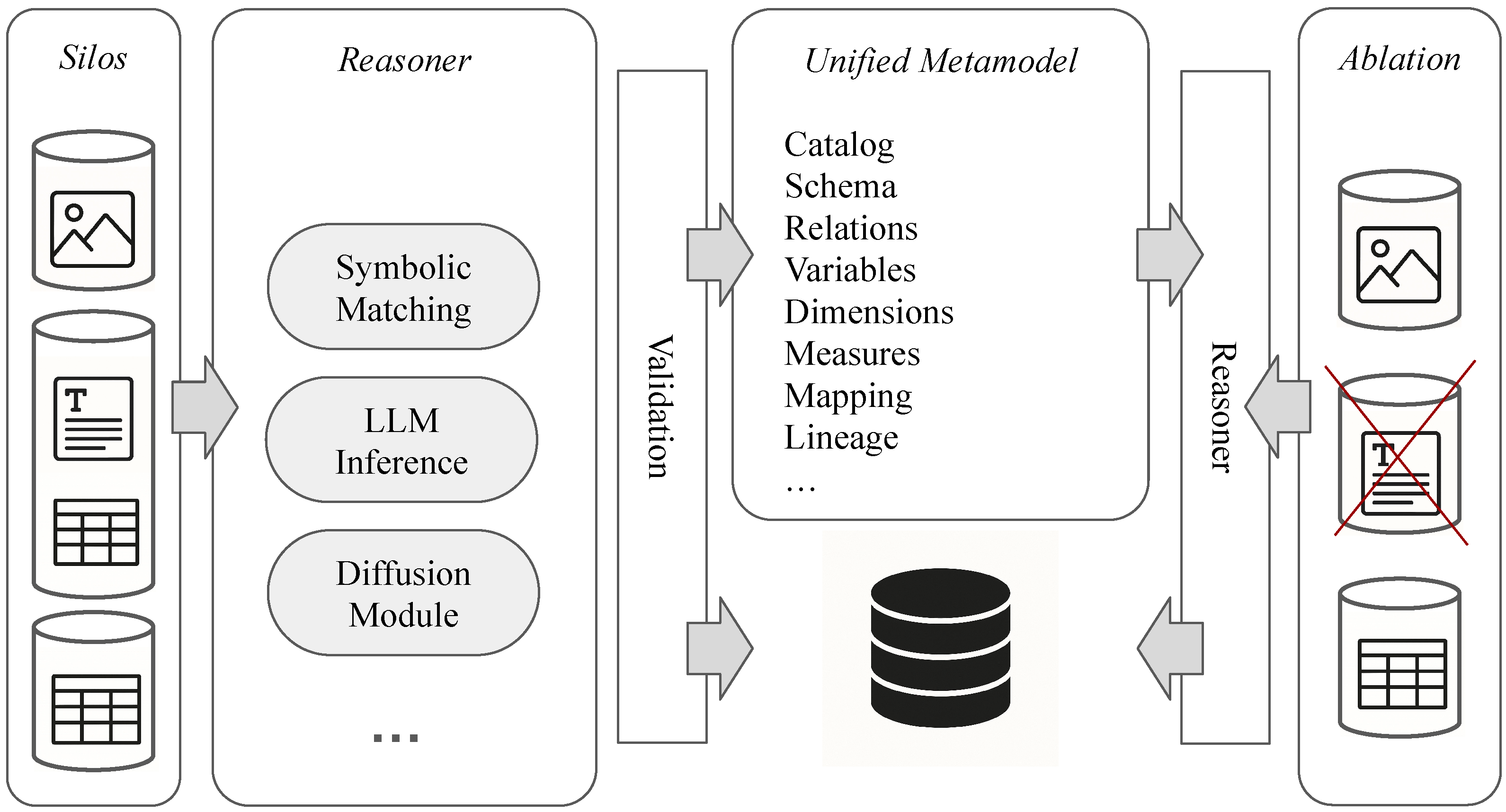

Figure 1 illustrates the proposed integration strategy. Each silo first undergoes a schema construction phase that includes data cleanup, normalization, and, where necessary, imputation (i.e., the correction of missing data). Once prepared, the newly inferred schema is aligned and mapped against the existing unified store. During this stage, the reasoning engine identifies potential joins, which are then validated to ensure global consistency with the system’s overarching design rules. The outcome is a fully specified and deployable encoding of the unified metamodel, now extended to incorporate the most recent silo.

Reasoning about an interconnected schema can be challenging, especially as it grows in size and complexity. Evolving changes and requirements necessitate accurate representations and handling in the system. Delays or discrepancies in capturing such requirements can be costly.

Figure 1 shows an overall view of a streamlined, multimodal-aided automation in the AI model. The emerging data, tables, and variables can then be seamlessly integrated into the existing system while enabling traceability.

We demonstrate this inference using an LLM (large language model) to initialize the schema by reasoning about objects, data types, and relational semantics, either using the entire dataset or a subset of the given samples. Once inferred, the schema can evolve automatically to account for emerging data that were not available or deemed irrelevant during initialization. Additionally, the approach facilitates exploratory analysis of unrelated domains (e.g., healthcare, agriculture, finance, supply chains). The latter may not necessarily yield an integrated schema, but it could provide valuable insights into the data and latent correlations and expedite statistical studies and analytics. It could also be used for a domain branch off where a new schema can be fitted to serve different needs in a well-defined cycle.

3.2. Format Heterogeneity

With the preliminary schema construction in place, we explore the fusion across heterogeneous domains (e.g., healthcare and supply chains), various formats (e.g., CSV, XML, JSON), and diverse structures (e.g., text and images). At this stage, basic symbolic reasoning might be insufficient. Other models (e.g., GANs and diffusion models) might be necessary to address multimodality.

Our approach is to guide the generic AI model toward extracting more relevant, semantically rich alignment by combining inference and validation through a multi-layer scaffold. By doing so, the framework lays the foundation for integrating live data streams in heterogeneous formats, enabling the discovery of latent mappings and relationships. For example, the established unified metamodel can account for connections to publicly available data streams. Then, the dynamic formation and fusion of silos can be utilized to integrate these streams in the same manner, leveraging prior knowledge in other models to learn and discover their content and structure. Leveraging multimodality in this way benefits many applications, such as combining sensor feeds. It can also help handle missing data, especially in real-time environments where some nodes or data sources may not function as expected.

4. Mathematical Formulation for the Evolving Unified Metamodel

We describe the underlying mathematical formulation of this approach in two parts. The first part describes the foundational formalism, while the second part describes the methodology and the potential uses of prior knowledge to enrich the data fusion procedure for the data under study.

4.1. The Foundational Formalism

4.1.1. Defining Silo as a Typed Information Space

We model each data silo as a typed multi-dimensional space (e.g., a tensor or a manifold), and the integration process as a cognitive expansion of a unified metamodel space that grows through alignment, transformation, and composition. Each silo $S$ is represented as a tuple

$$S = (E, A, \tau, \iota),$$

where $E$ is the set of entities (or records, objects, etc.), $A$ the set of attributes, $\tau$ a typing function assigning each attribute a data type or modality (e.g., numeric, text, image, graph, acoustic, electromagnetic, electrochemical, etc.), and $\iota$ is an interpretation function providing semantic context (e.g., units, ontology tags, distribution, probabilistic models, etc.).
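As an illustration only, the silo tuple described above can be sketched as a small Python data structure. The class name `Silo`, its field names, and the sample record are assumptions made for this sketch and are not taken from the paper's implementation.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Silo:
    """A data silo as a typed information space (illustrative names)."""
    name: str
    entities: List[Dict[str, Any]]   # records or objects
    attributes: List[str]            # attribute names
    typing: Dict[str, str]           # attribute -> data type or modality
    # attribute -> semantic context (units, ontology tags, ...)
    interpretation: Dict[str, Dict[str, Any]] = field(default_factory=dict)

    def validate(self) -> None:
        """Check that every attribute is typed and records use known attributes."""
        untyped = [a for a in self.attributes if a not in self.typing]
        if untyped:
            raise ValueError(f"untyped attributes: {untyped}")
        known = set(self.attributes)
        for record in self.entities:
            extra = set(record) - known
            if extra:
                raise ValueError(f"unknown attributes in record: {sorted(extra)}")


# Hypothetical sample record; the values are made up for illustration.
availability = Silo(
    name="availability",
    entities=[{"commodity": "apples", "year": 2020, "lbs_per_capita": 17.5}],
    attributes=["commodity", "year", "lbs_per_capita"],
    typing={"commodity": "text", "year": "numeric", "lbs_per_capita": "numeric"},
    interpretation={"lbs_per_capita": {"unit": "lb/person/year"}},
)
availability.validate()
```

Keeping the typing and interpretation functions as plain dictionaries makes it straightforward to serialize a silo description into a catalog entry.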

4.1.2. Unified Metamodel Space

Across all emerged silos, the unified metamodel evolves as:

$$\mathcal{U} = (\mathcal{E}, \mathcal{F}, \mathcal{R}),$$

where $\mathcal{E}$ is a set of canonical entities, $\mathcal{F}$ an extensible feature space, and $\mathcal{R}$ a set of relations between entities, features, and modalities. Each silo $S_i$ is embedded into this space via an integration map

$$\phi_i : S_i \to \mathcal{U},$$

which reconciles its schema with the unified metamodel schema.

4.1.3. Cross-Silo Correspondence

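Given two silos $S_i$ and $S_j$, with attribute sets $A_i$ and $A_j$, integration requires discovering structural correspondences

$$C_{ij} \subseteq A_i \times A_j,$$

where each element $(a, b) \in C_{ij}$ associates an attribute $a$ in $S_i$ with an attribute $b$ in $S_j$ through a transformation

$$\lambda_{ab} : a \mapsto b,$$

where $\lambda_{ab}$ can represent unit conversion, normalization, or modality transformation.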
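A minimal sketch of one such correspondence, assuming the transformation is a simple unit conversion. The `Correspondence` class, the attribute names `weight_kg` and `weight_lb`, and the helper `apply_correspondence` are hypothetical and serve only to make the idea concrete.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Correspondence:
    """Links a source attribute to a target attribute through a transformation."""
    source_attr: str
    target_attr: str
    transform: Callable[[float], float]


KG_PER_LB = 0.45359237  # exact by definition of the international pound

# Hypothetical attribute names: one silo reports kilograms, the other pounds.
kg_to_lb = Correspondence("weight_kg", "weight_lb", lambda kg: kg / KG_PER_LB)


def apply_correspondence(corr: Correspondence, record: Dict[str, float]) -> Dict[str, float]:
    """Project one source record onto the target attribute."""
    return {corr.target_attr: corr.transform(record[corr.source_attr])}


converted = apply_correspondence(kg_to_lb, {"weight_kg": 0.45359237})
```

A learned modality transformation (for example, an image-to-text model) would fit the same interface by replacing the lambda with a call to the model.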

4.1.4. Compositional Closure and Derived Features

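New knowledge emerges through feature synthesis. For attributes $a_1, \dots, a_k$ drawn from any silos, we define a derived feature

$$f = g(a_1, \dots, a_k),$$

where $g$ is any executable operation (e.g., algebraic, logical, statistical). Thus, the feature space $\mathcal{F}$ is closed under composition:

$$f_1, \dots, f_k \in \mathcal{F} \;\Rightarrow\; g(f_1, \dots, f_k) \in \mathcal{F}.$$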
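The closure property can be illustrated with a short sketch in which every feature is a function of a record, so a derived feature can itself serve as input to further derivations. The feature names and the helper functions `base_feature` and `derive` are assumptions for illustration.

```python
from typing import Callable, Dict, Sequence

Feature = Callable[[dict], float]
FeatureSpace = Dict[str, Feature]


def base_feature(name: str) -> Feature:
    """A feature that reads an observed attribute directly from a record."""
    return lambda record: record[name]


def derive(space: FeatureSpace, name: str, inputs: Sequence[str],
           g: Callable[..., float]) -> FeatureSpace:
    """Add g(inputs...) to the space; inputs must already belong to the space."""
    missing = [i for i in inputs if i not in space]
    if missing:
        raise KeyError(f"inputs not in feature space: {missing}")
    fns = [space[i] for i in inputs]
    space[name] = lambda record: g(*(f(record) for f in fns))
    return space


# Hypothetical attributes drawn from two different silos.
space: FeatureSpace = {
    "lbs_per_capita": base_feature("lbs_per_capita"),
    "price_usd_per_lb": base_feature("price_usd_per_lb"),
}
derive(space, "spend_usd_per_capita",
       ["lbs_per_capita", "price_usd_per_lb"], lambda q, p: q * p)
# A derived feature is a valid input to another derivation (closure).
derive(space, "spend_cents_per_capita",
       ["spend_usd_per_capita"], lambda s: 100 * s)

record = {"lbs_per_capita": 10.0, "price_usd_per_lb": 1.5}
cents = space["spend_cents_per_capita"](record)
```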

4.1.5. Cognitive Expansion of the Metamodel Space

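Let $\mathcal{U}_t$ denote the unified metamodel space at iteration $t$. Integrating a new silo $S_{t+1}$, embedded through its integration map $\phi_{t+1}$, expands the space as

$$\mathcal{U}_{t+1} = \mathcal{U}_t \cup \phi_{t+1}(S_{t+1}) \cup \Delta\mathcal{F}_{t+1},$$

where $\Delta\mathcal{F}_{t+1}$ are newly derivable features enabled only after the integration of $S_{t+1}$. This models the emergence of new insights from cross-silo fusion.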

4.1.6. Minimal Realization

The cognitive expansion defined above describes the potential knowledge space after integrating a new silo. However, not all newly derivable features or relations need to be instantiated. Instead, minimal realization is derived as the smallest concretization of the unified metamodel space. Newly observed features and modalities are merged with earlier ones. Transformation, conversion, and mapping are introduced as needed to accurately maintain each silo representation in the new unified space while avoiding superfluous expansion.

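Let $\mathcal{U}^{+}_{t+1}$ denote the potential expanded space,

$$\mathcal{U}^{+}_{t+1} = \mathcal{U}_t \cup \phi_{t+1}(S_{t+1}) \cup \mathcal{F}^{*}_{t+1},$$

where $\mathcal{U}_t$ is the unified metamodel space at iteration $t$, $\phi_{t+1}(S_{t+1})$ is the embedding of the new silo, and $\mathcal{F}^{*}_{t+1}$ is the set of all features derivable through compositional closure. A realization is any instantiated subset

$$\mathcal{M} \subseteq \mathcal{U}^{+}_{t+1}$$

such that

$$\mathcal{U}_t \cup \phi_{t+1}(S_{t+1}) \subseteq \mathcal{M}.$$

We define the minimal realization as

$$\mathcal{M}^{*}_{t+1} = \arg\min_{\mathcal{M}} |\mathcal{M}|,$$

taken over the realizations that support the required reasoning and analytical queries. It retains only the subset of derived features across modalities that are functionally necessary to ensure interoperability between the newly integrated silo and existing ones to satisfy reasoning or analytical queries.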
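One possible reading of minimal realization, sketched under the assumption that "functionally necessary" means the dependency closure of the features that pending queries actually request. The function name, the dependency map, and the feature names are hypothetical.

```python
from typing import Dict, Iterable, List, Set


def minimal_realization(dependencies: Dict[str, List[str]],
                        required: Iterable[str]) -> Set[str]:
    """Return the required features plus everything they depend on.

    dependencies maps a derived feature to the features it is computed from;
    observed (base) features simply have no entry.
    """
    keep: Set[str] = set()
    stack = list(required)
    while stack:
        feature = stack.pop()
        if feature in keep:
            continue
        keep.add(feature)
        stack.extend(dependencies.get(feature, ()))
    return keep


# Hypothetical derivable features after integrating a price silo.
dependencies = {
    "spend_per_capita": ["lbs_per_capita", "price_usd_per_lb"],
    "price_usd_per_lb": ["total_price", "pounds"],
    "calories_per_capita": ["lbs_per_capita", "kcal_per_lb"],
    "price_index": ["price_usd_per_lb"],
}
realized = minimal_realization(dependencies, ["spend_per_capita"])
```

Features such as `calories_per_capita` remain derivable in the potential space but are not instantiated until a query asks for them.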

4.1.7. Modalities and Cross-Modal Mappings

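The unified space may be decomposed by modality as a disjoint union

$$\mathcal{U} = \bigsqcup_{m \in M} \mathcal{U}^{(m)},$$

where $M$ is the set of modalities, with cross-modal transformations

$$\psi_{m \to m'} : \mathcal{U}^{(m)} \to \mathcal{U}^{(m')},$$

which may be learned (e.g., diffusion models) or specified.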

Together, the above formalism treats data silos as information manifolds, integrating them to construct structural correspondences that enable compositional knowledge expansion and efficient neuro-symbolic reasoning in a unified multimodal space.

4.2. Separating Prior Knowledge from Observed Data for the Integration and Reasoning Queries

We distinguish between prior/common knowledge (e.g., general facts, units, rates, taxonomies) that an LLM or curated knowledge source can provide and data/evidence from a concrete database. The former can be subject to error or uncertainty due to model inference or partial inputs. The system fuses both to answer queries and construct derived indicators.

4.2.1. Knowledge Decomposition

Let $K$ denote the prior knowledge, while $D$ is the set of observed data via silo emergence. $K$ informs the multidimensional structures and layers of the unified metamodel space, and an AI model (e.g., LLM) can safely provide it within a negligible error threshold. Analogous to a prior in Bayesian inference, this stage provides a cognitive ground for stratifying meta layers and interrelationships based on the complex neural architectures learned from the concrete training corpus. It identifies axioms and definitions, such as units of time, algebra, and calendar identities. It can also be used to identify unit canons, constants, conversions, generic ontologies, demographics, taxonomies, classes, soft constraints, ranges, logical implications, etc.

4.2.2. A Stochastic Oracle for Priors

We treat the LLM or the trained module as a stochastic oracle returning possibly uncertain priors. A query to the oracle (e.g., a needed constant, a conversion rule, a time resolution) yields an estimate with, optionally, variance and a confidence score.
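The oracle interface can be sketched as follows. This is a stub under stated assumptions: the names `PriorEstimate`, `StubOracle`, and `accept`, the lookup-table backing, and the confidence threshold are all hypothetical, and a real deployment would replace the table with a call to an LLM or a curated knowledge source.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class PriorEstimate:
    """An uncertain prior: a value with optional variance and confidence."""
    value: float
    variance: Optional[float] = None
    confidence: Optional[float] = None


class StubOracle:
    """Stands in for an LLM-backed oracle; answers come from a fixed table."""

    def __init__(self, table: Dict[str, PriorEstimate]) -> None:
        self._table = dict(table)

    def query(self, key: str) -> Optional[PriorEstimate]:
        return self._table.get(key)


def accept(estimate: Optional[PriorEstimate], min_confidence: float = 0.9) -> bool:
    """Use a prior only if it exists and reports enough confidence."""
    return (estimate is not None
            and estimate.confidence is not None
            and estimate.confidence >= min_confidence)


oracle = StubOracle({
    # The confidence values below are illustrative, not measured.
    "grams_per_pound": PriorEstimate(453.59237, variance=0.0, confidence=0.99),
    "avg_apple_weight_g": PriorEstimate(180.0, variance=900.0, confidence=0.6),
})
```

Returning the variance and confidence alongside the value lets downstream reasoning decide whether to use a prior, request evidence instead, or flag the result as uncertain.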

4.2.3. Normalization and Grounding

Given an attribute $a$ in some silo with specific unit/type $u$, normalize it to canonical form $\hat{a}$ using the unit graph and prior functions from the prior knowledge $K$, obtained by querying the knowledge oracle (e.g., LLM). If required data is missing, it can be requested and added to $K$ with a confidence weight when applicable.
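A minimal sketch of normalization over a unit graph, assuming that conversions are multiplicative factors and that the canonical unit is reachable through some chain of known conversions. The graph contents, `conversion_factor`, and `normalize` are illustrative assumptions; in the framework described here, missing edges could be requested from the knowledge oracle.

```python
from collections import deque
from typing import Dict, Optional, Tuple

# (source unit, target unit) -> multiplicative factor
UNIT_GRAPH: Dict[Tuple[str, str], float] = {
    ("oz", "lb"): 1 / 16,
    ("kg", "lb"): 1 / 0.45359237,
    ("g", "kg"): 1 / 1000,
}


def conversion_factor(graph: Dict[Tuple[str, str], float],
                      src: str, dst: str) -> Optional[float]:
    """Breadth-first search for a conversion path, multiplying factors along it."""
    adjacency: Dict[str, list] = {}
    for (a, b), factor in graph.items():
        adjacency.setdefault(a, []).append((b, factor))
        adjacency.setdefault(b, []).append((a, 1 / factor))  # inverse edge
    queue = deque([(src, 1.0)])
    seen = {src}
    while queue:
        unit, factor = queue.popleft()
        if unit == dst:
            return factor
        for nxt, f in adjacency.get(unit, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, factor * f))
    return None  # no known path between the two units


def normalize(value: float, unit: str, canonical: str,
              graph: Dict[Tuple[str, str], float] = UNIT_GRAPH) -> float:
    """Convert a value to the canonical unit or fail loudly."""
    factor = conversion_factor(graph, unit, canonical)
    if factor is None:
        raise ValueError(f"no conversion from {unit!r} to {canonical!r}")
    return value * factor
```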

4.2.4. Query Reasoning and Semantics

Let a user query be specified as an expression $Q$. We define $Q$ as a typed program over the prior knowledge $K$ and the observed data $D$, similar to structured queries (SQL). The program consists of normalized attributes that may be pulled from the knowledge base across different silos. $K$ instantiates the prior reasoning and inference for functions and rules, possibly after consulting an LLM. The program could also involve a composition, projection, or join with aggregations over different dimensions or partitions.

Thus, this framework abstracts the system’s cognition as typed program evaluation over two sources: priors (general rules, units, taxonomies, ranges) and evidence (silo data). The LLM provides on-demand stochastic priors and transformations, while concrete data provide grounded observations in a transparent, controllable reasoning process.

5. Configuration

The system’s main catalog contains essential information about the metamodel, including modalities. After initialization, the catalog update process can be invoked upon the emergence of a new silo and immediately after a preprocessing step. In the following subsections, we will provide a detailed description of the configuration steps.

5.1. Schema Inference and Normalization

We use an LLM (i.e., GPT-5 [23]) to infer the overall schema from the entire dataset or a sample file. Data types, units, sample values, and ranges inform the overall design. Once inferred, the normalization process takes effect, yielding a model that can be incorporated into the unified metamodel. We note that this process can also capture the multimodality inherent in the LLM’s underlying neural architecture. It can also be bootstrapped with minimal inputs, especially for cases of limited access, data, and partial observability. Such features can be particularly beneficial, especially in silos with significant interoperability barriers. It allows the system to evolve over time from diverse input artifacts. It also ensures an expedited implementation with the prospect of efficient deployability.
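Before an LLM is consulted, a deterministic profiling pass can summarize the data types, ranges, and missing values that inform the schema. The sketch below is an assumption about what such a pass might look like for a CSV sample; the function names and the sample columns are hypothetical and do not reflect the actual files used in this work.

```python
import csv
import io
from typing import Dict, List


def infer_type(values: List[str]) -> str:
    """Classify a column as numeric, text, or unknown from its sample values."""
    non_empty = [v for v in values if v.strip() != ""]
    if not non_empty:
        return "unknown"
    try:
        for v in non_empty:
            float(v)
        return "numeric"
    except ValueError:
        return "text"


def profile_csv(text: str, max_rows: int = 100) -> Dict[str, dict]:
    """Build a per-column profile from the first max_rows rows of CSV text."""
    reader = csv.DictReader(io.StringIO(text))
    columns: Dict[str, List[str]] = {name: [] for name in (reader.fieldnames or [])}
    for i, row in enumerate(reader):
        if i >= max_rows:
            break
        for name in columns:
            columns[name].append(row[name] or "")  # short rows yield None
    profile: Dict[str, dict] = {}
    for name, values in columns.items():
        kind = infer_type(values)
        entry = {"type": kind,
                 "missing": sum(1 for v in values if v.strip() == "")}
        if kind == "numeric":
            numbers = [float(v) for v in values if v.strip() != ""]
            entry["range"] = [min(numbers), max(numbers)]
        profile[name] = entry
    return profile


# Hypothetical sample rows, made up for illustration.
sample = "commodity,year,lbs_per_capita\napples,2020,17.5\npears,2021,\n"
profile = profile_csv(sample)
```

The resulting dictionary can be serialized to JSON and passed to the model together with a few sample rows.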

5.2. Mapping and Extending

Once the normalized schema has been constructed, a mapping procedure checks each variable or attribute for potential matches in the unified metamodel catalog. The attributes can be divided into two sets. The first is the set of source attributes inferred from the new silo. The second one is the set of attributes that already exist in the unified metamodel. Each attribute is characterized by type (e.g., numeric, text, image), unit (e.g., lb, dollar, mile, byte), domain or topic (e.g., human health, transportation, food resilience [24]), timing (e.g., years, minutes, timestamps, interval), name, and a key set of available joins. For a target attribute $t$ to be a potential candidate for a source $s$, the reasoning takes into account the commonality in all characterizations. We quantify the overall feasibility of a prospective mapping via a weighted confidence score. If such confidence is low, the reasoner expands the metamodel with a new relation or dimension.

5.2.1. Mapping

In the case of mapping, $s$ must be of the same type as $t$. Otherwise, there should exist a model (e.g., diffusion) that transforms the type of $s$ into $t$ (e.g., fruit name, image, nutritional facts, etc.). Similarly, the unit should be of a compatible form, or a plausible conversion should exist. The domain can also contribute to quantifying the confidence score, while accounting for the approximation inherent in names and categories. We draw on the topics from [24] and attempt to work through the encountered multimodalities. The descriptive name or column title can also be informative with a given weight (e.g., cosine similarity). Although we opt to use LLM-based approximation for such assessments due to known limitations in previous methods, work to rigorously quantify these weights is ongoing.

Timing is also a key aspect of the transformation, informed by entries to guide the mapping and time-resolution procedures. Different time resolutions can be processed during the reasoning step to harmonize data coming from different sources. Additionally, the intersection of time intervals in historical data can inform the fusion process and yield insights and analytics into existing overlaps. We also plan to use this dimension as a stepping stone toward simulations in future work.

The selection of join keys between the source and target can be informed by the overlap in the underlying distribution and quality of the entries. Diffusion models can be particularly useful for exploring potential matches in cases of high-dimensional data. The final confidence score for each pair can then be determined by several factors, including similarity in attribute names or semantics, unit conversion, join keys, and distribution, with tunable weights assigned to each factor.

The result is a JSON mapping specification of the form:

    {
      "source": "<file>:<var_s>",
      "best_target": "<file>:<var_t*>",
      "join_keys": [...J*(s,t*)...],
      "conversion": "<lambda*>",
      "confidence": 0.0-1.0
    }

The results indicate the best target candidate for the source attribute, along with the join keys. In the case of conversion or transformation, the reasoning selects the most suitable model ($\lambda^{*}$), which can be a closed-form conversion in simple cases and a diffusion model in more complex cases.
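The weighted confidence score and the resulting mapping specification can be sketched as below. The factor names follow the text (names or semantics, unit conversion, join keys, distribution), but the weights, the threshold, the per-factor scores, and the function names are assumptions chosen for illustration; the paper notes that rigorous quantification of these weights is ongoing.

```python
from typing import Dict, Optional

# Hypothetical tunable weights, one per factor.
WEIGHTS = {"name": 0.4, "unit": 0.2, "join_keys": 0.2, "distribution": 0.2}


def confidence(scores: Dict[str, float],
               weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted average of per-factor scores in [0, 1]; missing factors count as 0."""
    total = sum(weights.values())
    return sum(w * scores.get(k, 0.0) for k, w in weights.items()) / total


def best_mapping(source: str, candidates: Dict[str, dict],
                 threshold: float = 0.6) -> Optional[dict]:
    """Pick the highest-scoring target; return None when confidence is too low."""
    if not candidates:
        return None
    target, info = max(candidates.items(),
                       key=lambda kv: confidence(kv[1]["scores"]))
    score = confidence(info["scores"])
    if score < threshold:
        return None  # the caller would extend the metamodel instead
    return {
        "source": source,
        "best_target": target,
        "join_keys": info["join_keys"],
        "conversion": info["conversion"],
        "confidence": round(score, 3),
    }


# Hypothetical candidates with made-up factor scores.
candidates = {
    "catalog:price_usd_per_lb": {
        "scores": {"name": 1.0, "unit": 1.0, "join_keys": 0.5, "distribution": 0.5},
        "join_keys": ["commodity", "year"],
        "conversion": "identity",
    },
    "catalog:lbs_per_capita": {
        "scores": {"name": 0.1, "unit": 0.0, "join_keys": 0.5, "distribution": 0.2},
        "join_keys": ["commodity", "year"],
        "conversion": "identity",
    },
}
mapping = best_mapping("prices.csv:price", candidates)
```

Returning `None` below the threshold keeps the decision to extend the metamodel outside the scoring function, so the threshold can be tuned independently of the weights.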

5.2.2. Adding New Relations and Concepts

In cases of low mapping confidence scores, we consider extending the metamodel with a new concept or relation. It means that the newly introduced silo does not closely align with the existing unified schema, necessitating further extensions.

Algorithm 1 illustrates the reasoning procedure in each iteration. The iteration can take place upon the emergence of a new silo. We replicate this in our experiment by segregating the data into separate folders and creating a new folder in each iteration. In practice, this can be undertaken by introducing new data files, streams, or other forms of data virtualization. Benefiting from the reasoning and multimodal capacity of the underlying model significantly mitigates barriers to building more seamless, automated integration of emerging silos. We will discuss details about the conducted experiment in Section 5.4.

Algorithm 1. Iterative Multimodal Silo Integration

1. Profile each segment in $S$: extract attribute names, types, units, and basic statistics.
2. Schema Inference: query an LLM with profiles and sample rows to obtain a JSON schema_profile describing the inferred schema.
3. Store schema_profile in catalog.
4. for each source attribute $s$ do
5.     Compare $s$ with existing unified schema $\mathcal{U}$.
6.     If similarity ≥ threshold and join keys match: map with transformation.
7.     Otherwise, extend schema.
8. end for
9. Apply Proposals: Update $\mathcal{U}$ with new measures, dimensions, or relations.
10. Save updated catalog with version tag.
11. Repeat for each new silo.
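The loop in Algorithm 1 can be sketched in a few lines, with the LLM-based comparison replaced by a pluggable similarity function. Everything named here (the catalog layout, `integrate_silo`, `name_type_similarity`, the threshold, and the attribute profiles) is an assumption for illustration and is not the implementation used in the experiments.

```python
import copy
from typing import Callable, Dict


def integrate_silo(catalog: dict, silo_name: str, profile: Dict[str, dict],
                   similarity: Callable[[str, dict, str, dict], float],
                   threshold: float = 0.7) -> dict:
    """One iteration: map each source attribute or extend the unified schema.

    catalog: {"version": int, "attributes": {name: meta}, "mappings": [...]}
    profile: {source_attribute: meta}; meta holds "type" and "join_keys".
    """
    updated = copy.deepcopy(catalog)   # earlier versions stay untouched
    existing = catalog["attributes"]   # candidates come from the prior schema only
    for attr, meta in profile.items():
        scored = [(similarity(attr, meta, t, t_meta), t)
                  for t, t_meta in existing.items()]
        score, target = max(scored, default=(0.0, None))
        shared = set()
        if target is not None:
            shared = set(meta["join_keys"]) & set(existing[target]["join_keys"])
        if score >= threshold and shared:
            updated["mappings"].append({
                "source": f"{silo_name}:{attr}",
                "best_target": target,
                "join_keys": sorted(shared),
                "confidence": score,
            })
        else:
            updated["attributes"][attr] = dict(meta)  # extend the schema
    updated["version"] = catalog["version"] + 1        # version tag
    return updated


def name_type_similarity(s: str, s_meta: dict, t: str, t_meta: dict) -> float:
    """Toy scorer: exact case-insensitive name match with equal types."""
    return 1.0 if s.lower() == t.lower() and s_meta["type"] == t_meta["type"] else 0.0


keys = ["commodity", "year"]
# Hypothetical profiles; the attribute names are made up for this sketch.
fruitveg = {"commodity": {"type": "text", "join_keys": keys},
            "year": {"type": "numeric", "join_keys": keys},
            "lbs_per_capita": {"type": "numeric", "join_keys": keys}}
eggs = {"Commodity": {"type": "text", "join_keys": keys},
        "year": {"type": "numeric", "join_keys": keys},
        "dozens_per_capita": {"type": "numeric", "join_keys": keys}}

catalog = {"version": 0, "attributes": {}, "mappings": []}
catalog = integrate_silo(catalog, "fruitveg.csv", fruitveg, name_type_similarity)
catalog = integrate_silo(catalog, "eggs.csv", eggs, name_type_similarity)
```

In this toy run, the first silo initializes the schema, and the second maps two attributes onto existing ones and extends the schema with one new measure.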

5.3. Ablation

We address the case of ablation for various reasons. The degree of fusion across data coming from isolated sources needs to be controlled both early on and during the lifecycle. The former, preemptive approach might be more straightforward, as it relies on recording the lineage of data silos and their impact on the unified metamodel. The latter might be more challenging, as it involves unified metamodels with insufficient lineage information. Another reason is to handle growth and prevent the metamodel from growing too large. The size of the metamodel can be quantified using metrics such as the number of attributes and relationships. Therefore, ablation can serve as a control mechanism to evolve the metamodel to an optimized state. It also lays the foundation for testing the necessity of a specific silo and its overall added value in the unified metamodel, particularly in light of an efficient handling of multimodality. Having a tool for examining the quality of a metamodel, with and without a specific silo, can be indispensable. However, it can be challenging, especially when the degree of integration is less well-known.

5.3.1. Preemptive Approach

The preemptive approach is simpler, but it can only be used under restrictive conditions: the fusion of the silo has been documented, the introduced data has been tracked in a manner similar to timestamping, and no significant changes have happened after the introduction. The ablation can then take place deterministically by backtracking to the version that precedes the introduction of the subject silo. The fusion procedure can then continue with the remaining silos.
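The backtracking step can be sketched as follows, assuming that a snapshot of the catalog is stored after every integration and that the integration order is recorded. The function `ablate_preemptive`, the snapshot layout, and the toy `integrate` callback are hypothetical.

```python
from typing import Callable, List, Sequence, TypeVar

Catalog = TypeVar("Catalog")


def ablate_preemptive(versions: Sequence[Catalog], order: Sequence[str], silo: str,
                      integrate: Callable[[Catalog, str], Catalog]) -> Catalog:
    """Remove a silo by reverting to the snapshot taken just before it, then replaying.

    versions[0] is the empty initial catalog and versions[i + 1] is the
    snapshot stored after integrating order[i]. integrate must return a
    new catalog rather than mutating its argument.
    """
    if silo not in order:
        raise ValueError(f"silo {silo!r} was never integrated")
    idx = order.index(silo)
    catalog = versions[idx]                 # state right before the silo arrived
    for name in order[idx + 1:]:            # replay the silos that came later
        catalog = integrate(catalog, name)
    return catalog


# Toy catalog: just the list of integrated silo names.
def toy_integrate(catalog: List[str], name: str) -> List[str]:
    return catalog + [name]


order = ["availability", "price", "receipts"]
versions: List[List[str]] = [[]]
for name in order:
    versions.append(toy_integrate(versions[-1], name))

without_price = ablate_preemptive(versions, order, "price", toy_integrate)
```

Replaying the later silos is what makes this approach deterministic, and it is also why it depends on complete lineage records.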

5.3.2. Reasoning Approach

Again, we employ a reasoning procedure for ablation within any particular silo without resorting to lineage data or reverting to an earlier metamodel version. We rely on the LLM to process the different metamodel versions and extract the relevant data to achieve a reasonably successful ablation. This approach has the advantage of handling large metamodels that lack sufficient information about every silo emergence. It can also be useful in cases where data becomes increasingly difficult to manage with deterministic backtracking due to the intertwining and other complexities in data and model growth.

Enabling reasoning in this approach can serve as an instrument to tame complexity, since added fusions might lead to an infeasible increase in size and data dimensions. We propose using this means for experimental purposes or actual deployment.

5.4. Example Experiment

In this section, we will use an example with real data from data.gov [24] and USDA.gov [25] data sources to demonstrate the approach. We select some publicly available datasets and gradually add them to observe the results. The data is usually available in different formats. In our test, we used data in CSV, XLSX, and JSON with different structures and types. There are three main processes to demonstrate. The first one is the initialization process, where no unified model has been established yet. The second process involves adding an emerging silo to the existing unified schema. The third demonstrates the ablation process. We will also demonstrate various actions that may occur, such as adding a new relation or object, or simply mapping or concatenating values and entries into an existing table.

We devise this simple experiment to clearly illustrate the iterations through the proposed procedure in Algorithm 1, covering various cases of alignment and multimodality support. In the first two rounds, we experiment with two CSV files that are closely aligned from a metamodel perspective, differing only in some metrics and values. Then, we gradually move toward more diverse, heterogeneous data by introducing new dimensions (e.g., price). We then demonstrate the handling and leveraging of multimodality by introducing data in various forms. Finally, we demonstrate two cases of ablation.

Figure 2 illustrates the main steps of the experiment. In Section 6, we demonstrate the procedure more empirically.

5.4.1. Adding Food Availability Data

Executing the procedure in Algorithm 1 on the file fruitveg.csv from Food Availability [25] at the first iteration produced the schema shown in Table 1. The full JSON snippet is provided in Appendix A.

Then, we upload the file eggs.csv from the same source. The JSON file has been thoroughly updated, with notable changes to measures shown in Table 2.

5.4.2. Introducing Price Data

In this step, we experiment with a new dimension by introducing the Food Prices dataset [24]. Table 3 displays the inferred schema prior to the integration of the unified metamodel.

This iteration extended the unified metamodel by adding the price, given the commodity and year as join keys. It also expanded the list of measures with yield, cup equivalent price, and cup equivalent size.

5.4.3. Adding Images

In this iteration, we present an alternative perspective by introducing images, which enable various use cases. Examples in the food domain include quality control, price validation and analytics, defect and anomaly detection, dietary assessment, waste reduction, and many others [26,27]. We continue the experiment by introducing scanned images of sample grocery receipts. The following snippet illustrates the changes in the unified metamodel after introducing images using symbolic reasoning and diffusion.

    {
      "source": "receipts.jsonl::line_items.total_price",
      "best_target": "measures.price_usd_per_lb",
      "join_keys": ["Commodity","Time","Retailer","Geo"],
      "transform": "DIFFUSION_PIPELINE( steps=[
          VISION_EMBED_MATCH(desc_raw,image_path)->Commodity,
          OCR_DENOISE(ocr_text), UNIT_NORMALIZE(qty,unit)->pounds,
          PRICE_PER_LB(total_price,pounds) ] )"
    }

The reasoner inferred a new schema for the receipt. We omit that for brevity. We only show the extension snippet that establishes the mapping between the scanned images and the corresponding variables in the unified view. It also extended the schema with retailer and geo dimensions.

5.4.4. Example Queries

After the unification process, we demonstrate two example queries on the established dataset. The first inquires about total spending, using a simple aggregation after combining availability and price data. The second is a query about per capita nutritional consumption, derived from the same data and combined with inference; it requires further reasoning on prior knowledge to retrieve facts such as nutrition and consumption units. The results of such a query and the reasoning can be valuable for informing general statistics on the nutritional availability and macroeconomics of the whole population, supported by evidence from the integrated data. We demonstrate further examples in Section 6.
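As a rough illustration of the first query type, the sketch below joins availability and price records on commodity and year and aggregates a spending estimate. The record values, the population figure, and the formula itself (per capita availability times population times price per pound) are assumptions for illustration; the exact query is not specified here.

```python
# Hypothetical rows keyed by the join keys (Commodity, Time) of the unified metamodel.
availability = {("apples", 2022): 10.0, ("carrots", 2022): 8.0}  # pounds per capita
price = {("apples", 2022): 2.0, ("carrots", 2022): 1.0}          # USD per pound
population = {2022: 1_000}                                       # illustrative head count


def total_spending(year: int) -> float:
    """Sum availability x population x price over commodities present in both silos."""
    total = 0.0
    for (commodity, t), lbs_per_capita in availability.items():
        if t != year or (commodity, t) not in price:
            continue  # inner join: skip rows missing from either silo
        total += lbs_per_capita * population[t] * price[(commodity, t)]
    return total


spending_2022 = total_spending(2022)
```

The inner-join behavior is a design choice of this sketch: commodities that appear in only one silo are excluded from the aggregate rather than imputed.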

5.4.5. Ablation Example

We ablate the part related to food availability to examine the system’s robustness when a foundational part is removed, as the availability silo was incorporated early in the process. The goal is to demonstrate that the integration of the price and receipt silos, and the unified metamodel itself, remain intact. Some analytics and measures, such as per capita availability, are no longer available. The results of this step inform decision-makers by highlighting which analytics are preserved and which are lost.

We also ablate the price silo to examine the degree of loss, given that the receipt silo includes high-granularity price data. In this case, the analytical view remains intact if receipts can supplement the missing price data. For example, the pricing dataset can be inferred from receipts, provided there is sufficient data to cover all price combinations. The ablation reasoning reports whether this is the case, so that a decision-maker may, for example, choose one data source over another. Therefore, from an analytical standpoint, the loss due to the ablation of one silo can be quantified in terms of its effect on other thematic or analytical views. This case demonstrates the added value of supporting multimodality, combined with semantic reasoning, for cost savings.
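The bookkeeping behind such an ablation can be sketched as a dependency check. In the hypothetical map below, each analytic lists the alternative sets of silos that can serve it; the analytic names and their dependencies are assumptions chosen to mirror the two cases described above, not output of the reasoner.

```python
# Hypothetical dependency map: each analytic lists alternative sets of silos that can serve it.
ANALYTICS = {
    "per_capita_availability": [{"availability"}],
    "price_per_lb": [{"price"}, {"receipts"}],  # receipts may substitute for the price silo
    "total_spending": [{"availability", "price"}, {"availability", "receipts"}],
}


def ablate(removed: set[str], silos: set[str]) -> tuple[set[str], set[str]]:
    """Return (preserved, lost) analytics after removing the given silos."""
    remaining = silos - removed
    preserved = {
        name
        for name, options in ANALYTICS.items()
        if any(required <= remaining for required in options)
    }
    return preserved, set(ANALYTICS) - preserved


all_silos = {"availability", "price", "receipts"}
kept_a, lost_a = ablate({"availability"}, all_silos)
kept_p, lost_p = ablate({"price"}, all_silos)
```

Under these assumed dependencies, removing the availability silo loses the analytics that need it, whereas removing the price silo loses none because receipts act as a substitute. The size of the lost set is one simple way to quantify the effect of an ablation.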

6. Empirical Assessment with Sample Public Health Data

We conduct an empirical assessment of the proposed approach with a sample of public health-related data [24]. We downloaded 10 datasets and ran the procedure sequentially to perform inference. After each iteration, we report the dataset name and other numerical information. The full results are reported in the code repository.

Table 4 shows the reported results and updates of the schema inference after each iteration.

6.1. Queries and Derived Health Indicators

After each iteration, the newly introduced data enables more queries based on the derived features. In Table 5, we report two sample queries per iteration, generated by GPT-5 at each integration step, to demonstrate the two types of queries that can be performed on the fused data. The first type relies only on the observed data. The second type also relies on the data; however, it may draw on further prior knowledge that can be provided by the corresponding AI model. We note that the second query may include insights and related variables or metrics that an expert can further examine to conduct a full statistical inquiry. The generated insights, however, can lay the groundwork for a technical assessment and setup of the experiment and the system under study, enabling the expert to more easily utilize technical scaffolds in an intelligent framework.

Table 6 shows some of the derived health indicators after performing the 10 iterations.

6.2. Validation

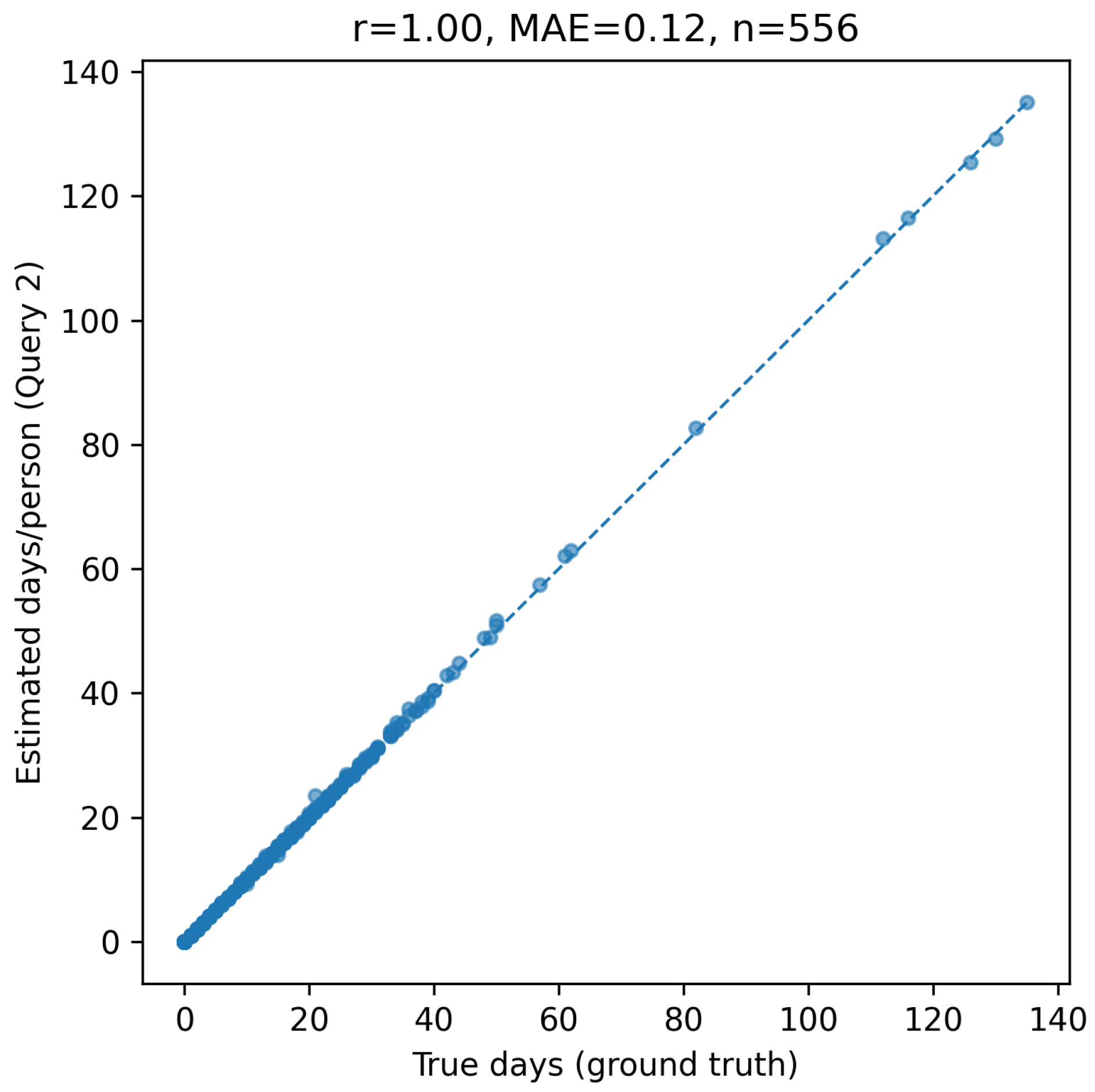

We demonstrate query execution with and without prior, and we validate the results obtained with prior against an established ground truth. In this example, we execute a query for the top US counties by person-days with maximum 8-h average ozone concentration over the National Ambient Air Quality Standard (NAAQS) in the year 2001. Then, we normalize the results using a query with prior: we send an inquiry to GPT-5 about each county's population to produce days, as opposed to person-days. For the validation, we check the accuracy of the results against the number of days with maximum 8-h average ozone concentration over the NAAQS, which is already available in the public dataset. The results of executing the first basic query (no prior or GPT call) are shown in Table 7. Now, we treat the data about days as a holdout and demonstrate how to recover them with a combined query that uses the observed dataset and the population of each county in the year 2001. Algorithm 2 describes the overall procedure. The results of this query are shown in Table 8, along with a quantified uncertainty. Finally, the results are checked against the holdout from the dataset to quantify the accuracy of the second query, which uses GPT calls for the prior.

| Algorithm 2 Query with Prior Procedure |

Input: Dataset D (e.g., air quality measures), target year y, prior source (e.g., GPT-5)

Output: Derived query results combining data and priors

- 1: Identify the target measure in D (e.g., MeasureID = 293 for ozone exceedance).

- 2: Extract observations for each county in year y.

- 3: Query the prior source for missing contextual variables (e.g., population per county).

- 4: For each county, compute the derived measures (e.g., days over the standard, obtained as person-days divided by population).

- 5: Record each prior’s uncertainty (e.g., model confidence or interpolation error).

|
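The steps of Algorithm 2 can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the `Prior` container, the relative form of the uncertainty, the first-order error propagation, and the sample county values are all hypothetical, and the call to the prior source is replaced by a pre-filled dictionary.

```python
from dataclasses import dataclass


@dataclass
class Prior:
    value: float        # e.g., county population for the target year
    uncertainty: float  # relative uncertainty of the prior (assumed form)


def query_with_prior(
    person_days: dict[str, float], priors: dict[str, Prior]
) -> dict[str, tuple[float, float]]:
    """Divide observed person-days by the population prior, county by county.

    Returns {county: (derived_days, absolute_uncertainty)}. Counties without
    a usable prior are skipped rather than guessed.
    """
    results = {}
    for county, observed in person_days.items():
        prior = priors.get(county)
        if prior is None or prior.value <= 0:
            continue
        days = observed / prior.value
        # First-order propagation: the prior's relative error carries over to the quotient.
        results[county] = (days, days * prior.uncertainty)
    return results


# Hypothetical inputs; in the experiment the priors would come from the prior source.
observed = {"county_a": 50_000.0, "county_b": 12_000.0}
priors = {"county_a": Prior(10_000.0, 0.02)}
derived = query_with_prior(observed, priors)
```

Carrying the uncertainty alongside each derived value keeps prior-derived quantities distinguishable from observed ones in later steps.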

To assess how accurately the GPT-augmented query, with population data as prior, reproduces ozone exceedance counts, we compared the derived average days per person against the CDC Tracking Network’s reported number of days above the NAAQS threshold (Measure 292) in the holdout. We computed the mean absolute error (MAE) and mean absolute percentage error (MAPE) across all counties for 2001. The results in Table 9 show a near-perfect linear fit and low relative error (<1%), indicating that population-adjusted estimates closely reproduce official measures despite using GPT-5-generated priors.

Figure 3 shows the linear fit of the results produced by the second query against the ground truth values.
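For reference, the two error metrics can be computed as in the short sketch below. The sample values are hypothetical and do not reproduce Table 9. Skipping entries whose reference value is zero when computing MAPE is an assumption of this sketch, since the percentage error is undefined there.

```python
def mae(estimates: list[float], truth: list[float]) -> float:
    """Mean absolute error between derived and reported values."""
    if len(estimates) != len(truth) or not truth:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(e - t) for e, t in zip(estimates, truth)) / len(truth)


def mape(estimates: list[float], truth: list[float]) -> float:
    """Mean absolute percentage error, in percent, over entries with non-zero truth."""
    if len(estimates) != len(truth):
        raise ValueError("inputs must be of equal length")
    pairs = [(e, t) for e, t in zip(estimates, truth) if t != 0]
    if not pairs:
        raise ValueError("MAPE is undefined when every reference value is zero")
    return 100.0 * sum(abs(e - t) / abs(t) for e, t in pairs) / len(pairs)


# Hypothetical per-county values: derived days vs. reported days in the holdout.
derived_days = [10.0, 20.0, 40.0]
reported_days = [10.0, 25.0, 40.0]
error_abs = mae(derived_days, reported_days)
error_pct = mape(derived_days, reported_days)
```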

Note on Some Encountered Issues

While running experiments, we encountered several technical issues. The performance of answering queries with prior knowledge can be sensitive to the mechanisms used to formulate and batch such queries. While multiple optimization options are possible, variations in context and technical configuration may significantly affect performance. The speed of such queries can be highly sensitive to local and external factors in the models and other technical setups used. In practice, justifying such an approach is subject to the trade-off between speed and adaptability in evolving systems. Resorting to an authoritative source can be more efficient, but maintaining acceptable information freshness may necessitate future migration overhead and complex modality adaptations. The computational and interface overhead of accessing prior knowledge can largely determine feasibility, especially if the approach is to be used in real-time settings.

6.3. Limitations

While the approach seems promising, significant challenges and limitations remain. A clear distinction between prior and observed data is necessary for practical application and use cases. The reliability of prior data remains an open question, even for simplistic tasks and resolutions. A temporary fix can rely on a simple quantification procedure to ensure proper assessment and confidence threshold passing, consistent with the domain requirements. For example, critical domains require a higher confidence threshold, and the derived insights can take an informative role. Queries and statistical analyses can be substantially assisted and accelerated in these contexts, but they also demand further examination, investigation, and assessment.
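A simple form of such confidence gating is sketched below. The domain names, the threshold values, and the two labels are hypothetical placeholders; actual thresholds would have to come from the requirements of each domain.

```python
# Hypothetical per-domain thresholds; real values would come from domain requirements.
THRESHOLDS = {"public_health": 0.95, "exploratory": 0.70}


def classify_result(confidence: float, domain: str) -> str:
    """Label a prior-derived result as 'accepted' or 'informative_only'."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    return "accepted" if confidence >= THRESHOLDS[domain] else "informative_only"


strict = classify_result(0.90, "public_health")
lenient = classify_result(0.90, "exploratory")
```

With the same confidence score, the stricter domain keeps the result in an informative role while the more permissive domain accepts it, which reflects the distinction drawn above.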

Some limitations of this study include the limited scale and types of datasets examined. Examining more complex, higher-dimensional data would also strengthen the results and observations. We plan to continue with computationally intensive experiments, to verify and tune the approach to enhance reliability, and to test it in online deployments and with live data streams. We are also working on ways to quantify the confidence threshold obtained during the alignment. Researchers [28] pointed out the sensitivity of AI models in general to spurious correlations. We acknowledge this to be a challenging issue that demands careful assessment in the evaluation of results, with proper tooling and expertise. The proposed approach can help address this challenge.

7. Discussion: Towards a Multimodal Unified View

Currently, various AI models with learned neural architectures deliver varying improvements, particularly on well-defined tasks with known inputs and prompts. However, the quality of the results is variable and may degrade as the metamodeling layer gets more complex. The structured results, however, are still semantically enriched but may not be readily applicable to the domain of interest. At this layer, more precise instructions and nuanced corrections are necessary to elicit essential improvements in the reasoning process. It remains an open question whether the underlying model can effectively address this challenge. In this presentation, we address this issue through multiple layers of scaffolding for the data, schema, and query results, and thorough validation and examination of the generated code and results, utilizing both statistical and symbolic reasoning.

Figure 4 shows a generalized view of the iterations demonstrated in Section 5.4.

Another prospect is integrating live streams. As native multimodality evolves in models, incorporating live data streams becomes increasingly accessible. Service and data virtualization techniques can benefit from real-time access to the latest data, with fewer barriers and less need to conform to strict requirements (e.g., APIs), especially for real-time dashboards, monitoring, and security systems.

Relation to Systems of Systems

Systems of Systems exhibit unique features that cannot be present in any single component. Emergent behavior arises in ways that demand a combined reasoning capacity across multiple dimensions. While each constituent needs to fulfill its own mission, the system as a whole must also operate and behave consistently. Preserving some degree of independence in management and governance across parts is also necessary. They also evolve over time as new requirements emerge and existing components are removed or modified.

Treating siloed databases and warehouses as SoS opens the way to applying well-established methodologies proven to address integration barriers iteratively and more formally. Such an approach enables more SoS engineering deliberation to be cast onto unpredictable and emerging data silos. We recognize the unique challenges posed by data silos. However, a proper application of methodical SoS approaches, combined with the leverage of recent AI models, can enhance confidence and preparedness to tackle newly emerging modalities and needs.

While the employed technologies can resolve significant barriers, such as interface rigidity, data migration, and some degree of semantic divergence, another set of challenges may persist, including ownership and conventional legacy constraints. The role of generative AI in resolving these challenges might be limited. However, it can still assist decision-making by expediting several processes and therefore informing stakeholders more efficiently. By exposing schema and semantic mappings to the learning process, the model can infer correspondences and establish connections between various modalities in heterogeneous systems. The flexibility of the transformation inherently diminishes the constraints on harmonization to the degree that they can be applied on demand when possible.

8. Conclusions

In this paper, we discussed how to leverage advances in recent AI models to effectively handle multimodality in large data collections. We focused on the potential and impact of such leverage in tackling interoperability within heterogeneous data silos. The literature is rich in approaches for using statistical inference to manage large data integration. We selected a set of models that have advanced in recent years and can be used to resolve key interoperability issues, and we outlined an approach for applying them. We demonstrated the approach using a realistic case, with reasoning steps that can be applied to the collected data as they emerge. We also highlighted the major limitations of this study, particularly regarding scale and interpretability, and pointed out ongoing challenges in quantifying uncertainty and accuracy, as well as in verifying the correctness of the overall results.

Nevertheless, combining the outputs of recent models could offer a pathway to continuous, dynamic fusion of segregated data through multimodal semantic reasoning, enabling a more resilient system that can overcome traditional integration and data migration barriers. The ability of these models to account for less intuitive transformations enhances preparedness for emerging needs, forms, and modalities. This mitigates barriers to data analysis and expedites experiments and statistical analyses through advanced reasoning queries. Our validation results indicate that the prior-integration pipeline can faithfully reconstruct validated environmental metrics from silo data using only public priors. Systems can thus evolve faster in particular aspects. Models can be equipped with native features to mitigate delays or disruptions, in accordance with modular design principles and transparent, controllable reasoning.

Author Contributions

Conceptualization, B.P.Z. and A.A.; methodology, A.A.; software, A.A.; validation, B.P.Z. and A.A.; formal analysis, A.A.; investigation, B.P.Z.; resources, A.A.; data curation, A.A.; writing—original draft preparation, A.A.; writing—review and editing, B.P.Z. and A.A.; visualization, A.A.; supervision, B.P.Z.; project administration, B.P.Z. and A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Conflicts of Interest

Authors Abdurrahman Alshareef and Bernard Phillip Zeigler were employed by the company RTSync Corp. The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| GAN | Generative Adversarial Networks |
| GPT | Generative Pre-trained Transformer |
| LLM | Large Language Model |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| SoS | Systems of Systems |

Appendix A. JSON Responses

| Listing A1. Full JSON representation of the unified schema, source profile, and inferred schema. |

{

"version": "0.0.1",

"unified_schema": {

"dimensions": {

"Commodity": { "type": "string", "id_key": "commodity_id" },

"Time": { "type": "temporal", "grain": "year", "id_key": "time_id" }

},

"measures": {

"availability_per_capita": {

"type": "numeric",

"unit": "Pounds per capita"

}

},

"relations": {

"food_data": {

"keys": ["Commodity", "Time"],

"measures": ["availability_per_capita"]

}

}

},

"sources": {

"fruitveg.csv": {

"profile": {

"path": "fruitveg.csv",

"shape": [884, 4],

"cols": [

{ "name": "Commodity", "type": "text" },

{ "name": "Year", "type": "num" },

{ "name": "Attribute", "type": "text" },

{ "name": "Value", "type": "num" }

],

"time_cols": ["Year"]

},

"inferred_schema": {

"columns": [

{

"name": "Commodity",

"semantic_type": "dimension",

"dtype": "text",

"time": false,

"candidate_roles": ["Commodity"]

},

{

"name": "Year",

"semantic_type": "dimension",

"dtype": "num",

"time": true,

"candidate_roles": ["Time"]

},

{

"name": "Attribute",

"semantic_type": "dimension",

"dtype": "text",

"time": false,

"candidate_roles": ["Commodity", "Form", "Unit"]

},

{

"name": "Value",

"semantic_type": "measure",

"dtype": "num",

"unit": "Pounds per capita",

"time": false

}

],

"suggested_dimensions": ["Commodity", "Year", "Attribute"],

"suggested_measures": ["Value"]

}

}

}

}

|

| Listing A2. Detailed measure definitions with semantic units. |

{

"measures": {

"availability_per_capita": {

"type": "numeric",

"unit": "Pounds per capita"

},

"eggs_shell_equiv_million_dozen": {

"type": "numeric",

"unit": "Shell-egg equivalent, Million dozen"

},

"eggs_total_million_pounds": {

"type": "numeric",

"unit": "Million pounds"

},

"eggs_lbs_per_capita": {

"type": "numeric",

"unit": "Pounds per capita"

},

"population_millions": {

"type": "numeric",

"unit": "Millions"

}

}

}

|

| Listing A3. Full JSON for Vegetable-Prices-2022.csv: profile and inferred schema. |

{

"sources": {

"Vegetable-Prices-2022.csv": {

"profile": {

"path": "Vegetable-Prices-2022.csv",

"shape": [93, 8],

"cols": [

"Vegetable",

"Form",

"RetailPrice",

"RetailPriceUnit",

"Yield",

"CupEquivalentSize",

"CupEquivalentUnit",

"CupEquivalentPrice"

]

},

"inferred_schema": {

"columns": [

{ "name": "Vegetable", "semantic_type": "dimension", "candidate_roles": ["Commodity"] },

{ "name": "Form", "semantic_type": "dimension", "candidate_roles": ["Form"] },

{ "name": "RetailPrice", "semantic_type": "measure", "unit": "varies; see RetailPriceUnit" },

{ "name": "Yield", "semantic_type": "measure", "unit": "dimensionless" },

{ "name": "CupEquivalentSize", "semantic_type": "measure", "unit": "Pounds" },

{ "name": "CupEquivalentPrice", "semantic_type": "measure", "unit": "USD per cup equivalent" }

],

"suggested_dimensions": ["Vegetable", "Form"],

"suggested_measures": ["RetailPrice", "Yield", "CupEquivalentSize", "CupEquivalentPrice"]

}

}

}

}

|

References

1. Denton, N.; Molloy, M.; Charleston, S.; Lipset, C.; Hirsch, J.; Mulberg, A.E.; Howard, P.; Marsh, E.D. Data silos are undermining drug development and failing rare disease patients. Orphanet J. Rare Dis. 2021, 16, 161.

2. IBM. What Are Data Silos? 2025. Available online: https://www.ibm.com/think/topics/data-silos (accessed on 1 September 2025).

3. Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 2256–2265.

4. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Advances in Neural Information Processing Systems. Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30, pp. 5998–6008.

5. Ming, Y.; Hu, N.; Fan, C.; Feng, F.; Zhou, J.; Yu, H. Visuals to text: A comprehensive review on automatic image captioning. IEEE/CAA J. Autom. Sin. 2022, 9, 1339–1365.

6. Google LLC. Google Cloud Platform. 2025. Available online: https://cloud.google.com/ (accessed on 1 September 2025).

7. Apache Software Foundation. Apache Parquet. 2025. Available online: https://parquet.apache.org/ (accessed on 1 September 2025).

8. Apache Software Foundation. Apache Iceberg. 2025. Available online: https://iceberg.apache.org/ (accessed on 1 September 2025).

9. Snowflake Inc. Snowflake Open Catalog. 2025. Available online: https://www.snowflake.com/en/ (accessed on 1 September 2025).

10. Wuillemin, P.H.; Torti, L. Structured probabilistic inference. Int. J. Approx. Reason. 2012, 53, 946–968.

11. Bouquet, P.; Magnini, B.; Serafini, L.; Zanobini, S. A SAT-based algorithm for context matching. In Proceedings of the International and Interdisciplinary Conference on Modeling and Using Context, Stanford, CA, USA, 23–25 June 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 66–79.

12. Fey, M.; Hu, W.; Huang, K.; Lenssen, J.E.; Ranjan, R.; Robinson, J.; Ying, R.; You, J.; Leskovec, J. Relational deep learning: Graph representation learning on relational databases. arXiv 2023, arXiv:2312.04615.

13. Köpcke, H.; Rahm, E. Frameworks for entity matching: A comparison. Data Knowl. Eng. 2010, 69, 197–210.

14. Barlaug, N.; Gulla, J.A. Neural networks for entity matching: A survey. ACM Trans. Knowl. Discov. Data 2021, 15, 1–37.

15. Brachman, R.; Levesque, H. Knowledge Representation and Reasoning; Elsevier: Amsterdam, The Netherlands, 2004.

16. Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed Representations of Words and Phrases and Their Compositionality. In Proceedings of the 27th Annual Conference on Neural Information Processing Systems (NeurIPS 2013), Lake Tahoe, NV, USA, 5–10 December 2013; Advances in Neural Information Processing Systems. Curran Associates Inc.: Red Hook, NY, USA, 2013; Volume 26, pp. 3111–3119.

17. Chandrasekaran, D.; Mago, V. Evolution of semantic similarity—a survey. ACM Comput. Surv. 2021, 54, 1–37.

18. Chen, S.; Guo, W. Auto-encoders in deep learning—A review with new perspectives. Mathematics 2023, 11, 1777.

19. Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65.

20. Zhang, X.; Gao, W. Towards llm-based fact verification on news claims with a hierarchical step-by-step prompting method. arXiv 2023, arXiv:2310.00305.

21. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

22. Arslan, M.; Ghanem, H.; Munawar, S.; Cruz, C. A Survey on RAG with LLMs. Procedia Comput. Sci. 2024, 246, 3781–3790.

23. OpenAI. GPT-5. 2025. Available online: https://platform.openai.com/docs/models/gpt-5 (accessed on 1 September 2025).

24. U.S. General Services Administration. Data.gov—The Home of the U.S. Government’s Open Data. Available online: https://data.gov (accessed on 1 September 2025).

25. U.S. Department of Agriculture. USDA.gov—United States Department of Agriculture. Available online: https://www.usda.gov (accessed on 1 September 2025).

26. Liu, D.; Zuo, E.; Wang, D.; He, L.; Dong, L.; Lu, X. Deep Learning in Food Image Recognition: A Comprehensive Review. Appl. Sci. 2025, 15, 7626.

27. Selvaraj, M.G.; Vergara, A.; Montenegro, F.; Ruiz, H.A.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B.; et al. Detection of banana plants and their major diseases through aerial images and machine learning methods: A case study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124.

28. Ye, W.; Zheng, G.; Cao, X.; Ma, Y.; Zhang, A. Spurious correlations in machine learning: A survey. arXiv 2024, arXiv:2402.12715.

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).