1. Introduction

Corporate misconduct refers to deceptive or illegal activities carried out by an individual or a company. Baucus defined corporate illegality as violating administrative and civil law [1], including the falsification of financial statements, environmental violations, and financial misreporting. Studies have found that firms’ misconduct can significantly undermine market stability, investor wealth, and social trust [2,3]. The collapse of the energy giant Enron affected thousands of employees and caused shareholder losses as high as USD 74 billion. Corporate misconduct prediction therefore receives close attention from regulators and investors seeking to reduce losses and maintain a healthy market. For external investors and regulators, the financial report is a fundamental means of understanding a firm’s financial situation and plans and of assessing corporate risks and the probability of misconduct. Financial statements are vital components of these reports because their data are structured and relatively objective, but they may also contain fraudulent statements that mislead users. As a section of the financial report, Management Discussion and Analysis (MD&A) conveys textual information such as the attitudes and opinions of management. Textual information contains sentiment, which reflects the underlying emotional tone or attitude of the text (positive, negative, or neutral) and serves as a vital input for forecasting future performance and for predicting estate performance, risk, and investments [4,5,6,7].

While traditional misconduct prediction models often rely on structured financial data, such as accounting ratios and governance metrics [8,9], a growing body of research recognizes that the textual content of corporate disclosures, particularly the MD&A, contains valuable forward-looking information. Accordingly, many studies have employed sentiment analysis to extract managerial tone and predict corporate outcomes, including the likelihood of corporate misconduct [10,11]. However, the effectiveness of this approach hinges on the accuracy of the sentiment measurement itself. The dominant sentiment analysis methods in financial text analysis have been lexicon-based and traditional machine learning (ML) methods, both of which face significant limitations in this field. Lexicon methods tally words from predefined lists or dictionaries, such as the Henry List [12] and the Diction, Loughran, and McDonald List [13], to create sentiment indicators for predicting misconduct; these indicators are inherently context-agnostic. For instance, such a method identifies and counts the word “good”, which appears in the predefined list, and assigns it a positive weight when constructing a positive sentiment indicator. These approaches struggle to differentiate sentiment in phrases that share identical words and often misinterpret domain-specific terms whose polarity depends entirely on the context. These inaccuracies are particularly detrimental for misconduct prediction, as subtle, nuanced, or deceptively worded phrases may be important early indicators of underlying issues. In short, traditional lexicon-based and ML methods suffer from three limitations when sentiment is extracted from text for misconduct prediction: context dependency, domain-specific terms, and cross-lingual barriers. First, they may fail to capture semantic relationships and nuanced expressions [14]. Second, word lists are hard to update frequently, and the most relevant aspect phrases are hard to extract, because domain-specific words emerge continuously and transfer poorly across domains. Third, a lexicon constructed from foreign annual reports reflects an insufficient understanding of the textual content of Chinese annual reports [15]. Some words are merely polite formulas, and expressions that are frequent in the Chinese financial market may be overlooked by traditional methods.

Table 1 provides specific examples from Chinese MD&A texts (translated into English) that illustrate these challenges. Although these examples seem general, failing to interpret such phrases correctly can lead to a misjudgment of sentiment and of a firm’s true condition, potentially masking the early warning signs of distress that often precede misconduct.

Consider, for instance, a common phrase in MD&A: “The company’s performance is better than expected despite challenges” (the first row in Table 1). A traditional lexicon-based approach would process this sentence by simply counting words. It would likely register “better” as a positive term and “challenges” as a negative term. Depending on the specific dictionary weights, the net sentiment could be calculated as neutral or even slightly negative, completely missing the overarching optimistic tone. The method is mechanically blind to the crucial contextual cues provided by phrases like “better than expected” and “despite”, which signal resilience and outperformance. Our MDARisk, in contrast, is designed to understand these relational phrases and syntactic structures. It can recognize that “despite challenges” is a subordinate clause that sets up a contrast, and that the main clause, “performance is better than expected”, carries the dominant, positive sentiment. This ability to comprehend complex sentence structures, rather than just isolated words, is precisely what is needed to accurately gauge managerial tone from nuanced disclosures.

Table 1 provides further examples.
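The word-counting behavior described above can be illustrated with a minimal sketch. The two word sets below are hypothetical toy lists chosen for this example only; they are not the Henry or Loughran and McDonald lists, and the equal weighting of every word is an assumption.

```python
import re

# Hypothetical toy lexicon (an assumption for illustration; real lists are far larger).
POSITIVE = {"better", "good", "strong"}
NEGATIVE = {"challenges", "loss", "decline"}


def lexicon_score(text: str) -> int:
    """Return (# positive words) - (# negative words), ignoring all context."""
    tokens = re.findall(r"[a-z]+", text.lower())
    positives = sum(token in POSITIVE for token in tokens)
    negatives = sum(token in NEGATIVE for token in tokens)
    return positives - negatives


sentence = "The company's performance is better than expected despite challenges"
score = lexicon_score(sentence)
print(score)  # 0: "better" and "challenges" cancel out
```

With equal weights, the optimistic sentence is scored as neutral, because the counter cannot see that “despite” subordinates the negative term.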

Overall, lexicon and conventional ML methods have difficulty identifying the true sentiment of text written in a specialized language context, which may weaken the accuracy of misconduct prediction. Our research aims to enhance the precision of sentiment analysis and thereby improve predictive performance in identifying misconduct.

Our study focuses on how to enhance the predictive performance of sentiment. To address these limitations, we explore the potential of Large Language Models (LLMs) to enhance sentiment analysis for corporate misconduct prediction. Unlike traditional lexicon methods that rely on predefined word lists [12,13], LLMs are trained on vast textual corpora, enabling them to interpret language in a context-aware manner [16]. This inherent capability allows them to overcome the key challenges identified in Table 1. For example, an LLM can differentiate the sentiment of “solid performance” from that of “solid debt” by analyzing the entire phrase, and it can infer the sentiment of domain-specific terms like “hedging” from the surrounding sentence structure. We propose that by leveraging these advanced capabilities, a more nuanced and accurate sentiment measure can be extracted from MD&A texts. The central aim of this research is therefore to design and validate a new approach, which we name MDARisk, that uses an LLM-based core, the MultiSenti module, to capture these context-sensitive signals and improve the prediction of corporate misconduct.

To achieve this goal, we conduct a comprehensive empirical study using textual data from the MD&A sections of Chinese A-share listed companies. Our research design follows a two-phase validation strategy. First, we establish the economic significance of our LLM-derived sentiment measure by examining its association with subsequent corporate misconduct. Second, we assess its practical utility by evaluating its incremental contribution to out-of-sample misconduct prediction when compared against both baseline and traditional lexicon-based models [17]. Our findings reveal that the sentiment extracted by our approach is a powerful and robust predictor of future corporate violations. The inclusion of our MultiSenti-derived feature significantly enhances the performance of predictive models, demonstrating a clear superiority over established financial text analysis methods. These results highlight the substantial value of leveraging advanced language models to identify governance-related risks from corporate disclosures.

This study makes two primary contributions. First, we verify the feasibility and effectiveness of employing a Large Language Model for sentiment analysis in financial texts, which aligns with prior research [16]. Second, our MDARisk offers a lightweight, scalable monitoring tool that complements traditional quantitative methods. From a corporate misconduct prediction perspective, the empirical results provide support for developing and adopting AI-assisted surveillance systems in regulatory technology applications.

The remainder of this article is organized as follows. Section 2 reviews the relevant work on corporate misconduct and sentiment analysis. Section 3 outlines the research methodology of the MDARisk framework. Section 4 details the empirical and experimental settings of the logit regression and out-of-sample test. Section 5 discusses our results. Finally, Section 6 concludes our study.

4. Datasets and Validation Protocol for MDARisk

This section details the data, variables, and experimental protocols used to implement and evaluate the MDARisk framework introduced in Section 3. We first describe the sample and data sources, then outline the procedures for our two-phase validation: (i) an econometric test to validate the construct validity of the MultiSenti module and (ii) an out-of-sample predictive evaluation to validate the practical utility of the complete MDARisk.

4.1. Sample and Data Sources

We study A-share non-financial firms from 2019 to 2023. Annual report MD&A texts are obtained from Cninfo; firm characteristics and governance variables come from CSMAR. We exclude delisted firms and observations with missing key fields, yielding 19,988 firm-years.

4.2. Implementing MultiSenti to Measure Sentiment Score of MD&A

We implemented the MultiSenti module as described in Section 3.2.2, using Zhipu AI’s ChatGLM4-Flash to analyze the MD&A sections of corporate annual reports. For each MD&A text, the module performs three independent analytical runs to generate a final majority-vote sentiment label and an averaged intensity score (avg_sentiment_score). Considering the lagged effect of disclosure sentiment on future misconduct, the MD&A texts are from the 2018–2022 period, corresponding to misconduct outcomes from 2019 to 2023.
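The aggregation step described above (three runs, a majority-vote label, and an averaged score) can be sketched as follows. This is an illustration only: the function name `aggregate_runs` and the (label, score) input format are assumptions, and the LLM calls themselves are not shown.

```python
from collections import Counter
from statistics import mean


def aggregate_runs(runs):
    """Combine independent runs into a majority-vote label and a mean score.

    runs: list of (label, score) pairs, e.g. ("Positive", 0.8).
    The pair format is a hypothetical stand-in for the module's actual output.
    """
    labels = [label for label, _ in runs]
    majority_label = Counter(labels).most_common(1)[0][0]
    avg_sentiment_score = mean(score for _, score in runs)
    return majority_label, avg_sentiment_score


# Toy values, not real model output.
runs = [("Positive", 0.8), ("Positive", 0.9), ("Negative", 0.4)]
label, score = aggregate_runs(runs)
print(label, round(score, 2))
```

With three runs and two possible labels, a strict majority always exists, so no tie-breaking rule is needed in this sketch.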

4.3. Measure of Corporate Misconduct

We measure corporate misconduct using regulatory violation data from the CSMAR database. Specifically, we exclude violations that resulted from the personal actions of the company’s shareholders or management. Our primary dependent variable, Misconduct, is a dummy variable that equals 1 if a firm was sanctioned for a regulatory violation in the year following the annual report’s disclosure and 0 otherwise. The period for this variable ranges from 2019 to 2023, reflecting the early warning effect of sentiment in the annual report texts.
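The one-year lead between the MD&A text and the misconduct outcome can be made concrete with a small sketch. The data layout (a dictionary keyed by firm and MD&A year, and a set of sanctioned firm-years already filtered to firm-level violations) and the function name `build_firm_years` are assumptions for illustration, not the CSMAR schema.

```python
def build_firm_years(sentiment, sanctions):
    """Pair each MD&A year t with the sanction outcome observed in year t + 1.

    sentiment: {(firm, mdna_year): avg_sentiment_score}
    sanctions: set of (firm, year) pairs with a firm-level sanction
               (personal violations assumed to be filtered out beforehand).
    """
    rows = []
    for (firm, mdna_year), score in sorted(sentiment.items()):
        outcome_year = mdna_year + 1
        rows.append({
            "firm": firm,
            "mdna_year": mdna_year,
            "outcome_year": outcome_year,
            "avg_sentiment_score": score,
            "Misconduct": int((firm, outcome_year) in sanctions),
        })
    return rows


# Toy data: firm "A" is sanctioned in 2020, so its 2019 MD&A row gets Misconduct = 1.
sentiment = {("A", 2018): 0.82, ("A", 2019): 0.41, ("B", 2018): 0.77}
sanctions = {("A", 2020)}
rows = build_firm_years(sentiment, sanctions)
print([row["Misconduct"] for row in rows])  # [0, 1, 0]
```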

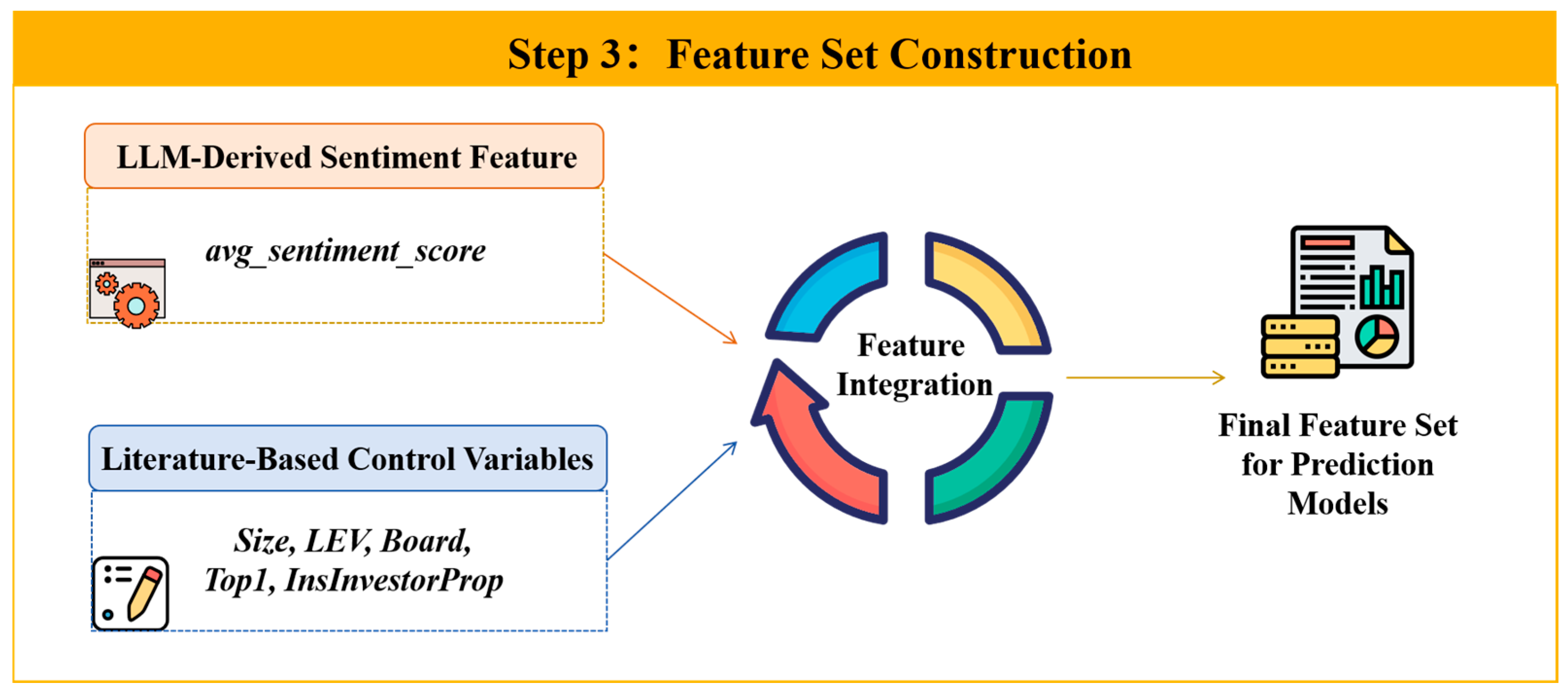

4.4. Feature Construction and Controls

To mitigate omitted variable bias and isolate the incremental effect of managerial sentiment, we include a set of control variables widely recognized in the corporate finance and accounting literature as significant predictors of corporate misconduct. Following prior studies [20,48,51], we control for firm size (Size) and financial leverage (LEV), as larger and more leveraged firms may face different levels of scrutiny and financial pressure. We also account for corporate governance characteristics [21,43,52], including board size (Board), ownership concentration (Top1), and the proportion of institutional investors (InsInvestorProp), as these factors are known to influence managerial oversight and firm behavior [21,57]. Variable definitions follow Table 2; this step corresponds to MDARisk Step 3 in Figure 1.

4.5. Econometric Validation Protocol (Phase 1)

As the first phase of our validation strategy, this econometric analysis aims to establish the construct validity of our MultiSenti module by formally testing its output against a key design requirement. Specifically, this phase validates DR1 (Capability to Interpret Context-Dependent and Domain-Specific Language) by examining whether the context-aware sentiment score generated by our artifact is economically meaningful.

To do so, we formulate our primary hypothesis, which posits that a more negative sentiment in the MD&A—as captured by a lower avg_sentiment_score—is associated with a higher probability of subsequent corporate misconduct. The hypothesis is as follows:

H1: There is a significant positive correlation between the negative sentiment tendency in MD&A text and the risk of corporate misconduct.

Through testing this hypothesis, we can verify if our artifact captures a genuine signal of governance risk rather than statistical noise. A significant finding would provide strong evidence that MultiSenti, by fulfilling DR1, can extract information relevant to corporate risk that is often missed by context-agnostic methods. This step is a prerequisite for the subsequent predictive validation in Phase 2.

Based on H1, we construct a panel logit model as a baseline model to examine the relationship between MD&A sentiment and the likelihood of corporate misconduct. The use of a logit model is standard for rare-event corporate outcomes [58]. To control for unobserved firm-specific heterogeneity and common time shocks, we incorporate both firm and year fixed effects in our preferred specification.

The dependent variable is the corporate misconduct risk indicator, measured by a dummy variable representing whether the company has been penalized for violations. The primary independent variable is the sentiment score of the annual report text. Control variables include company size, board size, financial leverage, ownership concentration, and institutional ownership. To prevent corporate misconduct from influencing the independent variables, all independent variables are lagged by one period. This approach concentrates on the impact of ex ante factors on the company’s motivation for, and the severity of, violations.
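As a compact restatement, the specification described above can be sketched in the following form. The symbols ($\beta_0$, $\beta_1$, $\boldsymbol{\gamma}$, $\mu_i$, $\lambda_t$) are illustrative notation introduced here, not notation taken from the estimated model; $\mathbf{X}_{i,t-1}$ stands for the lagged controls (Size, LEV, Board, Top1, InsInvestorProp), $\mu_i$ for firm fixed effects, and $\lambda_t$ for year fixed effects.

```latex
\Pr\left(\mathit{Misconduct}_{i,t} = 1\right)
  = \Lambda\!\left(\beta_0
      + \beta_1\,\mathit{avg\_sentiment\_score}_{i,t-1}
      + \boldsymbol{\gamma}^{\top}\mathbf{X}_{i,t-1}
      + \mu_i + \lambda_t\right),
\qquad
\Lambda(z) = \frac{1}{1 + e^{-z}}
```

In this notation, H1 corresponds to $\beta_1 < 0$, and a change of $\Delta$ in the lagged sentiment score multiplies the odds of misconduct by $\exp(\beta_1 \Delta)$.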

4.6. Predictive Validation Protocol (Phase 2)

To operationalize the second phase of our validation, which evaluates the predictive utility of the full MDARisk, we design a series of out-of-sample prediction experiments using multiple machine learning classifiers: Logistic Regression, Support Vector Machine (SVM), Gradient Boosting Decision Trees (GBDTs), Linear Discriminant Analysis (LDA), and XGBoost. Using multiple classifiers ensures that our findings are robust and not contingent on the specific assumptions of a single algorithm, a common practice in predictive modeling research [59].

We compare three feature configurations:

Baseline Features: Includes firm-level controls such as industry classification, institutional ownership ratio, etc.;

Baseline + Traditional Sentiment: The baseline features augmented with sentiment scores generated by the lexicon-based method of Jiang et al. [17];

Baseline + LLM Sentiment: The baseline features augmented with the avg_sentiment_score produced by MultiSenti.
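The three configurations listed above can be expressed as named feature sets. This is a sketch under assumptions: the baseline list below uses only the control names given in Section 4.4 and omits other baseline features such as industry classification, and the column name `lexicon_sentiment` is hypothetical.

```python
# Control names from Section 4.4; the full baseline set is an assumption.
BASELINE = ["Size", "LEV", "Board", "Top1", "InsInvestorProp"]

FEATURE_SETS = {
    "baseline": BASELINE,
    # "lexicon_sentiment" is a hypothetical column name for the lexicon-based score.
    "baseline_traditional": BASELINE + ["lexicon_sentiment"],
    "baseline_llm": BASELINE + ["avg_sentiment_score"],
}


def select_features(row, config):
    """Return the feature vector of one firm-year for the chosen configuration."""
    return [row[name] for name in FEATURE_SETS[config]]


# Toy firm-year with made-up values.
row = {"Size": 22.1, "LEV": 0.45, "Board": 9, "Top1": 0.33,
       "InsInvestorProp": 0.12, "lexicon_sentiment": 0.05,
       "avg_sentiment_score": 0.8}
print(select_features(row, "baseline_llm"))
```

Keeping the baseline columns identical across configurations means that any difference in performance is attributable to the added sentiment feature.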

The MultiSenti module generates the sentiment feature by averaging the scores from three independent analytical runs for each MD&A document. To ensure a realistic forecasting scenario, we use a time-based holdout split (70% for training, 30% for testing) and apply SMOTE to the training set only to handle the class imbalance of the minority misconduct class [52]. Model performance is assessed using Accuracy together with metrics better suited to imbalanced data, namely Recall, F1-score, and AUC. All results are averaged across multiple runs with different random seeds to ensure robustness.
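The holdout split and the evaluation metrics described above can be sketched with the standard library alone. Several points are assumptions made for illustration: observations carry a hypothetical `year` field, the 70/30 cut is applied to the chronologically ordered observations, and SMOTE and classifier fitting are omitted entirely.

```python
def time_based_split(rows, train_pct=70):
    """Order rows chronologically and cut once, so no test row precedes a training row."""
    ordered = sorted(rows, key=lambda r: r["year"])
    cut = len(ordered) * train_pct // 100
    return ordered[:cut], ordered[cut:]


def classification_metrics(y_true, y_pred):
    """Accuracy, Recall, and F1 for the positive (misconduct) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": (tp + tn) / len(pairs), "recall": recall, "f1": f1}


def auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data for illustration only.
rows = [{"year": y} for y in (2021, 2019, 2023, 2020, 2022) for _ in range(2)]
train, test = time_based_split(rows)
metrics = classification_metrics([0, 0, 1, 1], [0, 1, 1, 0])
area = auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(len(train), len(test), metrics, area)
```

Oversampling would be applied to `train` only after this split, so that synthetic minority observations never leak into the test period.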

5. Validation Results

This section presents the results of the two-phase validation strategy designed to evaluate our MDARisk framework. We begin with descriptive statistics, followed by the results of our Phase 1 econometric validation, which assesses the construct validity of the core MultiSenti module. We then present the results of our Phase 2 predictive validation, which demonstrates the practical utility of the complete MDARisk framework.

5.1. Descriptive Statistics

Table 3 shows the descriptive statistics. The mean of Misconduct is 0.1251, indicating that approximately 12.5% of the firm-year observations in our sample are associated with a corporate misconduct event in the subsequent year.

Regarding our sentiment metrics, we present two related indicators. First, Sentiment is a binary variable derived from the majority vote of our three LLM agents (1 for “Positive”, 0 for “Negative”). Its mean value is 0.9510, which aligns with the general observation that management tends to frame disclosures optimistically and maintain a positive tone regarding the company’s business. Our core explanatory variable, avg_sentiment_score, captures the intensity of this sentiment as a continuous measure from 0 to 1 and is therefore more nuanced than the binary classification. The mean of avg_sentiment_score is 0.7967, which further suggests that most listed companies convey optimism about their business conditions and future prospects to external parties.

5.2. Econometric Validation of the MultiSenti Module (Phase 1)

To examine the association between MD&A sentiment and future firm performance and risk, we start our analyses with Spearman’s rank correlation. Table 4 shows the association. The sentiment indicators Sentiment and avg_sentiment_score are significantly negatively correlated with the indicator of corporate misconduct risk, Misconduct. This suggests that MD&A disclosures provide additional information that is predictive of company performance and risk and that is hard to capture with financial data. It also provides an initial, unconditional indication that a more negative tone relates to higher violation risk, which supports the fixed-effects tests below.

Following our econometric validation protocol as mentioned in Section 4.5, we now present the results of our Phase 1 validation, which is designed to assess the construct validity of the core MultiSenti module. The central question here is whether the sentiment score it generates is a meaningful economic indicator of future corporate misconduct.

Table 5 presents the regression results for the effect of the sentiment score on corporate misconduct. Column 1 reports the logit model without fixed effects, column 2 adds firm fixed effects, and column 3 includes both firm and year fixed effects. The number of observations differs across models because firms observed in only one period are dropped. We find that avg_sentiment_score has a significantly negative effect on corporate misconduct risk (Misconduct). This again indicates that the more negative the sentiment in the text, the higher the probability of corporate misconduct and the greater the company’s risk. The results are consistent with expectations and support our hypothesis. They also corroborate the value of sentiment information documented in other studies [15,31]: the negative emotions expressed by management in the annual report may reflect operational difficulties faced by the company, as well as potential issues that management might be attempting to conceal, thus increasing the company’s future financial risk and motivation for misconduct. The logit results show a similar relationship across specifications, significant at the 1% level, further supporting the predictive power of negative sentiment in the text for misconduct. In terms of magnitude, a one-SD increase in avg_sentiment_score (0.129) reduces the odds of next year’s misconduct by about 8–19% (exp[β × 0.129], depending on the estimated β in each specification). These results indicate that sentiment evaluated by the Large Language Model can efficiently reflect a firm’s condition and violation risk.

This finding provides strong empirical support for our hypothesis (H1) and, crucially, validates that our MultiSenti module captures a reliable and theoretically consistent signal of misconduct risk.

5.3. Robustness Test for Phase 1

To ensure the robustness of our Phase 1 validation findings, we conduct two additional tests: excluding the anomalous COVID-19 period and using an alternative measure for the dependent variable. The special circumstances of the pandemic in 2020 may have affected corporate operations and information transmission. Listed companies may have adopted unusually optimistic tones in their Management Discussion and Analysis (MD&A) to cope with the crisis. Consequently, we exclude the 2020 samples and re-estimate the fixed-effects model to verify the robustness of the baseline regression.

Table 6 shows that the coefficient for the sentiment score in the annual report is significant at the 1% level, indicating that the sentiment score continues to exhibit a robust negative relationship with firm risk after excluding the pandemic’s influence. This result is consistent with the baseline regression. Specifically, the coefficient in the test that excludes the 2020 data is larger in magnitude than in the baseline regression, suggesting that abnormal changes in management sentiment have stronger predictive power for firm risk in a normal market environment. The coefficient of avg_sentiment_score is −0.8161 and significant at the 1% level. This implies an odds ratio of approximately 0.90 (exp[−0.8161 × 0.129]), suggesting that for a one-SD increase in the sentiment score, the odds of a firm committing a violation decrease by about 10%. This confirms the strong negative association between managerial sentiment and misconduct risk and further validates the role of tone in textual risk early warning.
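The odds-ratio arithmetic reported above can be reproduced in a few lines. The coefficient (−0.8161) and the standard deviation (0.129) are taken from the text; the function name is a hypothetical helper introduced for this sketch.

```python
import math


def odds_ratio_per_sd(beta: float, sd: float) -> float:
    """Multiplicative change in the odds for a one-SD increase in the regressor."""
    return math.exp(beta * sd)


ratio = odds_ratio_per_sd(-0.8161, 0.129)
pct_change = (ratio - 1) * 100
print(f"odds ratio = {ratio:.4f}, change in odds = {pct_change:.1f}%")
```

The result rounds to an odds ratio of 0.90, that is, a decrease in the odds of roughly 10% per one-SD increase in avg_sentiment_score.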

From a model specification perspective, incorporating time fixed effects helps control for macroeconomic fluctuations, such as the impact of changes in the broader environment. Although the sample size decreased due to the exclusion of 2020 data, it still meets the basic requirements for econometric analysis, and the key variables remain significant. This result further strengthens the robustness of the research conclusion, indicating that abnormal changes in the sentiment of management can provide valuable risk signals independent of external shocks.

To further test robustness, we measure corporate misconduct risk by the number of misconduct events in the current year (Violation_count). As in the baseline model, we include firm and year fixed effects. Table 7 shows that avg_sentiment_score has a consistently significant negative effect on Violation_count in all models, reinforcing that the framework captures systematic variation in governance risk rather than noise. Collectively, these robustness checks strengthen our confidence in the construct validity of the MultiSenti module’s sentiment score.

5.4. Validation of the MDARisk (Phase 2)

Having established the construct validity of our core component, we now proceed to the phase 2 validation, which evaluates the practical utility and predictive effectiveness of the entire MDARisk in a realistic forecasting scenario.

Table 8 shows the out-of-sample performance.

The superior out-of-sample predictive performance of the MDARisk, particularly when compared to the model augmented with traditional lexicon-based sentiment, provides strong empirical evidence that our MultiSenti module successfully fulfills DR1 (Context-Aware Sentiment Analysis). By consistently outperforming this baseline, our LLM-based approach demonstrates its capability to capture the nuanced, context-sensitive signals within MD&A texts, which translates directly into improved misconduct prediction. Specifically, we can draw two key conclusions. First, compared with the baseline models that use only firm-level features, the inclusion of sentiment scores generated by the MDARisk leads to notable gains across nearly all classifiers, particularly in Accuracy and AUC. For instance, using the GBDT classifier, Accuracy increased from 76.37% to 82.37%, a 6 percentage point improvement, while AUC also improved slightly from 0.6705 to 0.6728. Similar trends can be observed in other models. The Logistic Regression model, for example, saw its Accuracy increase from 67.40% to 69.55% (2.15 percentage points) and its AUC rise from 0.6639 to 0.6677. The SVM model improved from 65.54% to 68.40% in Accuracy, an increase of 2.86 percentage points. This demonstrates that our proposed feature provides predictive information beyond traditional firm-level controls.

Second, and more importantly, our approach consistently outperforms the traditional lexicon-based sentiment method. The traditional sentiment method based on Jiang et al.’s financial lexicon yields only marginal or inconsistent, and in some cases even negative, improvements in both Accuracy and AUC across most models [17]. In a direct comparison, for every single classifier, the model incorporating our LLM-driven sentiment achieves higher Accuracy and a higher AUC than the model using the traditional sentiment score. This finding robustly validates that MDARisk is more effective at extracting predictively useful signals from MD&A texts than the existing lexicon-based solution.
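The gains quoted in this subsection follow directly from the reported before-and-after values, as the short check below shows. Only figures stated in the text are used; the dictionary layout is an assumption for illustration.

```python
# (baseline, with LLM sentiment) pairs as reported in the text.
ACCURACY = {
    "GBDT": (76.37, 82.37),
    "Logistic Regression": (67.40, 69.55),
    "SVM": (65.54, 68.40),
}
AUC = {
    "GBDT": (0.6705, 0.6728),
    "Logistic Regression": (0.6639, 0.6677),
}

# Accuracy gains in percentage points and AUC gains in absolute terms.
accuracy_gain = {m: round(new - old, 2) for m, (old, new) in ACCURACY.items()}
auc_gain = {m: round(new - old, 4) for m, (old, new) in AUC.items()}

for model, gain in accuracy_gain.items():
    print(f"{model}: +{gain:.2f} percentage points in Accuracy")
for model, gain in auc_gain.items():
    print(f"{model}: +{gain:.4f} in AUC")
```

Among the three classifiers for which the text reports both values, GBDT shows the largest Accuracy gain.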

Figure 6 visualizes the net performance gains over the baseline and summarizes our findings. The gains from the traditional method are generally small (often below 1 percentage point) and in some cases even slightly negative. In comparison, MDARisk consistently outperforms the traditional method, yielding noticeable improvements in both metrics. For example, in the GBDT and SVM models, the LLM-based approach improves Accuracy by about 6 percentage points (from 76.37% to 82.37%) and 2.86 percentage points, respectively, and delivers higher AUC values across all classifiers. These results highlight the advantage of leveraging Large Language Models to capture nuanced, context-sensitive sentiment signals from unstructured MD&A texts. Overall, the visual evidence further validates the effectiveness of our MDARisk in enhancing out-of-sample prediction of corporate misconduct.

In summary, the results from this phase successfully validate the MDARisk framework as an effective tool for corporate misconduct prediction. Its ability to understand context and nuance—a key limitation of traditional methods—translates directly into stronger out-of-sample predictive performance, thereby demonstrating its superior practical utility.

6. Conclusions

This study undertook a design-science-inspired approach to develop and rigorously evaluate MDARisk, a novel framework for predicting corporate misconduct from Chinese MD&A disclosures. Motivated by the documented limitations of traditional lexicon-based methods, our primary objective was to build an artifact that could extract contextual sentiment with higher accuracy, thereby improving the overall predictive performance for corporate misconduct. Through a comprehensive two-phase evaluation, this research has successfully demonstrated the validity and utility of our proposed solution.

Utilizing a dataset comprising China’s A-share listed companies from 2019 to 2023 (MD&A 2018–2022), we first established construct and economic validity. In firm and year fixed effect logit models with a one-period lag and extensive robustness checks, the LLM-derived avg_sentiment_score is significantly negatively associated with next-year misconduct and with the number of violations. This indicates that MultiSenti extracts a sentiment signal that is economically meaningful for governance risk. We then validated system-level predictive utility. Across diverse classifiers and a time-based split, adding the MultiSenti feature improves out-of-sample Accuracy and AUC over both a baseline without text and a lexicon-augmented baseline, demonstrating incremental information content for forecasting violations.

Through this successful validation, this study makes several key contributions. (i) We propose MultiSenti, a novel Multi-Agent module leveraging LLMs for sentiment analysis, which addresses the inherent limitations of traditional methods in handling challenges such as semantic ambiguity, policy-oriented language nuances, and evolving terminology specific to the Chinese financial context. (ii) We empirically demonstrate the effectiveness of our artifact through a rigorous two-phase validation. Our Phase 1 econometric validation confirmed the construct validity of MultiSenti by establishing a robust negative association between its sentiment score and subsequent corporate misconduct. Our Phase 2 predictive validation then established the practical utility of the complete MDARisk framework, showing that it significantly enhances out-of-sample prediction accuracy compared to baseline and traditional methods. Finally, the application of LLM technology, as implemented in our framework, offers a lightweight and efficient solution for processing vast amounts of textual disclosure data, thereby enhancing regulatory efficiency and supporting more rational assessments of corporate risk based on annual reports.

Based on the research conclusions, we offer practical recommendations from multiple perspectives. First, listed companies should enhance the informational value and transparency of MD&A by providing context-rich, forward-looking narratives on risks and internal controls and by ensuring the content is truthful, accurate, and complete to reduce information asymmetry. Second, the regulatory department can incorporate text-based analytics—particularly LLM-driven sentiment, readability, and uncertainty—into early-warning systems to prioritize reviews of high-risk filings, while strengthening enforcement against false or misleading disclosure. Third, investors should combine sentiment-based text signals with fundamentals to assess ex ante violation risk and exercise caution toward firms with poor disclosure quality. In addition, financial media should standardize the dissemination of information to avoid over-interpreting text features and ensure the effective transmission of market information. Collectively, these measures form a “corporate self-discipline—strengthened regulation—market rationality” governance system, which helps optimize the information environment in capital markets, prevent misconduct risks, and promote the healthy development of markets.

While our findings are robust, we acknowledge certain limitations that present avenues for future research. Our analysis centered on sentiment; future work could integrate other textual dimensions, such as readability or topic modeling, within MDARisk for a more comprehensive risk assessment, which may improve the explanatory power of the model. Beyond expanding the textual scope, the sentiment analysis method itself also has limitations worth noting.

First, the LLM may overestimate negative emotions because annual reports are replete with repetitive, standardized legal disclaimers and descriptions of risk factors (for example, “The COVID-19 pandemic may have a significant adverse impact on our future performance”). The LLM may judge all such text as “negative”, even when it is a routine statement required by law rather than genuine pessimism on the part of management. Second, the LLM method may have trouble detecting antiphrasis or abnormal sentiment, which may be manipulated by a company that has committed misconduct [43]. Additionally, applying LLMs to sentiment analysis entails substantial computational costs, which pose a significant constraint for large-scale organizational use cases involving high-frequency updates or the processing of massive textual datasets.

Furthermore, methods for improving LLM-based textual analysis in detecting sarcasm, boilerplate legalese, and similar phenomena still require future research. Fine-tuning the LLM and extracting more textual features could significantly boost its adaptability to industry-specific contexts and linguistic subtleties. Such an approach may improve the practical effectiveness of LLM-based sentiment analysis across diverse application scenarios.

In sum, we design, build, and validate MDARisk. The framework delivers an economically meaningful measure of managerial tone via MultiSenti and demonstrably improves out-of-sample prediction of corporate misconduct, providing a practical and scalable complement to traditional risk assessment.