A Systematic Review of Generative AI in K–12: Mapping Goals, Activities, Roles, and Outcomes via the 3P Model

Abstract

1. Introduction

2. Literature Review

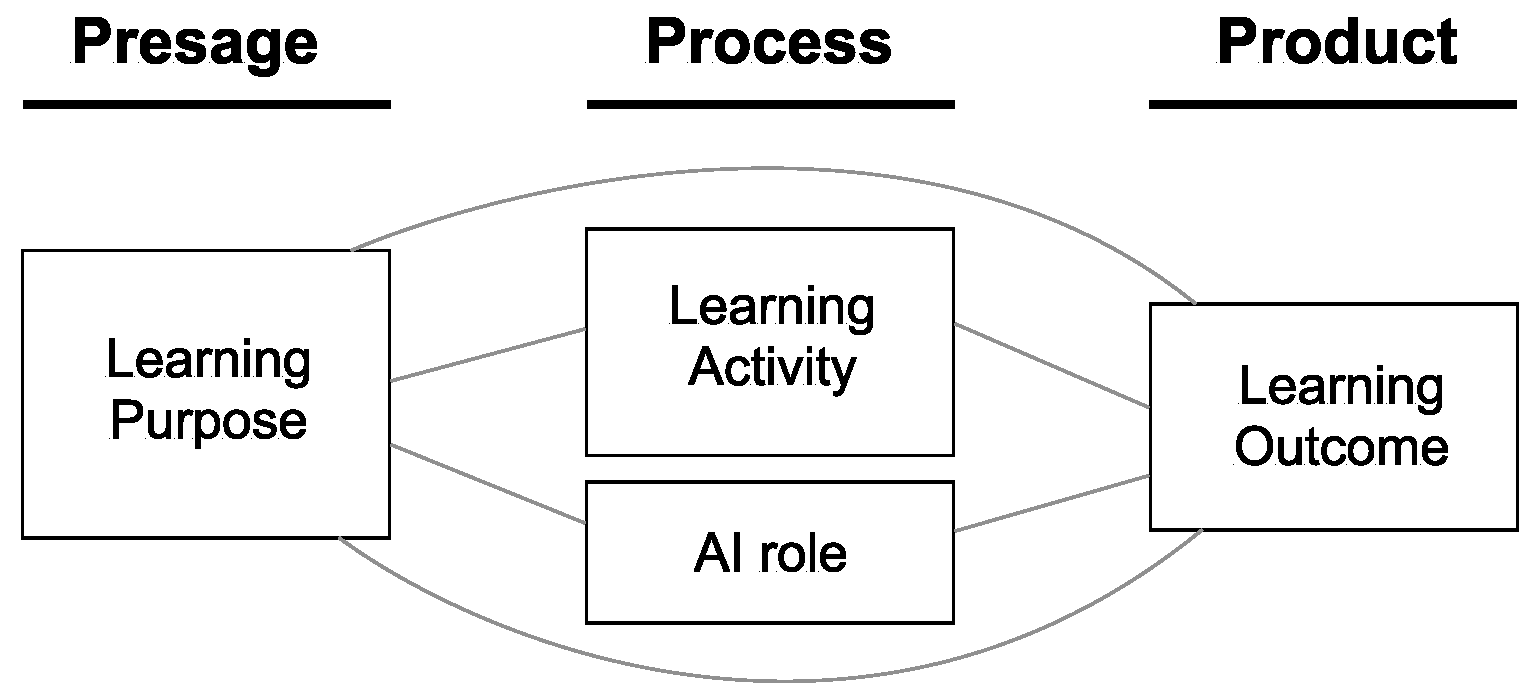

3. Materials and Methods

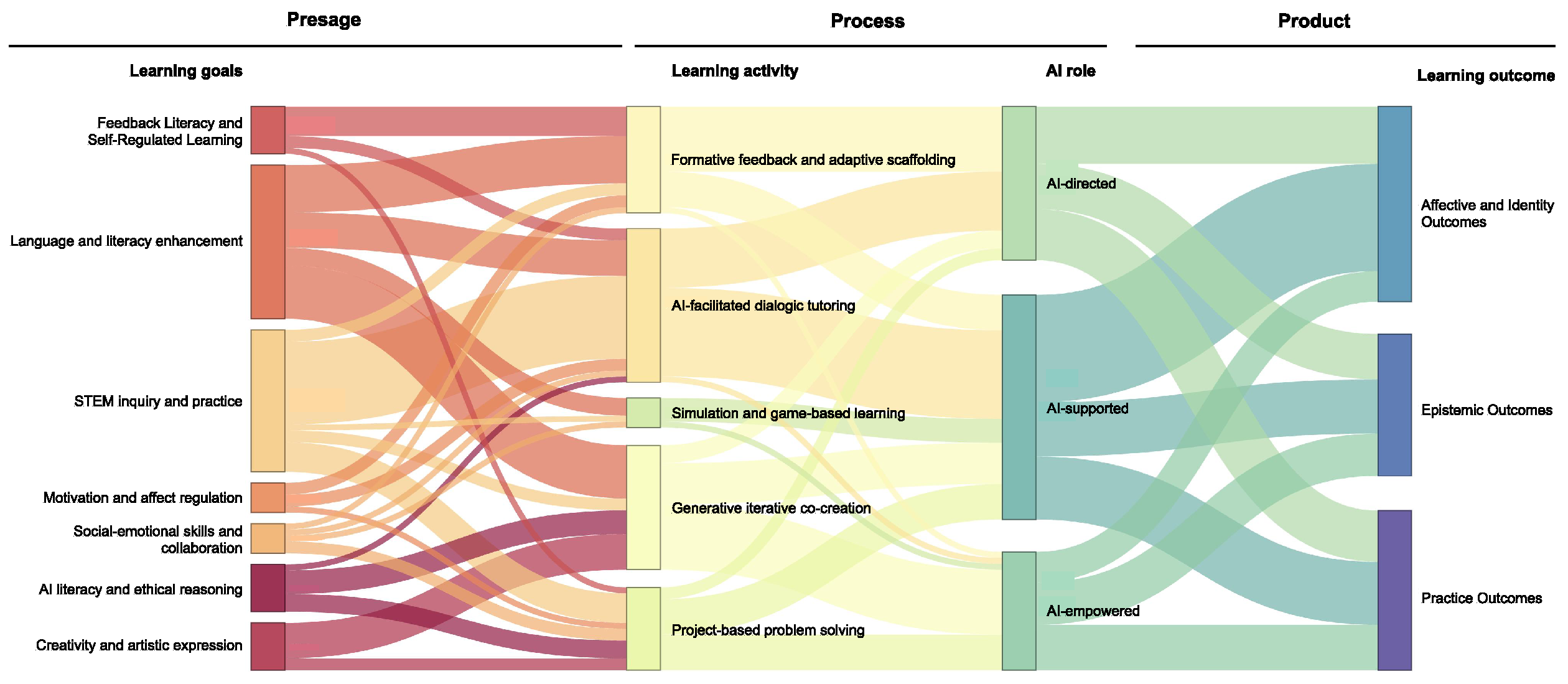

- RQ1

- (Presage): What primary learning goals does GenAI support for children and adolescents, and which educational needs do these goals address?

- RQ2

- (Process): What learning activities are implemented with GenAI?

- RQ3

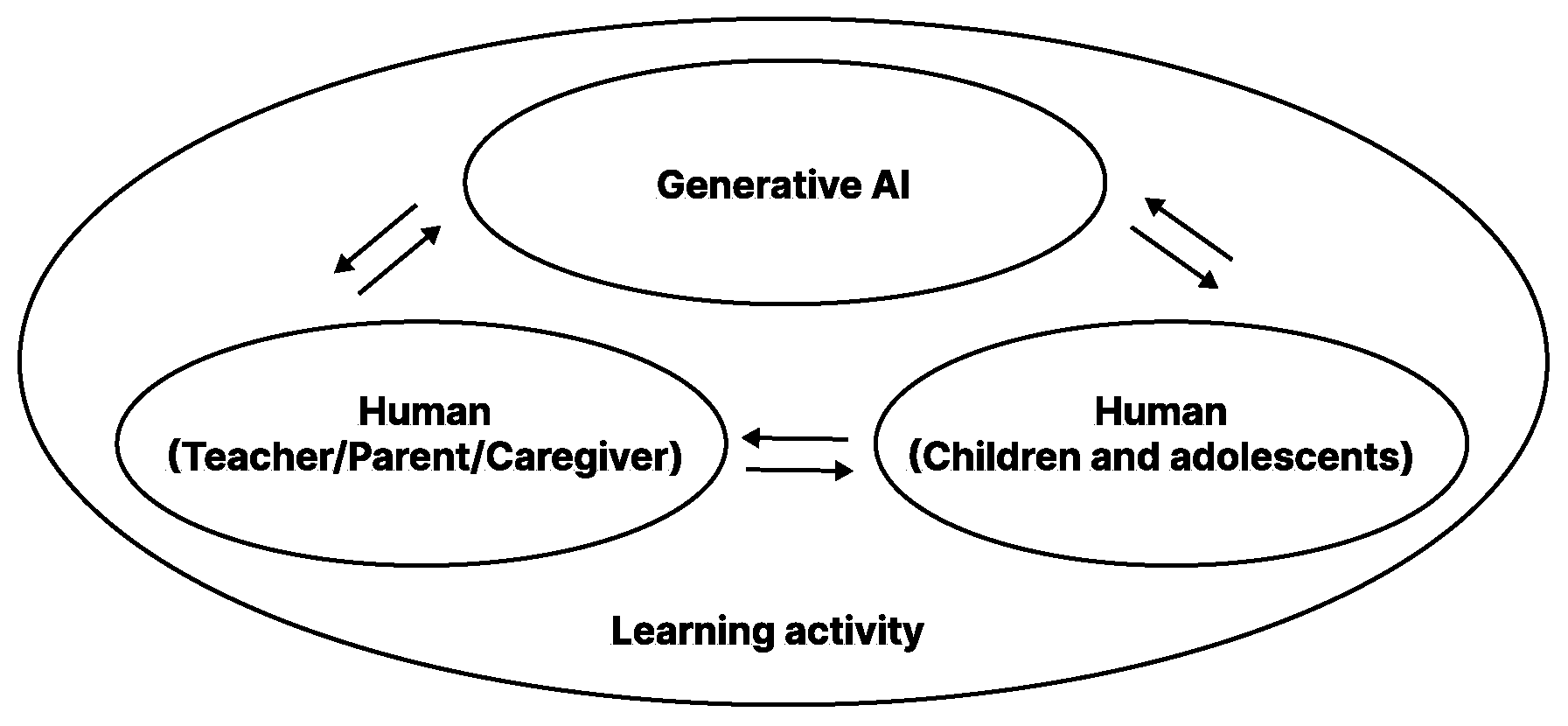

- (Process): How do AI, students, teachers, and parents collaborate, and what role patterns emerge?

- RQ4

- (Product): What opportunities and risks in learning outcomes are reported when GenAI is introduced?

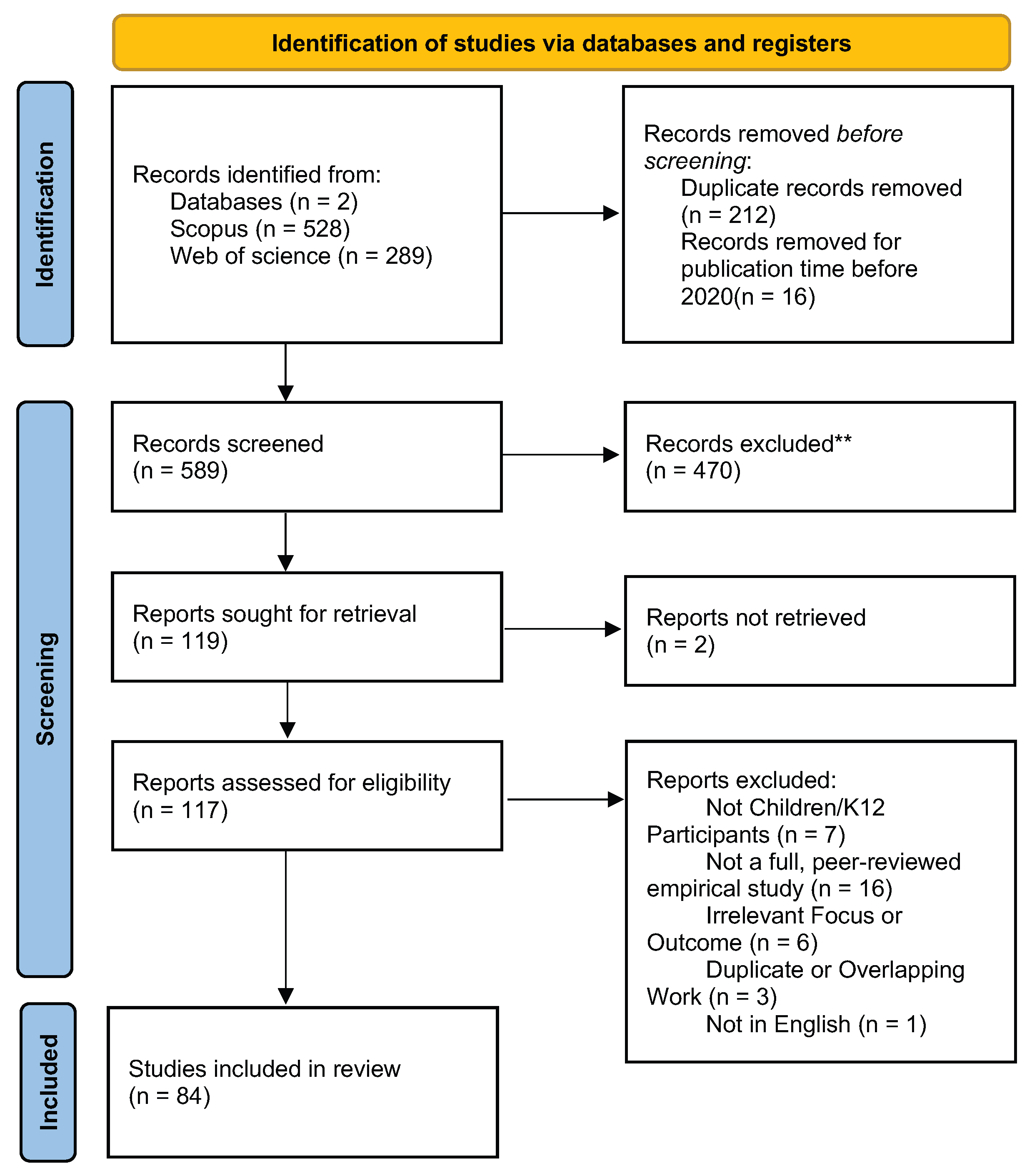

3.1. Search Strategy

3.2. Selection Method

3.3. Selection Results

3.4. Coding Scheme

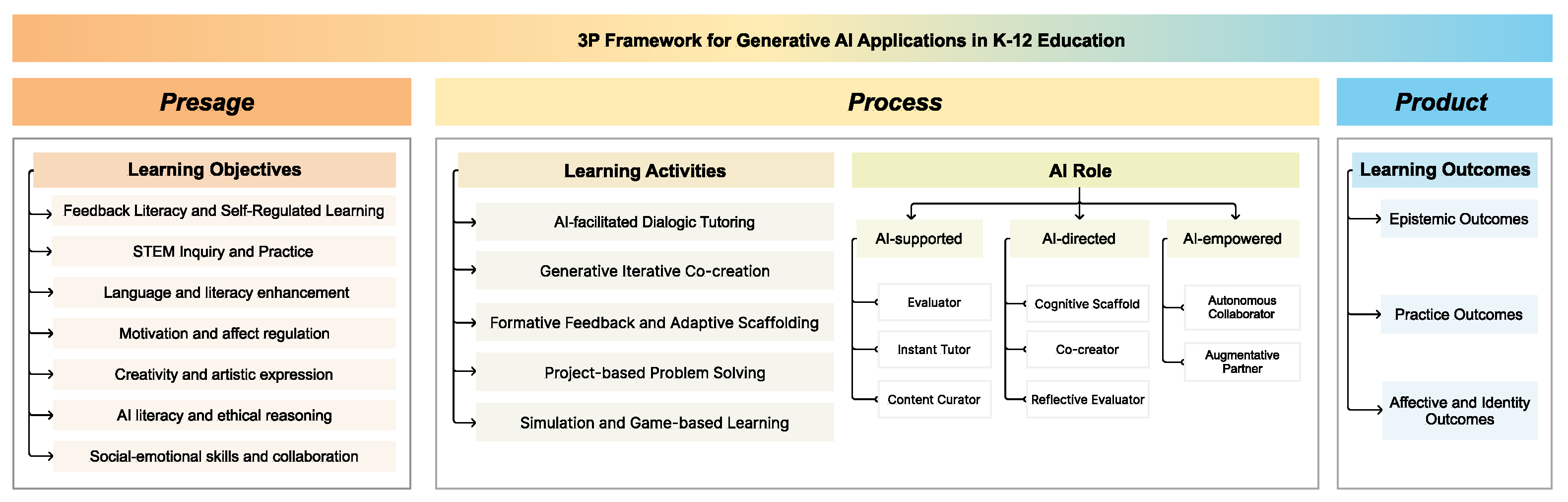

4. Results

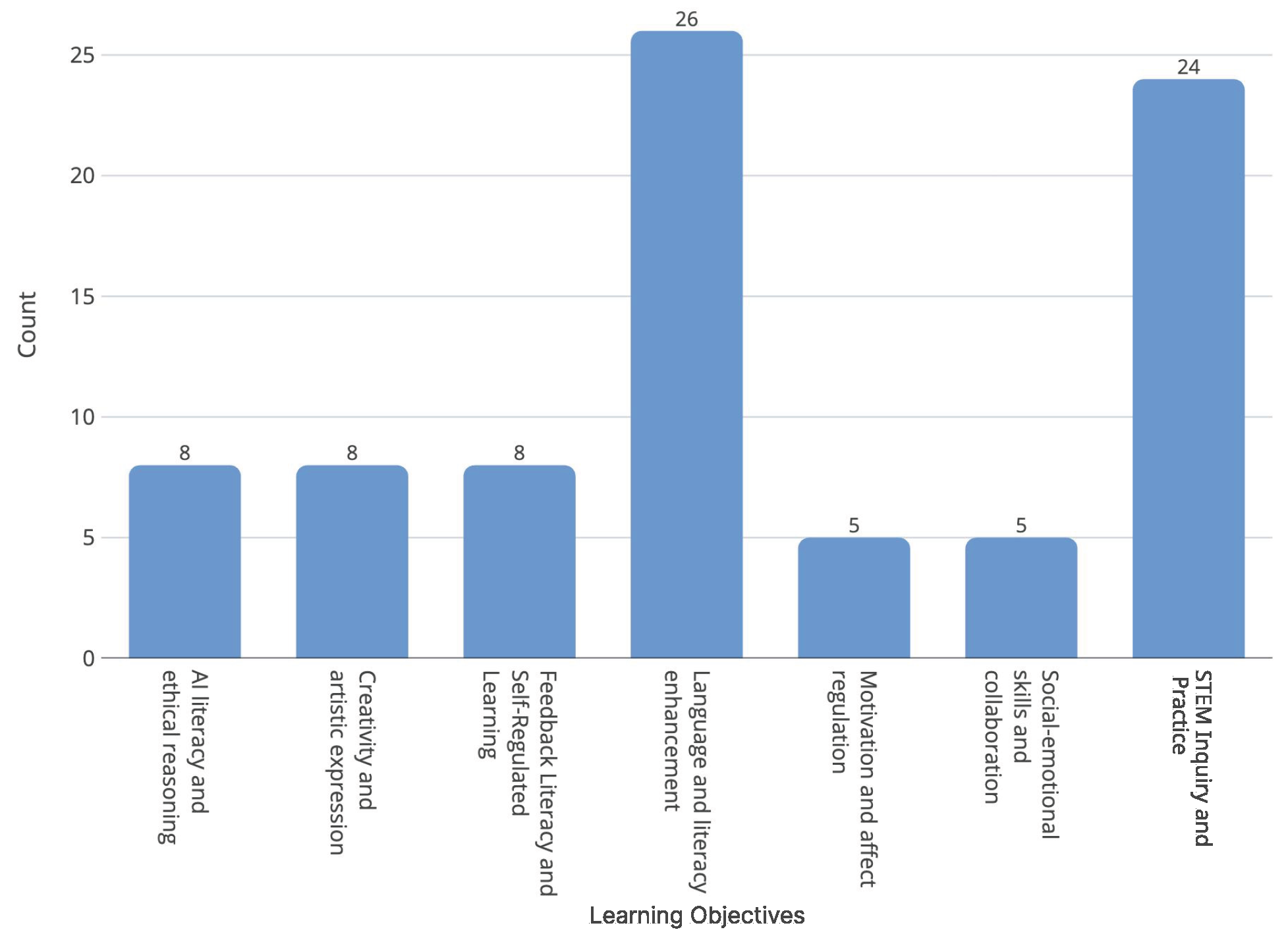

4.1. Learning Objectives

4.1.1. Language and Literacy Enhancement

4.1.2. STEM Inquiry and Practice

4.1.3. Motivation and Affect Regulation

4.1.4. Creativity and Artistic Expression

4.1.5. Social–Emotional Skills and Collaboration

4.1.6. Feedback Literacy and Self-Regulated Learning

4.1.7. AI Literacy and Ethical Reasoning

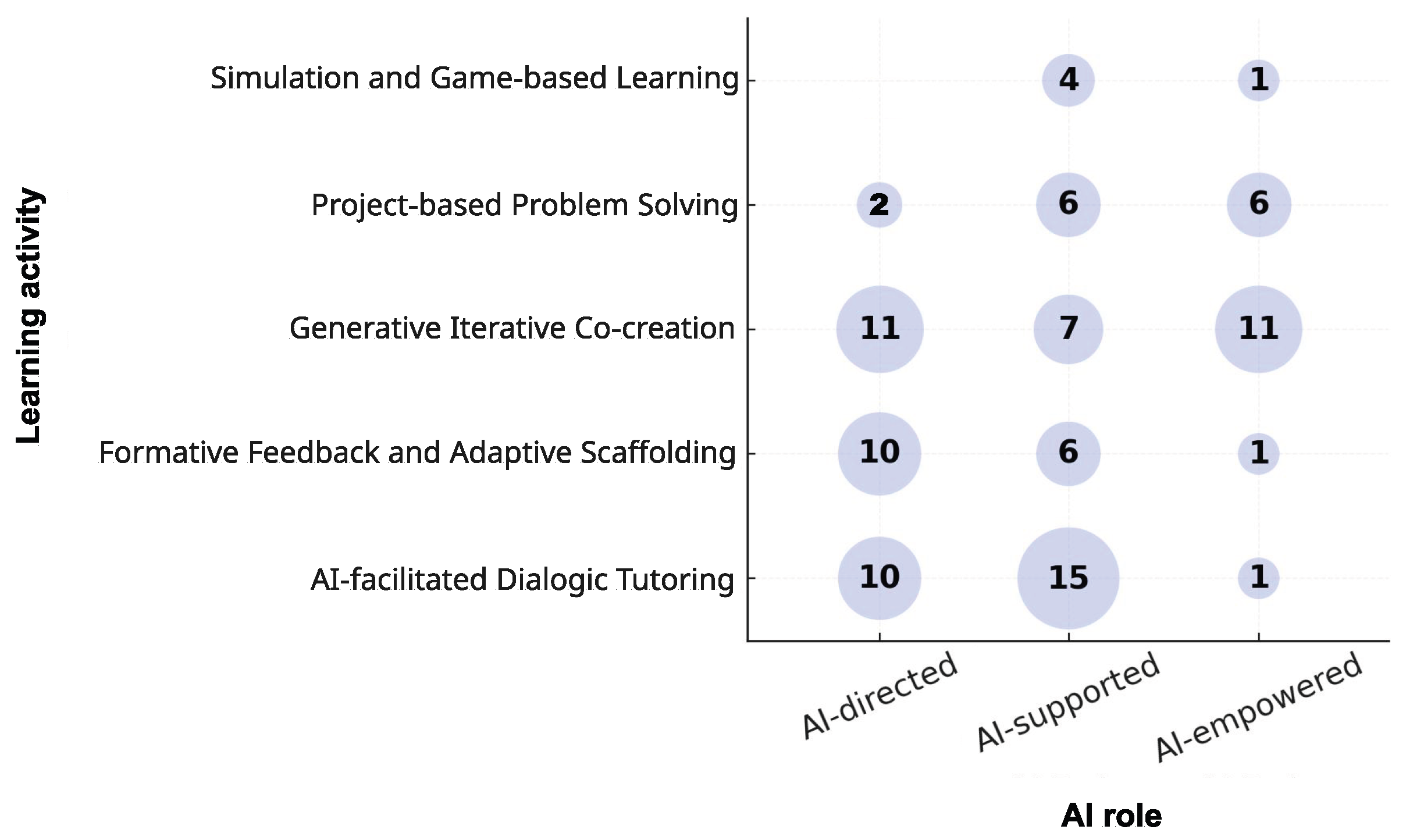

4.2. PROCESS: Learning Activities and AI Roles

4.2.1. RQ2 Learning Activities

4.2.2. RQ3 AI Role

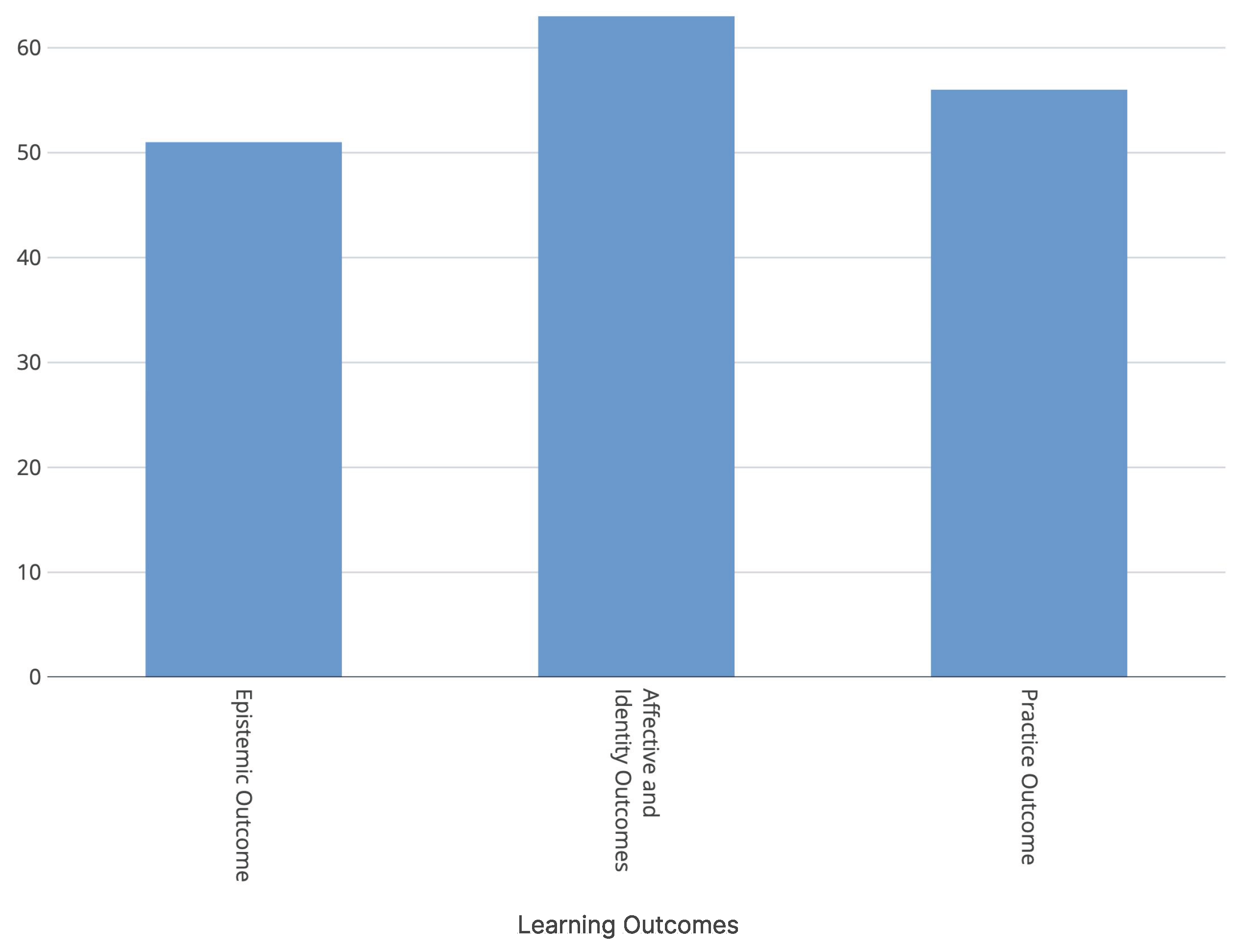

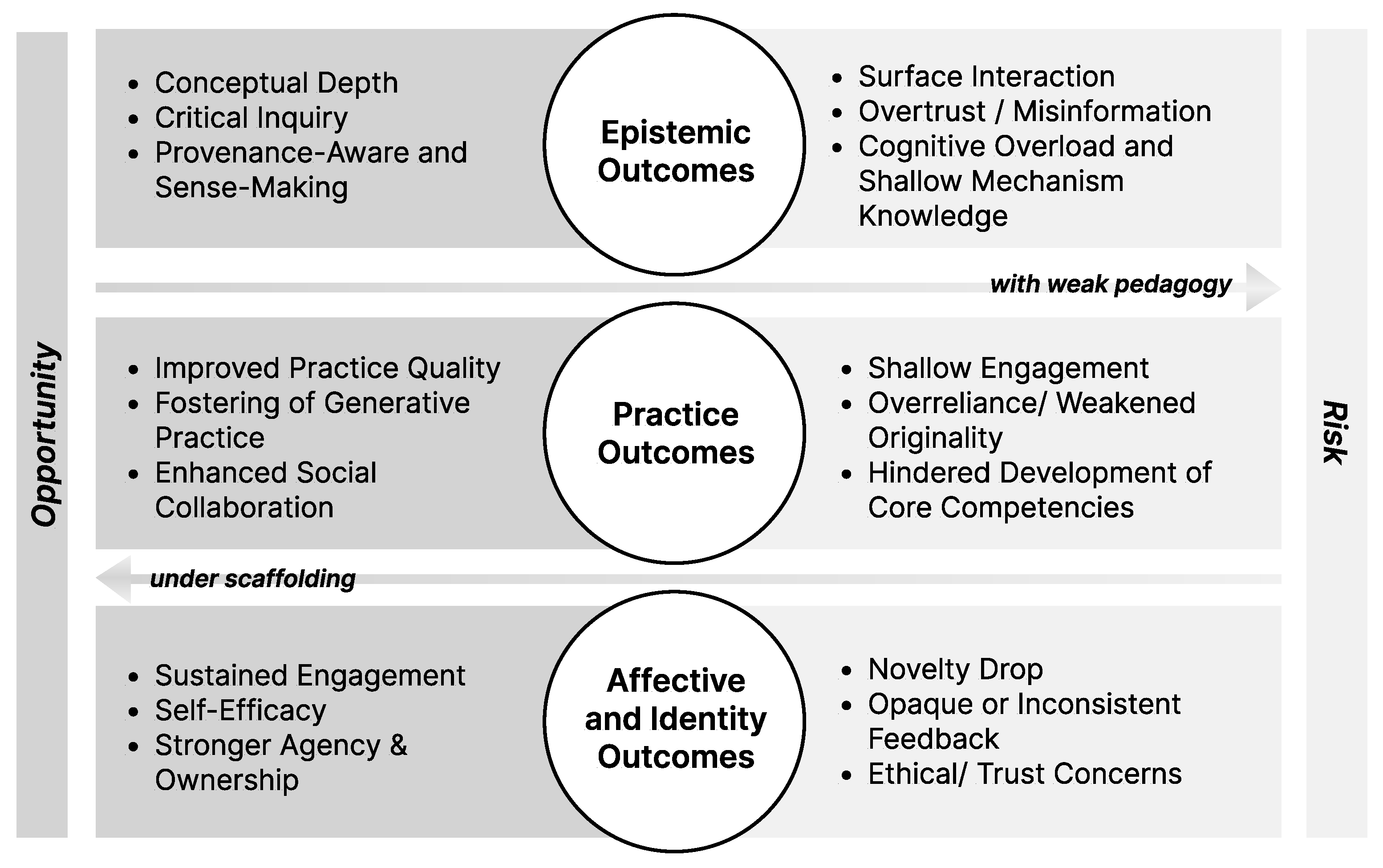

4.2.3. PRODUCT: RQ4 Learning Outcomes

4.2.4. Epistemic Outcomes

4.2.5. Practice Outcomes

4.2.6. Affective and Identity Outcomes

5. Conclusions

Limitations and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


- Morales-Navarro, L.; Kafai, Y.B.; Vogelstein, L.; Yu, E.; Metaxa, D. Learning About Algorithm Auditing in Five Steps: Scaffolding How High School Youth Can Systematically and Critically Evaluate Machine Learning Applications. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 27 February–4 March 2025; Volume 39, pp. 29186–29194. [Google Scholar]

- Benjamin, J.J.; Lindley, J.; Edwards, E.; Rubegni, E.; Korjakow, T.; Grist, D.; Sharkey, R. Responding to generative AI technologies with research-through-design: The Ryelands AI Lab as an exploratory study. In Proceedings of the 2024 ACM Designing Interactive Systems Conference, Copenhagen, Denmark, 1–5 July 2024; pp. 1823–1841. [Google Scholar]

- Yang, T.C.; Hsu, Y.C.; Wu, J.Y. The effectiveness of ChatGPT in assisting high school students in programming learning: Evidence from a quasi-experimental research. Interact. Learn. Environ. 2025, 33, 3726–3743. [Google Scholar] [CrossRef]

- Zhang, L.; Jiang, Q.; Xiong, W.; Zhao, W. Effects of ChatGPT-Based Human–Computer Dialogic Interaction Programming Activities on Student Engagement. J. Educ. Comput. Res. 2025, 07356331251333874. [Google Scholar] [CrossRef]

- Huang, Y.S.; Wu, W.T.; Wu, P.H.; Teng, Y.J. Investigating the Impact of Integrating Prompting Strategies in ChatGPT on Students Learning Achievement and Cognitive Load. In Proceedings of the International Conference on Innovative Technologies and Learning, Tartu, Estonia, 14–16 August 2024; Springer: Cham, Switzerland, 2024; pp. 3–11. [Google Scholar]

- Park, Y.; Bum, J.; Lim, D. Effects of Using a GPT-based Application for Building EFL Students’ Question-asking Skills. Foreign Lang. Educ. 2024, 31, 51–74. [Google Scholar] [CrossRef]

- Khuibut, W.; Premthaisong, S.; Chaipidech, P. Integrating ChatGPT into synectics model to improve high school student’s creative writing skill. In Proceedings of the International Conference on Computers in Education, Matsue, Japan, 4–8 December 2023. [Google Scholar]

- Li, X.; Li, T.; Wang, M.; Tao, S.; Zhou, X.; Wei, X.; Guan, N. Navigating the textual maze: Enhancing textual analytical skills through an innovative GAI prompt framework. IEEE Trans. Learn. Technol. 2025, 18, 206–215. [Google Scholar] [CrossRef]

- Schicchi, D.; Limongelli, C.; Monteleone, V.; Taibi, D. A closer look at ChatGPT’s role in concept map generation for education. Interact. Learn. Environ. 2025, 1–21. [Google Scholar] [CrossRef]

- Guo, Q.; Zhen, J.; Wu, F.; He, Y.; Qiao, C. Can Students Make STEM Progress With the Large Language Models (LLMs)? An Empirical Study of LLMs Integration Within Middle School Science and Engineering Practice. J. Educ. Comput. Res. 2025, 63, 372–405. [Google Scholar] [CrossRef]

- Zhang, H.; Li, M. A Study on Impact of Junior High School Students’ Programming Learning Effect Based on Generative Artificial Intelligence. In Proceedings of the 2024 4th International Conference on Educational Technology (ICET), Wuhan, China, 13–15 September 2024; IEEE: New York, NY, USA, 2024; pp. 106–109. [Google Scholar]

- Chen, C.H.; Law, V. The role of help-seeking from ChatGPT in digital game-based learning. Educ. Technol. Res. Dev. 2025, 73, 1703–1721. [Google Scholar] [CrossRef]

- Gong, X.; Li, Z.; Qiao, A. Impact of generative AI dialogic feedback on different stages of programming problem solving. Educ. Inf. Technol. 2024, 30, 9689–9709. [Google Scholar] [CrossRef]

- Jauhiainen, J.S.; Garagorry Guerra, A. Generative AI and education: Dynamic personalization of pupils’ school learning material with ChatGPT. Front. Educ. 2024, 9, 1288723. [Google Scholar] [CrossRef]

- Pea, R.D. Practices of distributed intelligence and designs for education. Distrib. Cogn. Psychol. Educ. Considerations 1993, 11, 47–87. [Google Scholar]

- Mason, M. What is complexity theory and what are its implications for educational change? Educ. Philos. Theory 2008, 40, 35–49. [Google Scholar] [CrossRef]

- Vosniadou, S. Capturing and modeling the process of conceptual change. Learn. Instr. 1994, 4, 45–69. [Google Scholar] [CrossRef]

| Search Dimension | Search Query |
|---|---|
| Generative AI | “generative ai” OR “generative artificial intelligence” OR “GenAI” OR “large language model *” OR “LLM *” OR “ChatGPT” OR “chat generative pre-trained transformer” OR “GPT-4o” OR “GPT-4” OR “GPT-3.5” OR “AI-generated content” OR “AIGC” OR “AI-generated” OR “generative model *” |
| k–12/Children | “k–12” OR “children” OR “students” |
| Education Context | “education” OR “learning” |
| Application Scene | “school” OR “home” OR “family” |
| Exclusion | NOT (“higher education” OR “university” OR “college student *” OR “undergraduate *” OR “postsecondary”) |
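The search dimensions in the table above can be assembled into a single Boolean string. The following is a minimal sketch, not the authors' actual script: the helper names `or_group` and `build_query` are hypothetical, joining the four inclusion dimensions with AND is an assumption (the table lists the dimensions but not the operator between them), and straight quotes stand in for the typographic quotes. Database-specific field tags are omitted.

```python
# Term lists transcribed from the search-dimension table.
# Wildcard spacing is kept exactly as printed in the table.
GENAI = [
    "generative ai", "generative artificial intelligence", "GenAI",
    "large language model *", "LLM *", "ChatGPT",
    "chat generative pre-trained transformer", "GPT-4o", "GPT-4", "GPT-3.5",
    "AI-generated content", "AIGC", "AI-generated", "generative model *",
]
POPULATION = ["k–12", "children", "students"]
CONTEXT = ["education", "learning"]
SCENE = ["school", "home", "family"]
EXCLUDED = [
    "higher education", "university", "college student *",
    "undergraduate *", "postsecondary",
]


def or_group(terms):
    """Quote each term and join the terms with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(include_groups, excluded):
    """Join inclusion groups with AND (an assumption), then append the NOT clause."""
    included = " AND ".join(or_group(group) for group in include_groups)
    return f"{included} NOT {or_group(excluded)}"


query = build_query([GENAI, POPULATION, CONTEXT, SCENE], EXCLUDED)
print(query)
```

Keeping each dimension as its own list makes it straightforward to adapt the string to a database whose syntax differs, for example by changing only `or_group`.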

| Category | Specific Criteria |
|---|---|
| Inclusion Criteria | 1. Explicitly focus on the application of generative artificial intelligence (e.g., Generative AI, LLM, ChatGPT, AIGC, etc.) in educational settings for children and adolescents aged 3–18 (including K–12, children, primary and secondary school students, etc.). |
| | 2. Study participants are children/adolescents aged 3 to 18 (may include teachers and parents as collaborative roles, but must involve learning or teaching activities with young learners). |
| | 3. The study is empirical and involves concrete teaching interventions, classroom/home/project-based practices, or learning activities. |
| | 4. Published as a peer-reviewed English journal article, conference paper, or other formal scholarly publication. |
| Exclusion Criteria | 1. Unrelated to generative AI in child/adolescent education, or focuses on higher/adult education, teacher training, or non-generative AI (e.g., traditional adaptive systems, scoring systems, discriminative models, knowledge graphs, etc.). |
| | 2. Participants are not aged 3–18 (e.g., only university students, adults, teachers), or the study setting is not relevant to K–12. |
| | 3. Only reports technical implementation or surveys/interviews, without actual teaching/learning activities (e.g., only scoring historical data, teachers using AI for lesson preparation). |
| | 4. Non-empirical research: reviews, opinion pieces, short abstracts, conference posters, non-peer-reviewed literature, or book chapters. |
| | 5. Duplicate publications. |
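The criteria above amount to a checklist applied to each candidate record. As an illustration only, they could be encoded as below. The `Study` fields and the `screen` function are hypothetical simplifications introduced here, each boolean stands for a judgment a human screener would make, and nothing in this sketch describes how the screening was actually carried out.

```python
from dataclasses import dataclass


@dataclass
class Study:
    # Hypothetical flags; each one summarises a screener's judgment.
    uses_genai_in_child_education: bool
    participants_aged_3_to_18: bool
    has_learning_activity: bool
    peer_reviewed_english: bool
    is_non_empirical: bool = False
    is_duplicate: bool = False


def screen(study):
    """Return the exclusion criteria a study triggers; an empty list means include."""
    reasons = []
    if not study.uses_genai_in_child_education:
        reasons.append("Exclusion 1: not generative AI in child/adolescent education")
    if not study.participants_aged_3_to_18:
        reasons.append("Exclusion 2: participants not aged 3-18")
    if not study.has_learning_activity:
        reasons.append("Exclusion 3: no actual teaching/learning activity")
    if study.is_non_empirical or not study.peer_reviewed_english:
        reasons.append("Exclusion 4: non-empirical or not a peer-reviewed English publication")
    if study.is_duplicate:
        reasons.append("Exclusion 5: duplicate publication")
    return reasons


classroom_trial = Study(True, True, True, True)
teacher_survey = Study(True, False, False, True)
print(screen(classroom_trial))
print(len(screen(teacher_survey)))
```

Returning the full list of triggered criteria, rather than stopping at the first, keeps a record of every reason a study was excluded.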

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, X.; Tan, H. A Systematic Review of Generative AI in K–12: Mapping Goals, Activities, Roles, and Outcomes via the 3P Model. Systems 2025, 13, 840. https://doi.org/10.3390/systems13100840
