1. Introduction

Scheduling is a fundamental component of advanced manufacturing processes and systems. It allocates resources efficiently to optimize objectives such as makespan, flow time, and average tardiness. It plays a significant role in modern production facilities by organizing and controlling the work and workloads in a manufacturing process, resulting in minimal inventory, processing time, idle time, and product cost [1]. In scheduling, resources, such as machines, are allocated to tasks, such as jobs, to ensure the completion of these tasks in a limited amount of time. In manufacturing, scheduling is essentially the arrangement of jobs to be processed on the available machines, subject to different constraints.

Some of the classical models used to solve scheduling problems are job-shop, flow-shop, and open-shop. The Permutation Flow-shop Scheduling Problem (PFSP) addresses the most important problems related to machine scheduling and involves the sequential processing of n jobs on m machines [2]. Flow-shop scheduling problems are NP-complete [3,4]; complete enumeration requires computational effort and time that increase exponentially with the problem size. The intricate nature of these problems renders exact solution methods impractical when dealing with numerous jobs and/or machines. This is the primary rationale behind the use of the various heuristics found in the literature.
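To make the PFSP objective concrete, the following minimal Python sketch computes the makespan of a given job permutation with the standard completion-time recurrence: a job starts on a machine only once it has left the previous machine and the machine has finished the preceding job. The function name `makespan`, the `processing_times[job][machine]` layout, and the three-job data are illustrative assumptions, not taken from the cited works.

```python
from typing import Sequence


def makespan(processing_times: Sequence[Sequence[float]],
             permutation: Sequence[int]) -> float:
    """Return the makespan of a permutation flow-shop schedule.

    processing_times[j][k] is the (assumed) time of job j on machine k;
    every job visits machines 0..m-1 in the same order.
    """
    num_machines = len(processing_times[0])
    # completion[k]: completion time on machine k of the last job scheduled so far
    completion = [0.0] * num_machines
    for job in permutation:
        for k in range(num_machines):
            # time at which this job leaves the previous machine (0 for the first machine)
            ready = completion[k - 1] if k > 0 else 0.0
            # the job starts when both the job and the machine are free
            completion[k] = max(completion[k], ready) + processing_times[job][k]
    return completion[-1]


# Hypothetical 3-job, 2-machine instance
times = [[3, 2], [1, 4], [2, 1]]
print(makespan(times, [0, 1, 2]))
print(makespan(times, [1, 0, 2]))
```

Evaluating one permutation costs O(n·m) operations, whereas the number of permutations grows as n!, which is why complete enumeration quickly becomes impractical.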

2. Literature Review

Pioneering efforts by Johnson [5] concluded that PFSP with more than two machines could not be solved analytically. Consequently, researchers focused on heuristic-based approaches to handle the PFSP with more than two machines. Some notable examples include Palmer’s heuristic [6], CDS (Campbell, Dudek & Smith) [7], VNS (Variable Neighborhood Search) [8], and Branch & Bound [9]. However, as the problem size increases, even the best heuristics tend to drift away from the optimum and converge to suboptimal solutions. Therefore, the focus of research shifted towards metaheuristics. Many such approaches have already been reported in the literature as viable solutions to PFSP, including GA (Genetic Algorithms) [10,11], PSO (Particle Swarm Optimization) [12,13], ACO (Ant Colony Optimization) [14], Q-Learning algorithms [15], HWOA (Hybrid Whale Optimization Algorithms) [16], CWA (Enhanced Whale Optimization Algorithms) [17], and BAT-algorithms [18]. Metaheuristic-based approaches start with sequences generated randomly by a heuristic and then iterate until a stopping criterion is satisfied [19]. These approaches have been extensively applied to find optimal solutions to flow-shop scheduling problems [20]. Goldberg et al. [21] proposed GA-based algorithms as viable solutions to scheduling problems. A GA-based heuristic for flow-shop scheduling, with makespan minimization as the objective, was presented by Chen et al. [22]. The authors utilized a partial crossover, no mutation, and a different heuristic for the random generation of the initial population. A comparative analysis showed no improvement in results for population sizes above 60. However, hybrid GA-based approaches have significantly improved results [23,24,25,26,27]. Despite this, the increased computational cost of such approaches is a major limitation. Therefore, comparatively more efficient algorithms such as PSO have recently been preferred [28].

PSO, initially proposed by Kennedy and Eberhart [29], is based on the “collective intelligence” exhibited by swarms of animals. In PSO, a randomly generated initial population of solutions iteratively propagates toward the optimal solution. PSO has been extensively applied to flow-shop scheduling problems. Tasgetiren et al. [30] applied the PSO algorithm to the single-machine total weighted tardiness problem and developed the SPV (Smallest Position Value) heuristic-based approach for solving a wide range of scheduling and sequencing problems. The authors hybridized PSO with VNS to obtain better results by avoiding local minima. Improved performance of the PSO was reported in comparison to ACO, with ARPD (average relative percent deviation) as the evaluation criterion. Another approach, presented by Tasgetiren et al. [31], utilized PSO with SPV and VNS for solving Taillard’s [32] benchmark problems and concluded that PSO with VNS produced the same results as GA with VNS. Despite the similar performance, the computational efficiency of PSO was found to be considerably better than that of GA [28,34,35]. Moslehi et al. [33] studied the challenges of a Limited Buffer PFSP (LBPFSP) using a hybrid VNS (HVNS) algorithm combined with SA. In addition, Fuqiang et al. [36] proposed a Two Level PSO (TLPSO) to solve a credit portfolio management problem. TLPSO included internal and external search processes, and the experimental results showed that the TLPSO is more reliable than the other tested methods.
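The SPV rule mentioned above is what lets a continuous optimizer such as PSO work on sequencing problems: the position vector of a particle is converted to a job permutation by ranking its values, so the job whose dimension holds the smallest value is scheduled first. The sketch below assumes this common reading of the rule; the function name `spv_permutation` and the sample position vector are hypothetical.

```python
from typing import List, Sequence


def spv_permutation(position: Sequence[float]) -> List[int]:
    """Map a continuous particle position to a job permutation (SPV rule).

    Dimension j of the position belongs to job j; jobs are ordered by
    ascending position value. Ties keep their original order because
    Python's sort is stable.
    """
    return sorted(range(len(position)), key=lambda job: position[job])


# Hypothetical 6-job particle position
position = [1.80, -0.99, 3.01, -0.72, -1.20, 2.15]
print(spv_permutation(position))  # [4, 1, 3, 0, 5, 2]
```

With this decoding, the usual PSO velocity and position updates stay in continuous space, and only the fitness evaluation needs the decoded permutation.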

Horng et al. [37] presented a hybrid metaheuristic by combining SA (Simulated Annealing) with PSO and compared their results with those of simple GA and PSO. The results for 20 different mathematical optimization functions confirmed the quick convergence and good optimality of SA-PSO compared to standalone GA and PSO. A similar but slightly different metaheuristic combining PSO, SA, and TS (Tabu Search) was developed by Zhang et al. [38]. The algorithm obtained quality solutions while consuming less computational time when tested with 30 instances of 10 different sizes taken from Taillard’s [39] benchmark problems for PFSP and performed significantly better than NPSO (Novel PSO) and GA. Given the optimizing capabilities of hybrid approaches, researchers have recently focused even more on applying hybrid metaheuristics to PFSP to improve the global and local neighborhood search capabilities of the standard algorithms. Yannis et al. [40] hybridized their PSO-VNS algorithm with PR (Path Relinking Strategy). A comparative analysis on Taillard’s [32] problems showed that the PSO-VNS-PR algorithm performed significantly better than PSO with constant global and local neighborhood searches. The effects of population initialization on PSO performance were studied by Laxmi et al. [41]. The authors hybridized standard PSO with an NEH (Nawaz, Enscore & Ham) heuristic for population initialization and SA for enhanced local neighborhood search. A significantly improved performance of the algorithm was reported compared to other competing algorithms. Fuqiang et al. [42] developed a technique including SA and GA for scheduled risk management of IT outsourcing projects. They concluded that SA, in combination with GA, is the superior algorithm in terms of stability and convergence.

From the literature review presented above, it can be concluded that metaheuristics have an increased capability of handling NP-hard problems. Furthermore, PSO, combined with other heuristics, has performed better than other tools, e.g., GA and ACO. Therefore, to further validate this conclusion, a PSO-based approach was developed during this research in a stepwise manner. First, a standard PSO was developed and validated through Taillard’s [32] suggested benchmark problems. This was followed by its gradual hybridization, first with VNS only and then with both VNS and SA, while observing the initial temperature’s effect on SA optimality [43]. An internal comparison based on Taillard’s benchmark problems was also carried out to justify the effect of hybridization. After validation, the hybrid PSO (HPSO) developed during this research was also compared with a recently reported Hybrid Genetic Simulated Annealing Algorithm (HGSA) [26] and other well-known techniques based on the ARPD values. The comparison showed the effectiveness and the robust nature of the HPSO (PSO-VNS-SA), as it outperformed all its competitors.

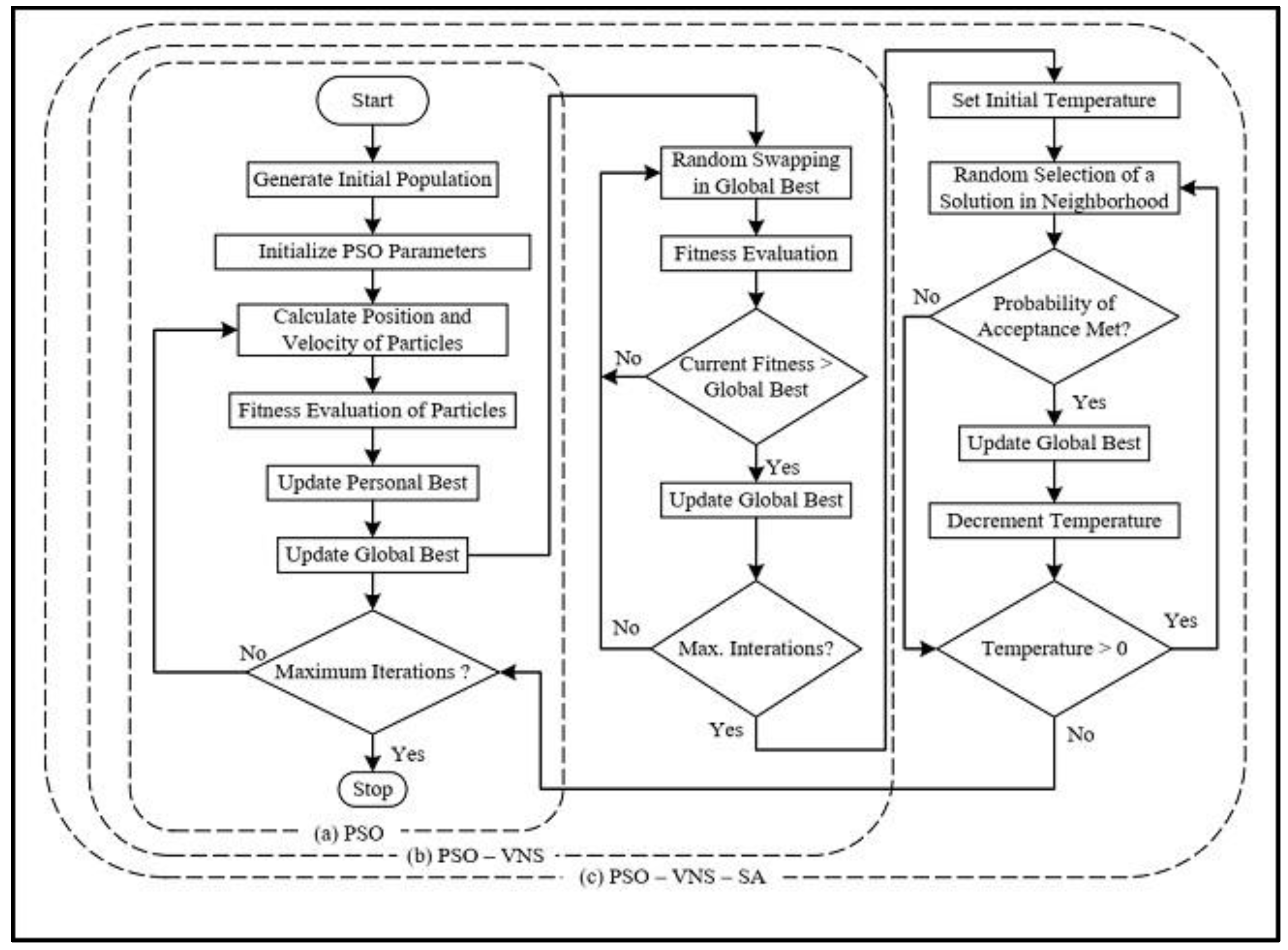

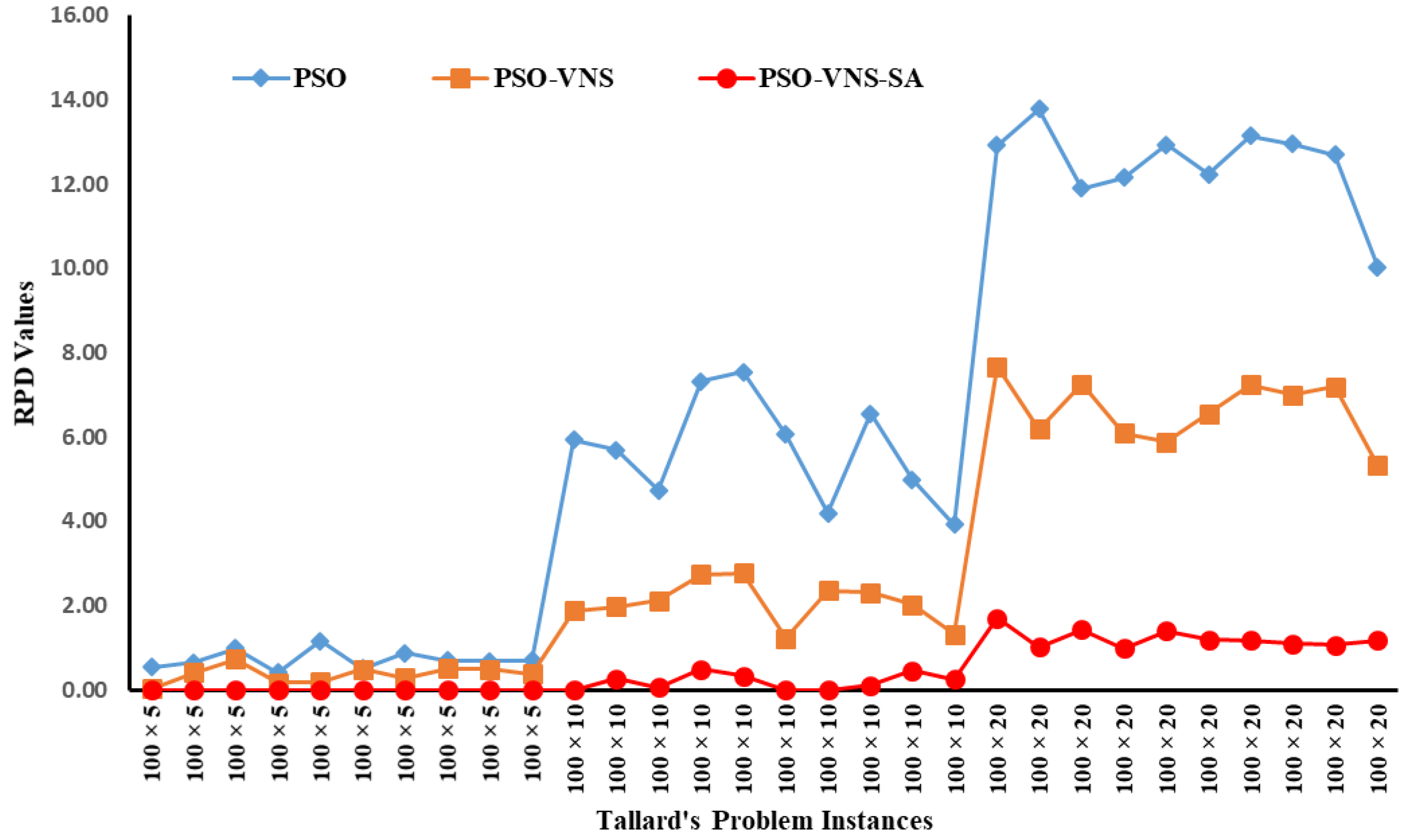

4. Results and Discussion

The sizes of Taillard’s benchmark problems, used for validation of the three algorithms presented in Figure 1, ranged from 20 × 5 (n × m) to 500 × 20. Each problem was run ten times with a swarm size of twice the number of jobs, and the inertia weight ranged from 1.2 to 0.4, with a decrement factor of 0.975. The cognitive and social acceleration coefficients (C1 and C2) were initialized with a value of 2, and the maximum number of iterations was limited to 100. The results obtained are listed in Table 2, Table 3, Table 4 and Table 5 and presented in Figure 2, Figure 3, Figure 4 and Figure 5. The recently reported results of the Q-Learning algorithm [15], though limited to a maximum problem size of 50 × 20, have also been analyzed for performance comparisons. The data in Table 2 and Figure 2 show that the algorithm achieved the upper bound in all instances of the 20-job problems, resulting in an ARPD value of zero. A similar trend can be observed for the 50-job problems (Table 3, Figure 3), where the HPSO results in an ARPD value of 0.80, which is significantly better than the PSO-VNS and PSO with ARPD values of 3.21 and 4.20, respectively. The performance consistency is also apparent from the results for the 100- and 200-job instances, where the HPSO achieved ARPD values of 0.48 and 0.63, compared to 3.03 and 3.06 for PSO-VNS and 6.30 and 8.49 for the PSO.
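The parameter settings quoted at the start of this section (inertia weight from 1.2 to 0.4, decrement factor 0.975, C1 = C2 = 2, at most 100 iterations) can be illustrated with a short sketch. The text gives the range and the decrement factor but not the exact update rule, so the code assumes a multiplicative decay clamped at the lower bound, together with the textbook PSO velocity update; all function and parameter names are hypothetical.

```python
import random
from typing import List, Sequence


def inertia_schedule(w_start: float = 1.2, w_min: float = 0.4,
                     decrement: float = 0.975, max_iter: int = 100) -> List[float]:
    """Inertia weight per iteration: multiply by `decrement`, never below `w_min`.

    The multiplicative, clamped form is an assumption about how the
    stated range and decrement factor are combined.
    """
    weights = []
    w = w_start
    for _ in range(max_iter):
        weights.append(w)
        w = max(w_min, w * decrement)
    return weights


def update_velocity(velocity: Sequence[float], position: Sequence[float],
                    personal_best: Sequence[float], global_best: Sequence[float],
                    w: float, c1: float = 2.0, c2: float = 2.0,
                    rng: random.Random = random.Random(0)) -> List[float]:
    """Textbook PSO velocity update for one particle, dimension by dimension."""
    new_velocity = []
    for v, x, p, g in zip(velocity, position, personal_best, global_best):
        r1, r2 = rng.random(), rng.random()
        # inertia term + cognitive pull toward personal best + social pull toward global best
        new_velocity.append(w * v + c1 * r1 * (p - x) + c2 * r2 * (g - x))
    return new_velocity


weights = inertia_schedule()
print(weights[0], weights[-1])  # 1.2 0.4
```

Under this assumed rule the weight reaches its floor of 0.4 a little before the halfway point of the 100 iterations, so the first part of a run favors exploration and the remainder favors exploitation around the best positions found.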

Figure 2, Figure 3, Figure 4 and Figure 5 clearly depict that the performance of HPSO in comparison to standard PSO and PSO-VNS was consistently superior, as it returned improved solutions for the entire set of 120 benchmark problems.
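Since all comparisons in this section rest on ARPD, a small sketch of the metric may help. It assumes the usual definition, in which the relative percent deviation of each instance is measured against its best-known upper bound and then averaged over the problem group; the function name and the numbers below are purely illustrative and are not results from this study.

```python
from typing import Sequence


def arpd(makespans: Sequence[float], upper_bounds: Sequence[float]) -> float:
    """Average relative percent deviation from the upper bounds.

    Assumed definition: mean over instances of 100 * (C - UB) / UB,
    where C is the makespan found and UB the best-known upper bound.
    """
    if not makespans or len(makespans) != len(upper_bounds):
        raise ValueError("need one upper bound per makespan, and at least one instance")
    deviations = [100.0 * (c - ub) / ub for c, ub in zip(makespans, upper_bounds)]
    return sum(deviations) / len(deviations)


# Made-up values: one instance 10% above its bound, one 2.5% above
print(arpd([110, 205], [100, 200]))  # 6.25
# Matching every upper bound gives an ARPD of zero
print(arpd([100, 200], [100, 200]))  # 0.0
```

An ARPD of zero therefore means that the upper bound was reached on every instance of the group.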

It can be concluded that the assimilation of VNS and SA significantly improved the convergence ability of standard PSO, which is evident from the results of the three PSO-based algorithms. The performance difference among the three variants of the PSO was significant (p << 0.05) for problem sizes up to 50 × 5 (Figure 4). However, the deviation of the results became more pronounced with increasing problem size, as is evident from Figure 4 and Figure 5. Zhang et al. [49] reported a similar pattern of results for a hybrid metaheuristic-based approach they developed by combining PSO and SA with a stochastic variable neighborhood search. Researchers have mostly reported a significantly improved performance of SA with an initial temperature setting of 5°. However, the HPSO algorithm presented in this paper performed comparatively better with an initial temperature setting of 100° and a cooling rate of 0.95. A possible reason for this deviation from other algorithms is the comparatively wider initial search space, which increased the acceptance probability of SA.
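The link between initial temperature and acceptance probability can be sketched as follows. The code assumes the standard Metropolis acceptance criterion and a geometric cooling schedule, neither of which is spelled out in the text; the function names and the makespan increase of 10 are hypothetical.

```python
import math
from typing import List


def acceptance_probability(delta: float, temperature: float) -> float:
    """Metropolis criterion: always accept improvements, accept a worsening
    of size `delta` (increase in makespan) with probability exp(-delta / T)."""
    if delta <= 0:
        return 1.0
    return math.exp(-delta / temperature)


def cooling_schedule(t0: float = 100.0, rate: float = 0.95, steps: int = 5) -> List[float]:
    """Geometric cooling (assumed form): T_k = t0 * rate**k."""
    return [t0 * rate ** k for k in range(steps)]


# The same worsening move is far more likely to be accepted at T = 100 than at T = 5
p_hot = acceptance_probability(10, 100.0)
p_cold = acceptance_probability(10, 5.0)
print(round(p_hot, 3), round(p_cold, 3))  # 0.905 0.135
print(cooling_schedule())
```

A higher starting temperature thus lets the search accept more worsening moves early on, which is consistent with the wider initial exploration suggested above.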

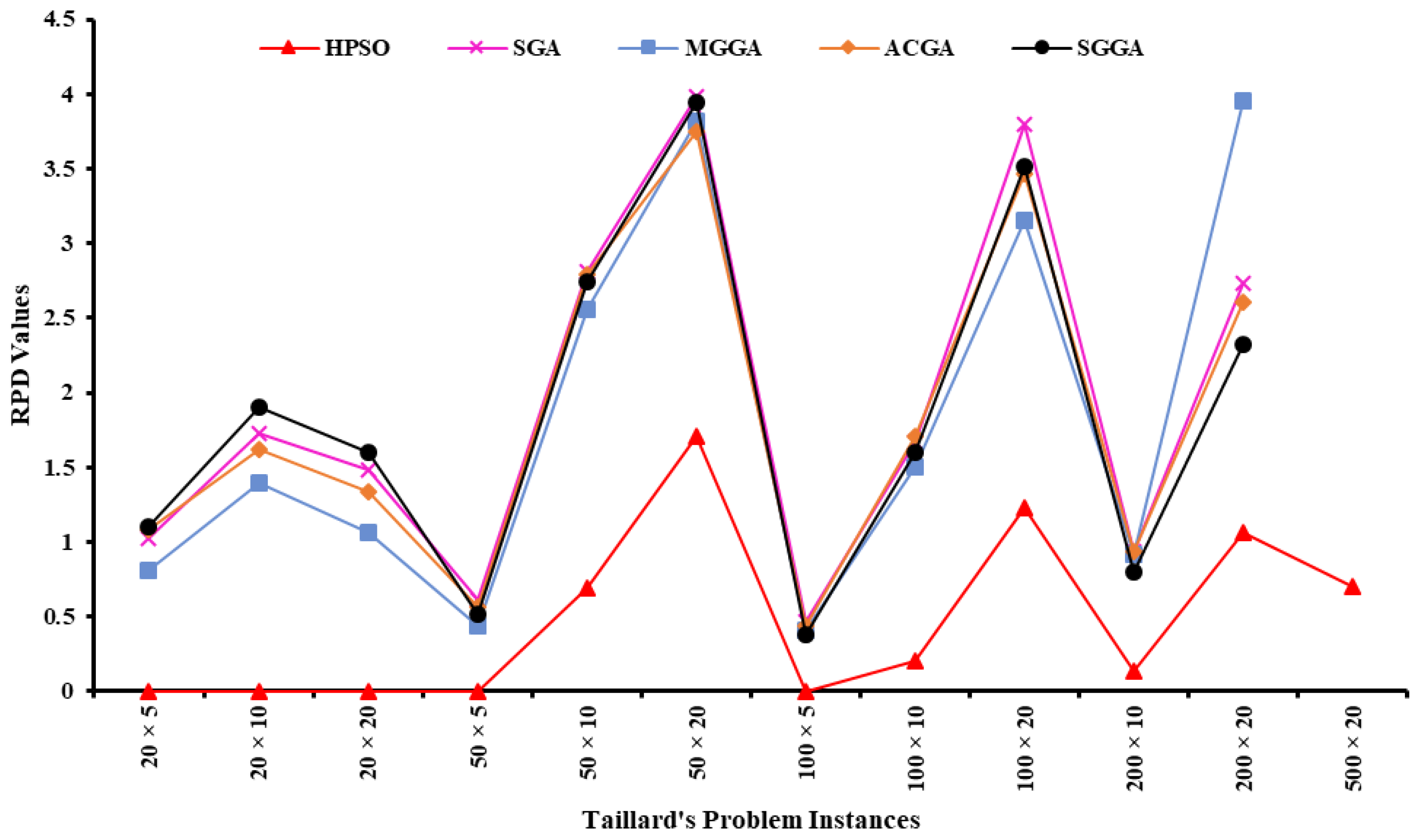

To further elaborate on the HPSO’s effectiveness, its performance has also been compared against hybrid GA (HGA)-based approaches, which have been extensively reported in the literature and are widely regarded as the best metaheuristics for these sorts of problems. To justify the robust behavior of the HPSO developed during this research, its performance was compared with HGA by Tseng et al. [27] and HGSA (hybrid GA with SA) by Wei et al. [26]. The algorithm performed significantly better than HGA (p = 0.05) and HGSA (p = 0.05), as evident from Figure 6.

Comparisons were also carried out with four different versions of GA, i.e., SGA (Simple Genetic Algorithm) [24], MGGA (Mining Gene Genetic Algorithm) [23], ACGA (Artificial Chromosome with Genetic Algorithm) [23], and SGGA (Self-Guided Genetic Algorithm) [24], as presented in Figure 7. The deviation of the GA variants from the upper bound, which increases in magnitude with increasing problem size, was significantly larger than that of HPSO. Thus, it can be concluded that the algorithm developed during this research is comparatively more robust and performs better than other hybrid GA techniques, even when handling larger problem sizes.

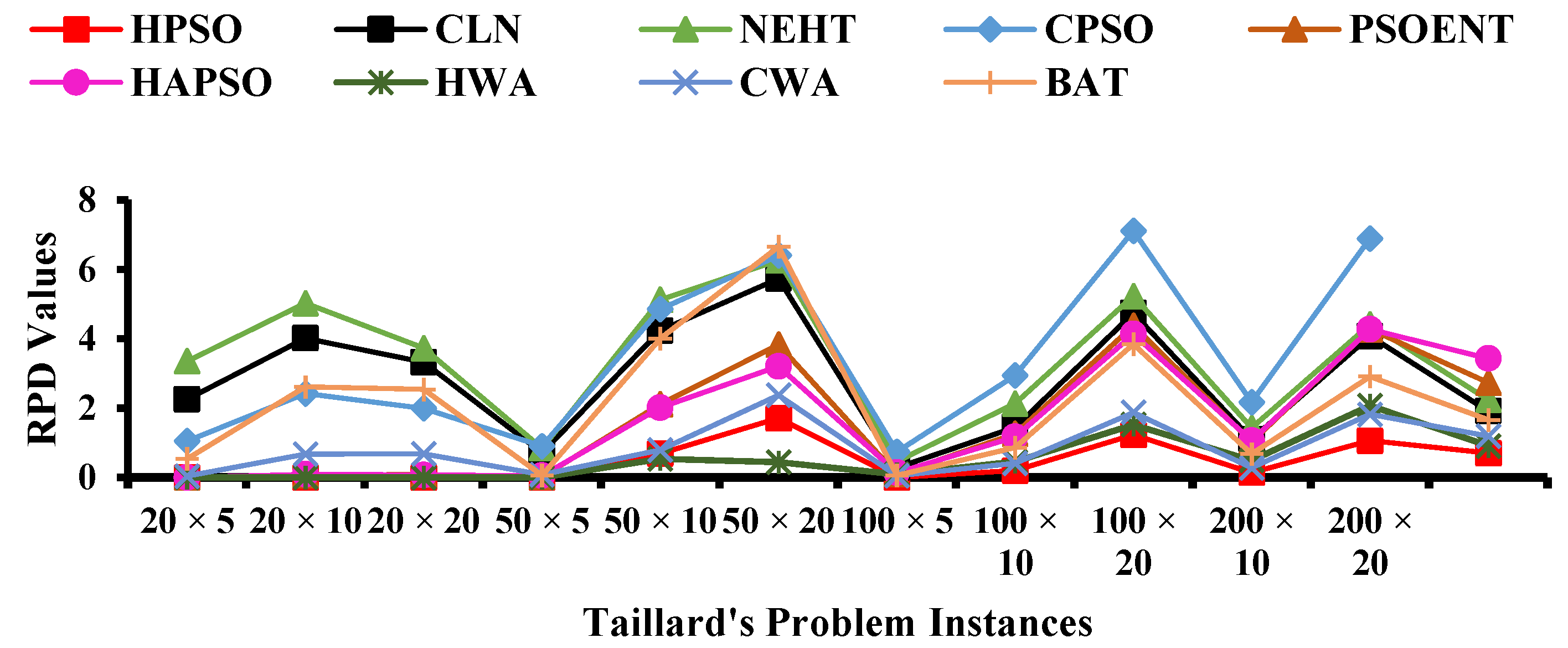

Once the internal comparison of HPSO with standard PSO and PSO-VNS and the validation against HGA and HGSA were completed, the last part of the validation was against other notable techniques already reported in the literature. For this purpose, a more detailed comparison was carried out with WOA [16], Chaotic Whale Optimization (CWA) [17], the BAT-algorithm [18], NEHT (NEH algorithm together with the improvement presented by Taillard) [31], ACO [50], CPSO (Combinatorial PSO) [51], PSOENT (PSO with Expanding Neighborhood Topology) [40], and HAPSO (Hybrid Adaptive PSO) [52]. This comparison was based solely on ARPD values and is shown in Table 6 and graphically illustrated in Figure 8. The improved performance of the HPSO developed during this research is evident in comparison to the other reported hybrid heuristics.

The results of the Q-Learning algorithm [15] have not been shown in Table 6 due to the limited results reported by the author, as only 30 out of the 120 problems, limited to a maximum problem size of 50 × 20, were analyzed. However, a comparison was performed for ARPD values for the limited number of problems for both HPSO and Q-learning. The results show a superior performance of the HPSO compared to the Q-Learning algorithm.

A row-wise comparison yields the performance variation of different approaches for individual problem groups. It is important to note that the technique developed during this research (HPSO) outperformed the other algorithms for each problem set. Although there is a performance variation across the techniques for different problem sizes, the algorithm was consistently better than all the other techniques. A smaller ARPD value in each problem group resulted in the smallest overall average ARPD value of 0.48, significantly better than the closest value of 0.85 for the HWA algorithm. This validates the claim that the HPSO approach developed during this research is comparatively more robust and remains consistent even when handling large-sized problems. Furthermore, the average computation time of HPSO is reported in Table 7.

5. Conclusions

As a member of the class of NP-complete problems, PFSP has been regularly researched and reported in the literature. Several heuristic-based approaches in the literature can efficiently handle this problem. However, for larger problem sizes, most researchers have focused on hybridized metaheuristics due to their ability to produce quality results in polynomial time. Following this trend, a PSO-based approach was developed during this research in a stepwise manner. First, a standard PSO was developed; it was then hybridized with VNS and, finally, with SA. The final version, HPSO, not only outperformed standard PSO and PSO-VNS but also performed exceedingly well against other algorithms, including HGA, HGSA, ACO, BAT, WOA, and CWA. Comparisons based on the ARPD values showed that the performance of HPSO remained comparatively consistent, as evidenced by its small overall ARPD value of 0.48.