Prototyping an Online Virtual Simulation Course Platform for College Students to Learn Creative Thinking

Abstract

1. Introduction

- What are the needs and dilemmas of college students in online virtual simulation learning?

- What are the opportunities and solutions to help college students learn on an online virtual simulation course platform?

- How do college students experience these solutions?

2. Related Works

3. Materials and Methods

- Apply 2D virtual simulation technology. Use 2D rather than 3D presentation, because 2D transfers information more efficiently.

- Focus on practical teaching content. Cases should be close to everyday life, so that college students feel more involved and the learning atmosphere stays relaxed.

- Offer detailed operating instructions. College students who are unfamiliar with the virtual simulation environment spend considerable time and effort learning to use the course platform; detailed instructions should reduce this learning cost.

- Provide immediate error feedback and text-assisted instructions. Give instant feedback on the steps where users make errors, so that they can correct their mistakes in time and learn more efficiently.

- Teach in stages. The teaching content should be phased and modularized so that users can master it and select from it easily.

- Show a macroscopic course outline and a straightforward learning process. Users can then arrange their learning progress flexibly and keep a clearer sense of their learning goals.
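The principles of staged teaching, immediate error feedback, and visible progress can be illustrated with a minimal sketch. This is not the platform's implementation; the `Step` and `Module` classes, their fields, and the sample interview steps are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    prompt: str    # instruction shown to the learner (hypothetical content)
    expected: str  # action that completes the step
    hint: str      # text-assisted instruction shown after an error


@dataclass
class Module:
    title: str
    steps: List[Step]
    position: int = 0  # index of the next step to complete

    def submit(self, action: str) -> str:
        """Check one learner action and return feedback immediately."""
        if self.position >= len(self.steps):
            return f"{self.title}: already complete"
        step = self.steps[self.position]
        if action != step.expected:
            # Wrong action: stay on the same step and show the hint.
            return (f"Step {self.position + 1}/{len(self.steps)} "
                    f"not correct. Hint: {step.hint}")
        self.position += 1
        return f"Step {self.position}/{len(self.steps)} done"

    def progress(self) -> float:
        """Fraction of the module completed, for a progress indicator."""
        return self.position / len(self.steps) if self.steps else 1.0


# Hypothetical two-step module, assumed only for this example.
interview = Module("Interview", [
    Step("Choose a question type", "open_question",
         "Open questions invite longer answers."),
    Step("Record the answer", "take_notes",
         "Use the notebook to capture key points."),
])
print(interview.submit("closed_question"))
print(interview.submit("open_question"))
print(interview.progress())
```

Keeping the learner on the failed step until it is corrected is one possible design choice; a real course platform might instead allow skipping or limit the number of retries.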

4. Results

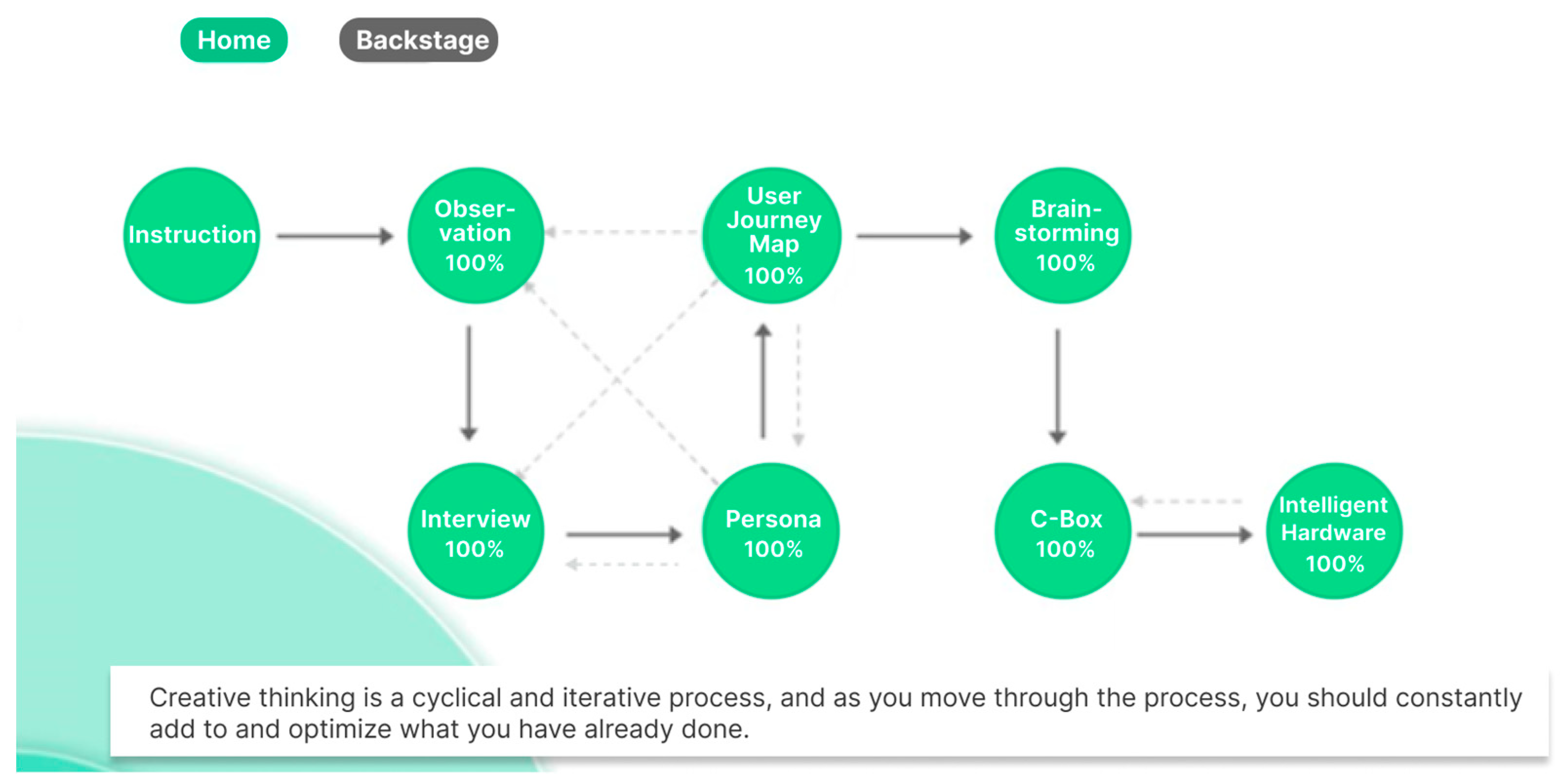

4.1. Homepage

4.2. Interview Module

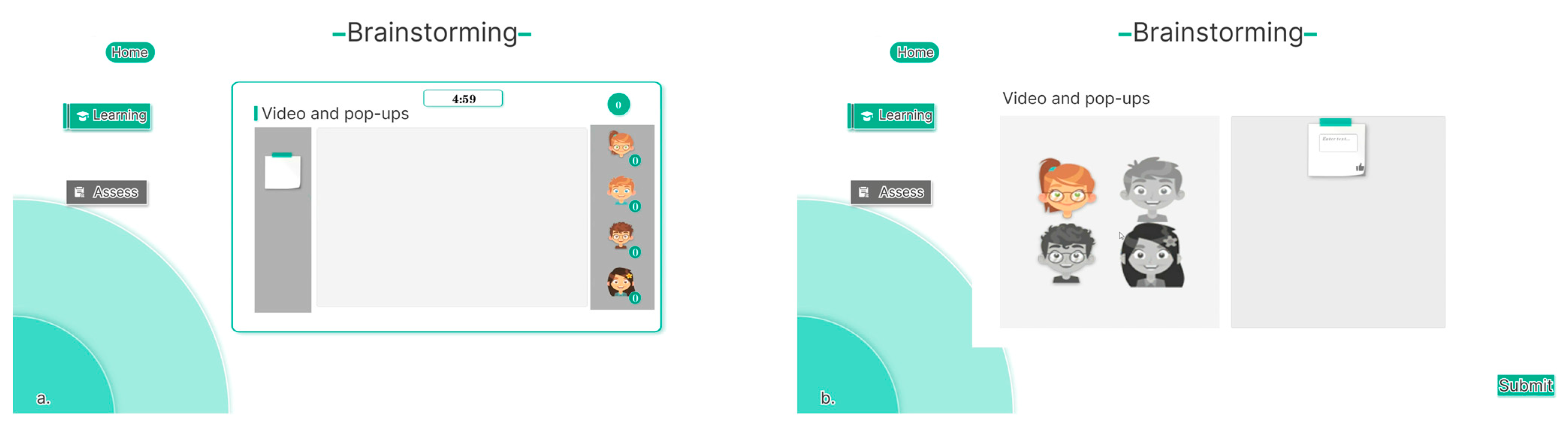

4.3. Brainstorming Module

4.4. Intelligent Hardware Module

5. Evaluations and Improvements

5.1. Expert Evaluation

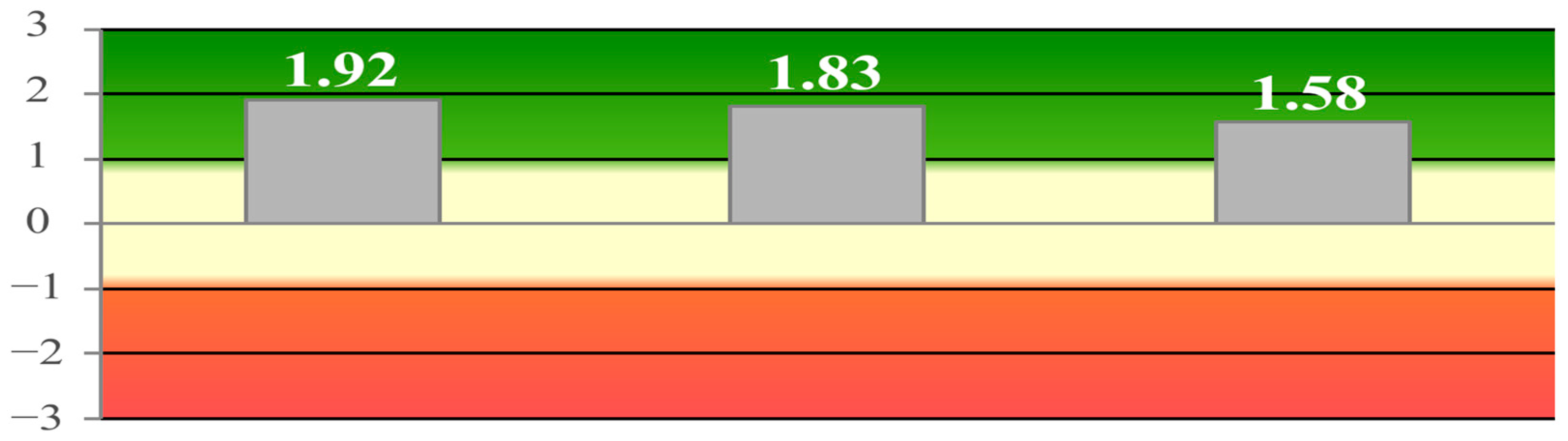

5.2. User Evaluation

- Excellent: within the best 10% of the results in the benchmark dataset.

- Good: 10% of the benchmark dataset is better than the evaluated product, and 75% is worse.

- Above average: 25% of the benchmark dataset is better than the evaluated product, and 50% is worse.

- Below average: 50% of the benchmark dataset is better than the evaluated product, and 25% is worse.

- Bad: within the worst 25% of the benchmark dataset.
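The five benchmark levels above amount to a threshold lookup on the share of benchmark products that score better than the evaluated product. The sketch below assumes that this share is already known; the function name `ueq_level` and the handling of values exactly on a boundary are assumptions, not part of the questionnaire's official tooling.

```python
def ueq_level(fraction_better: float) -> str:
    """Map the share of benchmark products that score better than the
    evaluated product (0.0 to 1.0) to a benchmark level."""
    if not 0.0 <= fraction_better <= 1.0:
        raise ValueError("fraction_better must lie between 0 and 1")
    if fraction_better < 0.10:
        return "Excellent"      # within the best 10%
    if fraction_better < 0.25:
        return "Good"           # 10% better, 75% worse
    if fraction_better < 0.50:
        return "Above average"  # 25% better, 50% worse
    if fraction_better < 0.75:
        return "Below average"  # 50% better, 25% worse
    return "Bad"                # within the worst 25%


for share in (0.05, 0.20, 0.40, 0.60, 0.90):
    print(share, ueq_level(share))
```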

5.3. The Improvements

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dimensions | Categories | User Needs and Pain Points | Design Opportunities |
|---|---|---|---|
| Information presentation | Information transfer efficiency; Information feedback | Not easy to view 3D images; Difficulty reading text in 3D; Do not know how to operate correctly; Do not know the position and progress; Do not know which step went wrong | Display 2D images; Present text in 2D; Demonstrate operations; Set up a progress alert; Provide feedback for errors |
| Platform characteristics | Difficulty of use; Fun; Interactivity; Functionality | Spend time to understand the platform; Learning process is boring; Interaction lacks memory points; Not convenient to look back | Adopt an easy design logic; Provide rich content; Enrich interaction styles; Modularize course content |
| Course assessment | Nature of assessment; Assessment method | Objective assessment is rigid; No mastery of practical content | Assess principal content; Assess in stages |
| Instruction design | Course preparation; Course content | No holistic understanding of the course; Low motivation to learn | Add overall introduction; Enhance the purpose |
| Presentation format | Simulation degree; Simulation presentation | Technology is difficult to simulate; Poor 3D visual presentation | Adopt cartoon styles; Adopt virtual simulation |

| Dimensions | Our Platform | Zoom | Rain Classroom |
|---|---|---|---|
| Information presentation | Equipped with appropriate presentation format for teaching content with high efficiency | Suitable for abstract theory | Works as an auxiliary tool |
| Platform characteristics | Novice teaching, easy to use, interactive, replay at any time | No interactivity, inconvenient to replay | Weak interactivity, unable to record video and audio |
| Course assessment | Phased teaching, timely assessment, easy to master | Teaching only, no assessment | Teaching only, less assessment |
| Instruction design | Clear and modular course structure | Cannot show learning progress clearly | Cannot perform independent learning |
| Presentation format | Multiple presentation methods | PowerPoint lectures only | Images and text only |

| Dimensions | M | SD | Levels |
|---|---|---|---|
| Attractiveness | 1.92 | 0.61 | Excellent |
| Perspicuity | 1.57 | 1.07 | Above average |
| Efficiency | 1.97 | 0.58 | Excellent |
| Dependability | 1.94 | 0.71 | Excellent |
| Stimulation | 1.43 | 0.97 | Good |
| Novelty | 1.74 | 0.82 | Excellent |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, X.; Liu, W.; Jia, J.; Zhang, X.; Leifer, L.; Hu, S. Prototyping an Online Virtual Simulation Course Platform for College Students to Learn Creative Thinking. Systems 2023, 11, 89. https://doi.org/10.3390/systems11020089
