Abstract

With the rapid development of science and technology, the ability to think creatively has become an essential criterion for assessing talent. Creative thinking courses for college students have been disrupted by COVID-19 and are challenging to conduct. This study aimed to explore practical ways to teach creative thinking online and to identify design opportunities for this teaching activity. Through qualitative interviews, we found that the factors that influenced the design of the online virtual simulation course platform centered on five dimensions: information presentation, platform characteristics, course assessment, instruction design, and presentation format. Through the analysis of user requirements, we obtained six corresponding design guidelines. Based on the knowledge system of design thinking, we set up eight modules in the course platform and developed a prototype comprising 100 user interfaces. We invited three experts and 30 users to conduct cognitive walk-through sessions and iterated the design based on their feedback. In the user evaluation, the dimensions of attractiveness, efficiency, dependability, and novelty reached an excellent rating.

1. Introduction

Along with the rapid advance of technology, society has gradually entered the information era. In this context, innovation education is being developed in universities and educational institutions [1,2,3]. In line with this educational trend, our institute launched a Design Thinking course in 2019. The course aims to leverage transdisciplinary strengths to improve university students’ creative thinking and problem-solving skills [4,5,6]. The course structure is based on Stanford University’s ME310 course framework, which is globally recognized as an effective model for creative talent development [7,8]. We have localized the course according to the actual teaching context and focused on developing students’ creative thinking. In terms of teaching content, the course integrates psychology-related knowledge, introduces global resources, and combines theory with practice, actively guiding students to break through their professional limitations and cultivate their creative thinking in depth and from multiple perspectives [9,10]. However, the time and space costs of such courses limit the reach of creative thinking education. COVID-19 has made implementation more complicated, and domestic universities have therefore moved to online teaching. Creative thinking courses focus on experiential education, yet existing massive open online course (MOOC) platforms suffer from poor interactivity, a single form of course presentation, and delayed feedback, which reduce teaching efficiency [11].

We investigated the characteristics of college students learning creative thinking in an online virtual simulation environment, conducted a quantitative analysis of the teaching effectiveness of offline courses, and conducted in-depth interviews with target users in conjunction with the analysis results. We found a way to define user interface design and interaction style of the virtual simulation course platform to improve user experience in line with the preference of college students [12,13,14]. According to the findings in literature, key issues to be addressed are:

- What are the needs and dilemmas of college students in online virtual simulation learning?

- What are the opportunities and solutions to help college students learn on an online virtual simulation course platform?

- How do college students experience these solutions?

2. Related Works

Creativity is defined by Guilford [15] as a concrete manifestation of creative ability, by Amabile [16] as the ability to generate novel and valuable ideas, and by Mumford et al. [17] as the merging and reorganization of existing knowledge. Sternberg [18] considered creativity a multidimensional phenomenon and proposed a three-facet model, which divides creativity into the level of intelligence, the style of intelligence, and personality. He emphasized the interaction between these three facets and the many internal factors contained in each of them. Sarsani [19] argues that creativity should be summarized as a holistic, multidimensional concept. In subsequent psychological studies, creativity has been defined in two parts: originality and effectiveness [20]. Originality refers to novelty, rarity, and uniqueness. Effectiveness is related to utility and appropriateness and must have some value to a group or culture. In general, creativity comes from a wide range of sources. It is holistic, multidimensional, and complex, and it needs to produce valuable outputs.

Some researchers describe online learning as learning that is carried out entirely online [21]. Online learning tends to look very different depending on the delivery context [22]. Most researchers describe online learning as a learning activity that uses some technology or, furthermore, as distance learning. This form of learning provides educational opportunities to learners who need access to educational conditions. It offers accessibility, connectivity, flexibility, and the ability to facilitate student-teacher interaction [23]. The concept of the MOOC was introduced to describe a collaborative course on connectivism [24]. The MOOC provides a platform for exploring new pedagogical approaches, with the instructor as the central element. The personalized learning environment has changed the traditional teacher-student relationship, and this new relationship places greater demands on the skills and knowledge of the teacher [25,26]. For students, online learning is influenced by four factors: teacher traits, student traits, technical support, and convenience, the most important of which are the traits of the students themselves [27]. Students’ behaviors can be more complex when they engage in online learning [28]. Therefore, students need strong self-management skills and must be able to adapt to the difficulties and challenges presented by the online learning environment [29]. Otherwise, the expected quality of learning will not be achieved [30]. In addition, interaction is a focus of online learning research: traditional interaction styles lead to silence in online courses, and online learning requires new ways of interaction [31,32]. In summary, online learning is portable and flexible, breaking time and space constraints, making resources public, and personalizing learning. However, new ways of teaching and learning also bring challenges: teachers’ skills lag behind new teaching tools, and teachers need to invest more time and effort in preparation; students need stronger self-management skills; and online learning platforms require new ways of interaction.

The emergence of virtual simulation technology has led to a new type of relationship between teachers and students based on learning in virtual environments [33]. Virtual simulation technology has been used in psychological research to explore psychological processes and intrinsic mechanisms [34]. Researchers have made many attempts to explore students’ experiences in virtual environments, and role-playing is one of them. In role-playing, students take on different roles to understand others’ perspectives, interact actively and promptly with others, and share views freely. This interaction helps improve students’ collaboration, cognitive, and communication skills [35,36]. However, the role-play approach is not well suited to online instruction [37]. Overcoming students’ reluctance to speak, difficulty in taking on roles, lack of collaboration, and difficulties in sharing information relies on facilitators, who need to analyze the status and characteristics of the student body in real time and plan accordingly [38].

The use of virtual reality technology is another attempt at virtual simulation education. Situated and experiential education has positive pedagogical value, but due to budget and time pressures, many universities have reduced the use of situated and experiential instruction [39]. Based on improvements in virtual reality technology, educators see it as a practical educational approach [40]. However, its limitations are equally prominent. One of the most severe problems is motion sickness, which produces effects such as nausea, vomiting, and vertigo [41]. In addition, virtual reality has many hardware inconveniences, such as the inability to take handwritten notes [42]. The fully immersive experience of virtual reality brings powerful environmental representations, including spatial relationships, visual presentations, and rich soundscapes. It allows students to break the limits of space and time and meets the requirements of situated and experiential teaching. However, the problem of negative experiences in virtual reality cannot be ignored. The negative experience caused by motion sickness seriously affects users’ willingness to use the technology. Because it persists throughout use, it prevents users from focusing on the given task [43]. This negative experience cannot be resolved in the short term because of hardware limitations (e.g., the comfort of wearing glasses), and the excess of information within a 3D scene is a further potential problem for teaching applications of virtual reality.

Over the past few years, we have experimented with several online platform-based teaching methods for creativity development because of the impact of COVID-19. We have tested the meeting software Zoom (zoom.us, accessed on 1 February 2023), Tencent Meeting (meeting.tencent.com, accessed on 1 February 2023), and Rain Classroom (yuketang.cn/en, accessed on 1 February 2023), and the online collaboration software Figma (figma.com, accessed on 1 February 2023) and JS Design (js.design, accessed on 1 February 2023). The advantage of this kind of software is that it is developed by large enterprises, has good product maturity, and rarely breaks down. However, in terms of actual pedagogical results, these tools alone are not enough to support effective online creativity development. To obtain the desired features and interactions, we had to run several programs simultaneously. For example, for an online workshop, we needed both Zoom and Figma. Both the teaching teams and student teams require a unified platform to meet their online teaching and learning needs.

Based on the above issues, we have explored an online virtual simulation teaching model suitable for creative thinking cultivation.

3. Materials and Methods

We reviewed research on online virtual simulations and analyzed current online and virtual simulation designs for enhancing college students’ creativity, as well as existing online learning platforms. We then analyzed the teaching effectiveness quantitatively and used the results as the basis for developing an interview protocol, divided into four dimensions: background and basic information, online learning, use of the virtual simulation platform, and creative thinking courses. A total of eight participants were screened, including three males and five females: two aged 20 years, three aged 21 years, and three aged 23 years. We then conducted a qualitative analysis of the in-depth interviews using NVivo 12 software (qsrinternational.com/nvivo-qualitative-data-analysis-software, accessed on 1 February 2023). The qualitative analysis methodology was based on grounded theory [44]. The text was labeled in an open coding phase, which produced a total of 282 labels and 169 categories. These categories were then refined by comparing and merging descriptions with similar content across categories, yielding 72 final categories.

After completing open coding, we performed axial coding, extracting and summarizing the common characteristics across categories, and then conducted selective coding. Through the overall analysis of the coded information, we extracted five core dimensions: information presentation, platform characteristics, course assessment, instruction design, and presentation format.

Regarding the visual presentation of the course, most participants felt that 3D visual presentation was not effective enough. They reported problems such as too much redundant information and poor readability of textual information. In contrast, a 2D visual style provided a better user experience. In terms of instruction design, most participants emphasized the efficiency of knowledge acquisition and were most interested in practical teaching cases and skills-based instruction. Regarding course assessment, they preferred a combination of subjective and objective evaluations. Regarding platform characteristics, they wanted more interactivity and fun, a low difficulty of use, and better support for playback and repeated learning. Regarding information presentation, they were more receptive to PowerPoint-based theoretical lectures and were willing to participate in practical case training based on virtual simulation technology.

In short, participants need an online virtual simulation course platform with high learning efficiency, rich teaching cases, practical teaching guidance, timely interactive feedback, and simple operation. We treated the core categories as factors influencing the design, identified design opportunities from user needs and pain points, and translated them into specific design guidelines (see Table 1).

Table 1.

The core dimensions, categories, user needs and pain points, and design opportunities.

Based on the design opportunities obtained from the above analysis, six design guidelines were derived by affinity grouping using the A-scheme diagramming method [45]. This approach collects verbal information about the problem to be solved and uses the relationships inherent in the text to categorize and merge items hierarchically. In interaction design, this method can therefore be used to explore functions and their hierarchy and to create a list of product functions.

- Apply 2D virtual simulation technology. Avoid using 3D and adopt 2D, which is more efficient in information transfer.

- Focus on practical teaching content. The cases need to be close to life, enhance the sense of participation of college students, and create a relaxed learning atmosphere.

- Offer detailed operating instructions. College students are unfamiliar with the virtual simulation environment and therefore spend a lot of time and effort learning to use the course platform; detailed instructions should reduce this learning cost.

- Provide immediate error feedback and text-assisted instructions. Give instant feedback on the steps where users make errors so that they can correct their mistakes in time, improving the learning efficiency of the course.

- Teach in stages. The teaching content should be phased and modularized, which is convenient for users to master and select.

- Show a macroscopic course outline and a straightforward learning process. Users can then flexibly arrange their learning progress and gain a clearer sense of their learning goals.

4. Results

Based on the guidelines, we designed the course platform with appropriate interactive features for each module. This helps enhance the course’s interactivity and increase students’ interest in independent learning. In this section, we select representative modules to show and discuss, while other modules have a similar interface design style.

4.1. Homepage

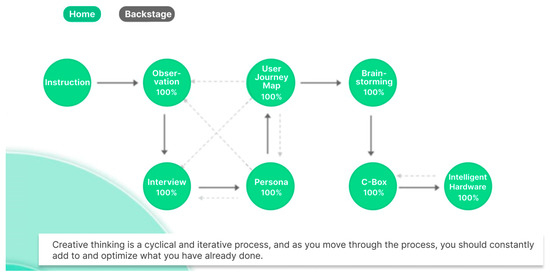

The course homepage serves as the module entrance and displays the course framework and learning progress graphically (see Figure 1). When a module has not been started, its circle is grayed out with no stroke, and progress is shown as “0%”. At this point, clicking on the module enters its learning phase. When the user has completed the learning phase of a module but not the assessment phase, the circle is green with a half-circle stroke, and the progress shows “50%”. At this point, clicking on the module enters its assessment phase. When a module is completed, the corresponding circle is green with a full green stroke, and the progress inside the circle shows “100%”. At this point, clicking on the module re-enters the learning phase so that the user can relearn it. On this page, the solid gray arrows represent the order of the course. The light gray dotted arrows mean that users can go back to the previous step to iterate when they encounter difficulties in a particular step.
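The three module states described above behave like a small state table. The following is a minimal sketch of that mapping, not the platform's actual implementation; the class, field, and function names are hypothetical, and treating "assessment done without learning done" as not started is an assumption.

```python
from dataclasses import dataclass
from enum import Enum


class ModuleState(Enum):
    NOT_STARTED = "not_started"   # learning phase not completed
    LEARNED = "learned"           # learning phase done, assessment pending
    COMPLETED = "completed"       # learning and assessment both done


@dataclass(frozen=True)
class ModuleView:
    fill: str       # fill colour of the module circle
    stroke: str     # "none", "half", or "full"
    progress: str   # label shown inside the circle
    on_click: str   # phase entered when the user clicks the module


# Values follow the homepage description; the structure itself is hypothetical.
VIEWS = {
    ModuleState.NOT_STARTED: ModuleView("gray", "none", "0%", "learning"),
    ModuleState.LEARNED: ModuleView("green", "half", "50%", "assessment"),
    ModuleState.COMPLETED: ModuleView("green", "full", "100%", "learning"),
}


def module_state(learning_done: bool, assessment_done: bool) -> ModuleState:
    """Derive the display state from the two completion flags."""
    if learning_done and assessment_done:
        return ModuleState.COMPLETED
    if learning_done:
        return ModuleState.LEARNED
    # Assumption: any case without a finished learning phase counts as not started.
    return ModuleState.NOT_STARTED


def render(learning_done: bool, assessment_done: bool) -> ModuleView:
    """Return how the module circle should look and what a click does."""
    return VIEWS[module_state(learning_done, assessment_done)]
```

For example, `render(True, False)` yields a green circle with a half stroke, a “50%” label, and a click that leads to the assessment phase.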

Figure 1.

The homepage.

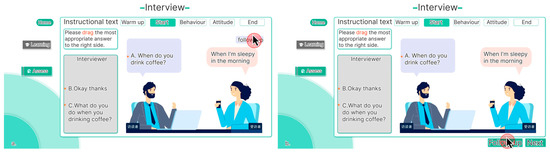

4.2. Interview Module

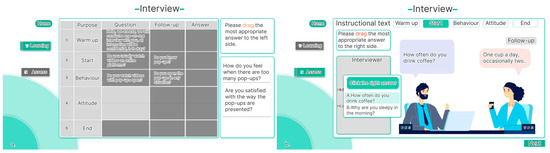

The learning phase of this module starts with a sample video of an interview, followed by a text-based explanation of the interview method. After the user clicks on the “Next” button, the course jumps to an instructional page on interview outlining and interview steps (see Figure 2). This page provides more detailed textual instructions for each stage, and a draggable text option appears on the right side, which the user needs to drag to the corresponding position in the interview outline. The assessment phase uses 2D virtual simulation technology. After entering the assessment phase, the system provides an outline of the interview so that the user can understand the content and topic of the entire interview. After reading the interview outline, the user will click Next to enter the simulated interview interface. On this page, the user, as the primary interviewer, asks questions to the subject. After selecting the appropriate questions, the user must drag the corresponding options into the dialog box. If the chosen question is inappropriate, the system promptly identifies the error. The option of “follow-up questions” appears in some specific questions. After completing the entire interview process, the platform generates a transcript.

Figure 2.

The user interface for teaching the interview module. (a): interview outline and interview steps. (b): the option of “follow-up questions”.

4.3. Brainstorming Module

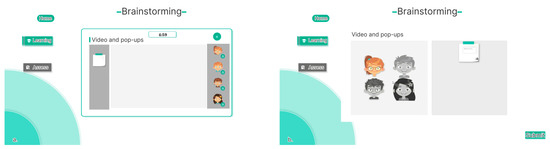

This module introduces the role, rules, and conditions of brainstorming. The user clicks the “Next” button to enter the Beginner’s Guide stage. The interface guides the user through the brainstorming module’s functions and interactions with a highlighted cursor and provides supporting textual instructions. Once familiar with the operation, the user clicks “End Newbie Guide” to enter the formal learning session, which lasts for 5 min (see Figure 3). The logic of the formal learning session is the same as that of the Beginner’s Guide: users simulate the process of posting sticky notes by clicking on the sticky notes on the left side and typing their thoughts into them. At the end of the session, users can view the results of their brainstorming with their group members. The assessment phase uses a different theme in the textual explanation section and omits the Beginner’s Guide. Users directly enter the formal brainstorming session and can view the results. The answers to the assessment questions are open-ended and can be completed by the user without objective scoring.

Figure 3.

The user interface of teaching the brainstorming module. (a): go to the brainstorming session. (b): view brainstorming results.

4.4. Intelligent Hardware Module

After entering the module, the user first learns the meaning of prototyping through textual explanations. After the user clicks the “Next” button, the interface explains the circuit connections, operating principle, and function of the components in text and pictures so that the user understands the hardware principles in depth before using the virtual simulation (see Figure 4). After learning the principles, the user clicks the “Next” button to jump to the virtual simulation of hardware construction. On this page, the platform guides the user through a predefined intelligent hardware building task. The user follows the prompts in the task text to build the hardware and thereby understand the basic principles. The assessment phase poses questions and lets users independently build intelligent hardware for specific purposes in a purely virtual environment. The user must select the correct components from the left side and place them in the correct position until the intelligent hardware is successfully built.

Figure 4.

The user interface of teaching the intelligent hardware module. (a): learn the hardware principles. (b): the virtual simulation of hardware construction.

5. Evaluations and Improvements

The usability evaluations included expert evaluation and user evaluation. A total of three experts and 30 college students experienced and tested a high-fidelity prototype. These participants conducted cognitive walk-through sessions [46]. They explained what they were thinking about at each step of the operation.

5.1. Expert Evaluation

Since the functional framework of our platform differs significantly from other online teaching tools, it was impossible to find a suitable competitor for an A/B test. For this reason, in the expert evaluation stage, we invited user interface design experts to compare our platform with others (e.g., Zoom and Rain Classroom) on five experience dimensions of concern to users. The results are shown in Table 2.

Table 2.

The side-by-side comparison between our platform and Zoom and Rain Classroom.

The experts simulated each operation step in the platform’s process, completed all the test tasks, evaluated the product based on their professional knowledge and their experience during the session, and proposed modifications. From the point of view of the teaching objective, the platform achieves the expected teaching effect. However, each module is relatively independent, and the relationships between modules need to be made more prominent. A brief introduction that ties the module to its teaching objective should be added at the beginning of each module. For example, in the interview module it may be more suitable for users to first clarify the objective of learning and practicing the interview method and only then be introduced to the related methodological knowledge. A textual introduction should also be added for each module video: watching videos is intuitive, but reading text is easier and more efficient. From the point of view of interaction design, a few interactions are still inefficient, and the overall functionality still needs to be improved.

5.2. User Evaluation

The participants completed the corresponding test tasks based on the test task sheet and then filled out the User Experience Questionnaire (UEQ) scale and discussed the overall course experience [47,48]. This scale is designed as a semantic differential scale: each item consists of a pair of words with opposite meanings, which users rate on a 7-point scale. The scores range from −3 (totally disagree) to +3 (totally agree), with half of the items starting with the positive term and the rest with the negative term, in a randomized order. The scale contains 26 items and six dimensions: attractiveness, perspicuity, efficiency, dependability, stimulation, and novelty. Participants evaluated their experience of the platform on these six dimensions. A total of 31 participants were recruited, of whom 30 provided valid responses. One participant took less than one minute to fill in the scale and answered every item with seven, so that response was judged invalid.
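The scoring and screening steps described above can be illustrated with a short sketch. It assumes raw answers are recorded as positions 1 to 7 from left to right; the item polarities and the item-to-dimension assignment in the toy example are hypothetical, since the real ones are defined by the official UEQ materials. The one-minute threshold mirrors the invalid response described in the text, and all function names are illustrative.

```python
from statistics import mean


def to_scale_value(raw: int, positive_on_right: bool) -> int:
    """Map a raw 1..7 answer to the -3..+3 range, flipping reversed items."""
    if not 1 <= raw <= 7:
        raise ValueError("raw answers must be between 1 and 7")
    centred = raw - 4
    return centred if positive_on_right else -centred


def is_suspicious(raw_answers, seconds_taken, min_seconds=60):
    """Flag a response that was filled in very quickly with identical answers."""
    return seconds_taken < min_seconds and len(set(raw_answers)) == 1


def dimension_means(raw_answers, polarity, dimension_items):
    """Average the transformed item values of each dimension.

    polarity[i] is True when item i has its positive term on the right.
    dimension_items maps a dimension name to the indices of its items.
    """
    values = [to_scale_value(r, p) for r, p in zip(raw_answers, polarity)]
    return {
        name: mean(values[i] for i in indices)
        for name, indices in dimension_items.items()
    }


# Toy example with four hypothetical items and two dimensions.
toy_answers = [7, 1, 6, 2]
toy_polarity = [True, False, True, False]
toy_dimensions = {"efficiency": [0, 1], "novelty": [2, 3]}
toy_result = dimension_means(toy_answers, toy_polarity, toy_dimensions)
```

In the toy example, the reversed items (answers 1 and 2) are flipped to +3 and +2, so both items of each dimension agree after transformation.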

The UEQ scale, as a well-established scale, specifies an accompanying data analysis method. Namely, by comparing the results of the evaluated product with the benchmark data, it is possible to derive the relative quality of the evaluated product compared to other products. The benchmark dataset contains 21,175 people from 468 studies covering products in different industries (e.g., business software and social networks). The quality of the evaluated products is classified into five categories by comparing them with the benchmark data:

- Excellent: within the best 10% of the results in the benchmark dataset.

- Good: 10% of the benchmark dataset is better than the evaluated product, and 75% is worse.

- Above average: 25% of the benchmark dataset is better than the evaluated product, and 50% is worse.

- Below average: 50% of the benchmark dataset is better than the evaluated product, and 25% is worse.

- Bad: within the worst 25% of the benchmark dataset.
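The five categories above amount to thresholds on the share of benchmark results that the evaluated product outperforms. A minimal sketch follows; the function name is hypothetical, and assigning boundary values to the higher category is an assumption. In practice, the share would come from the UEQ benchmark data for each dimension, which is not reproduced here.

```python
def benchmark_category(share_worse: float) -> str:
    """Classify a product by the share of benchmark results it outperforms.

    share_worse is the fraction (0.0 to 1.0) of benchmark results that are
    worse than the evaluated product on a given dimension.
    """
    if not 0.0 <= share_worse <= 1.0:
        raise ValueError("share_worse must be between 0 and 1")
    if share_worse >= 0.90:   # within the best 10%
        return "Excellent"
    if share_worse >= 0.75:   # 10% better, 75% worse
        return "Good"
    if share_worse >= 0.50:   # 25% better, 50% worse
        return "Above average"
    if share_worse >= 0.25:   # 50% better, 25% worse
        return "Below average"
    return "Bad"              # within the worst 25%
```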

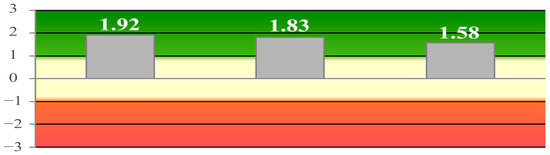

The data from the UEQ scale are shown in Table 3. Overall, the participants evaluated this online virtual simulation course platform positively. The highest average score was for efficiency, with a mean of 1.97 (SD = 0.58), which is an excellent rating compared to the benchmark dataset. This suggests that the design transformation phase preserved the efficiency of communicating the course information, and that the strategy of abandoning 3D virtual simulation in favor of graphic lectures plus 2D virtual simulation, chosen according to the course content, was effective. Next was dependability, with a mean of 1.94 (SD = 0.71), which is also an excellent rating compared to the benchmark dataset. Users receive timely feedback and suggestions from the course platform when they make mistakes or select the wrong options. In addition, attractiveness, with a mean of 1.92 (SD = 0.61), and novelty, with a mean of 1.74 (SD = 0.82), were rated excellent, indicating that the course content and interaction style contributed to the quality of the user experience. However, stimulation, with a mean of 1.43 (SD = 0.97), and perspicuity, with a mean of 1.57 (SD = 1.07), need to be improved.

Table 3.

The UEQ scores.

The dimensions of the UEQ scale can be grouped into attractiveness, pragmatic quality (i.e., perspicuity, efficiency, and dependability), and hedonic quality (i.e., stimulation and novelty). Attractiveness is treated as an independent valence dimension. Pragmatic quality describes the task-related dimensions, and hedonic quality describes the task-independent dimensions. Figure 5 shows the attractiveness, pragmatic quality, and hedonic quality values against the benchmark. Attractiveness and pragmatic quality are both at a high level, which is consistent with the design goals described above. However, there is still room for improvement in hedonic quality. According to the scale results, users are generally positive about the efficiency of knowledge transfer, the course content, and the novelty of the interaction design. However, users are still unclear about some of the operations.
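As a worked illustration of this grouping, the sketch below averages the dimension means reported in the text into pragmatic and hedonic quality. The aggregated values are computed here for illustration and are not quoted from the paper; values derived from item-level data (as in Figure 5) could differ slightly. Variable names are hypothetical.

```python
from statistics import mean

# Dimension means as reported in the user evaluation.
ueq_means = {
    "attractiveness": 1.92,
    "perspicuity": 1.57,
    "efficiency": 1.97,
    "dependability": 1.94,
    "stimulation": 1.43,
    "novelty": 1.74,
}

PRAGMATIC = ("perspicuity", "efficiency", "dependability")
HEDONIC = ("stimulation", "novelty")

attractiveness = ueq_means["attractiveness"]
pragmatic_quality = mean(ueq_means[d] for d in PRAGMATIC)  # (1.57 + 1.97 + 1.94) / 3, about 1.83
hedonic_quality = mean(ueq_means[d] for d in HEDONIC)      # (1.43 + 1.74) / 2, about 1.585
```

Under these assumptions, pragmatic quality sits close to attractiveness, while hedonic quality is lower, which matches the observation that hedonic quality has more room for improvement.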

Figure 5.

The UEQ results. Left: attractiveness. Middle: pragmatic quality. Right: hedonic quality.

5.3. The Improvements

Here we present the interview module as a representative example. Other modules have similar features and are not described in detail due to space constraints. When users learned the “follow-up questions” step, they were often confused by inconsistent interaction styles. The original button was inconsistent with the other buttons, so users spent more time looking for it and working out how to operate it (see Figure 6). In addition, the pressed color of the “follow-up questions” button was not different enough from its default color, which led users to click the button repeatedly. After iteration, the button was given the same interaction style as the “Next” button, and its position was changed to the left of the “Next” button. This saves the user from having to search for the button and makes it easy to distinguish between the button’s pressed state and its default state. In terms of the sequence of actions on the entire page, before the improvement, the interaction logic was: find the interactive button, click “follow-up questions”, not sure if it was clicked, click “follow-up questions” again, answer the question, find the interactive button, click “Next”. After the improvement, the interaction logic is: click “follow-up questions”, answer the question, click “Next”.

Figure 6.

The improvements of user interface of teaching the interview module. (a): before. (b): after.

6. Discussion

This course platform is planned to go online in 2023. Subsequent user feedback needs to be collected, and the interaction design needs to be iterated accordingly. Regarding course content, the platform currently hosts only our institute’s self-developed creative thinking course. We expect more universities to join in creating content for this platform. In terms of creativity cultivation, this study is limited by the immaturity of the available technology, which makes it challenging to provide online collaboration, so only single-person learning is currently supported. With the development of immersive virtual simulation technology and hardware updates, the course platform will include a virtual, collaborative, and interactive space in the future [49]. In this context, we can realize both online workshops in the virtual simulation environment and teaching attempts such as scene-building and gamified teaching.

The curriculum design was based on Stanford University’s Design Thinking creativity development course, which has proven effective for creativity development. From there, the main task is to transfer this value efficiently to an online setting so as to preserve the same creativity development effect. The usability evaluations indicate that the platform improves users’ learning efficiency and interest and increases memorability and appeal, which supports teaching effectiveness. Once the ease of use of online teaching is further improved, the platform should be more conducive to improving students’ creativity.

For the future direction of development, we will make further iterations in technology, such as the use of augmented reality technology to increase the richness of the user’s perception of the natural world through the information generated by the computer system [50]. Reality is augmented by applying virtual information to the real world and superimposing computer-generated virtual objects, scenes, and system information onto real scenes. We will use such technology to keep college students in an atmosphere of efficient collaboration. The application of the new technology requires professional technical teams to provide support in 3D modeling, computer programming, server operation, and website construction. We plan to build a professional technical support team for virtual experiments.

7. Conclusions

This paper describes the process of developing an online virtual simulation course platform for creative thinking aimed at college students. We explored the needs of college students when learning creativity development courses in an online virtual simulation environment. We identified the factors influencing online virtual simulation learning and generated six design guidelines accordingly. Based on this, we designed and developed a virtual simulation experimental platform to refine the practical syllabus and teaching contents. We have integrated virtual simulation experiments into real project-based teaching and learning. The course platform uses virtual simulation technology to simulate situated and experiential education, allowing students to complete “user research”, “affinity analysis”, and “prototyping” online. This technology has improved students’ empathy and ability to define and solve problems. In addition, by building this platform, we have expanded the practical field of virtual simulation technology and enriched the teaching methods for cultivating creativity.

Author Contributions

Conceptualization, J.J.; methodology, L.L.; writing—original draft preparation, X.W.; writing—review and editing, W.L.; supervision, S.H.; project administration, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Ethics Committee of the Beijing Normal University (Code: 202202280023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the participants to publish this paper.

Data Availability Statement

Not applicable.

Acknowledgments

We thank the students at Beijing Normal University and the study participants for their meaningful support of this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Maritz, A.; De Waal, A.; Buse, S.; Herstatt, C.; Lassen, A.; Maclachlan, R. Innovation education programs: Toward a conceptual framework. Eur. J. Innov. Manag. 2014, 17, 166–182.

- Lewrick, M.; Link, P.; Leifer, L. The Design Thinking Playbook: Mindful Digital Transformation of Teams, Products, Services, Businesses and Ecosystems; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Huang, J.; Gan, L.; Jiang, M.; Zhang, Q.; Zhu, G.; Hu, S.; Zhang, X.; Liu, W. Building a virtual simulation teaching and learning platform towards creative thinking for Beijing Shahe education park. In Proceedings of the International Conference on Human Interaction and Emerging Technologies, Virtual, 27–29 August 2021; pp. 1218–1226.

- Kuo, F.R.; Chen, N.S.; Hwang, G.J. A creative thinking approach to enhancing the web-based problem solving performance of university students. Comput. Educ. 2014, 72, 220–230.

- Alqahtani, A.Y.; Rajkhan, A.A. E-learning critical success factors during the COVID-19 pandemic: A comprehensive analysis of e-learning managerial perspectives. Educ. Sci. 2020, 10, 216.

- Lattanzio, S.; Nassehi, A.; Parry, G.; Newnes, L.B. Concepts of transdisciplinary engineering: A transdisciplinary landscape. Int. J. Agil. Syst. Manag. 2021, 14, 292–312.

- Liu, W.; Byler, E.; Leifer, L. Engineering design entrepreneurship and innovation: Transdisciplinary teaching and learning in a global context. In Design, User Experience, and Usability. Case Studies in Public and Personal Interactive Systems: Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 19–24 July 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 451–460.

- Dym, C.L.; Agogino, A.M.; Eris, O.; Frey, D.D.; Leifer, L.J. Engineering design thinking, teaching, and learning. J. Eng. Educ. 2005, 94, 103–120.

- Hirshfield, L.J.; Koretsky, M.D. Cultivating creative thinking in engineering student teams: Can a computer-mediated virtual laboratory help? J. Comput. Assist. Learn. 2021, 37, 587–601.

- Kuratko, D.F.; Audretsch, D.B. Strategic entrepreneurship: Exploring different perspectives of an emerging concept. Entrep. Theory Pract. 2009, 33, 1–17.

- Ma, L.; Lee, C.S. Investigating the adoption of MOOCs: A technology–user–environment perspective. J. Comput. Assist. Learn. 2019, 35, 89–98.

- Ryan, P.J.; Watson, R.B. Research challenges for the internet of things: What role can OR play? Systems 2017, 5, 24.

- Vergara, D.; Rubio, M.P.; Lorenzo, M. On the design of virtual reality learning environments in engineering. Multimodal Technol. Interact. 2017, 1, 11.

- Øritsland, T.A.; Buur, J. Interaction styles: An aesthetic sense of direction in interface design. Int. J. Hum. Comput. Interact. 2003, 15, 67–85.

- Guilford, J.P. (Ed.) Creativity Research: Past, Present and Future. Frontiers of Creativity Research: Beyond the Basics; Bearly Limited: Hong Kong, China, 1987; pp. 33–65.

- Amabile, T.M. A model of creativity and innovation in organizations. Res. Organ. Behav. 1988, 10, 123–167.

- Mumford, M.D.; Mobley, M.I.; Reiter-Palmon, R.; Uhlman, C.E.; Doares, L.M. Process analytic models of creative capacities. Creat. Res. J. 1991, 4, 91–122.

- Sternberg, R.J. A Three-Facet Model of Creativity. In The Nature of Creativity; Cambridge University Press: New York, NY, USA, 1988; pp. 125–147.

- Sarsani, M.R. (Ed.) Creativity in Education; Palgrave Macmillan: London, UK, 2005.

- Runco, M.A.; Jaeger, G.J. The standard definition of creativity. Creat. Res. J. 2012, 24, 92–96.

- Oblinger, D.; Oblinger, J. Educating the Net Generation; Educause: Boulder, CO, USA, 2005. Available online: https://www.educause.edu/ir/library/PDF/pub7101.PDF (accessed on 23 December 2022).

- Lowenthal, P.R.; Wilson, B.; Parrish, P. Context matters: A description and typology of the online learning landscape. In Proceedings of the AECT International Convention, Louisville, KY, USA, 30 October 2009; Available online: https://members.aect.org/pdf/Proceedings/proceedings09/2009I/09_20.pdf (accessed on 23 December 2022).

- Hiltz, S.R.; Turoff, M. Education goes digital: The evolution of online learning and the revolution in higher education. Commun. ACM 2005, 48, 59–64.

- McAuley, A.; Stewart, B.; Siemens, G.; Cormier, D. Massive Open Online Courses. Digital Ways of Knowing and Learning. The MOOC Model for Digital Practice; University of Prince Edward Island: Charlottetown, PE, Canada, 2010.

- Volery, T.; Lord, D. Critical success factors in online education. Int. J. Educ. Manag. 2000, 14, 216–223.

- Gloria, A.M.; Uttal, L. Conceptual considerations in moving from face-to-face to online teaching. Int. J. E-Learn. 2020, 19, 139–159.

- Alhabeeb, A.; Rowley, J. E-learning critical success factors: Comparing perspectives from academic staff and students. Comput. Educ. 2018, 127, 1–12.

- Wong, L.; Fong, M. Student attitudes to traditional and online methods of delivery. J. Inf. Technol. Educ. Res. 2014, 13, 1–13.

- Malmberg, J.; Järvelä, S.; Holappa, J.; Haataja, E.; Huang, X.; Siipo, A. Going beyond what is visible: What multichannel data can reveal about interaction in the context of collaborative learning? Comput. Hum. Behav. 2019, 96, 235–245.

- Knowles, E.; Kerkman, D. An investigation of students attitude and motivation toward online learning. InSight A Collect. Fac. Scholarsh. 2007, 2, 70–80.

- Garrison, D.R.; Cleveland-Innes, M. Facilitating cognitive presence in online learning: Interaction is not enough. Am. J. Distance Educ. 2005, 19, 133–148.

- Harasim, L. Shift happens: Online education as a new paradigm in learning. Internet High. Educ. 2000, 3, 41–61.

- Roussou, M.; Slater, M. Comparison of the effect of interactive versus passive virtual reality learning activities in evoking and sustaining conceptual change. IEEE Trans. Emerg. Top. Comput. 2017, 8, 233–244.

- Li, W.; Hu, Q. The variety of position representation in reorientation: Evidence from virtual reality experiment. Psychol. Dev. Educ. 2018, 34, 385–394.

- Cornelius, S.; Gordon, C.; Harris, M. Role engagement and anonymity in synchronous online role play. Int. Rev. Res. Open Distrib. Learn. 2011, 12, 57–73.

- Zhang, L.; Beach, R.; Sheng, Y. Understanding the use of online role-play for collaborative argument through teacher experiencing: A case study. Asia-Pac. J. Teach. Educ. 2016, 44, 242–256.

- Beckmann, E.A.; Mahanty, S. The evolution and evaluation of an online role play through design-based research. Australas. J. Educ. Technol. 2016, 32, 35–47.

- Doğantan, E. An interactive instruction model design with role play technique in distance education: A case study in open education system. J. Hosp. Leis. Sport Tour. Educ. 2020, 27, 100268.

- Schott, C.; Marshall, S. Virtual reality and situated experiential education: A conceptualization and exploratory trial. J. Comput. Assist. Learn. 2018, 34, 843–852.

- Jensen, L.; Konradsen, F. A review of the use of virtual reality head-mounted displays in education and training. Educ. Inf. Technol. 2018, 23, 1515–1529.

- Howard, M.C.; Van Zandt, E.C. A meta-analysis of the virtual reality problem: Unequal effects of virtual reality sickness across individual differences. Virtual Real. 2021, 25, 1221–1246.

- Schott, C.; Marshall, S. Virtual reality for experiential education: A user experience exploration. Australas. J. Educ. Technol. 2021, 37, 96–110.

- Chang, E.; Kim, H.T.; Yoo, B. Virtual reality sickness: A review of causes and measurements. Int. J. Hum. Comput. Interact. 2020, 36, 1658–1682.

- Corbin, J. Grounded theory. J. Posit. Psychol. 2017, 12, 301–302.

- Scupin, R. The KJ method: A technique for analyzing data derived from Japanese ethnology. Hum. Organ. 1997, 56, 233–237.

- Mahatody, T.; Sagar, M.; Kolski, C. State of the art on the cognitive walkthrough method, its variants and evolutions. Int. J. Hum. Comput. Interact. 2010, 26, 741–785.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Applying the user experience questionnaire (UEQ) in different evaluation scenarios. In Design, User Experience, and Usability. Theories, Methods, and Tools for Designing the User Experience: Proceedings of the International Conference of Design, User Experience, and Usability, Crete, Greece, 22–27 June 2014; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 383–392.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Design and evaluation of a short version of the user experience questionnaire (UEQ-S). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 103–108.

- Hamilton, D.; McKechnie, J.; Edgerton, E.; Wilson, C. Immersive virtual reality as a pedagogical tool in education: A systematic literature review of quantitative learning outcomes and experimental design. J. Comput. Educ. 2021, 8, 1–32.

- Liou, H.H.; Yang, S.J.; Chen, S.Y.; Tarng, W. The influences of the 2D image-based augmented reality and virtual reality on student learning. J. Educ. Technol. Soc. 2017, 20, 110–121.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).