Practitioners’ Perspectives towards Requirements Engineering: A Survey

Abstract

1. Introduction

2. Related Work

Summary

3. Research Methodology

3.1. Research Questions

3.2. Survey Design

3.3. Survey Execution

3.4. Survey Sampling

3.5. Data Analysis and Validation

4. Survey Results

4.1. Profile

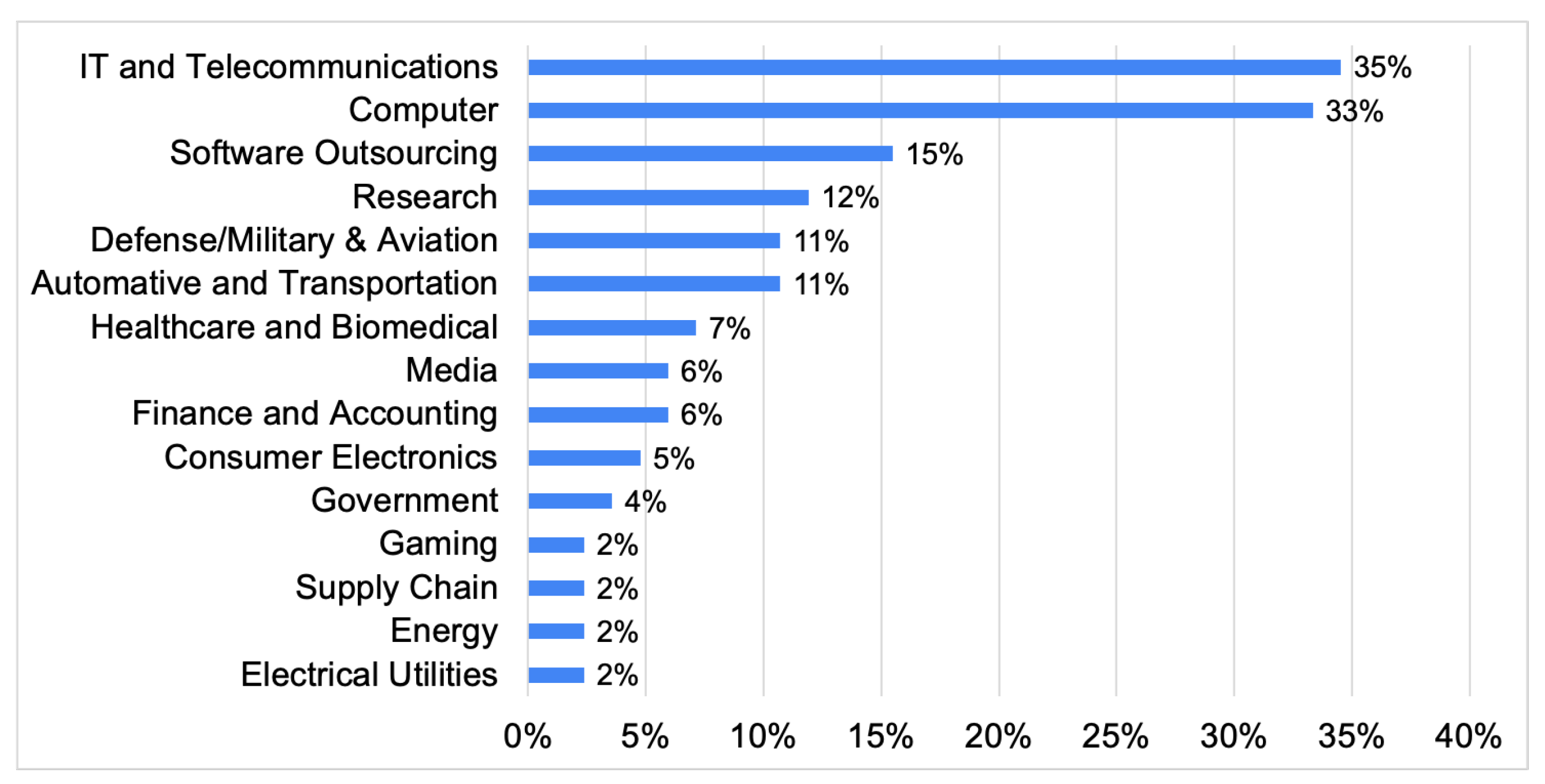

4.1.1. Work Industries

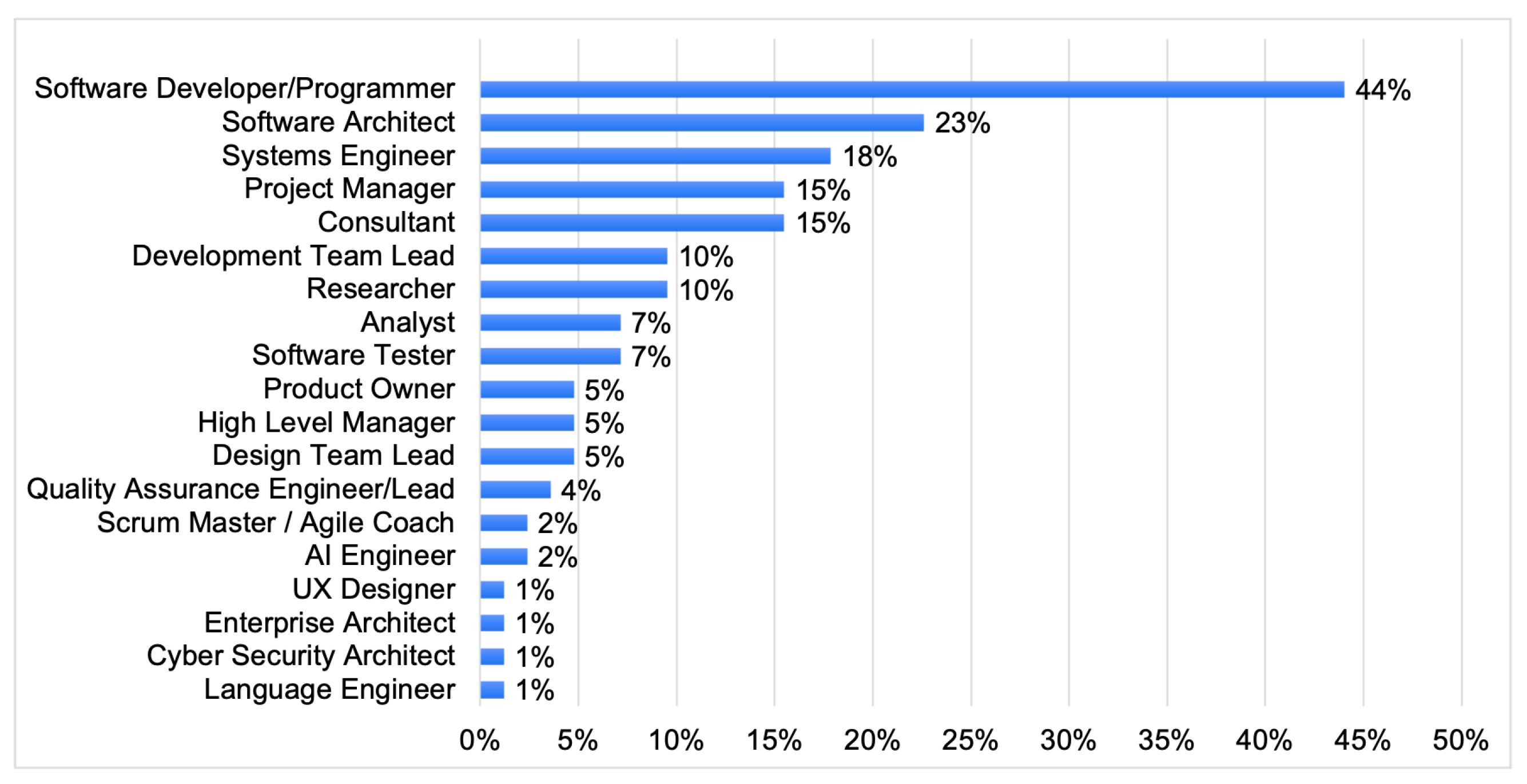

4.1.2. Job Positions

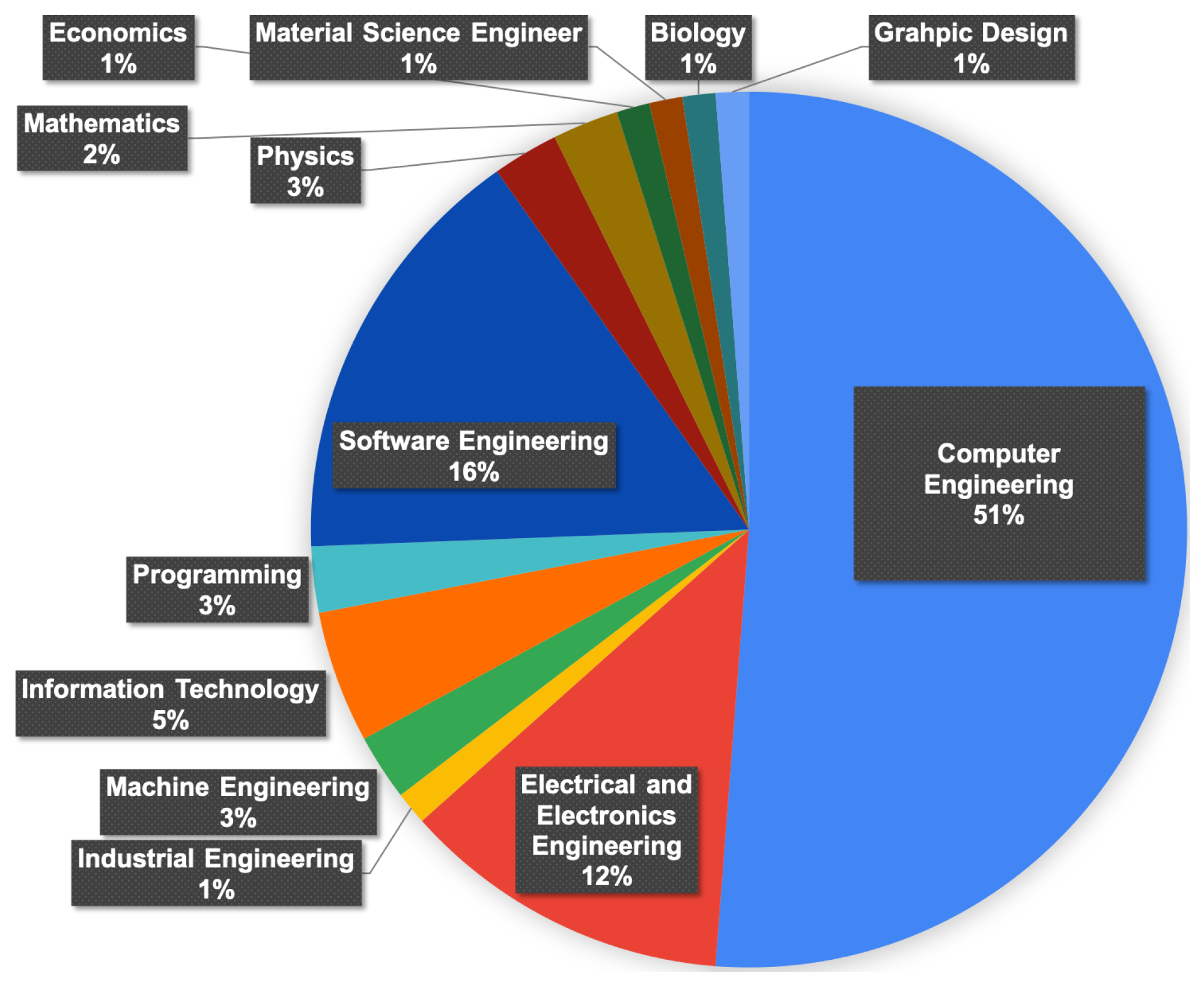

4.1.3. Bachelor Degrees

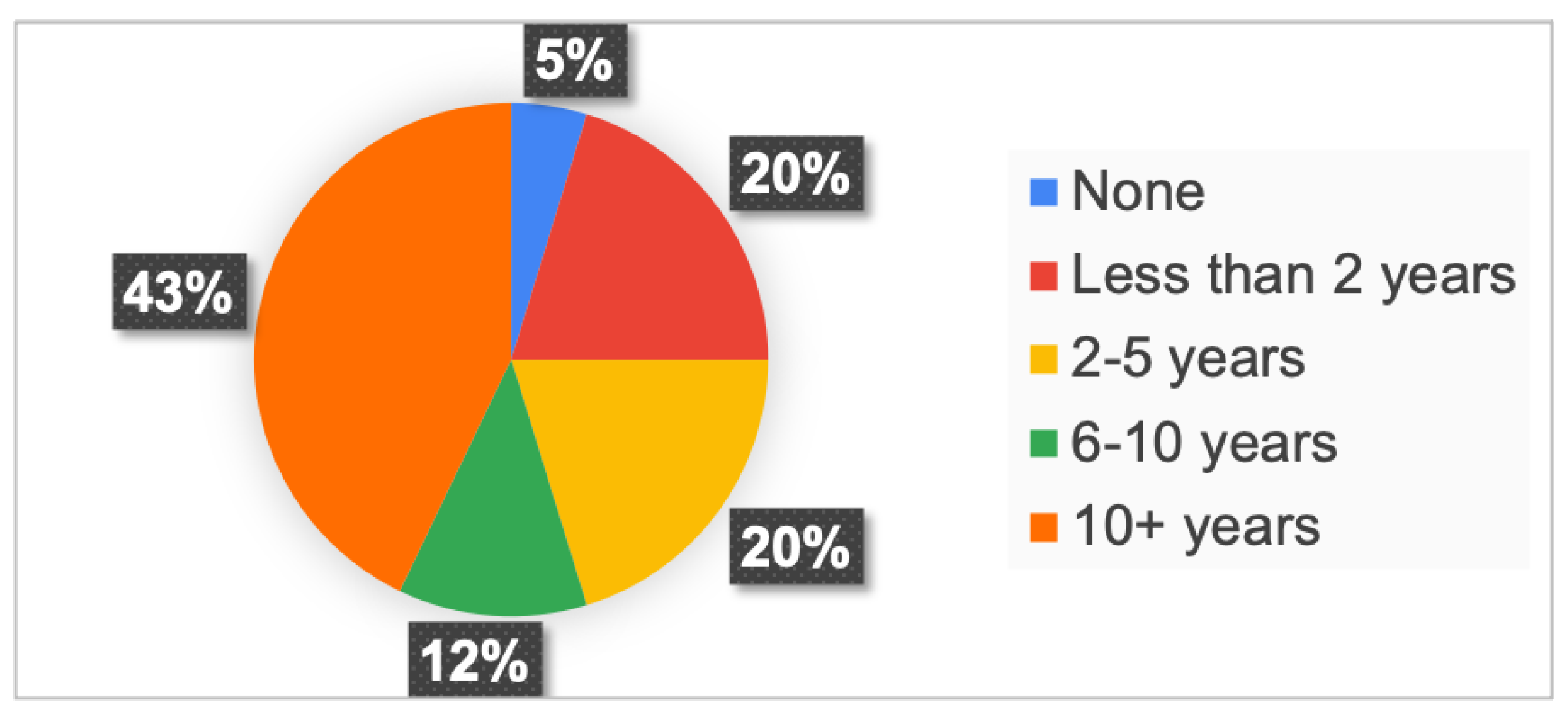

4.1.4. Years of Experience

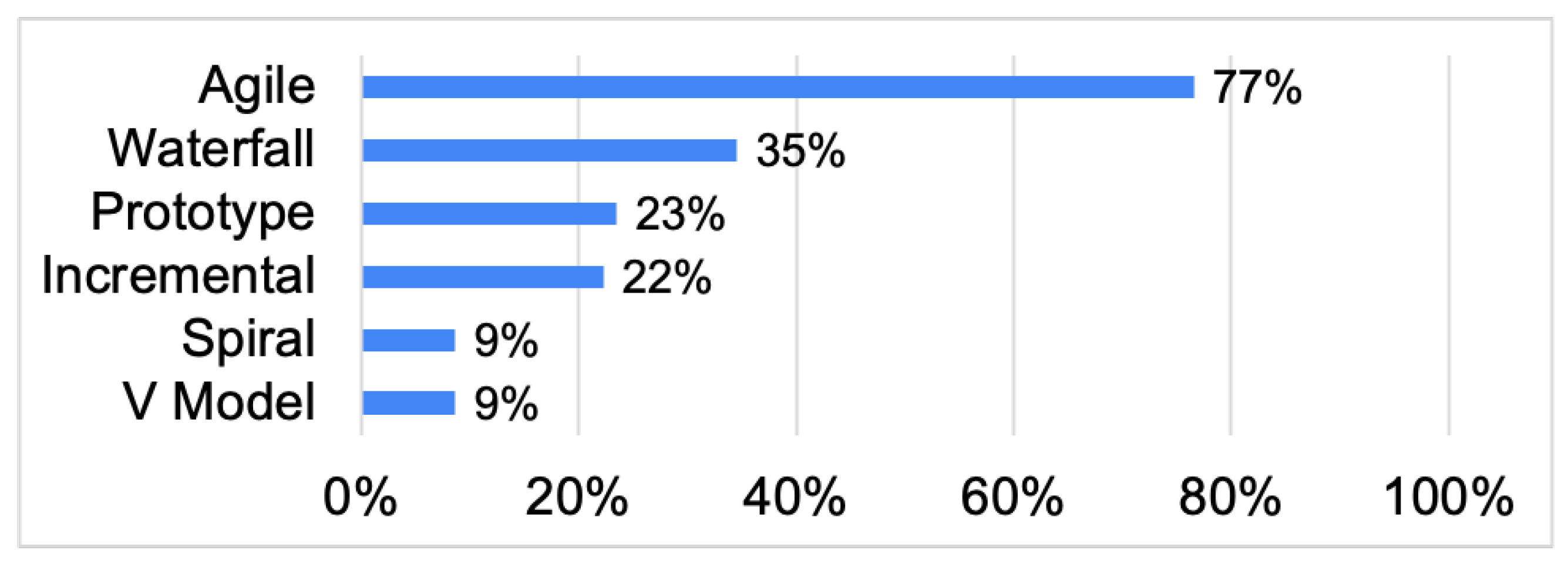

4.1.5. Software Process Model

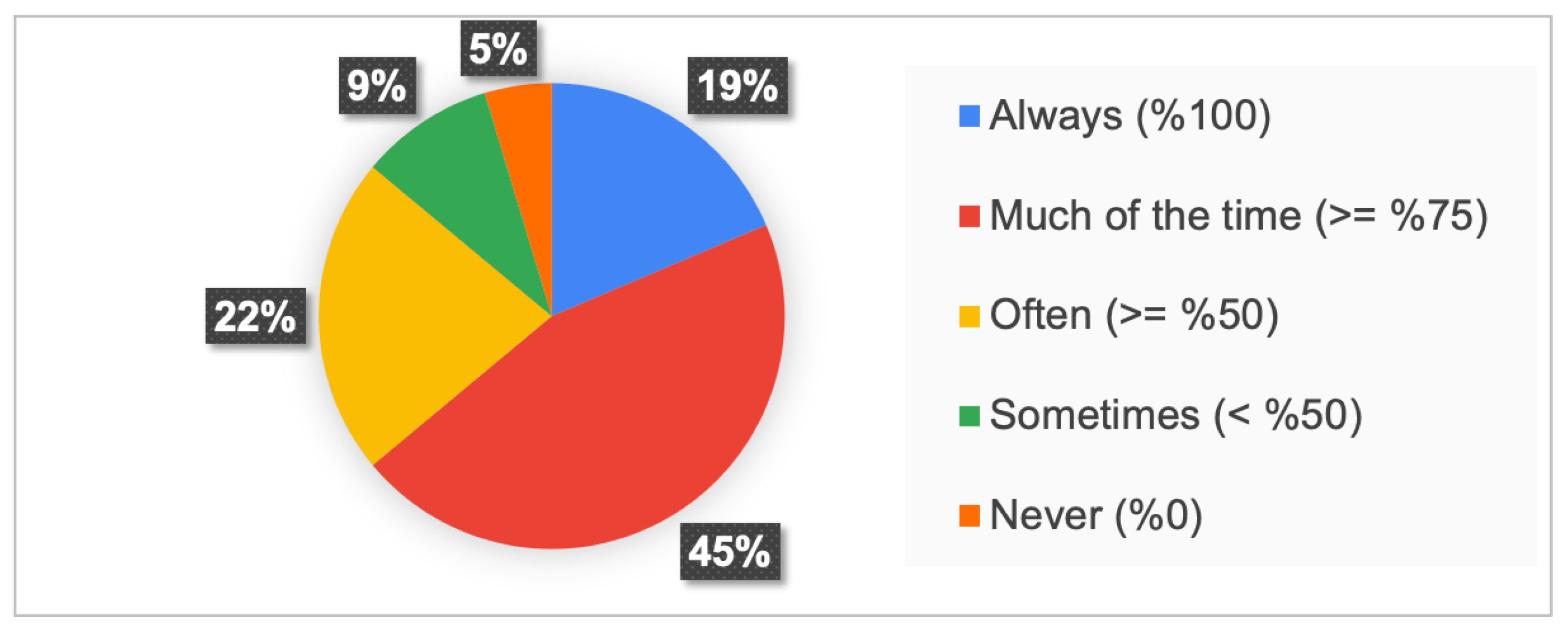

4.1.6. The Frequency of Requirements Specification

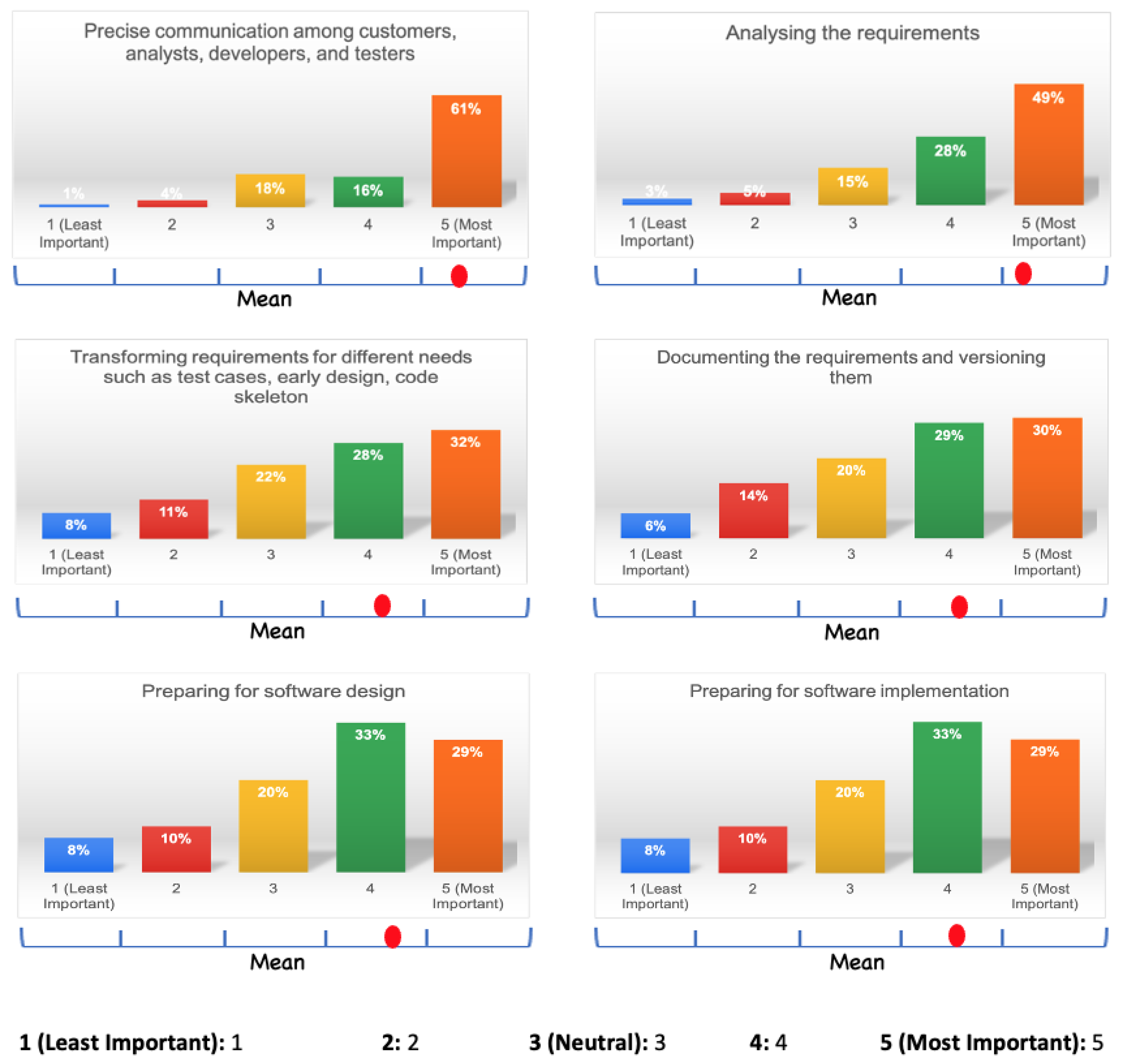

4.2. Participants’ Motivations for Specifying the Software Requirements

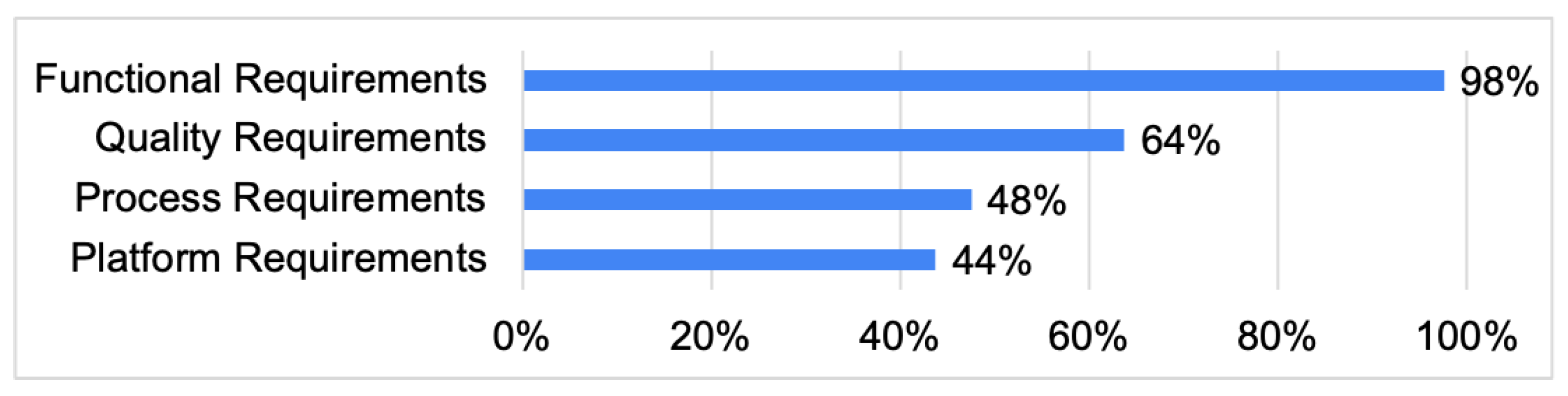

4.2.1. The Types of Requirements

4.2.2. The Requirements Specification Concerns

4.3. Requirements Gathering

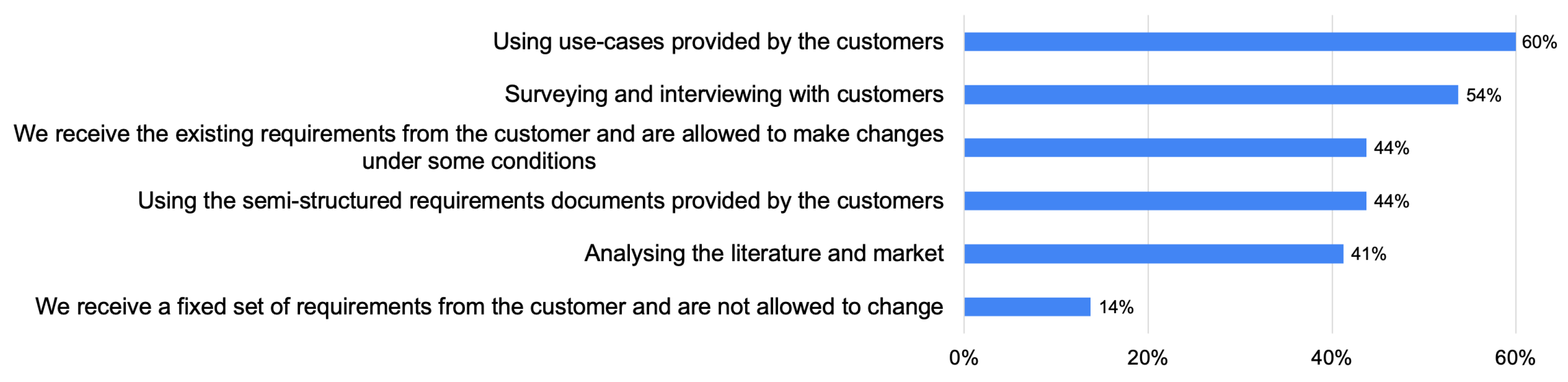

Techniques Used for Gathering Requirements

4.4. Requirements Specifications

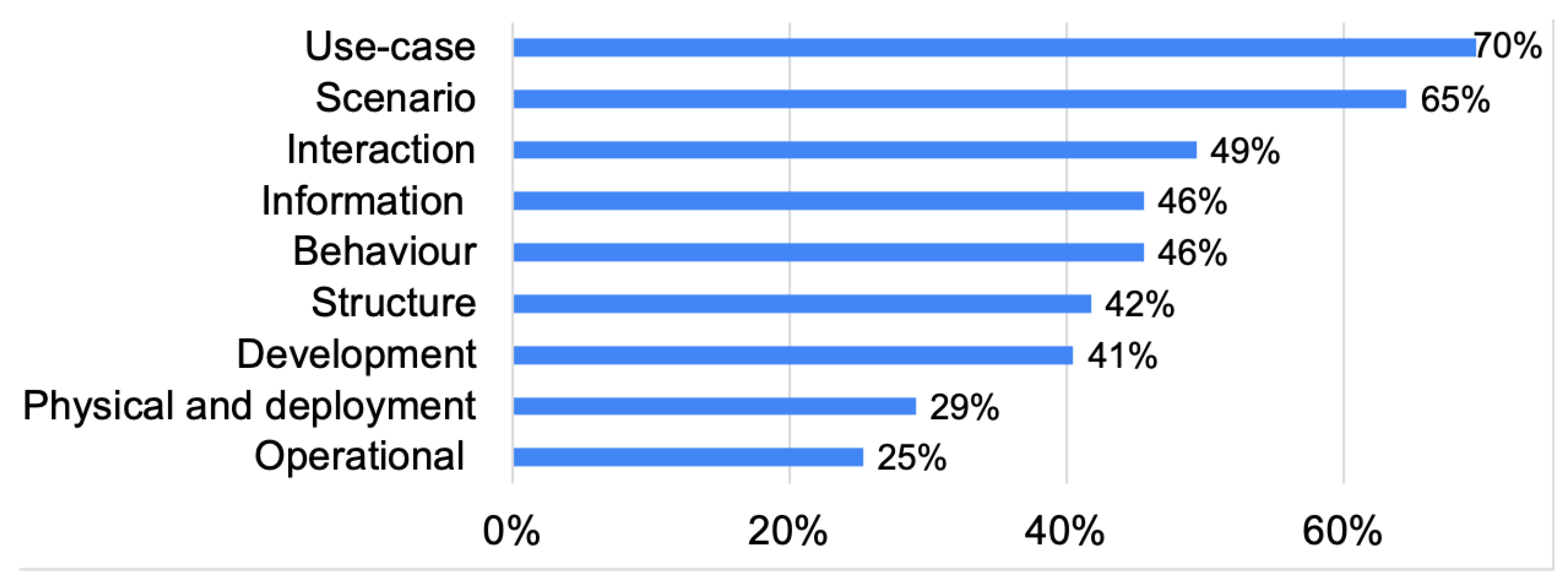

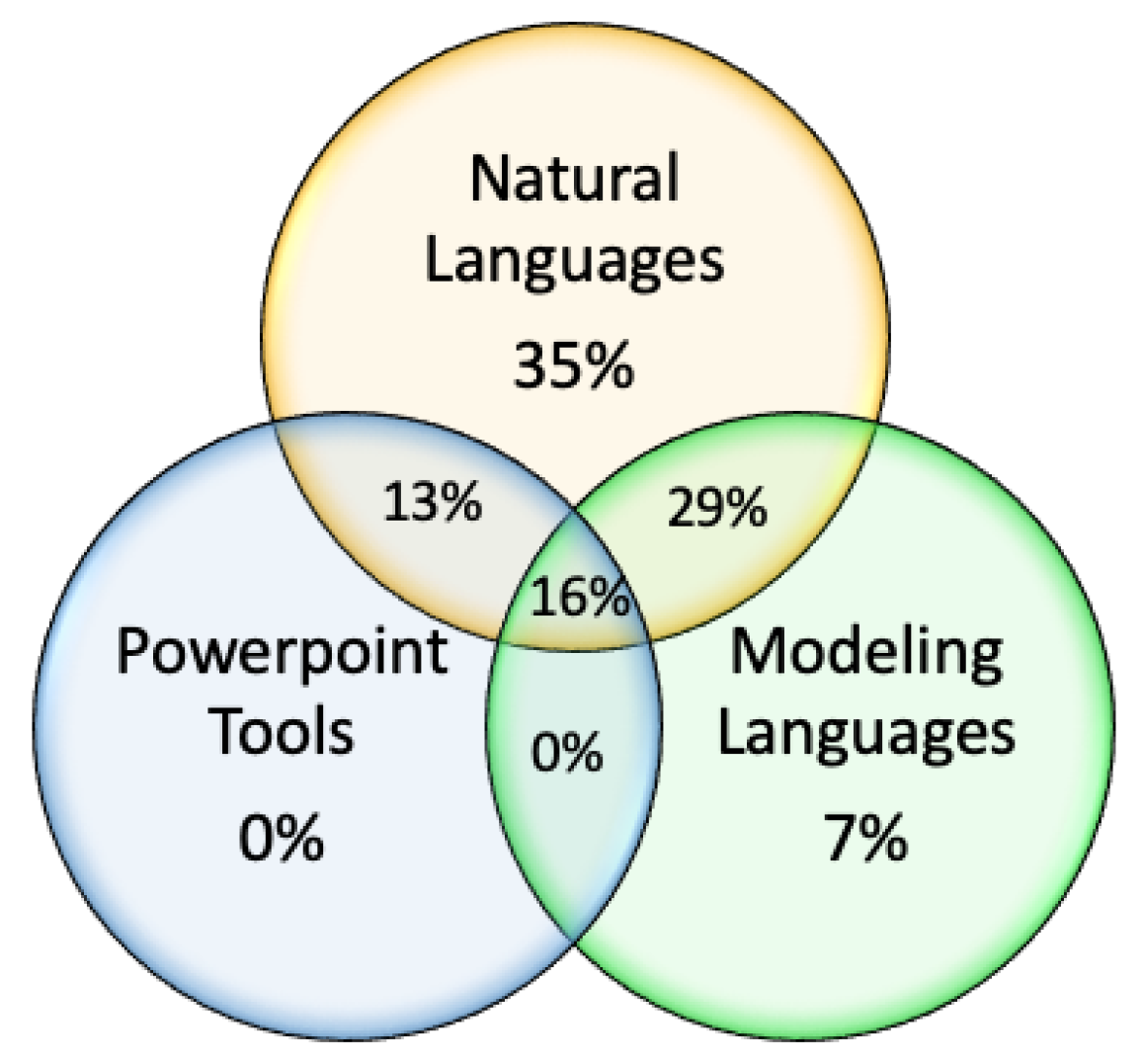

The Modeling Approaches Used for the Requirements Specifications

4.5. Customer Involvement

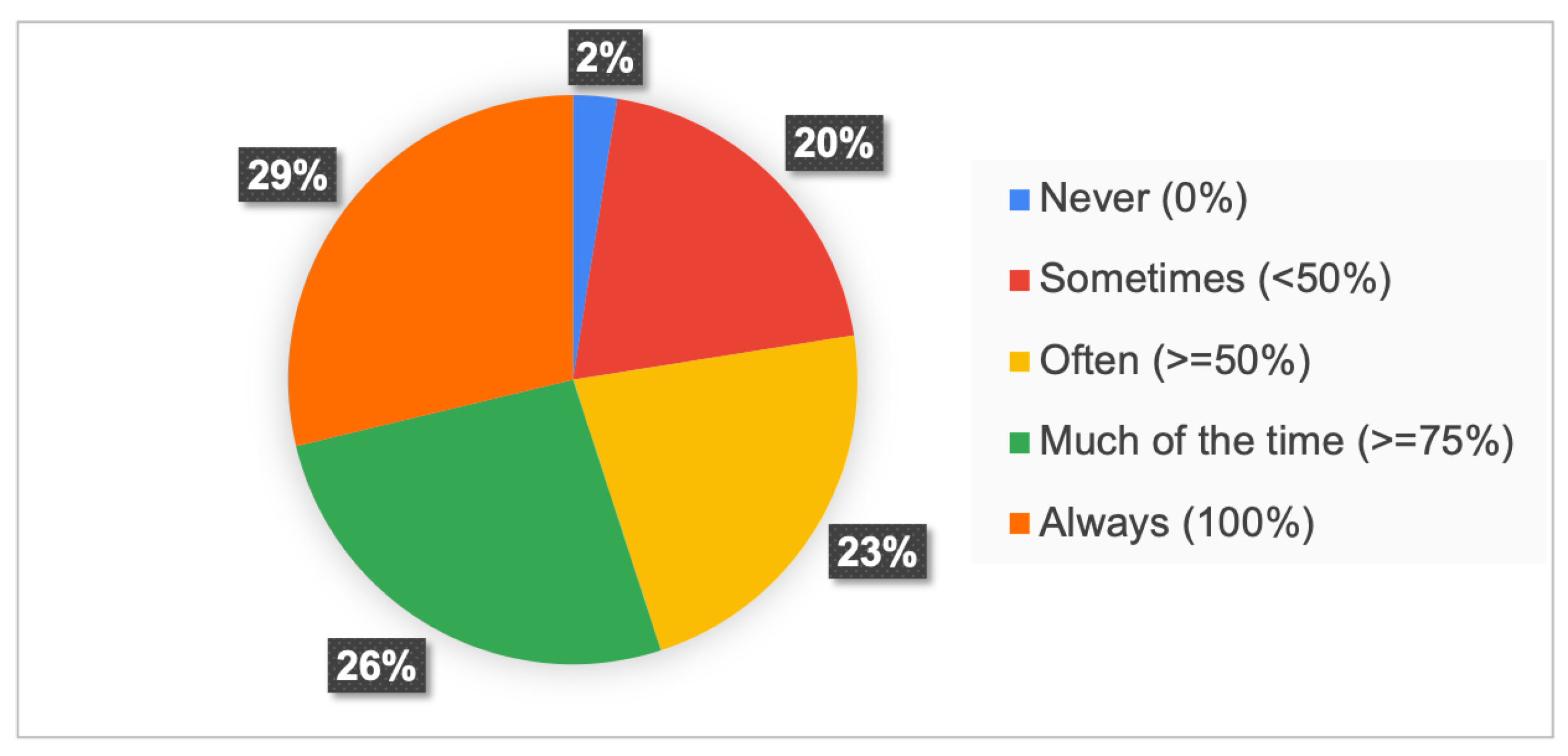

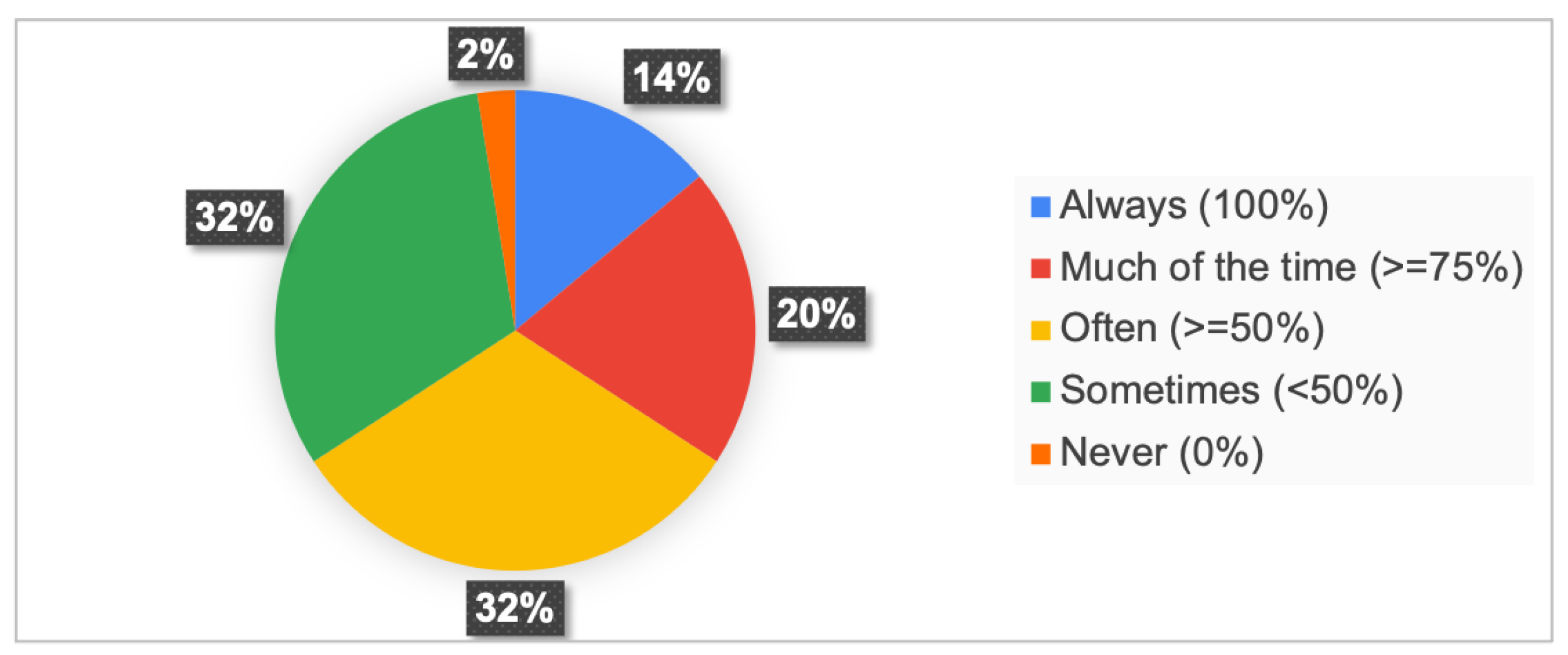

4.5.1. The Frequency of Involving Customers

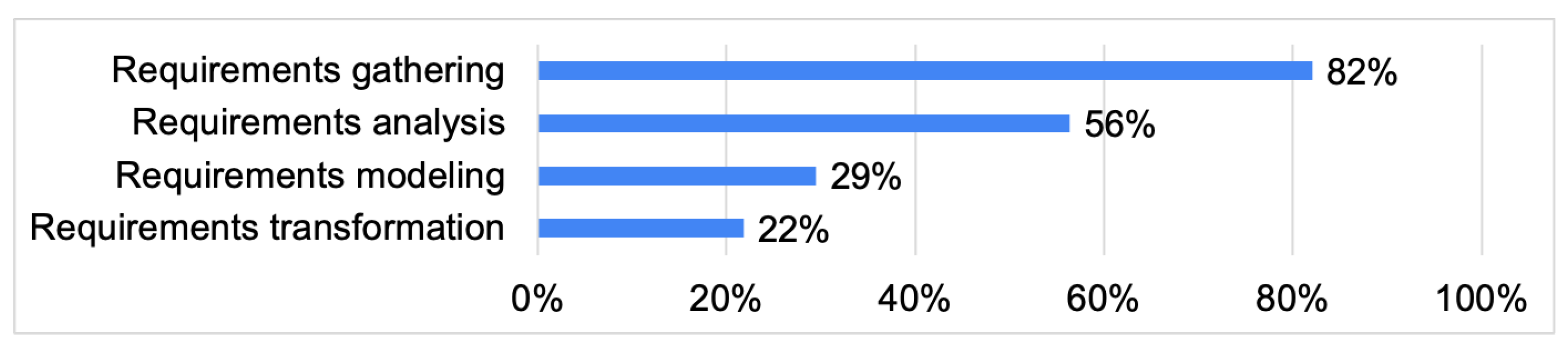

4.5.2. Customers’ Involvement in the Requirements Engineering Activities

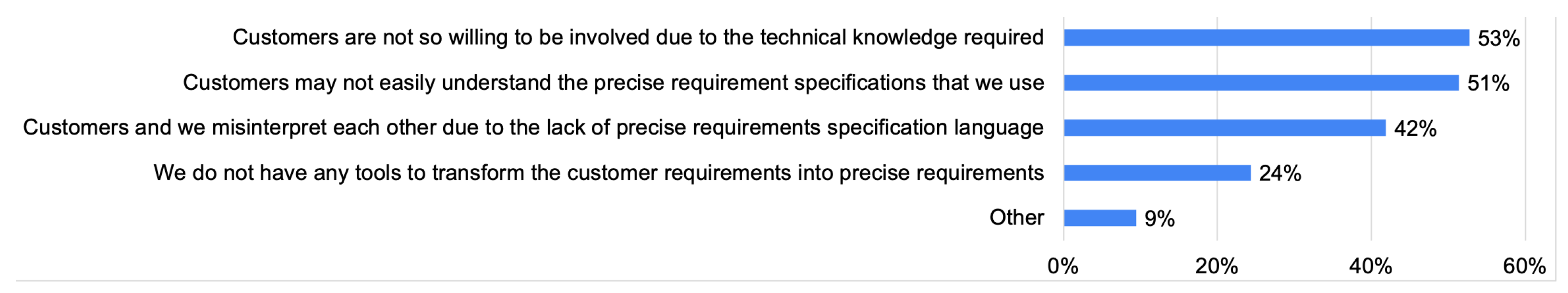

4.5.3. Participants’ Challenges in Involving Customers

4.6. Requirements Evolution

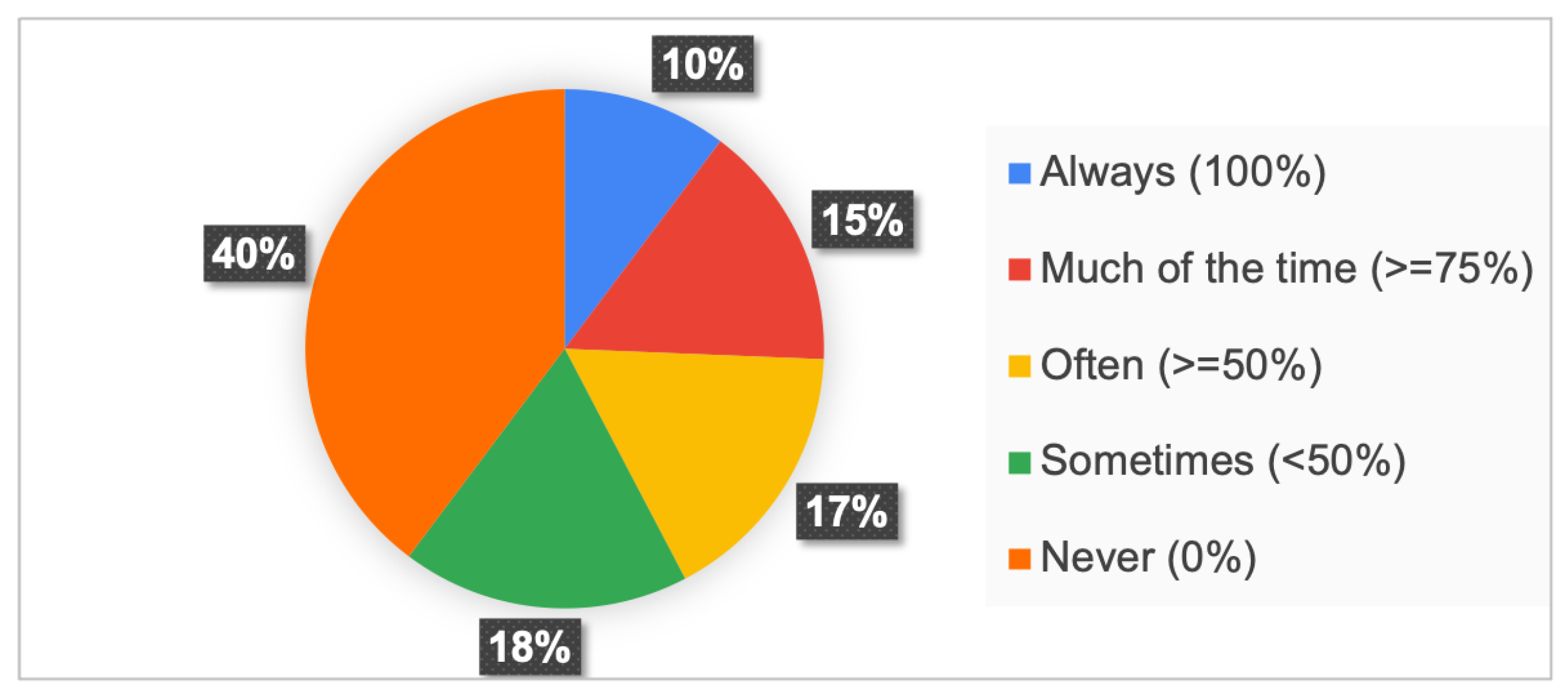

4.6.1. The Frequency of Changing the Software Requirements

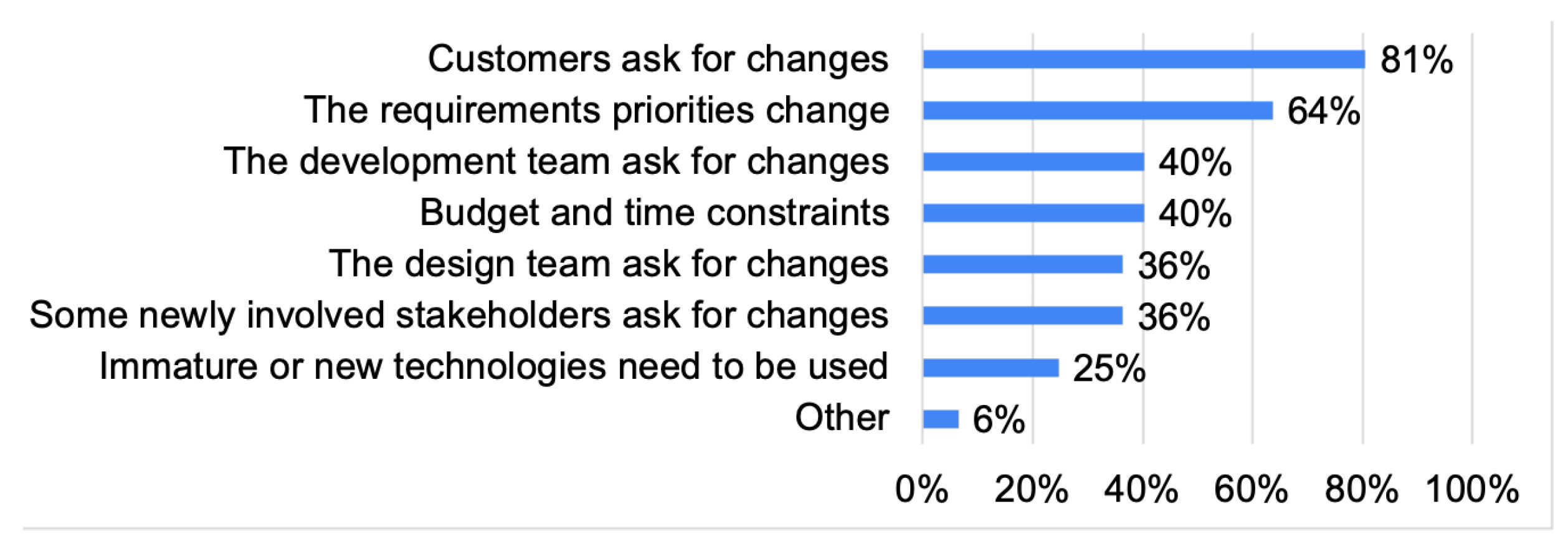

4.6.2. Participants’ Reasons for Changing the Software Requirements

4.6.3. Participants’ Frequency of Using Traceability Tools

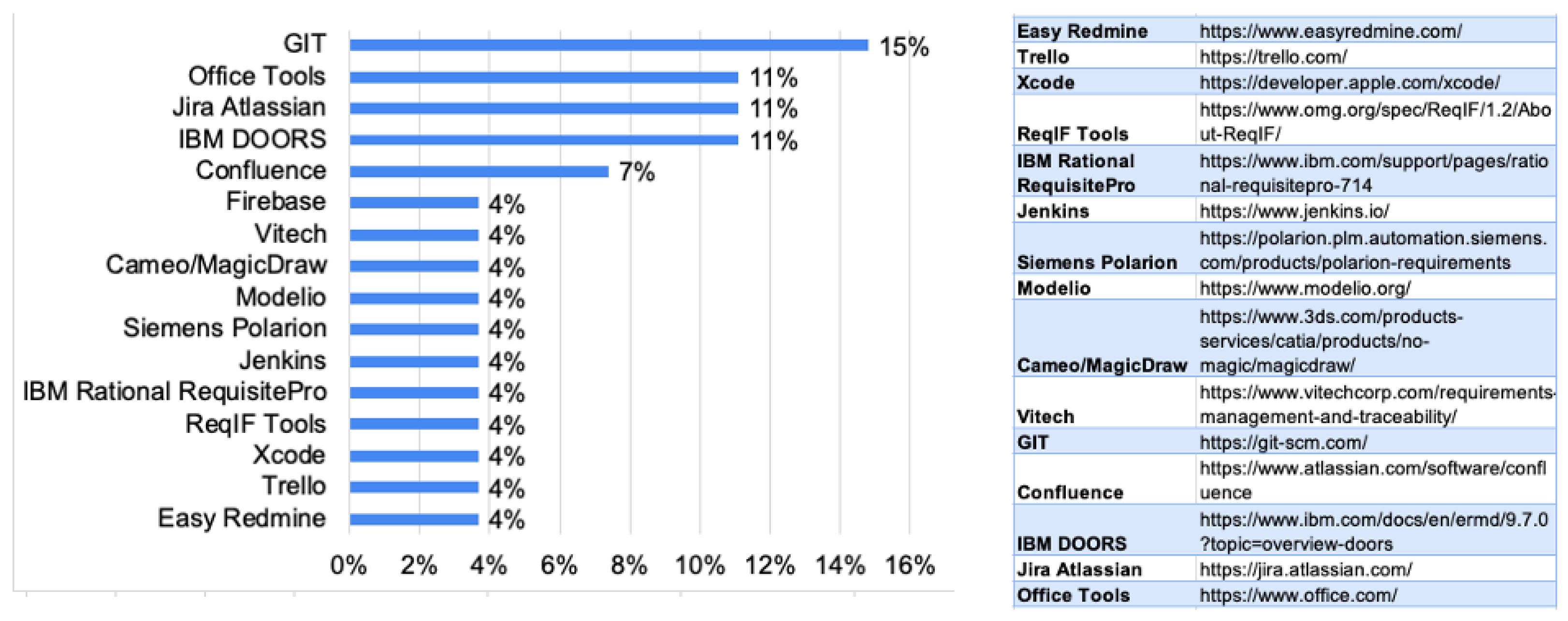

4.6.4. The Tools Used by the Participants for Tracing the Requirements Changes

4.7. Requirements Analysis

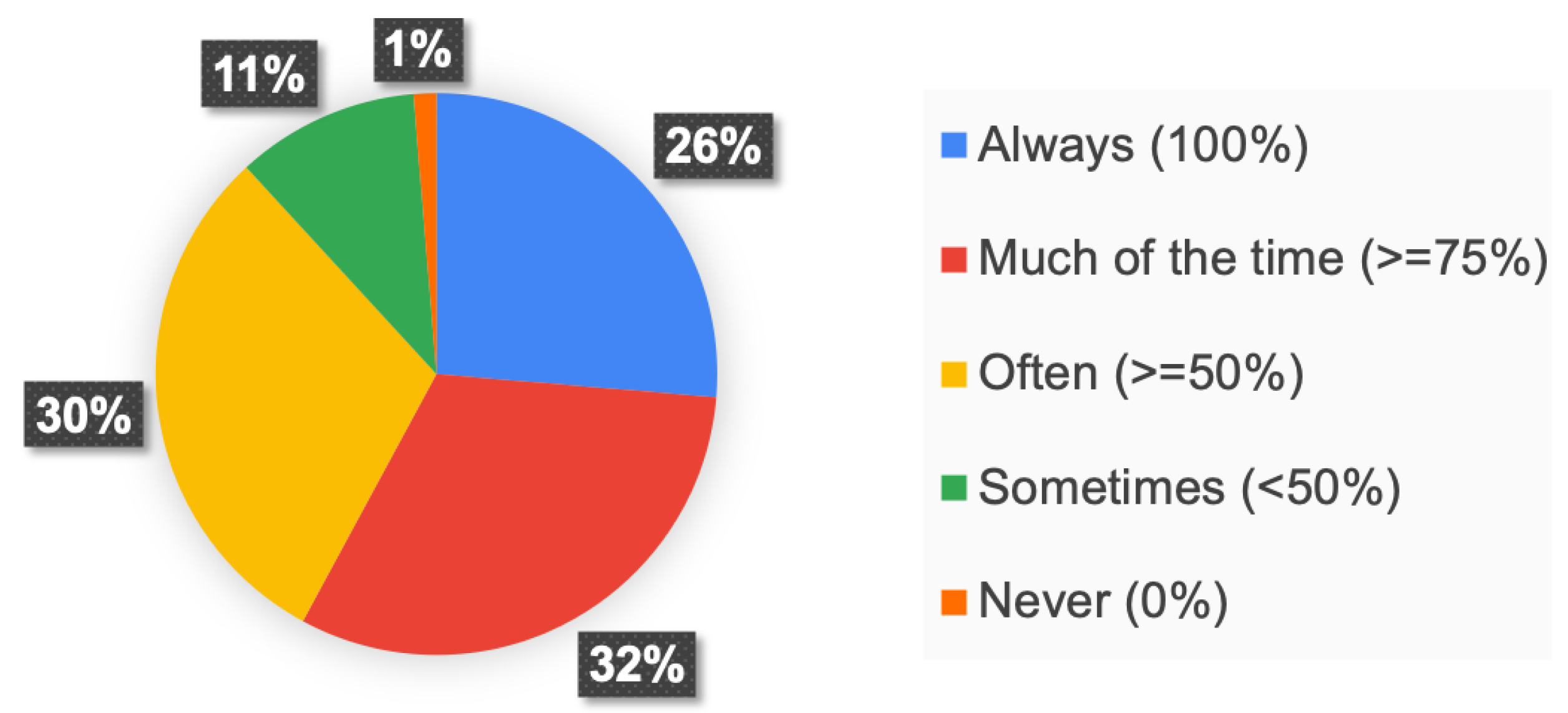

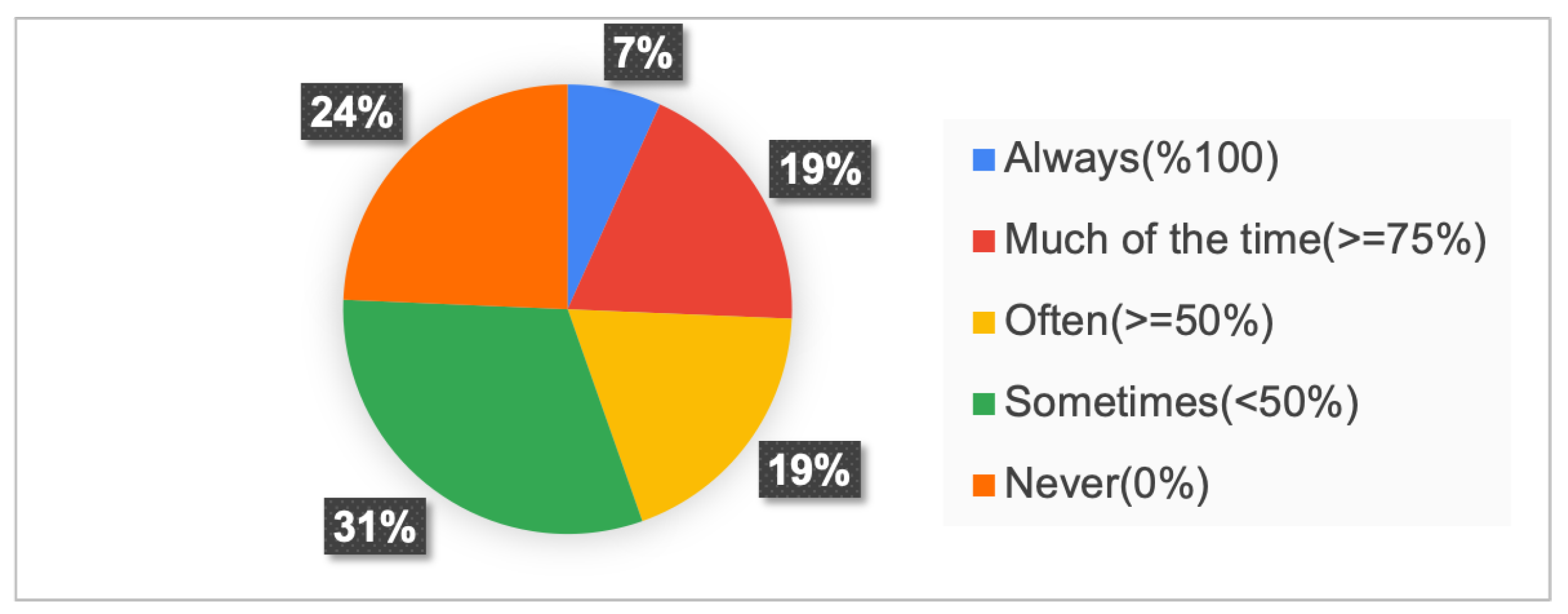

4.7.1. The Frequency of Analysing the Software Requirements

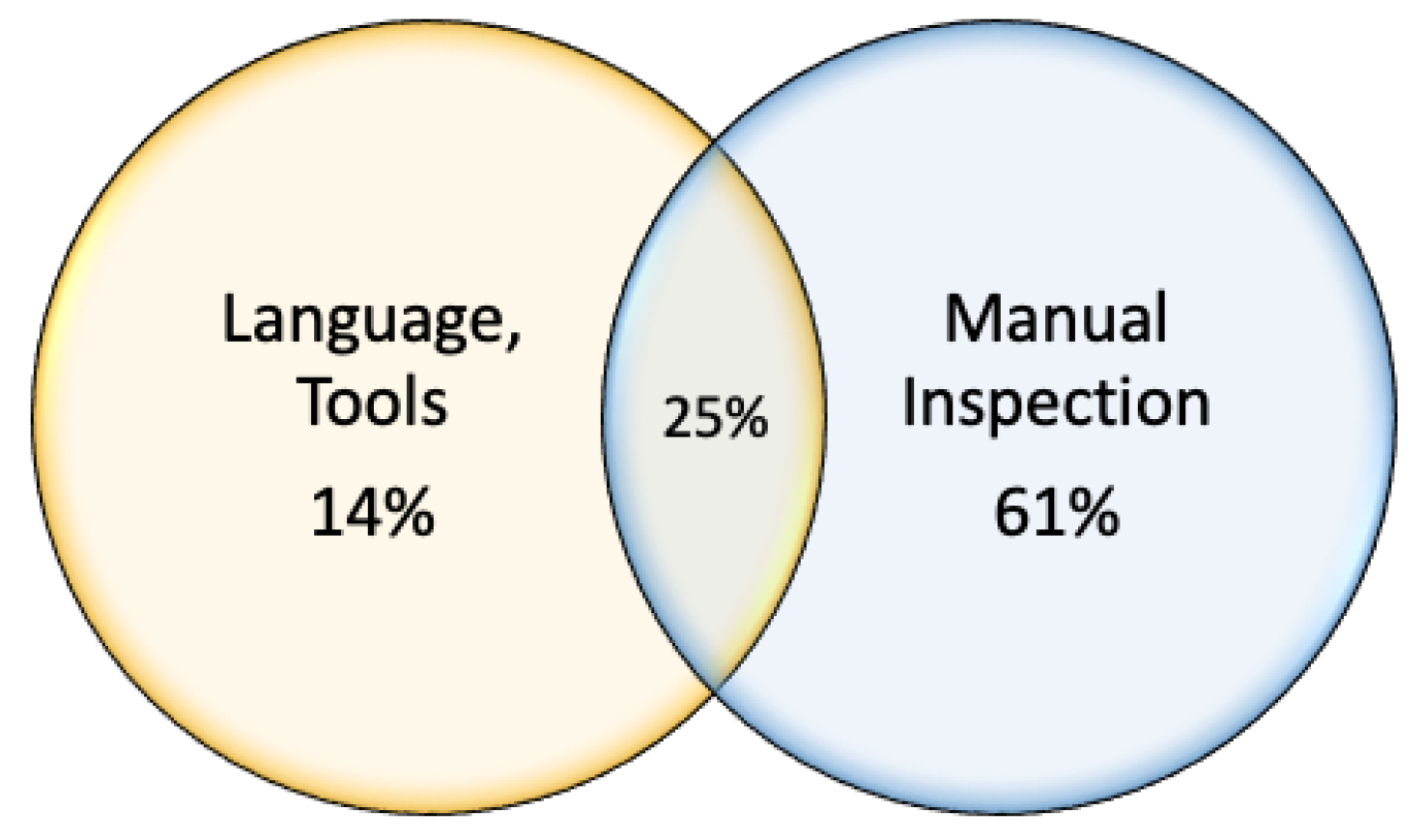

4.7.2. Analysis Techniques

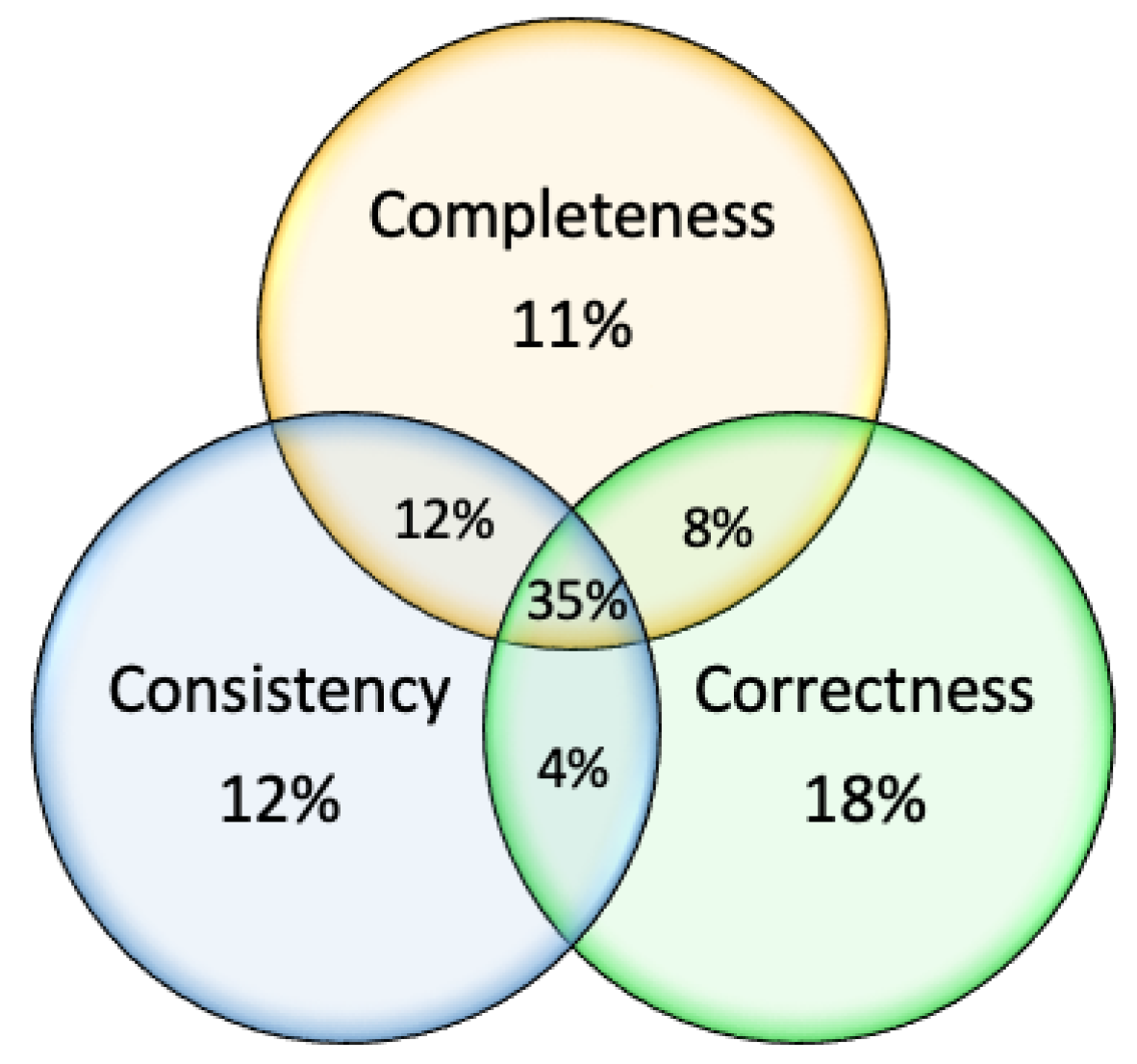

4.7.3. Analysis Goals

4.7.4. The Languages and Tools Used for the Requirements Analysis

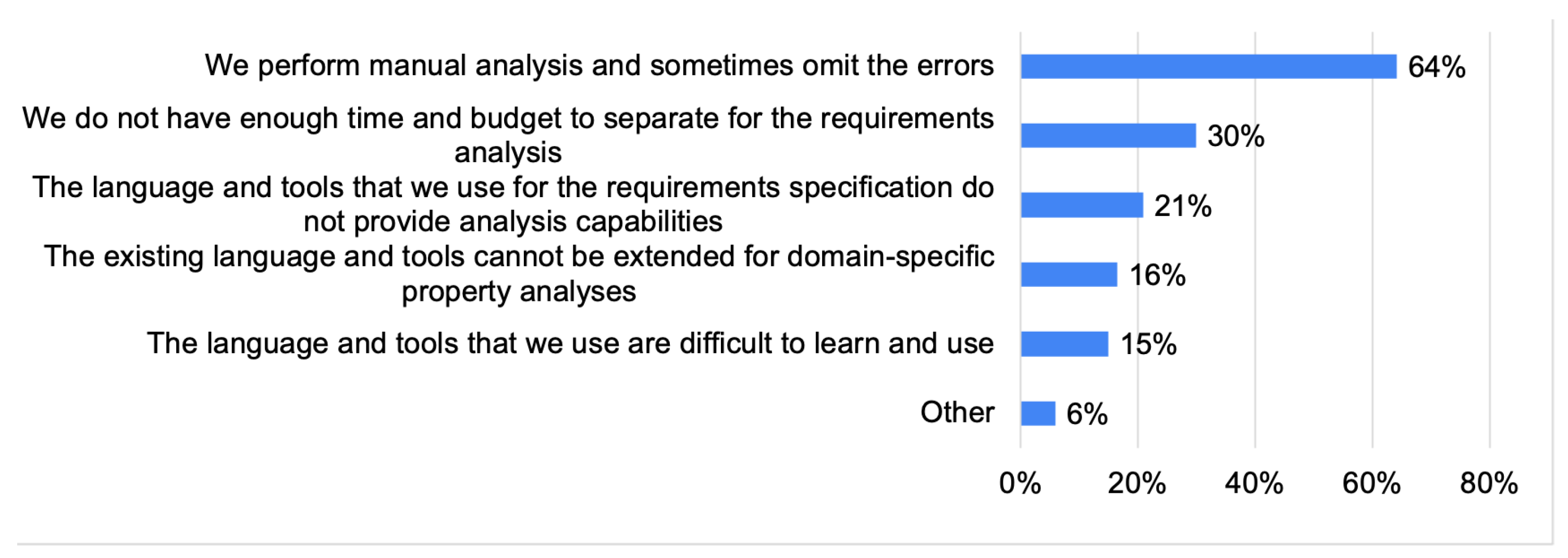

4.7.5. Participants’ Challenges in Analysing Software Requirements

4.8. Requirements Transformation

4.8.1. The Frequency of Transforming the Software Requirements

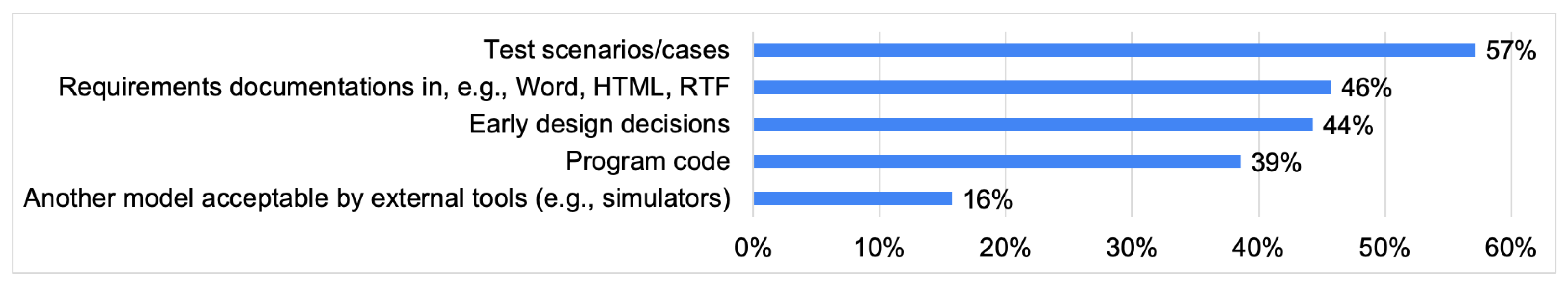

4.8.2. The Types of Artifacts to Be Produced

4.8.3. Techniques Used for the Software Requirements Transformations

4.8.4. The Languages and Tools Used for the Software Requirements Transformations

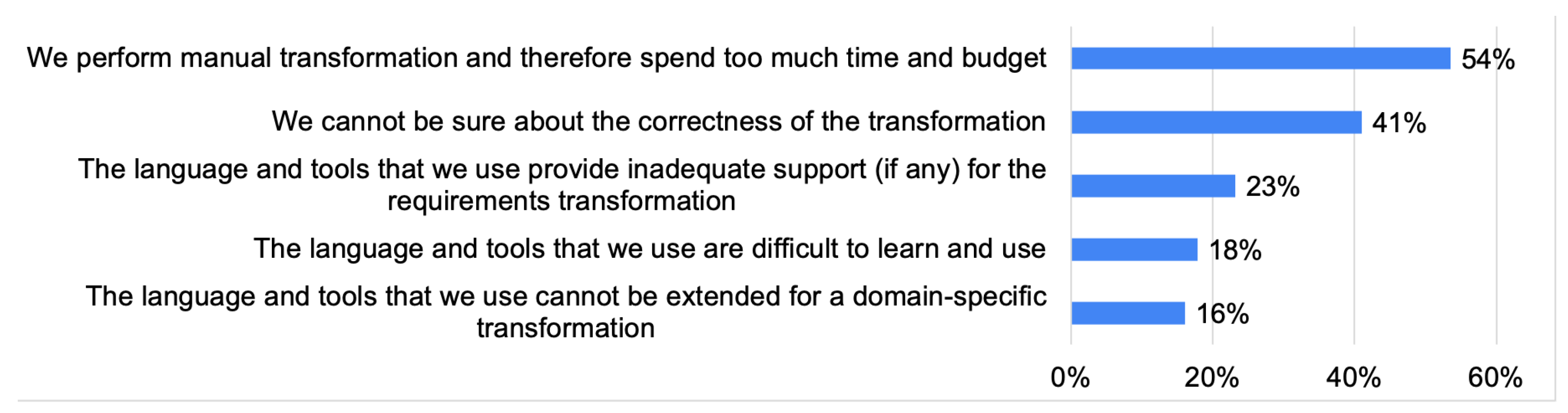

4.8.5. Participants’ Challenges in Transforming Software Requirements

5. Discussion

5.1. Summary of Findings

5.2. Lessons Learned

5.3. Threats to Validity

5.3.1. Internal Validity

5.3.2. External Validity

5.3.3. Construct Validity

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Fitzgerald, B. Software Crisis 2.0. Computer 2012, 45, 89–91.
2. Chaos Report 2015. Available online: https://www.standishgroup.com/sample_research_files/CHAOSReport2015-Final.pdf (accessed on 15 October 2022).
3. Dowson, M. The Ariane 5 software failure. ACM SIGSOFT Softw. Eng. Notes 1997, 22, 84.
4. Jackson, M. Seeing More of the World. IEEE Softw. 2004, 21, 83–85.
5. Leveson, N.G. The Therac-25: 30 Years Later. Computer 2017, 50, 8–11.
6. Davidson, C. A dark Knight for algos. Risk 2012, 25, 32–34.
7. British Airways System Problem. Available online: https://www.theguardian.com/world/2017/may/27/british-airways-system-problem-delays-heathrow (accessed on 12 January 2023).
8. Google Plus Data Leak. Available online: https://www.usatoday.com/story/tech/2018/12/11/google-plus-leak-social-network-shut-down-sooner-after-security-bug/2274296002/ (accessed on 12 January 2023).
9. Amazon Software Problem. Available online: https://fortune.com/2021/12/10/amazon-software-problem-cloud-outage-cause/ (accessed on 12 January 2023).
10. Hsia, P.; Davis, A.M.; Kung, D.C. Status Report: Requirements Engineering. IEEE Softw. 1993, 10, 75–79.
11. Cheng, B.H.C.; Atlee, J.M. Research Directions in Requirements Engineering. In Proceedings of the International Conference on Software Engineering, ICSE 2007, Workshop on the Future of Software Engineering, FOSE 2007, Minneapolis, MN, USA, 23–25 May 2007; Briand, L.C., Wolf, A.L., Eds.; IEEE Computer Society: Washington, DC, USA, 2007; pp. 285–303.
12. Nuseibeh, B.; Easterbrook, S.M. Requirements engineering: A roadmap. In Proceedings of the 22nd International Conference on Software Engineering, Future of Software Engineering Track, ICSE 2000, Limerick, Ireland, 4–11 June 2000; Finkelstein, A., Ed.; ACM: Boston, MA, USA, 2000; pp. 35–46.
13. Rodrigues da Silva, A.; Olsina, L. Special Issue on Requirements Engineering, Practice and Research. Appl. Sci. 2022, 12, 12197.
14. Lethbridge, T.C.; Laganière, R. Object-Oriented Software Engineering–Practical Software Development Using UML and Java; McGraw-Hill: New York, NY, USA, 2001.
15. Rumbaugh, J.; Jacobson, I.; Booch, G. Unified Modeling Language Reference Manual, 2nd ed.; Pearson Higher Education: New York, NY, USA, 2004.
16. Balmelli, L. An Overview of the Systems Modeling Language for Products and Systems Development. J. Obj. Tech. 2007, 6, 149–177.
17. Feiler, P.H.; Gluch, D.P.; Hudak, J.J. The Architecture Analysis & Design Language (AADL): An Introduction; Technical Report; Software Engineering Institute: Pittsburgh, PA, USA, 2006.
18. Boehm, B.W. Verifying and Validating Software Requirements and Design Specifications. IEEE Softw. 1984, 1, 75–88.
19. Zowghi, D.; Gervasi, V. On the interplay between consistency, completeness, and correctness in requirements evolution. Inf. Softw. Technol. 2003, 45, 993–1009.
20. Kos, T.; Mernik, M.; Kosar, T. A Tool Support for Model-Driven Development: An Industrial Case Study from a Measurement Domain. Appl. Sci. 2019, 9, 4553.
21. Lam, W.; Shankararaman, V. Requirements Change: A Dissection of Management Issues. In Proceedings of the 25th EUROMICRO ’99 Conference, Informatics: Theory and Practice for the New Millennium, Milan, Italy, 8–10 September 1999; IEEE Computer Society: Washington, DC, USA, 1999; pp. 2244–2251.
22. Jayatilleke, S.; Lai, R. A systematic review of requirements change management. Inf. Softw. Technol. 2018, 93, 163–185.
23. Kabbedijk, J.; Brinkkemper, S.; Jansen, S.; van der Veldt, B. Customer Involvement in Requirements Management: Lessons from Mass Market Software Development. In Proceedings of the RE 2009, 17th IEEE International Requirements Engineering Conference, Atlanta, GA, USA, 31 August–4 September 2009; IEEE Computer Society: Washington, DC, USA, 2009; pp. 281–286.
24. Saiedian, H.; Dale, R. Requirements engineering: Making the connection between the software developer and customer. Inf. Softw. Technol. 2000, 42, 419–428.
25. Franch, X.; Fernández, D.M.; Oriol, M.; Vogelsang, A.; Heldal, R.; Knauss, E.; Travassos, G.H.; Carver, J.C.; Dieste, O.; Zimmermann, T. How do Practitioners Perceive the Relevance of Requirements Engineering Research? An Ongoing Study. In Proceedings of the 25th IEEE International Requirements Engineering Conference, RE 2017, Lisbon, Portugal, 4–8 September 2017; Moreira, A., Araújo, J., Hayes, J., Paech, B., Eds.; IEEE Computer Society: Washington, DC, USA, 2017; pp. 382–387.
26. Fernández, D.M.; Wagner, S.; Kalinowski, M.; Felderer, M.; Mafra, P.; Vetrò, A.; Conte, T.; Christiansson, M.; Greer, D.; Lassenius, C.; et al. Naming the pain in requirements engineering–Contemporary problems, causes, and effects in practice. Empir. Softw. Eng. 2017, 22, 2298–2338.
27. Verner, J.M.; Cox, K.; Bleistein, S.J.; Cerpa, N. Requirements Engineering and Software Project Success: An industrial survey in Australia and the U.S. Australas. J. Inf. Syst. 2005, 13, 225–238.
28. Solemon, B.; Sahibuddin, S.; Ghani, A.A.A. Requirements Engineering Problems and Practices in Software Companies: An Industrial Survey. In Proceedings of the Advances in Software Engineering–International Conference on Advanced Software Engineering and Its Applications, ASEA 2009 Held as Part of the Future Generation Information Technology Conference, FGIT 2009, Jeju Island, Korea, 10–12 December 2009; Communications in Computer and Information Science; Slezak, D., Kim, T., Akingbehin, K., Jiang, T., Verner, J.M., Abrahão, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 59, pp. 70–77.
29. Kassab, M. The changing landscape of requirements engineering practices over the past decade. In Proceedings of the 2015 IEEE Fifth International Workshop on Empirical Requirements Engineering, EmpiRE 2015, Ottawa, ON, Canada, 24 August 2015; Berntsson-Svensson, R., Daneva, M., Ernst, N.A., Marczak, S., Madhavji, N.H., Eds.; IEEE Computer Society: Washington, DC, USA, 2015; pp. 1–8.
30. Juzgado, N.J.; Moreno, A.M.; Silva, A. Is the European Industry Moving toward Solving Requirements Engineering Problems? IEEE Softw. 2002, 19, 70–77.
31. Neill, C.J.; Laplante, P.A. Requirements Engineering: The State of the Practice. IEEE Softw. 2003, 20, 40–45.
32. Carrillo-de-Gea, J.M.; Nicolás, J.; Alemán, J.L.F.; Toval, A.; Ebert, C.; Vizcaíno, A. Requirements engineering tools: Capabilities, survey and assessment. Inf. Softw. Technol. 2012, 54, 1142–1157.
33. Liu, L.; Li, T.; Peng, F. Why Requirements Engineering Fails: A Survey Report from China. In Proceedings of the RE 2010, 18th IEEE International Requirements Engineering Conference, Sydney, New South Wales, Australia, 27 September–1 October 2010; IEEE Computer Society: Washington, DC, USA, 2010; pp. 317–322.
34. Nikula, U.; Sajaniemi, J.; Kälviäinen, H. A State-of-the-Practice Survey on Requirements Engineering in Small- and Medium-Sized Enterprises; Technical Report; Telecom Business Research Center Lappeenranta: Lappeenranta, Finland, 2000.
35. Memon, R.N.; Ahmad, R.; Salim, S.S. Problems in Requirements Engineering Education: A Survey. In Proceedings of the 8th International Conference on Frontiers of Information Technology, FIT ’10, Islamabad, Pakistan, 21–23 December 2010; Association for Computing Machinery: New York, NY, USA, 2010.
36. Jarzębowicz, A.; Sitko, N. Communication and Documentation Practices in Agile Requirements Engineering: A Survey in Polish Software Industry. In Proceedings of the Information Systems: Research, Development, Applications, Education–12th SIGSAND/PLAIS EuroSymposium 2019, Gdansk, Poland, 19 September 2019; Lecture Notes in Business Information Processing; Wrycza, S., Maslankowski, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; Volume 359, pp. 147–158.
37. Ågren, S.M.; Knauss, E.; Heldal, R.; Pelliccione, P.; Malmqvist, G.; Bodén, J. The impact of requirements on systems development speed: A multiple-case study in automotive. Requir. Eng. 2019, 24, 315–340.
38. Palomares, C.; Franch, X.; Quer, C.; Chatzipetrou, P.; López, L.; Gorschek, T. The state-of-practice in requirements elicitation: An extended interview study at 12 companies. Requir. Eng. 2021, 26, 273–299.
39. Groves, R.M.; Fowler, F.J., Jr.; Couper, M.P.; Lepkowski, J.M.; Singer, E.; Tourangeau, R. Survey Methodology, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2009.
40. Ozkaya, M. What is Software Architecture to Practitioners: A Survey. In Proceedings of the MODELSWARD 2016–Proceedings of the 4th International Conference on Model-Driven Engineering and Software Development, Rome, Italy, 19–21 February 2016; Hammoudi, S., Pires, L.F., Selic, B., Desfray, P., Eds.; SciTePress: Setubal, Portugal, 2016; pp. 677–686.
41. Ozkaya, M. Do the informal & formal software modeling notations satisfy practitioners for software architecture modeling? Inf. Softw. Technol. 2018, 95, 15–33.
42. Ozkaya, M.; Akdur, D. What do practitioners expect from the meta-modeling tools? A survey. J. Comput. Lang. 2021, 63, 101030.
43. Akdur, D.; Garousi, V.; Demirörs, O. A survey on modeling and model-driven engineering practices in the embedded software industry. J. Syst. Archit. 2018, 91, 62–82.
44. Molléri, J.S.; Petersen, K.; Mendes, E. Survey Guidelines in Software Engineering: An Annotated Review. In Proceedings of the 10th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, ESEM 2016, Ciudad Real, Spain, 8–9 September 2016; ACM: Boston, MA, USA, 2016; pp. 58:1–58:6.
45. DFDS. Available online: https://www.dfds.com.tr (accessed on 1 October 2022).
46. Rozanski, N.; Woods, E. Software Systems Architecture: Working with Stakeholders Using Viewpoints and Perspectives, 2nd ed.; Addison-Wesley Professional: Westford, MA, USA, 2011.
47. Taylor, R.N.; Medvidovic, N.; Dashofy, E.M. Software Architecture–Foundations, Theory, and Practice; Wiley: Hoboken, NJ, USA, 2010.
48. Popping, R. Analyzing Open-ended Questions by Means of Text Analysis Procedures. Bull. Sociol. Methodol. De Méthodol. Sociol. 2015, 128, 23–39.
49. Fricker, R.D. Sampling methods for web and e-mail surveys. In The SAGE Handbook of Online Research Methods; SAGE: Thousand Oaks, CA, USA, 2008; pp. 195–216.
50. Raw Data of Our Survey on the Practitioners’ Perspectives towards Requirements Engineering. Available online: https://zenodo.org/record/6754676#.Y-swTa1ByUk (accessed on 15 October 2022).
51. Kelly, S.; Lyytinen, K.; Rossi, M. MetaEdit+ A Fully Configurable Multi-User and Multi-Tool CASE and CAME Environment. In Seminal Contributions to Information Systems Engineering, 25 Years of CAiSE; Bubenko, J., Krogstie, J., Pastor, O., Pernici, B., Rolland, C., Sølvberg, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 109–129.
52. QVscribe. Available online: https://qracorp.com (accessed on 15 October 2022).
53. Vitech. Available online: https://www.vitechcorp.com (accessed on 15 October 2022).
54. Jackson, D. Alloy: A lightweight object modelling notation. ACM Trans. Softw. Eng. Methodol. 2002, 11, 256–290.
55. Lamport, L.; Matthews, J.; Tuttle, M.R.; Yu, Y. Specifying and verifying systems with TLA+. In Proceedings of the 10th ACM SIGOPS European Workshop, Saint-Emilion, France, 1 July 2002; Muller, G., Jul, E., Eds.; ACM: Boston, MA, USA, 2002; pp. 45–48.
56. Plat, N.; Larsen, P.G. An overview of the ISO/VDM-SL standard. ACM SIGPLAN Not. 1992, 27, 76–82.
57. Mairiza, D.; Zowghi, D.; Nurmuliani, N. An investigation into the notion of non-functional requirements. In Proceedings of the 2010 ACM Symposium on Applied Computing (SAC), Sierre, Switzerland, 22–26 March 2010; Shin, S.Y., Ossowski, S., Schumacher, M., Palakal, M.J., Hung, C., Eds.; ACM: Boston, MA, USA, 2010; pp. 311–317.
58. Kopczynska, S.; Ochodek, M.; Nawrocki, J.R. On Importance of Non-functional Requirements in Agile Software Projects–A Survey. In Integrating Research and Practice in Software Engineering; Studies in Computational Intelligence; Jarzabek, S., Poniszewska-Maranda, A., Madeyski, L., Eds.; Springer: Berlin/Heidelberg, Germany, 2020; Volume 851, pp. 145–158.
59. Bajpai, V.; Gorthi, R.P. On non-functional requirements: A survey. In Proceedings of the 2012 IEEE Students’ Conference on Electrical, Electronics and Computer Science, Bhopal, India, 1–2 March 2012; pp. 1–4.
60. AL-Ta’ani, R.H.; Razali, R. Prioritizing Requirements in Agile Development: A Conceptual Framework. Procedia Technol. 2013, 11, 733–739.
61. Erata, F.; Challenger, M.; Tekinerdogan, B.; Monceaux, A.; Tüzün, E.; Kardas, G. Tarski: A Platform for Automated Analysis of Dynamically Configurable Traceability Semantics. In Proceedings of the Symposium on Applied Computing, SAC ’17, Marrakech, Morocco, 3–7 April 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1607–1614.
62. Erata, F.; Goknil, A.; Tekinerdogan, B.; Kardas, G. A Tool for Automated Reasoning about Traces Based on Configurable Formal Semantics. In Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering, ESEC/FSE 2017, Paderborn, Germany, 4–8 September 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 959–963.
63. Malavolta, I.; Lago, P.; Muccini, H.; Pelliccione, P.; Tang, A. What Industry Needs from Architectural Languages: A Survey. IEEE Trans. Softw. Eng. 2013, 39, 869–891.
64. Ramesh, B.; Cao, L.; Baskerville, R.L. Agile requirements engineering practices and challenges: An empirical study. Inf. Syst. J. 2010, 20, 449–480.
65. Akdur, D. Modeling knowledge and practices in the software industry: An exploratory study of Turkey-educated practitioners. J. Comput. Lang. 2021, 66, 101063.
66. Eysholdt, M.; Behrens, H. Xtext: Implement your language faster than the quick and dirty way. In Proceedings of the SPLASH/OOPSLA Companion, Reno, NV, USA, 17–21 October 2010; Cook, W.R., Clarke, S., Rinard, M.C., Eds.; ACM: Boston, MA, USA, 2010; pp. 307–309.
67. Viyović, V.; Maksimović, M.; Perisić, B. Sirius: A rapid development of DSM graphical editor. In Proceedings of the IEEE 18th International Conference on Intelligent Engineering Systems INES 2014, Tihany, Hungary, 3–5 July 2014; pp. 233–238.
68. Pech, V.; Shatalin, A.; Voelter, M. JetBrains MPS as a Tool for Extending Java. In Proceedings of the 2013 International Conference on Principles and Practices of Programming on the Java Platform: Virtual Machines, Languages, and Tools, PPPJ ’13, Stuttgart, Germany, 11–13 September 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 165–168.
69. Kärnä, J.; Tolvanen, J.P.; Kelly, S. Evaluating the use of domain-specific modeling in practice. In Proceedings of the 9th OOPSLA Workshop on Domain-Specific Modeling, Orlando, FL, USA, 25–29 October 2009.
70. Nokia Case Study–MetaEdit+ Revolutionized the Way Nokia Develops Mobile Phone Software. Available online: https://www.metacase.com/papers/MetaEdit_in_Nokia.pdf (accessed on 14 July 2022).
71. Siau, K.; Loo, P. Identifying Difficulties in Learning UML. Inf. Syst. Manag. 2006, 23, 43–51.
72. Simons, A.J.H.; Graham, I. 30 Things that Go Wrong in Object Modelling with UML 1.3. In Behavioral Specifications of Businesses and Systems; The Kluwer International Series in Engineering and Computer Science; Kilov, H., Rumpe, B., Simmonds, I., Eds.; Springer: Berlin/Heidelberg, Germany, 1999; Volume 523, pp. 237–257.
73. Ozkaya, M. Are the UML modelling tools powerful enough for practitioners? A literature review. IET Softw. 2019, 13, 338–354.
74. Holzmann, G.J. The SPIN Model Checker–Primer and Reference Manual; Addison-Wesley: Boston, MA, USA, 2004.
75. Feiler, P.H.; Lewis, B.A.; Vestal, S. The SAE Architecture Analysis & Design Language (AADL): A Standard for Engineering Performance Critical Systems. In Proceedings of the IEEE International Symposium on Intelligent Control, Toulouse, France, 25–29 April 2006; pp. 1206–1211.
76. Bolognesi, T.; Brinksma, E. Introduction to the ISO Specification Language LOTOS. Comput. Netw. ISDN Syst. 1987, 14, 25–59.
77. Wohlin, C.; Runeson, P.; Höst, M.; Ohlsson, M.C.; Regnell, B. Experimentation in Software Engineering; Springer: Berlin/Heidelberg, Germany, 2012.
78. Siegmund, J.; Siegmund, N.; Apel, S. Views on Internal and External Validity in Empirical Software Engineering. In Proceedings of the 37th IEEE/ACM International Conference on Software Engineering, ICSE 2015, Florence, Italy, 16–24 May 2015; Bertolino, A., Canfora, G., Elbaum, S.G., Eds.; IEEE Computer Society: Washington, DC, USA, 2015; Volume 1, pp. 9–19.
79. Shull, F.; Singer, J.; Sjøberg, D.I.K. (Eds.) Guide to Advanced Empirical Software Engineering; Springer: Berlin/Heidelberg, Germany, 2008.
80. AITOC ITEA Project. Available online: https://itea4.org/project/aitoc.html (accessed on 15 October 2022).

| Similar Works | Year | Survey Summary | Country | # of Respondents | Practitioners’ Motivation | Activities | Languages, Tools | Challenges | Customers |
|---|---|---|---|---|---|---|---|---|---|
| Franch et al. [25] | 2017 | Online survey; practitioners’ thoughts on 435 research papers | General | 153 | No | No | No | No | No |
| Fernández et al. [26] | 2017 | Company survey; practitioners’ challenges; gathering, specifying, and changing requirements | General | 228 | No | Ga, spec, evol | No | Yes | Yes |
| Verner et al. [27] | 2005 | Survey; practitioners’ thoughts; impact of project management on requirements quality | U.S., Australia | 143 | No | No | No | No | Yes |
| Solemon et al. [28] | 2009 | Survey; practitioners’ thoughts; challenges (internal, external); CMMI impact on companies | Malaysia | 64 | No | No | No | Yes | Yes |
| Kassab [29] | 2015 | Three surveys; usage of techniques and tools and its changes over time | U.S. | 500 | No | Ga, spec, ana, man | Yes | No | No |
| Juzgado et al. [30] | 2002 | Survey; practitioners’ experiences; adopting new techniques, sources of requirements, dependability | General | 11* | No | Ga | No | Yes | No |
| Neill et al. [31] | 2003 | Online survey; requirements gathering and specification; tools, notations | General | 194 | No | Ga, spec | Yes | No | No |
| Carrillo de Gea et al. [32] | 2012 | Survey; tool vendors; tool capabilities | General | 38 | No | No | No | No | No |
| Liu et al. [33] | 2010 | Online survey; success and failure scenarios | China | 377 | No | Ga, spec | Yes | No | Yes |
| Nikula et al. [34] | 2000 | Interviews; techniques, tools; practitioners’ needs; industry and academia | General | 12* | Yes | No | Yes | No | No |
| Memon et al. [35] | 2010 | Student survey; challenges in learning requirements engineering | Malaysia | 48 | No | No | Yes | Yes | No |
| Jarzębowicz et al. [36] | 2019 | Online survey; practitioners’ thoughts; requirements engineering and agile development | Poland | 69 | No | Spec | Yes | No | Yes |
| Ågren et al. [37] | 2019 | Interviews; automotive domain; practitioners’ thoughts; impact of requirements engineering on automotive software | General | 20 | No | No | No | No | No |
| Palomares et al. [38] | 2021 | Interviews; practitioners’ thoughts; roles involved in gathering; gathering challenges | Sweden | 24 | No | Ga | Yes | Yes | Yes |
| Our Work | 2023 | Survey; practitioners’ thoughts; several activities; techniques, tools, and languages used in the activities; activity challenges; customers | General | 84 | Yes | Ga, spec, ana, trac, evol, tran | Yes | Yes | Yes |

| Research Question | Survey Question | Multiple Answers | Free Text | Single Answer |
|---|---|---|---|---|
| Profile | 1- Which industry(ies) do you work in? | X | X | |
| Profile | 2- What is (are) your current job position(s)? | X | X | |
| Profile | 3- What is your bachelor degree? | X | X | |
| Profile | 4- How many years of experience do you have in software development? | | | X |
| Profile | 5- Which software process models do you use for developing software systems? | X | X | |
| Profile | 6- Do you specify the requirements of any software system to be developed? | | | X |
| RQ1 | 7- Please rate the importance of the following motivations for the requirements specification for you. | | | X |
| RQ1 | 8- Which type(s) of requirements do you focus on? | X | X | |
| RQ1 | 9- Which of the following concerns are important for you in the requirements specification? | X | X | |
| RQ2 | 10- How do you gather the requirements to be specified? | X | X | |
| RQ2 | 11- Which approach(es) do you use for specifying the requirements? | X | X | |
| RQ4 | 12- How often do you involve customers in the requirements specification process? | | | X |
| RQ4 | 13- What aspect(s) of requirements engineering do you involve the customers in? | X | X | |
| RQ4 | 14- What are the challenges that you face while involving customers in the requirements specification? | X | X | |
| RQ2 | 15- How often do you need to change the requirements during the software development lifecycle? | | | X |
| RQ1 | 16- What makes you change requirements? | X | X | |
| RQ2 | 17- Do you use any traceability tools for determining the affected parts (e.g., design, code, test artefacts) upon changing a requirement? | | | X |
| RQ2 | 18- If you use any tools for changing and tracing requirements, please tell us which tools you use. | | X | |
| RQ2 | 19- How often do you analyse the requirements during the software development lifecycle? | | | X |
| RQ2 | 20- How do you analyse the requirements? | X | X | |
| RQ2 | 21- Which of the following analysis goals are important for you? | | | X |
| RQ2 | 22- If you use languages and tools for the requirements analysis, please give the language and tool names and describe how you use them for the requirements analysis. | | X | |
| RQ3 | 23- What are the challenges you face while analysing the requirements? | X | X | |
| RQ2 | 24- How often do you transform the requirements into some other artefact? | | | X |
| RQ2 | 25- What would you like to produce with the requirements transformation? | X | X | |
| RQ2 | 26- How do you perform the requirements transformation? | X | X | |
| RQ2 | 27- If you use languages and tools for the requirements transformation, please describe which language(s) and tool(s) you use and how. | | X | |
| RQ3 | 28- What are the challenges that you face while transforming the requirements? | X | X | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ozkaya, M.; Akdur, D.; Toptani, E.C.; Kocak, B.; Kardas, G. Practitioners’ Perspectives towards Requirements Engineering: A Survey. Systems 2023, 11, 65. https://doi.org/10.3390/systems11020065


Chicago/Turabian style: Ozkaya, Mert, Deniz Akdur, Etem Cetin Toptani, Burak Kocak, and Geylani Kardas. 2023. "Practitioners’ Perspectives towards Requirements Engineering: A Survey" Systems 11, no. 2: 65. https://doi.org/10.3390/systems11020065