Can Nonliterates Interact as Easily as Literates with a Virtual Reality System? A Usability Evaluation of VR Interaction Modalities

Abstract

1. Introduction

- RQ 1: Is the designed educational application usable for the nonliterate population?

- H1: The designed educational application will be usable by the nonliterate population.

- RQ 2: If so, how easy is the application to use for nonliterate users compared with the two other groups?

- H2: The designed VR application will be as easy to use for nonliterate users as it is for literate users.

- RQ 3: Which interaction modality is more usable by nonliterate users?

- H3: Nonliterate people will find hands to be more usable than controllers because of their intuitive, reality-based interaction style.

2. Literature Review

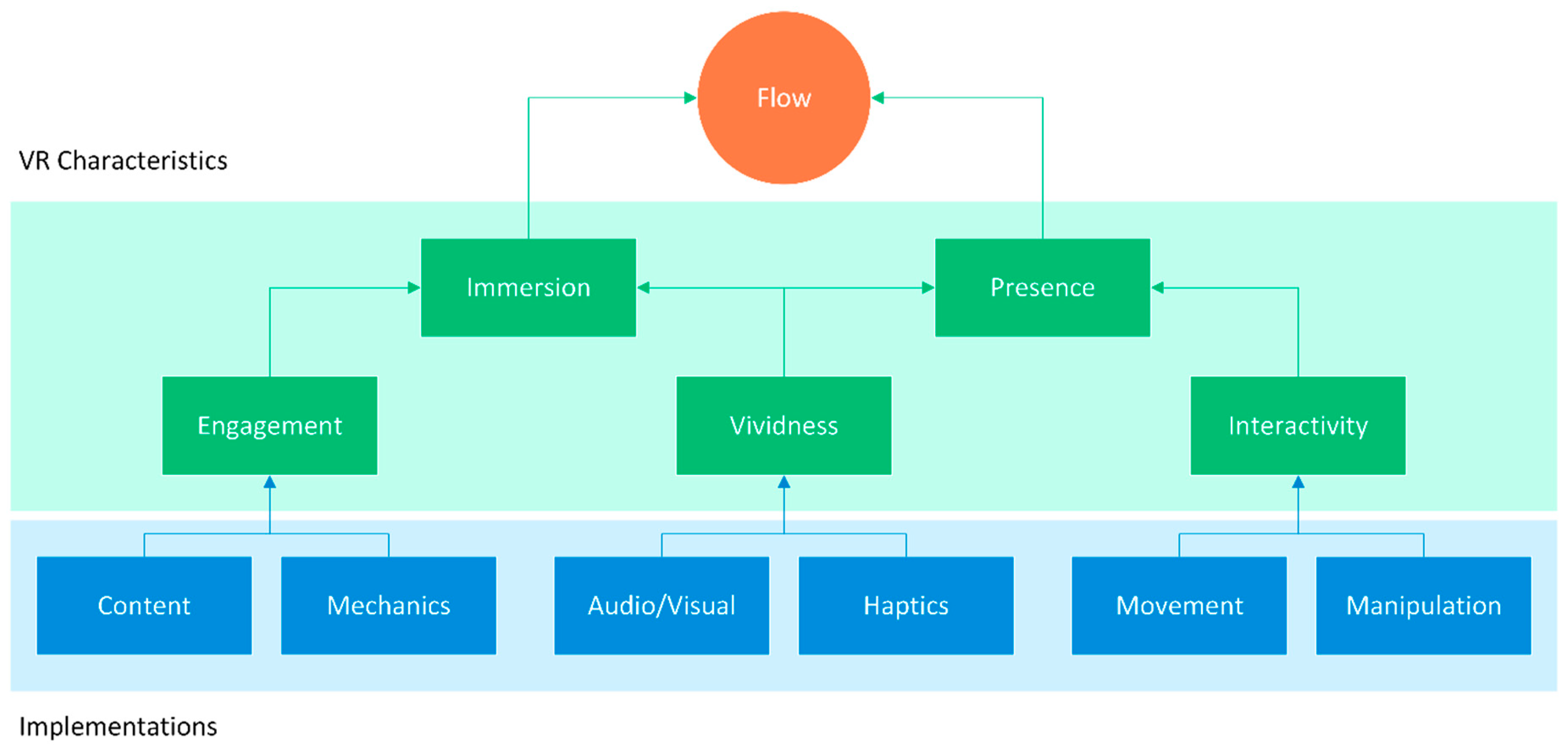

2.1. Virtual Reality

2.2. Interactivity

2.3. VR Sickness

2.4. Characteristics of Nonliterate Adults

3. VR Prototype Design

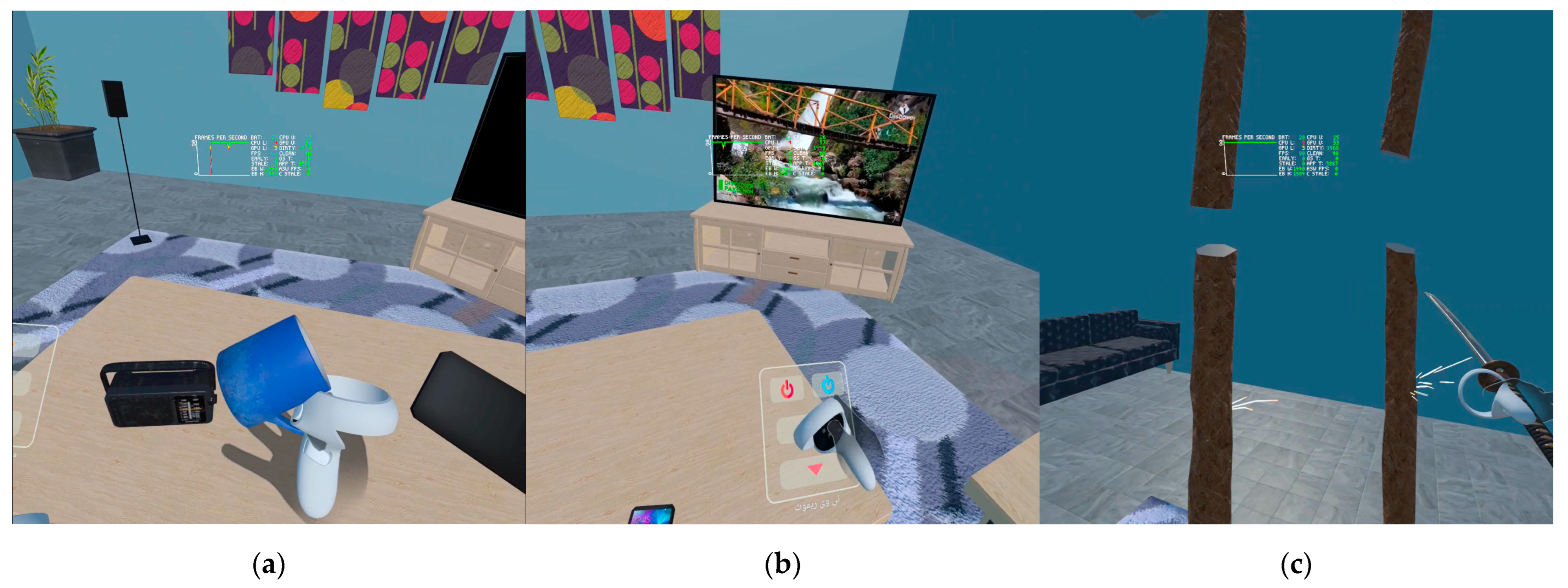

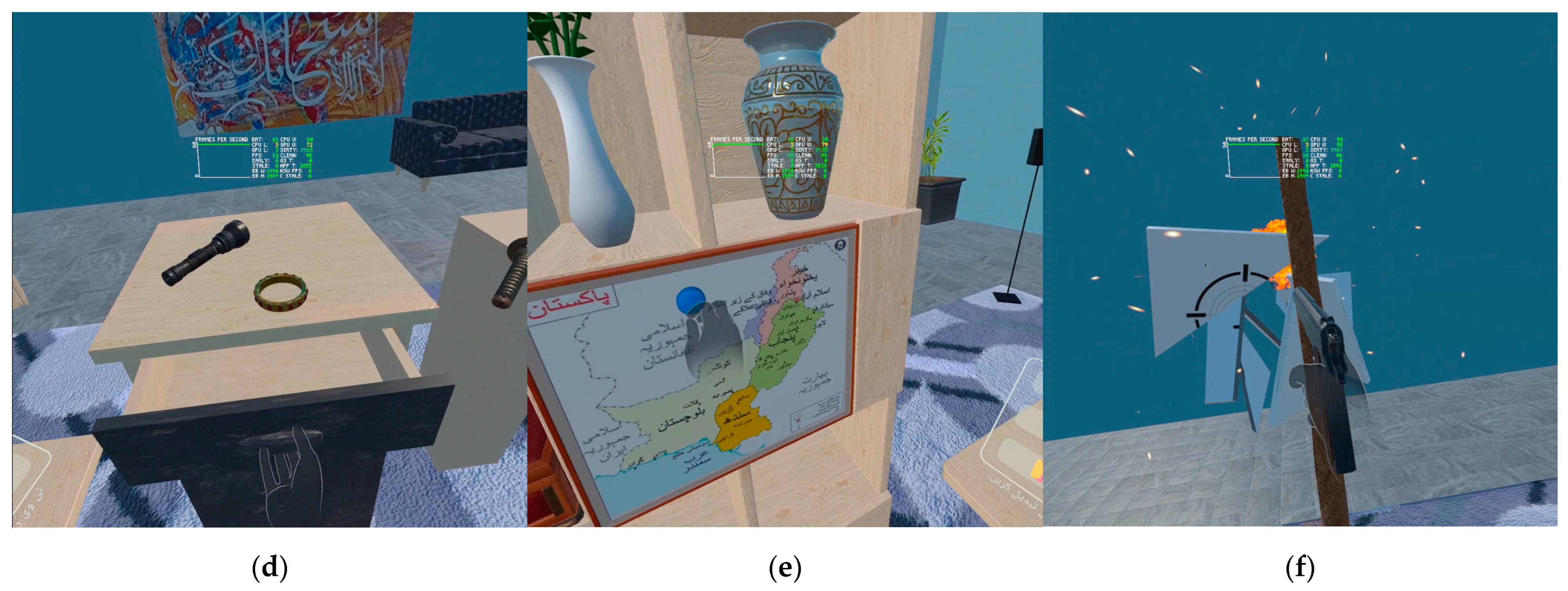

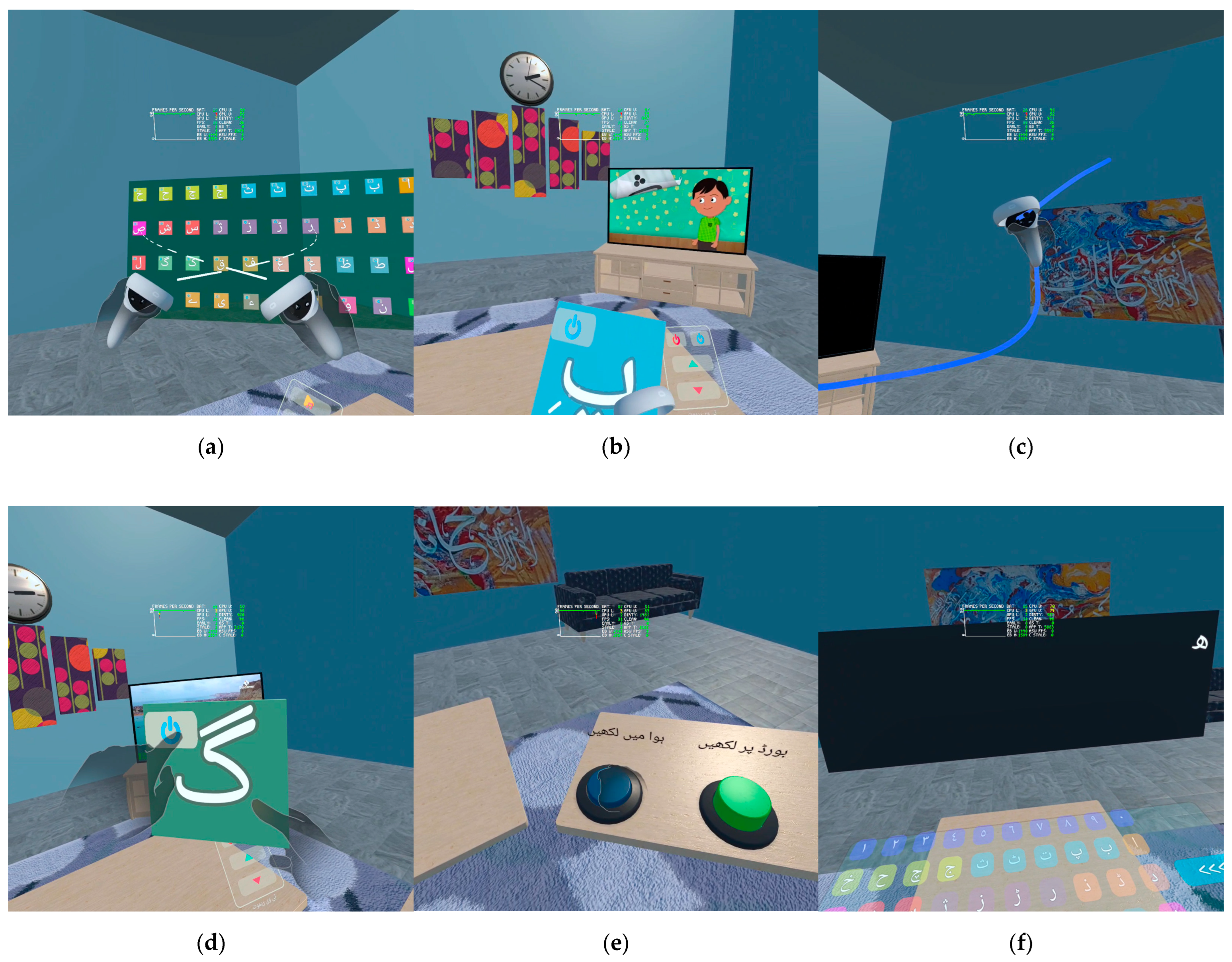

3.1. VR Environment and Level Design

3.2. Tools and Technologies

4. Measures

Validity of Measures

5. Study 1

- Tech-Literate: This group encompasses literate individuals with a high level of expertise and proficiency in utilizing computer systems, software applications, and digital devices. Participants in this group were mainly from computer science and software engineering backgrounds.

- Non-tech-Literate: This group consists of literate individuals with a basic or limited familiarity with the use of technology. Participants in this group came from non-technical fields.

- Nonliterate: This group comprises individuals who are not literate, regardless of their level of technology literacy. These individuals may have difficulty using digital devices and computer systems and may need support or training to use technology effectively. Participants in this group came from a variety of occupations that required neither formal education nor technical experience.

- RQ 1: Is the designed educational application usable for the nonliterate population?

- H1: The designed educational application will be usable by the nonliterate population.

- RQ 2: If so, how easy is the application to use for nonliterate users compared with the two other groups?

- H2: The designed VR application will be as easy to use for nonliterate users as it is for literate users.

5.1. Procedure

5.2. Participants

5.3. Analysis of Results

5.3.1. Analysis of Results with “User Type” as Predictor

5.3.2. Analysis of Results with “Interaction Modality” as a Predictor

5.3.3. Analysis of Results with the “Use of the Technology” as a Predictor

5.4. Discussion of Results

6. Study 2

- RQ 3: Which interaction modality is more usable by nonliterate users?

- H3: Nonliterate users will find hands to be more usable than controllers because of their intuitive, reality-based interaction style.

6.1. Procedure

6.2. Participants

6.3. Analysis of Results

6.3.1. Analysis of Results with Controllers as an Interaction Modality

6.3.2. Analysis of Results with Hands as Interaction Modality

6.3.3. Analysis of Results for Years of Technological Experience on Interaction

6.4. Discussion of Results

7. Discussion

7.1. Summary of Results

7.2. Limitations

7.3. Suggestions and Recommendations

- Consider using controllers as the interaction mode instead of hands: Results from both studies indicate that nonliterate users find controllers easier to use than their hands and complete interactions with them faster and with fewer errors.

- Enhance the design of the VR educational application: Based on the results of Study 1, it is recommended that the design of the application be modified to cater to the specific needs of nonliterate users, taking into consideration their behavior patterns and the difficulties they face.

- Improve lighting conditions: Results from Study 1 indicate that low lighting levels can negatively impact the completion time of complex tasks. Hence, it is recommended to ensure adequate brightness levels in the room where the VR experience is conducted.

- Provide clear instructions and a reticle: Results from Study 1 show that nonliterate users sometimes sought external help and were confused by the reticle while trying to grab an object. Hence, it is recommended to provide clear instructions and a visible reticle to help users with their interactions.

- Consider alternative interactions: Results from Study 1 suggest that gestures could be a suitable alternative interaction mode for nonliterate users and warrant further investigation.

- Consider user training and familiarization: Results from Study 1 indicate that user training and familiarization could impact the performance of nonliterate users in VR systems. Hence, it is recommended to explore the effect of training on VR interaction performance for nonliterate users.

- Consider the impact of technology experience: Results from Study 2 suggest that more experience with technology does not necessarily lead to better VR interaction performance. Hence, it is recommended to examine the impact of technology experience on specific VR interaction tasks to better understand how experience affects performance.

- Consider the impact of technology use: Results from Study 1 show that the use of modern technology can have a significant impact on VR interactions for nonliterate users. Hence, it is recommended to explore the impact of using different types of technology (e.g., smartphones, laptops, and computers) on VR interactivity.

- Expand the study to include a wider range of tasks and interactions: Results from Study 1 indicate that nonliterate users struggled with some VR tasks. Extending the study to a wider range of tasks and interactions would give a more comprehensive understanding of the abilities of nonliterate users in VR systems.

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Measure | Group | N | Mean | Std. Deviation |
|---|---|---|---|---|
| 1st Task (Object Interaction—Grab Interaction) Completion Time | 1 | 12 | 45.42 | 22.236 |
| | 2 | 8 | 44.88 | 19.853 |
| | 3 | 10 | 59.40 | 12.756 |
| | Total | 30 | 49.93 | 19.483 |
| | Model (Fixed Effects) | | | 18.917 |
| 2nd Task (Map—Grab & Move Interaction) Completion Time | 1 | 12 | 25.58 | 27.158 |
| | 2 | 8 | 54.25 | 36.850 |
| | 3 | 10 | 58.80 | 20.741 |
| | Total | 30 | 44.30 | 31.398 |
| | Model (Fixed Effects) | | | 28.212 |
| 3rd Task (Change Music/TV Ch—Poke Interaction) Completion Time | 1 | 12 | 33.42 | 19.228 |
| | 2 | 8 | 24.63 | 14.162 |
| | 3 | 10 | 41.60 | 16.399 |
| | Total | 30 | 33.80 | 17.787 |
| | Model (Fixed Effects) | | | 17.096 |
| 4th Task (Put Jewellery—Grab & Move + Grab & Place Interaction) Completion Time | 1 | 12 | 21.50 | 11.302 |
| | 2 | 8 | 23.38 | 11.057 |
| | 3 | 10 | 38.60 | 17.977 |
| | Total | 30 | 27.70 | 15.501 |
| | Model (Fixed Effects) | | | 13.837 |
| 5th Task (Sword & Cut—Grab & Use Interaction) Completion Time | 1 | 12 | 20.25 | 11.871 |
| | 2 | 8 | 23.38 | 11.488 |
| | 3 | 10 | 33.30 | 15.720 |
| | Total | 30 | 25.43 | 13.987 |
| | Model (Fixed Effects) | | | 13.191 |
| 6th Task (Pistol & Shoot—Grab & Move + Grab & Use Interaction) Completion Time | 1 | 12 | 53.83 | 21.294 |
| | 2 | 8 | 32.25 | 10.553 |
| | 3 | 10 | 53.10 | 16.231 |
| | Total | 30 | 47.83 | 19.289 |
| | Model (Fixed Effects) | | | 17.361 |
| 1st Task (Alphabet Cards—Distance Grab + Pinch Interaction) Completion Time | 1 | 12 | 30.30 | 21.734 |
| | 2 | 8 | 49.05 | 40.580 |
| | 3 | 10 | 21.36 | 4.527 |
| | Total | 30 | 32.32 | 26.520 |
| | Model (Fixed Effects) | | | 25.024 |
| 2nd Task (Alphabet Cards—Two-handed, Distance Grab + Pinch + Poke Interaction) Completion Time | 1 | 12 | 39.22 | 22.222 |
| | 2 | 8 | 36.22 | 13.764 |
| | 3 | 10 | 69.21 | 37.228 |
| | Total | 30 | 48.42 | 29.804 |
| | Model (Fixed Effects) | | | 26.688 |
| Air Writing (Pinch + Move Interaction) | 1 | 12 | 64.01 | 15.376 |
| | 2 | 8 | 68.27 | 26.401 |
| | 3 | 10 | 80.88 | 11.873 |
| | Total | 30 | 70.77 | 18.909 |
| | Model (Fixed Effects) | | | 18.001 |
| Board Writing (Grab or Pinch + Move Interaction) | 1 | 12 | 80.01 | 19.229 |
| | 2 | 8 | 85.34 | 32.991 |
| | 3 | 10 | 101.11 | 14.839 |
| | Total | 30 | 88.46 | 23.638 |
| | Model (Fixed Effects) | | | 22.500 |
| Typewriter (Poke Interaction) | 1 | 12 | 96.00 | 23.063 |
| | 2 | 8 | 102.39 | 39.581 |
| | 3 | 10 | 121.32 | 17.793 |
| | Total | 30 | 106.14 | 28.356 |
| | Model (Fixed Effects) | | | 26.989 |
| Errors in Grab Interaction | 1 | 12 | 2.17 | 1.193 |
| | 2 | 8 | 2.75 | 2.252 |
| | 3 | 10 | 4.20 | 2.251 |
| | Total | 30 | 3.00 | 2.034 |
| | Model (Fixed Effects) | | | 1.893 |
| Errors in Grab and Move Interaction | 1 | 12 | 8.17 | 5.734 |
| | 2 | 8 | 7.75 | 4.833 |
| | 3 | 10 | 11.40 | 6.004 |
| | Total | 30 | 9.13 | 5.655 |
| | Model (Fixed Effects) | | | 5.609 |
| Errors in Grab and Use Sword | 1 | 12 | 4.58 | 4.420 |
| | 2 | 8 | 2.88 | 1.727 |
| | 3 | 10 | 4.40 | 3.688 |
| | Total | 30 | 4.07 | 3.591 |
| | Model (Fixed Effects) | | | 3.642 |
| Errors in Grab and Use Pistol | 1 | 12 | 8.75 | 6.510 |
| | 2 | 8 | 4.25 | 4.590 |
| | 3 | 10 | 4.70 | 4.218 |
| | Total | 30 | 6.20 | 5.586 |
| | Model (Fixed Effects) | | | 5.354 |
| Errors in Distance Grab Interaction | 1 | 12 | 1.00 | 1.954 |
| | 2 | 8 | 1.75 | 2.435 |
| | 3 | 10 | 4.00 | 2.667 |
| | Total | 30 | 2.20 | 2.618 |
| | Model (Fixed Effects) | | | 2.337 |
| Errors in Poke Interaction on Alphabet Card | 1 | 12 | 0.83 | 1.115 |
| | 2 | 8 | 1.63 | 2.200 |
| | 3 | 10 | 2.80 | 3.706 |
| | Total | 30 | 1.70 | 2.575 |
| | Model (Fixed Effects) | | | 2.518 |
| Errors in Pinch and Move Interaction to Write in Air | 1 | 12 | 1.83 | 1.850 |
| | 2 | 8 | 1.00 | 0.926 |
| | 3 | 10 | 2.50 | 2.321 |
| | Total | 30 | 1.83 | 1.877 |
| | Model (Fixed Effects) | | | 1.848 |
| Errors in Grab, Move, and Use Interaction to Write on Board | 1 | 12 | 2.08 | 1.621 |
| | 2 | 8 | 1.00 | 1.773 |
| | 3 | 10 | 3.10 | 2.514 |
| | Total | 30 | 2.13 | 2.097 |
| | Model (Fixed Effects) | | | 1.998 |
| Errors in Poke Interaction on Urdu Keyboard | 1 | 12 | 1.67 | 2.807 |
| | 2 | 8 | 2.25 | 2.659 |
| | 3 | 10 | 1.30 | 1.059 |
| | Total | 30 | 1.70 | 2.277 |
| | Model (Fixed Effects) | | | 2.328 |
| The user is confident while interacting with the VR. | 1 | 12 | 4.75 | 0.622 |
| | 2 | 8 | 4.88 | 0.354 |
| | 3 | 10 | 4.30 | 0.823 |
| | Total | 30 | 4.63 | 0.669 |
| | Model (Fixed Effects) | | | 0.645 |
| The user required external guidance to complete the task. | 1 | 12 | 1.33 | 0.778 |
| | 2 | 8 | 1.13 | 0.354 |
| | 3 | 10 | 2.30 | 0.483 |
| | Total | 30 | 1.60 | 0.770 |
| | Model (Fixed Effects) | | | 0.598 |
| The user tries to interact with every object. | 1 | 12 | 3.08 | 1.165 |
| | 2 | 8 | 2.88 | 1.458 |
| | 3 | 10 | 1.40 | 0.699 |
| | Total | 30 | 2.47 | 1.332 |
| | Model (Fixed Effects) | | | 1.125 |
| The user is following the in-app instructions. | 1 | 12 | 3.58 | 0.900 |
| | 2 | 8 | 4.00 | 1.195 |
| | 3 | 10 | 4.80 | 0.422 |
| | Total | 30 | 4.10 | 0.995 |
| | Model (Fixed Effects) | | | 0.872 |
| The user tried varied poses to interact with the objects. | 1 | 12 | 2.33 | 1.371 |
| | 2 | 8 | 1.88 | 0.835 |
| | 3 | 10 | 1.70 | 0.949 |
| | Total | 30 | 2.00 | 1.114 |
| | Model (Fixed Effects) | | | 1.116 |
| Total Interactions in Level 1 | 1 | 12 | 27.67 | 7.820 |
| | 2 | 8 | 28.38 | 10.446 |
| | 3 | 10 | 25.40 | 6.022 |
| | Total | 30 | 27.10 | 7.897 |
| | Model (Fixed Effects) | | | 8.080 |
| Total Interactions in Level 2 | 1 | 12 | 8.58 | 1.443 |
| | 2 | 8 | 9.38 | 2.825 |
| | 3 | 10 | 9.10 | 2.132 |
| | Total | 30 | 8.97 | 2.059 |
| | Model (Fixed Effects) | | | 2.105 |
| I feel discomfort after using VR. | 1 | 12 | 1.17 | 0.389 |
| | 2 | 8 | 1.25 | 0.463 |
| | 3 | 10 | 1.50 | 0.707 |
| | Total | 30 | 1.30 | 0.535 |
| | Model (Fixed Effects) | | | 0.533 |
| I feel fatigued after using VR. | 1 | 12 | 1.25 | 0.622 |
| | 2 | 8 | 1.13 | 0.354 |
| | 3 | 10 | 1.00 | 0.000 |
| | Total | 30 | 1.13 | 0.434 |
| | Model (Fixed Effects) | | | 0.436 |
| The user is in postural sway while standing. | 1 | 12 | 1.08 | 0.289 |
| | 2 | 8 | 1.00 | 0.000 |
| | 3 | 10 | 1.30 | 0.483 |
| | Total | 30 | 1.13 | 0.346 |
| | Model (Fixed Effects) | | | 0.334 |
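The "Model (Fixed Effects)" value in each block is numerically the pooled within-group standard deviation, i.e., the square root of the within-groups mean square in Appendix C (for example, √357.859 ≈ 18.917 for the first task). The short Python sketch below recomputes it from the N and Std. Deviation columns alone; it is an illustrative check under that reading of the table, and `pooled_sd` is a hypothetical helper name, not part of the study's tooling.

```python
import math


def pooled_sd(groups):
    """Pooled (fixed-effects) standard deviation from per-group (n, sd) pairs.

    Computes sqrt( sum((n_i - 1) * sd_i**2) / (N - k) ), which equals sqrt(MS_within).
    """
    ss_within = sum((n - 1) * sd ** 2 for n, sd in groups)  # within-groups sum of squares
    df_within = sum(n for n, _ in groups) - len(groups)     # N - k
    return math.sqrt(ss_within / df_within)


# (N, Std. Deviation) for groups 1-3, copied from the table above.
task1_grab_time = [(12, 22.236), (8, 19.853), (10, 12.756)]
grab_errors = [(12, 1.193), (8, 2.252), (10, 2.251)]

print(f"{pooled_sd(task1_grab_time):.3f}")  # table value: 18.917
print(f"{pooled_sd(grab_errors):.3f}")      # table value: 1.893
```

Because the tabulated standard deviations are themselves rounded to three decimals, a recomputed value can occasionally differ from the reported one in the last digit.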

Appendix B

| Measure | Group | N | Mean | Std. Deviation |
|---|---|---|---|---|
| 1st Task (Object Interaction—Grab Interaction) Completion Time | 1 | 12 | 49.33 | 20.219 |
| | 2 | 18 | 50.33 | 19.560 |
| | Total | 30 | 49.93 | 19.483 |
| | Model (Fixed Effects) | | | 19.821 |
| 2nd Task (Map—Grab & Move Interaction) Completion Time | 1 | 12 | 35.50 | 23.283 |
| | 2 | 18 | 50.17 | 35.211 |
| | Total | 30 | 44.30 | 31.398 |
| | Model (Fixed Effects) | | | 31.076 |
| 3rd Task (Change Music/TV Ch—Poke Interaction) Completion Time | 1 | 12 | 34.83 | 17.251 |
| | 2 | 18 | 33.11 | 18.598 |
| | Total | 30 | 33.80 | 17.787 |
| | Model (Fixed Effects) | | | 18.081 |
| 4th Task (Put Jewellery—Grab & Move + Grab & Place Interaction) Completion Time | 1 | 12 | 22.17 | 9.916 |
| | 2 | 18 | 31.39 | 17.614 |
| | Total | 30 | 27.70 | 15.501 |
| | Model (Fixed Effects) | | | 15.066 |
| 5th Task (Sword & Cut—Grab & Use Interaction) Completion Time | 1 | 12 | 24.58 | 14.126 |
| | 2 | 18 | 26.00 | 14.275 |
| | Total | 30 | 25.43 | 13.987 |
| | Model (Fixed Effects) | | | 14.216 |
| 6th Task (Pistol & Shoot—Grab & Move + Grab & Use Interaction) Completion Time | 1 | 12 | 35.75 | 10.931 |
| | 2 | 18 | 55.89 | 19.638 |
| | Total | 30 | 47.83 | 19.289 |
| | Model (Fixed Effects) | | | 16.765 |
| 1st Task (Alphabet Cards—Distance Grab + Pinch Interaction) Completion Time | 1 | 12 | 30.61 | 25.809 |
| | 2 | 18 | 33.46 | 27.665 |
| | Total | 30 | 32.32 | 26.520 |
| | Model (Fixed Effects) | | | 26.951 |
| 2nd Task (Alphabet Cards—Two-handed, Distance Grab + Pinch + Poke Interaction) Completion Time | 1 | 12 | 44.96 | 26.247 |
| | 2 | 18 | 50.72 | 32.488 |
| | Total | 30 | 48.42 | 29.804 |
| | Model (Fixed Effects) | | | 30.190 |
| Air Writing (Pinch + Move Interaction) | 1 | 12 | 74.42 | 17.347 |
| | 2 | 18 | 68.34 | 19.990 |
| | Total | 30 | 70.77 | 18.909 |
| | Model (Fixed Effects) | | | 18.996 |
| Board Writing (Grab or Pinch + Move Interaction) | 1 | 12 | 93.02 | 21.672 |
| | 2 | 18 | 85.42 | 24.995 |
| | Total | 30 | 88.46 | 23.638 |
| | Model (Fixed Effects) | | | 23.745 |
| Typewriter (Poke Interaction) | 1 | 12 | 111.62 | 26.011 |
| | 2 | 18 | 102.49 | 29.977 |
| | Total | 30 | 106.14 | 28.356 |
| | Model (Fixed Effects) | | | 28.485 |
| Errors in Grab Interaction | 1 | 12 | 2.50 | 2.023 |
| | 2 | 18 | 3.33 | 2.029 |
| | Total | 30 | 3.00 | 2.034 |
| | Model (Fixed Effects) | | | 2.027 |
| Errors in Grab and Move Interaction | 1 | 12 | 6.75 | 4.181 |
| | 2 | 18 | 10.72 | 6.047 |
| | Total | 30 | 9.13 | 5.655 |
| | Model (Fixed Effects) | | | 5.391 |
| Errors in Grab and Use Sword | 1 | 12 | 2.25 | 1.603 |
| | 2 | 18 | 5.28 | 4.056 |
| | Total | 30 | 4.07 | 3.591 |
| | Model (Fixed Effects) | | | 3.316 |
| Errors in Grab and Use Pistol | 1 | 12 | 2.42 | 1.165 |
| | 2 | 18 | 8.72 | 5.959 |
| | Total | 30 | 6.20 | 5.586 |
| | Model (Fixed Effects) | | | 4.700 |
| Errors in Distance Grab Interaction | 1 | 12 | 2.00 | 2.256 |
| | 2 | 18 | 2.33 | 2.890 |
| | Total | 30 | 2.20 | 2.618 |
| | Model (Fixed Effects) | | | 2.659 |
| Errors in Poke Interaction on Alphabet Card | 1 | 12 | 1.58 | 1.782 |
| | 2 | 18 | 1.78 | 3.040 |
| | Total | 30 | 1.70 | 2.575 |
| | Model (Fixed Effects) | | | 2.619 |
| Errors in Pinch and Move Interaction to Write in Air | 1 | 12 | 1.42 | 1.621 |
| | 2 | 18 | 2.11 | 2.026 |
| | Total | 30 | 1.83 | 1.877 |
| | Model (Fixed Effects) | | | 1.877 |
| Errors in Grab, Move, and Use Interaction to Write on Board | 1 | 12 | 1.17 | 1.403 |
| | 2 | 18 | 2.78 | 2.264 |
| | Total | 30 | 2.13 | 2.097 |
| | Model (Fixed Effects) | | | 1.971 |
| Errors in Poke Interaction on Urdu Keyboard | 1 | 12 | 1.58 | 1.311 |
| | 2 | 18 | 1.78 | 2.777 |
| | Total | 30 | 1.70 | 2.277 |
| | Model (Fixed Effects) | | | 2.315 |
| The user is confident while interacting with the VR. | 1 | 12 | 4.92 | 0.289 |
| | 2 | 18 | 4.44 | 0.784 |
| | Total | 30 | 4.63 | 0.669 |
| | Model (Fixed Effects) | | | 0.637 |
| The user required external guidance to complete the task. | 1 | 12 | 1.50 | 0.522 |
| | 2 | 18 | 1.67 | 0.907 |
| | Total | 30 | 1.60 | 0.770 |
| | Model (Fixed Effects) | | | 0.779 |
| The user tries to interact with every object. | 1 | 12 | 2.33 | 1.231 |
| | 2 | 18 | 2.56 | 1.423 |
| | Total | 30 | 2.47 | 1.332 |
| | Model (Fixed Effects) | | | 1.351 |
| The user is following the in-app instructions. | 1 | 12 | 4.50 | 0.798 |
| | 2 | 18 | 3.83 | 1.043 |
| | Total | 30 | 4.10 | 0.995 |
| | Model (Fixed Effects) | | | 0.954 |
| The user tried varied poses to interact with the objects. | 1 | 12 | 1.67 | 0.888 |
| | 2 | 18 | 2.22 | 1.215 |
| | Total | 30 | 2.00 | 1.114 |
| | Model (Fixed Effects) | | | 1.098 |
| Total Interactions in Level 1 | 1 | 12 | 25.00 | 7.198 |
| | 2 | 18 | 28.50 | 8.227 |
| | Total | 30 | 27.10 | 7.897 |
| | Model (Fixed Effects) | | | 7.839 |
| Total Interactions in Level 2 | 1 | 12 | 9.25 | 2.527 |
| | 2 | 18 | 8.78 | 1.734 |
| | Total | 30 | 8.97 | 2.059 |
| | Model (Fixed Effects) | | | 2.082 |
| I feel discomfort after using VR. | 1 | 12 | 1.42 | 0.669 |
| | 2 | 18 | 1.22 | 0.428 |
| | Total | 30 | 1.30 | 0.535 |
| | Model (Fixed Effects) | | | 0.535 |
| I feel fatigued after using VR. | 1 | 12 | 1.17 | 0.389 |
| | 2 | 18 | 1.11 | 0.471 |
| | Total | 30 | 1.13 | 0.434 |
| | Model (Fixed Effects) | | | 0.441 |
| The user is in postural sway while standing. | 1 | 12 | 1.08 | 0.289 |
| | 2 | 18 | 1.17 | 0.383 |
| | Total | 30 | 1.13 | 0.346 |
| | Model (Fixed Effects) | | | 0.349 |

Appendix C

| Measure | Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|---|
| 1st Task (Object Interaction—Grab Interaction) Completion Time | Between Groups | 1345.675 | 2 | 672.838 | 1.880 | 0.172 |
| | Within Groups | 9662.192 | 27 | 357.859 | | |
| | Total | 11,007.867 | 29 | | | |
| 2nd Task (Map—Grab & Move Interaction) Completion Time | Between Groups | 7098.283 | 2 | 3549.142 | 4.459 | 0.021 |
| | Within Groups | 21,490.017 | 27 | 795.927 | | |
| | Total | 28,588.300 | 29 | | | |
| 3rd Task (Change Music/TV Ch—Poke Interaction) Completion Time | Between Groups | 1283.608 | 2 | 641.804 | 2.196 | 0.131 |
| | Within Groups | 7891.192 | 27 | 292.266 | | |
| | Total | 9174.800 | 29 | | | |
| 4th Task (Put Jewellery—Grab & Move + Grab & Place Interaction) Completion Time | Between Groups | 1799.025 | 2 | 899.513 | 4.698 | 0.018 |
| | Within Groups | 5169.275 | 27 | 191.455 | | |
| | Total | 6968.300 | 29 | | | |
| 5th Task (Sword & Cut—Grab & Use Interaction) Completion Time | Between Groups | 975.142 | 2 | 487.571 | 2.802 | 0.078 |
| | Within Groups | 4698.225 | 27 | 174.008 | | |
| | Total | 5673.367 | 29 | | | |
| 6th Task (Pistol & Shoot—Grab & Move + Grab & Use Interaction) Completion Time | Between Groups | 2652.100 | 2 | 1326.050 | 4.399 | 0.022 |
| | Within Groups | 8138.067 | 27 | 301.410 | | |
| | Total | 10,790.167 | 29 | | | |
| 1st Task (Alphabet Cards—Distance Grab + Pinch Interaction) Completion Time | Between Groups | 3489.324 | 2 | 1744.662 | 2.786 | 0.079 |
| | Within Groups | 16,907.404 | 27 | 626.200 | | |
| | Total | 20,396.728 | 29 | | | |
| 2nd Task (Alphabet Cards—Two-handed, Distance Grab + Pinch + Poke Interaction) Completion Time | Between Groups | 6528.401 | 2 | 3264.201 | 4.583 | 0.019 |
| | Within Groups | 19,231.381 | 27 | 712.273 | | |
| | Total | 25,759.782 | 29 | | | |
| Air Writing (Pinch + Move Interaction) | Between Groups | 1620.563 | 2 | 810.281 | 2.501 | 0.101 |
| | Within Groups | 8748.740 | 27 | 324.027 | | |
| | Total | 10,369.303 | 29 | | | |
| Board Writing (Grab or Pinch + Move Interaction) | Between Groups | 2535.393 | 2 | 1267.696 | 2.504 | 0.101 |
| | Within Groups | 13,668.257 | 27 | 506.232 | | |
| | Total | 16,203.650 | 29 | | | |
| Typewriter (Poke Interaction) | Between Groups | 3650.809 | 2 | 1825.404 | 2.506 | 0.100 |
| | Within Groups | 19,666.825 | 27 | 728.401 | | |
| | Total | 23,317.634 | 29 | | | |
| Errors in Grab Interaction | Between Groups | 23.233 | 2 | 11.617 | 3.241 | 0.055 |
| | Within Groups | 96.767 | 27 | 3.584 | | |
| | Total | 120.000 | 29 | | | |
| Errors in Grab and Move Interaction | Between Groups | 77.900 | 2 | 38.950 | 1.238 | 0.306 |
| | Within Groups | 849.567 | 27 | 31.465 | | |
| | Total | 927.467 | 29 | | | |
| Errors in Grab and Use Sword | Between Groups | 15.675 | 2 | 7.838 | 0.591 | 0.561 |
| | Within Groups | 358.192 | 27 | 13.266 | | |
| | Total | 373.867 | 29 | | | |
| Errors in Grab and Use Pistol | Between Groups | 130.950 | 2 | 65.475 | 2.284 | 0.121 |
| | Within Groups | 773.850 | 27 | 28.661 | | |
| | Total | 904.800 | 29 | | | |
| Errors in Distance Grab Interaction | Between Groups | 51.300 | 2 | 25.650 | 4.695 | 0.018 |
| | Within Groups | 147.500 | 27 | 5.463 | | |
| | Total | 198.800 | 29 | | | |
| Errors in Poke Interaction on Alphabet Card | Between Groups | 21.158 | 2 | 10.579 | 1.669 | 0.207 |
| | Within Groups | 171.142 | 27 | 6.339 | | |
| | Total | 192.300 | 29 | | | |
| Errors in Pinch and Move Interaction to Write in Air | Between Groups | 10.000 | 2 | 5.000 | 1.465 | 0.249 |
| | Within Groups | 92.167 | 27 | 3.414 | | |
| | Total | 102.167 | 29 | | | |
| Errors in Grab, Move, and Use Interaction to Write on Board | Between Groups | 19.650 | 2 | 9.825 | 2.460 | 0.104 |
| | Within Groups | 107.817 | 27 | 3.993 | | |
| | Total | 127.467 | 29 | | | |
| Errors in Poke Interaction on Urdu Keyboard | Between Groups | 4.033 | 2 | 2.017 | 0.372 | 0.693 |
| | Within Groups | 146.267 | 27 | 5.417 | | |
| | Total | 150.300 | 29 | | | |
| The user is confident while interacting with the VR. | Between Groups | 1.742 | 2 | 0.871 | 2.095 | 0.143 |
| | Within Groups | 11.225 | 27 | 0.416 | | |
| | Total | 12.967 | 29 | | | |
| The user required external guidance to complete the task. | Between Groups | 7.558 | 2 | 3.779 | 10.583 | 0.000 |
| | Within Groups | 9.642 | 27 | 0.357 | | |
| | Total | 17.200 | 29 | | | |
| The user tries to interact with every object. | Between Groups | 17.275 | 2 | 8.638 | 6.821 | 0.004 |
| | Within Groups | 34.192 | 27 | 1.266 | | |
| | Total | 51.467 | 29 | | | |
| The user is following the in-app instructions. | Between Groups | 8.183 | 2 | 4.092 | 5.385 | 0.011 |
| | Within Groups | 20.517 | 27 | 0.760 | | |
| | Total | 28.700 | 29 | | | |
| The user tried varied poses to interact with the objects. | Between Groups | 2.358 | 2 | 1.179 | 0.946 | 0.401 |
| | Within Groups | 33.642 | 27 | 1.246 | | |
| | Total | 36.000 | 29 | | | |
| Total Interactions in Level 1 | Between Groups | 45.758 | 2 | 22.879 | 0.350 | 0.708 |
| | Within Groups | 1762.942 | 27 | 65.294 | | |
| | Total | 1808.700 | 29 | | | |
| Total Interactions in Level 2 | Between Groups | 3.275 | 2 | 1.637 | 0.369 | 0.695 |
| | Within Groups | 119.692 | 27 | 4.433 | | |
| | Total | 122.967 | 29 | | | |
| I feel discomfort after using VR. | Between Groups | 0.633 | 2 | 0.317 | 1.115 | 0.342 |
| | Within Groups | 7.667 | 27 | 0.284 | | |
| | Total | 8.300 | 29 | | | |
| I feel fatigued after using VR. | Between Groups | 0.342 | 2 | 0.171 | 0.900 | 0.418 |
| | Within Groups | 5.125 | 27 | 0.190 | | |
| | Total | 5.467 | 29 | | | |
| The user is in postural sway while standing. | Between Groups | 0.450 | 2 | 0.225 | 2.014 | 0.153 |
| | Within Groups | 3.017 | 27 | 0.112 | | |
| | Total | 3.467 | 29 | | | |
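Since the per-participant data are not reproduced here, the one-way ANOVA above can be approximately re-derived from the three-group summaries in Appendix A: SS_between = Σ n_i(x̄_i − x̄)², SS_within = Σ(n_i − 1)s_i², F = [SS_between/(k − 1)] / [SS_within/(N − k)], and the p-value is the upper tail of the F(k − 1, N − k) distribution. The Python sketch below assumes SciPy is installed and uses the map task (2nd task) as an example; `anova_from_summary` is a hypothetical helper written for this illustration, not the analysis code used in the study.

```python
from scipy import stats


def anova_from_summary(groups):
    """One-way ANOVA computed from per-group (n, mean, sd) summaries.

    Returns (ss_between, ss_within, F, p).
    """
    n_total = sum(n for n, _, _ in groups)
    k = len(groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    p_value = stats.f.sf(f_stat, df_between, df_within)  # upper-tail probability of F
    return ss_between, ss_within, f_stat, p_value


# 2nd task (Map, Grab & Move) completion time: (N, Mean, Std. Deviation) for groups 1-3 in Appendix A.
map_task = [(12, 25.58, 27.158), (8, 54.25, 36.850), (10, 58.80, 20.741)]
ss_b, ss_w, f_stat, p_value = anova_from_summary(map_task)
print(f"SS_between={ss_b:.1f}  SS_within={ss_w:.1f}  F={f_stat:.3f}  p={p_value:.3f}")
```

The reported values for this row are SS_between = 7098.283, SS_within = 21,490.017, F = 4.459, and Sig. = 0.021. Because the Appendix A means are rounded to two decimals, the recomputed between-groups sum of squares differs slightly from the SPSS output, while F and p agree to about three significant figures.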

Appendix D

| Measure | Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|---|
| 1st Task (Object Interaction—Grab Interaction) Completion Time | Between Groups | 7.200 | 1 | 7.200 | 0.018 | 0.893 |
| | Within Groups | 11,000.667 | 28 | 392.881 | | |
| | Total | 11,007.867 | 29 | | | |
| 2nd Task (Map—Grab & Move Interaction) Completion Time | Between Groups | 1548.800 | 1 | 1548.800 | 1.604 | 0.216 |
| | Within Groups | 27,039.500 | 28 | 965.696 | | |
| | Total | 28,588.300 | 29 | | | |
| 3rd Task (Change Music/TV Ch—Poke Interaction) Completion Time | Between Groups | 21.356 | 1 | 21.356 | 0.065 | 0.800 |
| | Within Groups | 9153.444 | 28 | 326.909 | | |
| | Total | 9174.800 | 29 | | | |
| 4th Task (Put Jewellery—Grab & Move + Grab & Place Interaction) Completion Time | Between Groups | 612.356 | 1 | 612.356 | 2.698 | 0.112 |
| | Within Groups | 6355.944 | 28 | 226.998 | | |
| | Total | 6968.300 | 29 | | | |
| 5th Task (Sword & Cut—Grab & Use Interaction) Completion Time | Between Groups | 14.450 | 1 | 14.450 | 0.071 | 0.791 |
| | Within Groups | 5658.917 | 28 | 202.104 | | |
| | Total | 5673.367 | 29 | | | |
| 6th Task (Pistol & Shoot—Grab & Move + Grab & Use Interaction) Completion Time | Between Groups | 2920.139 | 1 | 2920.139 | 10.389 | 0.003 |
| | Within Groups | 7870.028 | 28 | 281.072 | | |
| | Total | 10,790.167 | 29 | | | |
| 1st Task (Alphabet Cards—Distance Grab + Pinch Interaction) Completion Time | Between Groups | 58.596 | 1 | 58.596 | 0.081 | 0.778 |
| | Within Groups | 20,338.132 | 28 | 726.362 | | |
| | Total | 20,396.728 | 29 | | | |
| 2nd Task (Alphabet Cards—Two-handed, Distance Grab + Pinch + Poke Interaction) Completion Time | Between Groups | 239.201 | 1 | 239.201 | 0.262 | 0.612 |
| | Within Groups | 25,520.580 | 28 | 911.449 | | |
| | Total | 25,759.782 | 29 | | | |
| Air Writing (Pinch + Move Interaction) | Between Groups | 265.964 | 1 | 265.964 | 0.737 | 0.398 |
| | Within Groups | 10,103.339 | 28 | 360.834 | | |
| | Total | 10,369.303 | 29 | | | |
| Board Writing (Grab or Pinch + Move Interaction) | Between Groups | 416.176 | 1 | 416.176 | 0.738 | 0.398 |
| | Within Groups | 15,787.474 | 28 | 563.838 | | |
| | Total | 16,203.650 | 29 | | | |
| Typewriter (Poke Interaction) | Between Groups | 599.148 | 1 | 599.148 | 0.738 | 0.397 |
| | Within Groups | 22,718.486 | 28 | 811.375 | | |
| | Total | 23,317.634 | 29 | | | |
| Errors in Grab Interaction | Between Groups | 5.000 | 1 | 5.000 | 1.217 | 0.279 |
| | Within Groups | 115.000 | 28 | 4.107 | | |
| | Total | 120.000 | 29 | | | |
| Errors in Grab and Move Interaction | Between Groups | 113.606 | 1 | 113.606 | 3.908 | 0.058 |
| | Within Groups | 813.861 | 28 | 29.066 | | |
| | Total | 927.467 | 29 | | | |
| Errors in Grab and Use Sword | Between Groups | 66.006 | 1 | 66.006 | 6.003 | 0.021 |
| | Within Groups | 307.861 | 28 | 10.995 | | |
| | Total | 373.867 | 29 | | | |
| Errors in Grab and Use Pistol | Between Groups | 286.272 | 1 | 286.272 | 12.959 | 0.001 |
| | Within Groups | 618.528 | 28 | 22.090 | | |
| | Total | 904.800 | 29 | | | |
| Errors in Distance Grab Interaction | Between Groups | 0.800 | 1 | 0.800 | 0.113 | 0.739 |
| | Within Groups | 198.000 | 28 | 7.071 | | |
| | Total | 198.800 | 29 | | | |
| Errors in Poke Interaction on Alphabet Card | Between Groups | 0.272 | 1 | 0.272 | 0.040 | 0.844 |
| | Within Groups | 192.028 | 28 | 6.858 | | |
| | Total | 192.300 | 29 | | | |
| Errors in Pinch and Move Interaction to Write in Air | Between Groups | 3.472 | 1 | 3.472 | 0.985 | 0.329 |
| | Within Groups | 98.694 | 28 | 3.525 | | |
| | Total | 102.167 | 29 | | | |
| Errors in Grab, Move, and Use Interaction to Write on Board | Between Groups | 18.689 | 1 | 18.689 | 4.811 | 0.037 |
| | Within Groups | 108.778 | 28 | 3.885 | | |
| | Total | 127.467 | 29 | | | |
| Errors in Poke Interaction on Urdu Keyboard | Between Groups | 0.272 | 1 | 0.272 | 0.051 | 0.823 |
| | Within Groups | 150.028 | 28 | 5.358 | | |
| | Total | 150.300 | 29 | | | |
| The user is confident while interacting with the VR. | Between Groups | 1.606 | 1 | 1.606 | 3.957 | 0.057 |
| | Within Groups | 11.361 | 28 | 0.406 | | |
| | Total | 12.967 | 29 | | | |
| The user required external guidance to complete the task. | Between Groups | 0.200 | 1 | 0.200 | 0.329 | 0.571 |
| | Within Groups | 17.000 | 28 | 0.607 | | |
| | Total | 17.200 | 29 | | | |
| The user tries to interact with every object. | Between Groups | 0.356 | 1 | 0.356 | 0.195 | 0.662 |
| | Within Groups | 51.111 | 28 | 1.825 | | |
| | Total | 51.467 | 29 | | | |
| The user is following the in-app instructions. | Between Groups | 3.200 | 1 | 3.200 | 3.514 | 0.071 |
| | Within Groups | 25.500 | 28 | 0.911 | | |
| | Total | 28.700 | 29 | | | |
| The user tried varied poses to interact with the objects. | Between Groups | 2.222 | 1 | 2.222 | 1.842 | 0.186 |
| | Within Groups | 33.778 | 28 | 1.206 | | |
| | Total | 36.000 | 29 | | | |
| Total Interactions in Level 1 | Between Groups | 88.200 | 1 | 88.200 | 1.435 | 0.241 |
| | Within Groups | 1720.500 | 28 | 61.446 | | |
| | Total | 1808.700 | 29 | | | |
| Total Interactions in Level 2 | Between Groups | 1.606 | 1 | 1.606 | 0.370 | 0.548 |
| | Within Groups | 121.361 | 28 | 4.334 | | |
| | Total | 122.967 | 29 | | | |
| I feel discomfort after using VR. | Between Groups | 0.272 | 1 | 0.272 | 0.949 | 0.338 |
| | Within Groups | 8.028 | 28 | 0.287 | | |
| | Total | 8.300 | 29 | | | |
| I feel fatigued after using VR. | Between Groups | 0.022 | 1 | 0.022 | 0.114 | 0.738 |
| | Within Groups | 5.444 | 28 | 0.194 | | |
| | Total | 5.467 | 29 | | | |
| The user is in postural sway while standing. | Between Groups | 0.050 | 1 | 0.050 | 0.410 | 0.527 |
| | Within Groups | 3.417 | 28 | 0.122 | | |
| | Total | 3.467 | 29 | | | |
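With only two groups (df = 1 between groups), the one-way ANOVA in this table is mathematically equivalent to a pooled-variance (Student's) independent-samples t-test, with F = t². The sketch below, which assumes SciPy is installed, checks this for two rows using the two-group summaries from Appendix B and `scipy.stats.ttest_ind_from_stats`. The check does not depend on which group code corresponds to which interaction modality, and the dictionary keys are shortened labels chosen for this illustration.

```python
from scipy import stats

# (Mean, Std. Deviation, N) for groups 1 and 2, copied from Appendix B.
measures = {
    "Errors in Grab and Use Pistol": ((2.42, 1.165, 12), (8.72, 5.959, 18)),
    "6th Task (Pistol & Shoot) Completion Time": ((35.75, 10.931, 12), (55.89, 19.638, 18)),
}

results = {}
for name, ((m1, s1, n1), (m2, s2, n2)) in measures.items():
    # equal_var=True selects the pooled-variance t-test, which matches a two-group one-way ANOVA.
    res = stats.ttest_ind_from_stats(m1, s1, n1, m2, s2, n2, equal_var=True)
    results[name] = (res.statistic ** 2, res.pvalue)  # (t^2, two-sided p)
    print(f"{name}: t^2 = {res.statistic ** 2:.3f}, p = {res.pvalue:.4f}")
```

The reported F values for these rows are 12.959 and 10.389; the recomputed t² values land within a few hundredths of them, with the remaining gap attributable to the two-decimal rounding of the Appendix B means.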

Appendix E

Estimates

| Dependent Variable | Use of Recent Technology | User Type | Interaction Modality | Mean | Std. Error | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|---|
| Level 1 2nd Task (Map—Grab & Move Interaction) Completion Time | No | Nonliterate | Controllers | 78.000 | 22.335 | 31.681 | 124.319 |
| | | | Hands | 87.500 | 15.793 | 54.747 | 120.253 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 48.000 | 11.167 | 24.840 | 71.160 |
| | | | Hands | 47.667 | 12.895 | 20.924 | 74.409 |
| | | Non-Tech-Literate | Controllers | 23.500 | 11.167 | 0.340 | 46.660 |
| | | | Hands | 85.000 | 11.167 | 61.840 | 108.160 |
| | | Tech-Literate | Controllers | 20.667 | 12.895 | −6.076 | 47.409 |
| | | | Hands | 27.222 | 7.445 | 11.782 | 42.662 |
| Level 1 4th Task (Put Jewelry—Grab & Move + Grab & Place Interaction) Completion Time | No | Nonliterate | Controllers | 31.000 | 10.670 | 8.872 | 53.128 |
| | | | Hands | 68.000 | 7.545 | 52.353 | 83.647 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 29.250 | 5.335 | 18.186 | 40.314 |
| | | | Hands | 34.000 | 6.160 | 21.224 | 46.776 |
| | | Non-Tech-Literate | Controllers | 16.250 | 5.335 | 5.186 | 27.314 |
| | | | Hands | 30.500 | 5.335 | 19.436 | 41.564 |
| | | Tech-Literate | Controllers | 17.667 | 6.160 | 4.891 | 30.443 |
| | | | Hands | 22.778 | 3.557 | 15.402 | 30.154 |
| Level 1 6th Task (Pistol & Shoot—Grab & Move + Grab & Use Interaction) Completion Time | No | Nonliterate | Controllers | 50.000 | 13.596 | 21.804 | 78.196 |
| | | | Hands | 46.500 | 9.614 | 26.562 | 66.438 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 42.500 | 6.798 | 28.402 | 56.598 |
| | | | Hands | 72.667 | 7.850 | 56.387 | 88.946 |
| | | Non-Tech-Literate | Controllers | 30.000 | 6.798 | 15.902 | 44.098 |
| | | | Hands | 34.500 | 6.798 | 20.402 | 48.598 |
| | | Tech-Literate | Controllers | 29.667 | 7.850 | 13.387 | 45.946 |
| | | | Hands | 61.889 | 4.532 | 52.490 | 71.288 |
| Level 2 2nd Task (Alphabet Cards—Two-handed, Distance Grab + Pinch + Poke Interaction) Completion Time | No | Nonliterate | Controllers | 78.600 | 24.022 | 28.782 | 128.418 |
| | | | Hands | 117.800 | 16.986 | 82.574 | 153.026 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 52.350 | 12.011 | 27.441 | 77.259 |
| | | | Hands | 56.167 | 13.869 | 27.404 | 84.929 |
| | | Non-Tech-Literate | Controllers | 33.100 | 12.011 | 8.191 | 58.009 |
| | | | Hands | 39.350 | 12.011 | 14.441 | 64.259 |
| | | Tech-Literate | Controllers | 39.700 | 13.869 | 10.938 | 68.462 |
| | | | Hands | 39.056 | 8.007 | 22.450 | 55.661 |
| The user required external guidance to complete the task. | No | Nonliterate | Controllers | 2.000 | 0.589 | 0.778 | 3.222 |
| | | | Hands | 3.000 | 0.417 | 2.136 | 3.864 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 2.000 | 0.295 | 1.389 | 2.611 |
| | | | Hands | 2.333 | 0.340 | 1.628 | 3.039 |
| | | Non-Tech-Literate | Controllers | 1.250 | 0.295 | 0.639 | 1.861 |
| | | | Hands | 1.000 | 0.295 | 0.389 | 1.611 |
| | | Tech-Literate | Controllers | 1.000 | 0.340 | 0.294 | 1.706 |
| | | | Hands | 1.444 | 0.196 | 1.037 | 1.852 |
| The user tries to interact with every object. | No | Nonliterate | Controllers | 1.000 | 1.180 | −1.447 | 3.447 |
| | | | Hands | 1.000 | 0.834 | −0.731 | 2.731 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 2.000 | 0.590 | 0.776 | 3.224 |
| | | | Hands | 1.000 | 0.681 | −0.413 | 2.413 |
| | | Non-Tech-Literate | Controllers | 2.500 | 0.590 | 1.276 | 3.724 |
| | | | Hands | 3.250 | 0.590 | 2.026 | 4.474 |
| | | Tech-Literate | Controllers | 3.000 | 0.681 | 1.587 | 4.413 |
| | | | Hands | 3.111 | 0.393 | 2.295 | 3.927 |
| The user is following the in-app instructions. | No | Nonliterate | Controllers | 4.000 | 0.870 | 2.195 | 5.805 |
| | | | Hands | 4.500 | 0.615 | 3.224 | 5.776 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 5.000 | 0.435 | 4.097 | 5.903 |
| | | | Hands | 5.000 | 0.503 | 3.958 | 6.042 |
| | | Non-Tech-Literate | Controllers | 4.250 | 0.435 | 3.347 | 5.153 |
| | | | Hands | 3.750 | 0.435 | 2.847 | 4.653 |
| | | Tech-Literate | Controllers | 4.333 | 0.503 | 3.291 | 5.375 |
| | | | Hands | 3.333 | 0.290 | 2.732 | 3.935 |
| Level 1 Errors in Grab and Use Sword | No | Nonliterate | Controllers | 3.000 | 3.656 | −4.583 | 10.583 |
| | | | Hands | 5.500 | 2.586 | 0.138 | 10.862 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 3.000 | 1.828 | −0.792 | 6.792 |
| | | | Hands | 6.000 | 2.111 | 1.622 | 10.378 |
| | | Non-Tech-Literate | Controllers | 1.750 | 1.828 | −2.042 | 5.542 |
| | | | Hands | 4.000 | 1.828 | 0.208 | 7.792 |
| | | Tech-Literate | Controllers | 1.667 | 2.111 | −2.711 | 6.045 |
| | | | Hands | 5.556 | 1.219 | 3.028 | 8.083 |
| Level 1 Errors in Grab and Use Pistol | No | Nonliterate | Controllers | 4.000 | 4.529 | −5.392 | 13.392 |
| | | | Hands | 2.000 | 3.202 | −4.641 | 8.641 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 2.500 | 2.264 | −2.196 | 7.196 |
| | | | Hands | 9.667 | 2.615 | 4.244 | 15.089 |
| | | Non-Tech-Literate | Controllers | 2.250 | 2.264 | −2.446 | 6.946 |
| | | | Hands | 6.250 | 2.264 | 1.554 | 10.946 |
| | | Tech-Literate | Controllers | 2.000 | 2.615 | −3.422 | 7.422 |
| | | | Hands | 11.000 | 1.510 | 7.869 | 14.131 |
| Level 2 Errors in Distance Grab Interaction | No | Nonliterate | Controllers | 5.000 | 2.126 | 0.591 | 9.409 |
| | | | Hands | 7.500 | 1.503 | 4.383 | 10.617 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 1.750 | 1.063 | −0.454 | 3.954 |
| | | | Hands | 4.333 | 1.227 | 1.788 | 6.879 |
| | | Non-Tech-Literate | Controllers | 2.250 | 1.063 | 0.046 | 4.454 |
| | | | Hands | 1.250 | 1.063 | −0.954 | 3.454 |
| | | Tech-Literate | Controllers | 1.000 | 1.227 | −1.545 | 3.545 |
| | | | Hands | 1.000 | 0.709 | −0.470 | 2.470 |
| Level 2 Errors in Grab, Move, and Use Interaction to Write on Board | No | Nonliterate | Controllers | 3.000 | 1.376 | 0.146 | 5.854 |
| | | | Hands | 4.441 × 10⁻¹⁶ | 0.973 | −2.018 | 2.018 |
| | | Non-Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | | Tech-Literate | Controllers | . | . | . | . |
| | | | Hands | . | . | . | . |
| | Yes | Nonliterate | Controllers | 2.500 | 0.688 | 1.073 | 3.927 |
| | | | Hands | 6.000 | 0.795 | 4.352 | 7.648 |
| | | Non-Tech-Literate | Controllers | −2.776 × 10⁻¹⁶ | 0.688 | −1.427 | 1.427 |
| | | | Hands | 2.000 | 0.688 | 0.573 | 3.427 |
| | | Tech-Literate | Controllers | 0.333 | 0.795 | −1.314 | 1.981 |
| | | | Hands | 2.667 | 0.459 | 1.715 | 3.618 |
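Each 95% interval in the Estimates table is the cell mean plus or minus a t critical value times its standard error. The appendix does not print the error degrees of freedom; the interval widths are consistent with a critical value of about 2.0739, the two-sided 95% value of Student's t with 22 df (30 participants minus 8 non-empty cells). Treat that df as our inference, not a figure stated by the authors. The Python sketch below, with hypothetical names, rebuilds a few intervals from the reported means and standard errors; differences in the third decimal come from rounding of the printed inputs.

```python
# Assumption: two-sided 95% critical value of Student's t with 22 error df,
# inferred from the interval widths rather than stated in the appendix.
T_CRIT_95_DF22 = 2.0739


def ci95(mean: float, se: float, t_crit: float = T_CRIT_95_DF22) -> tuple[float, float]:
    """Return (lower, upper) = mean -/+ t_crit * se."""
    half_width = t_crit * se
    return mean - half_width, mean + half_width


# (label, mean, std. error) copied from the Estimates table.
CELLS = [
    ("Map task, No, Nonliterate, Controllers", 78.000, 22.335),
    ("Map task, Yes, Tech-Literate, Hands", 27.222, 7.445),
    ("Jewelry task, Yes, Non-Tech-Literate, Controllers", 16.250, 5.335),
]

if __name__ == "__main__":
    for label, mean, se in CELLS:
        lower, upper = ci95(mean, se)
        print(f"{label}: [{lower:.3f}, {upper:.3f}]")
```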

Appendix F

| Measure | Test Value | t | df | Sig. (2-tailed) | Mean Difference | 95% CI of the Difference: Lower | 95% CI of the Difference: Upper |
|---|---|---|---|---|---|---|---|
| Total Interactions in Level 1 | 24.14 | 0.913 | 4 | 0.413 | 1.460 | −2.98 | 5.90 |
| Level 1 1st Task (Object Interaction—Grab Interaction) Completion Time | 40 | 2.452 | 4 | 0.070 | 14.200 | −1.88 | 30.28 |
| Level 1 2nd Task (Map—Grab & Move Interaction) Completion Time | 22.29 | 4.536 | 4 | 0.011 | 21.110 | 8.19 | 34.03 |
| Level 1 3rd Task (Change Music/TV Ch—Poke Interaction) Completion Time | 27.14 | 1.609 | 4 | 0.183 | 2.060 | −1.50 | 5.62 |
| Level 1 4th Task (Put Jewelry—Grab & Move + Grab & Place Interaction) Completion Time | 16.86 | 3.611 | 4 | 0.023 | 14.740 | 3.41 | 26.07 |
| Level 1 5th Task (Sword & Cut—Grab & Use Interaction) Completion Time | 20 | 3.162 | 4 | 0.034 | 4.000 | 0.49 | 7.51 |
| Level 1 6th Task (Pistol & Shoot—Grab & Move + Grab & Use Interaction) Completion Time | 29.86 | 2.202 | 4 | 0.092 | 11.540 | −3.01 | 26.09 |
| Total Interactions in Level 2 | 9 | 0.121 | 4 | 0.910 | 0.200 | −4.40 | 4.80 |
| Level 2 1st Task (Alphabet Cards—Distance Grab + Pinch Interaction) Completion Time | 38.54 | −7.207 | 4 | 0.002 | −16.340 | −22.63 | −10.05 |
| Level 2 2nd Task (Alphabet Cards—Two-handed, Distance Grab + Pinch + Poke Interaction) Completion Time | 35.93 | 0.311 | 4 | 0.771 | 3.870 | −30.68 | 38.42 |
| Level 2 3rd Task Air Writing (Pinch + Move Interaction) | 72.81 | 1.950 | 4 | 0.123 | 10.130 | −4.29 | 24.55 |
| Level 2 3rd Task Board Writing (Grab or Pinch + Move Interaction) | 91.01 | 1.946 | 4 | 0.124 | 12.650 | −5.40 | 30.70 |
| Level 2 3rd Task Typewriter (Poke Interaction) | 109.19 | 1.951 | 4 | 0.123 | 15.210 | −6.44 | 36.86 |
| External: User required external guidance to complete the task. | 1.14 | 0.300 | 4 | 0.779 | 0.060 | −0.50 | 0.62 |
| Gameplay: User tries to interact with every object. | 2.71 | −5.348 | 4 | 0.006 | −1.310 | −1.99 | −0.63 |
| Level 1 Errors in Grab Interaction | 1.29 | 1.850 | 4 | 0.138 | 1.110 | −0.56 | 2.78 |
| Level 1 Errors in Grab and Move Interaction | 4.71 | 1.096 | 4 | 0.335 | 0.890 | −1.37 | 3.15 |
| Level 1 Errors in Poke Interaction | 4.0 | −1.372 | 4 | 0.242 | −0.800 | −2.42 | 0.82 |
| Level 1 Errors in Grab and Use Sword | 1.71 | −5.348 | 4 | 0.006 | −1.310 | −1.99 | −0.63 |
| Level 1 Errors in Grab and Use Pistol | 2.14 | −1.919 | 4 | 0.127 | −0.940 | −2.30 | 0.42 |
| Level 2 Errors in Distance Grab Interaction | 1.71 | −1.858 | 4 | 0.137 | −0.910 | −2.27 | 0.45 |
| Level 2 Errors in Poke Interaction on Alphabet Card | 0.86 | 2.205 | 4 | 0.092 | 0.540 | −0.14 | 1.22 |
| Level 2 Errors in Pinch and Move Interaction to Write in Air | 0.71 | −1.266 | 4 | 0.274 | −0.310 | −0.99 | 0.37 |
| Level 2 Errors in Grab, Move and Use Interaction to Write on Board | 0.14 | 1.923 | 4 | 0.127 | 0.860 | −0.38 | 2.10 |
| Level 2 Errors in Poke Interaction on Urdu Keyboard | 1.86 | −0.510 | 4 | 0.637 | −0.260 | −1.68 | 1.16 |
| VR Sickness: I feel discomfort. | 1.29 | −0.450 | 4 | 0.676 | −0.090 | −0.65 | 0.47 |
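Every row of Appendix F is a one-sample t-test with 4 df, so each row can be checked from its own numbers: the standard error is SE = MD/t, the interval is MD ± t_crit·SE with t_crit ≈ 2.7764 for 4 df, and the two-tailed p-value follows from the Student's t CDF, which for 4 df has the closed form F(t) = 1/2 + (3/8)·u·(1 − u²/12) with u = t/√(1 + t²/4). The Python sketch below is a consistency check under those textbook formulas, not a reproduction of the authors' SPSS procedure; the function names are illustrative.

```python
import math

# Two-sided 95% critical value of Student's t with 4 df (standard table value).
T_CRIT_95_DF4 = 2.7764


def t_cdf_df4(t: float) -> float:
    """Closed-form CDF of Student's t distribution with 4 degrees of freedom."""
    u = t / math.sqrt(1.0 + t * t / 4.0)
    return 0.5 + 0.375 * u * (1.0 - u * u / 12.0)


def check_row(t: float, mean_diff: float) -> tuple[float, float, float]:
    """Recover (two-tailed p, CI lower, CI upper) from a reported t and mean difference."""
    se = mean_diff / t                      # t = MD / SE, so SE = MD / t
    p_two_tailed = 2.0 * (1.0 - t_cdf_df4(abs(t)))
    half_width = T_CRIT_95_DF4 * se
    return p_two_tailed, mean_diff - half_width, mean_diff + half_width


if __name__ == "__main__":
    # (label, t, mean difference) copied from Appendix F.
    for label, t, md in [
        ("Total Interactions in Level 1", 0.913, 1.460),
        ("Level 1 2nd Task completion time", 4.536, 21.110),
        ("Level 2 1st Task completion time", -7.207, -16.340),
    ]:
        p, lo, hi = check_row(t, md)
        print(f"{label}: p={p:.3f}, 95% CI=[{lo:.2f}, {hi:.2f}]")
```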

Appendix G

| Measure | Test Value | t | df | Sig. (2-tailed) | Mean Difference | 95% CI of the Difference: Lower | 95% CI of the Difference: Upper |
|---|---|---|---|---|---|---|---|
| Total Interactions in Level 1 | 30 | −2.264 | 4 | 0.086 | −4.200 | −9.35 | 0.95 |
| Level 1 1st Task (Object Interaction—Grab Interaction) Completion Time | 48 | −0.649 | 4 | 0.552 | −2.400 | −12.66 | 7.86 |
| Level 1 2nd Task (Map—Grab & Move Interaction) Completion Time | 45 | −0.673 | 4 | 0.538 | −1.600 | −8.21 | 5.01 |
| Level 1 3rd Task (Change Music/TV Ch—Poke Interaction) Completion Time | 31.38 | 1.625 | 4 | 0.180 | 8.020 | −5.68 | 21.72 |
| Level 1 4th Task (Put Jewelry—Grab & Move + Grab & Place Interaction) Completion Time | 25.15 | 6.744 | 4 | 0.003 | 13.250 | 7.80 | 18.70 |
| Level 1 5th Task (Sword & Cut—Grab & Use Interaction) Completion Time | 22.31 | 3.686 | 4 | 0.021 | 12.090 | 2.98 | 21.20 |
| Level 1 6th Task (Pistol & Shoot—Grab & Move + Grab & Use Interaction) Completion Time | 53.46 | 1.283 | 4 | 0.269 | 9.940 | −11.58 | 31.46 |
| Total Interactions in Level 2 | 8.85 | 0.495 | 4 | 0.647 | 1.150 | −5.30 | 7.60 |
| Level 2 1st Task (Alphabet Cards—Distance Grab + Pinch Interaction) Completion Time | 37.40 | −1.564 | 4 | 0.193 | −5.800 | −16.10 | 4.50 |
| Level 2 2nd Task (Alphabet Cards—Two-handed, Distance Grab + Pinch + Poke Interaction) Completion Time | 39.15 | 1.837 | 4 | 0.140 | 29.250 | −14.95 | 73.45 |
| Level 2 3rd Task Air Writing (Pinch + Move Interaction) | 61.89 | 4.860 | 4 | 0.008 | 18.870 | 8.09 | 29.65 |
| Level 2 3rd Task Board Writing (Grab or Pinch + Move Interaction) | 77.36 | 4.839 | 4 | 0.008 | 23.560 | 10.04 | 37.08 |
| Level 2 3rd Task Typewriter (Poke Interaction) | 92.83 | 4.849 | 4 | 0.008 | 28.290 | 12.09 | 44.49 |
| External: User is confident while interacting with the VR. | 4.69 | −1.000 | 4 | 0.374 | −0.490 | −1.85 | 0.87 |
| External: User required external guidance to complete the task. | 1.31 | 2.379 | 4 | 0.076 | 0.890 | −0.15 | 1.93 |
| Gameplay: User tries to interact with every object. | 3.15 | −3.637 | 4 | 0.022 | −1.150 | −2.03 | −0.27 |
| Gameplay: User is following the in-app instructions. | 3.46 | 6.700 | 4 | 0.003 | 1.340 | 0.78 | 1.90 |
| Gameplay: User tried varied poses to interact with the objects. | 2.46 | −3.511 | 4 | 0.025 | −0.860 | −1.54 | −0.18 |
| Level 1 Errors in Grab Interaction | 3 | −0.667 | 4 | 0.541 | −0.400 | −2.07 | 1.27 |
| Level 1 Errors in Grab and Move Interaction | 9.77 | −4.548 | 4 | 0.010 | −4.770 | −7.68 | −1.86 |
| Level 1 Errors in Poke Interaction | 7.85 | −16.169 | 4 | <0.001 | −6.050 | −7.09 | −5.01 |
| Level 1 Errors in Grab and Use Sword | 5.08 | −10.941 | 4 | <0.001 | −2.680 | −3.36 | −2.00 |
| Level 2 Errors in Distance Grab Interaction | 1.08 | 4.704 | 4 | 0.009 | 3.120 | 1.28 | 4.96 |
| Level 2 Errors in Poke Interaction on Alphabet Card | 1.31 | −0.980 | 4 | 0.382 | −0.310 | −1.19 | 0.57 |
| Level 2 Errors in Pinch and Move Interaction to Write in Air | 1.92 | −0.472 | 4 | 0.662 | −0.320 | −2.20 | 1.56 |
| Level 2 Errors in Grab, Move and Use Interaction to Write on Board | 2.46 | 0.275 | 4 | 0.797 | 0.140 | −1.28 | 1.56 |
| Level 2 Errors in Poke Interaction on Urdu Keyboard | 1.92 | −0.800 | 4 | 0.469 | −0.320 | −1.43 | 0.79 |
| VR Sickness: I feel discomfort. | 1.15 | 1.837 | 4 | 0.140 | 0.450 | −0.23 | 1.13 |
| VR Sickness: I feel fatigued. | 1.15 | 1.837 | 4 | 0.140 | 0.450 | −0.23 | 1.13 |
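Because a one-sample t-test compares a group mean with a fixed test value, each row of Appendix G also lets a reader recover descriptive statistics for the tested group: the mean is the test value plus the mean difference, SE = MD/t, and with n = df + 1 = 5 the standard deviation is SE·√n. A standardized effect size d = MD/SD (equal to t/√n) follows from the same numbers; the appendix does not report it, so the Python sketch below treats it as an illustrative extra rather than a result of the study. The function name is hypothetical.

```python
import math


def describe_one_sample(test_value: float, t: float, mean_diff: float, df: int) -> dict[str, float]:
    """Back out descriptive statistics from a reported one-sample t-test row."""
    n = df + 1                       # one-sample t-test: df = n - 1
    se = mean_diff / t               # t = MD / SE
    sd = se * math.sqrt(n)           # SE = SD / sqrt(n)
    return {
        "n": n,
        "mean": test_value + mean_diff,
        "sd": sd,
        "cohens_d": mean_diff / sd,  # algebraically equal to t / sqrt(n)
    }


if __name__ == "__main__":
    # Level 1 4th Task (Put Jewelry) completion time, copied from Appendix G.
    stats = describe_one_sample(test_value=25.15, t=6.744, mean_diff=13.250, df=4)
    for key, value in stats.items():
        print(f"{key}: {value:.3f}")
```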

Appendix H

| Measure | Chi-Square | df | Asymp. Sig. |
|---|---|---|---|
| Total Interactions in Level 1 | 0.048 | 2 | 0.976 |
| Level 1 1st Task (Object Interaction—Grab Interaction) Completion Time | 2.091 | 2 | 0.351 |
| Level 1 2nd Task (Map—Grab & Move Interaction) Completion Time | 4.960 | 2 | 0.084 |
| Level 1 3rd Task (Change Music/TV Ch—Poke Interaction) Completion Time | 1.636 | 2 | 0.441 |
| Level 1 4th Task (Put Jewelry—Grab & Move + Grab & Place Interaction) Completion Time | 1.729 | 2 | 0.421 |
| Level 1 5th Task (Sword & Cut—Grab & Use Interaction) Completion Time | 1.401 | 2 | 0.496 |
| Level 1 6th Task (Pistol & Shoot—Grab & Move + Grab & Use Interaction) Completion Time | 0.273 | 2 | 0.873 |
| Total Interactions in Level 2 | 1.132 | 2 | 0.568 |
| Level 2 1st Task (Alphabet Cards—Distance Grab + Pinch Interaction) Completion Time | 0.021 | 2 | 0.990 |
| Level 2 2nd Task (Alphabet Cards—Two-handed, Distance Grab + Pinch + Poke Interaction) Completion Time | 0.491 | 2 | 0.782 |
| Level 2 3rd Task Air Writing (Pinch + Move Interaction) | 2.106 | 2 | 0.349 |
| Level 2 3rd Task Board Writing (Grab or Pinch + Move Interaction) | 2.106 | 2 | 0.349 |
| Level 2 3rd Task Typewriter (Poke Interaction) | 2.106 | 2 | 0.349 |
| External: User is confident while interacting with the VR. | 0.563 | 2 | 0.755 |
| External: User required external guidance to complete the task. | 1.953 | 2 | 0.377 |
| Gameplay: User tries to interact with every object. | 2.025 | 2 | 0.363 |
| Gameplay: User is following the in-app instructions. | 4.000 | 2 | 0.135 |
| Gameplay: User tried varied poses to interact with the objects. | 1.500 | 2 | 0.472 |
| Level 1 Errors in Grab Interaction | 3.309 | 2 | 0.191 |
| Level 1 Errors in Grab and Move Interaction | 1.174 | 2 | 0.556 |
| Level 1 Errors in Poke Interaction | 2.559 | 2 | 0.278 |
| Level 1 Errors in Grab and Use Sword | 0.109 | 2 | 0.947 |
| Level 1 Errors in Grab and Use Pistol | 1.969 | 2 | 0.374 |
| Level 2 Errors in Distance Grab Interaction | 0.519 | 2 | 0.771 |
| Level 2 Errors in Poke Interaction on Alphabet Card | 0.375 | 2 | 0.829 |
| Level 2 Errors in Pinch and Move Interaction to Write in Air | 2.030 | 2 | 0.362 |
| Level 2 Errors in Grab, Move and Use Interaction to Write on Board | 1.047 | 2 | 0.593 |
| Level 2 Errors in Poke Interaction on Urdu Keyboard | 0.300 | 2 | 0.861 |
| VR Sickness: I feel discomfort. | 0.563 | 2 | 0.755 |
| VR Sickness: I feel fatigued. | 0.429 | 2 | 0.807 |
| VR Sickness: User is in postural sway while standing. | 0.000 | 2 | 1.000 |
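Appendix H lists one chi-square statistic with 2 df per measure, in the layout SPSS uses for a Kruskal–Wallis H test across the three user groups (our reading; the appendix itself does not name the test). For 2 df the chi-square upper-tail probability reduces to exp(−H/2), so the Asymp. Sig. column can be checked without a statistics library, as in this Python sketch with illustrative names. Small last-digit differences (e.g., 0.352 versus the printed 0.351 for H = 2.091) reflect rounding of the printed statistic.

```python
import math


def chi2_sf_df2(h: float) -> float:
    """Upper-tail probability P(X >= h) for a chi-square variable with 2 df."""
    return math.exp(-h / 2.0)


# (measure, chi-square statistic) copied from Appendix H.
ROWS = [
    ("Total Interactions in Level 1", 0.048),
    ("Level 1 2nd Task completion time", 4.960),
    ("Gameplay: User is following the in-app instructions.", 4.000),
    ("VR Sickness: User is in postural sway while standing.", 0.000),
]

if __name__ == "__main__":
    for measure, h in ROWS:
        print(f"{measure}: Asymp. Sig. = {chi2_sf_df2(h):.3f}")
```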

1. https://www.oculus.com/ (accessed on 22 September 2022).
2. https://www.vive.com (accessed on 22 September 2022).
3. https://www.unity.com (accessed on 22 September 2022).
4. https://developer.oculus.com/documentation/unity/unity-isdk-interaction-sdk-overview (accessed on 22 September 2022).
5. https://www.blender.org (accessed on 3 October 2022).
6. https://www.gimp.org (accessed on 3 October 2022).
7. https://www.audacityteam.org (accessed on 3 October 2022).
8. https://assetstore.unity.com (accessed on 3 October 2022).
9. https://quixel.com/megascans (accessed on 3 October 2022).
10. https://www.meta.com/quest/products/quest-2/ (accessed on 3 October 2022).

References

- UNESCO-UIS. Literacy. Available online: http://uis.unesco.org/en/topic/literacy (accessed on 7 February 2021).

- Lal, B.S. The Economic and Social Cost of Illiteracy: An Overview. Int. J. Adv. Res. Innov. Ideas Educ. 2015, 1, 663–670.

- Literate Pakistan Foundation. Aagahi Adult Literacy Programme, Pakistan. Available online: https://uil.unesco.org/case-study/effective-practices-database-litbase-0/aagahi-adult-literacy-programme-pakistan (accessed on 2 July 2021).

- UIL. National Literacy Programme, Pakistan. Available online: https://uil.unesco.org/case-study/effective-practices-database-litbase-0/national-literacy-programme-pakistan (accessed on 2 July 2021).

- Iqbal, T.; Hammermüller, K.; Nussbaumer, A.; Tjoa, A.M. Towards Using Second Life for Supporting Illiterate Persons in Learning. In Proceedings of the World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 2009, Vancouver, BC, Canada, 26–30 October 2009; pp. 2704–2709.

- Iqbal, T.; Iqbal, S.; Hussain, S.S.; Khan, I.A.; Khan, H.U.; Rehman, A. Fighting Adult Illiteracy with the Help of the Environmental Print Material. PLoS ONE 2018, 13, e0201902.

- Ur-Rehman, I.; Shamim, A.; Khan, T.A.; Elahi, M.; Mohsin, S. Mobile Based User-Centered Learning Environment for Adult Absolute Illiterates. Mob. Inf. Syst. 2016, 2016, 1841287.

- Knowles, M. The Adult Learner: A Neglected Species, 3rd ed.; Gulf Publishing: Houston, TX, USA, 1984.

- Pereira, A.; Ortiz, K.Z. Language Skills Differences between Adults without Formal Education and Low Formal Education. Psicol. Reflexão Crítica 2022, 35, 4.

- van Linden, S.; Cremers, A.H.M. Cognitive Abilities of Functionally Illiterate Persons Relevant to ICT Use. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2008; Volume 5105 LNCS, pp. 705–712.

- Katre, D.S. Unorganized Cognitive Structures of Illiterate as the Key Factor in Rural E-Learning Design. J. Educ. 2006, 2, 67–71.

- ISO (International Organization for Standardization). Ergonomics of Human-System Interaction—Part 11: Usability: Definitions and Concepts. Available online: https://www.iso.org/standard/63500.html (accessed on 18 December 2022).

- Bartneck, C.; Forlizzi, J. A Design-Centred Framework for Social Human-Robot Interaction. In Proceedings of the RO-MAN 2004, 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No.04TH8759), Kurashiki, Japan, 22 September 2004; IEEE: New York, NY, USA, 2004; pp. 591–594.

- Lalji, Z.; Good, J. Designing New Technologies for Illiterate Populations: A Study in Mobile Phone Interface Design. Interact. Comput. 2008, 20, 574–586.

- Carvalho, M.B. Designing for Low-Literacy Users: A Framework for Analysis of User-Centred Design Methods. Master’s Thesis, Tampere University, Tampere, Finland, 2011.

- Taoufik, I.; Kabaili, H.; Kettani, D. Designing an E-Government Portal Accessible to Illiterate Citizens. In Proceedings of the 1st International Conference on Theory and Practice of Electronic Governance—ICEGOV’07, Macau, China, 10–13 December 2007; p. 327.

- Friscira, E.; Knoche, H.; Huang, J. Getting in Touch with Text: Designing a Mobile Phone Application for Illiterate Users to Harness SMS. In Proceedings of the 2nd ACM Symposium on Computing for Development—ACM DEV’12, Atlanta, GA, USA, 11–12 March 2012; p. 1.

- Huenerfauth, M.P. Design Approaches for Developing User-Interfaces Accessible to Illiterate Users. Master’s Thesis, University College Dublin, Dublin, Ireland, 2002.

- Rashid, S.; Khattak, A.; Ashiq, M.; Ur Rehman, S.; Rashid Rasool, M. Educational Landscape of Virtual Reality in Higher Education: Bibliometric Evidences of Publishing Patterns and Emerging Trends. Publications 2021, 9, 17.

- Huettig, F.; Mishra, R.K. How Literacy Acquisition Affects the Illiterate Mind—A Critical Examination of Theories and Evidence. Lang. Linguist. Compass 2014, 8, 401–427.

- Steuer, J. Defining Virtual Reality: Dimensions Determining Telepresence. J. Commun. 1992, 42, 73–93.

- Slater, M. Immersion and the Illusion of Presence in Virtual Reality. Br. J. Psychol. 2018, 109, 431–433.

- Zube, E.H. Environmental Perception. In Environmental Geology; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999; pp. 214–216.

- Ohno, R. A Hypothetical Model of Environmental Perception. In Theoretical Perspectives in Environment-Behavior Research; Springer: Boston, MA, USA, 2000; pp. 149–156.

- Wilson, G.I.; Holton, M.D.; Walker, J.; Jones, M.W.; Grundy, E.; Davies, I.M.; Clarke, D.; Luckman, A.; Russill, N.; Wilson, V.; et al. A New Perspective on How Humans Assess Their Surroundings; Derivation of Head Orientation and Its Role in ‘Framing’ the Environment. PeerJ 2015, 3, e908.

- Slater, M.; Sanchez-Vives, M.V. Enhancing Our Lives with Immersive Virtual Reality. Front. Robot. AI 2016, 3, 74.

- Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience; Harper & Row: New York, NY, USA, 2008.

- Csikszentmihalyi, M.; LeFevre, J. Optimal Experience in Work and Leisure. J. Pers. Soc. Psychol. 1989, 56, 815–822.

- Alexiou, A.; Schippers, M.; Oshri, I. Positive Psychology and Digital Games: The Role of Emotions and Psychological Flow in Serious Games Development. Psychology 2012, 3, 1243–1247.

- Ruvimova, A.; Kim, J.; Fritz, T.; Hancock, M.; Shepherd, D.C. “Transport Me Away”: Fostering Flow in Open Offices through Virtual Reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

- Jennett, C.; Cox, A.L.; Cairns, P.; Dhoparee, S.; Epps, A.; Tijs, T.; Walton, A. Measuring and Defining the Experience of Immersion in Games. Int. J. Hum. Comput. Stud. 2008, 66, 641–661.

- Kim, D.; Ko, Y.J. The Impact of Virtual Reality (VR) Technology on Sport Spectators’ Flow Experience and Satisfaction. Comput. Hum. Behav. 2019, 93, 346–356.

- Kiili, K. Content Creation Challenges and Flow Experience in Educational Games: The IT-Emperor Case. Internet High. Educ. 2005, 8, 183–198.

- de Regt, A.; Barnes, S.J.; Plangger, K. The Virtual Reality Value Chain. Bus. Horiz. 2020, 63, 737–748.

- Bodzin, A.; Robson, A., Jr.; Hammond, T.; Anastasio, D. Investigating Engagement and Flow with a Placed-Based Immersive Virtual Reality Game. J. Sci. Educ. Technol. 2020, 30, 347–360.

- Csikszentmihalyi, M. Flow and Education. In Applications of Flow in Human Development and Education; Springer: Dordrecht, The Netherlands, 2014; pp. 129–151.

- Chang, E.; Kim, H.T.; Yoo, B. Virtual Reality Sickness: A Review of Causes and Measurements. Int. J. Hum. Comput. Interact. 2020, 36, 1658–1682.

- Bown, J.; White, E.; Boopalan, A. Looking for the Ultimate Display. In Boundaries of Self and Reality Online; Elsevier: Amsterdam, The Netherlands, 2017; pp. 239–259.

- Meta Store. Apps That Support Hand Tracking on Meta Quest Headsets. Available online: https://www.meta.com/help/quest/articles/headsets-and-accessories/controllers-and-hand-tracking/#hand-tracking (accessed on 12 December 2022).

- Seibert, J.; Shafer, D.M. Control Mapping in Virtual Reality: Effects on Spatial Presence and Controller Naturalness. Virtual Real. 2018, 22, 79–88.

- Masurovsky, A.; Chojecki, P.; Runde, D.; Lafci, M.; Przewozny, D.; Gaebler, M. Controller-Free Hand Tracking for Grab-and-Place Tasks in Immersive Virtual Reality: Design Elements and Their Empirical Study. Multimodal Technol. Interact. 2020, 4, 91.

- Benda, B.; Esmaeili, S.; Ragan, E.D. Determining Detection Thresholds for Fixed Positional Offsets for Virtual Hand Remapping in Virtual Reality. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Porto de Galinhas, Brazil, 9–13 November 2020; IEEE: New York, NY, USA, 2020; pp. 269–278.

- Wang, A.; Thompson, M.; Uz-Bilgin, C.; Klopfer, E. Authenticity, Interactivity, and Collaboration in Virtual Reality Games: Best Practices and Lessons Learned. Front. Virtual Real. 2021, 2, 734083.

- Nanjappan, V.; Liang, H.N.; Lu, F.; Papangelis, K.; Yue, Y.; Man, K.L. User-Elicited Dual-Hand Interactions for Manipulating 3D Objects in Virtual Reality Environments. Hum.-Cent. Comput. Inf. Sci. 2018, 8, 31.

- Hemmerich, W.; Keshavarz, B.; Hecht, H. Visually Induced Motion Sickness on the Horizon. Front. Virtual Real. 2020, 1, 582095.

- Yardley, L. Orientation Perception, Motion Sickness and Vertigo: Beyond the Sensory Conflict Approach. Br. J. Audiol. 1991, 25, 405–413.

- Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator Sickness Questionnaire: An Enhanced Method for Quantifying Simulator Sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

- Kwon, C. Verification of the Possibility and Effectiveness of Experiential Learning Using HMD-Based Immersive VR Technologies. Virtual Real. 2019, 23, 101–118.

- Ghosh, K.; Parikh, T.S.; Chavan, A.L. Design Considerations for a Financial Management System for Rural, Semi-Literate Users. In Proceedings of the CHI ’03 Extended Abstracts on Human Factors in Computing Systems, Fort Lauderdale, FL, USA, 5–10 April 2003; p. 824.

- Huenerfauth, M.P. Developing Design Recommendations for Computer Interfaces Accessible to Illiterate Users. Master’s Thesis, University College Dublin, Dublin, Ireland, 2002.

- Parikh, T.; Ghosh, K.; Chavan, A. Design Studies for a Financial Management System for Micro-Credit Groups in Rural India. ACM SIGCAPH Comput. Phys. Handicap. 2003, 73–74, 15–22.

- Zaman, S.K.U.; Khan, I.A.; Hussain, S.S.; Iqbal, T.; Shuja, J.; Ahmed, S.F.; Jararweh, Y.; Ko, K. PreDiKT-OnOff: A Complex Adaptive Approach to Study the Impact of Digital Social Networks on Pakistani Students’ Personal and Social Life. Concurr. Comput. 2020, 32, e5121.

- Medhi Thies, I. User Interface Design for Low-Literate and Novice Users: Past, Present and Future. Found. Trends® Hum.–Comput. Interact. 2015, 8, 1–72.

- Rasmussen, M.K.; Pedersen, E.W.; Petersen, M.G.; Hornbæk, K. Shape-Changing Interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; ACM: New York, NY, USA, 2012; pp. 735–744.

- Saba, T. Intelligent Game-Based Learning: An Effective Learning Model Approach. Int. J. Comput. Appl. Technol. 2020, 64, 208.

- Wade, S.; Kidd, C. The Role of Prior Knowledge and Curiosity in Learning. Psychon. Bull. Rev. 2019, 26, 1377–1387.

- Ali, W.; Riaz, O.; Mumtaz, S.; Khan, A.R.; Saba, T.; Bahaj, S.A. Mobile Application Usability Evaluation: A Study Based on Demography. IEEE Access 2022, 10, 41512–41524.

- El-Dakhs, D.A.S.; Altarriba, J. How Do Emotion Word Type and Valence Influence Language Processing? The Case of Arabic–English Bilinguals. J. Psycholinguist. Res. 2019, 48, 1063–1085.

- Tyng, C.M.; Amin, H.U.; Saad, M.N.M.; Malik, A.S. The Influences of Emotion on Learning and Memory. Front. Psychol. 2017, 8, 1454.

- Kern, A.C.; Ellermeier, W. Audio in VR: Effects of a Soundscape and Movement-Triggered Step Sounds on Presence. Front. Robot. AI 2020, 7, 20.

- Stockton, B.A.G. How Color Coding Formulaic Writing Enhances Organization: A Qualitative Approach for Measuring Student Affect. Master’s Thesis, Humphreys College, Stockton, CA, USA, 2014.

- Itaguchi, Y.; Yamada, C.; Yoshihara, M.; Fukuzawa, K. Writing in the Air: A Visualization Tool for Written Languages. PLoS ONE 2017, 12, e0178735.

- Brooke, J. SUS: A “Quick and Dirty” Usability Scale, 1st ed.; Taylor & Francis: Abingdon, UK, 1996.

- Kamińska, D.; Zwoliński, G.; Laska-Leśniewicz, A. Usability Testing of Virtual Reality Applications—The Pilot Study. Sensors 2022, 22, 1342.

- Khundam, C.; Vorachart, V.; Preeyawongsakul, P.; Hosap, W.; Noël, F. A Comparative Study of Interaction Time and Usability of Using Controllers and Hand Tracking in Virtual Reality Training. Informatics 2021, 8, 60.

| Modality / Interaction Type | Grab | Pinch | Poke | Move | Haptics |
|---|---|---|---|---|---|
| Motion Controllers | Press the grip button. | Press the trigger button. | Press the grip button and point the index finger. | The controllers track translation and rotation. | Feedback on interaction |
| Hand Tracking | Make a grip. | Join the index finger and thumb. | Make a grip and point the index finger. | The HMD tracks translation and rotation. | None |
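
The hand-tracking row above defines each interaction type by hand shape. Purely as an illustration, the following sketch shows how those shapes could be told apart from fingertip positions using distance thresholds. The `HandFrame` structure, the chosen joints, and both threshold values are hypothetical assumptions, not the prototype's implementation; a real system would read joint positions from the headset's hand-tracking runtime and tune thresholds per device.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres

# Hypothetical thresholds; real values would need tuning per headset and user.
PINCH_THRESHOLD_M = 0.02  # thumb tip and index tip closer than this count as "joined"
CURL_THRESHOLD_M = 0.05   # a fingertip closer than this to the palm counts as curled


@dataclass
class HandFrame:
    """Hypothetical snapshot of one tracked hand: palm centre and fingertip positions."""
    palm: Point
    thumb_tip: Point
    index_tip: Point
    middle_tip: Point
    ring_tip: Point
    pinky_tip: Point


def classify_pose(hand: HandFrame) -> str:
    """Return 'grab', 'poke', 'pinch', or 'none' for one frame of hand data."""

    def curled(tip: Point) -> bool:
        # A fingertip near the palm centre is treated as folded into a grip.
        return math.dist(tip, hand.palm) < CURL_THRESHOLD_M

    outer_curled = all(curled(t) for t in (hand.middle_tip, hand.ring_tip, hand.pinky_tip))
    if outer_curled:
        # Grip: a full fist is a grab; a fist with the index finger extended is a poke.
        return "grab" if curled(hand.index_tip) else "poke"
    if math.dist(hand.thumb_tip, hand.index_tip) < PINCH_THRESHOLD_M:
        return "pinch"  # index finger and thumb joined
    return "none"
```

Checking the three outer fingers first keeps a closed fist from being misread as a pinch when the thumb happens to rest near the index fingertip.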

| # | Task | Interactions |
|---|---|---|
| 1 | Grab the objects; two of them are required for the next task. | Grab and/or pinch |
| 2 | Grab the blue object on the map and move it to the appropriate province. | Grab and move (translate) |
| 3 | Press the buttons on the left of the table to change the music and/or those on the right to change the TV channel. | Poke |
| 4 | Open the black drawer of the small cupboard and put the bracelet inside. | Grab and move (translate); grab and place |
| 5 | Grab the sword on the pedestal and cut the hay sticks. | Grab, move (translate and rotate), and use |
| 6 | Open the red box in the big cupboard, grab the pistol inside, and shoot the target. | Grab and move (rotate); grab, move, and use |

| # | Task | Interactions |
|---|---|---|
| 1 | Grab the alphabet card from the alphabet board by pointing your palm toward the board and pinching or grabbing it. | Distance grab and pinch |
| 2 | Press the button on the alphabet card to watch an informative video about the letter (Task 1 must be completed first). | Poke |
| 3 | Use all three practice modes for writing the alphabet: air writing, board writing, and the Urdu keyboard. | Pinch and move, grab and move, poke |

| Id | Question (English) | Question (Urdu) |
|---|---|---|
| 1 | I think that I would like to use this system frequently. | مجھے لگتا ہے کہ میں اس سسٹم کو کثرت سے استعمال کرنا چاہوں گا۔ |
| 2 | I found the system unnecessarily complex. | میں نے سسٹم کو غیر ضروری طور پر پیچیدہ پایا۔ |
| 3 | I thought the system was easy to use. | میں نے سوچا تھا کہ سسٹم استعمال کرنا آسان ہوگا۔ |
| 4 | I think that I would need the support of a technical person to be able to use this system. | میں سمجھتا ہوں کہ اس سسٹم کو استعمال کرنے کے لیے مجھے کسی تکنیکی شخص کی مدد کی ضرورت ہوگی۔ |
| 5 | I found the various functions in this system were well integrated. | میں نے پایا کہ اس سسٹم میں مختلف طریقے اچھی طرح سے ضم تھے۔ |
| 6 | I thought there was too much inconsistency in this system. | میں نے سوچا کہ اس سسٹم میں بہت زیادہ عدم مطابقت ہے۔ |
| 7 | I would imagine that most people would learn to use this system very quickly. | میں تصور کرتا ہوں کہ زیادہ تر لوگ اس نظام کو بہت جلد استعمال کرنا سیکھ لیں گے۔ |
| 8 | I found the system very cumbersome to use. | میں نے سسٹم کو استعمال کرنے میں اپنے آپ کو بہت بوجھل پایا۔ |
| 9 | I felt very confident using the system. | میں نے سسٹم کا استعمال کرتے ہوئے بہت پر اعتماد محسوس کیا۔ |
| 10 | I needed to learn a lot of things before I could get going with this system. | اس سسٹم استعمال کرنے سے پہلے مجھے بہت سی چیزیں سیکھنے کی ضرورت تھی۔ |
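
The ten items above are the standard System Usability Scale (SUS) of Brooke (1996), presented with Urdu translations. The table does not restate how responses become a score, so the sketch below shows the conventional SUS computation, assuming each response is recorded on the usual 1 to 5 agreement scale in questionnaire order. Odd-numbered items are positively worded and contribute (response − 1); even-numbered items are negatively worded and contribute (5 − response); the 0 to 40 sum is multiplied by 2.5 to give a 0 to 100 score. The function name `sus_score` is illustrative only.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a System Usability Scale score (0-100) from ten 1-5 Likert responses.

    responses[0] is item 1, responses[9] is item 10 (questionnaire order).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be on the 1-5 scale")

    total = 0
    for item_number, r in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += r - 1  # positively worded item: "strongly agree" (5) contributes 4
        else:
            total += 5 - r  # negatively worded item: "strongly disagree" (1) contributes 4
    return total * 2.5      # scale the 0-40 sum to 0-100
```

For example, a participant who answers 4 on every odd item and 2 on every even item scores 75.0, and a participant who answers 3 throughout scores 50.0.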

| Variable | Level 1 Id | Level 1 Measure | Level 2 Id | Level 2 Measure |
|---|---|---|---|---|
| Effectiveness | 1 | Total interactions in Level 1 | 1 | The user was confident while interacting in VR. |
| | 2 | The user was confident while interacting in VR. | 2 | The user required external help. |
| | 3 | The user required external help. | 3 | The user followed in-app instructions. |
| | 4 | The user followed in-app instructions. | 4 | The user tried varied poses for interaction. |
| | 5 | The user tried varied poses for interaction. | 5 | The user tried to interact with every object. |
| | 6 | The user tried to interact with every object. | 6 | Total interactions in Level 2 |
| Efficiency | 1 | 1st task completion time | 1 | 1st task completion time |
| | 2 | 2nd task completion time | 2 | 2nd task completion time |
| | 3 | 3rd task completion time | 3 | 3rd-a task completion time |
| | 4 | 4th task completion time | 4 | 3rd-b task completion time |
| | 5 | 5th task completion time | 5 | 3rd-c task completion time |