Biases in Stakeholder Elicitation as a Precursor to the Systems Architecting Process

Abstract

1. Introduction

1.1. Heuristics

1.2. Stakeholders and Cognitive Bias

1.3. Systems Architecture

1.4. Research Contribution

2. Materials and Methods

Systems Architecture Workshop

3. Results and Discussion

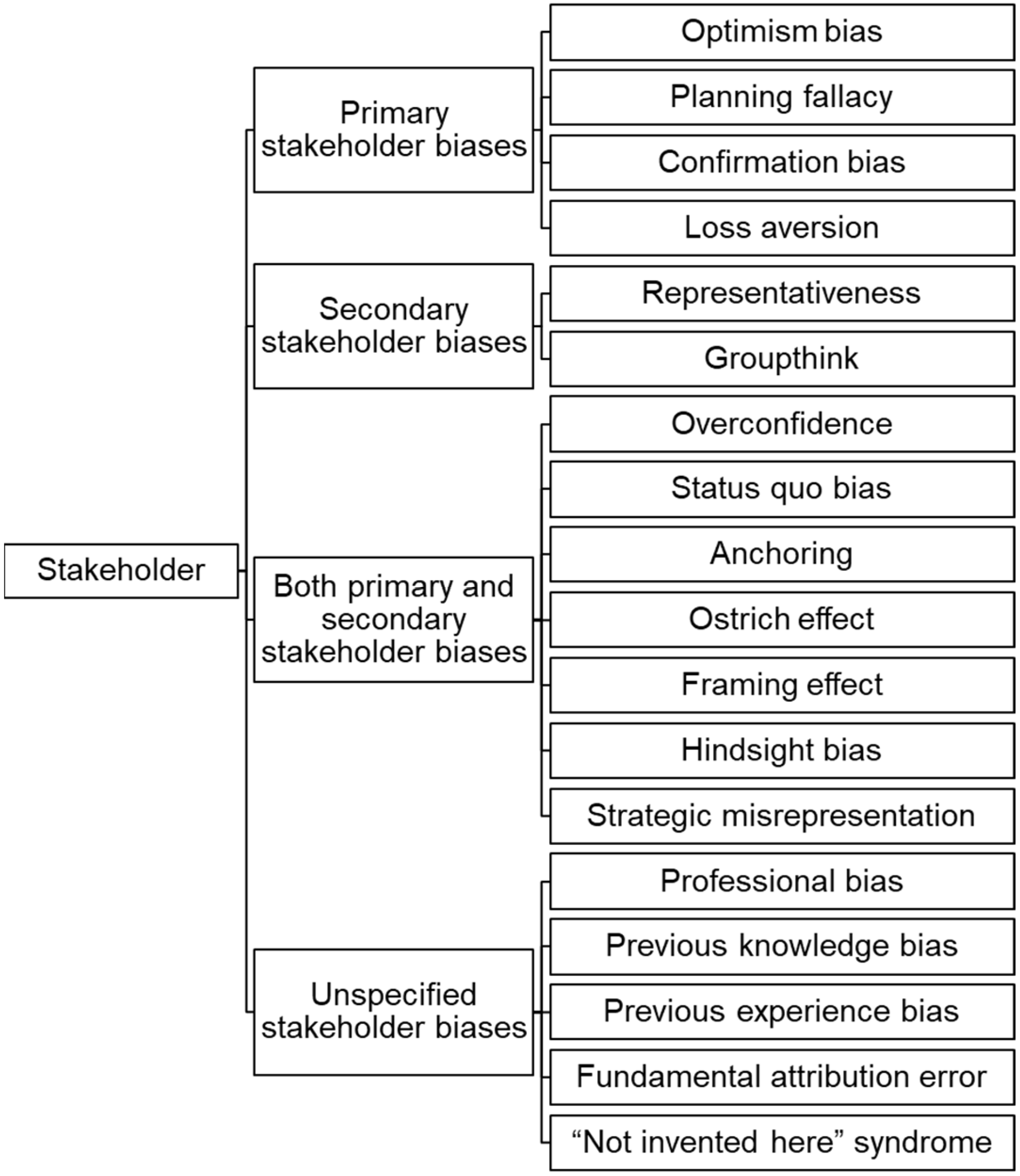

3.1. General Stakeholder Biases

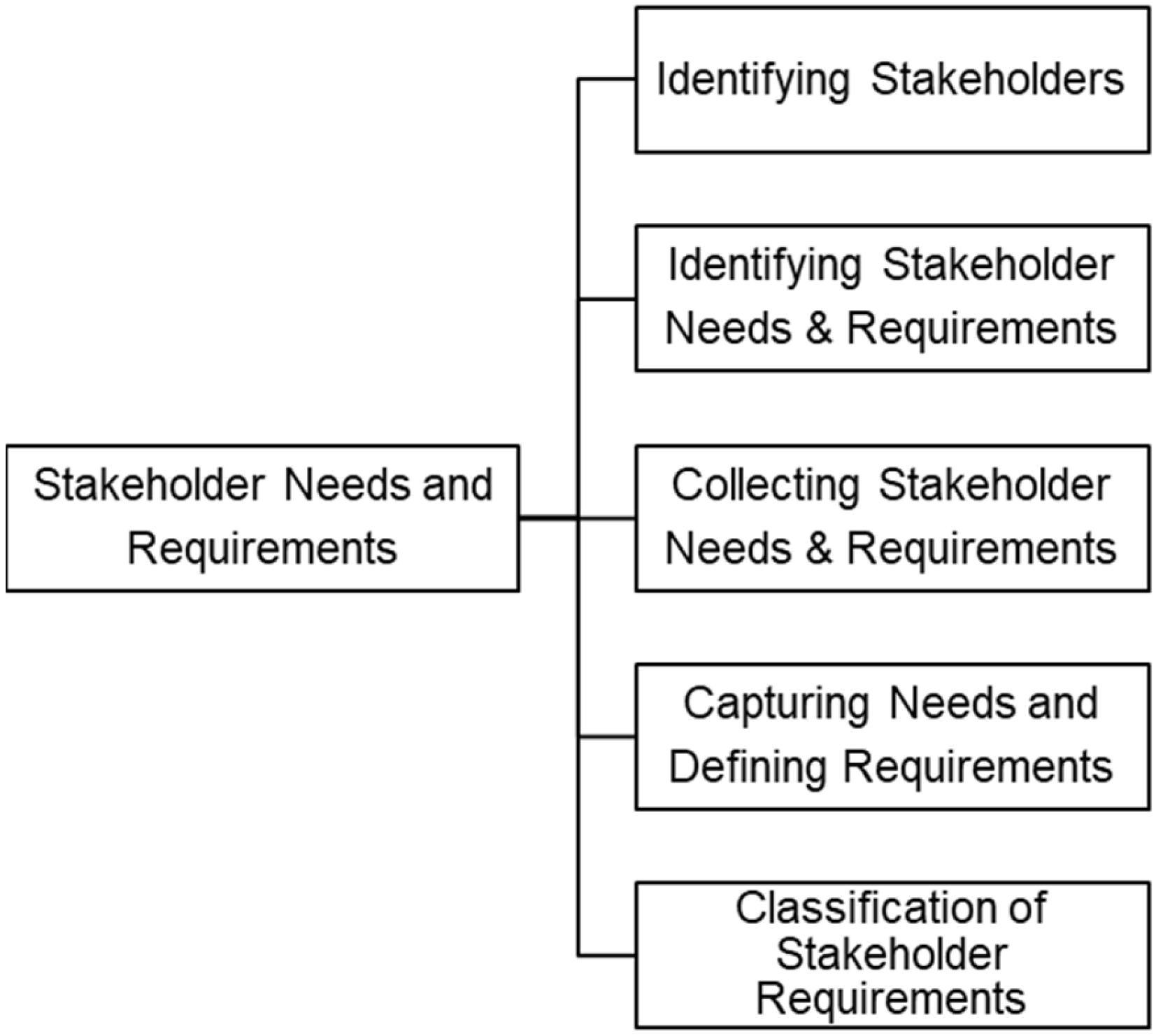

3.2. Stakeholder Needs and Requirements

3.2.1. Identifying Stakeholders

3.2.2. Identifying Stakeholder Needs and Requirements

3.2.3. Collecting Stakeholder Needs and Requirements

3.2.4. Capturing Needs and Defining Requirements

3.2.5. Classification of Stakeholder Requirements

3.3. Biases in Stakeholder Elicitation

3.3.1. Identifying Stakeholders

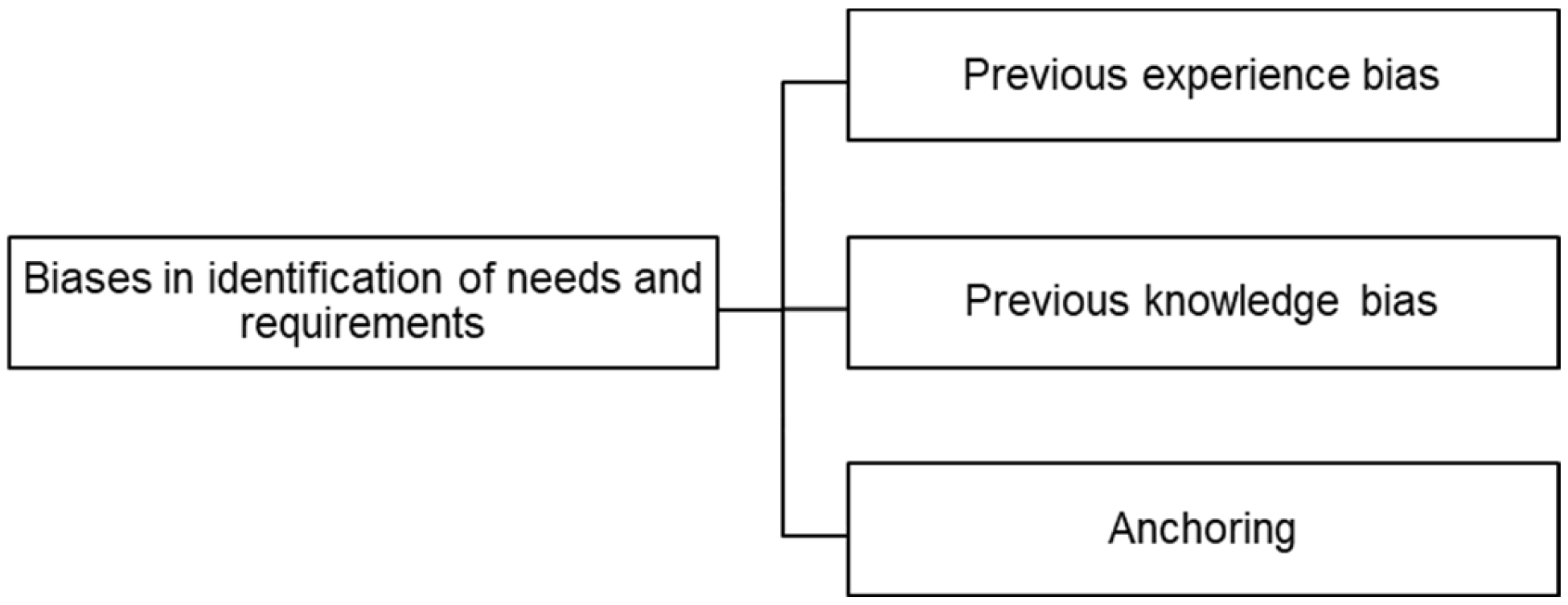

3.3.2. Identifying Stakeholder Needs and Requirements

3.3.3. Collecting Stakeholder Needs and Requirements

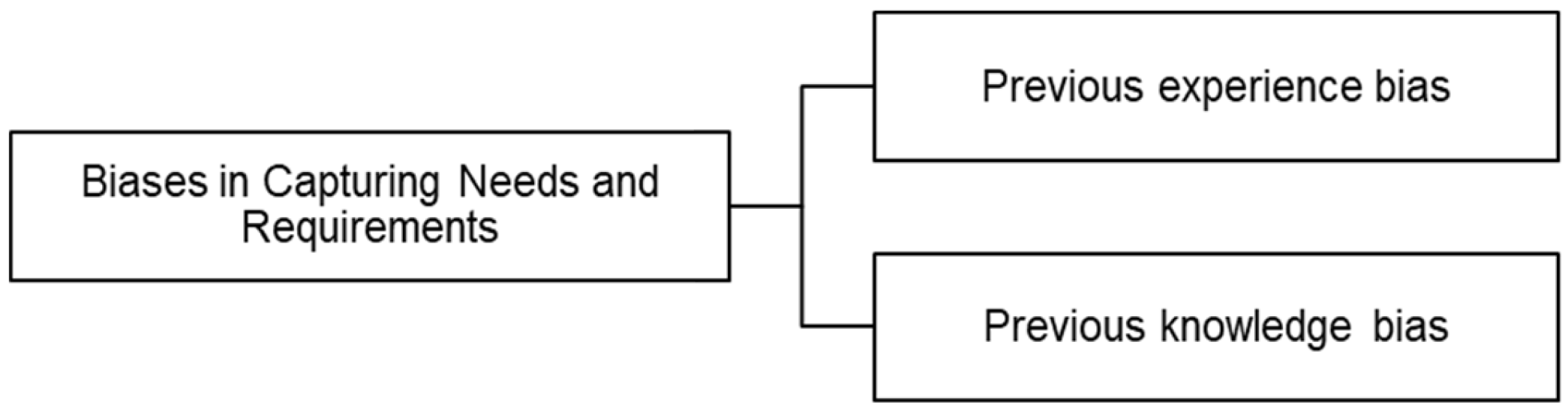

3.3.4. Capturing Needs and Defining Requirements

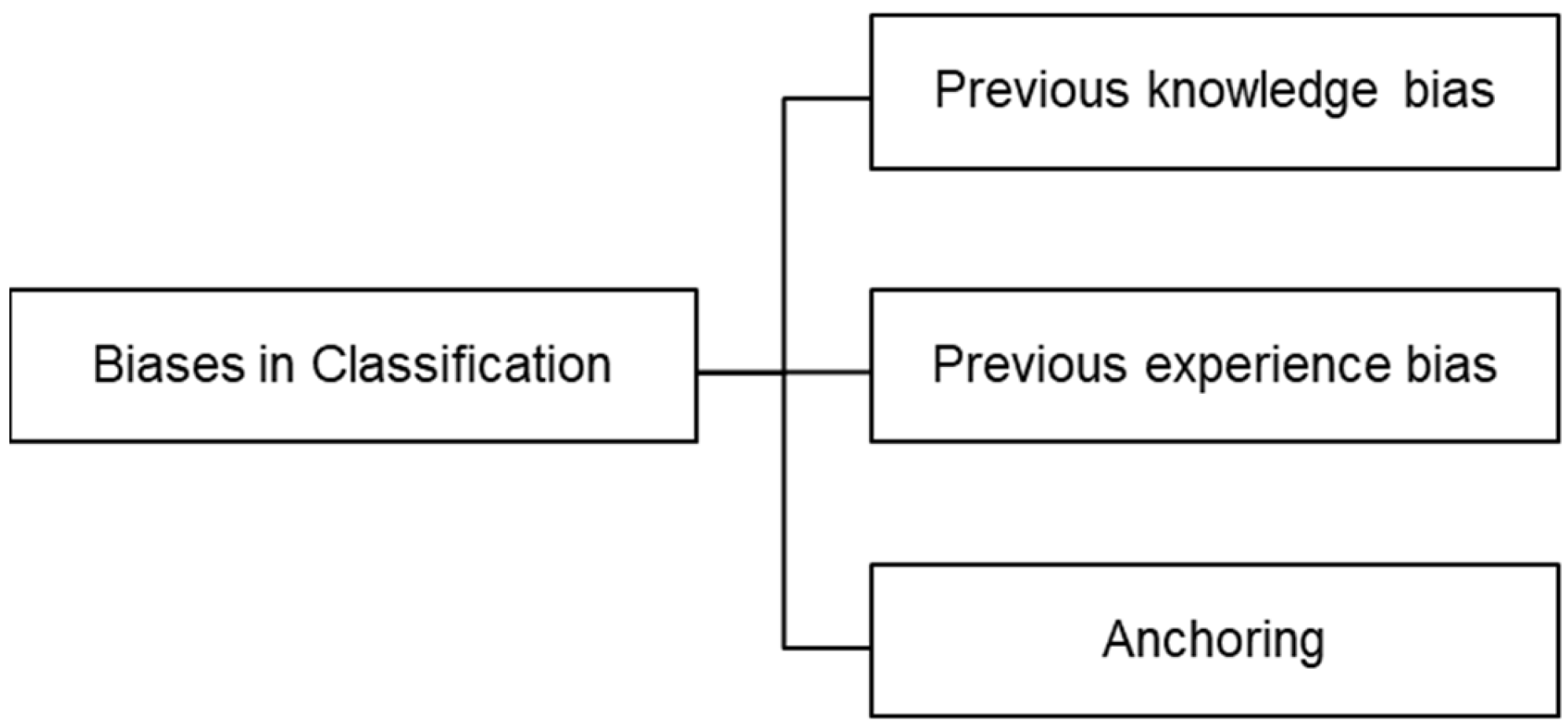

3.3.5. Classification of Stakeholder Requirements

3.4. Systems Architecture Workshop

3.4.1. Stakeholder Identification and Selection

3.4.2. Identifying Stakeholder Needs and Requirements

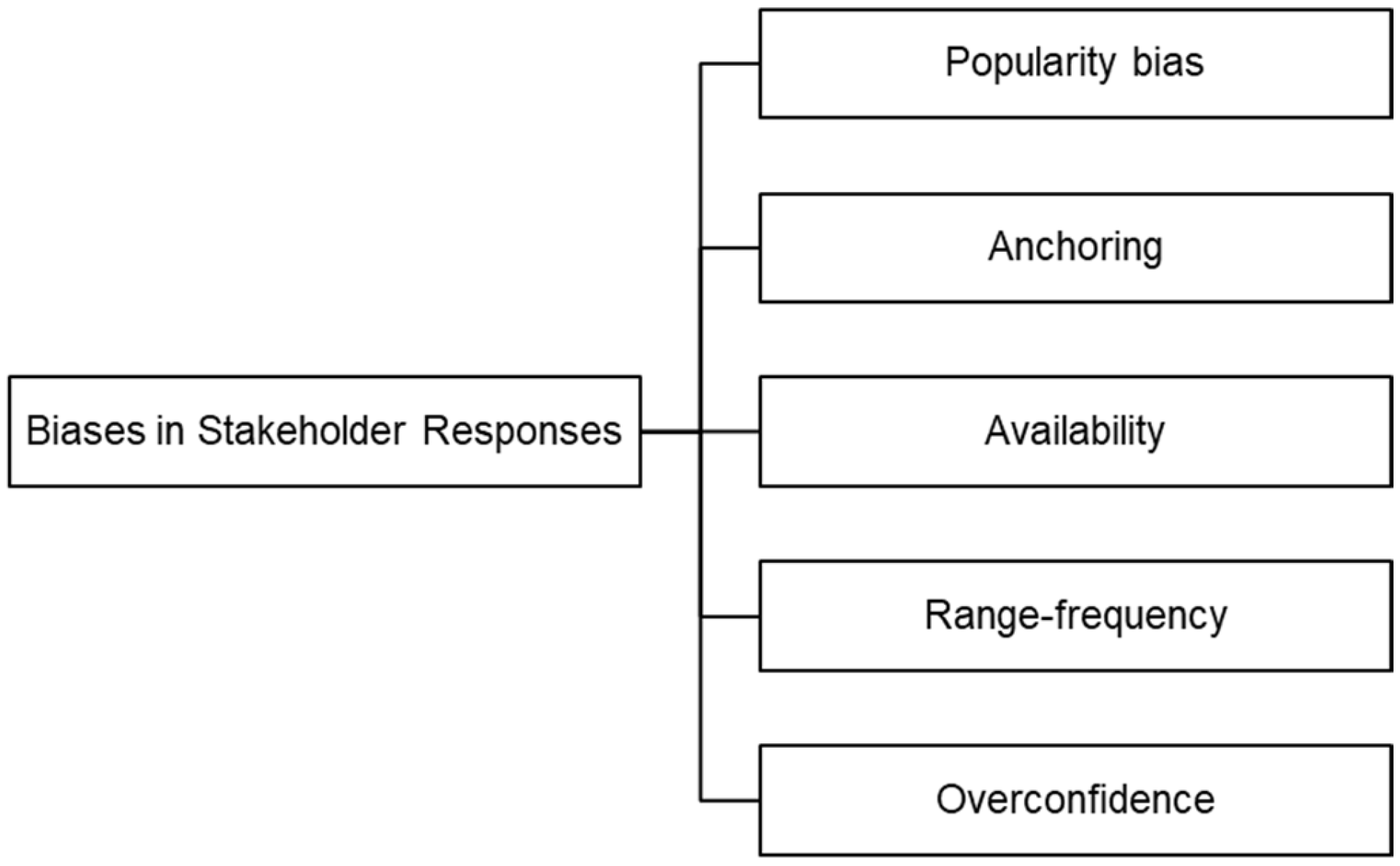

3.4.3. Collecting Stakeholder Needs and Requirements: Stakeholder Response Biases

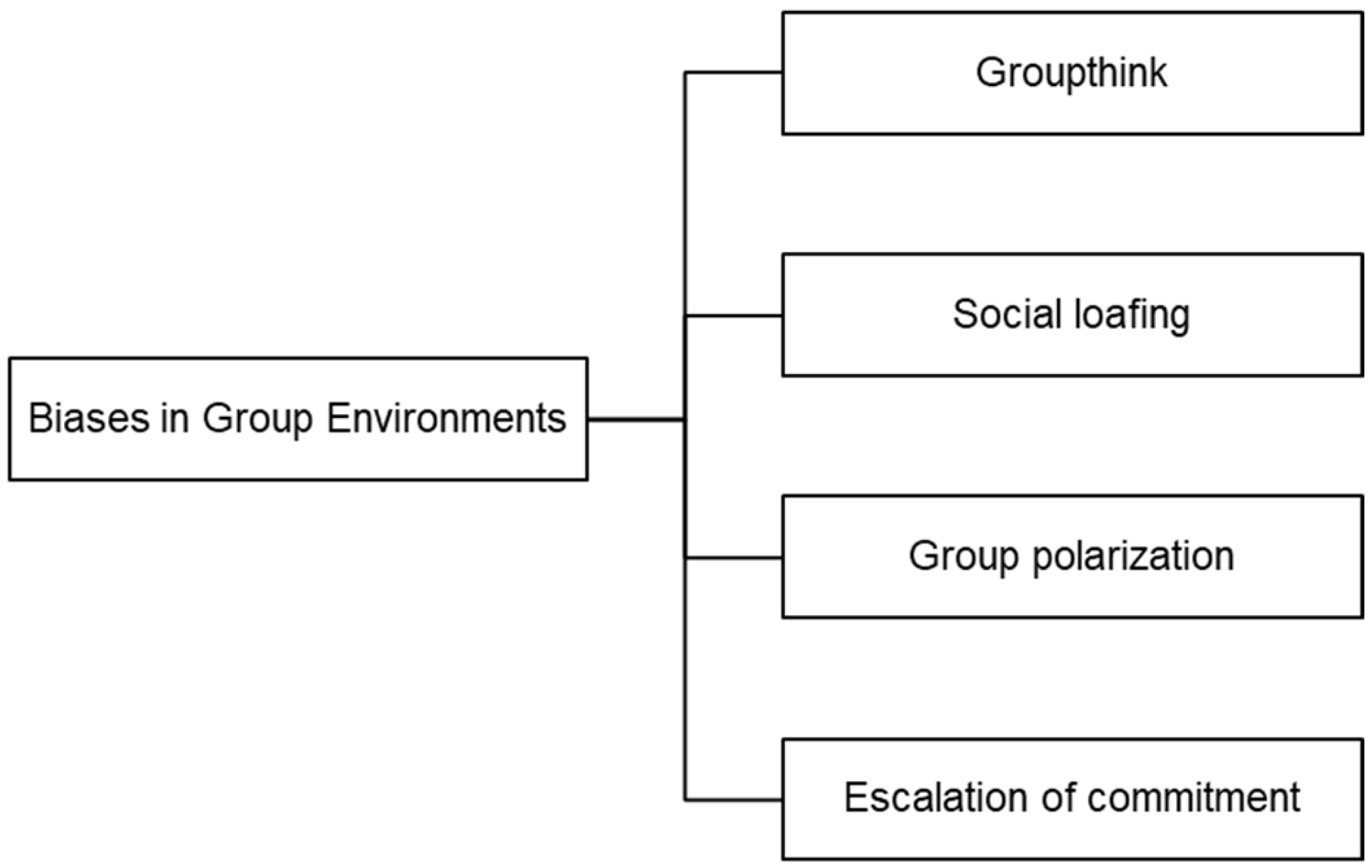

3.4.4. Collecting Stakeholder Needs and Requirements: Group Environment Biases

3.4.5. Collecting Stakeholder Needs and Requirements: Stakeholder Participation Biases

3.4.6. Capturing Needs and Defining Requirements

3.4.7. Classification of Stakeholder Requirements

3.5. Application of Results

4. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- Apathy bias [38]—Stakeholders may not respond if they feel others will perform their role for them.

- Awareness bias [38]—Announcing an open call for stakeholder engagement may target a biased and unbalanced group of stakeholders.

- Confirmation bias [23]—The tendency to focus on information that affirms the individual’s beliefs and assumptions.

- Escalation of commitment [26]—The tendency to justify increased investment in a decision, based on the cumulative prior investment, despite new evidence suggesting the decision may be wrong (some may refer to this as the sunk cost fallacy).

- Faith bias [38]—Stakeholders may not engage if they believe that their views will not be heard due to failures on the part of others.

- Framing effect [23]—Using an approach or description that is too narrow for the situation or issue.

- Fundamental attribution error [35]—People blame individuals rather than the situation for negative events.

- Group polarization [26]—Groups sometimes make more extreme (compound) decisions than the initial positions of their (individual) members.

- Hindsight [28]—The tendency to see past events as being predictable at the time those events happened.

- Identification bias [38]—Purposeful selection of stakeholders using personal/organizational knowledge or unsystematic searches may result in a biased and unbalanced group of stakeholders.

- Intimidation bias [38]—Stakeholders may be less likely to respond if they feel their views are unlikely to be heard over the views of the majority.

- Loss aversion [23]—The tendency of individuals to prefer avoiding losses over acquiring equivalent gains.

- Network bias [38]—Asking others to suggest potential stakeholders may result in a biased and unbalanced group of stakeholders.

- “Not invented here” syndrome [36]—A general negative attitude towards knowledge (ideas, technologies) derived from an external source.

- Optimism bias [23]—The tendency to be overly optimistic about the outcome of planned actions, including overestimation of the frequency and size of positive events and underestimation of the frequency and size of negative ones.

- Ostrich effect [23]—Avoiding risky or difficult situations or failed projects at the cost of learning.

- Overconfidence [23]—Making fast and intuitive decisions when slow and deliberate decisions are necessary; individuals are overly optimistic in their initial assessment of a situation and then are slow to incorporate additional information about the situation into later assessments because of their initial overconfidence.

- Planning fallacy [23]—The tendency to underestimate costs, schedule, and risk and overestimate benefits and opportunities.

- Popularity bias [39]—Certain stakeholders (popular ones) may achieve very high utility values while other stakeholders (less popular ones) are ignored.

- Previous experience bias [34]—Prior experience can significantly affect judgments.

- Previous knowledge bias [34]—Prior knowledge is used to make judgments.

- Professional bias [33]—Practitioners’ experience or expertise may impact judgments/predictions.

- Range-frequency bias [40]—The tendency to assign less probability to the categories judged most likely and more probability to other categories.

- Self-promotion bias [38]—Systematically searching for potential stakeholders may select only those with an online presence, producing a biased or unbalanced group of stakeholders.

- Social loafing [26]—Group situations may reduce the motivation, level of effort, and skills employed in problem-solving compared with those that an individual would deploy when working alone.

- Status quo [23]—The human preference for the current state of affairs; any change from the baseline is considered a loss.
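The loss aversion and status quo definitions above both describe reference-dependent valuation: a change is judged relative to the current state, and a loss weighs more than a gain of the same size. The sketch below illustrates that idea under stated assumptions. It uses a piecewise value function with a single loss-aversion multiplier. The function names are hypothetical, and the default multiplier of 2.25 is a commonly quoted prospect-theory estimate used only for illustration, not a value derived in this paper.

```python
"""Illustrative sketch: loss aversion measured from a status-quo reference point.

Assumptions (hypothetical, for illustration only): a piecewise value function
with one loss-aversion multiplier; default 2.25 is a commonly quoted
prospect-theory estimate, not a value from this paper.
"""


def perceived_value(change: float, loss_aversion: float = 2.25, curvature: float = 1.0) -> float:
    """Subjective value of a change measured from the status quo (change = 0).

    Gains are valued as change ** curvature; losses of the same magnitude are
    scaled up by loss_aversion, so they weigh more than equal gains.
    """
    if change >= 0:
        return change ** curvature
    return -loss_aversion * (-change) ** curvature


def perceived_net_value(gains: list[float], losses: list[float], **params: float) -> float:
    """Sum the subjective value of every gain and loss in a proposed change.

    Losses are passed as positive magnitudes and negated before valuation.
    """
    total_gain = sum(perceived_value(gain, **params) for gain in gains)
    total_loss = sum(perceived_value(-loss, **params) for loss in losses)
    return total_gain + total_loss


if __name__ == "__main__":
    # Hypothetical trade-off: a stakeholder gains 15 units of one attribute
    # and gives up 10 units of another (objective net change: +5).
    print(perceived_net_value([15], [10], loss_aversion=1.0))  # 5.0 (no loss aversion)
    print(perceived_net_value([15], [10]))                     # -7.5 (loss-averse)
```

In this hypothetical trade-off the change is objectively positive, yet a loss-averse stakeholder perceives it as negative. This is one way a preference for the baseline may surface when stakeholders are asked to accept trade-offs during elicitation.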

References

1. Madni, A.M.; Madni, C.C. Architectural framework for exploring adaptive human-machine teaming options in simulated dynamic environments. Systems 2018, 6, 44.

2. Suzuki, S.; Harashima, F. Estimation algorithm of machine operational intention by Bayes filtering with self-organizing map. Adv. Hum.-Comput. Interact. 2012, 2012, 724587.

3. SEBoK Editorial Board. The Guide to the Systems Engineering Body of Knowledge (SEBoK); v. 2.7; Cloutier, R.J., Ed.; The Trustees of the Stevens Institute of Technology: Hoboken, NJ, USA, 2022.

4. Kahneman, D. Thinking, Fast and Slow; Macmillan: New York, NY, USA, 2011.

5. Payne, J.W.; Bettman, J.R.; Johnson, E.J. The Adaptive Decision Maker; Cambridge University Press: Cambridge, UK, 1993.

6. Oppenheimer, D.M. Not so fast! (and not so frugal!): Rethinking the recognition heuristic. Cognition 2003, 90, B1–B9.

7. Ellis, G. Cognitive Biases in Visualizations; Springer: Cham, Switzerland, 2018.

8. Freeman, R.E. Strategic Management: A Stakeholder Approach; Pitman: Boston, MA, USA, 1984.

9. Clarkson, M.B.E. A stakeholder framework for analyzing and evaluating corporate social performance. Acad. Manag. Rev. 1995, 20, 92–117.

10. Mitchell, R.K.; Agle, B.R.; Wood, D.J. Toward a Theory of Stakeholder Identification and Salience: Defining the Principle of Who and What Really Counts. Acad. Manag. Rev. 1997, 22, 853–886.

11. Babar, M.A.; Zhu, L.; Jeffery, R. A framework for classifying and comparing software architecture evaluation methods. In Proceedings of the 2004 Australian Software Engineering Conference, Melbourne, VIC, Australia, 13–16 April 2004; pp. 309–318.

12. Caron, F. Project planning and control: Early engagement of project stakeholders. J. Mod. Proj. Manag. 2014, 2, 85–97.

13. Burgman, M.A. Expert Frailties in Conservation Risk Assessment and Listing Decisions; Royal Zoological Society of New South Wales: New South Wales, Australia, 2004.

14. Hemming, V.; Burgman, M.A.; Hanea, A.M.; McBride, M.F.; Wintle, B.C. A practical guide to structured expert elicitation using the IDEA protocol. Methods Ecol. Evol. 2018, 9, 169–180.

15. Shanteau, J.; Weiss, D.J.; Thomas, R.P.; Pounds, J.C. Performance-based assessment of expertise: How to decide if someone is an expert or not. Eur. J. Oper. Res. 2002, 136, 253–263.

16. Wallsten, T.S.; Budescu, D.V.; Rapoport, A.; Zwick, R.; Forsyth, B. Measuring the vague meanings of probability terms. J. Exp. Psychol. Gen. 1986, 115, 348.

17. Janis, I. Groupthink. In A First Look at Communication Theory; McGraw-Hill: New York, NY, USA, 1991; pp. 235–246.

18. Aspinall, W.P.; Cooke, R.M. Quantifying Scientific Uncertainty from Expert Judgment Elicitation. In Risk and Uncertainty Assessment for Natural Hazards; Rougier, J., Sparks, S., Hill, L., Eds.; Cambridge University Press: Cambridge, UK, 2013; pp. 64–99.

19. Lorenz, J.; Rauhut, H.; Schweitzer, F.; Helbing, D. How social influence can undermine the wisdom of crowd effect. Proc. Natl. Acad. Sci. USA 2011, 108, 9020–9025.

20. Arik, S.L.; Sri, A.L. The Effect of Overconfidence and Optimism Bias on Stock Investment Decisions with Financial Literature as Moderating Variable. Eurasia Econ. Bus. 2021, 12, 84–93.

21. Alnifie, K.M.; Kim, C. Appraising the Manifestation of Optimism Bias and Its Impact on Human Perception of Cyber Security: A Meta Analysis. J. Inf. Secur. 2023, 14, 93–110.

22. Zhang, Z.; Li, H. Research on Human Machine Viewpoint of Architecture for C4ISR System. In Proceedings of the 2019 11th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 24–25 August 2019; pp. 84–88.

23. Chatzipanos, P.A.; Giotis, T. Cognitive Biases as Project & Program Complexity Enhancers: The Astypalea Project; Project Management Institute: Newtown Square, PA, USA, 2014.

24. Tversky, A.; Kahneman, D. Judgment under Uncertainty: Heuristics and Biases. Science 1974, 185, 1124–1131.

25. Irshad, S.; Badshah, W.; Hakam, U. Effect of Representativeness Bias on Investment Decision Making. Manag. Adm. Sci. Rev. 2016, 5, 26–30.

26. Mannion, R.; Thompson, C. Systematic biases in group decision-making: Implications for patient safety. Int. J. Qual. Health Care 2014, 26, 606–612.

27. Gulati, A.; Lozano, M.A.; Lepri, B.; Oliver, N. BIASeD: Bringing Irrationality into Automated System Design. arXiv 2022, arXiv:2210.01122.

28. Flyvbjerg, B. Top Ten Behavioral Biases in Project Management: An Overview. Proj. Manag. J. 2021, 52, 531–546.

29. Adomavicius, G.; Bockstedt, J.C.; Curley, S.P.; Zhang, J. Do recommender systems manipulate consumer preferences? A study of anchoring effects. Inf. Syst. Res. 2013, 24, 956–975.

30. Souza, P.E.U.; Carvalho Chanel, C.P.; Dehais, F.; Givigi, S. Towards human-robot interaction: A framing effect experiment. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 001929–001934.

31. Kim, T.; Song, H. Communicating the limitations of AI: The effect of message framing and ownership on trust in artificial intelligence. Int. J. Hum. Comput. Interact. 2023, 39, 790–800.

32. Flyvbjerg, B. Quality control and due diligence in project management: Getting decisions right by taking the outside view. Int. J. Proj. Manag. 2012, 3, 760–774.

33. Enriquez-de-Salamanca, A. Stakeholders’ manipulation of Environmental Impact Assessment. Environ. Impact Assess. Rev. 2018, 68, 10–18.

34. Das-Smaal, E.A. Biases in categorization. Adv. Psychol. 1990, 68, 349–386.

35. Coombs, W.T.; Holladay, S.J. Unpacking the halo effect: Reputation and crisis management. J. Commun. Manag. 2006, 10, 123–137.

36. Antons, D.; Piller, F.T. Opening the black box of “not invented here”: Attitudes, decision biases, and behavioral consequences. Acad. Manag. Perspect. 2015, 29, 193–217.

37. Samset, K. Early Project Appraisal: Making the Initial Choices; Palgrave Macmillan: London, UK, 2010.

38. Haddaway, N.R.; Kohl, C.; Rebelo da Silva, N.; Schiemann, J.; Spok, A.; Stewart, R.; Sweet, J.B.; Wilhelm, R. A framework for stakeholder engagement during systematic reviews and maps in environmental management. Environ. Evid. 2017, 6, 1–14.

39. Abdollahpouri, H. Popularity Bias in Ranking and Recommendation. In Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, Honolulu, HI, USA, 27–28 January 2019.

40. O’Hagan, A. Expert Knowledge Elicitation: Subjective, but Scientific. Am. Stat. 2019, 73, 69–81.

41. Nelson, J.D.; McKenzie, C.R. Confirmation Bias. In Encyclopaedia of Medical Decision Making; Sage: Los Angeles, CA, USA, 2009; pp. 167–171.

42. Parnas, D.L.; Weiss, D.M. Active Design Reviews: Principles and Practices. In Proceedings of the 8th International Conference on Software Engineering, London, UK, 28–30 August 1985.

43. Zuber, L. What in the world were we thinking? Managing stakeholder expectations and engagement through transparent and collaborative project estimation. Proj. Manag. World J. 2013, 2, 1–13.

44. Loh, G.H. Simulation differences between academia and industry: A branch prediction case study. In Proceedings of the IEEE International Symposium on Performance Analysis of Systems and Software, ISPASS 2005, Madison, WI, USA, 20–22 March 2005; pp. 21–31.

45. Mineka, S.; Sutton, S.K. Cognitive biases and the emotional disorders. Psychol. Sci. 1992, 3, 65–69.

46. Acciarini, C.; Brunetta, F.; Boccardelli, P. Cognitive biases and decision-making strategies in times of change: A systematic literature review. Manag. Decis. 2021, 59, 638–652.

47. Das, T.K.; Teng, B.S. Cognitive biases and strategic decision processes: An integrative perspective. J. Manag. Stud. 1999, 36, 757–778.

48. Hammond, J.S.; Keeney, R.L.; Raiffa, H. Even swaps: A rational method for making trade-offs. Harv. Bus. Rev. 1998, 76, 137–150.

49. Lahtinen, T.J.; Hämäläinen, R.P.; Jenytin, C. On preference elicitation processes which mitigate the accumulation of biases in multi-criteria decision analysis. Eur. J. Oper. Res. 2020, 282, 201–210.

50. Cooke, R.M. Experts in Uncertainty: Opinion and Subjective Probability in Science; Oxford University Press: New York, NY, USA, 1991.

51. O’Hagan, A.; Buck, C.E.; Daneshkhah, A.; Eiser, J.R.; Garthwaite, P.H.; Jenkinson, D.J.; Rakow, T. Uncertain Judgements: Eliciting Experts’ Probabilities; John Wiley & Sons: West Sussex, UK, 2006.

52. Van Bossuyt, D.L.; Beery, P.; O’Halloran, B.M.; Hernandez, A.; Paulo, E. The Naval Postgraduate School’s Department of Systems Engineering approach to mission engineering education through capstone projects. Systems 2019, 7, 38.

53. Bickford, J.; Van Bossuyt, D.L.; Beery, P.; Pollman, A. Operationalizing digital twins through model-based systems engineering methods. Syst. Eng. 2020, 23, 724–750.

54. Bhatia, G.; Mesmer, B. Trends in occurrences of systems engineering topics in literature. Systems 2019, 7, 28.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yeazitzis, T.; Weger, K.; Mesmer, B.; Clerkin, J.; Van Bossuyt, D. Biases in Stakeholder Elicitation as a Precursor to the Systems Architecting Process. Systems 2023, 11, 499. https://doi.org/10.3390/systems11100499
