Abstract

This paper reports the results of an investigation into the role of system effectiveness in acquiring and sustaining U.S. weapons systems from 1958 to 2022. An understanding of that role is vital because the acquisition system has recently undergone a significant change. In the 64-year period covered by this study, there have been many changes to the Department of Defense acquisition and sustainment processes. The investigation used three qualitative methods: a structured literature review, a grounded theory analysis of the structured literature review, and a historiography of the initial grounded theory results. The research identified five epochs, the first two lasting approximately 22 years each. The last epoch is still in its early period. Each epoch corresponds to a change in the acquisition process. There are four conclusions. First, system effectiveness does not serve its original intent and purpose. Second, analysis of source documents provides insight into why system effectiveness plays a diminished role. Third, the original approach to system effectiveness may have relevance for today’s problems and challenges. Finally, an integrated research methodology is valuable for making sense of conflicting information spread over time.

1. Introduction

This paper presents research into the role of system effectiveness in the acquisition and sustainment of U.S. defense systems from 1958 to 2022. The paper was first presented as a preprint in 2021 [1], then revised and included in the proceedings of the Nineteenth Annual Naval Postgraduate School Acquisition Research Symposium in the spring of 2022 [2]. This version is further revised and expanded and is based on detailed research conducted over the last three years.

Prompted by a significant change to the acquisition process in 2020 that involved structural and philosophical revisions that omitted reference to system effectiveness, the research examined how the role of system effectiveness altered from its inception in the 1950s to 2022. The research examined the inception of system effectiveness and the attempts to apply the concept and methodology. Ultimately, using an approach that combines a structured literature review, grounded theory analysis methods, and historiography techniques, a theory was formulated as to why system effectiveness fell into disfavor.

Given the extensive period covered by this study and the many changes to the acquisition process that occurred during the period of interest, it would be reasonable to expect a change in the role of system effectiveness. The literature supports several changes throughout the time frame [3]. Still, changes were not necessarily driven by the acquisition process itself but by the underlying methodologies for developing systems that were also changing. The analysis shows a dynamic tension between the diverse communities involved in developing system effectiveness, which eventually led to the demise of the development of the concept as a methodology. However, system effectiveness still exists as a concept in systems engineering texts, such as Habayeb’s Systems Effectiveness [4] and the current version of Wasson’s System Engineering Analysis, Design, and Development [5].

This paper is organized as follows. The rest of the introduction covers the background, the definition of system effectiveness, the statement of the problem, and the contribution. Section 2 presents the methods, and Section 3 presents the results. Section 4 contains the discussion of the results and the conclusion.

1.1. Background

World War II highlighted the need for a concept and associated methodology by which the military could assess the effectiveness of weapons systems during development and influence the development process to meet contractual requirements. Complex challenges and problems faced the Department of Defense. State-of-the-art solutions were required, as were methods by which to evaluate them. In the 1950s and 1960s, military systems were pushing the state of the art. Post-war systems were even more complex, encompassing programs such as the B-52 bomber and the Polaris missile program. Moreover, given the nature of their missions, they needed to be ready, reliable and effective.

As a result, Secretary of Defense McNamara introduced Systems Analysis into the defense acquisition process to address the quantification of cost and the effectiveness of weapons systems [6]. The initial response to McNamara’s challenge came from the Army, Navy, Air Force, and analysis organizations within the Department of Defense. Throughout the 1960s, there was a flurry of activity by all three military services as they tried to incorporate McNamara’s ideas into their vision of the acquisition process [7].

A review of the literature related to system effectiveness showed inconsistency in the concept from its first uses in the early 1960s through today. Earlier work by the reliability community, started in the late 1950s, served as the basis for developing the concept and associated methodology in the 1960s. While the literature shows little academic interest in the topic, there is a substantial body of work produced by the Department of Defense and defense contractors. The Department of Defense made a serious effort to develop system effectiveness as a discipline, highlighted by the development of the WSEIAC1 methodology [8] to predict and measure system effectiveness. By the early 1980s, the concept had all but disappeared from the literature. As system effectiveness faded to a definition in the Defense Acquisition Guidebook [9], measures of effectiveness began to dominate the defense-related literature and are now the most common concept in use. There is a subtle question that underlies this transition: why measures of effectiveness over system effectiveness? Accordingly, this paper aims to examine this fundamental question, provide context, and answer why the shift occurred.

1.2. Defining System Effectiveness: What Is System Effectiveness, and Why Is It Important?

Cost-effectiveness, system effectiveness, integrated logistics support, and maintainability comprise the acquisition and sustainment process [10]. Of these four components, system effectiveness is the linchpin. System effectiveness is the starting point for deriving the other three components. As Blanchard notes:

The ultimate goal of any system or equipment is to fulfill a particular mission for which it was designed. The degree of fulfillment is often referred to as system effectiveness. [7]2

The original intent of system effectiveness was to focus management attention on overall effectiveness throughout the system lifecycle, from design through testing [11]. Further, system effectiveness is a framework for analytic methods to predict and measure the overall results of the analysis while placing the contributing characteristics in their proper perspective relative to the desired outcome of the system performing the mission [11]. Thus, the system effectiveness framework provides a basis for three activities: developing needs and requirements during project definition and concept development, influencing system design and development to meet contractual requirements during the acquisition phase, and maintaining the required level of system effectiveness during the sustainment phase.

As developed by the Department of Defense, system effectiveness in the evaluation role combined elements of system availability, reliability theory, and system capability analysis. It was an outgrowth of work started in the 1950s by the reliability community and the system analysis work done by the RAND Corporation. In 1965, Dordick, an analyst from RAND, wrote a monograph describing system effectiveness and noted that the merger of reliability and systems analysis could be an uncomfortable relationship because the two groups viewed the problem from different perspectives [12].

The official definition of system effectiveness is [7]:

“a measure of the degree to which an item can be expected to achieve a set of specific mission requirements, and which may be expressed as a function of availability, dependability, and capability”.

As a measure, system effectiveness is one of the two elements of cost-effectiveness. Together, system effectiveness and cost-effectiveness represent the key elements of a 15-step management approach formulated to deal with the cost and complexity of modern military systems [11]. Restating the definition of system effectiveness per Blanchard [7,10], the management goal is to establish the probability that a system can successfully meet the operational demand within a given time when operated under specified conditions. This goal is the probability of success for the system. Accordingly, the framework focuses on evaluating or predicting the degree of effectiveness for any system configuration (existing or proposed). This degree of effectiveness has a cost associated with it that is the value used in the cost-effectiveness (CE) equation, Equation (1) [10], whose defined terms are the cost of system effectiveness, the initial cost of procurement, and the sustainment cost (lifecycle cost).

System effectiveness has three elements that determine both cost and the probability that the system is effective ($P_{SE}$). This paper refers to them as the pillars upon which the system effectiveness concept rests. These pillars are:

- Availability—is the system ready to perform its function?

- Dependability—how well will the system perform during a mission?

- Capability—will the system produce the desired effects?

The first pillar is commonly referred to as operational availability or readiness, and the second pillar is commonly called mission reliability. Finally, some sources equate the third pillar, capability, with design adequacy, i.e., is the design adequate for its intended mission? The three pillars are expressed as probabilities; thus, system effectiveness $P_{SE}$, the measure, is the product of availability $P_A$, dependability $P_D$, and capability $P_C$, as shown in Equation (2):

$$P_{SE} = P_A \times P_D \times P_C \qquad (2)$$

The intent was to use the system effectiveness concept as a vehicle to proceed from predicted values in the conceptual phase of acquisition to empirical values as the system design matured, the system became operational, and sustainment costs became paramount.
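As a minimal sketch of the scalar form described above, the following Python function multiplies the three pillar probabilities. The function name and the numeric values are hypothetical and chosen only for illustration; they do not come from any program discussed in this paper.

```python
def system_effectiveness(availability, dependability, capability):
    """Scalar system effectiveness as the product of the three pillars.

    Each argument is treated as a probability in [0, 1].
    """
    pillars = {
        "availability": availability,
        "dependability": dependability,
        "capability": capability,
    }
    for name, value in pillars.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a probability in [0, 1], got {value}")
    return availability * dependability * capability


# Hypothetical pillar values, for illustration only.
p_se = system_effectiveness(availability=0.90, dependability=0.95, capability=0.80)
print(round(p_se, 3))
```

Because the measure is a product, a low value in any one pillar caps the overall result regardless of how strong the other two are.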

1.3. Statement of the Problem

The review of the literature over the last 60 years reveals that only fragmented material is available. There is no single document covering the history of system effectiveness, nor are many of the references identified through the structured literature review accessible.

Current literature referencing the system effectiveness concept (and, by extension, effectiveness measures) describes it ad hoc, based more on tribal lore than primary sources [13]. This approach is understandable because the legacy literature describes four system effectiveness models: one for the Army, one for the Air Force, and two for the Navy. While the four models share the common foundation of the pillars, terminology issues confound the problem. For example, the Navy has both a system effectiveness model and an operational effectiveness model. The operational effectiveness model uses the three-pillar concept (with different names), whereas the Navy’s system effectiveness model takes an entirely different approach that does not directly use the three pillars. The latter model is what the Navy intended to use for system effectiveness studies, even though it was inconsistent with the Army and Air Force models (which use the three pillars but with different names for them). To add to the confusion, the Navy used the operational effectiveness model to train its analysts and supervisory personnel.

The problem is further compounded by the complexity of the mathematics used to describe system effectiveness. The common depiction of system effectiveness (the measure) is a scalar model of the three pillars’ mathematical product as described above. In reality, system effectiveness has a state-space solution and is the product of the availability vector ($[A]$) times the dependability vector ($[D]$) times the capability vector ($[C]$), or $[A][D][C]$ [11,14]. The scalar solution is a special case that occurs when the value of each pillar is equal to one.
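The state-space product can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the method of any cited model: it assumes $[A]$ is a row vector of state probabilities at mission start, $[D]$ is a square matrix of state-transition probabilities during the mission (the text above calls it a vector), and $[C]$ is a column vector of capability per end state. The two states and all numbers are hypothetical.

```python
def state_space_effectiveness(a, d, c):
    """Return the product [A][D][C] for a row vector a, square matrix d, and column vector c."""
    n = len(a)
    if len(d) != n or any(len(row) != n for row in d) or len(c) != n:
        raise ValueError("a, d, and c must have matching dimensions")
    # Row vector times matrix: probability of ending the mission in each state.
    end_state = [sum(a[i] * d[i][j] for i in range(n)) for j in range(n)]
    # Weight each end state by the capability delivered in that state.
    return sum(end_state[j] * c[j] for j in range(n))


# Hypothetical two-state example: state 0 = operable, state 1 = failed.
a = [0.90, 0.10]          # assumed availability at mission start
d = [[0.95, 0.05],        # assumed transitions from operable
     [0.00, 1.00]]        # assumed: a failed system stays failed
c = [0.80, 0.00]          # assumed capability in each end state
e = state_space_effectiveness(a, d, c)
print(round(e, 3))
```

With one-element inputs, the same function returns the plain scalar product of the three values.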

Another problem is the lack of current references. The literature search turned up only one document written in the last ten years that discussed system effectiveness: Operational Availability Handbook NAVSO P-7001 of May 2018 [15] (a previous version has been cancelled [16]). The handbook provided definitions and a computational approach to availability but left the determination of system effectiveness to the reader. The document illustrates ad hoc behavior through its incorrect definition of system effectiveness:

"Systems Effectiveness: The measure of the extent to which a system may be expected to achieve a set of specific mission requirements. It is a function of availability, reliability, dependability, personnel, and capability".

In the original model, system effectiveness is a function only of availability, dependability, and capability for a reason. Reliability and personnel are unexplained additions: dependability already includes reliability, and personnel is vague and not part of the original model. The original system effectiveness model answers three essential questions: (1) Is the system available when required? (2) Is the system reliable throughout the mission? (3) Is the system capable of satisfactorily completing the mission? Given these questions, it is clear that the handbook definition does not support the system effectiveness criterion of being quantifiable and probabilistic [8,11].

The final problem relates to the issue of measures of effectiveness and system effectiveness. AMCP 706-191 defines measures of effectiveness as an input into the system effectiveness process [17]. With the reduced emphasis on system effectiveness, measures of effectiveness became the ultimate measure. Avoiding confusion between the two concepts is simple. First, system effectiveness is a function of the three pillars. Second, a measure of effectiveness (MOE) measures how a system functions within its environment [18]. The difference is all about context.

1.4. Specific Contribution of the Research

This paper makes three significant contributions to the literature on system effectiveness. A structured literature review of the system effectiveness domain reveals that the relevant material is fragmented and that the most comprehensive document is a survey paper written over 40 years ago [19]. Contribution one is that this paper describes research that identified over 700 system effectiveness sources and reports on over 400 selected documents [20]. COVID-19 restrictions forced the structured literature review to be conducted electronically. Table 1 was created to facilitate the research. Contribution two is a detailed grounded theory analysis of the changes in the perception of what makes up system effectiveness from its inception to the present. The framework used for the analysis was a historiography, combined with the structured literature review. The analysis postulates that system effectiveness failed to mature as a concept or discipline despite its initial promise. The third contribution is the research method itself.

Table 1.

Sources used in the Literature Search.

Structured Literature Reviews and Grounded Theory have their roots in the social sciences. However, applications of grounded theory can extend beyond the social sciences. For example, Johnson recently published a doctoral dissertation titled “Complex Adaptive Systems of Systems: A Grounded Theory Approach” [21,22]. In addition, Structured Literature Reviews and Grounded Theory are being used in Software Engineering [23,24,25]. However, they have yet to be combined in a mixed-methods approach. The mixed-method approach of a structured literature review integrated with grounded theory analysis and historiography is unique to this research.

In summary, the unique contribution of this research to system effectiveness is that it extends knowledge in the domain of system effectiveness related to acquisition and sustainment. (Sustainment is the appropriate term; sustainability and sustainable have taken on specific meanings within the environmental community.) The research presents a more current, thorough, and detailed analysis of a topic of interest to the acquisition and sustainment communities and supporting disciplines such as system engineering and reliability engineering. The research is novel because it uses several analysis techniques in an integrated approach not generally applied to studies in this area. The research combines a structured literature review with grounded theory analysis and historiography techniques to develop a deeper and more detailed understanding of system effectiveness based on a comprehensive database of relevant papers from current and historical sources. This understanding provides a foundation for expanding the understanding and development of measures of effectiveness within the framework of system acquisition.

2. Materials and Methods

The research problem of investigating the role of system effectiveness in the acquisition process over 60 years does not fit into a traditional dissertation-like process. The answers to the research questions are qualitative, not quantitative. The data is the literature. For example, what was the subject and when was the document published? Gathering and analyzing literature that went back before 1958 requires a different form of a literature review; hence after some trial and error, the structured literature review concept was adopted for the subject research. Towards the end of the literature search, the need for a more detailed analysis process became apparent. The structured literature review was vital in determining the patterns in the literature. However, the structured literature review did not provide a methodology to aggregate the perceived patterns into a central concept or theory. Grounded theory methods were selected to meet this need because they facilitate developing the patterns in the data into a defensible theory. Finally, assembly of the timelines led to the inclusion of historiography techniques to assist with the analysis.

There are four essential elements to developing a structured literature review and grounded theory analysis:

- The research question;

- The structured literature review;

- The domain of inquiry;

- Critical elements in findings.

The following subsections discuss the first three elements. The Results Section presents the findings.

The research into the integrated methods indicated five benefits [26]:

- Increased validity of the results;

- A more nuanced view of the problem;

- Increased confidence in the results;

- Unique answers or results;

- A better understanding of the phenomenon involved.

The techniques are sequential and recursive. Each pass through the data builds off the last pass, refining and distilling the observations into a central theme.

2.1. The Research Question(s)

The aim of the research is to assess the role of system effectiveness relative to the acquisition and sustainment process over a period that exceeds 60 years. The changes are a given. For example, sustainment was not a consideration in the beginning, so what were the factors that led to its inclusion? How did that change the role of system effectiveness? Sustainment as a process in acquisition appeared as a theme, along with reliability and maintainability amongst others. Were there noticeable patterns in the themes? Taken together, these three points form a set of questions that can investigate the system effectiveness domain over the period of interest.

- What factors led to the change in the role of System Effectiveness?

- What themes began to emerge with the changing role?

- What were noticeable patterns of change?

The structured literature review presents the data organized into a set of timelines that supports answering the research questions. Finally, the domain of inquiry is the examination of the literature in the context of the timeline using grounded theory. The outcome is in the form of factors, themes, and patterns that emerge from the literature analysis over time.

2.2. The Structured Literature Review

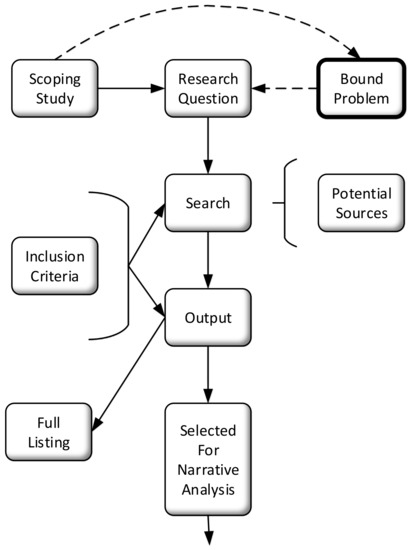

The structured literature review served two purposes in this study. First, the search protocol identified material related to system effectiveness facilitating the development of an organized database. Second, the structured literature review served as the first filter in identifying potential patterns for the grounded theory analysis. Figure 1 describes the overall literature search process. The scoping study of Figure 1 identified possible sources to search. Table 2 presents the various literature categories used in the search. Table 1 presents the list of sources used. Furthermore, the scoping study helped to limit the keywords used in the literature search. Table 3 lists prospective keywords developed from several sources, the primary source being the paper written by Tillman, Hwang, and Kuo [19]. The Tillman, Hwang, and Kuo paper was a known entity and used in the scoping study of Figure 1. The paper, written in 1980, surveyed the literature and identified 89 references specific to system effectiveness. The paper also described the main system effectiveness models developed to that point in time. Finally, Table 4 presents the final list used in the protocol.

Figure 1.

The High-Level Literature Search Process.

Table 2.

Categories of Appropriate Literature.

The focus of the search was on primary literature (original reports) and secondary literature, which describes or summarizes the original writings. The category of the literature, that is, its source, is also important. Table 2 lists the various literature categories used in the search.

The order of search was:

- Peer-reviewed material;

- Grey literature (items 2–7 of Table 2);

- Books (texts and professional).

Grey literature is unpublished or not published commercially [27]. Because the development of System Effectiveness was primarily a government effort, the majority of the literature retrieved fell into the Grey category.

The initial searches used different browsers and search engine combinations. The Google search engine was picked as the best option for this research because it had an excellent search string feature, and Google Scholar is a bonus. Additionally, the Chrome browser has a better download feature.

The literature retrieval process used three steps.

- The use of a focused search string on the sources of Table 1;

- The use of “snowball” searches;

- A general web search using the focused search string.

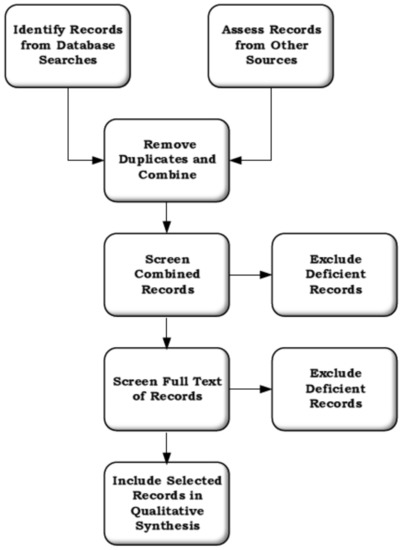

The use of a focused search string simplified the building of the database. Results were stored in folders named for the keywords. All filtering was manual, and sources that were identified but not available were not included in the database. Figure 2 uses “records” as a general term to cover papers, books, and reports.

Figure 2.

The Structured Literature Review.

| TITLE-ABS (“System Effectiveness” AND (“keyword”)) |

The choice between “system” and “systems” in the search string is essential. The plural “systems” returns many unfocused results, most of which are not usable, whereas the singular “system” returns more focused results that are usable.
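The way the focused search string is instantiated per keyword can be sketched as follows. This is an illustrative assumption about how the strings might be generated, since the study reports that filtering was manual; the function names and the folder-naming rule are hypothetical, and whether a given source accepts the `TITLE-ABS` syntax is not established here.

```python
# Keywords selected for the search protocol (Table 4).
SELECTED_KEYWORDS = [
    "Reliability", "Maintainability",
    "Availability", "Operational availability",
    "Operational readiness", "Readiness",
    "Dependability", "Mission reliability",
    "Design adequacy", "Cost effectiveness",
    "Capability", "Mission analysis",
    "Measures of effectiveness", "Measures of performance",
]


def build_search_string(keyword, anchor="System Effectiveness"):
    """Fill the search-string template with one keyword (singular 'System' by default)."""
    return f'TITLE-ABS ("{anchor}" AND ("{keyword}"))'


def folder_name(keyword):
    """Hypothetical folder-naming rule: lower case with underscores."""
    return keyword.lower().replace(" ", "_")


queries = {folder_name(kw): build_search_string(kw) for kw in SELECTED_KEYWORDS}
print(queries["mission_reliability"])
```

Keeping the anchor phrase as a parameter makes it easy to compare the singular and plural forms on the same source.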

The desire to conduct as complete a search as possible drove the selection of sources to search (Table 1). Unfortunately, most 1950s and 1960s materials exist only in microfiche format, and COVID-19 restrictions limited access to archived materials. The search of Table 1 covered all sources listed. However, the primary focus was on the government column. The Defense Technical Information Center (DTIC) changed the public interface to use the Google search engine early in the research phase. This change had two undesired effects. First, the early searches were not repeatable. Second, the Google search engine provided few results. Fortunately, DTIC has a research portal that provides good results with the search string and the snowball search discussed below. Unfortunately, the portal is not available to the general public.

The second step was a snowball search [28] using the reference section of the selected papers. This search produced another 52 unique papers that were retrievable. Finally, the search string was also employed in a general web search, resulting in three conference proceedings found in Google Books unavailable from other sources. There were numerous references to conference proceedings as a significant source of information. However, few were available electronically and library access was nonexistent because of the COVID crisis.
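The snowball step can be pictured as a breadth-first walk over reference lists. The sketch below is an assumption about how such a search could be automated; in this study the step was performed manually. The paper identifiers, the `references` mapping, and the relevance test are all hypothetical.

```python
from collections import deque


def snowball(seed_papers, references, is_relevant, max_rounds=2):
    """Follow reference lists outward from the seed papers.

    references maps a paper id to the ids it cites; is_relevant is a
    caller-supplied screening function. Returns newly found papers only.
    """
    selected = set(seed_papers)
    frontier = deque((paper, 0) for paper in seed_papers)
    while frontier:
        paper, depth = frontier.popleft()
        if depth >= max_rounds:
            continue  # do not expand beyond the allowed number of rounds
        for cited in references.get(paper, []):
            if cited not in selected and is_relevant(cited):
                selected.add(cited)
                frontier.append((cited, depth + 1))
    return selected - set(seed_papers)


# Hypothetical citation data, for illustration only.
references = {
    "survey_1980": ["model_report_a", "model_report_b", "unrelated_note"],
    "model_report_a": ["reliability_memo"],
}
relevant = {"model_report_a", "model_report_b", "reliability_memo"}
found = snowball(["survey_1980"], references, lambda p: p in relevant)
print(sorted(found))
```

Limiting the number of rounds keeps the search bounded when reference lists cite one another.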

Table 3 and Table 4 present the list of keywords considered and selected, respectively. Many of the keywords of Table 3 not used were tested but returned results not germane to system effectiveness. For example, serviceability is too broad even with filtering. The final list is composed of words that provided focused results relevant to the research. The primary focus of the search was thematic. What was the paper’s subject, and how did it relate to system effectiveness? The specific focus was on papers that addressed the theory, application, or programmatic issues.

The focus in examining search returns was title relevance, abstract relevance, and paper content in that order. In addition, the search return had to demonstrate relevance to system effectiveness, the Department of Defense, and the acquisition and sustainment process.

Table 3.

Prospective Key Words.

| Tillman, Hwang, and Kuo [19] | Other Sources |
|---|---|
| Reliability | Sustainment |
| Availability | Tactical availability |
| Operational readiness | Readiness |
| Repairability | Acquisition |
| Maintainability | Mission Reliability |
| Serviceability | Cost Effectiveness |
| Design adequacy | Operational Availability |
| Capability | Mission analysis |
| Dependability | Measures of effectiveness |
| Human performance | Measures of performance |
| Environmental effects | |

Table 4.

Selected Key Words.

| Key Word | Key Word |
|---|---|
| Reliability | Maintainability |
| Availability | Operational availability |
| Operational readiness | Readiness |
| Dependability | Mission reliability |
| Design adequacy | Cost effectiveness |
| Capability | Mission analysis |
| Measures of effectiveness | Measures of performance |

2.3. The Domain of Inquiry: Grounded Theory and Coding the Data

McCall and Edwards [29] have identified three methodologies associated with grounded theory: classic grounded theory, pragmatic grounded theory, and constructivist grounded theory. The discussion of the differences among these methodologies is beyond the scope of this paper. However, the study that this paper is reporting on used the pragmatic grounded theory approach [20].

The following reasons are the basis for selecting this approach: First, it recognizes the literature as the phenomena to be studied. Second, it takes an interpretive approach that allows the development of a more profound understanding of the literature and the evolution of an abstract theory. Resultant theories are the researcher’s interpretations of causal mechanisms. Third, the role of the researcher is that of an interpreter. However, this approach recognizes the researcher’s personal experience and knowledge as a factor.

The data sampling process is a back-and-forth effort that results in substantial memo writing and diagramming to identify and incorporate the data into manageable sets. The technique employs three distinct methods: open coding, axial coding, and selective coding. These sequential processes take the researcher through the steps to develop the data patterns (open coding) and examine the derived patterns for causality (axial coding). Axial coding confirms relationships between categories or bounds their applicability. Selective coding is about determining which category embodies the characteristics of the previously derived patterns. This category becomes the core category and represents the resulting theory. The overall procedure is recursive and proceeds until the sequence results in a candidate theory.

3. Results

Over 700 sources covering approximately 70 years (1950 to 2021) were the basis for developing the grounded theory. This research was unique in that the literature was the data. In addition, the resulting narrative was not linear. In the beginning, system effectiveness was the focus. However, in the end, the literature was more about analysis of alternatives, acquisition reform, and problems with reliability.

3.1. Step 1: The Analysis of the Data

Table 5 and Table 6 are examples from the research report. Table 5 is the historiography, and Table 6 is the curated literature pertinent to the time frame. The aim was to present the main events during the period of interest with relevant documents published within the time frame. Comparing the event list with the publication list gives the reviewer an indication of what was of interest within the world of acquisition and sustainment during that time period.

Table 5.

Major Milestones 1981–1990.

Table 6.

Systems Effectiveness Publications 1981–1990.

Table 7 presents the structured literature review’s initial or open coding analysis.

Table 7.

Initial Coding.

3.2. Step 2: Results of the Initial Coding

Initial coding is the search for trends and patterns in the database. The recursive analysis process initially divided the timeline into arbitrary ten-year increments. Further examination led to an initial division of the timeline into three epochs defined by:

- McNamara’s tenure as the Secretary of Defense;

- The introduction of the 5000 series of acquisition instructions in 1971;

- The advent of the Joint Capabilities Integration and Development System (JCIDS) process in 2002.

Each pass through the data refined the timeline into sub-epochs that clarified the patterns and associated factors. The adoption of commercial standards in 1993 and the current implementation of the Adaptive Acquisition Framework (AAF) complete the list. The final result was five epochs, as shown in Table 8 which lists the epochs with their causal event and interval of influence.

Table 8.

EPOCHS.

The recursive process identified thirteen patterns. The grounded theory literature made it clear that behavior patterns were as crucial as a definable event. For example, a pattern of behavior might be the constant changing of personnel within a particular office in the Department of Defense. A definable event might be the release of a new acquisition instruction. The two ideas merge when a new acquisition instruction is issued every time the leadership changes. This form of reasoning was the basic logic used for identifying the following patterns.

Table 9 combines Table 7 and Table 8 to capture the historiographic aspects of the analysis. The table entries in bold font were identified as potential causal effects or categories for the axial coding step shown in Table 10.

Table 9.

Initial Coding Results by Epoch (I–V).

Table 10.

Axial Coding-Categories.

3.3. Initial Coding Patterns and Concepts Described

The following subsections provide an overview of the patterns and concepts derived during the initial coding phase.

3.3.1. Changes with Time

The factors in this pattern address the history of system effectiveness as a function of time. It traces the development of the system effectiveness models, their impact on military standards and their subsequent input into the sustainment process. Example factors include the development of reliability engineering, systems engineering and logistics engineering alongside the attempts to develop system effectiveness.

3.3.2. Changes with Policy

This pattern identifies the significant policy changes made to the acquisition structure from 1958 to the present. Sample factors include the cycles of acquisition reform, the type of cycle, and the form of the changes.

3.3.3. Changes with DoD Structure

Factors in this pattern include reorganization of research labs, changes in responsibility for system effectiveness within the DoD structure, and a lack of central authority.

3.3.4. Changes with Technology

The factors in this pattern refer to the emphasis on reliability over complexity. For example, the user community initially favored systems that demonstrated mission reliability over capability.

3.3.5. Changes with Knowledge and the Knowledge Base

This pattern could also read “changes with lack of knowledge or knowledge base”. Factors include loss of experienced analysts, inexperienced analysts, lack of reference material, and lack of example reports. The latter two are problem areas because early material exists primarily as microfiche.

3.3.6. Disparate Technical Disciplines

This pattern is distinguished by a lack of common background or education.

3.3.7. Tension among Technical Disciplines

Factors in this pattern include a failure by some disciplines to see the big picture. This is better known as “if your only tool is a hammer, you tend to see problems as a nail”.

3.3.8. Inconsistent Models of System Effectiveness

The factors in this pattern center on differences in what constituted effectiveness and on similar terms that carried different meanings across the various models of system effectiveness.

3.3.9. Following Fads

This pattern contains the factors that describe misguided attempts to redefine system effectiveness to accommodate the management fad du jour. An example is equating system effectiveness to quality at the expense of capability.

3.3.10. Lack of Participation by Industry

This pattern is found throughout the literature. Factors include time-tested proprietary methods and a lack of financial incentive to change.

3.3.11. Lack of Participation by Academia

Factors in this pattern address the lack of research and publication by the academic community.

3.3.12. Misuse of the Concept

This is a common issue in the literature. Factors include failure to understand the purpose of the system effectiveness concept and misrepresentation of the concept as solely a reliability model.

3.3.13. Lack of a Consistent Language

Currently, there is a lack of common and consistent terms for use when discussing system effectiveness. Factors include the absence of an ontology or taxonomy for system effectiveness and cost effectiveness. The lexicon developed in the 1960s does not describe system effectiveness adequately. This has led to confusion between what is system effectiveness and what is a measure of effectiveness.

3.4. Step 3: Results of the Axial Coding

Axial coding is about causation. Again, this coding step is recursive, and the stopping point is when the grouping of patterns and their causal effects is complete. Reexamination of the patterns leads to the conclusion that several of them share the same causal effect. Table 10 presents the distillation of the 13 patterns into a list of five candidates for the selective coding step.

Analyzing these five effects over the timeline leads to further refinement and integration into one central concept. Despite all the effort the services put into developing system effectiveness, once the acquisition system was consolidated at the Department of Defense level, interest waned and system effectiveness stopped developing. The concept of system effectiveness was not sufficiently mature to play its intended role in the acquisition process.

Consider these specific points from the literature:

- Dordick identified the tension and lack of consistency between disciplines early on [12];

- Aziz [6] pointed out the confusion in terminology and a lack of organized progress, particularly in the area of performance analysis;

- Too many people confused the concept with only reliability and maintainability (RAM) modeling;

- The various models shown in Blanchard’s papers were neither fully developed nor integrated into one consolidated model [7,10];

- Coppola [3] considered system effectiveness to be a transient idea and noted that system effectiveness gave way to life cycle cost as the emphasis;

- DoDD 5000.1 came two years after McNamara left office, leading to MIL-STD-721C [21], which removed all references to system effectiveness (supporting Coppola’s point).

Thus, taking the five causal effects together leads to the conclusion that the concept of system effectiveness was not allowed to mature. Development stopped, and people moved on.

4. Discussion

It would be easy to say, given the evidence, that system effectiveness is a failed concept, that the theory is one of failure rather than one of immaturity. However, Habayeb presents a solid case to the contrary [4]. His book presents three applications: hardware system evaluation, organizational development and evaluation, and conflict analysis. In addition, Rudwick identified three positive characteristics of the WSEIAC definition of system effectiveness [30]:

- The definition allows for the determination of the effectiveness of any system type;

- The definition supports the measurement of any system in a hierarchy of systems;

- The definition forces the analysis to focus on the three pillars.

Further, a search on [4] led to new material in Asia, specifically China. The Chinese have adopted the WSEIAC concept, referring to it as the ADC (for availability, dependability, and capability) model (see Note 3). These points further support a theory that the development of system effectiveness stopped before maturity.
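To make the ADC structure concrete, the following is a minimal sketch of how the three pillars are commonly combined in the WSEIAC-style formulation, E = A · D · C, with an availability vector, a dependability matrix, and a capability vector. The function name, the two-state breakdown, and all numerical values are illustrative assumptions and are not taken from the source documents.

```python
from typing import Sequence


def system_effectiveness(
    availability: Sequence[float],
    dependability: Sequence[Sequence[float]],
    capability: Sequence[float],
) -> float:
    """Return E = A . D . C for an n-state system (hypothetical helper).

    availability[i]     : probability the system is in state i at mission start
    dependability[i][j] : probability of going from state i to state j during the mission
    capability[j]       : probability of mission success given the system ends in state j
    """
    n = len(availability)
    if len(dependability) != n or any(len(row) != n for row in dependability):
        raise ValueError("dependability must be an n x n matrix")
    if len(capability) != n:
        raise ValueError("capability must have n entries")
    # Sum over every (start state, end state) pair.
    return sum(
        availability[i] * dependability[i][j] * capability[j]
        for i in range(n)
        for j in range(n)
    )


# Assumed two-state example: state 0 = operable, state 1 = failed.
A = [0.9, 0.1]                   # illustrative values only
D = [[0.95, 0.05], [0.0, 1.0]]   # no repair during the mission (assumption)
C = [0.8, 0.0]                   # a failed system accomplishes nothing (assumption)
E = system_effectiveness(A, D, C)
print(f"System effectiveness: {E:.3f}")
```

With these assumed inputs, only the operable-to-operable path contributes, so the result is about 0.684. The sketch shows why capability cannot be dropped from the product: a system with high availability and dependability still scores low if the capability term is small.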

4.1. The Theory of Immaturity

The outcome of the selective coding step is the Theory of Immaturity. How can a concept that is in its 60s be immature? Simple. What may be signs of failure can also be signs that the idea never reached its full potential. That is the contention here. The literature shows that system effectiveness may have been a victim of a short attention span within the U.S. Department of Defense environment. The era of support for system effectiveness began and ended with McNamara. Additionally, there were four variants of the system effectiveness model in play: one model for the Army, one for the Air Force, and two for the Navy [7,10]. Four models for the same purpose do not indicate maturity. Confusion over the mathematical concepts does not indicate maturity. Finally, the services lost control of the acquisition process when the Secretary of Defense implemented DoDD 5000.1 in 1971. The disappearance of system effectiveness from DoDD 5000.1 indicates a lack of support. A mature process would most likely have received support from the engineering community as a whole.

4.2. Threats to the Validity of the Study

Research validity is essential in a study of this type where the result is subjective. Two factors drive the conversation: the literature review and the coding.

The challenge of the literature review is building a comprehensive database. COVID-19 isolation restricted physical visits to libraries as well as access to materials archived on microfiche. In addition, no one was present to make PDF files from the microfiche. Nevertheless, despite the limitations, this research is a comprehensive study of system effectiveness with 708 records in the initial database.

Verification of the coding work occurred at each level of analysis. To mitigate researcher bias, a researcher from another university experienced in grounded theory and knowledgeable about system effectiveness performed a confirmation analysis of the coding. Additionally, ten experienced researchers reviewed the original work that is the basis for this article. Their feedback was incorporated into the final result.

Finally, an evaluation criteria checklist presented as Table 11 guided the grounded theory analysis. The checklist also serves as a guide for the reader to follow the analysis results.

Table 11.

Evaluation Criteria.

4.3. Answering the Research Questions

Table 12 restates the research questions that this paper set out to answer. The initial coding identified thirteen factors that provide an answer to Q1. Chief among these factors is the tension between disciplines. The people involved practiced different disciplines and brought different perspectives and experiences to system effectiveness. Coppola was a reliability person, and their comment about system effectiveness meshes with Dordick’s perspective about the difficulty in having different disciplines set aside their differences. This point is at the top of the list for “Immaturity of Concept”.

Table 12.

Research Questions Revisited.

Q2 has three answers, or themes. The first theme emphasized reliability and maintainability (RAM) at the expense of capability. The second theme was life cycle cost, which incorporated the cost of RAM. Again, the capability pillar was not in the picture. The third theme focused on sustainment, which encapsulated the first two themes. However, it became more about a sustainable system than a capable system.

The following set of changes answers Q3. First, the focus shifted to life cycle cost (LCC); while important, LCC focused only on sustainment. The replacement for LCC was the Cost and Operational Effectiveness Analysis (COEA) approach. The COEA followed a rigid, prescribed approach and gave way to the more flexible analysis of alternatives (AoA) concept, with its broader focus on operational effectiveness and life-cycle cost studies of alternative materiel solutions.

The concept of system effectiveness is always lurking in the background, as exemplified by the Operational Availability Handbook NAVSO P-7001 of May 2018. However, there are weaknesses in the concept discussed in the handbook. Specifically, there is an issue with both the lexicon and taxonomy presented in the handbook. Thus, there is a need for an ontology to provide structure and organization for system effectiveness. An ontology would provide a framework for the quantification of system effectiveness by resolving the four existing models into one. An ontology would also provide structure for resolving the system effectiveness/measure of effectiveness confusion. One model resolves the mathematical framework issues as well. The pillars should be primary by definition. Readiness and RAM have their role but they need to be placed in perspective [31]. A taxonomy of system effectiveness would be a step in the right direction. Resolution of these issues and needs would move system effectiveness from “tribal lore” to established fact.

4.4. Summary of Research Results

This research is a significant contribution to the Systems Engineering Body of Knowledge because:

- The research is the most comprehensive published literature search from the origin of system effectiveness to the present;

- The research is a synthesis of the role of system effectiveness in U.S. DoD systems acquisition and sustainment as it evolved over time. The research is the only publication with this breadth and depth, using an innovative research methodology that integrates a structured literature review with grounded theory and historiography;

- The methodology itself is a novel contribution to the engineering community.

4.4.1. Conclusions

There are four conclusions that are supported by the research:

- System effectiveness does not serve its original intent and purpose: Cited definitions from official documents show a lack of understanding of how the pillars truly defined system effectiveness. The definitions do not match each other, let alone follow the original system effectiveness concept. In addition, the current definitions do not provide the requisite mathematical framework for assessing system effectiveness.

- Source documents provide insight as to why system effectiveness plays a diminished role in acquisition and sustainment: The 13 patterns derived from the analysis of the source documents combined with the subsequent grounded theory and historiographic analysis clearly depict the shift from system effectiveness to RAM with time.

- The original approach to system effectiveness may have relevance to today’s issues and challenges: The conclusions point to the need for a rebirth in system effectiveness research and use. The concept has application to both the acquisition and sustainment engineering communities [32]. Future work should develop an ontology and taxonomy that will provide a defined foundation to inform the application of system effectiveness and its methods.

- An integrated research methodology is valuable for making sense of conflicting information spread over time: The selected research methods served to clarify how system effectiveness came about, the attempts to make it viable, and how it meandered from the original concept. The integrated mixed-methods approach led to the Theory of Immaturity by identifying patterns, concepts, and causal relationships. The research methods also clarified future research directions and highlighted issues and ideas that can improve the understanding and usage of system effectiveness.

4.4.2. Recommendations for Improving Systems Effectiveness

Specifically, there are five recommendations to bolster system effectiveness:

- Build the ontology;

- Refine the four system effectiveness models into one model;

- Establish the limits of the mathematical model;

- Explicitly define the difference between system effectiveness and measures of effectiveness;

- Document the revisions and enforce usage of the concepts.

Make system effectiveness explicit by putting RAM and readiness in perspective.

4.4.3. Future Work

A WSEIAC-type working group is planned to develop requirements and a notional plan for a Systems Effectiveness Framework and associated methodology, with the goal of increasing mission success during design and development of new U.S. defense systems. To ensure a neutral system engineering approach, a university team from NPS and SMU will lead the working group, which will consist of U.S. defense sector representatives from both government and industry, selected for the needed expertise. The research reported in this paper, with its extensive references, will be the primary source for guiding the working group.

Author Contributions

Conceptualization, J.G. and J.S.; methodology, J.G.; formal analysis, J.G.; investigation, J.G.; data curation, J.G.; writing—original draft preparation, J.G.; writing—review and editing, J.G. and J.S.; visualization, J.G.; supervision, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

The authors would like to thank Gary Langford of Portland State University for his enthusiastic support, especially with the review of the coding of the data.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| U.S. | United States |
| WSEIAC | Weapon System Effectiveness Industry Advisory Committee |
| CE | cost-effectiveness |
| SE | system effectiveness |
| IC | initial cost |
| SC | sustainment cost |
| LCC | life cycle cost |
| MOE | measures of effectiveness |
| DTIC | Defense Technical Information Center |
| AAF | Adaptive Acquisition Framework |
| COTS | commercial off-the-shelf |
| JCIDS | Joint Capabilities Integration and Development System |
| RAM | reliability and maintainability |
| DoDD | Department of Defense Directive |
| ADC | availability, dependability, capability |
| COEA | Cost and Operational Effectiveness Analysis |
| AoA | analysis of alternatives |
| COPLIMO | constructive product line investment model |

Notes

| 1 | Weapon System Effectiveness Industry Advisory Committee |

| 2 | Benjamin Blanchard published nine books related to system effectiveness. He is frequently cited as an authority |

| 3 | The search used “Chinese and the WSEIAC model”. |

References

1. Green, J. An Investigation of the Role of System Effectiveness in the Acquisition and Sustainment of US Defense Systems: 1958 to 2021. TechRxiv 2021.

2. Green, J. An Investigation of the Role of System Effectiveness in the Acquisition and Sustainment of U.S. Defense Systems: 1958 to 2021. In Proceedings of the Nineteenth Annual Acquisition Research Symposium, Monterey, CA, USA, 11–12 May 2022.

3. Coppola, A. Reliability engineering of electronic equipment a historical perspective. IEEE Trans. Reliab. 1984, R-33, 29–35.

4. Habayeb, A. Systems Effectiveness; Pergamon Press: Oxford, UK, 1987.

5. Wasson, C. System Engineering Analysis, Design, and Development, 2nd ed.; Wiley: Hoboken, NJ, USA, 2015.

6. Aziz, A. System Effectiveness in the United States Navy. Nav. Eng. J. 1967, 79, 961–971.

7. Blanchard, B. System Worth, System Effectiveness, Integrated Logistics Support, and Maintainability. IEEE Trans. Aerosp. Electron. Syst. 1967, AES-3, 186–194.

8. Air Force Systems Command. Weapon System Effectiveness Industry Advisory Committee (WSEIAC) Final Report of Task Group VI, Chairman’s Final Report (Integrated Summary); Air Force Systems Command: Washington, DC, USA, 1965.

9. Available online: https://www.dau.edu/glossary/Pages/Glossary.aspx (accessed on 13 August 2022).

10. Blanchard, B. Cost Effectiveness, System Effectiveness, Integrated Logistics Support, and Maintainability. IEEE Trans. Reliab. 1967, R-163, 117–126.

11. Air Force Systems Command. Weapon System Effectiveness Industry Advisory Committee (WSEIAC) Final Report of Task Group II, Prediction—Measurement; Air Force Systems Command: Washington, DC, USA, 1965.

12. Dordick, H.S. An Introduction to System Effectiveness; RAND Report P-3237; The Rand Corporation: Santa Monica, CA, USA, 1965.

13. Reed, C.M.; Fenwick, A. A Consistent Multi-User Framework for Assessing System Performance. arXiv 2010, arXiv:1011.2048.

14. Fowler, P. Analytic Justification of the WSEIAC Formula. IEEE Trans. Reliab. 1969, R-18, 29.

15. The Operational Availability Handbook, NAVSO P-7001; Assistant Secretary of the Navy: Washington, DC, USA, 2018.

16. OPNAVINST 3000.12A, Operational Availability Handbook. 2 September 2003 (Cancelled). Available online: https://www.wdfxw.net/doc25688776.htm (accessed on 13 August 2022).

17. Department of the Army. Engineering Design Handbook, System Analysis and Cost-Effectiveness; AMCP-706-191; Army Materiel Command: Washington, DC, USA, 1971.

18. Green, J. Establishing System Measures of Effectiveness. Available online: https://acqnotes.com/wp-content/uploads/2014/09/Establishing-System-Measures-of-Effectiveness-by-John-Green.pdf (accessed on 13 August 2022).

19. Tillman, F.A.; Hwang, C.L.; Kuo, W. System Effectiveness models: An annotated bibliography. IEEE Trans. Reliab. 1980, R-29, 295–304.

20. Green, J. An Investigation of the Role of System Effectiveness in the Acquisition and Sustainment of U.S. Defense Systems: 1958 to 2021. Ph.D. Thesis, Southern Methodist University, Dallas, TX, USA, 2022.

21. Johnson, B.; Holness, K.; Porter, W.; Hernandez, A.A. Complex Adaptive Systems of Systems: A Grounded Theory Approach. Grounded Theory Rev. 2018, 17, 52–69. Available online: https://groundedtheoryreview.com/wp-content/uploads/2019/01/06-Johnson-Complex-GTR_Dec_2018.pdf (accessed on 13 August 2022).

22. Johnson, B. A Framework for Engineered Complex Adaptive Systems of Systems. Ph.D. Thesis, Naval Postgraduate School, Monterey, CA, USA, 2019.

23. Stol, K.; Ralph, P.; Fitzgerald, B. Grounded theory in software research: A critical review and guidelines. In Proceedings of the International Conference on Software Engineering Proceedings, Austin, TX, USA, 14–22 May 2016.

24. Babar, M.A.; Zhang, H. Systematic literature reviews in software engineering: Preliminary results from interviews with researchers. In Proceedings of the 3rd International Symposium on Empirical Software Engineering and Measurement, Lake Buena Vista, FL, USA, 15–16 October 2009; pp. 346–355.

25. Hoda, R. Socio-Technical Grounded Theory for Software Engineering. arXiv 2021, arXiv:2103.14235.

26. Available online: http://researcharticles.com/index.php/data-triangulation-in-qualitative-research/ (accessed on 13 August 2022).

27. Kamel, F. The use of grey literature review as evidence for practitioners. ACM SIGSOFT Softw. Eng. Notes 2019, 44, 23.

28. Wohlin, C. Guidelines for snowballing in systematic literature studies and a replication in software engineering. In Proceedings of the EASE ’14, London, UK, 13–14 May 2014; Available online: https://www.overleaf.com/project/6313d75eef8761e56a2762fc (accessed on 13 August 2022).

29. McCall, C.; Edwards, C. New perspectives for implementing grounded theory. Stud. Eng. Educ. 2021, 1, 93–107.

30. Rudwick, B. Systems Analysis for Effective Planning: Principles and Cases; Wiley: Hoboken, NJ, USA, 1969.

31. Green, J.M.; Stracener, J. A Framework for a Defense Systems Effectiveness Modeling and Analysis Capability: Systems Effectiveness Modeling for Acquisition. 2019. Available online: https://dair.nps.edu/handle/123456789/1757 (accessed on 13 August 2022).

32. Madachy, R.; Green, J. Naval Combat System Product Line Architecture Economics. In Proceedings of the 16th Annual Acquisition Research Symposium, Monterey, CA, USA, 8 May 2019.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).