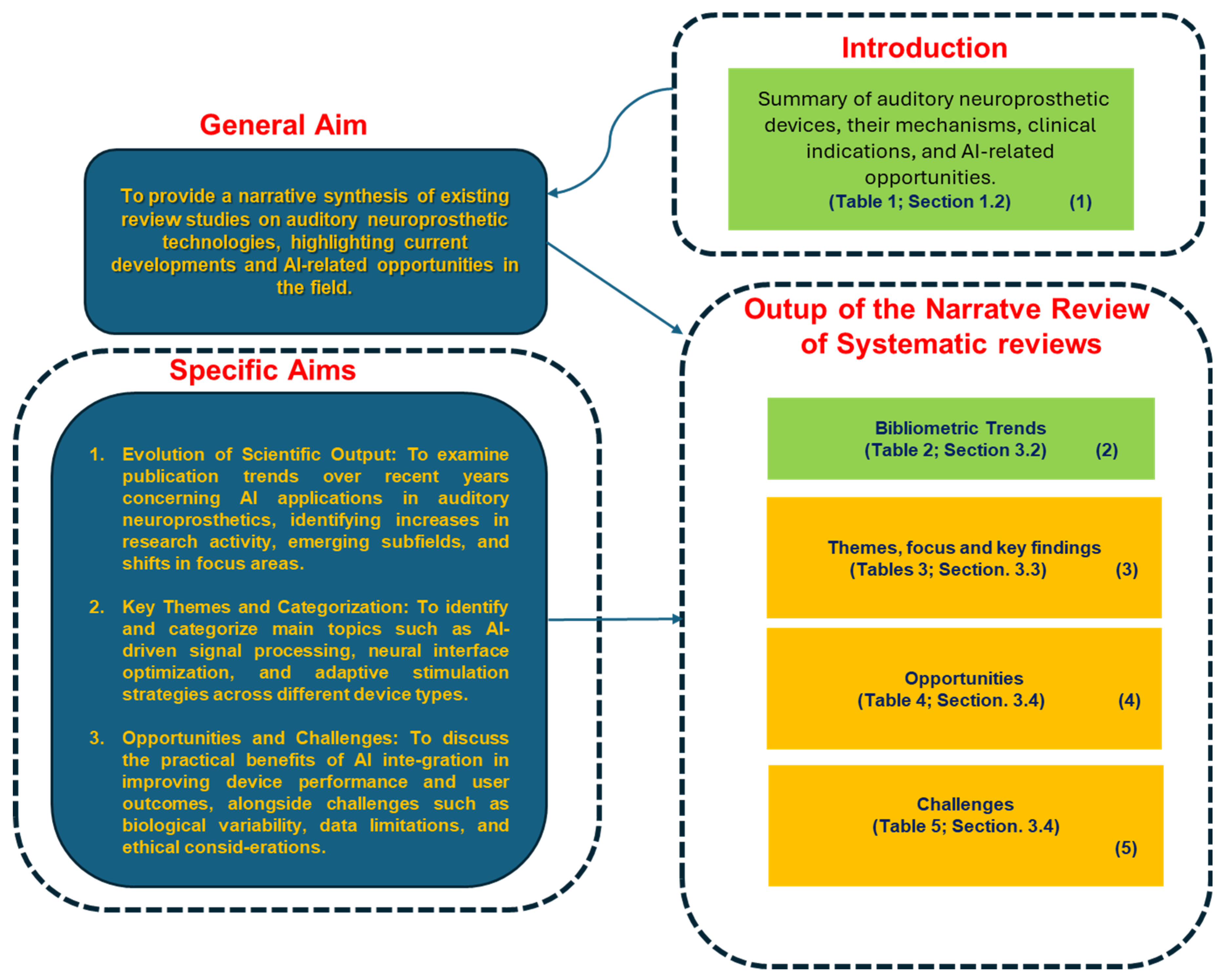

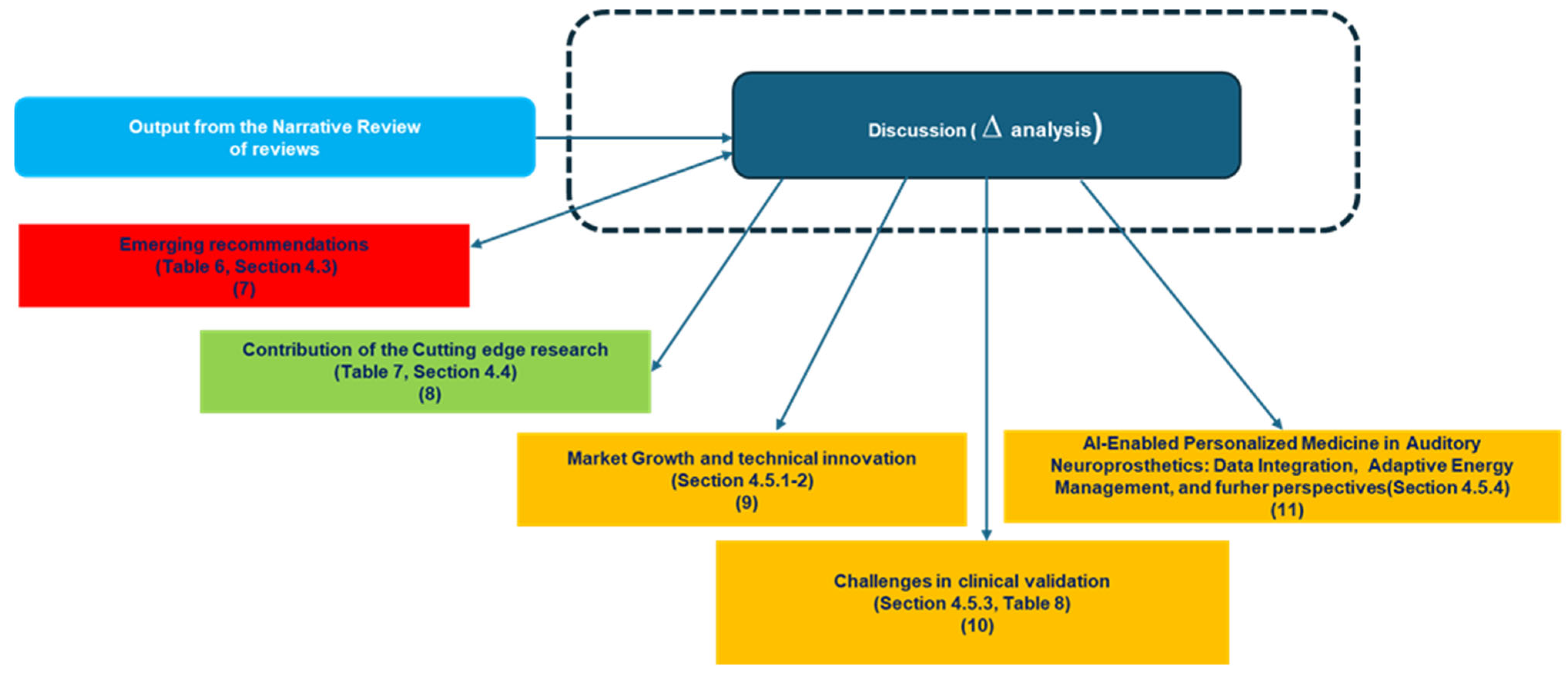

The discussion interprets the findings of this narrative review, linking evidence synthesis with forward-looking insights on AI integration into auditory neuroprosthetics. Its purpose is twofold: first, to consolidate the knowledge gained from reviewing both mature and emerging domains; second, to map the field’s opportunities, limitations, and strategic directions in light of cutting-edge research. It is organized into six sections.

Overall, this structure allows the discussion to move seamlessly from summarizing key contributions, through critical analysis of gaps, to forward-looking perspectives and methodological reflection, providing a comprehensive framework to guide research, clinical practice, and innovation in AI-enhanced auditory neuroprosthetics.

4.2. Contribution and Summary of Key Findings

This narrative review of reviews offers a consolidated and biologically anchored synthesis of recent research trends at the intersection of artificial intelligence (AI) and auditory neuroprosthetics. By focusing on published reviews rather than primary studies, the work aims to capture both the maturation of established topics and the conceptual emergence of newer, cross-disciplinary domains. This higher-level perspective enables the identification of methodological convergences, thematic clusters, and gaps that are often obscured when focusing solely on experimental results.

The reviewed literature [16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33] underscores how AI is being increasingly integrated into several aspects of auditory neuroprosthetic care, particularly in cochlear implants and related systems. Mature areas include predictive modeling of user outcomes [16,17,22], biologically inspired signal processing [18,19,32], and AI-supported surgical planning [27,31]. These domains reflect a growing synergy between data-driven approaches and core biological principles (such as neural plasticity, cochlear anatomy, and auditory nerve integrity), which has begun to shape both device design and rehabilitation protocols.

Importantly, the review also points to less explored but highly promising areas of research. These include the development of multisensory augmentation systems [21,30], cross-modal neuroplasticity strategies, and AI-enabled remote care infrastructures [26,29]. Such topics remain fragmented across the literature, and their clinical implementation is still in its early stages. Nonetheless, their potential impact, particularly in supporting patient-specific, adaptive, and inclusive solutions, marks them as critical frontiers for future investigation.

By adopting a narrative approach, this review supports a nuanced and flexible mapping of the field, particularly valuable in a domain that evolves rapidly and draws from heterogeneous disciplines. The synthesis presented here does not merely catalog technologies; rather, it clarifies conceptual trajectories and promotes biologically meaningful integrations between AI systems and auditory neural function.

Ultimately, the value of this narrative review lies in its ability to identify stable foundations as well as opportunities for innovation, offering guidance for researchers working across auditory neuroscience, machine learning, and neuroprosthetic design. In doing so, it reinforces the need for research frameworks that remain attentive to biological relevance while embracing computational advancement—a dual imperative that will likely define the next phase of development in this rapidly evolving field.

4.3. Limitations and Gaps

Despite significant progress in integrating AI into auditory neuroprosthetics, several limitations persist that constrain both the generalizability and the clinical utility of current approaches.

First, as repeatedly emphasized [16,17,22,25], the field still suffers from fragmented biological and clinical datasets. Models are often trained on retrospective clinical data lacking high-resolution biological indicators such as patterns of neuronal degeneration, cochlear fibrosis, or neuroplasticity profiles. This data sparsity severely limits the ability to personalize auditory neuroprosthetics.

Second, the absence of standardized, biologically meaningful biomarkers (such as eABRs, ECAPs, or ASSRs) hinders the development of interpretable and adaptive AI systems [23,28,33]. While these electrophysiological markers have demonstrated value for auditory monitoring, they are inconsistently used across centers and underreported in algorithm design, reducing reproducibility.

Third, ethical, regulatory, and algorithmic transparency challenges remain pronounced, particularly when AI models interpret neural signals without a clear explainability framework [20,24,25]. This is especially concerning in neuroprosthetic contexts, where model-driven decisions have direct consequences for patients’ sensory experience and neurological development.

A fourth gap lies in the persistent engineering-centric focus of current systems. Many studies report enhancements in signal-to-noise ratios or classification accuracy [18,32], yet few evaluate how these improvements translate to subjective measures such as speech perception under ecological conditions or user satisfaction, factors deeply tied to biological and cognitive responses.

Notably, AI-driven studies beyond cochlear implants are also scarce. Despite the growing literature, little attention has been given to other auditory or vestibular neuroprosthetic devices (e.g., auditory brainstem implants, electro-haptic aids, or hybrid acoustic-electric interfaces), leaving innovation unevenly distributed across device types.

Finally, broader challenges such as health equity, technological access, and underrepresentation of biological diversity in datasets [26,29] persist and risk reinforcing disparities if not actively addressed.

To support the adoption and future development of AI-enhanced auditory neuroprosthetic devices, including but not limited to cochlear implants, the key recommendations derived from the analysis of identified challenges and gaps are summarized in Table 6.

Table 6. Recommendations to Address Challenges in AI-Driven Auditory Neuroprosthetics.

| # | Challenge | Strategic Recommendation | Ref |
|---|---|---|---|
| 1 | Fragmented biological data | Promote multi-institutional biorepositories including histological, imaging, and omics data | [16,17,22,25] |
| 2 | Lack of standardized biomarkers | Develop consensus guidelines for collection and reporting of ECAPs, eABRs, and ASSRs | [23,28,33] |
| 3 | Regulatory and ethical barriers | Co-develop interpretable AI frameworks with biomedical and regulatory stakeholders | [20,24,25] |
| 4 | Engineering-centric development | Integrate neurocognitive and perceptual metrics into training and evaluation pipelines | [18,32] |
| 5 | Underrepresentation of other devices | Expand AI applications to vestibular implants, auditory brainstem interfaces, etc. | [19,21] |
| 6 | Inequity and limited access | Embed ethical frameworks and data diversity requirements in AI development practices | [26,29] |

4.4. Discussion in Light of Recent Cutting-Edge Research

In light of the limitations and gaps identified through the narrative review of reviews, it becomes not only timely but almost mandatory to explore how recent cutting-edge studies may contribute to addressing these unresolved challenges. This step is a natural progression of the present work, which has highlighted the need to move beyond consolidated evidence and embrace more recent and potentially transformative advances. Following the first key outlined in Box S1 and in line with the adopted methodology, the following studies were selected for further analysis [34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69].

These studies provide concrete examples of how recent research aligns with the strategic recommendations for overcoming barriers in AI-enabled auditory neuroprosthetics (Table 7).

The fragmentation and scarcity of biologically rich datasets, a major bottleneck for personalized auditory neuroprosthetics, are partially alleviated by genetic and anatomical research. For instance, González-Aguado et al. [35] explore Diaphanous Related Formin 1 gene (DIAPH1) mutations linked to sensorineural hearing loss, enriching the biological knowledge base that can inform more tailored AI models. Similarly, investigations into preoperative factors influencing cochlear implant (CI) outcomes [39], and anatomical considerations for individualized implantation [40], further augment biological and clinical data relevant for personalization. However, these efforts remain somewhat siloed and do not yet translate directly into comprehensive multi-modal datasets that can be leveraged for AI training.

The lack of standardized and biologically meaningful biomarkers that are consistently incorporated into AI algorithms, such as ECAPs (Electrically Evoked Compound Action Potentials) or eABRs (Electrically Evoked Auditory Brainstem Responses), continues to impede interpretability and reproducibility. In this context, Garcia and Carlyon’s work [41] introduces a panoramic ECAP method to assess electrode array differences, advancing biomarker utility and standardization. This represents a meaningful step toward embedding richer electrophysiological signals in AI models, potentially improving their biological grounding and clinical relevance.

Ethical and regulatory concerns around AI transparency and explainability remain pressing, especially given the direct impact of AI decisions on patients’ sensory and neurological outcomes. Here, several studies offer encouraging directions. Icoz and Parlak Kocabay [34] evaluate ChatGPT’s role as an informational tool for cochlear implants, highlighting both the potential and the pitfalls of large language models (LLMs) in clinical contexts. More directly related to clinical prediction, Demyanchuk et al. [37] develop a machine learning model to forecast postoperative speech recognition and compare it rigorously against expert clinical judgment; this juxtaposition is crucial to building trust and explainability in AI applications. Moreover, Jehn et al. [42] utilize convolutional neural networks to improve decoding of selective auditory attention, demonstrating how advanced AI can align closely with cognitive and neural processes, thus enhancing interpretability and functional relevance.

While many AI systems focus heavily on engineering metrics like signal-to-noise ratio or classification accuracy, fewer address real-world subjective outcomes such as speech perception in everyday environments. The CCi-MOBILE (Cochlear Implant Mobile) research platform [36] facilitates real-time sound coding studies, supporting more ecologically valid assessments. Combined with predictive models of speech outcomes [37] and analyses of learning curves post-implantation [39], these studies underscore a growing emphasis on integrating neurocognitive and perceptual metrics alongside traditional engineering benchmarks.

Notably absent from this recent literature is significant progress on expanding AI applications beyond cochlear implants to other auditory or vestibular neuroprosthetic devices, indicating a persistent imbalance in innovation distribution.

Broader social concerns such as health equity and access are only tangentially addressed. Hughes et al. [38] emphasize evidence-based strategies for early detection and management of age-related hearing loss, which indirectly relate to equitable care and access to auditory technologies. However, explicit incorporation of ethical frameworks and data diversity considerations in AI development remains underexplored.

Continuing the analysis of recent literature, several cutting-edge studies appear to move in the direction of overcoming some of the limitations highlighted in earlier research on AI-enabled auditory neuroprosthetics [43,44,45,46,47,48,49,50,51,52,53,54,55], offering promising, though not definitive, contributions. Wohlbauer et al. [43] explore combined channel deactivation and dynamic current focusing strategies to improve speech outcomes in cochlear implant users, moving beyond purely signal-to-noise enhancements towards optimizing perceptual benefits in real-world listening environments. This aligns with the recommendation to integrate perceptual metrics into system evaluations.

Liu et al. [44] propose a novel estimation method for dynamic range parameters based on neural response telemetry thresholds. By leveraging electrophysiological markers, the method enhances individualized parameter setting and helps address the lack of standardized, biologically meaningful biomarkers needed for adaptive AI algorithms.

Müller et al. [45] demonstrate that ambient noise reduction algorithms can reduce listening effort and improve speech recognition in noise among MED-EL cochlear implant users, reinforcing the need to incorporate ecological validity and user-centered outcomes in device optimization.

Shew et al. [46] apply machine learning beyond single biomarkers, incorporating multiple physiological and behavioral features to better predict cochlear implant outcomes. This multidimensional modeling approach directly addresses fragmented data and biomarker standardization challenges, promoting more interpretable and robust AI systems.

Zhao et al. [47] and Sinha et al. [48] highlight the importance of neural communication patterns and early cognitive development prediction in cochlear implant recipients, suggesting that integrating neural connectivity and developmental biomarkers can enhance personalization and long-term functional predictions.

Avallone et al. [49] and Schraivogel et al. [50] focus on automated surgical planning and radiation-free electrode localization through impedance telemetry, advancing engineering aspects toward safer and more precise implantations, which can improve patient-specific outcomes and reduce procedural risks.

Skarżyńska et al. [51] introduce local steroid delivery via an inner ear catheter during implantation, a novel clinical intervention with potential to modulate the biological environment, thus addressing the need for richer biological data integration.

Franke-Trieger et al. [52] develop a voltage matrix algorithm to detect electrode misplacement intraoperatively, enhancing procedural accuracy and implant functionality.

Yuan et al. [53] utilize preoperative brain imaging to predict auditory skill outcomes in pediatric patients, representing a step toward incorporating central nervous system biomarkers and improving prognostic modeling.

Finally, Gnadlinger et al. [54] report on an intelligent tutoring system integrated into game-based auditory rehabilitation, offering a user-centered, adaptive training approach that may improve long-term functional gains and patient engagement.

Together, these studies contribute novel methodologies and clinical tools that advance the field beyond existing limitations: improving biological data richness and standardization, integrating multi-dimensional AI models, enhancing interpretability and explainability, extending AI support across the surgical and rehabilitative stages of cochlear implantation, and emphasizing patient-centered outcomes and ethical considerations.

Several further recent studies [55,56,57,58,59,60,61,62,63,64,65,66,67,68,69] provide valuable contributions that address persisting gaps or open promising pathways in the field of cochlear implants (CIs), particularly at the intersection of engineering innovation, personalized medicine, and digital health.

Some works aim to enhance intraoperative precision. Babajanian et al. [55] propose a novel algorithm for analyzing multi-frequency electrocochleography data to better monitor electrode placement during surgery, directly responding to the gap in real-time feedback tools. Similarly, Siebrecht et al. [64] and Radomska et al. [65] develop automated segmentation tools and CT-based evaluations that improve anatomical guidance and preoperative planning, bridging technical and anatomical knowledge for improved outcomes.

Regarding safety and comorbidity management, Zarchi et al. [56] raise a critical issue about transcranial stimulation in CI users, calling for clearer safety guidelines in neuromodulation practices. Their case-based approach underlines the need for more nuanced risk assessments in CI populations, especially as neurostimulation techniques expand.

Advances in outcome prediction and personalization are well represented. Wohlbauer et al. [57] explore channel deactivation strategies for speech-in-noise performance, offering a flexible solution that could complement individualized programming. Chen et al. [58] use functional near-infrared spectroscopy to predict pediatric outcomes, expanding the tools available for tailoring expectations and interventions in prelingual deafness. In parallel, Patro et al. [62] validate a machine learning model leveraging multifactorial preoperative data, demonstrating its utility in personalized prognosis, while Sinha and Azadpour [60] assess acoustic simulations through deep learning for real-time speech evaluation. These studies collectively target the gap in reliable, patient-specific outcome modeling.

Technological innovation in CI hardware and software also continues to evolve. Sahoo et al. [61] compare conventional versus AI-enhanced speech processors, showing improvements in audiological outcomes. Deng et al. [63] provide real-world data on the Cochlear Nucleus 7 system, offering a rare post-market evaluation of digital upgrades. Henry et al. [59] explore how speech intelligibility varies based on noise-reduction training masks, enriching the understanding of algorithmic tuning under ecological conditions.

Meanwhile, Yusuf et al. [67] and Aktar Uğurlu & Uğurlu [66] contribute from a neurodevelopmental and bibliometric standpoint, respectively. The former identifies altered neural coupling in congenital deafness, reinforcing the need for early intervention and adaptive stimulation strategies. The latter traces publication trends in hearing loss, revealing shifts in research emphasis and underexplored subdomains, a meta-perspective that informs funding and policy directions.

On the translational side, Cramer et al. [68] describe a reproducible method for aligning CI electrode arrays during insertion trials, which can support standardization in device testing. Finally, Wisotzky et al. [69] highlight the role of XR-based telepresence in surgical training and assistance, pointing to future integration of immersive technologies in CI surgery and education, a field that remains nascent but full of potential.

Together, these studies enrich the multidimensional landscape of cochlear implantation by addressing technical, anatomical, algorithmic, and neurodevelopmental gaps. They emphasize the growing convergence of AI, precision diagnostics, surgical robotics, and immersive environments in redefining patient care and clinical decision-making for auditory prosthetics.

Table 7. Recent Studies Addressing Challenges and Strategic Recommendations for AI-enabled Auditory Neuroprosthetics (# indicates the recommendation number in Table 6).

| Study | Key Contribution | Linked Strategic Recommendation |
|---|---|---|
| [34] | Evaluates ChatGPT as an information source for cochlear implants, assessing both accuracy and reproducibility, highlighting potentials and limitations of large language models (LLMs) in clinical decision-making. | # 3 Regulatory and ethical barriers: emphasizes AI transparency, explainability, and building trust in clinical applications. |
| [35] | Investigates DIAPH1 gene mutations in patients with sensorineural hearing loss, enriching biological knowledge and enabling more personalized predictive models. | # 1 Fragmented biological data: contributes to multi-institutional datasets for individualized AI modeling. |
| [36] | Describes the CCi-MOBILE platform enabling real-time sound coding and ecologically valid auditory testing in cochlear implant users. | # 4 Engineering-centric development: integrates neurocognitive and perceptual metrics into AI evaluation pipelines. |
| [37] | Develops a machine learning model to predict postoperative speech recognition outcomes, validated against expert clinical judgment to enhance model interpretability. | # 3 Regulatory and ethical barriers: supports explainable AI and informed clinical decision-making. |
| [38] | Provides evidence-based strategies for early detection and management of age-related hearing loss, addressing aspects of access and equity. | # 6 Inequity and limited access: informs equitable access strategies and data diversity considerations. |
| [39] | Analyzes preoperative factors affecting learning curves in postlingual cochlear implant recipients, informing individualized rehabilitation approaches. | # 1 Fragmented biological data: adds clinically relevant preoperative variables to support AI modeling. |
| [40] | Discusses anatomical considerations for achieving optimized individualized cochlear implant outcomes. | # 1 Fragmented biological data: enriches anatomical datasets for personalized AI predictions. |
| [41] | Introduces panoramic ECAP method to assess electrode array differences, advancing biomarker standardization for AI integration. | # 2 Lack of standardized biomarkers: enables consistent electrophysiological signal incorporation into AI algorithms. |
| [42] | Applies convolutional neural networks to improve decoding of selective auditory attention in cochlear implant users, linking neural activity to perception. | # 4 Engineering-centric development: incorporates neurocognitive and perceptual features into AI evaluation. |
| [43] | Combines channel deactivation and dynamic current focusing to improve speech outcomes in real-world listening conditions. | # 4 Engineering-centric development: emphasizes perceptual and ecological optimization beyond traditional engineering metrics. |
| [44] | Proposes dynamic range estimation based on neural response telemetry thresholds to optimize individualized cochlear implant parameters. | # 2 Lack of standardized biomarkers: leverages electrophysiological signals to enhance AI model grounding. |
| [45] | Demonstrates that ambient noise reduction reduces listening effort and improves speech recognition in noise for MED-EL cochlear implant users. | # 4 Engineering-centric development: prioritizes ecological validity and user-centered outcomes in AI training. |
| [46] | Uses multiple physiological and behavioral features in ML models to predict cochlear implant outcomes, moving beyond single biomarkers. | # 1 Fragmented biological data and # 2 Lack of standardized biomarkers: supports robust, multidimensional AI modeling. |
| [47] | Highlights asymmetric inter-hemisphere neural communication contributing to early speech acquisition in toddlers with cochlear implants. | # 1 Fragmented biological data: integrates neural connectivity and developmental biomarkers for AI personalization. |
| [48] | Predicts long-term cognitive and verbal development in prelingual deaf children using six-month performance assessments. | # 1 Fragmented biological data: incorporates early performance measures to improve individualized predictive models. |
| [49] | Investigates automated cochlear length and electrode insertion angle predictions for surgical planning, optimizing patient-specific implantation. | # 4 Engineering-centric development: enhances safety, precision, and outcome optimization in AI-assisted planning. |
| [50] | Develops radiation-free localization of cochlear implant electrodes using impedance telemetry, improving intraoperative accuracy. | # 4 Engineering-centric development: improves procedural safety and integration of engineering and clinical metrics. |
| [51] | Explores local steroid delivery to the inner ear during cochlear implantation to modulate the biological environment. | # 1 Fragmented biological data: provides additional biologically relevant data for AI-based predictive modeling. |
| [52] | Introduces a voltage matrix algorithm to detect cochlear implant electrode misplacement intraoperatively, enhancing procedural safety. | # 4 Engineering-centric development: integrates precise intraoperative monitoring for improved outcomes. |
| [53] | Uses preoperative brain imaging to predict auditory skill outcomes after pediatric cochlear implantation. | # 1 Fragmented biological data: enriches datasets with neuroimaging biomarkers for personalized AI predictions. |
| [54] | Incorporates an intelligent tutoring system into game-based auditory rehabilitation, validating AI-assisted training for adult CI recipients. | # 4 Engineering-centric development: integrates cognitive and perceptual metrics into rehabilitation-focused AI systems. |
| [55] | Develops a multi-frequency electrocochleography algorithm to monitor electrode placement during cochlear implant surgery. | # 2 Lack of standardized biomarkers: standardizes intraoperative electrophysiological measures for AI integration. |
| [56] | Case study evaluating safety implications of cochlear implants with transcranial stimulation. | # 3 Regulatory and ethical barriers: informs safety and ethical considerations in AI-guided procedural decisions. |
| [57] | Evaluates speech-in-noise performance using combined channel deactivation and dynamic focusing in adults with cochlear implants. | # 4 Engineering-centric development: emphasizes perceptual and real-world outcome optimization. |
| [58] | Predicts individualized postoperative cochlear implantation outcomes in children using functional near-infrared spectroscopy (fNIRS). | # 1 Fragmented biological data: incorporates neuroimaging and physiological biomarkers for AI personalization. |
| [59] | Investigates the impact of mask type on speech intelligibility and quality in cochlear implant noise reduction systems. | # 4 Engineering-centric development: integrates user-centered metrics to improve AI-assisted sound processing. |
| [60] | Employs deep learning to evaluate speech information in acoustic simulations of cochlear implants. | # 4 Engineering-centric development: leverages AI to optimize signal processing and perceptual fidelity. |
| [61] | Compares audiological outcomes between conventional and AI-upgraded cochlear implant processors. | # 4 Engineering-centric development: demonstrates AI-enhanced device performance for clinical relevance. |
| [62] | Uses machine learning and multifaceted preoperative measures to predict adult cochlear implant outcomes in a pilot study. | # 1 Fragmented biological data and # 2 Lack of standardized biomarkers: integrates multidimensional preoperative data into AI models. |
| [63] | Real-world evaluation of improved sound processor technology (Cochlear Nucleus 7) in Chinese CI users. | # 4 Engineering-centric development: supports AI-enhanced device optimization with clinical outcome validation. |
| [64] | Automates segmentation of clinical CT scans of the cochlea, analyzing vertical cochlear profile for implantation planning. | # 1 Fragmented biological data: provides anatomical datasets to enhance AI-guided surgical predictions. |
| [65] | Evaluates round window access using CT imaging for cochlear implantation. | # 1 Fragmented biological data: adds precise anatomical measurements for AI-assisted surgical planning. |
| [66] | Bibliometric exploration of hearing loss publications over four decades to identify research trends and gaps. | # 6 Inequity and limited access: informs strategic planning and equitable distribution of AI research resources. |
| [67] | Shows that congenital deafness reduces alpha-gamma cross-frequency coupling in auditory cortex. | # 1 Fragmented biological data: contributes neurophysiological biomarkers for AI-based predictive modeling. |
| [68] | Presents a method for accurate and reproducible specimen alignment for CI electrode insertion tests. | # 4 Engineering-centric development: ensures standardized experimental setups for AI training and validation. |
| [69] | Explores telepresence and extended reality for surgical assistance and training. | # 4 Engineering-centric development and # 6 Inequity and limited access: improves training access and enhances AI-assisted surgical guidance. |
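
The linkage reported in Table 7 can be tallied to see how the recent studies distribute across the six recommendations of Table 6. The sketch below is illustrative only: the dictionary `LINKS` and the function `tally_links` are names introduced here, and the mapping is transcribed from the third column of Table 7 (keys are reference numbers, values are recommendation numbers).

```python
from collections import Counter

# Study reference number -> linked recommendation number(s),
# transcribed from Table 7 (hypothetical structure, for illustration).
LINKS = {
    34: [3], 35: [1], 36: [4], 37: [3], 38: [6], 39: [1],
    40: [1], 41: [2], 42: [4], 43: [4], 44: [2], 45: [4],
    46: [1, 2], 47: [1], 48: [1], 49: [4], 50: [4], 51: [1],
    52: [4], 53: [1], 54: [4], 55: [2], 56: [3], 57: [4],
    58: [1], 59: [4], 60: [4], 61: [4], 62: [1, 2], 63: [4],
    64: [1], 65: [1], 66: [6], 67: [1], 68: [4], 69: [4, 6],
}


def tally_links(links):
    """Count how many study-to-recommendation links each recommendation receives."""
    # Start every recommendation (1..6) at zero so unlinked ones still appear.
    counts = Counter({rec: 0 for rec in range(1, 7)})
    for recs in links.values():
        counts.update(recs)
    return counts


counts = tally_links(LINKS)
for rec in sorted(counts):
    print(f"Recommendation {rec}: {counts[rec]} linked studies")
```

Run as written, the tally leaves recommendation 5 (underrepresentation of other devices) without any linked study, consistent with the absence noted in the text, while recommendations 1 and 4 collect most of the links.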

4.5. Perspective and Future Directions

The future of auditory neuroprosthetics lies at the intersection of growing demand, technological innovation, and stringent regulatory frameworks. This section explores six key dimensions that will shape the evolution of the field: market growth and demographic drivers, the integration of artificial intelligence, regulatory and standardization frameworks, data-sharing platforms with privacy-preserving approaches, enhanced signal processing, and innovative energy-supply systems (alongside continuing technological developments such as miniaturization and improved materials). Each pillar underscores the challenges, opportunities, and strategic directions necessary for the development of neuroprosthetics that are more effective, safer, and sustainable, while pushing the boundaries of human–machine integration.

4.5.1. Market Growth and Demographic Drivers

In outlining the future of auditory neuroprosthetics, three interrelated dimensions emerge as critical: the strong and sustained market growth driven by demographic and technological factors; the crucial role of AI integration supported by evolving regulatory and standardization frameworks; and the inherent unpredictability of AI advancements that demands ongoing vigilance and adaptive governance.

The auditory neuroprosthetics market is experiencing significant growth and is projected to continue expanding over the coming years. According to Mordor Intelligence, the global neuroprosthetics market is expected to reach USD 13.43 billion in 2025 and grow at a compound annual growth rate (CAGR) of 11.83%, reaching USD 23.49 billion by 2030 [70]. Similarly, the Business Research Company forecasts the market size to be USD 11.72 billion in 2025, with a revenue forecast of USD 20.61 billion by 2034, reflecting a CAGR of 15.2% [71]. This growth is driven by several factors, including the increasing prevalence of hearing impairments, advancements in implantable technologies, and a growing aging population. As the demand for effective auditory solutions rises, there is a corresponding need for innovative technologies to enhance the performance and adaptability of auditory neuroprosthetics.
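
The growth figures above follow the standard compound-growth relation, end = start × (1 + CAGR)^years. As a minimal arithmetic sketch (the function names `project` and `implied_cagr` are introduced here for illustration and come from neither report), the quoted figures can be checked for internal consistency:

```python
def project(start_value, cagr, years):
    """Project a value forward under a constant compound annual growth rate."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value, end_value, years):
    """Back out the constant annual growth rate linking two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted from [70]: USD 13.43 bn in 2025, CAGR 11.83%, horizon 2030.
mordor_2030 = project(13.43, 0.1183, 5)
print(f"Projected 2030 value: USD {mordor_2030:.2f} bn")

# Figures quoted from [71]: USD 11.72 bn in 2025, USD 20.61 bn by 2034.
brc_rate = implied_cagr(11.72, 20.61, 2034 - 2025)
print(f"Implied 2025-2034 CAGR: {brc_rate:.1%}")
```

With the values from [70], the projection lands at about USD 23.49 billion, matching the quoted 2030 figure. For the values from [71] as quoted here, the rate implied over 2025 to 2034 is roughly 6.5% per year, which is lower than the reported 15.2%; the forecast horizon or base year may therefore be worth checking against the original report.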

4.5.2. AI Integration and Technological Innovation

Artificial intelligence (AI) is poised to play a pivotal role in this evolution. AI algorithms enable personalized sound processing, real-time adaptation to environmental changes, and improved user outcomes by learning from individual auditory experiences. The integration of AI into auditory neuroprosthetics is expected to lead to more sophisticated and effective devices, further driving market growth and adoption. For example, the market intelligence report by Grand View Research projects a compound annual growth rate (CAGR) exceeding 10% for the neuroprosthetics market through 2030 [72]. This is largely propelled by advances in AI and machine learning that improve device functionality and patient personalization.

It is therefore essential to move toward the routine integration of AI technologies within auditory neuroprosthetic systems. This requires not only technical innovation but also robust regulatory and standardization frameworks to ensure safety, interoperability, and ethical alignment.

4.5.3. Regulatory and Standardization Landscape: Challenges in Clinical Validation

The integration of artificial intelligence (AI) into neuroprosthetic devices increasingly intersects with evolving regulatory frameworks and international standardization efforts, shaping the development of highly reliable, safe, and ethically robust technologies. Global regulatory agencies are actively delineating approaches to ensure AI-enabled medical devices meet stringent requirements for clinical safety, performance validation, transparency, and accountability. Within the European Union, the Medical Device Regulation (MDR) 2017/745 [73] establishes rigorous standards for clinical evaluation, post-market surveillance, and risk management throughout the device lifecycle, while the recently adopted AI Act [74] introduces a risk-stratified framework (commonly termed the “double traffic light” model) that classifies AI systems according to potential patient, provider, and societal risk. These frameworks are particularly critical in high-stakes medical domains, including auditory neuroprosthetics, where algorithmic errors or systemic bias can directly impact neural interfacing and auditory perception.

International standardization initiatives provide technical and procedural guidance for trustworthy AI implementation in clinical contexts. ISO/IEC standards, including ISO/IEC 22989 on AI terminology [75], ISO/IEC 23894 on AI system risk management [76], and ISO 81001-1 on health software safety [77], define best practices encompassing algorithm validation, data integrity, model robustness, reproducibility, interpretability, and continuous post-deployment monitoring. Complementary standards, such as IEC 60601-1-11:2015+A1:2020 [78] on electrical safety and essential performance for medical devices in home healthcare environments, and ISO/IEC 27001:2022 [79] on information security management, further mitigate operational and cybersecurity risks inherent to AI-driven medical devices. These standards collectively facilitate consistent, transparent AI behavior, minimizing adverse outcomes, enhancing clinical interpretability, and enabling interoperability across healthcare infrastructures.

AI integration with personalized medicine in auditory neuroprosthetics poses specific challenges for clinical validation. Algorithms must adapt to individual auditory profiles, neural plasticity, and real-world environmental variability, demanding rigorous testing across diverse patient populations. Standardized frameworks enable structured clinical evaluation, multicenter trials, cross-institutional data aggregation, and generalization of AI models, thereby supporting reliable personalization strategies while maintaining patient safety and reproducibility. Moreover, ethical principles—including patient consent, fairness, privacy, and accountability—are embedded in regulatory measures, fostering public trust and responsible adoption in clinical practice.

The harmonization of regulatory and standardization measures underpins patient safety and catalyzes innovation. Clear requirements for algorithmic performance, data governance, and clinical validation encourage the deployment of advanced AI algorithms, adaptive signal processing systems, and real-time personalization strategies essential for auditory rehabilitation. Given the rapid evolution of AI methodologies, these frameworks must adopt iterative, adaptive governance approaches, incorporating novel algorithms, emerging data modalities, and real-world evidence to ensure sustained efficacy, safety, and ethical compliance. In this context, the interplay of regulation, technical standardization, and ethical oversight serves both as a safeguard and as a strategic enabler, allowing AI-enabled auditory neuroprosthetics to achieve maximal therapeutic impact while advancing patient-centered outcomes.

Table 8 presents a summary of the cited regulatory frameworks. Other relevant standards not specific to AI, such as ISO 13485 (quality management), ISO 14971 (risk management), and IEC 62304 (medical device software lifecycle), among others, also apply but are not included here for brevity.

Table 8. Key Regulatory and Standardization Frameworks for AI Auditory Neuroprosthetic Devices.

| Framework/Standard | Main Scope | Key Objectives | Impact on Auditory Neuroprosthetic Devices | Devices Affected | Practical AI Implementation Examples |
|---|---|---|---|---|---|
| MDR (EU Medical Device Regulation) 2017/745 | Medical device regulation | Clinical safety, performance, risk assessment, post-market surveillance | Ensures safety and performance of AI-enabled auditory neuroprostheses | CI, ABI, AMI, HAT, BAHS, experimental devices | Pre-market approval; continuous monitoring of device performance and patient outcomes |
| AI Act (EU) | AI system regulation | Risk-based classification (“double traffic light” with the MDR), transparency, accountability | Regulates high-risk AI algorithms, ensuring robustness, reliability, and ethical deployment in AI-enabled auditory devices | CI, ABI, AMI, HAT, BAHS, experimental devices | Adaptive AI sound processing and neural stimulation algorithms; ethical deployment in high-risk devices |
| ISO/IEC 22989 | AI terminology | Standardization of AI terms and concepts | Provides common language for describing AI functions and models across devices | CI, ABI, AMI, HAT, BAHS | Shared terminology for neural signal processing, ML models for auditory scene analysis |
| ISO/IEC 23894 | AI risk management | Guidelines on identifying, assessing, and mitigating AI-related risks | Supports evaluation of AI reliability, failure modes, and clinical safety | CI, ABI, AMI, HAT, BAHS, experimental devices | Risk assessment for predictive models controlling stimulation patterns |
| ISO 81001-1 | Health software | Safety, quality, lifecycle management of healthcare software | Ensures safe software development and deployment for AI-enabled devices | CI, ABI, AMI, HAT, BAHS | Safe AI firmware updates, software maintenance, patient safety |
| IEC 60601-1-11:2015+A1:2020 | Electrical safety | Protection from electrical hazards | Guarantees electrical safety for AI-integrated auditory devices in home healthcare environments | CI, ABI, AMI, HAT, BAHS | Integration of AI-controlled signal processors and electrode arrays ensuring electrical safety |
| ISO/IEC 27001:2022 | Information security | Secure data management | Protects patient data used for training, calibration, and operation of AI systems | CI, ABI, AMI, HAT, BAHS, experimental devices | Secure cloud-based federated learning, privacy-compliant data sharing |

4.5.4. AI-Enabled Personalized Medicine in Auditory Neuroprosthetics: Data Integration, Adaptive Energy Management, and Future Perspectives

The development of AI-driven auditory neuroprosthetics critically depends on access to large, high-quality, and diverse datasets, which enable models to generalize across patient populations while supporting personalized medicine interventions. Secure and standardized data-sharing platforms allow AI algorithms to learn from multi-modal datasets—including auditory thresholds, speech perception tests, cognitive assessments, imaging studies, and device usage patterns—while ensuring patient privacy, forming the backbone of personalized auditory medicine.

For example, the Auditory Implant Initiative (Aii) promotes collaboration among cochlear implant centers. Its HERMES (HIPAA-Secure Encrypted Research Management Evaluation Solution) database securely stores de-identified patient information such as hearing history, demographics, and surgical details, enabling aggregated analyses that enhance AI model reliability and support individualized device programming aligned with personalized medicine principles [80]. Similarly, multimodal research platforms at the Hospital Universitario Virgen Macarena in Seville systematically collect interdisciplinary patient data, providing rich inputs for AI models that adapt stimulation parameters to personalized auditory profiles [81].

Federated learning offers a promising approach for privacy-preserving AI development, allowing models to be trained across decentralized datasets without centralizing sensitive patient information [82]. Complementary techniques, including homomorphic encryption (enabling computations on encrypted data) and differential privacy (introducing statistical noise to prevent re-identification), further safeguard confidentiality while maintaining model generalizability, ensuring that personalized medicine approaches can scale safely across institutions [83].
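As a rough illustration of the federated learning idea described above, the following Python sketch trains a toy linear model across two simulated sites using federated averaging (FedAvg): each site fits the shared model on its own data, and only the resulting parameters are sent for aggregation. Everything in it is an illustrative assumption rather than part of any cited system: the function names, the synthetic two-site data, the learning rate, and the choice of a linear model. Homomorphic encryption and differential privacy are not shown.

```python
from typing import List, Tuple

Weights = List[float]                 # [slope, intercept] of a toy linear model
Dataset = List[Tuple[float, float]]   # (x, y) pairs that never leave their site


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.2, epochs: int = 20) -> Weights:
    """Run a few epochs of gradient descent on one site's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Average the site models, weighting each by its number of samples."""
    total = sum(sizes)
    return [
        sum(u[i] * s for u, s in zip(updates, sizes)) / total
        for i in range(len(updates[0]))
    ]


def train(sites: List[Dataset], rounds: int = 100) -> Weights:
    """Coordinate training: only model parameters are exchanged, not data."""
    global_w: Weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_w, data) for data in sites]
        global_w = federated_average(updates, [len(data) for data in sites])
    return global_w


if __name__ == "__main__":
    # Hypothetical sites: noise-free samples of y = 2x + 1 on different x ranges.
    site_a = [(x / 10, 2.0 * (x / 10) + 1.0) for x in range(0, 5)]
    site_b = [(x / 10, 2.0 * (x / 10) + 1.0) for x in range(5, 11)]
    slope, intercept = train([site_a, site_b])
    print(f"slope={slope:.3f}, intercept={intercept:.3f}")
```

Weighting each site by its sample count keeps a small center from having the same influence as a large one. A real deployment would also need secure transport, handling of sites that drop out, and protection against information leaking through the shared parameters themselves.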

Integrating these AI capabilities with personalized medicine enables dynamic adaptation of neuroprosthetic devices to each patient’s auditory characteristics, neural plasticity, and real-world environments. AI algorithms can continuously optimize sound-processing strategies, electrode stimulation patterns, and signal amplification based on longitudinal patient data, moving devices beyond static, “one-size-fits-all” programming toward truly patient-tailored auditory rehabilitation and fully realizing the goals of personalized medicine.

A complementary avenue for personalization lies in AI-enhanced energy management. Current neuroprostheses, including cochlear implants, rely on external power sources, limiting autonomy and continuous stimulation. Recent advances in biomechanical-to-electrical energy harvesting using inorganic dielectric materials (IDMs) with engineered micro-/nanoarchitectures offer pathways toward self-powered or hybrid-powered devices, enabling personalized energy delivery that adapts to the unique physiological and auditory profiles of each patient [84,85].

By combining personalized AI control, adaptive energy harvesting, and real-time physiological feedback, next-generation auditory neuroprostheses are poised to deliver autonomous, efficient, and patient-centered performance, reducing the burden of device maintenance and enhancing quality of life, fully embracing the principles of personalized medicine.

In a broader perspective, these developments illustrate how AI-enabled, data-driven, and adaptive neuroprosthetic systems can form the foundation of personalized medicine in auditory care, where device programming, stimulation, and energy management are dynamically tailored in real time to the unique characteristics, preferences, and physiological responses of each patient [86,87]. Beyond auditory rehabilitation, this framework provides a blueprint for integrating multimodal patient data (including cognitive, vestibular, and behavioral metrics) into adaptive AI-driven systems, enabling holistic patient-centered interventions [88].

Future directions include the integration of predictive analytics and clinical decision support, where AI models can anticipate individual patient needs, optimize rehabilitation protocols, and guide clinicians in making personalized adjustments. Coupled with remote monitoring and telemedicine platforms, these systems allow continuous feedback loops, enhancing real-world applicability and supporting longitudinal personalization even outside clinical settings [86].

Moreover, combining AI-driven neuroprosthetics with genomic, pharmacological, and lifestyle data opens the possibility for precision auditory medicine to interface with broader personalized medicine strategies, aligning device interventions with patient-specific biological and environmental factors. Adaptive learning algorithms can continuously refine device performance based on longitudinal patient trajectories, neural plasticity, and real-world auditory experiences, further enhancing both efficacy and safety [87,88].

Ultimately, these AI-enabled frameworks exemplify how personalized medicine can extend from individualized device control to a comprehensive, integrative approach, bridging cutting-edge technology with truly patient-tailored clinical care. They establish a pathway toward next-generation precision healthcare, where dynamic adaptation, predictive intelligence, and multi-domain integration maximize therapeutic outcomes, patient satisfaction, and quality of life.

4.6. Limitations of the Study

While this narrative review does not aim to be exhaustive, its design offers a valuable and timely synthesis of how artificial intelligence is being integrated into biologically grounded auditory neuroprosthetics. Several methodological considerations—sources of potential bias—can inform future developments, yet many of these features are also sources of strength in rapidly evolving fields.

Firstly, the review is thematically oriented rather than strictly systematic, which introduces selection bias, since studies were chosen based on relevance to key biological and technological themes. However, being narrative, the review could incorporate recent studies incrementally, providing a discussion that evolves with new findings and insights, an advantage in fields undergoing rapid development.

Secondly, the narrative approach involves a degree of interpretive subjectivity, potentially emphasizing certain findings over others. However, this subjectivity also allows for interdisciplinary insights, connecting neural, clinical, and engineering perspectives, and highlighting patterns that a purely quantitative synthesis might miss.

Thirdly, the review focused on English-language publications, which could introduce language bias, but this ensured consistency in interpretation and facilitated the integration of complex biological and technological concepts.

Finally, the emphasis on biologically grounded studies—such as neural coding, plasticity, and auditory pathway dynamics—excluded purely technical or algorithmic research without a clear biological anchor, representing a scope bias. Nevertheless, this targeted approach enhances translational relevance, making the review especially informative for readers interested in clinical and neuroscientific applications.

Overall, while the narrative design introduces some potential biases, it also maximizes the review’s utility by being thematically rich, flexible, and integrative, capable of capturing emerging trends and insights, and supporting incremental discussion as new studies are published.