Simple Summary

An AI-based approach for diagnosing oral squamous cell carcinoma is developed using a database of histopathology images obtained through biopsy and evaluated by two pathologists. To increase the objectivity and repeatability of the histopathological examination, automated multiclass grading of OSCC is performed in the first step. Furthermore, the second step builds confidence in the AI-based system by integrating explainable AI components like Grad-CAM, which give clinicians valuable visual insights into the model’s decision-making process. Regarding multiclass grading, the method based on deep convolutional neural networks produced satisfactory results.

Abstract

Oral cancer is typically diagnosed through histological examination; however, the primary issue with this type of procedure is tumor heterogeneity, where a subjective aspect of the examination may have a direct effect on the treatment plan for a patient. To reduce inter- and intra-observer variability, artificial intelligence algorithms are often used as computational aids in tumor classification and diagnosis. This research proposes a two-step approach for automatic multiclass grading using oral histopathology images (the first step) and Grad-CAM visualization (the second step) to assist clinicians in diagnosing oral squamous cell carcinoma. The Xception architecture achieved the highest classification values of 0.929 (±σ = 0.087) AUCmacro and 0.942 (±σ = 0.074) AUCmicro. Additionally, Grad-CAM provided visual explanations of the model’s predictions by highlighting the precise areas of histopathology images that influenced the model’s decision. These results emphasize the potential of integrated AI algorithms in medical diagnostics, offering a more precise, dependable, and effective method for disease analysis.

1. Introduction

Worldwide, the incidence of oral cancer (OC), which accounts for 1–2% of all cancers, is increasing [1,2]. OC has a high mortality rate, mainly due to late-stage diagnosis, when tumor metastasis has already occurred. The five-year survival rate is approximately 80% for early-stage cancer but only about 20% at advanced stages [3]. Over 90% of OC cases are oral squamous cell carcinoma (OSCC) [4].

Despite advances in medicine over the past decades, there are no clinical markers or other diagnostic tools that are useful in detecting cancerous change at an early stage [5]. Currently, the standard methods for detecting oral cancer, which are also regarded as the gold standard, are clinical examination, conventional oral examination (COE), and histopathological evaluation following biopsy, which can detect cancer at the stage of established lesions with significant malignant changes [6,7]. However, the primary issue when employing histological examination for distinguishing tumors is the subjective nature of the examination, that is, inter- and intra-observer variability [8]. Moreover, many other factors influence manual evaluation. These include the condition of the microscope, lighting during the observation, the quality of the stains or slides, the amount of time spent on each observation, and so forth [9]. For that reason, reducing inter- and intra-observer variability and increasing objectivity and reproducibility using deep learning (DL) models could directly affect patient-specific therapeutic interventions by utilizing clinicopathological features to determine patient outcomes [10].

For the detection and classification of OC, deep learning models have become an extremely powerful tool [11,12,13]. The ability of DL models to automatically extract features from images makes them suitable for medical image analysis. However, many DL models used for OC diagnosis are regarded as “black boxes”, which makes it challenging to comprehend how they reach their decisions, especially in crucial applications like cancer diagnosis [14]. Therefore, creating DL models for OC analysis that are more reliable, interpretable, and clinically useful is crucial. Furthermore, there is a need for an OC classification model that provides explainable insights to improve trust and transparency in the diagnostic process and that achieves high accuracy and sensitivity to ensure prompt detection of OC [15].

This research proposes OC multiclass classification using gradient-weighted class activation mapping (Grad-CAM) along with the DL model. By emphasizing the parts of the histopathological images that most influence the model’s predictions, this method offers explicable insights and improves the diagnostic process’s transparency and trustworthiness.

The main contributions of this research are as follows:

- a DL model for oral squamous cell carcinoma multiclass grading, which may enhance the objectiveness and repeatability of histopathological analysis and reduce the amount of time required for pathological inspections,

- improving trust and transparency in the AI-based diagnostic process by providing comprehensible insights utilizing Grad-CAM.

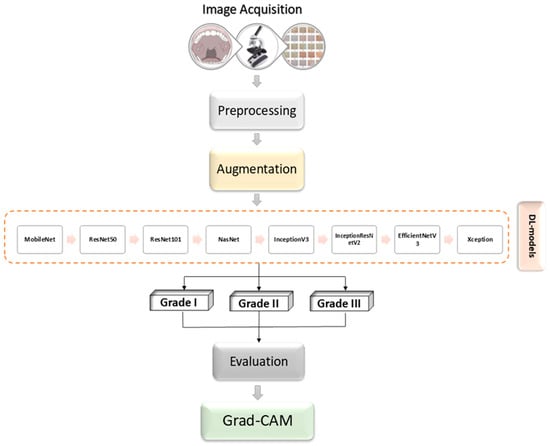

The methodology employed in this research focuses on an extensive analysis of oral cancer images. The procedure involves several steps, including preprocessing and augmenting the data, integrating multiple pretrained AI networks, and utilizing visualization tools like Grad-CAM. Figure 1 provides an overview of the proposed framework.

Figure 1.

Block diagram illustration of the proposed methodology.

Related Work

This section summarizes the current Artificial Intelligence (AI) solutions in a related field. It offers a brief review of the numerous models, approaches, and techniques applied in diverse solutions in related research fields.

According to the literature, most researchers have used DL models in retrospective studies to classify oral cancer. OC classification, often referred to as binary classification, establishes whether the given data is cancerous. Furthermore, the different stages (grades) of cancer can also be identified using multiclass classification.

Rahman et al. (2022) used a modified convolutional neural network (CNN) AlexNet to predict malignant and normal oral tissue based on biopsy images of oral squamous cell carcinoma [16]. As a result, the prediction accuracy and loss rate of the proposed model were 90.06% and 9.08%, respectively [16]. A system called OralNet is proposed by Mohan et al. (2023) for the detection of oral cancer from histopathology images [17]. The research is divided into four steps: The first step is to collect and prepare histopathology images. The second step involves extracting relevant features from images using both traditional and deep learning techniques. The third step includes feature reduction using the artificial hummingbird algorithm (AHA) and concatenation. The last step includes three-fold cross-validation performance validation and binary classification. According to test results, OralNet could identify oral cancer with above 99.5% accuracy [17]. Based on transfer learning and deep ensemble learning, Das et al. (2024) presented a classification model for binary oral cancer classification using histopathology images [18]. Ensemble learning can enhance the benefits of the DL technique by increasing generalization and accuracy. Using the stacking method, an ensemble model is built in this study that achieves 97.88% accuracy, exceeding base models [18].

Explainable AI (XAI) has drawn much attention, especially in fields like medical imaging, where precise and comprehensible machine learning models are essential for efficient diagnosis and treatment planning [19]. To improve interpretability and confidence in the outcomes, Grad-CAM serves as a baseline technique that identifies the image areas most important to a deep learning model’s decision-making process. It is used for various computer vision (CV) applications, including explanation and classification [19].

Several studies have combined Grad-CAM with cancer image classification, reporting high accuracy, precision, and recall, in order to increase diagnostic reliability and interpretability.

Oya et al. (2023) aimed to examine AI’s capacity to assess OSCC using a novel training approach that considers cellular and structural atypia and their applicability [20]. The convolutional neural network model used was EfficientNetB0, and its validity was examined using gradient-weighted class activation mapping. The suggested technique used images of 512 × 512 pixels as input and reached a high accuracy of 99.65%. According to the Grad-CAM results, the AI model concentrated on the area around the basal layer and addressed both cellular and structural atypia of OSCC [20]. To predict oral squamous cell carcinoma, Afify et al. (2023) suggested a novel model that uses deep transfer learning and gradient-weighted class activation mapping to identify the lesion area in the histopathology image [21]. The results obtained with the proposed approach are significant because they can offer the clinical community important guidance in the early, accurate detection of oral cancer [21]. To gain an improved understanding of the capabilities and limitations of DL methods in the context of oral cancer diagnosis, Da Silva et al. (2024) conducted a comprehensive evaluation of the performance of two DL models that are well known for their high accuracy in oral cancer classification. Their analysis went beyond simple accuracy metrics; they used Grad-CAM to provide visual explanations of the models’ decisions, and they also examined subclass accuracy rates and the distribution of prediction confidences [22].

A thorough review of the literature shows that, at the time this research was performed, no work had been done on multiclass classification combined with Grad-CAM visualization using histopathology images obtained by biopsy and stained with marker proteins.

2. Materials and Methods

This section provides a detailed description of the dataset used for OSCC classification as well as a brief overview of the Grad-CAM technique. Furthermore, the deep learning models and evaluation criteria are described.

2.1. Dataset Description

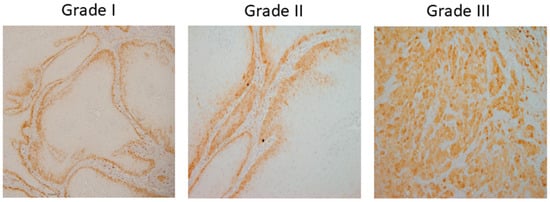

A dataset of 322 histology images, each 768 × 768 pixels in size, was created for this research. The formalin-fixed, paraffin-embedded oral mucosa tissue blocks of histopathologically reported cases of OSCC were retrieved from the archives of the Clinical Department of Pathology and Cytology, Clinical Hospital Center in Rijeka. Sample slides were examined and classified in accordance with World Health Organization (WHO) guidelines by two unbiased pathologists [23]. The level of agreement between the pathologists was assessed using the Kappa coefficient, which was determined to be 0.94. Images were divided into three classes: Grade I (well-differentiated), Grade II (moderately differentiated) and Grade III (poorly differentiated), as shown in Figure 2.

Figure 2.

OSCC group of Grade I (well-differentiated), Grade II (moderately differentiated) and Grade III (poorly differentiated).

Briefly, 4 µm sized paraffin-embedded tissue sections were stained following standard immunohistochemistry (IHC) protocol with various marker proteins. The IHC images used were stained with DAB and hematoxylin. Images were captured using the light microscope (Olympus BX51, Olympus, Tokyo, Japan) equipped with a digital camera (DP50, Olympus, Japan) and transmitted to a computer by CellF software (Olympus, Japan, https://www.olympus.co.jp/). Furthermore, images were captured with 10× objective lenses.

A comparable clinic-pathological report of the patients is shown in Table 1. The patient’s age at the time of diagnosis, sex, smoking status, and alcohol consumption were among the demographic details. The patients were adults with a median age of 64. While 38% of the patients consumed alcohol, 55% of them smoked. 30% of the patients were female, and 70% were male. Only 15% of patients had a grade III diagnosis, whereas 45% received a grade I diagnosis. A higher percentage of patients (52%) had lymph node metastases.

Table 1.

Characteristics of the patients include sex, age, smoking and alcohol habits, presence of metastases in the lymph nodes, and grade of oral squamous cell carcinoma.

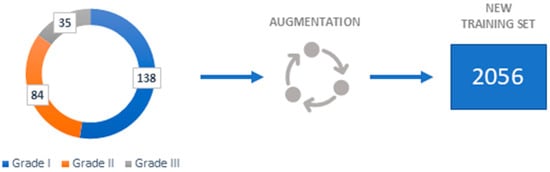

To achieve good performance and prevent overfitting, deep convolutional neural networks require a large number of samples. Since some fields, like medical image analysis, do not always have access to many samples, data augmentation is necessary.

By performing data augmentation, the number of samples can be artificially increased. The following geometrical transformations are employed in the augmentation process: 90-, 180-, and 270-degree anticlockwise rotations; vertical flip; vertical flip combined with 90-degree anticlockwise rotation; horizontal flip; and horizontal flip combined with 90-degree anticlockwise rotation. The augmentation process only serves to create training samples; since newly generated data are variants of the original data, testing samples are not augmented.

As seen in Figure 3, a new training set of 2056 images has been created using the aforementioned transformations.

Figure 3.

Dataset representation.
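The seven geometrical transformations listed above can be sketched with NumPy as follows. This is a minimal illustration and not the authors' implementation: the function name `augment` is hypothetical, and "vertical flip" is assumed to mean a top-to-bottom flip (`np.flipud`) while "horizontal flip" is assumed to mean a left-to-right flip (`np.fliplr`). Together with the original image, each training sample yields eight variants, which is consistent with a training set of 2056 images arising from 257 original training images (257 × 8 = 2056), although the text does not state that split explicitly.

```python
import numpy as np


def augment(image):
    """Return the seven transformed variants of an (H, W, C) image.

    np.rot90 rotates anticlockwise in the plane of the first two axes,
    so the channel axis is left untouched.
    """
    v_flip = np.flipud(image)  # assumed meaning of "vertical flip"
    h_flip = np.fliplr(image)  # assumed meaning of "horizontal flip"
    return [
        np.rot90(image, 1),    # 90 degrees anticlockwise
        np.rot90(image, 2),    # 180 degrees
        np.rot90(image, 3),    # 270 degrees anticlockwise
        v_flip,
        np.rot90(v_flip, 1),   # vertical flip + 90 degrees anticlockwise
        h_flip,
        np.rot90(h_flip, 1),   # horizontal flip + 90 degrees anticlockwise
    ]


# Small square stand-in for a 768 x 768 histology image
example = np.arange(4 * 4 * 3).reshape(4, 4, 3)
variants = [example] + augment(example)
```

For a square image with no symmetry, the eight variants are all distinct, so no duplicate training samples are produced.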

2.2. Gradient Weighted Class Activation Mapping (Grad-CAM)

Unprecedented advances in a range of computer vision tasks, including semantic segmentation, object detection, and image classification, have been achieved through the use of deep neural models based on convolutional neural networks. Although these models allow for better performance, they are difficult to understand since they cannot be decomposed into individually intuitive components.

In order to make decisions from a broad class of CNN-based models more transparent and understandable, Selvaraju et al. (2017) proposed a method for creating “visual explanations” [24]. Their method, called Gradient-weighted Class Activation Mapping (Grad-CAM), produces a coarse localization map that highlights the key areas in the image for concept prediction by using the gradients of any target concept flowing into the final convolutional layer.

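To construct the class-discriminative localization map $L^{c}_{\text{Grad-CAM}} \in \mathbb{R}^{u \times v}$, the authors first calculate the gradient of the score for class c, $y^{c}$, with respect to the feature maps $A^{k}$. These gradients are global-average-pooled to determine the neuron significance weights, $\alpha^{c}_{k}$:

$$\alpha^{c}_{k} = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^{c}}{\partial A^{k}_{ij}} \tag{1}$$

where i and j index the spatial locations of a feature map and Z is their total number. The weight $\alpha^{c}_{k}$ represents the significance of feature map k for a target class c and represents a partial linearization of the deep learning model downstream from A. Applying the ReLU activation function to the weighted combination of feature maps yields the corresponding class-discriminative localization map:

$$L^{c}_{\text{Grad-CAM}} = \mathrm{ReLU}\left( \sum_{k} \alpha^{c}_{k} A^{k} \right) \tag{2}$$

In general, a CNN that classifies images does not always need to produce $y^{c}$ as its class score. It might be any differentiable activation, such as a question response or words from a caption.

Global average pooling is used to spatially pool the K feature maps $A^{k}$. The pooled feature maps and a linear transformation with weights $w^{c}_{k}$ are then used to obtain the class c score, $y^{c}$:

$$y^{c} = \sum_{k} w^{c}_{k} \, \frac{1}{Z} \sum_{i} \sum_{j} A^{k}_{ij} \tag{3}$$

Equation (3) can be rewritten in terms of the class activation map $L^{c}_{\text{CAM}}$ (CAM):

$$y^{c} = \frac{1}{Z} \sum_{i} \sum_{j} \underbrace{\sum_{k} w^{c}_{k} A^{k}_{ij}}_{L^{c}_{\text{CAM}}} \tag{4}$$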
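The core Grad-CAM computation described in this subsection (global-average-pooled gradients used as per-channel weights, followed by a ReLU over the weighted sum of feature maps) can be sketched in NumPy. This is only an illustration under stated assumptions: the function name `grad_cam_map` is hypothetical, and the feature maps and gradients are assumed to have already been extracted from the final convolutional layer by a deep learning framework, which is not shown here.

```python
import numpy as np


def grad_cam_map(feature_maps, gradients):
    """Compute a Grad-CAM localization map.

    feature_maps: array of shape (u, v, K), activations of the last conv layer.
    gradients:    array of shape (u, v, K), gradient of the class score with
                  respect to each activation.
    Returns (localization_map of shape (u, v), weights of shape (K,)).
    """
    # Neuron significance weights: spatial mean of the gradients per channel
    weights = gradients.mean(axis=(0, 1))
    # Weighted sum of feature maps over the channel axis
    combined = np.tensordot(feature_maps, weights, axes=([2], [0]))
    # ReLU keeps only regions with a positive influence on the class score
    return np.maximum(combined, 0.0), weights


# Toy example with K = 2 feature maps of size 2 x 2 (made-up values)
A = np.stack([np.array([[1.0, 2.0], [3.0, 4.0]]), np.ones((2, 2))], axis=-1)
G = np.stack([np.ones((2, 2)), np.full((2, 2), -2.5)], axis=-1)
heatmap, alpha = grad_cam_map(A, G)
```

In practice the resulting coarse map is upsampled to the input resolution and overlaid on the image as a heatmap; that step is omitted from this sketch.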

2.3. Deep Learning Models

Combining medical image analysis with deep learning models allows for the real-time analysis of large and complex medical datasets, providing insights that improve patient outcomes. Such algorithms can be employed to classify and distinguish between different stages of a disease. This subsection gives an overview of standard image classification algorithms:

- ResNet: Since training deep neural networks is challenging, He et al. (2016) introduced a residual learning framework for training networks significantly deeper than those previously used [25]. They evaluated residual nets with a depth of up to 152 layers on the ImageNet dataset, which resulted in a 3.57% error [25].

- MobileNetv2: Sandler et al. (2018) describe a new mobile architecture called MobileNetv2. Their basic building unit has many characteristics that make it especially well-suited for mobile applications [26]. The described architecture improves the state of the art for a wide range of performance points on the ImageNet dataset [26].

- Xception: Chollet (2017) demonstrated a new deep convolutional neural network architecture inspired by Inception, called Xception [27]. In this architecture, Inception modules are replaced with depth-wise separable convolutions, which results in improved performance [27].

- EfficientNet: In their paper, Tan and Le (2019) propose a novel scaling method called EfficientNet [28]. To scale up CNNs in a more structured manner, this method employs a simple yet highly effective compound coefficient. According to the authors, EfficientNets, by significantly improving model efficiency, could potentially serve as a new foundation for future computer vision tasks [28].

- InceptionV3: The concept of InceptionV3 was put forth by Szegedy et al. (2016) after InceptionV1 and InceptionV2. Its main goal is to reduce the required processing power by altering earlier Inception architectures. Several network optimization methods, including factorized convolutions, regularization, dimension reduction, and parallelized calculations, have been proposed in InceptionV3; these loosen the constraints and allow for more straightforward model adaptation [29].

- InceptionResNetV2: InceptionResNetV2, which combines the Inception design with residual connections, was developed by Szegedy et al. (2017), since it had been shown that the Inception architecture produces good results at a comparatively low computational cost. The presented architecture significantly increased training speed and enhanced recognition performance [30].

- NASNet: In their research, Zoph et al. (2018) demonstrated a method for directly learning model architectures on the relevant dataset. Since this approach is costly when the dataset is large, they propose utilizing a small dataset to identify an architectural building block that can subsequently be applied to a larger dataset. The main contribution of their work is the design of a new search space that allows for transferability, which the researchers refer to as the “NASNet search space” [31].

2.4. Evaluation Criteria

Since the aim of this research is to perform multiclass classification of OSCC grades, the micro- and macro-averaged Area Under the Curve (AUCmicro and AUCmacro) are used to assess the classification performance of the models. They are obtained by micro and macro averaging, respectively. The micro-averaged true positive rate (TPR) uses the number of correct classifications summed over all classes as the numerator and the total number of samples as the denominator. Moreover, the micro-averaged fallout, or false positive rate (FPR), is computed as the ratio of the false positives summed over all classes to the summed number of actual negatives [32]. For k classes, micro averaging is represented mathematically as follows:

$$\mathrm{TPR}_{\text{micro}} = \frac{\sum_{i=1}^{k} TP_{i}}{\sum_{i=1}^{k} \left( TP_{i} + FN_{i} \right)} \tag{5}$$

and

$$\mathrm{FPR}_{\text{micro}} = \frac{\sum_{i=1}^{k} FP_{i}}{\sum_{i=1}^{k} \left( FP_{i} + TN_{i} \right)} \tag{6}$$

where:

- true positive (TP) is when both the predicted and actual values are positive,

- true negative (TN) is when both the actual and predicted values are negative,

- false negative (FN) is when a negative prediction is made, but the actual value is positive, and

- false positive (FP) is when a prediction is positive, but the actual value is negative [33].

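After calculating the metrics for every class independently, macro averaging for k classes averages the outcomes. To compute AUCmacro, TPRmacro and FPRmacro are utilized, which can be expressed as:

$$\mathrm{TPR}_{\text{macro}} = \frac{1}{k} \sum_{i=1}^{k} \frac{TP_{i}}{TP_{i} + FN_{i}} \tag{7}$$

and

$$\mathrm{FPR}_{\text{macro}} = \frac{1}{k} \sum_{i=1}^{k} \frac{FP_{i}}{FP_{i} + TN_{i}} \tag{8}$$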

The AUC value lies between 0 and 1.0, where a higher value indicates better model performance. Additionally, stratified k-fold cross-validation is used as a statistical method to evaluate the performance of the models in this research. Such an approach provides a more stable estimate of generalization, since the evaluation is performed k times on different portions of the dataset. The main difference between classical k-fold and stratified k-fold cross-validation is that stratified k-fold preserves the original class distribution within each fold, which is especially crucial for imbalanced datasets [34]. To prevent data leakage, the authors implemented strict patient-level separation during cross-validation. This ensures that all samples from a single patient are contained within the same fold, therefore preventing any patient’s data from appearing in both the training and validation sets simultaneously.
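Returning to the averaging schemes defined earlier in this subsection, the difference between micro and macro averaging can be made concrete with a small worked example. The sketch below derives one-vs-rest TP, FN, FP and TN counts for each class from a confusion matrix and then computes both averages of the TPR and FPR at a single operating point. The function names and the confusion matrix values are hypothetical and are not taken from the study.

```python
def one_vs_rest_counts(conf, i):
    """Return (TP, FN, FP, TN) for class i; conf[t][p] counts true t, predicted p."""
    total = sum(sum(row) for row in conf)
    tp = conf[i][i]
    fn = sum(conf[i]) - tp                     # class i samples predicted as another class
    fp = sum(row[i] for row in conf) - tp      # other classes predicted as class i
    tn = total - tp - fn - fp
    return tp, fn, fp, tn


def micro_macro_rates(conf):
    """Compute micro- and macro-averaged TPR and FPR for a k-class problem."""
    k = len(conf)
    counts = [one_vs_rest_counts(conf, i) for i in range(k)]
    tp, fn, fp, tn = (sum(c[j] for c in counts) for j in range(4))
    return {
        # Micro: pool the counts of all classes, then take the ratio
        "tpr_micro": tp / (tp + fn),
        "fpr_micro": fp / (fp + tn),
        # Macro: take the ratio per class, then average over the k classes
        "tpr_macro": sum(c[0] / (c[0] + c[1]) for c in counts) / k,
        "fpr_macro": sum(c[2] / (c[2] + c[3]) for c in counts) / k,
    }


# Made-up 3-class confusion matrix (Grade I, II, III), 25 samples in total
confusion = [[8, 1, 1],
             [2, 6, 2],
             [0, 1, 4]]
rates = micro_macro_rates(confusion)
```

Note that in the micro-averaged TPR the denominator equals the total number of samples (25 here), because every sample is a positive for exactly one class. The macro average instead weights each grade equally, which matters when one grade is under-represented.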

3. Results and Discussion

In this section, the experimental results of the proposed methodology are shown. Initial experimental results are obtained using the ResNet50, ResNet101, NASNet, Xception, InceptionV3, MobileNetV2, InceptionResNetV2 and EfficientNetB3 architectures pretrained on ImageNet. On top of each of these architectures, two additional layers are added to enable multiclass classification of the OSCC grades. The first is a global average pooling layer, which reduces the (height, width, channels) tensor to a vector with one value per channel and thereby forces the network to focus on global spatial information. The second is a fully connected layer consisting of three neurons with a Softmax activation function, as the focus of this research is to perform OSCC grading in three classes: Grade I, Grade II, and Grade III.
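The effect of the two added layers (global average pooling followed by a three-neuron Softmax layer) can be illustrated with a small NumPy forward pass. This is a sketch of the arithmetic only, not the trained model: the function name `classification_head`, the feature-map shape, and the random weights are all hypothetical stand-ins.

```python
import numpy as np


def classification_head(features, weights, bias):
    """Map a backbone output of shape (H, W, C) to three class probabilities.

    weights has shape (C, 3) and bias has shape (3,).
    """
    pooled = features.mean(axis=(0, 1))       # global average pooling -> (C,)
    logits = pooled @ weights + bias          # fully connected layer -> (3,)
    shifted = np.exp(logits - logits.max())   # numerically stable Softmax
    return shifted / shifted.sum()


rng = np.random.default_rng(0)
features = rng.normal(size=(6, 6, 16))        # stand-in for a backbone feature map
probs = classification_head(features, rng.normal(size=(16, 3)), np.zeros(3))
```

The three outputs sum to one and can be read as the predicted probabilities of Grade I, Grade II, and Grade III.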

Stochastic Gradient Descent (SGD), Adam, and RMSprop are the three optimizers used to train each model architecture. Additionally, each model architecture is trained in two phases: in the first phase, only the output layer is trainable while the rest of the network is frozen; in the second phase, the output layer is frozen while all other layers are trainable. Such an approach ensures gradual adaptation and stable training.

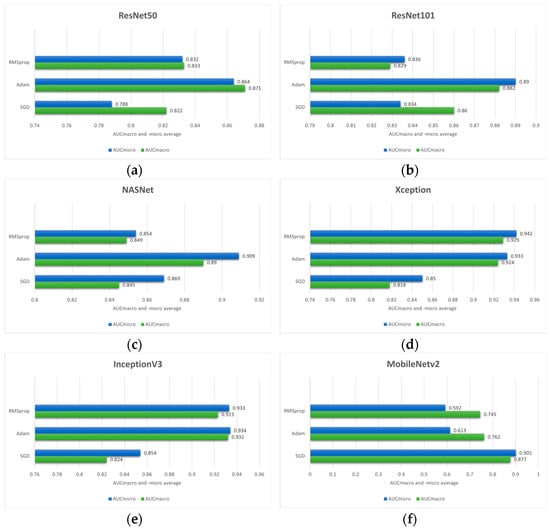

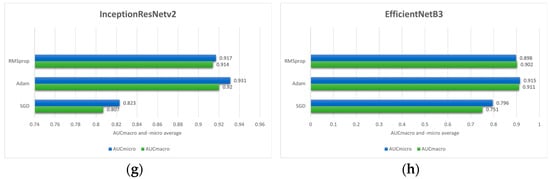

By utilizing early stopping and adjusting optimizer hyperparameters such as learning rate and learning rate decay, the results shown in Figure 4 are obtained. Additionally, stratified 5-fold cross-validation is employed since it provides a robust and unbiased estimate of model performance.

Figure 4.

Comparison of the mean AUCmacro and -micro values of three different optimizers (RMSprop, Adam and SGD) on pre-trained models: (a) ResNet50; (b) ResNet101; (c) NASNet; (d) Xception; (e) InceptionV3; (f) MobileNetV2; (g) InceptionResNetV2 and (h) EfficientNetB3.
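The stratified cross-validation with patient-level separation described in Section 2.4 can be sketched in plain Python. The greedy assignment below is a simplified stand-in, not the authors' procedure: the function name is hypothetical, each patient is assumed to have a single grade, and patients are placed one at a time into the fold that currently holds the fewest samples of that grade.

```python
from collections import defaultdict


def patient_level_stratified_folds(patient_ids, grades, n_splits=5):
    """Assign sample indices to folds so that no patient spans two folds."""
    by_patient = defaultdict(list)
    for idx, pid in enumerate(patient_ids):
        by_patient[pid].append(idx)

    folds = [[] for _ in range(n_splits)]
    grade_counts = [defaultdict(int) for _ in range(n_splits)]

    # Place patients with the most images first, for a better balance
    ordered = sorted(by_patient, key=lambda p: (-len(by_patient[p]), str(p)))
    for pid in ordered:
        indices = by_patient[pid]
        grade = grades[indices[0]]  # assumption: one grade per patient
        # Pick the fold with the fewest samples of this grade (ties: smallest fold)
        target = min(range(n_splits),
                     key=lambda f: (grade_counts[f][grade], len(folds[f])))
        folds[target].extend(indices)
        grade_counts[target][grade] += len(indices)
    return folds


# Toy data: 15 hypothetical patients with two images each, five per grade
patient_ids = ["p%d" % (i // 2) for i in range(30)]
grades = [("I", "II", "III")[(i // 2) % 3] for i in range(30)]
folds = patient_level_stratified_folds(patient_ids, grades, n_splits=5)
```

Each fold then serves once as the validation set while the remaining four are used for training. Libraries such as scikit-learn offer grouped, stratified splitters that serve the same purpose; the version above only makes the idea explicit.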

Analyzing the experimental results, the overall highest values of the performance measures are achieved when, in the first phase, only the output layer is trained with a learning rate of 1 × 10⁻³ and a learning rate decay of 1 × 10⁻⁶. Moreover, in the second phase, the output layer was frozen, and the remaining model layers were trained with learning rate and learning rate decay values of 1 × 10⁻⁴ and 1 × 10⁻⁶, respectively.

Based on the outcome of the stratified 5-fold cross-validation, it can be seen that the Adam optimizer provides superior performance across most of the model architectures according to the AUCmacro and AUCmicro values. Therefore, in the case of this research, the Adam optimizer can be considered a more effective optimization strategy than SGD and RMSprop. In the case of the ResNet50 model architecture, the highest values of the performance measures are achieved utilizing the Adam optimizer, with values of 0.871 ± 0.105 (AUCmacro) and 0.864 ± 0.090 (AUCmicro). On the other hand, SGD yielded the lowest performance values. Similarly, the ResNet101 model architecture trained using Adam resulted in AUCmacro and AUCmicro values of 0.882 ± 0.125 and 0.890 ± 0.112, respectively. Moreover, the NASNet model architecture combined with the Adam optimizer yielded its highest performance (0.890 ± 0.054 AUCmacro and 0.909 ± 0.043 AUCmicro). In contrast, RMSprop provided the lowest values of the performance measures, implying that this optimizer’s learning strategy is less compatible with the data used in this research and with the feature representations learned by the model itself.

Interestingly, RMSprop combined with the Xception architecture yielded the highest overall performance, with values of 0.929 ± 0.087 and 0.942 ± 0.074 for AUCmacro and AUCmicro, respectively. This strongly implies that the effectiveness of the optimizer can be model architecture dependent. In the case of InceptionV3, the highest AUCmacro and AUCmicro are achieved with values of 0.932 ± 0.081 and 0.938 ± 0.088, while SGD produced the lowest values. Moreover, when combined with the SGD optimizer, the MobileNetV2 model architecture achieved its highest AUCmacro value of 0.877 ± 0.062 and AUCmicro value of 0.901 ± 0.049. On the other hand, the highest AUCmacro of 0.920 ± 0.059 and AUCmicro of 0.931 ± 0.064 for the InceptionResNetV2 model architecture are obtained by utilizing the Adam optimizer, while the lowest performance resulted when InceptionResNetV2 was combined with the SGD optimizer. The EfficientNetB3 architecture, the last one shown in Figure 4, achieves its highest AUCmacro of 0.911 ± 0.148 and AUCmicro of 0.915 ± 0.148 when combined with the Adam optimizer. Despite these high performance values, the standard deviation is also relatively large compared to the other model architectures, indicating greater variability across the five folds.

According to the presented performances of the model architectures and optimizers, it can be seen that in most cases the Adam optimizer provides high classification performance on data used in this research. While RMSprop provided mixed results, excelling only with Xception model architecture, SGD underperforms in most cases.

In order to establish performance benchmarks, two additional conventional machine learning algorithms are employed as baseline models to perform the classification of histopathology images into three classes (Grade I, Grade II, and Grade III). The logistic regression classifier, implemented with a limited-memory Broyden-Fletcher-Goldfarb-Shanno (L-BFGS) solver, resulted in an AUCmacro of 0.509 ± 0.060 and an AUCmicro of 0.634 ± 0.059. On the other hand, the k-nearest neighbors (KNN) classifier configured with 5 nearest neighbors yielded an AUCmacro of 0.539 ± 0.052 and an AUCmicro of 0.658 ± 0.035. A comprehensive comparison of the baseline models with the deep learning model architectures is presented in Table 2.

Table 2.

Performance of different algorithms utilizing the stratified 5-fold cross-validation.

In order to enable an unbiased evaluation of the efficacy of utilized models tested under the same experimental settings, a statistical analysis (Friedman test) is performed to ascertain whether there are statistically significant differences in their performances. The analysis is conducted under the null-hypothesis that no significant difference exists in model performance, implying equivalent performance across all evaluated model architectures. The test yielded a Friedman statistic of 28.354 with a corresponding p-value of 0.0008. The p-value in this case is significantly below the threshold of 0.05 indicating a statistically significant difference in performance among evaluated models, thereby allowing rejection of the null-hypothesis that all models perform equivalently.
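To make the Friedman test concrete, its statistic can be computed from per-fold scores by ranking the models within each fold and comparing the resulting rank sums. The sketch below is an illustration in plain Python with made-up scores: the function names are hypothetical, tied scores receive averaged ranks, and no tie correction is applied, so the value can differ slightly from library implementations (for example `scipy.stats.friedmanchisquare`) when ties occur.

```python
def average_ranks(values):
    """Rank values in ascending order (1 = smallest), averaging ranks over ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1          # mean of the 1-based positions pos..end
        for j in range(pos, end + 1):
            ranks[order[j]] = shared
        pos = end + 1
    return ranks


def friedman_statistic(scores):
    """scores[b][m] is the score of model m on fold b (n folds, k models)."""
    n, k = len(scores), len(scores[0])
    rank_sums = [0.0] * k
    for fold_scores in scores:
        for m, rank in enumerate(average_ranks(fold_scores)):
            rank_sums[m] += rank
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)


# Made-up example: three models that are ranked identically in all five folds
toy_scores = [[0.5, 0.7, 0.9]] * 5
statistic = friedman_statistic(toy_scores)    # equals n * (k - 1) = 10 here
```

The statistic is compared against a chi-squared distribution with k − 1 degrees of freedom to obtain the p-value; that step is omitted from this sketch.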

Furthermore, to identify specific pairwise differences between models, post-hoc analysis is conducted utilizing the Nemenyi test. The results revealed several statistically significant pairwise comparisons at the 5% significance level. Specifically, logistic regression performed significantly differently from Xception (p-value of 0.0169) and InceptionV3 (p-value of 0.0338). Additionally, KNN also differed significantly from Xception with the p-value of 0.0338. The acquired test results suggest that deep learning model architectures achieved consistently superior performance compared to traditional baseline classification methods.

The remaining pairwise comparisons did not yield statistically significant differences, indicating comparable performance among the remaining models at the 5% significance level. Notably, the lack of statistical significance does not rule out the existence of practically meaningful differences. Even if statistical significance is not achieved, consistent advantages in performance metrics, such as AUC, may nevertheless represent practically meaningful improvements.

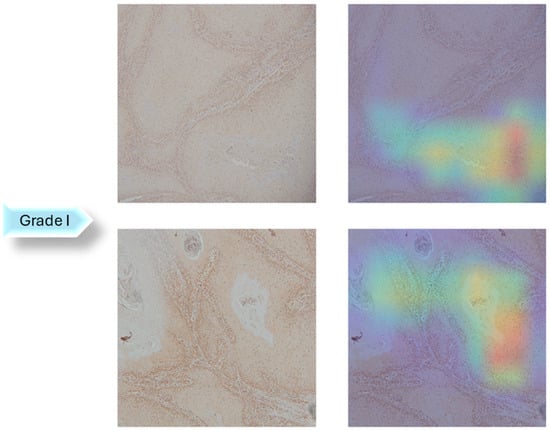

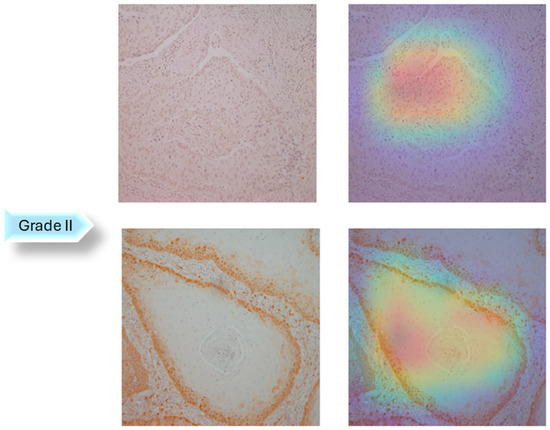

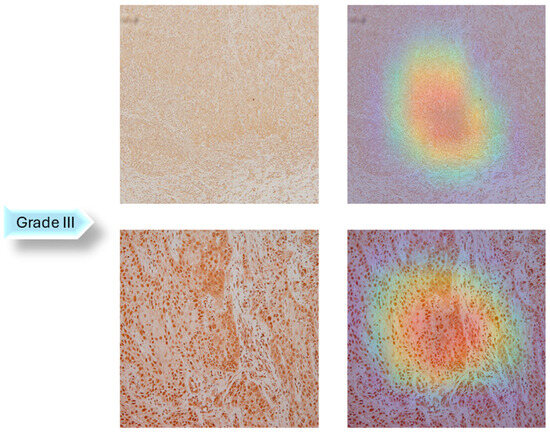

In the next step of the research, Gradient-weighted Class Activation Mapping was used to visually explain which regions of the images affected the model’s predictions. These visualizations provide another level of interpretability to the model’s decision-making process by enabling healthcare professionals to identify which histopathology slide findings are most suggestive of classifications. This can help with further diagnostic reasoning and increase confidence in automated systems. The Grad-CAM visualization of the proposed model is shown in Figure 5, Figure 6 and Figure 7. The color spectrum, which ranges from blue to red, clearly identifies the sections of an image that are most important for the model’s prediction output. The highest and lowest activation areas are represented by red and blue, respectively.

Figure 5.

Grad-CAM Visualization of Grade I.

Figure 6.

Grad-CAM Visualization of Grade II.

Figure 7.

Grad-CAM Visualization of Grade III.

As seen in Figure 5, Figure 6 and Figure 7, Grad-CAM is utilized to create heatmaps that identify key areas in histopathology images for multiclass classification. It captures gradients related to specific output classes, such as Grade I, Grade II, and Grade III, that flow into the final convolutional layers. To create a localization map, these gradients are pooled channel-wise, emphasizing important regions for class prediction. Heatmaps are created by forward passing an image through the network, computing gradients in relation to feature maps, spatially pooling these gradients, and combining weights with activation maps. Clinically, these heatmaps help differentiate pathologically significant features from possible artefacts or irrelevant regions by visually validating the model’s focus on important regions. Grad-CAM helps health professionals make accurate decisions by improving the validation and transparency of AI-driven diagnostic analyses.

However, such an approach has several limitations that reduce its usefulness for understanding deep learning models. Grad-CAM primarily highlights high-level features from the convolutional neural network’s deeper layers. These layers often overlook crucial low- or mid-level features such as edges, textures, or fine-grained structures in favor of abstract patterns or class-discriminative areas. This is a drawback in medical imaging since minor features, such as microscopic lesions, microcalcifications, or early-stage abnormalities, are often captured in earlier layers of the network and can be crucial for diagnosis. The spatial resolution is another drawback. The generated heatmaps are coarse since Grad-CAM employs feature maps from deeper layers, which are downsampled as a result of pooling operations. As a result, it is challenging to precisely locate small but crucial parts of an image.

Nevertheless, the upside of this technique is that the Grad-CAM can be used for purposes other than diagnosing oral cancer from histopathology images. It can be used with various medical imaging modalities, including ultrasounds, CT scans, MRIs, and X-rays, to produce heatmaps that emphasize important areas that affect model predictions. Furthermore, when it comes to tasks such as identifying lung, breast, brain tumors, or cardiovascular abnormalities, this interpretability can increase confidence in AI models [35,36,37].

The Xception architecture with RMSprop as an optimizer achieved AUCmacro and AUCmicro values above 0.9, with standard deviations of ±σ = 0.087 and ±σ = 0.074, respectively. Such values of the standard deviation and performance measures indicate that the model is robust, and when combined with Grad-CAM visualization, it has considerable potential as an assistive tool for medical image analysis. Many disorders, such as Alzheimer’s, liver cirrhosis, or chronic lung diseases, require accurate localization of abnormalities within imaging data, much like oral cancer diagnosis. Healthcare personnel can benefit from the transparent nature of DL model predictions and Grad-CAM visual outputs by ensuring that the AI’s focus aligns with known pathology, which improves diagnostic accuracy in complex cases.

The proposed AI-based system can be used as a decision support tool integrated into the digital pathology pipeline. Following the scanning and preprocessing of digitized histopathology slides, the model may automatically identify regions of interest and provide classification results. Grad-CAM visualizations can also be used to highlight the image regions that had the most influence on the model’s decision. As visual aids, Grad-CAM heatmaps might be included in pathology reports to help pathologists comprehend and assess the model’s findings. In ambiguous or borderline situations, heatmaps may be particularly helpful in highlighting specific histological features that demand further investigation.

AI is best suited as a supplemental tool for pathologists rather than as a replacement, especially in roles such as triage assistant or second reader, which improve diagnostic efficiency, accuracy, and consistency. An AI-based system can serve as a second reader and offer pathologists objective, real-time assistance by reducing cognitive and perceptual errors, particularly in complex or visually subtle cases. Furthermore, AI-based systems can be used as a triage mechanism to identify urgent or high-risk cases that require manual evaluation. In rare or unusual disease presentations, where AI can serve as a knowledge extender, it has shown the potential to reduce diagnostic time and error rate. Moreover, such systems have considerable potential to increase the effectiveness and precision of diagnostics, particularly in well-defined clinical subgroups.

4. Conclusions

This research highlights the enormous potential of applying XAI-based algorithms to improve OSCC diagnosis accuracy and survival rates. To attain satisfactory classification performance, the authors investigated multiple deep learning models with different configuration settings for the multiclass classification task. According to the results, the Xception architecture achieved the highest classification performance, 0.929 AUCmacro and 0.942 AUCmicro, with the lowest standard deviations, ±σ = 0.087 and ±σ = 0.074, respectively. In conclusion, this research shows the importance of model architecture selection, optimizer selection, and model-optimizer compatibility for the specific data and task in order to achieve optimal performance.

Additionally, Grad-CAM visualization was used to add interpretability to the model’s decision-making process. This approach outperforms traditional single-model approaches, offering a more thorough analysis with less variability and human error.

Since data availability in this research was limited, a dataset with more histopathological images should be used in future research to produce a more reliable model. Furthermore, adding more oral cancer types to the dataset would enable the algorithm to identify additional morphological features and improve its applicability in a larger range of clinical settings. For a more thorough understanding of tumor biology, future research should also incorporate other modalities, such as molecular profiling and genomic data (such as TP53 mutations, genomic instability, and other driver alterations). These molecular processes may also be related to grading, differentiation, or response to treatment, and they frequently underlie the morphological changes seen in tissue. Additionally, future work should incorporate risk factors into the AI model for a thorough and realistic model of OSCC progression. Such an approach can aid in understanding the ways in which lifestyle, histology, and demographic factors interact to affect the patient’s prognosis and the severity of the disease.

Prospective validation in actual clinical settings is a crucial next step to improve the practical relevance and generalizability of the AI model. This would entail implementing the AI-based system in a diagnostic process, initially in a supportive capacity, enabling pathologists to compare their interpretations with AI-driven suggestions.

Author Contributions

Conceptualization, J.Š., D.Š., N.A. and Z.C.; methodology, J.Š. and D.Š.; software, J.Š. and D.Š.; validation, N.A.; formal analysis, Z.C.; investigation, J.Š. and D.Š.; resources, Z.C.; data curation, J.Š.; writing – original draft preparation, J.Š. and D.Š.; writing – review and editing, N.A. and Z.C.; visualization, J.Š.; supervision, Z.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The research was approved by the Ethics Board of Clinical Hospital Center Rijeka (Krešimirova 42, 51000 Rijeka) under the number 2170-29-02/1-19-2 on 24 September 2019.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author if data sharing is approved by the ethics committee. The data are not publicly available due to data protection laws and conditions stated by the ethics committee.

Acknowledgments

This research was (partly) supported by Erasmus+ AISE, under grant 2023-1-EL01-KA220-SCH-000157157; and by the CZI ‘BrainClock’ project under grant NPOO.C3.2.R3-I104.0089.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| OC | Oral cancer |
| OSCC | Oral squamous cell carcinoma |
| COE | Conventional oral examination |
| DL | Deep learning |
| Grad-CAM | Gradient-weighted class activation mapping |
| AI | Artificial intelligence |
| CNN | Convolutional neural network |
| AHA | Artificial hummingbird algorithm |
| XAI | Explainable artificial intelligence |
| CV | Computer vision |
| WHO | World Health Organization |
| IHC | Immunohistochemistry |
| AUC | Area under the curve |
| KNN | K-nearest neighbors |

References

- Cirillo, N. Precursor lesions, overdiagnosis, and oral cancer: A critical review. Cancers 2024, 16, 1550.

- Torre, L.A.; Siegel, R.L.; Ward, E.M.; Jemal, A. Global cancer incidence and mortality rates and trends – An update. Cancer Epidemiol. Prev. Biomark. 2016, 25, 16–27.

- de França, G.M.; da Silva, W.R.; Medeiros, C.K.S.; Júnior, J.F.; de Moura Santos, E.; Galvão, H.C. Five-year survival and prognostic factors for oropharyngeal squamous cell carcinoma: Retrospective cohort of a cancer center. Oral Maxillofac. Surg. 2022, 26, 261–269.

- Marur, S.; Forastiere, A.A. Head and neck cancer: Changing epidemiology, diagnosis, and treatment. Mayo Clin. Proc. 2008, 83, 489–501.

- Bagan, J.; Sarrion, G.; Jimenez, Y. Oral cancer: Clinical features. Oral Oncol. 2010, 46, 414–417.

- Essat, M.; Cooper, K.; Bessey, A.; Clowes, M.; Chilcott, J.B.; Hunter, K.D. Diagnostic accuracy of conventional oral examination for detecting oral cavity cancer and potentially malignant disorders in patients with clinically evident oral lesions: Systematic review and meta-analysis. Head Neck 2022, 44, 998–1013.

- Warnakulasuriya, S.; Reibel, J.; Bouquot, J.; Dabelsteen, E. Oral epithelial dysplasia classification systems: Predictive value, utility, weaknesses and scope for improvement. J. Oral Pathol. Med. 2008, 37, 127–133.

- Mehlum, C.S.; Larsen, S.R.; Kiss, K.; Groentved, A.M.; Kjaergaard, T.; Möller, S.; Godballe, C. Laryngeal precursor lesions: Interrater and intrarater reliability of histopathological assessment. Laryngoscope 2018, 128, 2375–2379.

- Bruschini, R.; Maffini, F.; Chiesa, F.; Lepanto, D.; De Berardinis, R.; Chu, F.; Tagliabue, M.; Giugliano, G.; Ansarin, M. Oral cancer: Changing the aim of the biopsy in the age of precision medicine. A review. ACTA Otorhinolaryngol. Ital. 2021, 41, 108.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Alanazi, A.A.; Khayyat, M.M.; Khayyat, M.M.; Elamin Elnaim, B.M.; Abdel-Khalek, S. Intelligent deep learning enabled oral squamous cell carcinoma detection and classification using biomedical images. Comput. Intell. Neurosci. 2022, 2022, 7643967.

- Musulin, J.; Štifanić, D.; Zulijani, A.; Ćabov, T.; Dekanić, A.; Car, Z. An enhanced histopathology analysis: An AI-based system for multiclass grading of oral squamous cell carcinoma and segmenting of epithelial and stromal tissue. Cancers 2021, 13, 1784.

- Dixit, S.; Kumar, A.; Srinivasan, K. A current review of machine learning and deep learning models in oral cancer diagnosis: Recent technologies, open challenges, and future research directions. Diagnostics 2023, 13, 1353.

- Singh, A.; Sengupta, S.; Lakshminarayanan, V. Explainable deep learning models in medical image analysis. J. Imaging 2020, 6, 52.

- Chaddad, A.; Peng, J.; Xu, J.; Bouridane, A. Survey of explainable AI techniques in healthcare. Sensors 2023, 23, 634.

- Rahman, A.U.; Alqahtani, A.; Aldhafferi, N.; Nasir, M.U.; Khan, M.F.; Khan, M.A.; Mosavi, A. Histopathologic oral cancer prediction using oral squamous cell carcinoma biopsy empowered with transfer learning. Sensors 2022, 22, 3833.

- Mohan, R.; Rama, A.; Raja, R.K.; Shaik, M.R.; Khan, M.; Shaik, B.; Rajinikanth, V. OralNet: Fused optimal deep features framework for oral squamous cell carcinoma detection. Biomolecules 2023, 13, 1090.

- Das, M.; Dash, R.; Mishra, S.K.; Dalai, A.K. An ensemble deep learning model for oral squamous cell carcinoma detection using histopathological image analysis. IEEE Access 2024, 12, 127185–127197.

- Suara, S.; Jha, A.; Sinha, P.; Sekh, A.A. Is Grad-CAM explainable in medical images? In Proceedings of the International Conference on Computer Vision and Image Processing, Jammu, India, 3–5 November 2023; Springer Nature: Cham, Switzerland, 2023; pp. 124–135.

- Oya, K.; Kokomoto, K.; Nozaki, K.; Toyosawa, S. Oral squamous cell carcinoma diagnosis in digitized histological images using convolutional neural network. J. Dent. Sci. 2023, 18, 322–329.

- Afify, H.M.; Mohammed, K.K.; Hassanien, A.E. Novel prediction model on OSCC histopathological images via deep transfer learning combined with Grad-CAM interpretation. Biomed. Signal Process. Control 2023, 83, 104704.

- Da Silva, A.V.B.; Saldivia-Siracusa, C.; de Souza, E.S.C.; Araújo, A.L.D.; Lopes, M.A.; Vargas, P.A.; Kowalski, L.P.; Santos-Silva, A.R.; de Carvalho, A.C.P.L.F.; Quiles, M.G. Enhancing explainability in oral cancer detection with Grad-CAM visualizations. In Proceedings of the International Conference on Computational Science and Its Applications, Hanoi, Vietnam, 1–4 July 2024; Springer Nature: Cham, Switzerland, 2024; pp. 151–164.

- El-Naggar, A.K.; Chan, J.K.; Grandis, J.R.; Takata, T.; Slootweg, P.J. (Eds.) WHO Classification of Head and Neck Tumours; International Agency for Research on Cancer (IARC): Lyon, France, 2017.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (PMLR), Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception architecture for computer vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2020, 17, 168–192.

- Leonard, L.C. Web-Based Behavioral Modeling for Continuous User Authentication (CUA). Adv. Comput. 2017, 105, 1–44.

- Prusty, S.; Patnaik, S.; Dash, S.K. SKCV: Stratified K-fold cross-validation on ML classifiers for predicting cervical cancer. Front. Nanotechnol. 2022, 4, 972421.

- Hamm, C.A.; Baumgärtner, G.L.; Biessmann, F.; Beetz, N.L.; Hartenstein, A.; Savic, L.J.; Froböse, K.; Dräger, F.; Schallenberg, S.; Rudolph, M.; et al. Interactive explainable deep learning model informs prostate cancer diagnosis at MRI. Radiology 2023, 307, e222276.

- Brunese, L.; Mercaldo, F.; Reginelli, A.; Santone, A. Explainable deep learning for pulmonary disease and coronavirus COVID-19 detection from X-rays. Comput. Methods Programs Biomed. 2020, 196, 105608.

- Alshazly, H.; Linse, C.; Barth, E.; Martinetz, T. Explainable COVID-19 detection using chest CT scans and deep learning. Sensors 2021, 21, 455.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).