Simple Summary

Farm animal welfare and productivity, along with safety, depend on the proper understanding and management of aggressive behavior. Scientists have developed two contemporary methods to study aggression through smart video monitoring systems that analyze animal behaviors and which conduct brain gene expression analysis during aggressive states. The review examined 268 studies from 2014 to 2025 which included 250 artificial intelligence (AI)-based behavior monitoring studies and 18 transcriptomics studies but no studies that combined both methods. The review examined how these methods have been implemented across different farm species, including pigs, cows and chickens, but discovered that these tools remain disconnected. Most research continues to focus on well-known species while other species have received limited scientific attention. The review focuses on the requirement for standardized behavioral definitions together with affordable monitoring tools and multi-species research, particularly in practical farm settings. Scientists and farmers can better predict and prevent aggression through the combination of behavioral video data with genetic insights. An integrated approach would promote better animal health while ensuring farm safety and enhanced breeding results and sustainable livestock operations.

Abstract

The presence of aggressive behavior in livestock creates major difficulties for animal welfare, farm safety, economic performance and selective breeding. The two innovative tools of deep learning-based video analysis and transcriptomic profiling have recently appeared to aid the understanding and monitoring of such behaviors. This scoping review assesses the current use of these two methods for aggression research across livestock species and identifies trends while revealing unaddressed gaps in existing literature. A scoping literature search was performed through the PubMed, Scopus and Web of Science databases to identify articles from 2014 to April 2025. The research included 268 original studies which were divided into 250 AI-driven behavioral phenotyping papers and 18 transcriptomic investigations without any studies combining both approaches. Most research focused on economically significant species, including pigs and cattle, yet poultry and small ruminants, along with camels and fish and other species, received limited attention. The main developments include convolutional neural network (CNN)-based object detection and pose estimation systems, together with the transcriptomic identification of molecular pathways that link to aggression and stress. The main barriers to progress in the field include inconsistent behavioral annotation and insufficient real-farm validation together with limited cross-modal integration. Standardized behavior definitions, together with multimodal datasets and integrated pipelines that link phenotypic and molecular data, should be developed according to our proposal. These innovations will speed up the advancement of livestock welfare alongside precision breeding and sustainable animal production.

1. Introduction

Behavior and aggression in livestock are key elements of welfare, productivity and sustainability in animal husbandry [1]. Inimical interactions can lead to injuries, chronic stress, reduced feed intakes and reproductive failures, emphasizing the need for effective monitoring and early detection structures. These can create ethical problems which also endanger the long-term viability and efficiency of livestock production systems. The growing worldwide need for animal protein creates additional stress on farms to detect and prevent negative social behaviors in order to maintain both welfare and performance [2]. In recent years, the merging of precision livestock farming with AI-based phenotyping tools and transcriptomics has enabled remarkable insights into both external behavioral cues and internal regulatory mechanisms, though the combination of the two areas has yet to be explored [3,4].

Behavior and social stress evaluation in the past has depended on manual observations through ethograms and simple sensor-based monitoring systems, which have proved time-consuming and subjective, with limited resolution and scalability. Precision livestock farming (PLF) has brought automated monitoring tools for continuous non-invasive animal observation. Deep learning algorithms in computer vision systems have demonstrated exceptional effectiveness for detecting specific behavioral traits, including aggression, mounting, feeding and locomotion. Many systems employ CNNs and recurrent neural networks (RNNs) and pose estimation frameworks to detect minor postural and interaction patterns in video streams, which enables real-time large-scale phenotyping [5,6].

Meanwhile, transcriptomic technologies have revealed molecular pathways controlling social behavior and aggression, providing understanding of these complex attributes. The advancement of transcriptomic technologies including RNA sequencing (RNA-Seq) has enabled a better understanding of behavioral molecular mechanisms, including aggression, stress response and social hierarchy. Research on model and farm species has revealed gene networks that control neuroendocrine regulation, neurotransmission, inflammation and hormonal responses, which help scientists connect behavioral phenotypes to genomic pathways [7]. The application of transcriptomics enables researchers to discover biomarkers and develop predictive breeding methods with personalized welfare interventions for farm settings [8].

The two powerful methods of AI-based behavioral analysis and transcriptomics exist independently in the scientific literature. The fast development of both fields has not been accompanied by sufficient research that merges real-time behavioral information with molecular data, thus creating a significant void in computational neuroethology. The majority of current research has focused on economically dominant species including pigs and cattle, though small ruminants and poultry as well as aquaculture species and camels remain underrepresented despite their worldwide significance [9]. The current scoping review assesses the application of AI-assisted computer vision and transcriptomic techniques for livestock aggression evaluation while identifying species-specific research patterns and methodological challenges in uniting behavioral phenotyping with omics-level data. This paper examines recent developments and limitations and future paths for building integrated, scalable, and ethically grounded behavioral monitoring systems in precision livestock farming.

2. Materials and Methods

2.1. Databases Search Strategy

According to the PRISMA-ScR guideline, a literature search was performed on the following platforms: PubMed, Scopus and Web of Science. Searches were conducted in April and May 2025 for papers published between 2014 and 2025.

The used keywords were selected methodically after initial test searches, to identify the adequate prompts and Boolean operators. The accepted keywords with the Boolean operators were as follows: aggression AND RNA-Seq; aggression AND transcriptomics; behavior AND deep learning; behavior AND livestock AND transcriptomics; computational neuroethology; livestock AND aggression AND deep learning; livestock AND CNN; livestock AND pose estimation; livestock AND video analysis; transcriptomics AND deep learning AND behavior. This resulted in 10 advanced searches on each platform.

2.2. Selection Criterias, Selection Process

The inclusion of articles in the final literature pool were based on original research articles, in English only. During advanced and refined searches on each platform, the “other animal” category was used to ensure the avoidance of publications on human biology and medicine. For this review, we focused on articles published between 1 January 2014 and 1 April 2025. During the search process we also included early access articles in the year 2025, this was undertaken to ensure that relevant and state-of-the-art data are presented. Searching for and assessment of the studies were conducted by one author.

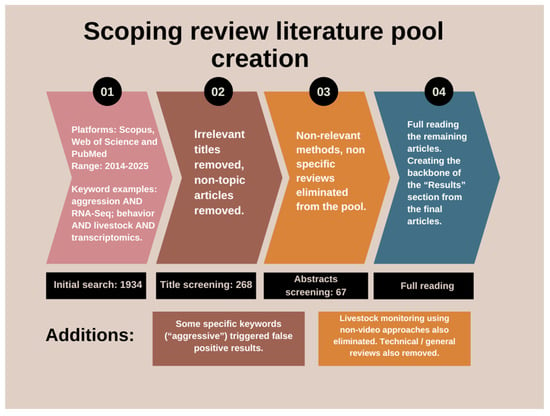

Review papers were excluded from the literature pool to avoid information overlap and repetition. Studies not focusing on behavior in video analysis, or using other sensors and means of acquiring data to research other livestock-related topics were also excluded from the final library. Purely technical/method papers with no animal context were also rooted out during the screening process (Figure 1). Despite the advanced search method, there were several articles listed in the results focusing on human medicine, specifically on oncology, which were also removed. These accidental instances are attributed mainly to the keyword “aggression”.

Figure 1.

Flowchart of the main literature creation with key steps, presenting the search strategy and selection process.

In total, 1934 articles were obtained after the initial search, combined on all three platforms. The screening method was a manual Excel (Microsoft Corporation, 2016) sorting based on the articles’ titles. After the first selection process 268 articles were obtained (250 in computer vision in livestock behavior (Table S1), 18 in transcriptomic studies in livestock behavior (Table S2) and 0 combined), thus creating the final literature pool for the scoping review (Figure 2 and Figure 3). After creating the library, a further screening was conducted by reading the abstract, material and methods, and conclusion segments of the articles. Via this approach, articles were selected for full-read analysis and creating the main content and backbone of the results segment of the review. After this further narrowing, we considered 50 articles pertaining to AI-based behavior analysis and 17 referring to transcriptomics to be key articles. During selection, behavior, social dynamics and aggression were in the focus on both subjects.

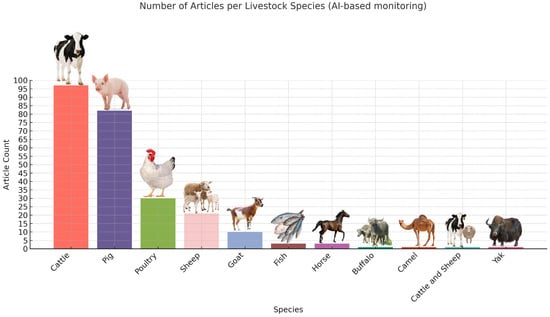

Figure 2.

The obtained DeepLearning-based behavior monitoring articles before the final screening.

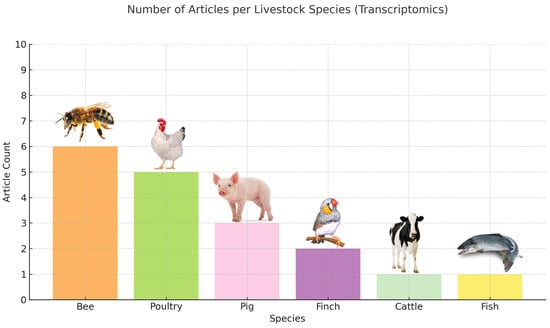

Figure 3.

Transcriptome analysis-based behavior assessment-related articles before the final screening.

3. Results

3.1. Deep Learning-Based Video Analysis of Cattle Behavior

Deep learning techniques help precision livestock farming through automatic behavioral identification and categorization of cattle by analyzing videos. This section presents current advancements in computer vision models which detect cattle behaviors.

Multiple research projects employed object detection architectures to measure and locate cattle behaviors with exact precision. The You Only Look Once version 8 (YOLOv8) framework has received an enhanced version which integrates feature attention mechanisms with squeeze-and-excitation blocks to detect eating and lying and standing and walking behaviors effectively. The model achieved a mean average precision (mAP) of 88%, showing versatility across changing environmental conditions [10]. Another YOLOv5-based method identified both estrus-linked mounting events and also offered individual recognition of the involved animals. The two-stage process started with mounting detection, followed by region of interest-based identity recognition and produced an excellent detection accuracy of 99% [11]. The YOLOv7-E6E model, which was presented in [12] used software, including AutoAugment and GridMask, as enhancements for object detection. The data augmentation method improved the mean average precision (mAP) from 88.2% to 93.0%, thus proving the effectiveness of this approach. The researchers modified faster regions with convolutional neural network (R-CNN) features to analyze a custom cattle dataset for detecting standing and lying and walking behaviors in barn environments, achieving respective behavior-specific accuracies of 94%, 92% and 89% [13]. Another study focused on behavior action classification instead of object detection to analyze the temporal patterns of cattle activities. The approach added the flow-based equalization (FlowEQ) transform, which enhances video signals by adding motion data to an action classifier, leading to 91.5% classification accuracy and an 8% performance gain over baseline models [14]. Another system used a 3D CNN together with convolutional long short-term memory (ConvLSTM) to successfully detect both space and time-based information to organize feeding, grooming, walking, standing, and exploring behaviors. The proposed framework reached 90.32% accuracy for calf videos and 86.67% accuracy for cow videos, which outperformed state-of-the-art models, including BiLSTM and Inception-V3 [15]. Fuentes et al. [16] applied their hierarchical behavior recognition study to 15 categorical behaviors by using both instance-level and spatio-temporal aspects in RGB videos from multiple farm installations that included day and night recordings. The system integrated DeepLabCut pose estimation with LSTM networks to perform drinking behavior classification against non-drinking behavior classification. The researchers tracked essential body parts, including head, ear and neck, before they attributed the resulting temporal data to the LSTM classification model, which achieved 97.35% accuracy on test data [17]. A CNN–RNN hybrid model performed the identification of drinking and grazing behavioral patterns. The two models were calibrated before the resulting model achieved 84.88% accuracy with strong precision to demonstrate the importance of spatial and sequential feature modeling [18]. The dual-branch temporal excitation and aggregation with frequency channel attention (DB-TEAF) presented a new architecture by which to identify fighting, mounting, and running as abnormal behaviors in group-housed cattle. The model used both a motion-attentive sampling approach and a spatio-temporal attention mechanism. The system delivered enhanced precision and performance after acquiring new data because of its practical application in animal behavior and welfare monitoring [19]. The behavior recognition system of Bello et al. [20] utilized Mask R-CNN in real-time to assess four basic behaviors, which included eating, drinking and active and inactive states. The model achieved an accurate detection at 20 frames per second (FPS) while maintaining high accuracy in all categories, which makes it suitable for practical farm use. The research demonstrates an increasing preference toward real-time cattle monitoring systems that use deep learning for behavior analysis. On the other hand, behavior overlaps and group housing occlusions continue to be challenges, as shown by Fuentes et al. [21] and limited availability of complex behavior annotations in datasets are also problematic. Another research study presents a powerful surveillance system that monitors cattle behavior in real time in barn facilities through deep learning-based action detection and pose estimation. The system uses synchronized footage from four viewpoints to track hierarchical behaviors and positioning, which it then combines into a bird’s eye view (BEV) projection for unobstructed top-down visualization. The action detection model achieved state-of-the-art performance in behavior classification and spatial localization through its training on 6398 images and more than 67,000 annotations from a custom multi-camera cattle action dataset, which resulted in a mean average precision (mAP) of 99.2% and an F1-confidence peak of 98% [22].

3.2. Deep Learning-Based Video Analysis of Pig Behavior

Artificial intelligence-powered automated video analysis of pig behavior has become an essential tool for precision livestock farming which provides extensive solutions to welfare problems and aggression detection, reproductive management and environmental enrichment. The latest research on computer vision-based behavior recognition for pig monitoring includes aggressive and socially significant actions in pig behavior analysis.

The most difficult welfare and economic problems in pig production occur due to aggression in group-housed systems. Modern research presents multiple solutions for precise aggressive event detection. A CNN–RNN framework successfully identified tail-biting behavior with 96.25% classification accuracy together with 92.71% localization accuracy. A tracking-by-detection method with group communication reduction through pairwise contact analysis allowed early intervention possibilities according to [23]. The combination of CNN and gated recurrent unit (GRU) networks with a spatio-temporal attention mechanism enabled 94.8% accurate aggressive episode detection across 5530 labeled video segments [24]. Other authors developed an aggression analysis framework that utilizes RGB and optical flow methods with dual-attention fusion. The model detected aggression events, including their start and durations, with 68.0% average precision, which is a very important behavioral data [25]. The research conducted by Ji et al. [26] integrated temporal shift modules (TSMs) into ResNeXt50 CNNs (residual network) to achieve a 95.65% F1-score and real-time inference at 29 ms per frame. The research of Odo et al. [27] developed an efficient system that combined YOLOv4 and YOLOv7 with deep simple online and real-time tracking (DeepSORT) and centroid architectures to track and measure ear biting aggression events. Although detection reached 98% accuracy it faced tracking difficulties because false alarms reached 34%. In a different study, authors built a dual-stream faster R-CNN model to enhance automated sow posture recognition using combined RGB and depth (RGB-D) video data. The system achieved superior results when compared with traditional RGB models through depth camera usage, particularly when lighting conditions change. The approach does not address aggression directly, yet it enhances behavior monitoring reliability through day–night behavioral analysis in intensive farming systems [28]. Welfare assessment requires positive social interactions and playful behaviors as key elements. The combination of CNN-based detection with graph convolutional network (GCN) enabled the identification of preweaning piglet social interaction behaviors like nosing and seemingly aggressive play. The approach demonstrated precision of 96.6% and F1-score of 0.9535 [29]. A CNN–SVM system for detecting mating and aggressive interactions in videos achieved F1-scores of 0.9494 when tracking short durations and 0.9377 when tracking entire days [30]. The Dual-YOLOX-Tiny-ByteTrack method operated as a deep learning video system to track individual pigs in group housing environments. Through improved detection models for multi-target tracking the system delivered 98.3% detection accuracy and 97.1% tracking precision on 180,321 images. The system enabled non-invasive behavior tracking of pigs in swine production facilities without focusing on hostile conduct [31]. The detection of playing actions through a CNN–LSTM model achieved more than 92% accuracy which demonstrates computer vision applications for social dynamics detection in scalable and working systems that may extend to hostile social events, as shown in [32]. Research on general behavior recognition has also analyzed multiple types of activities, the YOLOX + SCTS-SlowFast system used object detection with temporal-spatial attention to classify eating, drinking, standing and walking behaviors, achieving 80.05% mAP and supporting full-day observations of group-housed pigs [33]. A system using fully convolutional networks (FCNs) for spatial segmentation and motion tracking detected lactating sow behaviors that included feeding and nursing along with inactivity. The model demonstrated 97.49% accuracy in drinking detection and 88.09% accuracy in nursing detection [34]. Posture detection accuracy in farrowing environments reached 98.3% when ResNet-18 used RGB images for training, because multimodal inputs proved better than RGB-only models for efficient body position classification [35]. A specialized model consisting of SlowFast + hidden Markov model performed precise sow nursing segmentation by identifying three sub-behavior patterns. The architecture reached 90.5% accuracy for behavior detection and achieved 87.05% accuracy in behavior transition identification [36]. A YOLOv8 + observation-centric SORT (OC-SORT) pipeline provided real-time behavior and identity tracking with multi-object tracking accuracy at 99.7% for long-duration videos, as the tracking ID system establishes behavioral episode connections which, according to Tu et al., provide the foundation for early welfare monitoring [37]. An enrichment engagement (EE) analysis using InceptionV3 + LSTM achieved 97.9% accuracy in differentiating between EE and non-EE clips for 1 s intervals. The authors also established object preferences [38]. In another study the dual-stream deep mutual learning network based on RGB + flow achieved 96.52% general behavior classification accuracy while maintaining low parameter complexity and short training time, which shows promise for developing scalable farm-level systems [39]. A YOLOv7 and YOLOv9 model combined with the mixed-efficient layer aggregation network (ELAN) model that used diverse convolution kernel sizes (MixConv) to detect crushing and lying behaviors, reached 0.805 mean average precision for newborn safety monitoring [40]. The implementation of deep learning-based video analysis for pig behavior monitoring has resulted in improved precision for aggression and social interaction detection as well as posture and enrichment use assessment. These systems provide scalable real-time solutions for welfare and management improvements. Although seemingly, out of all other livestock species, aggression investigation is the most advanced in pig farming, the models primarily identify single behaviors within limited contexts without considering age groups in their analysis.

3.3. AI-Augmented Video Analysis in Poultry

The application of AI-based video surveillance in poultry farming has developed significantly, with increasing focus on detecting behaviors through non-invasive methods. Seven studies focused on poultry, mainly on chickens and quails demonstrating diverse advancements and behavioral focuses, meaning a promising foundation for behavior monitoring in commercial poultry production. Several studies employed deep learning object detection architectures, such as different versions of YOLO models, faster R-CNN, EfficientDet, and SSD, often combined with tracking algorithms like the Kalman filter, DeepSORT, or BoT-SORT, to detect and follow poultry in their environments. Notably, ChickTrack used a multiscale-adapted YOLOv5 pipeline for detecting chickens under occlusion and changing lighting conditions, achieving reliable real-time tracking of behaviors like perching, walking and foraging, which are key signs of positive welfare [41]. Other models focused on quail behavior in caged environments. As has been shown, the I-QAMS system has integrated YOLOv7 with DeepSORT to monitor feeding and movement, achieving over 90% accuracy [42]. Similarly, a comparative study tested four architectures for detecting eating, drinking, and walking, with YOLOv5 reaching the highest precision of 85.52%, showing its effectiveness for behavior classification tasks [43]. Beyond object detection, some studies incorporated temporal modeling to assess behavior. A YOLOv8-based head detection model combined with LSTM prediction enabled group-level estimation of stress-linked deviations in cage environments by quantifying head emersion as a signal for alertness [44]. Silvera et al. [45] investigated vision-based movement analysis to assess lameness in broilers, correlating camera-derived post-disturbance activity with traditional posture scores. This study validated automated video analysis for detecting movement problems across flocks from multiple commercial farms in Europe. In a cage-free context, the use of BoT-SORT tracking embedded in YOLOv8 achieved the classification of running, exploring, and resting behaviors in layers, providing movement-based time means. The model achieved a mean average precision of 98.5%, demonstrating its effectiveness in behavior segmentation and supporting environmental comparisons between aviary setups [46]. While aggression-specific behaviors were not directly studied in most cases, several systems, like ChickTrack and BoT-SORT, lay the technical groundwork for such integration by enabling identity-aware multi-bird tracking, interaction mapping, and spatial-temporal behavior modeling. The relatively lower precision in single shot multibox detector (SSD)-based systems (60.4%) highlight the need for identity tracking developments, especially when analyzing intersecting individuals [47]. Another study introduced the fast chicken aggressive behavior recognition model (FCTR) as a transformer-based architecture for the simultaneous aggressive behavior detection and individual identification of free-range chickens through video monitoring. The system combines wearable ID tagging technology with the FCTR model to achieve precise behavioral measurement through its ability to link behavior with individual identity. The model achieved excellent performance results on the ChickenFight-2024 dataset by reaching mAP scores of 89.81% for fight detection, 85.76% for tread detection, 90.14% for peck detection and high recognition accuracy for eating and drinking behaviors. The system achieved 94.88% accuracy in individual identity matching while maintaining an overall mAP of 77.39% for identity classification. The system demonstrates its ability to detect aggression at high resolution while maintaining real-time individual tracking in farm environments [48]. Collectively, these studies confirm the viability of deep learning-based video analysis for behavior recognition and welfare monitoring in poultry, with notable success in movement, feeding, and stress-related scopings. The diversity in species (broilers, layers, quails), housing systems (cages, cage-free, aviaries), and behavioral focus (movement, rest, alertness) reflects a maturing field with strong introductory potential into aggression detection and welfare evaluation in other avian livestock species, like squab pigeon, turkey, or waterfowl species.

3.4. AI-Augmented Video Behavior Analysis in Minor Livestock and Aquatic Species

Research has primarily investigated AI-driven livestock behavior analysis on cattle, poultry and pigs, though scientists have also recently studied small ruminants (sheep, goat), camels, yaks, horses and fish. The scientific literature demonstrates how computer vision adapts to different environmental and production conditions by showing its relevance in challenging situations where traditional observation methods fail. This section includes four investigations about sheep which utilize deep learning to classify multiple behaviors and determine temperament and optimize technical operations. A study on multi-camera synchronization developed a timestamp-based adjusting method to ensure accurate behavior tracking across views, an essential infrastructure step for future multi-angle AI analysis [49]. The use of optical flow in combination with CNN and LSTM models by Bati & Ser [50] led to 100% accuracy in detecting fear-related temperament traits during isolation tests through motion-based inputs, versus 70% accuracy with raw frames. The results confirm stress evaluation methods while supporting genetic selection programs. A two-stage sheep behavior detector reached >98% mean average precision in detecting two natural behaviors (feeding and lying) and three disruptive actions (attacking, biting and climbing) by using a lightweight visual geometry group (VGG)-based system suitable for farm implementation. Gu et al. [51] have presented one of the rare investigations which directly focuses on aggression behavior. Other authors deployed video–acoustic equipment secured to animal halters to observe grazing patterns, browsing behaviors and chewing actions within eight hours of free-range sheep activity. This research establishes a fundamental basis for wearable monitoring devices which use multiple sensory inputs despite lacking artificial intelligence modeling [52]. The existing methods for tracking identities at scale and extended aggression observation in open pastures require improvement, though a strong foundation exists for conflict analysis. In goat behavior analysis, the GSCW-YOLO model, which employs YOLOv8n, GCT, content-aware reassembly of features (CARAFE) and Wise-IoU achieved 97.5% mean average precision and 94.1% recall while processing 9213 annotated frames at 175 FPS. The model excels at detecting minor atypical behaviors within intricate situations [53]. The second study focusing on goats implemented YOLOv4 with spatial-temporal tracking through a top-side video angle to identify eating and drinking behaviors and active and inactive activities in real-time, with a precision of ~97% [54]. Accurate real-time classification of goat behavior emerges from these studies while future research should focus on developing models for social antagonism and identification. Researchers have developed an enhanced SlowFast model with YOLOX to detect sleeping activities alongside eating and three recumbency positions in horses kept in stalls. The model demonstrated a high accuracy level of 98.77% for eating observations, followed by 93.56% for sleeping observations and a combined accuracy of 93.90% for continuous welfare monitoring of horses [55]. An AI-based camel study presented YOLOv11pose-Camel as a Bactrian camel-specific system which incorporated efficient channel attention (ECA), SimSPPF, and DECA blocks for better global feature extraction. The system achieved 94.5% accuracy together with 94.1% mean average precision to provide a powerful posture-based welfare tracking method for visually comparable species [56]. A pioneering yak behavior model achieved 96.6% total accuracy through the use of 3D ResNet50 and SlowFast to detect eating and lying and standing and walking behaviors in outdoor conditions. The system enables frame-level classification, which proves essential for ongoing health monitoring of extensive grazing systems [57]. Two research papers have contributed to computer vision applications in aquaculture. The dual-stream recurrent network (DSRN), consisting of spatial and 3D motion networks combined with LSTM, achieved 80% accuracy for feeding versus non-feeding detection in salmon within commercial environments [58]. The Aqua3DNet system achieved 80.6% accuracy and 87.3% F1-score using monocular video and salient object detection (SOD)-based depth estimation to produce 3D density heat maps of fish within dynamic environments, which benefits both welfare surveillance and feeding optimization [59]. These research projects analyze specific visual traits of aquatic animals to establish the foundation for vision-based neuroethology applications throughout aquaculture operations.

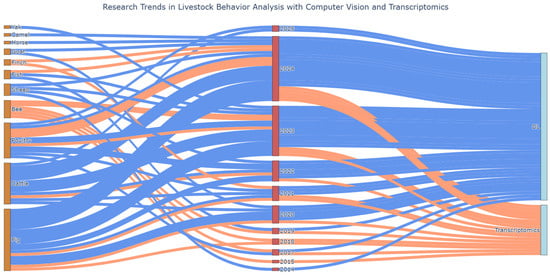

The growing range of AI-enhanced video analysis applications in various livestock sectors is demonstrated through these research studies (Figure 4), showing great versatility in species and the used models for object detection, keypoint estimation/pose estimation, behavior classification, and segmentation, sometimes even using custom frameworks (Table 1). The classification accuracy of several systems is high but there are still unexplored areas regarding aggression detection and more research is needed to model animal interactions and to track multiple animals.

Figure 4.

Sankey diagram presenting research trends according to focused species and year in relation to computer vision (blue strings) and transcriptomics (orange strings).

Table 1.

Summarized table of computer vision frameworks by species.

3.5. Transcriptomic Insights into Aggression and Social Behavior in Livestock and Model Species

Knowledge of molecular mechanisms in relation with aggression in livestock helps to improve animal welfare outcomes and production efficiency and to resolve ethical issues in animal husbandry. Transcriptomic technologies now provide unique insights into the regulatory networks that manage social behaviors and even aggression. This segment presents findings from nineteen transcriptomic research projects conducted on cattle, pigs, poultry, honeybees, fish, and avian subjects which reveal vital pathways and species-specific data in neuroethology. The review also discusses and identifies research limitations, and possible solutions in developing the field.

Starting with cattle, only one research find seemed fitting in the scope of the review. Analyzing the prefrontal cortex of Lidia bulls showed major transcriptomic variations related to age, which pointed to glutamate receptor and synaptogenesis pathways as causes of aggression. Multispecies analysis confirmed overlap with known aggression-related genes, supporting the aggression mechanisms across mammals [60].

Numerous research projects have analyzed the transcriptomic data of pigs to understand their behavioral patterns. Research on weaning effects on amygdala transcriptomes demonstrated sex-specific and treatment-based gene expression changes which affected neurodevelopmental aggression-related genes [61]. Scientists studied domestic pig aggression reduction through Illumina RNA-seq analysis of wild boar and Rongchang pig frontal cortex transcriptomes. The researchers found more than 1200 genes that expressed exclusively in the study while noting major differences in behavioral regulation pathways connected to immune functions. The analysis revealed seven domestication-related genes which matched previous studies indicating a persistent tameness signal in their transcriptomes. Brain gene expression underwent significant changes due to domestication according to a study which focused on social behavior-controlling regions [62]. Researchers demonstrated how omics methods enable the discovery of stress response and damaging behavior biomarkers through their examination of complex stress-related behaviors [63].

Poultry aggression displays itself through different behavioral patterns, with aggressive pecking representing one of the main forms. Researchers combined transcriptomic and metabolomic analysis of Sansui ducks to identify eight potential genes responsible for neurodegenerative imbalance and excitatory changes which trigger aggressive pecking behavior [64]. Stress response from novelty exposure produced distinctive transcriptomic patterns in individual laying ducks. The study showed that EXOSC3 functions as a molecular regulator of productivity traits by participating in neural communication pathways [65]. Selective breeding of Henan gamecocks for combativeness revealed excessive serotonergic–dopaminergic system activation alongside suppressed neuroimmune functions, which demonstrates an immune–aggression tradeoff. The core pathways identified through multi-omics integration consisted of tyrosine metabolism together with neuroactive components [66]. Multiple breeds underwent genomic screening, which identified aggression-related loci including SOX5 and DGKB alongside KCNMA1 that linked to muscularity [67].

Honeybees function as an excellent laboratory system to decode genomic reactions to social stimuli. One study has demonstrated that brain mushroom bodies show different genetic responses to agonistic and cooperative social interactions. Metabolic and chromosomal genes increase during aggression, which indicates that social behavior is encoded at a molecular level [68]. Under aggressive conditions, aerobic glycolysis occurs which was previously linked to cancer metabolism, but which researchers now show enhances neural activity in bees [69]. Academics observed transcriptomic changes which occurred without large chromatin alterations, indicating that specific balanced gene areas function for rapid activation [70]. Scientists analyzed aggressive behavior in worker honeybees by performing RNA sequencing experiments on their brain tissue, body fat and midgut samples. Analysts evaluated aggression connections to immunity and stress resistance by comparing gene expression between bees from high-aggression and low-aggression colonies. Evidence indicates that immune pathways show increased gene expression in non-neural tissues when linked to aggression-related genes. The findings indicate that aggression exists as a typical transcriptomic condition that emerges from social interactions [71]. The genetic variations present in colonies affect how genes related to aggression express themselves, especially in olfactory sensors [72]. The last relevant study indicates that intragenomic conflict and the allele expression stemming from parental genes lead to aggressive behaviors in animals [73].

A study on Chinook salmon behavior due to triploidy and probiotic diet used both gut microbiome analysis and brain transcriptomic methods. The triploid fish showed increased activity levels and higher expression of neuropeptide Y and neural development genes (EGR1, NEUROD, SNAP25) but probiotics did not bring back normal phenotypes because of the intricate relationship between genetic makeup and dietary factors and behavioral traits [74].

An interesting model species, the Zebra finch served as a valuable standard to study how hormones and environmental factors affect aggression. The study by Bentz et al. [75] found that elevated prenatal testosterone exposure led to increased adult aggression and resulted in gene expression and methylation changes of BDNF, ADCY2, and PCSK2 in the hypothalamus and amygdala. The study by Riyahi et al. [76] found that increased sexual competition caused gene expression changes in the posterior pallium and optic tectum but not in the testes, showing that different brain regions have varying sensitivity to social hierarchy pressure.

These studies indicate that transcriptomics holds promise for aggression studies yet multiple essential gaps exist within the field. The research on transcriptomic behavior does not include enough data from livestock species, including goats and sheep, but shows a trend of increasing publications in the field on a year-by-year basis (Figure 4). The research design of the current study does not include longitudinal approaches to study transcriptomic plasticity across developmental stages and environmental cycles.

4. Discussion

This review addresses a major advancement in livestock behavior tracking using AI video systems, along with the transcriptomic background of aggressive behavior in domesticated animal species, but exposes fundamental gaps in research concentration, species diversity and the integration of methodologies. AI-based computer vision technologies served as the basis for 250 out of 268 studies, while transcriptomic aggression research involved only 18 investigations, without any combination of both approaches. Real-time behavioral monitoring has seen a very rapid growth in deep learning technologies for phenotyping but omics-level insights continue to progress at a slower rate. The scientific community dedicates the majority of their research to pigs and cattle for both artificial intelligence and transcriptomic studies. The majority of included studies focused on these species because their economic value and intensive production systems require precise behavior tracking tools. The research into pig group housing behavior resulted in specialized models that track tail biting and ear biting and social interactions. AI systems were widely used in cattle herds to detect mounting and standing and feeding activities. The research focuses on a few poultry studies together with sheep, goat, horse, camel and aquaculture species, which each have less than five studies. The unequal distribution of research findings creates barriers to the universal application of the research results and slows down the development of welfare monitoring systems for minor livestock species.

The reviewed studies demonstrates that AI video analysis has expanded into various livestock applications, which proves the growing adoption of this technology. Most studied systems demonstrated a detection accuracy above 90%, but their behavioral analysis remains restricted to fundamental actions such as eating, walking or lying down. The development of systems which detect social interactions as well as emotional states, reproductive behaviors and aggression under dynamic conditions continues to be inadequate. Research conducted on group-housed animals demonstrates major challenges in identifying individual animals while tracking their social interactions throughout time periods. The study of aquatic systems shows promise, but researchers have encountered specific obstacles when attempting to determine poses, depth perception and group aggression patterns in underwater conditions. Extensive livestock systems, including those associated with sheep, camels and yaks, experienced monitoring difficulties because of weather conditions, long distances and large areas that make sensor-based data collection difficult. Researchers need to develop new temporal modeling techniques, together with multi-view fusion methods and lightweight adaptive models which will support real-time applications in commercial environments and under environmental limitations.

The AI-based studies employed different technical frameworks, including CNNs, LSTMs, pose estimation and tracking algorithms. The models achieved high detection rates but worked only within specific behavioral settings, needed improved external validation and utilized datasets with different annotation practices. These models face generalization problems when moving between species groups, age classes or different farm settings. The detection of aggressive actions, together with changes in group hierarchy remains understudied through social dynamic and cross-behavior interactions.

The transcriptomic studies demonstrated valuable findings about aggression through the identification of genes involved in neurodevelopment, immune response, neuroendocrine signaling and social behavior regulation. The research focused mainly on pigs, together with chickens and honeybees, but most other livestock, such as goats, sheep and aquatic species, received minimal attention. Research on gene expression changes throughout development and environmental cycles remains non-existent in the current literature. Most of the existing research measured gene activity through static moments taken after specific events, making it difficult to align behavioral data temporally. The field lacks sufficient qPCR, CRISPR, RNAi and GWAS methods for validating candidate genes identified through transcriptomic studies, which reduces their practical applications. The research shows that transcriptomics shows excellent potential for studying aggression, but numerous and essential knowledge gaps exist. The research community should pursue the following goals: Multiple tissues need to receive integrated analysis of transcriptomics with proteomics and epigenomics, standard behavioral assays need development to establish direct relationships between molecular profiles and aggression phenotypes, and studies must establish translation methods that link findings from bee and finch models to farm animal applications.

The scoping review demonstrates that no investigations exist to combine AI behavioral monitoring with transcriptomic profiling. Computational neuroethology misses a crucial chance because the synchronization between high-frequency behavioral data and biological sampling would advance aggression research and predictive modeling. The implementation of such integration demands the development of unified data platforms and time-stamped behavioral records that match tissue samples alongside interdisciplinary research teams. We outline the following essential steps for advancing this field:

- The field requires expansion to underrepresented species in order to develop fair behavioral information across worldwide livestock systems;

- The creation of multimodal datasets should include combining AI-generated behavior tracking systems with molecular sampling systems.

- AI models require evaluation through cross-validation and benchmarking tests to determine their performance across different species and environmental settings and behavioral domains.

- There should be a standardization of behavioral definitions, annotation practices, and model performance reporting in order to support meta-analysis and reproducibility.

- The evaluation of both behavioral and transcriptomic systems in real farms should occur in order to guarantee their practical use in animal husbandry.

Future research should achieve ethical and efficient livestock systems with intelligent management of aggression through technological and biological frameworks after addressing structural and scientific challenges.

5. Conclusions

The combination of AI-based behavioral phenotyping with transcriptomic profiling presents substantial potential for future precision livestock farming development. The review establishes current field status while providing a strategic direction to speed up behavioral and molecular data integration. Future studies could achieve better animal behavior understanding through data-rich standardized multi-species approaches, which will lead to more ethical, productive and resilient livestock systems.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/biology14070771/s1, Table S1. List of articles on DeepLearning based livestock behavior analysis before final screening. Table S2. List of articles on transcriptomics based livestock behavior analysis before final screening.

Author Contributions

Conceptualization, R.J. and S.K.; data curation, R.J.; writing—original draft preparation, R.J.; writing—review and editing, S.K., Z.B. and V.B.; visualization, R.J.; supervision, Z.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by 2023-1.1.1-PIACI_FÓKUSZ-2024-00042 and by the University of Debrecen Program for Scientific Publication.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Orihuela, A. Review: Management of livestock behavior to improve welfare and production. Animal 2021, 15, 100290. [Google Scholar] [CrossRef] [PubMed]

- Leroy, F.; Abraini, F.; Beal, T.; Dominguez-Salas, P.; Gregorini, P.; Manzano, P.; Rowntree, J.; Van Vliet, S. Animal board invited review: Animal source foods in healthy, sustainable, and ethical diets—An argument against drastic limitation of livestock in the food system. Animal 2022, 16, 100457. [Google Scholar] [CrossRef] [PubMed]

- Shoyombo, A.J.; Popoola, M.A.; Kuusu, D.J.; Yisah, L.J.; Adebayo, O.M.; Yakubu, H.; Ndiomu, E.P.; Moses, A.A. Computational Approaches in Livestock Breeding: A Review. In Proceedings of the International Conference on Science, Engineering and Business for Driving Sustainable Development Goals (SEB4SDG), Omu-Aran, Nigeria, 2–4 April 2024. [Google Scholar] [CrossRef]

- Yan, Y.; Zhu, S.; Jia, M.; Chen, X.; Qi, W.; Gu, F.; Valencak, T.G.; Liu, J.; Sun, H. Advances in single-cell transcriptomics in animal research. J. Anim. Sci. Biotechnol. 2024, 15, 1. [Google Scholar] [CrossRef] [PubMed]

- Congdon, J.V.; Hosseini, M.; Gading, E.F.; Masousi, M.; Franke, M.; MacDonald, S.E. The future of artificial intelligence in monitoring animal identification, health, and behaviour. Animals 2022, 12, 1711. [Google Scholar] [CrossRef]

- Fazzari, E.; Romano, D.; Falchi, F.; Stefanini, C. Animal Behavior Analysis Methods Using Deep Learning: A survey. Expert Syst. Appl. 2025, 289, 128330. [Google Scholar] [CrossRef]

- Chakraborty, D.; Sharma, N.; Kour, S.; Sodhi, S.S.; Gupta, M.K.; Lee, S.J.; Son, Y.O. Applications of OMICS technology for livestock selection and improvement. Front. Genet. 2022, 13, 774113. [Google Scholar] [CrossRef]

- Zhang, C.; Yang, H.; Xu, Q.; Liu, M.; Chao, X.; Chen, J.; Zhou, B.; Liu, Y. Comprehensive Genome and Transcriptome Analysis Identifies SLCO3A1 Associated with Aggressive Behavior in Pigs. Biomolecules 2023, 13, 1381. [Google Scholar] [CrossRef]

- Jain, K.; Panigrahi, M.; Nayak, S.S.; Rajawat, D.; Sharma, A.; Sahoo, S.P.; Bhushan, B.; Dutt, T. The evolution of contemporary livestock species: Insights from mitochondrial genome. Gene 2024, 927, 148728. [Google Scholar] [CrossRef]

- Ahmad, M.; Zhang, W.; Smith, M.; Brilot, B.; Bell, M. IYOLO-FAM: Improved YOLOv8 with Feature Attention Mechanism for Cow Behaviour Detection. In Proceedings of the 2024 IEEE 15th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), Yorktown Heights, NY, USA, 17–19 October 2024. [Google Scholar] [CrossRef]

- Arıkan, İ.; Ayav, T.; Seçkin, A.Ç.; Soygazi, F. Estrus Detection and Dairy Cow Identification with Cascade Deep Learning for Augmented Reality-Ready Livestock Farming. Sensors 2023, 23, 9795. [Google Scholar] [CrossRef]

- Sim, H.; Kim, T.; Lee, C.; Choi, C.; Kim, J.S.; Cho, H. Optimizing Cattle Behavior Analysis in Precision Livestock Farming: Integrating YOLOv7-E6E with AutoAugment and GridMask to Enhance Detection Accuracy. Appl. Sci. 2024, 14, 3667. [Google Scholar] [CrossRef]

- Ahmad, M.; Zhang, W.; Smith, M.; Brilot, B.; Bell, M. Real-Time livestock activity monitoring via Fine-Tuned faster R-CNN for multiclass cattle behaviour detection. In Proceedings of the 2022 IEEE 13th Annual Ubiquitous Computing, Electronics & Amp; Mobile Communication Conference (UEMCON), New York, NY, USA, 26–29 October 2022. [Google Scholar] [CrossRef]

- Chae, J.; Sim, H.; Lee, C.; Choi, C.; Cho, H. Video-Based Analysis of Cattle Behaviors: Improved Classification using FlowEQ Transform. IEEE Access 2024, 12, 42860–42867. [Google Scholar] [CrossRef]

- Qiao, Y.; Guo, Y.; Yu, K.; He, D. C3D-ConvLSTM based cow behaviour classification using video data for precision livestock farming. Comput. Electron. Agric. 2022, 193, 106650. [Google Scholar] [CrossRef]

- Fuentes, A.; Yoon, S.; Park, J.; Park, D.S. Deep learning-based hierarchical cattle behavior recognition with spatio-temporal information. Comput. Electron. Agric. 2020, 177, 105627. [Google Scholar] [CrossRef]

- Islam, M.N.; Yoder, J.; Nasiri, A.; Burns, R.T.; Gan, H. Analysis of the drinking behavior of beef cattle using computer Vision. Animals 2023, 13, 2984. [Google Scholar] [CrossRef]

- Daker, M.; Elsayaad, F.; Atia, A. The Classification of Cattle Behaviors Using Deep Learning. In Proceedings of the 2024 6th International Conference on Computing and Informatics (ICCI), Cairo, Egypt, 6–7 March 2024. [Google Scholar] [CrossRef]

- Han, Y.; Wu, J.; Zhang, H.; Cai, M.; Sun, Y.; Li, B.; Feng, X.; Hao, J.; Wang, H. Beef cattle abnormal behaviour recognition based on dual-branch frequency channel temporal excitation and aggregation. Biosyst. Eng. 2024, 241, 28–42. [Google Scholar] [CrossRef]

- Bello, R.; Mohamed, A.S.A.; Talib, A.Z.; Sani, S.; Wahab, M.N.A. Behavior Recognition of Group-ranched Cattle from Video Sequences using Deep Learning. Indian J. Anim. Res. 2021, 56, 505–512. [Google Scholar] [CrossRef]

- Fuentes, A.; Han, S.; Nasir, M.F.; Park, J.; Yoon, S.; Park, D.S. Multiview monitoring of individual cattle behavior based on action recognition in closed barns using deep learning. Animals 2023, 13, 2020. [Google Scholar] [CrossRef]

- Nasir, M.F.; Fuentes, A.; Han, S.; Liu, J.; Jeong, Y.; Yoon, S.; Park, D.S. Multi-camera fusion and bird-eye view location mapping for deep learning-based cattle behavior monitoring. Artif. Intell. Agric. 2025, 15, 724–743. [Google Scholar] [CrossRef]

- Liu, D.; Oczak, M.; Maschat, K.; Baumgartner, J.; Pletzer, B.; He, D.; Norton, T. A computer vision-based method for spatial-temporal action recognition of tail-biting behaviour in group-housed pigs. Biosyst. Eng. 2020, 195, 27–41. [Google Scholar] [CrossRef]

- Gao, Y.; Yan, K.; Dai, B.; Sun, H.; Yin, Y.; Liu, R.; Shen, W. Recognition of aggressive behavior of group-housed pigs based on CNN-GRU hybrid model with spatio-temporal attention mechanism. Comput. Electron. Agric. 2023, 205, 107606. [Google Scholar] [CrossRef]

- Yan, K.; Dai, B.; Liu, H.; Yin, Y.; Li, X.; Wu, R.; Shen, W. Deep neural network with adaptive dual-modality fusion for temporal aggressive behavior detection of group-housed pigs. Comput. Electron. Agric. 2024, 224, 109243. [Google Scholar] [CrossRef]

- Ji, H.; Teng, G.; Yu, J.; Wen, Y.; Deng, H.; Zhuang, Y. Efficient Aggressive Behavior recognition of Pigs based on temporal shift module. Animals 2023, 13, 2078. [Google Scholar] [CrossRef] [PubMed]

- Odo, A.; Muns, R.; Boyle, L.; Kyriazakis, I. Video analysis using deep learning for automated quantification of ear biting in pigs. IEEE Access 2023, 11, 59744–59757. [Google Scholar] [CrossRef]

- Wei, L.; Zhu, X.; Li, J.; Zhai, Z. Sow Behavior Feature Recognition Based on Deep Camera Data. In Proceedings of the 2024 International Symposium on Digital Home (ISDH), Guilin, China, 1–4 November 2024. [Google Scholar] [CrossRef]

- Gan, H.; Xu, C.; Hou, W.; Guo, J.; Liu, K.; Xue, Y. Spatiotemporal graph convolutional network for automated detection and analysis of social behaviours among pre-weaning piglets. Biosyst. Eng. 2022, 217, 102–114. [Google Scholar] [CrossRef]

- Gan, H.; Ou, M.; Huang, E.; Xu, C.; Li, S.; Li, J.; Liu, K.; Xue, Y. Automated detection and analysis of social behaviors among preweaning piglets using key point-based spatial and temporal features. Comput. Electron. Agric. 2021, 188, 106357. [Google Scholar] [CrossRef]

- Li, Q.; Zhuo, Z.; Gao, R.; Wang, R.; Zhang, N.; Shi, Y.; Wu, T.; Ma, W. A PIG behavior-tracking method based on a Multi-Channel High-Efficiency attention mechanism. Agric. Commun. 2024, 4, 100062. [Google Scholar] [CrossRef]

- Low, B.E.; Cho, Y.; Lee, B.; Yi, M.Y. Playing behavior classification of Group-Housed pigs using a deep CNN-LSTM network. Sustainability 2022, 14, 16181. [Google Scholar] [CrossRef]

- Li, R.; Dai, B.; Hu, Y.; Dai, X.; Fang, J.; Yin, Y.; Liu, H.; Shen, W. Multi-behavior detection of group-housed pigs based on YOLOX and SCTS-SlowFast. Comput. Electron. Agric. 2024, 225, 109286. [Google Scholar] [CrossRef]

- Yang, A.; Huang, H.; Zheng, B.; Li, S.; Gan, H.; Chen, C.; Yang, X.; Xue, Y. An automatic recognition framework for sow daily behaviours based on motion and image analyses. Biosyst. Eng. 2020, 192, 56–71. [Google Scholar] [CrossRef]

- Pacheco, V.M.; Brown-Brandl, T.M.; De Sousa, R.V.; Rohrer, G.A.; Sharma, S.R.; Martello, L.S. Deep learning-based sow posture classifier using colour and depth images. Smart Agric. Technol. 2024, 9, 100563. [Google Scholar] [CrossRef]

- Li, B.; Xu, W.; Chen, T.; Cheng, J.; Shen, M. Recognition of fine-grained sow nursing behavior based on the SlowFast and hidden Markov models. Comput. Electron. Agric. 2023, 210, 107938. [Google Scholar] [CrossRef]

- Tu, S.; Du, J.; Liang, Y.; Cao, Y.; Chen, W.; Xiao, D.; Huang, Q. Tracking and behavior analysis of Group-Housed pigs based on a Multi-Object Tracking approach. Animals 2024, 14, 2828. [Google Scholar] [CrossRef]

- Chen, C.; Zhu, W.; Oczak, M.; Maschat, K.; Baumgartner, J.; Larsen, M.L.V.; Norton, T. A computer vision approach for recognition of the engagement of pigs with different enrichment objects. Comput. Electron. Agric. 2020, 175, 105580. [Google Scholar] [CrossRef]

- Hao, W.; Zhang, K.; Zhang, L.; Han, M.; Hao, W.; Li, F.; Yang, G. TSML: A new PIG behavior recognition method based on Two-Stream Mutual Learning Network. Sensors 2023, 23, 5092. [Google Scholar] [CrossRef]

- Lee, J.; Choi, Y.H.; Lee, H.; Park, H.J.; Hong, J.S.; Lee, J.H.; Sa, S.J.; Kim, Y.M.; Kim, J.E.; Jeong, Y.D.; et al. Enhanced Swine Behavior Detection with YOLOs and a Mixed Efficient Layer Aggregation Network in Real Time. Animals 2024, 14, 3375. [Google Scholar] [CrossRef]

- Neethirajan, S. ChickTrack—A quantitative tracking tool for measuring chicken activity. Measurement 2022, 191, 110819. [Google Scholar] [CrossRef]

- Evangelista, I.R.S.; Concepcion, R.; Palconit, M.G.B.; Bandala, A.A.; Dadios, E.P. YOLOV7 and DeepSORT for intelligent Quail Behavioral Activities monitoring. In Proceedings of the 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Boracay Island, Philippines, 20–30 November 2021. [Google Scholar] [CrossRef]

- Evangelista, I.R.S.; Catajay, L.T.; Bandala, A.A.; Concepcion, R.S., II; Sybingco, E.; Dadios, E.P. Exploring deep learning for detection of poultry activities—Towards an autonomous health and welfare monitoring in poultry farms. In Proceedings of the 2023 17th International Conference on Ubiquitous Information Management and Communication (IMCOM), Seoul, Republic of Korea, 3–5 January 2023. [Google Scholar] [CrossRef]

- Fomina, A.S.; Sklyarenko, A.A.; Dolgov, V.V.; Vasiliev, P.V.; Zelenkova, G.A.; Kochetkova, N.A. Assessing the motor activity of chickens based on artificial intelligence technology. BIO Web Conf. 2024, 149, 01017. [Google Scholar] [CrossRef]

- Silvera, A.; Knowles, T.; Butterworth, A.; Berckmans, D.; Vranken, E.; Blokhuis, H. Lameness assessment with automatic monitoring of activity in commercial broiler flocks. Poult. Sci. 2017, 96, 2013–2017. [Google Scholar] [CrossRef]

- Siriani, A.L.R.; De Carvalho Miranda, I.B.; Mehdizadeh, S.A.; Pereira, D.F. Chicken tracking and individual bird activity monitoring using the BOT-SORT algorithm. AgriEngineering 2023, 5, 1677–1693. [Google Scholar] [CrossRef]

- Khairunissa, J.; Wahjuni, S.; Soesanto, I.R.H.; Wulandari, W. Detecting Poultry Movement for Poultry Behavioral Analysis using The Multi-Object Tracking (MOT) Algorithm. In Proceedings of the 2021 8th International Conference on Computer and Communication Engineering (ICCCE), Kuala Lumpur, Malaysia, 22–23 June 2021. [Google Scholar] [CrossRef]

- Xue, H.; Ma, J.; Yang, Y.; Qu, H.; Wang, L.; Li, L. Aggressive behavior recognition and welfare monitoring in yellow-feathered broilers using FCTR and wearable identity tags. Comput. Electron. Agric. 2025, 235, 110284. [Google Scholar] [CrossRef]

- Dovdon, E.; Agarwal, M.; Dajsuren, Y.; De Vlieg, J. Irregular frame rate synchronization of multi-camera videos for Data-Driven Animal Behavior Detection. In Proceedings of the Second International Conference on Advances in Computing Research (ACR’24), Madrid, Spain, 3–5 June 2024, 1st ed.; Daimi, K., Al Sadoon, A., Eds.; Springer: Cham, Switzerland, 2024; pp. 97–112. [Google Scholar] [CrossRef]

- Bati, C.T.; Ser, G. SHEEPFEARNET: Sheep fear test behaviors classification approach from video data based on optical flow and convolutional neural networks. Comput. Electron. Agric. 2022, 204, 107540. [Google Scholar] [CrossRef]

- Gu, Z.; Zhang, H.; He, Z.; Niu, K. A two-stage recognition method based on deep learning for sheep behavior. Comput. Electron. Agric. 2023, 212, 108143. [Google Scholar] [CrossRef]

- Sneddon, J.; Mason, A. Automated Monitoring of Foraging Behaviour in Free Ranging Sheep Grazing a Bio-diverse Pasture using Audio and Video Information. Int. J. Smart Sens. Intell. Syst. 2014, 7, 1–4. [Google Scholar] [CrossRef][Green Version]

- Wang, X.; Hu, Y.; Wang, M.; Li, M.; Zhao, W.; Mao, R. A Real-Time lightweight behavior recognition model for multiple dairy goats. Animals 2024, 14, 3667. [Google Scholar] [CrossRef]

- Jiang, M.; Rao, Y.; Zhang, J.; Shen, Y. Automatic behavior recognition of group-housed goats using deep learning. Comput. Electron. Agric. 2020, 177, 105706. [Google Scholar] [CrossRef]

- Liu, Y.; Zhou, F.; Zheng, W.; Bai, T.; Chen, X.; Guo, L. Sleeping and eating behavior recognition of horses based on an improved SlowFast network. Sensors 2024, 24, 7791. [Google Scholar] [CrossRef]

- Liu, L.; Kurban, A.; Liu, Y. Improved YOLOV11pose for posture estimation of Xinjiang Bactrian Camels. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 12. [Google Scholar] [CrossRef]

- Sun, G.; Liu, T.; Zhang, H.; Tan, B.; Li, Y. Basic behavior recognition of yaks based on improved SlowFast network. Ecol. Inform. 2023, 78, 102313. [Google Scholar] [CrossRef]

- Måløy, H.; Aamodt, A.; Misimi, E. A spatio-temporal recurrent network for salmon feeding action recognition from underwater videos in aquaculture. Comput. Electron. Agric. 2019, 167, 105087. [Google Scholar] [CrossRef]

- Koh, M.E.; Fong, M.W.K.; Ng, E.Y.K. Aqua3DNet: Real-time 3D pose estimation of livestock in aquaculture by monocular machine vision. Aquacult. Eng. 2023, 103, 102367. [Google Scholar] [CrossRef]

- Eusebi, P.G.; Sevane, N.; O’Rourke, T.; Pizarro, M.; Boeckx, C.; Dunner, S. Age Effects Aggressive Behavior: RNA-Seq Analysis in Cattle with Implications for Studying Neoteny Under Domestication. Behav. Genet. 2022, 52, 141–153. [Google Scholar] [CrossRef] [PubMed]

- Keever-Keigher, M.R.; Zhang, P.; Bolt, C.R.; Rymut, H.E.; Antonson, A.M.; Caputo, M.P.; Houser, A.K.; Hernandez, A.G.; Southey, B.R.; Rund, L.A.; et al. Interacting impact of maternal inflammatory response and stress on the amygdala transcriptome of pigs. G3-Genes Genomes Genet. 2021, 11, 8. [Google Scholar] [CrossRef] [PubMed]

- Long, K.; Mao, K.; Che, T.; Zhang, J.; Qiu, W.; Wang, Y.; Tang, Q.; Ma, J.; Li, M.; Li, X. Transcriptome differences in frontal cortex between wild boar and domesticated pig. Animal 2018, 89, 848–857. [Google Scholar] [CrossRef] [PubMed]

- Kasper, C.; Ribeiro, D.; De Almeida, A.M.; Larzul, C.; Liaubet, L.; Murani, E. Omics Application in Animal Science—A special emphasis on stress response and damaging behaviour in pigs. Genes 2020, 11, 920. [Google Scholar] [CrossRef]

- Zhu, B.; Zhu, J.; Liu, A.; Yao, B.; Liao, F.; Yang, S. Transcriptomic and metabolomic analysis based on different aggressive pecking phenotype in duck. Sci. Rep. 2024, 14, 1. [Google Scholar] [CrossRef]

- Sun, H.X.; Guo, R.B.; Gu, T.T.; Zong, Y.B.; Xu, W.W.; Chen, L.; Tian, Y.; Li, G.Q.; Lu, L.Z.; Zeng, T. Investigating the correlation between phenotypes, adrenal transcriptome, and serum metabolism in laying ducks exhibiting varying behaviors under the same stressor. Animal 2024, 18, 101343. [Google Scholar] [CrossRef]

- Su, C.; Zhang, L.; Pan, Y.; Jiao, J.; Luo, P.; Chang, X.; Zhang, H.; Si, X.; Chen, W.; Huang, Y. Enhancing aggression in Henan gamecocks via augmentation of serotonergic-dopaminergic signaling and attenuation of neuroimmune response. Poult. Sci. 2024, 103, 104055. [Google Scholar] [CrossRef]

- Ren, X.; Guan, Z.; Zhao, X.; Zhang, X.; Wen, J.; Cheng, H.; Zhang, Y.; Cheng, X.; Liu, Y.; Ning, Z.; et al. Systematic Selection signature analysis of Chinese gamecocks based on genomic and transcriptomic data. Int. J. Mol. Sci. 2023, 24, 5868. [Google Scholar] [CrossRef]

- Shpigler, H.Y.; Saul, M.C.; Murdoch, E.E.; Corona, F.; Cash-Ahmed, A.C.; Seward, C.H.; Chandrasekaran, S.; Stubbs, L.J.; Robinson, G.E. Honey bee neurogenomic responses to affiliative and agonistic social interactions. Genes Brain Behav. 2018, 18, 1. [Google Scholar] [CrossRef]

- Chandrasekaran, S.; Rittschof, C.C.; Djukovic, D.; Gu, H.; Raftery, D.; Price, N.D.; Robinson, G.E. Aggression is associated with aerobic glycolysis in the honey bee brain1. Genes Brain Behav. 2015, 14, 158–166. [Google Scholar] [CrossRef]

- Shpigler, H.Y.; Saul, M.C.; Murdoch, E.E.; Cash-Ahmed, A.C.; Seward, C.H.; Sloofman, L.; Chandrasekaran, S.; Sinha, S.; Stubbs, L.J.; Robinson, G.E. Behavioral, transcriptomic and epigenetic responses to social challenge in honey bees. Genes Brain Behav. 2017, 16, 579–591. [Google Scholar] [CrossRef] [PubMed]

- Rittschof, C.C.; Rubin, B.E.R.; Palmer, J.H. The transcriptomic signature of low aggression in honey bees resembles a response to infection. BMC Genom. 2019, 20, 1. [Google Scholar] [CrossRef] [PubMed]

- Traniello, I.M.; Bukhari, S.A.; Dibaeinia, P.; Serrano, G.; Avalos, A.; Ahmed, A.C.; Sankey, A.L.; Hernaez, M.; Sinha, S.; Zhao, S.D.; et al. Single-cell dissection of aggression in honeybee colonies. Nat. Ecol. Evol. 2023, 7, 1232–1244. [Google Scholar] [CrossRef]

- Bresnahan, S.T.; Lee, E.; Clark, L.; Ma, R.; Markey, M.; Rangel, J.; Grozinger, C.M.; Li-Byarlay, H. Examining parent-of-origin effects on transcription and RNA methylation in mediating aggressive behavior in honey bees (Apis mellifera). BMC Genom. 2023, 24, 315. [Google Scholar] [CrossRef]

- Frank, C.E.; Sadeghi, J.; Heath, D.D.; Semeniuk, C.A.D. Behavioral transcriptomic effects of triploidy and probiotic therapy (Bifidobacterium, Lactobacillus, and Lactococcus mixture) on juvenile Chinook salmon (Oncorhynchus tshawytscha). Genes Brain Behav. 2024, 23, e12898. [Google Scholar] [CrossRef]

- Bentz, A.B.; Niederhuth, C.E.; Carruth, L.L.; Navara, K.J. Prenatal testosterone triggers long-term behavioral changes in male zebra finches: Unravelling the neurogenomic mechanisms. BMC Genom. 2021, 22, 158. [Google Scholar] [CrossRef]

- Riyahi, S.; Liebermann-Lilie, N.D.; Jacobs, A.; Korsten, P.; Mayer, U.; Schmoll, T. Transcriptomic changes in the posterior pallium of male zebra finches associated with social niche conformance. BMC Genom. 2024, 25, 694. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).