1. Introduction

Welding, one of the crucial technologies in industrial manufacturing, plays an important role in the production of pressure pipelines. These pipelines are designed to transport gases and liquids under high pressure and frequently operate in harsh environments characterized by elevated temperatures and pressures. To meet these challenges, pressure pipelines require exceptional sealing performance and long-term structural stability. Accordingly, weld quality is a critical determinant of both the mechanical integrity and operational safety of the pipeline system. Despite the adoption of stringent procedural standards, the welding process is inherently complex and subject to variability. Factors such as material properties, equipment performance, and operator skill can lead to defects such as porosity, cracks, slag inclusion, lack of fusion, and undercut. These defects not only degrade the mechanical properties of the weld but also pose significant safety risks, potentially undermining the reliability and service life of the equipment. Therefore, ensuring high welding quality necessitates a comprehensive quality control system that encompasses the optimization of welding procedures, the judicious selection of materials, and the advancement of operator training, each aimed at minimizing the incidence of weld defects [1, 2].

Welding quality inspection methods are generally categorized into destructive and nondestructive testing. Among these, nondestructive testing (NDT) has become the preferred approach due to its efficiency and practicality. In particular, X-ray inspection stands out as a widely adopted method for identifying weld defects, owing to its high resolution. By using the penetrating properties of X-rays to capture transmission images, this method enables the accurate detection and localization of internal defects within welded components [3, 4]. However, traditional manual inspection of X-ray images relies heavily on the expertise and subjective judgment of quality inspectors, rendering the process time-consuming and susceptible to human error. These limitations call for intelligent inspection technologies that can enhance accuracy and efficiency while reducing human interference. With the rapid advancement of industrial automation, such technologies have become essential for ensuring the reliability and safety of pressurized pipelines, offering a more consistent and dependable approach to welding quality assurance [5].

In recent years, the rapid development of deep learning technology has offered innovative solutions for the intelligent detection of weld defects. Computer vision, as a critical branch of artificial intelligence, simulates the human visual system to capture, process, and interpret information from images and videos, thereby enabling accurate and efficient analysis of visual data [6]. When combined with deep learning algorithms, computer vision can extract complex features from large datasets, enabling efficient and accurate identification of welding defects. Once radiographic films have been digitized, or digital images of the weld have been acquired directly, deep learning models can automatically detect weld defects and precisely classify their types and locations. Compared to traditional manual film evaluation, this approach offers enhanced accuracy, significantly improves detection efficiency, and reduces the likelihood of human errors, such as misjudgments or omissions, while also lowering labor and associated financial costs.

Deep learning-based object detection algorithms, such as Faster R-CNN and YOLO, have demonstrated immense potential in improving welding quality inspection. These methods show strong capabilities in classifying and localizing various weld defects, such as porosity, cracks, and slag inclusion, in radiographic images, thereby significantly enhancing both the efficiency and reliability of defect detection [7]. Furthermore, researchers have explored advanced techniques such as data augmentation, feature extraction, statistical design methods (e.g., response surface methodology, RSM), and model optimization, specifically tailored to the unique characteristics of weld defects. These methods are particularly valuable for enhancing the robustness and reliability of detection models in complex industrial environments [7, 8, 9].

Currently, the deep integration of radiographic flaw detection technology and object detection algorithms has emerged as a pivotal direction for the intelligent advancement of the welding industry [10]. On one hand, deep learning-based defect detection algorithms are capable of rapidly processing large volumes of data and establishing highly accurate welding defect recognition models. On the other hand, intelligent inspection systems can achieve fully automated workflows, encompassing data acquisition, image processing, and defect classification, thereby providing robust technical support for the intelligent management of industrial production processes. However, practical applications still face significant challenges, including the lack of comprehensive datasets, the complexity of defect types, and the limited generalization capability of existing models. Furthermore, the interpretability of welding defect detection models remains limited [11].

In summary, radiographic flaw detection technology plays a crucial role in critical industrial fields, such as pressure pipelines. The integration of computer vision and deep learning technologies markedly enhances the efficiency and accuracy of weld defect detection, providing robust technical support for ensuring equipment safety and product quality. In the future, with the continued evolution of intelligent technologies, artificial intelligence-driven welding quality inspection methods are expected to play an increasingly prominent role in industrial manufacturing and to accelerate the digitalization and intelligent transformation of industrial production processes.

2. Related Work

In recent years, numerous researchers have explored various methodologies for the automatic detection and evaluation of weld defects in radiographic images, leveraging both traditional image processing techniques and cutting-edge deep learning algorithms. This review summarizes the representative studies and technological advancements in this field, highlighting the evolution of approaches and their respective contributions to intelligent weld defect recognition. Shengming Shi [12] proposed a method for constructing three-dimensional spatial models by integrating X-ray flaw detection images with joint profiles based on welding type and layering. This approach enables more accurate classification, localization, and quantification of weld defects, thereby improving the precision of defect evaluation in radiographic images. Xiaohui Yin et al. [13] conducted a comprehensive review of key technologies for automatic X-ray inspection of welding defects, focusing on inspection workflows, image enhancement, defect segmentation, and model recognition, while also outlining future research directions in the field.

Early work in flaw detection film evaluation focused extensively on the development of digital image processing technology for welding X-rays, forming the foundation for computer-based automatic defect identification. Hongwei Kang et al. [14] proposed a defect feature extraction algorithm based on grayscale variation and visual attention mechanisms. By segmenting weld regions and applying filtering and Boolean operations, their method achieved approximately 90% accuracy in defect recognition. Yuanxiang Liu et al. [15] developed an SVM-based method for weld defect recognition in radiographic images, using eight geometric features, such as edge straightness and tip sharpness, to effectively classify six common defect types. Wenming Guo et al. [16] applied the Faster R-CNN model to the WDXI dataset for the automatic detection and localization of weld defects in X-ray images. Their method incorporates welding region extraction based on grayscale statistics, as well as image enhancement and noise reduction techniques. Hongyang Wu et al. [17] developed an improved RetinaNet-based model for multi-class weld defect detection in X-ray images. By integrating K-means clustering, adaptive sample selection, ResNet for feature extraction, and an attention-enhanced feature pyramid network, the model significantly improves detection accuracy and recall, especially for small and imbalanced defect classes.

Liu M et al. [18] proposed LF-YOLO, a lightweight CNN-based model for weld defect detection. Incorporating RMF and EFE modules for enhanced multiscale and efficient feature extraction, the model performs well on weld and COCO datasets, though challenges remain in detecting small defects like porosity. Deng Cong et al. [19] presented a comprehensive overview of core AI algorithms for intelligent radiographic inspection, emphasizing image segmentation, weld defect classification, and feature extraction techniques.

Lushuai Xu et al. [20] proposed an improved YOLOv5 model for intelligent detection of pipeline weld defects in radiographic images, enhancing small target detection via a CA attention mechanism, SIOU loss, and FReLU activation for improved spatial sensitivity and global optimization. Kumaresan et al. [21] enhanced welding X-ray datasets through data augmentation to address size and diversity limitations. Using transfer learning with VGG16 and ResNet50, they developed a weld defect recognition method with improved generalization.

Although extensive research has been conducted on the intelligent recognition of weld defects, ranging from traditional image processing techniques to advanced artificial intelligence and deep learning approaches, a large-scale commercial application of an intelligent evaluation system for weld X-ray images remains unrealized. Currently, there is a lack of a comprehensive intelligent film evaluation system that integrates negative labeling and screening, automatic defect identification, and the generation of intelligent evaluation reports. This study, leveraging deep learning technology, aims to construct a dedicated dataset and develop an intelligent film evaluation system for the recognition of weld X-ray defects.

3. Research Objectives

The weld X-ray intelligent evaluation system for radiographic films consists of three core components: intelligent labeling and data processing of weld radiographs, a multi-model object detection network, and an intelligent evaluation platform for radiographic films. The primary research focuses include defect labeling on weld radiographs, the development of advanced image processing algorithms, the construction of a multi-model detection network tailored to various defect types, real-time defect detection in weld radiographs, and the implementation of an intelligent evaluation and visualization module. Currently, the research team has completed the development and testing of these functionalities, achieving an initial realization of the intelligent evaluation capability for weld radiographic films.

The aspect ratio of nuclear power pipeline weld X-ray negatives is typically around 4:1, and after cropping the weld image, it can exceed 10:1. However, object detection networks are typically trained on input images resized to a 1:1 aspect ratio. This discrepancy may compress and distort both the training images and the object information they contain, reducing the accuracy of defect detection. To address these issues, the image processing algorithm for long straight welds was optimized to better support the training process.

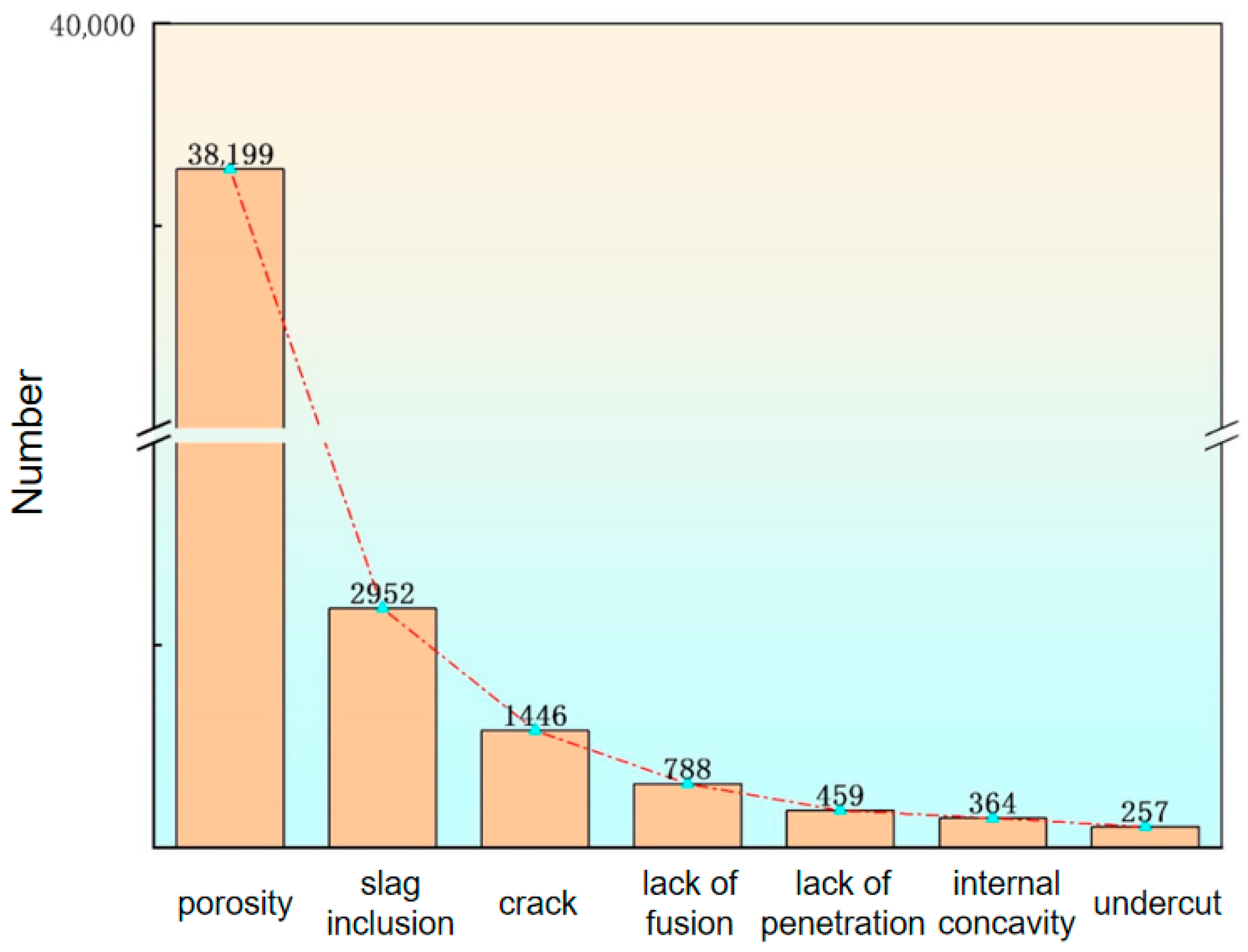

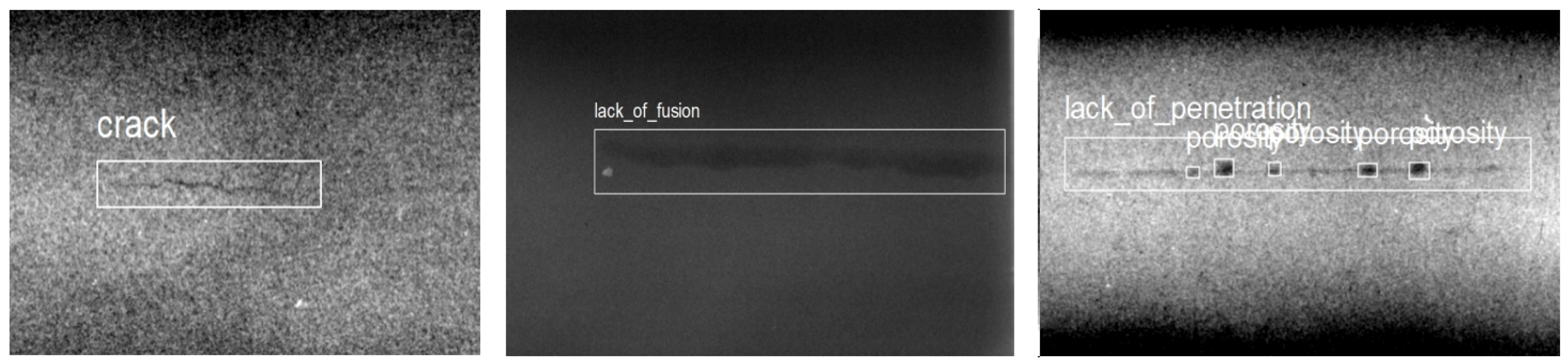

In the context of defect distribution within nuclear power pipeline welds, a total of seven defect types are identified, as illustrated in Figure 1. The dataset exhibits a long-tailed distribution, indicating that certain defects, such as porosity, occur with significantly higher frequency than others. Consequently, the defect detection model tends to become biased toward these more prevalent defects during training, leading to diminished detection performance for rare defect types. To address this issue, this study categorized the defects into three primary groups based on factors including distribution location, quantity, and area. A separate training procedure was employed for each group to improve the model’s overall recognition accuracy across all defect types.

4. Research Program

4.2. Dataset

Utilizing X-ray radiographs of nuclear power pipeline welds, a dataset containing seven types of defects, namely porosity, slag inclusion, lack of fusion, cracks, undercut, lack of penetration, and internal concavity, was collected. The authors meticulously labeled the defects pixel-by-pixel. Based on this dataset, the images were processed using specialized image processing algorithms to enhance the effectiveness of subsequent target detection training.

4.2.1. Annotation Work

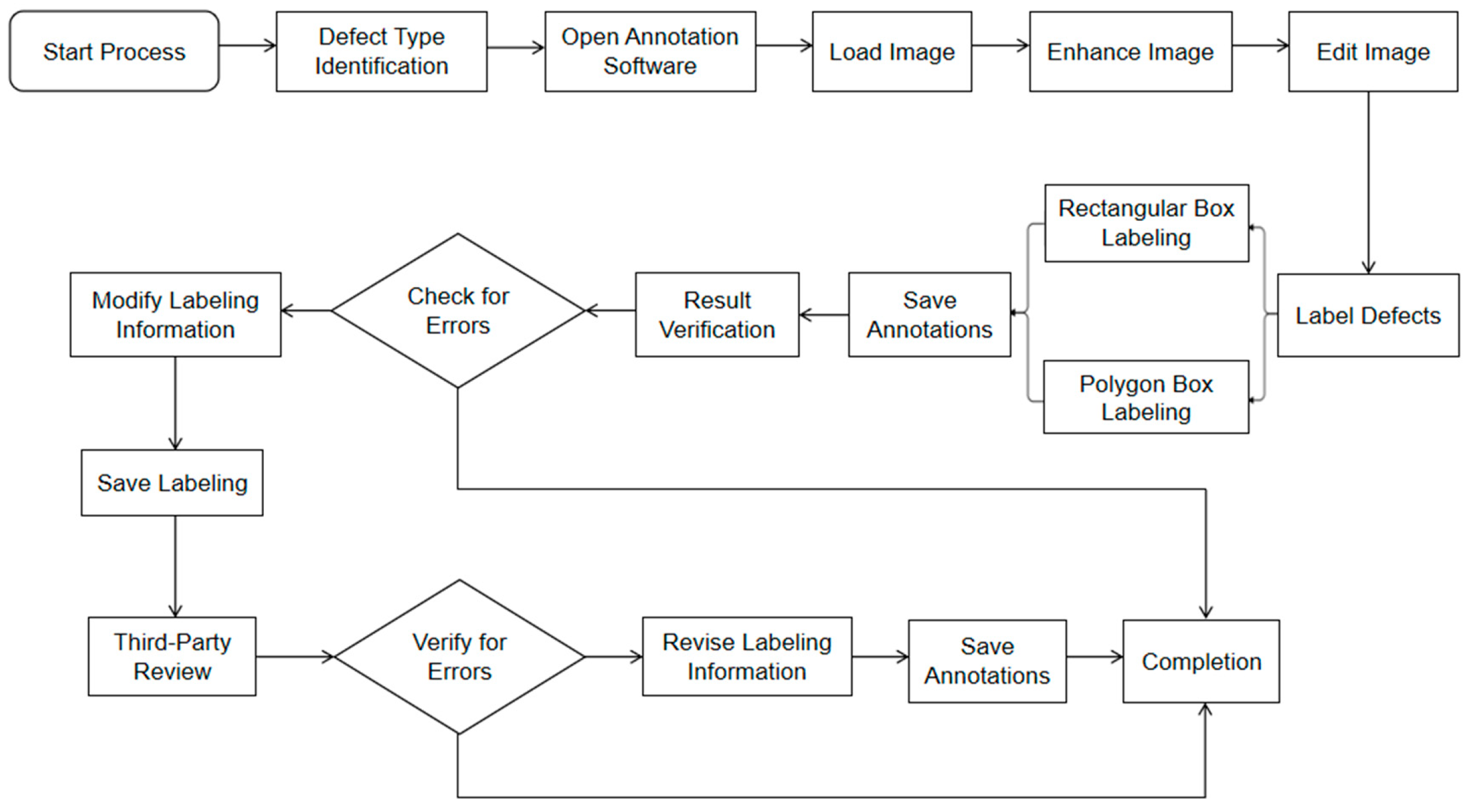

Image annotation is a critical task in computer vision, as task-specific labels help neural networks learn the features of defects. Standardized annotation is therefore the basis for automatic film evaluation. The annotation process requires appropriate skills, high-quality equipment, suitable radiographic images, and standardized methods to ensure accuracy and consistency.

Figure 3 below illustrates the annotation process.

4.2.2. Weld Inspection Model

After the labeling work was completed, the weld region had to be cropped from the original image in order to reduce the influence of the background on the defect detection training process. Utilizing 4602 original weld radiographs along with their corresponding labeled information, the authors employed the YOLOv8 object detection network to train the weld detection model. The specific training parameters are listed in Table 1 below.

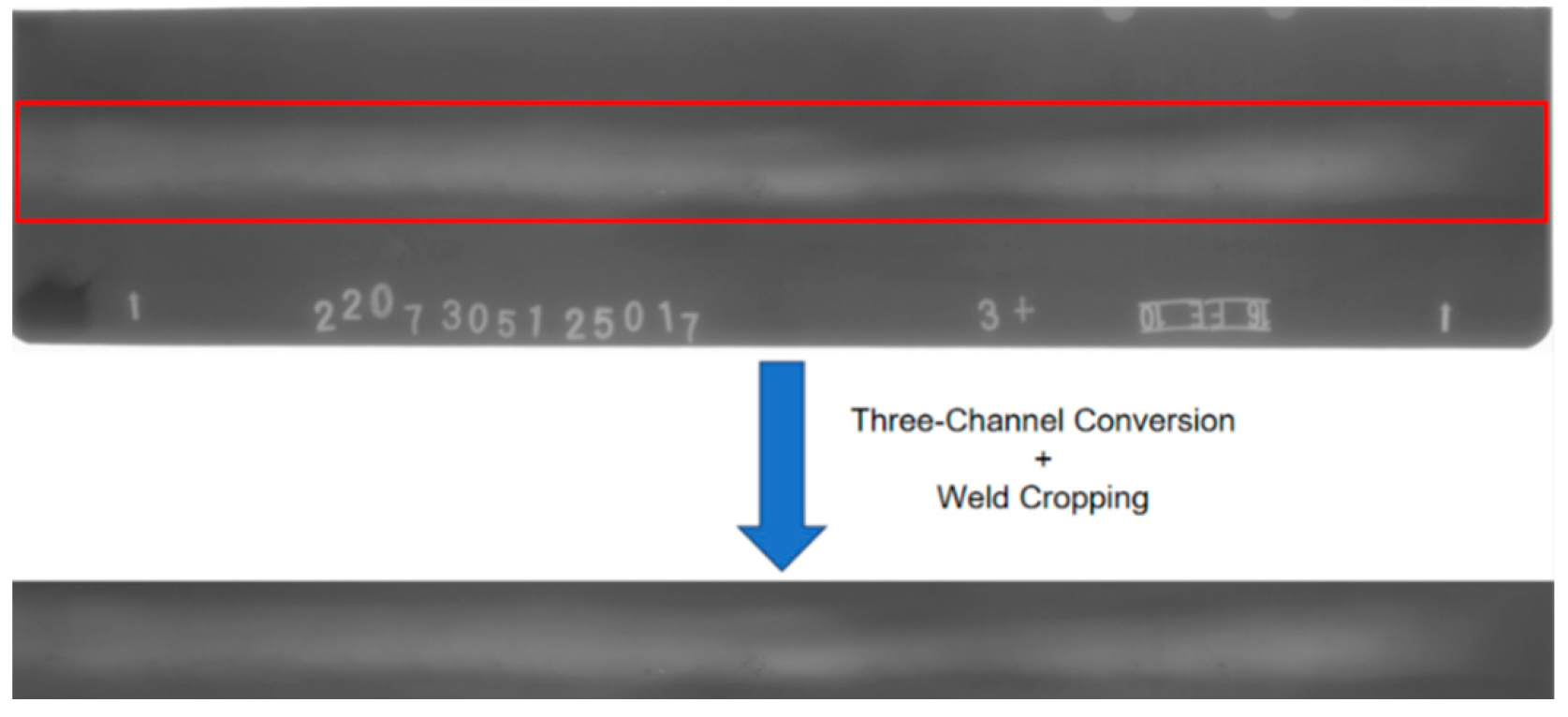

4.2.3. Data Processing

To minimize the negative influence of image background on the model’s training process, we first converted the original two-channel images into a three-channel PNG format. Subsequently, the weld images were cropped using the weld labeling information. The processed images are illustrated in Figure 4, where the red rectangular box denotes the labeled weld region.

After cropping the weld seam, the authors initially attempted to train the weld seam defect detection model directly using the cropped weld images and corresponding annotation files. However, the results were suboptimal. This issue arose because the aspect ratio of the weld images, often exceeding 10:1, differed significantly from the conventional 1:1 ratio used in the YOLOv8 training network, leading to information loss when the images were resized to fit this ratio. To address this issue, we proposed a sliding cropping method. In this method, a square cropping window was defined with a side length equal to half the length of the shorter edge of the weld image. The window was then systematically slid across the image with an overlap ratio of 0.5 to generate cropped sub-images. The process is illustrated in Figure 5, where the red rectangular box indicates the sliding crop frame.
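The sliding cropping rule described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and shifting the last window so that it touches the image border is an assumption, since the text does not say how the remainder at the edge is handled.

```python
from typing import List, Tuple

Window = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels


def axis_starts(length: int, side: int, stride: int) -> List[int]:
    """Start offsets along one axis; the last window is shifted to touch the far edge."""
    if side >= length:
        return [0]
    starts = list(range(0, length - side + 1, stride))
    if starts[-1] != length - side:
        # Assumption: cover the remainder with one extra, more strongly overlapping window.
        starts.append(length - side)
    return starts


def sliding_windows(width: int, height: int, overlap: float = 0.5) -> List[Window]:
    """Square windows whose side is half the shorter image edge, slid with the given overlap."""
    side = max(1, min(width, height) // 2)
    stride = max(1, int(side * (1.0 - overlap)))
    return [
        (x, y, x + side, y + side)
        for y in axis_starts(height, side, stride)
        for x in axis_starts(width, side, stride)
    ]


windows = sliding_windows(1000, 100)  # a 10:1 weld strip
```

For a 1000 × 100 pixel weld strip, this yields 50-pixel windows with a 25-pixel stride, so every sub-image already has the 1:1 ratio and no resizing distortion is introduced.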

4.3. Multi-Model Object Detection Network

As illustrated in Figure 1, the frequency of occurrence varied significantly among different defect types, resulting in considerable class imbalance across categories. When training on such long-tailed datasets, the object detection network tends to bias toward the more prevalent classes, thereby diminishing the network’s ability to accurately detect rare defects. Similarly, differences in the area of defects can exacerbate this issue. To address these challenges, the authors categorized the seven defect types based on their quantity, size, shape, and typical occurrence locations, dividing them into three groups. Each group was trained separately to enhance overall detection accuracy. The specific grouping scheme is detailed in Table 2.
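As an illustration of how annotations might be routed to the three group-specific models, the sketch below uses the grouping reported for Models 1 to 3 in Section 5.2. The label strings, the annotation tuple layout, and the function name are assumptions made for the example.

```python
from typing import Dict, List, Tuple

Annotation = Tuple[str, float, float, float, float]  # (label, x0, y0, x1, y1)
Indexed = Tuple[int, float, float, float, float]     # (class index within group, box)

# Grouping as reported for Models 1-3; the label spellings are illustrative.
DEFECT_GROUPS: Dict[str, List[str]] = {
    "model_1": ["porosity", "slag_inclusion"],
    "model_2": ["crack", "undercut", "lack_of_fusion"],
    "model_3": ["lack_of_penetration", "internal_concavity"],
}


def split_by_group(annotations: List[Annotation]) -> Dict[str, List[Indexed]]:
    """Assign each annotation to its group and re-index its class within that group."""
    grouped: Dict[str, List[Indexed]] = {name: [] for name in DEFECT_GROUPS}
    for label, x0, y0, x1, y1 in annotations:
        for name, labels in DEFECT_GROUPS.items():
            if label in labels:
                grouped[name].append((labels.index(label), x0, y0, x1, y1))
                break
        else:
            raise ValueError(f"unknown defect label: {label}")
    return grouped
```

Re-indexing classes per group lets each model be trained as an independent detector with only two or three classes, which is the point of the grouping strategy.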

Following the grouping process, the defect detection models were trained using the cropped weld images and their corresponding annotations. The dataset was partitioned into training and validation sets in a 9:1 ratio. The YOLOv8 algorithm was employed for its detection efficiency and accuracy. For all three grouped datasets, the model was trained using the default hyperparameters, with the pretrained YOLOv8.pt weights used for initialization. The YOLOv8 training pipeline used in this study integrates various data augmentation techniques, such as mosaic, mixup, geometric transformations, and random erasing, which enhanced the model’s generalization performance, especially for rare defect types.
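A 9:1 partition can be sketched as below. The text does not state whether the split was made per sub-image or per radiograph; this example splits by source radiograph, which is an assumption chosen because overlapping sliding-window crops from one radiograph would otherwise appear in both sets. The function name and the `(radiograph_id, crop_name)` input layout are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def split_by_radiograph(
    crops: Sequence[Tuple[str, str]],  # (radiograph_id, crop_name) pairs
    val_fraction: float = 0.1,
    seed: int = 0,
) -> Tuple[List[str], List[str]]:
    """Return (train_crops, val_crops) with all crops of a radiograph kept together."""
    by_source: Dict[str, List[str]] = defaultdict(list)
    for radiograph_id, crop_name in crops:
        by_source[radiograph_id].append(crop_name)

    ids = sorted(by_source)
    random.Random(seed).shuffle(ids)  # deterministic shuffle for reproducibility
    n_val = max(1, round(len(ids) * val_fraction)) if ids else 0
    val_ids = set(ids[:n_val])

    train = [c for i in ids if i not in val_ids for c in by_source[i]]
    val = [c for i in ids if i in val_ids for c in by_source[i]]
    return train, val
```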

4.4. Automated Evaluation System for Weld Defects

Upon the completion of the multi-model defect detection network training described in Section 4.3, the corresponding models were developed. Combined with the weld inspection model in Section 4.2.2 and following the technical roadmap, the authors implemented the corresponding automatic film evaluation algorithm, which was subsequently optimized and refined for practical application.

The evaluation process began by converting the X-ray radiograph into a three-channel image. Model 0 was used to identify the weld region in the image. If a weld was successfully detected, the corresponding bounding box was used to crop the weld area, which was further subdivided through a sliding window approach. In cases where no weld was detected, the sliding cropping operation was applied to the entire image. The cropped sub-images were sequentially processed by defect detection models, namely Models 1, 2, and 3. The detected defects were filtered based on predefined confidence thresholds to eliminate false positives. Finally, an optimization algorithm was applied to refine and map the predicted bounding boxes back onto the original image, ensuring that the defect locations were accurately and clearly visualized.
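The last two steps of this process, confidence filtering and mapping boxes back onto the original image, can be sketched as follows. The threshold values are placeholders, and the greedy per-class overlap suppression is a stand-in for the refinement algorithm, which the text does not specify; all names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels
Detection = Tuple[str, float, Box]       # (label, confidence, box)


def to_original(det: Detection, window_origin: Tuple[float, float],
                weld_origin: Tuple[float, float] = (0.0, 0.0)) -> Detection:
    """Shift a sub-image detection into original-radiograph coordinates."""
    label, conf, (x0, y0, x1, y1) = det
    dx = window_origin[0] + weld_origin[0]
    dy = window_origin[1] + weld_origin[1]
    return (label, conf, (x0 + dx, y0 + dy, x1 + dx, y1 + dy))


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_and_merge(dets: List[Detection], conf_threshold: float = 0.25,
                     iou_threshold: float = 0.5) -> List[Detection]:
    """Drop low-confidence boxes, then keep the best box among same-class overlaps."""
    confident = sorted((d for d in dets if d[1] >= conf_threshold),
                       key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for det in confident:
        duplicate = any(det[0] == k[0] and iou(det[2], k[2]) >= iou_threshold
                        for k in kept)
        if not duplicate:
            kept.append(det)
    return kept
```

Because adjacent windows overlap by half, the same defect is usually detected in more than one sub-image, so some form of duplicate suppression is needed after the boxes are mapped back to a common coordinate frame.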

5. Application

5.1. Evaluation Indices

The following indices are commonly used to evaluate multi-model object detection networks.

First, precision and recall are considered. In statistical terms, samples can be categorized based on predicted and actual values as follows: TP (true positive), where both predicted and actual values are positive; TN (true negative), where both predicted and actual values are negative; FP (false positive), where the predicted value is positive but the actual value is negative, i.e., a false alarm; and FN (false negative), where the predicted value is negative but the actual value is positive, i.e., a missed detection.

Precision is defined as the proportion of true positive samples among all samples predicted as positive by the model. Since false negative (FN) instances are not considered in its computation, precision alone is not suitable for evaluating the model’s performance in scenarios where missed detections are critical.

Recall, also referred to as the true positive rate, is defined as the proportion of actual positive samples that are correctly identified by the model. Since FP samples are not taken into account in its calculation, recall alone is not sufficient for evaluating the model’s susceptibility to false alarms.

Given the respective limitations of precision and recall when used in isolation, this study further evaluated the performance of the trained models using the mean average precision (mAP) metric, which summarizes precision across recall levels and defect classes and therefore provides a more comprehensive assessment.
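For reference, the metrics discussed in this section correspond to the standard definitions below. The notation is introduced here for illustration: $P(R)$ denotes precision as a function of recall, $N$ is the number of defect classes handled by a model, and $AP_i$ is the average precision of class $i$. For mAP@0.5, a prediction counts as a true positive when its intersection over union with a ground-truth box is at least 0.5.

```latex
\begin{align}
  P  &= \frac{TP}{TP + FP}, &
  R  &= \frac{TP}{TP + FN}, \\
  AP &= \int_{0}^{1} P(R)\,\mathrm{d}R, &
  mAP &= \frac{1}{N} \sum_{i=1}^{N} AP_i .
\end{align}
```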

5.2. Performance of Multiple Models

The first is the weld seam detection model, whose performance directly influences the accuracy of both the sliding window cropping process and subsequent defect detection. Upon the completion of the training process described in Section 4.2.2, the performance of the trained weld detection model was assessed, and the results are presented in Table 3 and illustrated in Figure 6.

The results indicate that the weld seam detection model exhibits excellent performance, achieving an accuracy rate approaching 100%. This high level of precision provides a solid foundation for subsequent defect detection tasks. The model’s detection performance on the validation set is visually presented in Figure 6.

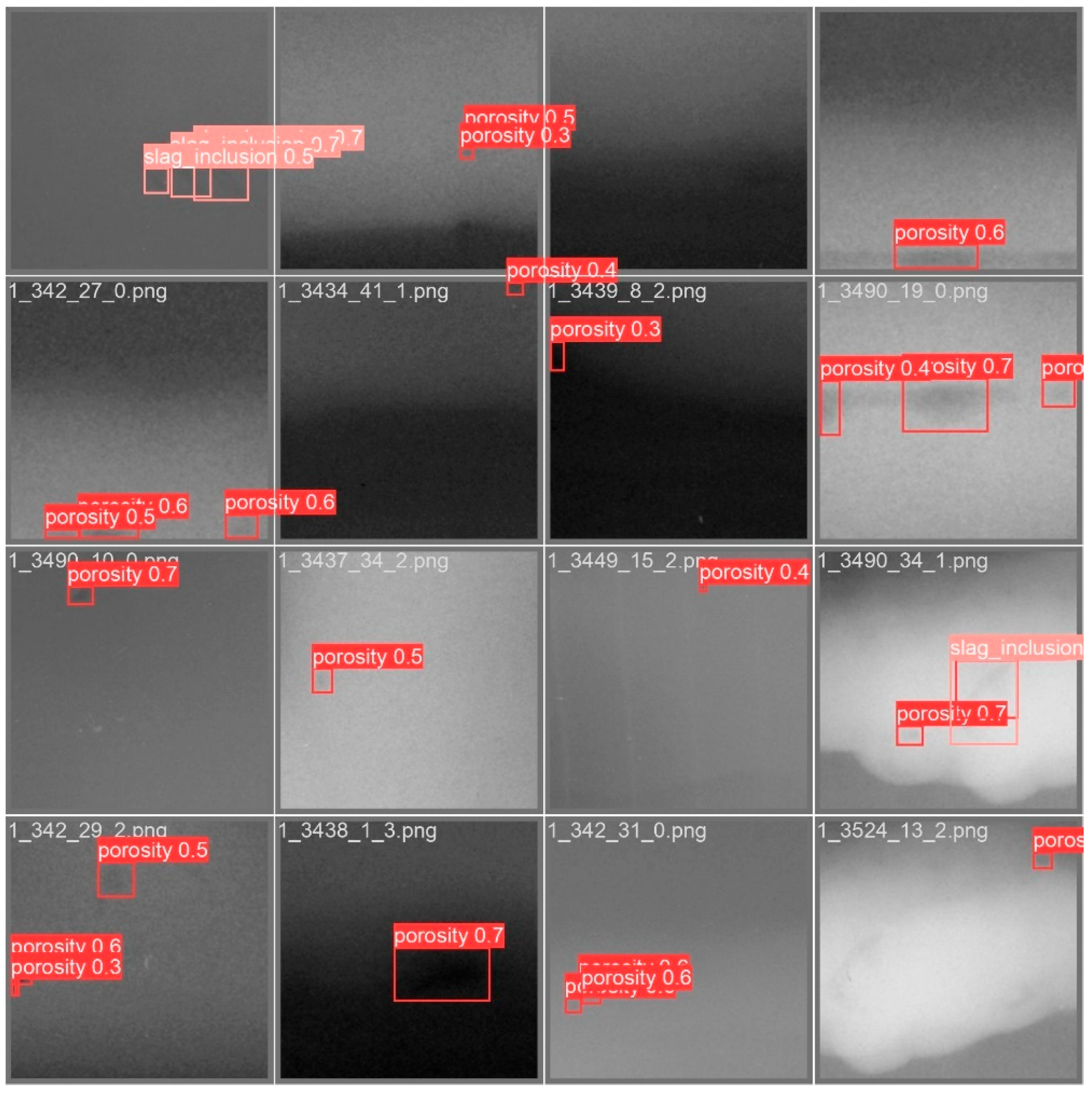

Subsequently, the multi-model target detection approach was trained following the grouping strategy described in Section 4.3. The training results of Model 1, which detects porosity and slag inclusion, are summarized in Table 4 and Figure 7. It is important to note that, because rectangular box annotations do not fit defect contours tightly, the sliding window cropping process occasionally produced labeled defect areas that contained portions of non-defective regions. This effectively introduced mislabeled samples into the dataset, thereby negatively impacting detection performance. Similar issues were also observed during the training of Model 2 and Model 3.

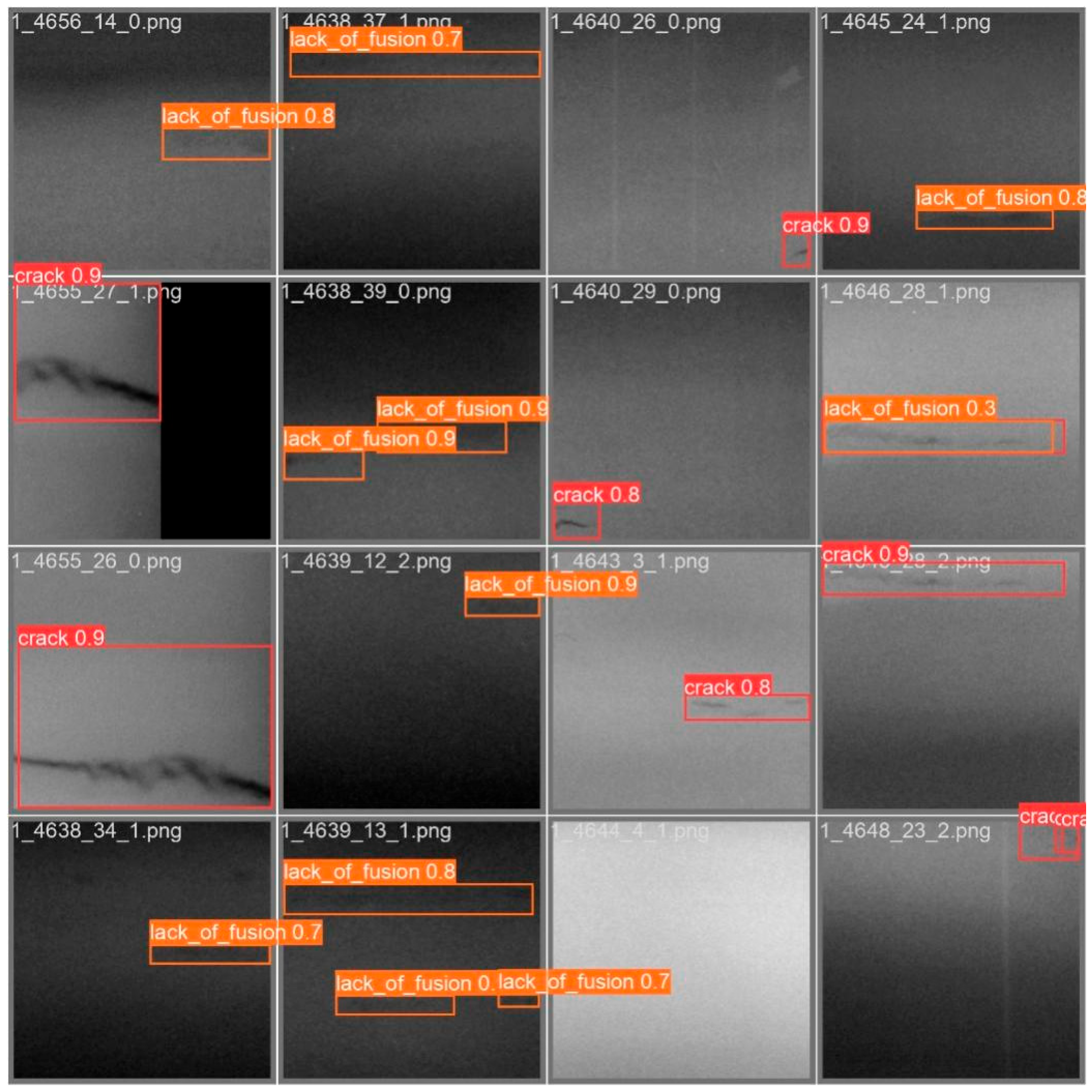

The evaluation results of Model 2, which is designed to detect cracks, undercuts, and lack-of-fusion defects, are presented in Table 5. In comparison to Model 1, Model 2 demonstrates a notable improvement in precision, recall, and mAP, which can be primarily attributed to the larger size of the defects it detects. The detection performance of Model 2 on the validation set is illustrated in Figure 8.

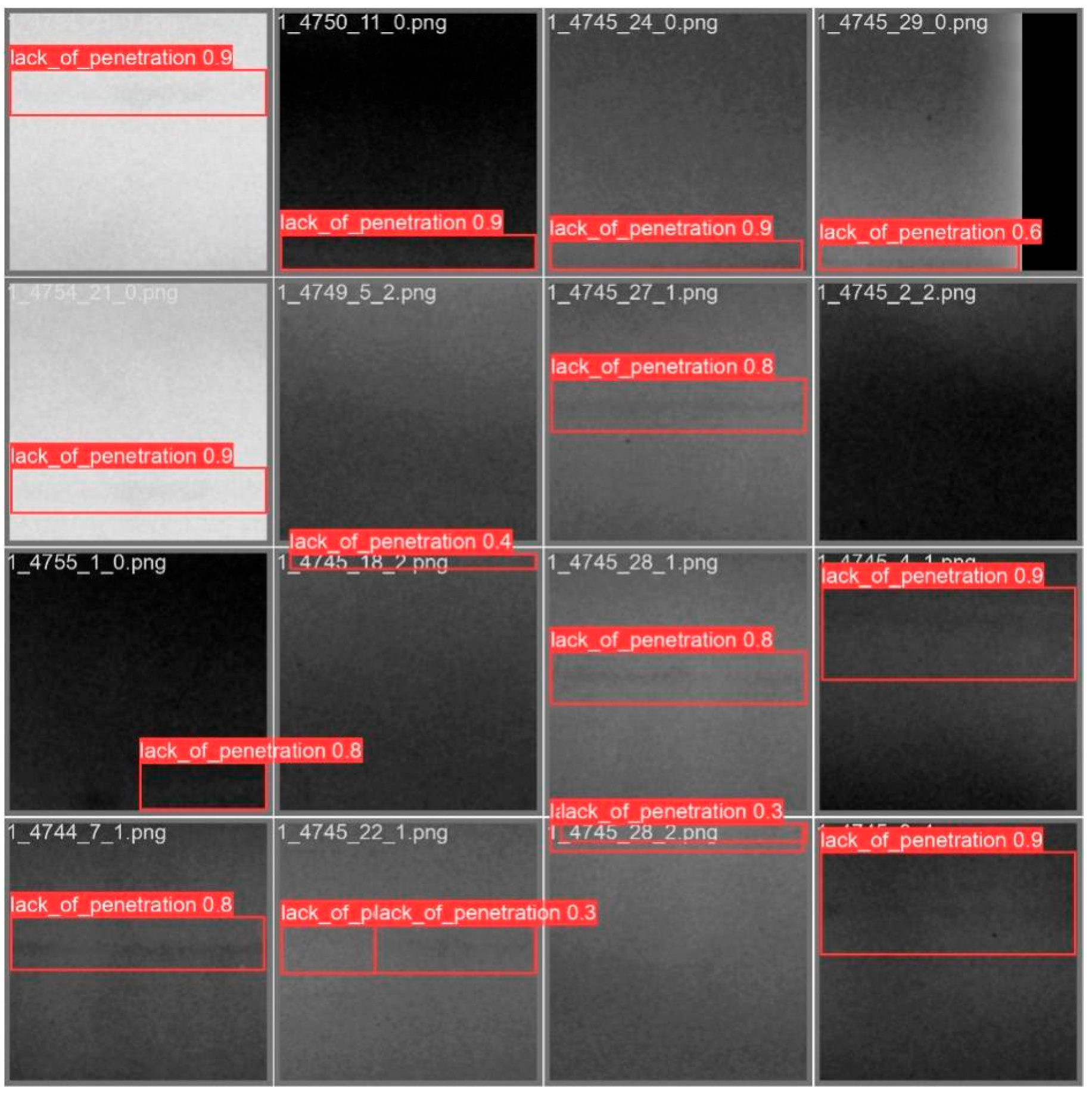

The evaluation results of Model 3, which focuses on detecting lack of penetration and internal concavity, are shown in Table 6. The detection outcomes of Model 3 on the validation set are depicted in Figure 9.

5.3. Tested Performances

In order to further verify the model’s performance, the test dataset was constructed based on the following principles:

- (1) Data from the training set were not included in the test set.

- (2) Two Level II qualified inspectors independently evaluated the radiographs. Only data where their qualitative and quantitative assessments were in agreement were included in the test set.

- (3) The distribution ratio of defect categories and their corresponding quantities in the test set was consistent with that of the training set.

All samples were screened in accordance with RCCM standards, ensuring that the included defects fall within the scope defined by the specification and align with the training requirements. The dataset comprised various defect types, including cracks, lack of penetration, lack of fusion, porosity, slag inclusions, undercuts, and internal concavity. In addition, 200 images without defects were selected to construct a non-defective test dataset. This configuration was employed to evaluate the system’s ability to detect the specified defect types and to assess its performance in weld seam recognition.

Based on engineering practice significance, the performance of the model was assessed using three key metrics: the missed detection rate, false positive rate, and qualitative analysis. The missed detection rate refers to instances in which existing defects are not identified by the system. The false positive rate pertains to cases where defect-free radiographs are incorrectly classified as containing defects. Qualitative analysis assesses the system’s ability to not only detect defects effectively but also to accurately classify their types.

Based on the testing results, the overall missed detection rate is 0%, the false positive rate is 3.5%, and the precision of qualitative defect identification is 92%. The detailed performance is illustrated in Figure 10 and Figure 11 below.
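The two image-level rates defined above can be computed as in the sketch below. The function name and the per-image boolean inputs are assumptions. In the example, only the 200 defect-free images and the reported rates come from the text; the count of 50 defective images is a placeholder, and 7 false alarms is simply the number that would yield 3.5% if the rate was computed over those 200 images.

```python
from typing import Sequence, Tuple


def system_rates(truth_has_defect: Sequence[bool],
                 predicted_has_defect: Sequence[bool]) -> Tuple[float, float]:
    """Return (missed_detection_rate, false_positive_rate) at the image level."""
    if len(truth_has_defect) != len(predicted_has_defect):
        raise ValueError("inputs must have the same length")
    pairs = list(zip(truth_has_defect, predicted_has_defect))
    n_defective = sum(1 for t, _ in pairs if t)
    n_clean = sum(1 for t, _ in pairs if not t)
    missed = sum(1 for t, p in pairs if t and not p)       # defective, not flagged
    false_alarms = sum(1 for t, p in pairs if not t and p)  # clean, flagged
    missed_rate = missed / n_defective if n_defective else 0.0
    false_positive_rate = false_alarms / n_clean if n_clean else 0.0
    return missed_rate, false_positive_rate


# Hypothetical counts: 50 defective images (placeholder) and 200 defect-free images.
truth = [True] * 50 + [False] * 200
pred = [True] * 50 + [True] * 7 + [False] * 193
missed_rate, false_positive_rate = system_rates(truth, pred)
```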

6. Conclusions

Based on deep learning technology, this study developed a defect recognition system for weld X-ray radiographs. The system comprises intelligent labeling and image processing of weld radiographs, a multi-model object detection network, and an intelligent evaluation framework. Utilizing the YOLOv8 algorithm, the system enables the intelligent recognition and localization of defects, such as porosity, cracks, and other imperfections. The development and application of this system support the intelligent maintenance and safe operation of pressure equipment.

Experimental results demonstrate that the optimized multi-model detection approach achieved mAP@0.5 values of 0.51 (Model 1), 0.815 (Model 2), and 0.87 (Model 3). Among them, Model 3 exhibited the best performance, detecting lack of penetration and internal concavity defects with a precision of 0.89 and a recall of 0.79. Furthermore, the overall system testing showed a missed detection rate of 0%, a false positive rate of 3.5%, and a qualitative classification accuracy of 92%, validating the system’s effectiveness and robustness in weld defect detection.

However, the current framework has limitations. It was primarily developed for linear MIG/MAG welds, which exhibit relatively uniform radiographic characteristics. Its applicability to more complex weld types (e.g., spot welds, TIG joints, and laser welds) remains to be validated. In addition, like many deep learning models, interpretability is still limited, which could pose challenges in critical industrial applications requiring high transparency and traceability.

Future work will focus on expanding the system’s applicability to diverse welding configurations and improving detection robustness. Advanced strategies such as focal loss, SMOTE, and class re-weighting will be investigated to further address class imbalance and enhance model performance, particularly for rare defect categories. Furthermore, incorporating explainable artificial intelligence methods is expected to improve the transparency of the detection process. The integration of multi-modal data—combining radiographic images with ultrasonic testing—also holds promise for boosting detection accuracy and reliability in complex industrial scenarios.