Modular Soft Sensor Made of Eutectogel and Its Application in Gesture Recognition

Abstract

1. Introduction

- (1) Most gel surfaces are susceptible to environmental contamination and are unsuitable for direct exposure to air. Gel sensors used for gesture recognition should therefore be encapsulated to protect the gel and prolong its shelf life.

- (2) Sensing in conductive gels usually relies on the signal response to mechanical deformation (e.g., stretching, inflation, compression), which can displace the sensor relative to the measuring circuit. A connection method is needed that not only links the soft material to the rigid circuit but also remains stable during mechanical deformation.

- (3) Gel materials commonly age and gradually lose functionality. The design of a sensor array should therefore allow broken sensor units to be replaced easily without disturbing the functional ones.

- (4) The adhesion between the gel and the surface it is attached to is weak. To secure the mounting, conductive gel sensors are usually embedded in the wearable device as a structurally inseparable part. A reversible mounting and connection strategy is needed to reduce costs during sensor optimization and to enable fast prototyping.

- (1)

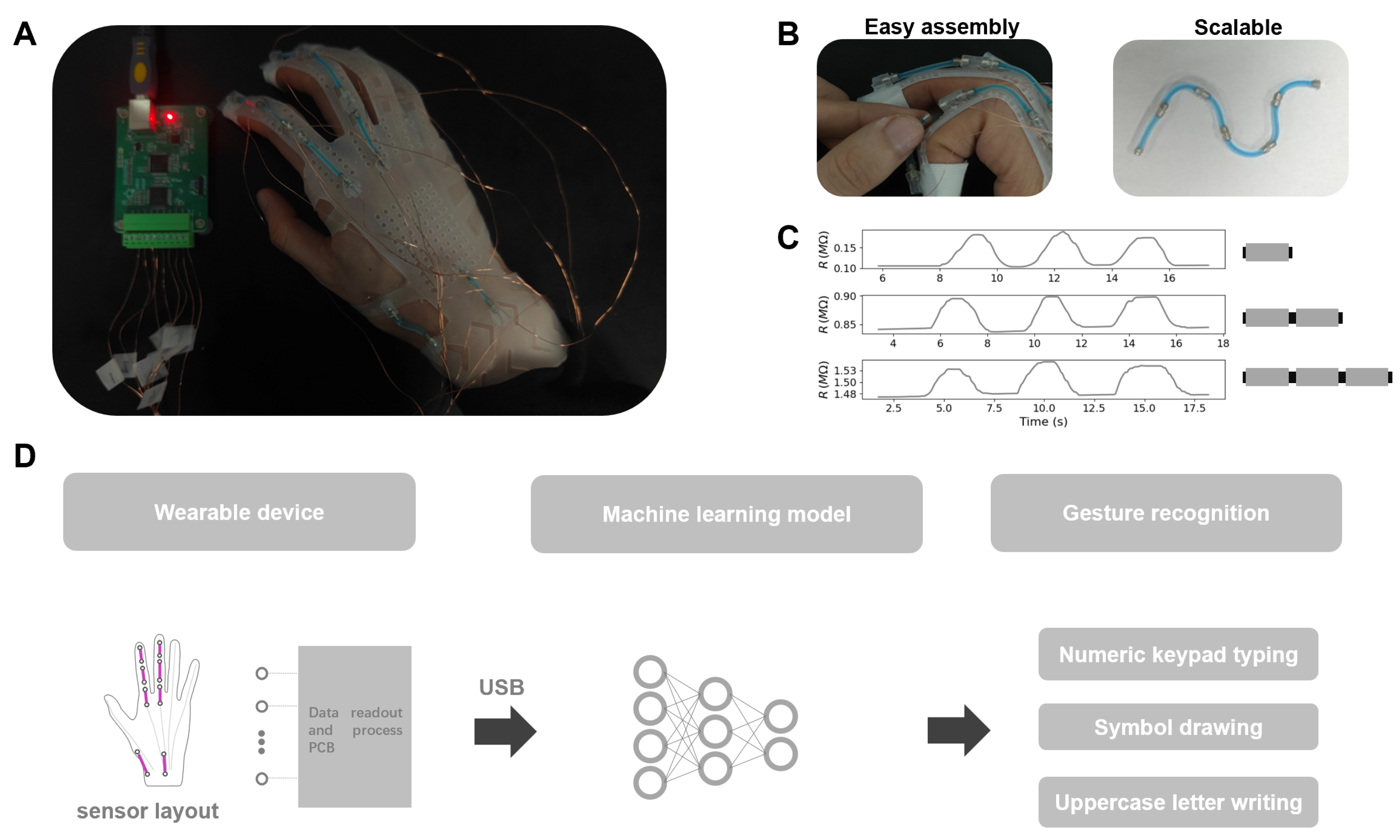

- We presented a novel design for a modular soft sensor unit (M2SU) described as a short, wire-shaped sensory structure composed of eutectogel packed in a sheath with magnetic blocks at both ends. Compared to existing gel sensors, our proposed sensor is more suitable for complex human-machine interaction scenarios. Additionally, the modular sensor has an excellent strain range, high strain sensitivity, and good durability.

- (2)

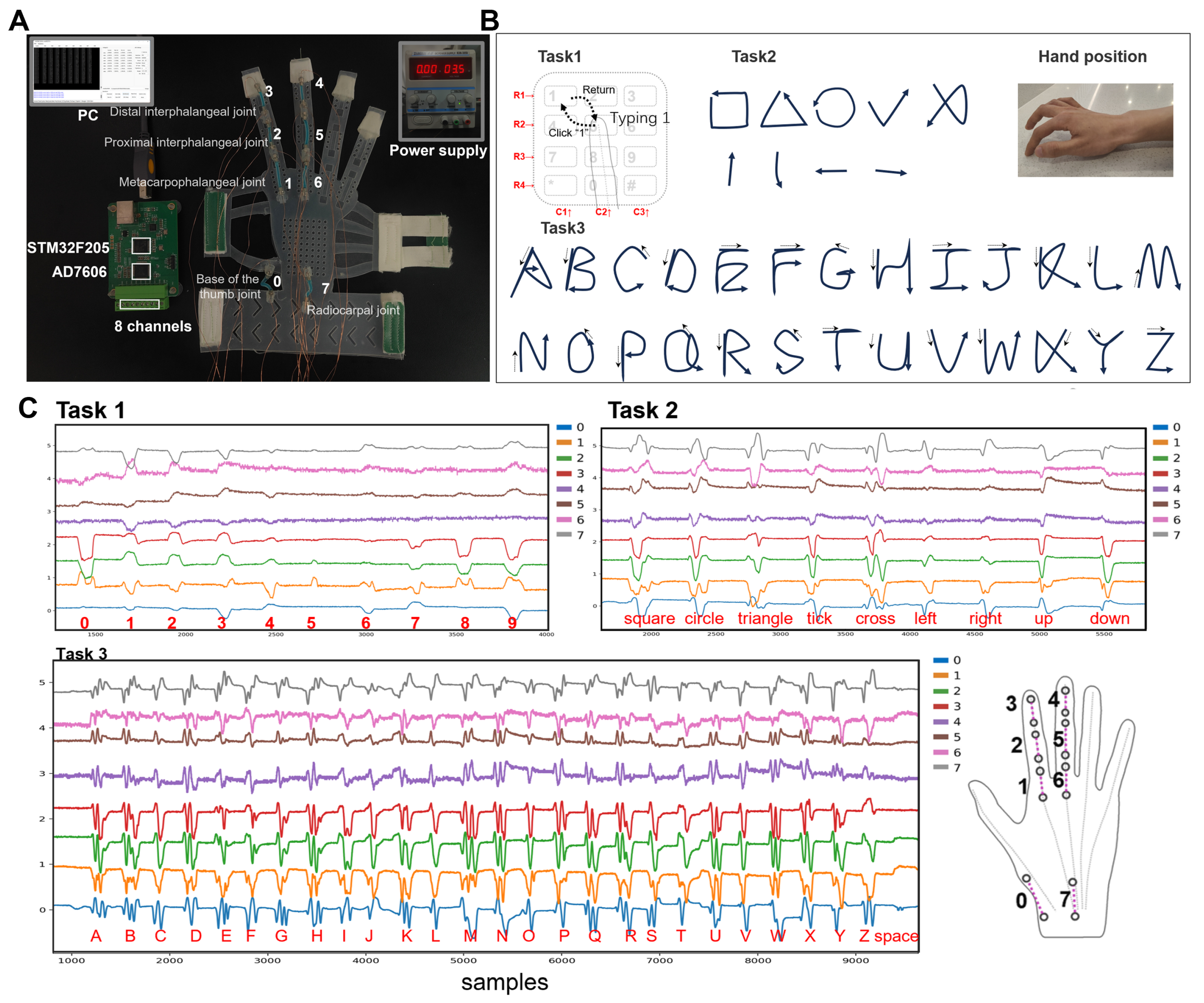

- We constructed a silicone glove with 8 M2SUs for hand motion recognition. The sensory array was reversibly mounted on the wearable device through pre-embedded magnetic blocks. This strategy allows for the modification of the sensing module to be independent of the attached device by simply repositioning the sensor layout.

- (3) We developed a neural network to analyze the data collected with the wearable device during three gesture recognition tasks comprising 46 gestures in total. The network achieved task-specific accuracies of 80.43% (Top 3: 97.68%) for Task 1, 88.58% (Top 3: 96.13%) for Task 2, and 79.87% (Top 3: 91.59%) for Task 3. These results validate the sensing performance of M2SUs for gesture recognition.
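The "Top 3" figures count a prediction as correct when the true gesture is among the three highest-scoring classes. The paper does not include its evaluation code, so the following is only a minimal sketch of top-k accuracy in plain Python, assuming the classifier outputs one score per gesture class; the function name `top_k_accuracy` and the toy scores are illustrative, not taken from the original work.

```python
def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    scores: list of per-sample score lists (one score per gesture class).
    labels: list of true class indices, one per sample.
    """
    if not labels:
        raise ValueError("need at least one sample")
    hits = 0
    for row, label in zip(scores, labels):
        # Rank class indices by descending score and keep the first k.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Toy example: 3 samples, 4 classes (made-up scores, not data from the paper).
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true class 1 is ranked first
    [0.5, 0.1, 0.3, 0.1],  # true class 2 is ranked second
    [0.4, 0.3, 0.2, 0.1],  # true class 3 is ranked last
]
labels = [1, 2, 3]
print(top_k_accuracy(scores, labels, k=1))  # top-1: 1 of 3 samples
print(top_k_accuracy(scores, labels, k=3))  # top-3: 2 of 3 samples
```

Top-k accuracy is always at least as high as top-1 accuracy, which is why the Top 3 values exceed the headline accuracies for every task.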

2. Related Works

2.1. Gesture Recognition with Flexible Sensors

2.2. Wearable Perception with Gel Materials

3. Fabrication and Characterization of M2SU

3.1. Fabrication

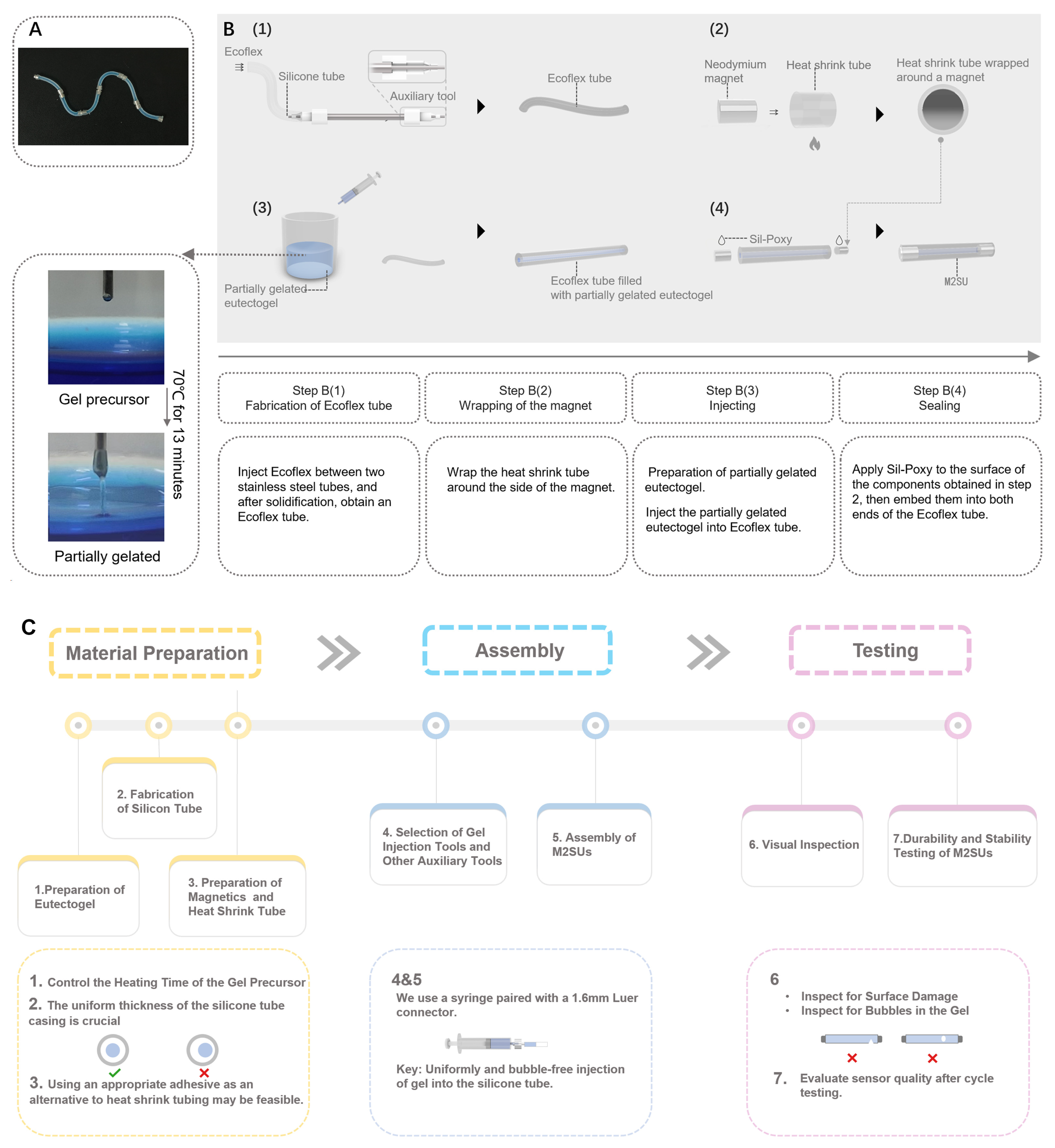

- Step 1: The Ecoflex silicone tube was fabricated with a 3D-printed auxiliary tool that aligned a stainless steel tube of 2.4 mm inner diameter with a stainless steel tube of 1 mm outer diameter. Ecoflex was then injected into the gap between the two tubes and allowed to cure, yielding the silicone tube.

- Step 2: The magnet electrodes were encased in heat-shrink tubing. This step was necessary because Sil-Poxy bonds well to polymers and silicone but adheres poorly when joining metal to Ecoflex. The seal of the gel sensor therefore relied on the friction fit between the magnet and the heat-shrink tubing, while the tubing itself was bonded to the silicone shell with Sil-Poxy.

- Step 3: The eutectogel was constructed from a deep eutectic solvent (DES) of choline chloride, ethylene glycol, and urea (ChCl–EG–urea), a zwitterionic sulfobetaine, and Zn(ClO4)2. It was prepared following the method described in a previous publication [29]. Because fully gelated eutectogel proved difficult to inject into slender tubes with a 1 mm inner diameter, we heated the gel precursor to a partially gelated state, which allowed it to be injected into the silicone tube with a syringe.

- Step 4: Neodymium magnets were used to seal both ends of the sensor. At each end, the magnet, heat-shrink tubing, and Ecoflex formed a tightly joined three-layer structure that prevents gel leakage.
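The tube dimensions in Steps 1 and 3 fix the geometry of the gel channel. As a back-of-the-envelope sketch, the snippet below derives the silicone wall thickness, the gel volume, and the strain resolution implied by the 20 mm sensor length and 0.1 mm resolution listed in the comparison table at the end of the article. It assumes that the gel fills the 1 mm bore over the full 20 mm length, which the text does not state explicitly.

```python
import math

# Step 1: Ecoflex is cast between a steel tube of 2.4 mm inner diameter
# (sets the silicone outer diameter) and a steel tube of 1 mm outer diameter (sets the bore).
OUTER_DIAMETER_MM = 2.4
BORE_DIAMETER_MM = 1.0
# Assumption: the gel channel spans the full 20 mm sensor length from the comparison table.
SENSOR_LENGTH_MM = 20.0
# Length resolution listed in the comparison table.
LENGTH_RESOLUTION_MM = 0.1

wall_thickness_mm = (OUTER_DIAMETER_MM - BORE_DIAMETER_MM) / 2
gel_volume_mm3 = math.pi * (BORE_DIAMETER_MM / 2) ** 2 * SENSOR_LENGTH_MM  # 1 mm^3 = 1 uL
strain_resolution = LENGTH_RESOLUTION_MM / SENSOR_LENGTH_MM

print(f"wall thickness   : {wall_thickness_mm:.2f} mm")   # 0.70 mm
print(f"gel volume       : {gel_volume_mm3:.1f} mm^3")    # 15.7 mm^3
print(f"strain resolution: {strain_resolution:.1%}")      # 0.5%
```

Under these assumptions each sensor holds only about 16 µL of gel, which is consistent with the need to inject a partially gelated precursor by syringe rather than handle a fully formed gel.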

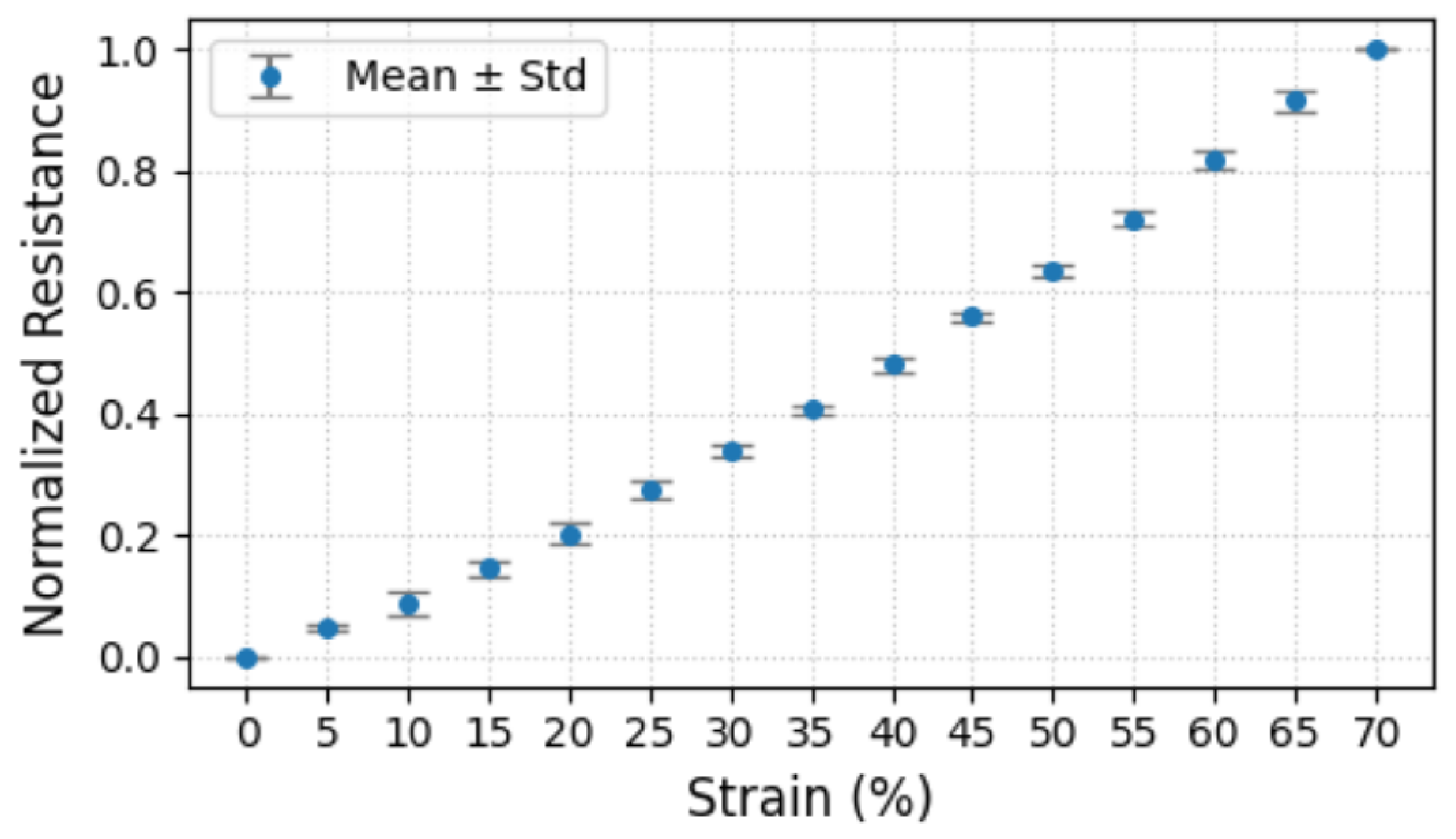

3.2. Characterization

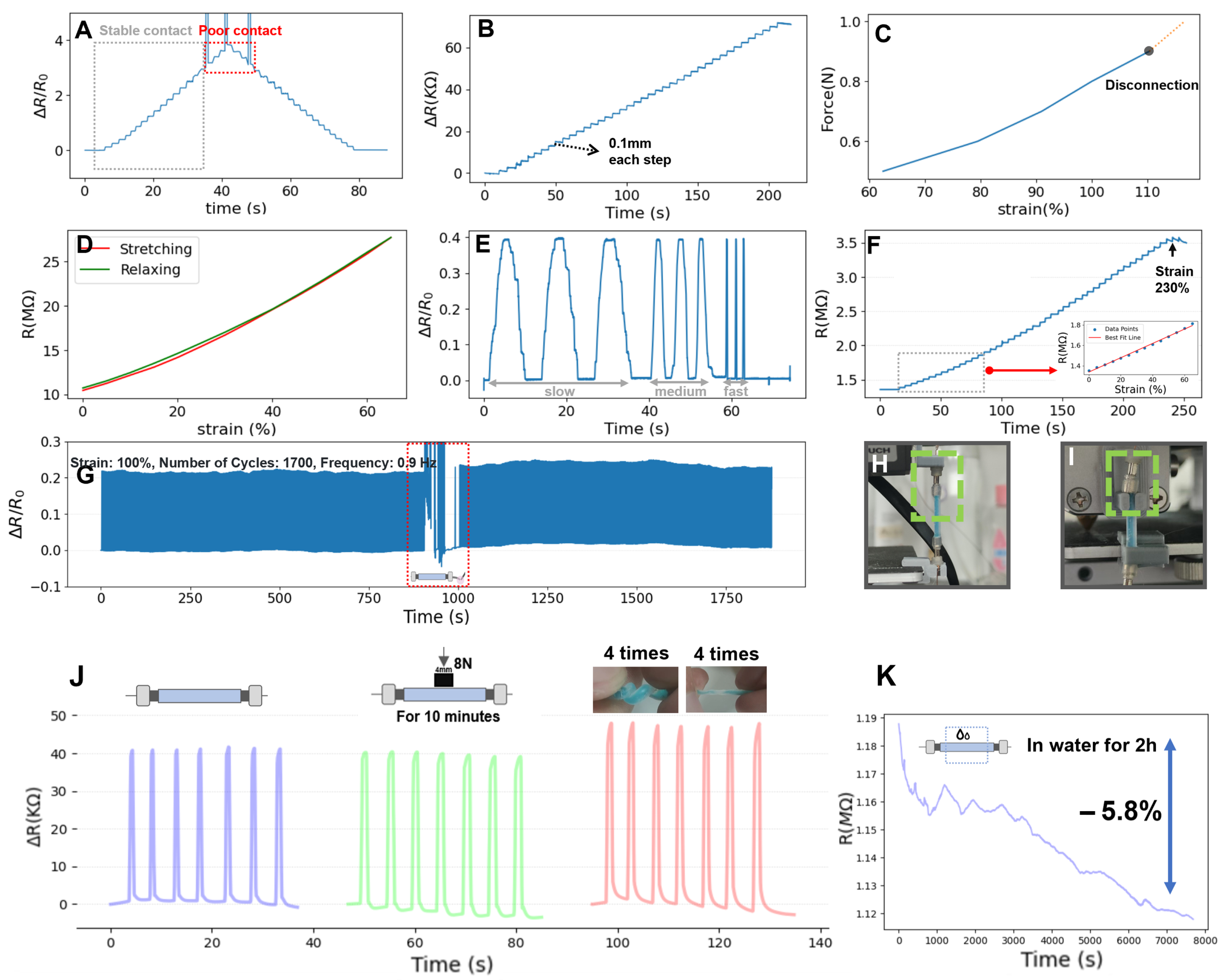

- Press-Twist Experiment of M2SU. Flexible sensors are frequently compressed and twisted during human-machine interaction. In the compression test, we applied an 8 N force with a rigid indenter to a 4 mm central region of the sensor for 10 min, followed by a cyclic strain test at 70% strain. Figure 3J shows that the sensor’s initial resistance changed slightly after pressing and then stabilized over multiple cycles. In the twisting experiment, the sensor was twisted 8 times; this produced a larger relative resistance change than in the untwisted state, but the response remained consistent over multiple tensile cycles.

- Waterproof Experiment of M2SU. The eutectogel is encapsulated in Ecoflex silicone, which waterproofs the sensor everywhere except at its ends. Figure 3K shows the sensor’s resistance change during 2 h of water immersion. The resistance decreased by 5.8%, indicating that short-term water exposure has minimal impact.

- M2SU exhibits a good strain range and excellent durability. When connected magnetically, the sensor’s usable strain range of 0–80% is well suited to sensing gesture motion, while the 230% strain limit provides a margin against tensile damage. Furthermore, 1700 stretch/release cycles at 100% strain demonstrate the durability of M2SU.

- The design of M2SU emphasizes practical application in human-machine interaction. The magnetic blocks at both ends allow the sensor to be easily assembled and reversibly mounted directly on a wearable device in situ. We also investigated the effects of pressing and twisting on M2SU and tested its waterproofing. These results further demonstrate the practical value of M2SU in real-world human-machine interaction scenarios.
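The press, twist, and immersion results are expressed as relative resistance changes, and the comparison table reports a gauge factor of 3.4. The sketch below shows one common way to compute both quantities. Fitting the slope of ΔR/R0 against strain through the origin is an assumed convention, since the paper does not state how its gauge factor was obtained, and the readings used here are synthetic.

```python
def relative_change(r, r0):
    """Relative resistance change (R - R0) / R0."""
    return (r - r0) / r0


def gauge_factor(strains, resistances):
    """Slope of dR/R0 versus strain from a least-squares fit through the origin.

    strains: engineering strains as fractions (0.5 means 50 %); strains[0] should be 0.
    resistances: resistances measured at those strains; resistances[0] is taken as R0.
    """
    r0 = resistances[0]
    dr = [relative_change(r, r0) for r in resistances]
    num = sum(e * y for e, y in zip(strains, dr))
    den = sum(e * e for e in strains)
    if den == 0:
        raise ValueError("need at least one non-zero strain")
    return num / den


# Synthetic readings (ohms) for a perfectly linear sensor with GF = 3.4.
strains = [0.0, 0.1, 0.2]
readings = [100.0, 134.0, 168.0]
print(round(gauge_factor(strains, readings), 2))  # 3.4

# A 5.8 % drop, as in the water-immersion test, is a relative change of about -0.058.
print(relative_change(94.2, 100.0))
```

In practice the fit would be restricted to the linear region (0–65% strain according to the comparison table), because a single slope does not describe the response beyond it.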

4. Gesture Recognition Based on M2SUs

4.1. Three Gesture Tasks

- Task 1: The forearm remains stationary throughout each gesture. The index finger starts at the position of the number ‘5’, moves toward the target number, clicks on it, and then returns to the starting position. No physical keyboard guides the finger’s movement during execution; participants rely on proprioception instead. For instance, to enter ‘1’, a participant guides the finger to click on ‘1’ by proprioception alone and then returns to ‘5’.

- Tasks 2 and 3: The palm is positioned as in Task 1. In Task 2, the gestures are drawing a square, a triangle, a circle, a tick, and a cross, and moving up, down, left, and right. In Task 3, the gestures are writing each of the 26 uppercase letters, plus a ‘space’ gesture performed by tapping the desktop with the thumb. After each gesture, the index fingertip returns to the initial position.
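The gesture vocabularies of the three tasks can be written down as label lists, which also checks the total of 46 gestures. This is a hypothetical encoding: the text does not list the Task 1 targets explicitly, so the sketch assumes they are the ten digits 0 to 9 (including ‘5’ itself), which is the assumption under which 10 + 9 + 27 = 46.

```python
import string

# Hypothetical label sets reconstructed from the task descriptions.
# Assumption: Task 1 covers the ten digits 0-9, including the '5' home position.
TASK1 = [str(d) for d in range(10)]
TASK2 = ["square", "triangle", "circle", "tick", "cross", "up", "down", "left", "right"]
TASK3 = list(string.ascii_uppercase) + ["space"]

TASKS = {"Task 1": TASK1, "Task 2": TASK2, "Task 3": TASK3}

# One global class index per gesture, prefixed by task so names can never collide.
ALL_LABELS = [f"{task}:{g}" for task, gestures in TASKS.items() for g in gestures]
LABEL_TO_INDEX = {name: i for i, name in enumerate(ALL_LABELS)}

for task, gestures in TASKS.items():
    print(task, len(gestures))  # 10, 9, 27
print("total", len(ALL_LABELS))  # 46
```

A single combined index of this kind is one way to set up the mixed-task scenario discussed below, in which one classifier must separate all 46 gestures; whether the authors' network used it is not stated.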

4.2. Dataset

4.3. Data Processing

4.4. Evaluation

4.4.1. Gesture Recognition Performance

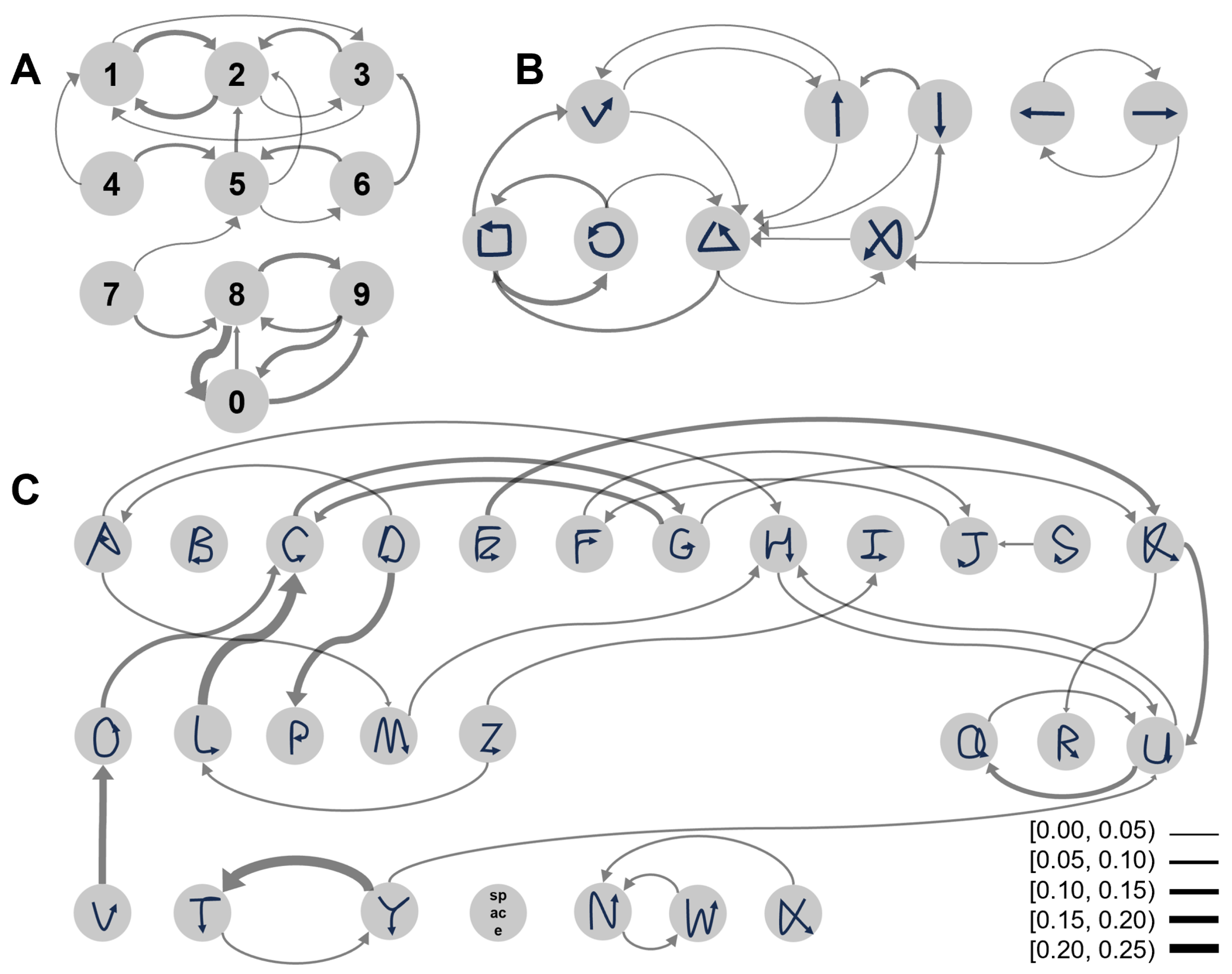

- Classification errors occur primarily between similar gestures. Figure A1 shows the confusion matrices of the recognition results for the three tasks. To visualize the similarities between confusable gestures more intuitively, we derived a confusion map from the confusion matrix. In Figure A2, arrows link each gesture to the two gestures most frequently confused with it. The confusion map reveals that in Task 1, gestures for adjacent numbers (“1–2–3” and “8–9–0”) are particularly prone to confusion, because participants rely on proprioceptive feedback for number selection, which limits precise finger positioning. In Tasks 2 and 3, confusion arises mainly from the inherent similarity of some symbols (e.g., “square” vs. “circle”, “C” vs. “L”) and from the requirement that each gesture return to the starting position. Although this requirement facilitates gesture segmentation, it adds extra movement that makes otherwise dissimilar gestures, such as “V” and “O”, appear more similar.

- In the mixed-task recognition scenario, the accuracy for each task is lower than when each task is trained separately. When training on a mixed dataset of all three tasks, the recognition accuracies were 78.47% (Task 1), 85.45% (Task 2), and 74.23% (Task 3), compared with 80.43%, 88.58%, and 79.87%, respectively, when each task was trained separately. A larger gesture vocabulary thus tends to increase confusion between gestures.

- Recognition results on individual participants’ datasets varied considerably (Figure 7C). Figure 7D shows the average accuracy for each task across all participants, with 95% confidence intervals. For Task 1, the best accuracy was 98.75% (id2) and the worst 38.74% (id9); for Task 2, the best was 100% (id7) and the worst 46.29% (id9); for Task 3, the best was 86.11% (id9) and the worst 54.20% (id6). Data collection took 50 min per participant on average. Participants id2 and id7 had 20 min more practice time than the others, which contributed to their superior results.
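The confusion map of Figure A2 links each gesture to the two gestures it is most often confused with. A minimal sketch of that extraction from a confusion matrix follows, assuming rows are true gestures and columns are predictions, so that "confused with" means "misclassified as"; a symmetric variant would add `matrix[j][i]` to each count. The function name and the toy counts are illustrative only.

```python
def confusion_map(matrix, labels, top_n=2):
    """Map each true gesture to the top_n gestures it is most often misclassified as.

    matrix[i][j] counts samples of true class i predicted as class j
    (rows = true gestures, columns = predictions, diagonal = correct).
    Gestures that are never misclassified map to an empty list.
    """
    result = {}
    for i, row in enumerate(matrix):
        # Non-zero off-diagonal counts as (count, predicted index) pairs.
        errors = [(row[j], j) for j in range(len(row)) if j != i and row[j] > 0]
        # Stable sort: ties keep column order.
        errors.sort(key=lambda item: item[0], reverse=True)
        result[labels[i]] = [labels[j] for _, j in errors[:top_n]]
    return result


# Toy counts for four Task 1 digits (invented for illustration).
example_labels = ["1", "2", "3", "5"]
example_matrix = [
    [8, 3, 1, 0],
    [2, 7, 2, 1],
    [0, 4, 6, 0],
    [0, 0, 0, 10],
]
for gesture, confused in confusion_map(example_matrix, example_labels).items():
    print(gesture, "->", confused)
```

Each entry of the returned dictionary corresponds to the outgoing arrows of one node in such a map.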

4.4.2. Sensors Layout Evaluation

- The optimal sensor layout can differ between tasks. Task 2 achieved its highest recognition accuracy (88.58%) with sensors P2 + P3 + P4, whereas Tasks 1 and 3 reached their highest accuracies (80.43% and 79.87%, respectively) with sensors P1 + P2 + P3.

- Movements of a non-gesturing finger (P4), arising from the complex biomechanics of the hand, can be exploited for gesture recognition. During gesture data collection, the middle finger (P4) was unconstrained and moved according to each participant’s habits. For Task 2, accuracy reached 88.58% with P2 + P3 + P4 and 88.12% with P1 + P2 + P3, so the sensors on P1 and P4 are about equally important for Task 2.

- Adding more sensors does not always improve recognition accuracy. With the P1 + P2 + P3 layout, Tasks 2 and 3 achieved accuracies of 88.12% and 79.87%, respectively; adding the P4 sensors to this layout reduced them to 85.35% and 78.43%.
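The layout comparison amounts to evaluating accuracy over subsets of the four sensor positions. The sketch below enumerates all 15 non-empty subsets of P1 to P4 and picks the best-scoring one from a table of results, populated only with the Task 2 accuracies reported above. The paper does not state whether every subset was evaluated; in practice each accuracy would come from retraining and testing the network on that layout, which is not shown here.

```python
from itertools import combinations

POSITIONS = ("P1", "P2", "P3", "P4")


def candidate_layouts(positions=POSITIONS, min_size=1):
    """Yield every subset of sensor positions with at least min_size members."""
    for size in range(min_size, len(positions) + 1):
        for combo in combinations(positions, size):
            yield frozenset(combo)


def best_layout(accuracy_by_layout):
    """Return the (layout, accuracy) pair with the highest accuracy."""
    return max(accuracy_by_layout.items(), key=lambda item: item[1])


# Task 2 accuracies (%) reported in the text; other layouts are not listed there.
task2 = {
    frozenset({"P2", "P3", "P4"}): 88.58,
    frozenset({"P1", "P2", "P3"}): 88.12,
    frozenset({"P1", "P2", "P3", "P4"}): 85.35,
}
layout, acc = best_layout(task2)
print(sorted(layout), acc)                  # ['P2', 'P3', 'P4'] 88.58
print(sum(1 for _ in candidate_layouts()))  # 15
```

With only four positions an exhaustive search is cheap (2^4 - 1 = 15 layouts), which makes it a natural way to expose effects such as the drop in accuracy when P4 is added to P1 + P2 + P3.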

4.5. Ablation Study

5. Discussion

5.1. Limitations

5.2. Potential Application

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| M2SU | modular soft sensor unit |

Appendix A

References

- Streli, P.; Armani, R.; Cheng, Y.F.; Holz, C. HOOV: Hand Out-Of-View Tracking for Proprioceptive Interaction using Inertial Sensing. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–16.

- Meier, M.; Streli, P.; Fender, A.; Holz, C. TapID: Rapid Touch Interaction in Virtual Reality using Wearable Sensing. In Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March–1 April 2021; pp. 519–528.

- Li, J.; Huang, L.; Shah, S.; Jones, S.J.; Jin, Y.; Wang, D.; Russell, A.; Choi, S.; Gao, Y.; Yuan, J.; et al. SignRing: Continuous American Sign Language Recognition Using IMU Rings and Virtual IMU Data. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2023, 7, 1–29.

- Sharma, A.; Salchow-Hömmen, C.; Mollyn, V.S.; Nittala, A.S.; Hedderich, M.A.; Koelle, M.; Seel, T.; Steimle, J. SparseIMU: Computational Design of Sparse IMU Layouts for Sensing Fine-grained Finger Microgestures. ACM Trans. Comput.-Hum. Interact. 2023, 30, 39:1–39:40.

- McIntosh, J.; Marzo, A.; Fraser, M. SensIR: Detecting Hand Gestures with a Wearable Bracelet using Infrared Transmission and Reflection. In Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, Quebec City, QC, Canada, 22–25 October 2017; pp. 593–597.

- Xu, C.; Zhou, B.; Krishnan, G.; Nayar, S. AO-Finger: Hands-free Fine-grained Finger Gesture Recognition via Acoustic-Optic Sensor Fusing. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–14.

- Luo, Y.; Abidian, M.R.; Ahn, J.H.; Akinwande, D.; Andrews, A.M.; Antonietti, M.; Bao, Z.; Berggren, M.; Berkey, C.A.; Bettinger, C.J.; et al. Technology Roadmap for Flexible Sensors. ACS Nano 2023, 17, 5211–5295.

- Liu, D.; Huyan, C.; Wang, Z.; Guo, Z.; Zhang, X.; Torun, H.; Mulvihill, D.; Bin Xu, B.; Chen, F. Conductive polymer based hydrogels and their application in wearable sensors: A review. Mater. Horiz. 2023, 10, 2800–2823.

- Park, K.; Yuk, H.; Yang, M.; Cho, J.; Lee, H.; Kim, J. A biomimetic elastomeric robot skin using electrical impedance and acoustic tomography for tactile sensing. Sci. Robot. 2022, 7, eabm7187.

- Ying, B.; Wu, Q.; Li, J.; Liu, X. An ambient-stable and stretchable ionic skin with multimodal sensation. Mater. Horiz. 2020, 7, 477–488.

- Sundaram, S.; Kellnhofer, P.; Li, Y.; Zhu, J.Y.; Torralba, A.; Matusik, W. Learning the signatures of the human grasp using a scalable tactile glove. Nature 2019, 569, 698–702.

- Luo, Y.; Li, Y.; Sharma, P.; Shou, W.; Wu, K.; Foshey, M.; Li, B.; Palacios, T.; Torralba, A.; Matusik, W. Learning human–environment interactions using conformal tactile textiles. Nat. Electron. 2021, 4, 193–201.

- Paredes, L.; Ipsita, A.; Mesa, J.C.; Martinez Garrido, R.V.; Ramani, K. StretchAR: Exploiting Touch and Stretch as a Method of Interaction for Smart Glasses Using Wearable Straps. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6, 1–26.

- Tang, L.; Shang, J.; Jiang, X. Multilayered electronic transfer tattoo that can enable the crease amplification effect. Sci. Adv. 2021, 7, eabe3778.

- Röddiger, T.; Beigl, M.; Wolffram, D.; Budde, M.; Sun, H. PDMSkin: On-Skin Gestures with Printable Ultra-Stretchable Soft Electronic Second Skin. In Proceedings of the Augmented Humans International Conference, Kaiserslautern, Germany, 16–17 March 2020; pp. 1–9.

- Zhou, Z.; Chen, K.; Li, X.; Zhang, S.; Wu, Y.; Zhou, Y.; Meng, K.; Sun, C.; He, Q.; Fan, W.; et al. Sign-to-speech translation using machine-learning-assisted stretchable sensor arrays. Nat. Electron. 2020, 3, 571–578.

- Wen, F.; Zhang, Z.; He, T.; Lee, C. AI enabled sign language recognition and VR space bidirectional communication using triboelectric smart glove. Nat. Commun. 2021, 12, 5378.

- Zhu, M.; Sun, Z.; Lee, C. Soft Modular Glove with Multimodal Sensing and Augmented Haptic Feedback Enabled by Materials’ Multifunctionalities. ACS Nano 2022, 16, 14097–14110.

- Kim, K.K.; Kim, M.; Pyun, K.; Kim, J.; Min, J.; Koh, S.; Root, S.E.; Kim, J.; Nguyen, B.N.T.; Nishio, Y.; et al. A substrate-less nanomesh receptor with meta-learning for rapid hand task recognition. Nat. Electron. 2022, 6, 64–75.

- Paredes, L.; Reddy, S.S.; Chidambaram, S.; Vagholkar, D.; Zhang, Y.; Benes, B.; Ramani, K. FabHandWear: An End-to-End Pipeline from Design to Fabrication of Customized Functional Hand Wearables. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 1–22.

- Xia, S.; Song, S.; Jia, F.; Gao, G. A flexible, adhesive and self-healable hydrogel-based wearable strain sensor for human motion and physiological signal monitoring. J. Mater. Chem. B 2019, 7, 4638–4648.

- Liu, W.; Xie, R.; Zhu, J.; Wu, J.; Hui, J.; Zheng, X.; Huo, F.; Fan, D. A temperature responsive adhesive hydrogel for fabrication of flexible electronic sensors. npj Flex. Electron. 2022, 6, 68.

- Xiong, X.; Chen, Y.; Wang, Z.; Liu, H.; Le, M.; Lin, C.; Wu, G.; Wang, L.; Shi, X.; Jia, Y.G.; et al. Polymerizable rotaxane hydrogels for three-dimensional printing fabrication of wearable sensors. Nat. Commun. 2023, 14, 1331.

- Chun, K.Y.; Seo, S.; Han, C.S. A Wearable All-Gel Multimodal Cutaneous Sensor Enabling Simultaneous Single-Site Monitoring of Cardiac-Related Biophysical Signals. Adv. Mater. 2022, 34, 2110082.

- Hansen, B.B.; Spittle, S.; Chen, B.; Poe, D.; Zhang, Y.; Klein, J.M.; Horton, A.; Adhikari, L.; Zelovich, T.; Doherty, B.W.; et al. Deep Eutectic Solvents: A Review of Fundamentals and Applications. Chem. Rev. 2021, 121, 1232–1285.

- Wu, J.; Teng, X.; Liu, L.; Cui, H.; Li, X. Eutectogel-based self-powered wearable sensor for health monitoring in harsh environments. Nano Res. 2024, 17, 5559–5568.

- Xu, X.; Li, Z.; Hu, M.; Zhao, W.; Dong, S.; Sun, J.; He, P.; Yang, J. High Sensitivity and Antifreeze Silver Nanowire/Eutectic Gel Strain Sensor for Human Motion and Healthcare Monitoring. IEEE Sens. J. 2024, 24, 5928–5935.

- Deng, M.; Fan, F.; Wei, X. Learning-Based Object Recognition via a Eutectogel Electronic Skin Enabled Soft Robotic Gripper. IEEE Robot. Autom. Lett. 2023, 8, 7424–7431.

- Wu, Y.; Deng, Y.; Zhang, K.; Wang, Y.; Wang, L.; Yan, L. A flexible and highly ion conductive polyzwitterionic eutectogel for quasi-solid state zinc ion batteries with efficient suppression of dendrite growth. J. Mater. Chem. A 2022, 10, 17721–17729.

- Rahman, M.S.; Huddy, J.E.; Hamlin, A.B.; Scheideler, W.J. Broadband mechanoresponsive liquid metal sensors. npj Flex. Electron. 2022, 6, 1–8.

- Zheng, L.; Zhu, M.; Wu, B.; Li, Z.; Sun, S.; Wu, P. Conductance-stable liquid metal sheath-core microfibers for stretchy smart fabrics and self-powered sensing. Sci. Adv. 2021, 7, eabg4041.

- Wei, C.; Yuan, J.; Zhang, Y.; Zhu, R. Stretchable PEDOT:PSS-Printed Fabric Strain Sensor for Human Movement Monitoring and Recognition. In Proceedings of the 2023 22nd International Conference on Solid-State Sensors, Actuators and Microsystems (Transducers), Kyoto, Japan, 25–29 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 788–791.

- Chen, B.; Liu, G.; Wu, M.; Cao, Y.; Zhong, H.; Shen, J.; Ye, M. Liquid Metal-Based Organohydrogels for Wearable Flexible Electronics. Adv. Mater. Technol. 2023, 8, 2201919.

- Cai, Y.; Shen, J.; Yang, C.W.; Wan, Y.; Tang, H.L.; Aljarb, A.A.; Chen, C.; Fu, J.H.; Wei, X.; Huang, K.W.; et al. Mixed-dimensional MXene-hydrogel heterostructures for electronic skin sensors with ultrabroad working range. Sci. Adv. 2020, 6, eabb5367.

- Tashakori, A.; Jiang, Z.; Servati, A.; Soltanian, S.; Narayana, H.; Le, K.; Nakayama, C.; Yang, C.l.; Wang, Z.J.; Eng, J.J.; et al. Capturing complex hand movements and object interactions using machine learning-powered stretchable smart textile gloves. Nat. Mach. Intell. 2024, 6, 106–118.

- Xie, Z.; Chen, Z.; Hu, X.; Mi, H.Y.; Zou, J.; Li, H.; Liu, Y.; Zhang, Z.; Shang, Y.; Jing, X. Ultrastretchable, self-healable and adhesive composite organohydrogels with a fast response for human–machine interface applications. J. Mater. Chem. C 2022, 10, 8266–8277.

- Iwana, B.K.; Uchida, S. An empirical survey of data augmentation for time series classification with neural networks. PLoS ONE 2021, 16, e0254841.

| Ref. | Materials | Sensing Range (%) | Gauge Factor | Hysteresis | Cycle Test | Characteristics | Applications |
|---|---|---|---|---|---|---|---|
| [16] | Stainless steel, rubber, polyester, PDMS | 90 | NA | NA | 6000 cycles (0–70% strain) | Low cost; skin mountability | 11 sign language gestures |
| [30] | PDMS, EGaIn | 70 | NA | Low | NA | AC-enhanced liquid metal sensors | Knuckle flexion detection; respiration tracking |
| [31] | Fluoroelastomer, EGaIn nanoparticles | 1170 | NA | NA | 600 cycles (0–100% strain) | Highly conductive and stretchable; resistance change of only 4% at 200% strain | Glove/fabric integration for wearable sensors; self-powered wearable sensors |
| [32] | Polyester-rubber fabric, PEDOT:PSS | 45 | 4.5 | 2% | 10,000 cycles (0–30% strain) | Good linearity (0.98); high sensitivity (GF = 4.5); low hysteresis (2%) | Finger movement monitoring |
| [33] | Ga liquid metal, acrylamide, NaCl, ammonium persulfate, glycerol | 0.1–1000 | 1.2 (0–100% strain); 5.8 (100–1000% strain) | None observed | 4000 cycles (0–10% strain) | Wide temperature range (−20 °C to 100 °C); low strain detection limit (0.1%); self-healing | Finger bending detection |
| [34] | VSNP-PAM hydrogel, PpyNWs, d-Ti3C2Tx MXene layers | 2800 | 16.9 (x direction) and 11.2 (y direction) at 1500% strain | ≤10% | 1000 cycles (0–800% strain, 0.12 Hz) | Tunable sensing mechanisms: tensile (strain) sensing and capacitive sensing (using two strips of MXene-PpyNW-VSNP-PAM stretchable e-skins) | Monitoring of stretching motions in multiple dimensions; tactile pressure and proximity sensing |
| [35] | Spandex, PDMS, Au, polyacrylonitrile NFs | 0.005–155 | NA | NA | >35,000 cycles (0–10% strain, 0.7 Hz) | Low hysteresis; high stability during extensive use and washing cycles | Detection of 48 static and 50 dynamic gestures; typing on a random surface such as a mock keyboard; object recognition from grasp pose and forces |
| [36] | Composite organohydrogels | 1350 | 3.19 | NA | 800 cycles (0–50% strain) | Self-healing; wide temperature detection range (−40 °C to 60 °C); high sensitivity (GF = 3.19 at 0–500% strain) | Detection of human movements such as finger bending, wrist bending, and facial movements |
| This work | Eutectogel, Ecoflex 00-10, neodymium magnets, etc. | 80 (magnetically connected); 230 (maximum) | 3.4 (decreases as the eutectogel ages) | ≤5% | 1700 cycles (0–100% strain, 0.9 Hz) | Modular design for HCI; good linearity (0–65% strain); strain sensitive (sensor length 20 mm, resolution 0.1 mm); low cost; high reliability (tolerates pressing and twisting; waterproof except at the magnet ends) | Three types of dynamic gesture input described in this paper |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fan, F.; Deng, M.; Wei, X. Modular Soft Sensor Made of Eutectogel and Its Application in Gesture Recognition. Biosensors 2025, 15, 339. https://doi.org/10.3390/bios15060339