Motion Compensation for 3D Multispectral Handheld Photoacoustic Imaging

Abstract

1. Introduction

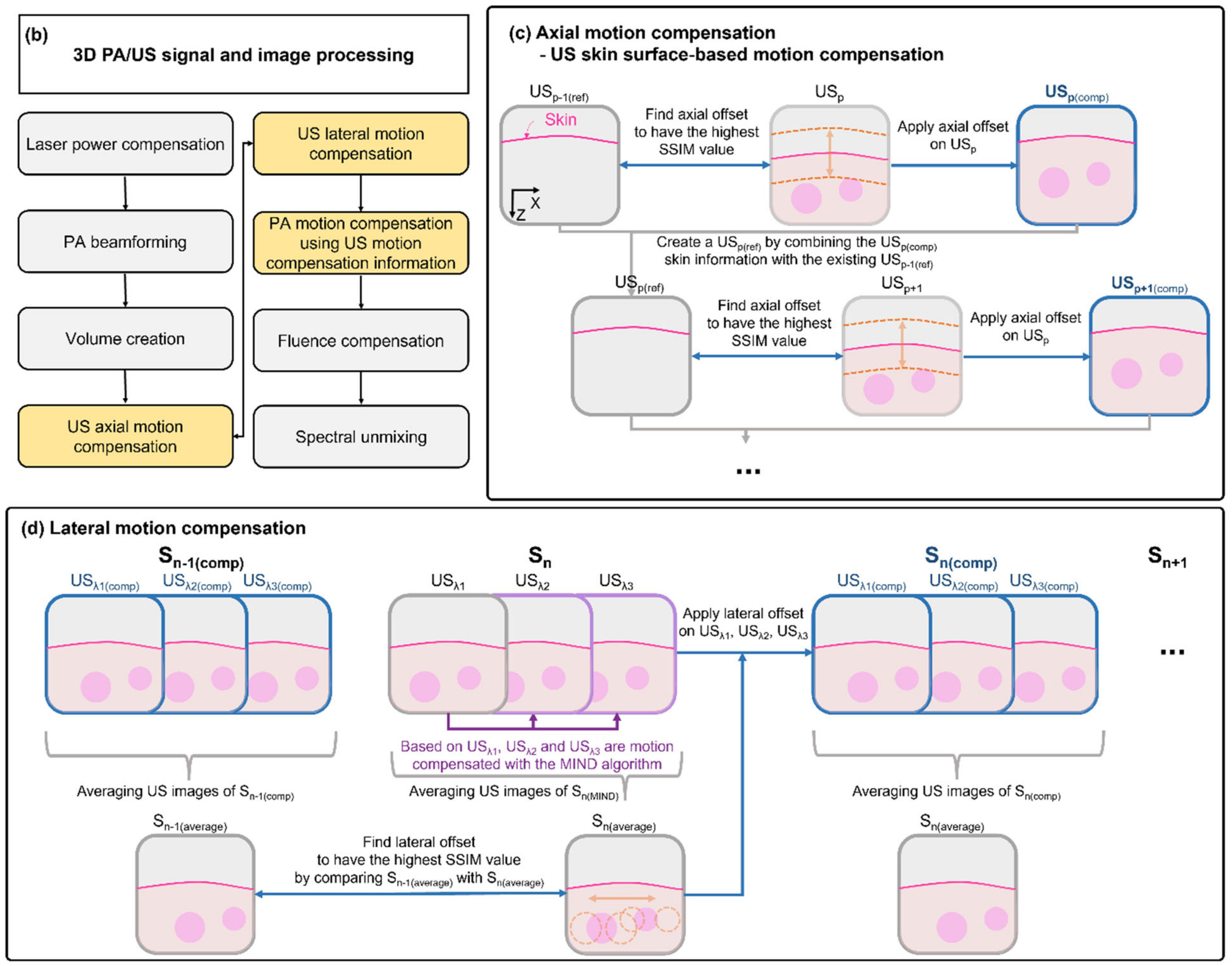

2. Materials and Methods

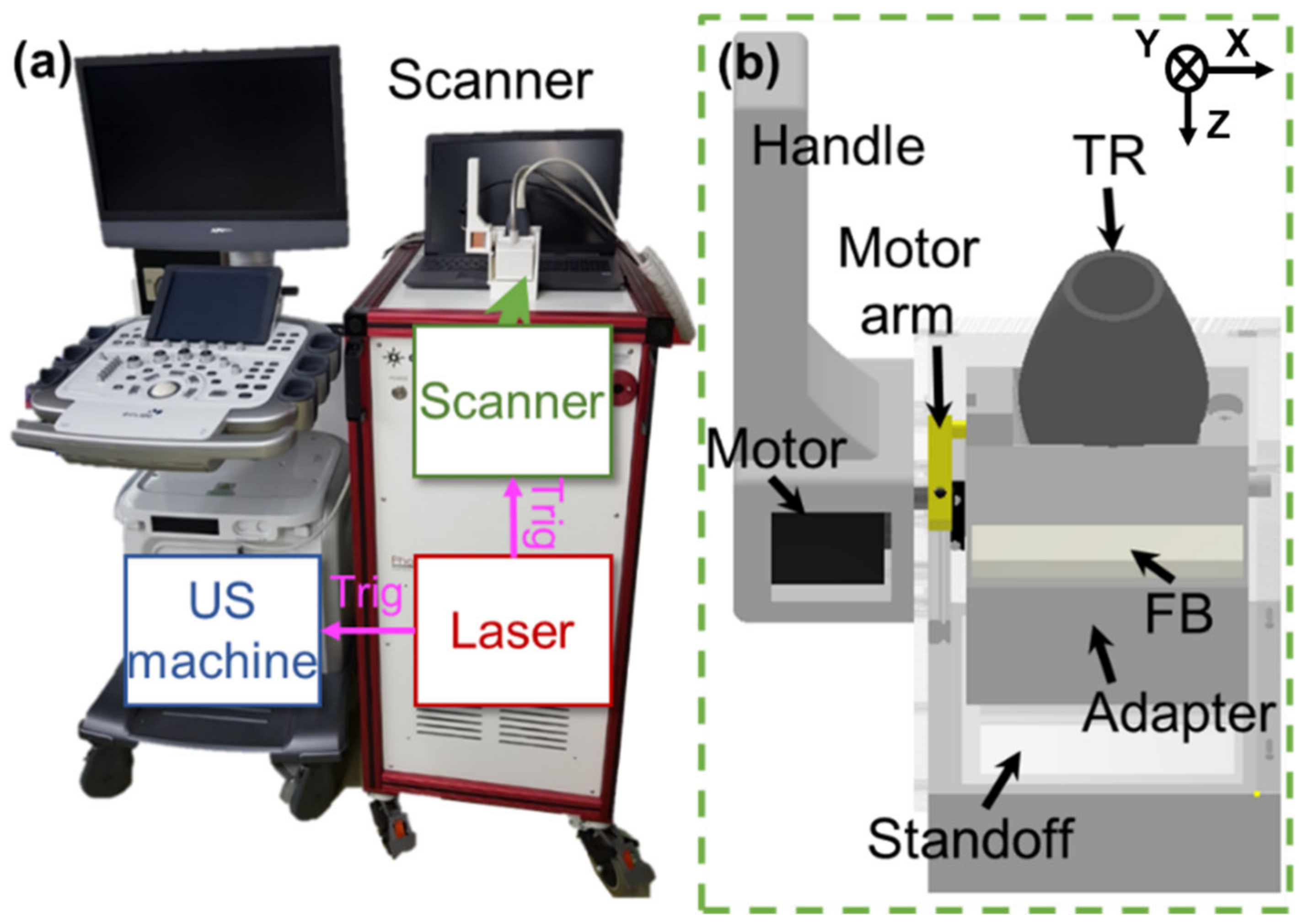

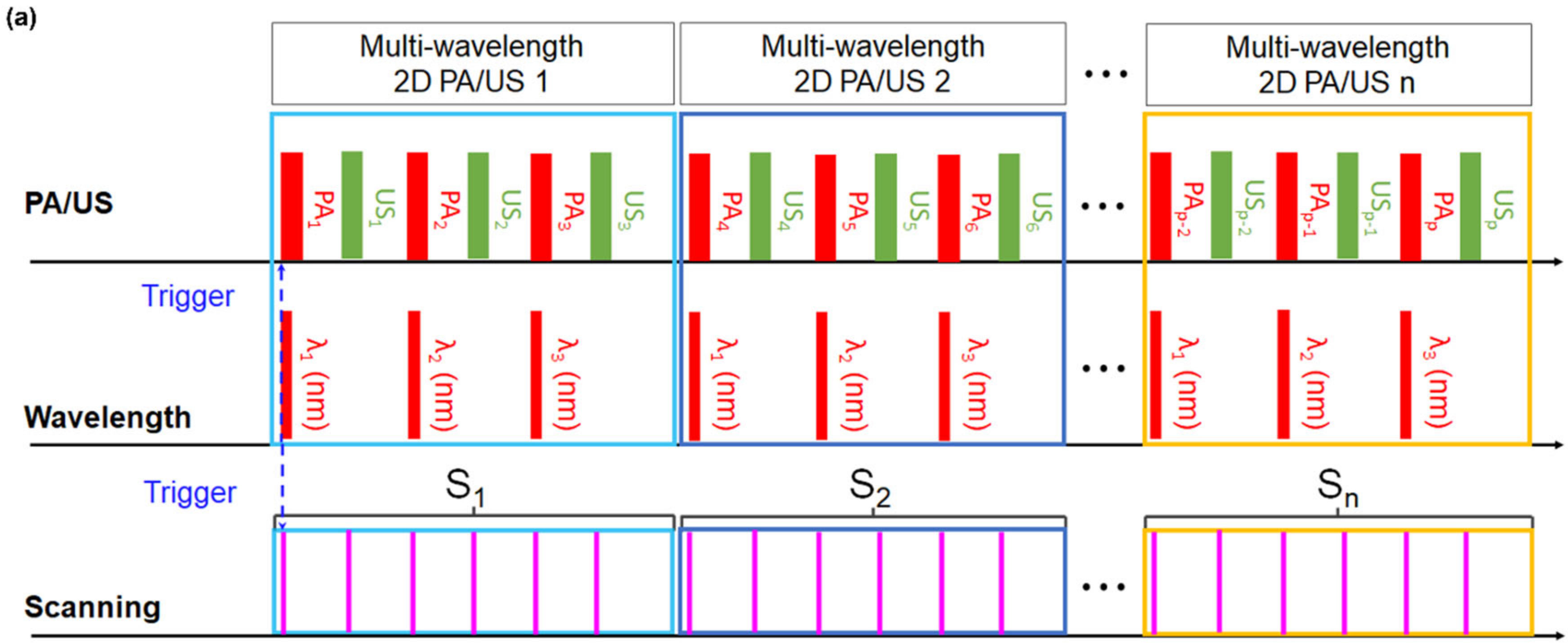

2.1. 3D Clinical Handheld PA/US Imaging System and Scanner

2.2. 3D Clinical Handheld PA/US Imaging System and Scanner

3. Results and Discussion

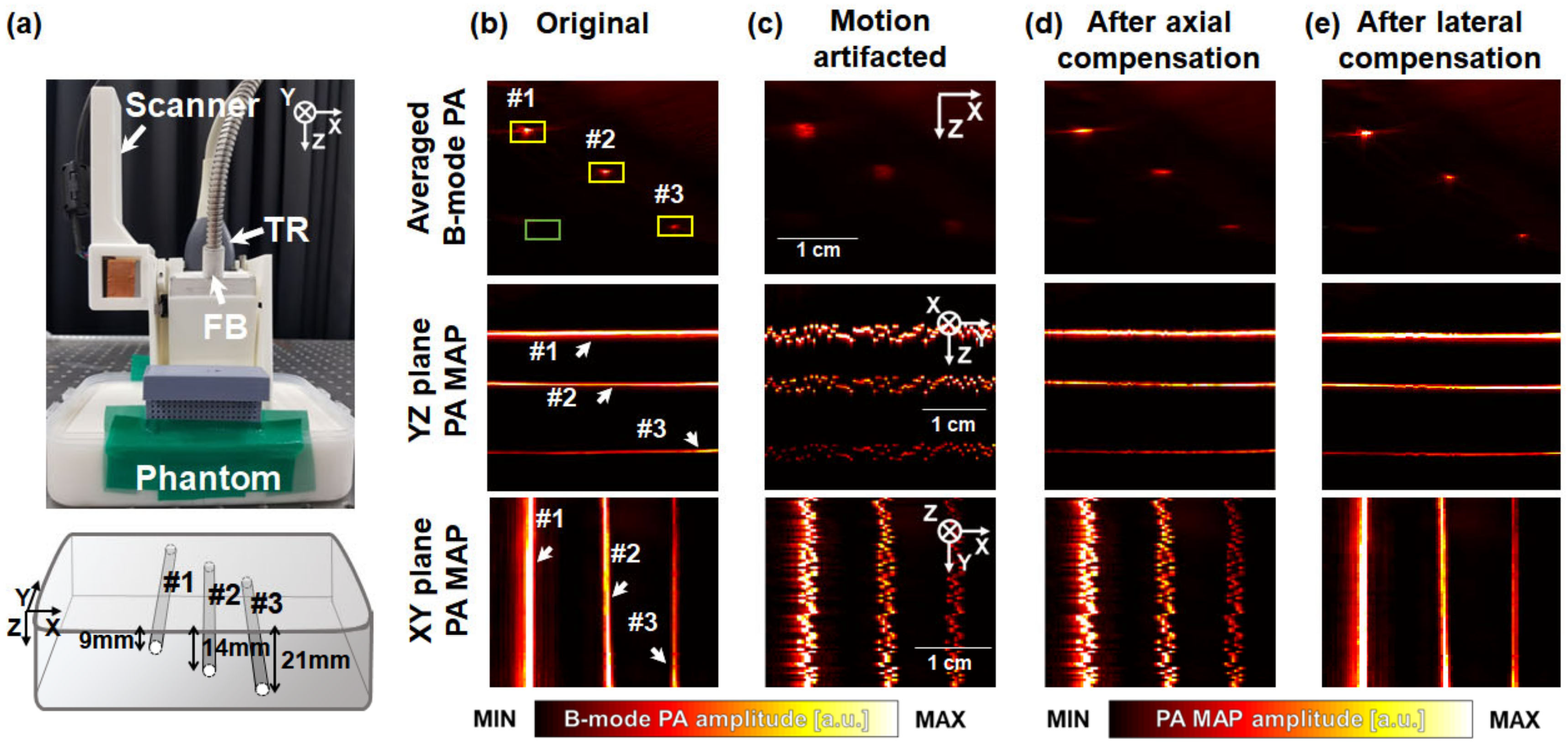

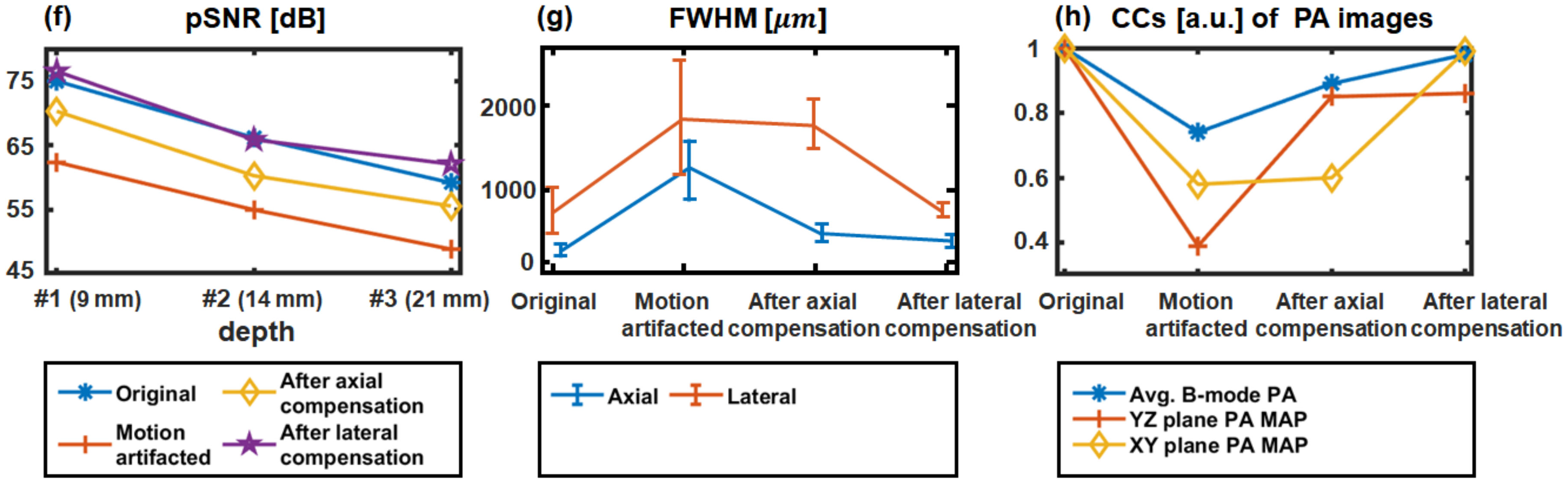

3.1. Performance Test in Phantoms

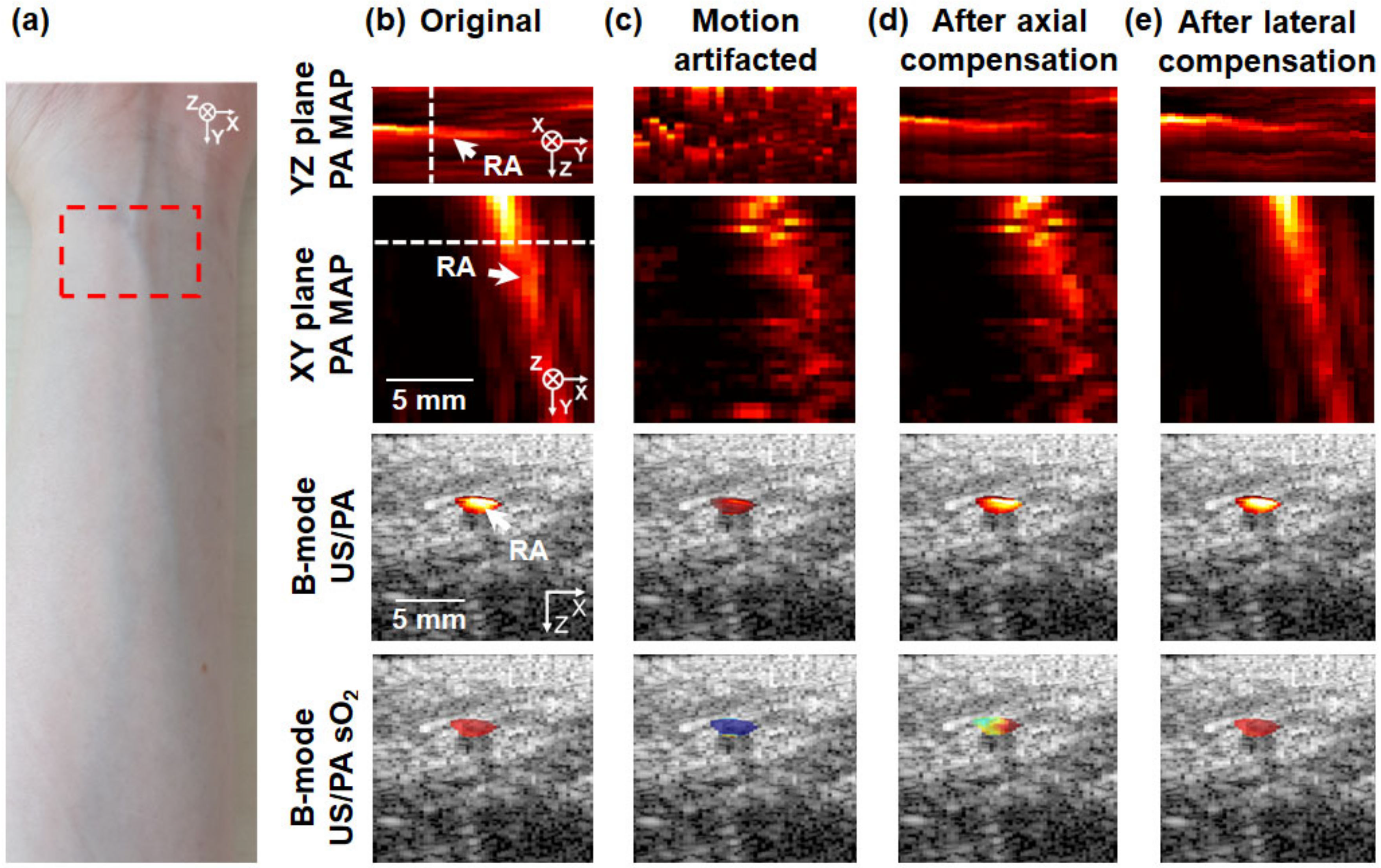

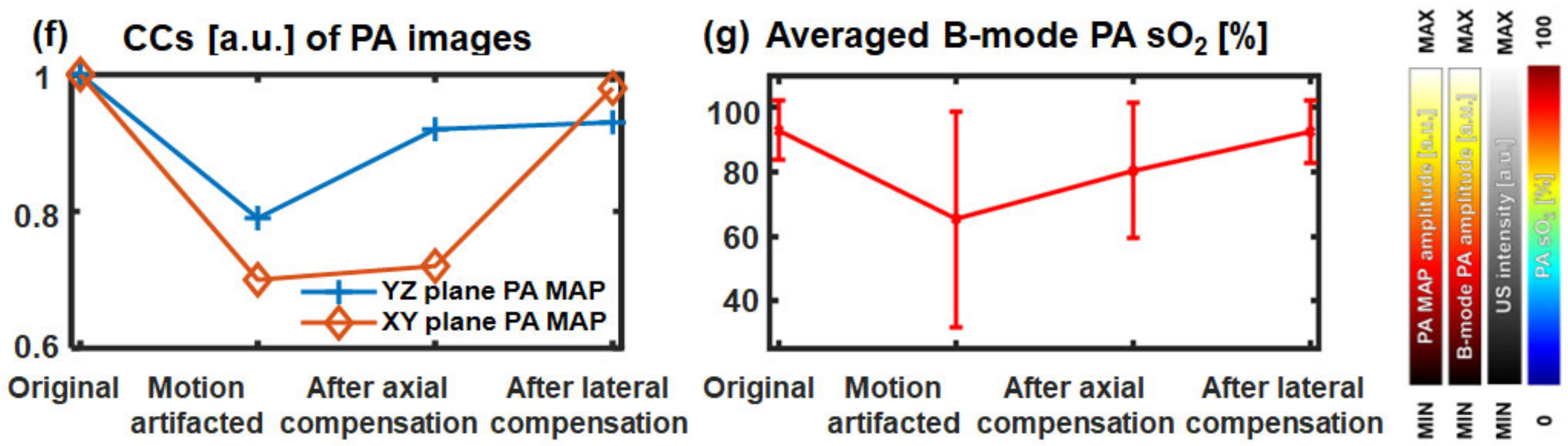

3.2. In Vivo 3D Multi-Wavelength PA/US Imaging of a Human Wrist

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yoon, C.; Lee, C.; Shin, K.; Kim, C. Motion Compensation for 3D Multispectral Handheld Photoacoustic Imaging. Biosensors 2022, 12, 1092. https://doi.org/10.3390/bios12121092
