Toward a Consensus Model of Cognitive–Reading Achievement Relations Using Meta-Structural Equation Modeling

Abstract

1. Introduction

1.1. Theoretical Frameworks of Reading

1.2. Cattell–Horn–Carroll (CHC) Theory of Intelligence

1.3. Cognitive–Achievement Relations in Reading

1.4. Cross-Battery Meta-Analytic Research

1.5. The Current Study

2. Methods

2.1. Identification of Published Tests and Manuals

2.2. Input Data

2.3. Broad and Narrow CHC Ability Categorization of Subtests

2.4. Stage 1: Estimating Meta-Analytic Correlations

2.5. Stage 2: Using the Meta-Analytic Correlation Matrix to Examine Cognitive–Reading Relations

3. Results

3.1. Descriptive Statistics

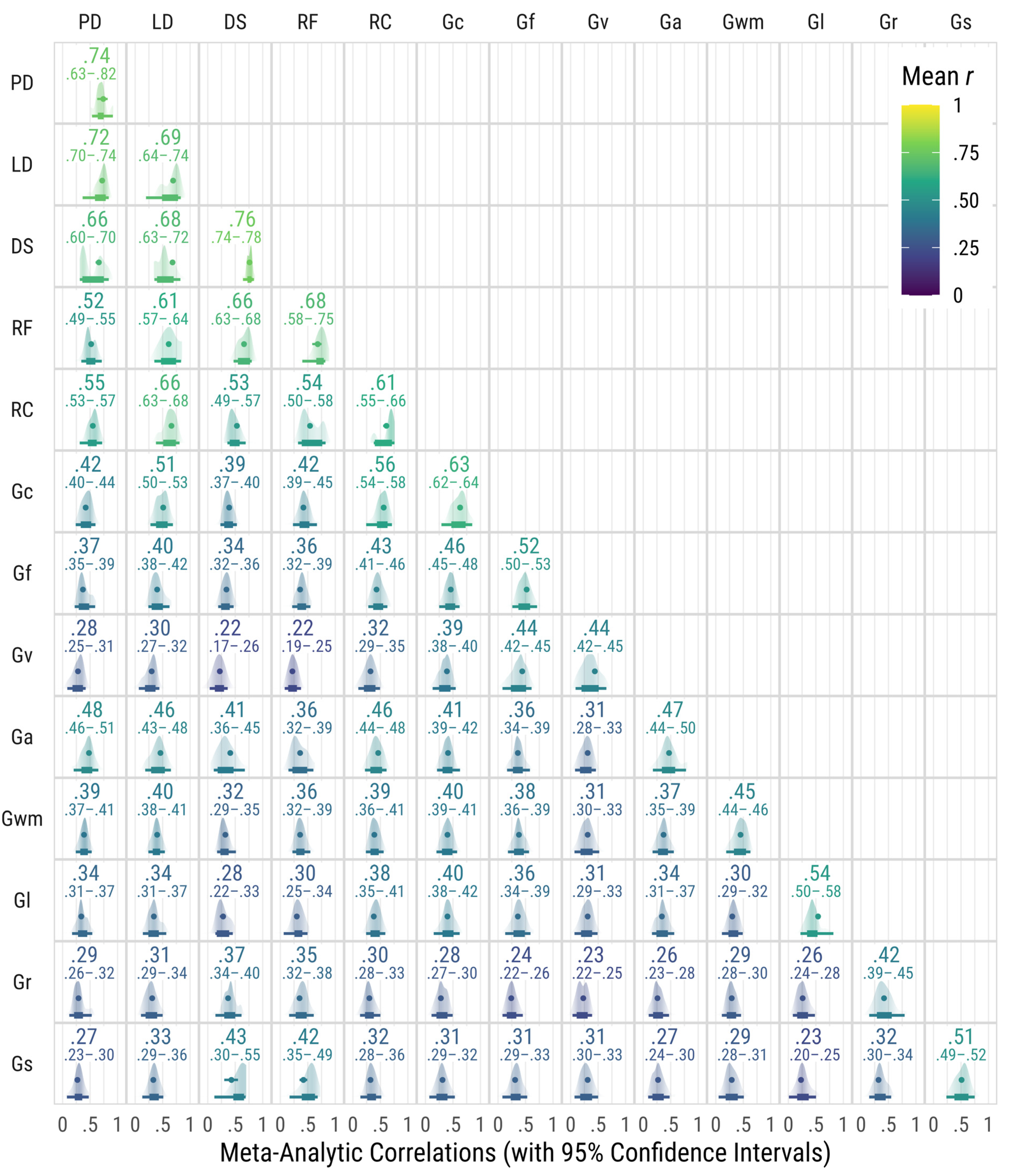

3.2. Meta-Analytic Correlations

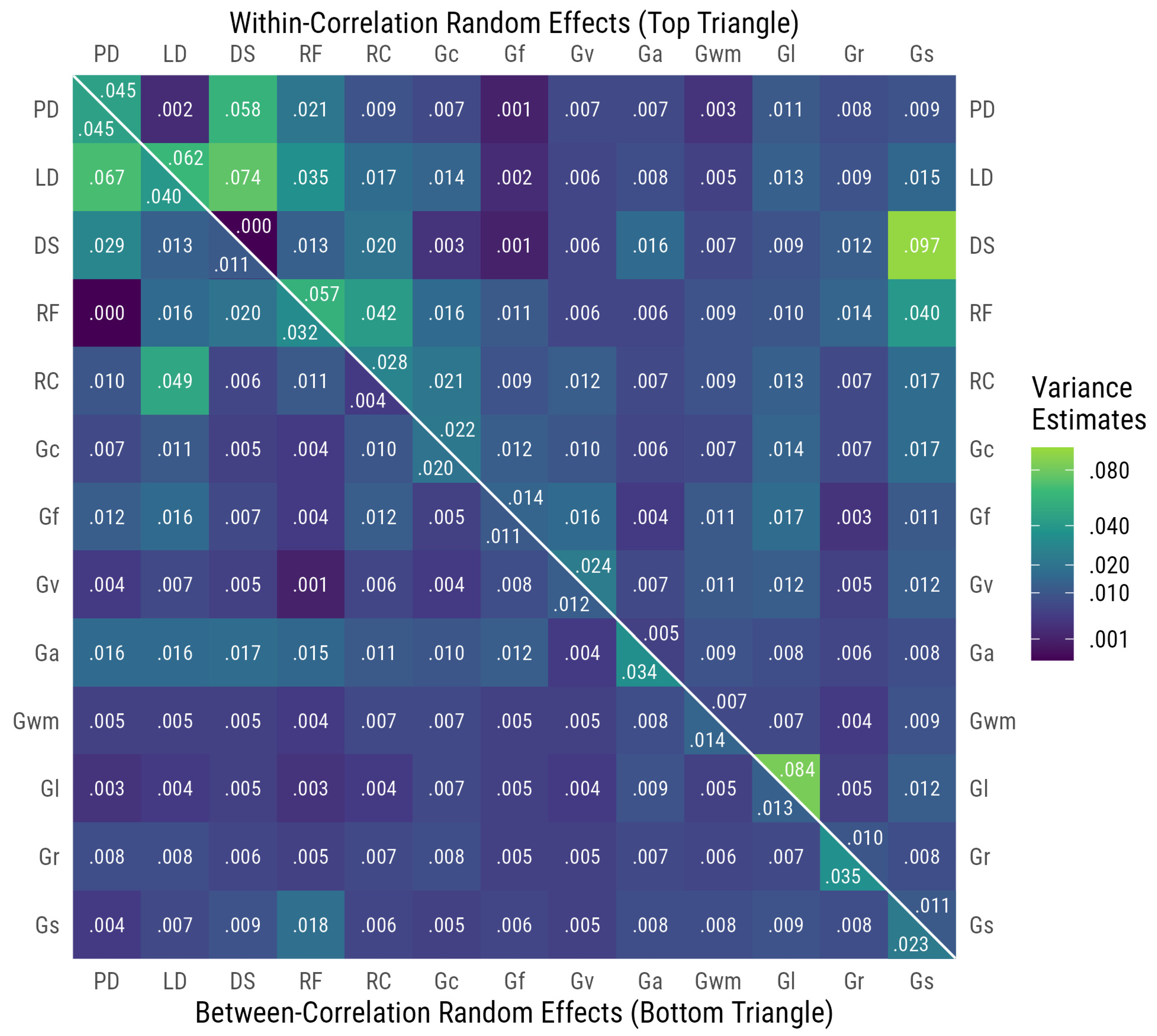

3.3. Heterogeneity of Meta-Analytic Correlations

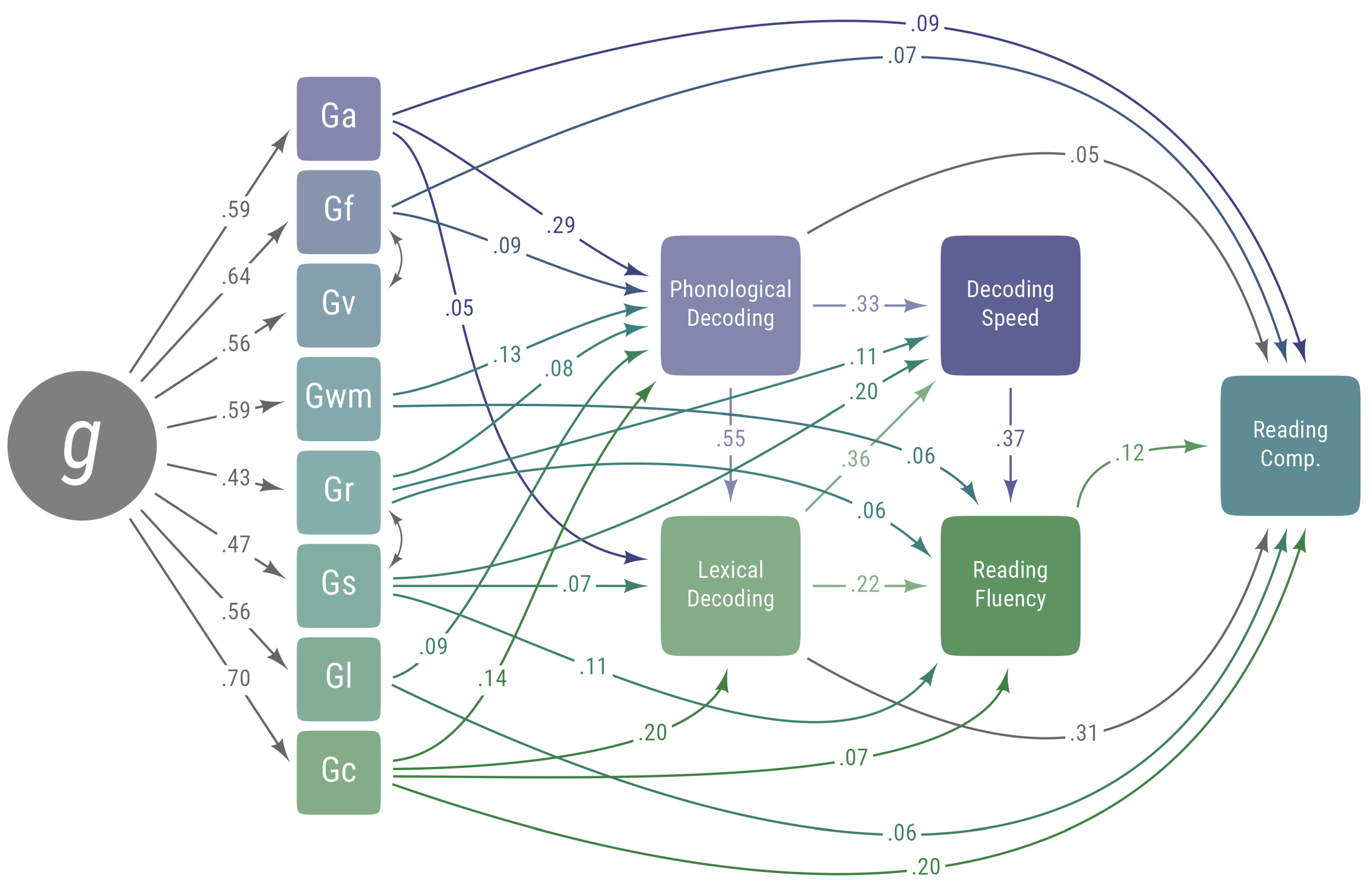

3.4. SEM Using the Meta-Analytic Correlation Matrix

4. Discussion

4.1. Basic Reading

4.2. Reading Fluency

4.3. Reading Comprehension

4.4. Additional Findings

4.5. Practical Implications

4.6. Limitations and Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Gc | Gf | Gv | Ga | Gl | Gr | Gwm | Gs | LD | PD | DS | RF | RC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Gc | 3197 | | | | | | | | | | | | |
| Gf | 1831 | 400 | | | | | | | | | | | |
| Gv | 3170 | 1219 | 1125 | | | | | | | | | | |
| Ga | 852 | 386 | 400 | 190 | | | | | | | | | |
| Gl | 1257 | 679 | 1791 | 384 | 495 | | | | | | | | |
| Gr | 1057 | 519 | 541 | 381 | 496 | 331 | | | | | | | |
| Gwm | 3778 | 1582 | 2756 | 566 | 1350 | 817 | 1619 | | | | | | |
| Gs | 2215 | 824 | 1427 | 342 | 556 | 503 | 1929 | 501 | | | | | |
| LD | 625 | 197 | 206 | 215 | 175 | 236 | 322 | 169 | 62 | | | | |
| PD | 386 | 124 | 128 | 136 | 109 | 153 | 181 | 113 | 155 | 9 | | | |
| DS | 250 | 76 | 56 | 125 | 48 | 203 | 132 | 56 | 99 | 67 | 27 | | |
| RF | 283 | 93 | 87 | 103 | 80 | 166 | 129 | 91 | 113 | 71 | 89 | 26 | |
| RC | 544 | 153 | 160 | 159 | 135 | 213 | 236 | 145 | 224 | 129 | 84 | 115 | 25 |

| | Gc | Gf | Gv | Ga | Gl | Gr | Gwm | Gs | LD | PD | DS | RF | RC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Gc | 384 | | | | | | | | | | | | |
| Gf | 216 | 150 | | | | | | | | | | | |
| Gv | 273 | 222 | 263 | | | | | | | | | | |
| Ga | 82 | 49 | 55 | 34 | | | | | | | | | |
| Gl | 123 | 111 | 160 | 48 | 126 | | | | | | | | |
| Gr | 112 | 89 | 107 | 65 | 70 | 76 | | | | | | | |
| Gwm | 252 | 199 | 278 | 63 | 142 | 106 | 220 | | | | | | |
| Gs | 179 | 154 | 198 | 44 | 70 | 85 | 182 | 133 | | | | | |
| LD | 152 | 59 | 67 | 72 | 56 | 69 | 74 | 49 | 41 | | | | |
| PD | 96 | 46 | 45 | 59 | 39 | 56 | 52 | 42 | 102 | 9 | | | |
| DS | 41 | 19 | 18 | 38 | 14 | 47 | 26 | 16 | 46 | 41 | 21 | | |
| RF | 71 | 27 | 26 | 43 | 21 | 60 | 29 | 26 | 77 | 66 | 54 | 14 | |
| RC | 140 | 52 | 52 | 62 | 45 | 65 | 58 | 47 | 136 | 99 | 40 | 75 | 21 |

| | Phonological Decoding Direct (Indirect, Total) | Lexical Decoding Direct (Indirect, Total) | Decoding Speed Direct (Indirect, Total) | Reading Fluency Direct (Indirect, Total) | Reading Comprehension Direct (Indirect, Total) |
|---|---|---|---|---|---|
| Gc | 0.14 | 0.20 (0.08, 0.27) | −0.02 (0.14, 0.12) | 0.07 (0.11, 0.18) | 0.20 (0.12, 0.32) |
| Gf | 0.09 | 0.04 (0.05, 0.09) | 0.01 (0.06, 0.07) | 0.03 (0.05, 0.08) | 0.07 (0.04, 0.12) |
| Ga | 0.29 | 0.05 (0.16, 0.21) | 0.03 (0.17, 0.20) | −0.01 (0.12, 0.11) | 0.09 (0.10, 0.19) |
| Gl | 0.09 | 0.00 (0.05, 0.05) | −0.02 (0.05, 0.02) | 0.02 (0.02, 0.04) | 0.06 (0.03, 0.09) |
| Gr | 0.09 | 0.04 (0.05, 0.09) | 0.11 (0.06, 0.17) | 0.06 (0.08, 0.14) | 0.01 (0.06, 0.07) |
| Gwm | 0.13 | 0.04 (0.07, 0.11) | −0.04 (0.08, 0.05) | 0.06 (0.04, 0.10) | 0.03 (0.06, 0.08) |
| Gs | 0.03 | 0.07 (0.02, 0.09) | 0.20 (0.04, 0.25) | 0.11 (0.11, 0.23) | 0.00 (0.07, 0.07) |
| Phonological Decoding | – | 0.55 | 0.33 (0.20, 0.53) | 0.01 (0.32, 0.33) | 0.05 (0.23, 0.28) |
| Lexical Decoding | – | – | 0.36 | 0.22 (0.13, 0.36) | 0.31 (0.06, 0.37) |
| Decoding Speed | – | – | – | 0.37 | 0.04 (0.05, 0.08) |
| Reading Fluency | – | – | – | – | 0.12 |
| Indirect Effect of g | 0.50 | 0.54 | 0.47 | 0.48 | 0.57 |
| R² | 0.34 | 0.59 | 0.57 | 0.52 | 0.54 |
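
The direct, indirect, and total effects among the five reading skills in the table above can be cross-checked by path tracing. The sketch below is a minimal illustration, not the authors' analysis code. It assumes the reading skills form a recursive chain in the order the table lists them (phonological decoding, lexical decoding, decoding speed, reading fluency, reading comprehension), and it uses the rounded direct effects printed in the table, so recomputed values can differ from the published ones by about 0.01. The names `ORDER`, `DIRECT`, `total_effect`, and `decompose` are hypothetical.

```python
# Assumed causal ordering of the reading skills (taken from the table layout).
ORDER = ["PD", "LD", "DS", "RF", "RC"]

# Rounded direct effects among the reading skills, copied from the table.
DIRECT = {
    ("PD", "LD"): 0.55, ("PD", "DS"): 0.33, ("PD", "RF"): 0.01, ("PD", "RC"): 0.05,
    ("LD", "DS"): 0.36, ("LD", "RF"): 0.22, ("LD", "RC"): 0.31,
    ("DS", "RF"): 0.37, ("DS", "RC"): 0.04,
    ("RF", "RC"): 0.12,
}


def total_effect(source, target):
    """Sum the products of path coefficients over every directed path
    from source to target (path-tracing rule for a recursive model)."""
    if source == target:
        return 1.0
    start, stop = ORDER.index(source), ORDER.index(target)
    total = 0.0
    # Take one step to each later skill, then continue from there.
    for mid in ORDER[start + 1 : stop + 1]:
        total += DIRECT.get((source, mid), 0.0) * total_effect(mid, target)
    return total


def decompose(source, target):
    """Return (direct, indirect, total), where indirect = total - direct."""
    direct = DIRECT.get((source, target), 0.0)
    total = total_effect(source, target)
    return direct, total - direct, total


for (src, dst) in DIRECT:
    d, i, t = decompose(src, dst)
    print(f"{src} -> {dst}: direct={d:.2f} indirect={i:.2f} total={t:.2f}")
```

For example, the only indirect route from phonological decoding to decoding speed runs through lexical decoding, 0.55 × 0.36 ≈ 0.20, which agrees with the tabled indirect effect of 0.20; adding the direct effect of 0.33 reproduces the tabled total of 0.53.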

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hajovsky, D.B.; Niileksela, C.R.; Flanagan, D.P.; Alfonso, V.C.; Schneider, W.J.; Robbins, J. Toward a Consensus Model of Cognitive–Reading Achievement Relations Using Meta-Structural Equation Modeling. J. Intell. 2025, 13, 104. https://doi.org/10.3390/jintelligence13080104
