Abstract

This study explores applications of Generative Artificial Intelligence (GenAI) in creativity. We identify the four most common parts of the creative process: (1) Problem Identification and Framing, (2) Generating ideas, (3) Evaluating ideas, and (4) Deploying and Implementing ideas. We map GenAI systems onto these common parts of the creative process. By positioning GenAI in a supportive role, “AI as a helper”, we propose a structured framework that identifies specific GenAI tools and their capabilities within each common part of the creative process. Through the analysis and demonstration of use cases, we show how GenAI systems facilitate problem identification, generate novel ideas, evaluate ideas, and enhance implementation. We also propose criteria for evaluating these GenAI systems for each part of the process. Moreover, this study provides insights for researchers and practitioners who are seeking to enhance GenAI’s creative capabilities and human creativity. This study concludes with a discussion of the implications of these illustrative use cases and suggests directions for future research to further advance the use of GenAI.

1. Introduction

The rapid advancements of Generative Artificial Intelligence (GenAI) have significantly impacted various fields, especially in the creative industries. According to Generative AI Examples from Google Cloud (2023), GenAI is defined as “the use of AI to create new content, like text, images, music, audio, and videos”, utilizing a machine learning (ML) model “to learn the patterns and relationships in a dataset of human-created content” and then “applying the learned patterns to generate new content”. Thus, GenAI represents an evolution in AI, emphasizing the creation of human-like content through advanced machine learning algorithms and neural networks, generating original outputs from human prompts. This technology is changing how many industries work by enhancing human-AI collaboration and providing tools to augment human capabilities, including automated content creation and decision-making support. GenAI systems such as ChatGPT and DALL-E are increasingly used to augment human creativity, especially for idea generation. This has led to improved efficiency and innovation in various fields (Oluwagbenro 2024; Young et al. 2024).

The creative process usually involves various stages, such as preparation, idea generation, selection, and implementation (Chompunuch 2023). Generative AI (GenAI) has significantly transformed the creative landscape, enhancing various stages of the creative process through intelligent assistance. From generating novel ideas to implementing those ideas, AI systems are reshaping how creativity is approached, making it more dynamic and accessible. This transformation is evident across multiple domains, including design (As and Basu 2021; Chandrasekera et al. 2024), art (Egon et al. 2023; Sinha et al. 2024), and music (Hirawata and Otani 2024) where AI acts as a co-creator or partner (Lubart 2005). Human collaboration with Generative Artificial Intelligence (GenAI) in creative tasks is characterized by a synergistic relationship, where AI acts as a co-creator with human artists and thinkers. This synergy is particularly evident in scenarios where AI applications are intentionally designed to enhance human creativity, aiding in the invention of new practices. Including AI in creative workflows fosters balanced cooperation that bolsters the creative process, adheres to ethical norms, and maintains human values (Vinchon et al. 2023; O’Toole and Horvát 2024).

Moreover, GenAI has significantly democratized creativity by reducing the expertise required for creative tasks. Individuals who may lack traditional creative skills can now use AI-assisted tools to articulate their ideas and emotions, making creativity more accessible to a broader audience. In this way, GenAI is a powerful facilitator of human expression, enabling a more diverse range of people to participate in creative activities (Tigre Moura 2023). However, integrating GenAI into the creative process creates challenges. Despite the increasing adoption of GenAI in creative processes, there is a lack of a comprehensive framework that systematically maps GenAI functions to specific stages of the creative process, making it challenging for practitioners to effectively integrate these tools.

This study addresses two research questions:

- How can GenAI facilitate common steps of the creative process?

- What are the main benefits and limitations of integrating GenAI tools?

This study positions Generative AI (GenAI) as an “AI as a helper” to highlight the collaborative dynamic between human creativity and machine capabilities. By representing AI as a supportive partner rather than a replacement, this perspective maintains human agency and judgment, allowing individuals to shape and enhance AI outputs. Consequently, “AI as a helper” fosters a more balanced, ethically grounded, and adaptive creative process that harnesses both human strengths and the efficiency of machine intelligence. Through detailed analysis and key use case demonstrations, we aim to provide a structured framework that highlights GenAI’s potential benefits and applications in creative work. Our analysis encompasses stage-specific applications and essential steps in the creative process, including (1) Problem Identification and Framing, (2) Generating Ideas, (3) Evaluating Ideas, and (4) Deploying and Implementing Ideas.

This study contributes to both theory and practice by providing a comprehensive framework of GenAI assistance across the four common steps of the creative process. Our goal is to provide valuable insights to researchers and practitioners on using GenAI to enhance human creativity. This research enriches the growing understanding of GenAI and creativity, highlighting potential opportunities and challenges in the field. We conclude by discussing the implications of our findings and suggesting future research directions for integrating GenAI into creative processes.

The first section of the paper discusses the history and background of generative artificial intelligence (GenAI). The Literature Review then examines the body of research on models of the creative process, GenAI-enhanced creativity, and the justification for referring to it as an “AI helper” in the creative process. Following this, the Conceptual Framework section aligns GenAI systems with four key common steps in the creative process. The Methodology describes the research design, incorporating theoretical mapping and a demonstration-based approach. In the Use Case Analysis, examples illustrate how GenAI tools operate within four common creative steps. Moreover, the Discussion integrates findings, highlights implications, and outlines limitations. The Conclusion summarizes key contributions and suggests future research directions.

2. Literature Review

2.1. The Creative Process: Definitions and Models

The creative process is defined as a succession of thoughts and actions leading to original and appropriate productions (Lubart 2001). The literature on the creative process has extensively explored the concept of stage models for creative problem-solving. The pioneer in this field was Wallas (1926), whose seminal qualitative work conceptualized four linear stages for creativity to occur: Preparation, Incubation, Illumination, and Verification. Since then, various researchers have proposed their own stage models (see Table 1). Thus, the study of the creative process relies heavily on stage models, which have become an essential tool for researchers (Runco 2004; Sadler-Smith 2015). These models have led to the development of divergent thinking tests (e.g., Torrance 1974) and insight problem-solving tasks (Davidson 2003). However, these models have been criticized for assuming that creativity follows a linear process. Montag et al. (2012) argue that the creative process is dynamic and iterative and requires both divergent and convergent thinking, a view that qualitative evidence largely supports (Montag et al. 2012; Hargadon and Bechky 2006).

Table 1.

Creative process stage models and the common parts of the process.

Table 1 shows that creative process models typically share four common parts. The parts that recur most often across these models are:

- Problem identification/problem-framing

- Generating ideas

- Evaluating ideas

- Deploying ideas (Validation or Implementation)

These steps are commonly recognized as key parts of the creative process (Leone et al. 2023; Lubart 2001; Puccio and Modrzejewska-Świgulska 2022). It is important to note that these steps are not always linear and can be influenced by various internal and external factors, as well as the specific context in which creativity is being applied. The integration of Generative Artificial Intelligence (GenAI) into this process has brought new possibilities, such as enabling rapid idea generation through GenAI brainstorming tools, providing immediate feedback and critiques on ideas, prototyping, and visual synthesis. However, this integration also has challenges, including potential biases in AI-generated suggestions, difficulty in managing human oversight and creative autonomy, and overly generic or superficial outputs. Therefore, effective GenAI integration requires domain expertise, ethical judgment, and context-sensitive human interpretation.

2.2. GenAI in Creativity Research and Gaps in the Literature

Studies are emerging on GenAI-supported or GenAI-driven creativity in various domains, such as visual art (Zhou and Lee 2024), music composition (Michele Newman and Lee 2023), narrative generation (Doshi and Hauser 2024; McGuire 2024), and design (Moreau et al. 2023). In addition, Urban et al. (2024) found that ChatGPT improves the creative problem-solving of university students. As shown in the historical timeline of GenAI, early work in computational creativity often investigated “rule-based” approaches that generate novel artifacts under constraints. Advances in deep learning, including Generative Adversarial Networks (GANs) and transformer-based models, have produced a new generation of creative AI systems that can automate the generation of paintings, music, and design prototypes. Studies of AI creativity (e.g., Lubart et al. 2021; Zhang et al. 2023; Mao et al. 2024) now often focus on “Creative Collaboration”, that is, how human-AI collaboration or co-creation can generate novelty using GenAI tools such as ChatGPT and DALL-E.

However, although previous research has broadly acknowledged the supportive role of GenAI in creative tasks, studies did not target specific phases of creativity that benefit most from GenAI assistance. There are a few exceptions that do look at specific phases; for example, Gindert and Müller (2024) found that GenAI tools can support teams in the idea generation phase. Similarly, Wan et al. (2024) explored human-AI co-creativity in prewriting tasks, and they also identified the creative process stages, such as ideation, illumination, and implementation, but without a detailed analysis of GenAI effectiveness in each specific stage.

As research on AI creativity expands, gaps in the literature remain. Much of the research focuses on isolated, single-use cases, and guidelines or structured frameworks are lacking for researchers and practitioners seeking to integrate GenAI across all creative stages. To address this gap, we identify four common steps of the creative process (Problem Identification and Framing, Generating Ideas, Evaluating Ideas, and Deploying and Implementing Ideas), introduce GenAI tools for each step, and give examples and guidelines on how to use them.

2.3. Positioning “AI as a Helper”

Several authors have proposed frameworks or levels of human-AI collaboration, which can be summarized as three modes of human-AI creative collaboration (Davis et al. 2015; Karimi et al. 2018; Lubart et al. 2021; Zhang et al. 2023; Mao et al. 2024). The core idea is that the contribution of AI can range from AI as a supportive tool (a Helper, the mode adopted in this study), through AI as a partner or co-creator, to AI as a principal Creator, depending on the level of AI autonomy and involvement. These three modes emphasize how shifts in autonomy and collaborative depth can define the creative relationship between humans and AI.

- AI as a Helper (as proposed in this study): In this mode, with the least AI involvement, humans perform most of the stages, and GenAI should provide assistance or act as a supportive tool for each part of the creative process (Zhang et al. 2023).

- AI as a Partner or Co-Creator: In this mode, with moderate AI involvement, humans and AI engage in communication to exchange information (Zhang et al. 2021) and provide mutual guidance (Oh et al. 2018), and AI helps build and test the creative outcome.

- AI as a Principal Creator: In this mode, with the highest AI involvement, “AI should be a more autonomous process, with AI participating in most of the stages based on requirements or inputs provided by humans” (Zhang et al. 2023).

Therefore, in this study, we propose “AI as a Helper” to demonstrate one mode of human-AI collaboration. AI as a Helper highlights a human-centered approach where AI is used to assist (rather than compete) in the creative process. This human-centered approach supports the concept of collaborative innovation over pure automation. Rather than framing AI as a replacement for human creativity, we emphasize the potential of GenAI models, whether text-based (e.g., ChatGPT) or image-based (e.g., DALL-E or Midjourney), to enhance the creativity of individuals and teams. By viewing AI systems as supportive partners, the “AI as a Helper” concept adds a novel dimension to creative interactions and encourages researchers and practitioners to gain efficiency and fresh conceptual inputs while maintaining full human control over contextual and ethical decisions.

3. Conceptual Framework: Mapping GenAI to the Creative Process

3.1. Overview of the Four Common Parts of the Creative Process

- Problem Identification and Framing

The first stage of the creative process, known as “Preparation” in Wallas’s (1926) model, focuses on identifying and framing problems (Carson 1999; Wallas 1926). Creativity is sparked by an incident or idea encountered by an individual (Doyle 1998). This stage is vital to the creative process, with research indicating that the way individuals approach problem identification and construction significantly impacts their creative output (Getzels and Csikszentmihalyi 1976). This initial phase, the most common part of the creative process, focuses on identifying, recognizing, clarifying, or reframing the core problem or question. Rhodes (1961) and Osborn (1979) highlight the importance of articulating the problem clearly and compellingly, as this shapes all subsequent ideation and solution-building efforts. Amabile (1983, 1988) emphasizes that how a problem is posed interacts with an individual’s intrinsic motivation and domain-relevant skills, thus influencing creative potential. Similarly, Mumford and Reiter-Palmon (1994) and Runco and Dow (1999) highlight that a sound problem-construction process leads to higher-quality, more innovative outcomes. Reiter-Palmon and Illies (2004) provide empirical support showing that the clarity of problem-framing predicts the success of creative solutions, while Zhang and Bartol (2010) demonstrate how leadership and contextual support can enhance this stage. Overall, carefully defining and reframing a problem sets the foundation for productive and impactful creativity.

- Generating ideas

According to Wallas (1926), the second stage is “Incubation” (Osborn 1979; Shaw 1989; Sadler-Smith 2015). Once a problem is well-defined, the focus turns to idea generation, which is the “Generating ideas” part. Osborn (1953) introduced brainstorming as a core technique, underscoring the need for freely generated ideas (Osborn 1979). During the ideation part, teams work together to find solutions to the problem that was identified in the preparation phase. This second common part of the creative process involves gathering information to exchange ideas and generate novel ideas (Paulus and Yang 2000). Ideation refers to generating new and valuable solutions for potential opportunities. This process demands effective sharing of knowledge and information among team members, along with consideration of different perspectives. Moreover, Amabile (1983, 1988) highlights the influence of social and motivational factors on how ideas emerge, while Nemiro (2002) examines how distributed teams effectively share and refine concepts. Consequently, the team integrates and develops individual ideas to create practical and innovative solutions. Reiter-Palmon and Illies (2004) again stress that effective problem-framing fosters more productive ideation.

- Evaluating ideas

During the third stage, known as the “Selection” stage, a team has to determine the best idea from the range of ideas generated in the ideation phase. As noted by Reiter-Palmon et al. (2007), this part of the creative process involves evaluating the ideas and selecting the most promising ones. Usually, the idea selection process entails assessing ideas based on specific criteria to make a final decision. While at the individual level, idea selection is an intrapersonal process (Herman and Reiter-Palmon 2011), at the team level, it becomes a more interactive and interpersonal endeavor. Although research on idea selection is relatively limited, findings suggest that teams are generally more proficient in choosing the best ideas compared to individuals (Mumford et al. 2001b), particularly when they have fewer alternatives to consider (Mumford et al. 2001a). In this research, the Evaluating ideas part is defined as “the process of evaluating possible new ideas and selecting the best one”. The process ends with team members selecting the best available idea.

- Deploying and Implementing Idea

During the last stage of the creative process, “Deploying and Implementing idea”, the abstract concept is transformed into a tangible output such as an implementation plan or a prototype. According to Reiter-Palmon and Illies (2004), translating a newly formed idea into practice requires structuring and implementing a plan that includes clear project goals and timelines. Botella et al. (2013) highlight the provisional object or draft produced when deploying and implementing the idea. Sawyer (2012) also emphasizes the “Externalization” of creative thinking in the deploying and implementing stage. In addition, Cropley (2015) points to the communication and validation of an idea in the last stage of the creative process.

3.2. Roles of GenAI in Each Part

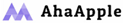

In aligning the specific capabilities of GenAI tools with the four common steps of the creative process, we recognize that GenAI does not act as a sole agent; rather, it acts as a Helper to enhance human creativity in each step: (1) Problem Identification and Framing, (2) Generating Ideas, (3) Evaluating Ideas, and (4) Deploying and Implementing Ideas. Connecting GenAI across the steps can enhance and foster human creativity (see Figure 1).

Figure 1.

An illustration of the four common stages in the creative process.

- Problem Identification and Framing: GenAI language models (e.g., GPT-4) can rapidly synthesize large volumes of text to help humans understand the context and inform the definition of the problem. GenAI can also reframe the problem and reveal alternative aspects of it. Moreover, GenAI can help by scanning relevant information and providing quick assumptions and feedback to ensure the problem framing is comprehensive. However, GenAI may overlook or oversimplify complex issues, or may introduce biased information from its training data, which can lead to misleading problem definitions, so its output requires validation by human experts.

- Generating ideas: GenAI can help humans brainstorm and cultivate divergent thinking, acting as an “idea catalyst”. Large language models (LLMs) or other text-based models can generate a list of unconventional ideas or solutions and blend unrelated concepts in surprising ways. Image-based models (e.g., DALL-E) can generate pictures to spark idea generation and provide design inspiration. Also, some GenAI tools, such as Stormz AI, can be integrated into brainstorming workshops, pushing the team’s ideas beyond their usual boundaries. Despite these advantages, GenAI-generated ideas might lack feasibility or practical value. There is also a risk of generating superficial ideas due to the absence of domain-specific expertise and contextual understanding.

- Evaluating ideas: Once multiple ideas are generated, GenAI can help compare and critique them. LLMs can list pros (opportunities) and cons (constraints), suggest improvements, and provide structured feedback and preliminary validation that humans can refine further. However, GenAI may lack contextual and ethical awareness and careful judgment, which can lead to biased assessments. Thus, this step needs careful human oversight.

- Deploying and Implementing Idea: Text-based or image-based GenAI tools (e.g., Leo AI) can rapidly generate prototypes, UX/UI designs, or draft solutions that humans can then adjust and finalize, thus speeding up idea prototyping and deployment. For example, ChatGPT can help users write initial draft recommendations or user manuals for a product. However, GenAI-generated prototypes may not fully align with user needs and practical constraints. Moreover, reliance on AI for the initial draft could lead to overlooking critical usability considerations, so human judgment is necessary to ensure that final implementations are context-appropriate, ethically sound, and address user requirements and constraints.

4. Methodology

In this research, we present the Selection Methodology as shown in Table 2.

Table 2.

Selection Methodology.

This highlights the systematic approach to identifying and selecting the most promising GenAI systems for each common part of the creative process.

- Identify the Common Creative Steps: (1) Problem Identification and Framing (2) Generating Ideas (3) Evaluating Ideas (4) Deploying and Implementing Idea

- Define relevant search keywords: For each creative step, we identify keywords to guide the search (Table 3).

Table 3. Keywords search for four common parts of the creative process.

- Source of GenAI Tools: We use two sources to search for GenAI tools for specific steps in the creative process. (1) Search “There is An AI for that” for Specific Tools: First, we searched for keywords on the “There is An AI for that” website (https://theresanaiforthat.com/) (accessed on 25 March 2025) for specialized GenAI tools for specific tasks within each creative step. (2) Search “AI Insider” for Generic Tools: Additionally, we identified generic GenAI tools based on the “AI Insider” website (https://ainsider.tools/) (accessed on 25 March 2025) that can be applied across multiple creative steps, such as text-based GenAI tools like ChatGPT.

- List all relevant GenAI tools from both sources for each creative step

- Select Top GenAI Tools Based on Step-Specific and Generic Criteria: From the relevant GenAI lists, we then select the top 3 tools based on popularity scores that potentially meet the predefined step-specific criteria (from “There is An AI for that”) and include generic tools (from “AI Insider”) that support multiple creative steps.

- Use Case Problem Selection: In this study, we chose a problem from the UN Sustainable Development Goals: Sustainable Development Goal 2 (SDG Goal 2), Zero Hunger. World hunger is one of the most serious global issues. Its causes include poverty, food shortages, war and conflict, climate change, poor nutrition, poor public policy and political instability, a bad economy, food waste, gender inequality, and forced migration. We therefore chose this urgent global challenge as an example to highlight GenAI’s potential role in addressing such issues and to demonstrate its application across the steps of the creative process.

- Proposed Criteria to assess the effectiveness of GenAI tools for each step of the creative process

We apply the core structure of hierarchical analysis (Saaty 1980; Davies 1994) by defining key criteria, scoring each criterion, and profiling results. Hierarchical analysis, or the Analytic Hierarchy Process, can be interpreted as a structured way of (1) defining the primary goal; (2) breaking down that goal into major criteria (top-level dimensions); (3) further dividing those criteria into sub-criteria, if necessary; (4) weighting each criterion and sub-criterion based on its importance; (5) scoring alternatives (in this case, AI tools) against each criterion; and (6) aggregating the scores to arrive at a final comparative ranking. The concept of hierarchical analysis has broad applications across different fields, including information systems (IS), where hierarchical models (e.g., the analytic hierarchy process and multi-layered system designs) are commonly used (Saaty 1980).
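The weighting, scoring, and aggregation steps can be illustrated with a minimal Python sketch. It is not the procedure used in this study: the pairwise comparison values, the three-criterion subset, and the tool names "Tool A" and "Tool B" are hypothetical, and the weights are approximated with the row geometric mean method rather than the principal eigenvector used in the full Analytic Hierarchy Process.

```python
import math

def ahp_weights(pairwise):
    """Approximate AHP priority weights with the row geometric mean method."""
    n = len(pairwise)
    geo_means = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geo_means)
    return [g / total for g in geo_means]

def weighted_score(scores, weights):
    """Aggregate per-criterion scores (1-5 scale) into one weighted score."""
    return sum(s * w for s, w in zip(scores, weights))

# Hypothetical pairwise judgments (illustrative values only): entry [i][j]
# states how much more important criterion i is than criterion j.
criteria = ["Clarity", "Contextual Relevance", "Ease of Use"]
pairwise = [
    [1.0, 1 / 2, 2.0],
    [2.0, 1.0, 4.0],
    [1 / 2, 1 / 4, 1.0],
]
weights = ahp_weights(pairwise)

# Hypothetical 1-5 scores for two placeholder tools, in the order of `criteria`.
tool_scores = {"Tool A": [4, 5, 3], "Tool B": [5, 3, 5]}
ranking = sorted(
    tool_scores,
    key=lambda tool: weighted_score(tool_scores[tool], weights),
    reverse=True,
)

for tool in ranking:
    print(tool, round(weighted_score(tool_scores[tool], weights), 2))
```

With equal weights the sketch reduces to a simple average of the criterion scores, which may be sufficient when no criterion is considered more important than another.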

- Criteria used to assess GenAI tools for four common steps of the creative process

In this section, we propose criteria for assessing GenAI tools for each step of the creative process. The criteria are presented in Table 4 for problem identification and framing, Table 5 for generating ideas, Table 6 for evaluating ideas, and Table 7 for deploying and implementing ideas.

Table 4.

Proposed criteria for assessing GenAI tools for Problem Identification and Framing.

Table 5.

Proposed criteria for assessing GenAI tools for Generating Ideas.

Table 6.

Proposed criteria for assessing GenAI tools for Evaluating Ideas.

Table 7.

Proposed criteria for assessing GenAI tools for Deploying and Implementing Idea.

For each criterion, a five-point rating scale can be used to evaluate the output of a GenAI tool.

- 1 = Very poor/Not demonstrated

- 2 = Below average/Needs improvement

- 3 = Average/Acceptable

- 4 = Good/Above average

- 5 = Excellent/Outstanding
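As an illustration of how ratings on this scale could be turned into a per-criterion profile, the following Python sketch validates each rating against the five scale points and averages across raters. The function name `build_profile` and the example ratings are hypothetical and are not taken from the study's data.

```python
from statistics import mean

# The five scale points listed above.
SCALE_LABELS = {
    1: "Very poor/Not demonstrated",
    2: "Below average/Needs improvement",
    3: "Average/Acceptable",
    4: "Good/Above average",
    5: "Excellent/Outstanding",
}

def build_profile(ratings_by_criterion):
    """Average each criterion over raters, rejecting ratings outside the scale."""
    profile = {}
    for criterion, ratings in ratings_by_criterion.items():
        for rating in ratings:
            if rating not in SCALE_LABELS:
                raise ValueError(f"{criterion}: rating {rating!r} is outside the 1-5 scale")
        profile[criterion] = mean(ratings)
    return profile

# Hypothetical ratings from two raters for one placeholder tool.
example = {"Clarity": [4, 5], "Analytical Depth": [3, 3], "Ease of Use": [5, 5]}
profile = build_profile(example)

for criterion, score in profile.items():
    print(f"{criterion}: {score}")
```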

5. Use Case Demonstrations and GenAI Systems Evaluation

5.1. Use Case 1: Problem Identification and Framing

We demonstrate how GenAI tools helped refine or reframe the problem for Sustainable Development Goal 2 (SDG Goal 2): Zero Hunger. There are 8 GenAIs for Problem Identification and Framing using the keyword “Research Assistance”, and Table 8 shows the Top 3 GenAIs for Problem Identification and Framing. The full list of 8 GenAIs can be found in Supplementary Material S1 (S1), and a demonstration of the Top 3 GenAIs can be found in Supplementary Material S2 (S2).

Table 8.

Top 3 GenAIs for Problem Identification and Framing based on popularity score.

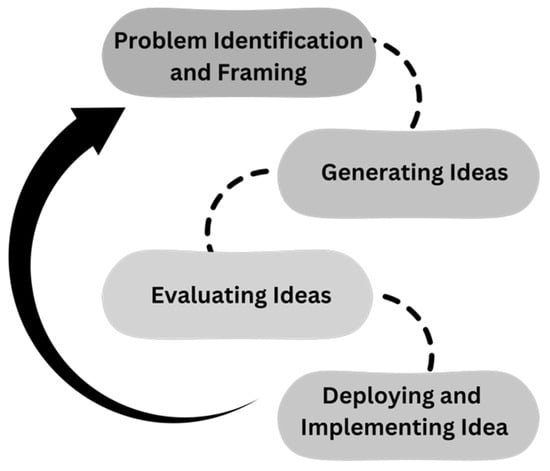

- Profiler of GenAI tools for Problem Identification and Framing step

This is a hypothetical example (Table 9) of how we score the top three GenAI tools under the five criteria (Clarity, Contextual Relevance, Analytical Depth, Innovative Angle, and Ease of Use). The two researchers (authors) evaluated the performance of GenAIs according to the criteria using a five-point Likert scale. To ensure inter-rater reliability, we calculated the Spearman–Brown coefficient based on the ratings from two independent researchers. The resulting Spearman–Brown reliability coefficient was 0.90, indicating strong agreement between evaluators and confirming the consistency of judgments across the five evaluation criteria.
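The text does not state how the coefficient was computed. One common approach for two raters, assumed here, is to compute the Pearson correlation r between the two raters' scores and step it up with the Spearman–Brown formula, r_SB = k r / (1 + (k - 1) r) with k = 2. The Python sketch below follows that assumption; the rating vectors are hypothetical and do not reproduce the reported value.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of ratings."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two rating lists of equal length (at least 2 items)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a rater gives constant scores")
    return cov / math.sqrt(var_x * var_y)

def spearman_brown(r, k=2):
    """Spearman-Brown stepped-up reliability for k raters given correlation r."""
    return k * r / (1 + (k - 1) * r)

# Hypothetical ratings by two raters for one tool across five criteria.
rater_a = [4, 5, 4, 3, 5]
rater_b = [4, 5, 3, 3, 5]

r = pearson_r(rater_a, rater_b)
reliability = spearman_brown(r)
print(f"r = {r:.2f}, Spearman-Brown = {reliability:.2f}")
```

Because the stepped-up coefficient rises with the correlation between raters, it summarizes the consistency of the pooled judgments rather than exact agreement on each score.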

Table 9.

Profiler of GenAI tools for Problem Identification and Framing step.

The radar charts (Figure 2) compare three GenAI tools (Autoresearch.pro, Inquisite, Chunk AI) used for the Problem Identification and Framing step across five evaluation criteria: Clarity, Contextual Relevance, Analytical Depth, Innovative Angle, and Ease of Use.

Figure 2.

Radar Chart Comparison of 3 GenAI Tools (Autoresearch.pro, Inquisite, Chunk AI) for Problem Identification and Framing step.

- Autoresearch.pro performs strongly on Contextual Relevance and Ease of Use (score of five), suggesting that the tool effectively addresses user needs in the context of SDG Goal 2 and is user-friendly. In addition, Clarity and Analytical Depth are well-rated, which suggests that the tool communicates insightful information clearly. The Innovative Angle still needs to improve.

- Inquisite has the highest rating for Ease of Use, which highlights the user-friendly nature of the tool. Notably, the tool scores high in Contextual Relevance, which aligns with user requirements. Clarity and Analytical Depth reflect good but moderate effectiveness. The lowest score concerns the Innovative Angle.

- Chunk AI demonstrates high performance in Clarity, Contextual Relevance, and Ease of Use, with near-top ratings. This suggests that the tool clearly communicates its output and aligns well with user contexts. However, the rating scores for Analytical Depth and Innovative Angle are lower, which indicates areas for improvement.

Overall, Autoresearch.pro, Inquisite, and Chunk AI appear useful in the Problem Identification and Framing step. Among them, Autoresearch.pro stands out for its balance of clarity, depth, and usability, Inquisite leads in ease of use, and Chunk AI excels in delivering clear and contextually aligned insights, which makes each tool valuable depending on specific user priorities.

5.2. Use Case 2: Generating Ideas

We demonstrate how GenAI tools helped to generate ideas for Sustainable Development Goal 2 (SDG Goal 2): Zero Hunger. There are 10 Free AIs for generating ideas using the keyword “Brainstorming”, and Table 10 shows the Top 3 GenAIs for Generating Ideas. The full list of 10 GenAIs can be found in Supplementary Material S1 (S1), and a demonstration of the Top 3 GenAIs can be found in Supplementary Material S2 (S2).

Table 10.

Top 3 GenAIs for Generating Ideas based on popularity scores.

- Profiler of GenAI tools for Generating ideas step

This is a hypothetical example (Table 11) of how we score the top three GenAI tools on the five criteria (Idea Fluency, Novelty (Originality), Relevance, Depth/Elaboration and Ease of Use). The two evaluators independently assessed each tool using a five-point Likert scale. The Spearman–Brown coefficient was 0.80, indicating good agreement between the two judges.

Table 11.

Profiler of GenAI tools for Generating Ideas step.

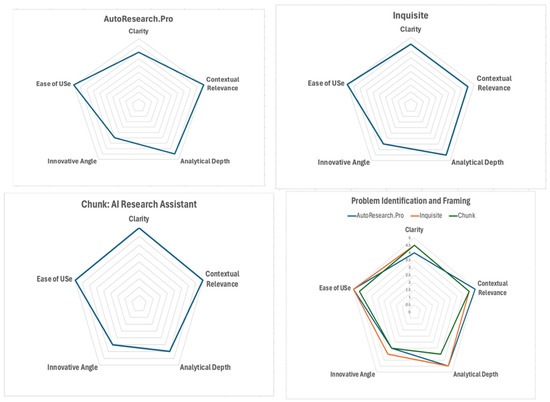

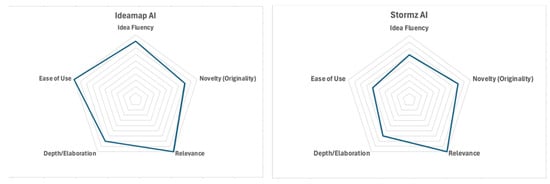

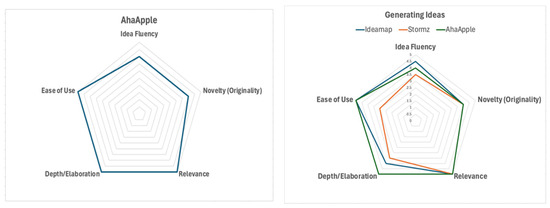

The radar charts (Figure 3) represent a comparison of 3 GenAI tools (Ideamap, Stormz, AhaApple) that are used for the Generating ideas step across 5 criteria for evaluation: Idea fluency, Novelty, Relevance, Depth/Elaboration, and Ease of Use.

Figure 3.

Radar Chart Comparison of 3 GenAI Tools (Ideamap, Stormz, AhaApple) for Generating Ideas step.

- Ideamap performs strongly on Relevance and Ease of Use, suggesting that the tool generates ideas that are highly aligned with the problem context (in this case, SDG Goal 2) and is user-friendly. In addition, Idea Fluency and Novelty are well-rated. This suggests that Ideamap can produce a good volume of ideas with a fair level of novelty. However, Depth/Elaboration is slightly lower rated. Overall, Ideamap AI stands out as a capable tool for generating relevant and accessible ideas, with room to improve the depth of the creative outputs.

- Stormz has the highest Relevance rating, which highlights its alignment with the problem context (SDG Goal 2). Idea Fluency and Novelty are rated moderately, which indicates that Stormz can generate a fair number of original ideas, though its performance on these criteria is not outstanding. In contrast, Depth/Elaboration and Ease of Use are its lowest-rated criteria.

- AhaApple demonstrates high performance across all dimensions, particularly Relevance, Depth/Elaboration, and Ease of Use. This suggests that the tool produces highly context-appropriate ideas, develops them in depth, and is user-friendly. Novelty and Idea Fluency also score high (4), which suggests that the tool produces a good quantity of ideas with a reasonable level of creativity.

Overall, AhaApple stands out as a comprehensive and well-balanced tool that combines originality/novelty, depth, and usability, making it effective for supporting idea generation.

5.3. Use Case 3: Evaluating Ideas

We demonstrate how GenAI tools helped to evaluate ideas for Sustainable Development Goal 2 (SDG Goal 2): Zero Hunger. We found 7 free AI tools for evaluating ideas using the keywords “Idea Evaluation, Idea Testing, and Idea Refinement”; Table 12 shows the Top 3 GenAIs for Evaluating Ideas. The full list of 7 GenAIs can be found in Supplementary Material S1 (S1), and a demonstration of the Top 3 GenAIs can be found in Supplementary Material S2 (S2).

Table 12.

Top 3 GenAIs for Evaluating Ideas step based on popularity scores.

- Profiler of GenAI tools for Evaluating ideas step

This is a hypothetical example (Table 13) of how we score the top three GenAI tools under the five criteria (Feasibility, Impact/Value, Risk Identification, Actionability/Next Steps, and Ease of Use). The two researchers (authors) evaluated the performance of the GenAI tools. The Spearman–Brown coefficient was 0.90, indicating strong agreement between evaluators, confirming the consistency of judgments across the five evaluation criteria.

Table 13.

Profiler of GenAI tools for Evaluating Ideas step.

The radar charts (Figure 4) represent a comparison of three GenAI tools (10X your Ideas, Inventor’s Idea Analysis and Business Plan, Idea Spark) used for the Evaluating ideas step across five evaluation criteria: Feasibility, Impact/Value, Risk Identification, Actionability/Next Steps, and Ease of Use.

Figure 4.

Radar Chart Comparison of 3 GenAI Tools (10X your Ideas, Inventor’s Idea Analysis and Business Plan, Idea Spark) for Evaluating Ideas step.

- 10X Your Ideas and Inventor’s Idea Analysis and Business Plan show nearly identical scores, differing only in Risk Identification. They demonstrate steady capabilities in Feasibility (3.5), Impact/Value (4), Risk Identification (3.5 and 4, respectively), Actionability/Next Steps (4), and Ease of Use (5).

- Idea Spark slightly outperforms the other two in Feasibility with a score of four.

All three tools receive the highest score of five in Ease of Use, which suggests a strong focus on user-friendliness. Overall, the tools demonstrate similar strengths, particularly in usability and actionable insights. Idea Spark shows marginally higher feasibility, and Inventor’s Idea Analysis and Business Plan appears slightly stronger in assessing risk. However, the output of each tool may improve with more specific prompts or questions (e.g., asking for the impact/value or risk identification of SDG Goal 2).
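To make the comparison concrete, the scores reported above for two of the tools can be laid out as a small profile. The sketch below is illustrative only: the dictionary layout and the helper names `profile_mean` and `differing_criteria` are assumptions, the unweighted mean is an assumed summary rather than a measure used in this study, and Idea Spark is omitted because the text reports only some of its scores.

```python
CRITERIA = ["Feasibility", "Impact/Value", "Risk Identification",
            "Actionability/Next Steps", "Ease of Use"]

# Scores as reported in the text for two of the three tools,
# listed in the same order as CRITERIA.
profiles = {
    "10X Your Ideas": [3.5, 4, 3.5, 4, 5],
    "Inventor's Idea Analysis and Business Plan": [3.5, 4, 4, 4, 5],
}

def profile_mean(scores):
    """Unweighted mean across the five criteria (an assumed summary)."""
    return sum(scores) / len(scores)

def differing_criteria(a, b):
    """Names of the criteria on which two profiles differ."""
    return [c for c, x, y in zip(CRITERIA, a, b) if x != y]

for tool, scores in profiles.items():
    print(f"{tool}: mean = {profile_mean(scores):.2f}")

first, second = profiles.values()
print("Profiles differ on:", differing_criteria(first, second))
```

A single mean hides the shape of the profile, which is why a per-criterion view such as a radar chart remains useful alongside any summary number.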

5.4. Use Case 4: Deploying and Implementing Ideas

We demonstrate how GenAI tools helped to deploy and implement ideas for Sustainable Development Goal 2 (SDG Goal 2): Zero Hunger. We found 4 GenAIs for Deploying and Implementing Ideas using the keyword “prototyping”; Table 14 shows the Top 3 GenAIs for Deploying and Implementing Ideas. The full list of GenAIs can be found in Supplementary Material S1 (S1), and a demonstration of the Top 3 GenAIs can be found in Supplementary Material S2 (S2).

Table 14.

Top 3 GenAIs for Deploying and Implementing Idea step based on popularity scores.

- Profiler of GenAI tools for Deploying and Implementing idea step

This is a hypothetical example (Table 15) of how we score the top three GenAI tools under the five criteria (Feasibility, Impact/Value, Risk Identification, Actionability/Next Steps, and Ease of Use). The two researchers (authors) evaluated the performance of the GenAI tools. The Spearman–Brown coefficient was 1.0, indicating perfect agreement between evaluators.

Table 15.

Profiler of GenAI tools for Deploying and Implementing Idea step.

In this step, the two researchers evaluated three GenAI tools based on five criteria. Although ratings can be given, for some criteria (e.g., Security and Privacy, Reliability and Scalability), the tools Lovable and Prototype App Generator did not generate much output relevant to these issues. It appears that the AI tools evaluated, which are the most popular, are not well aligned with what we want AI tools to do in the last step.

6. Discussion

Key Themes Emerging from the Use Cases

Effective GenAI tools in early-stage creative thinking: Previous research generally identified GenAI as broadly supportive of creative tasks but did not clearly state which specific stages of the creative process benefit most (Gindert and Müller 2024; Wan et al. 2024). Our findings suggest that publicly available GenAI tools may be particularly useful for structuring early-stage creative thinking, such as during problem identification, idea generation, and idea evaluation. In these phases, GenAI tools such as Inquisite assist by reframing problems from various angles, and Ideamap AI assists by rapidly producing diverse and novel ideas. Text-based GenAI tools like 10X Your Ideas, Inventor’s Idea Analysis and Business Plan, and Idea Spark (on top of ChatGPT) support preliminary assessment by offering a quick comparison and evaluation framework. However, existing GenAI tools seem less effective in later stages, such as deploying and implementing ideas, where contextual complexity, practical constraints, technical integration, and real-world testing require human expertise, deep domain knowledge, and cross-functional collaboration. This highlights a key strength of current GenAI tools: fostering the cognitive and conceptual work at the front end of the creative process rather than executing complex, real-world implementation.

GenAI-Driven Ideation Still Needs Human Oversight: Although specific GenAI systems for the Generating Ideas step can quickly generate a wide array of novel ideas, and sometimes push creativity in entirely new directions, they are not infallible. Earlier studies (e.g., Urban et al. 2024; Duan et al. 2025) have shown the importance of human oversight. Urban et al. (2024) studied how students co-creating with ChatGPT can improve creative problem-solving performance. They referred to Hybrid Human-AI Regulation theory (Molenaar 2022), which emphasizes human metacognitive regulation. Similarly, Duan et al. (2025) investigated the issue of narrow creativity in both humans and GenAI through the Circles Exercise. Their work identifies key challenges and opportunities for advancing GenAI-driven creativity support tools. They also argue that humans should oversee, evaluate, and guide the GenAI creative process. However, these studies did not investigate precisely how human oversight improves GenAI-generated outcomes. In contrast, our use cases provide a more detailed view by suggesting that human domain expertise is essential for filtering, refining, and contextualizing the AI’s output. This human oversight can keep biased, impractical, and ethically problematic suggestions out of the final outputs/products. Thus, our findings extend and critically engage with the earlier studies by showing that the manner and depth of human oversight significantly impact the effectiveness of Human-AI co-creation.

AI as a Helper is Efficient But Not Autonomous: As the use cases demonstrate, rapid prototyping, quick information summaries, and automated draft plans all shorten creativity and innovation process cycles. However, no use case suggests that GenAI can be entirely autonomous without human validation, which leads to our proposition focused on “AI as a Helper”. The “Human-in-the-loop” (Munro 2021) remains necessary for quality control, ethical governance, and final decision-making. It is worth noting that the use cases examined show that no single GenAI system offers the complete set of tools needed to facilitate the entire creative process. Thus, the human operator retains a key role in orchestrating the choice of specific tools and their sequential use in creative thinking.

Integration with Existing GenAI Tools and Processes: As the use cases demonstrate, another important theme is ease of integration. Individuals and teams benefit most when GenAI systems are compatible with and embedded in collaborative systems. This seamless integration simplifies workflows and maximizes the value AI can provide. The features that allow certain AI systems to integrate easily can be studied and promoted in forthcoming tools.

Iterative Prompting and Feedback Loops: Previous studies (e.g., Duan et al. 2025) discussed iterative prompting techniques in isolation and did not examine iteration within the human-AI feedback loop. Our analysis suggests that GenAI tools may be most effective when used iteratively. Creative teams often refine and fine-tune prompts and adjust the inputs or parameters to obtain the style, depth, or format of output they need. Thus, over multiple iterations, the synergy between “Human expertise” and “Machine intelligence” is expected to yield more sophisticated and context-appropriate solutions. Further research is needed to assess the additional value of these recursive loops in the prompting process. For example, to what extent does recursive feedback from humans in the loop lead to actual human-AI synergy, and is the expected improvement in output progressive, or is it better described as an abrupt boost in performance?
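The iterative use described above can be pictured as a simple loop in which a human reviews each output and either accepts it or supplies feedback that is folded into the next prompt. The following is a minimal sketch under stated assumptions: `generate` stands in for any GenAI tool call and `review` for the human reviewer; both are hypothetical placeholders, not the API of any tool examined in this study, and the toy stand-ins exist only so the sketch runs on its own.

```python
from typing import Callable, Optional, Tuple

def refine_with_human_feedback(
    prompt: str,
    generate: Callable[[str], str],          # hypothetical stand-in for a GenAI tool
    review: Callable[[str], Optional[str]],  # human reviewer: None = accept, str = feedback
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Iterate prompt -> output -> human feedback until accepted or rounds run out."""
    output = generate(prompt)
    for round_no in range(1, max_rounds + 1):
        feedback = review(output)
        if feedback is None:  # the human accepts the current output
            return output, round_no
        # Fold the human feedback into the next prompt and regenerate.
        prompt = f"{prompt}\nReviewer feedback: {feedback}"
        output = generate(prompt)
    # Rounds exhausted: the last output has not been accepted by the reviewer.
    return output, max_rounds

# Toy stand-ins (hypothetical) so the sketch runs without any external service.
def fake_generate(prompt: str) -> str:
    rounds_seen = prompt.count("Reviewer feedback")
    return f"draft based on {rounds_seen} rounds of feedback"

def fake_review(output: str) -> Optional[str]:
    return None if "2 rounds" in output else "add more local context"

result, rounds = refine_with_human_feedback(
    "Ideas for SDG Goal 2: Zero Hunger", fake_generate, fake_review
)
print(rounds, result)
```

Logging the output of each round would be one way to study whether improvement across iterations is gradual or abrupt, the open question raised above.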

7. Implications and Limitations

When considering GenAI adoption within creative workflows, teams or organizations should start by identifying clear use cases aligned with distinct stages of the creative process, such as problem identification, ideation, evaluation, or deployment and implementation. Teams should then roll out small pilots or proofs of concept to refine their processes. Next, selecting appropriate GenAI tools becomes crucial; it requires a careful comparison of features and an evaluation of the GenAI tools suited to each step of the creative process (we proposed evaluation criteria for GenAI tools for the four common steps of the creative process and gave examples of how to score and profile them). Notably, human-in-the-loop protocols should be established in which regular checkpoints allow human reviewers to validate AI outputs and provide guidance. Through continuous monitoring and evaluation, organizations and teams can track both quantitative and qualitative metrics and apply lessons learned to update their AI models and refine collaborative processes. Finally, promoting cross-functional collaboration will ensure that AI initiatives align with organizational values or team goals and foster a collaborative culture (e.g., open feedback and shared learning).

When introducing GenAI in an organization, training and skill development for collaboration with GenAI are very important. In general terms, it is widely proposed that teams can benefit from practical workshops on topics such as prompt engineering, AI-tool customization, and data governance, which support new adopters in engaging with AI and reduce overreliance on treating what AI produces as an end product. Ethical usage and responsible innovation should include regular audits to detect and mitigate biases and to check compliance with privacy requirements such as GDPR. Establishing collaboration strategies with GenAI that position it as a Helper can contribute to alignment with ethical standards, organizational vision and objectives.

The findings from the current study illustrate how different GenAI tools perform in the four proposed stages of the creative process. By proposing AI as a supportive yet influential partner, these findings expand existing models of creativity to include the first mode of human-AI collaboration (AI as a Helper), where the outputs require human oversight and expertise. This contributes to the evolving literature on human-AI collaboration dynamics, notably by suggesting that human-led oversight and AI-driven ideation can coexist.

Several limitations remain for future studies to address. First, there is a need for deeper exploration of human-AI collaboration modes; specifically, AI can take on more roles as a partner or an autonomous agent (i.e., moving from this study’s “AI as a Helper” to “AI as a Partner” or even “Creator”). Such inquiries could examine how and when AI can responsibly adopt increased agency without decreasing human ownership. Second, the development of advanced evaluation criteria and metrics is needed. Existing measures, such as the examples proposed in this study, may not fully capture the complexity of AI-assisted creativity, particularly in specialized domains that require attention to cultural sensitivity, ethical considerations, or long-term user engagement. Last, longitudinal and comparative research across various domains could illuminate best practices for sustaining ethical and high-impact human-AI collaboration over time. By tackling these limitations, future research can refine theoretical models, inform best practices, and guide policy decisions in the AI-driven creativity era.

8. Conclusions

In this study, the proposed framework demonstrates how Generative AI (GenAI) tools can be methodically integrated into each common step of the creative process: (1) Problem Identification and Framing, (2) Generating Ideas, (3) Evaluating Ideas, and (4) Deploying and Implementing Ideas. Our proposition, “AI as a Helper”, leverages AI’s capabilities for data synthesis, novel idea generation, critique, and prototyping. By mapping GenAI tools to specific steps of the creative process and using AI features such as prompt-based text generation and image synthesis, this framework reduces ambiguity and shows when and how AI can add value. This approach ensures that human oversight remains central (human-centered), guiding AI outputs toward contextual awareness, ethical considerations, and high-quality solutions.

The central focus of this study is “AI as a Helper”, which places human expertise at the forefront, with AI agents supporting rather than replacing human creativity. AI acts as a supportive helper, while decision-making remains in human hands. This reduces the risks of bias and overreliance on AI. This study’s insights highlight the complementary role of AI in modern creative workflows (creative process), enabling teams to generate, refine, and implement their innovative ideas more efficiently.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/jintelligence13050057/s1.

Author Contributions

Conceptualization, S.C. and T.L.; methodology, S.C.; software, S.C.; validation, S.C. and T.L.; formal analysis, S.C.; investigation, S.C. and T.L.; resources, T.L.; data curation, S.C. and T.L.; writing—original draft preparation, S.C.; writing—review and editing, S.C. and T.L.; visualization, S.C.; supervision, T.L.; project administration, S.C. and T.L.; funding acquisition, T.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work has received support under the program “Investissement d’Avenir” launched by the French Government and implemented by ANR, with the reference “ANR-18-IdEx-0001” as part of its program “Emergence”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Amabile, Teresa M. 1983. The Social Psychology of Creativity: A Componential Conceptualization. Journal of Personality and Social Psychology 45: 357. [Google Scholar] [CrossRef]

- Amabile, Teresa M. 1988. A Model of Creativity and Innovation in Organizations. Research in Organizational Behavior 10: 123–67. [Google Scholar]

- Anderson, Ross. 2020. Security Engineering: A Guide to Building Dependable Distributed Systems, 3rd ed. Indianapolis: John Wiley & Sons. [Google Scholar]

- As, Imdat, and Prithwish Basu, eds. 2021. The Routledge Companion to Artificial Intelligence in Architecture. Abington: Routledge Companions. New York: Routledge. [Google Scholar]

- Bonnardel, Nathalie, Alicja Wojtczuk, Pierre-Yves Gilles, and Sylvain Mazon. 2018. The creative process in design. The Creative Process: Perspectives from Multiple Domains, 229–54. [Google Scholar]

- Botella, Marion, Vlad Glaveanu, Franck Zenasni, Martin Storme, Nils Myszkowski, Marion Wolff, and Todd Lubart. 2013. How Artists Create: Creative Process and Multivariate Factors. Learning and Individual Differences 26: 161–70. [Google Scholar] [CrossRef]

- Botella-Nicolás, A. M., and M. D. L. Á. Peiró-Esteve. 2018. A study of auditory discrimination in early childhood education in Valencia. Magis 10: 13–34. [Google Scholar] [CrossRef]

- Brown, Tim. 2008. Design Thinking. Harvard Business Review. Available online: https://hbr.org/2008/06/design-thinking (accessed on 30 January 2025).

- Carson, David K. 1999. Counseling. In Encyclopedia of Creativity. New York: Academic Press, vol. 1, pp. 395–402. [Google Scholar]

- Chandrasekera, Tilanka, Zahrasadat Hosseini, and Ubhaya Perera. 2024. Can Artificial Intelligence Support Creativity in Early Design Processes? International Journal of Architectural Computing 23: 14780771241254637. [Google Scholar] [CrossRef]

- Chompunuch, Sudapa. 2023. Exploring the Concept and Practices of Team Creativity through a Critical Incidents Approach. Universite Grenoble Alpes. Available online: https://theses.hal.science/tel-04660142/ (accessed on 25 January 2025).

- Clements, Paul, Rick Kazman, and Mark Klein. 2012. Evaluating Software Architectures: Methods and Case Studies. 10. print. SEI Series in Software Engineering; Boston: Addison-Wesley. [Google Scholar]

- Colton, Simon, and Geraint A Wiggins. 2012. Computational Creativity: The Final Frontier? In Frontiers in Artificial Intelligence and Applications. Amsterdam: IOS Press, vol. 242, pp. 21–26. [Google Scholar]

- Cooper, Robert G. 1990. Stage-Gate Systems: A New Tool for Managing New Products. Business Horizons 33: 44–54. [Google Scholar] [CrossRef]

- Cropley, Arthur. 2006. In Praise of Convergent Thinking. Creativity Research Journal 18: 391–404. [Google Scholar] [CrossRef]

- Cropley, David H. 2015. Promoting Creativity and Innovation in Engineering Education. Psychology of Aesthetics, Creativity, and the Arts 9: 161–71. [Google Scholar] [CrossRef]

- Crowston, Kevin, and James Howison. 2005. The Social Structure of Free and Open Source Software Development. First Monday, February 7. [Google Scholar] [CrossRef]

- Davidson, Janet E. 2003. Insights about Insightful Problem Solving. In The Psychology of Problem Solving, 1st ed. Edited by Janet E. Davidson and Robert J. Sternberg. Cambridge: Cambridge University Press, pp. 149–75. [Google Scholar] [CrossRef]

- Davies, Mark A. P. 1994. A Multicriteria Decision Model Application for Managing Group Decisions. Journal of the Operational Research Society 45: 47–58. [Google Scholar] [CrossRef]

- Davis, Nicholas, Chih-Pin Hsiao, Yanna Popova, and Brian Magerko. 2015. An Enactive Model of Creativity for Computational Collaboration and Co-Creation. In Creativity in the Digital Age. Edited by Nelson Zagalo and Pedro Branco. Springer Series on Cultural Computing. London: Springer, pp. 109–33. [Google Scholar] [CrossRef]

- Doshi, Anil R., and Oliver P. Hauser. 2024. Generative AI Enhances Individual Creativity but Reduces the Collective Diversity of Novel Content. Science Advances 10: eadn5290. [Google Scholar] [CrossRef] [PubMed]

- Doyle, Charlotte L. 1998. The Writer Tells: The Creative Process in the Writing of Literary Fiction. Creativity Research Journal 11: 29–37. [Google Scholar] [CrossRef]

- Duan, Runlin, Shao-Kang Hsia, Yuzhao Chen, Yichen Hu, Ming Yin, and Karthik Ramani. 2025. Investigating Creativity in Humans and Generative AI Through Circles Exercises. arXiv arXiv:2502.07292. [Google Scholar]

- Egon, Kaledio, Russell Eugene, and Julia Rosinski. 2023. AI in Art and Creativity: Exploring the Boundaries of Human-Machine Collaboration. Charlottesville: Open Science Framework. [Google Scholar] [CrossRef]

- Getzels, Jacob W., and Mihaly Csikszentmihalyi. 1976. Concern for Discovery in the Creative Process. In The Creativity Question. Durham: Duke University Press, pp. 161–65. [Google Scholar]

- Gindert, Michael, and Marvin Lutz Müller. 2024. The Impact of Generative Artificial Intelligence on Ideation and the performance of Innovation Teams (Preprint). arXiv arXiv:2410.18357. [Google Scholar]

- Google Cloud. 2023. What Is Generative AI and What Are Its Applications? Available online: https://cloud.google.com/use-cases/generative-ai (accessed on 30 January 2025).

- Guilford, J. P. 1967. Creativity: Yesterday, Today and Tomorrow. The Journal of Creative Behavior 1: 3–14. [Google Scholar] [CrossRef]

- Hargadon, Andrew B., and Beth A. Bechky. 2006. When Collections of Creatives Become Creative Collectives: A Field Study of Problem Solving at Work. Organization Science 17: 484–500. [Google Scholar] [CrossRef]

- Herman, Anne, and Roni Reiter-Palmon. 2011. The Effect of Regulatory Focus on Idea Generation and Idea Evaluation. Psychology of Aesthetics, Creativity, and the Arts 5: 13–20. [Google Scholar] [CrossRef]

- Hirawata, So, and Noriko Otani. 2024. Interactive Melody Generation System for Enhancing the Creativity of Musicians. arXiv arXiv:2403.03395. [Google Scholar]

- Karimi, Pegah, Kazjon Grace, Mary Lou Maher, and Nicholas Davis. 2018. Evaluating Creativity in Computational Co-Creative Systems. arXiv arXiv:1807.09886. [Google Scholar]

- Klein, Gary, Brian Moon, and Robert R. Hoffman. 2006. Making Sense of Sensemaking 1: Alternative Perspectives. IEEE Intelligent Systems 21: 70–73. [Google Scholar] [CrossRef]

- Kotter, John P. 1996. Leading Change. Boston: Harvard Business School Press. [Google Scholar]

- Leone, Salvatore, Payge Japp, and Roni Reiter-Palmon. 2023. The Emergence of Problem Construction at the Team-Level. Small Group Research 54: 639–70. [Google Scholar] [CrossRef]

- Lubart, Todd I. 2001. Models of the Creative Process: Past, Present and Future. Creativity Research Journal 13: 295–308. [Google Scholar] [CrossRef]

- Lubart, Todd. 2005. How Can Computers Be Partners in the Creative Process: Classification and Commentary on the Special Issue. International Journal of Human-Computer Studies 63: 365–69. [Google Scholar] [CrossRef]

- Lubart, Todd, Dario Esposito, Alla Gubenko, and Claude Houssemand. 2021. Creativity in Humans, Robots, Humbots. Creativity. Theories-Research-Applications 8: 23–37. [Google Scholar] [CrossRef]

- Manning, Christopher D., Prabhakar Raghavan, and Hinrich Schütze. 2008. Introduction to Information Retrieval. New York: Cambridge University Press. [Google Scholar]

- Mao, Yaoli, Janet Rafner, Yi Wang, and Jacob Sherson. 2024. HI-TAM, a Hybrid Intelligence Framework for Training and Adoption of Generative Design Assistants. Frontiers in Computer Science 6: 1460381. [Google Scholar] [CrossRef]

- McGuire, Linda. 2024. Finding Your Mathematical Roots: Inclusion and Identity Development in Mathematics. Journal of Humanistic Mathematics 14: 4–39. [Google Scholar] [CrossRef]

- Newman, Michele, Lidia Morris, and Jin Ha Lee. 2023. Human-AI Music Creation: Understanding the Perceptions and Experiences of Music Creators for Ethical and Productive Collaboration. Paper presented at 24th International Society for Music Information Retrieval Conference, Milan, Italy, November 5–9; pp. 80–88. [Google Scholar] [CrossRef]

- Molenaar, Inge. 2022. The concept of hybrid human-AI regulation: Exemplifying how to support young learners’ self-regulated learning. Computers and Education: Artificial Intelligence 3: 100070. [Google Scholar] [CrossRef]

- Montag, Tamara, Carl P. Maertz, and Markus Baer. 2012. A Critical Analysis of the Workplace Creativity Criterion Space. Journal of Management 38: 1362–86. [Google Scholar] [CrossRef]

- Moreau, Page, Emanuela Prandelli, and Martin Schreier. 2023. Generative Artificial Intelligence and Design Co-Creation in Luxury New Product Development: The Power of Discarded Ideas. SSRN Electronic Journal. [Google Scholar] [CrossRef]

- Mumford, Michael D., and Roni Reiter-Palmon. 1994. Problem Construction and Cognition: Applying Problem Representations in Ill-Defined Domains. In Problem Finding, Problem Solving, and Creativity. New York: Ablex Publishing, pp. 3–39. [Google Scholar]

- Mumford, Michael D., Jack M. Feldman, Michael B. Hein, and Dennis J. Nagao. 2001a. Tradeoffs Between Ideas and Structure: Individual Versus Group Performance in Creative Problem Solving. The Journal of Creative Behavior 35: 1–23. [Google Scholar] [CrossRef]

- Mumford, Michael D., Rosemary A. Schultz, and Judy R. Van Doorn. 2001b. Performance in Planning: Processes, Requirements, and Errors. Review of General Psychology 5: 213–40. [Google Scholar] [CrossRef]

- Munro, Rob. 2021. Human-in-the-Loop Machine Learning: Active Learning and Annotation for Human-Centered AI. Shelter Island: Manning. [Google Scholar]

- Nemiro, Jill E. 2002. The Creative Process in Virtual Teams. Creativity Research Journal 14: 69–83. [Google Scholar] [CrossRef]

- Nielsen, Jakob. 1993. Usability Engineering. Cambridge: AP Professional. [Google Scholar]

- Norman, Donald A. 1990. The Design of Everyday Things. Reprint. New York: Doubleday. [Google Scholar]

- Oh, Changhoon, Jungwoo Song, Jinhan Choi, Seonghyeon Kim, Sungwoo Lee, and Bongwon Suh. 2018. I Lead, You Help but Only with Enough Details: Understanding User Experience of Co-Creation with Artificial Intelligence. Paper presented at 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, April 21–26; pp. 1–13. [Google Scholar] [CrossRef]

- Oluwagbenro, Mogbojuri Babatunde Dr. 2024. Generative AI: Definition, Concepts, Applications, and Future Prospects. TechRxiv. Preprints. [Google Scholar] [CrossRef]

- O’Toole, Katherine, and Emőke-Ágnes Horvát. 2024. Extending human creativity with AI. Journal of Creativity 34: 100080. [Google Scholar] [CrossRef]

- Osborn, Alex F. 1953. Applied Imagination: Principles and Procedures of Creative Problem Solving. New York: Charles Scribner’s Sons. [Google Scholar]

- Osborn, Alex F. 1979. Applied Imagination: Principles and Procedures of Creative Problem-Solving, 3rd ed. New York: Scribner. [Google Scholar]

- Paulus, Paul B., and Huei-Chuan Yang. 2000. Idea Generation in Groups: A Basis for Creativity in Organizations. Organizational Behavior and Human Decision Processes 82: 76–87. [Google Scholar] [CrossRef]

- Pressman, Roger S. 2005. Software Engineering: A Practitioner’s Approach, 6th ed. McGraw-Hill Series in Computer Science; Boston: McGraw-Hill. [Google Scholar]

- Puccio, Gerard J., and Monika Modrzejewska-Świgulska. 2022. Creative problem solving: From evolutionary and everyday perspectives. In Homo Creativus. Edited by Todd Lubart, Marion Botella, Samira Bourgeois-Bougrine, Xavier Caroff, Jerome Guegan, Christophe Mouchiroud, Julien Nelson and Franck Zenasni. The 7 C’s of Human Creativity. Cham: Springer International Publishing, pp. 265–86. [Google Scholar]

- Reiter, Ehud, and Robert Dale. 1997. Building Applied Natural Language Generation Systems. Natural Language Engineering 3: 57–87. [Google Scholar] [CrossRef]

- Reiter-Palmon, Roni, and Jody J. Illies. 2004. Leadership and Creativity: Understanding Leadership from a Creative Problem-Solving Perspective. The Leadership Quarterly 15: 55–77. [Google Scholar] [CrossRef]

- Reiter-Palmon, Roni, Anne E. Herman, and Francis J. Yammarino. 2007. Creativity and Cognitive Processes: Multi-Level Linkages between Individual and Team Cognition. In Research in Multi Level Issues. Bingley: Emerald (MCB UP), vol. 7, pp. 203–67. [Google Scholar] [CrossRef]

- Rhodes, Mel. 1961. An Analysis of Creativity. Phi Delta Kappan 42: 305–10. [Google Scholar]

- Runco, Mark A., and Garrett J. Jaeger. 2012. The Standard Definition of Creativity. Creativity Research Journal 24: 92–96. [Google Scholar] [CrossRef]

- Runco, Mark, and G. Dow. 1999. Problem Finding. In Encyclopedia of Creativity. Cambridge: Academic Press, pp. 433–35. [Google Scholar]

- Runco, Mark. 2004. Personal Creativity and Culture. In Creativity. Edited by Sing Lau, Anna N. N. Hui and Grace Y. C. Ng. Singapore: World Scientific, pp. 9–21. [Google Scholar] [CrossRef]

- Saaty, Thomas L. 1980. The Analytic Hierarchy Process: Planning, Priority Setting, Resource Allocation. New York and London: McGraw-Hill International Book Co. [Google Scholar]

- Sadler-Smith, Eugene. 2015. Wallas’ Four-Stage Model of the Creative Process: More Than Meets the Eye? Creativity Research Journal 27: 342–52. [Google Scholar] [CrossRef]

- Sawyer, Robert Keith. 2012. Explaining Creativity: The Science of Human Innovation, 2nd ed. Oxford: Oxford University Press. [Google Scholar]

- Shaw, Melvin P. 1989. The Eureka Process: A Structure for the Creative Experience in Science and Engineering. Creativity Research Journal 2: 286–98. [Google Scholar] [CrossRef]

- Sinha, Sayak, Sourajit Datta, Raghvendra Kumar, Sudipta Bhattacharya, Arijit Sarkar, and Kunal Das. 2024. Exploring Creativity: The Development and Uses of Generative AI. In Advances in Computational Intelligence and Robotics. Edited by Raghvendra Kumar, Sandipan Sahu and Sudipta Bhattacharya. Hershey: IGI Global, pp. 167–98. [Google Scholar] [CrossRef]

- Sternberg, Robert J., and Todd I. Lubart. 1998. The Concept of Creativity: Prospects and Paradigms. In Handbook of Creativity, 1st ed. Edited by Robert J. Sternberg. Cambridge: Cambridge University Press, pp. 3–15. [Google Scholar] [CrossRef]

- Taggar, S. 2002. Individual creativity and group ability to utilize individual creative resources: A multilevel model. Academy of Management Journal 45: 315–30. [Google Scholar] [CrossRef]

- Tidd, Joe, and John R. Bessant. 2021. Managing Innovation: Integrating Technological, Market and Organizational Change, 7th ed. Hoboken: Wiley. [Google Scholar]

- Tigre Moura, Francisco. 2023. Artificial Intelligence, Creativity, and Intentionality: The Need for a Paradigm Shift. The Journal of Creative Behavior 57: 336–38. [Google Scholar] [CrossRef]

- Torrance, E. Paul. 1974. Retooling Education for Creative Talent: How Goes It? Gifted Child Quarterly 18: 233–39. [Google Scholar] [CrossRef]

- Ulwick, Anthony W. 2005. What Customers Want: Using Outcome-Driven Innovation to Create Breakthrough Products and Services. New York: McGraw-Hill. [Google Scholar]

- Urban, Marek, Filip Děchtěrenko, Jiří Lukavský, Veronika Hrabalová, Filip Svacha, Cyril Brom, and Kamila Urban. 2024. ChatGPT improves creative problem-solving performance in university students: An experimental study. Computers & Education 215: 105031. [Google Scholar]

- Vinchon, Florent, Todd Lubart, Sabrina Bartolotta, Valentin Gironnay, Marion Botella, Samira Bourgeois Bougrine, Jean-Marie Burkhardt, Nathalie Bonnardel, Giovanni Emanuele Corazza, Vlad Glăveanu, et al. 2023. Artificial intelligence & creativity: A manifesto for collaboration. The Journal of Creative Behavior 57: 472–84. [Google Scholar]

- Wallas, Graham. 1926. The Art of Thought. London: Jonathan Cape. [Google Scholar]

- Wan, Qian, Siying Hu, Yu Zhang, Piaohong Wang, Bo Wen, and Zhicong Lu. 2024. “It Felt Like Having a Second Mind”: Investigating Human-AI Co-creativity in Prewriting with Large Language Models. In Proceedings of the ACM on Human-Computer Interaction. New York: Association for Computing Machinery, vol. 8, pp. 1–26. [Google Scholar]

- Young, Darrell L., Perry Boyette, James Moreland, and Jason Teske. 2024. Generative AI Agile Assistant. In Disruptive Technologies in Information Sciences VIII. Edited by Bryant T. Wysocki, Misty Blowers and Ramesh Bharadwaj. National Harbor: SPIE, vol. 18. [Google Scholar] [CrossRef]

- Zhang, Mingyuan, Zhaolin Cheng, Sheung Ting Ramona Shiu, Jiacheng Liang, Cong Fang, Zhengtao Ma, Le Fang, and Stephen Jia Wang. 2023. Towards Human-Centred AI-Co-Creation: A Three-Level Framework for Effective Collaboration between Human and AI. In Computer Supported Cooperative Work and Social Computing. Minneapolis: ACM, pp. 312–16. [Google Scholar] [CrossRef]

- Zhang, Rui, Nathan J. McNeese, Guo Freeman, and Geoff Musick. 2021. ‘An Ideal Human’: Expectations of AI Teammates in Human-AI Teaming. In Proceedings of the ACM on Human-Computer Interaction. New York: Association for Computing Machinery, vol. 4, pp. 1–25. [Google Scholar] [CrossRef]

- Zhang, Xiaomeng, and Kathryn M. Bartol. 2010. Linking Empowering Leadership and Employee Creativity: The Influence of Psychological Empowerment, Intrinsic Motivation, and Creative Process Engagement. Academy of Management Journal 53: 107–28. [Google Scholar] [CrossRef]

- Zhou, Eric, and Dokyun Lee. 2024. Generative Artificial Intelligence, Human Creativity, and Art. PNAS Nexus 3: pgae052. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).