Concurrent Validity of Virtual Reality-Based Assessment of Executive Function: A Systematic Review and Meta-Analysis

Abstract

1. Introduction

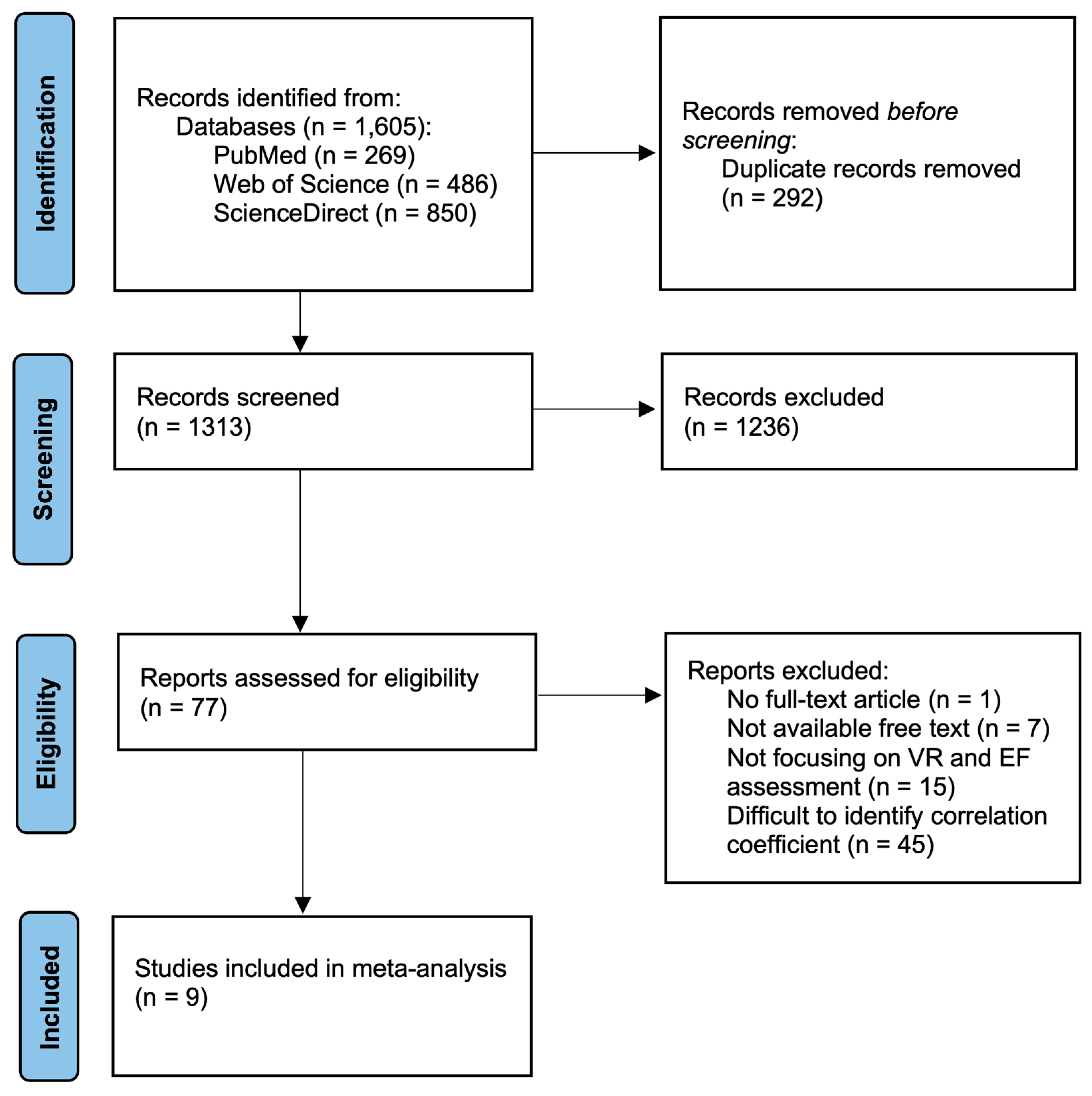

2. Methods

2.1. Literature Search

2.2. Study Eligibility

2.3. Study Screening

2.4. Data Extraction

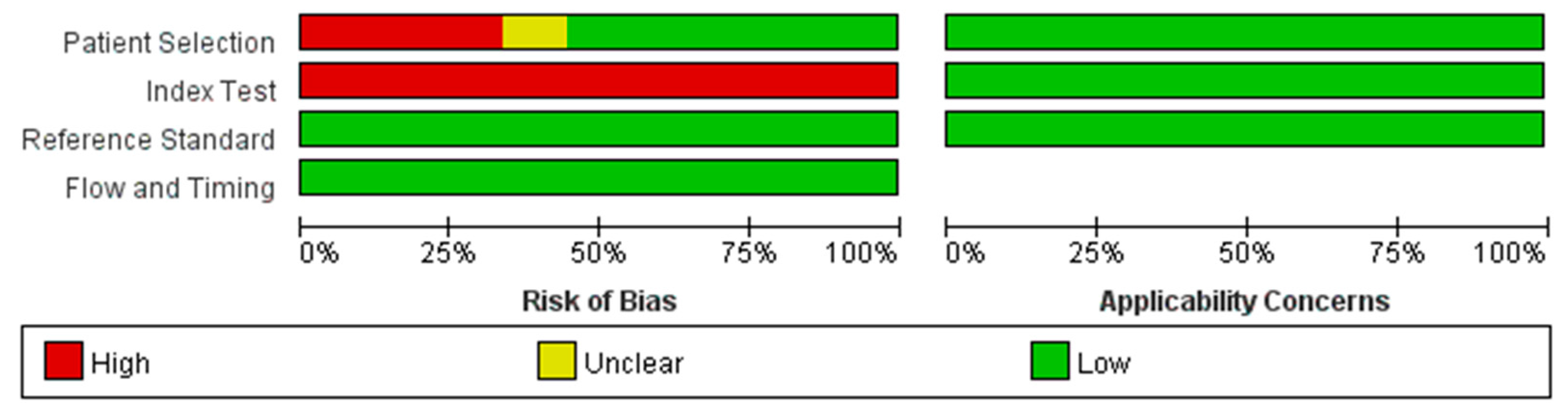

2.5. Assessment of Study Quality

2.6. Data Analysis

3. Results

3.1. Interrater Agreement

3.2. Study Selection

3.3. Study Characteristics

3.4. Study Quality

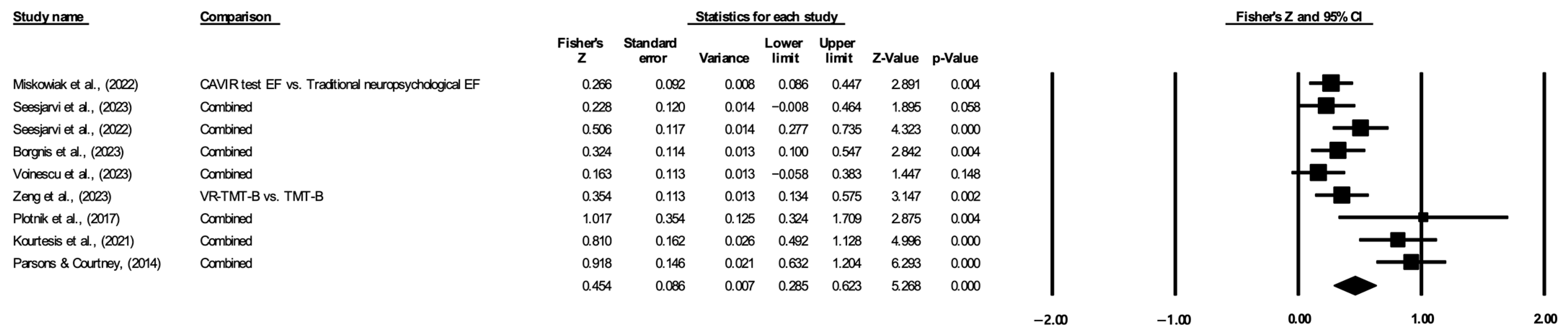

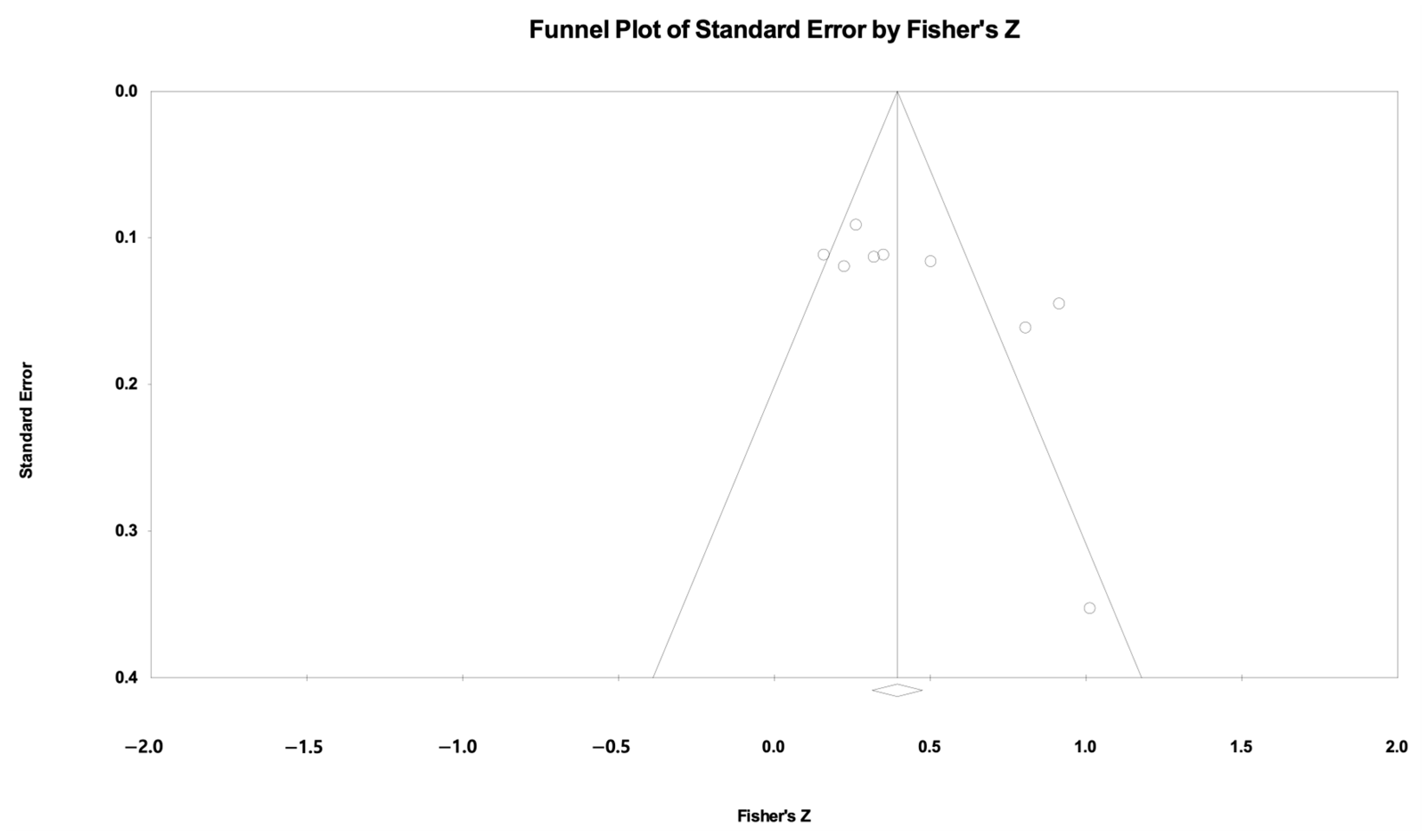

3.5. Results of the Meta-Analysis

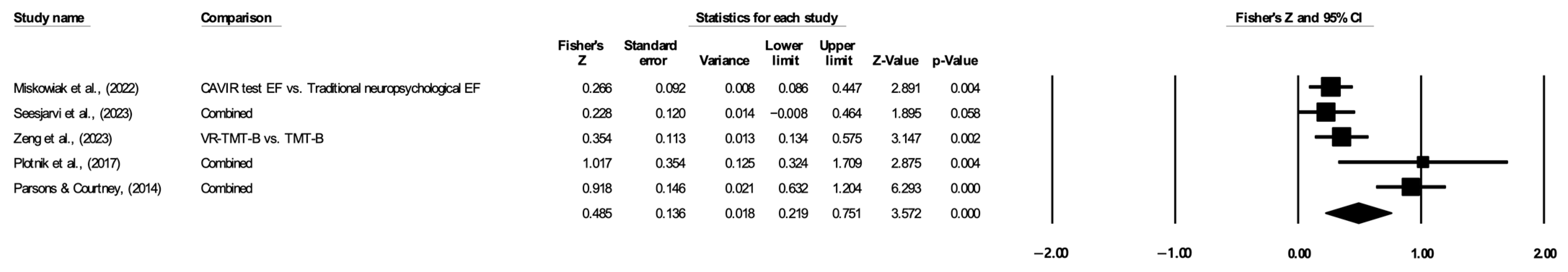

3.5.1. Overall Executive Function

3.5.2. Sensitivity Analysis

3.5.3. Attention

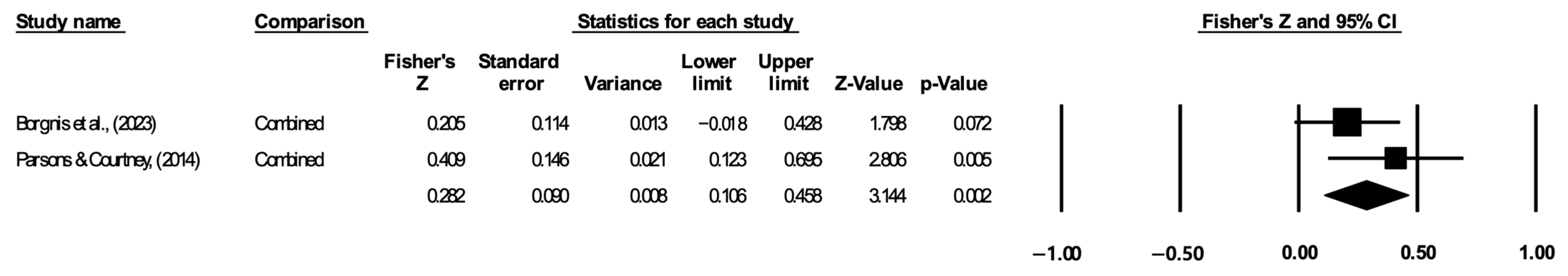

3.5.4. Inhibition

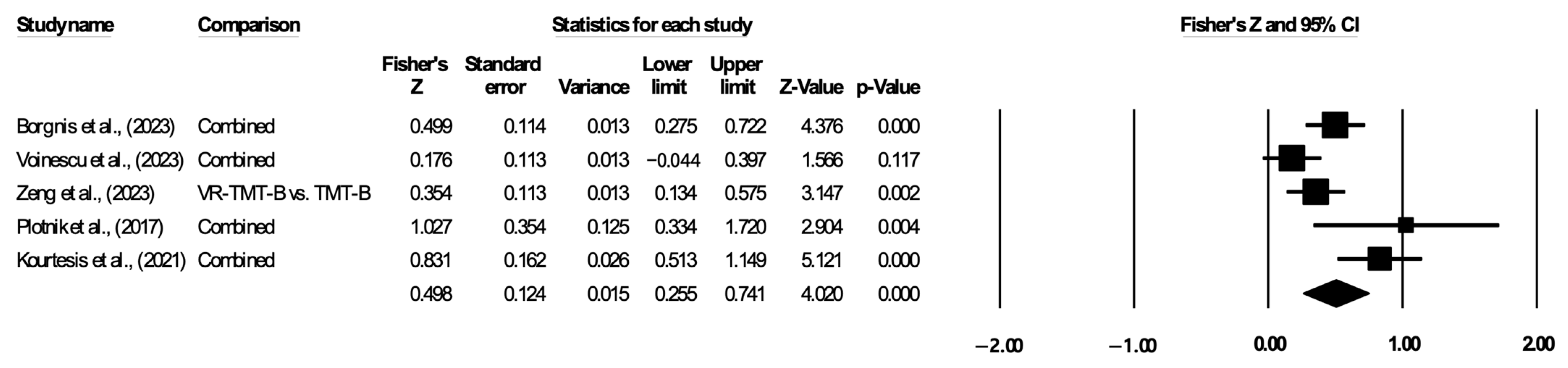

3.5.5. Cognitive Flexibility

3.6. Subgroup Analysis

3.6.1. VR-Based Assessment Properties

3.6.2. Clinical Status of the Sample

3.6.3. Age of the Sample

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Borgnis, Francesca, Francesca Baglio, Elisa Pedroli, Federica Rossetto, Lidia Uccellatore, Jorge Alexandre Gaspar Oliveira, Giuseppe Riva, and Pietro Cipresso. 2022. Available Virtual Reality-Based Tools for Executive Functions: A Systematic Review. Frontiers in Psychology 13: 833136.

- Borgnis, Francesca, Francesca Borghesi, Federica Rossetto, Elisa Pedroli, Mario Meloni, Giuseppe Riva, Francesca Baglio, and Pietro Cipresso. 2023. Psychometric validation for a brand-new tool for the assessment of executive functions using 360° technology. Scientific Reports 13: 8613.

- Cambridge Cognition. 2006. Cambridge Neuropsychological Test Automated Battery (CANTAB). Cambridge: Cambridge Cognition.

- Cortés Pascual, Alejandra, Nieves Moyano Muñoz, and Alberto Quílez Robres. 2019. The relationship between executive functions and academic performance in primary education: Review and meta-analysis. Frontiers in Psychology 10: 449759.

- Delis, Dean C., Edith Kaplan, and Joel H. Kramer. 2001. Delis-Kaplan Executive Function System (D-KEFS). San Antonio: Psychological Corporation.

- Diamond, Adele. 2013. Executive Functions. Annual Review of Psychology 64: 135–68.

- Doebel, Sabine. 2020. Rethinking Executive Function and Its Development. Perspectives on Psychological Science 15: 942–56.

- Freeman, Daniel, Sarah Reeve, Abi Robinson, Anke Ehlers, David Clark, Bernhard Spanlang, and Mel Slater. 2017. Virtual reality in the assessment, understanding, and treatment of mental health disorders. Psychological Medicine 47: 2393–400.

- Friedman, Naomi P., and Trevor W. Robbins. 2022. The role of prefrontal cortex in cognitive control and executive function. Neuropsychopharmacology 47: 72–89.

- Gioia, Gerard A., Peter K. Isquith, Steven C. Guy, and Lauren Kenworthy. 2000. TEST REVIEW Behavior Rating Inventory of Executive Function. Child Neuropsychology 6: 235–38.

- Godwin, Marshall, Andrea Pike, Cheri Bethune, Allison Kirby, and Adam Pike. 2013. Concurrent and Convergent Validity of the Simple Lifestyle Indicator Questionnaire. International Scholarly Research Notices 2013: 529645.

- Golden, C. J., and S. M. Freshwater. 1978. Stroop Color and Word Test. Chicago: Stoelting.

- Heaton, Robert K. 1981. Wisconsin Card Sorting Test Manual. Odessa: Psychological Assessment Resources.

- Ibrahim, Joseph Elias, Laura J. Anderson, Aleece MacPhail, Janaka Jonathan Lovell, Marie-Claire Davis, and Margaret Winbolt. 2017. Chronic disease self-management support for persons with dementia, in a clinical setting. Journal of Multidisciplinary Healthcare 10: 49–58.

- Jansari, Ashok S., Alex Devlin, Rob Agnew, Katarina Akesson, Lesley Murphy, and Tony Leadbetter. 2014. Ecological Assessment of Executive Functions: A New Virtual Reality Paradigm. Brain Impairment 15: 71–87.

- Kourtesis, Panagiotis, Simona Collina, Leonidas A. A. Doumas, and Sarah E. MacPherson. 2021. Validation of the Virtual Reality Everyday Assessment Lab (VR-EAL): An Immersive Virtual Reality Neuropsychological Battery with Enhanced Ecological Validity. Journal of the International Neuropsychological Society 27: 181–96.

- Lau, Karen M., Mili Parikh, Danielle J. Harvey, Chun-Jung Huang, and Sarah Tomaszewski Farias. 2015. Early Cognitively Based Functional Limitations Predict Loss of Independence in Instrumental Activities of Daily Living in Older Adults. Journal of the International Neuropsychological Society 21: 688–98.

- Mathur, Maya B., and Tyler J. VanderWeele. 2020. Sensitivity Analysis for Publication Bias in Meta-Analyses. Journal of the Royal Statistical Society Series C: Applied Statistics 69: 1091–119.

- Miskowiak, Kamilla W., Andreas E. Jespersen, Lars V. Kessing, Anne Sofie Aggestrup, Louise B. Glenthøj, Merete Nordentoft, Caroline V. Ott, and Anders Lumbye. 2022. Cognition Assessment in Virtual Reality: Validity and feasibility of a novel virtual reality test for real-life cognitive functions in mood disorders and psychosis spectrum disorders. Journal of Psychiatric Research 145: 182–89.

- Miyake, Akira, Naomi P. Friedman, Michael J. Emerson, Alexander H. Witzki, Amy Howerter, and Tor D. Wager. 2000. The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: A latent variable analysis. Cognitive Psychology 41: 49–100.

- Neguţ, Alexandra, Silviu-Andrei Matu, Florin Alin Sava, and Daniel David. 2015. Convergent validity of virtual reality neurocognitive assessment: A meta-analytic approach. Transylvanian Journal of Psychology 16: 31–55.

- Nyongesa, Moses K., Derrick Ssewanyana, Agnes M. Mutua, Esther Chongwo, Gaia Scerif, Charles R. J. C. Newton, and Amina Abubakar. 2019. Assessing Executive Function in Adolescence: A Scoping Review of Existing Measures and Their Psychometric Robustness. Frontiers in Psychology 10: 311.

- Parsons, Thomas D. 2015. Virtual Reality for Enhanced Ecological Validity and Experimental Control in the Clinical, Affective and Social Neurosciences. Frontiers in Human Neuroscience 9: 660.

- Parsons, Thomas D., and Christopher G. Courtney. 2014. An initial validation of the Virtual Reality Paced Auditory Serial Addition Test in a college sample. Journal of Neuroscience Methods 222: 15–23.

- Plotnik, Meir, Glen M. Doniger, Yotam Bahat, Amihai Gottleib, Oran Ben Gal, Evyatar Arad, Lotem Kribus-Shmiel, Shani Kimel-Naor, Gabi Zeilig, Michal Schnaider-Beeri, et al. 2017. Immersive trail making: Construct validity of an ecological neuropsychological test. Paper presented at 2017 International Conference on Virtual Rehabilitation (ICVR), Montreal, QC, Canada, June 19–22.

- Reitan, Ralph M. 1992. Trail Making Test: Manual for Administration and Scoring. Tucson: Reitan Neuropsychology Laboratory.

- Ribeiro, Nicolas, Toinon Vigier, Jieun Han, Gyu Hyun Kwon, Hojin Choi, Samuel Bulteau, and Yannick Prié. 2024. Three Virtual Reality Environments for the Assessment of Executive Functioning Using Performance Scores and Kinematics: An Embodied and Ecological Approach to Cognition. Cyberpsychology, Behavior, and Social Networking 27: 127–34.

- Seesjärvi, Erik, Jasmin Puhakka, Eeva T. Aronen, Jari Lipsanen, Minna Mannerkoski, Alexandra Hering, Sascha Zuber, Matthias Kliegel, Matti Laine, and Juha Salmi. 2022. Quantifying ADHD Symptoms in Open-Ended Everyday Life Contexts With a New Virtual Reality Task. Journal of Attention Disorders 26: 1394–411.

- Seesjärvi, Erik, Matti Laine, Kaisla Kasteenpohja, and Juha Salmi. 2023. Assessing goal-directed behavior in virtual reality with the neuropsychological task EPELI: Children prefer head-mounted display but flat screen provides a viable performance measure for remote testing. Frontiers in Virtual Reality 4: 1138240.

- Suchy, Yana, Michelle Gereau Mora, Libby A. DesRuisseaux, Madison A. Niermeyer, and Stacey Lipio Brothers. 2024. Pitfalls in research on ecological validity of novel executive function tests: A systematic review and a call to action. Psychological Assessment 36: 243–61.

- Sugarman, Heidi, Aviva Weisel-Eichler, Arie Burstin, and Riki Brown. 2011. Use of novel virtual reality system for the assessment and treatment of unilateral spatial neglect: A feasibility study. Paper presented at 2011 International Conference on Virtual Rehabilitation, Zurich, Switzerland, June 27–29.

- Voinescu, Alexandra, Karin Petrini, Danaë Stanton Fraser, Radu-Adrian Lazarovicz, Ion Papavă, Liviu Andrei Fodor, and Daniel David. 2023. The effectiveness of a virtual reality attention task to predict depression and anxiety in comparison with current clinical measures. Virtual Reality 27: 119–40.

- Whiting, Penny F., Anne W. S. Rutjes, Marie E. Westwood, Susan Mallett, Jonathan J. Deeks, Johannes B. Reitsma, Mariska M. G. Leeflang, Jonathan A. C. Sterne, and Patrick M. M. Bossuyt. 2011. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Annals of Internal Medicine 155: 529–36.

- Zelazo, Philip David, and Stephanie M. Carlson. 2023. Reconciling the context-dependency and domain-generality of executive function skills from a developmental systems perspective. Journal of Cognition and Development 24: 205–22.

- Zeng, Yingchun, Qiongyao Guan, Yan Su, Qiubo Huang, Jun Zhao, Minghui Wu, Qiaohong Guo, Qiyuan Lyu, Yiyu Zhuang, and Andy S. K. Cheng. 2023. A self-administered immersive virtual reality tool for assessing cognitive impairment in patients with cancer. Asia-Pacific Journal of Oncology Nursing 10: 100205.

| Author and Year | Mean Age (Years) | % of Male Participants | N | Clinical Status of the Sample | Characteristics of VR-Based Assessments | Outcome Measure |
|---|---|---|---|---|---|---|
| (Miskowiak et al. 2022) | 29.92 | 44.63 | 121 | Healthy and clinical (MD, PSD) | The CAVIR (Cognition Assessment in Virtual Reality): an immersive VR test of daily life cognitive functions in an interactive VR kitchen scenario. | TMT-B, CANTAB, Fluency test |
| (Seesjärvi et al. 2023) | 11 | 59.72 | 72 | Healthy children | The EPELI (Executive Performance of Everyday Living) requires participants to execute multiple tasks from memory by navigating a virtual home environment and interacting with pertinent target objects, while simultaneously keeping track of time and disregarding irrelevant distracting objects and events. | BRIEF GEC |
| (Seesjärvi et al. 2022) | ADHD: 10 years 4 months; Healthy: 10 years 9 months | 82.89 | 76 | Healthy and clinical (ADHD) | The EPELI (Executive Performance of Everyday Living) requires participants to execute multiple tasks from memory by navigating a virtual home environment and interacting with pertinent target objects, while simultaneously keeping track of time and disregarding irrelevant distracting objects and events. | BRIEF |
| (Borgnis et al. 2023) | 66.94 | 41.25 | 80 | Healthy and clinical (PD) | The EXIT 360° (EXecutive-functions Innovative Tool 360°): an innovative 360° tool designed for an ecologically valid and multi-component evaluation of executive functioning. Participants are immersed in 360° household environments, where they must complete seven everyday subtasks to simultaneously and rapidly assess various aspects of executive functioning. | PMR, AM, FAB, VF, DS, TMT-A, TMT-B, TMT-BA, ST-E, ST-T |
| (Voinescu et al. 2023) | 32.4 | 40.2 | 82 | Healthy and clinical (depression, anxiety) | The Nesplora Aquarium evaluates attention and executive functions in adults, based on the Continuous Performance Test (CPT) paradigm. In this VR environment, participants are instructed to focus on the main tank in the aquarium while CPTs are integrated into the experience. | TMT-A, TMT-B |
| (Zeng et al. 2023) | 47.74 | 49.7 | 82 | Clinical (cancer) | The VR cognitive assessment: a virtual outdoor scenario was developed to assess executive function. This scenario includes the Trail Making Test-B (TMT-B) for assessing executive functioning. | TMT-B |
| (Plotnik et al. 2017) | 37.1 | 81.82 | 11 | Healthy | The VR CTT (Virtual Reality Color Trails Test): the participant’s performance, traditionally recorded with pen and paper, is now captured using a marker attached to the tip of a short pointing stick held by the participant. | TMT-A, TMT-B, TMT-BA |
| (Kourtesis et al. 2021) | 29.15 | 48.78 | 41 | Healthy | The VR-EAL (Virtual Reality Everyday Assessment Lab) evaluates executive functioning, including planning and multitasking, within a realistic immersive VR scenario lasting around 60 min. Planning ability is assessed by having participants draw their route around the city on a 3D interactive board. Multitasking is examined through a cooking task, where participants prepare and serve breakfast and place a chocolate pie in the oven. | BADS-Key Search, CTT-1, CTT-2 |
| (Parsons and Courtney 2014) | 25.58 | 75 | 50 | Healthy | The VR-PASAT (Virtual Reality-Paced Auditory Serial Addition Test) concentrates on the detailed analysis of neurocognitive testing within a virtual gaming environment to evaluate attention processing and executive functioning while navigating a virtual city. | PASAT-200, D-KEFS Color-Word Interference Test (Inhibition, Inhibition/Switching) |

| Executive Function Sub-Elements | Outcome Measures |
|---|---|
| Attention | TMT-A, CTT-1, AM, PASAT-200 |
| Inhibition | ST-E, ST-T, D-KEFS Color-Word Interference Test (Inhibition, Inhibition/Switching) |
| Cognitive flexibility | TMT-B, TMT-BA, CTT-2 |
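
The grouping of outcome measures by sub-element in the table above can be written as a simple lookup. The sketch below is only illustrative and is not part of the authors' analysis: the names `SUB_ELEMENT_MEASURES`, `sub_element_for`, and `group_measures` are hypothetical, and measures that the table does not list (for example BRIEF or CANTAB) are assumed to fall under no sub-element and are returned under `None`.

```python
# Outcome measures per executive function sub-element, transcribed from the
# table above. All names in this sketch are hypothetical.
SUB_ELEMENT_MEASURES = {
    "Attention": ["TMT-A", "CTT-1", "AM", "PASAT-200"],
    "Inhibition": [
        "ST-E",
        "ST-T",
        "D-KEFS Color-Word Interference Test (Inhibition, Inhibition/Switching)",
    ],
    "Cognitive flexibility": ["TMT-B", "TMT-BA", "CTT-2"],
}


def sub_element_for(measure):
    """Return the sub-element a measure is listed under, or None if unlisted."""
    for sub_element, measures in SUB_ELEMENT_MEASURES.items():
        if measure in measures:
            return sub_element
    return None


def group_measures(measures):
    """Group a study's outcome measures by sub-element (None = not in the table)."""
    grouped = {}
    for measure in measures:
        grouped.setdefault(sub_element_for(measure), []).append(measure)
    return grouped


# Example: the outcome measures listed for Voinescu et al. (2023).
voinescu_groups = group_measures(["TMT-A", "TMT-B"])
```

For the two measures in the example, `voinescu_groups` places TMT-A under attention and TMT-B under cognitive flexibility, matching the table.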

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, S.-A.; Kim, J.-Y.; Park, J.-H. Concurrent Validity of Virtual Reality-Based Assessment of Executive Function: A Systematic Review and Meta-Analysis. J. Intell. 2024, 12, 108. https://doi.org/10.3390/jintelligence12110108
