Computer-Based Development of Reading Skills to Reduce Dropout in Uncertain Times

Abstract

1. Introduction

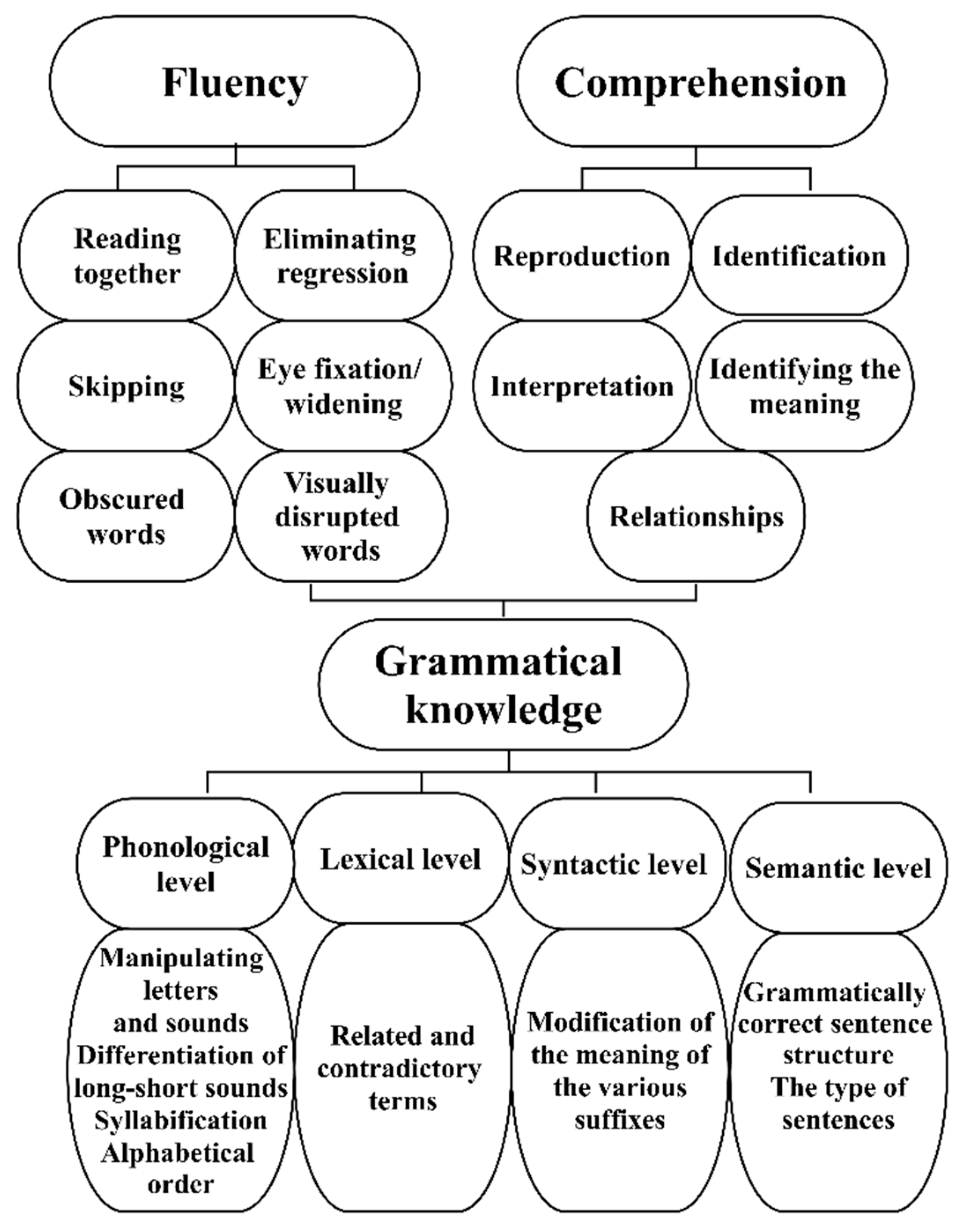

1.1. Cognitive and Linguistic Components of Reading

1.2. Computer-Based Development of Reading Skills

1.3. Aims and Research Questions

- RQ1. How effectively can a complex online reading intervention program be implemented at the ages of 9–11?

- RQ2. Which starting level of reading skills is most sensitive to the complex online training program, that is, at which level can we expect the largest effect?

- RQ3. Based on students’ socioeconomic background, which group of students benefits the most from the online reading program?

- RQ4. How generalizable are the results? Are the effects confirmed by latent-level analyses using a no-change model in the control group and a latent change model in the intervention group?

2. Materials and Methods

2.1. Participants

2.2. Instrument

2.2.1. Content and Structure of the Training Program

2.2.2. Procedure

3. Results

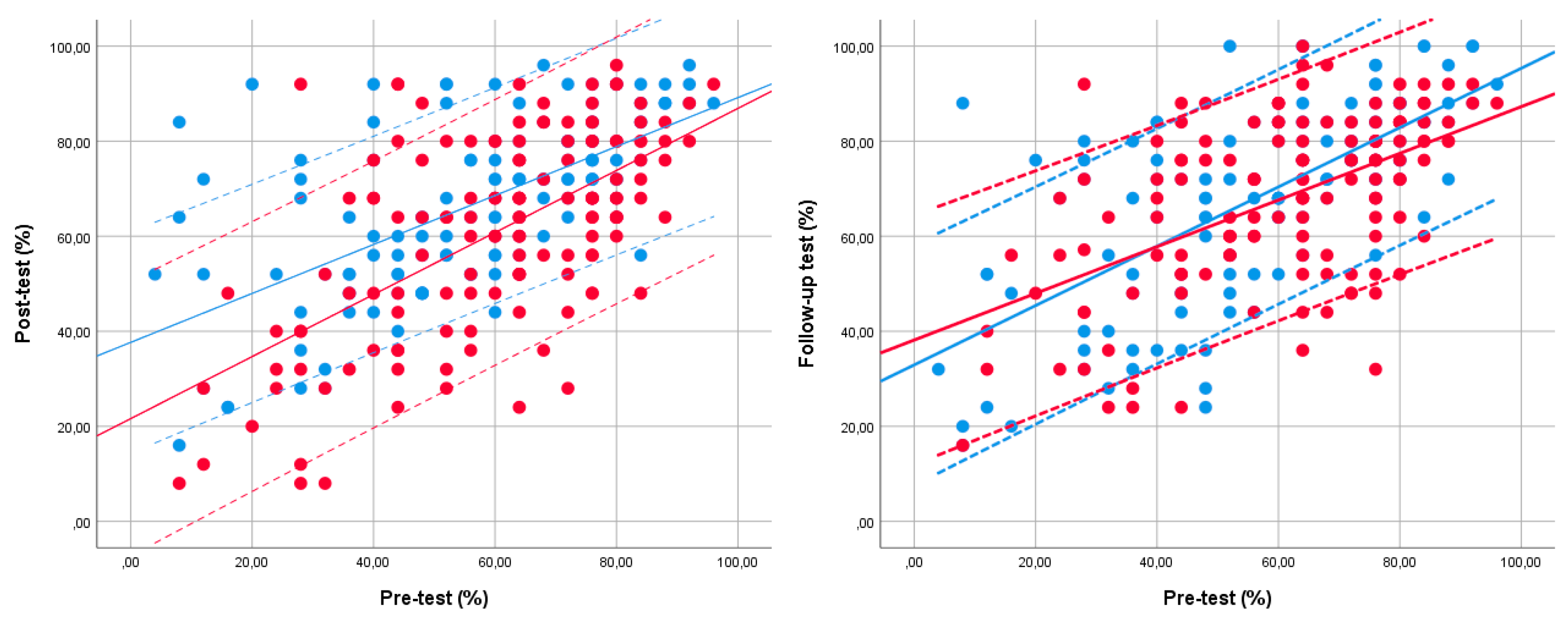

3.1. RQ1. Changes in Reading Performance Compared to Students’ Original Reading Skills Level

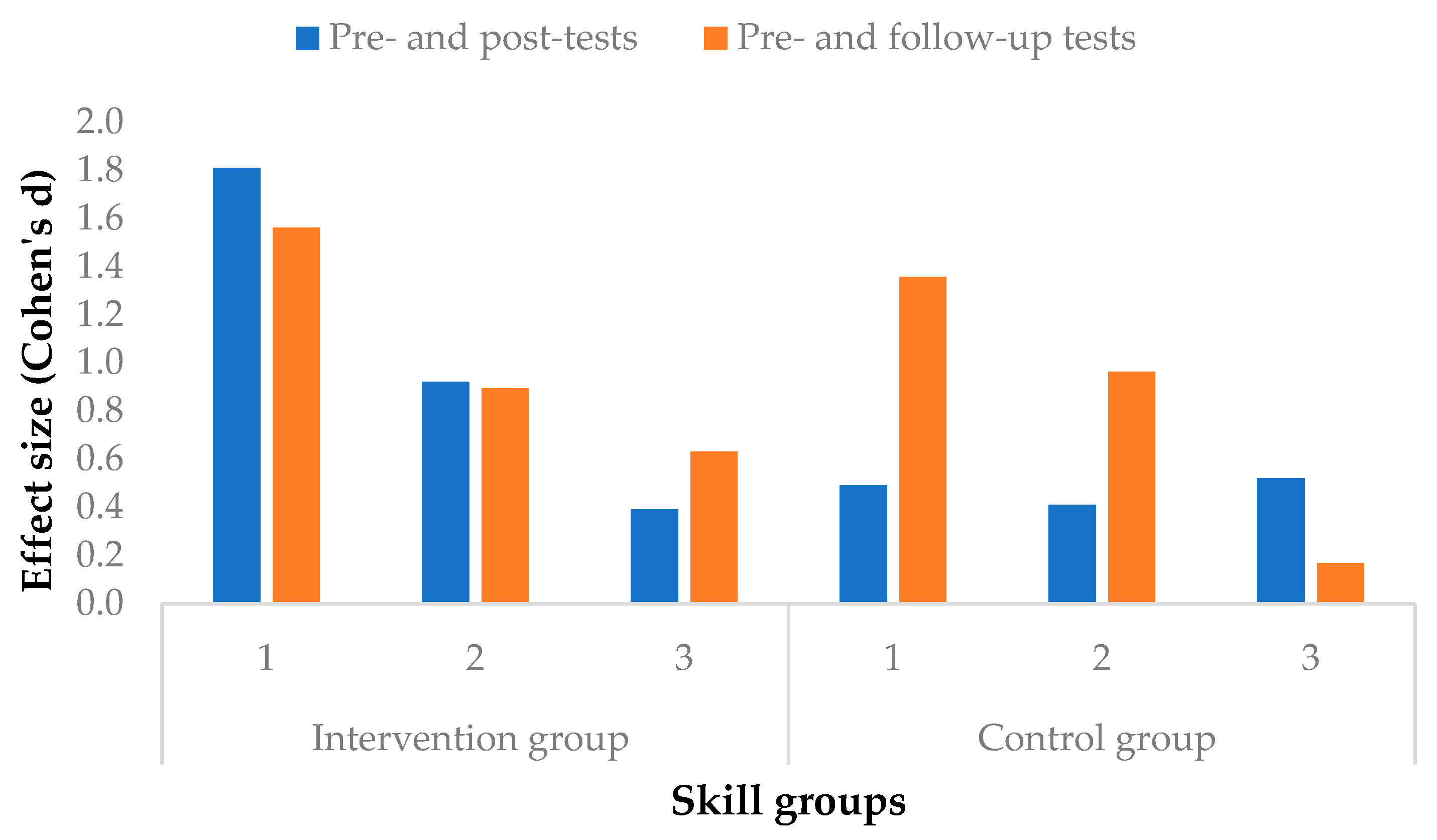

3.2. RQ2. Expanding the Impact of the Intervention According to the Initial Skill Level of the Students

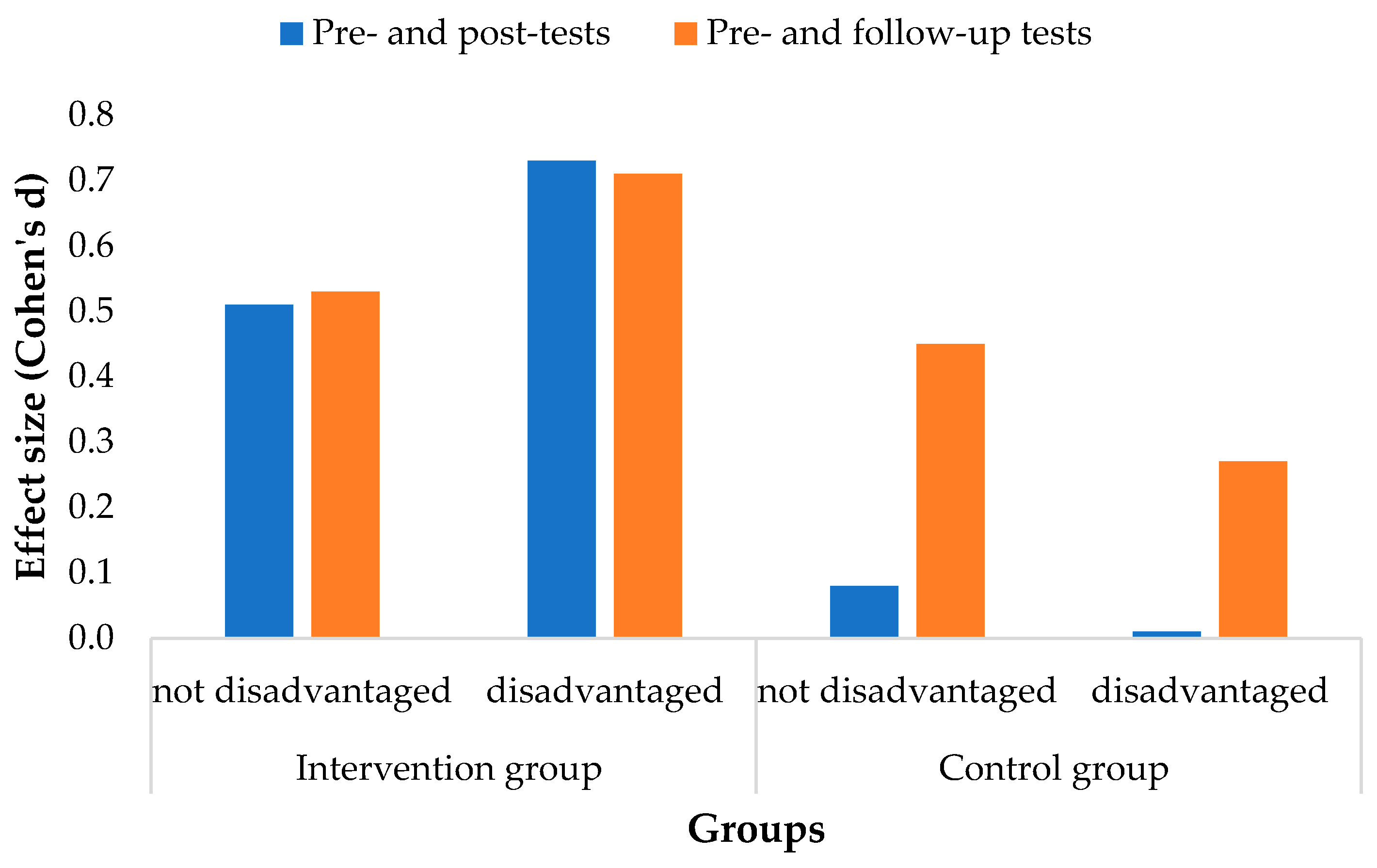

3.3. RQ3. Expanding the Effect Size of the Intervention on Disadvantaged Students

3.4. RQ4. Evaluating the Effect of the Intervention Program within the Latent Curve Modeling Framework

4. Discussion

4.1. RQ1. Changes in Reading Performance Compared to Students’ Original Reading Skills Level

4.2. RQ2. Expanding the Impact of the Intervention According to the Initial Skill Level of the Students

4.3. RQ3. Expanding the Effect Size of the Intervention on Disadvantaged Students

4.4. RQ4. Evaluating the Effect of the Intervention Program within the Latent Curve Modeling Framework

5. Limitations of the Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Group | Boys (%) | Girls (%) | Missing (%) |
|---|---|---|---|
| Intervention group | 49.3 | 44.9 | 5.8 |
| Control group | 60.1 | 34.8 | 5.1 |
| Total | 54.7 | 39.9 | 5.4 |

| Group | Test | Development | SD | t | df | p | d |
|---|---|---|---|---|---|---|---|
| Intervention group | Pre- and post-tests | 9.68 | 17.11 | −6.65 | 137 | <.01 | .51 |
| | Post- and follow-up tests | 1.58 | 14.85 | −1.24 | 136 | .22 | .12 |
| | Pre- and follow-up tests | 11.04 | 16.72 | −7.72 | 136 | <.01 | .59 |
| Control group | Pre- and post-tests | .67 | 18.09 | −.43 | 137 | .67 | .03 |
| | Post- and follow-up tests | 6.69 | 14.68 | −5.33 | 136 | <.01 | .35 |
| | Pre- and follow-up tests | 7.27 | 18.18 | −4.68 | 136 | <.01 | .39 |
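The t values in the table above appear consistent with a paired-samples test computed from the mean change and its standard deviation. The following is a minimal Python sketch of that arithmetic, assuming that "Development" is the mean change score, that "SD" is the standard deviation of the change scores, and that n = df + 1. The function name `paired_t` is illustrative and does not come from the study. The table reports negative t values, presumably because the difference was taken as earlier test minus later test, so the sketch compares magnitudes only.

```python
import math


def paired_t(mean_change: float, sd_change: float, df: int) -> float:
    """t statistic for a paired-samples test, from summary statistics only."""
    n = df + 1  # a paired test on n students has n - 1 degrees of freedom
    standard_error = sd_change / math.sqrt(n)
    return mean_change / standard_error


# (mean change, SD of change, df) copied from the table above
rows = {
    "intervention, pre- and post-tests": (9.68, 17.11, 137),
    "intervention, post- and follow-up tests": (1.58, 14.85, 136),
    "control, pre- and post-tests": (0.67, 18.09, 137),
    "control, post- and follow-up tests": (6.69, 14.68, 136),
}

for label, (mean_change, sd_change, df) in rows.items():
    print(f"{label}: |t| = {paired_t(mean_change, sd_change, df):.2f}")
```

Under these assumptions the computed magnitudes agree with the tabulated t values to two decimals. The reported effect sizes (d) are not reproduced here, because the table does not state which standardizer was used.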

| Skills Group | Test | Group | N | M | SD | t | p |
|---|---|---|---|---|---|---|---|
| 1 | Pretest | Intervention group | 23 | 22.26 | 10.43 | −.95 | .35 |
| | | Control group | 19 | 25.05 | 8.09 | | |
| | Post-test | Intervention group | 23 | 49.57 | 20.95 | 2.74 | <.01 |
| | | Control group | 19 | 32.00 | 20.31 | | |
| | Follow-up test | Intervention group | 23 | 48.52 | 22.25 | .46 | .65 |
| | | Control group | 19 | 45.53 | 19.51 | | |
| 2 | Pretest | Intervention group | 69 | 54.84 | 9.85 | −.90 | .37 |
| | | Control group | 70 | 56.34 | 9.74 | | |
| | Post-test | Intervention group | 69 | 64.64 | 13.78 | 1.47 | .14 |
| | | Control group | 70 | 60.63 | 17.99 | | |
| | Follow-up test | Intervention group | 69 | 66.43 | 17.17 | −.54 | .59 |
| | | Control group | 70 | 67.94 | 15.55 | | |
| 3 | Pretest | Intervention group | 46 | 79.74 | 6.39 | −.08 | .94 |
| | | Control group | 49 | 79.84 | 5.77 | | |
| | Post-test | Intervention group | 46 | 80.43 | 9.17 | 3.22 | <.01 |
| | | Control group | 49 | 72.90 | 13.15 | | |
| | Follow-up test | Intervention group | 46 | 82.96 | 9.92 | 2.82 | <.01 |
| | | Control group | 49 | 76.24 | 12.95 | | |
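The between-group t values in the table above can be checked from the reported N, M, and SD alone. The sketch below assumes an independent-samples t-test with a pooled variance (equal variances assumed); the table does not name the test variant, and `pooled_t` is an illustrative helper, not something from the study.

```python
import math


def pooled_t(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> float:
    """Independent-samples t statistic with pooled variance, from summary statistics."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / standard_error


# Skills group 1 (intervention vs. control), values copied from the table above
pretest = pooled_t(22.26, 10.43, 23, 25.05, 8.09, 19)
post_test = pooled_t(49.57, 20.95, 23, 32.00, 20.31, 19)

# Skills group 3, post-test
post_test_group3 = pooled_t(80.43, 9.17, 46, 72.90, 13.15, 49)

print(f"group 1 pretest:   t = {pretest:.2f}")
print(f"group 1 post-test: t = {post_test:.2f}")
print(f"group 3 post-test: t = {post_test_group3:.2f}")
```

With the pooled-variance assumption, these three values agree with the tabulated t statistics to two decimals; other rows can be checked the same way.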

| Disadvantaged Situation | Test | Groups | N | Mean | SD | t | p |
|---|---|---|---|---|---|---|---|
| Nondisadvantaged | Pretest | Intervention group | 104 | 62.38 | 18.47 | −.22 | .82 |
| | | Control group | 109 | 62.94 | 17.74 | | |
| | Post-test | Intervention group | 104 | 70.35 | 16.82 | 2.33 | .02 |
| | | Control group | 109 | 64.55 | 19.27 | | |
| | Follow-up test | Intervention group | 104 | 72.27 | 18.93 | .61 | .55 |
| | | Control group | 109 | 70.79 | 16.73 | | |
| Disadvantaged | Pretest | Intervention group | 34 | 43.41 | 23.53 | −1.24 | .22 |
| | | Control group | 29 | 50.76 | 23.42 | | |
| | Post-test | Intervention group | 34 | 58.35 | 16.69 | 2.11 | .04 |
| | | Control group | 29 | 47.86 | 22.74 | | |
| | Follow-up test | Intervention group | 34 | 58.82 | 19.76 | .46 | .65 |
| | | Control group | 29 | 56.59 | 18.57 | | |

| Group | Disadvantaged Situation | Skill Group | Tests | N | Development | SD | t | p | d |
|---|---|---|---|---|---|---|---|---|---|
| Intervention group | Nondisadvantaged | 1 | Pre- and post-tests | 9 | 25.51 | 27.24 | −2.81 | .02 | 1.42 |
| | | | Post- and follow-up tests | | 2.92 | 23.24 | .38 | .72 | 0 |
| | | 2 | Pre- and post-tests | 55 | 11.25 | 14.31 | −5.83 | .00 | .97 |
| | | | Post- and follow-up tests | | 1.67 | 14.24 | −.87 | .39 | .10 |
| | | 3 | Pre- and post-tests | 40 | 3.61 | 8.15 | −2.80 | .01 | .45 |
| | | | Post- and follow-up tests | | 1.99 | 10.33 | −1.22 | .23 | .16 |
| | Disadvantaged | 1 | Pre- and post-tests | 14 | 31.48 | 21.29 | −5.53 | .00 | 2.04 |
| | | | Post- and follow-up tests | | −2.23 | 22.82 | .37 | .72 | .00 |
| | | 2 | Pre- and post-tests | 14 | 9.52 | 9.38 | −3.80 | .00 | .82 |
| | | | Post- and follow-up tests | | .04 | 13.66 | .01 | .99 | .00 |
| | | 3 | Pre- and post-tests | 6 | .62 | 4.33 | .35 | .74 | .00 |
| | | | Post- and follow-up tests | | 3.86 | 6.32 | −1.50 | .20 | .66 |
| Control group | Nondisadvantaged | 1 | Pre- and post-tests | 9 | 9.88 | 24.91 | −1.19 | .27 | .58 |
| | | | Post- and follow-up tests | | 14.98 | 12.30 | −3.65 | .01 | .62 |
| | | 2 | Pre- and post-tests | 58 | 7.47 | 18.24 | −3.12 | .00 | .53 |
| | | | Post- and follow-up tests | | 6.21 | 15.55 | −3.04 | .00 | .37 |
| | | 3 | Pre- and post-tests | 42 | −5.03 | 12.16 | 2.68 | .01 | .00 |
| | | | Post- and follow-up tests | | 4.60 | 11.18 | −2.67 | .01 | .37 |
| | Disadvantaged | 1 | Pre- and post-tests | 10 | 5.56 | 17.22 | −1.02 | .33 | .39 |
| | | | Post- and follow-up tests | | 10.05 | 12.03 | −2.64 | .03 | .69 |
| | | 2 | Pre- and post-tests | 12 | 2.78 | 14.91 | .65 | .53 | .00 |
| | | | Post- and follow-up tests | | 8.22 | 11.27 | −2.53 | .03 | .55 |
| | | 3 | Pre- and post-tests | 7 | −6.88 | 12.55 | 1.45 | .20 | .00 |
| | | | Post- and follow-up tests | | −3.00 | 21.30 | .37 | .72 | .00 |

| Model | χ² | df | AIC | BIC | CFI | TLI | RMSEA [90% CI] |
|---|---|---|---|---|---|---|---|
| Latent change model for both groups | 103.2 | 10 | 8837.8 | 8901.9 | .842 | .810 | .268 [.222, .316] |
| No-change model for both groups | 155.4 | 12 | 8885.9 | 8942.9 | .757 | .757 | .303 [.262, .347] |
| No-change model for the control group and latent change model for the intervention group | 108.6 | 11 | 9331.7 | 9393.2 | .842 | .828 | .255 [.212, .299] |

| Model | χ² | df | AIC | BIC | CFI | TLI | RMSEA [90% CI] |
|---|---|---|---|---|---|---|---|
| Latent change model for both groups | 35.8 | 10 | 9902.7 | 9967.8 | .928 | .913 | .137 [.091, .187] |
| No-change model for both groups | 54.9 | 12 | 9917.8 | 9975.6 | .880 | .880 | .162 [.120, .206] |
| No-change model for the control group and latent change model for the intervention group | 36.1 | 11 | 9900.9 | 9962.4 | .930 | .923 | .129 [.084, .177] |

| Model | χ² | df | AIC | BIC | CFI | TLI | RMSEA [90% CI] |
|---|---|---|---|---|---|---|---|
| Latent change model for both groups | 144.0 | 10 | 8671.9 | 8737.0 | .925 | .911 | .313 [.269, .359] |
| No-change model for both groups | 149.2 | 12 | 8671.8 | 8733.2 | .924 | .918 | .299 [.257, .343] |
| No-change model for the control group and latent change model for the intervention group | 145.8 | 11 | 8671.2 | 8731.0 | .925 | .924 | .289 [.249, .331] |
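Competing models such as those in the table directly above are conventionally compared on AIC and BIC, with lower values preferred. The sketch below ranks the three models by their distance from the best value on each criterion. The dictionary keys are short illustrative labels and `rank_by` is a hypothetical helper; only the AIC and BIC numbers come from the table.

```python
# Fit indices copied from the table directly above.
# Keys are illustrative labels, not names used by the authors.
models = {
    "change_both": {"aic": 8671.9, "bic": 8737.0},
    "no_change_both": {"aic": 8671.8, "bic": 8733.2},
    "no_change_control_change_intervention": {"aic": 8671.2, "bic": 8731.0},
}


def rank_by(criterion: str) -> list[tuple[str, float]]:
    """Return (model, delta) pairs sorted from best to worst on one criterion."""
    best = min(fit[criterion] for fit in models.values())
    deltas = {
        name: round(fit[criterion] - best, 1) for name, fit in models.items()
    }
    return sorted(deltas.items(), key=lambda item: item[1])


for criterion in ("aic", "bic"):
    print(criterion.upper(), rank_by(criterion))
```

On both criteria the mixed model (no change in the control group, latent change in the intervention group) has the lowest value in this table, although the AIC differences are under one point, which is usually read as weak evidence for preferring one model over another.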

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Szili, K.; Kiss, R.; Csapó, B.; Molnár, G. Computer-Based Development of Reading Skills to Reduce Dropout in Uncertain Times. J. Intell. 2022, 10, 89. https://doi.org/10.3390/jintelligence10040089