A Validated Ontology for Metareasoning in Intelligent Systems

Abstract

1. Introduction

- The Integrated Metareasoning Ontology (IM-Onto), an ontology for representing the different metareasoning problems that arise in intelligent systems. We describe a framework that uses the formally defined semantics of the ontology's classes, individuals, and properties to construct the knowledge representation structures needed to monitor and control the reasoning processes of intelligent agents. The proposed ontology also reuses existing ontologies that partially model some aspects of the metareasoning domain.

2. Materials and Methods

3. Results

3.1. Definition

- What is the purpose? The objective of the ontology is to facilitate the integration of metareasoning processes into advanced intelligent systems.

- What is the scope? The ontology will include information on the processes related to metareasoning, such as allocating deliberation time, allocating evaluation effort, detection of reasoning failures, meta-explanations, introspective monitoring, and metalevel control.

- Who are the intended end users? Users include research groups in cognitive science, artificial intelligence, and cognitive computing. Although the proposed ontology addresses general topics of metareasoning, our focus is its application in educational settings, mainly in solving academic problems in higher education institutions.

- What is the intended use? The main functional role is to model the metareasoning problems that can occur in intelligent systems in terms of monitoring and controlling cognitive processes. The ontology helps reduce discrepancies between the data structures required by different components of the metareasoning process. Such discrepancies, together with differences in language syntax, make it harder to exchange information between metareasoning models and lead to considerable information deviations when data flows connect separately designed components. An ontology for the metareasoning domain also facilitates knowledge sharing among researchers, academics, and developers, because it reduces the ambiguity of terms through a controlled vocabulary and gives them a shared semantic basis for communication.

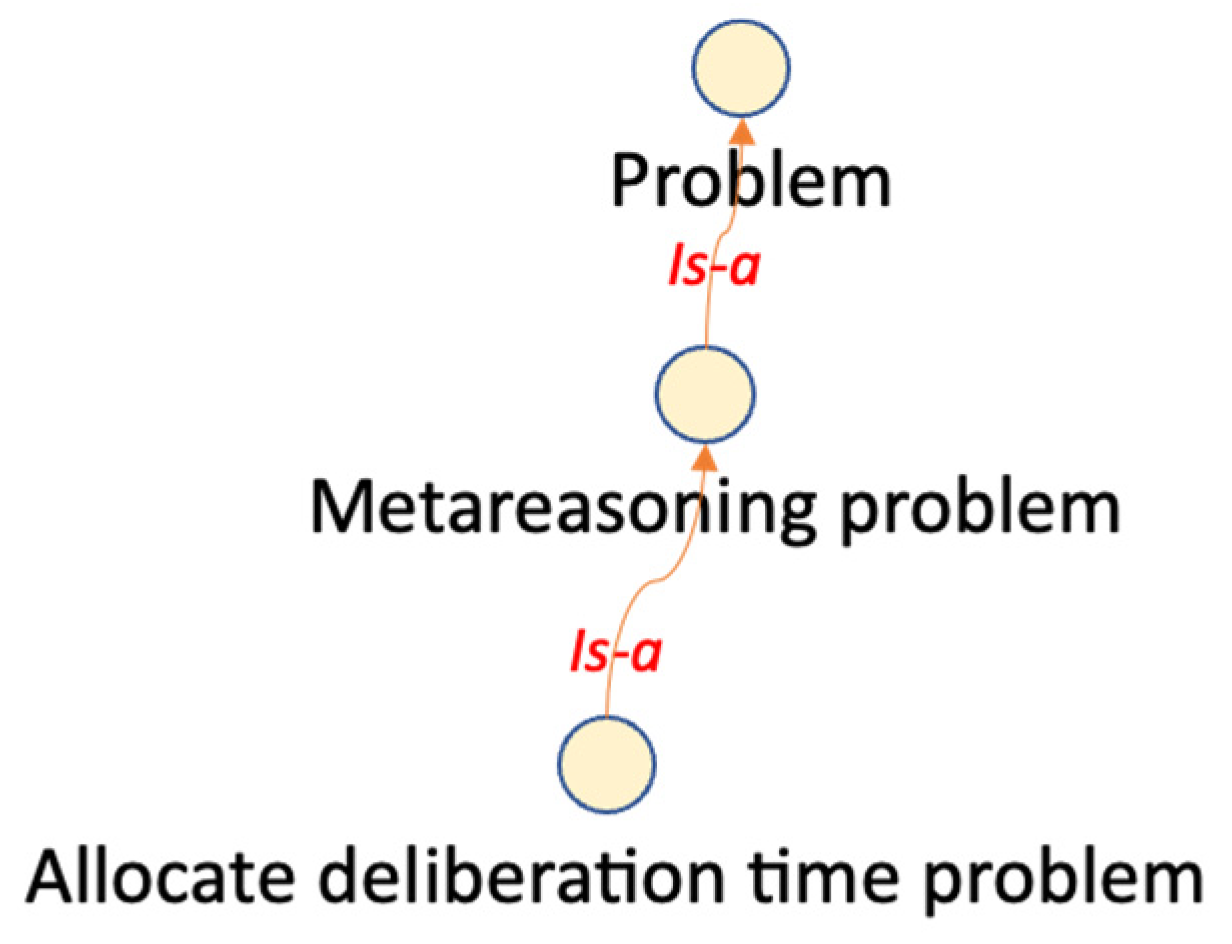

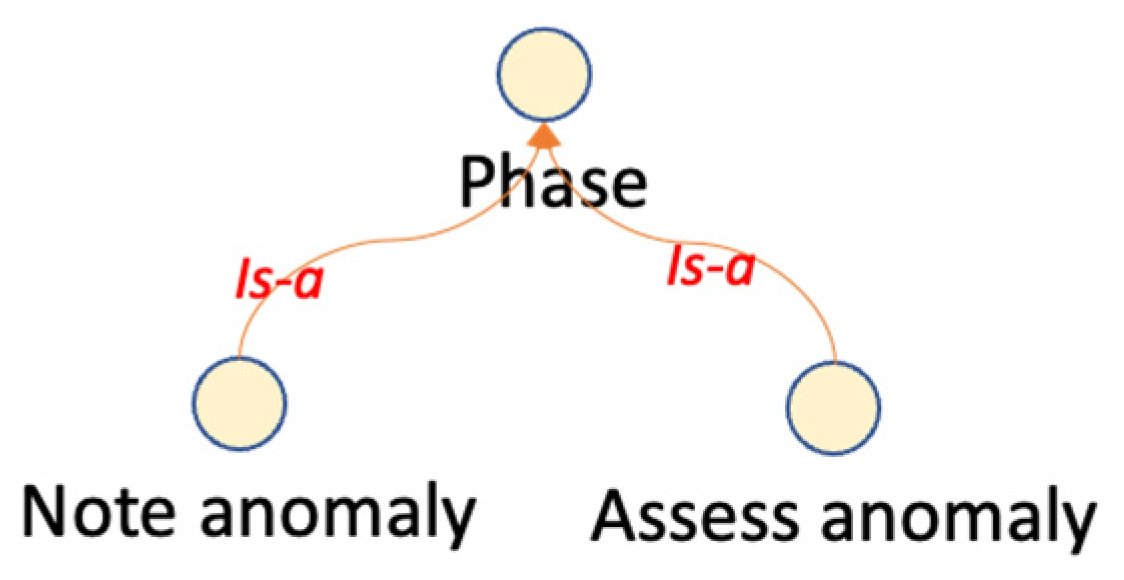

3.2. Conceptualization

- (i) Listing the relevant terms in the ontology;

- (ii) Defining classes;

- (iii) Defining class properties with specifications for their range and domain (Noy and McGuinness 2001).

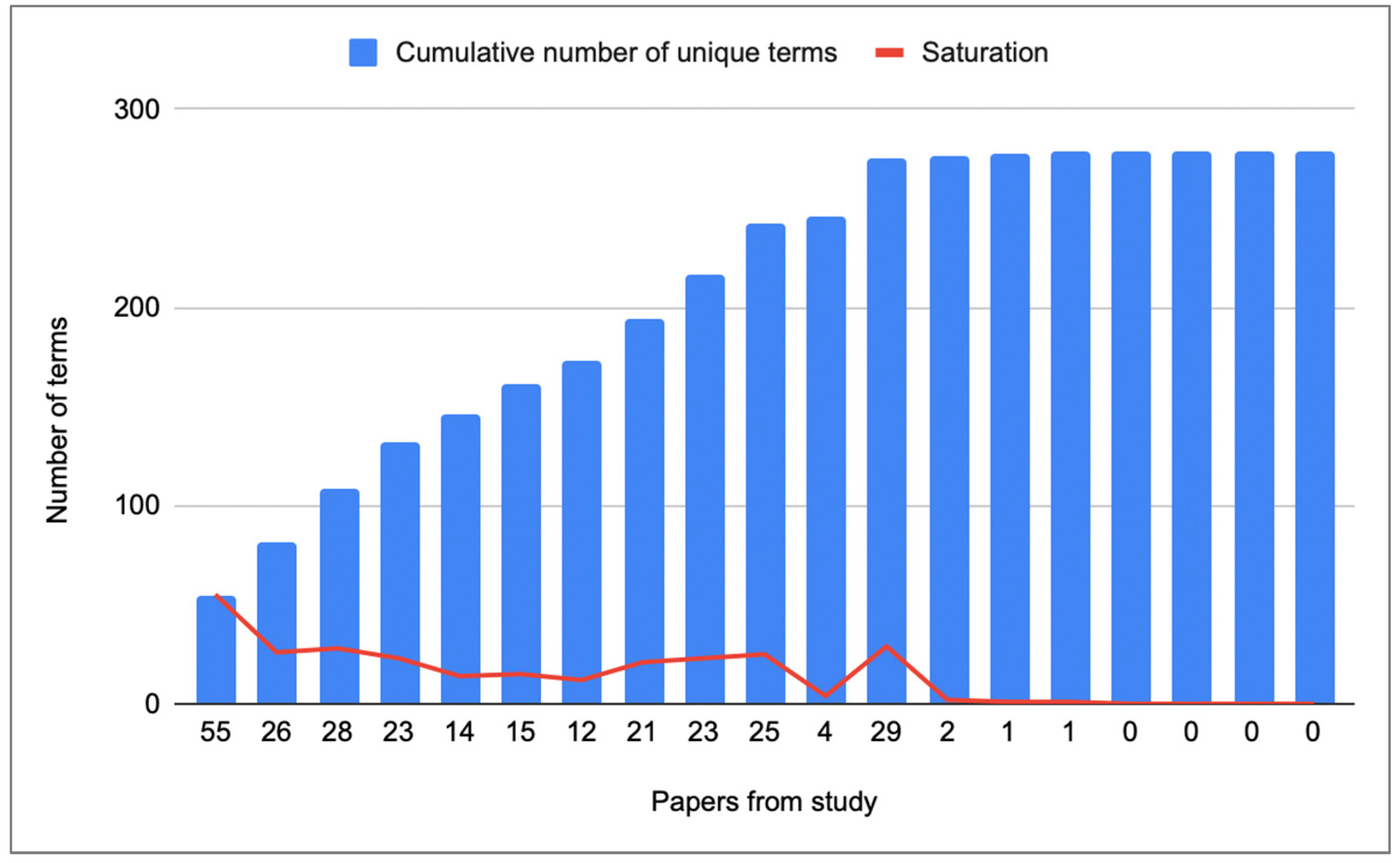

3.2.1. Listing the Relevant Terms in the Ontology

3.2.2. Defining Classes

3.2.3. Defining Class Properties with Specification of the Range and Domain

3.3. Formalization

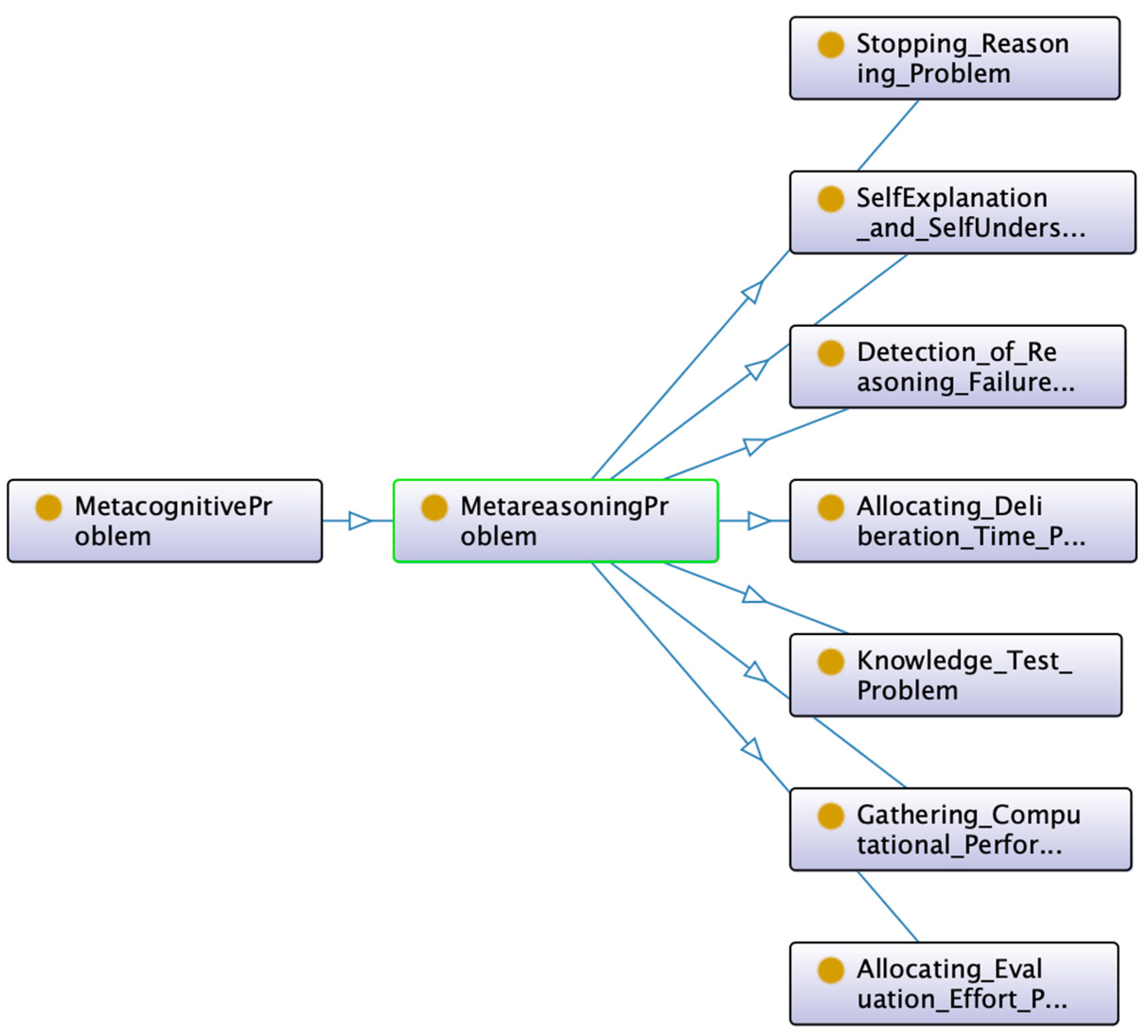

3.4. Implementation: The Integrated Metareasoning Ontology (IM-Onto)

- CAPITAL LETTERS denote concepts defined in the ontology. For example, ALLOCATE_DELIBERATION_TIME and OBJECT_LEVEL are important concepts that are further described in the detailed descriptions of the ontology terms.

- Italic letters denote a property, that is, a relation between two or more ontology concepts. For example, has_problem_solution is a property that relates the ontology terms PROBLEM and SOLUTION.
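
To make the notation concrete, the following minimal sketch encodes the has_problem_solution example as a subject-property-object triple in plain Python. The `Property` dataclass, the `relate` helper, the individual names, and the choice of PROBLEM as domain and SOLUTION as range are illustrative assumptions; they are not taken from the published IM-Onto serialization.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Property:
    """A named relation with a domain (subject class) and a range (object class)."""
    name: str
    domain: str
    range: str


# Assumption: PROBLEM is the domain and SOLUTION is the range of the property.
HAS_PROBLEM_SOLUTION = Property("has_problem_solution", "PROBLEM", "SOLUTION")


def relate(prop, subject, subject_class, obj, obj_class):
    """Return a (subject, property, object) triple after checking domain and range."""
    if subject_class != prop.domain:
        raise TypeError(f"{prop.name} expects a {prop.domain} subject")
    if obj_class != prop.range:
        raise TypeError(f"{prop.name} expects a {prop.range} object")
    return (subject, prop.name, obj)


# Hypothetical individuals, used only to illustrate the notation.
triple = relate(HAS_PROBLEM_SOLUTION, "team_allocation", "PROBLEM",
                "team_42", "SOLUTION")
```

Checking the domain and range at the point where a triple is created mirrors what an OWL reasoner would infer or flag, but in a much weaker, purely illustrative form.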

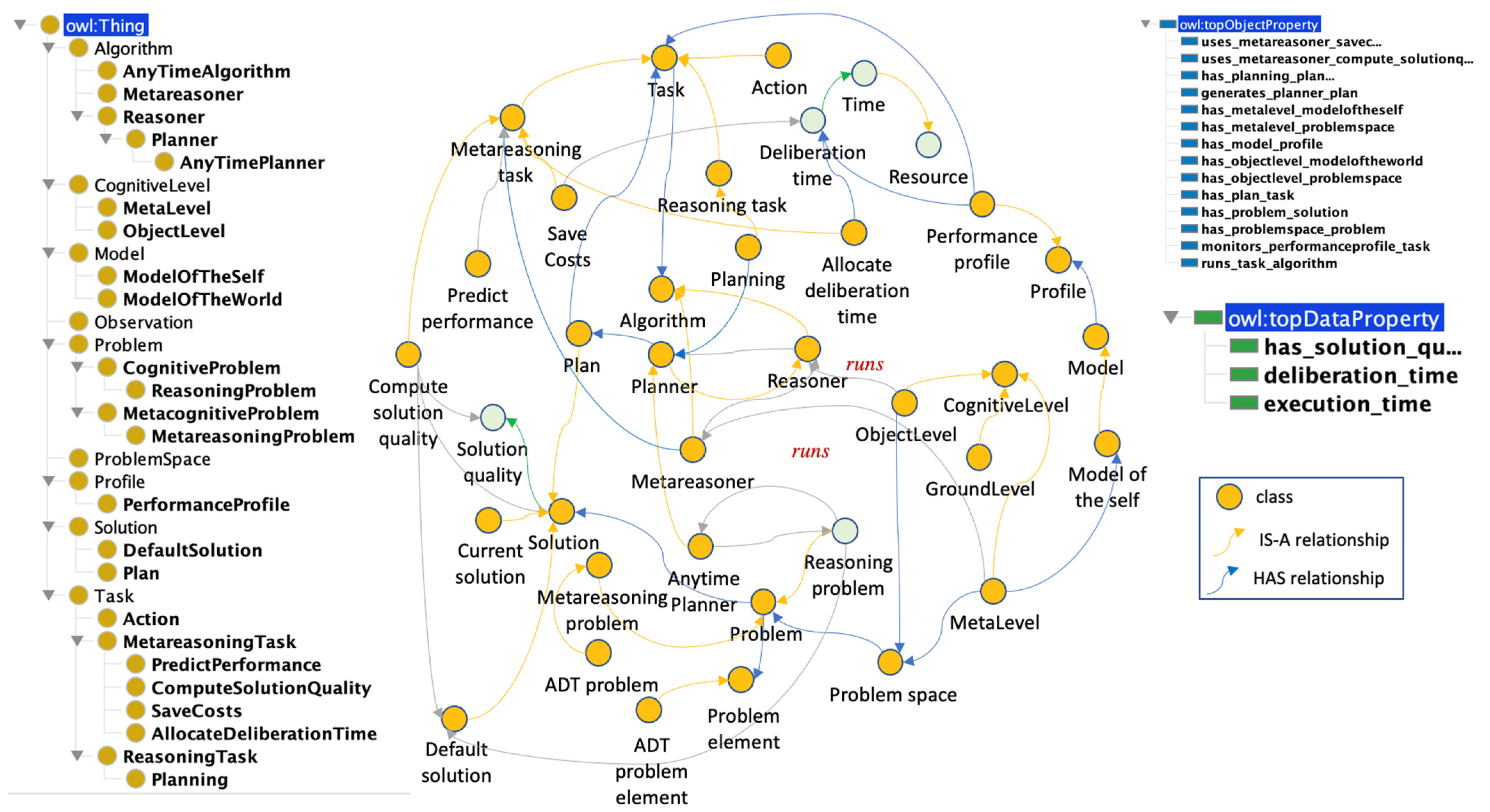

3.4.1. The Allocating Deliberation Time Problem (ADTP) Subontology

- A PERFORMANCE_PROFILE generally represents a vector of the solution qualities of an algorithm, recorded at successive reasoning time intervals.

- ANYTIME_ALGORITHM is a class that represents an anytime algorithm, whose quality of results gradually improves as computation time increases and which can return a valid solution to a problem even if it is interrupted before completion. This kind of algorithm offers a tradeoff between solution quality and computation time: it is expected to find better solutions the longer it keeps running. In the context of this work, an anytime algorithm works to solve a REASONING_PROBLEM.

- The METAREASONING_TASK class refers to tasks that are carried out at the META_LEVEL; their objective is to monitor and control the reasoning processes that are carried out at the OBJECT_LEVEL. The main metareasoning tasks in this problem are COMPUTE_SOLUTION_QUALITY, PREDICT_PERFORMANCE, ALLOCATE_DELIBERATION_TIME, and SAVE_COST.
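
The interplay of these three concepts can be sketched in a few lines of Python. The toy algorithm, the linear time cost, and all function names below are assumptions made for illustration; the sketch is not the authors' implementation of the ADTP.

```python
def anytime_max(candidates):
    """Toy anytime algorithm: yields the best value found so far after each step."""
    best = float("-inf")
    for value in candidates:
        best = max(best, value)
        yield best  # a valid (possibly suboptimal) answer exists at every step


def performance_profile(algorithm_run):
    """Record the solution quality at each reasoning time step as a vector."""
    return list(algorithm_run)


def allocate_deliberation_time(profile, cost_per_step):
    """Pick the number of steps that maximizes quality minus an assumed linear time cost."""
    net = [quality - cost_per_step * (t + 1) for t, quality in enumerate(profile)]
    return max(range(len(net)), key=net.__getitem__) + 1


# Illustrative values only: quality improves quickly, then flattens.
profile = performance_profile(anytime_max([3, 7, 7.5, 8, 8.2]))
steps = allocate_deliberation_time(profile, cost_per_step=1.0)
```

With these made-up numbers the net value peaks after the second step, so the meta-level would allot two steps of deliberation; a different cost model would shift that point.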

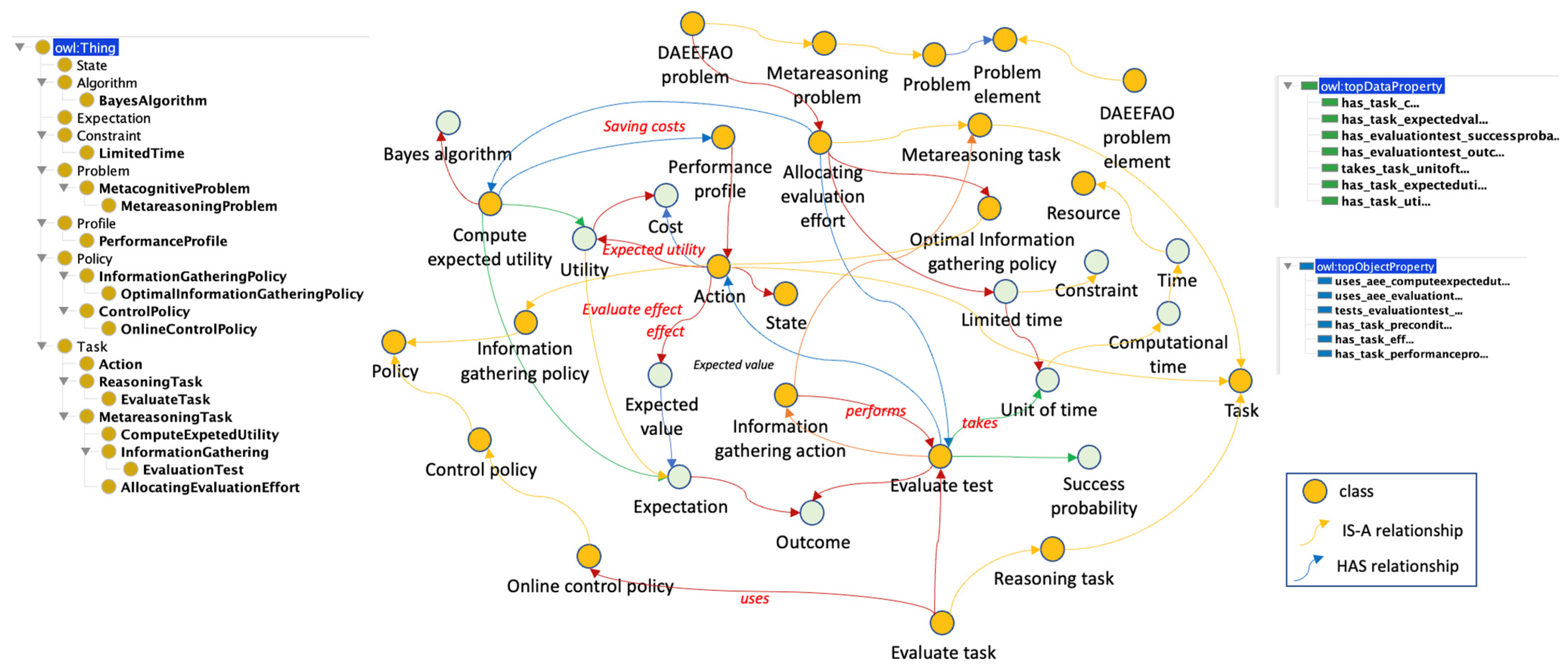

3.4.2. Allocating Evaluation Effort Problem Subontology

- Class ACTION represents a set of actions that an agent could evaluate.

- EVALUATION_TEST makes it possible to verify the history of events or failures in the execution of an action. This can reduce the effort spent on evaluating candidates during ACTION selection.

- The main metareasoning tasks in this problem are ALLOCATING_EVALUATION_EFFORT, COMPUTE_EXPECTED_UTILITY, and EVALUATE_TEST.
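
A minimal sketch of how these tasks might fit together is shown below. The greedy allocation rule, the `past_failures` field, the failure threshold, and the example actions are all hypothetical; the source does not prescribe a particular allocation strategy.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    outcomes: list          # (probability, utility) pairs
    eval_cost: int          # effort units needed to evaluate this action
    past_failures: int = 0  # hypothetical: failures recorded by earlier tests


def compute_expected_utility(action):
    """Probability-weighted sum of the utilities of the action's outcomes."""
    return sum(p * u for p, u in action.outcomes)


def evaluate_test(action, max_failures=2):
    """Pass only actions whose recorded failure history is below a threshold."""
    return action.past_failures < max_failures


def allocate_evaluation_effort(actions, budget):
    """Greedy sketch: evaluate cheap, test-passing actions until the budget runs out."""
    evaluated = {}
    for action in sorted(actions, key=lambda a: a.eval_cost):
        if not evaluate_test(action):
            continue  # skipping known-bad actions saves evaluation effort
        if action.eval_cost > budget:
            break  # remaining actions are at least as expensive
        budget -= action.eval_cost
        evaluated[action.name] = compute_expected_utility(action)
    return evaluated


actions = [
    Action("a", [(0.5, 10), (0.5, 0)], eval_cost=1),
    Action("b", [(1.0, 4)], eval_cost=2),
    Action("c", [(0.9, 20), (0.1, 0)], eval_cost=1, past_failures=3),
    Action("d", [(1.0, 8)], eval_cost=5),
]
evaluated = allocate_evaluation_effort(actions, budget=4)
best_action = max(evaluated, key=evaluated.get)
```

Here action `c` is never evaluated because of its failure history, and `d` is too expensive for the remaining budget, so the agent chooses between `a` and `b` only.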

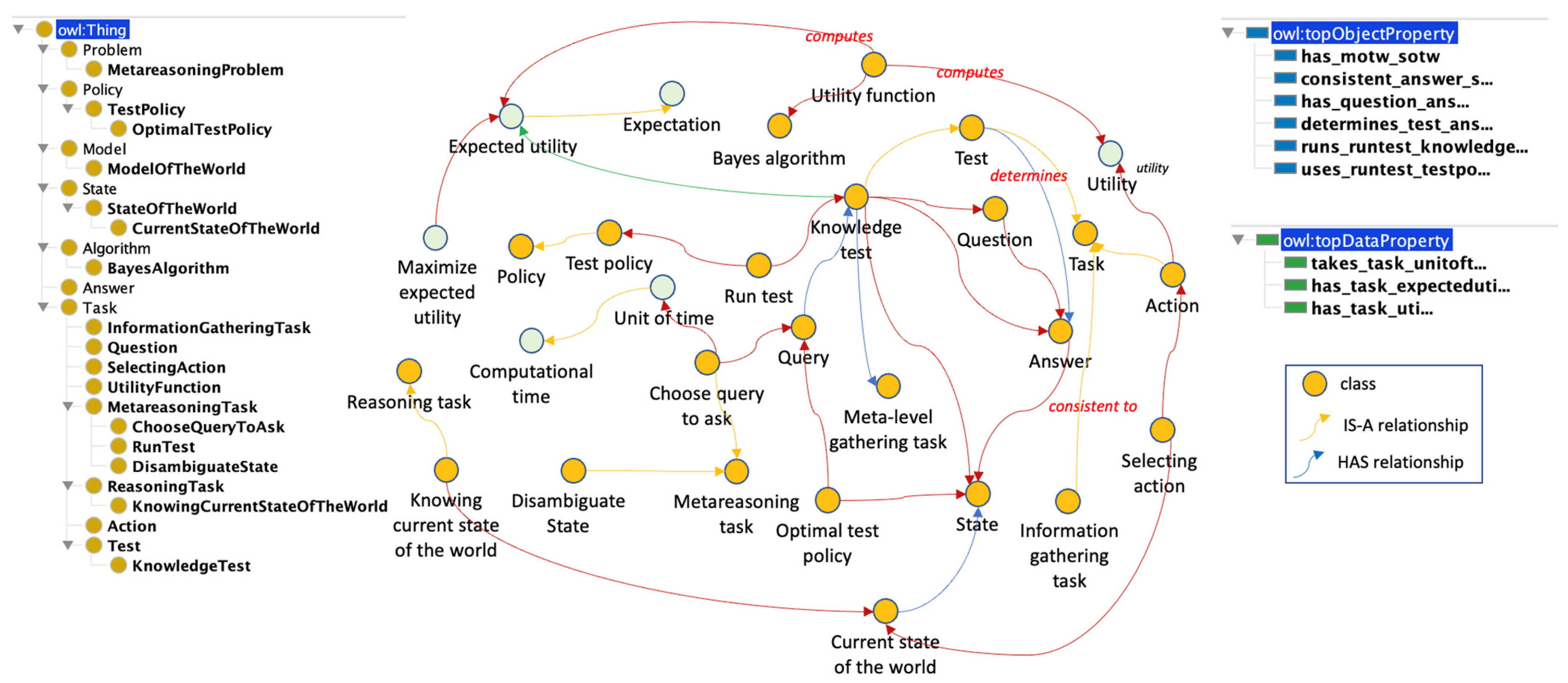

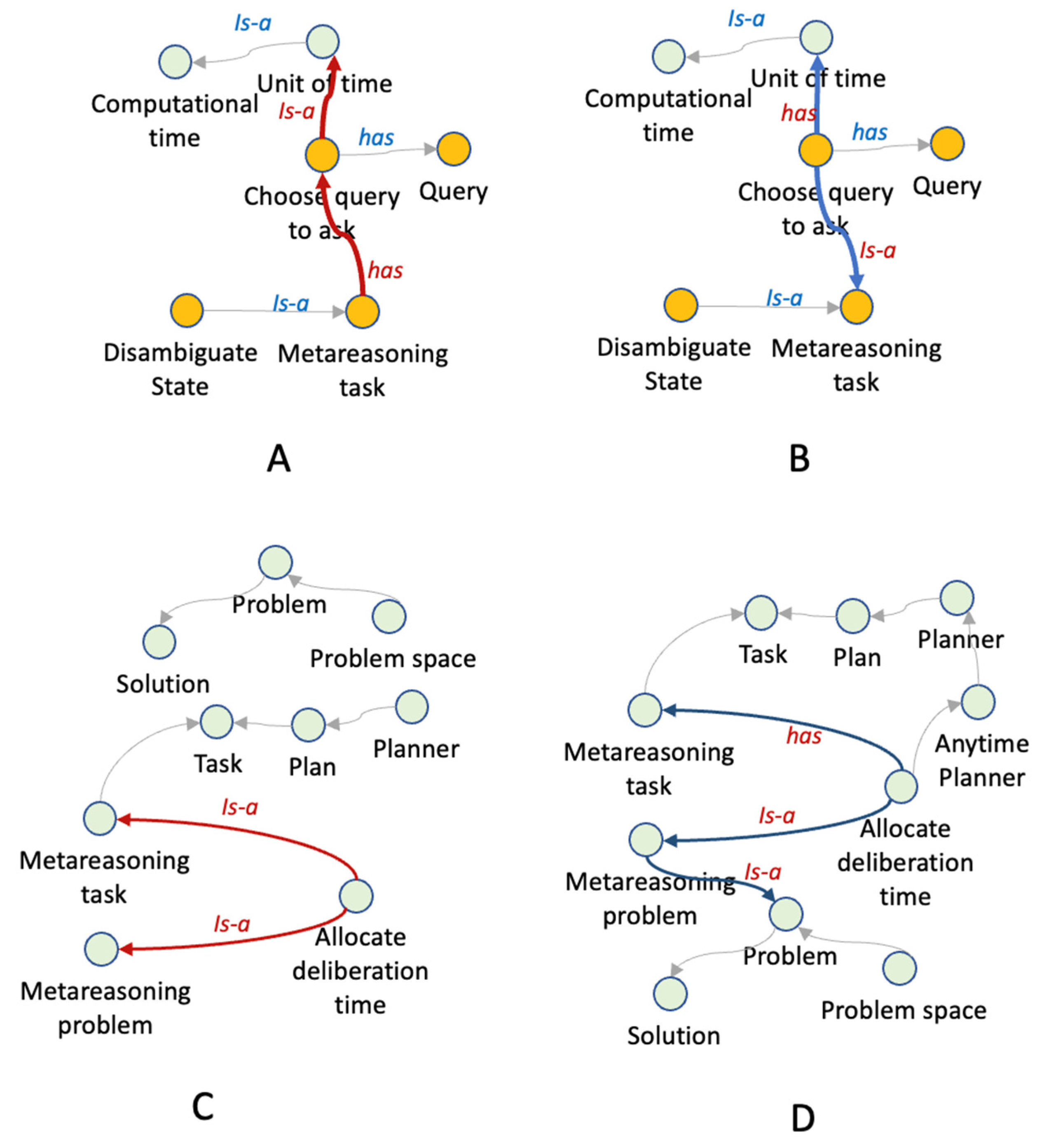

3.4.3. The Knowledge Test Problem (KTP) Subontology

- The KNOWLEDGE_TEST class obtains the answers to a set of questions in order to determine the current state of the world.

- Class QUERY represents a set of questions to determine the current state of the world.

- Class ANSWER contains the output of the queries.
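
One way to read these three classes together is as a filter over candidate world states. The sketch below assumes yes/no answers and a finite set of candidate states; both assumptions, along with the example states and questions, are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Query:
    text: str


@dataclass(frozen=True)
class Answer:
    query: Query
    value: bool


class KnowledgeTest:
    """Narrow a set of candidate world states using the answers to queries."""

    def __init__(self, candidate_states):
        # Each state maps a query text to the answer that holds in that state.
        self.candidates = dict(candidate_states)

    def record(self, answer):
        """Discard every candidate state that is inconsistent with the answer."""
        self.candidates = {
            name: facts for name, facts in self.candidates.items()
            if facts.get(answer.query.text) == answer.value
        }

    def current_state(self):
        """Return the state name if exactly one candidate remains, else None."""
        if len(self.candidates) == 1:
            return next(iter(self.candidates))
        return None


test = KnowledgeTest({
    "open_lit": {"door open?": True, "light on?": True},
    "closed_dark": {"door open?": False, "light on?": False},
    "closed_lit": {"door open?": False, "light on?": True},
})
test.record(Answer(Query("door open?"), False))
undecided = test.current_state()   # two candidates remain
test.record(Answer(Query("light on?"), True))
state = test.current_state()
```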

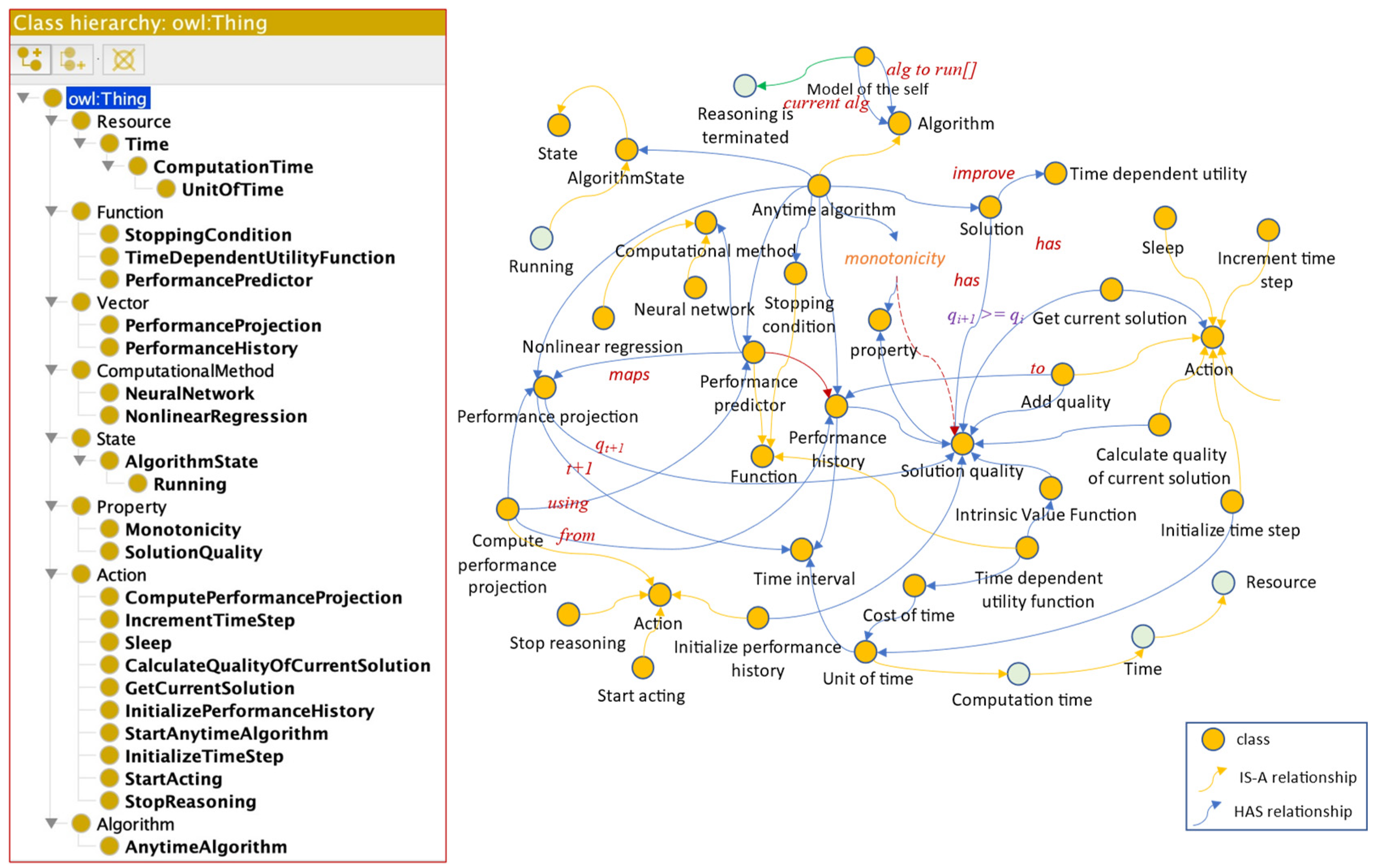

3.4.4. The Stopping Reasoning Problem (SRP) Subontology

- A TIME_DEPENDENT_UTILITY represents the utility of a solution computed by an anytime algorithm.

- SOLUTION_QUALITY represents the quality of the solution to a problem. In this case, a solution is generated by an algorithm to solve the current problem.

- A PERFORMANCE_PROFILE_HISTORY represents the past performance of an anytime algorithm as a vector of solution qualities.

- A PERFORMANCE_PROFILE_PROJECTION represents the future performance of an anytime algorithm as a vector of solution qualities.

- PERFORMANCE_PREDICTOR is a function that maps a PERFORMANCE_PROFILE_HISTORY to a PERFORMANCE_PROFILE_PROJECTION.
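
The relationship between history, projection, and the stopping decision can be sketched as follows. The linear time cost in the utility, the geometric decay used by the predictor, and the stopping rule are simplifying assumptions chosen for illustration; they are not the model used in the case study.

```python
def time_dependent_utility(quality, t, cost_per_step=0.05):
    """Assumed form: solution quality minus a linear cost of elapsed time."""
    return quality - cost_per_step * t


def performance_predictor(history, horizon=3, decay=0.5):
    """Map a performance history to a projection of future solution qualities.

    Naive assumption: each future gain is `decay` times the previous gain.
    """
    gain = history[-1] - history[-2] if len(history) > 1 else 0.0
    projection, quality = [], history[-1]
    for _ in range(horizon):
        gain *= decay
        quality += gain
        projection.append(quality)
    return projection


def should_stop(history, cost_per_step=0.05):
    """Stop when no projected utility beats the utility of the current solution."""
    t_now = len(history)
    current = time_dependent_utility(history[-1], t_now, cost_per_step)
    projection = performance_predictor(history)
    best_future = max(
        time_dependent_utility(q, t_now + k, cost_per_step)
        for k, q in enumerate(projection, start=1)
    )
    return best_future <= current


keep_going = should_stop([0.2, 0.6])            # quality is still rising fast
halt = should_stop([0.2, 0.6, 0.8, 0.82])       # gains no longer cover the time cost
```

Any predictor with the same signature (history in, projection out) could be swapped in without changing `should_stop`.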

3.4.5. The Gathering Computational Performance Data Problem (GCPDP) Subontology

- MODEL_OF_THE_WORLD is an internal model that stores information related to the perception history that describes the state of the environment or world that is perceived by the system.

- MODEL_OF_THE_SELF is a dynamic model of the object level. This model is part of the meta-level and contains updated information on the current state of the reasoning processes that are carried out at the object level.
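
As a rough illustration of MODEL_OF_THE_SELF, the sketch below keeps one profile per cognitive task, written by the object level and read by the meta-level. The class layout and method names are hypothetical; the profile fields (start time, execution time, output quality) are the ones the case study names for task profiles.

```python
from dataclasses import dataclass, field


@dataclass
class TaskProfile:
    task_name: str
    start_time: float
    execution_time: float = 0.0
    output_quality: float = 0.0


@dataclass
class ModelOfTheSelf:
    """Holds the latest profile of each cognitive task running at the object level."""
    profiles: dict = field(default_factory=dict)

    def update(self, profile):
        """Called from the object level whenever a task reports progress."""
        self.profiles[profile.task_name] = profile

    def snapshot(self):
        """Plain-data view that the meta-level can read for monitoring."""
        return {name: (p.execution_time, p.output_quality)
                for name, p in self.profiles.items()}


mos = ModelOfTheSelf()
mos.update(TaskProfile("team_search", start_time=0.0,
                       execution_time=1.5, output_quality=0.7))
mos.update(TaskProfile("team_search", start_time=0.0,
                       execution_time=2.5, output_quality=0.8))
view = mos.snapshot()
```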

3.4.6. Detection of Reasoning Failure Problem (DRFP) Subontology

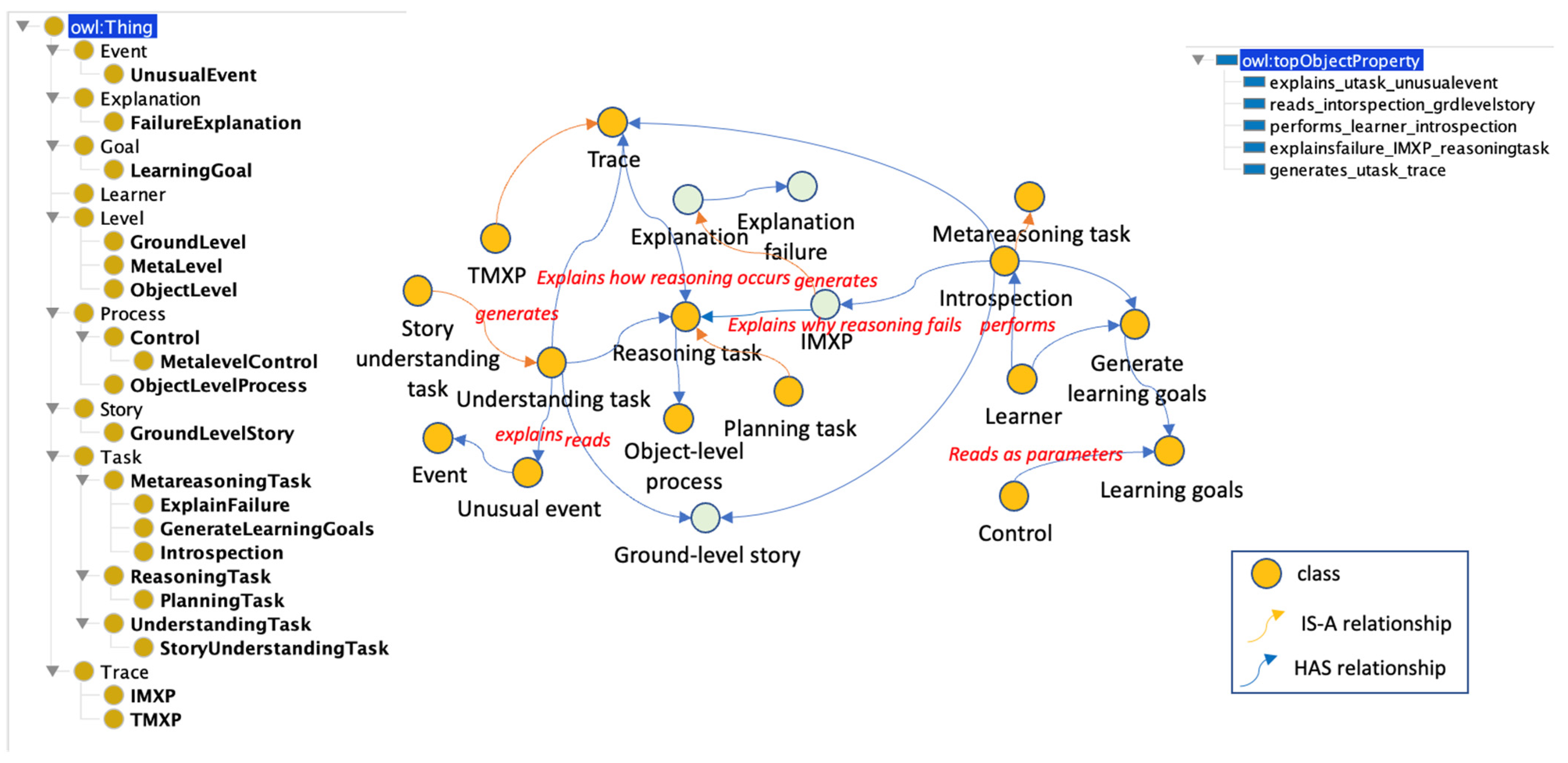

3.4.7. Self-Explanation and Self-Understanding Problem (SE&SUP) Subontology

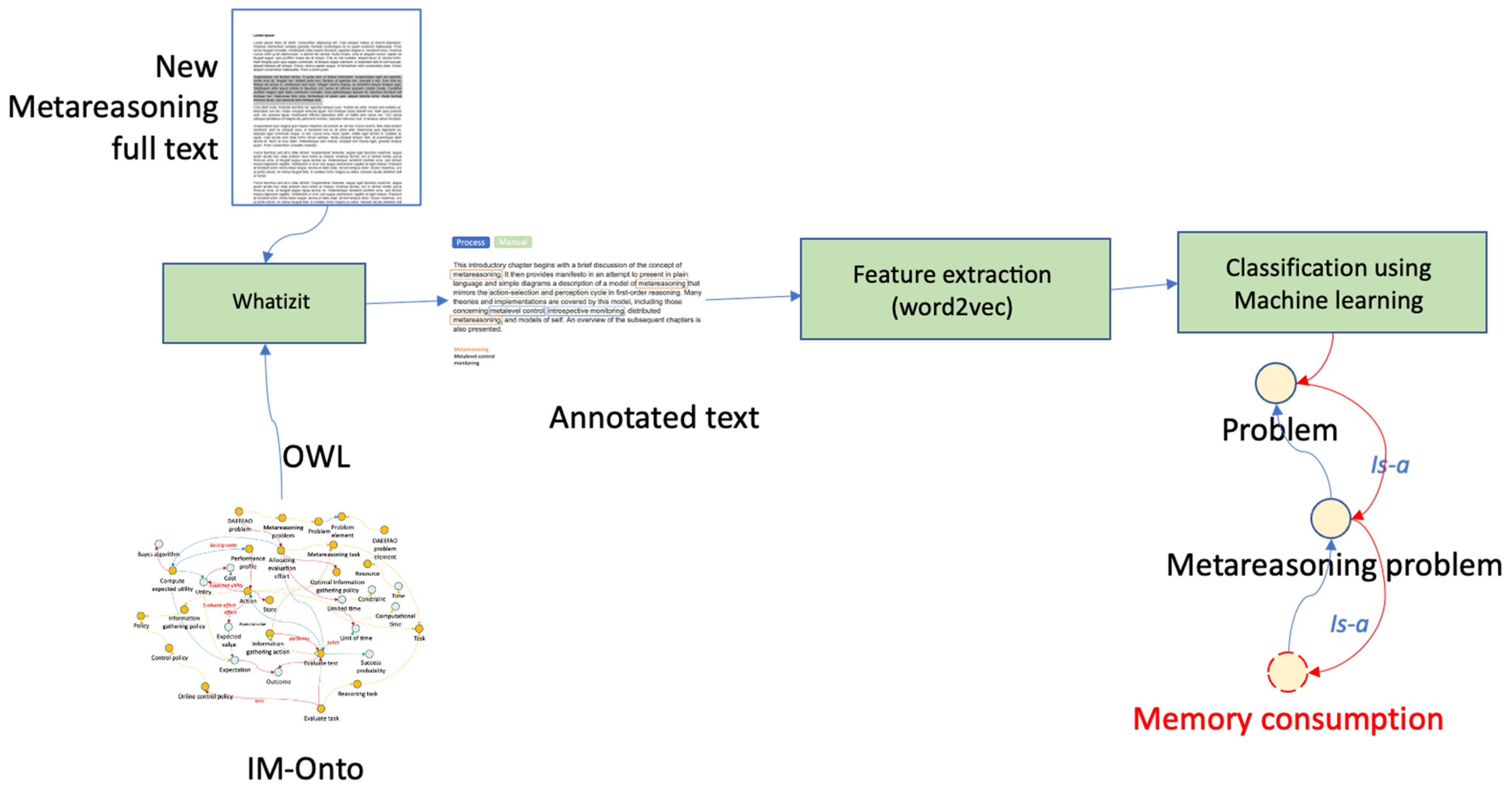

3.4.8. Improving the Ability of the Approach to Generalize to New (or Existing but Unstudied) Problems

3.5. Evaluation and Case Study

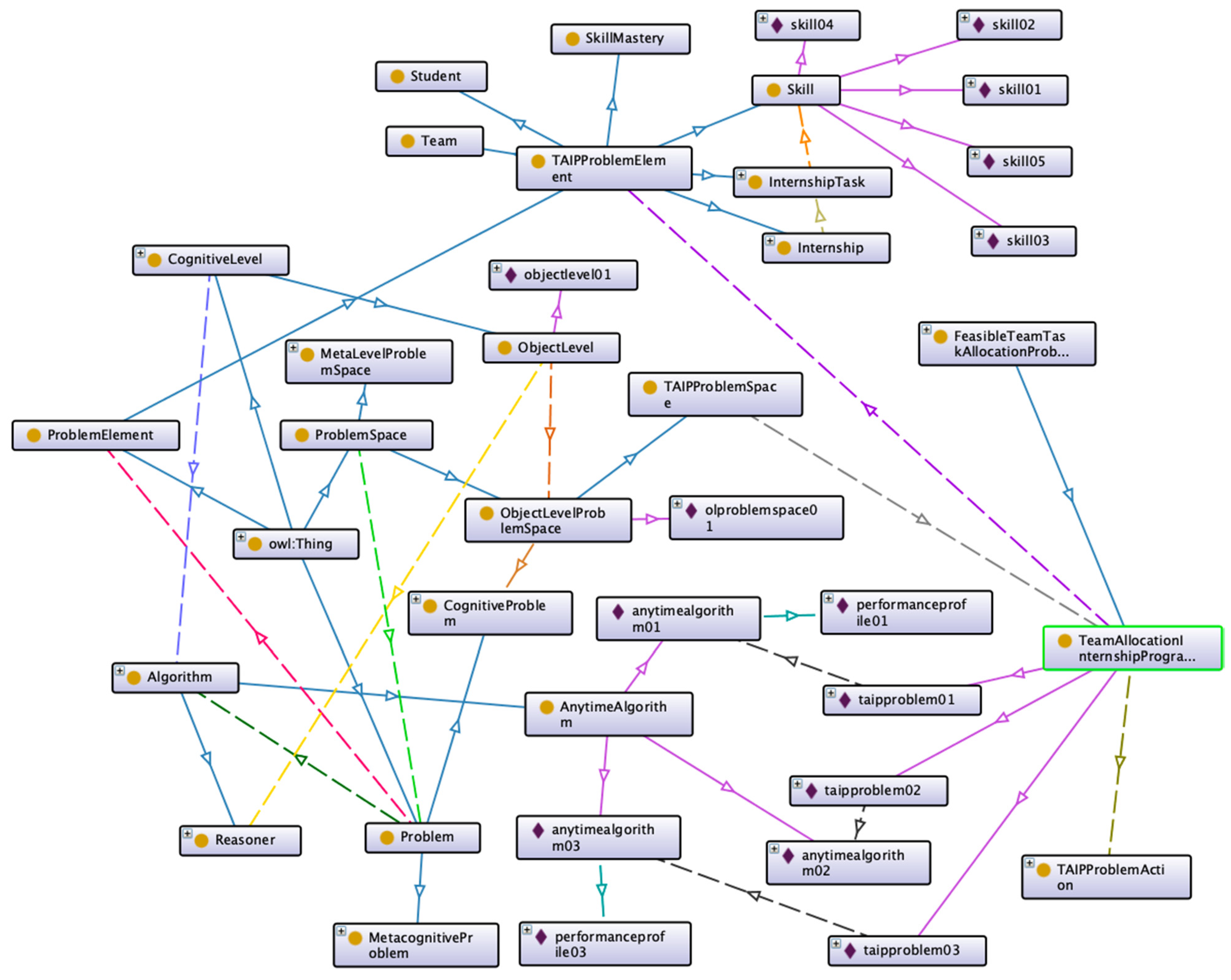

Running Example: The Team Allocation for Internship Programs (TAIP)

- A Python package was developed that contains the classes common to the 7 types of problems addressed in this article. In this package, a basic cognitive system is made up of two cognitive levels, as specified in the ontology. A Beta version of the package is available at: https://github.com/dairdr/carina (login is required).

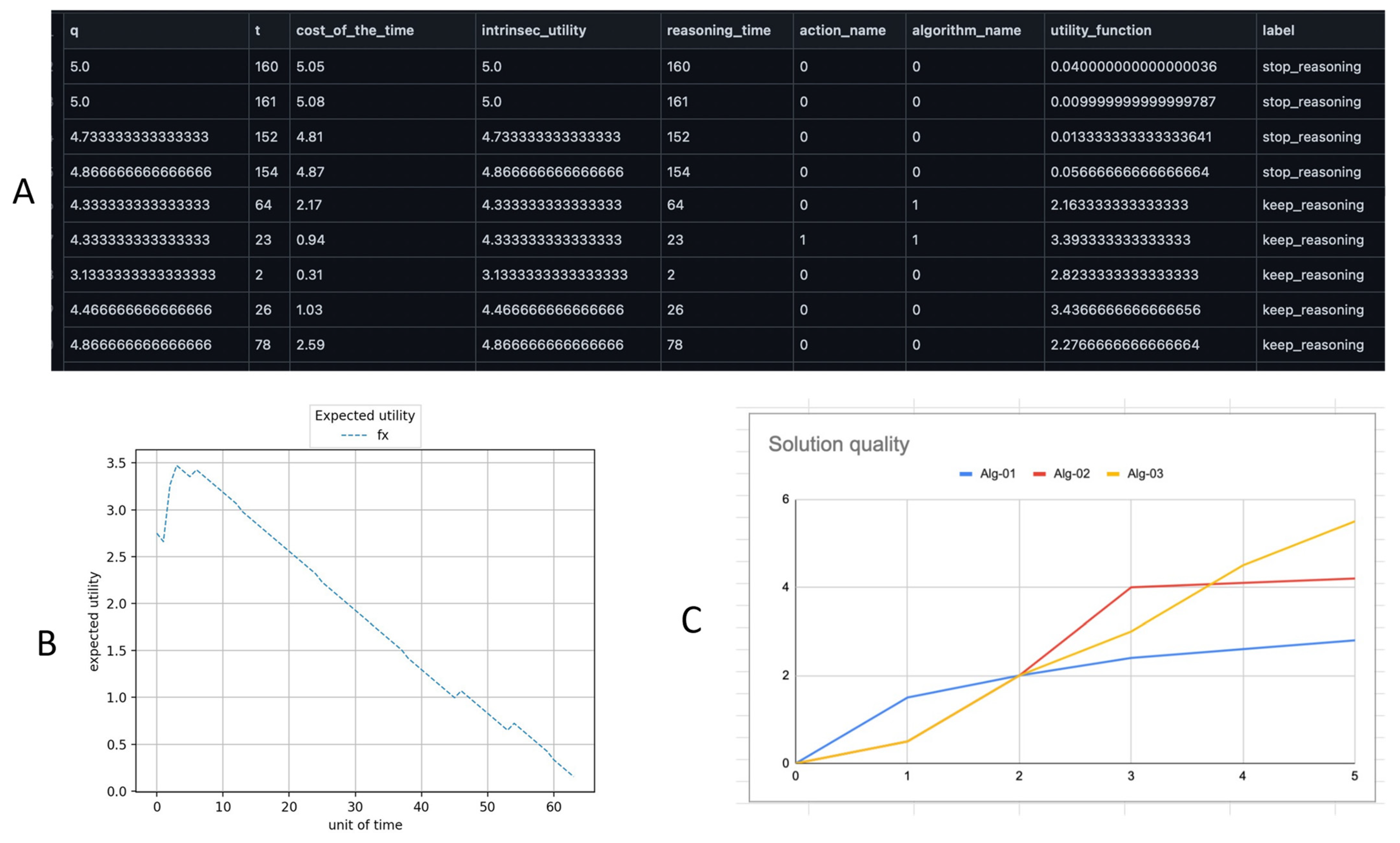

- A polymorphic meta-reasoner was created at the meta-level. It monitors the object level by accessing the data that is updated in the model of the self (MoS), which is designed according to the MODEL_OF_THE_SELF class. Monitoring and information gathering are done through the MoS, which is updated in real time from the object level. The MoS stores the profiles of the cognitive tasks that are executed at the object level; this is done automatically and does not require human intervention. Figure 15 shows a dataset based on the performance profile of the object-level reasoner and the history of a stopping reasoning problem (SRP). The meta-level uses the dataset to train a random forest model for the SRP; see Figure 15, section A. The MoS is stored in the working memory of the cognitive system and serves as a bridge between the object level and the meta-level. The meta-reasoner runs in parallel with the object level and analyzes the reasoning traces of the cognitive task profile, using a random forest algorithm to select the method to execute according to the metareasoning problem detected. Profiles store data about cognitive tasks, such as start time, execution time, and output quality, that are common to any task executed at the object level. Because of this polymorphic design, the meta-reasoner can be scaled to encompass new models.

- A cognitive system with two cognitive levels was designed: the object level and the meta-level. The object level was configured according to the IM-Onto object-level class specifications: the problem or cognitive task performed by the object level was defined using the REASONING_PROBLEM class, and the elements of the problem were then added according to the PROBLEM_ELEMENT class. In this case study, the object level is based on three anytime algorithms that are monitored and controlled by the meta-level until a solution that is suitable in cost and time is found. An example of the implementation of the FTAP problem is available at: https://github.com/dairdr/carina/blob/master/miscellaneous.py (login is required).

- The system was configured with three algorithms in order to induce some metareasoning problems and observe the behavior of the meta-level. For TAIP, one algorithm randomly selects the members of the team; another selects the most qualified members for each competition and assembles the team from them; and a third receives parameters that restrict the selection. For example, if a selected team, judged by its average skill proficiency, includes a student with low performance, then the algorithm replaces that student. The meta-level selects the best algorithm profile according to a set of constraints stipulated in the problem configuration, such as deliberation time and algorithm performance. Figure 15 shows some outcomes of the validation of the system. Section A shows a subset of features obtained from the profiles of the cognitive tasks that are stored in the MoS and used by the meta-level for monitoring and control; in this case, it is a training dataset for the problem of stopping the reasoning process. Section B shows the behavior of the time-dependent utility function of the algorithm that is running at the object level, which is used to predict when to stop the reasoning process. Section C presents the profiles of the three algorithms with respect to the behavior of the time-dependent utility function.
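
The selection step described in the last item can be sketched as a constrained choice over algorithm profiles. The profile fields, the two constraints, the algorithm names, and every number below are made up for illustration; they are not results from the case study, and the sketch does not reproduce the random forest used by the authors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlgorithmProfile:
    name: str
    execution_time: float   # seconds spent so far
    output_quality: float   # quality of the current team, assumed to lie in [0, 1]


def select_algorithm(profiles, max_time, min_quality):
    """Return the highest-quality profile that satisfies both constraints, or None."""
    feasible = [p for p in profiles
                if p.execution_time <= max_time and p.output_quality >= min_quality]
    return max(feasible, key=lambda p: p.output_quality, default=None)


# Hypothetical profiles for the three TAIP algorithms (illustrative values only).
profiles = [
    AlgorithmProfile("random_selection", 0.2, 0.45),
    AlgorithmProfile("most_qualified", 1.0, 0.80),
    AlgorithmProfile("constrained_replacement", 3.5, 0.90),
]
chosen = select_algorithm(profiles, max_time=2.0, min_quality=0.6)
```

Under a two-second deliberation limit the highest-quality algorithm is excluded for being too slow, which is the kind of tradeoff the meta-level has to resolve.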

3.6. Ontology Evaluation

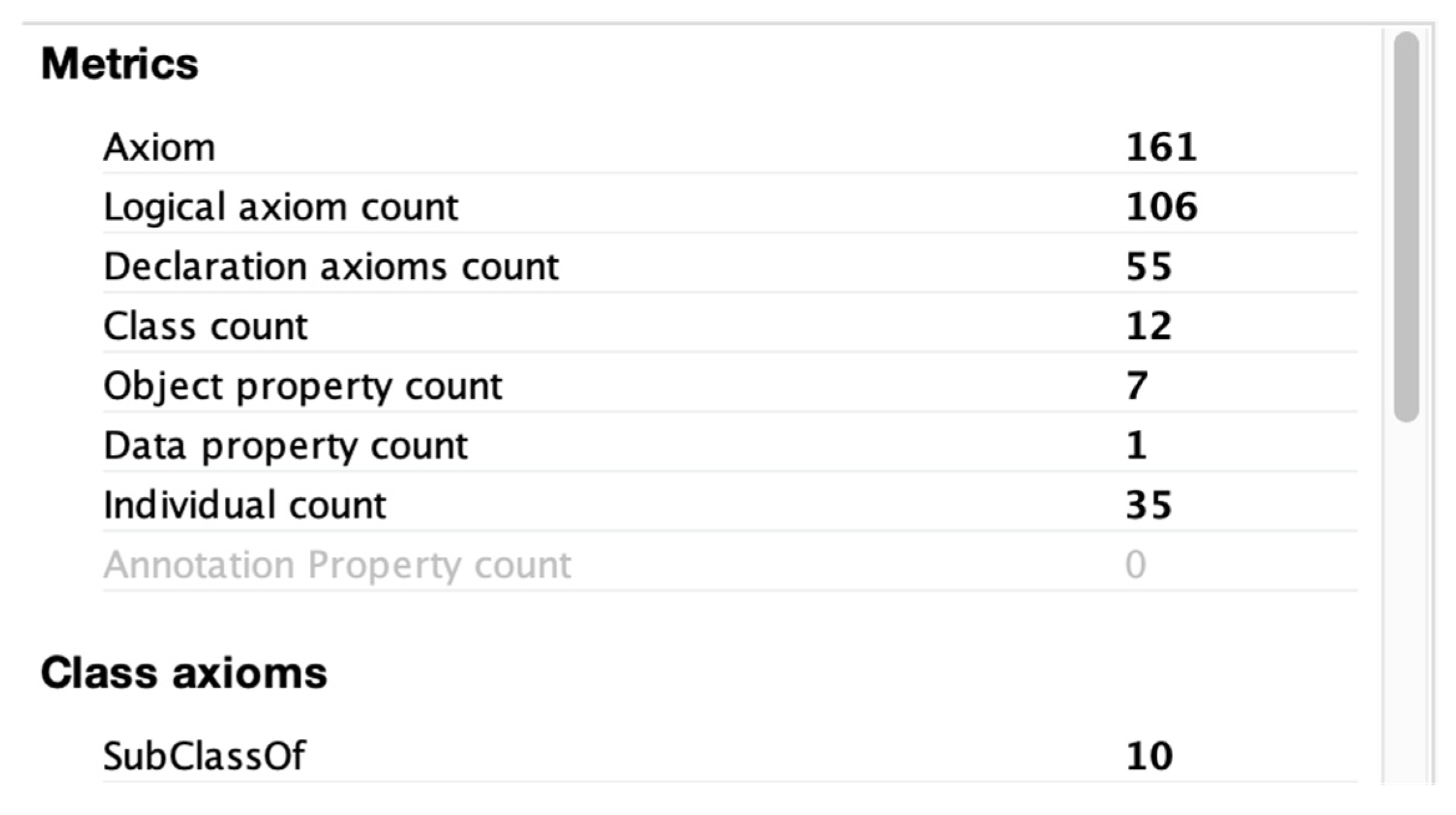

3.6.1. Automated Consistency Checking

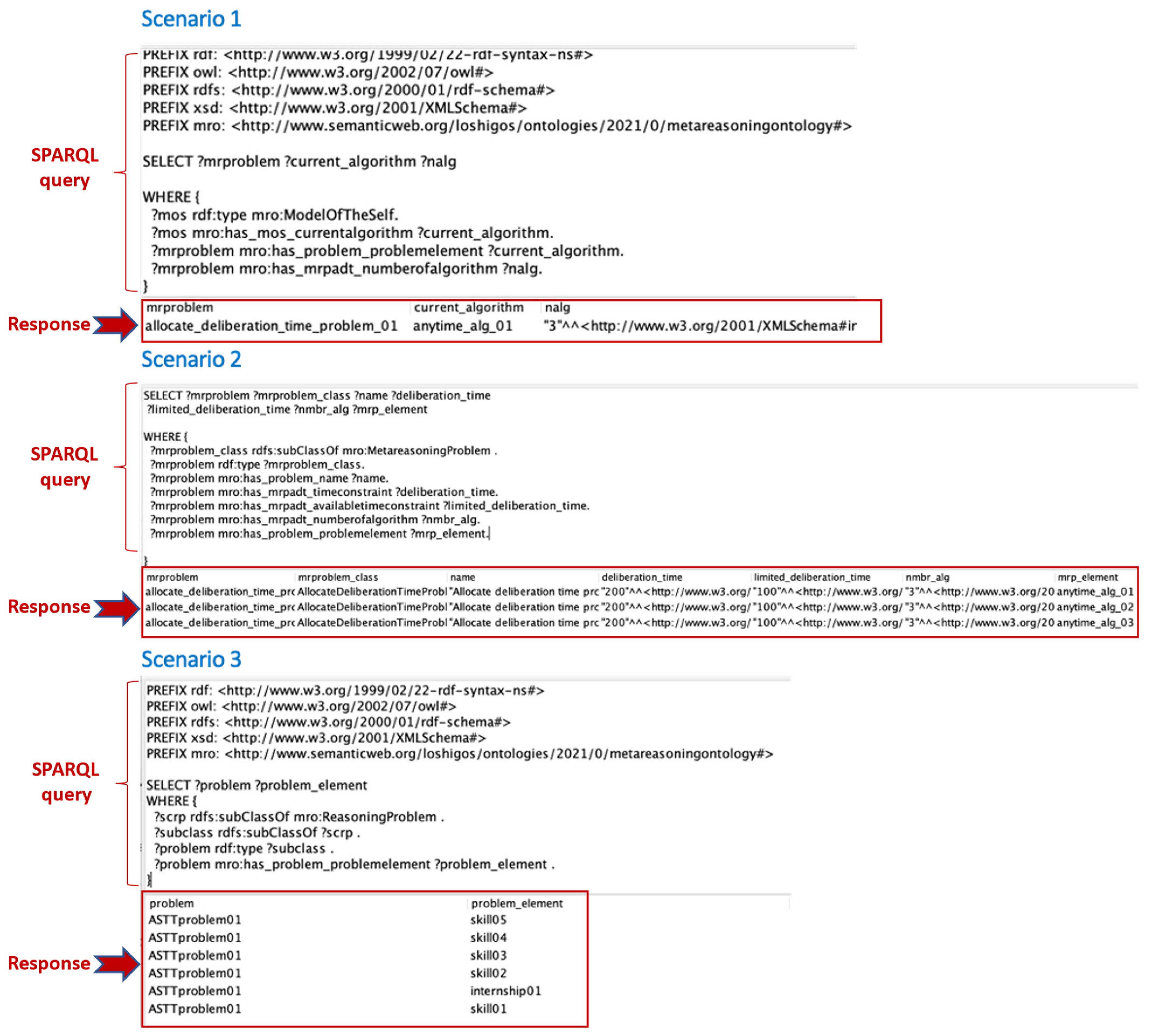

3.6.2. Task-Based Evaluation

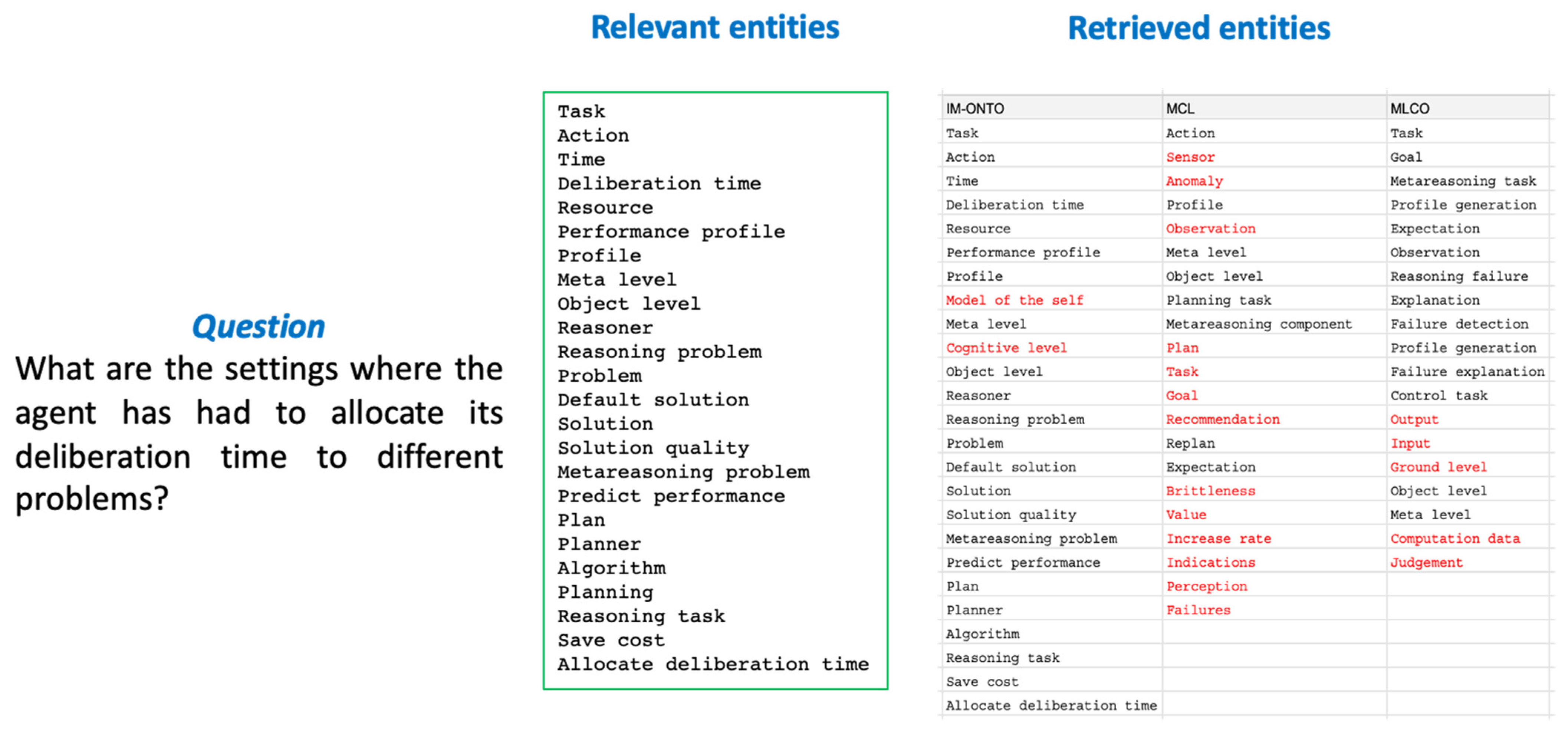

3.6.3. Data-Driven Evaluation

3.6.4. Criteria-Based Evaluation

3.7. Ontology Documentation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ackerman, Rakefet, and Valerie Thompson. 2017. Meta-Reasoning: Monitoring and Control of Thinking and Reasoning. Trends in Cognitive Sciences 21: 607–17.

- Allemang, Dean, Irene Polikoff, and Ralph Hodgson. 2005. Enterprise architecture reference modeling in OWL/RDF. In International Semantic Web Conference (ISWC 2005). Edited by Yolanda Gil, Enrico Motta, V. Richard Benjamins and Mark A. Musen. Lecture Notes in Computer Science. Berlin: Springer, vol. 3729, pp. 844–57.

- Althubaiti, Sara, Şenay Kafkas, Marwa Abdelhakim, and Robert Hoehndorf. 2020. Combining lexical and context features for automatic ontology extension. Journal of Biomedical Semantics 11: 1–13.

- Anderson, Michael, and Timothy Oates. 2007. A review of recent research in metareasoning and metalearning. AI Magazine 28: 12.

- Andrejczuk, Ewa, Filippo Bistaffa, Christian Blum, Juan A. Rodríguez-Aguilar, and Carles Sierra. 2019. Synergistic team composition: A computational approach to foster diversity in teams. Knowledge-Based Systems 182: 104799.

- Baccigalupo, Claudio, and Enric Plaza. 2007. Poolcasting: A social Web radio architecture for group customisation. Paper presented at the 3rd International Conference on Automated Production of Cross Media Content for Multi-Channel Distribution, AXMEDIS 2007, Barcelona, Spain, November 28–30; pp. 115–22.

- Badr, Kamal Badr Abdalla, Afaf Badr Abdalla Badr, and Mohammad Nazir Ahmad. 2013. Phases in Ontology Building Methodologies: A Recent Review. In Ontology-Based Applications for Enterprise Systems and Knowledge Management. Hershey: IGI Global, pp. 100–23.

- Borghetti, Brett, and Maria Gini. 2008. Weighted prediction divergence for metareasoning. Metareasoning: Paper presented at the 2008 AAAI Workshop, Chicago, IL, USA, July 13–17.

- Brewster, Christopher, Harith Alani, Srinandan Dasmahapatra, and Yorick Wilks. 2004. Data driven ontology evaluation. Paper presented at the 4th International Conference on Language Resources and Evaluation, LREC 2004, Lisbon, Portugal, May 26–28.

- Caleiro, Carlos, Luca Vigano, and David Basin. 2005. Metareasoning about security protocols using distributed temporal logic. Electronic Notes in Theoretical Computer Science 125: 67–89.

- Caro, Manuel, Darsana Josyula, and Jovani A. Jiménez. 2014. A formal model for metacognitive reasoning in intelligent systems. International Journal of Cognitive Informatics and Natural Intelligence 8: 70–86.

- Chen, Xiaoping, Zhiqiang Sui, and Jianmin Ji. 2013. Towards metareasoning for human-robot interaction. In Intelligent Autonomous Systems 12. Berlin/Heidelberg: Springer, pp. 355–67.

- Conitzer, Vincent, and Tuomas Sandholm. 2003. Definition and complexity of some basic metareasoning problems. Paper presented at the IJCAI International Joint Conference on Artificial Intelligence, Acapulco, Mexico, August 9–15; pp. 1099–106.

- Cox, Michael. 2005. Metacognition in computation: A selected research review. Artificial Intelligence 169: 104–41.

- Cox, Michael T. 2011. Metareasoning, monitoring, and self-explanation. In Metareasoning: Thinking about Thinking. Edited by Michael T. Cox and Anita Raja. Cambridge: MIT Press, pp. 131–49.

- Cox, Michael, and Anita Raja. 2008. Metareasoning: A manifesto. In Metareasoning: Thinking about Thinking, Proceedings of the 2008 AAAI Workshop, Chicago, IL, USA, July 13–17. Technical Report WS-08-07. Edited by Michael T. Cox and Anita Raja. Menlo Park: AAAI Press, pp. 1–4.

- Cox, Michael, and Anita Raja. 2011. Metareasoning: An introduction. In Metareasoning: Thinking about Thinking. Edited by Michael T. Cox and Anita Raja. Cambridge: MIT Press, pp. 3–14.

- Cserna, Bence, Wheeler Ruml, and Jeremy Frank. 2017. Planning time to think: Metareasoning for on-line planning with durative actions. Paper presented at the International Conference on Automated Planning and Scheduling, Pittsburgh, PA, USA, June 18–23, vol. 27, pp. 56–60.

- Dannenhauer, Dustin, Michael Cox, and Hector Munoz-Avila. 2018. Declarative metacognitive expectations for high-level cognition. Advances in Cognitive Systems 6: 231–50.

- Dannenhauer, Dustin, Michael T. Cox, Shubham Gupta, Matt Paisner, and Don Perlis. 2014. Toward meta-level control of autonomous agents. Procedia Computer Science 41: 226–32.

- Dunlosky, John, and Robert Bjork. 2008. Handbook of Metamemory and Memory. New York: Psychology Press.

- El-Diraby, Tamer. 2014. Validating ontologies in informatics systems: Approaches and lessons learned for AEC. Journal of Information Technology in Construction 19: 474–93.

- Farmer, William. 2018. Incorporating quotation and evaluation into Church’s type theory. Information and Computation 260: 9–50.

- Fernández-López, Mariano, Asunción Gómez-Pérez, and Natalia Juristo. 1997. Methontology: From ontological art towards ontological engineering. Paper presented at the AAAI97 Spring Symposium Series on Ontological Engineering, Palo Alto, CA, USA, March 24–25; pp. 33–40.

- France-Mensah, Jojo, and William O’Brien. 2019. A shared ontology for integrated highway planning. Advanced Engineering Informatics 41: 100929.

- Fusch, Patricia, and Lawrence Ness. 2015. Are we there yet? Data saturation in qualitative research. Qualitative Report 20: 1408–16.

- Georgara, Athina, Carles Sierra, and Juan Rodríguez. 2020a. Edu2Com: An anytime algorithm to form student teams in companies. Paper presented at the AI for Social Good Workshop 2020, Virtual, July 20–21.

- Georgara, Athina, Carles Sierra, and Juan Rodríguez. 2020b. TAIP: An anytime algorithm for allocating student teams to internship programs. arXiv:2005.09331.

- Griffiths, Thomas, Frederick Callaway, Michael Chang, Erin Grant, Paul Krueger, and Falk Lieder. 2019. Doing more with less: Meta-reasoning and meta-learning in humans and machines. Current Opinion in Behavioral Sciences 29: 24–30.

- Gruber, Thomas. 1995. Toward principles for the design of ontologies used for knowledge sharing. International Journal of Human-Computer Studies 43: 907–28.

- Guo, Brian, and Yang Miang Goh. 2017. Ontology for design of active fall protection systems. Automation in Construction 82: 138–53.

- Hayes-Roth, Frederick, Donald Waterman, and Douglas Lenat. 1983. Building Expert Systems. Boston: Addison-Wesley Longman Publishing Co., Inc.

- Hevner, Alan, Salvatore March, Jinsoo Park, and Sudha Ram. 2004. Design science in information systems research. MIS Quarterly: Management Information Systems 28: 75–105.

- Horridge, Matthew, Rafael Gonçalves, Csongor Nyulas, Tania Tudorache, and Mark Musen. 2019. WebProtégé: A cloud-based ontology editor. Paper presented at the Web Conference 2019—Companion of the World Wide Web Conference, WWW 2019, San Francisco, CA, USA, May 13–17; pp. 686–89.

- Horvitz, Eric. 1987. Reasoning About Beliefs and Actions Under Computational Resource Constraints. Paper presented at the Third Conference on Uncertainty in Artificial Intelligence (UAI1987), Seattle, WA, USA, July 10–12.

- Houeland, Tor Gunnar, and Agnar Aamodt. 2018. A learning system based on lazy metareasoning. Progress in Artificial Intelligence 7: 129–46.

- Karpas, Erez, Oded Betzalel, Solomon Eyal Shimony, David Tolpin, and Ariel Felner. 2018. Rational deployment of multiple heuristics in optimal state-space search. Artificial Intelligence 256: 181–210.

- Kuokka, Daniel. 1991. MAX: A meta-reasoning architecture for “X”. ACM SIGART Bulletin 2: 93–97.

- Lieder, Falk, and Thomas Griffiths. 2017. Strategy selection as rational metareasoning. Psychological Review 124: 762.

- Lin, Christopher, Andrey Kolobov, Ece Kamar, and Eric Horvitz. 2015. Metareasoning for Planning Under Uncertainty. Paper presented at the Twenty-Fourth International Joint Conference on Artificial Intelligence (IJCAI 2015), Buenos Aires, Argentina, July 25–31.

- Madera-Doval, Dalia Patricia. 2019. A validated ontology for meta-level control domain. Acta Scientiæ Informaticæ 6: 26–30.

- Mikolov, Tomas, Ilya Sutskever, Kai Chen, Greg Corrado, and Jeff Dean. 2013. Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems. Lake Tahoe: Curran Associates, Inc., vol. 26.

- Milli, Smitha, Falk Lieder, and Thomas Griffiths. 2017. When does bounded-optimal metareasoning favor few cognitive systems? Paper presented at the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, February 4–9, vol. 31.

- Nelson, Thomas. 1990. Metamemory: A theoretical framework and new findings. In The Psychology of Learning and Motivation: Advances in Research and Theory. Edited by Gordon Bower. New York: Academic Press, pp. 125–73.

- Noy, Natalya, and Deborah McGuinness. 2001. Ontology Development 101: A Guide to Creating Your First Ontology. Knowledge Systems Laboratory Technical Report KSL-01-05 and Stanford Medical Informatics Technical Report SMI-2001-0880. Stanford, CA. Available online: https://protege.stanford.edu/conference/2005/slides/T1_Noy_Ontology101.pdf (accessed on 1 October 2022).

- Parashar, Priyam, Ashok Goel, Bradley Sheneman, and Henrik Christensen. 2018. Towards life-long adaptive agents: Using metareasoning for combining knowledge-based planning with situated learning. The Knowledge Engineering Review 33: e24.

- Peffers, Ken, Marcus Rothenberger, Tuure Tuunanen, and Reza Vaezi. 2012. Design science research evaluation. In International Conference on Design Science Research in Information Systems. Berlin and Heidelberg: Springer, pp. 398–410.

- Rebholz-Schuhmann, Dietrich, Miguel Arregui, Sylvain Gaudan, Harald Kirsch, and Antonio Jimeno. 2008. Text processing through Web services: Calling Whatizit. Bioinformatics 24: 296–98.

- Russell, Stuart, and Eric Wefald. 1991. Principles of metareasoning. Artificial Intelligence 49: 361–95.

- Schmill, Matt, Darsana Josyula, Michael L. Anderson, Shomir Wilson, Tim Oates, Don Perlis, and Scott Fults. 2007. Ontologies for Reasoning about Failures in AI Systems. Paper presented at the Workshop on Metareasoning in Agent Based Systems at the Sixth International Joint Conference on Autonomous Agents and Multiagent Systems, Honolulu, HI, USA, May 14–18.

- Schmill, Matthew, Michael Anderson, Scott Fults, Darsana Josyula, Tim Oates, Don Perlis, Hamid Shahri, Shomir Wilson, and Dean Wright. 2011. The metacognitive loop and reasoning about anomalies. In Metareasoning: Thinking about Thinking. Edited by Michael Cox and Anita Raja. Cambridge: The MIT Press, pp. 183–98.

- Silla, Carlos, and Alex Freitas. 2011. A survey of hierarchical classification across different application domains. Data Mining and Knowledge Discovery 22: 31–72.

- Sirin, Evren, Bijan Parsia, Bernardo Cuenca Grau, Aditya Kalyanpur, and Yarden Katz. 2007. Pellet: A practical OWL-DL reasoner. Journal of Web Semantics 5: 51–53.

- Sung, Yoonchang, Leslie Pack Kaelbling, and Tomás Lozano-Pérez. 2021. Learning When to Quit: Meta-Reasoning for Motion Planning. Paper presented at the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, September 27–October 1; pp. 4692–99.

- Svegliato, Justin, and Shlomo Zilberstein. 2018. Adaptive Metareasoning for Bounded Rational Agents. Paper presented at the IJCAI-ECAI Workshop on Architectures and Evaluation for Generality, Autonomy and Progress in AI (AEGAP), Stockholm, Sweden, July 15.

- Svegliato, Justin, Connor Basich, Sandhya Saisubramanian, and Shlomo Zilberstein. 2021. Using Metareasoning to Maintain and Restore Safety for Reliable Autonomy. Paper presented at the Submission to the IJCAI Workshop on Robust and Reliable Autonomy in the Wild (R2AW), Virtual, August 19–26.

- Svegliato, Justin, Kyle Hollins Wray, and Shlomo Zilberstein. 2018. Meta-Level Control of Anytime Algorithms with Online Performance Prediction. Paper presented at the Twenty-Seventh International Joint Conference on Artificial Intelligence, Stockholm, Sweden, July 13–19.

- Uschold, Mike, and Michael Gruninger. 1996. Ontologies: Principles, Methods and Applications. The Knowledge Engineering Review 11: 93–136. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.48.5917&rep=rep1&type=pdf (accessed on 1 October 2022).

- Van Assem, Mark, Aldo Gangemi, and Guus Schreiber. 2006. Conversion of WordNet to a standard RDF/OWL representation. Paper presented at the Fifth International Conference on Language Resources and Evaluation (LREC’06), Genoa, Italy, May 22–28.

| Literature Source | Ontological Terms |
|---|---|
| (Schmill et al. 2007, 2011) | sensor, reasoning process, rebuild models, recommendation recover, reinforcement learning, replan, response ontology, result, reward, self-awareness, sensor, sensor failure, sensor malfunction, sensor not reporting, state, system, failure, time, unanticipated perturbation |
| (Madera-Doval 2019) | agent, metacognition, self-regulation, metamemory, introspective monitoring, meta-level control, cognitive elements, cognitive level, task, reasoning, metareasoning, reasoning task, metareasoning task, object level, cognitive function, perception, situation assessment, categorization, recognition, belief maintenance, problem solving, planning, prediction, expectation, sensor, observation |

| Research Paper | Terms per Paper | Citations per Paper * |
|---|---|---|
| (Russell and Wefald 1991) | 48 | 444 |
| (Conitzer and Sandholm 2003) | 67 | 41 |
| (Cox 2005) | 63 | 261 |
| (Anderson and Oates 2007) | 36 | 91 |
| (Schmill et al. 2007) | 77 | 21 |
| (Cox and Raja 2008) | 55 | 100 |
| (Chen et al. 2013) | 37 | 3 |
| (Lin et al. 2015) | 54 | 46 |
| (Lieder and Griffiths 2017) | 37 | 23 |
| (Ackerman and Thompson 2017) | 36 | 105 |
| (Milli et al. 2017) | 35 | 39 |
| (Cserna et al. 2017) | 34 | 9 |
| (Karpas et al. 2018) | 22 | 5 |
| (Farmer 2018) | 7 | 5 |
| (Madera-Doval 2019) | 27 | 1 |
| (Houeland and Aamodt 2018) | 25 | 5 |
| (Parashar et al. 2018) | 33 | 1 |
| (Griffiths et al. 2019) | 18 | 29 |
| (Svegliato et al. 2018) | 41 | 16 |
| (Sung et al. 2021) | 36 | 0 |

| Classes of the Ontology |
|---|
| action, action selection, agent, algorithm, allocate deliberation time, allocating evaluation effort, answer, anytime algorithm, anytime planner, assess anomaly, bayes algorithm, best action, calculate quality of current solution, choose query to ask, cognitive level, cognitive problem, cognitive task, component, computational method, computational step, computational step result, computational time, compute expected utility, compute performance projection, compute solution quality, constraint, control, control policy, current state of the world, default action, default solution, disambiguate state, evaluation task, evaluation test, event, expectation, explain failure, explanation, failure, failure explanation, failure state, function, generate expectation, generate learning goals, get current solution, goal, ground level, ground-level story, guided response, imxp, increment time step, information gathering, information gathering policy, information gathering task, initialize performance history, initialize time step, internal state, introspection, itmxp, knowing current state of the world, knowledge test, learner, learning goal, limited time, make recommendation, mental action, meta level, metacognitive loop, metacognitive problem, metalevel control, metareasoner, metareasoning component, metareasoning problem, metareasoning task, model, model of the self, model of the world, monitor behavior, monotonicity, nag cycle, neural network, nonlinear regression, note anomaly, object level, object-level process, observation, online control policy, optimal information gathering policy, optimal test policy, outcome state, perception, performance history, performance predictor, performance profile, performance projection, perturbation, perturbation detection, phase, plan, planner, planning, planning task, policy, predict performance, problem, process, profile, problem space, property, question, recommendation action, reactivate learning, reasoner, reasoning, reasoning failure, reasoning problem, reasoning strategy, reasoning system, reasoning task, recommendation, recover, replan, resource, result, run test, save costs, selecting action, sensor, situation assessment, sleep, solution, solution quality, start acting, start anytime algorithm, state, state of the world, stop reasoning, stopping condition, story, story understanding task, strategy, success state, system, task, test, test policy, time, time dependent utility function, trace, unanticipated perturbation, understanding task, unit of time, unusual event, utility function, vector, violated expectation |

| Class | Property | Domain | Value Restriction |
|---|---|---|---|
| MetaLevel | rdf:subClassOf | CognitiveLevel | |
| | has_problemspace | ProblemSpace | Non-empty array |
| | has_metareasoner | Metareasoner | An algorithm |
| | has_current_metareasoning_loop | Integer | Positive integer |
| MetareasoningTask | rdf:subClassOf | MetacognitiveTask | |
| | has_goal | Goal | |
| | has_id | String | Unique value |
| | has_input | Array | An array of objects |
| | has_output | Array | Non-empty |
| | has_runtime | Number | Positive number |
| | has_preconditions | State | Non-empty array of states |
| | has_effects | State | Non-empty array of states |
| | has_name | String | Alphanumerical value |

| Ontologies | Precision | Recall | F-Measure |
|---|---|---|---|
| IM-Onto | 92% | 90% | 91% |
| MCL (Schmill et al. 2007, 2011) | 47% | 37% | 41% |
| MLCO (Madera-Doval 2019) | 63% | 45% | 53% |
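
The F-Measure column is consistent with the balanced F-measure, the harmonic mean of precision and recall. The text available here does not state which formula was used, so treating it as the balanced form is an assumption; the short check below recomputes the column from the reported precision and recall values.

```python
def f_measure(precision, recall):
    """Balanced F-measure: the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F-measure) taken from the table above.
reported = {
    "IM-Onto": (0.92, 0.90, 0.91),
    "MCL": (0.47, 0.37, 0.41),
    "MLCO": (0.63, 0.45, 0.53),
}

# Recomputed values agree with the reported ones to within one percentage point.
recomputed = {name: f_measure(p, r) for name, (p, r, _) in reported.items()}
```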


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Caro, M.F.; Cox, M.T.; Toscano-Miranda, R.E. A Validated Ontology for Metareasoning in Intelligent Systems. J. Intell. 2022, 10, 113. https://doi.org/10.3390/jintelligence10040113
