LMI-Based Results on Robust Exponential Passivity of Uncertain Neutral-Type Neural Networks with Mixed Interval Time-Varying Delays via the Reciprocally Convex Combination Technique

Abstract

1. Introduction

2. Preliminaries

3. Main Results

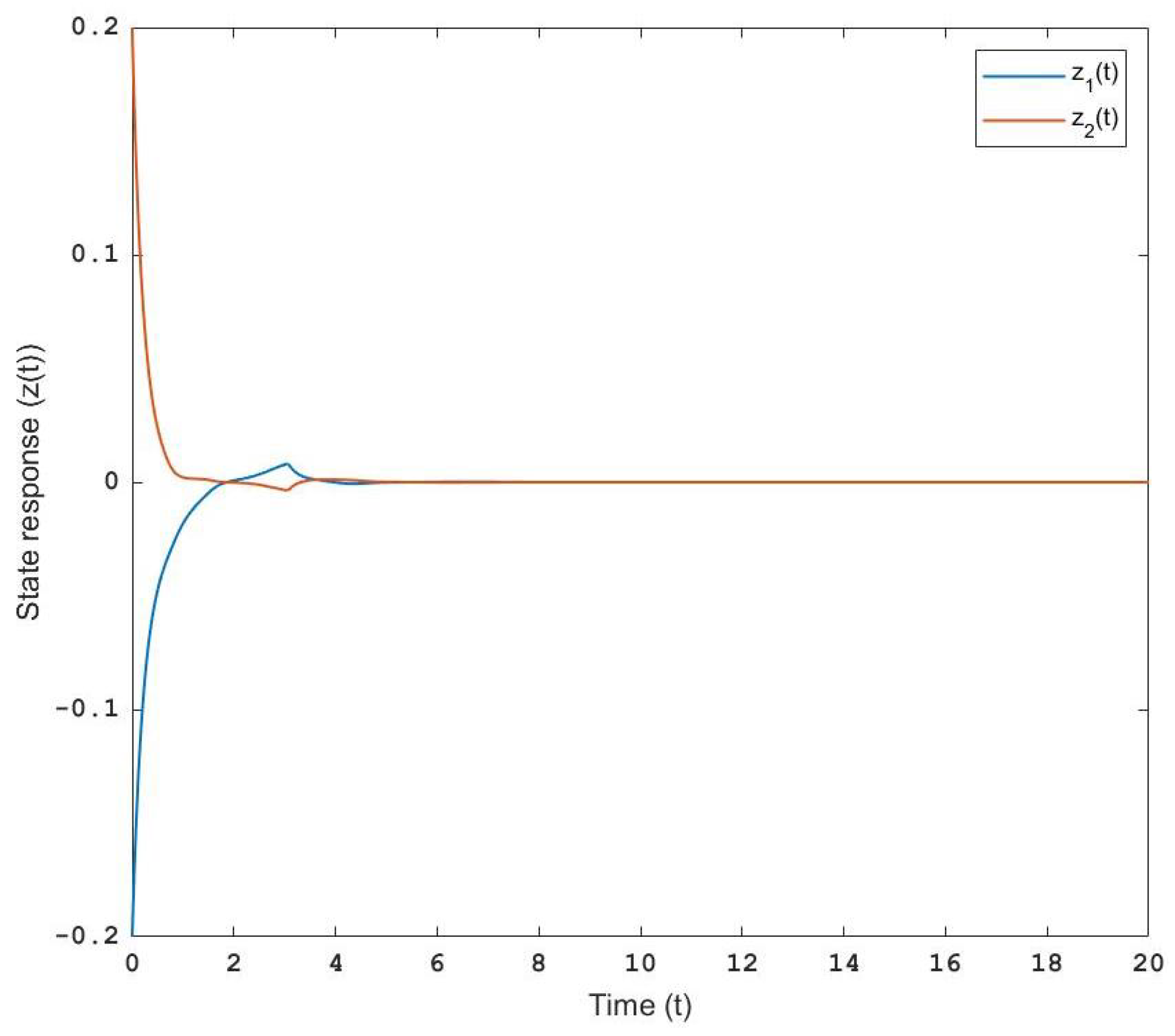

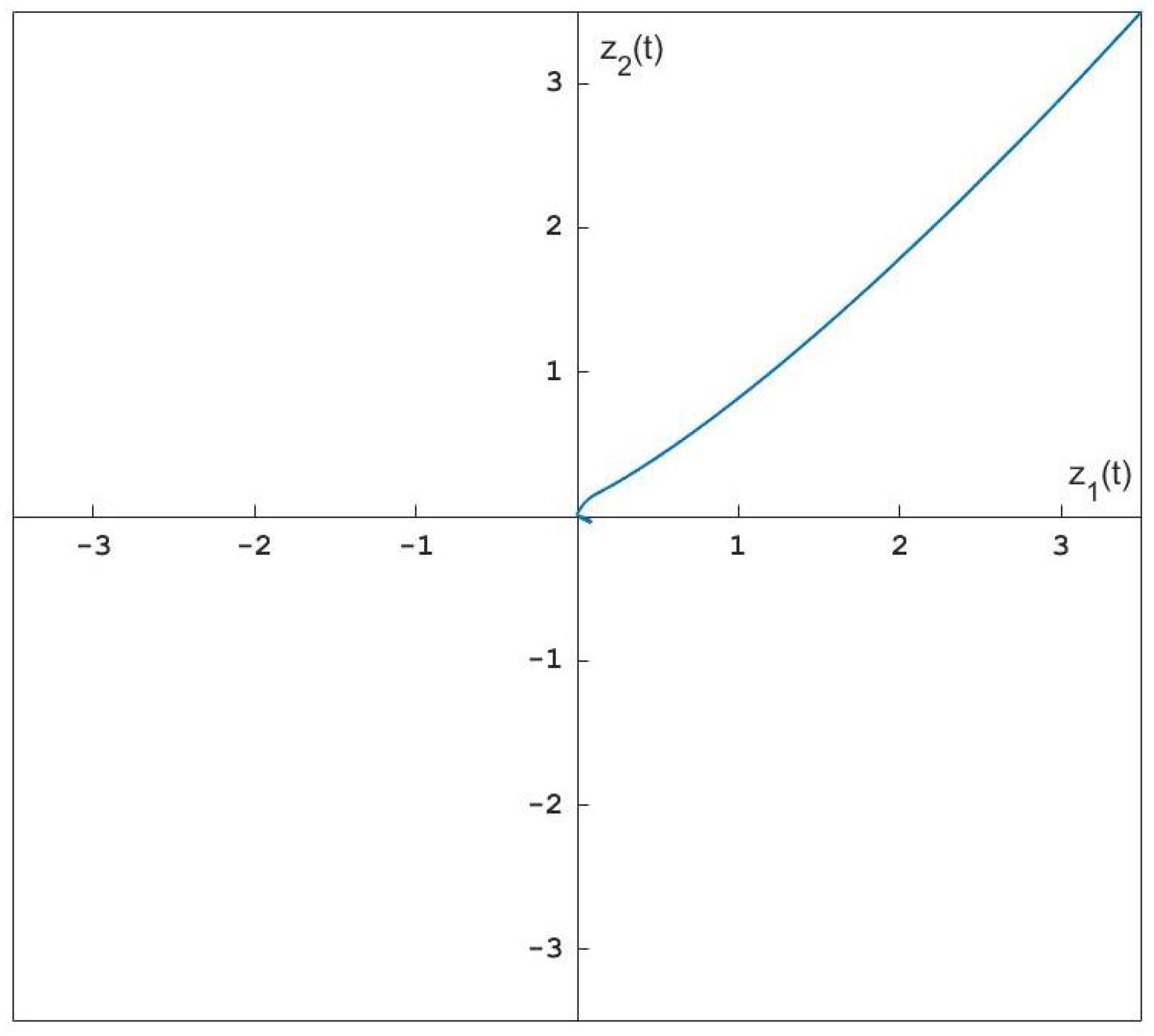

4. Numerical Examples

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Shang, Y. On the delayed scaled consensus problems. Appl. Sci. 2017, 7, 713.

- Park, J.H.; Kwon, O.M. Global stability for neural networks of neutral-type with interval time-varying delays. Chaos Solitons Fractals 2009, 41, 1174–1181.

- Sun, J.; Liu, G.P.; Chen, J.; Rees, D. Improved delay-range-dependent stability criteria for linear systems with time-varying delays. Automatica 2010, 46, 466–470.

- Peng, C.; Fei, M.R. An improved result on the stability of uncertain T-S fuzzy systems with interval time-varying delay. Fuzzy Sets Syst. 2013, 212, 97–109.

- Manivannan, R.; Mahendrakumar, G.; Samidurai, R.; Cao, J.; Alsaedi, A. Exponential stability and extended dissipativity criteria for generalized neural networks with interval time-varying delay signals. J. Frankl. Inst. 2017, 354, 4353–4376.

- Zhang, S.; Qi, X. Improved Integral Inequalities for Stability Analysis of Interval Time-Delay Systems. Algorithms 2017, 10, 134.

- Manivannan, R.; Samidurai, R.; Cao, J.; Alsaedi, A.; Alsaadi, F.E. Delay-dependent stability criteria for neutral-type neural networks with interval time-varying delay signals under the effects of leakage delay. Adv. Differ. Equ. 2018, 2018, 53.

- Klamnoi, A.; Yotha, N.; Weera, W.; Botmart, T. Improved results on passivity analysis of neutral-type neural networks with time-varying delays. J. Res. Appl. Mech. Eng. 2018, 6, 71–81.

- Hale, J.K. Introduction to Functional Differential Equations; Springer: New York, NY, USA, 2001.

- Niculescu, S.I. Delay Effects on Stability: A Robust Control Approach; Springer: London, UK, 2001.

- Brayton, R.K. Bifurcation of periodic solutions in a nonlinear difference-differential equation of neutral type. Quart. Appl. Math. 1966, 24, 215–224.

- Kuang, Y. Delay Differential Equations with Applications in Population Dynamics; Academic Press: Boston, MA, USA, 1993.

- Belevitch, V. Classical Network Synthesis; Van Nostrand: New York, NY, USA, 1968.

- Gu, K.; Kharitonov, V.L.; Chen, J. Stability of Time-Delay Systems; Birkhäuser: Berlin, Germany, 2003.

- Haykin, S. Neural Networks: A Comprehensive Foundation; Prentice–Hall: Englewood Cliffs, NJ, USA, 1998.

- Joya, G.; Atencia, M.A.; Sandoval, F. Hopfield neural networks for optimization: Study of the different dynamics. Neurocomputing 2002, 43, 219–237.

- Manivannan, R.; Samidurai, R.; Zhu, Q. Further improved results on stability and dissipativity analysis of static impulsive neural networks with interval time-varying delays. J. Frankl. Inst. 2017, 354, 6312–6340.

- Marcu, T.; Köppen-Seliger, B.; Stücher, R. Design of fault detection for a hydraulic looper using dynamic neural networks. Control Eng. Pract. 2008, 16, 192–213.

- Stamova, I.; Stamov, T.; Li, X. Global exponential stability of a class of impulsive cellular neural networks with supremums. Int. J. Adapt. Control Signal Process. 2014, 28, 1227–1239.

- Park, M.J.; Kwon, O.M.; Ryu, J.H. Passivity and stability analysis of neural networks with time-varying delays via extended free-weighting matrices integral inequality. Neural Netw. 2018, 106, 67–78.

- Wu, Z.G.; Park, J.H.; Su, H.; Chu, J. New results on exponential passivity of neural networks with time-varying delays. Nonlinear Anal. Real World Appl. 2012, 13, 1593–1599.

- Du, Y.; Zhong, S.; Xu, J.; Zhou, N. Delay-dependent exponential passivity of uncertain cellular neural networks with discrete and distributed time-varying delays. ISA Trans. 2015, 56, 1–7.

- Xu, S.; Lam, J.; Ho, D.W.C.; Zou, Y. Delay-dependent exponential stability for a class of neutral neural networks with time delays. J. Comput. Appl. Math. 2005, 183, 16–28.

- Tu, Z.W.; Cao, J.; Alsaedi, A.; Alsaadi, F.E.; Hayat, T. Global Lagrange stability of complex-valued neural networks of neutral type with time-varying delays. Complexity 2016, 21, 438–450.

- Maharajan, C.; Raja, R.; Cao, J.; Rajchakit, G.; Alsaedi, A. Novel results on passivity and exponential passivity for multiple discrete delayed neutral-type neural networks with leakage and distributed time-delays. Chaos Solitons Fractals 2018, 115, 268–282.

- Weera, W.; Niamsup, P. Novel delay-dependent exponential stability criteria for neutral-type neural networks with non-differentiable time-varying discrete and neutral delays. Neurocomputing 2016, 173, 886–898.

- Hill, D.J.; Moylan, P.J. Stability results for nonlinear feedback systems. Automatica 1977, 13, 377–382.

- Santosuosso, G.J. Passivity of nonlinear systems with input-output feedthrough. Automatica 1997, 33, 693–697.

- Xie, L.; Fu, M.; Li, H. Passivity analysis and passification for uncertain signal processing systems. IEEE Trans. Signal Process. 1998, 46, 2394–2403.

- Chua, L.O. Passivity and complexity. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 1999, 46, 71–82.

- Calcev, G.; Gorez, R.; Neyer, M.D. Passivity approach to fuzzy control systems. Automatica 1998, 34, 339–344.

- Wu, C.W. Synchronization in arrays of coupled nonlinear systems: Passivity, circle criterion, and observer design. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 2001, 48, 1257–1261.

- Chellaboina, V.; Haddad, W.M. Exponentially dissipative dynamical systems: A nonlinear extension of strict positive realness. J. Math. Prob. Eng. 2003, 2003, 25–45.

- Fradkov, A.L.; Hill, D.J. Exponential feedback passivity and stabilizability of nonlinear systems. Automatica 1998, 34, 697–703.

- Hayakawa, T.; Haddad, W.M.; Bailey, J.M.; Hovakimyan, N. Passivity-based neural network adaptive output feedback control for nonlinear nonnegative dynamical systems. IEEE Trans. Neural Netw. 2005, 16, 387–398.

- Park, P.G.; Ko, J.W.; Jeong, C. Reciprocally convex approach to stability of systems with time-varying delays. Automatica 2011, 47, 235–238.

- Li, T.; Guo, L.; Lin, C. A new criterion of delay-dependent stability for uncertain time-delay systems. IET Control Theory Appl. 2007, 1, 611–616.

| Method | 0.5 | 0.8 |
|---|---|---|
| Park and Kwon [2] | 1.65 | - |
| Tu et al. [24] | 2.66 | - |
| Manivannan et al. [7] | 3.94 | 3.43 |
| Theorem 1 | 4.06 | 3.68 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Samorn, N.; Yotha, N.; Srisilp, P.; Mukdasai, K. LMI-Based Results on Robust Exponential Passivity of Uncertain Neutral-Type Neural Networks with Mixed Interval Time-Varying Delays via the Reciprocally Convex Combination Technique. Computation 2021, 9, 70. https://doi.org/10.3390/computation9060070
