Factors, Prediction, Explainability, and Simulating University Dropout Through Machine Learning: A Systematic Review, 2012–2024

Abstract

1. Introduction

- To provide an inventory of UED factors and of the predictive, explanatory, and simulation models applied to UED.

- To provide the reader with a wide range of bibliographical references to understand and research UED using ML.

2. Theoretical Background

2.1. University Dropout

2.2. Machine Learning

2.3. Explainable Artificial Intelligence (XAI)

2.4. Simulation

3. Materials and Methods

- Planning: in this phase, the research questions are established, and the review protocol is defined. This protocol outlines the sources of information used, the criteria for including and excluding studies, the data search strategy, and the period considered for the review.

- Development: primary studies are selected according to the plan, and their quality is assessed before data extraction and synthesis.

- Results: the statistical analyses and the results, which answer the research questions, are presented in Section 3.3 and Section 4, respectively.
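The planning phase fixes the inclusion and exclusion criteria and the review period before any study is screened, so that screening during development can be applied mechanically and reproducibly. The Python below is a minimal sketch of that idea, assuming hypothetical criteria: the 2012–2024 window from the title, peer review, and two required search terms. The `Record` fields and the keyword list are illustrative assumptions, not the protocol actually used in this review.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """A candidate study returned by a database search (fields are hypothetical)."""
    title: str
    abstract: str
    year: int
    peer_reviewed: bool


# Hypothetical criteria, for illustration only; the period comes from the review's title.
PERIOD = (2012, 2024)
REQUIRED_TERMS = ("dropout", "machine learning")


def meets_criteria(record: Record) -> bool:
    """Inclusion test: a record failing any single criterion is excluded."""
    start, end = PERIOD
    if not start <= record.year <= end:
        return False
    if not record.peer_reviewed:
        return False
    text = f"{record.title} {record.abstract}".lower()
    return all(term in text for term in REQUIRED_TERMS)


def screen(records):
    """Split candidate records into (selected, excluded) lists, preserving order."""
    selected, excluded = [], []
    for record in records:
        (selected if meets_criteria(record) else excluded).append(record)
    return selected, excluded


if __name__ == "__main__":
    candidates = [
        Record("Predicting university dropout with machine learning", "", 2020, True),
        Record("Dropout in MOOCs", "A machine learning study", 2010, True),
        Record("Student retention survey", "Descriptive statistics", 2019, True),
    ]
    selected, excluded = screen(candidates)
    print(len(selected), "selected,", len(excluded), "excluded")
```

Keeping each criterion as a separate early return makes it easy to log which criterion excluded a record, which is what a PRISMA-style flow count needs.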

3.1. Planning

- Q1. What factors exist for UED, and which are the most studied?

- Q2. What machine learning models are used for predicting UED?

- Q3. What are the advances of XAI in UED?

- Q4. What simulation models exist for UED?

3.2. Development

3.3. Statistics

3.3.1. Number of Potential and Selected Items

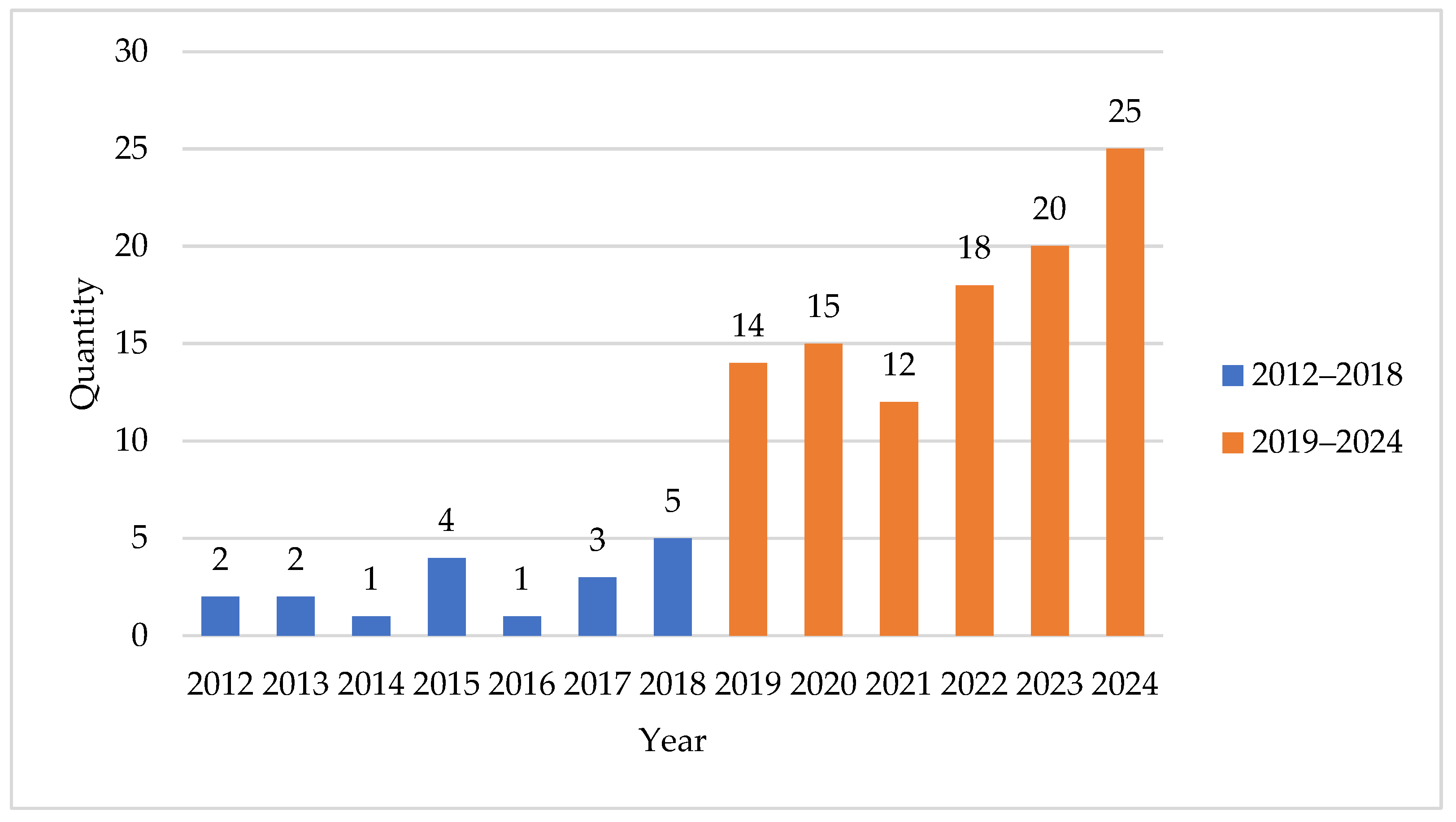

3.3.2. Trend of Articles per Year

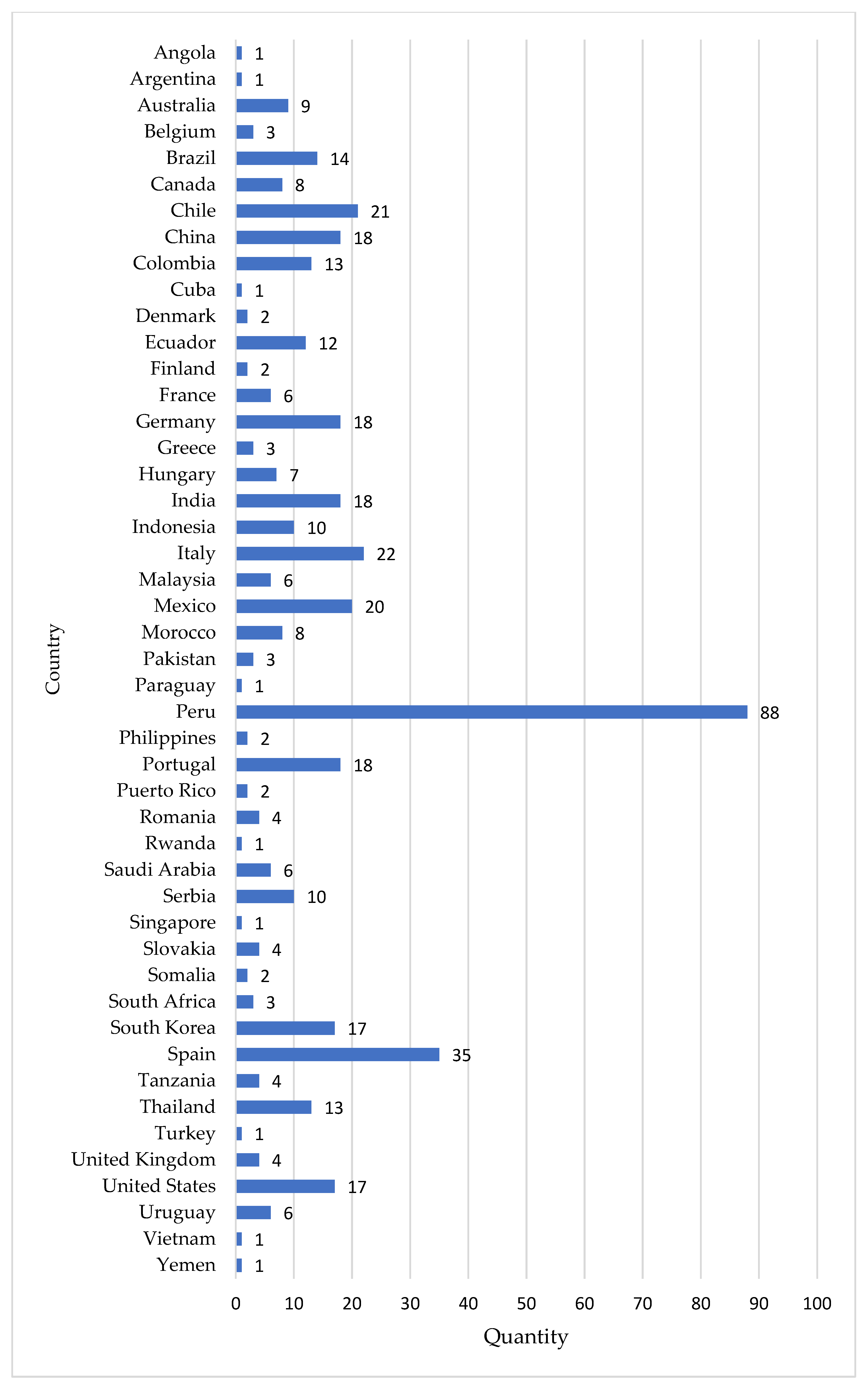

3.3.3. Number of Authors by Country of Affiliation

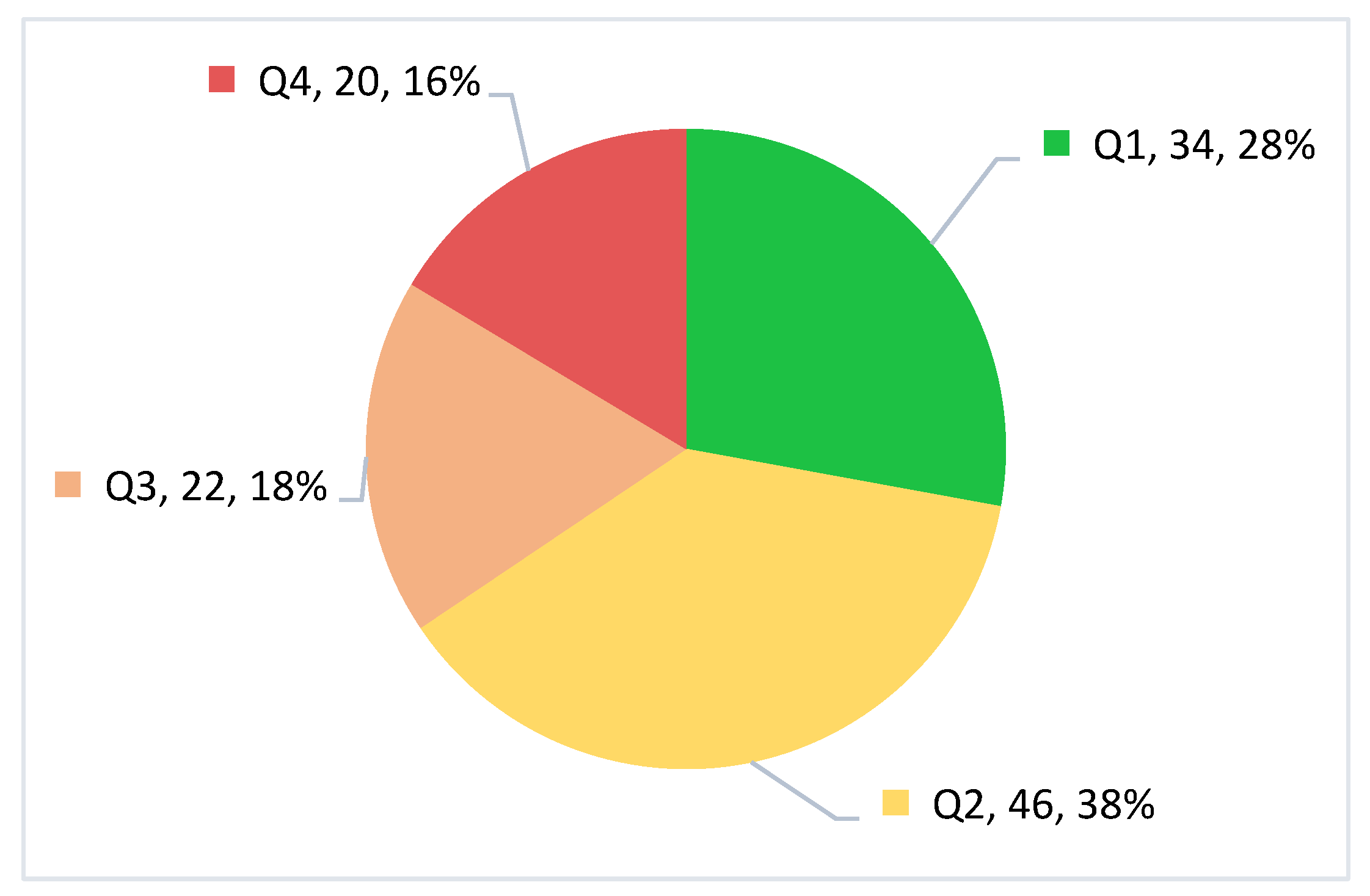

3.3.4. Selected Articles by Quartile

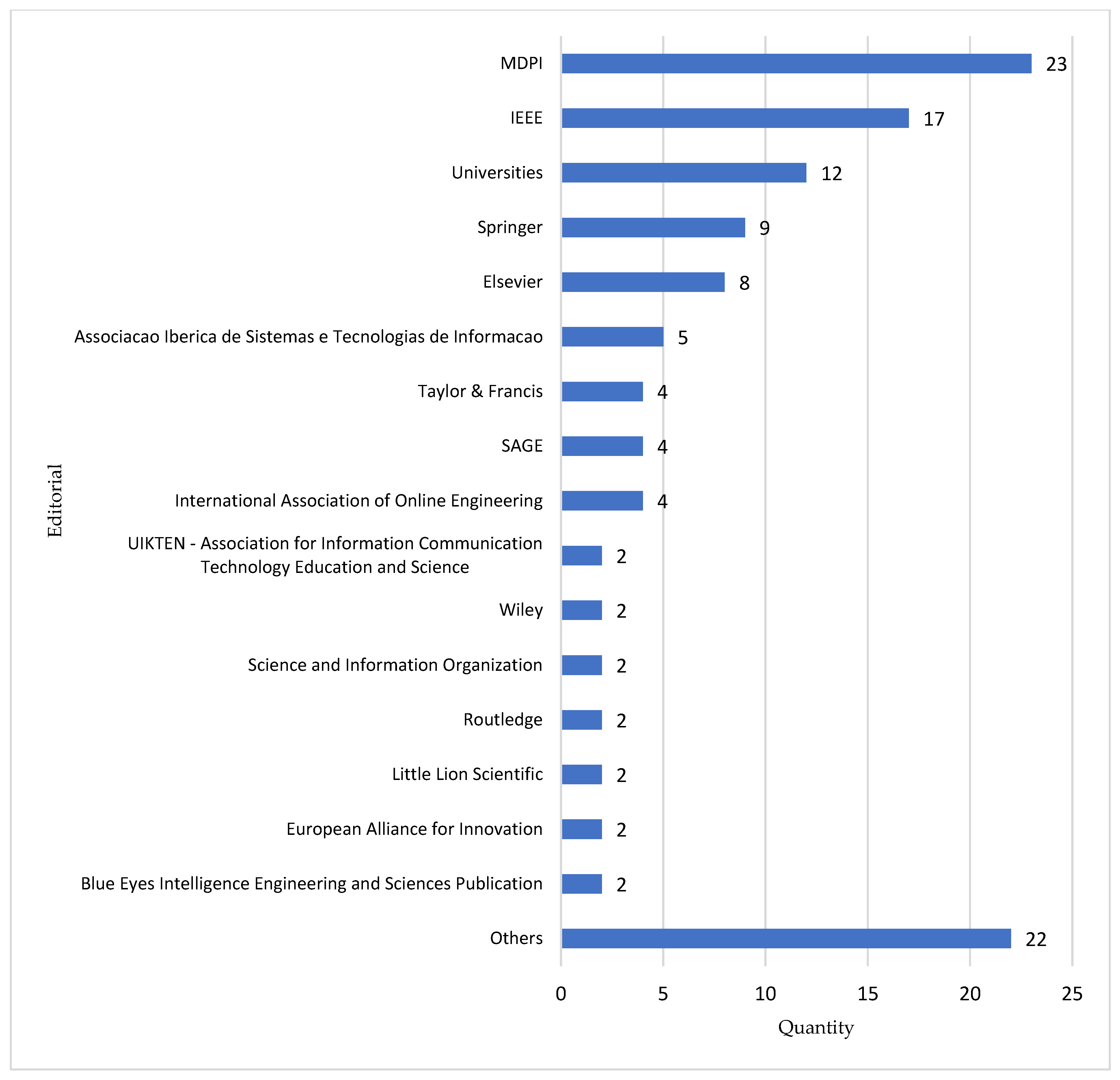

3.3.5. Selected Articles by Publisher

4. Results

4.1. What UED Factors Exist, and Which Are the Most Studied?

4.1.1. Demographic Factors

4.1.2. Socioeconomic Factors

4.1.3. Institutional Factors

4.1.4. Personal Factors

4.1.5. Academic Factors

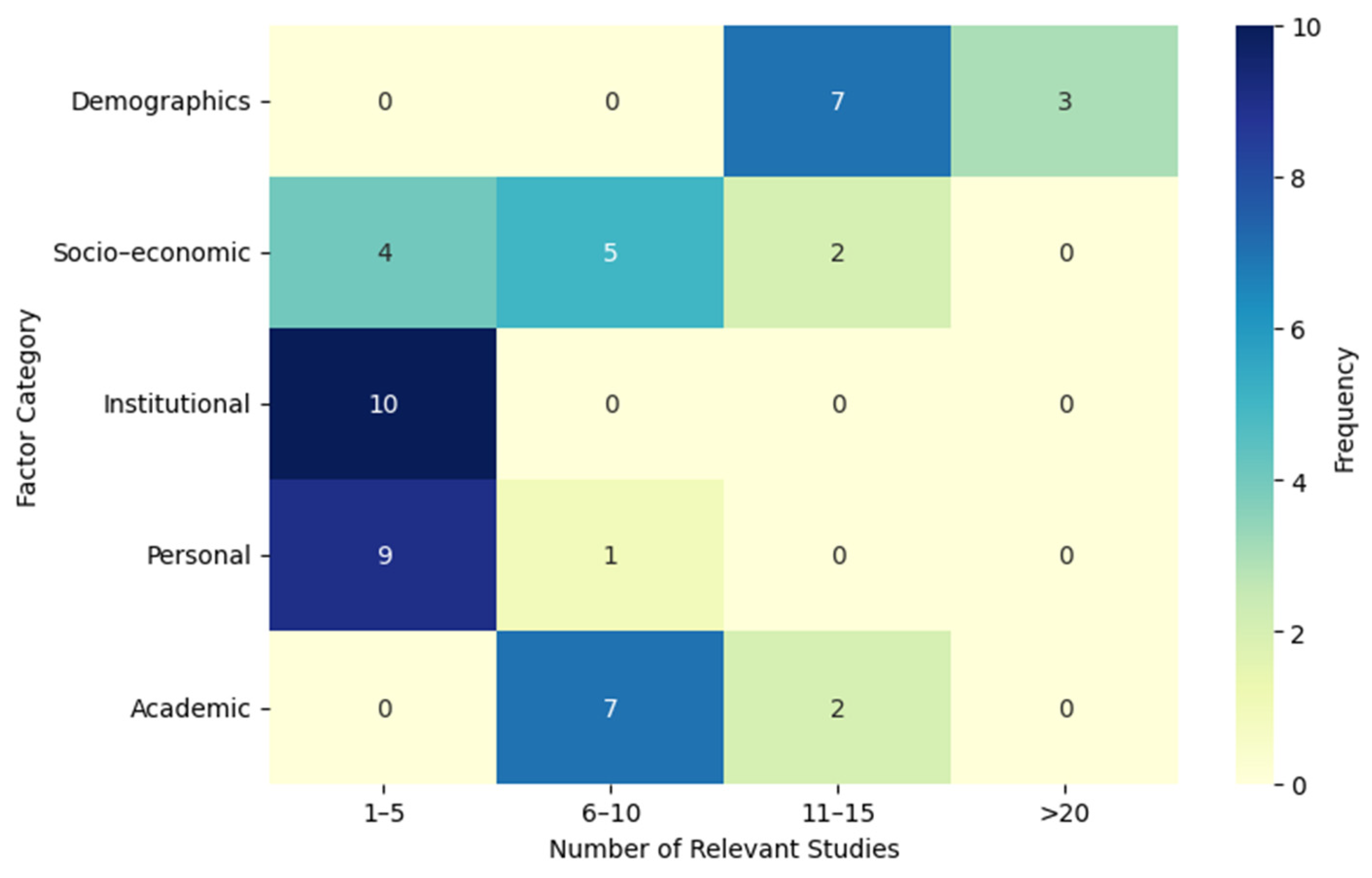

4.1.6. Summary of Categories

4.2. Which ML Models Are Used for Predicting UED?

4.3. What Progress Has XAI Made in UED?

4.4. What Simulation Models Exist for UED?

5. Discussion

5.1. About Factors

5.2. About the Model

5.3. About Explanation

5.4. About Simulation

5.5. Factors, Prediction, Explanation, and Simulation

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| KPCA | Kernel Principal Component Analysis |

| PCA | Principal Component Analysis |

| LPP | Locality Preserving Projection |

| NPE | Neighborhood Preserving Embedding |

| IsoP | Isometric Projection |

| WCT-T | Weighted Connected Triple Transformation |

| WTQ-T | Weighted Triple Quality Transformation |

| DT | Decision Tree |

| LR | Logistic Regression |

| DT-ID3 | Iterative Dichotomiser 3 |

| SVM | Support Vector Machine |

| RF | Random Forest |

| HDBSCAN | Hierarchical Density-Based Spatial Clustering of Applications with Noise |

| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |

| GMERF | Generalized Mixed-Effects Random Forest |

| GB | Gradient Boosting |

| GBM | Gradient Boosting Machine |

| XGBoost | Extreme Gradient Boosting |

| CNNs | Convolutional Neural Networks |

| DP-CNN | Convolutional Neural Network with Dynamic Pooling |

| NB | Naive Bayes |

| CLSA | Concept-Level Sentiment Analysis |

| KNN | K-Nearest Neighbors |

| ANNs | Artificial Neural Networks |

| AHP | Analytic Hierarchy Process |

| LSTM | Long Short-Term Memory |

| GLM | Generalized Linear Model |

| SGD | Stochastic Gradient Descent |

| MLP | Multilayer Perceptron |

| AdaBoost | Adaptive Boosting |

| BAG | Bootstrap Aggregated Decision Trees |

| SVMSMOTE | Support Vector Machine-based Synthetic Minority Over-Sampling Technique |

| SMOTE | Synthetic Minority Over-Sampling Technique |

| MMFA | Modified Mutated Firefly Algorithm |

| GBNs | Gaussian Bayesian Networks |

| FFNN | Feed Forward Neural Network |

| BNs | Bayesian Networks |

| RBF | Radial Basis Function |

| DT-CHAID | Decision Tree-Chi-Square Automatic Interaction Detector |

| LLM | Logit Leaf Model |

| LMT | Logistic Model Tree |

| LightGBM | Light Gradient Boosting Machine |

| SEDM | Student Educational Data Mining |

| PESFAM | Probabilistic Ensemble Simplified Fuzzy ARTMAP |

| FNN | Feed Forward Neural Network |

| BRF | Balanced Random Forest |

| EE | Easy Ensemble |

| RB | RUSBoost |

| CART | Classification and Regression Trees |

| CTM | Classification Tree Model |

| SMOTE-NC | Synthetic Minority Over-Sampling Technique for Nominal and Continuous Features |

| ETC | Extra Trees Classifier |

| CIT | Conditional Inference Tree |

| Bagged CART | Classification and Regression Tree Bagging |

| FTT | Feature Tokenizer Transformer |

| GMM | Gaussian Mixture Model |

| GBT | Gradient Boosted Trees |

| NNs | Neural Networks |

| LDA | Linear Discriminant Analysis |

| PR | Polynomial Regression |

| PEM-SNN | Piecewise Exponential Model with Structural Neural Network |

| ARD | Automatic Relevance Determination |

| LASSO | Least Absolute Shrinkage and Selection Operator |

| BR | Bayesian Ridge |

| LIRE | Linear Regression |

| RR | Ridge Regression |

| DR | Dummy Regressor |

| IF | Isolation Forest |

| DC | Data Cleaning |

| DTA | Data Transformation |

| FS | Feature Selection |

| SV | Standardization of Variables |

| VC | Variable Coding |

| SMOTE | Synthetic Minority Oversampling Technique |

| EV | Elimination of Variables |

| TV | Transformation of Variables |

| DR | Dimensionality Reduction |

| CC | Categorical Coding |

| DS | Data Selection |

Appendix A

| Id | Factor | References | Id | Factor | References |

|---|---|---|---|---|---|

| F001 | Gender | [7,14,39,50,51,53,54,58,59,60,61,63,64,65,66,67,68,69,71,72,74,77,78,82,83,86,88,89,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110] | F039 | Travel time to university | [93] |

| F002 | Age | [7,15,51,54,59,64,65,66,67,68,69,71,72,74,77,78,80,83,84,86,89,91,94,97,98,99,100,101,105,108,109,111,112,113,114,115,116,117,118,119] | F040 | University of origin | [116] |

| F003 | Marital status | [51,59,61,68,69,71,76,80,86,91,93,97,102,106,108,114,116,118] | F041 | Citizenship status | [52] |

| F004 | Sex | [21,55,78,80,84,86,90,91,111,112,114,115,118,120,121,122,123,124] | F042 | Geographical displacement | [130] |

| F005 | Place of residence | [7,14,39,51,64,65,66,68,74,86,93,106,108,114,121,125] | F043 | First-generation student | [95] |

| F006 | Place of origin | [39,51,53,54,71,78,89,90,91,93] | F044 | City | [110] |

| F007 | Nationality | [7,21,53,55,58,61,64,65,76,93,101,104,115,126] | F045 | Multidimensional Poverty Index of the school | [103] |

| F008 | Parents’ educational level | [59,70,72,93,94,95,97,99,112,117,118] | F046 | Zonal Address | [91] |

| F009 | Type of school | [14,64,65,66,75,78,86,89,91,95,103,109] | F047 | Pre-university preparation | [91] |

| F010 | Ethnicity | [72,74,77,83,84,86,97,98,112,113,126] | F048 | Housing tenure | [91] |

| F011 | Date of birth | [53,58,65,87,120,121,127] | F049 | Type of construction | [91] |

| F012 | Registration age | [14,55,61,73,93,106] | F050 | Water | [91] |

| F013 | School | [39,58,72,78,108,129] | F051 | Drain | [91] |

| F014 | Foreign | [55,108,109,117,122] | F052 | Phone | [91] |

| F015 | Province of origin | [91,96,106,118] | F053 | Colour TV | [91] |

| F016 | Number of siblings | [114,116,129] | F054 | Radio | [91] |

| F017 | Type of housing | [72,101,114] | F055 | Sound equipment | [91] |

| F018 | Zip code | [51,88,113] | F056 | Iron | [91] |

| F019 | Country of origin | [99,101,108] | F057 | Cellular | [91] |

| F020 | Region | [39,117] | F058 | Laptop | [91] |

| F021 | Type of university | [39,70] | F059 | Refrigerator | [91] |

| F022 | Higher education centre | [101,122] | F060 | Personal library | [91] |

| F023 | Change of residence | [114,130] | F061 | Wardrobe | [91] |

| F024 | Foreigner | [78,93] | F062 | Cable television | [91] |

| F025 | Displaced student | [55,61] | F063 | Home environments | [91] |

| F026 | Number of children | [80,85] | F064 | Number of floors | [91] |

| F027 | Computer | [77,91] | F065 | Number of bedrooms | [91] |

| F028 | Generation | [119] | F066 | Number of kitchens | [91] |

| F029 | Migration status | [72] | F067 | Number of bathrooms | [91] |

| F030 | Country of origin risk | [73] | F068 | Number of rooms | [91] |

| F031 | Proximity to the university | [61] | F069 | Number of dining rooms | [91] |

| F032 | Cohabitation status with parents | [61] | F070 | Orphanhood | [91] |

| F033 | Generation | [119] | F071 | Lives alone | [91] |

| F034 | Family size | [93] | F072 | Breadwinner | [91] |

| F035 | Family you live with | [116] | F073 | Marital Status of Father | [74] |

| F036 | Community support | [61] | F074 | Marital Status of Mother | [74] |

| F037 | Live in the country or the city | [106] | F075 | Area of residence | [77] |

| F038 | Family municipality | [105] |

| Id | Factor | References | Id | Factor | References |

|---|---|---|---|---|---|

| F076 | Scholarships | [55,61,72,75,83,93,94,99,104,106,112,120,127] | F116 | Total amount of scholarships | [88] |

| F077 | Works | [54,60,69,71,80,91,94,108,114,125,128] | F117 | Suspensions | [122] |

| F078 | Family income | [59,80,86,95,97,98,102,125,129] | F118 | Single with dependents | [131] |

| F079 | Income level | [14,58,80,89,96,108,118] | F119 | Family financial support | [72] |

| F080 | Admission score | [14,39,78,95,98,121,123] | F120 | Health insurance | [104] |

| F081 | Disability | [69,72,77,88,100,105,114] | F121 | Type of insurance | [116] |

| F082 | Educational level | [54,101,105,108,117,122] | F122 | Medical insurance company | [54] |

| F083 | Socioeconomic status | [64,77,83,95,124] | F123 | Financial commitment of the firstborn son to his family | [85] |

| F084 | Father’s profession | [15,76,93,129] | F124 | Student perspective on their integration into the labor market | [85] |

| F085 | Financial aid | [68,80,83,100,112] | F125 | Economic problems | [130] |

| F086 | Mother’s qualification | [55,61,102] | F126 | Lack of family support | [130] |

| F087 | Father’s qualification | [55,61,102] | F127 | Mother’s highest educational level | [126] |

| F088 | Father’s employment status | [15,55,102] | F128 | Father’s highest educational level | [126] |

| F089 | Mother’s occupation | [15,93,102] | F129 | Employment status | [95] |

| F090 | Internet access | [77,91,97,114,129] | F130 | Housing situation | [95] |

| F091 | Financial situation | [80,121,123] | F131 | Monthly tuition payment | [103] |

| F092 | Type of scholarship | [68,79,99] | F132 | Social stratification of the school | [103] |

| F093 | Total income | [66,77,91] | F133 | Household composition | [91] |

| F094 | Study financing | [80,81] | F134 | Family Burden | [91] |

| F095 | Working conditions | [59,72] | F135 | Children in Higher Education | [91] |

| F096 | Type of transport | [102,114] | F136 | Economic dependence | [91] |

| F097 | Current occupation | [113,118] | F137 | Head of household | [91] |

| F098 | School tuition cost | [78,117] | F138 | Economic income modality | [91] |

| F099 | Debtor | [55,61] | F139 | Access to technology | [65] |

| F100 | Registrations up to date | [55,61] | F140 | Latitude | [74] |

| F101 | Dependence on parents | [71,114] | F141 | Longitude | [74] |

| F102 | Source of income | [77,96] | F142 | Social class | [74] |

| F103 | Mother’s profession | [76,93] | F143 | Brothers at school | [74] |

| F104 | Economic indicator | [112] | F144 | Type of enrollment | [75] |

| F105 | Professional status | [101] | F145 | Type of income | [75] |

| F106 | Political status | [106] | F146 | Unemployment rate | [76] |

| F107 | Works part-time | [133] | F147 | Tuition payment up to date | [76] |

| F108 | Student loan | [132] | F148 | Economic situation | [77] |

| F109 | Money for food | [116] | F149 | Number of people in the household | [77] |

| F110 | Study books | [116] | F150 | Eligibility | [112] |

| F111 | Scholarship percentage | [78] | F151 | Academic integration | [83] |

| F112 | Parents’ main field | [59] | F152 | Subsidized loan | [83] |

| F113 | Student’s profession | [101] | F153 | Unsubsidized loan | [83] |

| F114 | Percentage of loans | [78] | F154 | Work-study | [83] |

| F115 | Total percentage of aid | [78] | F155 | Aid for merit | [83] |

| Id | Factor | References | Id | Factor | References |

|---|---|---|---|---|---|

| F156 | Infrastructure | [80,81,84] | F168 | Counselor’s perception of the counselor’s own expectations | [126] |

| F157 | Educational services | [66,83] | F169 | Counselor’s perception of the director’s expectations | [126] |

| F158 | Suitable equipment | [80,81] | F170 | Institutional integrity | [84] |

| F159 | Place | [78,89] | F171 | Social infrastructure | [84] |

| F160 | Institution size | [83,84] | F172 | Social aspects induction program | [84] |

| F161 | Area | [60] | F173 | Institutional control | [83] |

| F162 | Geographical area | [78] | F174 | Percentage of minorities | [83] |

| F163 | Teacher’s commitment to the student | [85] | F175 | Part-time teachers | [83] |

| F164 | Classification of the career or institution | [85] | F176 | Full-time teachers | [83] |

| F165 | Group class | [82] | F177 | Instruction expenditure | [83] |

| F166 | School climate assessment scale | [126] | F178 | Academic support expenses | [83] |

| F167 | Counselor’s perception of teachers’ expectations | [126] |

| Id | Factor | References | Id | Factor | References |

|---|---|---|---|---|---|

| F179 | Year of admission | [58,64,74,75,87,90,94,95,120,127] | F248 | Mobile phone addiction | [121] |

| F180 | Motivation | [80,81,82,97] | F249 | Gaming Addiction | [121] |

| F181 | Extracurricular activities | [72,84,97] | F250 | Video game addiction | [121] |

| F182 | Commitment | [99,124,130] | F251 | Shopping addiction | [121] |

| F183 | Class participation | [84,131,132] | F252 | Smoking | [82] |

| F184 | Number of voluntary activities | [7,58,88] | F253 | Student’s sense of school belonging | [126] |

| F185 | Future time perspective | [55,130] | F254 | Perception of social support | [95] |

| F186 | Time to study | [60,80] | F255 | Use of learning platform | [95] |

| F187 | Adaptation and coexistence | [55,133] | F256 | Frequency of library use | [95] |

| F188 | Leader or president | [60,93] | F257 | Participation in tutoring | [95] |

| F189 | Addictions/vices | [85,116] | F258 | Participation in mentoring | [95] |

| F190 | Social media addiction | [119,142] | F259 | Access to academic support services | [95] |

| F191 | Club participation | [7,58] | F260 | Short-term objective | [110] |

| F192 | Stress level | [80,97] | F261 | Weekly minutes on the platform | [110] |

| F193 | Level of motivation | [64,116] | F262 | Active days | [110] |

| F194 | Participation in study groups | [84,97] | F263 | Total progress | [110] |

| F195 | Landline | [116] | F264 | Device used | [110] |

| F196 | Cellular phone | [116] | F265 | Login frequency | [110] |

| F197 | Second language | [116] | F266 | Average session duration | [110] |

| F198 | Masked student | [97] | F267 | Number of sessions per week | [110] |

| F199 | External social relations | [80] | F268 | Number of accesses in the last month | [110] |

| F200 | Desire for knowledge | [127] | F269 | Hours in the last month | [110] |

| F201 | Frankness | [125] | F270 | Hours in the last 3 months | [110] |

| F202 | Extraversion | [125] | F271 | Hours in the last 6 months | [110] |

| F203 | Neuroticism | [125] | F272 | Hours in the last year | [110] |

| F204 | Conscientiousness | [125] | F273 | Interaction with tutors | [110] |

| F205 | Emotional commitment | [125] | F274 | Participation in forums | [110] |

| F206 | Calculating commitment | [125] | F275 | Interaction with multimedia resources | [110] |

| F207 | Regulatory commitment | [125] | F276 | Average time per resource | [110] |

| F208 | Professional interests | [124] | F277 | Completed activities | [110] |

| F209 | Conference programs | [51] | F278 | Number of evaluations submitted | [110] |

| F210 | Family problems | [142] | F279 | Evaluation results | [110] |

| F211 | Mental health | [107] | F280 | Response time in activities | [110] |

| F212 | Health problem | [107] | F281 | Number of clicks per session | [110] |

| F213 | Study habits | [143] | F282 | Participation in chats | [110] |

| F214 | Depression | [143] | F283 | Preferred content type | [110] |

| F215 | Anxiety | [143] | F284 | Navigation route | [110] |

| F216 | License history | [51] | F285 | Number of messages received | [110] |

| F217 | Communications level | [113] | F286 | Number of messages sent | [110] |

| F218 | First generation to study | [119] | F287 | Activity during non-business hours | [110] |

| F219 | Extracurricular activity scores | [7] | F288 | Level of self-efficacy | [65] |

| F220 | Participation in first-year camp activities | [7] | F289 | Learning strategy | [65] |

| F221 | Frequency of computer use | [86] | F290 | Rh factor | [74] |

| F222 | Learning approach | [133] | F291 | Neuroticism | [141] |

| F223 | Preference for practical work | [63] | F292 | Extraversion | [141] |

| F224 | Disease | [63] | F293 | Agreeableness | [141] |

| F225 | Pregnancy | [63] | F294 | Responsibility | [141] |

| F226 | Incompatibility between career and childcare | [63] | F295 | Openness to experience | [141] |

| F227 | Self-assessment | [61] | F296 | Social integration | [83,84] |

| F228 | Time spent exercising | [118] | F297 | Perception of learning | [84] |

| F229 | Vocational training | [125] | F298 | Experiences of exam disappointment | [84] |

| F230 | Number of friends | [93] | F299 | Support and guidance | [84] |

| F231 | Agreeableness | [125] | F300 | Quality of teaching | [84] |

| F232 | Leisure | [61] | F301 | Alignment in teaching | [84] |

| F233 | Study hours | [93] | F302 | Clarity in instruction | [84] |

| F234 | Planned and unplanned pregnancy | [85] | F303 | Feedback active learning | [84] |

| F235 | Bullying | [85] | F304 | Higher-order thinking | [84] |

| F236 | Sexism | [85] | F305 | Cooperative learning | [84] |

| F237 | Student adaptation to university learning | [85] | F306 | Introductory courses | [84] |

| F238 | Poor interpersonal relationships with peers | [130] | F307 | Student research programs | [84] |

| F239 | Lack of study habits and techniques | [130] | F308 | Perception of difficulty | [84] |

| F240 | Demotivation | [130] | F309 | Coherence between courses in the curriculum | [84] |

| F241 | Feeling of not belonging | [130] | F310 | Educational aspiration | [83] |

| F242 | Health problems | [130] | F311 | Language | [68] |

| F243 | Internet addiction | [135] | F312 | Video platform | [77] |

| F244 | Technology addiction | [135] | F313 | Physical books | [77] |

| F245 | Alcohol addiction | [121] | F314 | Reading time | [77] |

| F246 | Addiction to emotional dependence | [121] | F315 | Internet browsing time | [77] |

| F247 | Drug addiction | [121] |

| Id | Factor | References | Id | Factor | References |

|---|---|---|---|---|---|

| F316 | Ratings | [39,54,65,74,76,78,82,87,90,106,111,116,117,120,126,127] | F420 | Temporary withdrawal | [132] |

| F317 | General GPA | [7,59,64,67,69,75,83,84,89,90,97,113,120,123,126,129] | F421 | Order of option to apply | [101] |

| F318 | Secondary school grade | [39,72,83,86,97,103,109,111,121,129] | F422 | Access order | [101] |

| F319 | Subjects taken | [15,72,73,78,94,98,108,118] | F423 | Weighted historical average | [116] |

| F320 | Credits taken | [71,89,100,101,112,120,121] | F424 | Lower test results | [106] |

| F321 | Attendance | [55,59,61,64,72,73,76,120] | F425 | Years of study at the University | [116] |

| F322 | Type of admission | [39,58,78,90,95,107] | F426 | Belongs to the institute’s school | [119] |

| F323 | Type of school | [66,86,89,99,102,103,108] | F427 | Specialty | [132] |

| F324 | School | [39,55,70,78,89,91,117] | F428 | Student status | [60] |

| F325 | Academic year | [39,78,93,100,134] | F429 | Course evaluation comments | [59] |

| F326 | Number of failed courses | [67,72,78,97,101,111] | F430 | Average evaluations first semester | [117] |

| F327 | Subjects | [87,107,121,122,143] | F431 | First period average | [78] |

| F328 | Entrance examination | [39,53,70,117,127] | F432 | Attendance status | [131] |

| F329 | Average grades throughout the career | [39,78,86,88,120] | F433 | Dropping out during the semester | [114] |

| F330 | Academic cycle | [78,87,91,111,112] | F434 | Admission date | [123] |

| F331 | Course number | [51,71,108,111] | F435 | Rewards and penalties | [88] |

| F332 | Active semester | [7,69,90,118,121] | F436 | Group study | [118] |

| F333 | Average subjects | [39,111,118,129] | F437 | High school completion status | [131] |

| F334 | Years of graduation | [15,58,71,134] | F438 | Title to obtain | [123] |

| F335 | Admission score | [55,72,78,115] | F439 | Enrolled semester number | [54] |

| F336 | Academic department | [54,85,90,106] | F440 | Number of programs enrolled | [54] |

| F337 | Course | [61,76,88,137] | F441 | Graduate | [140] |

| F338 | Subject | [86,104,112,140] | F442 | Type of graduation | [64] |

| F339 | Registered | [78,112,120] | F443 | Good high school graduation | [127] |

| F340 | Tasks | [59,60,137] | F444 | Type of associate degree | [114] |

| F341 | Type of institution | [39,87] | F445 | First-generation student | [114] |

| F342 | Student status | [90,112,121] | F446 | Number of internships | [52] |

| F343 | Repeater | [100,121,137] | F447 | First registration | [100] |

| F344 | Access grade | [93,94,130] | F448 | Persistence | [100] |

| F345 | Number of courses approved | [100,101,111] | F449 | Home Language | [100] |

| F346 | Total credits | [59,88,111] | F450 | Previous year’s activity | [100] |

| F347 | Absence | [60,88,120] | F451 | Final decision | [100] |

| F348 | Academic field | [51,79,123] | F452 | Specialty access | [105] |

| F349 | Evidence | [117,134,137] | F453 | Follow the path | [105] |

| F350 | Faculty | [65,69,120] | F454 | Access description | [129] |

| F351 | Failed subjects | [64,80,118] | F455 | Leveling | [113] |

| F352 | Approved credits | [69,88,114] | F456 | Quality of online teaching activities | [127] |

| F353 | Number of semesters completed | [68,97,106] | F457 | Limited knowledge of using specialized software | [85] |

| F354 | Average of previous semesters | [79,110,117] | F458 | Academic problems | [130] |

| F355 | Career | [65,77,98] | F459 | Level of previous studies | [82] |

| F356 | Repeating course number | [51,80] | F460 | Student grade point average in ninth grade | [126] |

| F357 | Cluster | [78,116] | F461 | Hours dedicated to tasks | [126] |

| F358 | Failed exam | [107,109] | F462 | Number of course withdrawals | [95] |

| F359 | Subjects passed | [73,159] | F463 | Number of disciplinary actions | [95] |

| F360 | Credit ratio per subject | [39,87] | F464 | Last school level achieved | [110] |

| F361 | Ratio of credits to expected credits | [39,78] | F465 | Income cohort | [128] |

| F362 | Study day | [65,118] | F466 | Average grades per subject | [128] |

| F363 | Admission program | [107,117] | F467 | GPA per semester | [103] |

| F364 | Student code | [93,114] | F468 | Other programs taken | [103] |

| F365 | Credits | [14,21] | F469 | Reading comprehension score | [103] |

| F366 | Average national exam score | [55,101] | F470 | Score in logical reasoning | [103] |

| F367 | Attempts to pass the exam | [14,21] | F471 | Academic admission program | [103] |

| F368 | Entrance qualification grade | [53,99] | F472 | Performance test | [111] |

| F369 | Grade points | [88,134] | F473 | Academic Department | [111] |

| F370 | Degree exam | [53,59] | F474 | Plan hours | [111] |

| F371 | Type of study program | [53,64] | F475 | Hours recorded in the last semester | [111] |

| F372 | Full-time status | [109,112] | F476 | First year average | [111] |

| F373 | Exam | [62,134] | F477 | Program duration | [111] |

| F374 | Level enrolled | [94,95] | F478 | Title name | [89] |

| F375 | Career application option range | [75,96] | F479 | Additional learning requirements | [89] |

| F376 | Average secondary grades | [89,116] | F480 | Number of honors obtained | [89] |

| F377 | Exam grades | [92,131] | F481 | Admission method | [91] |

| F378 | Abandoned materials | [87] | F482 | Type of activity | [146] |

| F379 | Period | [81] | F483 | Type of action | [146] |

| F380 | Project rating | [140] | F484 | Access frequency by day of the week | [146] |

| F381 | Average attempts | [117] | F485 | Frequency per week and month of the semester | [146] |

| F382 | Subject code | [87] | F486 | Access time | [146] |

| F383 | Initial test grade | [144] | F487 | Amount and type of interaction with materials | [146] |

| F384 | Entrance exam date | [54] | F488 | Participation in evaluations | [146] |

| F385 | Lower consolidated result | [106] | F489 | Number of subjects taken | [65] |

| F386 | Number of national exams taken | [56] | F490 | Enrollment method | [65] |

| F387 | Delay | [122] | F491 | Number of times registered | [65] |

| F388 | Type of entry qualification | [54] | F492 | Entry level | [74] |

| F389 | Degree of study | [90] | F493 | Current grade | [74] |

| F390 | First level degree | [105] | F494 | Cumulative average | [75] |

| F391 | Anonymity of the university | [63] | F495 | Level of previous education | [68] |

| F392 | Place institution | [81] | F496 | Syllabus | [68] |

| F393 | Type of student | [114] | F497 | Beginning of the semester | [68] |

| F394 | Type of study (full-part time) | [104] | F498 | Accumulated credits | [68] |

| F395 | Reason for admission | [105] | F499 | Days in exchange programs | [68] |

| F396 | Admission category | [93] | F500 | Moodle Activity Count | [68] |

| F397 | Computer knowledge | [86] | F501 | Activity trend in Moodle | [68] |

| F398 | Disciplinary infraction | [122] | F502 | Course access | [92] |

| F399 | Admission form | [99] | F503 | Test results | [92] |

| F400 | Risk via admission | [73] | F504 | Tasks submitted | [92] |

| F401 | Binary enrollment | [112] | F505 | Final course grade | [92] |

| F402 | Admission option | [105] | F506 | Practice grades | [134] |

| F403 | Average score on entrance exams | [101] | F507 | Project ratings | [134] |

| F404 | Registration value | [120] | F508 | Reading comprehension | [134] |

| F405 | Course of study | [54] | F509 | Cumulative GPA | [134] |

| F406 | Times failed degree | [99] | F510 | Credits earned | [134] |

| F407 | First-choice studies | [133] | F511 | Time enrolled in university | [134] |

| F408 | Tutorials carried out | [113] | F512 | Access outside of class | [141] |

| F409 | Seniority | [100] | F513 | Curricular units enrolled | [76] |

| F410 | Previous qualification | [62] | F514 | Approved curricular units | [76] |

| F411 | Average last cycle | [116] | F515 | Accredited curricular units | [76] |

| F412 | Mode | [113] | F516 | Training chain | [77] |

| F413 | Military service | [122] | F517 | Current semester average | [70] |

| F414 | Drop subject | [132] | F518 | Average of subjects passed | [70] |

| F415 | Average score | [82] | F519 | Absences | [70] |

| F416 | Failed subjects in secondary school | [79] | F520 | Field of study | [83] |

| F417 | Repeating a secondary school year | [79] | | | |

| F418 | Repeating the first academic year | [94] | | | |

| F419 | Temporary withdrawal | [132] |
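
Q1 asks which factors are the most studied. One simple reading of Appendix A is to count the distinct studies cited for each factor Id. The Python below is a minimal sketch of that count for five rows copied from the appendix (the choice of rows is illustrative; the full appendix would be loaded the same way). Each reference number is counted once, so an accidental repeat in a list cannot inflate a total. Counting by Id also treats near-synonymous factors that the appendix lists separately (for example, Gender and Sex) as different factors, so a merged ranking would first need a manual mapping of equivalent factors.

```python
# Rows copied from Appendix A: factor id -> (factor name, cited reference numbers).
# Only a small illustrative subset of the appendix is reproduced here.
APPENDIX_ROWS = {
    "F001": ("Gender", "7,14,39,50,51,53,54,58,59,60,61,63,64,65,66,67,68,69,71,72,74,77,78,"
                       "82,83,86,88,89,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110"),
    "F002": ("Age", "7,15,51,54,59,64,65,66,67,68,69,71,72,74,77,78,80,83,84,86,"
                    "89,91,94,97,98,99,100,101,105,108,109,111,112,113,114,115,116,117,118,119"),
    "F003": ("Marital status", "51,59,61,68,69,71,76,80,86,91,93,97,102,106,108,114,116,118"),
    "F076": ("Scholarships", "55,61,72,75,83,93,94,99,104,106,112,120,127"),
    "F317": ("General GPA", "7,59,64,67,69,75,83,84,89,90,97,113,120,123,126,129"),
}


def parse_refs(ref_string):
    """Split a comma-separated reference list such as '7,14,39' into integers."""
    return [int(tok) for tok in ref_string.split(",") if tok.strip()]


def rank_factors(rows):
    """Return (factor_id, name, distinct_study_count), most-studied first, ties by id."""
    ranked = []
    for factor_id, (name, refs) in rows.items():
        distinct_studies = set(parse_refs(refs))  # a study citing twice still counts once
        ranked.append((factor_id, name, len(distinct_studies)))
    ranked.sort(key=lambda row: (-row[2], row[0]))
    return ranked


if __name__ == "__main__":
    for factor_id, name, count in rank_factors(APPENDIX_ROWS):
        print(f"{factor_id} {name}: {count} studies")
```

Run over these five rows, the ranking places Gender first and Age second, consistent with those two factors having by far the longest reference lists in the appendix.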

| Study | Dataset size | Preprocessing | Model | Result (%) |

|---|---|---|---|---|

| [64] | 21,654 | Random undersampling (RUS) | DT | 96.20 ¹ |

| | | | LR | 96.60 ¹ |

| | | | SVM | 97.70 ¹ |

| | | | ANN | 95.50 ¹ |

| | | | LR + SMOTE | 83.20 ¹ |

| | | | DT + SMOTE | 92.50 ¹ |

| | | | ANN + SMOTE | 88.10 ¹ |

| | | | SVM + SMOTE | 95.40 ¹ |

| | | | LR + over-sampling | 85.50 ¹ |

| | | | DT + over-sampling | 79.30 ¹ |

| | | | SVM + over-sampling | 86.90 ¹ |

| | | | ANN + over-sampling | 85.50 ¹ |

| | | | LR + under-sampling | 86.00 ¹ |

| | | | DT + under-sampling | 86.70 ¹ |

| | | | SVM + under-sampling | 87.90 ¹ |

| | | | ANN + under-sampling | 84.70 ¹ |

| [118] | 670 | Data cleaning | JRip | 96.00 ¹ |

| | | | NNge | 95.80 ¹ |

| | | | OneR | 93.70 ¹ |

| | | | Prism | 94.40 ¹ |

| | | | Ridor | 93.40 ¹ |

| | | | ADTree | 96.60 ¹ |

| | | | DT-J48 | 94.30 ¹ |

| | | | RandomTree | 94.00 ¹ |

| | | | REPTree | 92.70 ¹ |

| | | | SimpleCart | 96.60 ¹ |

| | | | ICRM v1 | 92.10 ¹ |

| | | | ICRM v2 | 93.70 ¹ |

| | | | ICRM v3 | 93.40 ¹ |

| [82] | 670 | Data cleansing, discretization of variables, creation of attributes | ADTree | 98.20 ¹ |

| | | | J48 | 96.70 ¹ |

| | | | RandomTree | 96.10 ¹ |

| | | | REPTree | 96.50 ¹ |

| | | | SimpleCart | 96.40 ¹ |

| | | | Prism | 99.80 ¹ |

| | | | Ridor | 97.90 ¹ |

| | | | ICRM v1 | 92.10 ¹ |

| | | | ICRM v2 | 94.40 ¹ |

| | | | ICRM v3 | 94.00 ¹ |

| [39] | 5951 | Data cleansing, dimensionality reduction, data balancing, data transformation | Random model | 51.00 ¹ |

| | | | KNN | 62.00 ¹ |

| | | | SVM | 65.00 ¹ |

| | | | DT | 68.00 ¹ |

| | | | RF | 69.00 ¹ |

| | | | GB | 69.00 ¹ |

| | | | NB | 66.00 ¹ |

| | | | LR | 62.00 ¹ |

| | | | ANN | 66.00 ¹ |

| [57] | 10,554 | Not performed | DT | 72.80 ¹ |

| | | | LR | 84.50 ¹ |

| | | | SVM | 82.80 ¹ |

| | | | RF | 82.60 ¹ |

| | | | ANN | 77.80 ¹ |

| | | | GB | 83.70 ¹ |

| | | | LLM | 83.90 ¹ |

| | | | LMT | 80.10 ¹ |

| | | | BAG | 78.00 ¹ |

| [97] | 13,696 | Data cleansing | DT | 86.60 ¹ |

| | | | LR | 88.90 ¹ |

| | | | SVM | 89.40 ¹ |

| | | | KNN | 87.60 ¹ |

| | | | RF | 90.02 ¹ |

| | | | MLP | 89.20 ¹ |

| | | | CNN | 94.60 ¹ |

| | | | GBN | 85.40 ¹ |

| [147] | 79,186 | Data cleaning, normalization, time series, matrix specifications | LR | 85.70 ¹ |

| | | | SVM | 80.10 ¹ |

| | | | CNN | 86.40 ¹ |

| | | | LSTM | 80.10 ¹ |

| | | | CNN-LSTM | 84.80 ¹ |

| | | | DP-CNN | 84.20 ¹ |

| | | | CLSA | 87.40 ¹ |

| [59] | 7536 | Not performed | DT | 84.70 ¹ |

| | | | LR | 76.60 ¹ |

| | | | SVM | 76.60 ¹ |

| | | | RF | 82.90 ¹ |

| | | | MLP | 89.60 ¹ |

| | | | MSNF | 87.70 ¹ |

| | | | STUD | 90.10 ¹ |

| [51] | 425 | Not performed | DT | 97.92 ¹ |

| | | | LR | 99.47 ¹ |

| | | | KNN | 82.10 ¹ |

| | | | RF | 99.47 ¹ |

| | | | NB | 96.79 ¹ |

| | | | GB | 98.68 ¹ |

| [120] | 261 | Feature selection, data cleaning | DT | 94.00 ¹ |

| | | | LR | 96.00 ¹ |

| | | | SVM | 94.00 ¹ |

| | | | RF | 94.00 ¹ |

| | | | NB | 94.00 ¹ |

| | | | ANN | 97.00 ¹ |

| [122] | 60,010 | Data cleansing | LightGBM | 81.00 ¹ |

| | | | XGBoost | 83.00 ¹ |

| | | | LR | 50.00 ¹ |

| | | | SVM | 51.00 ¹ |

| | | | RF | 80.00 ¹ |

| | | | DT | 65.00 ¹ |

| [136] | 3029 | Undersampling SMOTE-Tomek | LR | 89.00 1 |

| SGD | 86.00 1 | |||

| DT | 98.00 1 | |||

| MLP | 79.001 | |||

| RF | 99.001 | |||

| SVM | 72.00 1 | |||

| [132] | 1650 | Data transformation | DT | 80.00 1 |

| LR | 87.59 1 | |||

| SVM | 85.55 1 | |||

| RF | 88.33 1 | |||

| NB | 77.14 1 | |||

| MLP | 83.92 1 | |||

| [139] | 32,593 | Data extraction Data cleaning Data scaling | DT | 78.00 1 |

| LR | 80.00 1 | |||

| SVM | 79.00 1 | |||

| RF | 79.00 1 | |||

| SELOR | 84.00 1 | |||

| SIHMM | 83.00 1 | |||

| [148] | 104 | Data cleansing | SVM | 36.73 1 |

| PESFAM | 43.24 1 | |||

| FFNN | 68.97 1 | |||

| SEDM | 85.71 1 | |||

| LR | 98.95 1 | |||

| [72] | 26 | Data cleaning | DT | 78.00 1 |

| SVM | 80.00 1 | |||

| KNN | 73.00 1 | |||

| RF | 92.00 1 | |||

| ANN | 90.00 1 | |||

| [100] | 4419 | Data cleaning | DT | 88.46 1 |

| SVM | 86.92 1 | |||

| KNN | 83.85 1 | |||

| RF | 92.31 1 | |||

| NB | 79.23 1 | |||

| [140] | 261 | Data cleaning | RF | 91.76 1 |

| GB | 86.76 1 | |||

| XGBoost | 91.76 1 | |||

| FNN + RF + GB + XGBoost | 93.59 1 | |||

| FNN | 96.76 1 | |||

| [56] | 4433 | Extract, transform, load (ETL) | SMOTE + RF | 87.00¹ |
| | | | SVMSMOTE + RF | 87.00¹ |
| | | | BRF | 82.80¹ |
| | | | EE | 83.20¹ |
| | | | RB | 81.30¹ |
| [93] | 131 | Data cleaning | LR | 73.20¹ |
| | | | SVM | 70.99¹ |
| | | | RF | 92.30¹ |
| | | | NB | 75.50¹ |
| | | | MLP | 92.30¹ |
| | | | DT-J48 | 74.00¹ |
| [105] | 3425 | Feature selection | DT | 82.05¹ |
| | | | LR | 83.37¹ |
| | | | SVM | 82.90¹ |
| | | | KNN | 85.59¹ |
| | | | ANN | 85.11¹ |
| [90] | 811 | KPCA, PCA, LPP, NPE, IsoP, WCT-T, and WTQ-T | KNN | 93.30¹ |
| | | | ANN | 94.00¹ |
| | | | DT-C4.5 | 92.60¹ |
| | | | NB | 93.80¹ |
| [15] | 331 | Data cleaning | CatBoost | 84.00¹ |
| | | | RF | 81.00¹ |
| | | | XGBoost | 82.00¹ |
| | | | ANN | 87.00¹ |
| [78] | 143,326 | Data cleaning | DT | 99.53¹ |
| | | | LR | 99.58¹ |
| | | | ANN | 97.72¹ |
| | | | XGBoost | 99.28¹ |
| [54] | NA | Not performed | LR | 93.76¹ |
| | | | RF (bagging) | 93.58¹ |
| | | | AdaBoost | 95.51¹ |
| | | | ANN | 94.76¹ |
| [7] | NA | Data cleaning | DT | 91.00¹ |
| | | | LR | 87.00¹ |
| | | | NB | 55.00¹ |
| | | | MLP | 90.00¹ |
| [106] | 77,384 | Data cleaning | DT | 94.63¹ |
| | | | ANN | 93.97¹ |
| | | | BN | 93.92¹ |
| [98] | 11,496 | Not performed | RF | 90.10¹ |
| | | | ANN | 89.30¹ |
| | | | Logit | 91.20¹ |
| [55] | 12,370 | Not performed | LR + SMOTE_SVM | 72.00¹ |
| | | | RF | 78.00¹ |
| | | | ANN + SMOTE_SVM | 74.00¹ |
| [143] | 670 | Data cleaning | K-means | 80.01¹ |
| | | | HDBSCAN | 65.63¹ |
| | | | DBSCAN | 95.71¹ |
| [101] | 3373 | Not performed | SVM | 76.39¹ |
| | | | RF | 80.40¹ |
| | | | ANN | 77.95¹ |
| [61] | 128 | Data transformation | DT | 84.00¹ |
| | | | LR | 82.00¹ |
| | | | NB | 84.00¹ |
| | | | ANN | 82.00¹ |
| [142] | 220 | Not performed | DT | 97.69¹ |
| [113] | 1861 | Data cleaning, data transformation | DT-J48 | 91.80¹ |
| | | | ANN | 94.60¹ |
| | | | DT + ANN | 98.70¹ |
| [86] | 2422 | Not performed | LR | 76.03¹ |
| | | | AHP | 64.57¹ |
| [129] | 5426 | Data cleaning | SVM | 89.041¹ |
| | | | RF | 88.312¹ |
| | | | GB | 87.103¹ |
| [14] | 46,000 | Not performed | GMERF | 93.58¹ |
| | | | CART | 87.01¹ |
| | | | GLM | 91.05¹ |
| [161] | 530 | Data cleaning | RF | 56.67¹ |
| | | | XGBoost | 70.00¹ |
| | | | RF + XGBoost | 91.52¹ |
| [109] | 970 | Data cleaning | ANN | 62.00¹ |
| | | | DT-C4.5 | 65.00¹ |
| | | | DT-ID3 | 62.00¹ |
| [81] | 160 | Variable generation, data selection, data cleaning | ANN | 100.00¹ |
| | | | DT-C4.5 | 87.77¹ |
| | | | DT-ID3 | 70.79¹ |
| [87] | 976 | Data selection, data cleaning, generation, data integration, formatting | ANN | 85.00¹ |
| | | | DT-C4.5 | 68.00¹ |
| | | | DT-ID3 | 75.00¹ |
| [94] | 1022 | Not performed | LR | 80.00¹ |
| | | | Discriminant analysis | 91.50¹ |
| [88] | 67,060 | SMOTE, RandomOverSampler, SMOTETomek, SMOTEENN | LR | 95.30¹ |
| | | | ANN | 98.20¹ |
| | | | GB | 98.00¹ |
| | | | GB + RF + SVM | 97.80¹ |
| | | | XGBoost + CatBoost | 98.90¹ |
| [137] | 1862 | MMFA | NB | 92.85¹ |
| | | | DT | 95.82¹ |
| [60] | 17,432 | Data cleaning, data transformation, SMOTE | KNN | 98.20¹ |
| | | | CART | 97.91¹ |
| | | | NB | 98.24¹ |
| [85] | 2670 | Data cleaning | MLP | 98.60¹ |
| | | | RBF | 98.10¹ |
| [138] | 201 | Data cleaning | DT-ID3 | 92.90¹ |
| | | | DT-J48 | 92.90¹ |
| [135] | 1178 | Data cleaning, data transformation, attribute selection | LR | 84.90¹ |
| | | | DT | 91.70¹ |
| [115] | 83 | Not performed | LR | 89.00¹ |
| [127] | 176 | Not performed | LR | 95.80¹ |
| [96] | 189 | Not performed | Cluster analysis | 83.30¹ |
| [116] | 6300 | SMOTE | DT | 95.91¹ |
| [123] | 1851 | Not performed | DT | 87.90¹ |
| [21] | 41,098 | Not performed | GMERF | 90.80¹ |
| [79] | 237 | Not performed | GBM | 92.20¹ |
| [108] | 12,148 | Data cleaning, variable coding | DT | 71.40¹ |
| [131] | 24,770 | Not performed | XGBoost | 80.32¹ |
| [144] | 197 | Not performed | DT | 79.90¹ |
| [145] | 389 | Data cleaning | DT | 89.39¹ |
| [80] | 237 | Not performed | DT | 87.76¹ |
| [102] | 32,593 | Lasso and ridge regularization | LR | 86.90¹ |
| [130] | 3773 | Normalization | LR | 95.00¹ |
| [151] | 3172 | Data cleaning | RF | 87.67¹ |
| [121] | 3162 | Data cleaning, data transformation, variable extraction | DT-CHAID | 98.71¹ |
| [21] | 24,736 | Data splitting, variable selection | GMERF | 90.85¹ |
| [126] | | SMOTE-NC | DT | 84.29¹ |
| [95] | 1500 | Data cleaning, feature removal | DeepS3VM (RNN + S3VM) | 92.54¹ |
| [110] | 35,000 | SMOTE | XGBoost | 82.00¹ |
| | | | LightGBM | 79.80¹ |
| | | | DT | 79.80¹ |
| | | | RF | 81.50¹ |
| | | | ETC | 79.00¹ |
| | | | LR | 77.00¹ |
| | | | SVM | 61.00¹ |
| [128] | 197 | Data cleaning, categorical coding, normalization, feature selection | RF | 100.00¹ |
| [111] | 5883 | Not performed | CART | 79.70¹ |
| | | | CIT | 81.90¹ |
| | | | SVM | 83.00¹ |
| | | | GLM | 82.30¹ |
| | | | ANN | 81.40¹ |
| | | | NB | 70.30¹ |
| | | | Bagged CART | 81.40¹ |
| | | | RF | 83.10¹ |
| | | | AdaBoost | 80.90¹ |
| | | | XGBoost | 82.30¹ |
| [89] | 44,875 | Variable coding, variable standardization, class-imbalance handling, data splitting | RF | 85.00¹ |
| | | | FTT | 87.00¹ |
| [91] | 329 | Feature selection, dimensionality reduction | DT | 80.20¹ |
| | | | LR | 73.10¹ |
| | | | SVM | 71.00¹ |
| | | | NB | 62.40¹ |
| [146] | | Data transformation, data cleaning, feature selection, dimensionality reduction | BIRCH | 56.50⁴ |
| | | | DBSCAN | 32.08⁴ |
| | | | GMM | 43.50⁴ |
| | | | RF | 86.00¹ |
| | | | DT | 84.00¹ |
| | | | SVM | 83.00¹ |
| | | | LR | 82.00¹ |
| | | | KNN | 81.00¹ |
| [65] | 985 | Data cleaning, variable standardization, SMOTE | AdaBoost | 88.00¹ |
| | | | XGBoost | 88.86¹ |
| [74] | 1865 | Data cleaning, data transformation, categorical coding, feature selection | RF | 88.00¹ |
| | | | SVM | 79.00¹ |
| | | | GBT | 92.00¹ |
| [66] | 4792 | Data transformation | LR | 85.00¹ |
| | | | DT | 87.00¹ |
| [75] | 17,904 | Data cleaning, categorical coding, feature selection, SMOTE | DT | 91.70¹ |
| | | | NB | 83.40¹ |
| | | | KNN | 96.30¹ |
| [67] | 1957 | Data cleaning, missing-value imputation, variable transformation | LR | 98.20¹ |
| | | | PR | 98.20¹ |
| | | | NB | 98.60¹ |
| | | | RF | 98.50¹ |
| | | | DT | 98.80¹ |
| | | | SVM | 98.80¹ |
| | | | KNN | 98.00¹ |
| [68] | 8813 | Time-series reindexing, data deletion, variable coding, variable standardization | CatBoost | 85.30³ |
| | | | NN | 84.40³ |
| | | | LR | 84.20³ |
| | | | LDA | 84.10³ |
| | | | RF | 83.90³ |
| | | | LightGBM | 83.20³ |
| | | | XGBoost | 82.30³ |
| | | | SVM | 82.30³ |
| | | | NB | 78.00³ |
| | | | KNN | 77.70³ |
| [92] | 321 | Data cleaning, feature selection, variable standardization | LR | 83.30¹ |
| | | | PR | 86.30¹ |
| [134] | 661 | Data cleaning, variable transformation, variable standardization, data balancing | LSTM | 98.30¹ |
| | | | DNN | 98.10¹ |
| | | | DT | 93.40¹ |
| | | | RF | 92.00¹ |
| | | | LR | 98.00¹ |
| | | | SVM | 74.70¹ |
| | | | KNN | 99.00¹ |
| [69] | 129,846 | Data cleaning, variable transformation, variable coding, semantic clustering, variable standardization | PEM-SNN | 81.10¹ |
| [141] | 322 | Variable elimination, variable coding, variable standardization | ARD | 1.42⁵ |
| | | | BR | 1.45⁵ |
| | | | LIRE | 1.47⁵ |
| | | | RR | 1.48⁵ |
| | | | LASSO | 1.49⁵ |
| | | | DT | 1.65⁵ |
| | | | RF | 1.60⁵ |
| | | | AdaBoost | 1.62⁵ |
| | | | XGBoost | 1.63⁵ |
| | | | CatBoost | 1.64⁵ |
| | | | SVM | 1.66⁵ |
| | | | KNN | 1.68⁵ |
| | | | MLP | 1.65⁵ |
| | | | DR | 2.13⁵ |
| [76] | 4424 | Variable elimination, variable coding, variable standardization | DT | 81.00² |
| | | | RF | 87.00² |
| | | | XGBoost | 88.00² |
| | | | CatBoost | 88.00² |
| | | | LightGBM | 88.00² |
| | | | BG | 85.00² |
| | | | SVM | 76.00² |
| [77] | 288 | Feature selection, variable conversion, variable elimination | DT | 90.51¹ |
| | | | K-means | 44.29¹ |
| | | | IF | 30.34¹ |
| | | | LIRE | 35.06¹ |
| [70] | 6312 | Variable elimination, variable coding, normalization | ANN | 81.00¹ |
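
Several of the studies above list SMOTE or one of its variants (SMOTE-Tomek, SMOTE-NC, SVMSMOTE) among their preprocessing steps. The sketch below illustrates only the core SMOTE idea, for a binary target with numeric features. Each synthetic dropout record is placed at a random point on the line segment between a minority-class record and one of its k nearest minority-class neighbours.

This is an assumption-laden example and not the implementation used by any reviewed study. The function names `smote` and `oversample_binary`, the toy data, and the defaults (k = 5, fixed seed) are hypothetical. In practice, a maintained library such as imbalanced-learn would normally be preferred.

```python
import math
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Create n_synthetic points by SMOTE-style interpolation (illustrative sketch).

    Each synthetic point lies on the segment between a randomly chosen
    minority sample and one of its k nearest minority neighbours.
    """
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    # k nearest minority neighbours of every minority sample (Euclidean distance).
    neighbours = []
    for i, x in enumerate(minority):
        ranked = sorted(
            (math.dist(x, other), j) for j, other in enumerate(minority) if j != i
        )
        neighbours.append([j for _, j in ranked[:k]])

    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        x = minority[i]
        neighbour = minority[rng.choice(neighbours[i])]
        gap = rng.random()  # uniform draw in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(x, neighbour)])
    return synthetic


def oversample_binary(X, y, minority_label, k=5, seed=0):
    """Append synthetic minority rows until both classes have equal counts.

    Hypothetical helper: assumes a binary target and should be called on the
    training split only.
    """
    minority = [row for row, label in zip(X, y) if label == minority_label]
    n_missing = (len(y) - len(minority)) - len(minority)
    if n_missing <= 0:
        return list(X), list(y)
    new_rows = smote(minority, n_missing, k=k, seed=seed)
    return list(X) + new_rows, list(y) + [minority_label] * n_missing


# Toy example: 3 "dropout" rows (label 1) versus 7 "retained" rows (label 0).
X_train = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0],
           [5.0, 5.0], [6.0, 5.0], [5.0, 6.0], [6.0, 6.0],
           [5.5, 5.5], [6.5, 6.5], [7.0, 7.0]]
y_train = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
X_bal, y_bal = oversample_binary(X_train, y_train, minority_label=1)
print(y_bal.count(0), y_bal.count(1))  # 7 7
```

Oversampling of this kind should be applied only to the training partition, or inside each cross-validation fold. If synthetic rows are generated before splitting, near-copies of training records can reach the evaluation set and inflate the reported accuracy.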

References

1. Baranyi, M.; Nagy, M.; Molontay, R. Interpretable Deep Learning for University Dropout Prediction. In Proceedings of the SIGITE 2020—Proceedings of the 21st Annual Conference on Information Technology Education, Virtual Event, 7–9 October 2020.

2. Bustamante, D.; Garcia-Bedoya, O. Predictive Academic Performance Model to Support, Prevent and Decrease the University Dropout Rate. In Communications in Computer and Information Science; Springer: Cham, Switzerland, 2021.

3. OECD. How many students complete tertiary education? In Education at a Glance 2022: OECD Indicators; OECD Publishing: Paris, France, 2022.

4. Agrusti, F.; Bonavolontà, G.; Mezzini, M. University dropout prediction through educational data mining techniques: A systematic review. J. E-Learn. Knowl. Soc. 2019, 15, 161–182.

5. Netanda, R.S.; Mamabolo, J.; Themane, M. Do or die: Student support interventions for the survival of distance education institutions in a competitive higher education system. Stud. High. Educ. 2019, 44, 397–414.

6. Felderer, B.; Kueck, J.; Spindler, M. Using Double Machine Learning to Understand Nonresponse in the Recruitment of a Mixed-Mode Online Panel. Soc. Sci. Comput. Rev. 2022, 41, 461–481.

7. Lee, J.H.; Kim, M.; Kim, D.; Gil, J.M. Evaluation of Predictive Models for Early Identification of Dropout Students. J. Inf. Process. Syst. 2021, 17, 630–644.

8. Pfau, W.; Rimpp, P. AI-Enhanced Business Models for Digital Entrepreneurship; Springer: Cham, Switzerland, 2021.

9. Vargas, A.V.; Palacio, G.J.L. Abandono estudiantil en una universidad privada: Un fenómeno no ajeno a los posgrados. Valoración cuantitativa a partir del análisis de supervivencia. Colombia, 2012–2016. Rev. Educ. 2020, 44, 177–191.

10. Buduma, N.; Locascio, N. Fundamentals of Deep Learning: Designing Next-Generation Machine Intelligence Algorithms; O’Reilly Media: Sebastopol, CA, USA, 2017.

11. Berka, P.; Marek, L. Bachelor’s degree student dropouts: Who tend to stay and who tend to leave? Stud. Educ. Eval. 2021, 70, 100999.

12. Nájera, A.B.U.; Ortega, L.A.M. Predictive Model for Taking Decision to Prevent University Dropout. Int. J. Interact. Multimed. Artif. Intell. 2022, 7, 205–213.

13. Núñez-Naranjo, A.F.; Ayala-Chauvin, M.; Riba-Sanmartí, G. Prediction of university dropout using machine learning. In Proceedings of the International Conference on Information Technology & Systems, La Libertad, Ecuador, 4–6 February 2021; Springer: Cham, Switzerland, 2021; pp. 396–406.

14. Cannistrà, M.; Masci, C.; Ieva, F.; Agasisti, T.; Paganoni, A.M. Early-predicting dropout of university students: An application of innovative multilevel machine learning and statistical techniques. Stud. High. Educ. 2022, 47, 1935–1956.

15. Moreira da Silva, D.E.; Solteiro Pires, E.J.; Reis, A.; de Moura Oliveira, P.B.; Barroso, J. Forecasting Students Dropout: A UTAD University Study. Future Internet 2022, 14, 76.

16. Bertolini, R.; Finch, S.; Nehm, R. Enhancing data pipelines for forecasting student performance: Integrating feature selection with cross-validation. Int. J. Educ. Technol. High. Educ. 2021, 18, 1–23.

17. Lee, S.; Chung, J.Y. The machine learning-based dropout early warning system for improving the performance of dropout prediction. Appl. Sci. 2019, 9, 3093.

18. Blundo, C.; Fenza, G.; Fuccio, G.; Loia, V.; Orciuoli, F. A time-driven FCA-based approach for identifying students’ dropout in MOOCs. Int. J. Intell. Syst. 2022, 37, 2683–2705.

19. Heuillet, A.; Couthouis, F.; Díaz-Rodríguez, N. Explainability in deep reinforcement learning. Knowl.-Based Syst. 2021, 214, 106685.

20. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’16, San Francisco, CA, USA, 13–17 August 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 1135–1144.

21. Pellagatti, M.; Masci, C.; Ieva, F.; Paganoni, A.M. Generalized mixed-effects random forest: A flexible approach to predict university student dropout. Stat. Anal. Data Min. 2021, 14, 241–257.

22. Alban, M.; Mauricio, D. Predicting University Dropout through Data Mining: A Systematic Literature. Indian J. Sci. Technol. 2019, 12, 10.

23. Albreiki, B.; Zaki, N.; Alashwal, H. A systematic literature review of student’ performance prediction using machine learning techniques. Educ. Sci. 2021, 11, 552.

24. Andrade-Girón, D.; Sandivar-Rosas, J.; Marín-Rodriguez, W.; Susanibar-Ramirez, E.; Toro-Dextre, E.; Ausejo-Sanchez, J.; Villarreal-Torres, H.; Angeles-Morales, J. Predicting Student Dropout based on Machine Learning and Deep Learning: A Systematic Review. EAI Endorsed Trans. Scalable Inf. Syst. 2023, 10, 1.

25. Mduma, N.; Kalegele, K.; Machuve, D. A survey of machine learning approaches and techniques for student dropout prediction. Data Sci. J. 2019, 18, 14.

26. Alalawi, K.; Athauda, R.; Chiong, R. Contextualizing the current state of research on the use of machine learning for student performance prediction: A systematic literature review. Eng. Rep. 2023, 5, e12699.

27. Guo, T.; Bai, X.; Tian, X.; Firmin, S.; Xia, F. Educational anomaly analytics: Features, methods, and challenges. Front. Big Data 2022, 4, 811840.

28. Alhothali, A.; Albsisi, M.; Assalahi, H.; Aldosemani, T. Predicting student outcomes in online courses using machine learning techniques: A review. Sustainability 2022, 14, 6199.

29. Idowu, J.A. Debiasing education algorithms. Int. J. Artif. Intell. Educ. 2024, 34, 1510–1540.

30. Venkatesan, R.G.; Karmegam, D.; Mappillairaju, B. Exploring statistical approaches for predicting student dropout in education: A systematic review and meta-analysis. J. Comput. Soc. Sci. 2024, 7, 171–196.

31. Tinto, V. Dropout from Higher Education: A Theoretical Synthesis of Recent Research. Rev. Educ. Res. 1975, 45, 89–125.

32. Tinto, V. Limits of Theory and Practice in Student Attrition. J. High. Educ. 1982, 53, 687–700.

33. Tinto, V. Leaving College: Rethinking the Causes and Cures of Student Attrition; University of Chicago Press: Chicago, IL, USA, 1994.

34. Franz, S.; Paetsch, J. Academic and social integration and their relation to dropping out of teacher education: A comparison to other study programs. Front. Educ. 2023, 8, 1179264.

35. Villegas-Ch, W.; Govea, J.; Revelo-Tapia, S. Improving Student Retention in Institutions of Higher Education through Machine Learning: A Sustainable Approach. Sustainability 2023, 15, 14512.

36. Quincho Apumayta, R.; Carrillo Cayllahua, J.; Ccencho Pari, A.; Inga Choque, V.; Cárdenas Valverde, J.; Huamán Ataypoma, D. University Dropout: A Systematic Review of the Main Determinant Factors (2020–2024) [Version 2; Peer Review: 2 Approved]. F1000Research 2024, 13, 942.

37. Lorenzo-Quiles, O.; Galdón-López, S.; Lendínez-Turón, A. Factors contributing to university dropout: A review. Front. Educ. 2023, 8, 1159864.

38. Xavier, M.; Meneses, J. A Literature Review on the Definitions of Dropout in Online Higher Education. In Proceedings of the European Distance and E-Learning Network (EDEN) Proceedings, Timisoara, Romania, 22–24 June 2020; Available online: https://femrecerca.cat/meneses/publication/literature-review-definitions-dropout-online-higher-education/literature-review-definitions-dropout-online-higher-education.pdf (accessed on 25 February 2025).

39. Opazo, D.; Moreno, S.; Álvarez-Miranda, E.; Pereira, J. Analysis of First-Year University Student Dropout through Machine Learning Models: A Comparison between Universities. Mathematics 2021, 9, 2599.

40. Dervenis, C.; Kyriatzis, V.; Stoufis, S.; Fitsilis, P. Predicting Students’ Performance Using Machine Learning Algorithms. In Proceedings of the 6th International Conference on Algorithms, Computing and Systems, ICACS ’22, Larissa, Greece, 16–18 September 2022; Association for Computing Machinery: New York, NY, USA, 2023.

41. Yağcı, M. Educational data mining: Prediction of students’ academic performance using machine learning algorithms. Smart Learn. Environ. 2022, 9, 11.

42. Wang, J.; Yu, Y. Machine Learning Approach to Student Performance Prediction of Online Learning. PLoS ONE 2025, 20, e0299018.

43. Dabhade, P.; Agarwal, R.; Alameen, K.P.; Fathima, A.T.; Sridharan, R.; Gopakumar, G. Educational Data Mining for Predicting Students’ Academic Performance Using Machine Learning Algorithms. Mater. Today Proc. 2021, 47, 5260–5267.

44. Hakim, N.; Jastacia, B.; Mansoori, A.A. Personalizing Learning Paths: A Study of Adaptive Learning Algorithms and Their Effects on Student Outcomes. J. Emerg. Technol. Educ. 2024, 2, 318–330.

45. Alzubaidi, A.; Alzubaidi, A.; Alzubaidi, A. Assessment and Evaluation of Different Machine Learning Models for Predicting Students’ Academic Performance. J. Comput. Sci. 2023, 19, 415–427.

46. Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

47. Cotfas, L.-A.; Delcea, C.; Mancini, S.; Ponsiglione, C.; Vitiello, L. An agent-based model for cruise ship evacuation considering the presence of smart technologies on board. Expert Syst. Appl. 2023, 214, 119124.

48. Kitchenham, B.; Pearl Brereton, O.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic literature reviews in software engineering—A systematic literature review. Inf. Softw. Technol. 2009, 51, 7–15.

49. Shiguihara, P.; Lopes, A.d.A.; Mauricio, D. Dynamic Bayesian Network Modeling, Learning, and Inference: A Survey. IEEE Access 2021, 9, 117639–117648.

50. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, 71.

51. Mutrofin, S.; Ginardi, R.V.H.; Fatichah, C.; Kurniawardhani, A. A critical assessment of balanced class distribution problems: The case of predict student dropout. Test Eng. Manag. 2019, 81, 1764–1770.

52. Phan, M.; De Caigny, A.; Coussement, K. A decision support framework to incorporate textual data for early student dropout prediction in higher education. Decis. Support Syst. 2023, 168, 113940.

53. Al-Jallad, N.T.; Ning, X.; Khairalla, M.A. An interpretable predictive framework for students’ withdrawal problem using multiple classifiers. Eng. Lett. 2019, 27, 1–8.

54. Berens, J.; Schneider, K.; Görtz, S.; Oster, S.; Burghoff, J. Early Detection of Students at Risk—Predicting Student Dropouts Using Administrative Student Data from German Universities and Machine Learning Methods. J. Educ. Data Min. 2019, 11, 1–41.

55. Velasco, C.L.R.; Villena, E.G.; Ballester, J.B.; Prados, F.Á.D.; Alvarado, E.S.; Álvarez, J.C. Forecasting of Post-Graduate Students’ Late Dropout Based on the Optimal Probability Threshold Adjustment Technique for Imbalanced Data. Int. J. Emerg. Technol. Learn. 2023, 18, 120–155.

56. Martins, M.V.; Baptista, L.; Machado, J.; Realinho, V. Multi-Class Phased Prediction of Academic Performance and Dropout in Higher Education. Appl. Sci. 2023, 13, 4702.

57. Coussement, K.; Phan, M.; De Caigny, A.; Benoit, D.F.; Raes, A. Predicting student dropout in subscription-based online learning environments: The beneficial impact of the logit leaf model. Decis. Support Syst. 2020, 135, 113325.

58. Oqaidi, K.; Aouhassi, S.; Mansouri, K. Towards a Students’ Dropout Prediction Model in Higher Education Institutions Using Machine Learning Algorithms. Int. J. Emerg. Technol. Learn. 2022, 17, 103–117.

59. Won, H.S.; Kim, M.J.; Kim, D.; Kim, H.S.; Kim, K.M. University Student Dropout Prediction Using Pretrained Language Models. Appl. Sci. 2023, 13, 7073.

60. Hutagaol, N.; Suharjito. Predictive modelling of student dropout using ensemble classifier method in higher education. Adv. Sci. Technol. Eng. Syst. 2019, 4, 206–211.

61. Sultana, S.; Khan, S.; Abbas, M.A. Predicting performance of electrical engineering students using cognitive and non-cognitive features for identification of potential dropouts. Int. J. Electr. Eng. Educ. 2017, 54, 105–118.

62. Realinho, V.; Machado, J.; Baptista, L.; Martins, M.V. Predicting Student Dropout and Academic Success. Data 2022, 7, 146.

63. Behr, A.; Giese, M.; Teguim Kamdjou, H.D.; Theune, K. Motives for dropping out from higher education—An analysis of bachelor’s degree students in Germany. Eur. J. Educ. 2021, 56, 325–343.

64. Thammasiri, D.; Delen, D.; Meesad, P.; Kasap, N. A critical assessment of imbalanced class distribution problem: The case of predicting freshmen student attrition. Expert Syst. Appl. 2014, 41, 321–330.

65. Goran, R.; Jovanovic, L.; Bacanin, N.; Stankovic, M.; Simic, V.; Antonijevic, M.; Zivkovic, M. Identifying and understanding student dropouts using metaheuristic optimized classifiers and explainable artificial intelligence techniques. IEEE Access 2024, 12, 122377–122400.

66. Gutiérrez, B.; Dehnhardt, M.; Cortés, R.; Matheu, A.; Cornejo, C. Modelo logístico de deserción mediante técnicas de regresión y árbol de decisión para la eficiencia en la destinación de recursos: El caso de una universidad privada chilena. Rev. Ibérica Sist. E Tecnol. Informação 2024, E68, 398–412.

67. Hassan, M.A.; Muse, A.H.; Nadarajah, S. Predicting student dropout rates using supervised machine learning: Insights from the 2022 National Education Accessibility Survey in Somaliland. Appl. Sci. 2024, 14, 7593.

68. Vaarma, M.; Li, H. Predicting student dropouts with machine learning: An empirical study in Finnish higher education. Technol. Soc. 2024, 76, 102474.

69. Cai, C.; Fleischhacker, A. Structural Neural Networks Meet Piecewise Exponential Models for Interpretable College Dropout Prediction. J. Educ. Data Min. 2024, 16, 279–302.

70. Asto-Lazaro, M.S.; Cieza-Mostacero, S.E. Web Application Based on Neural Networks for the Detection of Students at Risk of Academic Desertion. TEM J. 2024, 13, 2581.

71. Isleib, S.; Woisch, A.; Heublein, U. Causes of higher education dropout: Theoretical basis and empirical factors. Z. Erzieh. 2019, 22, 1047–1076.

72. Guerra, L.; Rivero, D.; Ortiz, A.; Diaz, E.; Quishpe, S. Prediction model of university dropout through data analytics: Strategy for sustainability. RISTI—Rev. Iber. Sist. E Tecnol. Inf. 2020, 2020, 38–47.

73. Hinojosa, M.; Derpich, I.; Alfaro, M.; Ruete, D.; Caroca, A.; Gatica, G. Student clustering procedure according to dropout risk to improve student management in higher education. Texto Livre 2022, 15, e37275.

74. Zapata-Medina, D.; Espinosa-Bedoya, A.; Jiménez-Builes, J.A. Improving the Automatic Detection of Dropout Risk in Middle and High School Students: A Comparative Study of Feature Selection Techniques. Mathematics 2024, 12, 1776.

75. Arthana, I.K.R.; Maysanjaya, I.M.D.; Pradnyana, G.A.; Dantes, G.R. Optimizing Dropout Prediction in University Using Oversampling Techniques for Imbalanced Datasets. Int. J. Inf. Educ. Technol. 2024, 14, 1052–1060.

76. Villar, A.; de Andrade, C.R.V. Supervised machine learning algorithms for predicting student dropout and academic success: A comparative study. Discov. Artif. Intell. 2024, 4, 2.

77. Diaz, J.; Moreira, F. Toward Educational Sustainability: An AI System for Identifying and Preventing Student Dropout. IEEE Rev. Iberoam. Tecnol. Aprendiz. 2024, 19, 100–110.

78. Kuz, A.; Morales, R. Educational Data Science and Machine Learning: A Case Study on University Student Dropout in Mexico. Educ. Knowl. Soc. 2023, 24, 14.

79. Villarreal-Torres, H.; Ángeles-Morales, J.; Cano-Mejía, J.; Mejía-Murillo, C.; Flores-Reyes, G.; Palomino-Márquez, M.; Marín-Rodriguez, W.; Andrade-Girón, D. Classification model for student dropouts using machine learning: A case study. EAI Endorsed Trans. Scalable Inf. Syst. 2023, 10, 1–12.

80. Díaz, B.; Marín, W.; Lioo, F.; Baldeos, L.; Villanueva, D.; Ausejo, J. Student desertion, factors associated with decision trees: The case of a graduate school at a public university in Peru. RISTI—Rev. Iber. Sist. E Tecnol. Inf. 2022, 2022, 197–211.

81. Bedregal-Alpaca, N.; Cornejo-Aparicio, V.; Zarate-Valderrama, J.; Yanque-Churo, P. Classification models for determining types of academic risk and predicting dropout in university students. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 7.

82. Marquez-Vera, C.; Morales, C.R.; Soto, S.V. Predicting School Failure and Dropout by Using Data Mining Techniques. IEEE Rev. Iberoam. Tecnol. Aprendiz. 2013, 8, 7–14.

83. Chen, R. Institutional characteristics and college student dropout risks: A multilevel event history analysis. Res. High. Educ. 2012, 53, 487–505.

84. Qvortrup, A.; Lykkegaard, E. The malleability of higher education study environment factors and their influence on humanities student dropout—Validating an instrument. Educ. Sci. 2024, 14, 904.

85. Alban, M.; Mauricio, D. Neural networks to predict dropout at the universities. Int. J. Mach. Learn. Comput. 2019, 9, 149–153.

86. Silva, H.A.; Quezada, L.E.; Oddershede, A.M.; Palominos, P.I.; O’Brien, C. A Method for Estimating Students’ Desertion in Educational Institutions Using the Analytic Hierarchy Process. J. Coll. Stud. Retent. Res. Theory Pract. 2020, 25, 101–125.

87. Bedregal-Alpaca, N.; Tupacyupanqui-Jaén, D.; Cornejo-Aparicio, V. Analysis of the academic performance of systems engineering students, desertion possibilities and proposals for retention. Ingeniare 2020, 28, 668–683.

88. Kim, S.; Choi, E.; Jun, Y.K.; Lee, S. Student Dropout Prediction for University with High Precision and Recall. Appl. Sci. 2023, 13, 6275.

89. Zanellati, A.; Zingaro, S.P.; Gabbrielli, M. Balancing performance and explainability in academic dropout prediction. IEEE Trans. Learn. Technol. 2024, 17, 2086–2099.

90. Iam-On, N.; Boongoen, T. Improved student dropout prediction in Thai University using ensemble of mixed-type data clusterings. Int. J. Mach. Learn. Cybern. 2017, 8, 497–510.

91. Quispe, J.O.Q.; Toledo, O.C.; Toledo, M.C.; Llatasi, E.E.C.; Saira, E. Early prediction of university student dropout using machine learning models. Nanotechnol. Percept. 2024, 20, 659–669.

92. Bouihi, B.; Bousselham, A.; Aoula, E.; Ennibras, F.; Deraoui, A. Prediction of Higher Education Student Dropout based on Regularized Regression Models. Eng. Technol. Appl. Sci. Res. 2024, 14, 17811–17815.

93. Aggarwal, D.; Mittal, S.; Bali, V. Prediction model for classifying students based on performance using machine learning techniques. Int. J. Recent Technol. Eng. 2019, 8, 496–503.

94. Alvarez, N.L.; Callejas, Z.; Griol, D. Factors that affect student desertion in careers in Computer Engineering profile. Rev. Fuentes 2020, 22, 105–126.

95. Cam, H.N.T.; Sarlan, A.; Arshad, N.I. A hybrid model integrating recurrent neural networks and the semi-supervised support vector machine for identification of early student dropout risk. PeerJ Comput. Sci. 2024, 10, e2572.

96. Castelo Branco, U.V.; Jezine, E.; Santos Diniz, A.V.; Silva, G.T. Sistema de Alerta para la Identificación de Posibles Factores de Deserción de Estudiantes de Grado en Período de Pandemia en Paraíba (Brasil). Res. Educ. Learn. Innov. Arch. 2022, 29, 83–101.

97. Gutierrez-Pachas, D.A.; Garcia-Zanabria, G.; Cuadros-Vargas, E.; Camara-Chavez, G.; Gomez-Nieto, E. Supporting Decision-Making Process on Higher Education Dropout by Analyzing Academic, Socioeconomic, and Equity Factors through Machine Learning and Survival Analysis Methods in the Latin American Context. Educ. Sci. 2023, 13, 154.

98. Hoffait, A.S.; Schyns, M. Early detection of university students with potential difficulties. Decis. Support Syst. 2017, 101, 1–11.

99. Lacave, C.; Molina, A.I.; Cruz-Lemus, J.A. Learning Analytics to identify dropout factors of Computer Science studies through Bayesian networks. Behav. Inf. Technol. 2018, 37, 993–1007.

100. Lottering, R.; Hans, R.; Lall, M. A Machine Learning Approach to Identifying Students at Risk of Dropout: A Case Study. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 417–422.

101. Martins, M.; Migueis, V.; Fonseca, D.; Gouveia, P. Prediction of academic dropout in a higher education institution using data mining. RISTI—Rev. Iber. Sist. E Tecnol. Inf. 2020, 2020, 188–203.

102. Radovanović, S.; Delibašić, B.; Suknović, M. Predicting dropout in online learning environments. Comput. Sci. Inf. Syst. 2021, 18, 957–978.

103. Rivera-Baena, O.D.; Patiño-Rodríguez, C.E.; Úsuga-Manco, O.C.; Hernández-Barajas, F. ADHE: A tool to characterize higher education dropout phenomenon. Rev. Fac. Ing. Univ. Antioq. 2024, 64–75.

104. Schneider, K.; Berens, J.; Burghoff, J. Early detection of student dropout: What is relevant information? Z. Erzieh. 2019, 22, 1121–1146.

105. Segura, M.; Mello, J.; Hernández, A. Machine Learning Prediction of University Student Dropout: Does Preference Play a Key Role? Mathematics 2022, 10, 3359.

106. Tan, M.; Shao, P. Prediction of student dropout in E-learning program through the use of machine learning method. Int. J. Emerg. Technol. Learn. 2015, 10, 11.

107. Wainipitapong, S.; Chiddaycha, M. Assessment of dropout rates in the preclinical years and contributing factors: A study on one Thai medical school. BMC Med. Educ. 2022, 22, 461.

108. Yasmin. Application of the classification tree model in predicting learner dropout behaviour in open and distance learning. Distance Educ. 2013, 34, 218–231.

109. Zárate-Valderrama, J.; Bedregal-Alpaca, N.; Cornejo-Aparicio, V. Classification models to recognize patterns of desertion in university students. Ingeniare 2021, 29, 168–177.

110. Zerkouk, M.; Mihoubi, M.; Chikhaoui, B.; Wang, S. A machine learning based model for student’s dropout prediction in online training. Educ. Inf. Technol. 2024, 29, 15793–15812.

- Alfahid, A. Algorithmic Prediction of Students On-Time Graduation from the University. TEM J. 2024, 13, 692–698. [Google Scholar] [CrossRef]

- Mealli, F.; Rampichini, C. Evaluating the effects of university grants by using regression discontinuity designs. J. R. Stat. Soc. Ser. A Stat. Soc. 2012, 175, 775–798. [Google Scholar] [CrossRef]

- Daza, A. A stacking based hybrid technique to predict student dropout at universities. J. Theor. Appl. Inf. Technol. 2022, 100, 1–12. [Google Scholar]

- Lackner, E. Community College Student Persistence During the COVID-19 Crisis of Spring 2020. Community Coll. Rev. 2023, 51, 193–215. [Google Scholar] [CrossRef] [PubMed]

- Willging, P.A.; Johnson, S.D. Factors that influence students’ decision to dropout of online courses. Online Learn. J. 2019, 13, 115–127. [Google Scholar] [CrossRef]

- Vega, H.; Sanez, E.; De La Cruz, P.; Moquillaza, S.; Pretell, J. Intelligent System to Predict University Students Dropout. Int. J. Online Biomed. Eng. 2022, 18, 27–43. [Google Scholar] [CrossRef]

- Fontana, L.; Masci, C.; Ieva, F.; Paganoni, A.M. Performing learning analytics via generalised mixed-effects trees. Data 2021, 6, 74. [Google Scholar] [CrossRef]

- Márquez-Vera, C.; Cano, A.; Romero, C.; Ventura, S. Predicting student failure at school using genetic programming and different data mining approaches with high dimensional and imbalanced data. Appl. Intell. 2013, 38, 315–330. [Google Scholar] [CrossRef]

- Alvarado-Uribe, J.; Mejía-Almada, P.; Masetto Herrera, A.L.; Molontay, R.; Hilliger, I.; Hegde, V.; Montemayor Gallegos, J.E.; Ramírez Díaz, R.A.; Ceballos, H.G. Student Dataset from Tecnologico de Monterrey in Mexico to Predict Dropout in Higher Education. Data 2022, 7, 119. [Google Scholar] [CrossRef]

- Dasi, H.; Kanakala, S. Student Dropout Prediction Using Machine Learning Techniques. Int. J. Intell. Syst. Appl. Eng. 2022, 10, 408–414. [Google Scholar]

- Albán, M.; Mauricio, D.; Albán, M. Decision trees for the early identification of university students at risk of desertion. Int. J. Eng. Technol. 2018, 7, 51.

- Song, Z.; Sung, S.H.; Park, D.M.; Park, B.K. All-Year Dropout Prediction Modeling and Analysis for University Students. Appl. Sci. 2023, 13, 1143.

- Fauszt, T.; Erdélyi, K.; Dobák, D.; Bognár, L.; Kovács, E. Design of a Machine Learning Model to Predict Student Attrition. Int. J. Emerg. Technol. Learn. 2023, 18, 184–195.

- Meyer, J.; Leuze, K.; Strauss, S. Individual Achievement, Person-Major Fit, or Social Expectations: Why Do Students Switch Majors in German Higher Education? Res. High. Educ. 2022, 63, 222–247.

- Wild, S.; Schulze Heuling, L. Student dropout and retention: An event history analysis among students in cooperative higher education. Int. J. Educ. Res. 2020, 104, 101687.

- Wongvorachan, T.; Bulut, O.; Liu, J.X.; Mazzullo, E. A Comparison of Bias Mitigation Techniques for Educational Classification Tasks Using Supervised Machine Learning. Information 2024, 15, 326.

- Sacală, M.D.; Pătărlăgeanu, S.R.; Popescu, M.F.; Constantin, M. Econometric research of the mix of factors influencing first-year students’ dropout decision at the faculty of agri-food and environmental economics. Econ. Comput. Econ. Cybern. Stud. Res. 2021, 55, 203–220.

- Kok, C.L.; Ho, C.K.; Chen, L.; Koh, Y.Y.; Tian, B. A Novel Predictive Modeling for Student Attrition Utilizing Machine Learning and Sustainable Big Data Analytics. Appl. Sci. 2024, 14, 9633.

- Fernandez-Garcia, A.J.; Preciado, J.C.; Melchor, F.; Rodriguez-Echeverria, R.; Conejero, J.M.; Sanchez-Figueroa, F. A real-life machine learning experience for predicting university dropout at different stages using academic data. IEEE Access 2021, 9, 133076–133090.

- Alban, M.; Mauricio, D. Factors that influence undergraduate university desertion according to students perspective. Int. J. Eng. Technol. 2019, 10, 1585–1602.

- Huo, H.; Cui, J.; Hein, S.; Padgett, Z.; Ossolinski, M.; Raim, R.; Zhang, J. Predicting Dropout for Nontraditional Undergraduate Students: A Machine Learning Approach. J. Coll. Stud. Retent. Res. Theory Pract. 2023, 24, 1054–1077.

- Nuanmeesri, S.; Poomhiran, L.; Chopvitayakun, S.; Kadmateekarun, P. Improving Dropout Forecasting during the COVID-19 Pandemic through Feature Selection and Multilayer Perceptron Neural Network. Int. J. Inf. Educ. Technol. 2022, 12, 851–857.

- Zamora Menéndez, Á.; Gil Flores, J.; de Besa Gutiérrez, M.R. Learning approaches, time perspective and persistence in university students. Educ. XX1 2020, 23, 17–39.

- Vives, L.; Cabezas, I.; Vives, J.C.; Reyes, N.G.; Aquino, J.; Cóndor, J.B.; Altamirano, S.F.S. Prediction of students’ academic performance in the programming fundamentals course using long short-term memory neural networks. IEEE Access 2024, 12, 5882–5898.

- Alban, M.; Mauricio, D. Prediction of university dropout through technological factors: A case study in Ecuador. Rev. Espac. 2018, 39, 8.

- Cedeño-Valarezo, L.; Morales-Carrillo, J.; Quijije-Vera, C.P.; Palau-Delgado, S.A.; López-Mora, C.I. Machine learning to predict school dropout in the context of COVID-19. RISTI—Rev. Iber. Sist. E Tecnol. Inf. 2023, 2023, 370–377.

- Gamao, A.O.; Gerardo, B.D. Prediction-based model for student dropouts using modified mutated firefly algorithm. Int. J. Adv. Trends Comput. Sci. Eng. 2019, 8, 3461–3469.

- Heredia, D.; Amaya, Y.; Barrientos, E. Student Dropout Predictive Model Using Data Mining Techniques. IEEE Lat. Am. Trans. 2015, 13, 3127–3134.

- Mubarak, A.A.; Cao, H.; Zhang, W. Prediction of students’ early dropout based on their interaction logs in online learning environment. Interact. Learn. Environ. 2022, 30, 1414–1433.

- Niyogisubizo, J.; Liao, L.; Nziyumva, E.; Murwanashyaka, E.; Nshimyumukiza, P.C. Predicting student’s dropout in university classes using two-layer ensemble machine learning approach: A novel stacked generalization. Comput. Educ. Artif. Intell. 2022, 3, 100066.

- Rico-Juan, J.R.; Cachero, C.; Macià, H. Study regarding the influence of a student’s personality and an LMS usage profile on learning performance using machine learning techniques. Appl. Intell. 2024, 54, 6175–6197.

- Selvan, M.P.; Navadurga, N.; Prasanna, N.L. An efficient model for predicting student dropout using data mining and machine learning techniques. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 750–752.

- Valles-Coral, M.A.; Salazar-Ramírez, L.; Injante, R.; Hernandez-Torres, E.A.; Juárez-Díaz, J.; Navarro-Cabrera, J.R.; Pinedo, L.; Vidaurre-Rojas, P. Density-Based Unsupervised Learning Algorithm to Categorize College Students into Dropout Risk Levels. Data 2022, 7, 165.

- Figueroa-Canas, J.; Sancho-Vinuesa, T. Early prediction of dropout and final exam performance in an online statistics course. Rev. Iberoam. Tecnol. Aprendiz. 2020, 15, 86–94.

- Nuankaew, P. Dropout situation of business computer students, University of Phayao. Int. J. Emerg. Technol. Learn. 2019, 14, 115–131.

- Pecuchova, J.; Drlik, M. Enhancing the Early Student Dropout Prediction Model Through Clustering Analysis of Students’ Digital Traces. IEEE Access 2024, 12, 159336–159367.

- Fu, Q.; Gao, Z.; Zhou, J.; Zheng, Y. CLSA: A novel deep learning model for MOOC dropout prediction. Comput. Electr. Eng. 2021, 94, 107315.

- Burgos, C.; Campanario, M.L.; Peña, D.d.l.; Lara, J.A.; Lizcano, D.; Martínez, M.A. Data mining for modeling students’ performance: A tutoring action plan to prevent academic dropout. Comput. Electr. Eng. 2018, 66, 541–556.

- Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Available online: https://proceedings.neurips.cc/paper_files/paper/2017/file/8a20a8621978632d76c43dfd28b67767-Paper.pdf (accessed on 25 February 2025).

- Melo, E.; Silva, I.; Costa, D.G.; Viegas, C.M.D.; Barros, T.M. On the use of explainable artificial intelligence to evaluate school dropout. Educ. Sci. 2022, 12, 845.

- Dass, S.; Gary, K.; Cunningham, J. Predicting student dropout in self-paced mooc course using random forest model. Information 2021, 12, 476.

- Karlos, S.; Kostopoulos, G.; Kotsiantis, S. Predicting and interpreting students’ grades in distance higher education through a semi-regression method. Appl. Sci. 2020, 10, 8413.

- Torres, J.A.O.; Santiago, A.M.; Izaguirre, J.M.V.; Garduza, S.H.; García, M.A.; Alejandro, G.F. Multilayer fuzzy inference system for predicting the risk of dropping out of school at the high school level. IEEE Access 2024, 12, 137523–137532.

- Karimi-Haghighi, M.; Castillo, C.; Hernández-Leo, D. A Causal Inference Study on the Effects of First Year Workload on the Dropout Rate of Undergraduates. In Artificial Intelligence in Education; Rodrigo, M.M., Matsuda, N., Cristea, A.I., Dimitrova, V., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 15–27.

- Alhaza, K.; Abdel-Salam, A.-S.G.; Mollazehi, M.D.; Ismail, R.M.; Bensaid, A.; Johnson, C.; Al-Tameemi, R.A.N.; A Hasan, M.; Romanowski, M.H. Factors affecting university image among undergraduate students: The case study of Qatar University. Cogent Educ. 2021, 8, 1977106.

- Viloria, A.; Lezama, O.B.P. Mixture structural equation models for classifying university student dropout in Latin America. Procedia Comput. Sci. 2019, 160, 629–634.

- Ishii, T.; Tachikawa, H.; Shiratori, Y.; Hori, T.; Aiba, M.; Kuga, K.; Arai, T. What kinds of factors affect the academic outcomes of university students with mental disorders? A retrospective study based on medical records. Asian J. Psychiatry 2018, 32, 67–72.

- Pecuchova, J.; Drlik, M. Predicting students at risk of early dropping out from course using ensemble classification methods. Procedia Comput. Sci. 2023, 225, 3223–3232.

- Brigato, L.; Iocchi, L. A Close Look at Deep Learning with Small Data. arXiv 2020, arXiv:2003.12843.

- Mauricio, D.; Cárdenas-Grandez, J.; Uribe Godoy, G.V.; Rodríguez Mallma, M.J.; Maculan, N.; Mascaro, P. Maximizing Survival in Pediatric Congenital Cardiac Surgery Using Machine Learning, Explainability, and Simulation Techniques. J. Clin. Med. 2024, 13, 6872.