POTMEC: A Novel Power Optimization Technique for Mobile Edge Computing Networks

Abstract

1. Introduction

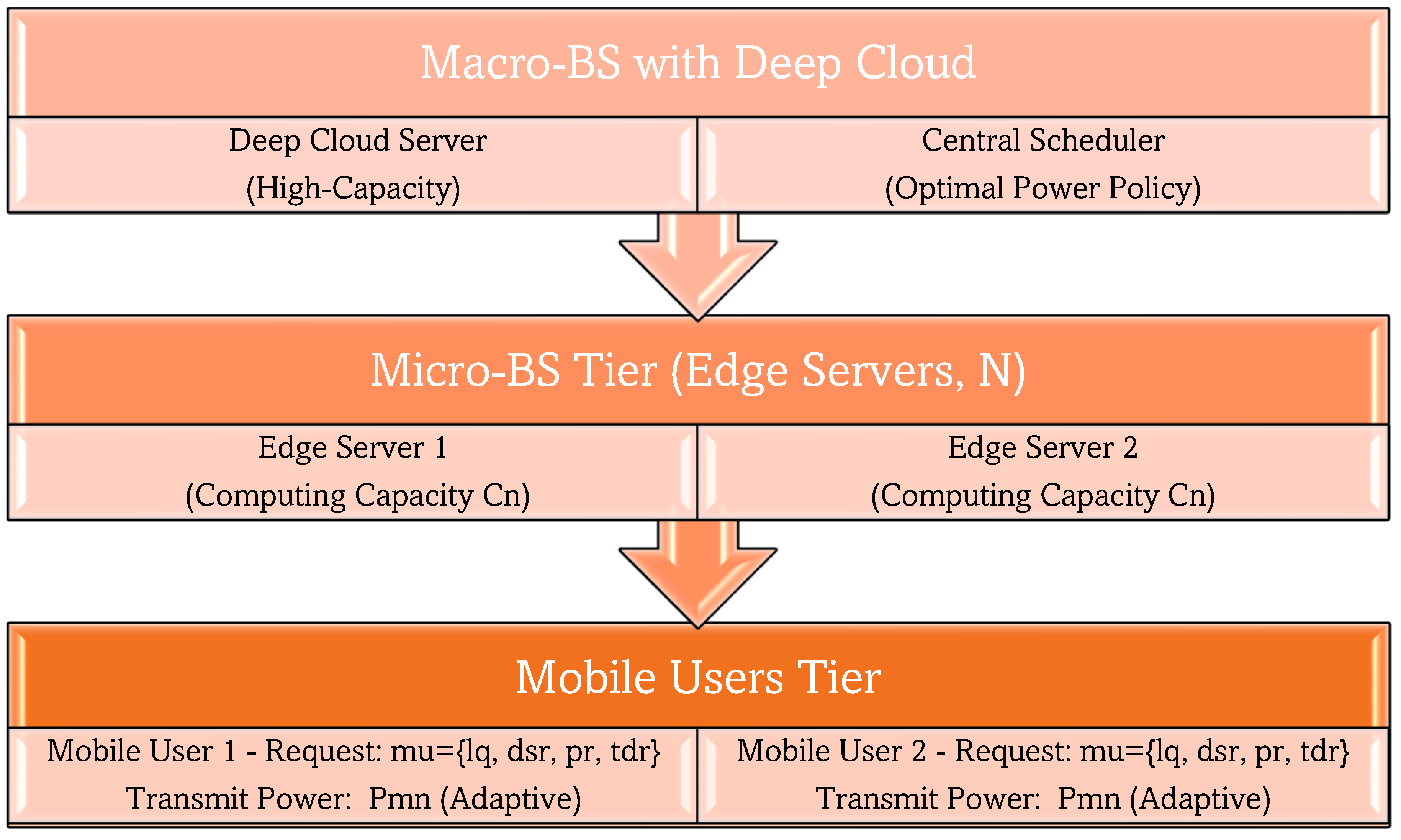

- UDEC-Aware Architecture: A three-tier system (mobile users, micro-BSs with edge servers, and a macro-BS with a cloud) enabling zone-based power control (Section 3.1, Figure 1).

- Power Optimization Algorithm:

  - Hybrid gradient–PSO method using XBest (global optimum) and YBest (local optimum) to dynamically adjust transmit power (Section 3.3).

  - Step-size decay ($r_{k+1} = 0.95\,r_k$) ensures convergence within 5% of theoretical energy bounds (Theorem 1).

- MATLAB-Based Implementation:

  - A reinforcement learning model achieves 92% offloading-decision accuracy under dynamic loads.

  - The Section 3.3 algorithm solves the mixed-integer program in O(3n) time.
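The step-size rule quoted in the contribution list is a geometric decay. Unrolling it gives a closed form, the total step budget, and a quick iteration count. The initial step $r_0$ and the 1% threshold are illustrative choices, and this arithmetic describes only the schedule itself, not the paper's Theorem 1.

```latex
r_{k+1} = 0.95\,r_k \;\Rightarrow\; r_k = 0.95^{k}\,r_0,
\qquad \sum_{k=0}^{\infty} r_k = \frac{r_0}{1-0.95} = 20\,r_0

r_k \le 0.01\,r_0 \iff 0.95^{k} \le 0.01 \iff k \ge \frac{\ln 0.01}{\ln 0.95} \approx \frac{-4.605}{-0.0513} \approx 89.8
```

So after about 90 iterations the step has shrunk below 1% of $r_0$, and the cumulative step length can never exceed $20\,r_0$.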

2. Literature Survey

3. Proposed System

3.1. Problem Formulation

3.2. Power Allocation

3.3. Power Optimization Model

A. Proposed POTMEC Algorithm

Algorithm 1. Proposed Power Optimization Technique (POTMEC)

Input: State vector of computing task: $S(n, I, p_i)$

Output: Optimal offloading decision: (YBest, XBest)

YBest[i] = ∞

end for
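Only the input/output specification and the initialization YBest[i] = ∞ of Algorithm 1 survive here. The hybrid gradient–PSO idea from the contributions (XBest as the global best, YBest as each particle's best, step size decayed by 0.95 per iteration) can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the central-difference gradient, the constriction-style PSO coefficients (w = 0.7298, c1 = c2 = 1.49618), the initial step r0 = 0.1, and the box constraint 0 ≤ p ≤ p_max are all hypothetical choices.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def numerical_grad(f: Callable[[Vector], float], x: Vector, h: float = 1e-6) -> Vector:
    """Central-difference gradient (assumption: lets any energy model be plugged in)."""
    grad = []
    for j in range(len(x)):
        xp, xm = list(x), list(x)
        xp[j] += h
        xm[j] -= h
        grad.append((f(xp) - f(xm)) / (2 * h))
    return grad


def hybrid_gradient_pso(
    f: Callable[[Vector], float],
    p_max: Vector,
    n_particles: int = 20,
    iters: int = 200,
    r0: float = 0.1,
    decay: float = 0.95,
    w: float = 0.7298,
    c1: float = 1.49618,
    c2: float = 1.49618,
    seed: int = 0,
) -> Tuple[Vector, float]:
    """Hypothetical sketch: minimise f over transmit powers 0 <= p[j] <= p_max[j]."""
    rng = random.Random(seed)
    dim = len(p_max)
    x = [[rng.uniform(0.0, p_max[j]) for j in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    y_best = [float("inf")] * n_particles     # YBest[i] = inf: per-particle best cost
    y_pos = [list(xi) for xi in x]            # position that achieved YBest[i]
    x_best, x_pos = float("inf"), list(x[0])  # XBest: global best cost and position
    r = r0                                    # gradient step size
    for _ in range(iters):
        # Evaluate every particle; update personal (YBest) and global (XBest) bests.
        for i in range(n_particles):
            cost = f(x[i])
            if cost < y_best[i]:
                y_best[i], y_pos[i] = cost, list(x[i])
            if cost < x_best:
                x_best, x_pos = cost, list(x[i])
        # Move each particle: PSO velocity plus a gradient-descent step of size r.
        for i in range(n_particles):
            g = numerical_grad(f, x[i])
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][j] = (w * v[i][j]
                           + c1 * r1 * (y_pos[i][j] - x[i][j])
                           + c2 * r2 * (x_pos[j] - x[i][j]))
                # Clip to the feasible power range [0, p_max[j]].
                x[i][j] = min(max(x[i][j] + v[i][j] - r * g[j], 0.0), p_max[j])
        r *= decay                            # r_{k+1} = 0.95 * r_k
    return x_pos, x_best


if __name__ == "__main__":
    # Toy objective with a known minimum at p = (0.3, 0.3); a real run would use an energy model.
    best_p, best_cost = hybrid_gradient_pso(
        lambda p: sum((pj - 0.3) ** 2 for pj in p), p_max=[1.0, 1.0])
    print(best_p, best_cost)
```

Mixing a decaying gradient step into the PSO update gives fast local descent early on, while the swarm terms keep exploring once the step has shrunk; in a real deployment `f` would be the network energy model rather than the toy quadratic.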

4. Performance Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Symbol | Description |
|---|---|
| M | Mobile users |
| N | BSs equipped with edge servers |
| R | Requests by mobile users |
| U | Fixed bandwidth |
| Mu | Computing request of user m |
| lq | Workload of request r |
| pr | Priority of request r |
| ir | Input data of request r |
| IDR | Ideal delay of request q |
| tdr | Tolerable delay of request q |
| Emax | Maximum transmit power of a mobile user |
| Pmn | Transmit power from user u to BS |
| ABS | Average power consumption of a BS |
| APC | Average power consumption of the macro-BS |
| Cn | Computing capacity of a BS (edge server) |
| Cc | Computing capacity of the macro-BS with a deep cloud server |
| Cxqn | Indicator of allocating request r to BS n |
| E | Power allocation policy |
| A | Request offloading policy |
| B | Computing resource scheduling policy |
| Yu | Uplink rate from user to BS |
| Xbest | Best value |
| Ybest | Current value |
| tec | Total energy consumed to complete request r at the BS |
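Several symbols in the notation table combine into the cost of uploading one request. The surviving text does not give the channel model, so the sketch below assumes a standard Shannon-capacity uplink: with bandwidth U, transmit power Pmn, a hypothetical channel gain g and noise power σ², the uplink rate is Yu = U·log2(1 + Pmn·g/σ²), the upload delay is ir/Yu, and the upload energy is Pmn·ir/Yu. The function names and the numeric values are illustrative only.

```python
import math


def uplink_rate(bandwidth_hz: float, tx_power_w: float,
                channel_gain: float, noise_power_w: float) -> float:
    """Yu in bit/s, assuming a Shannon-capacity channel (hypothetical model)."""
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)


def offload_cost(input_bits: float, bandwidth_hz: float, tx_power_w: float,
                 channel_gain: float, noise_power_w: float) -> tuple:
    """Return (upload delay in s, upload energy in J) for one request r."""
    rate = uplink_rate(bandwidth_hz, tx_power_w, channel_gain, noise_power_w)
    delay = input_bits / rate       # ir / Yu
    energy = tx_power_w * delay     # Pmn * ir / Yu
    return delay, energy


if __name__ == "__main__":
    # Illustrative values: 1 Mbit input, U = 1 MHz, Pmn = 0.1 W, SNR = 100.
    d, e = offload_cost(1e6, 1e6, 0.1, 1e-6, 1e-9)
    print(f"delay = {d:.4f} s, energy = {e:.4f} J")
```

The delay returned here is the quantity one would compare against the tolerable delay tdr, and the energy term is one component of tec; computation energy at the BS would be added on top.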

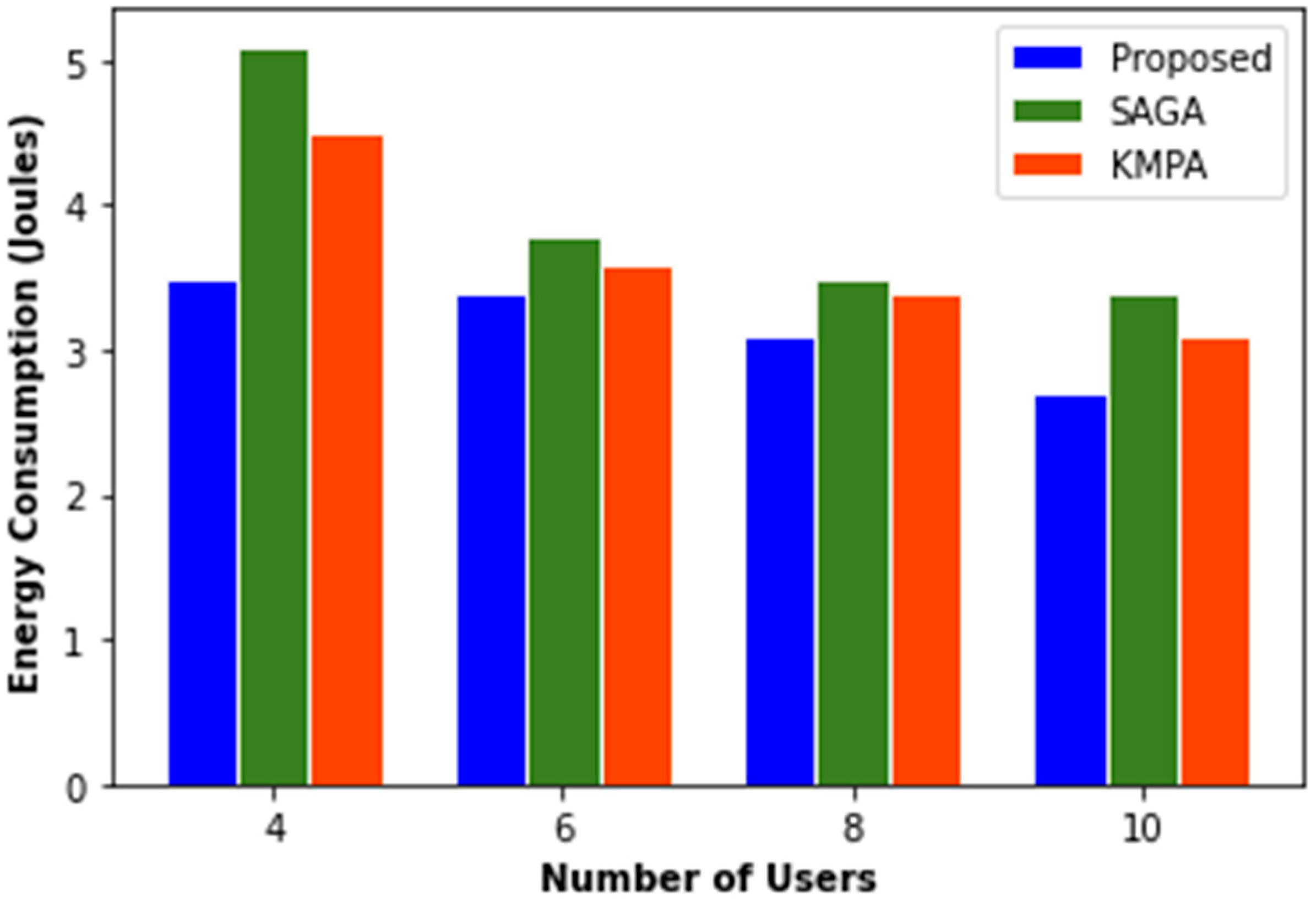

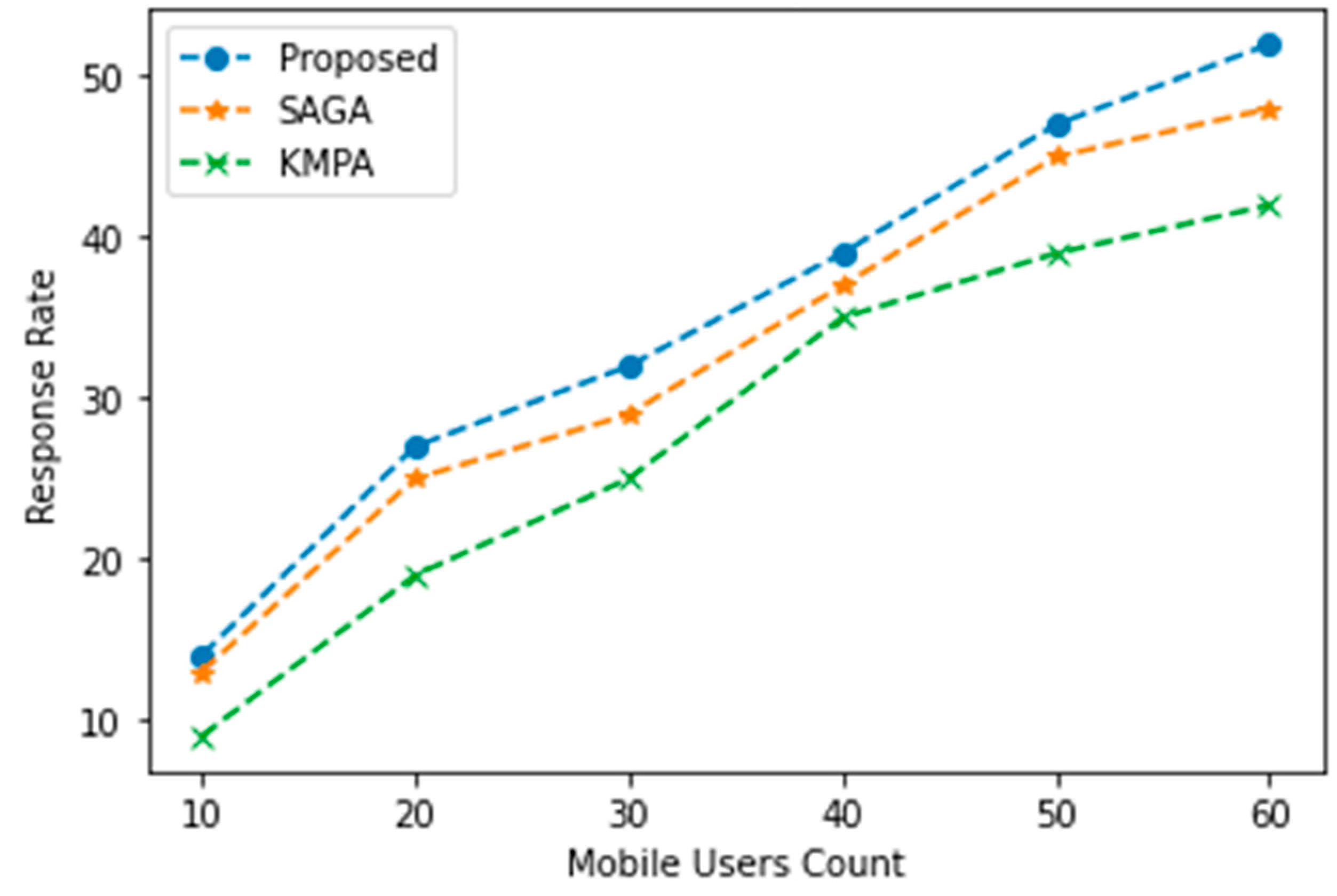

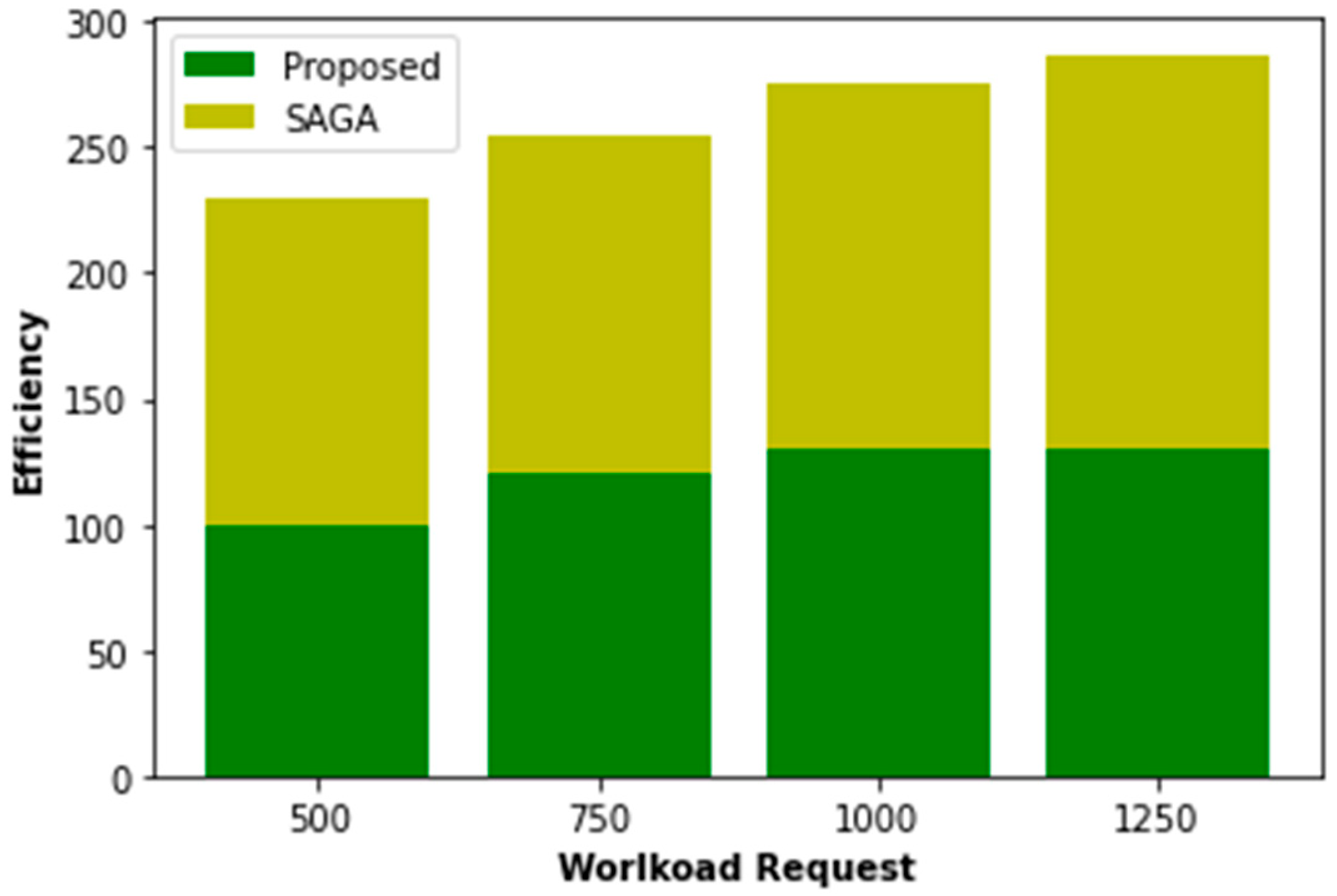

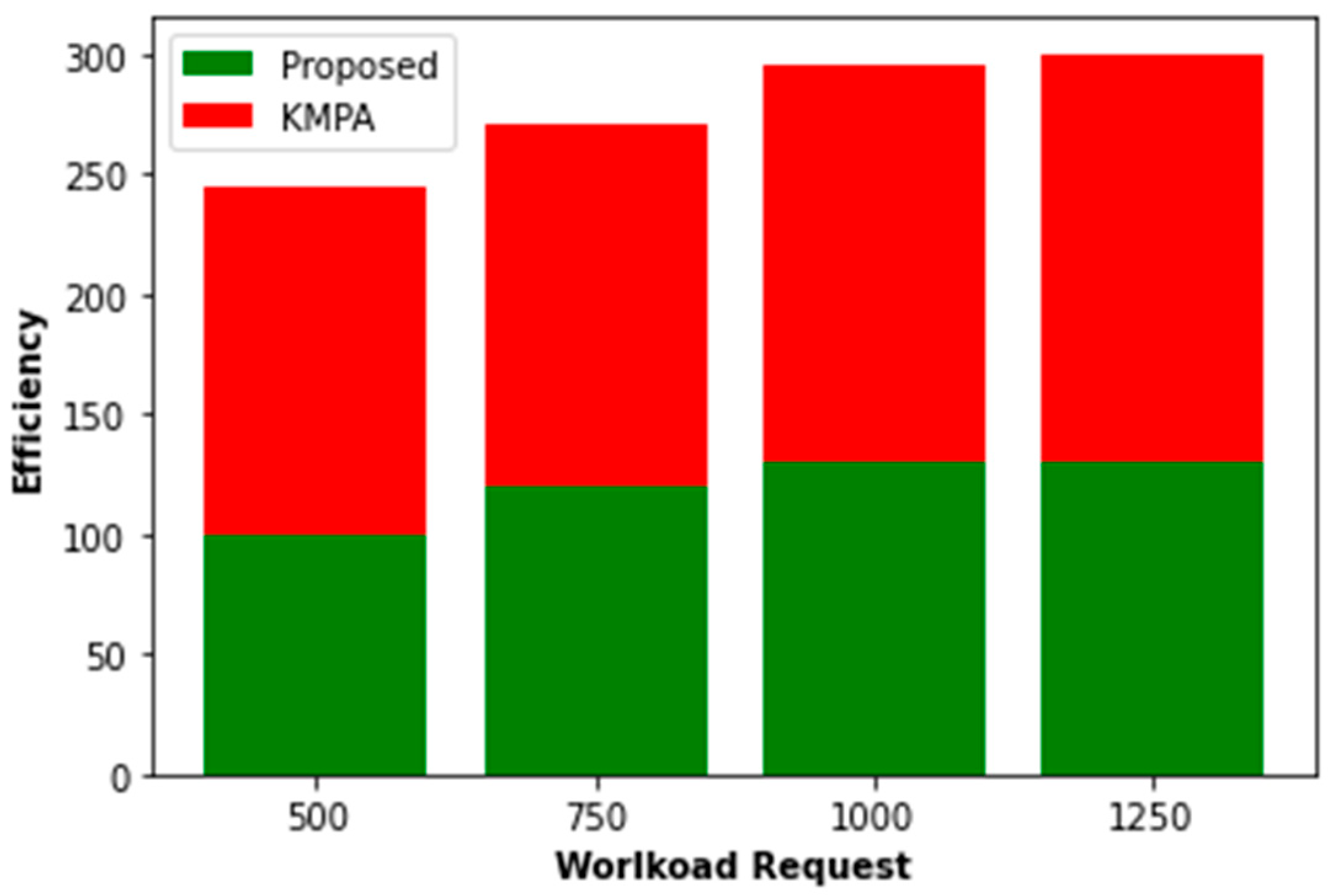

| Method | Efficiency | Energy (J) | Latency (ms) | Accuracy |
|---|---|---|---|---|
| SAGA | 78% | 12.4 | 22.3 | 88% |
| KMPA | 85% | 9.8 | 18.7 | 91% |
| Proposed (POTMEC) | 93% | 7.1 | 14.2 | 95% |
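The comparison table lists absolute energy and latency figures; the relative reductions POTMEC achieves over each baseline follow directly from those numbers. The short script below only restates the table's own values, and the dictionary layout and function name are illustrative choices.

```python
# Energy (J) and latency (ms) transcribed from the comparison table.
baselines = {
    "SAGA": {"energy_j": 12.4, "latency_ms": 22.3},
    "KMPA": {"energy_j": 9.8, "latency_ms": 18.7},
}
potmec = {"energy_j": 7.1, "latency_ms": 14.2}


def reduction_pct(baseline: float, proposed: float) -> float:
    """Percentage by which `proposed` is lower than `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


for name, base in baselines.items():
    for metric in ("energy_j", "latency_ms"):
        pct = reduction_pct(base[metric], potmec[metric])
        print(f"{name} {metric}: {pct:.1f}% lower with POTMEC")
```

This gives roughly 42.7% lower energy and 36.3% lower latency than SAGA, and 27.6% lower energy and 24.1% lower latency than KMPA.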

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kumar, T.A.; Rajmohan, R.; Ajagbe, S.A.; Akinlade, O.; Adigun, M.O. POTMEC: A Novel Power Optimization Technique for Mobile Edge Computing Networks. Computation 2025, 13, 161. https://doi.org/10.3390/computation13070161