Integrating Linguistic and Eye Movements Features for Arabic Text Readability Assessment Using ML and DL Models

Abstract

1. Introduction

- The first multimodal evaluation of Arabic readability using a corpus that combines text and gaze features at the paragraph level, a unit of analysis that balances contextual meaning with experimental tractability.

- We conducted an in-depth investigation of the relationship between cognitive data and text readability assessment, particularly in the Arabic context.

- We identified the impact of two types of cognitive features, direct and indirect, relating to reading features and experimental conditions, on the ML and DL models, along with their significant contribution to the accurate prediction of readability scores.

- We compared the performance of different ML and DL models in predicting text difficulty using various textual representations including handcrafted features and feature vectorization methods. Empirical evidence showed that gaze features substantially improved both ML and DL models compared with text-only features.

- The results of the cognitive measures of Arabic text readability were compared with other readability measures for Arabic and other languages such as English, German, Japanese, and Portuguese.

2. Background

2.1. Text Readability Assessment

2.2. Eye-Tracking

2.3. Arabic Language

2.4. Arabic Language and Eye-Tracking

- Informational density: Arabic text requires more time to process, making word recognition harder than in Latin scripts [51].

- Fixation and perceptual span patterns: Arabic readers tend to fixate centrally within words and extend their perceptual span leftward, reflecting the script’s directionality [51].

- Dot density and orthographic ambiguity: Many letters differ only by dots, adding to visual load and fixation demands [44].

- Right-to-left directionality and bidirectional reading: Text runs right-to-left, while numbers are read left-to-right; this affects saccade planning and sometimes causes inversion errors [54].

- Cursive and shape-changing script: Letters connect and change form depending on position, which complicates word segmentation [55].

3. Related Research

3.1. Classic Text Readability Assessments

3.2. Data-Driven Text Readability Assessment

3.2.1. Arabic Text Readability Assessment Studies

3.2.2. Global Text Readability Assessment Studies Using Eye-Tracking

3.3. Discussion

4. ML-Based Experiments and Results

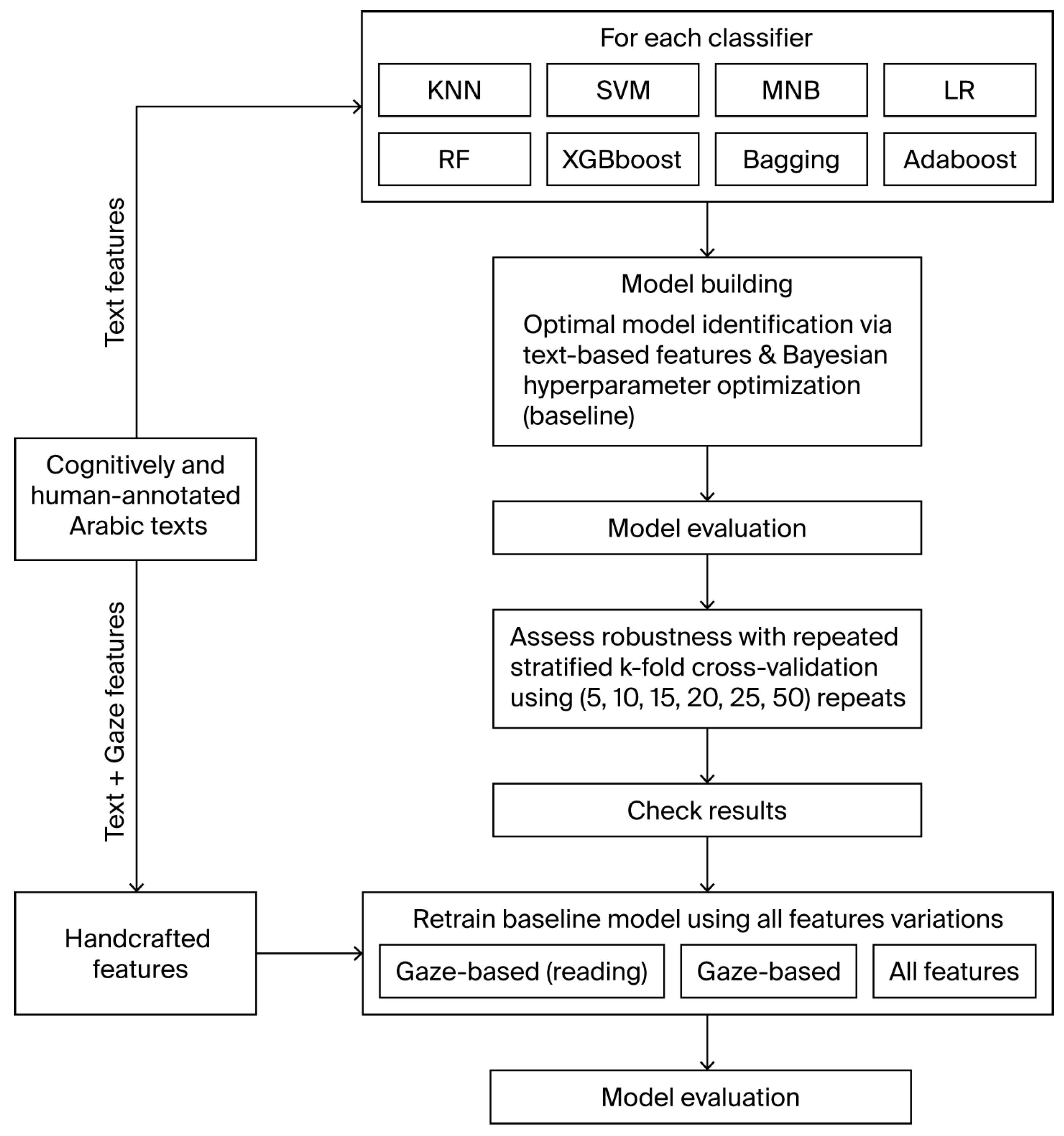

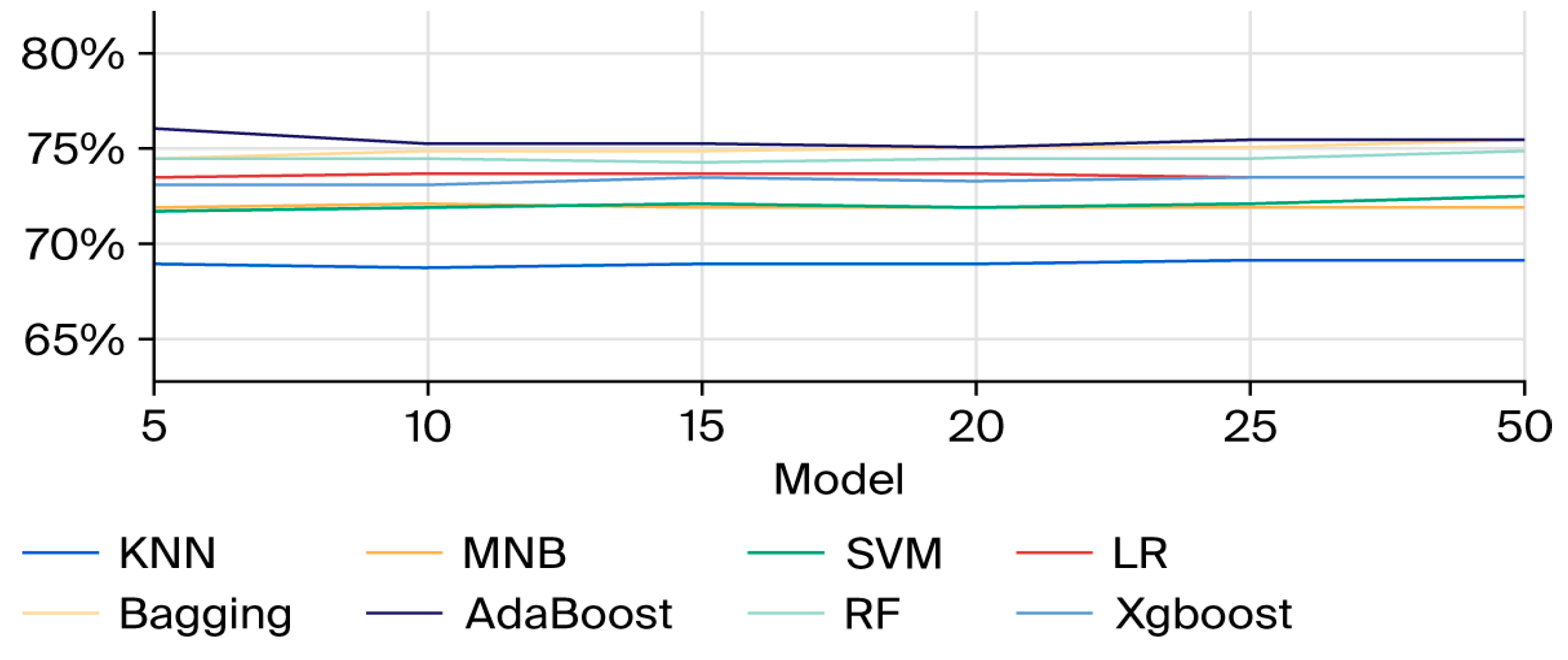

4.1. Model Building and Evaluation

- Dataset: Arabic text readability was framed as a supervised classification task using the AraEyebility corpus [110]. The corpus comprises 587 paragraphs (57,617 words) drawn from 92 MSA and CA texts across 13 genres including grammar, literature, health, and politics. Texts were partially diacritized and segmented into coherent paragraphs of different lengths and difficulty levels. Eye-movement data were collected from 15 native Arabic speakers. Extracted eye-tracking features—fixation, saccades, regressions, and pupil metrics—serve as the indicators of reading effort [4]. Additionally, the corpus includes linguistic features and subjective gold-standard readability annotations from participants, classifying Arabic texts into easy, medium, and difficult levels. The distribution is notably skewed, with approximately 61% being easy, 34% being medium, and 5% being difficult, indicating a significant data imbalance.

- Classification using handcrafted features (Section 4.2): Four ML models were trained: multinomial naive Bayes (MNB), logistic regression (LR), support vector machines (SVMs), and K-nearest neighbors (KNN), along with four ensemble methods: bootstrap aggregating (Bagging), random forest (RF), adaptive boosting (AdaBoost), and extreme gradient boosting (XGBoost). Ensemble methods apply the “wisdom of the many” concept, potentially improving prediction accuracy [109]. The models were first evaluated using handcrafted linguistic and cognitive features, and further experiments then varied the feature sets to assess their impact.

- Classification using feature vectorization methods (Section 4.3): Texts were represented using TF–IDF, and the ML models were re-evaluated on these representations. Results were compared against those obtained with handcrafted features.

- Handling class imbalance: The dataset is notably skewed (61% easy, 34% medium, 5% difficult). As noted in [71], class imbalance is a common challenge in automatic text readability assessment, where natural text distributions are often uneven, and it is frequently observed in Arabic readability studies. Oversampling, undersampling, and SMOTE were initially tested, but these approaches yielded inconsistent results and distorted class distributions. Consequently, stratified sampling was used to divide the data into training and testing sets, ensuring that each readability class was proportionally represented in both. Stratification preserves the class distribution across splits and helps reduce bias during training and evaluation, but it does not rebalance the classes. We therefore also adopted hyperparameter fine-tuning and cost-sensitive training to improve performance under imbalance. These steps involved adjusting model-specific parameters and using evaluation metrics that capture performance across all classes.
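The stratified split and cost-sensitive weighting described in the list above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' pipeline: the per-class counts (358/200/29) are illustrative values chosen to approximate the reported 61/34/5 distribution over 587 paragraphs, the 20% test share and the seed are arbitrary, and the helper names `stratified_split` and `balanced_class_weights` are hypothetical. The weighting formula, n_samples / (n_classes × class_count), is the one scikit-learn documents for `class_weight="balanced"`.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, test_size=0.2, seed=42):
    """Split indices so each class keeps roughly the same share in train and test."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(test_size * len(indices))  # per-class test quota
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Illustrative counts only (assumption): they approximate the reported
# 61% / 34% / 5% split and are not the corpus's actual per-class counts.
labels = ["easy"] * 358 + ["medium"] * 200 + ["difficult"] * 29

train_idx, test_idx = stratified_split(labels)
test_counts = Counter(labels[i] for i in test_idx)
weights = balanced_class_weights([labels[i] for i in train_idx])

print(dict(test_counts))
print({label: round(w, 3) for label, w in weights.items()})
```

The rarest class receives the largest weight, so misclassifying a difficult paragraph costs more during training. With scikit-learn, `train_test_split(..., stratify=y)` and the `class_weight` parameter of the estimators provide the same two steps.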

4.2. Classification Using Handcrafted Features

4.2.1. Baseline Models

Experiments

Results

4.2.2. Analysis of Feature Variations

Experiments

Results

Overall Performance and Gaze Data

4.3. Classification Using Simple Feature Vectorization Methods
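As described in Section 4.1, texts in this stage are represented with TF–IDF and then combined with handcrafted text or gaze features. The sketch below illustrates that idea in plain Python. It rests on assumptions that the source does not confirm: whitespace tokenization, the smoothed IDF formula ln((1 + n) / (1 + df)) + 1 (the default in scikit-learn's `TfidfVectorizer`), no length normalization, and simple column-wise concatenation. The function names, the placeholder tokens, and the two gaze values per paragraph are hypothetical.

```python
import math
from collections import Counter


def tfidf_matrix(docs):
    """Return (vocabulary, rows) of raw-count TF times smoothed IDF."""
    tokenized = [doc.split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    n_docs = len(docs)
    # Document frequency: number of documents containing each token.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    idf = {tok: math.log((1 + n_docs) / (1 + df[tok])) + 1 for tok in vocab}
    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        rows.append([counts[tok] * idf[tok] for tok in vocab])
    return vocab, rows


def combine(tfidf_rows, extra_features):
    """Append handcrafted (text or gaze) feature columns to each TF-IDF row."""
    return [row + list(extra) for row, extra in zip(tfidf_rows, extra_features)]


# Placeholder paragraphs and hypothetical gaze features
# (e.g., mean fixation duration in ms, regression count).
paragraphs = ["w1 w2 w2", "w1 w3"]
gaze = [(231.0, 4), (305.5, 9)]

vocab, rows = tfidf_matrix(paragraphs)
features = combine(rows, gaze)
print(vocab)
print(features)
```

Because gaze features and TF–IDF weights live on very different scales, a real pipeline would usually standardize the handcrafted columns before concatenation. That step is omitted here for brevity.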

4.3.1. Analysis of Feature Variations

Experiments

Results

Overall Performance and Gaze Data

5. DL-Based Experiments and Results

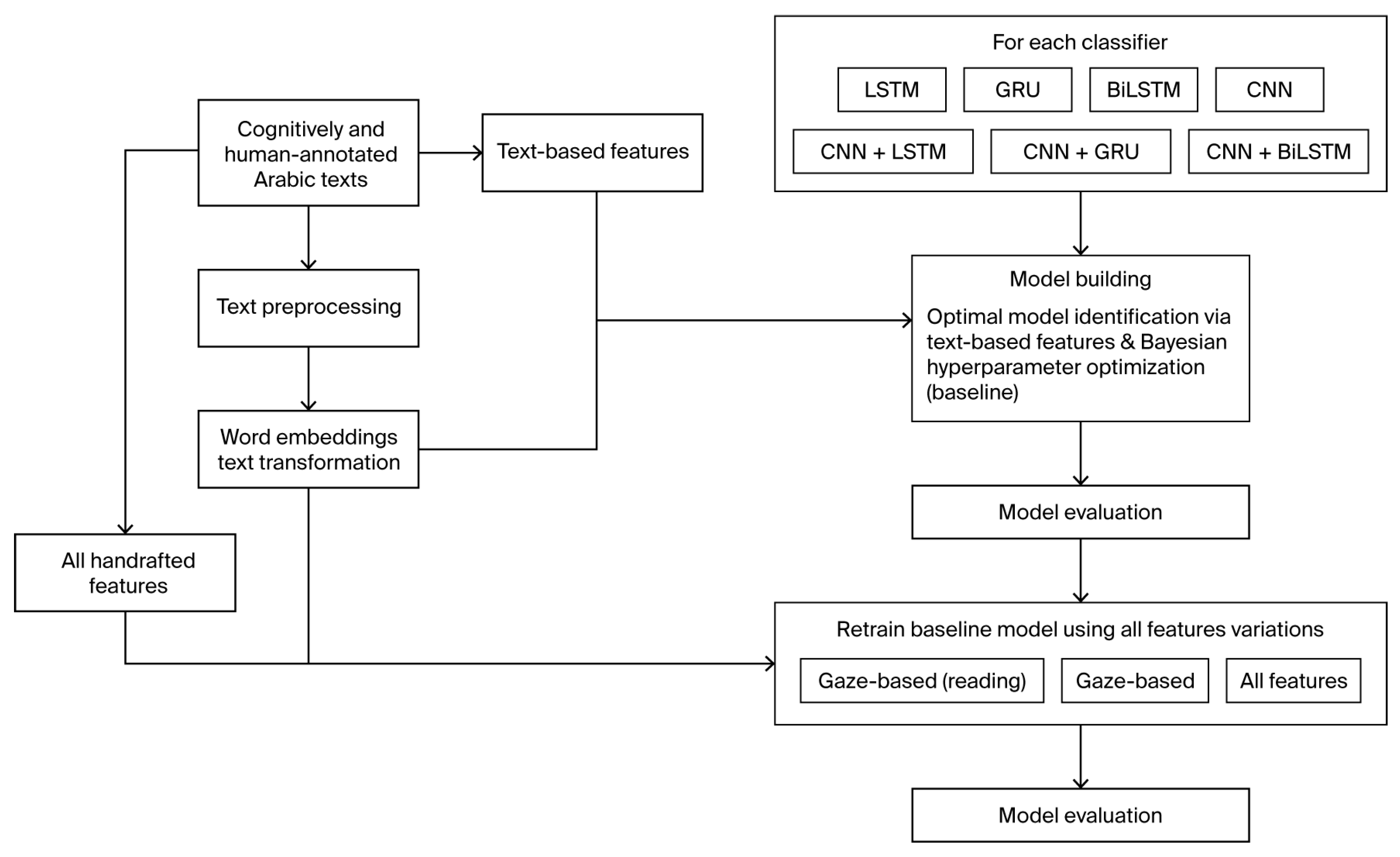

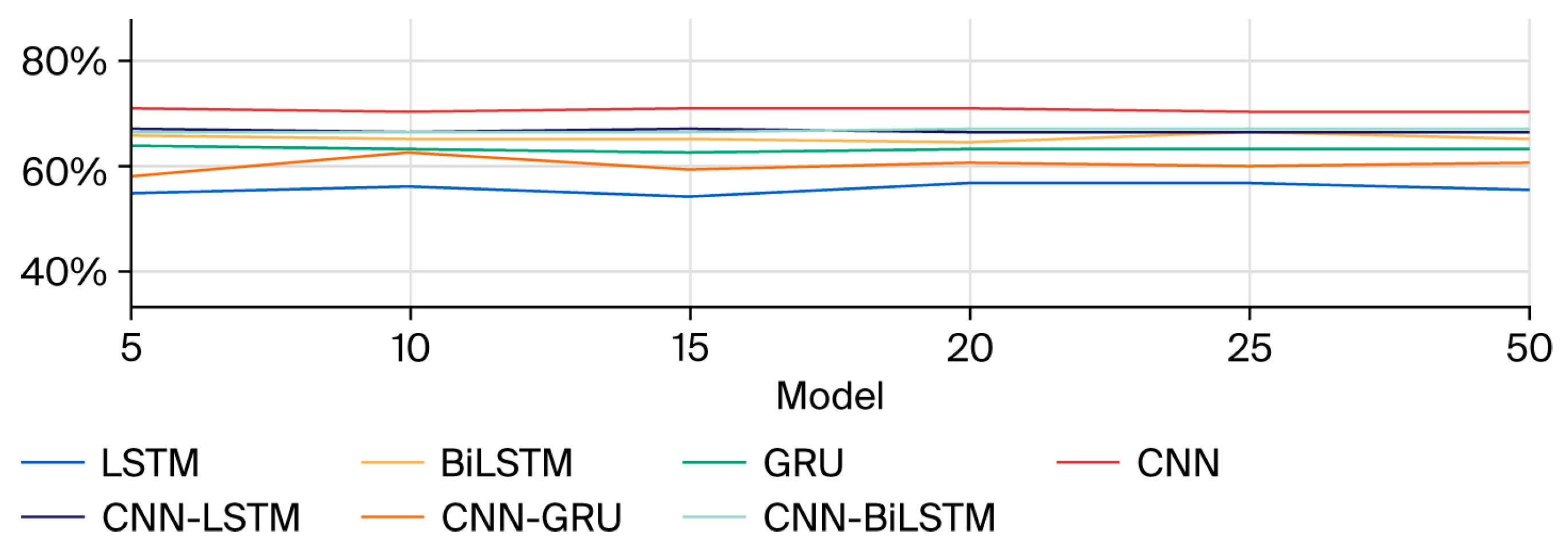

5.1. Model Building and Evaluation

- Classification with embeddings and feature variations (Section 5.2): Given the limited use of sequential models, such as RNNs, in Arabic readability research, seven DL models were selected based on prior studies [71,118,119,124,125,126,127,128], including long short-term memory (LSTM), bi-directional LSTM (BiLSTM), and gated recurrent units (GRUs). Additionally, convolutional neural networks (CNNs) were explored in order to leverage their effectiveness in cognitive studies and text classification tasks. Hybrid architectures (CNN-LSTM, CNN-GRU, and CNN-BiLSTM) were also explored, mirroring ensemble strategies to boost predictive accuracy. This stage evaluated the contribution of cognitive signals to DL models, covering preprocessing, embedding integration, hyperparameter tuning, and the analysis of feature variations.

5.2. Classification Using Word Embeddings and DL Models

5.2.1. Baseline Models

Experiments

Results

5.2.2. Analysis of Feature Variations

Experiments

Results

Overall Performance and Gaze Data

6. Discussion

6.1. Impact of Eye-Tracking Features on Arabic Text Readability Assessment

6.2. Impact of Eye-Tracking Experimental Conditions and Reading Features on Arabic Text Readability Prediction

6.3. Comparison of ML and DL Models in Text Readability Prediction

6.4. Comparison of Cognitive Measures of Arabic Text Readability with Other Readability Measures for Arabic and Other Languages

7. Conclusions, Limitations, and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Arabic Readability and Eye-Tracking-Based Studies

| Year | Study | Audience | Algorithm(s) | Level | Reported Results (%) |
|---|---|---|---|---|---|
| ML-based methods | | | | | |
| 2010 | [7] | L1 learners | SVM, NB, DT | 3 | Accuracy: 77.77 |
| 2013 | [79] | L2 learners | SVM, MIRA | 7 | MSE: 0.198 |
| 2014 | [82] | L2 learners | DT | 4 | Accuracy (k-means): 86.95 |
| | | | | 5 | Accuracy (balanced): 91.3 |
| | | | | 25 | Accuracy: 60.8 |
| | [80] | L2 learners | MIRA, SVM | 7 | MSE: 0.198 |
| | [81] | L2 learners | KNN | 3 | F-score: 71.9 |
| | | | | 5 | F-score: 51.9 |
| 2015 | [29] | L2 learners | ZeroR, OneR, DT, KNN, SVM, RF | 3 | Accuracy: 73.31, F-score: 73 |
| | | | | 5 | Accuracy: 59.76, F-score: 58.9 |
| 2018 | [8] | L2 learners | ZeroR, OneR, DT, KNN, SVM, RF | 3 | Accuracy: 90.43, F-score: 90.5 |
| | | | | 4 | Accuracy: 89.56, F-score: 89.5 |
| | | | | 5 | Accuracy: 89.56, F-score: 89.5 |
| | [91] | L1 learners, L2 learners | ZeroR, OneR, DT, KNN, SVM, RF | 4 | L1 Accuracy: 94.8; L2 Accuracy: 72.4 |
| 2020 | [85] | L1 learners | DT, KNN, SVM, RF | 3 | Accuracy: 78.84 |
| 2021 | [77] | L1 learners, L1 readers | MNB, BNB, SVM, RF (BoW, TF–IDF) | 4 | Accuracy: 87.14 |
| 2022 | [86] | L1 learners, L2 learners | ---- | 3 | Accuracy (class clustering): 70, 75, 88, 68 |
| | | | | 5 | Accuracy (SMOTE): 60, 63.04, 98.46, 67.21 |
| | [83] | L2 learners | DT, KNN, SVM, RF | 3 | Accuracy: 86.15 |
| | | | | 5 | Accuracy: 76.92 |
| DL-based methods | | | | | |
| 2017 | [56] | L1 learners, L1 readers | DT, NB, KNN, SVM, ANN | 3 | Accuracy: 91.41 |
| 2021 | [88] | L2 learners | SVM, RF, KNN, XGBoost (fastText, mBERT, XLM-R, Arabic-BERT) | 3 | F-score: 80 |
| 2023 | [57] | L2 learners | RF (AraVec, TF–IDF) | 3 | Accuracy: 80.63, F-score: 78.82 |
| | [89] | L2 learners | SVM, RF (ArabicBert, AraBert, XLM-R) | 3 | Accuracy: 82.67, F-score: 83.49 |
| 2024 | [1] | L1 learners | RF (AraVec, AraBert) | 3 | Accuracy: 77.5, F-score: 76.93 |
| 2024 | [90] | L2 learners | BERT-BiLSTM | 3 | Accuracy: 89.55, Precision: 89.65, Recall: 89.55, F1 Score: 89.52 |

| Year | Study | Audience | Algorithm(s) | Level | Reported Results (%) |
|---|---|---|---|---|---|
| 2012 | [5] | German L1 learners | SVM | 6 | Accuracy: 62.25 |
| 2013 | [133] | English L1 learners | FFNN | 10 | MSE: 0.38 |
| 2014 | [134] | English L1, L2 learners | FFNN | 2, 3 | Accuracy: 79–89 |
| 2015 | [25] | English L1 and L2 readers | DT, RF, FFNN | 3 | MSE: 0.22 |
| | [24] | English L1 users | RF | 3 | Accuracy: 81.25 |
| 2016 | [17] | English L2 learners | GAMMs | 2 | Variance: 0.78 |
| | [135] | English L2 learners | LR, SVM | 3 | Accuracy: 75.21 |
| 2017 | [26] | English L2 learners | FFNN, SVM, RF | 2 | Precision: 66 |
| | [3] | Japanese L2 learners | SVM | 5 | MAE: 0.33 |
| | [38] | English L1 readers | Multi-task MLP, LR | 2 | Accuracy: 86.54 |
| 2018 | [136] | English L2 learners, L1 readers | Single-task and multi-task MLP | 2 | Accuracy: 86.62 |
| | [40] * | English L1 readers | NB, LR, RF, FFNN | 4 | QWK: 55.2 |
| | [92] | English L1 readers | Linear regression | 2 | CC: 0.59 |
| 2020 | [101] | Brazilian Portuguese L1 readers | Single-task MLP, multi-task MLP, transfer learning | 2 | Accuracy: 97.5 |
| 2021 | [19] | English L1 readers | SVM, fine-tuned ALBERT | 7 | RMSE: 0.44 |
| | [94] * | English L2 learners | CNN-LSTM | 5 | QWK: 49.8 |
| 2022 | [141] * | English L1 learners | RF | 2 | AUPRC: 81 |
| 2023 | [96] * | English L1 learners | FFNN (BERT + RoBERTa) | 4 | F1 score: 69.9 |
| | [4] * | English L1 learners | RF, linear regression | 2 | CC: 0.32 |
| 2024 | [95] * | English L1, L2 readers | Multiple regression | 3 | Variance explained: 0.38 |
| | [153] * | English L1 learners | Logistic regression, CNN, BEyeLSTM, Eyettention, RoBERTa-QEye, MAG-QEye, PostFusion-QEye | 4 | Balanced accuracy: up to 30.9 |
| 2025 | [149] * | English L1 learners | Traditional formulas, modern readability measures, LLMs, commercial system psycholinguistic measures | 2 | Pearson r up to 0.40 |
| | [154] * | English L2 learners | SVM, RF | 3 | Accuracy: 52.1, F1: 52.1 |
| | [155] * | English L1 readers | RoBERTa | --- | Spearman correlation: ~0.91, MAE: ~4.57 |

Appendix B. Hyperparameter Optimization Results

| Model | Parameters and Values |
|---|---|
| KNN | algorithm: kd_tree, leaf_size: 21, metric: chebyshev, n_neighbors: 19, p: 2, weights: distance. |
| SVM | C: 0.1, class_weight: None, gamma: auto, kernel: linear. |
| LR | C: 10.1, class_weight: none, max_iter: 70,000, penalty: l2, solver: sag. |
| MNB | alpha: 50.0, fit_prior: false, force_alpha: true. |
| Bagging | base_estimator_class_weight: balanced, base_estimator_criterion: entropy, base_estimator_max_depth: none, base_estimator_max_features: log2, base_estimator_min_samples_leaf: 1, base_estimator_min_samples_split: 10, bootstrap: false, max_features: 0.9, max_samples: 0.8, n_estimators: 500. |
| RF | bootstrap: false, class_weight: none, criterion: gini, max_depth: 20, max_features: log2, min_samples_leaf: 1, min_samples_split: 3, n_estimators: 1500. |
| AdaBoost | algorithm: SAMME, base_estimator_class_weight: none, base_estimator_criterion: entropy, base_estimator_max_depth: 5, base_estimator_max_features: log2, base_estimator_min_samples_leaf: 1, base_estimator_min_samples_split: 10, base_estimator_splitter: random, learning_rate: 0.05, n_estimators: 1000. |
| XGBoost | booster: gbtree, colsample_bytree: 0.6, gamma: 1.0, learning_rate: 0.1, max_delta_step: 7, max_depth: 5, min_child_weight: 1, n_estimators: 50, reg_alpha: 1 × 10⁻⁵, reg_lambda: 0.1, subsample: 0.773478. |

Appendix C

| Model | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|
| KNN | 66 | 69 | 67 |
| SVM | 68 | 73 | 70 |
| LR | 73 | 73 | 71 |
| MNB | 68 | 64 | 65 |

| Model | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|
| Bagging | 72 | 70 | 71 |
| RF | 77 | 75 | 74 |
| AdaBoost | 72 | 71 | 71 |
| XGBoost | 69 | 70 | 70 |

| Model | Feature Variation | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| KNN | Text (Baseline) | 66 | 69 | 67 |
| | Gaze (Reading) | 66 | 69 | 68 |
| | Gaze | 67 | 70 | 68 |
| | All Features | 66 | 70 | 68 |
| SVM | Text (Baseline) | 68 | 73 | 70 |
| | Gaze (Reading) | 68 | 71 | 69 |
| | Gaze | 66 | 69 | 68 |
| | All Features | 74 | 75 | 74 |
| LR | Text (Baseline) | 73 | 73 | 71 |
| | Gaze (Reading) | 65 | 69 | 67 |
| | Gaze | 65 | 69 | 67 |
| | All Features | 75 | 75 | 75 |
| MNB | Text (Baseline) | 68 | 64 | 65 |
| | Gaze (Reading) | 73 | 65 | 68 |
| | Gaze | 68 | 65 | 66 |
| | All Features | 68 | 66 | 67 |

| Model | Feature Variation | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| Bagging | Text (Baseline) | 72 | 70 | 71 |
| | Gaze (Reading) | 77 | 76 | 76 |
| | Gaze | 78 | 77 | 77 |
| | All Features | 77 | 76 | 76 |
| RF | Text (Baseline) | 77 | 75 | 74 |
| | Gaze (Reading) | 72 | 72 | 72 |
| | Gaze | 74 | 74 | 74 |
| | All Features | 72 | 75 | 73 |
| AdaBoost | Text (Baseline) | 72 | 71 | 71 |
| | Gaze (Reading) | 72 | 71 | 71 |
| | Gaze | 70 | 70 | 70 |
| | All Features | 80 | 81 | 80 |
| XGBoost | Text (Baseline) | 69 | 70 | 70 |
| | Gaze (Reading) | 72 | 72 | 72 |
| | Gaze | 73 | 73 | 73 |
| | All Features | 76 | 76 | 76 |

Appendix D

| Model | Feature Variation | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| KNN | Text (Baseline) | 66 | 69 | 67 |
| | TF-IDF | 44 | 58 | 47 |
| | TF-IDF + Text | 65 | 69 | 67 |
| | TF-IDF + Gaze (Reading) | 66 | 69 | 68 |
| | TF-IDF + Gaze | 65 | 69 | 69 |
| | TF-IDF + All Features | 66 | 70 | 68 |
| SVM | Text (Baseline) | 68 | 73 | 70 |
| | TF-IDF | 37 | 61 | 46 |
| | TF-IDF + Text | 72 | 72 | 72 |
| | TF-IDF + Gaze (Reading) | 67 | 71 | 69 |
| | TF-IDF + Gaze | 66 | 69 | 67 |
| | TF-IDF + All Features | 73 | 74 | 73 |
| LR | Text (Baseline) | 73 | 73 | 71 |
| | TF-IDF | 68 | 69 | 65 |
| | TF-IDF + Text | 70 | 71 | 70 |
| | TF-IDF + Gaze (Reading) | 66 | 69 | 68 |
| | TF-IDF + Gaze | 64 | 68 | 66 |
| | TF-IDF + All Features | 71 | 72 | 71 |
| MNB | Text (Baseline) | 68 | 64 | 65 |
| | TF-IDF | 62 | 42 | 36 |
| | TF-IDF + Text | 38 | 61 | 47 |
| | TF-IDF + Gaze (Reading) | 37 | 61 | 46 |
| | TF-IDF + Gaze | 37 | 61 | 46 |
| | TF-IDF + All Features | 37 | 61 | 46 |

| Model | Feature Variation | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| Bagging | Text (Baseline) | 72 | 70 | 71 |
| | TF-IDF | 50 | 62 | 49 |
| | TF-IDF + Text | 55 | 63 | 50 |
| | TF-IDF + Gaze (Reading) | 62 | 64 | 54 |
| | TF-IDF + Gaze | 59 | 64 | 52 |
| | TF-IDF + All Features | 64 | 69 | 62 |
| RF | Text (Baseline) | 77 | 75 | 74 |
| | TF-IDF | 37 | 61 | 46 |
| | TF-IDF + Text | 37 | 61 | 46 |
| | TF-IDF + Gaze (Reading) | 38 | 61 | 47 |
| | TF-IDF + Gaze | 38 | 61 | 47 |
| | TF-IDF + All Features | 55 | 63 | 50 |
| AdaBoost | Text (Baseline) | 72 | 71 | 71 |
| | TF-IDF | 37 | 61 | 46 |
| | TF-IDF + Text | 60 | 65 | 57 |
| | TF-IDF + Gaze (Reading) | 64 | 68 | 61 |
| | TF-IDF + Gaze | 61 | 66 | 58 |
| | TF-IDF + All Features | 58 | 64 | 57 |
| XGBoost | Text (Baseline) | 69 | 70 | 70 |
| | TF-IDF | 64 | 69 | 65 |
| | TF-IDF + Text | 67 | 70 | 69 |
| | TF-IDF + Gaze (Reading) | 67 | 70 | 69 |
| | TF-IDF + Gaze | 66 | 69 | 67 |
| | TF-IDF + All Features | 68 | 71 | 69 |
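The precision, recall, and F1 values in these appendix tables are multiclass summaries, and this excerpt does not state which averaging scheme was used. The sketch below assumes support-weighted averaging (each class's score weighted by its share of the true labels), which is an assumption on our part. The function name `weighted_prf` and the toy labels are hypothetical. Under that assumption, a degenerate classifier that labels every paragraph as easy on a 61/34/5 class distribution scores about 37/61/46, which is numerically consistent with several TF-IDF rows above. This is an observation about the arithmetic, not a finding reported by the source.

```python
from collections import Counter


def weighted_prf(y_true, y_pred):
    """Support-weighted precision, recall, and F1 over all true classes."""
    support = Counter(y_true)
    n = len(y_true)
    precision = recall = f1 = 0.0
    for label in sorted(support):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        predicted = sum(p == label for p in y_pred)
        p_c = tp / predicted if predicted else 0.0  # undefined precision counted as 0
        r_c = tp / support[label]
        f_c = 2 * p_c * r_c / (p_c + r_c) if (p_c + r_c) else 0.0
        weight = support[label] / n
        precision += weight * p_c
        recall += weight * r_c
        f1 += weight * f_c
    return precision, recall, f1


# Toy data mirroring the reported class proportions (61% / 34% / 5%).
y_true = ["easy"] * 61 + ["medium"] * 34 + ["difficult"] * 5
y_majority = ["easy"] * 100  # always predict the majority class

p, r, f = weighted_prf(y_true, y_majority)
print(round(p * 100), round(r * 100), round(f * 100))
```

Weighted averaging also explains why a reported F1 can fall below both precision and recall: the per-class F1 scores are averaged, not derived from the averaged precision and recall. A macro-averaged F1, which weights all three classes equally, would expose weak performance on the difficult class more directly.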

Appendix E

- BiLSTM: This architecture utilizes a single BiLSTM layer, leveraging bidirectional processing to enhance context understanding [124]. A single-layer BiLSTM is preferred over deeper variants to mitigate overfitting, especially with smaller datasets.

- GRU: GRUs are employed here with two layers, optimizing information flow within the network through advanced gating mechanisms [125]. The model integrates an initial GRU layer with an embedding layer, followed by another GRU layer and a dropout layer.

- CNN: CNNs adapt well to text analysis by focusing on key n-gram features rather than processing every word individually [71]. The architecture includes multiple convolutional and max pooling layers, concluding with a dropout layer, to extract significant patterns from input texts.

- CNN-GRU: Similarly to CNN-LSTM, this model incorporates CNNs for initial feature extraction but uses GRU instead of LSTM for sequential data processing, enhancing long-term dependency handling [126].

Appendix F

| Parameter | LSTM | BiLSTM | GRU | CNN | CNN-LSTM | CNN-GRU | CNN-BiLSTM |
|---|---|---|---|---|---|---|---|
| Batch size | 32 | 16 | 16 | 16 | 16 | 32 | 16 |
| Epoch count | 96 | 29 | 35 | 100 | 95 | 55 | 37 |
| Optimizer | SGD | Nadam | RMSprop | Nadam | Nadam | SGD | Adam |
| Learning rate | 0.01 | 0.001 | 0.01 | 0.001 | 0.001 | 0.0001 | 0.0001 |
| Dense units | 512 | 320 | 352 | 128 | 256 | 448 | 224 |
| Dense dropout | 0.2 | 0.1 | 0.2 | 0.2 | 0.2 | 0.1 | 0 |

| Model | Parameters and Values |
|---|---|
| LSTM | lstm_units1: 384, lstm_units2: 128, lstm_dropout_rate: 0.1, class_weight: balanced |
| BiLSTM | lstm_units: 128, lstm_dropout_rate: 0.2, class_weight: balanced |
| GRU | gru_units1: 256, gru_units2: 288, gru_dropout_rate: 0, class_weight: none |
| CNN | conv_filters1: 96, kernel_size1: 3, pool_size1: 3, conv_filters2: 128, kernel_size2: 3, pool_size2: 3, cnn_dropout_rate: 0.2, class_weight: balanced |
| CNN-LSTM | conv_filters: 32, kernel_size: 3, pool_size: 3, lstm_units: 288, lstm_dropout_rate: 0.5, class_weight: balanced |
| CNN-GRU | conv_filters: 96, kernel_size: 7, pool_size: 3, gru_units: 96, gru_dropout_rate: 0, class_weight: balanced |
| CNN-BiLSTM | conv_filters: 128, kernel_size: 7, pool_size: 2, lstm_units: 384, lstm_dropout_rate: 0, class_weight: balanced |

Appendix G

| Model | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|
| LSTM | 52 | 58 | 52 |
| BiLSTM | 72 | 70 | 70 |
| GRU | 71 | 59 | 61 |
| CNN | 77 | 78 | 77 |

| Model | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|
| CNN-LSTM | 73 | 69 | 70 |
| CNN-GRU | 71 | 59 | 60 |
| CNN-BiLSTM | 70 | 70 | 70 |

| Model | Feature Variation | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| LSTM | Text (Baseline) | 52 | 58 | 52 |
| | Gaze (Reading) | 74 | 68 | 69 |
| | Gaze | 74 | 66 | 65 |
| | All Features | 71 | 69 | 69 |
| BiLSTM | Text (Baseline) | 72 | 70 | 70 |
| | Gaze (Reading) | 75 | 52 | 58 |
| | Gaze | 77 | 67 | 68 |
| | All Features | 73 | 72 | 72 |
| GRU | Text (Baseline) | 71 | 59 | 61 |
| | Gaze (Reading) | 74 | 58 | 59 |
| | Gaze | 66 | 69 | 67 |
| | All Features | 76 | 73 | 74 |
| CNN | Text (Baseline) | 77 | 78 | 77 |
| | Gaze (Reading) | 72 | 74 | 72 |
| | Gaze | 73 | 73 | 73 |
| | All Features | 71 | 72 | 71 |

| Model | Feature Variation | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| CNN-LSTM | Text (Baseline) | 73 | 69 | 70 |
| | Gaze (Reading) | 70 | 64 | 63 |
| | Gaze | 71 | 65 | 67 |
| | All Features | 73 | 73 | 72 |
| CNN-GRU | Text (Baseline) | 71 | 59 | 60 |
| | Gaze (Reading) | 69 | 66 | 67 |
| | Gaze | 76 | 69 | 71 |
| | All Features | 76 | 73 | 73 |
| CNN-BiLSTM | Text (Baseline) | 70 | 70 | 70 |
| | Gaze (Reading) | 78 | 71 | 71 |
| | Gaze | 67 | 69 | 68 |
| | All Features | 66 | 68 | 66 |

References

1. Berrichi, S.; Nassiri, N.; Mazroui, A.; Lakhouaja, A. Exploring the Impact of Deep Learning Techniques on Evaluating Arabic L1 Readability. In Artificial Intelligence, Data Science and Applications; Springer Nature: Cham, Switzerland, 2024; pp. 1–7.

2. McCarthy, K.S.; Yan, E.F. Reading Comprehension and Constructive Learning: Policy Considerations in the Age of Artificial Intelligence. Policy Insights Behav. Brain Sci. 2024, 11, 19–26.

3. Sanches, C.L.; Augereau, O.; Kise, K. Using the Eye Gaze to Predict Document Reading Subjective Understanding. In Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 8, pp. 28–31.

4. Southwell, R.; Mills, C.; Caruso, M.; D’Mello, S.K. Gaze-based predictive models of deep reading comprehension. User Model. User-Adapt. Interact. 2023, 33, 687–725.

5. Biedert, R.; Dengel, A.; Elshamy, M.; Buscher, G. Towards robust gaze-based objective quality measures for text. In Proceedings of the Symposium on Eye Tracking Research and Applications, Santa Barbara, CA, USA, 28–30 March 2012; pp. 201–204.

6. Balyan, R.; McCarthy, K.S.; McNamara, D.S. Comparing Machine Learning Classification Approaches for Predicting Expository Text Difficulty. In Proceedings of the Thirty-First International Flairs Conference, Melbourne, FL, USA, 21–23 May 2018; pp. 421–426.

7. Al-Khalifa, H.S.; Al-Ajlan, A.A. Automatic readability measurements of the Arabic text: An exploratory study. Arab. J. Sci. Eng. 2010, 35, 103–124.

8. Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Modern Standard Arabic Readability Prediction. In Proceedings of the Arabic Language Processing: From Theory to Practice (ICALP 2017), Fez, Morocco, 11–12 October 2017; pp. 120–133.

9. Feng, L.; Elhadad, N.M.; Huenerfauth, M. Cognitively motivated features for readability assessment. In Proceedings of the 12th Conference of the European Chapter of the Association for Computational Linguistics, Athens, Greece, 30 March–3 April 2009; pp. 229–237.

10. Dale, E.; Chall, J.S. The Concept of Readability. Elem. Engl. 1949, 26, 19–26.

11. Alotaibi, S.; Alyahya, M.; Al-Khalifa, H.; Alageel, S.; Abanmy, N. Readability of Arabic Medicine Information Leaflets: A Machine Learning Approach. Procedia Comput. Sci. 2016, 82, 122–126.

12. Al Tamimi, A.K.; Jaradat, M.; Al-Jarrah, N.; Ghanem, S. AARI: Automatic Arabic readability index. Int. Arab J. Inf. Technol. 2014, 11, 370–378.

13. Baazeem, I. Analysing the Effects of Latent Semantic Analysis Parameters on Plain Language Visualisation. Master’s Thesis, Queensland University, Brisbane, Australia, 2015.

14. Collins-Thompson, K. Computational assessment of text readability: A survey of current and future research. ITL Int. J. Appl. Linguist. 2014, 165, 97–135.

15. Mesgar, M.; Strube, M. Graph-based coherence modeling for assessing readability. In Proceedings of the Fourth Joint Conference on Lexical and Computational Semantics, Denver, CO, USA, 4–5 June 2015; pp. 309–318.

16. Balakrishna, S.V. Analyzing Text Complexity and Text Simplification: Connecting Linguistics, Processing and Educational Applications. Ph.D. Thesis, der Eberhard Karls Universität Tübingen, Tübingen, Germany, 2015.

17. Vajjala, S.; Meurers, D.; Eitel, A.; Scheiter, K. Towards grounding computational linguistic approaches to readability: Modeling reader-text interaction for easy and difficult texts. In Proceedings of the Workshop on Computational Linguistics for Linguistic Complexity (CL4LC), Osaka, Japan, 11 December 2016; pp. 38–48.

18. Vajjala, S.; Lucic, I. On understanding the relation between expert annotations of text readability and target reader comprehension. In Proceedings of the Fourteenth Workshop on Innovative Use of NLP for Building Educational Applications, Florence, Italy, 2 August 2019; pp. 349–359.

19. Sarti, G.; Brunato, D.; Dell’Orletta, F. That looks hard: Characterizing linguistic complexity in humans and language models. In Proceedings of the Workshop on Cognitive Modeling and Computational Linguistics, Virtual, 10 June 2021; pp. 48–60.

20. Ghosh, S.; Dhall, A.; Hayat, M.; Knibbe, J.; Ji, Q. Automatic Gaze Analysis: A Survey of Deep Learning Based Approaches. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 61–84.

21. Mathias, S.; Kanojia, D.; Mishra, A.; Bhattacharyya, P. A Survey on Using Gaze Behaviour for Natural Language Processing. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI-20) Survey Track, Yokohama, Japan, 7–15 January 2021; pp. 4907–4913.

22. Just, M.A.; Carpenter, P.A. A theory of reading: From eye fixations to comprehension. Psychol. Rev. 1980, 87, 329–354.

23. Atvars, A. Eye movement analyses for obtaining Readability Formula for Latvian texts for primary school. Procedia Comput. Sci. 2017, 104, 477–484.

24. Chen, Y.; Zhang, W.; Song, D.; Zhang, P.; Ren, Q.; Hou, Y. Inferring Document Readability by Integrating Text and Eye Movement Features. In Proceedings of the SIGIR2015 Workshop on Neuro-Physiological Methods in IR Research, Santiago, Chile, 2 December 2015.

25. Copeland, L.; Gedeon, T.; Caldwell, S. Effects of text difficulty and readers on predicting reading comprehension from eye movements. In Proceedings of the 6th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Gyor, Hungary, 19–21 October 2015; pp. 407–412.

26. Garain, U.; Pandit, O.; Augereau, O.; Okoso, A.; Kise, K. Identification of reader specific difficult words by analyzing eye gaze and document content. In Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 1346–1351.

27. Baazeem, I.; Al-Khalifa, H.; Al-Salman, A. Cognitively Driven Arabic Text Readability Assessment Using Eye-Tracking. Appl. Sci. 2021, 11, 8607.

28. Al-Ajlan, A.A.; Al-Khalifa, H.S.; Al-Salman, A.S. Towards the development of an automatic readability measurements for Arabic language. In Proceedings of the Third International Conference on Digital Information Management, London, UK, 13–16 November 2008; pp. 506–511.

29. Saddiki, H.; Bouzoubaa, K.; Cavalli-Sforza, V. Text readability for Arabic as a foreign language. In Proceedings of the 2015 IEEE/ACS 12th International Conference of Computer Systems and Applications (AICCSA), Marrakech, Morocco, 17–20 November 2015; pp. 1–8.

30. Klaib, A.F.; Alsrehin, N.O.; Melhem, W.Y.; Bashtawi, H.O.; Magableh, A.A. Eye tracking algorithms, techniques, tools, and applications with an emphasis on machine learning and Internet of Things technologies. Expert Syst. Appl. 2021, 166, 114037.

31. Singh, H.; Singh, J. Human eye tracking and related issues: A review. Int. J. Sci. Res. Publ. 2012, 2, 1–9.

32. Conklin, K.; Pellicer-Sánchez, A. Using eye-tracking in applied linguistics and second language research. Second Lang. Res. 2016, 32, 453–467.

33. Tobii. Available online: https://www.tobii.com (accessed on 20 November 2021).

34. SR Research EyeLink. Available online: https://www.sr-research.com (accessed on 31 March 2021).

35. Al-Edaily, A.; Al-Wabil, A.; Al-Ohali, Y. Interactive Screening for Learning Difficulties: Analyzing Visual Patterns of Reading Arabic Scripts with Eye Tracking. In Proceedings of the HCI International 2013—Posters’ Extended Abstracts, Las Vegas, NV, USA, 21–26 July 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 3–7.

36. Tobii Technology AB. What Is Eye Tracking? Available online: https://www.tobii.com/learn-and-support/get-started/what-is-eye-tracking (accessed on 16 December 2023).

37. Cop, U.; Dirix, N.; Drieghe, D.; Duyck, W. Presenting GECO: An eyetracking corpus of monolingual and bilingual sentence reading. Behav. Res. Methods 2017, 49, 602–615.

38. Gonzalez-Garduno, A.V.; Søgaard, A. Using gaze to predict text readability. In Proceedings of the 12th Workshop on Innovative Use of NLP for Building Educational Applications, Copenhagen, Denmark, 8 September 2017; pp. 438–443.

39. Grabar, N.; Farce, E.; Sparrow, L. Study of readability of health documents with eye-tracking approaches. In Proceedings of the 1st Workshop on Automatic Text Adaptation (ATA), Tilburg, The Netherlands, 8 November 2018.

40. Mathias, S.; Kanojia, D.; Patel, K.; Agarwal, S.; Mishra, A.; Bhattacharyya, P. Eyes are the windows to the soul: Predicting the rating of text quality using gaze behaviour. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 2352–2362.

41. Hermena, E.W.; Drieghe, D.; Hellmuth, S.; Liversedge, S.P. Processing of Arabic diacritical marks: Phonological–syntactic disambiguation of homographic verbs and visual crowding effects. J. Exp. Psychol. Hum. Percept. Perform. 2015, 41, 494–507.

42. Al-Samarraie, H.; Sarsam, S.M.; Alzahrani, A.I.; Alalwan, N. Reading text with and without diacritics alters brain activation: The case of Arabic. Curr. Psychol. 2020, 39, 1189–1198.

43. Nassiri, N.; Cavalli-Sforza, V.; Lakhouaja, A. Approaches, Methods, and Resources for Assessing the Readability of Arabic Texts. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2022, 22, 95.

44. Paterson, K.B.; Almabruk, A.A.A.; McGowan, V.A.; White, S.J.; Jordan, T.R. Effects of word length on eye movement control: The evidence from Arabic. Psychon. Bull. Rev. 2015, 22, 1443–1450.

45. Alrabiah, M.; Alsalman, A.; Atwell, E. The design and construction of the 50 million words KSUCCA King Saud University Corpus of Classical Arabic. In Proceedings of the WACL’2 Second Workshop on Arabic Corpus Linguistics, Lancaster, UK, 22 July 2013; pp. 5–8.

46. Alnefaie, R.; Azmi, A.M. Automatic minimal diacritization of Arabic texts. Procedia Comput. Sci. 2017, 117, 169–174.

47. El-Haj, M.; Kruschwitz, U.; Fox, C. Creating language resources for under-resourced languages: Methodologies, and experiments with Arabic. Lang. Resour. Eval. 2015, 49, 549–580.

48. Bouamor, H.; Zaghouani, W.; Diab, M.; Obeid, O.; Oflazer, K.; Ghoneim, M.; Hawwari, A. A pilot study on Arabic multi-genre corpus diacritization. In Proceedings of the Second Workshop on Arabic Natural Language Processing, Beijing, China, 30 July 2015; pp. 80–88.

49. Al-Edaily, A.; Al-Wabil, A.; Al-Ohali, Y. Dyslexia Explorer: A Screening System for Learning Difficulties in the Arabic Language Using Eye Tracking. In Proceedings of the Human Factors in Computing and Informatics, Maribor, Slovenia, 1–3 July 2013; pp. 831–834.

50. Al-Wabil, A.; Al-Sheaha, M. Towards an interactive screening program for developmental dyslexia: Eye movement analysis in reading Arabic texts. In Proceedings of the 12th International Conference on Computers Helping People with Special Needs, Vienna, Austria, 14–16 July 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 25–32.

51. AlJassmi, M.A.; Hermena, E.W.; Paterson, K.B. Eye movements in Arabic reading. In Studies in Arabic Linguistics; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2021; Volume 10, pp. 85–108.

52. Hermena, E.W.; Bouamama, S.; Liversedge, S.P.; Drieghe, D. Does diacritics-based lexical disambiguation modulate word frequency, length, and predictability effects? An eye-movements investigation of processing Arabic diacritics. PLoS ONE 2021, 16, e0259987.

53. Roman, G.; Pavard, B. A comparative study: How we read in Arabic and French. In Eye Movements from Physiology to Cognition; Elsevier: Amsterdam, The Netherlands, 1987; pp. 431–440.

54. Blanken, G.; Dorn, M.; Sinn, H. Inversion errors in Arabic number reading: Is there a nonsemantic route? Brain Cogn. 1997, 34, 404–423.

55. Naz, S.; Razzak, M.I.; Hayat, K.; Anwar, M.W.; Khan, S.Z. Challenges in baseline detection of Arabic script based languages. In Proceedings of the Intelligent Systems for Science and Information: Extended and Selected Results from the Science and Information Conference, London, UK, 7–9 October 2013; Springer: Cham, Switzerland, 2013; pp. 181–196.

56. Al Jarrah, E.Q. Using Language Features to Enhance Measuring the Readability of Arabic Text. Master’s Thesis, Yarmouk University, Irbid, Jordan, 2017.

- Berrichi, S.; Nassiri, N.; Mazroui, A.; Lakhouaja, A. Impact of Feature Vectorization Methods on Arabic Text Readability Assessment. In Artificial Intelligence and Smart Environment (ICAISE 2022); Springer International Publishing: Cham, Switzerland, 2023; Volume 635, pp. 504–510. [Google Scholar]

- Flesch, R. A new readability yardstick. J. Appl. Psychol. 1948, 32, 221–233. [Google Scholar] [CrossRef]

- Gunning, R. The Technique of Clear Writing, 2nd ed.; McGraw-Hill Book Company: New York, NY, USA, 1968. [Google Scholar]

- McLaughlin, G.H. SMOG Grading—A New Readability Formula. J. Read. 1969, 12, 639–646. [Google Scholar]

- Coleman, M.; Liau, T.L. A computer readability formula designed for machine scoring. J. Appl. Psychol. 1975, 60, 283–284. [Google Scholar] [CrossRef]

- Kincaid, J.P.; Fishburne, R.P., Jr.; Rogers, R.L.; Chissom, B.S. Derivation of New Readability Formulas (Automated Readability Index, Fog Count and Flesch Reading Ease Formula) for Navy Enlisted Personnel; Naval Technical Training Command Millington TN Research Branch: Millington, TN, USA, 1975. [Google Scholar]

- Chall, J.S.; Dale, E. Readability Revisited: The New Dale-Chall Readability Formula; Brookline Books: Cambridge, MA, USA, 1995. [Google Scholar]

- El-Haj, M.; Rayson, P. OSMAN―A Novel Arabic Readability Metric. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), Portorož, Slovenia, 23–28 May 2016; pp. 250–255. [Google Scholar]

- Cavalli-Sforza, V.; Saddiki, H.; Nassiri, N. Arabic Readability Research: Current State and Future Directions. Procedia Comput. Sci. 2018, 142, 38–49. [Google Scholar] [CrossRef]

- Dawood, B. The Relationship Between Readability and Selected Language Variables. Master’s Thesis, Baghdad University, Baghdad, Iraq, 1977. [Google Scholar]

- Al-Heeti, K.N. Judgment Analysis Technique Applied to Readability Prediction of Arabic Reading Material. Ph.D. Thesis, University of Northern Colorado, Greeley, CO, USA, 1985. [Google Scholar]

- Daud, N.M.; Hassan, H.; Aziz, N.A. A corpus-based readability formula for estimate of Arabic texts reading difficulty. World Appl. Sci. J. 2013, 21, 168–173. [Google Scholar] [CrossRef]

- Ghani, K.A.; Noh, A.S.; Yusoff, N.M.R.N.; Hussein, N.H. Developing Readability Computational Formula for Arabic Reading Materials Among Non-native Students in Malaysia. In The Importance of New Technologies and Entrepreneurship in Business Development: In the Context of Economic Diversity in Developing Countries: The Impact of New Technologies and Entrepreneurship on Business Development; Springer: Cham, Switzerland, 2021; Volume 194, pp. 2041–2057. [Google Scholar]

- Mesgar, M.; Strube, M. A neural local coherence model for text quality assessment. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 4328–4339. [Google Scholar]

- Vajjala, S.; Majumder, B.; Gupta, A.; Surana, H. Practical Natural Language Processing: A Comprehensive Guide to Building Real-World NLP Systems; O’Reilly Media Inc.: Sebastopol, CA, USA, 2020. [Google Scholar]

- Chen, X.; Meurers, D. Characterizing text difficulty with word frequencies. In Proceedings of the 11th Workshop on Innovative Use of NLP for Building Educational Applications, San Diego, CA, USA, 16 June 2016; pp. 84–94. [Google Scholar]

- Rello, L. DysWebxia: A Text Accessibility Model for People with Dyslexia. Ph.D. Thesis, Universitat Pompeu Fabra, Barcelona, Spain, 2014. [Google Scholar]

- Azpiazu, I.M.; Pera, M.S. Multiattentive Recurrent Neural Network Architecture for Multilingual Readability Assessment. Trans. Assoc. Comput. Linguist. 2019, 7, 421–436. [Google Scholar] [CrossRef]

- Martinc, M.; Pollak, S.; Robnik-Šikonja, M. Supervised and unsupervised neural approaches to text readability. Comput. Linguist. 2021, 47, 141–179. [Google Scholar] [CrossRef]

- Oliveira, A.M.d.; Germano, G.D.; Capellini, S.A. Comparison of Reading Performance in Students with Developmental Dyslexia by Sex. Paidéia 2017, 27, 306–313. [Google Scholar] [CrossRef]

- Bessou, S.; Chenni, G. Efficient measuring of readability to improve documents accessibility for Arabic language learners. J. Digit. Inf. Manag. 2021, 19, 75–82. [Google Scholar] [CrossRef]

- Marie-Sainte, S.L.; Alalyani, N.; Alotaibi, S.; Ghouzali, S.; Abunadi, I. Arabic Natural Language Processing and Machine Learning-Based Systems. IEEE Access 2018, 7, 7011–7020. [Google Scholar] [CrossRef]

- Shen, W.; Williams, J.; Marius, T.; Salesky, E. A language-independent approach to automatic text difficulty assessment for second-language learners. In Proceedings of the 2nd Workshop on Predicting and Improving Text Readability for Target Reader Populations, Sofia, Bulgaria, 8 August 2013; pp. 30–38. [Google Scholar]

- Salesky, E.; Shen, W. Exploiting Morphological, Grammatical, and Semantic Correlates for Improved Text Difficulty Assessment. In Proceedings of the Ninth Workshop on Innovative Use of NLP for Building Educational Applications, Baltimore, MD, USA, 16 June 2014; pp. 155–162. [Google Scholar]

- Forsyth, J.N. Automatic Readability Detection for Modern Standard Arabic. Master’s Thesis, Brigham Young University, Provo, UT, USA, 2014. [Google Scholar]

- Cavalli-Sforza, V.; El Mezouar, M.; Saddiki, H. Matching an Arabic text to a learners’ curriculum. In Proceedings of the 2014 Fifth International Conference on Arabic Language Processing (CITALA 2014), Oujda, Morocco, 26–27 November 2014; pp. 79–88. [Google Scholar]

- Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Arabic L2 readability assessment: Dimensionality reduction study. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 3789–3799. [Google Scholar] [CrossRef]

- Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Arabic Readability Assessment for Foreign Language Learners; Springer International Publishing: Cham, Switzerland, 2018; Volume 10859, pp. 480–488. [Google Scholar]

- Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Combining Classical and Non-classical Features to Improve Readability Measures for Arabic First Language Texts. In International Conference on Advanced Intelligent Systems for Sustainable Development; Springer: Cham, Switzerland, 2020; pp. 463–470. [Google Scholar]

- Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Evaluating the Impact of Oversampling on Arabic L1 and L2 Readability Prediction Performances. In Networking, Intelligent Systems and Security; Springer International Publishing: Singapore, 2022; Volume 237, pp. 763–774. [Google Scholar]

- Al Aqeel, S.; Abanmy, N.; Aldayel, A.; Al-Khalifa, H.; Al-Yahya, M.; Diab, M. Readability of written medicine information materials in Arabic language: Expert and consumer evaluation. BMC Health Serv. Res. 2018, 18, 139. [Google Scholar] [CrossRef]

- Khallaf, N.; Sharoff, S. Automatic Difficulty Classification of Arabic Sentences. In Proceedings of the Sixth Arabic Natural Language Processing Workshop (WANLP), Virtual, Kyiv, Ukraine, 19 April 2021; pp. 105–114. [Google Scholar]

- Berrichi, S.; Nassiri, N.; Mazroui, A.; Lakhouaja, A. Interpreting the Relevance of Readability Prediction Features. Jordanian J. Comput. Inf. Technol. 2023, 9, 36–52. [Google Scholar] [CrossRef]

- Ouassil, M.A.; Jebbari, M.; Rachidi, R.; Errami, M.; Cherradi, B.; Raihani, A. Enhancing Arabic Text Readability Assessment: A Combined BERT and BiLSTM Approach. In Proceedings of the 2024 International Conference on Circuit, Systems and Communication (ICCSC), Fes, Morocco, 28–29 June 2024; pp. 1–7. [Google Scholar]

- Saddiki, H.; Habash, N.; Cavalli-Sforza, V.; Al Khalil, M. Feature optimization for predicting readability of Arabic L1 and L2. In Proceedings of the 5th Workshop on Natural Language Processing Techniques for Educational Applications, Melbourne, Australia, 19 July 2018; pp. 20–29. [Google Scholar]

- Mishra, A.; Bhattacharyya, P. Scanpath Complexity: Modeling Reading Effort Using Gaze Information. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 77–98. [Google Scholar]

- Mathias, S.; Murthy, R.; Kanojia, D.; Mishra, A.; Bhattacharyya, P. Happy are those who grade without seeing: A multi-task learning approach to grade essays using gaze behaviour. In Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing, Suzhou, China, 4–7 December 2020; pp. 858–872. [Google Scholar]

- Mathias, S.; Murthy, R.; Kanojia, D.; Bhattacharyya, P. Cognitively aided zero-shot automatic essay grading. In Proceedings of the 17th International Conference on Natural Language Processing (ICON), Patna, India, 18–21 December 2021; pp. 175–180. [Google Scholar]

- Mézière, D.C.; Yu, L.; McArthur, G.; Reichle, E.D.; von der Malsburg, T. Scanpath regularity as an index of reading comprehension. Sci. Stud. Read. 2024, 28, 79–100. [Google Scholar] [CrossRef]

- Nicula, B.; Panaite, M.; Arner, T.; Balyan, R.; Dascalu, M.; McNamara, D.S. Automated Assessment of Comprehension Strategies from Self-explanations Using Transformers and Multi-task Learning. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners, Doctoral Consortium and Blue Sky; Springer Nature: Cham, Switzerland, 2023; pp. 695–700. [Google Scholar]

- Hollenstein, N. Leveraging Cognitive Processing Signals for Natural Language Understanding; ETH Zurich: Zürich, Switzerland, 2021. [Google Scholar]

- Hollenstein, N.; Barrett, M.; Troendle, M.; Bigiolli, F.; Langer, N.; Zhang, C. Advancing NLP with cognitive language processing signals. arXiv 2019, arXiv:1904.02682. [Google Scholar] [CrossRef]

- Sood, E.; Tannert, S.; Müller, P.; Bulling, A. Improving natural language processing tasks with human gaze-guided neural attention. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Online, 6–12 December 2020; Volume 33, pp. 6327–6341. [Google Scholar]

- Barrett, M. Improving Natural Language Processing with Human Data: Eye Tracking and Other Data Sources Reflecting Cognitive Text Processing. Ph.D. Thesis, University of Copenhagen, Copenhagen, Denmark, 2018. [Google Scholar]

- Leal, S.E.; Vieira, J.M.M.; dos Santos Rodrigues, E.; Teixeira, E.N.; Aluísio, S. Using eye-tracking data to predict the readability of Brazilian Portuguese sentences in single-task, multi-task and sequential transfer learning approaches. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 5821–5831. [Google Scholar]

- Clifton, C., Jr.; Staub, A. Syntactic influences on eye movements during reading. In The Oxford Handbook of Eye Movements; No. 2; Oxford University Press: Oxford, UK, 2011; Volume 3, pp. 895–910. [Google Scholar]

- Liversedge, S.P.; Paterson, K.B.; Pickering, M.J. Chapter 3—Eye Movements and Measures of Reading Time. In Eye Guidance in Reading and Scene Perception; Underwood, G., Ed.; Elsevier Science Ltd.: Amsterdam, The Netherlands, 1998; pp. 55–75. [Google Scholar] [CrossRef]

- Rayner, K. Eye movements in reading and information processing: 20 years of research. Psychol. Bull. 1998, 124, 372–422. [Google Scholar] [CrossRef]

- Wiechmann, D.; Qiao, Y.; Kerz, E.; Mattern, J. Measuring the impact of (psycho-) linguistic and readability features and their spill over effects on the prediction of eye movement patterns. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; Volume 1, pp. 5276–5290. [Google Scholar]

- Ibañez, M.; Reyes, L.L.A.; Sapinit, R.; Hussien, M.A.; Imperial, J.M. On Applicability of Neural Language Models for Readability Assessment in Filipino. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners’ and Doctoral Consortium, Proceedings of the 23rd International Conference, AIED 2022, Durham, UK, 27–31 July 2022; Proceedings, Part II; Springer: Cham, Switzerland, 2022; pp. 573–576. [Google Scholar]

- Howcroft, D.M.; Demberg, V. Psycholinguistic models of sentence processing improve sentence readability ranking. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics, Valencia, Spain, 3–7 April 2017; Volume 1, pp. 958–968. [Google Scholar]

- Yancey, K.; Pintard, A.; Francois, T. Investigating readability of French as a foreign language with deep learning and cognitive and pedagogical features. Lingue Linguaggio 2021, 20, 229–258. [Google Scholar] [CrossRef]

- Olukoga, T.A.; Feng, Y. A Case Study on the Classification of Lost Circulation Events During Drilling using Machine Learning Techniques on an Imbalanced Large Dataset. arXiv 2022, arXiv:2209.01607. [Google Scholar] [CrossRef]

- Baazeem, I.; Al-Khalifa, H.; Al-Salman, A. AraEyebility: Eye-Tracking Data for Arabic Text Readability. Computation 2025, 13, 108. [Google Scholar] [CrossRef]

- Scikit-Learn. Scikit-Learn Machine Learning in Python. Available online: https://scikit-learn.org/stable/ (accessed on 5 October 2023).

- Li, D.; Kanoulas, E. Bayesian Optimization for Optimizing Retrieval Systems. In Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, Marina Del Rey, CA, USA, 5–9 February 2018; pp. 360–368. [Google Scholar] [CrossRef]

- Bzdok, D.; Krzywinski, M.; Altman, N. Machine learning: Supervised methods. Nat. Methods 2018, 15, 5–6. [Google Scholar] [CrossRef]

- Aldayel, A.; Al-Khalifa, H.; Alaqeel, S.; Abanmy, N.; Al-Yahya, M.; Diab, M. ARC-WMI: Towards Building Arabic Readability Corpus for Written Medicine Information. In Proceedings of the 3rd Workshop on Open-Source Arabic Corpora and Processing Tools, Miyazaki, Japan, 8 May 2018; p. 14. [Google Scholar]

- Abellán, J.; Mantas, C.J.; Castellano, J.G.; Moral-García, S. Increasing diversity in random forest learning algorithm via imprecise probabilities. Expert Syst. Appl. 2018, 97, 228–243. [Google Scholar] [CrossRef]

- Brownlee, J. Repeated k-Fold Cross-Validation for Model Evaluation in Python. Guiding Tech Media. Available online: https://machinelearningmastery.com/repeated-k-fold-cross-validation-with-python/ (accessed on 21 December 2023).

- Bengfort, B.; Bilbro, R.; Ojeda, T. Applied Text Analysis with Python: Enabling Language-Aware Data Products with Machine Learning; O’Reilly Media Inc.: Sebastopol, CA, USA, 2018. [Google Scholar]

- Priyadarshini, I.; Cotton, C. A novel LSTM–CNN–grid search-based deep neural network for sentiment analysis. J. Supercomput. 2021, 77, 13911–13932. [Google Scholar] [CrossRef] [PubMed]

- Hedhli, M.; Kboubi, F. CNN-BiLSTM Model for Arabic Dialect Identification. In Advances in Computational Collective Intelligence; Nguyen, N.T., Botzheim, J., Gulyás, L., Nunez, M., Treur, J., Vossen, G., Kozierkiewicz, A., Eds.; Springer: Cham, Switzerland, 2023; pp. 213–225. [Google Scholar]

- Rahman, S.S.M.M.; Biplob, K.B.M.B.; Rahman, M.H.; Sarker, K.; Islam, T. An Investigation and Evaluation of N-Gram, TF-IDF and Ensemble Methods in Sentiment Classification. In Cyber Security and Computer Science (ICONCS); Springer: Cham, Switzerland, 2020; Volume 325, pp. 391–402. [Google Scholar]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef]

- Chen, J.; Yuan, P.; Zhou, X.; Tang, X. Performance Comparison of TF*IDF, LDA and Paragraph Vector for Document Classification. In Knowledge and Systems Sciences; Chen, J., Nakamori, Y., Yue, W., Tang, X., Eds.; Springer: Singapore, 2016; pp. 225–235. [Google Scholar]

- Mesgar, M.; Strube, M. Lexical coherence graph modeling using word embeddings. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1414–1423. [Google Scholar]

- Sabbeh, S.F.; Fasihuddin, H.A. A Comparative Analysis of Word Embedding and Deep Learning for Arabic Sentiment Classification. Electronics 2023, 12, 1425. [Google Scholar] [CrossRef]

- Ahmed, Z.A.T.; Albalawi, E.; Aldhyani, T.H.H.; Jadhav, M.E.; Janrao, P.; Obeidat, M.R.M. Applying Eye Tracking with Deep Learning Techniques for Early-Stage Detection of Autism Spectrum Disorders. Data 2023, 8, 168. [Google Scholar] [CrossRef]

- Sarika, P.K. Comparing LSTM and GRU for Multiclass Sentiment Analysis of Movie Reviews. Bachelor’s Thesis, Blekinge Institute of Technology, Karlskrona, Sweden, 2020. [Google Scholar]

- Wilcox, E.; Gauthier, J.; Hu, J.; Qian, P.; Levy, R. On the Predictive Power of Neural Language Models for Human Real-Time Comprehension Behavior. In Proceedings of the 42nd Annual Meeting of the Cognitive Science Society, Virtual, 29 July–1 August 2020; pp. 1707–1713. [Google Scholar]

- Aurnhammer, C.; Frank, S.L. Comparing gated and simple recurrent neural network architectures as models of human sentence processing. In Proceedings of the 41st Annual Conference of the Cognitive Science Society (CogSci 2019), Montreal, QC, Canada, 24–27 July 2019; pp. 112–118. [Google Scholar]

- Facebook Inc. fastText Library for Efficient Text Classification and Representation Learning. Available online: https://fasttext.cc (accessed on 22 September 2023).

- Setyanto, A.; Laksito, A.; Alarfaj, F.; Alreshoodi, M.; Kusrini; Oyong, I.; Hayaty, M.; Alomair, A.; Almusallam, N.; Kurniasari, L. Arabic Language Opinion Mining Based on Long Short-Term Memory (LSTM). Appl. Sci. 2022, 12, 4140. [Google Scholar] [CrossRef]

- Uluslu, A.Y.; Schneider, G. Exploring Linguistic Features for Turkish Text Readability. In Proceedings of the 6th International Conference on Natural Language and Speech Processing (ICNLSP-2023), Virtual, 16–17 December 2023; pp. 223–232. [Google Scholar]

- Keras. KerasTuner. Available online: https://keras.io/keras_tuner/ (accessed on 16 January 2023).

- Copeland, L.; Gedeon, T. Measuring Reading Comprehension Using Eye Movements. In Proceedings of the IEEE 4th International Conference on Cognitive Infocommunications (CogInfoCom), Budapest, Hungary, 2–5 December 2013; pp. 791–796. [Google Scholar]

- Copeland, L.; Gedeon, T.; Mendis, B.S.U. Predicting reading comprehension scores from eye movements using artificial neural networks and fuzzy output error. Artif. Intell. Res. 2014, 3, 35–48. [Google Scholar] [CrossRef]

- Singh, A.D.; Mehta, P.; Husain, S.; Rajkumar, R. Quantifying sentence complexity based on eye-tracking measures. In Proceedings of the Workshop on Computational Linguistics for Linguistic Complexity (CL4LC), Osaka, Japan, 11 December 2016; pp. 202–212. [Google Scholar]

- Gonzalez-Garduno, A.; Søgaard, A. Learning to predict readability using eye-movement data from natives and learners. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32, pp. 5118–5124. [Google Scholar]

- Mézière, D.C.; Yu, L.; Reichle, E.D.; Von Der Malsburg, T.; McArthur, G. Using Eye-Tracking Measures to Predict Reading Comprehension. Read. Res. Q. 2023, 58, 425–449. [Google Scholar] [CrossRef]

- Makowski, S.; Jäger, L.A.; Abdelwahab, A.; Landwehr, N.; Scheffer, T. A discriminative model for identifying readers and assessing text comprehension from eye movements. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Dublin, Ireland, 10–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 209–225. [Google Scholar]

- Liu, F.; Lee, J.S. Hybrid models for sentence readability assessment. In Proceedings of the 18th Workshop on Innovative Use of NLP for Building Educational Applications, Toronto, ON, Canada, 13 July 2023; pp. 448–454. [Google Scholar]

- Srivastava, H. Zero Shot Crosslingual Eye-Tracking Data Prediction using Multilingual Transformer Models. In Proceedings of the Workshop on Cognitive Modeling and Computational Linguistics, Dublin, Ireland, 26 May 2022; pp. 102–107. [Google Scholar]

- Caruso, M.; Peacock, C.E.; Southwell, R.; Zhou, G.; D’Mello, S.K. Going Deep and Far: Gaze-Based Models Predict Multiple Depths of Comprehension during and One Week Following Reading. In Proceedings of the 15th International Conference on Educational Data Mining, International Educational Data Mining Society, Durham, UK, 24–27 July 2022; pp. 145–157. [Google Scholar]

- Kennedy, A.; Hill, R.; Pynte, J.E. The Dundee Corpus. In Proceedings of the 12th European Conference on Eye Movements, Dundee, UK, 20–24 August 2003. [Google Scholar]

- Fosch-Villaronga, E.; Poulsen, A.; Søraa, R.A.; Custers, B.H.M. A little bird told me your gender: Gender inferences in social media. Inf. Process. Manag. 2021, 58, 102541. [Google Scholar] [CrossRef]

- Southwell, R.; Gregg, J.; Bixler, R.; D’Mello, S.K. What eye movements reveal about later comprehension of long connected texts. Cogn. Sci. 2020, 44, e12905. [Google Scholar] [CrossRef]

- Goodkind, A.; Bicknell, K. Predictive power of word surprisal for reading times is a linear function of language model quality. In Proceedings of the 8th Workshop on Cognitive Modeling and Computational Linguistics (CMCL 2018), Salt Lake City, UT, USA, 7 January 2018; pp. 10–18. [Google Scholar]

- Hadar, C.A.; Shubi, O.; Meiri, Y.; Berzak, Y. Decoding Open-Ended Information Seeking Goals from Eye Movements in Reading. arXiv 2025, arXiv:2505.02872. [Google Scholar] [CrossRef]

- Chiang, C.-H.; Lee, H.-Y. Can Large Language Models Be an Alternative to Human Evaluations? In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 15607–15631. [Google Scholar]

- Moons, P.; Van Bulck, L. Using ChatGPT and Google Bard to improve the readability of written patient information: A proof of concept. Eur. J. Cardiovasc. Nurs. 2024, 23, 122–126. [Google Scholar] [CrossRef]

- Klein, K.G.; Frenkel, S.; Shubi, O.; Berzak, Y. Eye Tracking Based Cognitive Evaluation of Automatic Readability Assessment Measures. arXiv 2025, arXiv:2502.11150. [Google Scholar]

- Azmi, A.M.; Alnefaie, R.M.; Aboalsamh, H.A. Light Diacritic Restoration to Disambiguate Homographs in Modern Arabic Texts. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2021, 21, 60. [Google Scholar] [CrossRef]

- Azmi, A.M.; Alsaiari, A. A calligraphic based scheme to justify Arabic text improving readability and comprehension. Comput. Hum. Behav. 2014, 39, 177–186. [Google Scholar] [CrossRef]

- Bolliger, L.S.; Reich, D.R.; Jäger, L.A. ScanDL 2.0: A Generative Model of Eye Movements in Reading Synthesizing Scanpaths and Fixation Durations. Proc. ACM Hum.-Comput. Interact. 2025, 9, ETRA05. [Google Scholar] [CrossRef]

- Shubi, O.; Meiri, Y.; Hadar, C.A.; Berzak, Y. Fine-grained prediction of reading comprehension from eye movements. arXiv 2024, arXiv:2410.04484. [Google Scholar] [CrossRef]

- Melo, J.; Fernandez, L.; Ishimaru, S. Automatic Classification of Difficulty of Texts From Eye Gaze and Physiological Measures of L2 English Speakers. IEEE Access 2025, 13, 24555–24575. [Google Scholar] [CrossRef]

- Dini, L.; Domenichelli, L.; Brunato, D.; Dell’Orletta, F. From Human Reading to NLM Understanding: Evaluating the Role of Eye-Tracking Data in Encoder-Based Models. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vienna, Austria, 27 July–1 August 2025; pp. 17796–17813. [Google Scholar] [CrossRef]

| Feature Variation | Count | Description |
|---|---|---|
| Text-based | 69 | Features extracted to represent the selected texts and their linguistic complexity. |
| Gaze-based | 29 | Features extracted during the eye-tracking experiment, based on widely used metrics that reflect cognitive processing and comprehension, such as reading duration. These include both reading metrics and experimental-condition metrics. |
| Gaze-based (Reading) | 23 | Features extracted during the eye-tracking experiment using widely used metrics from the literature, restricted to eye-tracking reading metrics. Experimental-condition metrics are deliberately excluded to isolate the independent impact of the reading metrics. |
| All Features | 98 | A combination of the gaze-based and text-based features. |

| Feature Variation | F1 Score (%) | Model |
|---|---|---|
| Text | 74 | RF |
| Gaze (Reading) | 76 | Bagging |
| Gaze | 77 | Bagging |
| All Features | 80 | AdaBoost |
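The F1 scores reported in these tables can be illustrated with a minimal sketch. The averaging scheme is not stated in this part of the paper, so macro-averaging is an assumption here, and the class labels (`easy`, `medium`, `hard`) are hypothetical placeholders rather than the study's actual readability levels.

```python
from collections import Counter


def f1_per_class(y_true, y_pred):
    """Return {label: F1} computed from parallel lists of true and predicted labels."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1          # correct prediction for class t
        else:
            fp[p] += 1          # p was predicted but is wrong
            fn[t] += 1          # t was the truth but was missed
    scores = {}
    for label in labels:
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the denominator is 0
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores (assumed averaging scheme)."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


# Hypothetical labels, for illustration only.
y_true = ["easy", "easy", "medium", "hard"]
y_pred = ["easy", "medium", "medium", "hard"]
print(round(100 * macro_f1(y_true, y_pred), 1))
```

If the reported scores were instead weighted by class frequency, the final averaging step would weight each class by its support rather than equally.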

| Model | Feature Variation | F1 Score (%) |
|---|---|---|
| KNN | Gaze (Reading), Gaze, All Features | 68 |
| SVM | All Features | 74 |
| LR | All Features | 75 |
| MNB | Gaze (Reading) | 68 |
| Bagging | Gaze | 77 |
| RF | Text, Gaze | 74 |
| AdaBoost | All Features | 80 |
| XGBoost | All Features | 76 |

| Feature Variation | Description |
|---|---|
| TF-IDF | Features were extracted to represent the texts using TF-IDF vectorization. |
| TF-IDF + Text | The concatenation of the vectors obtained from TF-IDF and the text-based handcrafted features. |
| TF-IDF + Gaze (Reading) | The concatenation of the vectors obtained from TF-IDF and eye-tracking reading metrics, referred to as “gaze-based (reading)” handcrafted features. |
| TF-IDF + Gaze | The concatenation of the vectors obtained from TF-IDF and the gaze-based handcrafted features. |
| TF-IDF + All Features | The concatenation of the vectors obtained from the TF-IDF vectorization and all text-based and gaze-based handcrafted features. |
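The concatenation described in this table can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' pipeline: the smoothed IDF formula, the toy tokens, and the helper names `tfidf_vectors` and `concatenate` are all hypothetical, and the feature values are made up.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return (vocabulary, rows): one TF-IDF row per non-empty tokenized document."""
    vocab = sorted({tok for doc in docs for tok in doc})
    n_docs = len(docs)
    # Document frequency: number of documents containing each token
    df = Counter(tok for doc in docs for tok in set(doc))
    # Smoothed IDF (an assumption): log((1 + N) / (1 + df)) + 1
    idf = {tok: math.log((1 + n_docs) / (1 + df[tok])) + 1 for tok in vocab}
    rows = []
    for doc in docs:
        counts = Counter(doc)
        rows.append([counts[tok] / len(doc) * idf[tok] for tok in vocab])
    return vocab, rows


def concatenate(tfidf_rows, *feature_blocks):
    """Append each handcrafted feature block to the TF-IDF row of the same paragraph."""
    combined = []
    for i, row in enumerate(tfidf_rows):
        extra = [value for block in feature_blocks for value in block[i]]
        combined.append(list(row) + extra)
    return combined


# Toy data: two tokenized paragraphs plus made-up handcrafted features.
docs = [["a", "b", "a"], ["b", "c"]]
text_feats = [[3.0, 1.5], [2.0, 2.0]]   # hypothetical text-based features
gaze_feats = [[250.0], [310.0]]         # hypothetical gaze-based features

vocab, rows = tfidf_vectors(docs)
tfidf_plus_text = concatenate(rows, text_feats)
tfidf_plus_all = concatenate(rows, text_feats, gaze_feats)
print(len(vocab), len(tfidf_plus_text[0]), len(tfidf_plus_all[0]))
```

Each variation in the table corresponds to a different choice of blocks passed to `concatenate`. In practice the handcrafted features would likely need scaling before being joined to TF-IDF weights, which this sketch omits.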

| Feature Variation | F1 Score (%) | Model |
|---|---|---|
| TF-IDF + Text | 72 | SVM |
| TF-IDF + Gaze (Reading) | 69 | SVM, XGBoost |
| TF-IDF + Gaze | 69 | KNN |
| TF-IDF + All Features | 73 | SVM |

| Model | Feature Variation | F1 Score (%) |
|---|---|---|
| KNN | TF-IDF + Gaze | 69 |
| SVM | TF-IDF + All Features | 73 |
| LR | TF-IDF + All Features | 71 |
| MNB | TF-IDF + Text | 47 |
| Bagging | TF-IDF + All Features | 62 |
| RF | TF-IDF + All Features | 50 |
| AdaBoost | TF-IDF + Gaze (Reading) | 61 |
| XGBoost | TF-IDF + Text, TF-IDF + Gaze (Reading), TF-IDF + All Features | 69 |

| Hyperparameter | Options |
|---|---|
| Batch size | 16, 32, 64, 128 |
| Epoch count | 50, 100, 200 |
| Optimizer | Adam, Nadam, RMSprop, SGD |
| Learning rate | 0.1, 0.01, 0.001, 0.0001 |
| Dense units | Minimum = 32, maximum = 512, step = 32 |
| Dense dropout | 0.0, 0.1, 0.2, 0.5 |
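To give a sense of the size of this search space, the options in the table can be enumerated as follows. This is a sketch only: the dictionary keys and the `iter_configs` helper are hypothetical names, and treating the options as an exhaustive grid is an assumption. The reference list cites KerasTuner, and a tuner may sample the space rather than enumerate it.

```python
import math
from itertools import product

# Options copied from the hyperparameter table; key names are illustrative.
search_space = {
    "batch_size": [16, 32, 64, 128],
    "epochs": [50, 100, 200],
    "optimizer": ["Adam", "Nadam", "RMSprop", "SGD"],
    "learning_rate": [0.1, 0.01, 0.001, 0.0001],
    "dense_units": list(range(32, 513, 32)),  # 32, 64, ..., 512
    "dense_dropout": [0.0, 0.1, 0.2, 0.5],
}


def iter_configs(space):
    """Yield every combination of options as a {hyperparameter: value} dict."""
    keys = list(space)
    for values in product(*(space[key] for key in keys)):
        yield dict(zip(keys, values))


# Number of distinct configurations if the grid were searched exhaustively
n_configs = math.prod(len(options) for options in search_space.values())
first_config = next(iter_configs(search_space))
print(n_configs)
print(first_config)
```

With 16 possible dense-unit values, a full grid contains 12,288 configurations per model, which is why sampling-based tuners are commonly preferred over exhaustive search for spaces of this size.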

| Feature Variation | Description |
|---|---|
| Text | The concatenation of the vectors obtained from fastText and the text-based handcrafted features. |
| Gaze (Reading) | The concatenation of the vectors obtained from fastText and eye-tracking reading metrics, referred to as gaze-based (reading) handcrafted features. |
| Gaze | The concatenation of the vectors obtained from fastText and the gaze-based handcrafted features. |
| All Features | The concatenation of the vectors obtained from fastText vectorization and all text-based and gaze-based handcrafted features. |

| Feature Variation | F1 Score (%) | Model |
|---|---|---|
| Text | 77 | CNN |
| Gaze (Reading) | 72 | CNN |
| Gaze | 73 | CNN |
| All Features | 74 | GRU |

| Model | Feature Variation | F1 Score (%) |
|---|---|---|
| LSTM | Gaze (Reading), All Features | 69 |
| BiLSTM | All Features | 72 |
| GRU | All Features | 74 |
| CNN | Text | 77 |
| CNN-LSTM | All Features | 72 |
| CNN-GRU | All Features | 73 |
| CNN-BiLSTM | Gaze (Reading) | 71 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Baazeem, I.; Al-Khalifa, H.; Al-Salman, A. Integrating Linguistic and Eye Movements Features for Arabic Text Readability Assessment Using ML and DL Models. Computation 2025, 13, 258. https://doi.org/10.3390/computation13110258
