4.1. IKOA Optimization Method

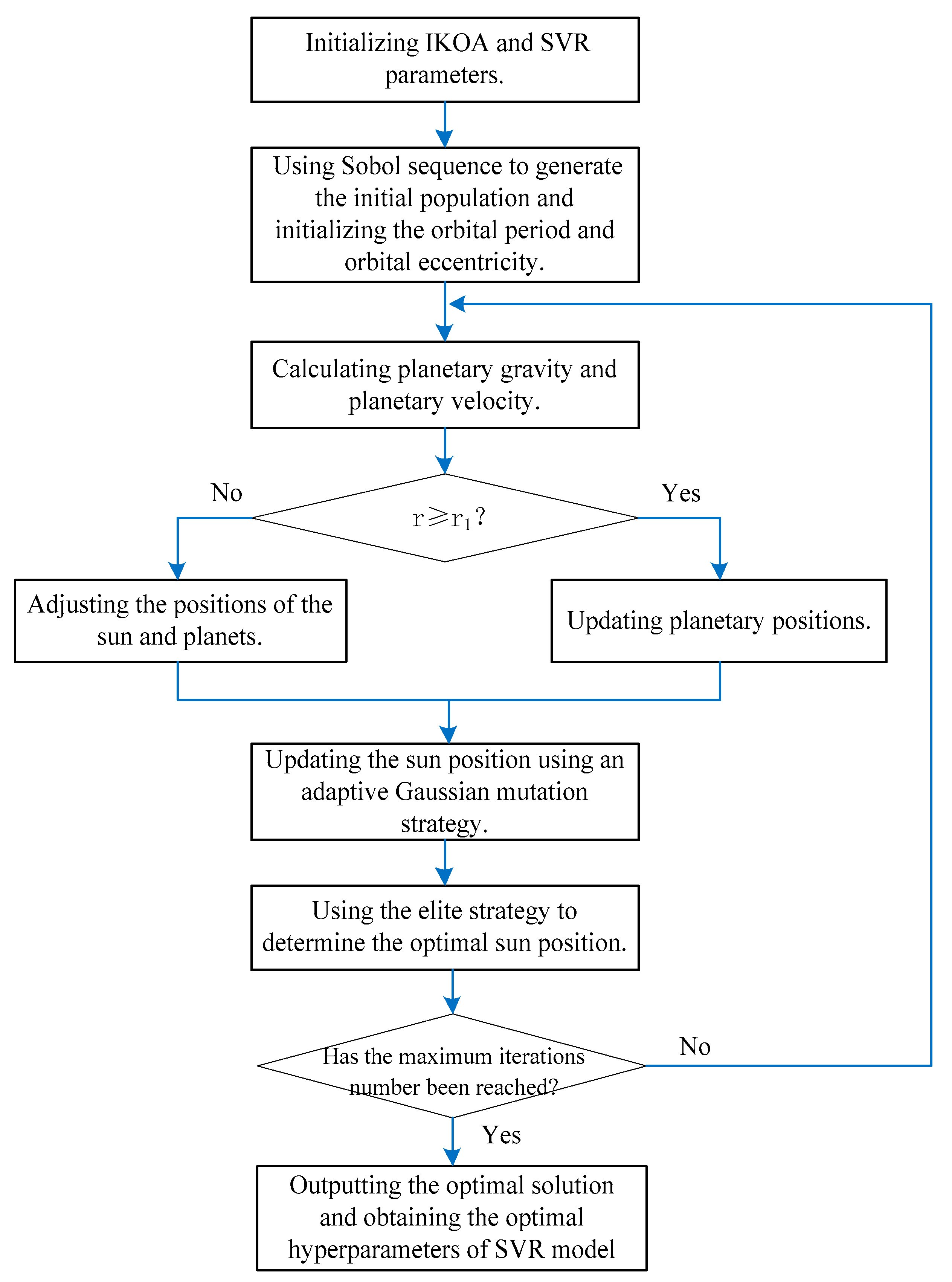

To address KOA's tendency to fall into local optima, this paper proposes the IKOA method, which combines Sobol sequence initialization with an adaptive Gaussian mutation strategy to optimize the key parameters of the SVR model intelligently. The IKOA process is shown in Figure 5.

The specific steps of the IKOA method are as follows:

- (1) Initializing the IKOA parameters, including the initial population size $N$, the population dimension $dim$, the maximum number of iterations $T_{\max}$, the cycle control parameter $\bar{T}$, the initial gravitational parameter $\mu_0$, and the gravitational decay factor. Initializing the SVR parameters $C$ and $\gamma$.

- (2) Using the Sobol sequence to generate the initial population and initializing the orbital periods and orbital eccentricities.

The Sobol sequence is used to generate candidate solutions (planets) within the specified ranges of $C$ and $\gamma$. Each planet contains one set of the hyperparameters $C$ and $\gamma$, and together the planets form the initial population. The fitness of each planet is calculated, and the planet with the minimum fitness is selected as the Sun. The initial position of the population can be written as

$$X_i^j = X_{i,\mathrm{low}}^j + r_i^j \times \left(X_{i,\mathrm{up}}^j - X_{i,\mathrm{low}}^j\right), \quad i = 1, 2, \ldots, N, \; j = 1, 2, \ldots, dim$$

where $X_i^j$ is the position of the $i$-th planet in the $j$-th dimension; $r_i^j \in [0, 1]$ is the $j$-th coordinate of the Sobol point generated for the $i$-th planet; and $X_{i,\mathrm{up}}^j$ and $X_{i,\mathrm{low}}^j$ are the upper and lower bounds of the $j$-th dimension of the $i$-th planet, respectively.
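The Sobol initialization above can be sketched in pure Python for the two-dimensional case used here. This is a minimal illustration under stated assumptions: it uses the standard direction numbers for the first two Sobol dimensions (dimension 1 is the base-2 van der Corput sequence; dimension 2 uses the primitive polynomial x + 1 with m₁ = 1), applies no scrambling, and emits points in natural rather than Gray-code order. The names `sobol_2d` and `init_population` are hypothetical; in practice a library generator such as `scipy.stats.qmc.Sobol` would usually be preferred.

```python
from typing import List, Sequence

BITS = 30  # integer resolution of each generated coordinate (2**-30)


def _direction_numbers() -> List[List[int]]:
    """Direction numbers v_k = m_k / 2**k, stored as integers scaled by 2**BITS."""
    # Dimension 1: van der Corput sequence in base 2 (all m_k = 1).
    dim1 = [1 << (BITS - k) for k in range(1, BITS + 1)]
    # Dimension 2: primitive polynomial x + 1, m_1 = 1, recurrence m_k = 2*m_{k-1} XOR m_{k-1}.
    m = [1]
    for _ in range(1, BITS):
        m.append((m[-1] << 1) ^ m[-1])
    dim2 = [m[k - 1] << (BITS - k) for k in range(1, BITS + 1)]
    return [dim1, dim2]


def sobol_2d(n_points: int) -> List[List[float]]:
    """First n_points of the unscrambled 2-D Sobol sequence in [0, 1)^2, natural order."""
    if n_points >= 1 << BITS:
        raise ValueError("too many points for the chosen bit resolution")
    dirs = _direction_numbers()
    points = []
    for n in range(n_points):
        coords = []
        for v in dirs:
            x, k, bit = 0, n, 0
            while k:  # XOR the direction numbers of every set bit of n
                if k & 1:
                    x ^= v[bit]
                k >>= 1
                bit += 1
            coords.append(x / float(1 << BITS))
        points.append(coords)
    return points


def init_population(n_planets: int, lower: Sequence[float], upper: Sequence[float]) -> List[List[float]]:
    """X_i^j = X_low^j + r_i^j * (X_up^j - X_low^j), with r_i^j taken from the Sobol sequence."""
    if len(lower) != 2 or len(upper) != 2:
        raise ValueError("this sketch only generates two-dimensional populations")
    return [[lo + r * (hi - lo) for r, lo, hi in zip(point, lower, upper)]
            for point in sobol_2d(n_planets)]


# Usage: 30 planets in the normalized (C, gamma) search space [0, 1]^2.
population = init_population(30, [0.0, 0.0], [1.0, 1.0])
```

The unscrambled sequence starts at the origin, so the first planet sits exactly on the lower bound; skipping or scrambling the leading points is a common remedy. The first 16 points place exactly one point in each cell of a 4 × 4 grid, which illustrates the even coverage that motivates the Sobol initialization.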

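The orbital eccentricity and orbital period are initialized as follows:

$$e_i = \mathrm{rand}[0, 1], \qquad T_i = |r|$$

where $e_i$ and $T_i$ are the orbital eccentricity and orbital period of the $i$-th planet, respectively; $\mathrm{rand}[0, 1]$ is a uniformly distributed random number in $[0, 1]$; and $r$ is a random number drawn from a normal distribution.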

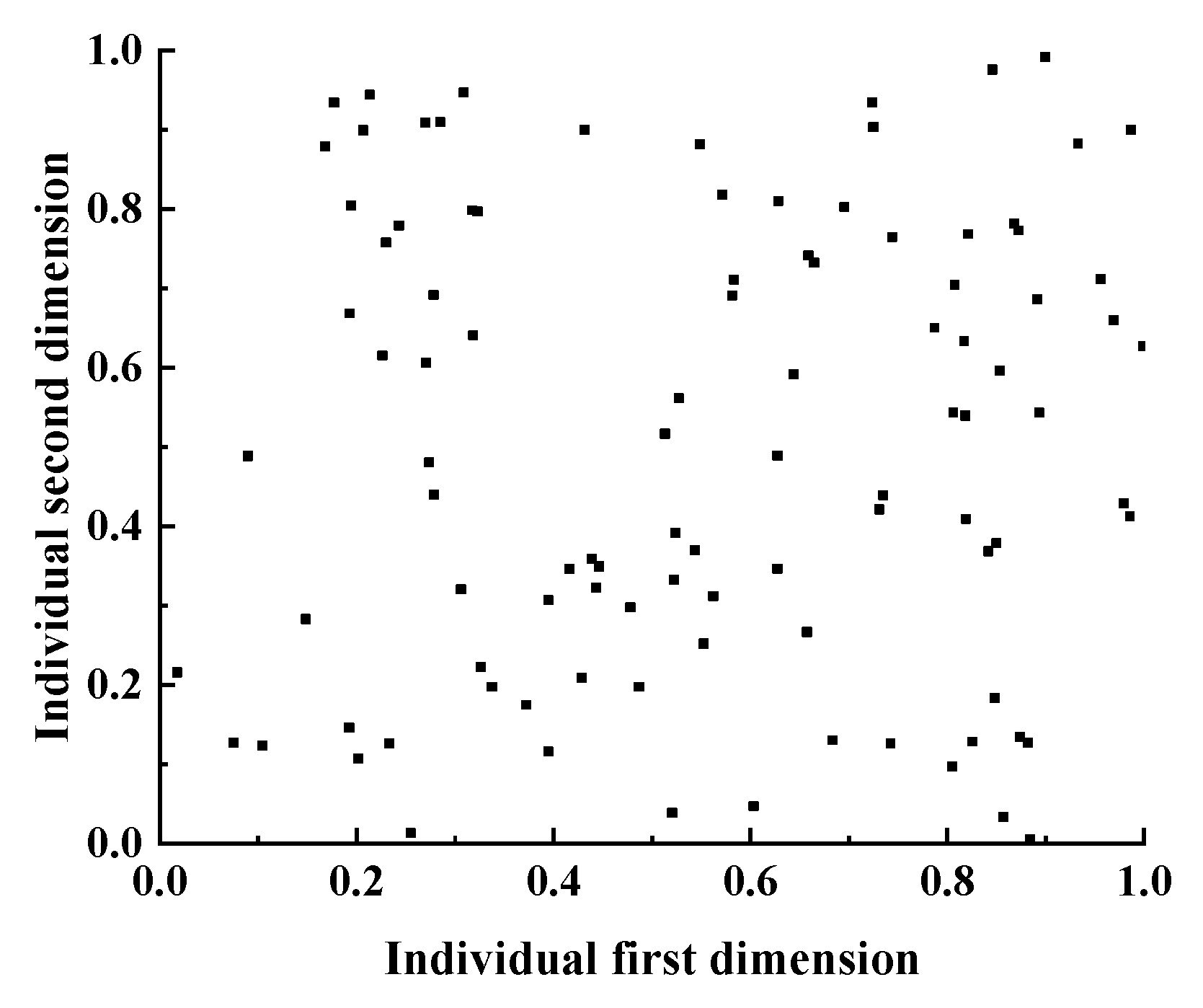

Traditional KOA generates the initial population positions with pseudo-random numbers, which makes the initial positions uncertain and may cause the algorithm to fall into local optima [20,21]. In contrast, the Sobol sequence is a low-discrepancy sequence, so the initial solutions it generates are distributed more evenly over the solution space. To facilitate efficient optimization by the IKOA, the search ranges of the SVR hyperparameters C and γ are linearly normalized to the interval [0, 1], so the algorithm operates within a unified two-dimensional search space [0, 1]². For each fitness evaluation, the dimensional values of an individual are mapped back to their actual hyperparameter values. The initial population distributions generated by the random number method and by the Sobol sequence method within the normalized space are shown in Figure 6 and Figure 7, respectively, for a population size of 100, a dimension of 2, and upper and lower position bounds of 1 and 0. (Note: Figure 6 and Figure 7 are for visualization purposes only and use a sample size of 100 individuals; the actual experiment employed 30 individuals.) As the figures show, the two methods produce markedly different scatter distributions. The points generated by the random number method are dense in some regions and sparse in others, leaving obvious blank areas. In contrast, the points generated by the Sobol sequence are distributed more uniformly, covering the entire search space more comprehensively and avoiding large areas of overlap or omission. This enhances the global exploration ability of the algorithm in the initial stage and lays a better foundation for finding high-quality solutions.
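The linear normalization described above can be sketched as follows. The bounds in `SEARCH_RANGES` are hypothetical placeholders, since the actual search ranges of C and γ are not given in this section, and `to_unit` and `from_unit` are illustrative helper names.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical search ranges; the actual bounds of C and gamma are not stated here.
SEARCH_RANGES: Dict[str, Tuple[float, float]] = {"C": (0.1, 100.0), "gamma": (0.01, 10.0)}
ORDER = ("C", "gamma")  # dimension 0 -> C, dimension 1 -> gamma


def to_unit(params: Dict[str, float]) -> List[float]:
    """Linearly map actual hyperparameter values into the normalized interval [0, 1]."""
    return [(params[k] - SEARCH_RANGES[k][0]) / (SEARCH_RANGES[k][1] - SEARCH_RANGES[k][0])
            for k in ORDER]


def from_unit(position: Sequence[float]) -> Dict[str, float]:
    """Map a planet's normalized position back to actual (C, gamma) before evaluating fitness."""
    values = {}
    for k, u in zip(ORDER, position):
        lo, hi = SEARCH_RANGES[k]
        u = min(max(u, 0.0), 1.0)  # clip positions that left the unit box
        values[k] = lo + u * (hi - lo)
    return values


# Usage: the centre of the normalized space maps to the midpoint of each range.
print(from_unit([0.5, 0.5]))
```

The clipping in `from_unit` is an added safeguard, so that a planet pushed outside [0, 1] by a position update still maps to a valid hyperparameter pair.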

- (3) Calculating the planetary gravity and planetary velocity.

The planetary gravity is expressed as

$$F_{g_i}(t) = e_i \times \mu(t) \times \frac{\bar{M}_s \times \bar{m}_i}{\bar{R}_i^2(t) + \varepsilon} + r_1$$

$$R_i(t) = \left\| X_S(t) - X_i(t) \right\|_2 = \sqrt{\sum_{j=1}^{dim} \left(X_S^j(t) - X_i^j(t)\right)^2}$$

where $F_{g_i}(t)$ is the planetary gravity acting on the $i$-th planet at the $t$-th iteration; $e_i$ is the orbital eccentricity of the $i$-th planet; $\mu(t)$ is the gravitational parameter, which decreases exponentially with the iteration number $t$ and controls the search accuracy; $\bar{M}_s$ and $\bar{m}_i$ are the normalized values of the solar mass and the planetary mass, respectively; $\bar{R}_i^2(t)$ is the squared normalized value of the Euclidean distance $R_i(t)$ between the Sun and the $i$-th planet at the $t$-th iteration; $r_1$ is a random number between 0 and 1; $\varepsilon$ is a small value that prevents the denominator from becoming zero; $X_S(t)$ and $X_i(t)$ are the positions of the Sun and the $i$-th planet at the $t$-th iteration, respectively; $X_S^j(t)$ is the position of the Sun in the $j$-th dimension at the $t$-th iteration; and $X_i^j(t)$ is the position of the $i$-th planet in the $j$-th dimension at the $t$-th iteration.
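A minimal sketch of the orbital initialization and the gravity computation follows. It assumes the schedule μ(t) = μ₀·exp(−decay·t/T_max) from the original KOA formulation, with `decay` standing for the gravitational decay factor, and it assumes min-max normalization for R̄ᵢ, M̄ₛ, and m̄ᵢ. The default values μ₀ = 0.1 and `decay` = 15 and all function names are illustrative assumptions, not values taken from this paper.

```python
import math
import random
from typing import List, Sequence, Tuple

EPS = 1e-12  # small value keeping denominators away from zero


def init_orbits(n_planets: int, rng=random) -> Tuple[List[float], List[float]]:
    """Orbital eccentricity e_i = rand[0, 1] and orbital period T_i = |r|, r ~ N(0, 1)."""
    ecc = [rng.random() for _ in range(n_planets)]
    periods = [abs(rng.gauss(0.0, 1.0)) for _ in range(n_planets)]
    return ecc, periods


def gravitational_parameter(t: int, t_max: int, mu0: float = 0.1, decay: float = 15.0) -> float:
    """Assumed KOA schedule mu(t) = mu0 * exp(-decay * t / t_max); defaults are illustrative."""
    return mu0 * math.exp(-decay * t / t_max)


def distance(sun: Sequence[float], planet: Sequence[float]) -> float:
    """Euclidean distance R_i(t) between the Sun and a planet."""
    return math.sqrt(sum((s - p) ** 2 for s, p in zip(sun, planet)))


def min_max(values: Sequence[float], x: float) -> float:
    """Assumed min-max normalization of x relative to values."""
    lo, hi = min(values), max(values)
    return (x - lo) / (hi - lo + EPS)


def gravity(positions, sun, ecc, sun_mass, masses, mu, rng=random) -> List[float]:
    """F_gi(t) = e_i * mu(t) * (Ms_bar * mi_bar) / (Ri_bar^2 + EPS) + r_1 for every planet."""
    dists = [distance(sun, x) for x in positions]
    ms_bar = min_max(masses, sun_mass)
    forces = []
    for d, e_i, m_i in zip(dists, ecc, masses):
        r_bar = min_max(dists, d)
        m_bar = min_max(masses, m_i)
        forces.append(e_i * mu * ms_bar * m_bar / (r_bar ** 2 + EPS) + rng.random())
    return forces
```

Passing the random generator as `rng` keeps the sketch testable: a seeded `random.Random` instance, or a stub with a `random()` method, makes the output reproducible.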

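The solar mass, planetary mass, and fitness are calculated as follows:

$$M_s = r_2 \times \frac{fit_s(t) - worst(t)}{\sum_{k=1}^{N} \left(fit_k(t) - worst(t)\right)}$$

$$m_i = \frac{fit_i(t) - worst(t)}{\sum_{k=1}^{N} \left(fit_k(t) - worst(t)\right)}$$

$$fit_s(t) = best(t) = \min_{k \in \{1, 2, \ldots, N\}} fit_k(t)$$

$$worst(t) = \max_{k \in \{1, 2, \ldots, N\}} fit_k(t)$$

where $M_s$ is the solar mass; $m_i$ is the planetary mass of the $i$-th planet; $r_2$ is a random number between 0 and 1; $fit_i(t)$ is the fitness of the $i$-th planet at the $t$-th iteration; $fit_k(t)$ is the fitness of the $k$-th planet, $k \in \{1, 2, \ldots, N\}$, where the index $k$ is introduced to avoid conflicts with $i$; $fit_s(t)$ and $best(t)$ both denote the minimum fitness among all planets at the $t$-th iteration (the fitness of the Sun); and $worst(t)$ is the maximum fitness among all planets at the $t$-th iteration.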
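The mass and fitness definitions above can be checked with a small sketch for a minimization problem. Because every fitᵢ − worst is non-positive, the ratios are non-negative and the planetary masses sum to 1, with the best planet receiving the largest mass. The fallback for the case in which all fitness values are equal (so the denominator vanishes) is an added assumption, as that case is not defined in the text.

```python
import random
from typing import List, Sequence, Tuple


def masses(fitness: Sequence[float], rng=random) -> Tuple[float, List[float], float, float]:
    """Return (M_s, [m_i], best, worst) for a minimization problem."""
    best, worst = min(fitness), max(fitness)  # fit_s(t) = best(t); worst(t)
    denom = sum(f - worst for f in fitness)   # <= 0, so every ratio below is >= 0
    if denom == 0.0:
        # Assumed fallback (not defined in the text): all planets are equally fit.
        n = len(fitness)
        return rng.random() / n, [1.0 / n] * n, best, worst
    planet_masses = [(f - worst) / denom for f in fitness]
    sun_mass = rng.random() * (best - worst) / denom  # r_2 * (fit_s - worst) / sum(...)
    return sun_mass, planet_masses, best, worst


# Usage: three planets; the first (lowest fitness) is the Sun and the heaviest planet.
print(masses([1.0, 2.0, 3.0], rng=random.Random(0)))
```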

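Planetary velocity represents the step size and direction of planetary movement in the search space. $R_{i\text{-}norm}(t)$ denotes the normalized distance between the Sun and the $i$-th planet. When $R_{i\text{-}norm}(t) \le 0.5$, the local exploitation formula is used to calculate the planetary velocity; when $R_{i\text{-}norm}(t) > 0.5$, the global exploration formula is used. The expression is as follows:

$$V_i(t) = \begin{cases} \ell \times \left(2 r_4 X_i - X_b\right) + \ddot{\ell} \times \left(X_a - X_b\right) + \left(1 - R_{i\text{-}norm}(t)\right) \times F \times U_1 \times r_5 \times \left(X_{up} - X_{low}\right), & R_{i\text{-}norm}(t) \le 0.5 \\ r_4 \times L \times \left(X_a - X_i\right) + \left(1 - R_{i\text{-}norm}(t)\right) \times F \times U_2 \times r_5 \times \left(r_3 X_{up} - X_{low}\right), & \text{otherwise} \end{cases}$$

where $V_i(t)$ is the planetary velocity of the $i$-th planet at the $t$-th iteration; $X_a$ and $X_b$ are the positions of two randomly selected planets; $X_{up}$ and $X_{low}$ are the maximum and minimum values of the planet positions at the $t$-th iteration; $R_i(t)$ is the Euclidean distance between the Sun and the $i$-th planet at the $t$-th iteration; $r_3$, $r_4$, and $r_5$ are random numbers between 0 and 1; $U_1$ and $U_2$ are binary variables: $U_1 = 0$ when $r_5 \le r_4$ and $U_1 = 1$ otherwise, and $U_2 = 0$ when $r_3 \le r_4$ and $U_2 = 1$ otherwise; and $F$ is a direction flag that takes the value 1 or −1: $F = 1$ when $r_4 \le 0.5$ and $F = -1$ otherwise. Some variables in Equation (11) can be written as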

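$$\ell = U \times \mathcal{M} \times L, \qquad \ddot{\ell} = (1 - U) \times \mathcal{M} \times L$$

$$L = \left[\mu(t) \times \left(M_s + m_i\right) \times \left|\frac{2}{R_i(t) + \varepsilon} - \frac{1}{a_i(t) + \varepsilon}\right|\right]^{\frac{1}{2}}$$

$$\mathcal{M} = r_3 \times \left(1 - r_4\right) + r_4$$

$$a_i(t) = r_3 \times \left[T_i^2 \times \frac{\mu(t) \times \left(M_s + m_i\right)}{4\pi^2}\right]^{\frac{1}{3}}$$

where $a_i(t)$ is the semi-major axis of the elliptical orbit of the $i$-th planet, which controls the amplitude of the planet's motion (the larger $a_i(t)$ is, the broader the search range); $U$ is a binary variable that takes 0 when $r_5 \le r_6$ and 1 otherwise; $r_6$ is a random number between 0 and 1; and $\varepsilon$ is a small value that prevents the denominator from becoming zero.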
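The two-branch velocity rule and its auxiliary variables can be sketched for a single planet as follows. This is an illustration under assumptions: r₃ to r₆ are drawn as scalars once per planet, positions are plain lists, ε is fixed at 10⁻¹², the masses are assumed non-negative, and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence

EPS = 1e-12  # small value preventing division by zero


def semi_major_axis(period: float, mu: float, sun_mass: float, m_i: float, r3: float) -> float:
    """a_i(t) = r3 * (T_i^2 * mu(t) * (M_s + m_i) / (4 * pi^2))^(1/3)."""
    total_mass = max(sun_mass + m_i, 0.0)  # masses are non-negative; guard against round-off
    return r3 * (period ** 2 * mu * total_mass / (4.0 * math.pi ** 2)) ** (1.0 / 3.0)


def velocity(x_i: Sequence[float], x_a: Sequence[float], x_b: Sequence[float],
             x_up: Sequence[float], x_low: Sequence[float], r_norm: float, r_dist: float,
             period: float, mu: float, sun_mass: float, m_i: float, rng=random) -> List[float]:
    """Planetary velocity V_i(t); r_norm selects exploitation (<= 0.5) or exploration."""
    r3, r4, r5, r6 = (rng.random() for _ in range(4))
    a_i = semi_major_axis(period, mu, sun_mass, m_i, r3)
    L = math.sqrt(mu * max(sun_mass + m_i, 0.0)
                  * abs(2.0 / (r_dist + EPS) - 1.0 / (a_i + EPS)))
    M = r3 * (1.0 - r4) + r4
    U = 0.0 if r5 <= r6 else 1.0
    F = 1.0 if r4 <= 0.5 else -1.0
    U1 = 0.0 if r5 <= r4 else 1.0
    U2 = 0.0 if r3 <= r4 else 1.0
    ell, ell_dd = U * M * L, (1.0 - U) * M * L
    scale = (1.0 - r_norm) * F
    if r_norm <= 0.5:  # close to the Sun: local exploitation formula
        return [ell * (2.0 * r4 * xi - xb) + ell_dd * (xa - xb) + scale * U1 * r5 * (up - lo)
                for xi, xa, xb, up, lo in zip(x_i, x_a, x_b, x_up, x_low)]
    # far from the Sun: global exploration formula
    return [r4 * L * (xa - xi) + scale * U2 * r5 * (r3 * up - lo)
            for xi, xa, up, lo in zip(x_i, x_a, x_up, x_low)]
```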

Depending on the switching condition, the algorithm proceeds either to step (4) to update the planetary positions or to step (5) to adjust the positions of the Sun and planets.

- (4) Updating the planetary positions. While simulating the motion of the planets around the Sun, the search direction is switched periodically to break out of locally optimal regions, giving the planets better opportunities to explore the entire space [23]. In this branch, the new planetary position is updated as follows:

$$X_i(t+1) = X_i(t) + F \times V_i(t) + \left(F_{g_i}(t) + |r|\right) \times U \times \left(X_S(t) - X_i(t)\right)$$

where $X_i(t+1)$ is the position of the $i$-th planet at the $(t+1)$-th iteration and $r$ is a random number between 0 and 1.
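The position update of step (4) is a short vector operation, sketched below. F and U are assumed to be the same direction flag and binary switch drawn for the planet's velocity in step (3), and the `clip` helper, which keeps positions inside the normalized box, is an added safeguard rather than part of the described method.

```python
import random
from typing import List, Sequence


def update_position(x_i: Sequence[float], sun: Sequence[float], v_i: Sequence[float],
                    fg_i: float, F: float, U: float, rng=random) -> List[float]:
    """X_i(t+1) = X_i(t) + F * V_i(t) + (F_gi(t) + |r|) * U * (X_S(t) - X_i(t))."""
    r = rng.random()  # random number between 0 and 1, as stated in step (4)
    pull = (fg_i + abs(r)) * U  # attraction toward the Sun, active only when U = 1
    return [x + F * v + pull * (s - x) for x, v, s in zip(x_i, v_i, sun)]


def clip(position: Sequence[float], low: float = 0.0, high: float = 1.0) -> List[float]:
    """Added safeguard: keep a planet inside the normalized box [0, 1]^dim."""
    return [min(max(p, low), high) for p in position]
```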

- (5) Adjusting the positions of the Sun and planets. In this branch, the planetary positions are updated to simulate how the distance between the Sun and the planets changes over the iterations. When a planet is close to the Sun, the exploitation operator is activated to accelerate convergence, and KOA focuses on exploitation; when a planet is far from the Sun, it adjusts its distance from the Sun, and KOA focuses on exploration [24]. The mathematical model is expressed as follows:

$$X_i(t+1) = X_i(t) \times U_1 + \left(1 - U_1\right) \times \left(\frac{X_i(t) + X_S + X_a(t)}{3} + h \times \left(\frac{X_i(t) + X_S + X_a(t)}{3} - X_b(t)\right)\right)$$

where $h$ is the adaptive factor used to control the distance between the Sun and the planet at the $t$-th iteration, expressed as

$$h = \frac{1}{e^{\eta r}}$$

where $\eta$ is a factor that decreases linearly from 1 to −2, expressed as

$$\eta = \left(a_2 - 1\right) \times r_4 + 1$$

and where $a_2$ is a cyclic parameter that gradually decreases from −1 to −2 within each cycle, repeating over $\bar{T}$ cycles during the entire optimization process, expressed as

$$a_2 = -1 - 1 \times \frac{t \bmod \frac{T_{\max}}{\bar{T}}}{\frac{T_{\max}}{\bar{T}}}$$
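Step (5) can be sketched as follows, including the cyclic schedule of a₂ and the factor η. Two points are assumptions: U₁ is drawn with the same rule as in step (3), and the random number r in h = 1/e^{ηr} is taken as uniform on [0, 1], since its distribution is not specified in this step. The function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def cyclic_a2(t: int, t_max: int, n_cycles: int) -> float:
    """a2 = -1 - (t mod (T_max/T_bar)) / (T_max/T_bar): falls from -1 toward -2 in each cycle."""
    period = t_max / n_cycles
    return -1.0 - (t % period) / period


def adjust_position(x_i: Sequence[float], sun: Sequence[float], x_a: Sequence[float],
                    x_b: Sequence[float], t: int, t_max: int, n_cycles: int,
                    rng=random) -> List[float]:
    """X_i(t+1) = X_i*U1 + (1-U1)*(mean + h*(mean - X_b)), mean = (X_i + X_S + X_a)/3."""
    r4, r5 = rng.random(), rng.random()
    eta = (cyclic_a2(t, t_max, n_cycles) - 1.0) * r4 + 1.0  # eta lies in [a2, 1]
    h = 1.0 / math.exp(eta * rng.random())  # assumption: r ~ U[0, 1]
    U1 = 0.0 if r5 <= r4 else 1.0  # assumption: same rule as U_1 in step (3)
    new = []
    for xi, s, xa, xb in zip(x_i, sun, x_a, x_b):
        mean = (xi + s + xa) / 3.0
        new.append(xi * U1 + (1.0 - U1) * (mean + h * (mean - xb)))
    return new
```

With U₁ = 1 the planet keeps its position; with U₁ = 0 it moves around the centroid of itself, the Sun, and a random planet, with h scaling the step away from a second random planet.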

- (6) Updating the Sun's position using an adaptive Gaussian mutation strategy.

In KOA, the Sun's position may remain unchanged over many iterations, and once the perturbation weakens in the later stages of the search, the Sun cannot escape a local optimum. This premature convergence is particularly pronounced in multimodal optimization problems and causes the final result to deviate from the global optimum [25]. To address this issue, this paper introduces an adaptive Gaussian mutation strategy into the iteration process. By dynamically adjusting the intensity and frequency of the Gaussian mutation during execution, individuals trapped in a local optimum can escape and regain search activity. Unlike fixed-parameter mutation, the adaptive mechanism uses the current individual's position as an adjustment factor that scales the perturbation intensity of the Gaussian mutation, flexibly regulating the mutation range. This maintains local search precision while enhancing global exploration. The adaptive Gaussian mutation strategy is expressed as

where $X_S'$ is the Sun's position after mutation; $X_S$ is the Sun's position before mutation; the equation also contains two random numbers between 0 and 1; $\mathcal{N}(0, \sigma^2)$ is a normal random variable with a mean of 0 and a standard deviation of $\sigma$; and $\sigma$ is the standard deviation of the mutation, which controls the mutation intensity and is expressed as follows:

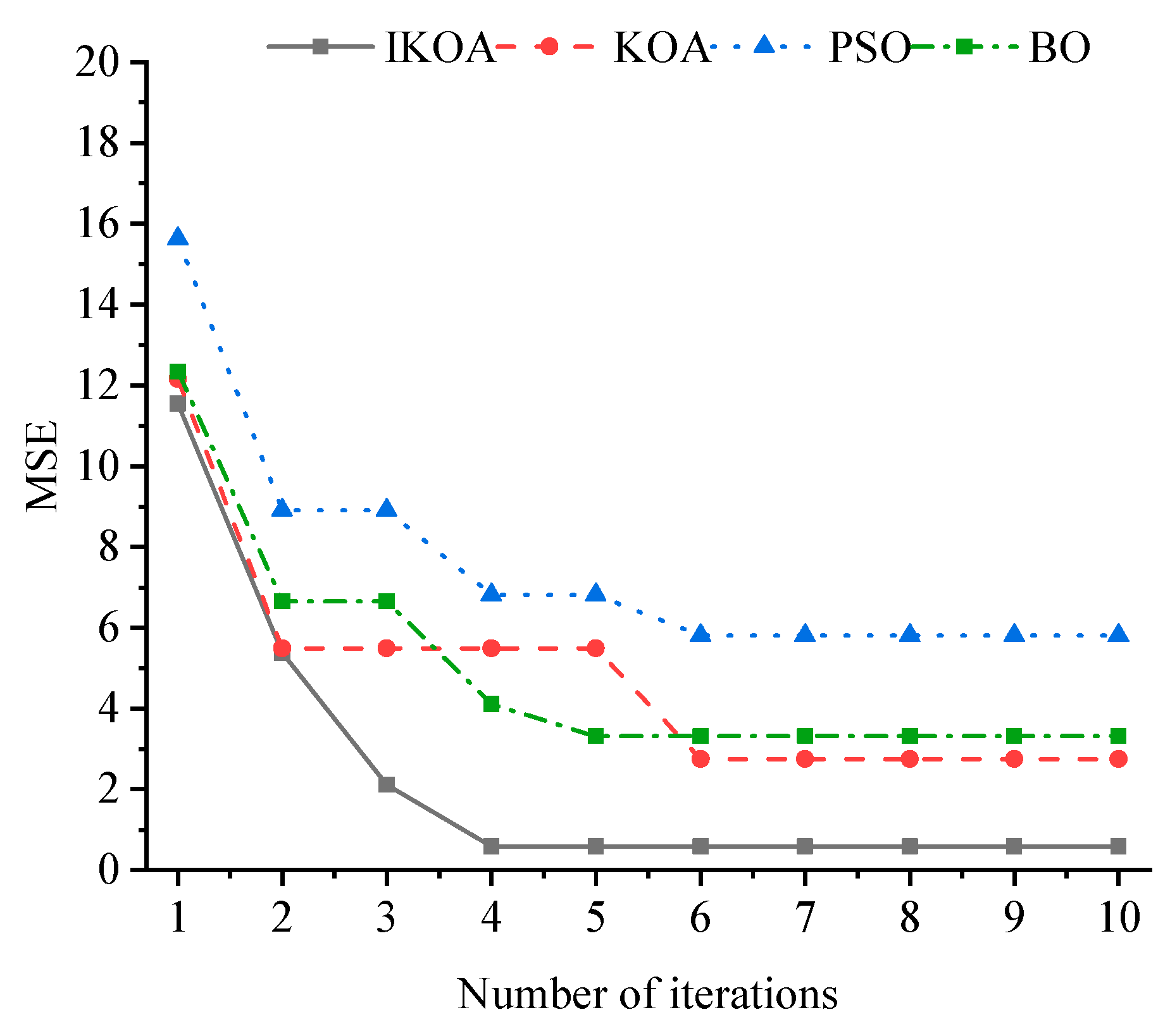

From the above equation, the curve of the standard deviation versus the number of iterations is obtained, as shown in Figure 8.
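Because the mutation equation and the σ expression are not given in closed form here, the sketch below only illustrates the general idea of a position-adaptive Gaussian mutation under hypothetical choices: a linearly decaying σ(t) between illustrative bounds 0.5 and 0.01, and a perturbation X_S · N(0, σ²) scaled by a uniform random number, so that the step size grows with the magnitude of the current coordinate. It should not be read as the paper's own formula.

```python
import random
from typing import List, Sequence


def sigma_schedule(t: int, t_max: int, sigma_max: float = 0.5, sigma_min: float = 0.01) -> float:
    """Hypothetical sigma(t): decays linearly from sigma_max to sigma_min over the run."""
    return sigma_max - (sigma_max - sigma_min) * t / t_max


def gaussian_mutate_sun(sun: Sequence[float], t: int, t_max: int, rng=random) -> List[float]:
    """Hypothetical adaptive Gaussian mutation: X_S' = X_S + r * X_S * N(0, sigma(t)^2).

    The perturbation is proportional to the current coordinate (position-adaptive) and
    shrinks as sigma(t) decays; results are clipped to the normalized box [0, 1].
    """
    sigma = sigma_schedule(t, t_max)
    mutated = []
    for x in sun:
        step = rng.random() * x * rng.gauss(0.0, sigma)
        mutated.append(min(max(x + step, 0.0), 1.0))
    return mutated
```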

- (7) Using the elite strategy to determine the optimal Sun position.

The elite strategy is used to determine the optimal Sun position, expressed as

where the left-hand side represents the retained position of the Sun.
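The elite strategy can be read as a greedy selection between the Sun's position before and after mutation. A minimal sketch follows, assuming minimization and that the mutated Sun is kept only on strict improvement; the tie-breaking rule and the helper names are assumptions.

```python
from typing import Callable, List, Sequence, Tuple


def elite_select(sun: Sequence[float], sun_fit: float, candidate: Sequence[float],
                 fitness: Callable[[Sequence[float]], float]) -> Tuple[List[float], float]:
    """Keep the mutated Sun only if it strictly lowers the fitness (minimization)."""
    cand_fit = fitness(candidate)
    if cand_fit < sun_fit:
        return list(candidate), cand_fit
    return list(sun), sun_fit


# Usage with a toy fitness (sum of squares) standing in for the cross-validated MSE.
def sphere(x: Sequence[float]) -> float:
    return sum(v * v for v in x)


best_pos, best_fit = elite_select([0.5, 0.5], sphere([0.5, 0.5]), [0.1, 0.2], sphere)
```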

- (8) Determining whether the maximum number of iterations has been reached. If so, the iteration ends, and the optimized C and γ are output; if not, return to step (3).

4.2. Building the SVR Model

SVR is a machine learning algorithm based on the principle of minimizing structural risk. Its fundamental objective is not merely to fit the training data, but to minimize the model’s generalization error by seeking a regression function f(x) that is as “smooth” as possible while maintaining predictive accuracy.

The implementation of SVR relies on three core hyperparameters: C, γ, and the insensitive loss parameter ε. Together, these three parameters determine the model's performance:

- C: balances the model's structural risk against its empirical risk. A larger C forces the model to reduce training error, possibly at the cost of generalization ability, leading to overfitting. Conversely, a smaller C places greater emphasis on reducing model complexity, potentially causing the model to underfit the training data.
- γ: when a radial basis function (RBF) kernel is used, γ determines the spatial influence range of each training sample. A high γ means a small influence range, in which only nearby data points affect predictions, so the model may focus excessively on local details and overfit. A low γ means a large influence range, resulting in a smoother model that may fail to capture complex variations in the data, leading to underfitting.
- ε: defines the width of the ε-insensitive band; loss is computed only when the prediction error of a sample exceeds this width. Thus, ε controls the model's precision and its tolerance for error. A larger ε increases the tolerance for error and yields a simpler model; a smaller ε makes the model more sensitive to error, leading to a more complex model.
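The roles of γ and ε described above can be made concrete with two small functions: the RBF kernel value between two samples, which decays faster as γ grows, and the ε-insensitive loss, which is zero inside the band and linear outside it. This is an illustrative sketch; the function names are hypothetical.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], z: Sequence[float], gamma: float) -> float:
    """Gaussian RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def eps_insensitive_loss(y_true: float, y_pred: float, epsilon: float) -> float:
    """Zero inside the epsilon-band, linear outside: max(0, |y - f(x)| - epsilon)."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


# Usage: the same pair of samples one unit apart under a small and a large gamma.
for g in (0.1, 10.0):
    print(g, rbf_kernel([0.0], [1.0], g))
```

With γ = 0.1 the two samples still influence each other strongly (kernel value close to 1), whereas with γ = 10 their kernel value is nearly 0, which is the "small influence range" behaviour that can lead to overfitting.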

Mathematically, for the training set $\{(x_i, y_i)\}_{i=1}^{l}$, SVR performs regression by constructing a linear approximating function in the high-dimensional feature space:

$$f(x) = w^{T} \varphi(x) + b$$

where $f(x)$ is the regression function; $\varphi(\cdot)$ is a mapping function from the input space to the feature space; $w$ is the weight vector; and $b$ is the bias term.

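By introducing the slack variables $\xi_i$ and $\xi_i^*$, the optimal $f(x)$ can be found by solving the following convex quadratic programming problem:

$$\min_{w, b, \xi, \xi^*} \; \frac{1}{2}\|w\|^2 + C \sum_{i=1}^{l} \left(\xi_i + \xi_i^*\right)$$

The constraints are as follows:

$$\begin{cases} y_i - w^{T}\varphi(x_i) - b \le \varepsilon + \xi_i \\ w^{T}\varphi(x_i) + b - y_i \le \varepsilon + \xi_i^* \\ \xi_i \ge 0, \; \xi_i^* \ge 0, \quad i = 1, 2, \ldots, l \end{cases}$$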

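By introducing the Lagrange multipliers $\alpha_i$ and $\alpha_i^*$, taking the partial derivatives of the Lagrangian with respect to $w$, $b$, $\xi_i$, and $\xi_i^*$, and setting them to zero, the minimization problem can be transformed into the following dual problem:

$$\max_{\alpha, \alpha^*} \; -\frac{1}{2}\sum_{i=1}^{l}\sum_{j=1}^{l}\left(\alpha_i - \alpha_i^*\right)\left(\alpha_j - \alpha_j^*\right)K(x_i, x_j) - \varepsilon \sum_{i=1}^{l}\left(\alpha_i + \alpha_i^*\right) + \sum_{i=1}^{l} y_i\left(\alpha_i - \alpha_i^*\right)$$

$$\text{s.t.} \quad \sum_{i=1}^{l}\left(\alpha_i - \alpha_i^*\right) = 0, \quad 0 \le \alpha_i, \alpha_i^* \le C$$

where $K(x_i, x_j) = \varphi(x_i)^{T}\varphi(x_j)$ is the kernel function.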

The final SVR decision function is

$$f(x) = \sum_{i=1}^{l}\left(\alpha_i - \alpha_i^*\right)K(x_i, x) + b$$
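Given the dual solution, the decision function is a kernel-weighted sum over the support vectors. The sketch below evaluates it with a Gaussian RBF kernel; the support vectors, coefficients (αᵢ − αᵢ*), and bias in the usage example are made-up numbers for illustration, and the function names are hypothetical. For reference, scikit-learn's `SVR` exposes the corresponding fitted quantities as `support_vectors_`, `dual_coef_`, and `intercept_`.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], z: Sequence[float], gamma: float) -> float:
    """Gaussian RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def svr_decision(x: Sequence[float], support_vectors: Sequence[Sequence[float]],
                 dual_coefs: Sequence[float], b: float, gamma: float) -> float:
    """f(x) = sum_i (alpha_i - alpha_i^*) K(x_i, x) + b."""
    return sum(c * rbf_kernel(sv, x, gamma) for sv, c in zip(support_vectors, dual_coefs)) + b


def satisfies_equality_constraint(dual_coefs: Sequence[float], tol: float = 1e-9) -> bool:
    """Dual feasibility check: sum_i (alpha_i - alpha_i^*) = 0."""
    return abs(sum(dual_coefs)) < tol


# Usage with made-up support vectors and coefficients (alpha_i - alpha_i^*).
svs = [[0.0], [1.0]]
coefs = [0.5, -0.5]
print(svr_decision([0.0], svs, coefs, b=0.1, gamma=1.0))
```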

4.3. IKOA-SVR Model Training

Based on the intelligent identification training dataset, a Gaussian radial basis function kernel, $K(x_i, x_j) = \exp\left(-\gamma \|x_i - x_j\|^2\right)$, is used as the kernel function of the SVR model. A multi-step sliding cross-validation method is proposed to train the SVR model. The training set is randomly and uniformly divided into n subsets, and each pair of subsets is selected in turn (without repetition) as the internal testing dataset, while the remaining n − 2 subsets form the internal training dataset. This yields C(n,2) = n(n − 1)/2 cases. In each case, the model is trained on the internal training dataset and tested on the internal testing dataset, so the cross-validation process is repeated C(n,2) times and each pair of subsets serves as the internal testing dataset exactly once.

Compared with traditional K-fold cross-validation, which uses a single data subset as the testing set in each iteration, the multi-step sliding cross-validation method combines two data subsets to form the testing set. A primary source of uncertainty in traditional K-fold cross-validation is the variance of the testing set within a single iteration. By using two subsets as the testing set in each iteration, multi-step sliding cross-validation increases the number of testing samples, smoothing out randomness in single-run evaluations and thereby reducing the variance of the performance estimates. Finally, averaging the results of all C(n,2) tests further improves the stability of the final evaluation.

Traditional K-fold cross-validation (with K = n) only tests a model's ability to learn from n − 1 subsets and generalize to a single unseen subset. In contrast, multi-step sliding cross-validation evaluates a model's capacity to learn from n − 2 subsets and generalize to any combination of two unseen subsets. This requires the model to adapt not only to variations within a single data distribution but also to different combinations of data distributions, thereby enhancing its generalization capability against data heterogeneity.

For n > 3, the C(n,2) iterations exceed the n iterations of traditional K-fold cross-validation, and the gap grows quadratically with n (for example, n = 10 gives 45 iterations instead of 10). This reduces the random bias arising from any particular train/test partition, so the evaluation outcome does not hinge on a single split, and the model assessment becomes more comprehensive and thorough.
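The split generation of the multi-step sliding cross-validation can be sketched as follows. The round-robin assignment of shuffled indices to subsets and the `evaluate` callback (for example, fitting an SVR on the training indices and returning the MSE on the test indices) are illustrative assumptions. scikit-learn's `LeavePGroupsOut` with `n_groups=2` provides a comparable splitter when the subset labels are supplied as groups.

```python
import itertools
import random
from typing import Callable, Iterator, List, Tuple


def sliding_pair_splits(n_samples: int, n_subsets: int,
                        seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, test_idx) for each of the C(n, 2) pairs of subsets used as the test set."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)  # random division into subsets
    folds = [idx[k::n_subsets] for k in range(n_subsets)]  # near-equal subset sizes
    for a, b in itertools.combinations(range(n_subsets), 2):
        test = sorted(folds[a] + folds[b])
        train = sorted(i for k, fold in enumerate(folds) if k not in (a, b) for i in fold)
        yield train, test


def mean_cv_score(n_samples: int, n_subsets: int,
                  evaluate: Callable[[List[int], List[int]], float]) -> float:
    """Average the score returned by a (hypothetical) evaluate(train_idx, test_idx) callback."""
    scores = [evaluate(tr, te) for tr, te in sliding_pair_splits(n_samples, n_subsets)]
    return sum(scores) / len(scores)
```

Each sample appears in the test set n − 1 times (once for every pair that contains its subset), so every sample contributes equally to the averaged score.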

For each test, an intelligent identification model for valve internal leakage is constructed using the Mean Squared Error (MSE) as the fitness function:

$$MSE = \frac{1}{m}\sum_{i=1}^{m}\left(y_i - \hat{y}_i\right)^2$$

where $m$ is the number of samples in the training dataset; $y_i$ is the actual value of the $i$-th sample in the training dataset; and $\hat{y}_i$ is the predicted value of the $i$-th sample in the training dataset.

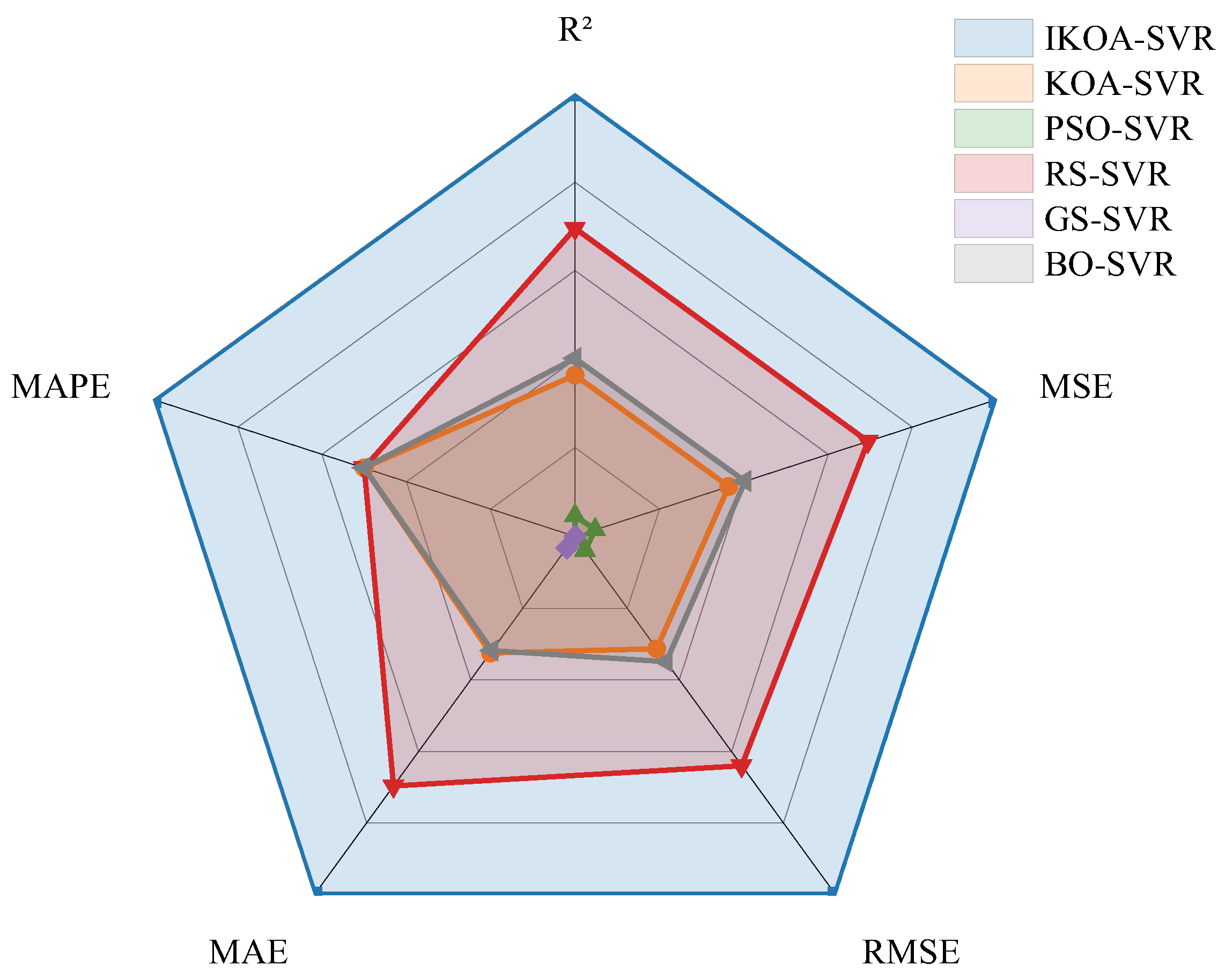

To evaluate the performance of the proposed model objectively and quantitatively, four performance metrics are used in addition to MSE: Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), Mean Absolute Percentage Error (MAPE), and the coefficient of determination (R²). The final performance evaluation result of the model is obtained by averaging the results of the C(n,2) tests. Using five performance metrics allows the identification accuracy and reliability of the model to be measured comprehensively from different perspectives.

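The equations for these performance metrics are as follows:

$$RMSE = \sqrt{\frac{1}{m}\sum_{i=1}^{m}\left(y_i - \hat{y}_i\right)^2}$$

$$MAE = \frac{1}{m}\sum_{i=1}^{m}\left|y_i - \hat{y}_i\right|$$

$$MAPE = \frac{100\%}{m}\sum_{i=1}^{m}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$$

$$R^2 = 1 - \frac{\sum_{i=1}^{m}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{m}\left(y_i - \bar{y}\right)^2}$$

where $m$ is the number of samples; $y_i$ and $\hat{y}_i$ are the actual and predicted values of the $i$-th sample, respectively; and $\bar{y}$ is the mean of the actual values. MSE, RMSE, and MAE measure the deviation between the identified values and the actual values; the smaller the value, the smaller the identification error and the higher the accuracy. MAPE expresses the identification error as a percentage of the actual value, which reflects the relative error of the identification result more intuitively. R² reflects the goodness of fit of the model; the closer R² is to 1, the closer the identification results are to the actual values.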
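The five metrics can be computed together as in the following sketch, which assumes that no actual value yᵢ is zero (otherwise MAPE is undefined) and that the actual values are not all equal (otherwise R² is undefined). The function name is hypothetical.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """MSE, RMSE, MAE, MAPE (in %), and R^2 for paired actual/predicted values."""
    m = len(y_true)
    errors = [y - p for y, p in zip(y_true, y_pred)]
    sse = sum(e * e for e in errors)
    mean_y = sum(y_true) / m
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return {
        "MSE": sse / m,
        "RMSE": math.sqrt(sse / m),
        "MAE": sum(abs(e) for e in errors) / m,
        "MAPE": 100.0 * sum(abs(e / y) for e, y in zip(errors, y_true)) / m,  # needs y != 0
        "R2": 1.0 - sse / ss_tot,  # needs the y values not all equal
    }


# Usage: one of four predictions is off by 1.
print(regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0]))
```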