Interpretable Conversation Routing via the Latent Embeddings Approach

Abstract

1. Introduction

2. Materials and Methods

2.1. Routing Benchmark Datasets

- Catalog recommendations, where the agent has to generate a search query, find the corresponding wines, and compose a message with a proposal. The resulting message has to be grounded in the search response so that the model does not invent items that are not in the catalog;

- General questions about wine, where the agent has to answer any question about wine topics without search or other additional actions. Here, the model is allowed to discuss the topic freely, including items that are not sold by the store;

- Small talk, where the agent just needs to keep up a human-like, friendly conversation and answer simple messages such as greetings and expressions of gratitude. The agent is allowed to be creative, and no additional actions are required;

- Out-of-scope messages (offtop). Such messages should be ignored by the system completely, as they are either LLM jailbreaks (harmful injections or attempts to use the chatbot as a free LLM wrapper) or random questions outside the system’s scope (in this dataset, anything not about wines or their attributes, history, geography, or the winemaking process itself) [23].

2.2. Semantic Routing Based on Latent Sentence Embeddings Retrieval

2.3. Proposed Modifications for the Semantic Routing Method

- Filter the input examples with an additional cutoff threshold to reduce the size of the example set and make the router more efficient;

- Save not just the original version of the input example but also a generalized version generated by an LLM, so that one example covers multiple use cases with possibly higher similarity. The examples thus become more template-like and can match multiple requests at once.

- Fetch the set of examples that the developer provided for the route;

- Encode all examples of the route with the embedder model;

- Save the first example to the router memory;

- For each following example, retrieve the most similar (top-1) sample of the current route that is already saved in the router memory;

- If the similarity score of this top-1 sample is higher than the example pruning threshold (a coefficient that defines when two texts are so similar that saving the second one adds nothing), ignore the new example and do not add it to the router memory;

- If the similarity score of this top-1 sample is lower than the example pruning threshold, add the new example to the router memory either as is (first proposed approach) or after passing it through the LLM to generalize it (second proposed approach), in which case the generalized version is saved;

- Repeat for every route.
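
The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: `prune_route`, `embed`, and `generalize` are hypothetical names, the toy vectors stand in for real encoder output, a score exactly equal to the threshold is treated as "keep" (the text does not specify this case), and the first example is also generalized when a generalizer is supplied.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def prune_route(
    examples: List[str],
    embed: Callable[[str], Vector],                    # hypothetical encoder wrapper
    pruning_threshold: float = 0.8,
    generalize: Optional[Callable[[str], str]] = None,  # hypothetical LLM call
) -> List[Tuple[str, Vector]]:
    """Build the router memory for one route, skipping near-duplicate examples."""
    memory: List[Tuple[str, Vector]] = []
    for text in examples:
        vec = embed(text)
        # Top-1 similarity against what is already memorized for this route;
        # None for the first example, which is therefore always saved.
        top1 = max((cosine(vec, saved) for _, saved in memory), default=None)
        if top1 is not None and top1 > pruning_threshold:
            continue  # too similar to a memorized example: do not save it
        if generalize is not None:
            # Second approach: store the generalized text and its embedding.
            text = generalize(text)
            vec = embed(text)
        memory.append((text, vec))
    return memory


# Toy data: hand-written 3-d vectors instead of real 768-d embeddings.
toy_vectors = {
    "red wine for dinner": [1.0, 0.0, 0.0],
    "a red wine for a dinner": [0.99, 0.1, 0.0],  # near-duplicate of the first
    "hello there": [0.0, 1.0, 0.0],
}
memory = prune_route(list(toy_vectors), toy_vectors.__getitem__, 0.8)
kept = [text for text, _ in memory]
print(kept)
```

In this toy run the second example is dropped because its similarity to the first is above 0.8, while the third is kept. Running `prune_route` once per route reproduces the final "repeat for every route" step.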

3. Results

3.1. The Effect of Pruning of Examples on Semantic Routing

- Aggregation function: max;

- Number of examples to retrieve: 15;

- Similarity score threshold for each route: 0.6 (a route whose aggregated similarity is lower than 0.6 is rejected). This threshold was tuned on the benchmark during our previous research [21]. Higher values would cut off more possible routes, and lower values would admit low-confidence predictions;

- Examples are provided only for valid routes: general wine questions, catalog, and small talk. The offtop route is assigned only when all valid routes are rejected;

- Examples pruning threshold: 0.8;

- LLM configuration for the generalization of examples: GPT-4o with temperature 0.0 and top_p 0.0 [27];

- Encoder model for the router: text-multilingual-embedding-002 by Google with task type RETRIEVAL_QUERY and an embedding size of 768 (task types in the Google text embedding API select a model variant optimized for a specific embedding use case) [28,29]. In our previous research, this encoder outperformed e5, OpenAI text-ada, and OpenAI text-embedding-3-small. It is a multilingual version of Google’s text-embedding-004 model, which scores 66.31 on average across all tasks of the MTEB benchmark;

- Random seed: 42;

- Encoder model for pruning: text-multilingual-embedding-002 by Google with task type SEMANTIC_SIMILARITY with an embedding size of 768;

- A full list of examples provided to the router is listed in Appendix A.
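
To make the interplay of these settings concrete, the following sketch shows how a query could be routed with top-15 retrieval, `max` aggregation, a 0.6 route threshold, and the offtop fallback. It is an illustration under assumptions rather than the implementation used in the experiments: `route_message` and the toy 3-d vectors are hypothetical, and real usage would pass embeddings produced by the encoder listed above.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def route_message(
    query_vec: Vector,
    memory: List[Tuple[str, Vector]],  # (route name, example embedding)
    top_k: int = 15,
    route_threshold: float = 0.6,
    fallback: str = "offtop",
) -> Tuple[str, Optional[float]]:
    """Return (route, aggregated similarity); the score is None on fallback."""
    # Retrieve the top_k most similar memorized examples across all routes.
    scored = sorted(
        ((cosine(query_vec, vec), name) for name, vec in memory),
        key=lambda pair: pair[0],
        reverse=True,
    )[:top_k]
    # Aggregate per route with max: a route's score is its best example.
    best: Dict[str, float] = {}
    for score, name in scored:
        best[name] = max(score, best.get(name, score))
    # Reject every route whose aggregated similarity is below the threshold.
    accepted = {name: s for name, s in best.items() if s >= route_threshold}
    if not accepted:
        return fallback, None  # all valid routes rejected
    winner = max(accepted, key=accepted.__getitem__)
    return winner, accepted[winner]


# Toy memory with hand-written vectors in place of real embeddings.
toy_memory = [
    ("catalog", [1.0, 0.0, 0.0]),
    ("smalltalk", [0.0, 1.0, 0.0]),
]
catalog_result = route_message([0.9, 0.1, 0.0], toy_memory)
offtop_result = route_message([0.2, 0.2, 1.0], toy_memory)
print(catalog_result[0], offtop_result[0])
```

Because the decision reduces to the nearest memorized examples and one threshold, the retrieved examples and their scores can be shown directly as the explanation for a routing decision.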

3.2. Jailbreak Prevention

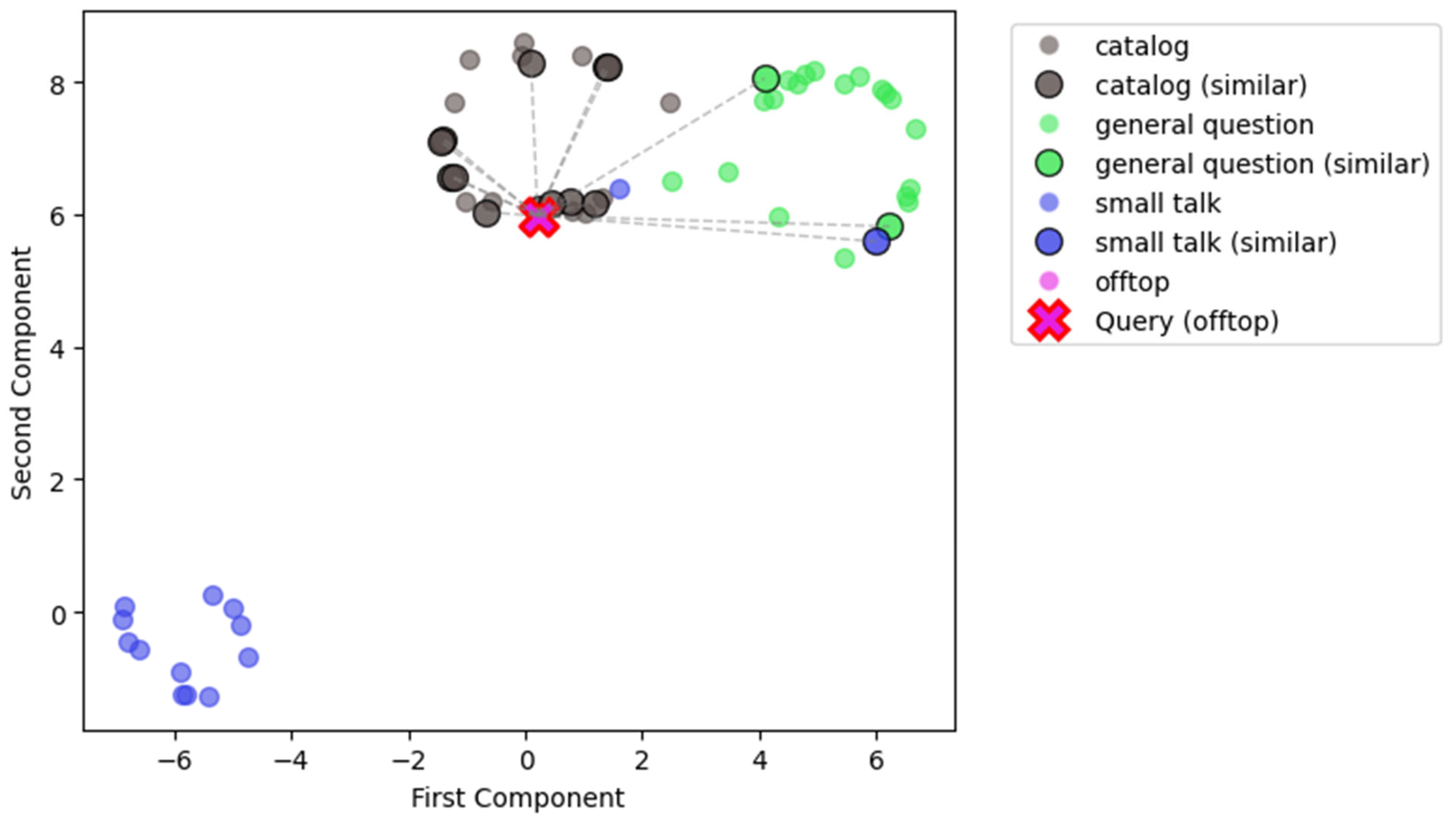

3.3. Interpretability and Controllability

- N_neighbors: 10;

- N_components: 2;

- Metric: cosine;

- Random_state: 42.
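
These parameter names match those of the `UMAP` class in the third-party `umap-learn` package. Assuming that library is the one used for the projection, the configuration could be written as below; `project_embeddings` is a hypothetical helper, and `umap` is imported lazily so that the configuration itself can be inspected without the package installed.

```python
# Projection settings as listed above.
UMAP_PARAMS = {
    "n_neighbors": 10,
    "n_components": 2,
    "metric": "cosine",
    "random_state": 42,
}


def project_embeddings(embeddings):
    """Reduce an (n_samples, n_features) embedding matrix to 2-D points.

    Assumes `umap-learn` is installed; it is imported here rather than at
    module level so the parameters can be used without it.
    """
    import umap  # third-party package: umap-learn

    reducer = umap.UMAP(**UMAP_PARAMS)
    return reducer.fit_transform(embeddings)
```

The resulting 2-D points can then be plotted with one color per route to inspect how the memorized examples and incoming queries cluster.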

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- What are the main types of wine grapes?

- Tell me about the history of wine.

- What are some popular wine regions?

- How does climate affect wine production?

- What are the characteristics of a good Merlot?

- How do you make white wine with red grapes?

- Can wine be part of a healthy diet, and if so, how?

- What are the pros and cons of drinking wine compared to other alcoholic beverages?

- How does the taste of wine vary depending on the region it comes from?

- What are tannins in wine and how do they affect the taste?

- Can you explain the concept of ‘terroir’ in winemaking?

- What is the significance of the year on a wine bottle?

- What are sulfites in wine and why are they added?

- How does the alcohol content in wine vary and what factors influence it?

- What is the difference between dry and sweet wines?

- I’ve always wondered how to properly taste wine. Could you give me some tips on how to do this?

- I’ve noticed that some wines have a higher alcohol content than others. How does this affect the taste and the potential effects of the wine?

- I’ve heard that some wines are better suited for certain seasons. Is this true and if so, which wines are best for which seasons

- I’ve always been curious about the different wine regions around the world. Could you tell me about some of the most famous ones and what makes them unique?

- I’ve heard that certain wines should be served at specific temperatures. Is this true and if so, why?

- I’ve noticed that some wines are described as ‘full-bodied’ while others are ‘light’. What do these terms mean and how do they affect the taste of the wine?

- I’ve heard that some people collect wine as an investment. Is this a good idea and if so, which wines are best for this?

- I’ve always been curious about the process of making sparkling wine. Could you explain how it differs from still wine production?

- Can you recommend a wine for a romantic dinner?

- I want to order some wine

- What’s the price of a good bottle of ?

- I need a wine suggestion for a summer picnic.

- Tell me about the wines available in your catalog.

- What wine would you suggest for a barbecue?

- I’m looking for a specific wine I had last week.

- Can you help me find a wine within a $20 budget?

- We both enjoy sweet wines. What dessert wine would you recommend for a cozy night in?

- I’m preparing a French-themed dinner. What French wine would complement the meal?

- We’re having a cheese and wine night. What wine goes well with a variety of cheeses?

- I’m planning a surprise picnic. What rosé wine would be ideal for a sunny afternoon?

- We’re having a movie night and love red wine. What bottle would you suggest?

- Hello, I’m new to the world of wine. Could you recommend a bottle around $30 that’s not too sweet and would complement a grilled shrimp dish?

- Ciao, stasera cucino un risotto ai frutti di mare. Quale vino bianco si abbina bene senza essere troppo secco?

- Hey, I’m searching for a nice red wine around $40. I usually enjoy a good Merlot, but I’m open to other options. Anything with a smooth finish and rich fruit flavors would be great! Any recommendations?

- Hi, I need a good wine pairing for a roasted turkey dinner with herbs. I usually prefer a dry white, something like a Pinot Grigio, but I’m open to other suggestions. Do you have any recommendations around $30?

- Hello, I’m looking for a robust red wine with moderate tannins to pair with a rich mushroom and truffle pasta. Ideally, something from the Tuscany region, under $70. Any suggestions?

- Hi, I need a light and refreshing wine to pair with grilled salmon and a citrus salad. Any suggestions for something not too sweet under $25?

- Hallo! Ich suche eine gute Flasche Wein für etwa 30–40 €. Normalerweise bevorzuge ich trockenere Weißweine, wie einen Chardonnay oder vielleicht einen deutschen Riesling. Haben Sie Empfehlungen?

- I’m looking for a good but affordable wine for a casual get-together. I don’t know much about wine, so any help would be appreciated.

- Which wines would you recommend for a beginner that are easy to drink and have a fruity flavor?

- Hallo, ich suche einen vollmundigen Weißwein mit moderaten Säurenoten, der zu einem cremigen Meeresfrüchte-Risotto passt. Idealerweise etwas aus der Region Burgund, unter 50 €. Haben Sie Vorschläge?

- Je prépare un curry thaï aux crevettes ce soir. Vous pensez à un blanc plus léger ? Quelque chose qui ne dominera pas les saveurs épicées. Suggestions?

- Salut, je cherche une bouteille de vin rouge pour environ 40 €. Quelque chose de facile à boire, pas trop tannique, peut-être avec des notes de fruits rouges ? Que recommandez-vous?

- Cerco un vino da utilizzare in cucina, nello specifico per fare il risotto ai frutti di mare. Eventuali suggerimenti?

- I want to buy a wine that I can age for the next 5–10 years. What would you recommend in the $50 range?

- Hey! I need a wine recommendation for a cozy night in with friends—something that pairs well with a cheese platter. Any suggestions?

- I’m making a Thai shrimp curry tonight. Thinking a lighter white? Something that won’t overpower the spicy flavors. Any suggestions?

- Is this wine vegan-friendly?

- Hello, I’m looking for a good wine to pair with a seared tuna steak and a fresh salad. I usually enjoy a crisp Pinot Grigio, but I’m open to new suggestions. Any recommendations?

- Je prépare un magret de canard rôti avec une sauce aux airelles. Je pense à un Bordeaux, mais je suis ouvert aux suggestions. Quelque chose d’équilibré et pas trop boisé. Que recommandez-vous?

- Hey! Ich suche eine gute Flasche Wein zu gegrilltem Rinderfilet. Normalerweise nehme ich kräftige Rotweine, bin aber für Vorschläge offen. Etwas Weiches, nicht zu Trockenes, um die 40–50 €? Was empfehlen Sie?

- Hi/Hello/Hallo

- Hi there, how are you?

- Thank you for your help!

- Goodbye, have a nice day!

- What can you do as an assistant?

- I’m not sure if I want to buy anything right now, but I’ll keep your site in mind for the future.

- I’m sorry, but I didn’t find what I was looking for on your site.

- I appreciate your help, but I think I’ll look elsewhere for now.

- Thanks for your time, have a great day!

- Great selection of wines you have here.

- I’m just looking around for now, but I might have some questions later.

- This site is really easy to navigate, thanks for making it user-friendly.

- I’m not sure what I’m looking for yet, but I’ll let you know if I have any questions.

- I’m impressed with the variety of wines you offer.

- What are some of the things you can help me with?

- What kind of questions can you answer?

- Can you tell me more about your capabilities?

- What kind of information can you provide about wines?

Appendix B

- Identify the main topic and specific terms that need to be retained.

- Simplify any specific examples or details, replacing them with more general equivalents.

- Maintain the original tone and language style of the message.

- Ensure the revised version retains the essence of the original message but is applicable to a broader context.

- (1) general-questions: Questions about wine, sommelier, wine grapes, wine regions, countries and their locations, development, culture or history, wine geography or history, people’s wine preferences, and characteristics of certain wines or grape varieties. Never about wineries, proposals, buying;

- (2) catalog: Inquiries about drinking, buying, tasting, recommendations, questions about specific wines from the catalog and their attributes, direct or indirect requests to find a certain kind of wine for a particular occasion, and questions about pricing and related matters;

- (3) smalltalk: General conversation like greetings, thanks, farewells, and questions about the assistant and its functionality. No wine-related discussion;

- (4) offtop: Everything else not related to the previous classes or wine in general.

References

1. Minaee, S.; Mikolov, T.; Nikzad, N.; Chenaghlu, M.; Socher, R.; Amatriain, X.; Gao, J. Large Language Models: A Survey. arXiv 2024.

2. Erdem, E.; Kuyu, M.; Yagcioglu, S.; Frank, A.; Parcalabescu, L.; Plank, B.; Babii, A.; Turuta, O.; Erdem, A.; Calixto, I.; et al. Neural Natural Language Generation: A Survey on Multilinguality, Multimodality, Controllability and Learning. J. Artif. Intell. Res. 2022, 73, 1131–1207.

3. Guo, T.; Chen, X.; Wang, Y.; Chang, R.; Pei, S.; Chawla, N.V.; Wiest, O.; Zhang, X. Large Language Model Based Multi-Agents: A Survey of Progress and Challenges. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, International Joint Conferences on Artificial Intelligence Organization, Jeju, Republic of Korea, 3–9 August 2024; pp. 8048–8057.

4. Rebedea, T.; Dinu, R.; Sreedhar, M.N.; Parisien, C.; Cohen, J. NeMo Guardrails: A Toolkit for Controllable and Safe LLM Applications with Programmable Rails. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Association for Computational Linguistics, Singapore, 6–10 December 2023; pp. 431–445.

5. Greshake, K.; Abdelnabi, S.; Mishra, S.; Endres, C.; Holz, T.; Fritz, M. Not What You’ve Signed Up For: Compromising Real-World LLM-Integrated Applications with Indirect Prompt Injection. In Proceedings of the 16th ACM Workshop on Artificial Intelligence and Security, Copenhagen, Denmark, 30 November 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 79–90.

6. Mohit, T.; Juclà, D.G. Long Text Classification Using Transformers with Paragraph Selection Strategies. In Proceedings of the Natural Legal Language Processing Workshop 2023, Association for Computational Linguistics, Singapore, 7 December 2023; pp. 17–24.

7. Padalko, H.; Chomko, V.; Yakovlev, S.; Chumachenko, D. Ensemble Machine Learning Approaches for Fake News Classification. Radioelectron. Comput. Syst. 2023, 4, 5–19.

8. Meng, X.; Yan, X.; Zhang, K.; Liu, D.; Cui, X.; Yang, Y.; Zhang, M.; Cao, C.; Wang, J.; Wang, X.; et al. The Application of Large Language Models in Medicine: A Scoping Review. iScience 2024, 27, 109713.

9. Dmytro, C. Exploring Different Approaches to Epidemic Processes Simulation: Compartmental, Machine Learning, and Agent-Based Models. In Data-Centric Business and Applications; Štarchoň, P., Fedushko, S., Gubíniová, K., Eds.; Springer Nature: Cham, Switzerland, 2024; Volume 208, pp. 27–54.

10. Hajar, H.; Abnane, I.; Idri, A. Interpretability in the Medical Field: A Systematic Mapping and Review Study. Appl. Soft Comput. 2022, 117, 108391.

11. Zhang, Y.; Tiňo, P.; Leonardis, A.; Tang, K. A Survey on Neural Network Interpretability. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 5, 726–742.

12. Howard, J.; Ruder, S. Universal Language Model Fine-tuning for Text Classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 1: Long Papers, pp. 328–339.

13. Maksymenko, D.; Saichyshyna, N.; Paprzycki, M.; Ganzha, M.; Turuta, O.; Alhasani, M. Controllability for English-Ukrainian Machine Translation by Using Style Transfer Techniques. Ann. Comput. Sci. Inf. Syst. 2023, 35, 1059–1068.

14. Nohara, Y.; Matsumoto, K.; Soejima, H.; Nakashima, N. Explanation of Machine Learning Models Using Shapley Additive Explanation and Application for Real Data in Hospital. Comput. Methods Programs Biomed. 2022, 214, 106584.

15. Parikh, S.; Tiwari, M.; Tumbade, P.; Vohra, Q. Exploring Zero and Few-Shot Techniques for Intent Classification. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 5, pp. 744–751.

16. Chen, Q.; Zhu, X.; Ling, Z.-H.; Inkpen, D.; Wei, S. Neural Natural Language Inference Models Enhanced with External Knowledge. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 1, pp. 2406–2417.

17. Wu, Z.; Wang, Y.; Ye, J.; Kong, L. Self-Adaptive In-Context Learning: An Information Compression Perspective for In-Context Example Selection and Ordering. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 1423–1436.

18. Wei, S.-L.; Wu, C.-K.; Huang, H.-H.; Chen, H.-H. Unveiling Selection Biases: Exploring Order and Token Sensitivity in Large Language Models. In Findings of the Association for Computational Linguistics ACL 2024; Association for Computational Linguistics: Bangkok, Thailand, 2024; pp. 5598–5621.

19. Wang, P.; Li, L.; Chen, L.; Cai, Z.; Zhu, D.; Lin, B.; Cao, Y.; Kong, L.; Liu, Q.; Liu, T.; et al. Large Language Models Are Not Fair Evaluators. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024; Volume 1, pp. 9440–9450.

20. Singh, C.; Inala, J.P.; Galley, M.; Caruana, R.; Gao, J. Rethinking Interpretability in the Era of Large Language Models. arXiv 2024.

21. Maksymenko, D.; Kryvoshein, D.; Turuta, O.; Kazakov, D.; Turuta, O. Benchmarking Conversation Routing in Chatbot Systems Based on Large Language Models. In Proceedings of the 4th International Workshop of IT-professionals on Artificial Intelligence (ProfIT AI 2024), Cambridge, MA, USA, 25–27 September 2024; Volume 3777, pp. 75–86. Available online: https://ceur-ws.org/Vol-3777/paper6.pdf (accessed on 15 October 2024).

22. Manias, D.M.; Chouman, A.; Shami, A. Semantic Routing for Enhanced Performance of LLM-Assisted Intent-Based 5G Core Network Management and Orchestration. arXiv 2024.

23. Wei, A.; Haghtalab, N.; Steinhardt, J. Jailbroken: How Does LLM Safety Training Fail? arXiv 2023.

24. Gemini Team; Georgiev, P.; Lei, V.I.; Burnell, R.; Bai, L.; Gulati, A.; Tanzer, G.; Vincent, D.; Pan, Z.; Wang, S.; et al. Gemini 1.5: Unlocking Multimodal Understanding across Millions of Tokens of Context. arXiv 2024, arXiv:2403.05530.

25. Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. SQuAD: 100,000+ Questions for Machine Comprehension of Text. arXiv 2016.

26. Luo, W.; Ma, S.; Liu, X.; Guo, X.; Xiao, C. JailBreakV: A Benchmark for Assessing the Robustness of MultiModal Large Language Models against Jailbreak Attacks. arXiv 2024.

27. OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2023.

28. Lee, J.; Dai, Z.; Ren, X.; Chen, B.; Cer, D.; Cole, J.R.; Hui, K.; Boratko, M.; Kapadia, R.; Ding, W.; et al. Gecko: Versatile Text Embeddings Distilled from Large Language Models. arXiv 2024.

29. Choose an Embeddings Task Type|Generative AI on Vertex AI. Google Cloud. Available online: https://cloud.google.com/vertex-ai/generative-ai/docs/embeddings/task-types (accessed on 22 October 2024).

30. Li, Z.; Zhu, H.; Lu, Z.; Yin, M. Synthetic Data Generation with Large Language Models for Text Classification: Potential and Limitations. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Association for Computational Linguistics, Singapore, 6–10 December 2023; pp. 10443–10461.

31. Molino, P.; Wang, Y.; Zhang, J. Parallax: Visualizing and Understanding the Semantics of Embedding Spaces via Algebraic Formulae. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Florence, Italy, 28 July–2 August 2019; pp. 165–180.

32. McInnes, L.; Healy, J.; Melville, J. UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction. arXiv 2018.

33. Saichyshyna, N.; Maksymenko, D.; Turuta, O.; Yerokhin, A.; Babii, A.; Turuta, O. Extension Multi30K: Multimodal Dataset for Integrated Vision and Language Research in Ukrainian. In Proceedings of the Second Ukrainian Natural Language Processing Workshop (UNLP), Association for Computational Linguistics, Dubrovnik, Croatia, 5 May 2023; pp. 54–61.

| Route | Original Examples | Synthetic | Scraped | SQuAD | Total |
|---|---|---|---|---|---|
| General wine questions | 30 | 283 | 618 | 0 | 931 |
| Catalog | 82 | 675 | 0 | 0 | 757 |
| Small talk | 7 | 297 | 0 | 0 | 304 |
| Offtop | 0 | 0 | 0 | 884 | 884 |
| Total | 122 | 1255 | 618 | 884 | 2876 |

| Route | English | Each of German/French/Italian/Ukrainian |
|---|---|---|
| General wine questions | 468 | 116 |
| Catalog | 442 | 78 |
| Small talk | 172 | 33 |
| Offtop | 500 | 96 |
| Total | 1584 | 323 |

| Language | Character Count | Word Count | Average Word Count per Text | Average Word Length |
|---|---|---|---|---|
| English | 189,677 | 38,483 | 24.29 | 4.05 |
| German | 31,338 | 5519 | 17.08 | 4.81 |
| French | 33,199 | 5836 | 18.07 | 4.41 |
| Italian | 31,245 | 5867 | 18.16 | 4.45 |
| Ukrainian | 27,847 | 5154 | 16.00 | 4.56 |

| Router Configuration | Memorized Examples | Accuracy | General Wine Questions F1 | Catalog F1 | Small Talk F1 | Offtop F1 |
|---|---|---|---|---|---|---|
| No pruning | 72 | 0.84 | 0.79 | 0.86 | 0.70 | 0.90 |
| Pruning (0.8) | 46 | 0.84 | 0.80 | 0.88 | 0.68 | 0.87 |
| Pruning (0.8) + generalization | 49 | 0.81 | 0.76 | 0.84 | 0.63 | 0.87 |
| XLM-R finetuned with 60% of the dataset | - | 0.97 | 0.95 | 0.97 | 0.94 | 0.98 |

| Router Configuration | Memorized Examples | Accuracy | General Wine Questions F1 | Catalog F1 | Small Talk F1 | Offtop F1 |
|---|---|---|---|---|---|---|
| No pruning | 72 | 0.85 | 0.80 | 0.86 | 0.73 | 0.90 |
| Pruning (0.8) | 46 | 0.85 | 0.81 | 0.89 | 0.71 | 0.88 |
| Pruning (0.8) + generalization | 49 | 0.82 | 0.76 | 0.85 | 0.66 | 0.88 |
| GPT 4o LLM in-context learning router | - | 0.91 | 0.90 | 0.94 | 0.82 | 0.93 |

| Router Configuration | The Original Number of Examples | 0.7 Threshold | 0.75 Threshold | 0.8 Threshold | 0.85 Threshold | 0.9 Threshold |
|---|---|---|---|---|---|---|
| General wine questions | 23 | 3 | 8 | 16 | 21 | 23 |
| Catalog | 32 | 2 | 10 | 16 | 25 | 32 |
| Small talk | 17 | 7 | 10 | 14 | 15 | 16 |
| Total | 72 | 12 | 28 | 46 | 61 | 71 |

| Pruning Threshold | Memorized Examples | Accuracy | General Wine Questions F1 | Catalog F1 | Small Talk F1 | Offtop F1 |
|---|---|---|---|---|---|---|
| 0.70 | 12 | 0.39 | 0.00 | 0.00 | 0.45 | 0.60 |
| 0.75 | 28 | 0.80 | 0.71 | 0.85 | 0.70 | 0.84 |
| 0.80 | 46 | 0.84 | 0.80 | 0.88 | 0.68 | 0.87 |
| 0.85 | 61 | 0.85 | 0.84 | 0.88 | 0.69 | 0.89 |
| 0.90 | 71 | 0.83 | 0.79 | 0.85 | 0.68 | 0.90 |
| No pruning | 72 | 0.84 | 0.79 | 0.86 | 0.70 | 0.90 |

| Pruning Threshold | Memorized Examples | Accuracy | General Wine Questions F1 | Catalog F1 | Small Talk F1 | Offtop F1 |
|---|---|---|---|---|---|---|
| 0.80 | 46 | 0.85 | 0.81 | 0.89 | 0.71 | 0.88 |
| 0.85 | 61 | 0.86 | 0.84 | 0.89 | 0.73 | 0.89 |
| 0.90 | 71 | 0.84 | 0.80 | 0.86 | 0.71 | 0.90 |
| No pruning | 72 | 0.85 | 0.80 | 0.86 | 0.73 | 0.90 |

| Router Configuration | Memorized Examples | Accuracy |
|---|---|---|
| No pruning | 72 | 0.97 |
| Pruning (0.8) | 46 | 0.97 |
| Pruning (0.8) + generalization | 49 | 0.97 |
| XLM-R finetuned with 60% of the dataset | - | 0.63 |

| Router Configuration | Memorized Examples | Accuracy |
|---|---|---|
| No pruning | 72 | 0.97 |
| Pruning (0.8) | 46 | 0.97 |
| Pruning (0.8) + generalization | 49 | 0.97 |
| XLM-R finetuned with 60% of the dataset | - | 0.63 |

| Router Configuration | Memorized Examples | Accuracy |
|---|---|---|
| No pruning | 72 | 0.32 |
| Pruning (0.8) | 46 | 0.32 |
| Pruning (0.8) + generalization | 49 | 0.22 |
| XLM-R finetuned with 60% of the dataset | - | 0.02 |

| Text | Type | Similarity |
|---|---|---|
| Ciao, stasera cucino un risotto ai frutti di mare. Quale vino bianco si abbina bene senza essere troppo secco? | catalog | 0.76 |
| Can you recommend a wine for a romantic dinner? | catalog | 0.76 |
| Hello, I’m looking for a robust red wine with moderate tannins to pair with a rich mushroom and truffle pasta. Ideally, something from the Tuscany region, under $70. Any suggestions? | catalog | 0.74 |
| Hey, I’m searching for a nice red wine around $40. I usually enjoy a good Merlot, but I’m open to other options. Anything with a smooth finish and rich fruit flavors would be great! Any recommendations? | catalog | 0.74 |
| I’m preparing a French-themed dinner. What French wine would complement the meal? | catalog | 0.73 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Maksymenko, D.; Turuta, O. Interpretable Conversation Routing via the Latent Embeddings Approach. Computation 2024, 12, 237. https://doi.org/10.3390/computation12120237
