1. Introduction

The numerical solution of tridiagonal systems is a crucial task in scientific computing, with applications in wavelets [1,2,3], spline interpolation [2], and numerical simulations of partial differential equations, such as heat transfer problems [4], convection–diffusion phenomena [5,6], and computational fluid dynamics [7,8], among others. Traditionally, the approximation schemes used in the finite difference method (FDM) and the finite volume method (FVM) are explicit schemes, which are easy to parallelize by padding the actual computational domain with ghost cells. These ghost cells act as surrogates for the true data, which are being solved on other processors. However, explicit schemes often exhibit relatively poor resolving power compared to implicit schemes. Lele [5] proposed using compact schemes to approximate the first and second derivatives. He showed that, in broad-spectrum problems whose physics spans a vastly different range of length scales, a compact scheme is superior to an explicit scheme of the same order of accuracy. For example, instead of computing the second derivative through an explicit scheme, he suggested compact higher-order schemes such as

which is considerably more efficient than an explicit scheme with the same convergence rate. The parameters in Equation (1) are its approximation coefficients. Compact schemes have far-reaching implications and serve as a foundation for diverse algorithms in various fields, including the Navier–Stokes equations [9,10,11], magnetohydrodynamics [12], acoustics [13,14], and chemically reacting flows [15], as well as emerging discretization approaches such as the finite surface discretization [8,16,17] and the multi-moment method [6].
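As a concrete instance of such a scheme (not necessarily the exact form of Equation (1)), the classical fourth-order Padé second derivative uses the tridiagonal stencil (1/10, 1, 1/10) on the left-hand side and 6/5 times the standard three-point difference on the right-hand side. The sketch below assumes a periodic grid and uses a dense NumPy solve for brevity; `compact_d2_periodic` and `max_error` are hypothetical names. It checks the expected fourth-order convergence on sin x. Note that the left-hand matrix has a diagonal dominance of 1/(2 × 1/10) = 5, the value quoted later for fourth-order second-derivative schemes.

```python
import numpy as np

def compact_d2_periodic(f, h, alpha=0.1, a=1.2):
    """Fourth-order compact (Pade) second derivative on a periodic grid.

    Solves alpha*d[i-1] + d[i] + alpha*d[i+1] = a*(f[i+1] - 2*f[i] + f[i-1]) / h**2
    for d, with indices wrapping around (periodic boundaries).
    """
    n = f.size
    idx = np.arange(n)
    A = np.eye(n)
    A[idx, (idx - 1) % n] = alpha  # sub-diagonal (row 0 wraps to the last column)
    A[idx, (idx + 1) % n] = alpha  # super-diagonal (last row wraps to column 0)
    rhs = a * (np.roll(f, -1) - 2.0 * f + np.roll(f, 1)) / h**2
    return np.linalg.solve(A, rhs)  # dense solve: fine for a demo, O(n^3) in general

def max_error(n):
    """Maximum error of the compact second derivative of sin(x) on n periodic points."""
    h = 2.0 * np.pi / n
    x = h * np.arange(n)
    d2 = compact_d2_periodic(np.sin(x), h)
    return np.max(np.abs(d2 + np.sin(x)))  # exact second derivative is -sin(x)

e32, e64 = max_error(32), max_error(64)
print(f"n=32: {e32:.2e}, n=64: {e64:.2e}, ratio {e32 / e64:.1f}")  # ratio close to 16
```

Halving the grid spacing reduces the error by roughly a factor of 2^4 = 16, as expected for a fourth-order scheme.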

When numerical grids sufficiently capture the underlying phenomenon, high-order numerical methods can yield remarkable efficiency. Hokpunna et al. [7] demonstrated that a compact fourth-order scheme outperforms the classical second-order finite volume method (FVM) by a factor of 10. The efficiency improves further with the sixth-order compact finite surface method [8], which is 2.7 times more efficient than the compact fourth-order FVM. Thus, integrating compact schemes into physical simulations significantly accelerates scientific research. However, the scheme above requires an efficient method for solving diagonally dominant tridiagonal systems in parallel. The implicitness that enhances the resolving power of the approximation introduces a directional dependency (in this case, in i). The simplest problem configuration is illustrated in Figure 1. Efficient parallelization of the solution process in this context is not straightforward.

Applications in the areas mentioned above often require transient solutions on an extensive number of grid points. This scale remains challenging even for today's massively parallel computer systems. For instance, isotropic turbulence simulations conducted on the Earth Simulator to explore turbulent flow physics involved up to 68 billion grid points [18,19,20]. Applying a compact scheme in this case involves solving tridiagonal systems of size 4096 with a substantial number of right-hand sides (rhs), amounting to $4096^2 \approx 1.7 \times 10^7$. Similarly, in wall-bounded flows, Lee and Moser [21] used 121 billion grid points for a single problem. The computational power required for these problems far exceeds the capabilities of shared-memory computers, so distributed-memory computers are needed. Despite the remarkable scale of these simulations, the Reynolds numbers in these cases are still far smaller than those encountered in industrial applications. Therefore, efficient parallel algorithms for solving such systems are in demand.

Consider the cooling of a metal slab that was initially heated to the temperature distribution

i.e.,

, with the following governing equation:

where

is the Péclet number, and the boundary condition is homogeneous. We can evolve the temperature in time by solving

The spatial approximation from the compact scheme (Equation (1)) can be used to obtain the three second derivatives in this equation. Since the compact scheme is implicit in only one direction, the above setting yields three independent linear systems. If an explicit time integration is used for Equation (3), each second derivative of the Laplacian must be evaluated and then summed to compute the time integral. The linear system of equations (LSE) at each time step is

where

and

are the augmented unknown and right-hand-side vectors, respectively. Each unknown vector

is the second derivative containing

members.
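Because the compact scheme couples unknowns along only one direction, each of the three second derivatives amounts to many independent tridiagonal systems, one per grid line, all sharing the same matrix. A minimal sketch of this multiple-rhs structure, assuming constant coefficients and no special boundary closures, is the Thomas algorithm vectorized over all grid lines along one axis. The helper names `thomas_batched` and `solve_along_axis` are hypothetical, and the coefficients (0.1, 1, 0.1) are merely illustrative.

```python
import numpy as np

def thomas_batched(lower, diag, upper, rhs):
    """Solve one constant-coefficient tridiagonal system for many right-hand sides.

    rhs has shape (n, ...); every trailing index is an independent right-hand side.
    lower, diag, upper are scalars (a Toeplitz matrix) to keep the sketch short.
    """
    n = rhs.shape[0]
    cp = np.empty(n)                         # modified super-diagonal (shared by all rhs)
    dp = np.empty_like(rhs, dtype=float)     # modified right-hand sides
    cp[0] = upper / diag
    dp[0] = rhs[0] / diag
    for i in range(1, n):                    # forward elimination
        denom = diag - lower * cp[i - 1]
        cp[i] = upper / denom
        dp[i] = (rhs[i] - lower * dp[i - 1]) / denom
    x = np.empty_like(dp)
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):           # backward substitution
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x

def solve_along_axis(lower, diag, upper, rhs, axis):
    """Apply the tridiagonal solve along one axis of a multi-dimensional array."""
    moved = np.moveaxis(rhs, axis, 0)
    return np.moveaxis(thomas_batched(lower, diag, upper, moved), 0, axis)

rng = np.random.default_rng(0)
rhs = rng.standard_normal((8, 5, 6))                 # a small 3-D field
x = solve_along_axis(0.1, 1.0, 0.1, rhs, axis=1)     # 8 * 6 = 48 systems of size 5
```

The elimination coefficients `cp` depend only on the matrix, so they are computed once and reused for every right-hand side; this is what makes multiple-rhs problems cheap per system.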

Let us consider the simple setting depicted in Figure 1. Here, the domain is divided and distributed to two processors,

and

. The two domains are separated at the midplane in the k direction. It is not straightforward to solve the LSE for

because the right-hand sides are located on different processors. In this setting, the linear system for

and

can be solved without difficulty because the required data reside on the same processor. In a large-scale simulation, there will be multiple cuts in all three directions, and the missing dependencies across the interfaces have to be satisfied by some means.

Clearly, the approximations along the individual tuples

normal to the

-plane are independent of one another. One can therefore transfer half of the missing data to one processor, solve the systems there, and then transfer the solutions back. This approach is called the transpose algorithm [22]. It achieves the minimum number of floating point operations, but it has to relocate a large amount of data. The minimum data transfer of this algorithm is

. This approach is therefore not suitable for massively parallel computers, in which floating point operations are much faster than interprocessor communication. Another approach that achieves both the minimum number of floating point operations and the minimum amount of data transfer is the pipelined algorithm [23]. In this approach, the tuples are solved successively as soon as their dependencies are satisfied. For example, processor

can start the forward elimination first on the

tuple up to the mth row and then send the result to

. Once

obtains the data, it can start the forward elimination on that tuple, and

can work on the forward elimination of the next tuple. The simplest pipelined algorithm will send a real number

times per interface. The performance of this algorithm depends heavily on the latency and availability of the communication system.
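The pipelined forward elimination can be pictured as a schedule in which processor k works on tuple t at stage t + k, because tuple t becomes available on processor k only after processor k - 1 has eliminated it and sent one real number across their interface. The sketch below (hypothetical function `pipeline_schedule`; one message is assumed to take one stage) models only this ordering, not the arithmetic, and makes the p - 1 fill stages visible, during which some processors idle.

```python
from collections import defaultdict

def pipeline_schedule(num_tuples, num_procs):
    """Map each stage to the (processor, tuple) pairs active in the forward sweep.

    Processor k can start tuple t only after processor k-1 has eliminated it
    and sent one real number across their interface, so it runs at stage t + k.
    """
    schedule = defaultdict(list)
    for t in range(num_tuples):
        for k in range(num_procs):
            schedule[t + k].append((k, t))
    return dict(schedule)

sched = pipeline_schedule(num_tuples=4, num_procs=3)
for stage in sorted(sched):
    print(stage, sched[stage])
# 4 + 3 - 1 = 6 stages; each interface carries one message per tuple (4 in total)
```

Every stage boundary is a synchronization point between neighbours, which is why the performance of this algorithm depends so strongly on communication latency.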

When designing an algorithm, one should be aware of the disproportion between data transfer and computing power. Take the AMD EPYC 7763 processor as an example: it has a memory bandwidth (

) of 205 GB/s, while its theoretical peak performance reaches 3580 giga floating point operations per second (GFLOPS). This peak is based on the fused multiply-add (FMA) operation, which involves four operands per calculation. For the processor to work at the peak rate, it would need to obtain data at 114,560 GB/s (for double-precision data). Let us call this the transfer rate

. The ratio

/

is about 560. This disparity requires algorithms to excel in communication efficiency. In parallel computing, the situation becomes even more difficult because of the interconnection between computing nodes. A dual-socket computing node with this setting, linked to other nodes by a network bandwidth

= 80 GB/s, would have the ratio

/

= 2200. For a GPU cluster with the recent NVIDIA H100, the ratio

/

is even higher, at 16,320, and this imbalance keeps growing. This disparity between computational power and the ability to communicate between computing units renders fine-grained parallel algorithms unsuitable for current massively parallel computer systems. Coarse-grained parallelism, such as that in [24,25,26,27,28,29], is thus preferable. These coarse-grained algorithms send data less frequently but in larger packages. Lawrie and Sameh [30], Bondeli [26], and Sun [27] developed algorithms specialized for diagonally dominant tridiagonal systems. The reduced parallel diagonal dominant (RPDD) algorithm in [27] can use p processors to solve a tridiagonal system of n equations with

right-hand sides with a complexity of

operations (with a small number J described later). This algorithm is very efficient for this problem.
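The memory-side ratio quoted above for the AMD EPYC 7763 follows from simple arithmetic: 3580 GFLOPS, four double-precision operands of 8 bytes each per FMA (as counted in the text), and 205 GB/s of memory bandwidth. The short check below reproduces it; the variable names are ours.

```python
peak_gflops = 3580.0        # theoretical peak, GFLOPS
operands_per_op = 4         # operands per FMA, as counted in the text
bytes_per_operand = 8       # double precision
mem_bandwidth_gbs = 205.0   # memory bandwidth, GB/s

required_gbs = peak_gflops * operands_per_op * bytes_per_operand
ratio = required_gbs / mem_bandwidth_gbs
print(f"required transfer rate: {required_gbs:,.0f} GB/s")  # 114,560 GB/s
print(f"ratio to memory bandwidth: {ratio:.0f}")             # 559, i.e., about 560
```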

In this paper, we present a new interface-splitting algorithm (ITS) for solving diagonally dominant tridiagonal linear systems of size n with

right-hand sides on p processors. The algorithm has a complexity of

. The idea is to reduce communication, truncate the data dependency, and take full advantage of bidirectional communication. The proposed method exploits the exponential decay of the inverse of diagonally dominant matrices, as explained in [31]. The proposed scheme is competitive and applicable to non-Toeplitz as well as periodic systems and non-uniform meshes. It has lower complexity than the algorithm presented in [27], requires one fewer synchronization step, and potentially halves the cost of data transfer. Therefore, the proposed algorithm is less sensitive to load imbalance and network congestion.

This paper is organized as follows. First, we present the interface-splitting algorithm and then discuss its complexity and accuracy. The accuracy and performance of the algorithm are evaluated on four different systems: a single-node Intel Xeon 8168, an SGI ALTIX 4700, an IBM BladeCenter HS21XM, and an NEC HPC 144Rb-1. The performance of the proposed algorithm is compared with that of the ScaLAPACK and RPDD algorithms. After that, the application to the Navier–Stokes equations is presented, followed by the conclusions and remarks.

2. Interface-Splitting Algorithm

2.1. Concept

The decomposition of the domain onto different processors leads to the problem illustrated in Figure 1. The second processor cannot start the forward elimination because the interface value (

) is not known. However, if both processors have already computed the central row of

, they can both compute

after exchanging a single real number. In this way, both processors can continue to solve their systems without further communication. It is well known that the inverse of a diagonally dominant tridiagonal matrix decays away from the diagonal along both rows and columns. Bounds on the minimum decay rate for non-symmetric matrices have been developed in [32] and [31]. Thus, the interface value can be computed from a row of the inverse of a smaller matrix, e.g.,

by computing

This truncation introduces an error into the solution. However, a system originating from a simulation represents the true values only to a certain level of accuracy. For example, the compact scheme in Equation (1) differs from the true second derivative by the local truncation error (LTE). Errors much smaller than the LTE are not observable. In most applications of the compact scheme above, for instance, the LTE should be larger than 1

. When such a system is solved by a direct method in double precision, the error due to the solution process should be on the order of 1

. Using quadruple precision can reduce the solution error to about 1

, yet the error of the differentiation would still be 1

.
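The decay argument can be checked numerically. The sketch below assumes the Toeplitz matrix with stencil (0.1, 1, 0.1) (diagonal dominance 5) and a random right-hand side. It obtains one row of the inverse by solving with the transposed matrix, so the inverse itself is never formed, and compares the truncated scalar product over b[g-J+1], ..., b[g+J] with the exact interface value. Sizes, seed, and names are illustrative assumptions, not the test setup of this paper.

```python
import numpy as np

n, g = 200, 99                      # system size and an interior "interface" row
A = np.eye(n) + 0.1 * (np.eye(n, k=1) + np.eye(n, k=-1))
rng = np.random.default_rng(1)
b = rng.standard_normal(n)
x_exact = np.linalg.solve(A, b)

# Row g of A^{-1}: solve A^T r = e_g instead of forming the inverse.
e_g = np.zeros(n)
e_g[g] = 1.0
row = np.linalg.solve(A.T, e_g)
print(np.abs(row[g:g + 6]))  # magnitudes drop by roughly a factor of 10 per index

def truncated_value(J):
    """Interface value from the 2J terms b[g-J+1], ..., b[g+J]."""
    window = slice(g - J + 1, g + J + 1)
    return row[window] @ b[window]

errors = {J: abs(truncated_value(J) - x_exact[g]) for J in (2, 4, 8, 12)}
print(errors)
```

Each additional retained term on both sides buys about one more correct digit here, which is why a small J already reaches errors far below typical local truncation errors.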

Next, we explain the algorithm in detail, including the strategy for reducing the number of operations and controlling the truncation errors.

2.2. The Parallel Interface-Splitting Algorithm

For simplicity of notation, let us consider a tridiagonal system of size n with a single right-hand side,

where

is a strictly diagonally dominant matrix,

,

, and

= 0. In order to solve this system in parallel, one can decompose the matrix using

and solve the original system in two steps:

The first equation computes the solution at the interfaces using locally stored coefficients. This factorization can be considered a preprocessing scheme in which the right-hand side is modified such that solving the simpler block matrices delivers the desired result. The partitioning algorithm [24] and the parallel line solver [33] belong to this class.

Suppose that is a tridiagonal matrix of size , where p is the number of processors and m is the size of each subsystem. The kth processor holds the right-hand side , . It suffices to consider one right-hand side here; the extension to multiple right-hand sides is straightforward.

Let

be the kth block subdiagonal matrix of

, that is,

and

is the matrix

, except that its last row is replaced by the corresponding row of the identity matrix:

Instead of using the block diagonal matrix of

as an independent subsystem as in the alternative algorithms, the interface-splitting algorithm builds the matrix

up from the special block matrix

:

The neighboring subsystems (kth and (k+1)th) are separated by the kth interface, which is the last row of the kth subsystem. Note that if we use as an independent subsystem, the algorithm becomes the transposed version of the PDD algorithm.

The algorithm corresponding to the selected decomposition is given by

where

is the vector whose

th component is one and all other components are zero. The vector

is given by

,

, with

, which is the result of the decomposition. For the system in Figure 1, we solve Equation (7) by setting

This means that the vector can be obtained by modifying b only at its top and bottom elements ( and ).
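To see why only the top and bottom elements of b need to change, the sketch below takes exact interface values from a global solve, replaces the last row of each block by an identity row carrying its interface value, moves the coupling to the previous interface into the first right-hand-side entry, and recovers the global solution block by block. Coefficients are random but strictly diagonally dominant and non-Toeplitz; the helper names `tridiag` and `solve_blocks` are hypothetical, and dense local solves are used for brevity.

```python
import numpy as np

def tridiag(lower, diag, upper):
    """Dense tridiagonal matrix; lower[i] multiplies x[i-1], upper[i] multiplies x[i+1]."""
    return np.diag(diag) + np.diag(lower[1:], -1) + np.diag(upper[:-1], 1)

def solve_blocks(lower, diag, upper, b, m, x_if):
    """Solve each block of size m given the interface values x_if[g], g = (k+1)*m - 1."""
    n = b.size
    p = n // m
    x = np.empty(n)
    for k in range(p):
        s, e = k * m, (k + 1) * m
        A_loc = tridiag(lower[s:e], diag[s:e], upper[s:e])
        rhs = b[s:e].copy()
        if k > 0:                      # top: coupling to the previous interface
            rhs[0] -= lower[s] * x_if[s - 1]
        if k < p - 1:                  # bottom: last row becomes an identity row
            A_loc[-1, :] = 0.0
            A_loc[-1, -1] = 1.0
            rhs[-1] = x_if[e - 1]
        x[s:e] = np.linalg.solve(A_loc, rhs)
    return x

rng = np.random.default_rng(2)
p, m = 4, 10
n = p * m
lower, upper = rng.uniform(0.05, 0.15, n), rng.uniform(0.05, 0.15, n)
diag = np.ones(n)
b = rng.standard_normal(n)
x_ref = np.linalg.solve(tridiag(lower, diag, upper), b)
x_if = {(k + 1) * m - 1: x_ref[(k + 1) * m - 1] for k in range(p - 1)}
x_blk = solve_blocks(lower, diag, upper, b, m, x_if)
print(np.max(np.abs(x_blk - x_ref)))  # at round-off level
```

Once the interface values are known, the blocks are fully decoupled; the remaining question, addressed next, is how to obtain those values cheaply.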

If we regard the vector as spikes similar to those in the SPIKE algorithm, our spikes point horizontally away from , unlike the spikes of the RPDD and SPIKE algorithms, which are vertical (column) spikes.

2.3. Cost Savings

The efficiency of the proposed algorithm rests on the decay of the matrix

. By exploiting this decay, we truncate the scalar product

to 2J terms. This truncation introduces an approximation to

, which is then transferred to

and propagates to the solutions in the inner domain. Letting

, the kth subsystem of

then takes the following form:

The components of the vector

are zero except for the first and the last components, which are given by

and

. The vector

represents the errors in the solution due to the approximation at the top. It should be kept in mind that the elements of

are non-zero only in the first and the last components. The interface-splitting algorithm neglects the error term

and solves the following system:

As mentioned earlier, it is not necessary to find the actual inverse of the matrix; it is sufficient to compute from , which avoids the matrix inversion. Further savings can be achieved by reducing the matrix to a submatrix enclosing the interface.

To summarize, the ITS algorithm consists of seven steps. Letting J and L be small positive integers, the interface-splitting algorithm is defined as follows (Algorithm 1):

Algorithm 1. The interface-splitting algorithm.

1: On (), allocate , , and .
2: On (),
   1. allocate submatrix , , where ;
   2. allocate , , where is the ith element of ;
   3. solve (Equation (11));
   4. allocate , ;
   5. allocate , and send it to .
3: On (),
   1. receive from and store it in .
4: Compute parts of the solution at the interface.
   1. On (), ;
   2. On (), ,
   where and are the first and the last J elements of , respectively.
5: Communicate the results.
   1. On (), send to and receive from it.
   2. On (), send to and receive from it.
6: Modify the rhs.
   1. On (), ;
   2. On (), .
   Note: is stored in place of .
7: On , solve (Equation (14)).

In this algorithm, J is the number of terms per side at which we truncate the scalar product. The interface-splitting algorithm is equivalent to a direct method up to machine accuracy (ϵ) if decays below ϵ within this truncation length, i.e., for .

Suppose that J has been chosen such that the omitted terms in the scalar product are not larger than ; then we do not need to compute to full precision. To maintain the accuracy of the smallest terms retained in the scalar product, we need to solve a larger system containing the th row. In the second step, we choose , whose dimension is , with . The choice of L and its effect on the accuracy of will be explained later.

One of the most important features of the ITS algorithm (Algorithm 1) is its communication step (step 5). Instead of using one-way communication in two sequential steps, as in the RPDD algorithm, the ITS algorithm communicates in a single step, in which the exchanges with the two nearest neighbors are allowed to overlap. Therefore, the ITS algorithm can reduce the cost of communication by up to one half, depending on the actual capability of the interconnection links and the network topology.

The error of the interface-splitting algorithm consists of the approximation error of and the propagation of this error into the solutions in the inner domain. The factors determining the accuracy of this approximation are: (i) the row diagonal dominance factor and (ii) the matrix bandwidth used to compute . The complexity and the effect of on L and J are described in the next section.
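Putting the steps of Algorithm 1 together, the serial emulation below sketches the interface-splitting idea under stated assumptions. The p processors are loop iterations; the row of the inverse is taken from a local submatrix of size 2(J + L) centred on each interface (our illustrative choice for the enlarged system of step 2); each side forms its half of the 2J-term scalar product from its own right-hand side; and the two halves are simply added where a real code would exchange them with its neighbour. It is a sketch, not the authors' implementation, and all names are hypothetical.

```python
import numpy as np

def tridiag(lower, diag, upper):
    """Dense tridiagonal matrix; lower[i] multiplies x[i-1], upper[i] multiplies x[i+1]."""
    return np.diag(diag) + np.diag(lower[1:], -1) + np.diag(upper[:-1], 1)

def its_solve(lower, diag, upper, b, m, J, L):
    """Serial emulation of the interface-splitting algorithm with p = n // m blocks."""
    n = b.size
    p = n // m
    assert p * m == n and J + L <= m, "sketch assumes equal blocks and J + L <= m"
    x_if = {}
    for k in range(p - 1):
        g = (k + 1) * m - 1                      # interface = last row of block k
        lo, hi = g - (J + L) + 1, g + (J + L) + 1
        A_sub = tridiag(lower[lo:hi], diag[lo:hi], upper[lo:hi])
        e_g = np.zeros(hi - lo)
        e_g[g - lo] = 1.0
        row = np.linalg.solve(A_sub.T, e_g)      # approximate row g of A^{-1}
        # Partial sums: block k owns b[g-J+1..g], block k+1 owns b[g+1..g+J].
        part_k = row[g - J + 1 - lo:g + 1 - lo] @ b[g - J + 1:g + 1]
        part_k1 = row[g + 1 - lo:g + J + 1 - lo] @ b[g + 1:g + J + 1]
        x_if[g] = part_k + part_k1               # stands in for one bidirectional exchange
    # Modify the rhs at the block ends and solve each block independently.
    x = np.empty(n)
    for k in range(p):
        s, e = k * m, (k + 1) * m
        A_loc = tridiag(lower[s:e], diag[s:e], upper[s:e])
        rhs = b[s:e].copy()
        if k > 0:
            rhs[0] -= lower[s] * x_if[s - 1]
        if k < p - 1:
            A_loc[-1, :] = 0.0
            A_loc[-1, -1] = 1.0
            rhs[-1] = x_if[e - 1]
        x[s:e] = np.linalg.solve(A_loc, rhs)
    return x

rng = np.random.default_rng(3)
p, m = 4, 40
n = p * m
lower, upper = rng.uniform(0.05, 0.15, n), rng.uniform(0.05, 0.15, n)
diag = np.ones(n)
b = rng.standard_normal(n)
x_ref = np.linalg.solve(tridiag(lower, diag, upper), b)
err = {(J, L): np.max(np.abs(its_solve(lower, diag, upper, b, m, J, L) - x_ref))
       for (J, L) in [(2, 2), (6, 2), (12, 4)]}
print(err)  # the maximum error shrinks rapidly as J grows
```

Only 2J right-hand-side values per interface enter the scalar product, and each processor touches only its own J of them, so the communication per interface is one partial sum per right-hand side in each direction.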

2.4. Comparison to Other Approaches

Our approach exploits the decay of the LU decomposition of the system. This property has already been exploited many times in the literature; the low complexity of the RPDD algorithm is achieved by the same means. Apart from RPDD [27] and the truncated SPIKE algorithm [32], in which the amount of data transfer is optimal, McNally, Garey, and Shaw [34] developed a communication-less algorithm. In this approach, each processor

holding the subsystem

solves a larger system enveloping this subsystem. This new system has to be large enough that the errors propagated into the desired solution

due to the truncation of the system can be neglected. It can be shown that, for the same level of accuracy, this approach requires J times more data transfer than the proposed algorithm. This high communication overhead and the increased subsystem size make this algorithm non-competitive for multiple-rhs problems. A similar concept has been applied to compact finite differences by Sengupta, Dipankar, and Rao [35]. However, their algorithm requires a symmetrization that doubles the number of floating point operations. It should be noted that the current truncated SPIKE implementation also uses bidirectional communication; however, this improvement has not been documented publicly. It will be shown later that such a simple change in the communication pattern can greatly improve the performance of parallel algorithms. There are also a number of algorithms using biased stencils that mimic the spectral transfer of the inner domain [36,37,38]. In this approach, the user has no control over the error of the parallel algorithm. Even though the local truncation error of the approximation at the boundary is matched with that of the inner scheme, discrepancies in spectral transfer can lead to notable errors at the interface in under-resolved simulations.

3. Complexity, Speedup, and Scalability

The complexity of the interface-splitting algorithm depends on two parameters: the one-digit decay length L and the half-matrix bandwidth J. These numbers are determined by the cut-off threshold

and the degree of diagonal dominance of the system (

). For Toeplitz systems

, the entries of the factors in the LU decomposition converge to certain values, from which the half-matrix bandwidth J can be estimated. Bondeli [39] derives this convergence and estimates J with respect to the cut-off threshold

by

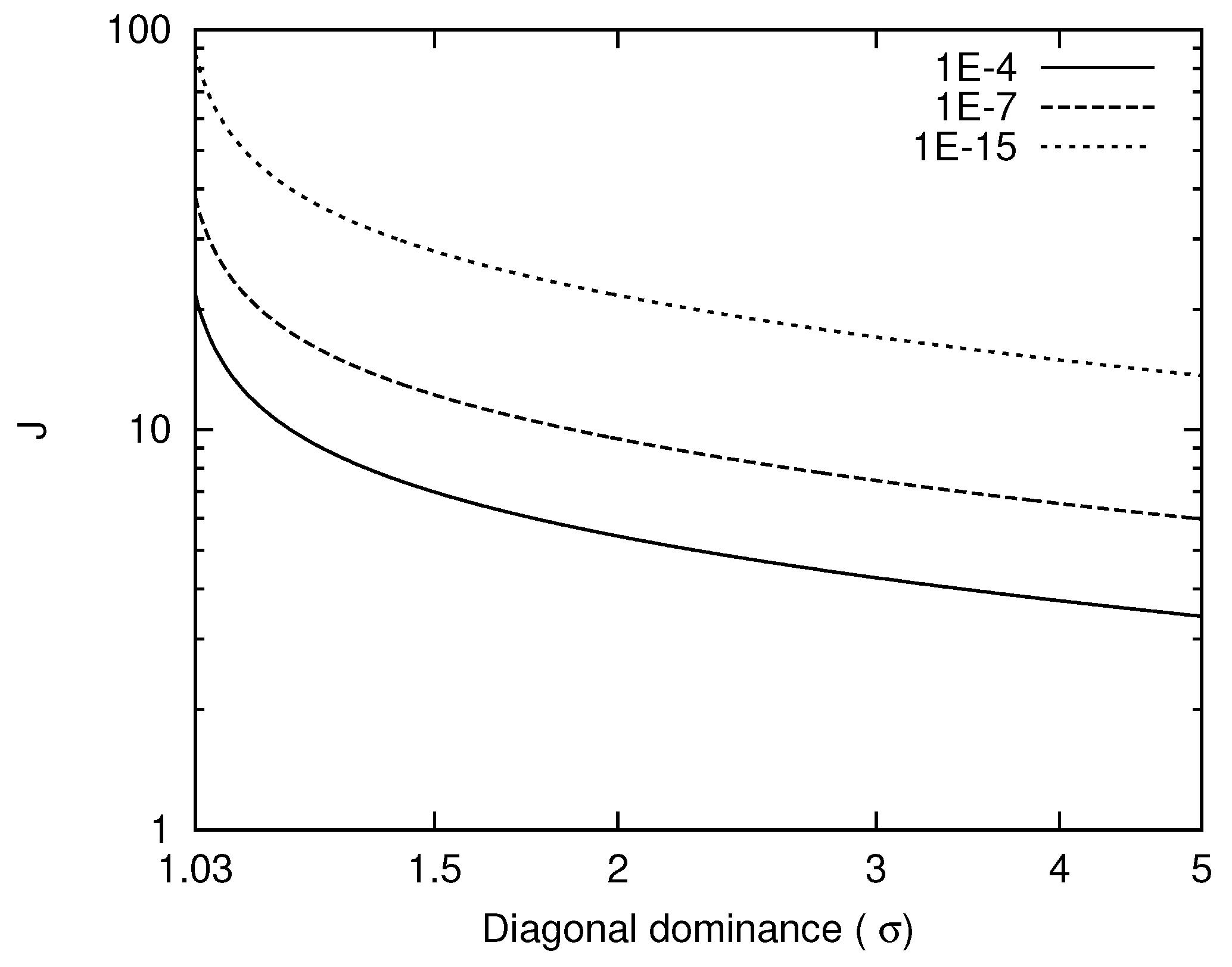

For example, J equals 7 and 27 for

and

when

(

), which correspond to the fourth-order compact differentiation [5] and the cubic spline interpolation, respectively. These two numbers are much smaller than the usual subsystem sizes in scientific computing. The effect of diagonal dominance on J is shown in Figure 2. The proposed algorithm is thus not recommended for a very small

. This includes the inversion of the Laplace operator. For solving such systems, we refer the reader to the work of Bondeli [26,39], in which he adapts his DAC algorithm to the Poisson equation. In fourth-order compact schemes, the diagonal dominance of the first and second derivatives is 2 and 5, respectively. In sixth-order compact schemes, the values are

and

, respectively. Therefore, the proposed scheme is well suited for these approximations. Conversely, if the system is not diagonally dominant, J will decay linearly to zero at half the size of the global system, e.g.,

. In such a system, the proposed algorithm will perform poorly, and it would be beneficial to combine the ITS and RPDD algorithms. Using ITS as a preprocessor will reduce the amount of back-correction in the RPDD algorithm. This approach will have better cache locality than computing the full correction with any single method by sweeping from one end of the system to the other.

3.1. Complexity

The interface-splitting algorithm is an approximate method, but it can be made equivalent to other direct methods by setting

to machine accuracy. Bounds on the minimum decay rate for non-symmetric matrices have been developed in [31,32] and can be used to estimate J. However, the matrix bandwidth obtained in this way is usually too pessimistic for efficient computation, because the minimum decay rate depends on

, which may not occur in the vicinity of the interfaces. In multiple-rhs problems, it is worth solving the diagonal submatrix consisting of the two subsystems enclosing the kth interface and then choosing J according to the desired cut-off threshold. In single-rhs problems, backward elimination of

and forward elimination of

could be an effective way to determine an appropriate J.
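The suggestion for multiple-rhs problems, solving the submatrix formed by the two subsystems around an interface and reading off J from the desired cut-off threshold, can be sketched as follows. Here the threshold is applied relative to the largest coefficient, and J is the smallest value for which every coefficient outside the retained offsets -(J - 1) to J falls below it. Both conventions, the Toeplitz test matrix, and the helper name `choose_J` are assumptions for illustration.

```python
import numpy as np

def choose_J(lower, diag, upper, g, tol):
    """Smallest J such that all |row entries| outside offsets -(J-1)..J are < tol * max.

    lower/diag/upper describe the submatrix formed by the two subsystems that
    enclose the interface; g is the local index of the interface row.
    """
    n = diag.size
    A = np.diag(diag) + np.diag(lower[1:], -1) + np.diag(upper[:-1], 1)
    e_g = np.zeros(n)
    e_g[g] = 1.0
    row = np.abs(np.linalg.solve(A.T, e_g))     # |row g of the inverse|
    offsets = np.arange(n) - g
    significant = offsets[row >= tol * row.max()]
    max_pos = max(int(significant.max()), 0)    # farthest significant entry after row g
    max_neg = max(int(-significant.min()), 0)   # farthest significant entry before row g
    return max(max_pos, max_neg + 1)

m = 40                                          # two subsystems of size m each
lower = np.full(2 * m, 0.1)
upper = np.full(2 * m, 0.1)
diag = np.ones(2 * m)
J = choose_J(lower, diag, upper, g=m - 1, tol=3e-7)
print(J)  # 7
```

For this matrix the coefficients shrink by a factor of about 0.101 per index, so a relative threshold of 3e-7 keeps offsets up to 6 on each side, and the asymmetric window -(J - 1) to J then requires J = 7.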

In the second step of the algorithm, we have to solve a linear system for the

th row of the matrix

. This system has to be larger than

so that the truncated

is sufficiently accurate. In this work, we solve a system of size

, which ensures that the smallest element of the truncated

is correct at least in the first digit. Since it is unlikely that one would be satisfied with errors larger than

, we assume that

and use this relation to report the operation count of the ITS algorithm in Table 1. A less strict (larger) cut-off threshold leads to lower complexity. Thus, we assume that

reflects the practical complexity in general applications. In this table, we also list the communication time, which can be expressed by a simple model:

, where

is the fixed latency,

is the transmission time per datum, and

is the number of data. Note that, for multiple-rhs problems, we neglect the cost of the first three steps of the algorithm because the number of rhs is assumed to be much larger than m.
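With this linear model of the communication time (fixed latency plus a per-datum transmission time), the benefit of overlapping the two neighbour exchanges in step 5 of Algorithm 1, compared with two sequential one-way steps, can be estimated. The sketch below assumes a full-duplex link on which both directions proceed concurrently at full speed; the function names and parameter values are illustrative only.

```python
def t_message(n_data, t_latency, t_per_datum):
    """Linear communication model: fixed latency plus a transmission time per datum."""
    return t_latency + n_data * t_per_datum

def interface_exchange(n_data, t_latency, t_per_datum, overlapped):
    """Time to exchange n_data values with both neighbours.

    overlapped=True  : both directions in one step (assumes full-duplex links).
    overlapped=False : two sequential one-way steps.
    """
    one_way = t_message(n_data, t_latency, t_per_datum)
    return one_way if overlapped else 2.0 * one_way

# Illustrative values: 1 microsecond latency, 8-byte data over 12.5 GB/s (100 Gb/s).
t_lat, t_dat = 1e-6, 8 / 12.5e9
n_rhs = 4096**2                      # one partial sum per right-hand side
t_seq = interface_exchange(n_rhs, t_lat, t_dat, overlapped=False)
t_ovl = interface_exchange(n_rhs, t_lat, t_dat, overlapped=True)
print(f"sequential {t_seq * 1e3:.2f} ms, overlapped {t_ovl * 1e3:.2f} ms")
```

In practice, the saving lies between zero and one half, depending on whether the network can actually sustain both directions at once.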

Table 1 presents the complexity of the proposed algorithm under the assumption that each processor knows only its own subsystem and not the global system. The coefficients thus have to be sent among neighbors, leading to higher data transfer in single-rhs problems. Otherwise, the communication reduces to that of the multiple-rhs problem.

3.2. Speedup and Scalability of the Algorithm

Using Table 1, we can estimate the absolute speedup of the ITS algorithm. Here, the absolute speedup is the solution time of the fastest sequential algorithm on a single processor divided by the time used by the ITS algorithm on p processors. Theoretical complexity analyses usually assume a constant communication bandwidth. In practice, however, the interconnection network is not completely connected, and the computing nodes are usually linked by less expensive topologies such as trees, fat trees, hypercubes, and arrays. The data bandwidth is thus a function of the number of processors as well as the amount of data being transferred. Let the communication cost be a function of p and

, that is,

, and assume that n and

are sufficiently large such that the latency and

can be neglected. Then, the absolute speedup of the ITS algorithm in multiple-rhs problems is given by Equation (19):

where

is the peak performance of the machine. The key numbers determining the performance of the interface-splitting algorithm are thus the ratios

and

. The former is the computation overhead, and the latter is the communication overhead. If the ratio

is small and

is negligible, an excellent speedup can be expected; otherwise, the algorithm suffers a penalty. For example, on multiple-right-hand-side systems, the absolute efficiency drops from

to

when J is increased from

to m.

In general, one must size the subsystems appropriately such that the speedup from load distribution justifies the increased complexity, overhead, and communication costs. In the speedup equation above (Equation (19)), the function

is the inverse of the data transfer rate, and the product

is simply the ratio of the peak performance (operations/s) to the data transfer rate (operands/s), similar to the ratio discussed in the introduction. Therefore, as long as J is smaller than

, the overhead of the ITS algorithm will be less than the cost of communication. For example, consider a cluster of older-generation hardware, such as dual-socket AMD EPYC 7302 nodes connected by a 100 Gb/s network. The value of

is 983. During the latency time alone (1 μs), the processor could have performed a compact differentiation on a

Cartesian grid. For the modern systems discussed in the introduction, this ratio is much higher. Thus, the

overhead of the ITS algorithm is very small.
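The value 983 quoted above can be reproduced under the following assumptions, which are ours rather than stated in the text: two 16-core EPYC 7302 sockets at the 3.0 GHz base clock, 16 double-precision FLOPs per core and cycle (two 256-bit FMA units), and a 100 Gb/s link carrying 8-byte operands.

```python
sockets, cores, clock_hz, flops_per_cycle = 2, 16, 3.0e9, 16
peak_flops = sockets * cores * clock_hz * flops_per_cycle   # 1.536e12 FLOP/s
link_bits_per_s = 100e9                                     # 100 Gb/s network
operands_per_s = link_bits_per_s / 64                       # 8-byte doubles per second
ratio = peak_flops / operands_per_s                         # FLOPs per transferred operand
latency_s = 1e-6
flops_during_latency = peak_flops * latency_s
print(f"ratio = {ratio:.0f}, FLOPs during 1 us latency = {flops_during_latency:.3g}")
```

Roughly 1.5 million floating point operations fit into the latency of a single message on such a node, which is why a modest J adds little relative to communication.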

According to Equation (19), our algorithm is perfectly scalable, because the speedup does not explicitly depend on the number of processors, except through

. The communication cost per datum

is determined by the latency, the data bandwidth, the topology of the interconnection network among the computing nodes, and the communication pattern. When p and

are large,

can dominate the cost of the computation. Even if the exact formula for

is not known, we can predict when the algorithm will be scalable for the scaled problem size (fixed

and m). Using the costs from Table 1 and substituting

for

, it is easy to see that the proposed algorithm is scalable, i.e.,

when

. This means that the ITS algorithm is scalable as long as the interconnection network is scalable.

Note that the algorithm assumes ; if this is not true, a program adopting this algorithm should issue a warning or an error. The user can then decide whether to adjust the subsystem size or extend the scalar product by including more processors.

4. Accuracy Analysis

In the previous section, we discussed the complexity and established the half-matrix bandwidth J for the selected cut-off threshold . In this section, we analyze how the error introduced by the cut-off threshold propagates into the solution at the inner indices.

Solving Equation (16) for

is equivalent to solving the

linear system

for

with

Due to the structure of

, it follows that

where

and

. The vector

is a column vector of zeros whose size should be clear from the context. The interface-splitting algorithm injects an approximation in place of

and

, changes the rhs to

, and solves

instead of the original system. This process introduces the following error into the solution:

It follows that the error in the solution vector satisfies

Equation (23) indicates that the error at the inner indices (

,

) is the sum of the errors propagated from both interfaces. To establish the error bound of the ITS algorithm, we first identify the position of the maximum error.

Proposition 1. Errors of the interface-splitting algorithm for diagonally dominant tridiagonal matrices are maximal at the interfaces.

Proof. Since the errors of the interface-splitting algorithm

in the inner domain satisfy Equation (23), that is,

the error at the inner indices, i.e.,

,

, is not larger than the maximum error introduced at the interfaces because

□
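Proposition 1 can also be checked numerically. In the sketch below (random strictly diagonally dominant coefficients; the helper name `interior_error` is hypothetical), errors e_top and e_bot are injected at the two interfaces of a block and propagated into its interior through the homogeneous tridiagonal equations. The largest interior error never exceeds the larger interface error, which is the discrete maximum principle underlying the proof.

```python
import numpy as np

def interior_error(lower, diag, upper, e_top, e_bot):
    """Error in the interior unknowns caused by interface errors e_top and e_bot.

    lower[0] couples the first interior unknown to the top interface and
    upper[-1] couples the last one to the bottom interface.
    """
    A = np.diag(diag) + np.diag(lower[1:], -1) + np.diag(upper[:-1], 1)
    rhs = np.zeros(diag.size)
    rhs[0] -= lower[0] * e_top
    rhs[-1] -= upper[-1] * e_bot
    return np.linalg.solve(A, rhs)

rng = np.random.default_rng(4)
worst_ratio = 0.0
for _ in range(200):
    m = int(rng.integers(3, 30))
    lower = rng.uniform(-0.45, 0.45, m)
    upper = rng.uniform(-0.45, 0.45, m)
    diag = rng.choice([-1.0, 1.0], m)                    # |diag| = 1 > |lower| + |upper|
    e_top, e_bot = rng.uniform(-1e-6, 1e-6, 2)
    delta = interior_error(lower, diag, upper, e_top, e_bot)
    worst_ratio = max(worst_ratio, np.abs(delta).max() / max(abs(e_top), abs(e_bot)))
print(worst_ratio)  # below 1
```

With |lower| + |upper| <= 0.9 |diag| in every row, the argument in the proof even gives a bound of 0.9 on this ratio.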

Next, we assume that a small number is set as the error threshold and used to truncate the vector . The integer J is the smallest value satisfying . The maximum error of the proposed algorithm thus consists of two parts: (i) the truncated terms and (ii) the round-off error in the calculation of the dot product in step 4. The following theorem states that the sum of these errors is bounded by a small multiple of the cut-off threshold.

Theorem 1. The maximum error of the interface-splitting algorithm is bounded by

with the cut-off threshold of the coefficient vector , the machine accuracy ϵ, and the length L over which the magnitude of the coefficients is reduced by at least one significant digit.

Proof. First, let us assume that the rhs stored by the machine is exact, and let

be the error in the coefficient vector

when it is represented by machine numbers

; that is,

. The hat denotes the machine-representable numerical value of the respective real number. Let

be the floating point operation on x. For instance, if

and

are real numbers, then

. The addition obeys the following inequalities:

The numerical value obtained for

by computing

is thus

For general matrices whose inverse does not decay, the error is bounded by , and the error in Equation (27) is given by . Thus, the result can be inaccurate for a very large n. However, this is not the case for the matrices considered here.

On computers, a floating point number is stored with a mantissa and an exponent of limited length. Adding a small number b to a much larger number a does not change a if their magnitudes differ by more than the precision of the mantissa. In other words,

if

. Since

decays exponentially, there is a smallest number L such that

for

and

for

. Thus, let us assume that the machine accuracy

lies in

for some natural number

. We can then rewrite (27) as

with

, provided that

.

Next, we consider the effect of the exponential decay of

on the behavior of the round-off error. Let

and

be the first and the second sums in Equation (28). Because of the decay of

, the round-off errors affect only the contributions of the first L (largest) terms; thus, the first sum reduces to:

Since the exponential decay is bounded by a linear decay, we can conservatively bound the numerical error due to the second sum in Equation (29) by

. Therefore, the error

of the solution

computed by the scalar product with

is given by

We now have the error bound for the case in which the full scalar product

is used to approximate

. It is thus straightforward to show that the error of the interface-splitting algorithm with the cut-off threshold

is

□
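The absorption argument used in the proof can be observed directly in IEEE double precision. Below, coefficients that shrink by one digit per index (L = 1, an assumption for illustration) are summed largest first, left to right; after about 16 terms, the remaining ones no longer change the computed result. An explicit loop is used because the built-in `sum` of Python 3.12 and later applies compensated summation to floats and would hide the effect.

```python
import numpy as np

def sequential_sum(values):
    """Plain left-to-right floating point summation."""
    total = 0.0
    for v in values:
        total += v
    return total

eps = np.finfo(np.float64).eps          # machine epsilon of IEEE double, about 2.2e-16
print(1.0 + eps / 4 == 1.0)             # True: a tiny addend is absorbed

terms = [0.1**j for j in range(40)]     # decay by one digit per index, largest first
full = sequential_sum(terms)
keep = next(k for k in range(1, len(terms) + 1)
            if sequential_sum(terms[:k]) == full)
print(keep)                             # 16: only these terms affect the computed sum
```

With a decay of one digit per L indices, only roughly 16 L terms can influence a double-precision scalar product, so round-off is confined to the leading terms, as assumed in the proof.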

When the ITS algorithm is applied to numerical simulations, it does not need to be extremely accurate. The cut-off threshold can be set several digits below the approximation errors, so that the numerical errors of the algorithm are dwarfed by the approximation error. Any effort to reduce the numerical error beyond this point would not improve the final solution. In most cases, the approximation errors are much greater than the machine accuracy. In such situations, the maximum error of the algorithm is bounded by .

When the ITS algorithm is applied to approximation problems, the physical requirements of the underlying principle should be respected. For example, if the ITS algorithm is applied to an interpolation problem, the sum of the coefficients should be one to maintain consistency. Likewise, the sum of the differentiation coefficients should be zero. The required correction is on the order of and can be applied to the two smallest (farthest) coefficients.
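The consistency correction can be sketched as follows. The sketch assumes that the truncated coefficients are stored in index order, so that the first and last entries are the farthest (and smallest) ones, and that the defect is split equally between them. The equal split, the helper name `enforce_coefficient_sum`, and the example weights are illustrative choices.

```python
import numpy as np

def enforce_coefficient_sum(coeffs, target):
    """Shift the two outermost coefficients so that all coefficients sum to target.

    target = 1 for interpolation (consistency), 0 for differentiation.
    The defect is split equally between the first and the last coefficient.
    """
    c = np.asarray(coeffs, dtype=float).copy()
    defect = target - c.sum()
    c[0] += 0.5 * defect
    c[-1] += 0.5 * defect
    return c

# Hypothetical truncated interpolation weights whose sum misses 1 by a tiny amount.
w = np.array([1e-9, -0.0625, 0.5625, 0.5625, -0.0625, 3e-9])
w_fixed = enforce_coefficient_sum(w, target=1.0)
print(w.sum() - 1.0, w_fixed.sum() - 1.0)
```

Because the defect is of the order of the cut-off threshold, changing only the outermost coefficients leaves the dominant weights, and hence the accuracy of the approximation, essentially untouched.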