A Video Analytics System for Person Detection Combined with Edge Computing

Abstract

1. Introduction

Our Contribution

- A real-time, flexible system is proposed that enhances situation awareness by detecting persons in a video stream processed on an embedded edge device. The VAEC system can be installed at indoor or outdoor premises (e.g., buildings or critical infrastructures) or mounted on a vehicle (e.g., a car). The VAEC system adopts state-of-the-art algorithms for object detection and for tracking-by-detection (TBD), namely pre-trained YOLOv5 and DeepSort, respectively, and supports several types of camera sensors (RGB or thermal). Depending on the use case, the VAEC system must be pre-configured to execute either the object detection process or the TBD process.

- The VAEC system is integrated with the proposed edge computing platform, namely the Distributed Edge Computing Internet of Things (DECIoT). The DECIoT is a scalable, secure, flexible, fully controlled, potentially interoperable, and modular open-source framework that ensures information sharing with other platforms or systems. Through the DECIoT, computation, data storage, and information sharing are performed together, directly on the edge device, in real time.

- Once one or more persons are detected, crucial information (an alert with timestamp, geolocation, number of detected persons, and the relevant video frames with the detections) is provided and can be shared with other platforms.
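
The alert contents listed in the last item can be pictured as a small structured record. The following is a minimal sketch and not the authors' implementation: the class name `PersonAlert`, the helper `make_alert`, the field names, and the JSON layout are assumptions made for illustration, since the text only states which pieces of information the alert carries.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class PersonAlert:
    """Hypothetical container for the alert information named in the text."""
    timestamp: str        # ISO 8601 time of the detection
    latitude: float       # latitude reported by the GPS receiver
    longitude: float      # longitude reported by the GPS receiver
    person_count: int     # number of detected persons
    frame_files: List[str] = field(default_factory=list)  # stored frames with detections drawn

    def to_json(self) -> str:
        """Serialize the alert so it can be shared with another platform."""
        return json.dumps(asdict(self), sort_keys=True)


def make_alert(person_count: int, latitude: float, longitude: float,
               frame_files: List[str],
               now: Optional[datetime] = None) -> Optional[PersonAlert]:
    """Build an alert only when at least one person was detected."""
    if person_count < 1:
        return None
    now = now or datetime.now(timezone.utc)
    return PersonAlert(now.isoformat(), latitude, longitude,
                       person_count, list(frame_files))
```

Returning `None` when nobody is detected mirrors the statement that the information is provided once a person is detected; a real deployment might instead log or count empty frames.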

2. Materials and Methods

2.1. Proposed Methodology

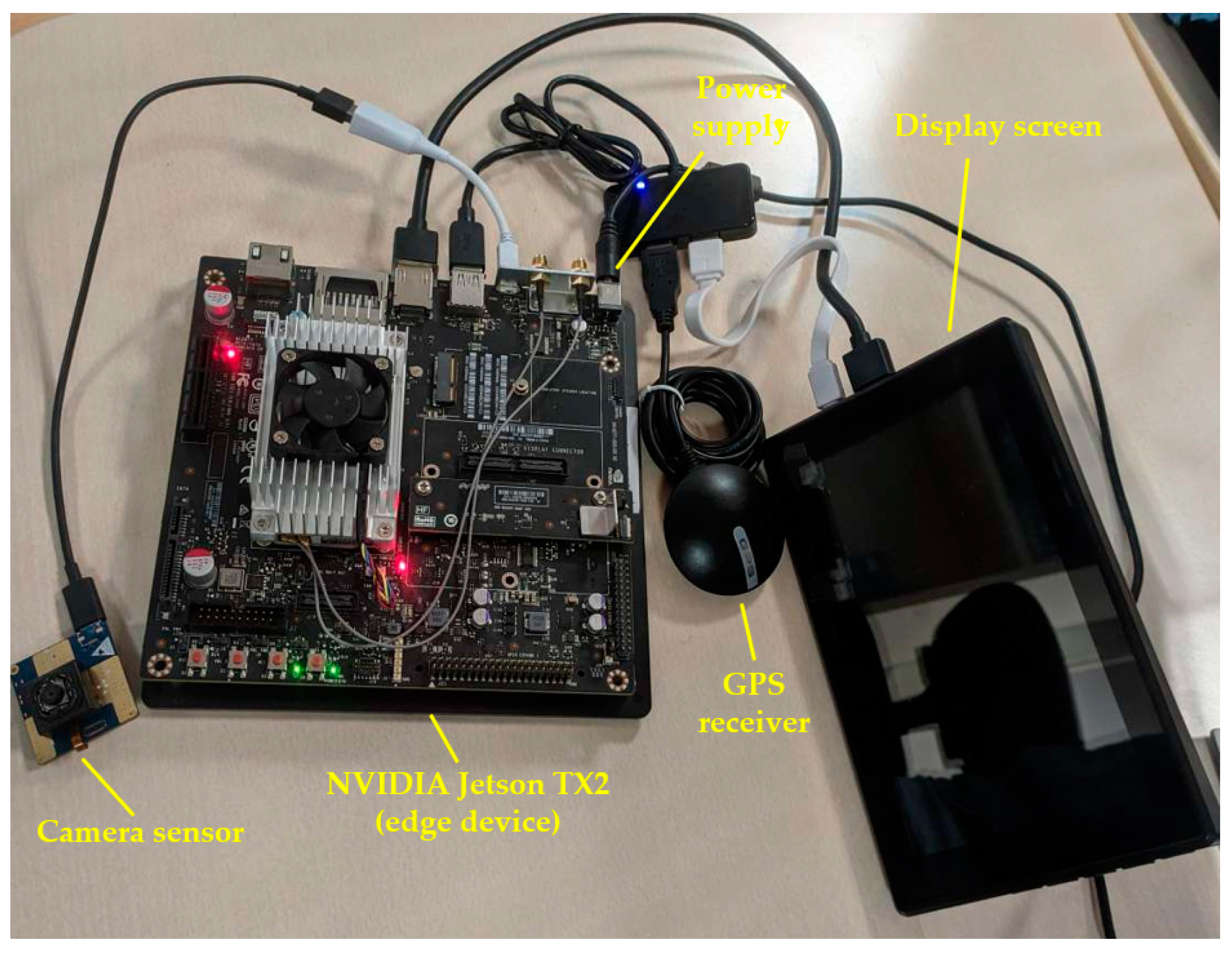

2.2. Hardware Components

- Camera sensor: The camera sensor provides the video stream. In this study, four camera sensors were used for the experiments. The video resolution, dimensions (Height-H, Length-L, Width-W), frames per second (FPS), connectivity with the edge device, and spectrum of each camera sensor are listed in Table 1.

- NVIDIA Jetson TX2 (VAEC’s edge device): The Jetson TX2 is considered an efficient and flexible choice for an embedded edge device with GPU processing capability [16,34]. Its physical dimensions are 18 cm × 18 cm × 5 cm. In this study, the Jetson TX2 was used as the edge device: it (i) hosts the DECIoT, (ii) has WiFi and Ethernet network connections, (iii) runs an FTP server, (iv) processes the video stream from the connected camera sensor, (v) executes the object detection or TBD algorithms on the video stream, (vi) stores the video frames (on which the detections are superimposed) as images, and (vii) can publish the alert information to other platforms or systems.

- Display screen: A 7-inch LCD touch screen was used to provide autonomy, control, and real-time monitoring of the object detection or TBD algorithms on the video stream. The display screen is optional and can be disconnected or deactivated to support a privacy-oriented framework [33].

- Power supply: The power supply needs of the Jetson TX2 are: 19 V, 4.7 A, and 90 W max.

- GPS receiver: The GPS receiver provides, in real time, the geolocation of the VAEC system in the WGS 84 coordinate system (i.e., latitude and longitude). The GPS receiver is connected to the Jetson TX2.
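
As an illustration of how a latitude and longitude pair might be obtained from the receiver, the sketch below converts an NMEA 0183 `GGA` sentence to signed decimal degrees. It rests on an assumption: the text does not say which output format the GPS receiver uses, and NMEA is only a common choice. The function names are hypothetical, and the checksum is dropped rather than verified to keep the example short.

```python
from typing import Optional, Tuple


def nmea_to_decimal(value: str, hemisphere: str) -> float:
    """Convert an NMEA (d)ddmm.mmmm coordinate to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)        # leading digits are whole degrees
    minutes = raw - degrees * 100    # the remainder is minutes
    decimal = degrees + minutes / 60.0
    # South and west are negative by convention.
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence: str) -> Optional[Tuple[float, float]]:
    """Return (latitude, longitude) from a GGA sentence, or None without a fix."""
    body = sentence.strip().split("*")[0]   # drop the checksum suffix (not verified here)
    fields = body.split(",")
    if len(fields) < 7 or not fields[0].endswith("GGA"):
        return None
    if fields[6] in ("", "0"):              # fix quality 0 means no position fix
        return None
    latitude = nmea_to_decimal(fields[2], fields[3])
    longitude = nmea_to_decimal(fields[4], fields[5])
    return latitude, longitude
```

A caller would typically read sentences from the receiver's serial port and attach the most recent valid position to each alert.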

2.3. Object Detection and Tracking-By-Detection (TBD)

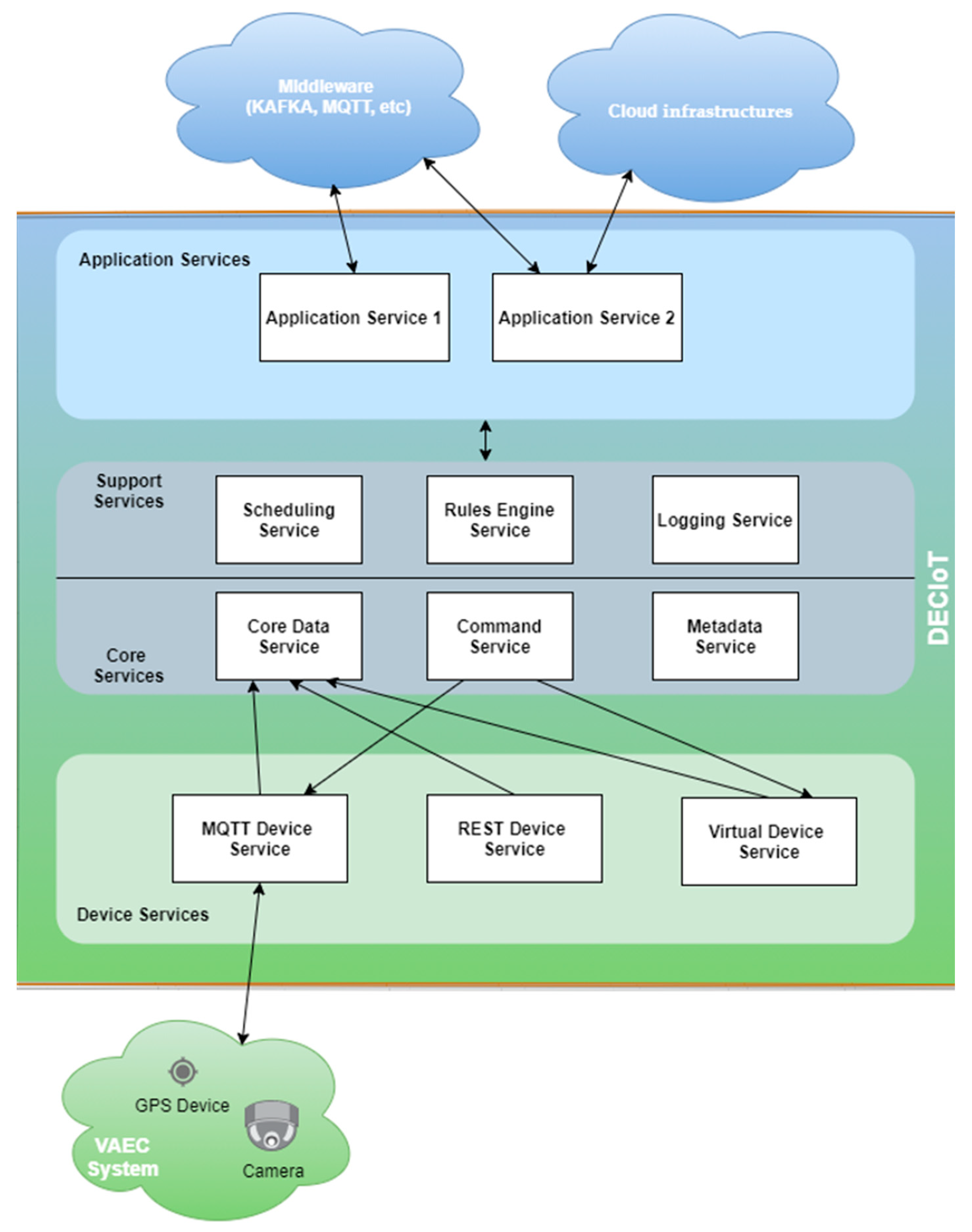

2.4. Distributed Edge Computing Platform (DECIoT)

- The Device Service Layer acts as the interface between the system and physical devices and is tasked with collecting data and actuating the devices with commands. It supports multiple communication protocols through a set of device services (MQTT Device Service, REST Device Service, Virtual Device Service, etc.) and an SDK for creating new Device Services. In the VAEC system, the MQTT Device Service was used to receive information from the object detection process. An MQTT broker (Mosquitto) [72] sits between the object detection process and the MQTT Device Service.

- The Core Services Layer is at the center of the DECIoT platform and is used for storing data, as well as commanding and registering devices. The Core Data Service stores data, the Command Service initiates all the actuating commands to devices, and the Metadata Service stores all the details of the registered devices. These microservices are implemented using Consul, Redis, and adapters developed in Go for integration with all other microservices. In the VAEC system, all of the above microservices were used.

- The Support Services Layer includes microservices for local/edge analytics and typical application duties such as logging, scheduling, and data filtering. The Scheduling Service is a microservice capable of running periodic tasks within DECIoT (for example, cleaning the database of the Core Data Service each day) and of initiating periodic actuation commands to devices through the Command Service. It is implemented in Go and exploits features of Consul and Redis. The Rules Engine Service performs data filtering and basic edge data analytics, and is built on Kuiper. The Logging Service, also implemented in Go, logs the messages of other microservices. In this study, these microservices were not used in the VAEC system, as no logging, scheduling, or data filtering was needed.

- The Application Services Layer consists of one or more microservices that communicate with external infrastructures and applications. Application Services are the means to extract, transform, and send data from the DECIoT to other endpoints or applications. Using this layer, the DECIoT can communicate with a variety of middleware brokers (MQTT, Apache Kafka, etc.) or REST APIs in order to reach external applications and infrastructures. In addition, an SDK allows new Application Services to be implemented to fit the use case. For the VAEC system, a new Application Service was implemented in Go to send data to the smart city’s middleware (Apache Kafka in this study).
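
The path of a detection message through the layers described above can be sketched with in-memory stand-ins. This is only an illustration under stated assumptions: the class names, the topic names `vaec/detections` and `person-alerts`, and the message fields are hypothetical, and plain Python callbacks and lists replace the real MQTT broker, the DECIoT microservices (which the text says are written in Go), and Apache Kafka.

```python
import json
from typing import Callable, Dict, List, Tuple


class InMemoryBroker:
    """Stand-in for the MQTT broker between the detector and the Device Service."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[str], None]]] = {}

    def subscribe(self, topic: str, callback: Callable[[str], None]) -> None:
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic: str, payload: str) -> None:
        for callback in self._subscribers.get(topic, []):
            callback(payload)


class CoreData:
    """Stand-in for the Core Data Service: stores events and notifies exporters."""

    def __init__(self) -> None:
        self.events: List[dict] = []
        self.listeners: List[Callable[[dict], None]] = []

    def add_event(self, event: dict) -> None:
        self.events.append(event)
        for listener in self.listeners:
            listener(event)


class DeviceService:
    """Stand-in for the MQTT Device Service: decodes messages into events."""

    def __init__(self, broker: InMemoryBroker, core: CoreData, topic: str) -> None:
        self._core = core
        broker.subscribe(topic, self._on_message)

    def _on_message(self, payload: str) -> None:
        self._core.add_event(json.loads(payload))


class ApplicationService:
    """Stand-in for the Application Service: extract, transform, send."""

    def __init__(self, core: CoreData, sink: List[Tuple[str, str]], out_topic: str) -> None:
        self._sink = sink            # a list standing in for the Kafka topic
        self._out_topic = out_topic
        core.listeners.append(self._export)

    def _export(self, event: dict) -> None:
        alert = {"type": "person_detected", **event}   # hypothetical transform
        self._sink.append((self._out_topic, json.dumps(alert, sort_keys=True)))


def demo() -> List[Tuple[str, str]]:
    """Wire the stand-ins together and push one detection through them."""
    broker, core, sink = InMemoryBroker(), CoreData(), []
    DeviceService(broker, core, "vaec/detections")
    ApplicationService(core, sink, "person-alerts")
    broker.publish("vaec/detections", json.dumps({"person_count": 2}))
    return sink
```

The Support Services Layer is deliberately absent from the sketch, in line with the statement that logging, scheduling, and data filtering were not needed in the VAEC system.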

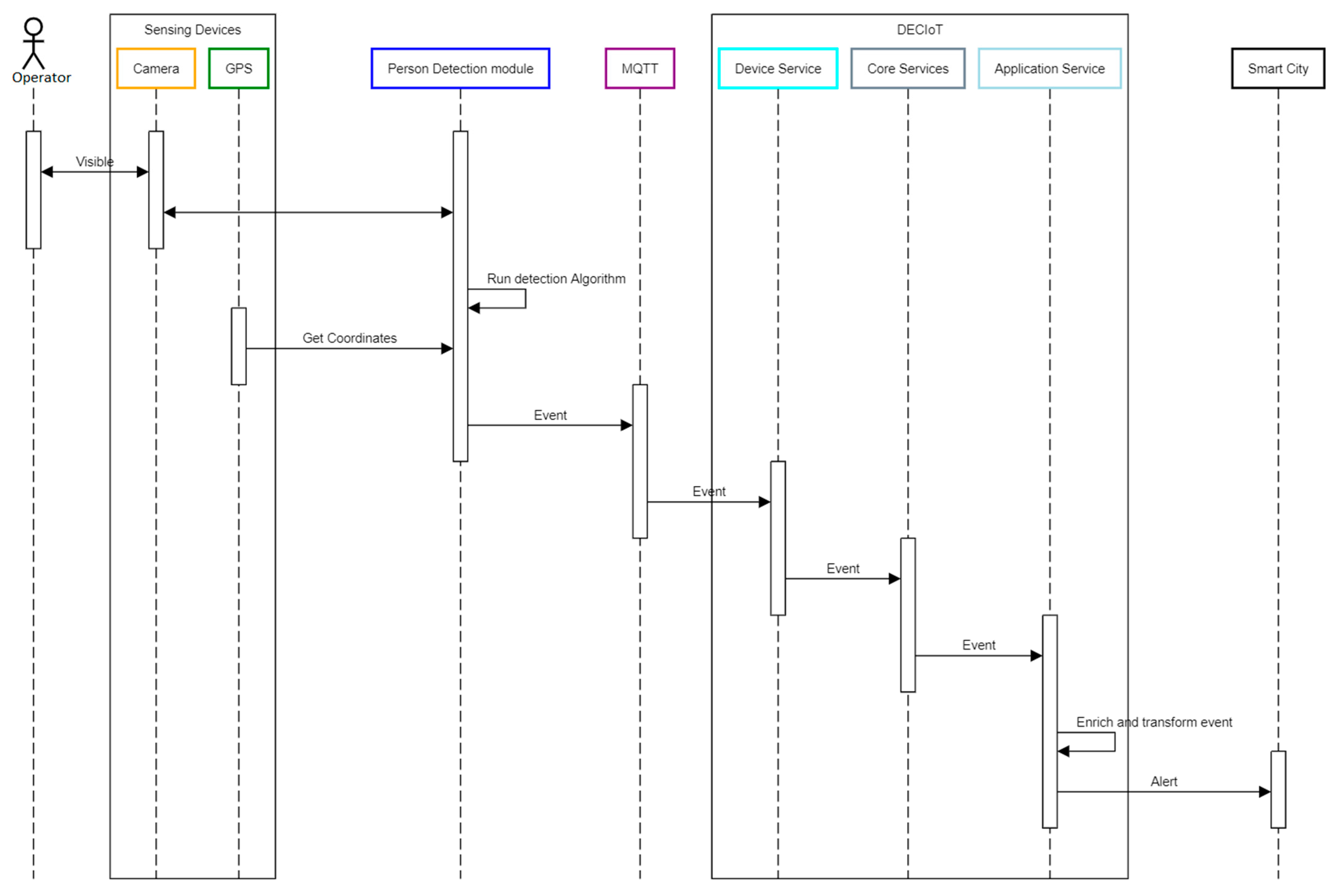

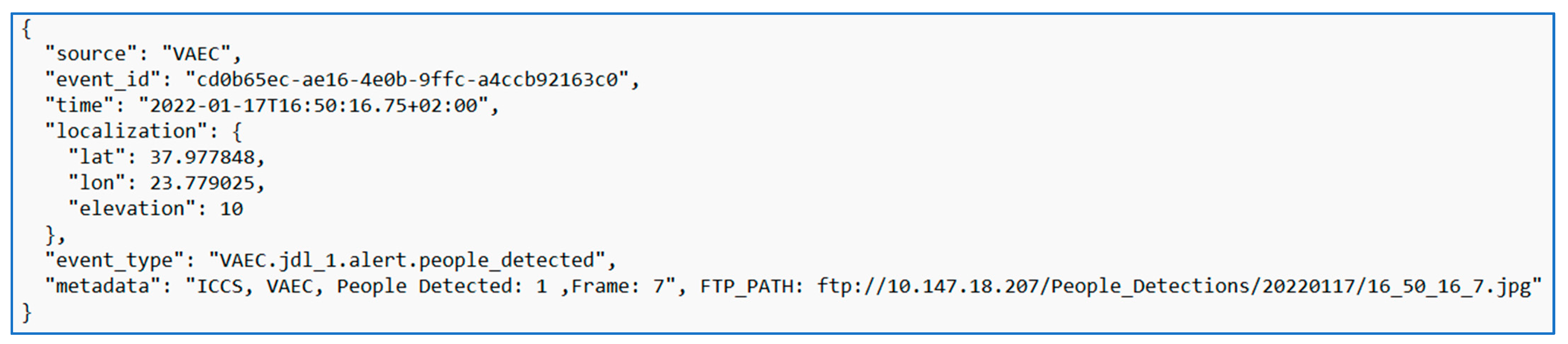

2.5. Person Detection Alert from DECIoT to Other Platforms

3. Results

3.1. Preparation and Experimental Parameters Setting

3.2. Evaluation Metrics

3.3. Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ismagilova, E.; Hughes, L.; Rana, N.P.; Dwivedi, Y.K. Security, Privacy and Risks Within Smart Cities: Literature Review and Development of a Smart City Interaction Framework. Inf. Syst. Front. 2020, 1–22.

- Voas, J. Demystifying the Internet of Things. Computer 2016, 49, 80–83.

- SINTEF. New Waves of IoT Technologies Research – Transcending Intelligence and Senses at the Edge to Create Multi Experience Environments. Available online: https://www.sintef.no/publikasjoner/publikasjon/1896632/ (accessed on 11 January 2022).

- Zanella, A.; Bui, N.; Castellani, A.; Vangelista, L.; Zorzi, M. Internet of Things for Smart Cities. IEEE Internet Things J. 2014, 1, 22–32.

- Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems. Hum. Factors J. Hum. Factors Ergon. Soc. 1995, 37, 32–64.

- Rummukainen, L.; Oksama, L.; Timonen, J.; Vankka, J.; Lauri, R. Situation awareness requirements for a critical infrastructure monitoring operator. In Proceedings of the 2015 IEEE International Symposium on Technologies for Homeland Security (HST), Greater Boston, MA, USA, 14–16 April 2015; pp. 1–6.

- Geraldes, R.; Goncalves, A.; Lai, T.; Villerabel, M.; Deng, W.; Salta, A.; Nakayama, K.; Matsuo, Y.; Prendinger, H. UAV-Based Situational Awareness System Using Deep Learning. IEEE Access 2019, 7, 122583–122594.

- Eräranta, S.; Staffans, A. From Situation Awareness to Smart City Planning and Decision Making. In Proceedings of the 14th International Conference on Computers in Urban Planning and Urban Management, Cambridge, MA, USA, 7–10 July 2015.

- Suri, N.; Zielinski, Z.; Tortonesi, M.; Fuchs, C.; Pradhan, M.; Wrona, K.; Furtak, J.; Vasilache, D.B.; Street, M.; Pellegrini, V.; et al. Exploiting Smart City IoT for Disaster Recovery Operations. In Proceedings of the 2018 IEEE 4th World Forum on Internet of Things (WF-IoT), Singapore, 5–8 February 2018; pp. 458–463.

- Mitaritonna, A.; Abasolo, M.J.; Montero, F. Situational Awareness through Augmented Reality: 3D-SA Model to Relate Requirements, Design and Evaluation. In Proceedings of the 2019 International Conference on Virtual Reality and Visualization (ICVRV), Hong Kong, China, 18–19 November 2019; pp. 227–232.

- Neshenko, N.; Nader, C.; Bou-Harb, E.; Furht, B. A survey of methods supporting cyber situational awareness in the context of smart cities. J. Big Data 2020, 7, 92.

- Jiang, M. An Integrated Situational Awareness Platform for Disaster Planning and Emergency Response. In Proceedings of the 2020 IEEE International Smart Cities Conference (ISC2), Online, 28 September–1 October 2020; pp. 1–6.

- Sun, H.; Shi, W.; Liang, X.; Yu, Y. VU: Edge Computing-Enabled Video Usefulness Detection and its Application in Large-Scale Video Surveillance Systems. IEEE Internet Things J. 2019, 7, 800–817.

- GitHub. Ultralytics. Ultralytics/Yolov5. 2022. Available online: https://github.com/ultralytics/yolov5 (accessed on 13 January 2022).

- Griffin, B.A.; Corso, J.J. BubbleNets: Learning to Select the Guidance Frame in Video Object Segmentation by Deep Sorting Frames. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8906–8915.

- Patrikar, D.R.; Parate, M.R. Anomaly Detection using Edge Computing in Video Surveillance System: Review. arXiv 2021, arXiv:2107.02778. Available online: http://arxiv.org/abs/2107.02778 (accessed on 13 January 2022).

- Liu, Z.; Chen, Z.; Li, Z.; Hu, W. An Efficient Pedestrian Detection Method Based on YOLOv2. Math. Probl. Eng. 2018, 2018, 3518959.

- Ahmad, M.; Ahmed, I.; Adnan, A. Overhead View Person Detection Using YOLO. In Proceedings of the 2019 IEEE 10th Annual Ubiquitous Computing, Electronics Mobile Communication Conference (UEMCON), New York, NY, USA, 10–12 October 2019; pp. 0627–0633.

- Karthikeyan, B.; Lakshmanan, R.; Kabilan, M.; Madeshwaran, R. Real-Time Detection and Tracking of Human Based on Image Processing with Laser Pointing. In Proceedings of the 2020 International Conference on System, Computation, Automation and Networking (ICSCAN), Pondicherry, India, 3–4 July 2020; pp. 1–5.

- Matveev, I.; Karpov, K.; Chmielewski, I.; Siemens, E.; Yurchenko, A. Fast Object Detection Using Dimensional Based Features for Public Street Environments. Smart Cities 2020, 3, 93–111.

- Kim, C.; Oghaz, M.; Fajtl, J.; Argyriou, V.; Remagnino, P. A Comparison of Embedded Deep Learning Methods for Person Detection. In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Prague, Czech Republic, 25–27 February 2019; Volume 5, pp. 459–465.

- Farooq, M.A.; Corcoran, P.; Rotariu, C.; Shariff, W. Object Detection in Thermal Spectrum for Advanced Driver-Assistance Systems (ADAS). IEEE Access 2021, 9, 156465–156481.

- Chen, J.; Wang, F.; Li, C.; Zhang, Y.; Ai, Y.; Zhang, W. Online Multiple Object Tracking Using a Novel Discriminative Module for Autonomous Driving. Electronics 2021, 10, 2479.

- Wu, H.; Du, C.; Ji, Z.; Gao, M.; He, Z. SORT-YM: An Algorithm of Multi-Object Tracking with YOLOv4-Tiny and Motion Prediction. Electronics 2021, 10, 2319.

- Rajavel, R.; Ravichandran, S.K.; Harimoorthy, K.; Nagappan, P.; Gobichettipalayam, K.R. IoT-based smart healthcare video surveillance system using edge computing. J. Ambient Intell. Humaniz. Comput. 2021.

- Xu, R.; Nikouei, S.Y.; Chen, Y.; Polunchenko, A.; Song, S.; Deng, C.; Faughnan, T.R. Real-Time Human Objects Tracking for Smart Surveillance at the Edge. In Proceedings of the 2018 IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- Maltezos, E.; Protopapadakis, E.; Doulamis, N.; Doulamis, A.; Ioannidis, C. Understanding Historical Cityscapes from Aerial Imagery through Machine Learning. In Digital Heritage. Progress in Cultural Heritage: Documentation, Preservation, and Protection, Proceedings of the Euro-Mediterranean Conference, Nicosia, Cyprus, 29 October–3 November 2018; Springer: Cham, Switzerland, 2018; pp. 200–211.

- Maltezos, E.; Doulamis, A.; Ioannidis, C. Improving the Visualisation of 3D Textured Models via Shadow Detection and Removal. In Proceedings of the 2017 9th International Conference on Virtual Worlds and Games for Serious Applications (VS-Games), Athens, Greece, 6–8 September 2017; pp. 161–164.

- Pudasaini, D.; Abhari, A. Scalable Object Detection, Tracking and Pattern Recognition Model Using Edge Computing. In Proceedings of the 2020 Spring Simulation Conference (SpringSim), Fairfax, VA, USA, 19–21 May 2020; pp. 1–11.

- Pudasaini, D.; Abhari, A. Edge-based Video Analytic for Smart Cities. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 10.

- Kafka. Apache Kafka. Available online: https://kafka.apache.org/ (accessed on 26 January 2022).

- Nasiri, H.; Nasehi, S.; Goudarzi, M. A Survey of Distributed Stream Processing Systems for Smart City Data Analytics. In Proceedings of the International Conference on Smart Cities and Internet of Things (SCIOT ’18), Mashhad, Iran, 26–27 September 2018; pp. 1–7.

- Chen, A.T.-Y.; Biglari-Abhari, M.; Wang, K.I.-K. Trusting the Computer in Computer Vision: A Privacy-Affirming Framework. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1360–1367.

- Zhao, Y.; Yin, Y.; Gui, G. Lightweight Deep Learning Based Intelligent Edge Surveillance Techniques. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 1146–1154.

- Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. Available online: http://arxiv.org/abs/2004.10934 (accessed on 13 January 2022).

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Maltezos, E.; Douklias, A.; Dadoukis, A.; Misichroni, F.; Karagiannidis, L.; Antonopoulos, M.; Voulgary, K.; Ouzounoglou, E.; Amditis, A. The INUS Platform: A Modular Solution for Object Detection and Tracking from UAVs and Terrestrial Surveillance Assets. Computation 2021, 9, 12.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision – ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Kalal, Z.; Mikolajczyk, K.; Matas, J. Forward-Backward Error: Automatic Detection of Tracking Failures. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2756–2759.

- Grabner, H.; Grabner, M.; Bischof, H. Real-Time Tracking via On-line Boosting. In Proceedings of the British Machine Vision Conference, Edinburgh, UK, 4–7 September 2006.

- Held, D.; Thrun, S.; Savarese, S. Learn to Track at 100 fps with Deep Regression Networks. In Computer Vision – ECCV 2016; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 749–765.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual Object Tracking Using Adaptive Correlation Filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

- Lukežič, A.; Vojíř, T.; Zajc, L.; Matas, J.; Kristan, M. Discriminative Correlation Filter Tracker with Channel and Spatial Reliability. Int. J. Comput. Vis. 2018, 126, 671–688.

- Kalal, Z.; Mikolajczyk, K.; Matas, J. Face-TLD: Tracking-Learning-Detection Applied to Faces. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 27–29 September 2010; pp. 3789–3792.

- Cai, C.; Liang, X.; Wang, B.; Cui, Y.; Yan, Y. A Target Tracking Method Based on KCF for Omnidirectional Vision. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 2674–2679.

- Babenko, B.; Yang, M.-H.; Belongie, S. Visual Tracking with Online Multiple Instance Learning. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 983–990.

- Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. FairMOT: On the Fairness of Detection and Re-identification in Multiple Object Tracking. Int. J. Comput. Vis. 2021, 129, 3069–3087.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object Detection With Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 2778–2788.

- Nepal, U.; Eslamiat, H. Comparing YOLOv3, YOLOv4 and YOLOv5 for Autonomous Landing Spot Detection in Faulty UAVs. Sensors 2022, 22, 464.

- Phadtare, M.; Choudhari, V.; Pedram, R.; Vartak, S. Comparison between YOLO and SSD Mobile Net for Object Detection in a Surveillance Drone. Int. J. Sci. Res. Eng. Man. 2021, 5.

- Zhou, F.; Zhao, H.; Nie, Z. Safety Helmet Detection Based on YOLOv5. In Proceedings of the 2021 IEEE International Conference on Power Electronics, Computer Applications (ICPECA), Shenyang, China, 22–24 January 2021; pp. 6–11.

- Kaya, A.; Keceli, A.S.; Catal, C.; Yalic, H.Y.; Temucin, H.; Tekinerdogan, B. Analysis of transfer learning for deep neural network based plant classification models. Comput. Electron. Agric. 2019, 158, 20–29.

- Mike, Yolov5 + Deep Sort with PyTorch. 2022. Available online: https://github.com/mikel-brostrom/Yolov5_DeepSort_Pytorch (accessed on 1 February 2022).

- TensorFlow. Libraries & Extensions. Available online: https://www.tensorflow.org/resources/libraries-extensions (accessed on 13 January 2022).

- “Home” OpenCV. Available online: https://opencv.org/ (accessed on 13 January 2022).

- T. L. Foundation. Welcome. Available online: https://www.edgexfoundry.org (accessed on 13 January 2022).

- Maltezos, E.; Karagiannidis, L.; Dadoukis, A.; Petousakis, K.; Misichroni, F.; Ouzounoglou, E.; Gounaridis, L.; Gounaridis, D.; Kouloumentas, C.; Amditis, A. Public Safety in Smart Cities under the Edge Computing Concept. In Proceedings of the 2021 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Athens, Greece, 7–10 September 2021; pp. 88–93.

- Suciu, G.; Hussain, I.; Iordache, G.; Beceanu, C.; Kecs, R.A.; Vochin, M.-C. Safety and Security of Citizens in Smart Cities. In Proceedings of the 2021 20th RoEduNet Conference: Networking in Education and Research (RoEduNet), Iasi, Romania, 4–6 November 2021; pp. 1–8.

- Cao, K.; Liu, Y.; Meng, G.; Sun, Q. An Overview on Edge Computing Research. IEEE Access 2020, 8, 85714–85728.

- Jin, S.; Sun, B.; Zhou, Y.; Han, H.; Li, Q.; Xu, C.; Jin, X. Video Sensor Security System in IoT Based on Edge Computing. In Proceedings of the 2020 International Conference on Wireless Communications and Signal Processing (WCSP), Wuhan, China, 20–23 October 2020; pp. 176–181.

- Villali, V.; Bijivemula, S.; Narayanan, S.L.; Prathusha, T.M.V.; Sri, M.S.K.; Khan, A. Open-Source Solutions for Edge Computing. In Proceedings of the 2021 2nd International Conference on Smart Electronics and Communication (ICOSEC), Tiruchirappalli, India, 7–9 October 2021; pp. 1185–1193.

- Xu, R.; Jin, W.; Kim, D. Enhanced Service Framework Based on Microservice Management and Client Support Provider for Efficient User Experiment in Edge Computing Environment. IEEE Access 2021, 9, 110683–110694.

- The Alliance for the Internet of Things Innovation. Available online: https://aioti.eu/ (accessed on 13 January 2022).

- The Go Programming Language. Available online: https://go.dev/ (accessed on 1 February 2022).

- Eclipse Mosquitto. Eclipse Mosquitto, 8 January 2018. Available online: https://mosquitto.org/ (accessed on 26 January 2022).

- Rottensteiner, F.; Sohn, G.; Gerke, M.; Wegner, J.D. ISPRS Test Project on Urban Classification and 3D Building Reconstruction. 2013. Available online: http://www2.isprs.org/tl_files/isprs/wg34/docs/ComplexScenes_revision_v4.pdf (accessed on 1 February 2022).

- Wen, C.; Li, X.; Yao, X.; Peng, L.; Chi, T. Airborne LiDAR point cloud classification with global-local graph attention convolution neural network. ISPRS J. Photogramm. Remote Sens. 2021, 173, 181–194.

- Godil, A.; Shackleford, W.; Shneier, M.; Bostelman, R.; Hong, T. Performance Metrics for Evaluating Object and Human Detection and Tracking Systems; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2014.

- Sharma, T.; Debaque, B.; Duclos, N.; Chehri, A.; Kinder, B.; Fortier, P. Deep Learning-Based Object Detection and Scene Perception under Bad Weather Conditions. Electronics 2022, 11, 563.

- Montenegro, B.; Flores, M. Pedestrian Detection at Daytime and Nighttime Conditions Based on YOLO-v5. Ingenius 2022. Available online: https://ingenius.ups.edu.ec/index.php/ingenius/article/view/27.2022.08 (accessed on 1 February 2022).

- Li, S.; Li, Y.; Li, Y.; Li, M.; Xu, X. YOLO-FIRI: Improved YOLOv5 for Infrared Image Object Detection. IEEE Access 2021, 9, 141861–141875.

| Camera Sensor ID | Type | Resolution | Dimensions | FPS | Spectrum | Connectivity |
|---|---|---|---|---|---|---|
| CAM1 | Logitech BRIO 4K Ultra HD | 4096 × 2160 | H: 6.5 cm W: 10 cm L: 4.6 cm | 30 | RGB | USB |
| CAM2 | Dahua IPC-HFW3841T-ZAS | 3840 × 2160 | H: 7.7 cm W: 7.7 cm L: 24.5 cm | 30 | RGB | Ethernet |
| CAM3 | IMX258 | 1920 × 1080 | H: 3.8 cm W: 3.5 cm L: 1.7 cm | 30 | RGB | USB |
| CAM4 | FLIR Tau 2 | 640 × 512 | H: 4.5 cm W: 4.5 cm L: 7 cm | 30 | Thermal | USB |

| Experiment ID | Camera | Light Condition | Building Case | Moving Car Case | Object Detection | TBD | Length of the Recorded Frame Sequences |

|---|---|---|---|---|---|---|---|

| EXP1 | CAM1 | day | 🗸 | - | 🗸 | - | 495 |

| EXP2 | CAM1 | day | 🗸 | - | - | 🗸 | 250 |

| EXP3 | CAM1 | day | - | 🗸 | 🗸 | - | 300 |

| EXP4 | CAM2 | day | 🗸 | - | 🗸 | - | 285 |

| EXP5 | CAM2 | day | 🗸 | - | - | 🗸 | 180 |

| EXP6 | CAM3 | day | - | 🗸 | 🗸 | - | 450 |

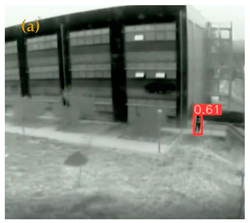

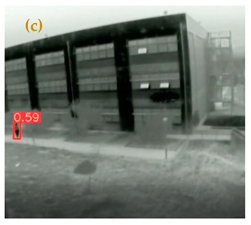

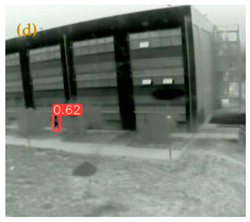

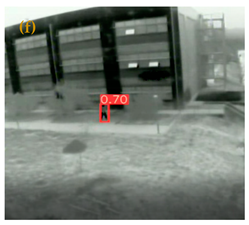

| EXP7 | CAM4 | night | 🗸 | - | 🗸 | - | 435 |

| EXP8 | CAM4 | night | - | 🗸 | 🗸 | - | 480 |

[Table: representative consecutive video frames for experiments EXP1 to EXP8; images not available.]

Object Detection Process

| Experiment ID | CM (%) | CR (%) | Q (%) | F1 Score (%) |
|---|---|---|---|---|
| EXP1 | 86.4 | 95.0 | 82.6 | 90.5 |
| EXP3 | 85.7 | 81.8 | 72.0 | 83.7 |
| EXP4 | 87.5 | 95.5 | 84.0 | 91.3 |
| EXP6 | 87.6 | 77.8 | 70.0 | 82.4 |
| EXP7 | 66.7 | 100.0 | 66.7 | 80.0 |
| EXP8 | 83.3 | 100.0 | 83.3 | 90.9 |
| Average | 82.8 | 91.7 | 76.4 | 86.4 |

TBD Process

| Experiment ID | IDC |
|---|---|
| EXP2 | 2 |
| EXP5 | 13 |
| Average | 15 |
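
The CM, CR, Q, and F1 columns of the object detection results are numerically consistent with the usual definitions of completeness, correctness, quality, and F1 score in terms of true positives (TP), false positives (FP), and false negatives (FN). The sketch below assumes those definitions, since they are not spelled out here, and the function name is hypothetical. The counts in the usage note are illustrative values that happen to reproduce one row of the table; the actual counts are not reported in this section.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Return CM, CR, Q, and F1 as percentages rounded to one decimal place.

    Assumed definitions (not quoted from the source):
      CM (completeness) = TP / (TP + FN)
      CR (correctness)  = TP / (TP + FP)
      Q  (quality)      = TP / (TP + FP + FN)
      F1                = 2 * TP / (2 * TP + FP + FN)
    """
    if tp <= 0 or fp < 0 or fn < 0:
        raise ValueError("tp must be positive and fp, fn must be non-negative")
    return {
        "CM": round(100.0 * tp / (tp + fn), 1),
        "CR": round(100.0 * tp / (tp + fp), 1),
        "Q": round(100.0 * tp / (tp + fp + fn), 1),
        "F1": round(100.0 * 2 * tp / (2 * tp + fp + fn), 1),
    }
```

For example, `detection_metrics(19, 1, 3)` gives CM 86.4, CR 95.0, Q 82.6, and F1 90.5, the same percentages as the EXP1 row.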

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maltezos, E.; Lioupis, P.; Dadoukis, A.; Karagiannidis, L.; Ouzounoglou, E.; Krommyda, M.; Amditis, A. A Video Analytics System for Person Detection Combined with Edge Computing. Computation 2022, 10, 35. https://doi.org/10.3390/computation10030035
