Optimizing DSO Requests Management Flexibility for Home Appliances Using CBCC-RDG3

Abstract

1. Introduction

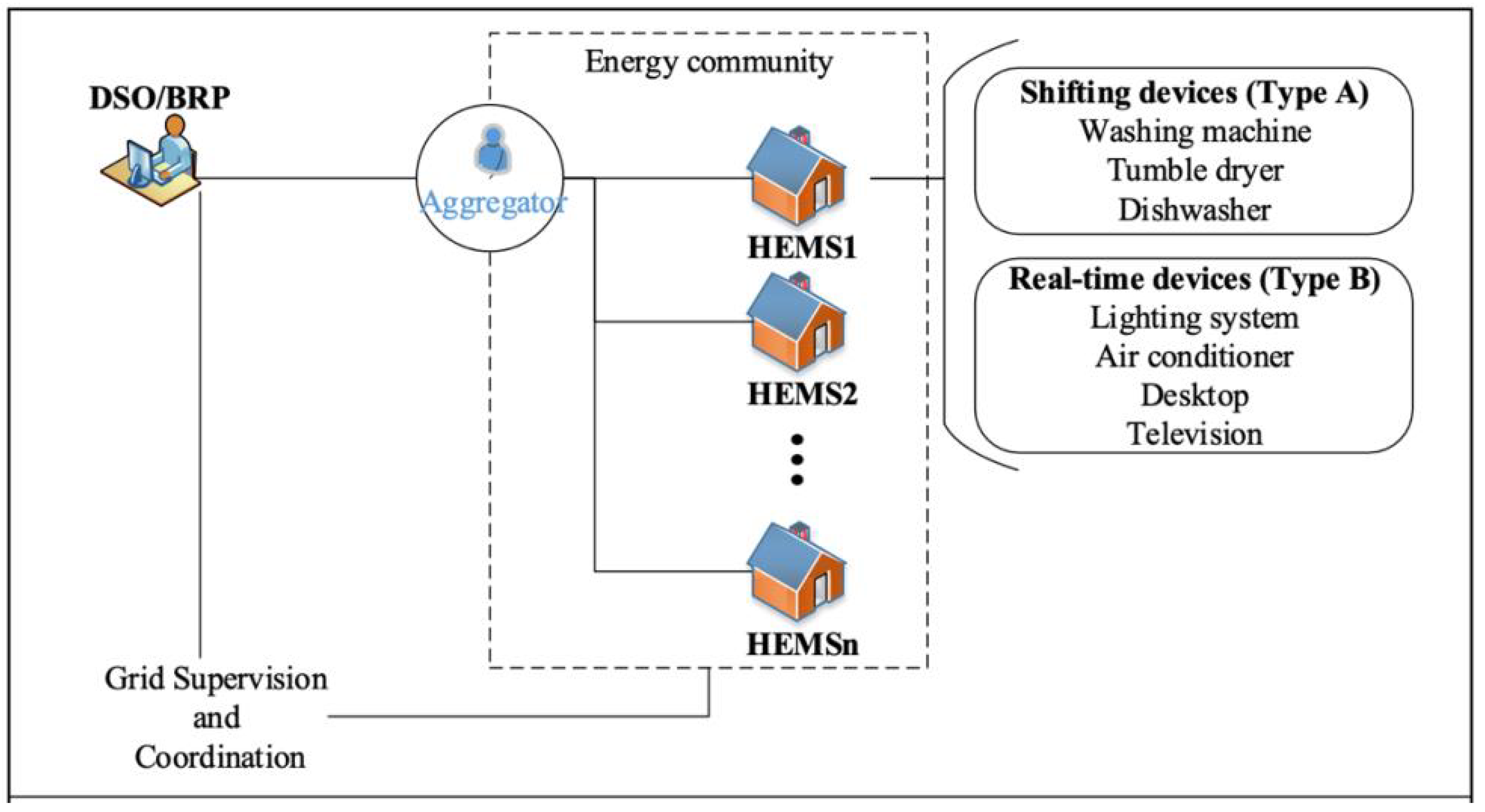

2. Competition

- The problem is considered from the perspective of an aggregator that manages home energy management systems (HEMS) controlling various devices with demand response (DR) capabilities.

- Two types of devices are considered for DR: devices whose consumption can be shifted to another period, and devices whose consumption can be controlled in real time.

- The aggregator responds to a flexibility request from the distribution system operator (DSO) or the balance responsible party (BRP), which pays monetary compensation per unit (PU) of flexibility provided.

- The aggregator uses a flexibility management system to reschedule some devices so that the delivered flexibility approximates the flexibility curve requested by the DSO as closely as possible.

- Users can register their devices for flexibility provision and set preferences such as the allowed shifting times, the expected reward for flexibility activation, and the priority with which their devices are activated.

- The necessary command-and-control infrastructure (e.g., smart metering systems, communication lines, HEMS) is assumed to be in place.

- Both the DSO/BRP and the aggregator have access to the predicted baseline power consumption, provided by a third party.
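The bullets above describe the aggregator's task only qualitatively. As an illustration, the toy sketch below reschedules a single shiftable appliance within a user-allowed set of start periods and picks the start that best tracks a requested flexibility curve. It is not the competition model: the function names, the sign convention (positive flexibility means load reduction relative to the baseline) and the squared-error tracking metric are all assumptions made for this example.

```python
from typing import List, Sequence, Tuple


def place(profile: Sequence[float], start: int, horizon: int) -> List[float]:
    """Consumption over `horizon` periods when the appliance cycle starts at `start`."""
    if start < 0 or start + len(profile) > horizon:
        raise ValueError("appliance cycle does not fit in the horizon")
    load = [0.0] * horizon
    for k, p in enumerate(profile):
        load[start + k] = p
    return load


def delivered_flexibility(profile: Sequence[float], original_start: int,
                          new_start: int, horizon: int) -> List[float]:
    """Assumed convention: flexibility = baseline load - rescheduled load (positive = reduction)."""
    before = place(profile, original_start, horizon)
    after = place(profile, new_start, horizon)
    return [b - a for b, a in zip(before, after)]


def best_shift(profile: Sequence[float], original_start: int,
               allowed_starts: Sequence[int], requested: Sequence[float]) -> Tuple[int, float]:
    """Try every user-allowed start; return (start, squared tracking error) of the best one."""
    if not allowed_starts:
        raise ValueError("no allowed start periods")
    scored = []
    for s in allowed_starts:
        flex = delivered_flexibility(profile, original_start, s, len(requested))
        error = sum((r - f) ** 2 for r, f in zip(requested, flex))
        scored.append((error, s))
    error, start = min(scored)  # ties go to the earliest start
    return start, error


# Hypothetical request: reduce load in periods 2-3, accept extra load in periods 4-5.
requested = [0.0, 0.0, 1.0, 1.0, -1.0, -1.0]
print(best_shift([1.0, 1.0], original_start=2, allowed_starts=[2, 3, 4], requested=requested))
# prints (4, 0.0)
```

Brute force is enough for one appliance; with hundreds of appliances and real-time devices the search space grows combinatorially, which is why the metaheuristics discussed later are used.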

2.1. Description of Parameters

2.1.1. Type A Appliances

2.1.2. Type B Appliances

2.2. Solution Representation

2.3. Objective Function

3. Mathematical Optimization Problem

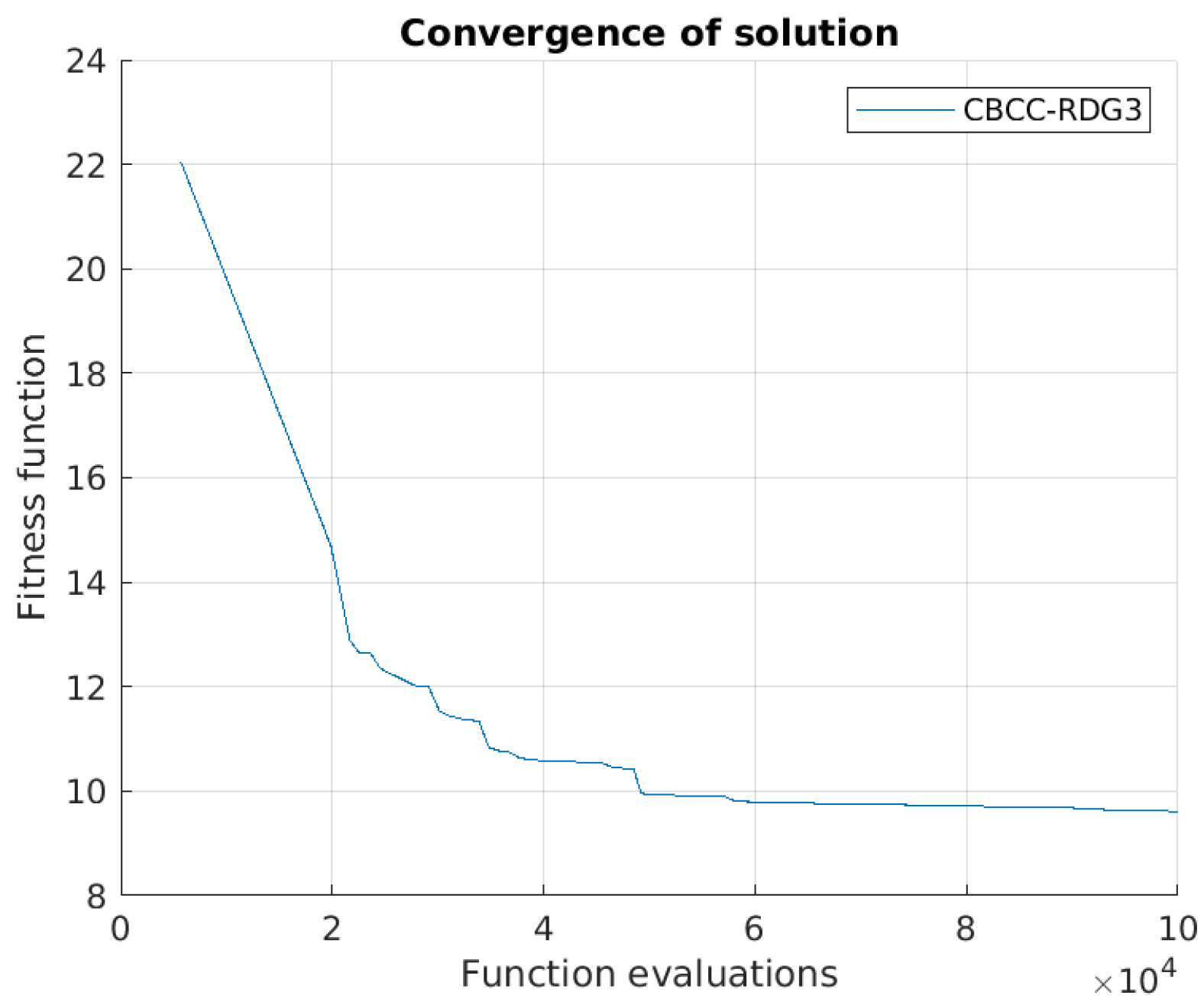

- The competition organizers allow a maximum of 100,000 function evaluations.

- The total dimension of the problem is 940.
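The two limits above can be enforced by wrapping the objective function. The sketch below is a hypothetical helper (`BudgetedObjective` and `max_generations` are our names, not part of the competition code) that counts evaluations, rejects vectors of the wrong length, and estimates how many generations a population-based method can afford, assuming the initial population and each later generation cost one evaluation per individual.

```python
from typing import Callable, Sequence

MAX_EVALS = 100_000  # evaluation limit set by the competition organizers
DIM = 940            # total problem dimension


class BudgetedObjective:
    """Wrap an objective: check the input length and stop at the evaluation limit."""

    def __init__(self, func: Callable[[Sequence[float]], float],
                 max_evals: int = MAX_EVALS, dim: int = DIM) -> None:
        self.func = func
        self.max_evals = max_evals
        self.dim = dim
        self.evals = 0

    def __call__(self, x: Sequence[float]) -> float:
        if len(x) != self.dim:
            raise ValueError(f"expected {self.dim} variables, got {len(x)}")
        if self.evals >= self.max_evals:
            raise RuntimeError("function-evaluation budget exhausted")
        self.evals += 1
        return self.func(x)


def max_generations(pop_size: int, budget: int = MAX_EVALS) -> int:
    """Generations left after evaluating the initial population (pop_size evals each)."""
    return (budget - pop_size) // pop_size


print(max_generations(100))  # 999
```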

4. Solution Approach

4.1. Known Methods

4.2. CBCC-RDG3

Algorithm 1. CBCC-RDG3.

4.2.1. RDG3

4.2.2. CMA-ES

Algorithm 2. CMA-ES.

4.3. Genetic Algorithm

1. Initialization. A set of vectors, called the population, is randomly generated, where G is the number of generations and NP is the population size. The fitness function is then evaluated for every vector in the population.

2. Selection. Either the parent vector or its trial vector, whichever has the better fitness value, is kept for the next generation.

3. Recombination. Trial vectors are generated from the current generation using a recombination operator, which combines each mutation vector with an individual from the population.

4. Mutation. At every generation, a mutation vector is generated for each vector of the population using a mutation operator.

Steps 2–4 are repeated until the maximum number of iterations or function evaluations is reached.
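The generic scheme above, with its parent, mutation and trial vectors, uses differential-evolution (DE) terminology. A minimal sketch of it follows, assuming the classic DE/rand/1 mutation, binomial crossover, F = 0.5, CR = 0.9 and a sphere test function; none of these choices is specified in the text. Within each generation the code applies the operators in the order in which they depend on each other: mutation, then recombination, then selection.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def de_minimize(f: Callable[[Vector], float], dim: int, lo: float, hi: float,
                np_: int = 20, generations: int = 400, F: float = 0.5,
                CR: float = 0.9, seed: int = 0) -> Tuple[Vector, float]:
    rng = random.Random(seed)
    # Initialization: NP vectors drawn uniformly from [lo, hi], each evaluated once.
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(np_)]
    fit = [f(x) for x in pop]
    for _ in range(generations):
        new_pop, new_fit = [], []
        for i in range(np_):
            # Mutation (DE/rand/1): v = x_r1 + F * (x_r2 - x_r3), r1, r2, r3 distinct and != i.
            r1, r2, r3 = rng.sample([j for j in range(np_) if j != i], 3)
            v = [pop[r1][k] + F * (pop[r2][k] - pop[r3][k]) for k in range(dim)]
            # Recombination (binomial): take each gene from v with probability CR;
            # gene j_rand always comes from v, so the trial differs from the parent.
            j_rand = rng.randrange(dim)
            u = [v[k] if (k == j_rand or rng.random() < CR) else pop[i][k] for k in range(dim)]
            u = [min(max(uk, lo), hi) for uk in u]  # clip to the box constraints
            # Selection: keep the trial vector if it is at least as good as the parent.
            fu = f(u)
            if fu <= fit[i]:
                new_pop.append(u)
                new_fit.append(fu)
            else:
                new_pop.append(pop[i])
                new_fit.append(fit[i])
        pop, fit = new_pop, new_fit
    best = min(range(np_), key=lambda j: fit[j])
    return pop[best], fit[best]


def sphere(x: Vector) -> float:
    return sum(xi * xi for xi in x)


x_best, f_best = de_minimize(sphere, dim=5, lo=-5.0, hi=5.0, seed=1)
print(f_best)
```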

In the GA used in this work, the four steps were implemented as follows:

1. Initialization. The required number of individuals is generated with a random number generator that draws numbers uniformly from the desired range.

2. Selection. Stochastic universal sampling was used. It is a single-phase sampling algorithm with minimum spread and zero bias.

3. Recombination. The default crossover function, scattered crossover ('crossoverscattered'), generates a random binary vector, takes the genes where the vector is 1 from the first parent and the genes where it is 0 from the second parent, and combines them to form the child.

4. Mutation. Gaussian mutation was used: it adds a random number drawn from a Gaussian distribution with mean 0 to each entry of the parent vector.
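The selection, crossover and mutation operators listed above can be sketched in a few lines of Python. This is an illustrative re-implementation, not the toolbox code used in the study. Because stochastic universal sampling needs non-negative weights where larger means better, a minimization problem first needs a fitness-scaling step; here we assume rank scaling with weights proportional to 1/√rank. The fixed mutation standard deviation `sigma` and all function names are also our assumptions.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def rank_expectations(costs: Sequence[float], n_parents: int) -> List[float]:
    """Assumed rank scaling: best (lowest cost) has rank 1, weight ~ 1/sqrt(rank),
    rescaled so the weights sum to the number of parents to select."""
    order = sorted(range(len(costs)), key=lambda i: costs[i])
    raw = [0.0] * len(costs)
    for rank, i in enumerate(order, start=1):
        raw[i] = 1.0 / math.sqrt(rank)
    total = sum(raw)
    return [n_parents * r / total for r in raw]


def stochastic_universal_sampling(weights: Sequence[float], n: int,
                                  rng: random.Random) -> List[int]:
    """One spin of n equally spaced pointers over the cumulative weights."""
    step = sum(weights) / n
    pointer = rng.uniform(0.0, step)
    chosen, idx, cum = [], 0, weights[0]
    for _ in range(n):
        # Advance to the segment [cum - w_idx, cum) that contains the pointer.
        while cum <= pointer and idx < len(weights) - 1:
            idx += 1
            cum += weights[idx]
        chosen.append(idx)
        pointer += step
    return chosen


def scattered_crossover(p1: Sequence[float], p2: Sequence[float],
                        rng: random.Random) -> Vector:
    """Random binary mask: gene from p1 where the mask is 1, from p2 where it is 0."""
    mask = [rng.random() < 0.5 for _ in p1]
    return [a if m else b for a, b, m in zip(p1, p2, mask)]


def gaussian_mutation(x: Sequence[float], sigma: float, rng: random.Random) -> Vector:
    """Add zero-mean Gaussian noise with standard deviation sigma to every entry."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]


rng = random.Random(0)
costs = [4.0, 1.0, 3.0, 2.0]
parents = stochastic_universal_sampling(rank_expectations(costs, 4), 4, rng)
child = gaussian_mutation(scattered_crossover([0.0] * 3, [1.0] * 3, rng), 0.1, rng)
print(parents, child)
```

With a single random offset and equally spaced pointers, each individual is selected either floor or ceil of its expected number of times, which is the "minimum spread" property mentioned above.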

4.4. HyDE-DF

Algorithm 3. HyDE-DF.

5. Simulation Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Run | Fitness | Avg. convergence rate | Time spent |
|---|---|---|---|
| Run 1 | 8.86501141 | −0.131981 | 134.466 |
| Run 2 | 8.08449529 | −0.141198 | 131.738 |
| Run 3 | 7.0025118 | −0.152127 | 135.624 |
| Run 4 | 9.1514026 | −0.122969 | 127.689 |
| Run 5 | 8.0904988 | −0.142578 | 133.0495 |
| Run 6 | 7.936813 | −0.134536 | 127.6906 |
| Run 7 | 8.1752147 | −0.141713 | 133.892 |
| Run 8 | 8.6713717 | −0.127540 | 121.833 |
| Run 9 | 8.7980497 | −0.135358 | 135.251 |
| Run 10 | 9.280903 | −0.129113 | 135.896 |
| Run 11 | 8.485648 | −0.130553 | 129.031 |
| Run 12 | 7.002512 | −0.152127 | 137.488 |
| Run 13 | 8.700719 | −0.128485 | 131.982 |
| Run 14 | 8.3261197 | −0.132086 | 130.299 |
| Run 15 | 8.1971067 | −0.141490 | 135.037 |
| Run 16 | 8.4959366 | −0.131720 | 132.182 |
| Run 17 | 7.9112851 | −0.142948 | 140.537 |
| Run 18 | 8.3895182 | −0.132753 | 132.206 |
| Run 19 | 7.9224518 | −0.142835 | 156.714 |
| Run 20 | 8.8456134 | −0.134872 | 150.9896 |

| Method | Avg. fitness | Std. fitness | Var. fitness | Min. fitness | Max. fitness | Avg. time |
|---|---|---|---|---|---|---|
| CBCC-RDG3 | 8.31665 | 0.5998037 | 0.3598 | 7.002511 | 9.2809 | 134.6798 |
| HyDE-DF | 8.05981 | 0.4778170 | 0.2283 | 6.974658 | 8.8835 | 103.1191 |
| GA | 10.4546 | 0.798004 | 0.63681 | 8.657787 | 11.859997 | 1150.9098 |
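The per-run table does not name its method, but a quick consistency check ties it to the summary table: recomputing the statistics from its 20 fitness and time values reproduces the CBCC-RDG3 row. The check below uses Python's standard `statistics` module; the agreement holds for the sample (n − 1) standard deviation and variance, not the population versions (which give about 0.585 for the standard deviation), which suggests the summary reports sample estimators.

```python
import statistics

# Fitness and time of the 20 runs, copied from the per-run table.
fit = [8.86501141, 8.08449529, 7.0025118, 9.1514026, 8.0904988,
       7.936813, 8.1752147, 8.6713717, 8.7980497, 9.280903,
       8.485648, 7.002512, 8.700719, 8.3261197, 8.1971067,
       8.4959366, 7.9112851, 8.3895182, 7.9224518, 8.8456134]
time_spent = [134.466, 131.738, 135.624, 127.689, 133.0495,
              127.6906, 133.892, 121.833, 135.251, 135.896,
              129.031, 137.488, 131.982, 130.299, 135.037,
              132.182, 140.537, 132.206, 156.714, 150.9896]

summary = {
    "mean": statistics.mean(fit),
    "stdev": statistics.stdev(fit),        # sample standard deviation (n - 1)
    "variance": statistics.variance(fit),  # sample variance (n - 1)
    "min": min(fit),
    "max": max(fit),
    "mean time": statistics.mean(time_spent),
}
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```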


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bezmaslov, M.; Belyaev, D.; Vasilev, V.; Dolgintseva, E.; Yamshchikova, L.; Petrosian, O. Optimizing DSO Requests Management Flexibility for Home Appliances Using CBCC-RDG3. Computation 2022, 10, 188. https://doi.org/10.3390/computation10100188
