A Large Effective Touchscreen Using a Head-Mounted Projector

Abstract

1. Introduction

2. Related Work

2.1. UIs Using a Projector

2.2. Peephole Interaction Using a Handheld Projector

2.3. UIs Using a Head-Mounted Projector

2.4. UIs Using a Head-Mounted Display

3. Proposed System

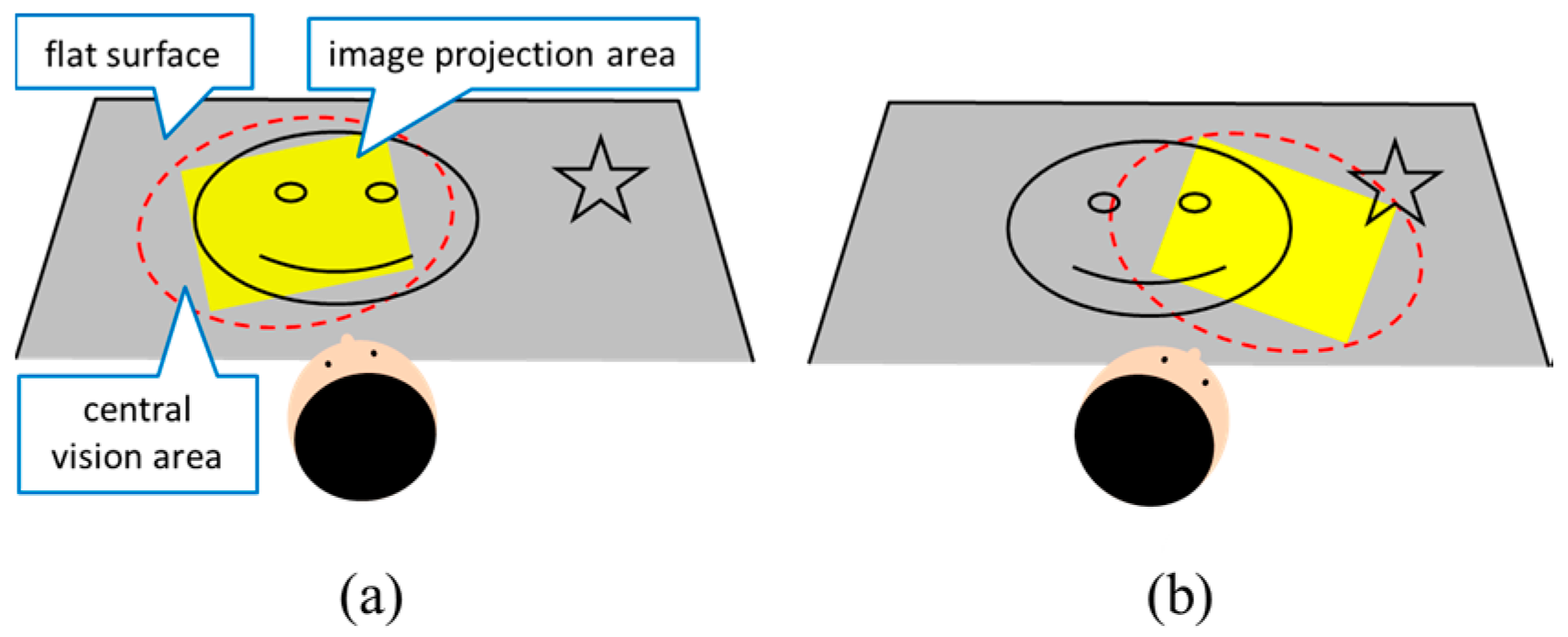

3.1. Overview

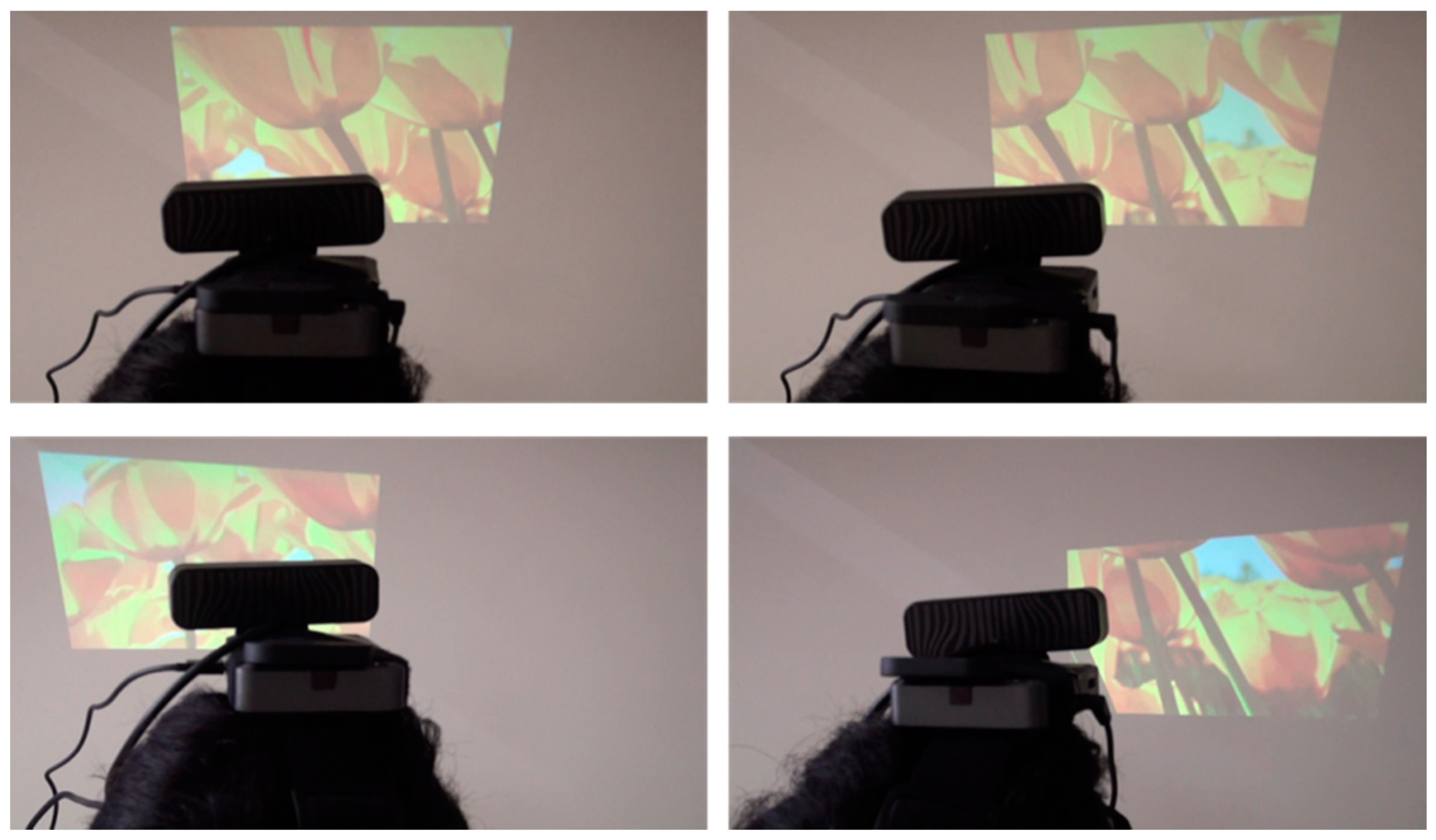

3.2. Experimental System

3.3. Superimposing Images

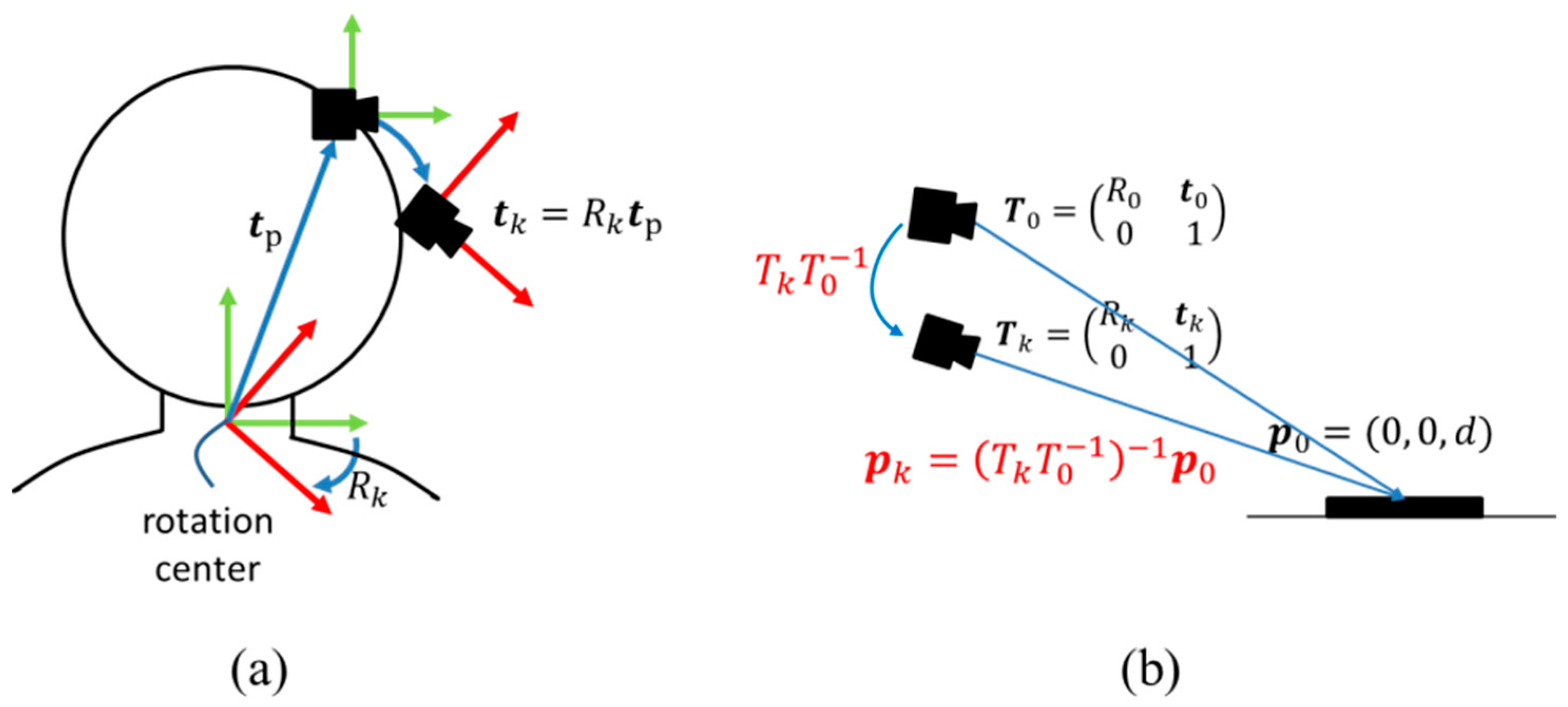

3.4. Registration

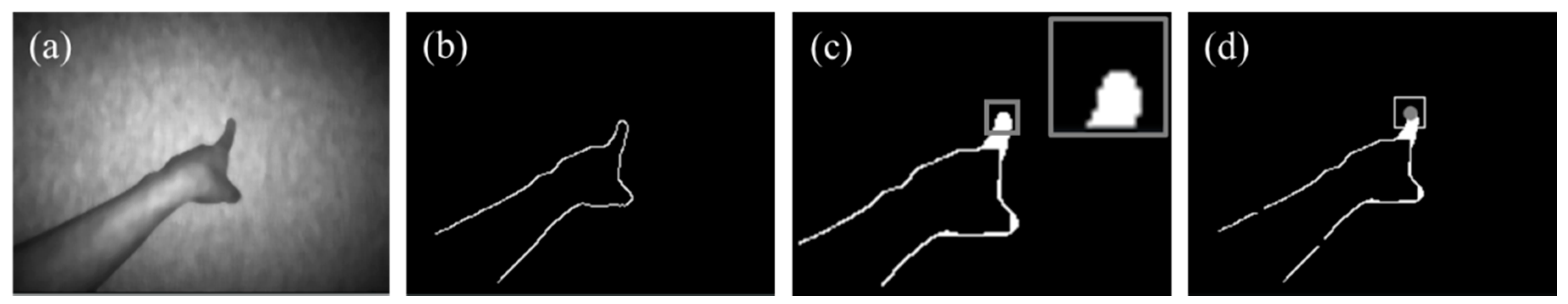

3.5. Touch Detection

4. Evaluation

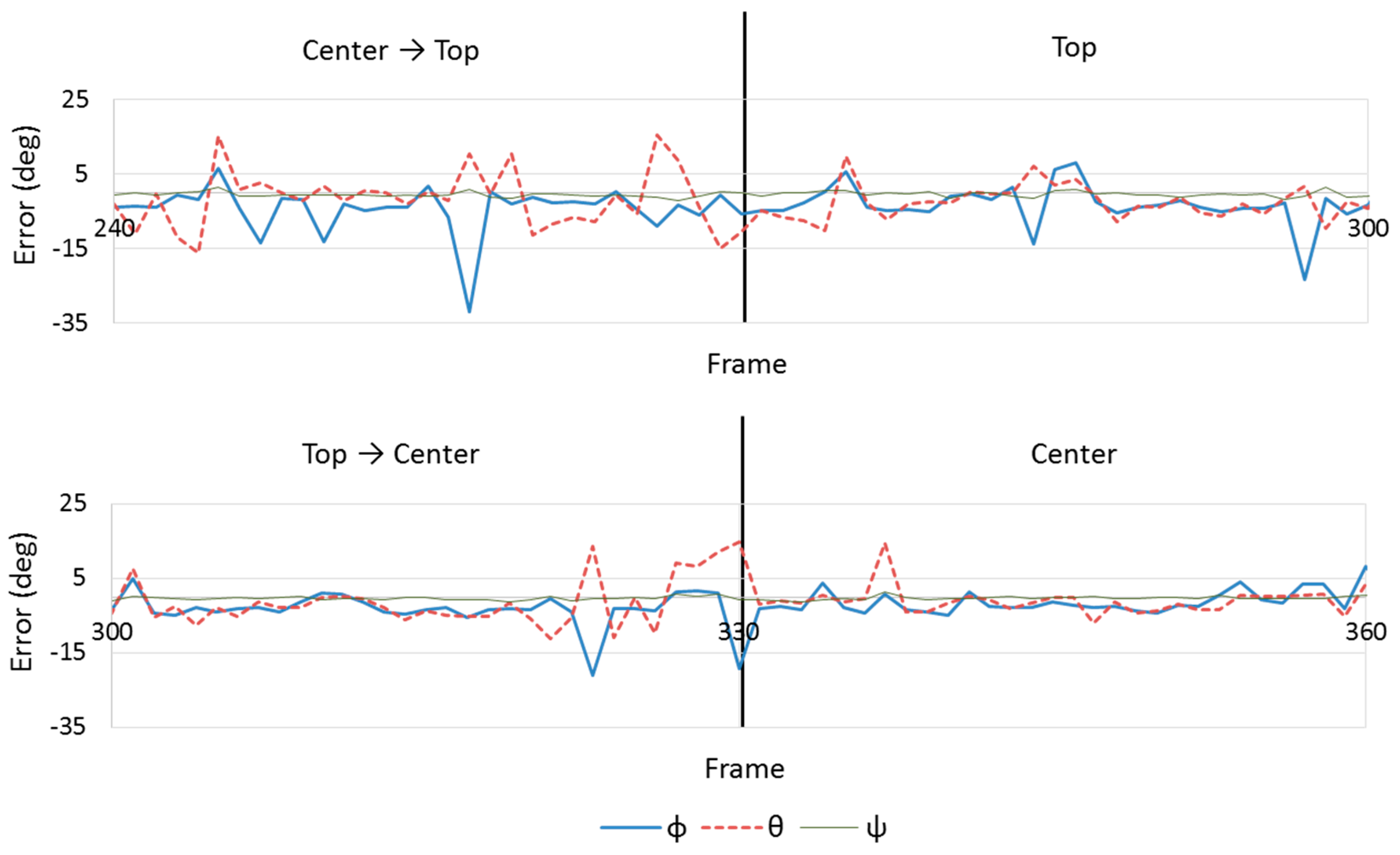

4.1. Registration Accuracy

4.2. Delay of the System

4.3. Limitation of the System

4.3.1. DOF of Head Movement

4.3.2. Delay

4.3.3. Range of Image Projection

4.3.4. Size

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| | x [mm] | y [mm] | z [mm] | [deg] | [deg] | [deg] |
|---|---|---|---|---|---|---|
| MAE | 10.20 | 7.20 | 14.28 | 4.02 | 4.69 | 1.01 |
| RMSE | 14.64 | 9.75 | 17.70 | 5.42 | 6.74 | 1.39 |
| SD | 12.96 | 9.45 | 15.54 | 5.09 | 6.72 | 1.39 |
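
The three error statistics reported in the table (MAE, RMSE, SD) can be illustrated with a minimal sketch. It assumes, because the source does not state it, that SD is the population standard deviation of the signed errors; under that assumption RMSE squared equals the squared mean error plus SD squared. The function name `error_stats` and the sample values are hypothetical and are not data from the paper.

```python
import math


def error_stats(errors):
    """Return (MAE, RMSE, SD) for a sequence of signed errors."""
    n = len(errors)
    mean = sum(errors) / n
    # Mean absolute error: average magnitude of the errors.
    mae = sum(abs(e) for e in errors) / n
    # Root-mean-square error: penalizes large errors more than MAE does.
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    # Population SD of the signed errors (assumption: the source does not
    # say which SD definition it reports).
    sd = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    return mae, rmse, sd


# Hypothetical signed position errors in mm, for illustration only.
samples = [3.0, -1.0, 4.0, -2.0, 6.0]
mae, rmse, sd = error_stats(samples)
print(f"MAE={mae:.2f} RMSE={rmse:.2f} SD={sd:.2f}")
```

With these definitions MAE can never exceed RMSE, and RMSE can never be smaller than SD. Every column of the table satisfies both orderings, so the table is consistent with this reading, although that does not confirm the assumption.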

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kemmoku, Y.; Komuro, T. A Large Effective Touchscreen Using a Head-Mounted Projector. Information 2018, 9, 235. https://doi.org/10.3390/info9090235