1. Introduction

Nowadays, a common topic of debate is the claim that robots, social and asocial, will dehumanize societies. With the demography in many developing countries showing the ageing of societies, multiple activities require increasing levels of automation. In manufacturing workplaces, the need to improve the robustness and efficiency of the workforce leads to the use of robots [1], sometimes with the argument that it will reduce workplace fatigue (see [2,3,4]). In fact, many workplaces other than manufacturing operate on sequences of routines and, hence, human stress levels tend to build up naturally. Examples of such professions are call center operators and salespersons. The repeated sales pitches, with the pressure to succeed in selling and the potentially poor interactions with prospective customers, are stress factors (see [5]). These are jobs/tasks that include stress-generating routines and, hence, are natural candidates for replacement with automated/autonomous/intelligent strategies. A key question is whether such stressful routines, which include recognizing and generating emotions and evaluating, on the fly, the information being exchanged between robot and human (e.g., for hidden meanings), can be implemented with currently available technologies.

At home, the care of the elderly and of people with disabilities has already led to a whole new area of research in robotics (the literature and media coverage are extensive; see, for example, [6,7,8,9]). In professional healthcare, robots are being used to help caregivers, remind patients to take their medications, help carry people (see the Robear [10], p. 30), or simply act as companions (see the Paro robot [11]).

With the expansion of caregiving applications, some authors point to ethical dangers, namely in elderly care [12,13], and to the need to humanize healthcare in general [14]. These fears indirectly assign a human quality to social robots, and seem biased since, for example, similar concerns can be raised against smartphones (essentially, as far as reasoning and decision-making processes are concerned, there is no difference between a social robot and a smartphone running software that embeds human personality traits and verbal communication skills). Thus, the aesthetics and the ability to move, which is intrinsic to robots, seem to play a part in these concerns. While having a smart watch/phone that remembers daily tasks and/or makes suggestions tends to be seen as a “product” utility, the same functionality equipped with autonomous motion skills tends to be seen differently.

In addition to ethical fears/dangers, from a social robotics perspective, caring is an activity that poses essentially the same problems as the activities in the context of call centers or selling goods: the interaction between humans and the autonomous agents/robots must emulate the interactions among humans. However, though there is a consensus that caring represents an opportunity to help people [15] (and, simultaneously, to generate business opportunities), it is clear that there is a long way to go before humans feel comfortable having machines take care of their loved ones. Apart from the ethical issues, namely, the future dystopia claimed in [13], as of 2009, market analysts recognized that most robot systems were still in the research phase [16]. As of 2017, the robots already on the market are described as responding with stock phrases and being unable to develop a conversation [17].

However, the gap between social robots and humans is likely to decrease rapidly, given the huge resources currently involved, and the recent developments in related technologies, e.g., learning. One may conjecture an exponential decrease—the interest in human-robot interaction and related issues has been referred to as increasing exponentially (see, for example [

18,

19]), which, assuming an optimistic view of R&D time-to-market, supports the conjecture. As it is happening with other autonomous/intelligent technologies, e.g., autonomous cars, as the distance approaches zero, the difficulty of the scientific/engineering problems scales up (see, for instance, the comments by E. Musk in IEEE Spectrum [

20], which seem to have been confirmed by the current reality). Still, one of the factors being referred to as the cause for the discrepancy between the amount of research resources and the commercial output has been the field testing at a limited scale [

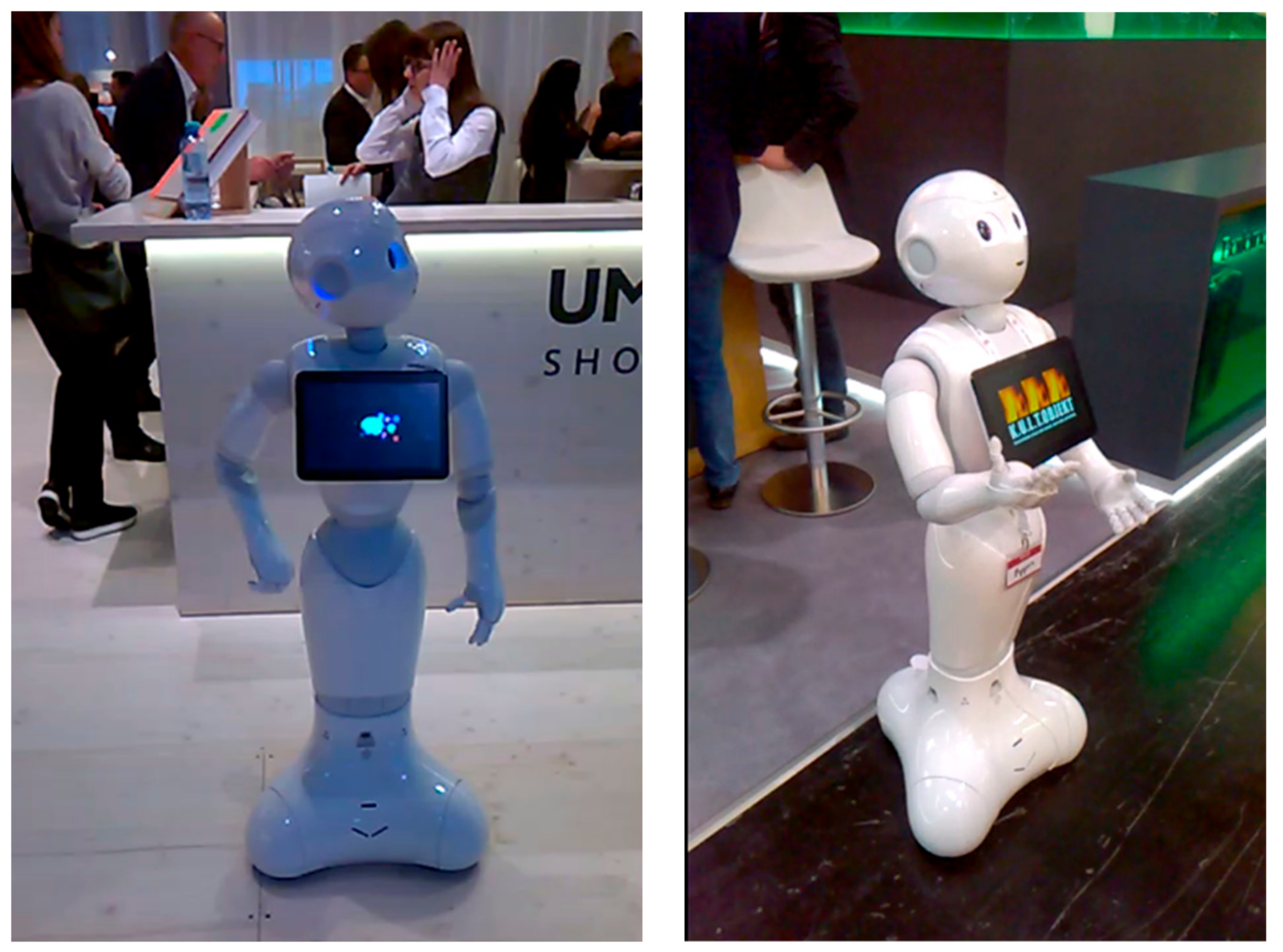

16]. In recent years, multiple projects embraced field testing (see the European projects Monarch and Robot-Era, and the numerous projects and commercial usage using the Pepper (see

Figure 1) and NAO robots from Softbank Robotics). However, the aforementioned comment in [

17] still applies.

Explicit and implicit forms of communication are still a major issue in social robotics. Though explicit communication, using voice and/or haptic devices, gets the majority of the attention from the research community, implicit forms, such as movement, are also extremely important to convey intentions and/or hidden meanings. In fact, if a robot changes its pose in a way that matches that of the humans in a social environment, it is more likely to achieve some form of social mingling/integration, as it conveys a primary perception of liveness (see [21]) and predictability.

Unsurprisingly, robots have been regarded as instigating all sorts of fears in people. The issue of predictability arises as soon as a human faces a robot. Even though a certain dose of skepticism towards new technologies may be considered natural, social robots have been “credited” (mainly by the media) with skills far beyond realistic expectations, regarding both static (not involving movement) and dynamic (involving movement) interactions.

In addition, the role of the media, nudging people to (sometimes) extreme emotional states of mind (as caused by the fear induced by a hypothetical social domination by robots), prevents a proper perception of the advantages of social robots. Whether media ethics related to robotics is (generically) beneficial to society in general seems a question worth some analysis.

Research is frequently oriented towards having robots mimic human skills (see, for instance, [22] and the claim, therein, that robots need to adapt to human environments). Though this may come naturally from the success of humans as a living species, forcing a mutual understanding, i.e., developing robots targeting specific needs of humans, may hinder the full potential of social robotics.

The paper starts by discussing human fears and prejudice, and the slow acceptance of some technologies by societies that allows them to reach stable states. Throughout the paper, intermediate conclusions are collected from the different knowledge areas considered. Evidence from out-of-lab experiments is presented. This evidence was obtained spontaneously, i.e., the experiments were not scheduled in advance to test a specific thesis. Instead, the experiments aimed simply at gathering information on interesting situations arising when the robot is running in fully autonomous mode and no expert/technical help is present in the environment.

The main claim of the paper is that social robots can be used to smooth out human behaviors, hence preventing dehumanization, just by showing humans the frailties of their behaviors and how difficult it is to mimic them. This idea of smoothing represents, in fact, the adaptation to different social environmental conditions created by the introduction of robots as de facto living social entities. The increase in the complexity of interactions by small increments does not aim, specifically, at improving the perception of the humans (complex/elaborate interactions may trigger fears of hidden meanings and, hence, an embedded sense of stability may suggest/lead to a refusal of social interactions with robots). However, it is not difficult to accept that the simple presence of a robot in a social environment may expand the way the environment is perceived by the corresponding native humans (an analogy with the Tetris example/argument in [23] is possible: the robot can be identified with a falling Tetris piece that is manipulated by a player, i.e., the humans at the scene, in order to fit into the environment).

Moreover, social robots have the potential to nudge people to seek higher education levels, in order to understand the complexity of integrating a robot in a social environment. Social robots, namely those of average skills, can be configured as communication facilitators/enablers that can change behaviors. Nudges are already being seen as simple solutions for difficult problems (e.g., as an alternative to legislation/taxation [24], p. 9). People having some inclination/curiosity about technology may find, in the interaction with robots, a good reason to expand their knowledge. Nevertheless, it does not seem plausible that social robots will be a mass solution to increase the efficiency of educational processes.

2. Fearing the Unknown?

Fear of the unknown is considered a fundamental fear and a key influencer of the neurotic personality trait (see [25], p. 14). The extensive visibility of robots provided by the media essentially creates expectations about robots, but the innate perception of the complexity of human beings is likely to set the level of knowledge close to unknown.

However, the lack of knowledge can still generate some expectations, namely, regarding motion. The Tweenbots experiment (www.tweenbots.com) is paradigmatic of the empathy that people may develop towards an entity that is (i) non-living and (ii) highly predictable, as the package leads people not to expect any autonomous motion.

Regarding empathy towards humans and robots, the authors of [26] show that, to some extent, the mechanisms controlling empathy are similar, i.e., the same areas of the brain are activated when a human observes another human or a robot being mistreated, though the strength is significantly higher in the human case.

The commercial presence of social robots is rapidly increasing. Around 10,000 Pepper units are already in operation, in uses such as hosting, as shown in Figure 1, requiring empathy and emotion-recognition abilities. Still, the perception conveyed to people is that the “robot’s spoken responses feel canned, instead of being synthesized in real-time. For now, Pepper can only say some stock phrases …” [17]. However, with such numbers already “living” in social environments, people may be starting to bring their expectations to a realistic level.

The case of Sanbot (en.sanbot.com), with around 60,000 units already operating in China (see [27]), is also interesting. Allegedly, IBM Watson conversational skills and Amazon Alexa voice recognition should lead to minimal refusal or, possibly, high acceptance of the robot in a generic social environment.

However, when operating amid the diversity of cultural backgrounds that often exists in complex social environments, some people may not appreciate interacting with a robot because, if it is too smart, it may be difficult to trust, as there may be no quick way of knowing what it is capable of.

Thus, a social robot must exhibit its intelligence convincingly but also carefully, convincing people that there are no hidden meanings/feelings/intentions. Establishing trust is thus a key issue. Moreover, as with humans, accepting errors plays an important part in it; that is, intentional and non-intentional errors require careful management, to help convey an adequate notion of trust.

In addition to the fear of the physical actions that social robots can take, the fact that they can record image and sound triggers, immediately, fears of privacy violation (and, in some situations, legal issues may be at stake). Moreover, even if the hardware and software making the robots can be guaranteed to be safe, it is difficult to ensure that no third party installs a device onboard (e.g., a micro-camera or sound recorder) that operates independently of the robot (which, then, is simply acting as a transporter vehicle for the spying devices).

Of course, similar fears should already exist relative to “smart” technologies. However, creative nudging techniques have often been used to overcome this (e.g., emails and webpages reminding people to check for specific events, pre-installed software apps of specific manufacturers, and catchy interface designs that are non-intrusive and, at times, useful), so it is reasonable to assume that similar techniques can help remove many fears regarding robots. Nudging is known to be a powerful, cost-effective manipulation technique (see [28]). Moreover, designing digital nudges is becoming a structured process that can also be applied in the robotics domain (see, for instance, [29]).

4. Humanizing Robotics Technology

Caring for others is a common behavior in humans, though some authors refer to it as a need (see, for instance, [37]) and even as a basic dimension of the human being (see the comment on Heidegger’s work in [38], p. 14). Caring for a robot, a non-living agent (in the biological sense), may require either additional social skills, namely, an egalitarian morality for humans and machines, or an understanding of technology possibilities/limitations combined with personality traits leading to the desire of having it running flawlessly.

Technology limitations tend to act in favor of humanization, as they convey a perception of weakness/frailty that may trigger sympathetic feelings. This is precisely a key finding in some ongoing experiments at IST (Instituto Superior Técnico/Institute for Systems and Robotics, Lisbon, Portugal).

The type of social robotics experiments considered in the paper often raises practical issues regarding the scientifically valid collection of data. Regular experiments rely (i) on direct observation and recording, which are in general constrained by privacy regulations that, when duly addressed, easily bias the results, and (ii) on post-processing analysis, i.e., feature extraction pointing to concepts that are often difficult to disambiguate (e.g., emotions), and subsequent statistical processing. In the case of purely observational, non-scheduled experiments, it is necessary either to wait until relevant events are triggered (which may require a long time), or to sample the environment with short-time experiments. The rate of events may thus be small, as is the case for those reported in this paper, which tends to further increase the time necessary for firm conclusions to be drawn.

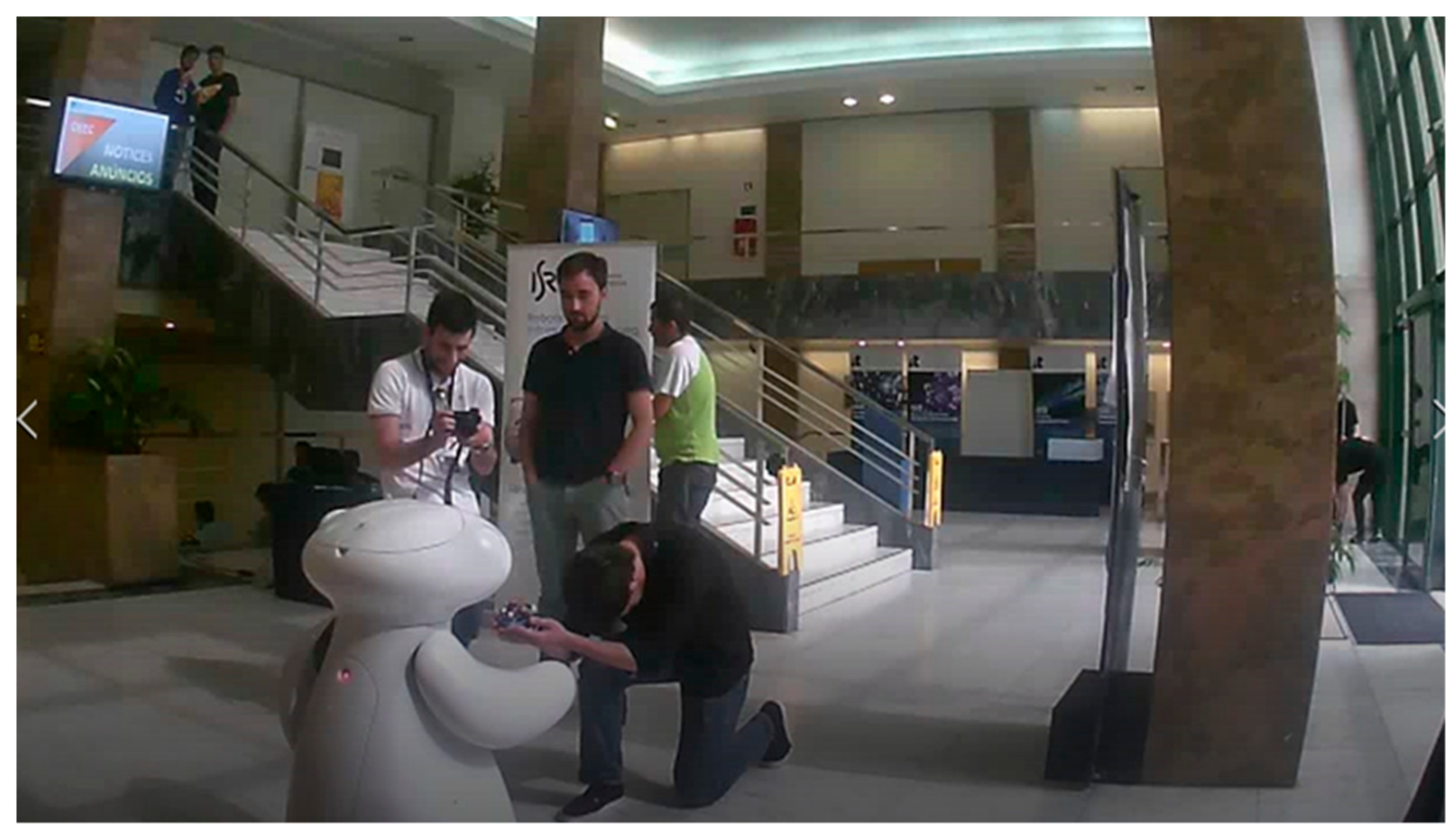

Figure 2, Figure 3 and Figure 4 show a social experiment with an “mbot” robot (www.monarch-fp7.eu) in a non-lab environment at IST. The experiment aimed at verifying the reaction of ordinary people towards a robot equipped with very basic interaction skills. The space was a lobby of a university building. The audience was formed mainly of engineering students (of heterogeneous curricula), and people without a technological background, namely, administrative and maintenance staff.

The social skills of the robot were limited to (i) asking people for a handshake whenever someone is detected in front of the robot while it is waiting, and (ii) asking people to follow the robot when navigating between a collection of locations in the environment.

The robot was fully autonomous, and the people managing the experiment stayed out of sight during the entire duration, and did not intervene in any way. The experiment duration was constrained only by the duration of the batteries (approximately 4 h).

This particular type of robot does not usually operate in this type of open public area. Its physical shape is known to most people, but not its social skills, though there is a general understanding that it is a programmable device. Some people knew that it was used in the Robotics labs located in the same building.

People passing by naturally looked at the robot. The photos show common situations observed during the experiment, with people spontaneously initiating an interaction (trying to shake hands and following) with the robot.

People willing to try to interact with the robot are natural candidates to exhibit more theatrical behaviors, as shown in Figure 4.

People’s willingness to interact with the robot and such theatrical behaviors, namely expressions of affection, have the practical effect of humanizing the environment, with the side effect that bystanders seeing people playing with the robot get a perception of friendship or, at least, non-dangerousness from the robot. People naturally tend to make the most of a situation and to attribute human qualities to artificial entities (and behave accordingly). Also, people are known to react favorably to generic humanized entities (see, for example, [39] on the advantages of humanizing a brand to get maximal response from people/consumers).

Once it is accepted that people are willing to work to humanize environments, advantages emerge in the reduction of stress levels and of the burnout rate of professionals (see [40] on the relations between stress levels and job satisfaction). Therefore, having people play (possibly beyond simple interactions) with robots may be a beneficial activity.

The same type of robot is being used in a pediatric ward of a hospital for basic interactions with children and acceptance studies. While the acceptance of the robot by the children was very good, it was a collection of a priori unexpected behaviors by visitors and staff that suggested the thesis in this paper.

Figure 5 shows a spontaneous interaction, triggered by a staff member, in the hospital ward. Even though the interaction capabilities of the robot were limited, as the staff member was already aware, there was an urge to interact with the robot. Other examples have been observed, namely, when people in the ward perceive new skills for the robot being tested. A frequent example is for people to tap the robot lightly on the head while passing by in the corridor. This behavior seems analogous to the light physical contact sometimes observed among people in stable social environments.

In general, there will be a multitude of admissible reasons for such behaviors. One may conjecture an analogy with, for example, the small breaks staff members take during a normal workday, which clearly include an escape from social pressures.

The fact that, in both experiments presented, no auxiliary/technical staff for the robot were in sight may have influenced the triggering of such behaviors. The presence of the robot in the environment seems to represent a catalyst for such behaviors. However, it must be emphasized that the theatrical behavior observed does not correspond to a bilateral interaction: the robot is not humanly aware in an emotional sense, only in a perceptual/sensory sense.

The examples above merely suggest the thesis of the paper: robots can facilitate humanizing an environment. The fact that the experiments occurred in real environments reinforces the suggestion. However, for a thorough analysis, the experiments must have a long duration, which is often not practical and involves significant resources. As studies on professional burnout emphasize the need to increase the interventions (see [41]), more such situations are likely to be reported.

The possibility that such behaviors by people towards social robots are caused by the mechanisms that regulate the empathy of humans towards inanimate objects (see [26,42]) cannot be ruled out, i.e., it would be a natural biological response. Nevertheless, this may be a natural mechanism in human biology to keep humans within some moral boundaries. An alternative explanation is that the demanding nature of many healthcare jobs may foster the emergence of urges to establish relations with social robots.

The dynamics of such behaviors may be subject to a novelty effect. This would be analogous to behaviors often seen among humans when a new element enters a social circle, with an initial attention/interest peak followed by a decrease over some period of time, eventually reaching a stationary level. As mentioned above, identifying such dynamics, e.g., the mean time between activations, may require long-run experiments, as, to avoid introducing any biases, these must be spontaneous.
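The novelty-effect dynamics described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that the rate of spontaneous interactions decays exponentially from an initial peak to a stationary level. This model, the function names, and the parameter values are hypothetical and are not taken from the experiments reported in this paper.

```python
import math


def interaction_rate(t_hours, peak_rate, stationary_rate, decay_hours):
    """Hypothetical rate of spontaneous interactions (events/hour).

    Assumption: exponential decay from peak_rate (at t = 0, when the robot
    is introduced) towards stationary_rate, with time constant decay_hours.
    """
    return stationary_rate + (peak_rate - stationary_rate) * math.exp(
        -t_hours / decay_hours
    )


def mean_time_between_events(t_hours, peak_rate, stationary_rate, decay_hours):
    """Mean time between activations (hours) at time t: the inverse of the rate."""
    return 1.0 / interaction_rate(t_hours, peak_rate, stationary_rate, decay_hours)


def expected_events(t_start, t_end, peak_rate, stationary_rate, decay_hours):
    """Expected number of events in [t_start, t_end].

    Closed-form integral of interaction_rate over the observation window.
    """
    transient = (peak_rate - stationary_rate) * decay_hours * (
        math.exp(-t_start / decay_hours) - math.exp(-t_end / decay_hours)
    )
    return stationary_rate * (t_end - t_start) + transient


if __name__ == "__main__":
    # Illustrative values only: a 4 h session, as constrained by battery life.
    print(expected_events(0.0, 4.0, peak_rate=6.0, stationary_rate=1.0, decay_hours=10.0))
```

Under these assumptions, a low stationary rate translates directly into a long mean time between activations, which is one way to see why spontaneous, non-scheduled experiments may need to run for long periods before the stationary level can be identified.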

5. Conclusions

Social robots are part of what is nowadays commonly called the “always on” culture (see [43]), of which smartphones are common agents (de facto transforming their human owners into social robots). The associated risks are clearly identified, namely, regarding privacy and mental and physical health via the so-called “telepressure” [44], which may lead to decreases in productivity/performance (even though not all stress can be considered harmful [45]). This leads, naturally, to the current trend against social robots and the fears of dehumanization. However, humans have been living since the dawn of mankind under weak privacy assumptions and have learned to conceal feelings/emotions/thoughts, preventing these from being misused by others. This paper runs counter to mainstream research, and points to the alternative possibility that robots can help humanize societies.

In a mixed human-robot society, humans can assume that robots have skills that (allegedly) foster privacy violations and, hence, develop their own skills to fool the robots, much as they do among themselves. This feedback process has the potential to improve humans’ awareness of their own personality processes and the recognition of their frailties. This is likely to play a positive role in humanizing societies.

The emergence of negative opinions and phobias is likely to occur, as it is a common behavioral pattern of humans to react against changes (or threats), namely when novel technologies are being introduced in a socially stable environment. Technology acceptance models (TAMs) have identified numerous relevant factors (see, for instance, the literature review in [46]); the perceptions of usefulness and ease of use are the core of these models, and were originally proposed as the drivers modulating the intention to use a technology (see [47]). Therefore, in addition to improving people’s awareness of their social condition, nudging them to perceive a potential added value of the technology is likely to boost their awareness of the field.

As noted in [1], lifelong learning will be a valuable skill in the future. This means that people will have to prepare themselves to live under a continuous learning requirement, that is, learning to live among robots. Even though this process may not be without incidents, as noted in [48], robots, automation, and augmentation technologies are here to stay.

Social robots are constantly evolving, supported by the increasing complexity of the technologies/systems they are made of. The number of these systems can easily grow, sometimes because they implement intrinsically complex systems, other times because implementation gets easier if a complex system is divided into subsystems. The bottom line is that the number of interconnected systems is growing, and new features are likely to add new systems to such complex networks, hence increasing the overall complexity. Though the main usage of TAMs is in the domain of Information Systems, the proximity with Social Robotics suggests that conclusions can also be drawn on this technology (several other models exist in the literature, e.g., the Unified Theory of Acceptance and Use of Technology Model, and the Theory of Planned Behavior, accounting, for example, for subjective norms; see, for instance, [49], [50], or [46] for literature reviews).

Two forces are running here in parallel: on one side, people will have increasingly better knowledge about the robots’ skills; on the other side, social robots will tend to be increasingly complex/sophisticated, and their predictability will decrease, i.e., they will be more like a human and less like a machine. The effect may be twofold. On one side, there may be a humanizing perception, as humans are often not predictable. Simultaneously, a fear of the unknown and/or some form of phobia against (social) robots may develop and, hence, constrain interactions.

Still, some authors argue that the humanization of robots is a “problem” (see [51]). Though humanization may smooth out integration, a number of problems deserve attention, e.g., how humans will manage their emotions and expectations towards the robots and towards other people who must interact with robots, which may require hindering the humanization.

The nudging by the media will, unquestionably, introduce a bias in the perception of social robots by people. Positive news is likely to increase the desire to try them out. Negative news will send an opposite message, e.g., the “firing” of a humanoid robot that was supposed to help customers of a supermarket but that, in fact, was not much of a help. Fictional works, namely by the movie industry, that produce believable scenarios represent, besides the nudging, a form of acceptance test that may promote the introduction of social robots. This, however, is a double-edged tool, as it also exposes the frailties of the technology. From “Robot & Frank” [52] to “Blade Runner” [53], social acceptance has been a concern of fictional movies. In the former example, the focus is put on the robot’s intellect while, in the latter example, the appearance and physical resilience/strength of the robots are also relevant factors. When considered jointly, these two fictional examples seem to ignore the uncanny valley paradigm (see [54]), as people do not seem to care significantly about the appearance of the robots (though they are anthropomorphized), implicitly suggesting that people are willing to accept morally challenging technologies, such as social robots, regardless of appearance (the societies simply developed strategies to accommodate technology). Also, the fact that data is increasingly becoming a commodity implicitly empowers people to opt for alternative ways of living and, most importantly, assigns them the responsibility of self-education, so that they understand the consequences of living in this new age in which robots are becoming omnipresent and develop skills to make any necessary adjustments, much as anticipated in fictional worlds such as those referred to above.