A Theory of Physically Embodied and Causally Effective Agency

Abstract

1. Introduction

2. Background

2.1. Structural Causal Models

Causal claims are much bolder than those made by probability statements; not only do they summarize relationships that hold [in the data generating process], but they also predict relationships that should hold when the [process] undergoes changes ... a stable dependence between X and Y that cannot be attributed to some prior cause common to both [and is] preserved when an exogenous control is applied to X.
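The distinction drawn in this passage, between a dependence that merely holds in the data and one that is preserved under exogenous control of X, can be illustrated with a small sketch. The model below is hypothetical: the binary variables, the graph (Z → X, Z → Y, X → Y), and all probability values are assumptions chosen for illustration, not taken from the paper.

```python
from itertools import product

# Hypothetical structural causal model over binary variables:
# Z is a common cause of X and Y, and X also influences Y.
P_Z1 = 0.5
P_X1_GIVEN_Z = {0: 0.2, 1: 0.8}
P_Y1_GIVEN_XZ = {(0, 0): 0.1, (1, 0): 0.3, (0, 1): 0.5, (1, 1): 0.7}  # keyed by (x, z)


def bern(p1, value):
    """Probability that a Bernoulli(p1) variable takes the given value."""
    return p1 if value == 1 else 1.0 - p1


def joint(x, y, z, do_x=None):
    """Joint probability; with do_x set, X is fixed exogenously and ignores Z."""
    pz = bern(P_Z1, z)
    if do_x is None:
        px = bern(P_X1_GIVEN_Z[z], x)
    else:
        px = 1.0 if x == do_x else 0.0
    py = bern(P_Y1_GIVEN_XZ[(x, z)], y)
    return pz * px * py


def prob_y1_given_x(x, do_x=None):
    """P(Y=1 | X=x), observationally or under the intervention do(X=do_x)."""
    num = sum(joint(x, 1, z, do_x) for z in (0, 1))
    den = sum(joint(x, y, z, do_x) for y, z in product((0, 1), repeat=2))
    return num / den


# Dependence seen in passively observed data (inflated by the common cause Z).
observed_gap = prob_y1_given_x(1) - prob_y1_given_x(0)
# Dependence that survives exogenous control of X.
causal_gap = prob_y1_given_x(1, do_x=1) - prob_y1_given_x(0, do_x=0)

print(f"observational difference: {observed_gap:.2f}")
print(f"interventional difference: {causal_gap:.2f}")
```

In this toy model the observational difference exceeds the interventional one because part of the observed association is due to the common cause Z; only the interventional difference is the kind of stable dependence the quotation calls causal.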

2.2. Causal Markov Processes

- For each s, s’ ∈ S and a ∈ A, the function π (· | s’; a) is a discrete probability measure on S.

- Given an initial state and conditional distributions for selecting actions conditional on the past history of actions and states, the joint distribution for the sequence of actions and states satisfies:
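The equation that this item introduces is not reproduced in the text above. A factorization consistent with the two conditions just stated is sketched below. The symbol $\theta_k$ for the action-selection distributions, the initial state $s_0$, and the indexing convention are assumptions introduced here for illustration; only $\pi$, $S$, and $A$ come from the definition.

```latex
\Pr(a_1, s_1, \ldots, a_n, s_n \mid s_0)
  = \prod_{k=1}^{n}
    \theta_k(a_k \mid s_0, a_1, s_1, \ldots, a_{k-1}, s_{k-1}) \,
    \pi(s_k \mid s_{k-1}; a_k)
```

Under this reading, each action may depend on the entire past history, while each new state depends on the past only through the previous state and the current action.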

2.3. Quantum Theory Basics

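- $\mathrm{Tr}(\mathcal{E}(\rho)) = \mathrm{Tr}(\rho)$;

- $\mathcal{E}(\rho)$ is a positive operator; and

- If τ is a density operator on the tensor product space $\mathcal{H} \otimes \mathcal{K}$ and $I_{\mathcal{K}}$ is the identity operator on $\mathcal{K}$, then $(\mathcal{E} \otimes I_{\mathcal{K}})\tau$ is also a positive operator.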

- $P_r P_r = P_r$ for each r;

- $P_r P_s = 0$ for r ≠ s; and

- $\sum_r P_r = I$.

- Mechanical evolution (von Neumann’s Process 2): A state $\rho$ evolving mechanically for d time units transforms to $\mathcal{E}_d(\rho)$, where $\mathcal{E}_d$ is a CPTP map satisfying $\mathcal{E}_0(\rho) = \rho$ and, in the case of time-invariant evolution, $\mathcal{E}_{d_1 + d_2}(\rho) = \mathcal{E}_{d_2}(\mathcal{E}_{d_1}(\rho))$.

- Reduction (von Neumann’s Process 1): The state $\rho$ undergoes an instantaneous and discontinuous transformation to $P_r \rho P_r / \mathrm{Tr}(P_r \rho P_r)$, where r is one of the eigenvalues of the reduction operator $R = \sum_r r P_r$, $P_r$ is the associated projection operator in the spectral decomposition, and $\mathrm{Tr}(P_r \rho P_r)$ is the probability of the outcome associated with eigenvalue r. The allowable reduction operators form a von Neumann algebra.
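The two processes just described can be sketched for a single qubit. This is a minimal illustration under stated assumptions, not the paper's model: mechanical evolution is taken to be unitary (a special case of a CPTP map), the rotation rate `omega` is a hypothetical parameter, and the reduction operator is assumed to be Pauli Z with eigenvalues +1 and -1.

```python
import math


def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]


def trace(a):
    return a[0][0] + a[1][1]


def evolve(rho, d, omega=1.0):
    """Process 2 for an assumed qubit: rho -> U rho U^dagger, U a rotation by omega * d."""
    c, s = math.cos(omega * d), math.sin(omega * d)
    u = [[c, -s], [s, c]]
    return matmul(matmul(u, rho), dagger(u))


def reduction(rho, projectors):
    """Process 1: map each eigenvalue r to (probability, post-reduction state)."""
    outcomes = {}
    for r, p in projectors.items():
        unnormalized = matmul(matmul(p, rho), p)
        prob = complex(trace(unnormalized)).real
        post = None  # left undefined when the outcome has zero probability
        if prob > 1e-12:
            post = [[x / prob for x in row] for row in unnormalized]
        outcomes[r] = (prob, post)
    return outcomes


# Assumed reduction operator R = (+1) P_plus + (-1) P_minus (Pauli Z).
PROJECTORS = {+1: [[1, 0], [0, 0]], -1: [[0, 0], [0, 1]]}

rho0 = [[1, 0], [0, 0]]                # start in the +1 eigenstate
rho_d = evolve(rho0, d=math.pi / 3)    # mechanical evolution for d time units
outcomes = reduction(rho_d, PROJECTORS)
for r, (prob, _) in outcomes.items():
    print(f"eigenvalue {r:+d}: probability {prob:.3f}")
```

The outcome probabilities sum to one and each post-reduction state has unit trace, which is what the projector conditions guarantee.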

3. A Causal Model of Physically Embodied Agents

3.1. Properties a Theory of Efficacious Free Choice Must Satisfy

- P1. Freedom. The theory contains a construct to represent free choices made by agents. That is, there are occasions, called choice points, at which there are multiple possibilities for the agent’s future behavior.

- P2. Attribution. The determination of which alternative is enacted at a given choice point is ascribed to the agent’s choice.

- P3. Efficaciousness. The elements representing free choices should be efficacious in the sense that they cause effects in the physical world that depend on the choices made by agents.

- P4. Physicality. The theory should be consistent with the laws of physics.

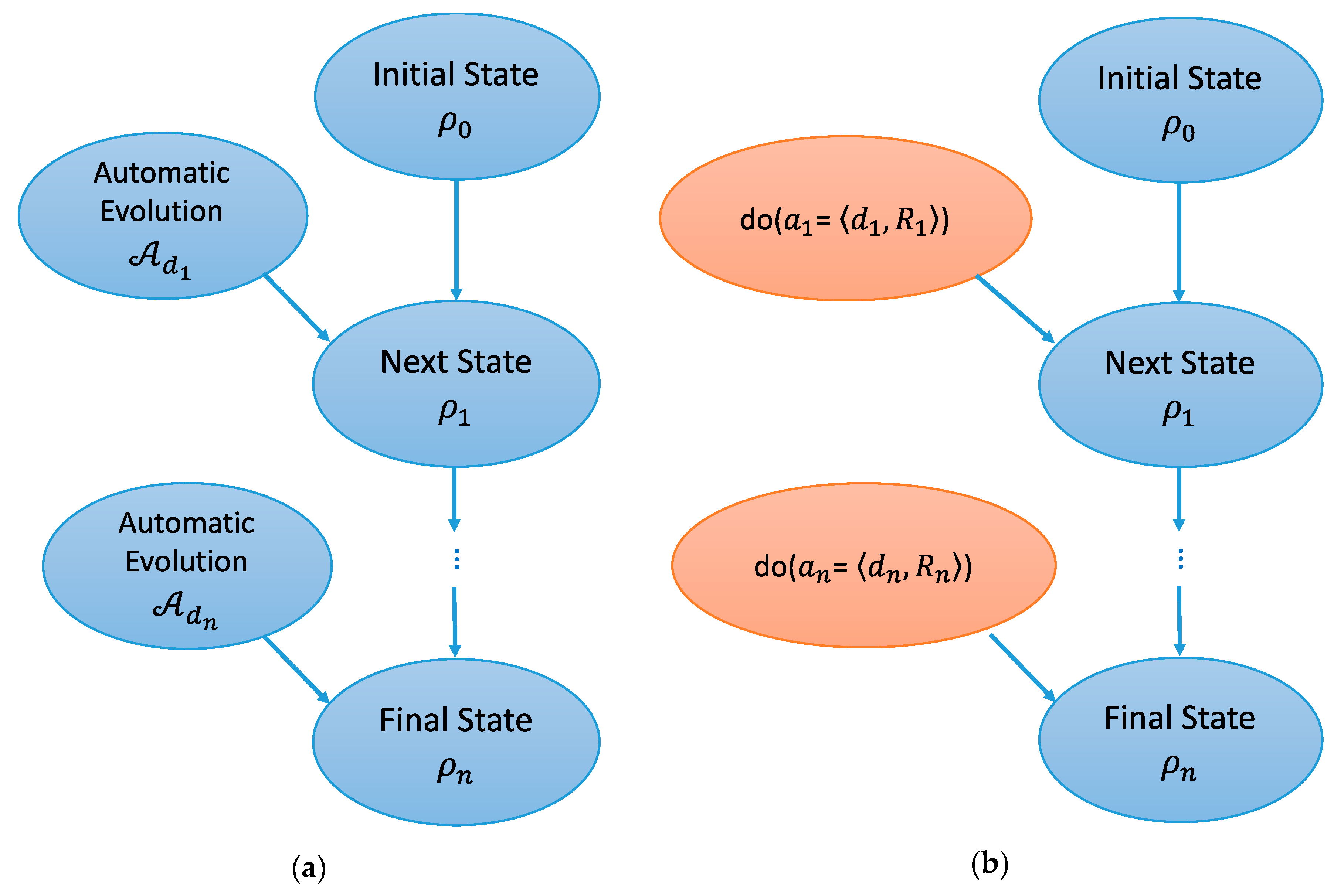

3.2. Quantum Theory as a Causal Markov Process

- State space: The states of a quantum causal Markov process are density operators on the Hilbert space of the quantum system.

- Action space: The allowable actions in a quantum causal Markov process are the tuples $(d, R)$, where d is a positive real number representing the time until the next reduction and R is a reduction operator. The allowable reduction operators form a von Neumann algebra over the Hilbert space of the quantum system.

- Transition distribution: According to Definition 1, the transition distribution for a causal Markov process is a set of probability measures on states, one for each combination of previous state and current action. Let ρ be the state just after the previous reduction, d the time until the next reduction, $\mathcal{E}_d$ the CPTP map representing mechanical evolution, and R the reduction operator applied after d time units, with $P_r$ the projection operator associated with eigenvalue r in the spectral decomposition of R. The initial state ρ evolves mechanically to $\mathcal{E}_d(\rho)$, at which point the state transitions abruptly to the outcome associated with one of the eigenvalues r. The probability of observing eigenvalue r is given by $\mathrm{Tr}(P_r \mathcal{E}_d(\rho) P_r)$. The post-reduction state if r is observed is $P_r \mathcal{E}_d(\rho) P_r / \mathrm{Tr}(P_r \mathcal{E}_d(\rho) P_r)$. The possible outcomes are mutually orthogonal.
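The state space, action space, and transition distribution listed above can be put together in a short simulation sketch. Everything specific here is an assumption for illustration: the system is a single qubit with real-valued density matrices, mechanical evolution is a rotation at a hypothetical rate `omega`, and the only reduction operator used is Pauli Z. The function names are likewise hypothetical.

```python
import math
import random


def mm(a, b):
    """Product of two real 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def transpose(a):
    return [[a[j][i] for j in range(2)] for i in range(2)]


def evolve(rho, d, omega=1.0):
    """Assumed mechanical evolution: rotation by angle omega * d."""
    c, s = math.cos(omega * d), math.sin(omega * d)
    u = [[c, -s], [s, c]]
    return mm(mm(u, rho), transpose(u))  # u is real, so its adjoint is its transpose


def transition_distribution(rho, action):
    """Return a list of (eigenvalue, probability, post-reduction state)."""
    d, projectors = action
    evolved = evolve(rho, d)
    dist = []
    for r, p in projectors.items():
        unnormalized = mm(mm(p, evolved), p)
        prob = unnormalized[0][0] + unnormalized[1][1]
        if prob > 1e-12:  # skip outcomes that cannot occur
            post = [[x / prob for x in row] for row in unnormalized]
            dist.append((r, prob, post))
    return dist


def step(rho, action, rng):
    """Sample one transition of the process for the chosen action (d, R)."""
    dist = transition_distribution(rho, action)
    weights = [prob for _, prob, _ in dist]
    r, _, post = rng.choices(dist, weights=weights, k=1)[0]
    return r, post


# Assumed reduction operator: Pauli Z, given by its spectral projectors.
Z_PROJECTORS = {+1: [[1.0, 0.0], [0.0, 0.0]], -1: [[0.0, 0.0], [0.0, 1.0]]}

rng = random.Random(0)
state = [[1.0, 0.0], [0.0, 0.0]]
action = (math.pi / 4, Z_PROJECTORS)  # wait d = pi/4, then apply R
dist = transition_distribution(state, action)

history = []
for _ in range(5):
    r, state = step(state, action, rng)
    history.append(r)
print(history)
```

The next state depends only on the previous post-reduction state and the chosen action, which is the Markov property the section relies on; how the action is chosen is left outside the transition function.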

3.3. Quantum Theory Ontology

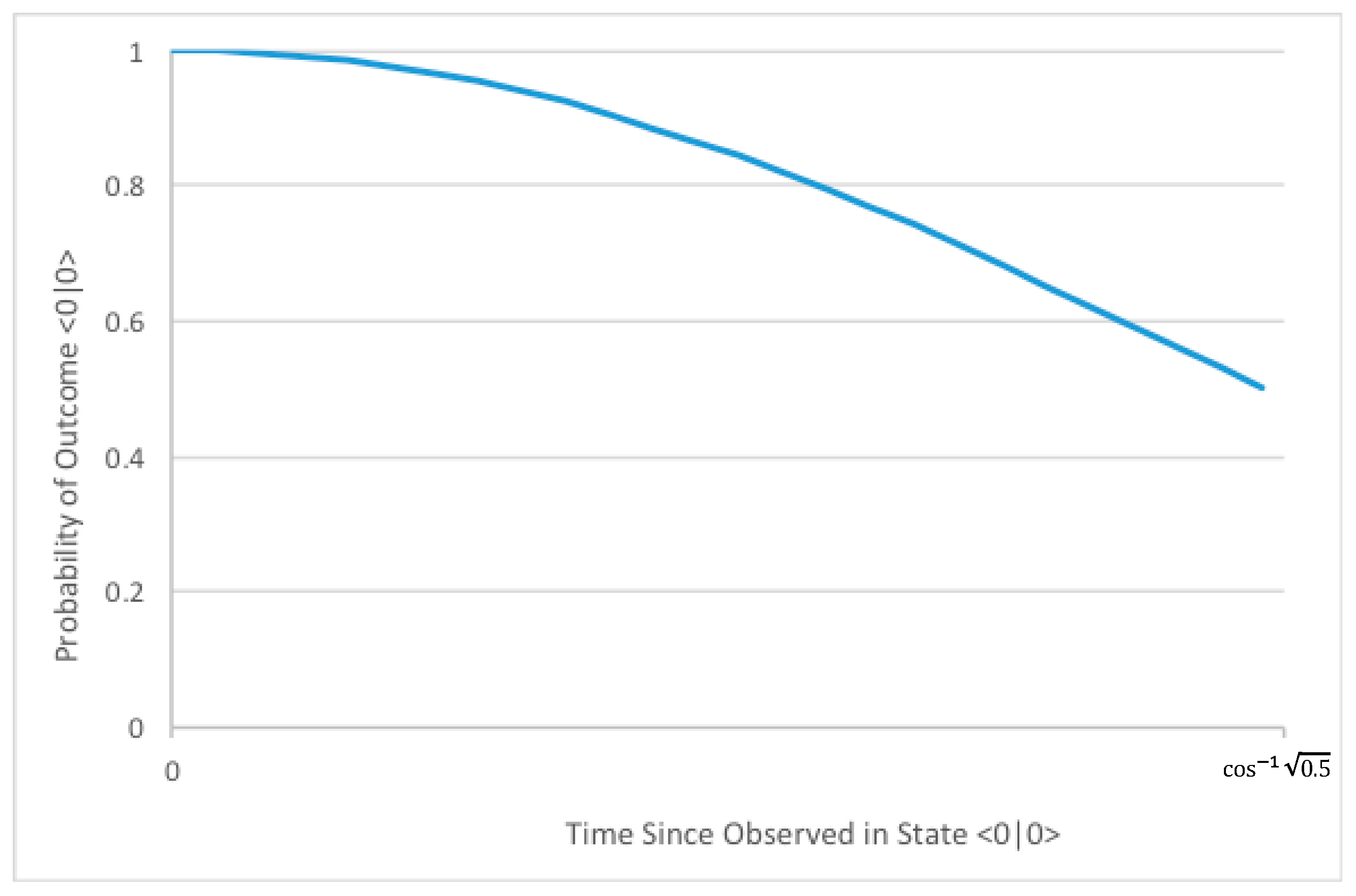

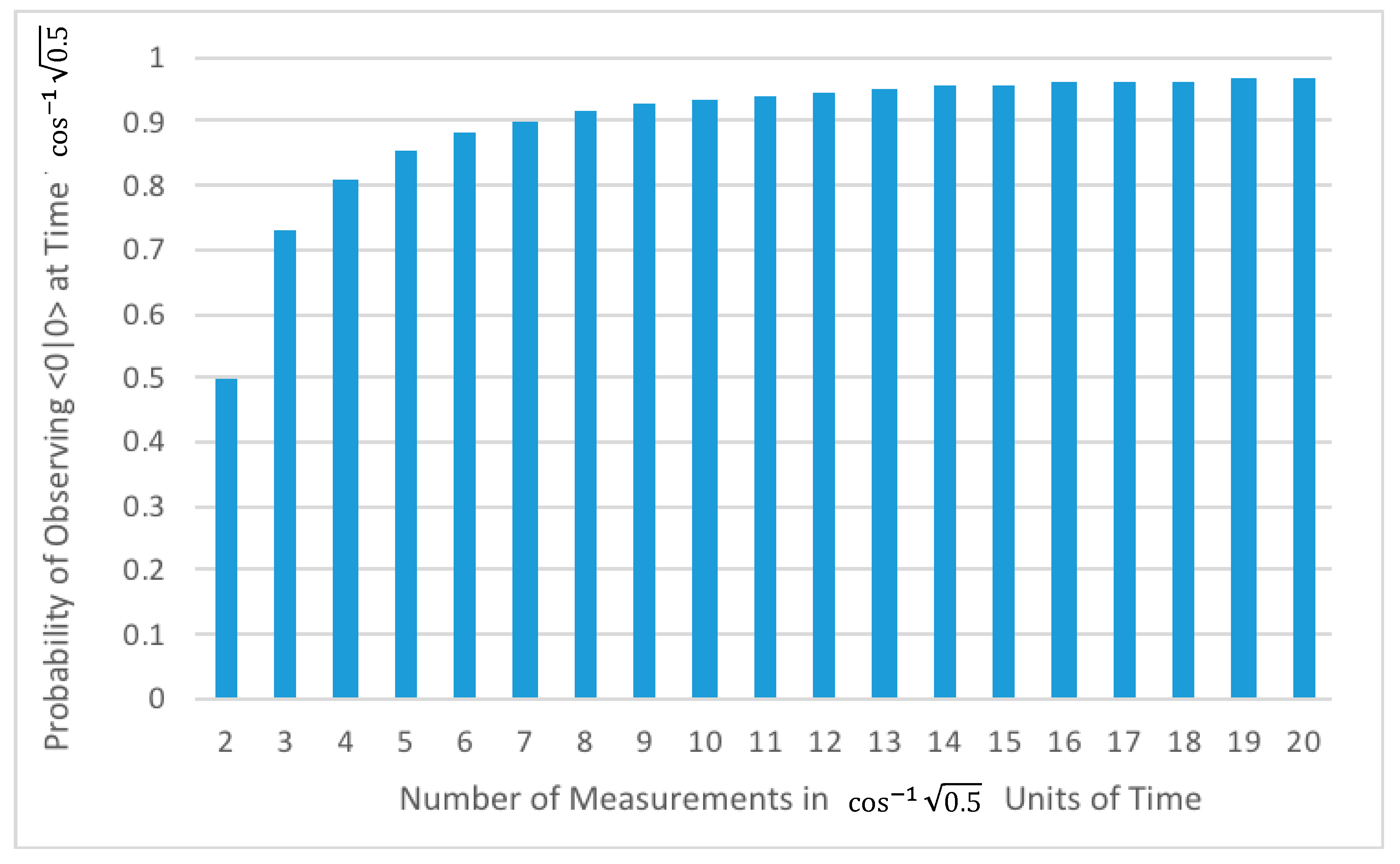

3.4. Quantum Zeno Effect

3.5. A Model of Efficacious Physically Embodied Agency in Humans

- P1. Freedom. As currently understood, the laws of physics specify how a quantum system evolves when not subjected to reductions, and the probability distribution of outcomes given the time since the last reduction and the operator set. That is, quantum theory specifies the following dynamic laws:

- $\rho \to \mathcal{E}_d(\rho)$ via mechanical evolution for d time units; and

- $\mathcal{E}_d(\rho) \to P_r \mathcal{E}_d(\rho) P_r / \mathrm{Tr}(P_r \mathcal{E}_d(\rho) P_r)$ with probability $\mathrm{Tr}(P_r \mathcal{E}_d(\rho) P_r)$ if reduction operator R with spectral decomposition $R = \sum_r r P_r$ is applied after undisturbed evolution for d time units.

The known laws of physics place no constraints on the choice of time interval d or reduction operator R. Modulo as yet undiscovered limits on d and R, there are multiple allowable choices of action $(d, R)$. Therefore, there are multiple possible options at each choice point.

- P2. Attribution. RAH attributes the choice of action to the reducing agent.

- P3. Efficaciousness. The analysis of Section 3.4, as illustrated in Figure 3, demonstrates that the choice of action has empirically distinguishable effects in the physical world.

- P4. Physicality. RAH is fully consistent with the known laws of physics as formalized by von Neumann [24].

- P5. Representation. Human reducing agents must be able, in a manner consistent with neurobiology, to form representations of the world. They must be able to manipulate these representations to predict the effects of the available options and compare the desirability of different options.

- P6. Implementation. There must be a way, consistent with human neurobiology and physiology, for human reducing agents to enact their choices to cause their bodies to behave as intended.
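The efficaciousness claim under P3, that the choice of action has empirically distinguishable effects, can be illustrated with a toy quantum Zeno calculation. This is a sketch under assumptions and not the analysis of Section 3.4: it assumes a two-level system that starts in one eigenstate of the reduction operator and rotates away from it at a hypothetical rate `omega`, with the same reduction applied at equally spaced intervals.

```python
import math


def survival_probability(total_time, n_reductions, omega=1.0):
    """Probability that all n equally spaced reductions return the initial eigenstate.

    Between reductions the state rotates by angle omega * d, so a single
    reduction finds the initial eigenstate with probability cos^2(omega * d).
    After each such outcome the state is reset, so the factors multiply.
    """
    d = total_time / n_reductions  # the agent's chosen time between reductions
    return math.cos(omega * d) ** (2 * n_reductions)


total_time = math.pi / 2  # long enough for one undisturbed rotation to leave the state
for n in (1, 10, 100):
    p = survival_probability(total_time, n)
    print(f"n = {n:3d} reductions: survival probability {p:.4f}")
```

In this toy model, choosing a shorter interval d (more frequent reductions) makes it far more likely that the system is still found in its initial state, so different choices of d lead to statistically distinguishable outcomes.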

4. Evaluating the Theory

4.1. Simulating a Reducing Agent

4.2. Laboratory Studies

4.3. Hardware Implementation

5. Conclusions

6. Discussion

Funding

Acknowledgments

Conflicts of Interest

References

1. Marshall, A. Self-Driving Cars Have Hit Peak Hype—Now They Face the Trough of Disillusionment. Available online: https://www.wired.com/story/self-driving-cars-challenges/ (accessed on 26 September 2018).

2. Marcus, G. Deep Learning: A Critical Appraisal. arXiv, 2018; arXiv:1801.00631.

3. Pearl, J. Theoretical Impediments to Machine Learning with Seven Sparks from the Causal Revolution; UCLA Computer Science Department: Los Angeles, CA, USA, 2018.

4. Pearl, J. Causality: Models, Reasoning, and Inference, 2nd ed.; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2009; ISBN 978-0-521-89560-6.

5. Spirtes, P.; Glymour, C.; Scheines, R. Causation, Prediction, and Search, 2nd ed.; A Bradford Book: Cambridge, MA, USA, 2001; ISBN 978-0-262-19440-2.

6. Spirtes, P. Introduction to Causal Inference. J. Mach. Learn. Res. 2010, 11, 1643–1662.

7. Korb, K.B.; Nicholson, A.E. Bayesian Artificial Intelligence, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2011; ISBN 978-1-4398-1591-5.

8. Pourret, O.; Naïm, P.; Marcot, B. Bayesian Networks: A Practical Guide to Applications; John Wiley & Sons: Hoboken, NJ, USA, 2008; ISBN 978-0-470-99454-2.

9. Kenett, R.S. Applications of Bayesian Networks; Social Science Research Network: Rochester, NY, USA, 2012.

10. Petersen, M.L.; van der Laan, M.J. Causal Models and Learning from Data. Epidemiol. Camb. Mass 2014, 25, 418–426.

11. Pearl, J.; Mackenzie, D. The Book of Why: The New Science of Cause and Effect, 1st ed.; Basic Books: New York, NY, USA, 2018; ISBN 978-0-465-09760-9.

12. Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2009; ISBN 978-0-13-604259-4.

13. Royakkers, L.; van Est, R. A Literature Review on New Robotics: Automation from Love to War. Int. J. Soc. Robot. 2015, 7, 549–570.

14. Gibbs, S. AlphaZero AI Beats Champion Chess Program After Teaching Itself in Four Hours. Available online: https://www.theguardian.com/technology/2017/dec/07/alphazero-google-deepmind-ai-beats-champion-program-teaching-itself-to-play-four-hours (accessed on 26 September 2018).

15. Hern, A. Computers are Now Better than Humans at Recognising Images. Available online: https://www.theguardian.com/global/2015/may/13/baidu-minwa-supercomputer-better-than-humans-recognising-images (accessed on 26 September 2018).

16. Baumeister, R.F.; Brewer, L.E. Believing versus Disbelieving in Free Will: Correlates and Consequences. Soc. Personal. Psychol. Compass 2012, 6, 736–745.

17. Martin, N.D.; Rigoni, D.; Vohs, K.D. Free will beliefs predict attitudes toward unethical behavior and criminal punishment. Proc. Natl. Acad. Sci. USA 2017, 114, 7325–7330.

18. Monroe, A.E.; Brady, G.L.; Malle, B.F. This Isn’t the Free Will Worth Looking For: General Free Will Beliefs Do Not Influence Moral Judgments, Agent-Specific Choice Ascriptions Do. Soc. Psychol. Personal. Sci. 2017, 8, 191–199.

19. Monroe, A.E.; Malle, B.F. From Uncaused Will to Conscious Choice: The Need to Study, Not Speculate About People’s Folk Concept of Free Will. Rev. Philos. Psychol. 2010, 1, 211–224.

20. Stillman, T.F.; Baumeister, R.F.; Mele, A.R. Free Will in Everyday Life: Autobiographical Accounts of Free and Unfree Actions. Philos. Psychol. 2011, 24, 381–394.

21. Schwartz, J.M.; Stapp, H.P.; Beauregard, M. Quantum Physics in Neuroscience and Psychology: A New Model with Respect to Mind/Brain Interaction. Philos. Trans. R. Soc. B 2005, 360, 1309–1327.

22. Stapp, H.P. Mind, Matter and Quantum Mechanics, 3rd ed.; Springer: Berlin, Germany, 2009; ISBN 978-3-540-89653-1.

23. Stapp, H.P. Quantum Theory and Free Will: How Mental Intentions Translate into Bodily Actions, 1st ed.; Springer: New York, NY, USA, 2017; ISBN 978-3-319-58300-6.

24. Von Neumann, J. Mathematical Foundations of Quantum Mechanics; Princeton University Press: Princeton, NJ, USA, 1955.

25. Pearl, J. Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference; Morgan Kaufmann: San Mateo, CA, USA, 1988.

26. Puterman, M.L. Chapter 8 Markov decision processes. In Handbooks in Operations Research and Management Science; Stochastic Models; Elsevier: Amsterdam, The Netherlands, 1990; Volume 2, pp. 331–434. ISBN 978-0-444-87473-3.

27. Nielsen, M.A.; Chuang, I.L. Quantum Computation and Quantum Information; Cambridge University Press: Cambridge, UK, 2000.

28. Shankar, R. Principles of Quantum Mechanics; Plenum; Springer: Berlin, Germany, 1994.

29. Dixmier, J. Von Neumann Algebras; Elsevier: Amsterdam, The Netherlands, 2011; ISBN 978-0-08-096015-9.

30. Lledó, F. Operator algebras: An informal overview. arXiv, 2009; arXiv:0901.0232.

31. Laskey, K.B. Acting in the World: A Physical Model of Free Choice. J. Cogn. Sci. 2018, 19, 125–163.

32. Walter, H. Neurophilosophy of Free Will: From Libertarian Illusions to a Concept of Natural Autonomy; MIT Press: Cambridge, MA, USA, 2009; ISBN 978-0-262-26503-4.

33. Stapp, H.P. Mindful Universe: Quantum Mechanics and the Participating Observer, 2nd ed.; Springer: Berlin, Germany; New York, NY, USA, 2011; ISBN 978-3-642-18075-0.

34. Bohm, D. Quantum Theory; Prentice-Hall: New York, NY, USA, 1951.

35. Woodward, J. Causation and Manipulability. In The Stanford Encyclopedia of Philosophy; Zalta, E.N., Ed.; Stanford University: Stanford, CA, USA, 2016.

36. Mermin, N.D. What’s Wrong with this Pillow? Phys. Today 1989, 42, 9.

37. Zurek, W.H. Decoherence and the Transition from Quantum to Classical—Revisited. In Quantum Decoherence; Progress in Mathematical Physics; Birkhäuser: Basel, Switzerland, 2006; pp. 175–212. ISBN 978-3-7643-7807-3.

38. Adler, S.L. Why Decoherence has not Solved the Measurement Problem: A Response to P. W. Anderson. Stud. Hist. Philos. Sci. Part B Stud. Hist. Philos. Mod. Phys. 2003, 34, 135–142.

39. Ghirardi, G.C.; Rimini, A.; Weber, T. A model for a unified quantum description of macroscopic and microscopic systems. In Quantum Probability and Applications II; Lecture Notes in Mathematics; Springer: Berlin/Heidelberg, Germany, 1985; pp. 223–232. ISBN 978-3-540-15661-1.

40. Penrose, R. On Gravity’s role in Quantum State Reduction. Gen. Relativ. Gravit. 1996, 28, 581–600.

41. Misra, B.; Sudarshan, E.C.G. The Zeno’s paradox in quantum theory. J. Math. Phys. 1977, 18, 756–763.

42. Altenmüller, T.P.; Schenzle, A. Dynamics by measurement: Aharonov’s inverse quantum Zeno effect. Phys. Rev. A 1993, 48, 70–79.

43. Newell, A.; Simon, H. Computer Science as Empirical Inquiry: Symbols and Search. Commun. ACM 1976, 19, 113–126.

44. Ball, P. Physics of life: The dawn of quantum biology. Nat. News 2011, 474, 272–274.

45. Güçlü, U.; van Gerven, M.A.J. Modeling the Dynamics of Human Brain Activity with Recurrent Neural Networks. Front. Comput. Neurosci. 2017, 11.

46. Latorre, R.; Levi, R.; Varona, P. Transformation of Context-dependent Sensory Dynamics into Motor Behavior. PLOS Comput. Biol. 2013, 9, e1002908.

47. Barreto, G.D.A.; Araújo, A.F.R.; Ritter, H.J. Self-Organizing Feature Maps for Modeling and Control of Robotic Manipulators. J. Intell. Robot. Syst. 2003, 36, 407–450.

48. Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep Learning for Computer Vision: A Brief Review. Available online: https://www.hindawi.com/journals/cin/2018/7068349/ (accessed on 23 July 2018).

49. James, W. Psychology: The Briefer Course; Later Edition; Dover Publications: Mineola, NY, USA, 2001; ISBN 978-0-486-41604-5.

50. Ward, L.M. Synchronous neural oscillations and cognitive processes. Trends Cogn. Sci. 2003, 7, 553–559.

51. Uhlhaas, P.J.; Pipa, G.; Lima, B.; Melloni, L.; Neuenschwander, S.; Nikolić, D.; Singer, W. Neural Synchrony in Cortical Networks: History, Concept and Current Status. Front. Integr. Neurosci. 2009, 3.

52. Wang, X.-J. Neurophysiological and Computational Principles of Cortical Rhythms in Cognition. Physiol. Rev. 2010, 90, 1195–1268.

53. Hirabayashi, T.; Miyashita, Y. Dynamically modulated spike correlation in monkey inferior temporal cortex depending on the feature configuration within a whole object. J. Neurosci. Off. J. Soc. Neurosci. 2005, 25, 10299–10307.

54. Van Wijk, B.C.M.; Beek, P.J.; Daffertshofer, A. Neural synchrony within the motor system: What have we learned so far? Front. Hum. Neurosci. 2012, 6.

55. Tzagarakis, C.; West, S.; Pellizzer, G. Brain oscillatory activity during motor preparation: Effect of directional uncertainty on beta, but not alpha, frequency band. Front. Neurosci. 2015, 9.

56. Fröhlich, F.; McCormick, D.A. Endogenous electric fields may guide neocortical network activity. Neuron 2010, 67, 129–143.

57. Ye, H.; Steiger, A. Neuron matters: Electric activation of neuronal tissue is dependent on the interaction between the neuron and the electric field. J. Neuroeng. Rehabil. 2015, 12.

58. McFadden, J. The CEMI Field Theory Closing the Loop. J. Conscious. Stud. 2013, 20, 153–168.

59. Pockett, S. The Nature of Consciousness: A Hypothesis; iUniverse: San Jose, CA, USA, 2000; ISBN 978-0-595-12215-8.

60. Fingelkurts, A.A.; Fingelkurts, A.A.; Neves, C.F.H. Brain and Mind Operational Architectonics and Man-Made “Machine” Consciousness. Cogn. Process. 2009, 10, 105–111.

61. Rubino, D.; Robbins, K.A.; Hatsopoulos, N.G. Propagating waves mediate information transfer in the motor cortex. Nat. Neurosci. 2006, 9, 1549.

62. Buxhoeveden, D.P.; Casanova, M.F. The minicolumn hypothesis in neuroscience. Brain 2002, 125, 935–951.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Laskey, K.B. A Theory of Physically Embodied and Causally Effective Agency. Information 2018, 9, 249. https://doi.org/10.3390/info9100249
