1. Introduction

Probabilistic models in scientific contexts are often characterized by ambiguity. Consider the following real-world example, due to [1]. Geologists in the Ticino canton in southern Switzerland want to know how likely it is that a debris flow in a given region will be of low, medium, or high severity, given certain information about the geomorphology of that region. A debris flow is a geological incident that is similar to a mudslide. A low-severity flow is one in which the thickness of the debris displaced is less than 10

, a medium-severity flow is one in which the thickness of the debris displaced is between 10

and 50

, and a high-severity flow is one in which the thickness of the debris displaced is greater than 50

. A statistical model representing the relationship between the geomorphology of a region and the severity of a debris flow contains several input variables, such as land use and antecedent soil moisture. Scientists are uncertain as to the values of these variables, and they are also uncertain about the extent to which these values influence the probability distribution over the severity of a debris flow. The model reflects this uncertainty by assigning probability intervals, rather than precise probabilities, to each of the possible debris flow outcomes. To illustrate, for one set of inputs, Antonucci et al.’s model determines that the probability of a low-severity flow is in the interval

, that the probability of a medium-severity flow is in the interval

, and that the probability of a high severity flow is in the interval

([1], p. 7). (In the example cited, these probability intervals are calculated using the imprecise Bayes nets techniques discussed in this paper.)

It is plausible that the inherent ambiguity of these probabilistic outputs is a feature, not a bug, of the model that generates them. As Joyce [2] argues, there are cases in which agents ought to have imprecise degrees of belief with respect to future outcomes, since their total evidence does not warrant the assignment of a precise probability to any particular outcome (see [3] for a full accounting of the various reasons discussed in the literature for adopting epistemic attitudes best represented by imprecise probabilities). I take it that the geological case described above falls into this category. Indeed, Antonucci et al. claim that the use of probability intervals, as opposed to precise probabilities, allows them to “quantify uncertainty on the basis of historical data, expert knowledge, and physical theories” ([1], p. 1). Further, the uncertainty regarding the relationships between various inputs and the severity of a debris flow is such that one cannot define a second-order probability distribution over the interval range. Thus, it does not make sense to take an average over the interval and arrive at a single, precise probability. Rather, the imprecise judgments produced by the model may well represent the epistemic attitude that is most warranted by the available evidence.

Bayesian network models, such as those developed by Pearl [4] and Spirtes et al. [5], provide powerful tools for representing the causal structure of the world. Traditionally, these models exclusively use precise probabilities. Thus, it is an important question whether extensions of these models that allow for imprecise probabilities will yield similar fruit. Most work on imprecise Bayesian networks (IBNs) has occurred in the statistics and computer science literature (see [6] for a comprehensive overview). (In the computer science and statistical literature, IBNs are sometimes called credal networks. I avoid this term here because of the different usage of the term “credence” in formal epistemology.) Unsurprisingly, this literature has focused largely on the use of IBNs to make predictions about future events. By contrast, there has been little attention focused on the philosophical issue of whether and to what extent IBNs can be used to represent the causal structure of actual systems; that is, whether the edges in an IBN can be interpreted as type-level causal relations between variables. (The meaning of “edge” and “variable” in this context will be made precise in the next section.) Addressing this issue is my focus in this paper.

My central task is to determine whether and how the Causal Markov Condition (CMC) and the Minimality condition, which are individually necessary and jointly sufficient conditions for the causal interpretation of a Bayesian network, can be extended into the imprecise context. In general, determining whether a Bayes net satisfies CMC and Minimality requires an understanding of what it means for two random variables to be independent of one another. In the precise context, such independence has only one mathematical definition, but in an imprecise context, multiple independence concepts abound (see [7,8]). Most saliently, imprecise probability distributions over different variables may satisfy so-called strong independence, or the weaker notion of epistemic independence. In what follows, I argue that neither strong nor epistemic independence can be neatly “plugged in” to CMC and Minimality to yield a compelling set of adequacy conditions for the causal interpretation of IBNs without troubling implications. These implications can be avoided by positing further restrictions on the probabilistic features of IBNs, but such restrictions demonstrate the extent to which many otherwise innocuous imprecise probabilistic models cannot be interpreted causally. Thus, I conclude that introducing imprecision into probabilistic graphical models can limit their ability to represent causal structure.

My plan for this paper is as follows. In Section 2, I present the basic formalism for precise Bayesian network models, show how CMC and Minimality license a causal interpretation of those models, and describe the central role that probabilistic independence plays in defining these conditions. In Section 3, I present the basic formalism for IBNs. In Section 4, I illustrate the distinction between strong and epistemic independence between random variables in an imprecise probabilistic context. In Section 5, I argue that neither strong nor epistemic independence can be used to adapt CMC and Minimality into an imprecise context. In Section 6, I consider and respond to some salient objections to my argument. In Section 7, I offer concluding remarks.

2. Precise Bayes Nets as Causal Models

A Bayes net is a triple $\mathcal{N} = \langle \mathcal{V}, \mathcal{E}, P \rangle$. $\mathcal{V}$ is a set of random variables whose values denote different possible states of the system being represented. $\mathcal{E}$ is an acyclic set of ordered pairs of the variables in $\mathcal{V}$. These ordered pairs, called “edges”, are usually represented visually as arrows pointing from one variable to another. If a graph contains an edge $X \rightarrow Y$, then $X$ is a parent of $Y$, and $Y$ is a child of $X$. A chain of parent–child relationships is called a directed path. If there is a directed path from a variable $X$ to a variable $Y$, then $Y$ is a descendant of $X$ and $X$ is an ancestor of $Y$. Together, these sets of variables and edges comprise the graph $\mathcal{G} = \langle \mathcal{V}, \mathcal{E} \rangle$, which represents the causal structure of a given system. A joint probability distribution P is defined over the variables of the graph. This joint distribution can be used to derive the conditional probability that each variable in the graph takes some value, given some setting of values for any combination of variables in the graph, as well as marginal probability distributions over all of the variables in the graph. Extreme probabilities can be used to give a deterministic representation of a causal system. At this point, we assume that all probability distributions in the Bayes net are precise, meaning that they assign a single numerical value to each event in the measure space of the variables that they are defined over.

We want to be able to interpret a Bayes net causally. A causal interpretation is one that takes the parent–child relationship to represent a direct causal relevance relation between two variables, with each parent variable directly causing its children. Following Ref. [9], I will not attempt to give a reductive account of direct causation, but will instead show that certain probabilistic constraints on a Bayes net ensure that the parent–child relationship satisfies intuitive constraints on any notion of direct causation. (Elsewhere, Woodward [10] gives an account of direct causation in terms of interventions. This account is also non-reductive, in that interventions on variables are themselves defined using the concept of direct causation.) The core tenet of the causal modelling literature is that, to represent causal relationships, a Bayes net must satisfy the Causal Markov Condition, which is defined as follows.

Causal Markov Condition (CMC): For any variable X in the variable set $\mathcal{V}$, the value of X is independent of its non-descendants, conditional on its parents.

At first glance, the connection between CMC and the causal interpretation of a Bayes net is opaque. However, this connection can be rendered more obvious by considering two crucial consequences of CMC, namely the way in which parents screen off their children from other variables, and the way in which correlations between variables that are not causally related can be accounted for by considering the common parents of those variables.

Let us begin with screening off. According to this condition, any representation of direct causation should entail that, once we have full information about all the causes of an event, no additional information about the causes of those causes should change the probability that we assign to an event. More formally, let us define the screening off condition as follows.

Screening Off: For any variable X in the variable set $\mathcal{V}$, if $\mathbf{PA}_X$ is the set containing all the parents of X and W is a parent of some variable in $\mathbf{PA}_X$, then X and W are independent, conditional on $\mathbf{PA}_X$.

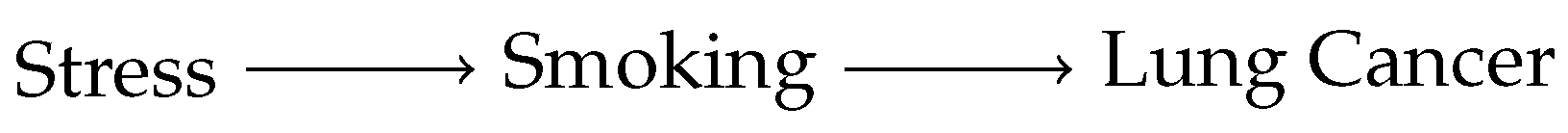

To illustrate, consider the simple causal graph in Figure 1, in which each variable takes the value 1 or 0 depending on whether the indicated factor is present or absent in a given person. Screening Off says that, once we know the value of the variable Smoking, the probability that the person has lung cancer is independent of the value of Stress. Thus, the parent–child relationship plays the screening-off role that we require of any notion of direct causation.
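The screening-off claim can be checked numerically. The following is a minimal sketch, assuming that Figure 1 depicts the chain Stress → Smoking → Lung Cancer; the conditional probability tables and the function names are hypothetical and chosen only for illustration.

```python
# Hypothetical conditional probability tables (illustrative numbers only).
P_STRESS = {1: 0.3, 0: 0.7}            # P(Stress = s)
P_SMOKING = {1: {1: 0.6, 0: 0.4},      # P(Smoking = k | Stress = 1)
             0: {1: 0.2, 0: 0.8}}      # P(Smoking = k | Stress = 0)
P_CANCER = {1: {1: 0.15, 0: 0.85},     # P(Cancer = c | Smoking = 1)
            0: {1: 0.01, 0: 0.99}}     # P(Cancer = c | Smoking = 0)


def joint(stress, smoking, cancer):
    """Joint probability under the factorization implied by the chain."""
    return (P_STRESS[stress]
            * P_SMOKING[stress][smoking]
            * P_CANCER[smoking][cancer])


def cancer_given(smoking, stress):
    """P(Cancer = 1 | Smoking = smoking, Stress = stress), from the joint."""
    numerator = joint(stress, smoking, 1)
    denominator = sum(joint(stress, smoking, c) for c in (0, 1))
    return numerator / denominator


def screening_off_holds(tol=1e-12):
    """True if, for each value of Smoking, the probability of cancer
    is the same whether or not Stress is present."""
    return all(
        abs(cancer_given(smoking, 1) - cancer_given(smoking, 0)) < tol
        for smoking in (0, 1)
    )
```

Under these assumed numbers, `screening_off_holds()` returns `True`: once Smoking is fixed, learning the value of Stress does not change the probability assigned to Lung Cancer.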

The second important corollary of CMC is closely related to Reichenbach’s [11] “common cause” condition. This condition states that any correlation between two variables can be explained by showing a chain of direct causal relations from one variable to another, or by tracing the causal history of both variables back to a common ancestor. This can be stated in graph-theoretic terms as follows.

Common Cause: For any disjoint sets of variables $\mathbf{A}$ and $\mathbf{B}$ in the variable set $\mathcal{V}$, if $\mathbf{A}$ and $\mathbf{B}$ are not unconditionally independent, then there exists a pair of variables $X \in \mathbf{A}$ and $Y \in \mathbf{B}$ such that either one variable is a descendant of the other, or X and Y have at least one shared ancestor $Z$ such that X and Y are independent conditional on $Z$.

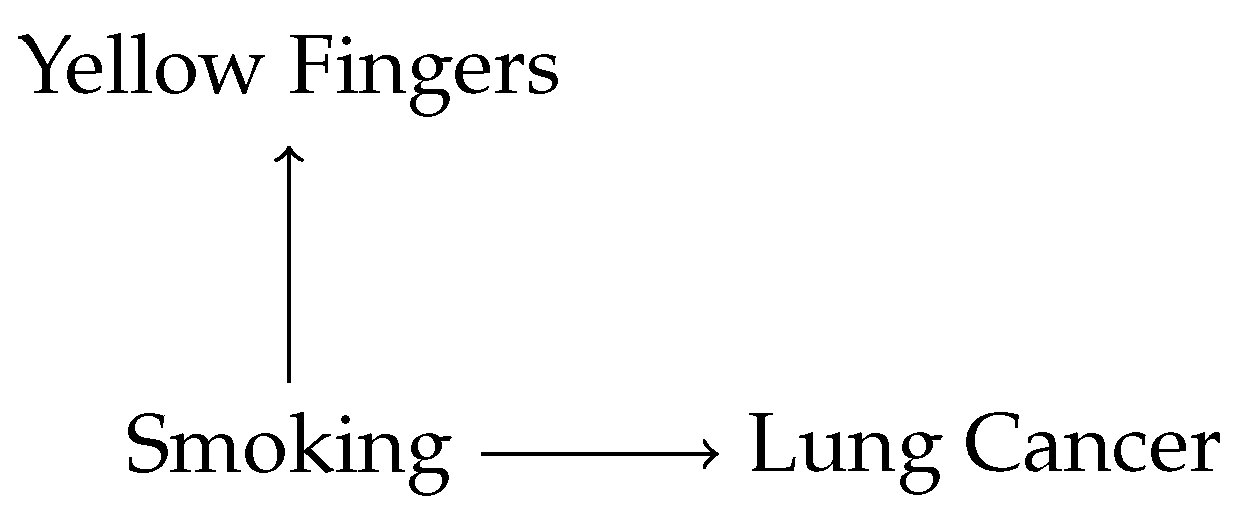

To illustrate, consider the graph in Figure 2. Again, all variables are binary and denote the presence or absence of yellow fingers, smoking, or lung cancer. Suppose that there is an observed correlation between Yellow Fingers and Lung Cancer; unconditionally, the two variables are not independent. If, as is likely the case, there is no direct causal link in either direction between Yellow Fingers and Lung Cancer, Common Cause tells us that the two variables must share a common ancestor, viz. Smoking, conditional on which they are independent.
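The common-cause pattern can be illustrated in the same way. The sketch below assumes that Figure 2 depicts the fork Yellow Fingers ← Smoking → Lung Cancer; all numerical values and function names are hypothetical.

```python
# Hypothetical parameters for the fork (illustrative numbers only).
P_SMOKING = 0.25                     # P(Smoking = 1)
P_FINGERS = {1: 0.70, 0: 0.05}       # P(YellowFingers = 1 | Smoking = s)
P_CANCER = {1: 0.15, 0: 0.01}        # P(LungCancer = 1 | Smoking = s)

VALUES = (0, 1)


def bernoulli(p, value):
    """Probability that a binary variable with P(1) = p takes `value`."""
    return p if value == 1 else 1 - p


def joint(s, f, c):
    """Joint probability of (Smoking, YellowFingers, LungCancer)."""
    return (bernoulli(P_SMOKING, s)
            * bernoulli(P_FINGERS[s], f)
            * bernoulli(P_CANCER[s], c))


def prob(event):
    """Total probability of all assignments (s, f, c) satisfying `event`."""
    return sum(joint(s, f, c)
               for s in VALUES for f in VALUES for c in VALUES
               if event(s, f, c))


def marginally_dependent(tol=1e-12):
    """True if Yellow Fingers and Lung Cancer are unconditionally dependent."""
    p_fc = prob(lambda s, f, c: f == 1 and c == 1)
    p_f = prob(lambda s, f, c: f == 1)
    p_c = prob(lambda s, f, c: c == 1)
    return abs(p_fc - p_f * p_c) > tol


def independent_given_smoking(tol=1e-12):
    """True if P(f, c | s) = P(f | s) P(c | s) for all values f, c, s."""
    for s0 in VALUES:
        p_s = prob(lambda s, f, c: s == s0)
        for f0 in VALUES:
            for c0 in VALUES:
                p_fc = prob(lambda s, f, c: (s, f, c) == (s0, f0, c0)) / p_s
                p_f = prob(lambda s, f, c: (s, f) == (s0, f0)) / p_s
                p_c = prob(lambda s, f, c: (s, c) == (s0, c0)) / p_s
                if abs(p_fc - p_f * p_c) > tol:
                    return False
    return True
```

With these assumed numbers, both functions return `True`: the two effects are correlated unconditionally, and the correlation disappears once we condition on their shared ancestor.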

Screening Off and Common Cause exhaust the logical content of CMC. That is, the following proposition is true (see Appendix A for proof):

Proposition 1. A graph satisfies CMC if and only if it satisfies Screening Off and Common Cause.

The connection between these three conditions is well-established, such that the truth of this proposition is not a novel result. The content of the result can largely be found in [12], and in similar results by [13,14]. However, this unambiguous connection between CMC and two intuitive necessary conditions for interpreting the parent–child relationship as one of direct causation is rarely stated in these concrete terms.

CMC alone is not sufficient for the causal interpretation of a Bayes net. To see why, consider a simple Bayes net of the form $X \rightarrow Y \rightarrow Z$. If we suppose that the variables X, Y, and Z are all independent of one another, then this Bayes net trivially satisfies CMC; if all three variables are independent of each other, then they are each by implication independent of their non-descendants, conditional on their parents. However, since the three variables are independent, they cannot be causally related to each other in the manner suggested by the ordering of edges in this graph. Thus, a further condition is required to ensure the sufficiency of a Bayes net for causal interpretation. The weakest such condition proposed in the literature is the Minimality condition [5], which can be stated as follows.

Minimality: For any graph $\mathcal{G}$, let $\mathcal{G}'$ be a subgraph that differs from $\mathcal{G}$ solely with respect to a single edge that is included in $\mathcal{G}$ but absent from $\mathcal{G}'$. A graph $\mathcal{G}$ satisfies Minimality if and only if no such subgraph $\mathcal{G}'$ satisfies the Causal Markov Condition.

A Bayes net that satisfies Minimality contains no extraneous edges. That is, there are no arrows between any two variables such that those two variables are independent conditional on their parents. Thus, if the variables X, Y, and Z are all independent of one another, then the graph $X \rightarrow Y \rightarrow Z$ does not satisfy Minimality. This is because any of the arrows in the graph can be removed without creating a subgraph that violates CMC. If we hold that Screening Off and Common Cause are the only two conditions that we require a primitive notion of direct causation to satisfy, then CMC and Minimality are individually necessary and jointly sufficient conditions for the causal interpretation of a Bayes net, since they ensure that all parent–child relationships in the graph play one of the two roles that we want any representation of a direct causal relationship to play. (Many readers will be familiar with the Faithfulness condition for Bayes nets, which is strictly stronger than Minimality. I choose Minimality over Faithfulness as an adequacy condition for the causal interpretation of Bayes nets, since there is a case to be made that Bayes nets that satisfy Minimality but not Faithfulness are accurate representations of some causal systems. For a perspicuous comparison of the two conditions, see [15].)
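The interplay between CMC and Minimality can be made concrete with a brute-force check over three binary variables. This is only a sketch under stated assumptions: the variable names, the edge representation as `(parent, child)` pairs, and all helper functions are hypothetical, and Minimality is implemented exactly as stated above (no single-edge-deleted subgraph may satisfy CMC).

```python
from itertools import product

VARS = ("X", "Y", "Z")  # three binary variables taking values 0 or 1


def bernoulli(p, value):
    """Probability that a binary variable with P(1) = p takes `value`."""
    return p if value == 1 else 1 - p


def independent_joint(px, py, pz):
    """Joint distribution in which X, Y and Z are mutually independent."""
    return {(x, y, z): bernoulli(px, x) * bernoulli(py, y) * bernoulli(pz, z)
            for x, y, z in product((0, 1), repeat=3)}


def prob(joint, fixed):
    """Probability that the variables named in `fixed` take the given values."""
    return sum(p for assignment, p in joint.items()
               if all(assignment[VARS.index(n)] == v for n, v in fixed.items()))


def cond_indep(joint, a, others, given, tol=1e-9):
    """True if variable `a` is independent of the set `others`, given `given`."""
    names = [a, *others, *given]
    for values in product((0, 1), repeat=len(names)):
        full = dict(zip(names, values))
        g = {n: full[n] for n in given}
        p_g = prob(joint, g)
        if p_g == 0:
            continue  # conditioning event has probability zero
        p_all = prob(joint, full) / p_g
        p_a = prob(joint, {a: full[a], **g}) / p_g
        p_o = prob(joint, {**{n: full[n] for n in others}, **g}) / p_g
        if abs(p_all - p_a * p_o) > tol:
            return False
    return True


def descendants(edges, v):
    """All variables reachable from `v` along directed edges."""
    found, frontier = set(), {v}
    while frontier:
        frontier = {c for (p, c) in edges if p in frontier} - found
        found |= frontier
    return found


def satisfies_cmc(joint, edges):
    """Each variable is independent of its non-descendants, given its parents."""
    for v in VARS:
        parents = sorted(p for (p, c) in edges if c == v)
        non_desc = sorted(set(VARS) - {v} - descendants(edges, v) - set(parents))
        if not cond_indep(joint, v, non_desc, parents):
            return False
    return True


def satisfies_minimality(joint, edges):
    """No subgraph obtained by deleting a single edge satisfies CMC."""
    return not any(satisfies_cmc(joint, edges - {e}) for e in edges)
```

For a mutually independent joint distribution and the edge set `{("X", "Y"), ("Y", "Z")}`, `satisfies_cmc` returns `True` while `satisfies_minimality` returns `False`, matching the argument in the text that both arrows are extraneous.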

Thus, if a Bayes net satisfies CMC and Minimality, we can interpret its arrows causally. Note, however, that the fact that satisfying CMC and Minimality licenses a causal interpretation of a Bayes net does not entail that any Bayes net that satisfies these conditions provides a correct representation of a particular system. To see this, suppose that the variables X, Y, and Z are all correlated with one another. The graphs $X \rightarrow Y \rightarrow Z$, $X \leftarrow Y \leftarrow Z$, and $X \leftarrow Y \rightarrow Z$, among others, will all satisfy CMC and Minimality. To determine which graph is correct, we will need to perform experiments; for example, if we exogenously fix the value of the variable X and this changes the probability distribution over Z, then $X \rightarrow Y \rightarrow Z$ is the correct graph of the three listed above. While the discovery of the correct causal graph using interventions is an important part of the literature on causal Bayes nets, I bracket this discussion for the remainder of this paper. What is important for my purposes is that, because they satisfy CMC and Minimality, all three of these graphs can be interpreted as hypotheses about the causal structure of the world.

However, Bayes net methods can yield some unambiguous conclusions regarding the causal structure of target systems. Let $(X, Y)$ be a pair of adjacent variables in a Bayes net $\mathcal{N}$ if and only if $\mathcal{N}$ contains an edge $X \rightarrow Y$ or an edge $Y \rightarrow X$. Ref. [12] proves that if two Bayes nets share the same variable set $\mathcal{V}$ and the same joint probability distribution P, then they share the same pairs of adjacent variables. Thus, for any given precise probability distribution P and variable set $\mathcal{V}$, all Bayes nets that are consistent with P will agree with respect to which variables are directly causally related and which variables are not. The ambiguity as to the correct Bayes net, given the joint probability distribution over $\mathcal{V}$, is solely a matter of the direction of the direct causal relationship between two variables; there is no ambiguity as to whether such a relationship exists. To put this another way, if it were to turn out to be the case that no direct causal connection in fact exists between two variables related by a directed edge in a Bayes net, it would have to be the case that either of two conditions obtains: (1) there is a failure of causal sufficiency, i.e., there are latent variables that have not been included in the Bayes net (A precise definition of causal sufficiency is as follows. For any variable X that is not in $\mathcal{V}$, the joint probability assigned to all variables in $\mathcal{V}$ is the same for all values of X ([5], p. 475)); or (2) the probability distribution over the existing variables is inaccurate. Similarly, if there is a direct causal connection between two variables that are not related by a directed edge, then there is either a failure of causal sufficiency or the joint probability distribution over the graph is inaccurate. This important feature of Bayes nets turns out not to hold in the imprecise context, and this contrast between precise and imprecise Bayes nets will be important to my subsequent analysis.

This analysis helps itself to the existence of a model-independent fact of the matter as to whether there is a causal relationship between two variables. Though the nature of such model-independent causal relationships is a topic well beyond the scope of this paper, for the sake of argument, I adopt a mechanistic understanding of such relationships; one variable directly causes another if there is a direct mechanistic connection between changes in the value of one variable and changes in the value of the other. Following Ref. [16], I understand mechanisms as “entities and activities organized such that they are productive of regular changes from start or set-up to finish or termination conditions” ([16], p. 3). As such, a mechanistic connection between two variables means that, in the actual world, there are entities and activities organized such that changing the value of one variable leads to regular changes in the probability distribution over another variable. A direct mechanistic connection is one that holds independently of the values taken by the other variables in the graph. This is a decidedly counterfactual or interventionist understanding of the meaning of “productive”, in keeping with the understanding of mechanisms advanced by Woodward [17]. This stands in contrast to a more ontic notion of productivity advocated by Glennan [18], according to which productivity in mechanisms requires a physical process connecting the components of a mechanism.

Before moving to discuss Bayes nets with imprecise probabilities, I must first address the concept of probabilistic independence between random variables. Obviously, probabilistic independence plays a crucial role in our understanding of CMC and Minimality, since independence is part of the very definition of CMC. Therefore, one must understand the relation of independence between random variables in order to understand the causal interpretation of a Bayes net. Where all probabilities are assumed to be precise, independence has a straightforward mathematical meaning, which can be stated as follows.

Precise Unconditional Independence: X and Y are independent if and only if, for all values x and y, $P(X = x, Y = y) = P(X = x)\,P(Y = y)$.

Similarly, conditional independence in the precise probabilistic context can be defined as follows.

Precise Conditional Independence: X and Y are independent conditional on Z if and only if, for all values x, y and z, $P(X = x, Y = y \mid Z = z) = P(X = x \mid Z = z)\,P(Y = y \mid Z = z)$.

Different modellers may hold that different statistical hurdles have to be cleared to license the conclusion that there is dependence or independence between two variables. For example, different modellers may hold that data must support the existence of a dependence relation between two variables at a certain level of statistical significance. Nevertheless, where precise probabilities are used, the mathematical definitions of independence and conditional independence are just those given above.
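The two precise definitions can be tested directly against a joint probability table. The sketch below uses the standard product formulation of independence and exact rational arithmetic so that no numerical tolerance is needed; the function names and the dictionary-based representation of distributions are assumptions made for illustration.

```python
from fractions import Fraction as F
from itertools import product


def independent(joint):
    """joint maps (x, y) to a probability.
    Checks P(x, y) = P(x) P(y) for all values x and y."""
    xs = sorted({x for x, _ in joint})
    ys = sorted({y for _, y in joint})
    p_x = {x: sum(joint.get((x, y), 0) for y in ys) for x in xs}
    p_y = {y: sum(joint.get((x, y), 0) for x in xs) for y in ys}
    return all(joint.get((x, y), 0) == p_x[x] * p_y[y]
               for x, y in product(xs, ys))


def conditionally_independent(joint):
    """joint maps (x, y, z) to a probability.
    Checks P(x, y | z) = P(x | z) P(y | z) wherever P(z) > 0, using the
    equivalent identity P(x, y, z) P(z) = P(x, z) P(y, z)."""
    xs, ys, zs = (sorted({k[i] for k in joint}) for i in range(3))
    for z in zs:
        p_z = sum(joint.get((x, y, z), 0) for x in xs for y in ys)
        if p_z == 0:
            continue  # conditional probabilities are undefined here
        for x, y in product(xs, ys):
            p_xz = sum(joint.get((x, y2, z), 0) for y2 in ys)
            p_yz = sum(joint.get((x2, y, z), 0) for x2 in xs)
            if joint.get((x, y, z), 0) * p_z != p_xz * p_yz:
                return False
    return True


# Usage with hypothetical numbers: X and Y share a common cause Z.
p_z = {0: F(3, 4), 1: F(1, 4)}
p_x1 = {0: F(1, 10), 1: F(7, 10)}     # P(X = 1 | Z = z)
p_y1 = {0: F(1, 100), 1: F(15, 100)}  # P(Y = 1 | Z = z)
fork = {(x, y, z): p_z[z]
        * (p_x1[z] if x else 1 - p_x1[z])
        * (p_y1[z] if y else 1 - p_y1[z])
        for x, y, z in product((0, 1), repeat=3)}
marginal_xy = {(x, y): fork[(x, y, 0)] + fork[(x, y, 1)]
               for x, y in product((0, 1), repeat=2)}
```

In this usage example, `conditionally_independent(fork)` holds while `independent(marginal_xy)` fails, so X and Y are dependent unconditionally but independent conditional on Z.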

3. Imprecise Bayes Nets: The Basics

Following Ref. [6], I define an imprecise Bayes net as a triple $\mathcal{I} = \langle \mathcal{V}, \mathcal{E}, \mathcal{P} \rangle$. As in a precise Bayes net, $\mathcal{V}$ is a set of variables and $\mathcal{E}$ is an acyclic set of directed edges. However, whereas a precise Bayes net contains a joint probability distribution P, an imprecise Bayes net contains a set of joint probability distributions $\mathcal{P}$. That is, $\mathcal{P}$ maps every possible combination of values for all of the variables in the graph to a set of possible probabilities for that combination of values. As in the precise context, we can use $\mathcal{P}$ to derive sets of conditional and marginal probabilities over the variables in the graph. Following Refs. [19,20], I call these sets of probability distributions over variables “credal sets”. For example, if X is a variable in an IBN, then the set of marginal probability distributions over X that is implied by the set of joint distributions $\mathcal{P}$ is the credal set for X. If Y is another variable in the graph, then the set of conditional probability distributions over X, given that $Y = y$, is the conditional credal set over X, given that $Y = y$.

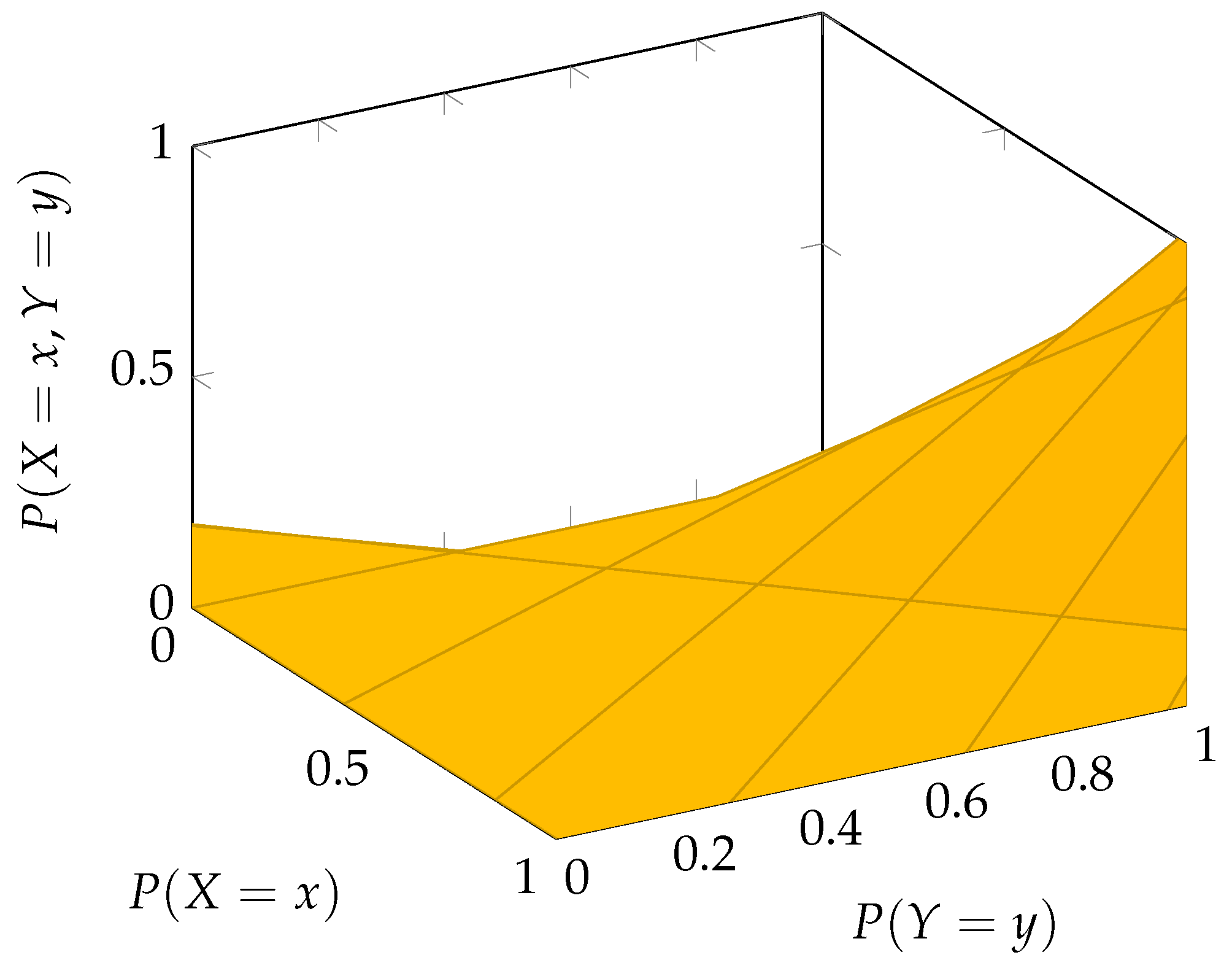

To illustrate via a toy example, suppose that an IBN contains a binary variable

S denoting whether a person smokes, and another binary variable

denoting whether they develop lung cancer. If the probability that someone develops lung cancer, given that they smoke, is best represented by the interval

, then we can define the following conditional credal set over

, given

(i.e., given that the person smokes):

This is the set of all precise probability distributions over , given , such that any probability that is consistent with takes a precise value within the interval , and such that any probability that is consistent with takes a precise value within the interval . From this element-by-element, or pointwise, analysis of the credal set, we can infer the interval-valued claim that the probability of lung cancer, given smoking, is in the interval .
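The pointwise reading of a credal set can be sketched in code. Because the interval used in the text is not recoverable here, the bounds 0.2 and 0.4 below are purely hypothetical placeholders, as are the class and function names; the sketch only shows how an interval-valued claim relates to the set of precise distributions it summarizes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntervalCredalSet:
    """All precise distributions over a binary variable whose probability
    for the value 1 lies in [lower, upper]."""
    lower: float
    upper: float

    def contains(self, dist, tol=1e-12):
        """dist maps 1 and 0 to probabilities; True if it is a member."""
        is_normalized = abs(dist[1] + dist[0] - 1.0) <= tol
        in_bounds = self.lower - tol <= dist[1] <= self.upper + tol
        return is_normalized and in_bounds

    def complement_interval(self):
        """Interval implied for the value 0."""
        return (1.0 - self.upper, 1.0 - self.lower)


def probability_interval(distributions, value):
    """Lower and upper probability of `value` across a finite collection
    of precise distributions (which need not form a convex set)."""
    probs = [dist[value] for dist in distributions]
    return (min(probs), max(probs))


# Hypothetical conditional credal set for lung cancer, given smoking.
cancer_given_smoking = IntervalCredalSet(lower=0.2, upper=0.4)
```

For example, `cancer_given_smoking.contains({1: 0.3, 0: 0.7})` is `True`, while a distribution assigning probability 0.5 to lung cancer falls outside the set. The helper `probability_interval` works on any finite collection of distributions, which fits a setting in which credal sets are not assumed to be convex.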

This method of representing imprecise probabilities as sets of probability distributions has its roots in foundational works by Levi [19,20] and Walley [21]. However, unlike these authors, I do not assume that all credal sets are convex. That is, I drop the assumption that for any two probabilities in the credal set, the linear average of these two probabilities is also in the credal set. While perhaps non-standard, the decision to eschew the convexity requirement for credal sets has some precedent in the recent literature; see for instance [22,23].

To interpret an IBN causally, we will need to extend the notions of unconditional and conditional independence between variables to apply to cases where there is not a precise joint probability distribution over all pairs of variables in a graph, but where there is a specified family of joint probability distributions over the variables in a graph. This would allow us to define imprecise extensions of the CMC and Minimality conditions. However, as described in Section 1, there is no single notion of what it means for two variables to be independent when independence is defined in terms of imprecise probabilities. In the next section, I outline the two leading ways of cashing out independence in an imprecise context: strong independence and epistemic independence. I show that there are substantive differences between the two notions of independence, with important consequences for the causal interpretation of IBNs.

5. Problems for an Imprecise Version of CMC

I argue that neither strong nor epistemic independence can be neatly “plugged into” CMC to allow for the causal interpretation of imprecise Bayes nets. To see why, let us begin by defining two possible versions of CMC, using each of the two independence concepts considered here:

Strong CMC: For any variable X in an IBN, the value of X is strongly independent of its non-descendants, conditional on its parents.

Epistemic CMC: For any variable X in an IBN, the value of X is epistemically independent of its non-descendants, conditional on its parents.

Similarly, we can define the following versions of the Minimality condition:

Strong Minimality: If we remove an edge from an IBN, the resulting subgraph does not satisfy Strong CMC.

Epistemic Minimality: If we remove an edge from an IBN, the resulting subgraph does not satisfy Epistemic CMC.

Next, let us adopt the following necessary and sufficient condition for an IBN to have a causal interpretation:

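Causal Interpretation Condition: An IBN $\langle \mathcal{V}, \mathcal{E}, \mathcal{P} \rangle$ can be interpreted causally if and only if, for every $P \in \mathcal{P}$, the Bayes net $\langle \mathcal{V}, \mathcal{E}, P \rangle$ satisfies the precise versions of CMC and Minimality.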

It turns out that graphs that satisfy any consistent combination of Strong CMC, Strong Minimality, Epistemic CMC and Epistemic Minimality can still violate the Causal Interpretation Condition. Thus, neither set of adequacy conditions is sufficient for the causal interpretation of an IBN.

To see why, suppose that X and Y are variables in an IBN $\mathcal{I}_1$, and that they are epistemically but not strongly independent conditional on their parents. If $\mathcal{I}_1$ satisfies Strong CMC and Strong Minimality, then there must be a directed path in either direction between X and Y. This directed path may simply be an edge between X and Y, such that the two variables are adjacent. However, $\mathcal{I}_1$ does not satisfy the Causal Interpretation Condition. Since X and Y are epistemically but not strongly independent conditional on their parents, there is some precise distribution $P$ in the credal set of $\mathcal{I}_1$ such that X and Y are independent conditional on their parents, in the precise sense of independence. The precise Bayes net obtained by pairing the graph of $\mathcal{I}_1$ with $P$ would violate the precise version of Minimality, since removing an edge on the directed path between X and Y would not create a subgraph that violates the precise version of CMC. Thus, $\mathcal{I}_1$ violates the Causal Interpretation Condition. This shows that Strong CMC and Strong Minimality are not sufficient conditions for an IBN to satisfy the Causal Interpretation Condition.

Next, consider an IBN $\mathcal{I}_2$, with variables X and Y that are epistemically but not strongly independent, conditional on their parents. If $\mathcal{I}_2$ satisfies Epistemic CMC and Epistemic Minimality, then there must not be a directed path between X and Y, since the existence of such a path would violate Epistemic Minimality. Indeed, the only difference between $\mathcal{I}_1$ and $\mathcal{I}_2$ is that the former contains a directed path between X and Y, while the latter does not. It turns out that $\mathcal{I}_2$ also does not satisfy the Causal Interpretation Condition. Since X and Y are only epistemically independent, there is some precise distribution $P'$ in the credal set of $\mathcal{I}_2$ such that X and Y are not independent conditional on their parents, in the precise sense, and yet there is no directed path between them. The precise Bayes net obtained by pairing the graph of $\mathcal{I}_2$ with $P'$ would violate the precise version of CMC, so that $\mathcal{I}_2$ violates the Causal Interpretation Condition. Thus, Epistemic CMC and Epistemic Minimality are not a sufficient set of conditions for an IBN to satisfy the Causal Interpretation Condition. One can check that the same result holds if we suppose that $\mathcal{I}_2$ satisfies Epistemic CMC and Strong Minimality. Therefore, this mixed-strength combination of adequacy conditions also does not suffice to satisfy the Causal Interpretation Condition.
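The structure of this argument can be illustrated with a two-variable toy case. The sketch below does not compute strong or epistemic independence; it only checks the Causal Interpretation Condition pointwise for a hypothetical, non-convex credal set containing one precise distribution on which X and Y are independent and one on which they are dependent. All names and numbers are assumptions made for illustration.

```python
def independent(joint, tol=1e-12):
    """joint maps (x, y) in {0, 1}^2 to a probability.
    True if P(x, y) = P(x) P(y) for all values."""
    p_x = {x: joint[(x, 0)] + joint[(x, 1)] for x in (0, 1)}
    p_y = {y: joint[(0, y)] + joint[(1, y)] for y in (0, 1)}
    return all(abs(joint[(x, y)] - p_x[x] * p_y[y]) <= tol
               for x in (0, 1) for y in (0, 1))


def satisfies_cmc(joint, has_edge):
    """Precise CMC for a graph on {X, Y}. With the edge X -> Y neither
    variable has a non-parent non-descendant, so CMC holds trivially.
    Without the edge, CMC requires X and Y to be independent."""
    return True if has_edge else independent(joint)


def satisfies_minimality(joint, has_edge):
    """Precise Minimality: deleting the single edge (if there is one)
    must yield a graph that violates CMC."""
    return (not has_edge) or (not satisfies_cmc(joint, False))


def causal_interpretation_condition(credal_set, has_edge):
    """Every precise distribution in the credal set must satisfy the
    precise versions of CMC and Minimality on the given graph."""
    return all(satisfies_cmc(p, has_edge) and satisfies_minimality(p, has_edge)
               for p in credal_set)


# Hypothetical credal set with two members.
P_INDEPENDENT = {(0, 0): 0.28, (0, 1): 0.42, (1, 0): 0.12, (1, 1): 0.18}
P_DEPENDENT = {(0, 0): 0.40, (0, 1): 0.10, (1, 0): 0.10, (1, 1): 0.40}
CREDAL_SET = [P_INDEPENDENT, P_DEPENDENT]
```

Under these assumptions, `causal_interpretation_condition(CREDAL_SET, has_edge=True)` fails because the independent member violates precise Minimality, and `causal_interpretation_condition(CREDAL_SET, has_edge=False)` fails because the dependent member violates precise CMC. This mirrors the false-positive and false-negative cases in the argument: no graph over X and Y is licensed by every member of the credal set.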

For the sake of completeness, it is worth noting that if there are variables X and Y in an IBN that are epistemically but not strongly independent conditional on their parents, then that graph cannot satisfy Strong CMC and Epistemic Minimality. This is for the straightforward reason that, when two variables in an IBN are epistemically but not strongly independent conditional on their parents, Strong CMC is logically inconsistent with Epistemic Minimality. To see this, one need only note that since X and Y are epistemically but not strongly independent, Strong CMC requires that there be a path between them but Epistemic Minimality requires that there not be such a path. Thus, the IBN cannot satisfy both conditions.

The upshot of this discussion is as follows. To interpret an IBN causally, we want it to be the case that every precise distribution in the credal set supports a causal interpretation of the graph. However, the possibility of epistemically but not strongly independent variables is in conflict with this desideratum, no matter how we formulate imprecise versions of CMC and Minimality. To put this point slightly differently, if an IBN satisfies Strong CMC and contains two variables X and Y that are epistemically but not strongly independent, then an edge between those variables may be a false positive. That is, the two variables may be adjacent, indicating a direct mechanistic connection between them, even though the joint credal set over the graph is consistent with some precise joint distribution according to which there is no direct mechanistic connection between X and Y. On the other hand, if an IBN satisfies Epistemic CMC and has two variables X and Y that are epistemically but not strongly independent, the lack of an edge between those variables may be a false negative. That is, the two variables are non-adjacent, indicating that there is no direct mechanistic connection between them, even though the joint credal set over the graph is consistent with some precise joint distribution according to which there is a direct mechanistic connection between X and Y.

The possibility of these kinds of false positives and false negatives injects considerable ambiguity into the causal interpretation of an IBN. Recall that in a precise Bayes net, if two variables are adjacent and there is no direct mechanistic connection between them, or if two variables are not adjacent and there is a mechanistic connection between them, then either there is a failure of causal sufficiency or the joint probability distribution is incorrect. By contrast, the results given above show that the following four claims can all consistently hold of an IBN: (1) two variables X and Y are adjacent; (2) there is no mechanistic connection between X and Y; (3) the variable set is causally sufficient; and (4) the joint credal set is accurate. Alternatively, the following four claims can also all be true of an IBN: (1) two variables X and Y are not adjacent; (2) there is a mechanistic connection between X and Y; (3) the variable set is causally sufficient; and (4) the joint credal set is accurate. The possible consistency of these claims shows an ambiguity in the causal interpretation of an IBN that is absent from the precise context.