Face Classification Using Color Information

Abstract

1. Introduction

2. Texture Analysis

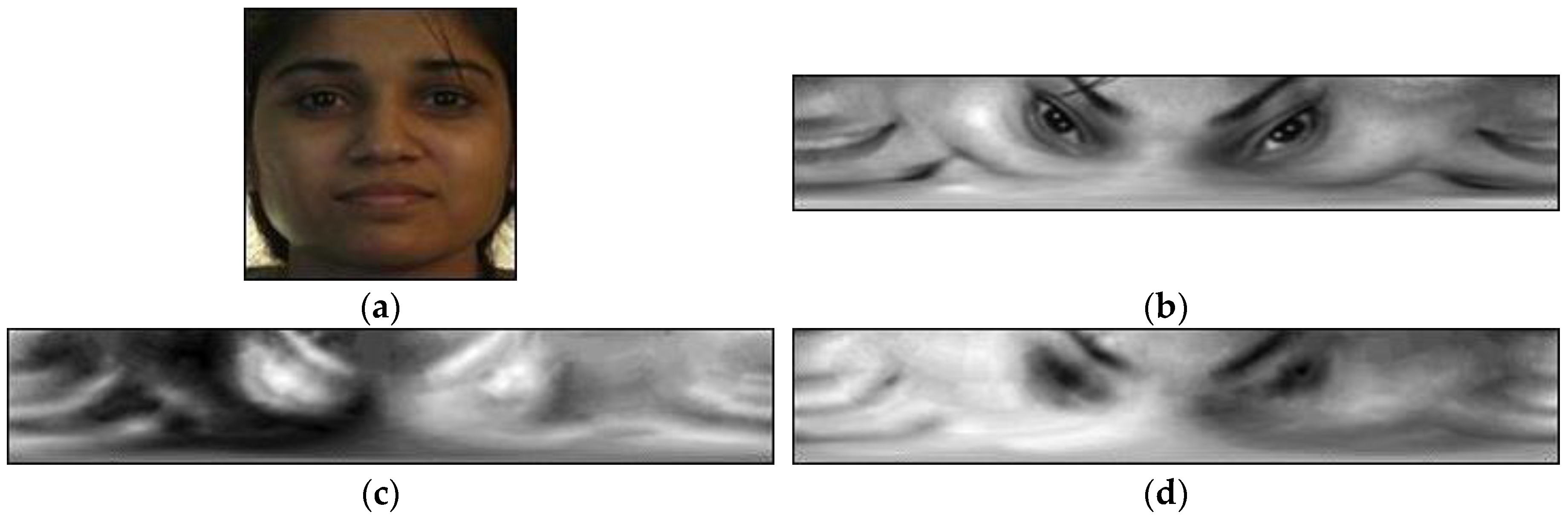

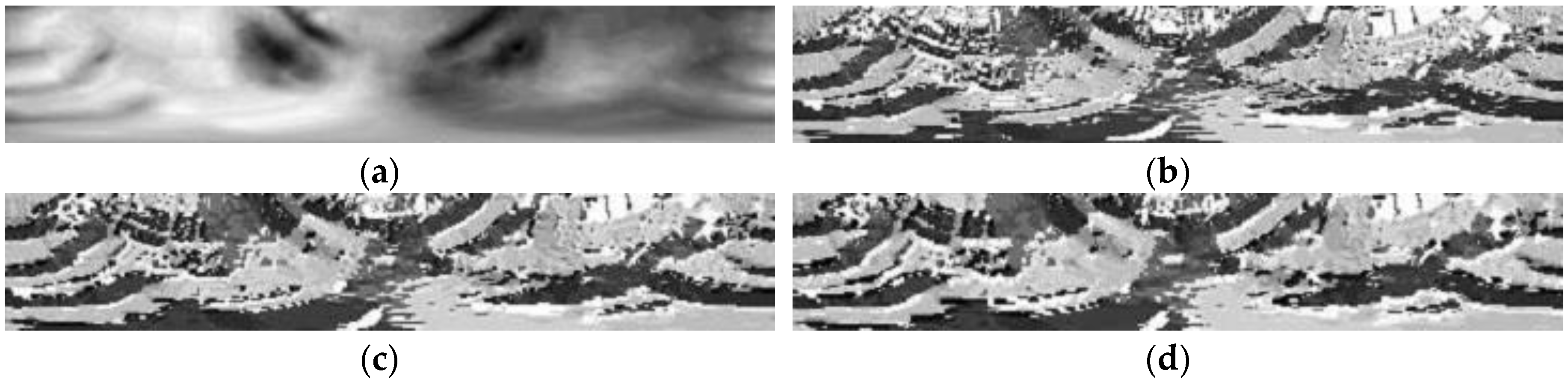

2.1. Local Binary Pattern (LBP)
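
As a point of reference for this subsection, the basic 3×3 LBP operator can be sketched as below. This is a minimal illustration of the commonly described operator, not necessarily the configuration used in this paper: the neighbour ordering, the `>=` threshold convention, and the helper names `lbp_code` and `lbp_histogram` are assumptions made for the example.

```python
def lbp_code(patch):
    """Return the 8-bit LBP code of the centre pixel of a 3x3 patch.

    Each neighbour contributes a 1 bit when it is >= the centre value.
    The clockwise ordering from the top-left pixel is an assumed convention.
    """
    center = patch[1][1]
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for bit, (r, c) in enumerate(offsets):
        if patch[r][c] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Return a 256-bin histogram of LBP codes over the interior pixels
    of a 2-D grey-level image given as a list of equal-length rows."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            # Extract the 3x3 neighbourhood centred on (r, c).
            patch = [row[c - 1:c + 2] for row in image[r - 1:r + 2]]
            hist[lbp_code(patch)] += 1
    return hist


if __name__ == "__main__":
    example = [[6, 5, 2],
               [7, 6, 1],
               [9, 8, 7]]
    print(lbp_code(example))
```

The histogram of codes, rather than the code image itself, is what is typically used as the texture feature vector.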

2.2. Compound Local Binary Pattern (CLBP)

2.3. Non-Redundant Local Binary Pattern (NRLBP)
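
For orientation, the non-redundant mapping is usually described as treating an LBP code and its bitwise complement as the same pattern and keeping the smaller of the two. The sketch below assumes 8-bit codes; the function name `nrlbp` and the `bits` parameter are hypothetical and chosen only for illustration.

```python
def nrlbp(code, bits=8):
    """Map an LBP code to its non-redundant form.

    A code and its bitwise complement are merged by keeping the smaller
    value, which halves the number of distinct patterns.
    """
    full = (1 << bits) - 1  # all-ones code, e.g. 255 for 8 bits
    if not 0 <= code <= full:
        raise ValueError("code out of range for the given bit width")
    return min(code, full - code)


if __name__ == "__main__":
    # A code and its complement map to the same value.
    print(nrlbp(241), nrlbp(14))
```

With 8 neighbours this reduces the histogram from 256 bins to 128.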

3. Color Models

4. Proposed Methods

5. Experimental Setup

5.1. Database

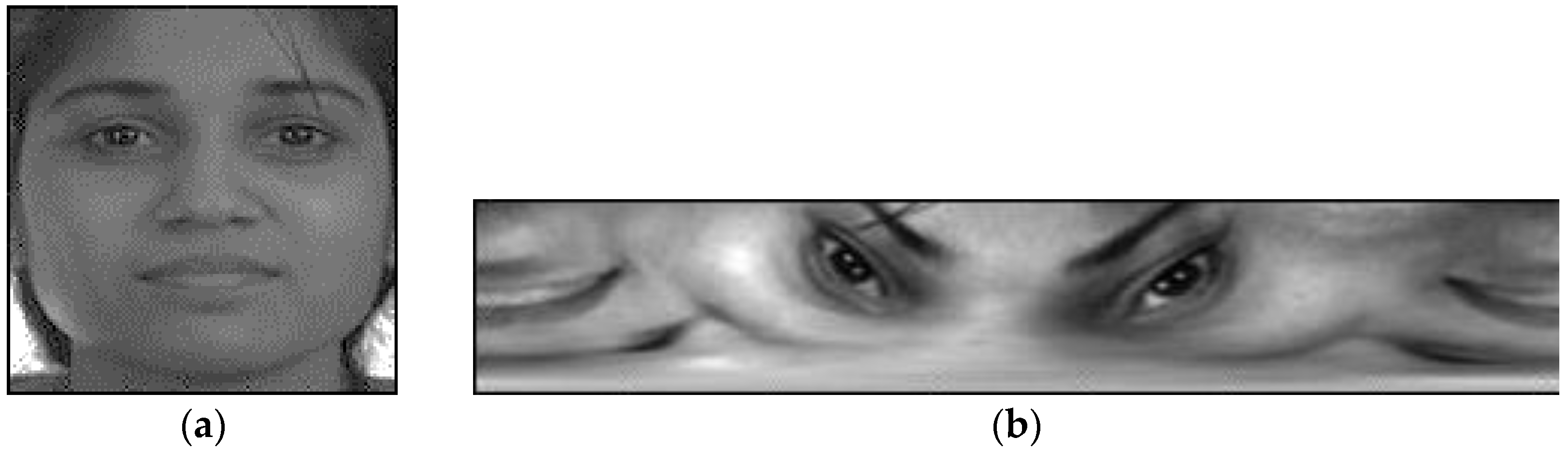

5.2. Preprocessing

5.3. Feature Extraction

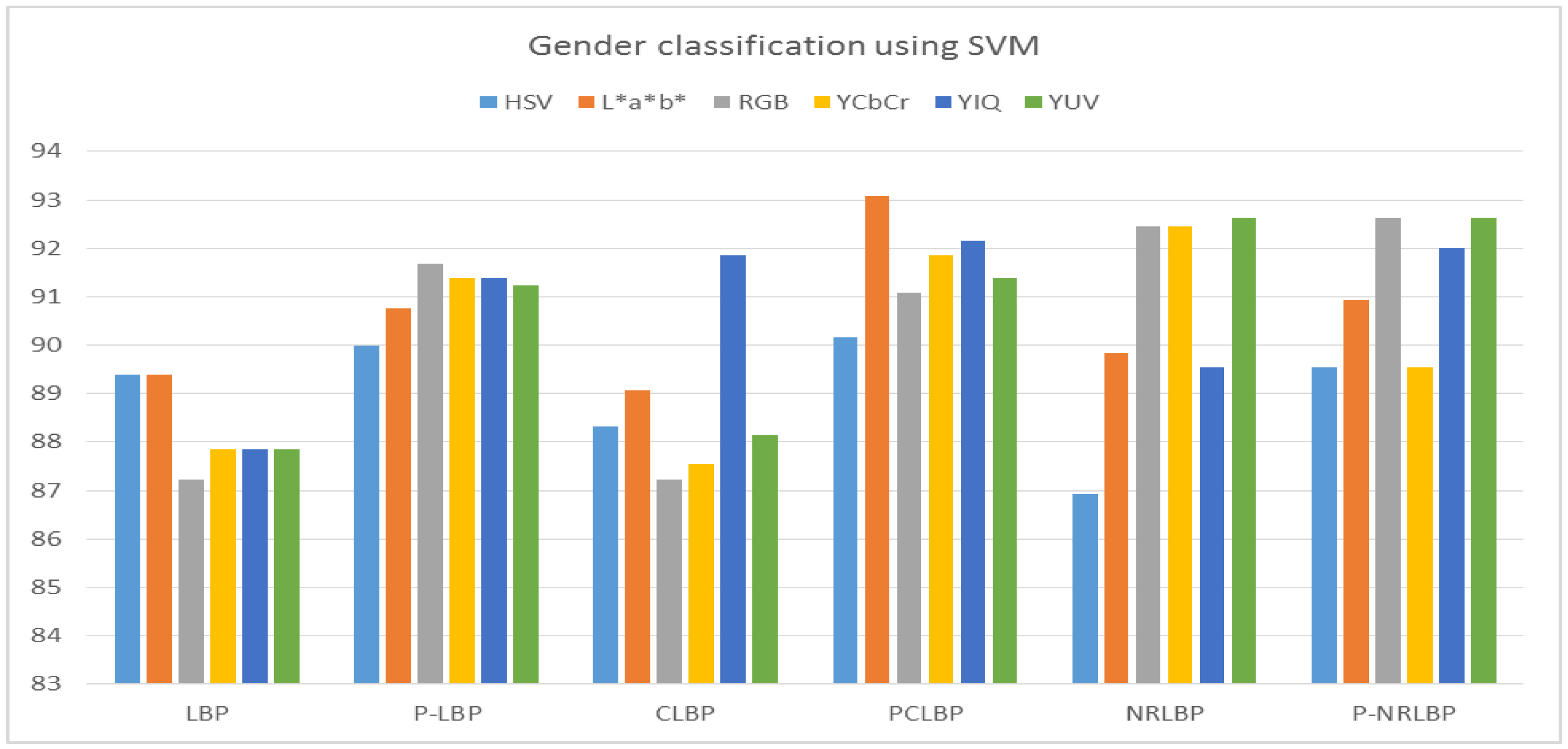

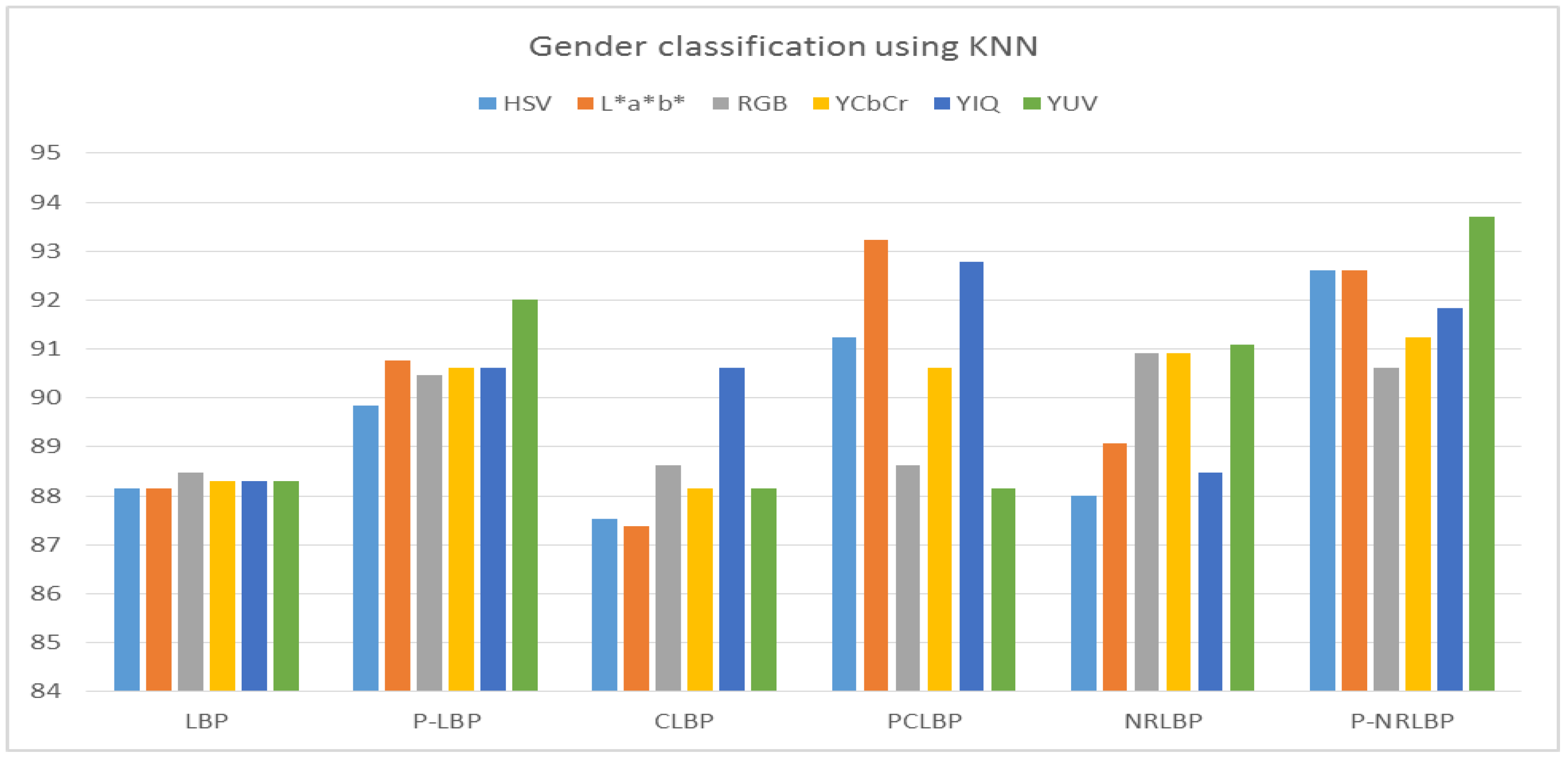

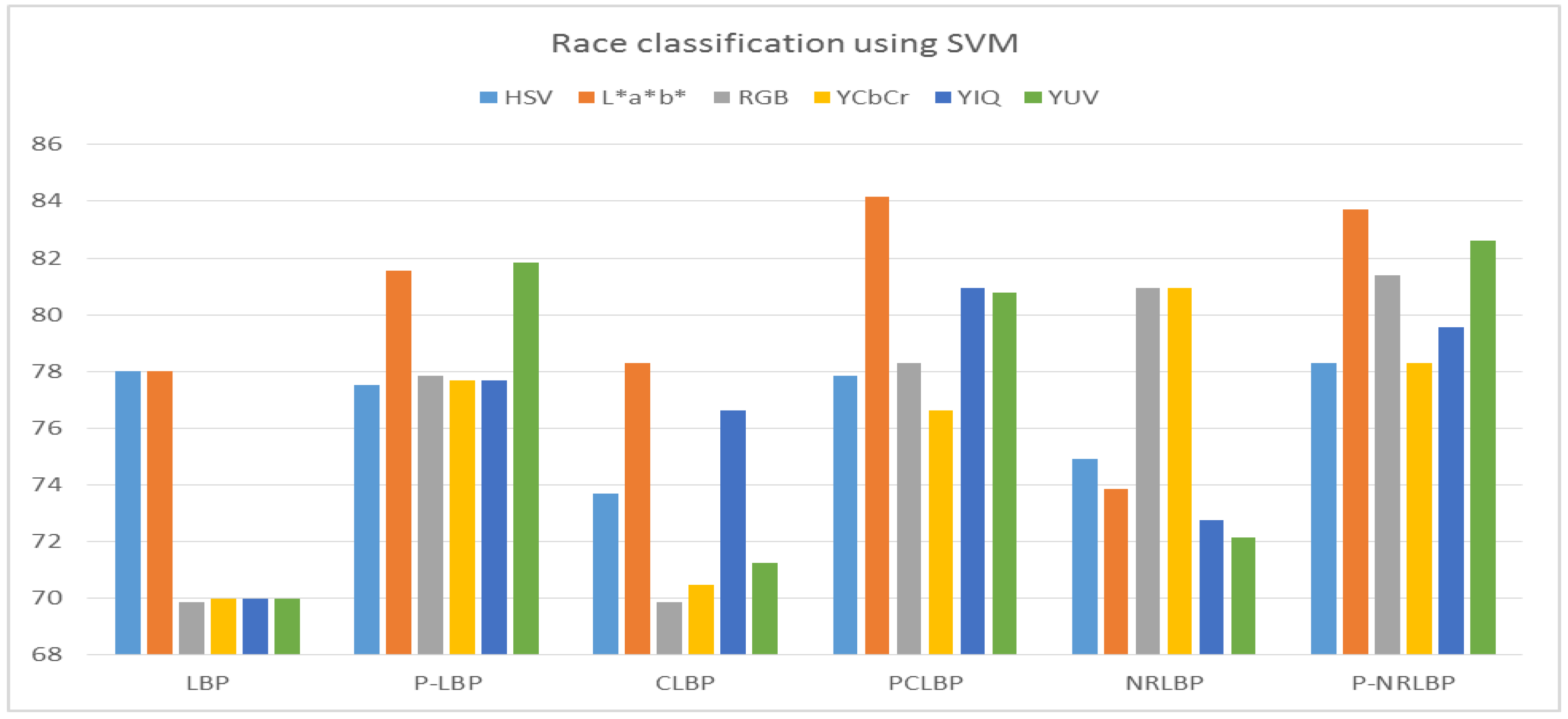

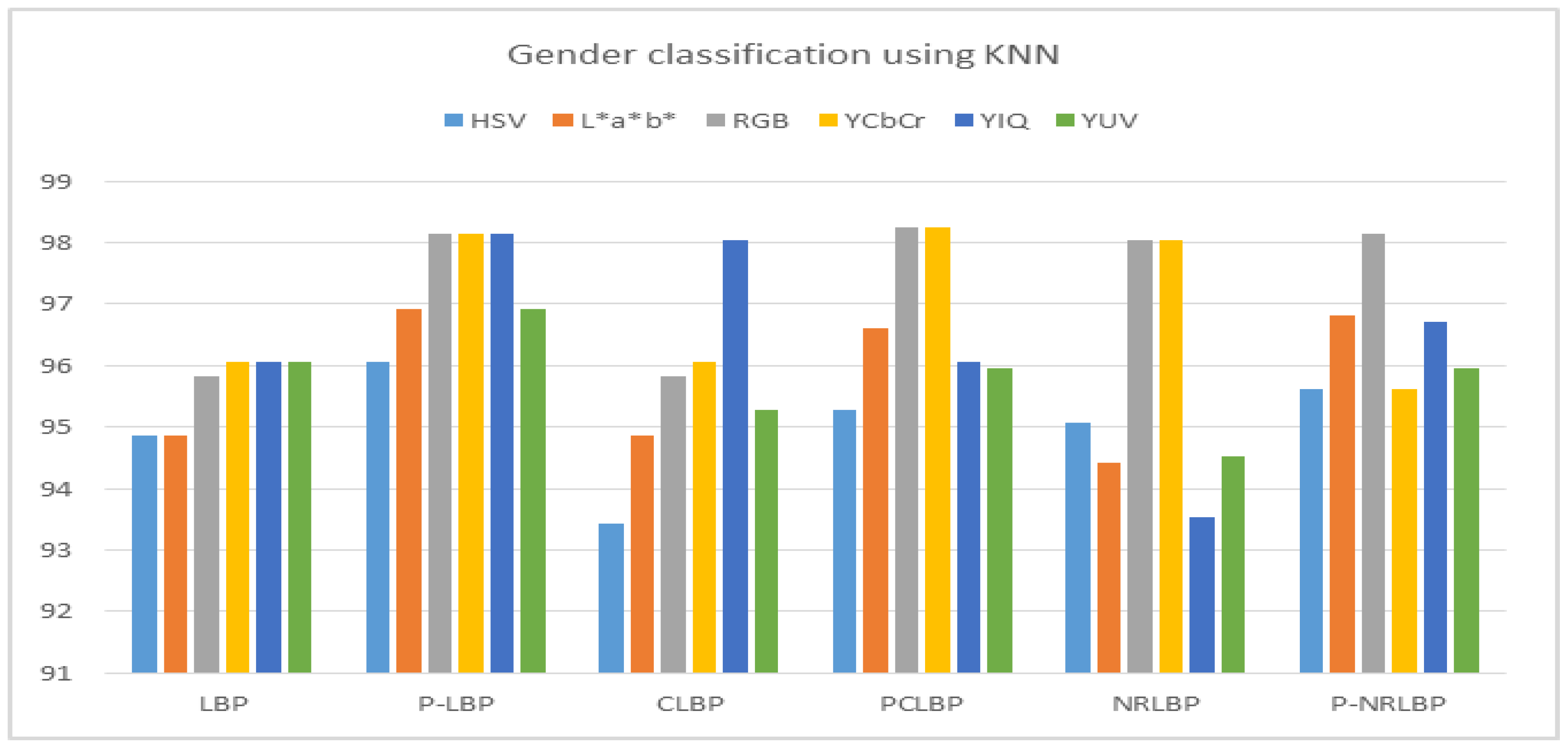

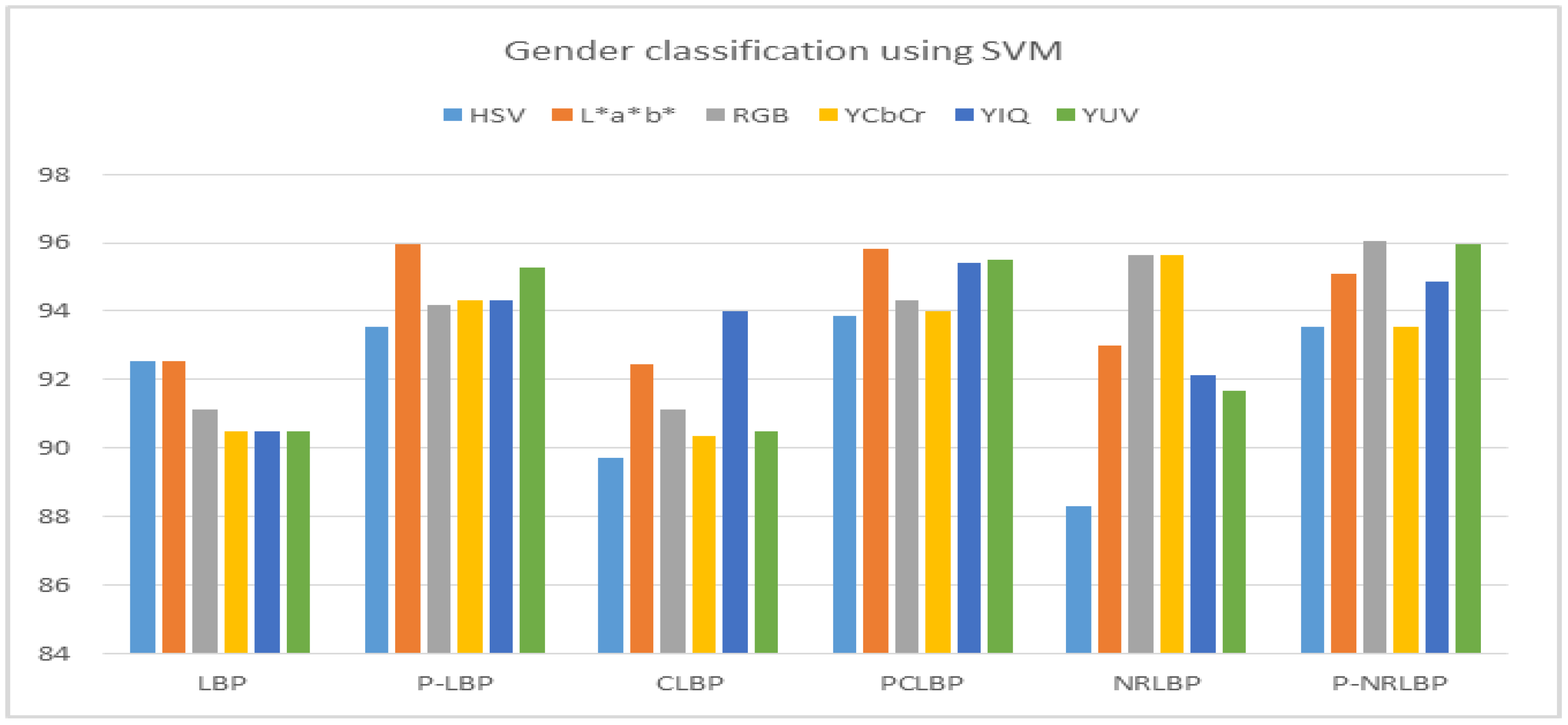

6. Experimental Results

7. Discussion

8. Conclusions

Author Contributions

Conflicts of Interest


| Labels | Classes |
|---|---|
| Gender | Male, Female |
| Race | European, African, Middle Eastern, South Asian, East Asian, and Hispanic |

| Approach | Gender Classification (SVM) | Gender Classification (KNN) | Race Classification (SVM) | Race Classification (KNN) |
|---|---|---|---|---|
| LBP | 90.15 | 90.46 | 68.76 | 75.53 |
| PLBP | 92.92 | 91.69 | 79.38 | 79.69 |
| CLBP | 89.84 | 89.84 | 66.46 | 75.07 |
| PCLBP | 92.30 | 91.69 | 75.23 | 80.30 |
| NRLBP | 88.92 | 90.00 | 68.76 | 80.00 |
| P-NRLBP | 92.30 | 92.00 | 80.92 | 82.46 |

| Approach | Gender Classification (SVM) | Gender Classification (KNN) | Race Classification (SVM) | Race Classification (KNN) |
|---|---|---|---|---|
| LBP | 90.00 | 90.15 | 69.38 | 75.23 |
| P-LBP | 91.69 | 92.15 | 79.38 | 79.07 |
| CLBP | 89.84 | 89.07 | 67.23 | 75.38 |
| PCLBP | 94.00 | 91.23 | 74.30 | 80.61 |
| NRLBP | 88.92 | 90.30 | 69.23 | 80.15 |
| P-NRLBP | 92.30 | 91.84 | 80.92 | 82.46 |

| Approach | Gender Classification (SVM) | Gender Classification (KNN) |
|---|---|---|
| LBP | 91.78 | 96.60 |
| PLBP | 95.18 | 98.79 |
| CLBP | 91.01 | 96.27 |
| PCLBP | 94.63 | 98.46 |
| NRLBP | 91.23 | 96.27 |
| P-NRLBP | 95.83 | 98.24 |

| Approach | Gender Classification (SVM) | Gender Classification (KNN) |
|---|---|---|
| LBP | 91.67 | 96.38 |
| PLBP | 95.18 | 98.24 |
| CLBP | 90.79 | 96.16 |
| PCLBP | 94.41 | 98.46 |
| NRLBP | 91.23 | 96.16 |
| P-NRLBP | 95.72 | 98.13 |

| | Male | Female |
|---|---|---|
| Male | 97.17 | 2.82 |
| Female | 12 | 88 |

| | Male | Female |
|---|---|---|
| Male | 99.13 | 0.87 |
| Female | 1.54 | 98.45 |

| | European | African | Middle Eastern | South Asian | East Asian | Hispanic |
|---|---|---|---|---|---|---|
| European | 95.55 | 0.63 | 1.58 | 0.63 | 1.26 | 0.31 |
| African | 21.66 | 71.66 | 5 | 1.66 | 0 | 0 |
| Middle Eastern | 15 | 3 | 76 | 2 | 1 | 3 |
| South Asian | 5.71 | 2.85 | 5.71 | 71.42 | 14.28 | 0 |
| East Asian | 16.19 | 0 | 1.90 | 2.85 | 78.09 | 0.95 |
| Hispanic | 20 | 0 | 5.71 | 5.71 | 11.42 | 57.14 |
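
The six-class matrix above can be summarised with a short calculation. The sketch below assumes that each row is a true class, each column a predicted class, and each entry a row-normalised percentage (the rows sum to roughly 100, which is consistent with that reading). The variable and function names are illustrative only.

```python
LABELS = ["European", "African", "Middle Eastern",
          "South Asian", "East Asian", "Hispanic"]

# Values copied from the matrix above; rows assumed to be true classes.
CONFUSION = [
    [95.55, 0.63, 1.58, 0.63, 1.26, 0.31],
    [21.66, 71.66, 5.0, 1.66, 0.0, 0.0],
    [15.0, 3.0, 76.0, 2.0, 1.0, 3.0],
    [5.71, 2.85, 5.71, 71.42, 14.28, 0.0],
    [16.19, 0.0, 1.90, 2.85, 78.09, 0.95],
    [20.0, 0.0, 5.71, 5.71, 11.42, 57.14],
]


def mean_per_class_rate(matrix):
    """Average of the diagonal: the unweighted mean per-class correct rate (%)."""
    diagonal = [matrix[i][i] for i in range(len(matrix))]
    return sum(diagonal) / len(diagonal)


def largest_confusion(matrix, labels):
    """Return (true label, predicted label, percentage) for the largest
    off-diagonal entry, i.e. the most frequent single type of error."""
    best = None
    for i, row in enumerate(matrix):
        for j, value in enumerate(row):
            if i != j and (best is None or value > best[2]):
                best = (labels[i], labels[j], value)
    return best


if __name__ == "__main__":
    print(round(mean_per_class_rate(CONFUSION), 2))
    print(largest_confusion(CONFUSION, LABELS))
```

Under these assumptions the unweighted mean of the diagonal is about 74.98%, and the largest single confusion is African faces labelled as European (21.66%). Because the mean ignores class sizes, it will generally differ from an overall accuracy figure computed over all test images.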

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sajjanhar, A.; Mohammed, A.A. Face Classification Using Color Information. Information 2017, 8, 155. https://doi.org/10.3390/info8040155
