Designing the Health-related Internet of Things: Ethical Principles and Guidelines

Abstract

1. Introduction

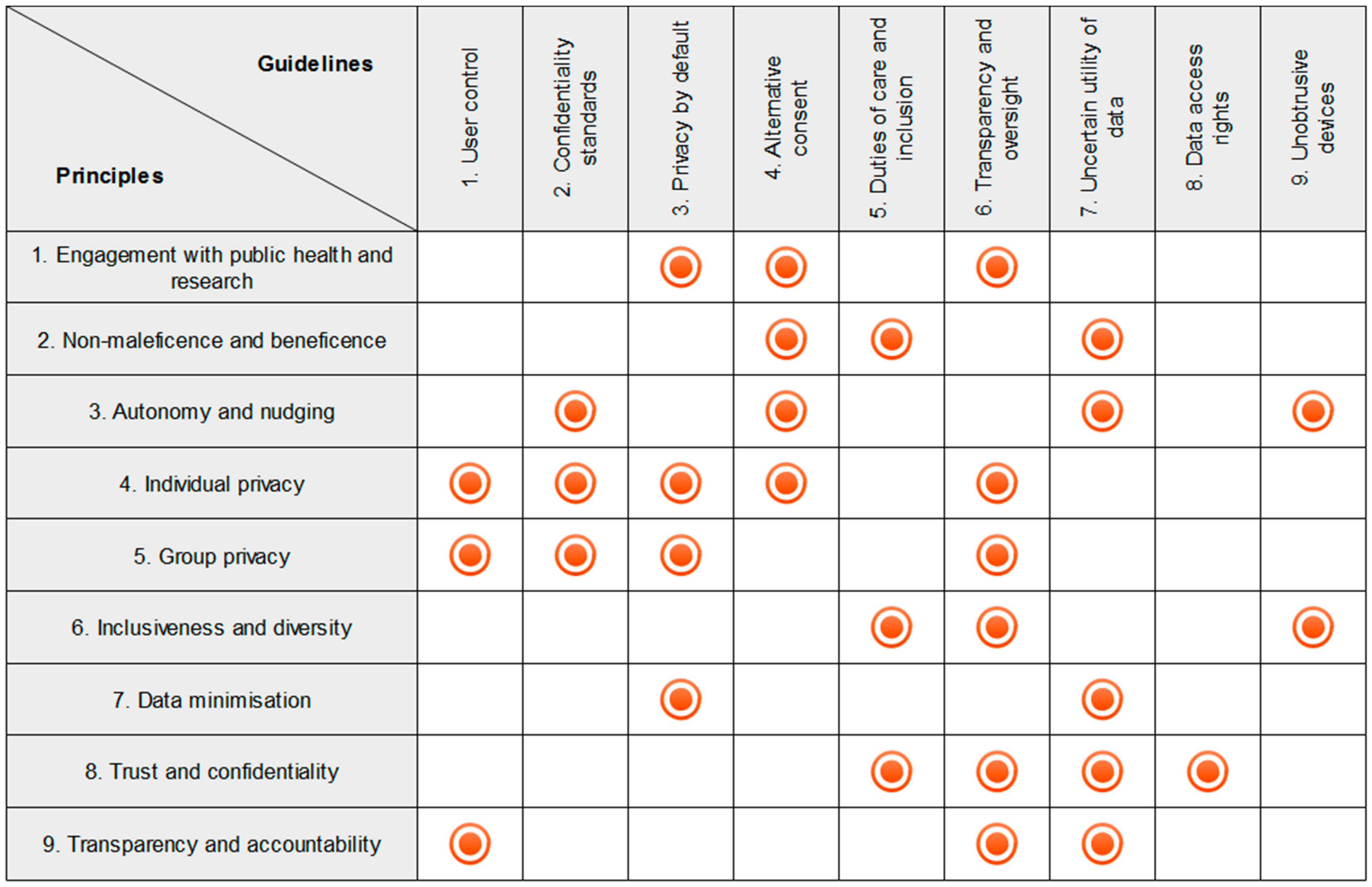

- Facilitate public health actions and user engagement with research via the H-IoT;

- Non-maleficence and beneficence;

- Respect autonomy and avoid subtle nudging of user behaviour;

- Respect individual privacy;

- Respect group privacy;

- Embed inclusiveness and diversity in design;

- Collect the minimal data desired by users;

- Establish and maintain trust and confidentiality between H-IoT users and providers;

- Ensure data processing protocols are transparent and accountable.

- Give users control over data collection and transmission;

- Iteratively adhere to public, research, and industry confidentiality standards;

- Design devices and data sharing protocols to protect user privacy by default;

- Use alternative consent mechanisms when sharing H-IoT data;

- Meet professional duties of care and facilitate inclusion of medical professionals in H-IoT mediated care;

- Include robust transparency mechanisms in H-IoT data protocols to grant users oversight over their data;

- Report the uncertain utility of H-IoT data to users at the point of adoption;

- Provide users with practically useful mechanisms to exercise meaningful data access rights;

- Design devices to be unobtrusive according to the needs of specific user groups.

2. Principles for Ethical Design of the H-IoT

2.1. Facilitate Public Health Actions and User Engagement with Research via the H-IoT

2.2. Non-Maleficence and Beneficence

2.3. Respect Autonomy and Avoid Subtle Nudging of User Behaviour

2.4. Respect Individual Privacy

2.5. Respect Group Privacy

2.6. Embed Inclusiveness and Diversity in Design

2.7. Collect the Minimal Data Desired by Users
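A minimal sketch of data minimisation, assuming a hypothetical wearable whose readings arrive as Python dictionaries. Fields the user has not approved are dropped before anything is stored or sent. The field names and the `minimise_reading` function are illustrative assumptions and are not drawn from any particular device or standard.

```python
from __future__ import annotations

from typing import Any, Mapping


def minimise_reading(reading: Mapping[str, Any], allowed_fields: set[str]) -> dict[str, Any]:
    """Return a copy of a sensor reading that keeps only user-approved fields.

    Unapproved fields are dropped entirely (not masked), so they are never
    stored or transmitted further.
    """
    return {key: value for key, value in reading.items() if key in allowed_fields}


# Hypothetical raw reading from a wrist-worn device.
raw = {
    "timestamp": "2017-06-30T09:15:00Z",
    "heart_rate_bpm": 72,
    "gps_lat": 51.752,
    "gps_lon": -1.258,
    "skin_temp_c": 33.1,
}

# Hypothetical choice: the user shares only heart rate and when it was measured.
user_allowed = {"timestamp", "heart_rate_bpm"}

shared = minimise_reading(raw, user_allowed)
print(shared)  # {'timestamp': '2017-06-30T09:15:00Z', 'heart_rate_bpm': 72}
```

An allow-list is used rather than a block-list, so any new field added later (for example by a firmware update) stays excluded until the user chooses to share it.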

2.8. Establish and Maintain Trust and Confidentiality between H-IoT Users and Providers

2.9. Ensure Data Processing Protocols Are Transparent and Accountable

3. Guidelines for Ethical Design of the H-IoT

3.1. Give Users Control over Data Collection and Transmission

3.2. Iteratively Adhere to Public, Research, and Industry Confidentiality Standards

3.3. Design Devices and Data Sharing Protocols to Protect User Privacy by Default
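Privacy by default can be sketched, under assumed names, as a settings object in which every data-sharing option starts switched off and changes only through an explicit user choice. `SharingSettings` and its fields are hypothetical; a real device would also have to enforce these flags at every point where data leaves it.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SharingSettings:
    """Hypothetical data-sharing settings for an H-IoT device.

    Every flag defaults to False, so a device left unconfigured has no
    data-sharing recipient enabled.
    """

    upload_to_cloud: bool = False
    share_with_clinician: bool = False
    share_with_researchers: bool = False
    share_with_third_parties: bool = False

    def enabled(self) -> list[str]:
        """Return the recipients the user has explicitly switched on."""
        flags = {
            "cloud": self.upload_to_cloud,
            "clinician": self.share_with_clinician,
            "researchers": self.share_with_researchers,
            "third_parties": self.share_with_third_parties,
        }
        return [name for name, on in flags.items() if on]


defaults = SharingSettings()
print(defaults.enabled())  # []

# Opting in is an explicit change that produces a new, immutable settings object.
opted_in = replace(defaults, share_with_clinician=True)
print(opted_in.enabled())  # ['clinician']
```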

3.4. Use Alternative Consent Mechanisms when Sharing H-IoT Data
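Dynamic consent, in which users grant or withdraw permission for specific purposes over time rather than signing a single blanket form, is one example of an alternative consent mechanism. The sketch below assumes a hypothetical `ConsentRecord` class and made-up purpose labels; it illustrates the general idea and is not presented as the paper's own design.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    """Hypothetical per-purpose consent record for one H-IoT user.

    Each purpose maps to the user's most recent decision and when it was made,
    so consent can be granted, withdrawn and re-granted over time.
    """

    user_id: str
    decisions: dict[str, tuple[bool, datetime]] = field(default_factory=dict)

    def grant(self, purpose: str) -> None:
        self.decisions[purpose] = (True, datetime.now(timezone.utc))

    def withdraw(self, purpose: str) -> None:
        self.decisions[purpose] = (False, datetime.now(timezone.utc))

    def permits(self, purpose: str) -> bool:
        """Allow sharing only if the latest decision for this purpose is a grant.

        A purpose the user was never asked about is treated as not consented.
        """
        decision = self.decisions.get(purpose)
        return decision is not None and decision[0]


consent = ConsentRecord(user_id="user-001")
consent.grant("dementia_research")
print(consent.permits("dementia_research"))     # True
print(consent.permits("commercial_marketing"))  # False (never asked)
consent.withdraw("dementia_research")
print(consent.permits("dementia_research"))     # False
```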

3.5. Meet Professional Duties of Care and Facilitate Inclusion of Medical Professionals in H-IoT Mediated Care

3.6. Include Robust Transparency Mechanisms in H-IoT Data Protocols to Grant Users Oversight over Their Data
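One concrete transparency mechanism is a log, visible to the user, that records each access to their data, by whom, and for what stated purpose. The following is a minimal sketch under assumed names (`AccessEvent`, `AccessLog`); a deployed system would also need to protect the log itself against tampering.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AccessEvent:
    """One entry in a hypothetical user-facing data access log."""

    when: datetime
    accessor: str  # who read or received the data
    purpose: str  # the stated reason for the access
    data_fields: tuple[str, ...]  # which fields were disclosed


class AccessLog:
    """Log a user can inspect to see who processed their data, when, and why.

    The public interface only appends and reads; there is no method to edit
    or delete past entries.
    """

    def __init__(self) -> None:
        self._events: list[AccessEvent] = []

    def record(self, accessor: str, purpose: str, data_fields: tuple[str, ...]) -> None:
        self._events.append(
            AccessEvent(datetime.now(timezone.utc), accessor, purpose, data_fields)
        )

    def events_for(self, accessor: str | None = None) -> list[AccessEvent]:
        """Return a new list of events, optionally limited to one accessor."""
        return [e for e in self._events if accessor is None or e.accessor == accessor]


log = AccessLog()
log.record("GP surgery", "routine review", ("heart_rate_bpm",))
log.record("analytics vendor", "product improvement", ("heart_rate_bpm", "skin_temp_c"))
for event in log.events_for():
    print(event.accessor, "|", event.purpose, "|", ", ".join(event.data_fields))
```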

3.7. Report the Uncertain Utility of H-IoT Data to Users at the Point of Adoption

3.8. Provide Users with Practically Useful Mechanisms to Exercise Meaningful Data Access Rights
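A practically useful access mechanism could be as simple as exporting everything held about a user in an open, readable format. This sketch assumes the data fit in memory as lists and dictionaries; `export_user_data` and the package layout are hypothetical.

```python
from __future__ import annotations

import json
from typing import Any


def export_user_data(
    user_id: str,
    readings: list[dict[str, Any]],
    consents: dict[str, bool],
) -> str:
    """Bundle the data held about one user into a single readable JSON document.

    Keys are sorted and the output is indented so the file can be read by a
    person as well as re-imported by another service.
    """
    package = {
        "user_id": user_id,
        "reading_count": len(readings),
        "readings": readings,
        "consents": consents,
    }
    return json.dumps(package, indent=2, sort_keys=True)


readings = [
    {"timestamp": "2017-06-30T09:15:00Z", "heart_rate_bpm": 72},
    {"timestamp": "2017-06-30T09:20:00Z", "heart_rate_bpm": 75},
]
print(export_user_data("user-001", readings, {"dementia_research": True}))
```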

3.9. Design Devices to be Unobtrusive According to the Needs of Specific User Groups

4. Two Examples

4.1. H-IoT for Elderly Users

4.2. H-IoT for Children

5. Conclusions

Acknowledgments

Conflicts of Interest


© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mittelstadt, B. Designing the Health-related Internet of Things: Ethical Principles and Guidelines. Information 2017, 8, 77. https://doi.org/10.3390/info8030077