Raising the Ante of Communication: Evidence for Enhanced Gesture Use in High Stakes Situations

Abstract

Theorists of language have argued that co-speech hand gestures are an intentional part of social communication. The present study provides evidence for these claims by showing that speakers adjust their gesture use according to their perceived relevance to the audience. Participants were asked to read about items that were and were not useful in a wilderness survival scenario, under the pretense that they would then explain (on camera) what they learned to one of two different audiences. For one audience (a group of college students in a dormitory orientation activity), the stakes of successful communication were low; for the other audience (a group of students preparing for a rugged camping trip in the mountains), the stakes were high. In their explanations to the camera, participants in the high stakes condition produced three times as many representational gestures, and spent three times as much time gesturing, as participants in the low stakes condition. This study extends previous research by showing that the anticipated consequences of one's communication, namely the degree to which information may be useful to an intended recipient, influence speakers' use of gesture.

1. Introduction

McNeill [1,2] postulates that language consists of a combination of both speech and co-speech gestures, that neither on its own is sufficient to constitute our most basic form of communication, and that these two modalities form a tightly integrated system. This theoretical position assumes that co-speech gestures contain valuable communicative information and are not merely a by-product of speech production [3,4]. Indeed, the informative weight of co-speech gesture is broadly agreed upon and well supported in the literature [5-8].

Moreover, researchers have postulated that co-speech gestures are not just informative from the addressee's perspective, but that they are indeed produced by speakers with communicative intent [9-11], that is, with the intent to convey a meaningful message successfully to an addressee. According to this view, speakers employ the gestural modality in conjunction with speech in order to best meet the demands of a current communicative situation, determined largely by the addressee's informational needs. Co-speech gestures are seen as an integral element of language use in communicative interactions elicited and shaped by social context and the reciprocal nature of dialogue [11-13].

A plethora of empirical evidence exists which lends support to this claim. For example, some studies have shown that speakers adapt their gestural representations depending on the spatial location of their addressee [14,15]. In addition, a range of experimental studies have manipulated the visibility between speakers and addressees and found, on the whole, that speakers gesture less when they know their addressee is unable to see their gestures [16-18]; that gestures locating referents in space become less spatially defined and differentiated [19]; and that speech and gesture encode more redundant information than when the gestures are visible to an addressee [17]. A recent study has provided evidence that, in addition to visibility influencing gesture rate, dialogic involvement increases speakers' use of gesture further [20], thus corroborating the view that speakers' gesture use is influenced by both physical aspects of the social context as well as the interactive, reciprocal process of conversation itself.

Further, recent experimental studies have gone beyond manipulating the physical context or the degree of interaction between speakers and addressees. By manipulating what addressees know (or do not know), researchers have shown that co-speech gestures are recipient-designed to an even larger extent than previously assumed. Not only do speakers take into account aspects such as visibility and spatial location when gesturing, they also seem to take into consideration the addressee's thinking, and, in particular, the knowledge that they and their addressee mutually share (i.e., their common ground, CG, [13]). For example, Gerwing and Bavelas [21] asked participants to play with a set of toys and talk about those toys with either someone who played with the same set of toys (CG) or with a different set (No CG). Their results showed that the speakers' gestures were less informative, less complex and less precise when they talked to participants who shared common ground about the toys (and the functions the toys were designed to fulfill). Similarly, Holler and Stevens [22] found that mutually shared knowledge influenced speakers' gestural depiction of entities' size, with people producing smaller gestures for large objects when addressees did versus did not share knowledge about the true size of objects. Further, Parrill [23] found that speakers are less likely to encode “ground information” relating to a particular event (a cartoon scene of a cat melting down some stairs, with stairs representing the “ground” element of the event) when they share knowledge with the addressee about this event, at least in response to a question that also mentions the ground component (i.e., in combination with a manipulation of linguistic salience). 
Finally, Holler and Wilkin [24] found that common ground affects gesture rate; in the context of a narrative task, gesture rate increased in the common ground condition, that is, the gestures continued to play an important role, despite addressees being familiar with the contents of the narrated scenes. Instead of showing increased ellipsis of gesture when common ground existed compared to when it did not, this study hints at an increased use of gesture in the common ground context. One possible explanation for this difference in pattern is the nature of the task (Holler and Wilkin's study uses a narrative context, whereas the other studies cited above use referential communication tasks or short descriptions of individual scenes), but further research is required to shed more light on the manifold ways in which common ground appears to influence gesture use. Thus, although it is still not resolved exactly how common ground influences gesture production, a reasonable conclusion from these different studies is that gesture production is certainly sensitive to common ground, thereby providing empirical evidence for the link between communicative intent and gesture use.

Taken together, this is strong evidence that speakers do indeed design their gestures for their addressees. However, one aspect that has been neglected so far is an enquiry into how the anticipated consequences of communication may influence a speaker's use of gestures. In the studies mentioned above, participants were basically told the same thing in order to vary communicative motivation, for example, that the addressee would be asked to answer questions about the content of a narrative or a picture at the end of the experiment, that they would be asked to summarize the information provided by the speaker and so forth. This means that the consequences of the speakers' communicative effort were similar—in all cases, participants were instructed to provide an account good enough to allow an addressee to successfully complete a task at the end of the experiment. Because of the different conditions under which they communicated with their addressees (knowing or unknowing recipients, visible or invisible addressee and so forth), this overall aim led to different communication strategies. What we currently do not know is whether speakers' knowledge of the extent to which their communication matters to recipients also influences their gestural behavior. In other words, what happens if we keep the communicative conditions (such as visibility and common ground) constant, but make speakers aware that their communication will have different consequences for different addressees? This would involve speakers (gesturers) reflecting on what the recipients of the information will do with this information, and thus reflecting on the future goals and behaviors of these addressees. Manipulating the stakes of communication in this way should, in turn, have a profound impact on the speakers' communicative goals. As yet, the issue of whether recipient design—resulting from such reflective processes—affects gesture use remains unexplored.

Those theories assuming a direct link between language use and gesture (and, more importantly, gesture use, social context and communicative intent [9-13]) would predict that, as a consequence of such a manipulation, we should see clear effects on gesture production. In addition, one recent view proposed by Hostetter and Alibali [25], entitled the gesture as simulated action (GSA) hypothesis, makes explicit predictions in this respect. The GSA hypothesis delves into the origins of gesture, asserting that gesture production stems from a combination of both motor and perceptual simulations associated with embodied cognition, language, and mental imagery. Recent findings [26,27] provide compelling support for various aspects of the GSA. Specifically, the GSA hypothesis maintains that a speaker's tendency to gesture is based on three key factors: (1) how much simulated action underlies their present thinking, (2) the level of the individual's gesture threshold (i.e., the degree of activation required to translate these simulations into motor movements) and (3) whether the motor system is simultaneously engaged in the production of speech. The present study focuses on the second component of the GSA, the gesture threshold, and tests one of the explicit predictions made by Hostetter and Alibali [25] about it, namely that, “A speaker who is in a situation where the stakes of communicating are particularly high should gesture more than a speaker who is in a situation where the stakes are not as high” (p. 511). If the information being discussed is identical in both low and high stakes situations, the first and third factors of the GSA framework will be held constant. Changing the stakes of communication, therefore, must relate to the second factor, the gesture threshold. Presumably, raising a speaker's personal stakes in communication should lower the gesture threshold and increase gesture production, providing listeners with more useful information.

In the present experiment, we experimentally manipulated the stakes of communication by asking participants to talk to different types of audiences (with different “stakes” at hand), which should adjust their gesture threshold, and consequently, influence gesture production. We attempted to do this in a way that would not also leave a speaker visually exposed to their audience because any changes in gesture production could be the result of visible reactions from addressees. Thus, we removed addressees from the “interaction” by having participants speak into a video camera. By using a video camera (cf., [17]) our manipulation leaves speakers unable to adapt their discourse to the immediate responses of their audience. In this way, the task ensures that any effect on gesture production must be mediated by internally adjusting the gesture threshold (i.e., in this case, the speakers' perceived import of their communication to the respective audiences).

The opposing low and high stakes situations were generated experimentally by having two different groups of participants communicate the same information under different pretenses. Across both conditions, participants read about items that were highly useful for survival in a simulated military training scenario, and were then asked to explain (to a camera) details about those items to two different audiences. In the low stakes condition, participants were told that their taped explanations would be watched by students in first-year dormitory orientation groups who would be performing the activity purely to bond. In the high stakes condition, participants were told that their tapes would be watched by students in an outdoor education program who would be actively preparing for a winter camping excursion in the Adirondacks.

We expect that participants in the high stakes condition should draw proportionally more on the gestural modality than participants in the low stakes condition. The enhanced desire to effectively communicate information as a result of the high stakes nature of the situation should lower the speaker's gesture threshold. This, in turn, should increase the likelihood that simulation-related activation would spread to motor areas and surpass the threshold. Thus, we predict that the amount of gesturing will be significantly greater among participants whose stake in communicating information has been elevated compared to their counterparts in the relatively lower stakes condition. We measure this in two ways. Firstly, as a global index of gesture use, we use a novel measure of the overall time speakers spent gesturing in the two experimental conditions, with the prediction that speakers in the high stakes condition draw more on the gestural modality than speakers in the low stakes condition. Secondly, we also employ the more traditional measure of gesture rate. Specifically, based on the GSA framework, we predict that there will be a higher gesture rate in the high stakes condition, and this effect will be driven by gestures that convey semantic information closely related to the information contained in the speech that they accompany (representational gestures). However, the GSA hypothesis makes no claims regarding non-representational gestures (such as “beats”, which are quick, bi-phasic gestural movements tied to the rhythmical patterns of speech as well as discourse structure, [1]). Indeed, past research has revealed a dissociation between representational and non-representational gestures in connection with the manipulation of social context. Speakers produced significantly fewer representational gestures when they knew their gestures could not be seen by their interlocutor while beat gestures remained largely unaffected [16]. 
Thus, we predict that our manipulation should affect predominantly the production of representational gestures, but we have to remain tentative regarding the prediction for non-representational gestures (beats).

2. Results and Discussion

Total speech

Participants did not differ in the total number of words used across the two conditions, producing on average 463 (SD = 62) words in the low stakes condition and 477 (SD = 50) words in the high stakes condition, t(18) = 0.60, ns. In addition, there was no significant difference in the number of steps (12 possible) covered in the allotted 3-minute time period, with an average of 9.6 (SD = 1.95) steps for the low stakes group and 9.2 (SD = 1.31) for the high stakes group, t(18) = 0.60, ns.

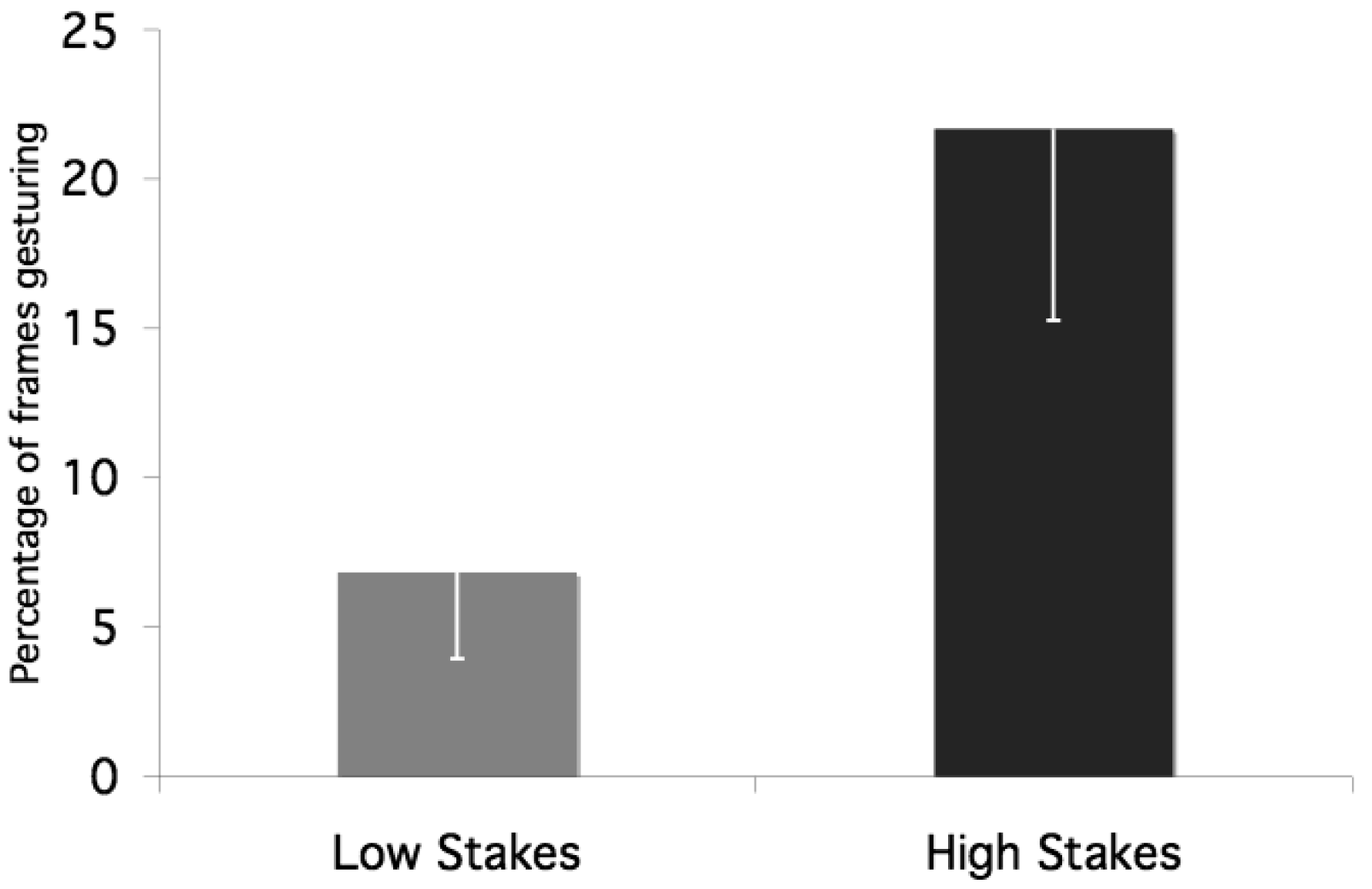

Time spent gesturing

The average percentage of time spent gesturing was more than three times lower in the low stakes condition (M = 6.85%, SD = 9.22) than in the high stakes condition (M = 21.87%, SD = 20.18), t(18) = 2.11, p = 0.025 (one-tailed) (Figure 1). This effect held even when removing participants who produced no gestures at all (two participants did not gesture in the low stakes condition and one in the high stakes condition), with the remaining participants spending significantly less time gesturing in the low stakes condition (M = 8.56%, SD = 9.62) than in the high stakes condition (M = 24.08%, SD = 19.83), t(15) = 2.01, p = 0.032 (one-tailed).
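
The group comparison reported above can be approximated from the summary statistics alone. The following is a minimal sketch, not the analysis script used in the study: it assumes equal groups of 10 participants (inferred from the 20 participants and 18 degrees of freedom, not stated explicitly) and a pooled-variance independent-samples t-test. The function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Independent-samples t statistic with pooled variance.

    Returns (t, df), where t is positive when group B has the larger mean.
    """
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_b - mean_a) / standard_error, df


# Percentage of time spent gesturing: low stakes vs. high stakes.
# Means and SDs are the reported values; n = 10 per group is an assumption.
t_time, df_time = pooled_t(6.85, 9.22, 10, 21.87, 20.18, 10)
print(f"t({df_time}) = {t_time:.2f}")
```

Run on the rounded values, the sketch gives a t(18) of about 2.14, close to the reported 2.11; the small gap may reflect rounding of the published means and standard deviations or group sizes that differ from the assumed 10 per condition.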

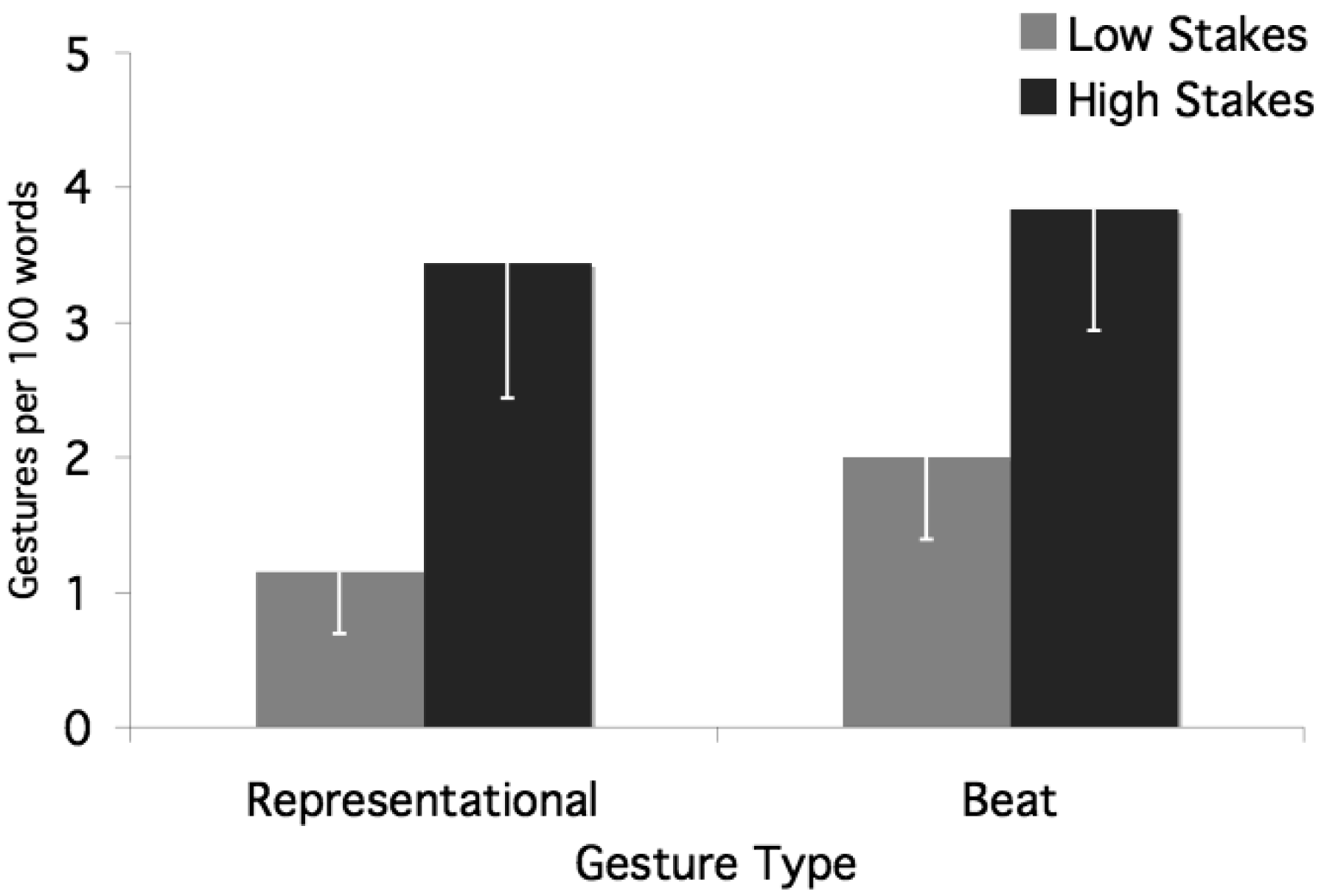

Gesture rate

First, to validate our exploratory measure (above), we correlated time spent gesturing with total gesture rate and found a significant correlation, r = 0.904, p < 0.001. When gesture rate was subdivided into the two gesture categories, time spent gesturing was significantly correlated with the rate of both representational gestures, r = 0.934, p < 0.001, and beat gestures, r = 0.697, p < 0.001.

For representational gestures, gesture rate (per 100 words) was almost three times lower in the low stakes condition (M = 1.15, SD = 1.45) than in the high stakes condition (M = 3.44, SD = 3.20), t(18) = 2.058, p = 0.027 (one-tailed). For beat gestures, there was only a marginal, non-significant difference between the low stakes (M = 2.00, SD = 1.93) and high stakes (M = 3.84, SD = 2.88) conditions, t(18) = 1.67, p = 0.111 (two-tailed) (Figure 2). We also ran a two-way ANOVA with Stakes (Low vs. High) and Gesture Type (Representational vs. Non-Representational) as variables, and the interaction was not significant, F(1, 18) = 0.195, ns. Thus, the different t-test results should be interpreted with caution.

The present results support our prediction that raising the stakes of communication increases gesture production, both in amount of time spent gesturing and in gesture rate. This result bolsters previous findings attesting to the link between communicative intent and co-speech gestures [16,17,21,22]. Whereas these previous studies have demonstrated that gesture use is affected by both the physical context in which communication takes place and the knowledge state of the addressee, the present study has shed new light on the connection between communicative intent and gesturing. We have shown that even the anticipated consequences of one's communication, namely the degree to which information may be useful to the intended recipient, influence speakers' gesture use. This finding is in line with theories postulating a strong connection between communicative intent and gesture use during language production [9,11-13].

More specifically, the present findings directly tap into a particular aspect of Hostetter and Alibali's [25] GSA framework, the “gesture threshold”. This purported threshold determines whether a gesture is (or is not) produced at any given moment. Based on the present findings, we assert that manipulating speakers' motivations to convey their message—by varying the degree to which they expect this communication to be relevant to their audience—exerts its effects on gesture production by adjusting the height of the gesture threshold. Importantly, the fact that our manipulation seemed to affect representational gesture more than beats (at least with the t-test comparisons) provides further support for the GSA framework. That is, gestures that involve some sort of motor and perceptual simulation appear to be most sensitive to the changing stakes of a communicative context.

Note that in the present design, we manipulated only the stakes of communication while keeping all other variables constant. In both conditions, the task required participants to briefly discuss the possible uses, advantages and disadvantages of the same range of survival items. Thus, the identical nature of the task between the low and high stakes conditions makes it unlikely that our findings are the result of differences in lexical retrieval [3,4], conceptual packaging [28,29] or degree of motor simulation [25,26]. It is also noteworthy that participants used the same number of words, and communicated generally the same spoken content (i.e., covered the same number of steps), across the two conditions. Although the present study used only rough indicators of speech quality and content, the findings are provocative because they suggest that changing the stakes of communication has a much more profound impact on the production of gesture than the production of speech.

One obvious limitation of the present study is that speakers were talking to an imagined audience that would later watch the speakers' video recordings (see [17] for a comparable design). However, this artificial situation has its merits: unlike a face-to-face interaction, participants could not use addressees' reactions to adjust their gesture use. In this way, we can conclude that enhanced gesture use in the high stakes condition was the result of imagined relevance to addressees, and not a direct reaction to their observed interest. Using this finding as a base, we plan to extend our paradigm to investigate speakers' gesture use in a comparable situation immersed in an actual interactive context. If anything, we expect that raising the stakes of communication in this more natural interactive context will enhance gesture use to an even greater extent.

We are also planning a follow-up to determine how other variables affect the gesture threshold. The GSA framework also asserts that personal beliefs about gesturing factor into the threshold's height. It would be interesting to determine if a personal belief that gesture is or is not communicatively informative could further modulate the effects reported here. For example, participants who believe that gesture lacks communicative value or that gesture is inappropriate in some circumstances may have such a high threshold that gesture use would be unlikely regardless of the stakes of communication.

3. Experimental Section

3.1. Participants

Participants were recruited through convenience sampling of an Introduction to Psychology course. A total of twenty college undergraduates (12 females and 8 males, aged 18–22) completed the experimental task. Participation in the study was entirely voluntary, and no compensation was given in exchange for participation.

3.2. Materials

The premise, directions and solution to a ranking puzzle game entitled, “Survival: A Simulation Game” (see Appendix) were explained to all participants across both conditions. The game is played in groups in which participants hypothetically have just crash-landed in the snow-covered Canadian wilderness. The pilot and co-pilot have perished, and although not educated in wilderness survival, the survivors have managed to salvage 12 items from the wreckage. The group's task is to rank these 12 items in their order of importance for their survival, considering uses, advantages, and disadvantages for each item. A group's performance in the game is evaluated by comparing their ranking to the ranking of a survival expert (a former instructor in survival training for the Reconnaissance School of the 101st Division of the United States Army).

3.3. Procedure

Participants were tested individually in the lab. After participants filled out a consent form, the experimenter explained the survival game's scenario and objective and told them that they would be given the correct answers about how to solve this survival puzzle. Following this, the experimenter presented a text of the “correct ranking” of the 12 items, and the participant had 10 minutes to review the rationale behind the ranking (see Appendix). At this point, participants knew only that they needed to understand the rankings well enough to explain them to someone else who had played the game.

After this 10-minute review, participants were told that they would be required to repeat and explain the correct list of the rankings to camera, as a sort of video answer key. Participants in the low stakes condition were told that their taped explanations would be shown to students in first-year dormitory orientation groups who would be performing the ranking activity purely as a bonding exercise. Participants in the high stakes condition were told that their taped explanations would be shown to students in an outdoor education program who would be performing the ranking activity not only to bond, but also in their efforts to prepare for an upcoming winter camping trip to the Adirondacks. In other words, participants in both conditions communicated the same information to their unseen audiences for the same purpose—as an answer key to the survival game—but only the high-stakes participants believed that their explanations would additionally be of practical use to their audience.

Participants' explanations of the correct rankings of the salvaged items were video-recorded. Following the completion of the recorded explanation, participants were fully debriefed in regard to the necessary experimental deception and the true nature of the research. The importance of this deception (that there was to be no actual audience) to the experimental protocol was emphasized, and all participants agreed not to reveal or discuss this deception with anyone else in the class.

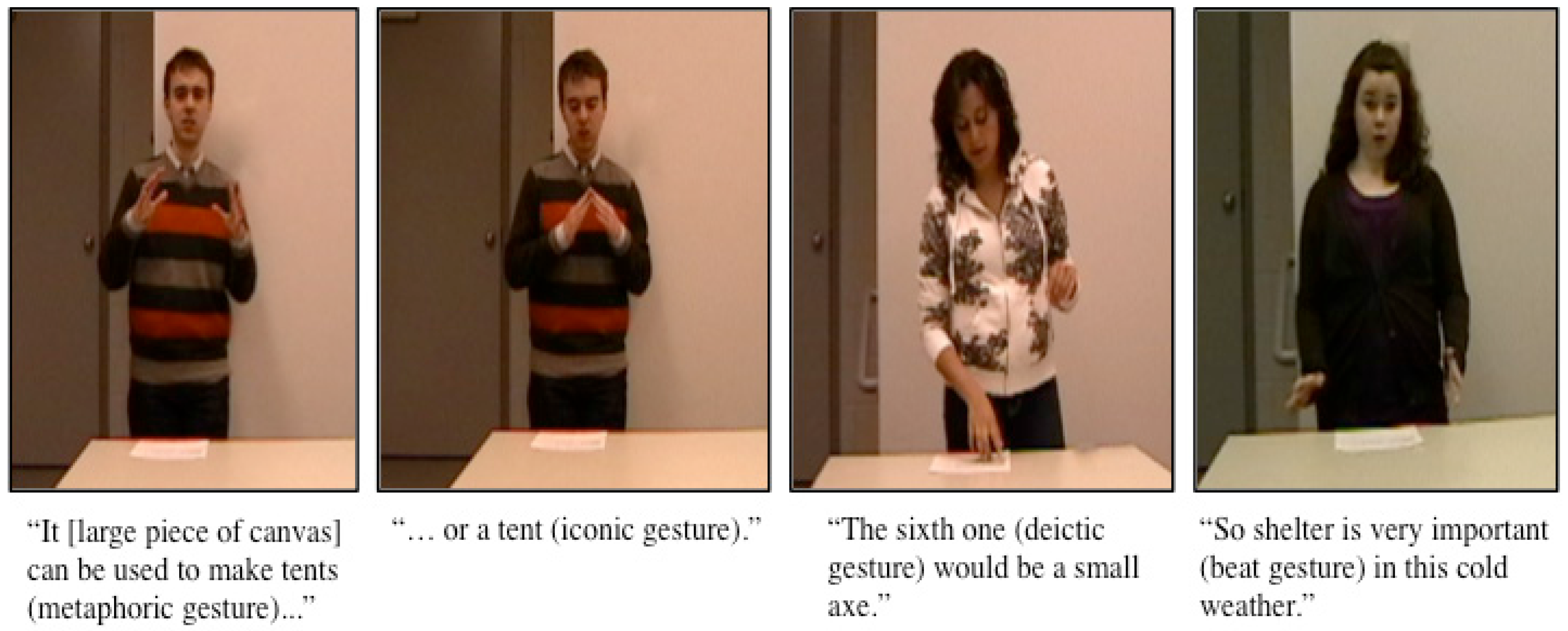

3.4. Coding

Participants were asked to provide their full explanation of the correct rankings in approximately 3 minutes, which was predetermined to be the maximum length of coded video per participant. This time limit was imposed to discourage participants from spending too much time on the explanations. We hoped that this would also roughly equate the amount of time participants spent speaking, so that we could determine whether our manipulation impacted gesture production independent of speech production. Movements coded as co-speech gestures for the purpose of the present analysis included two categories of gesture. The first category was “representational gesture” and included deictic gestures (points), iconic gestures (depicting concrete images or actions), and metaphoric gestures (imagistically adding concreteness to the abstract). The second category was “beat gesture”, comprising short, bi-phasic movements that serve the purpose of emphasis and marking discourse structure (McNeill, 2005). See Figure 3 for screenshots of each gesture type. Other movements such as self- or object-adapters (those often associated with nervousness such as wringing one's hands, hair-twirling and self-grooming) were not counted as relevant gestures.

For the present study, we operationalized speakers' use of gesture in two ways. First, as with previous studies, we determined gesture rate (number of gestures divided by total words, expressed per 100 words). Reliability was based on well-established criteria for differentiating representational and non-representational gestures (e.g., [16]). For the small percentage of ambiguous gestures, a second coder was consulted, and a code was assigned once both coders agreed on it.
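
As an illustration of the gesture rate measure, the following minimal sketch computes gestures per 100 words separately for each gesture category. The function name `gesture_rate_per_100_words` and the counts are hypothetical and are not data from the study.

```python
def gesture_rate_per_100_words(n_gestures, n_words):
    """Return the number of gestures produced per 100 spoken words."""
    if n_words <= 0:
        raise ValueError("n_words must be positive")
    return 100.0 * n_gestures / n_words


# Hypothetical counts for a single speaker (illustration only).
total_words = 470
representational_rate = gesture_rate_per_100_words(16, total_words)
beat_rate = gesture_rate_per_100_words(12, total_words)
print(f"representational: {representational_rate:.2f} per 100 words")
print(f"beat: {beat_rate:.2f} per 100 words")
```

Normalizing by word count keeps the measure comparable across speakers who say more or less within the same time limit.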

For the second dependent variable, we introduced a new measure of gesture production: the amount of time that participants spent gesturing. To create this measure, we calculated an overall measure of gesture duration (rather than the average duration of individual gestures). Each second of video consisted of 30 frames and each frame lasted for approximately 33 ms. Participants' usage of gesture was scored in terms of the number of frames spent making co-speech gestures, and this was divided by the total number of frames in the three-minute segment (5400 total frames). The resulting measure was thus the percentage of time a participant spent gesturing. Because this is a new and exploratory measure of gesture production, we had two raters independently code the videos. Agreement on all 20 participants was quite high, with a Pearson product-moment correlation of 0.957. Averaging the two raters' scores for each participant created the final percentages used in our analyses.
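
The steps of this measure can be sketched as follows. This is a minimal illustration under stated assumptions rather than the scoring procedure actually used: the frame counts are hypothetical, and the helper names `percent_time_gesturing` and `pearson_r` are introduced here for the example only.

```python
import math

FPS = 30                # frames per second of video, as stated above
SEGMENT_SECONDS = 180   # three-minute coded segment
TOTAL_FRAMES = FPS * SEGMENT_SECONDS  # 5400 frames


def percent_time_gesturing(gesture_frames, total_frames=TOTAL_FRAMES):
    """Percentage of the coded segment spent producing co-speech gestures."""
    return 100.0 * gesture_frames / total_frames


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical gesture-frame counts from two raters for four speakers.
rater_1_frames = [0, 410, 1180, 2300]
rater_2_frames = [0, 450, 1100, 2390]

rater_1_pct = [percent_time_gesturing(f) for f in rater_1_frames]
rater_2_pct = [percent_time_gesturing(f) for f in rater_2_frames]

# Inter-rater agreement, then the per-speaker average used as the final score.
agreement = pearson_r(rater_1_pct, rater_2_pct)
final_pct = [(a + b) / 2 for a, b in zip(rater_1_pct, rater_2_pct)]
print(f"inter-rater r = {agreement:.3f}")
print([round(p, 2) for p in final_pct])
```

Because the measure sums frames over the whole segment, it captures overall reliance on the gestural modality rather than the duration of any individual gesture.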

4. Conclusions

Raising the ante of communication enhances the production of gesture, particularly representational gestures. This finding provides empirical support for social-interactional theories of gesture use [11-13], in addition to validating a specific prediction of the GSA framework [25]. In general, the present study adds to the growing body of work demonstrating that language use—which includes both words and gestures—is best understood by carefully considering the shifting social contexts that guide and influence what people attempt to accomplish with language.

Appendix

SURVIVAL: A Simulation Game

You and your companions have just survived the crash of a small plane. Both the pilot and co-pilot were killed in the crash. It is mid-January, and you are in Northern Canada. The daily temperature is 25 below zero, and the nighttime temperature is 40 below zero. There is snow on the ground, and the countryside is wooded with several creeks criss-crossing the area. The nearest town is 20 miles away. You are all dressed in city clothes appropriate for a business meeting. Your group of survivors managed to salvage the following items:

A ball of steel wool

A small ax

A loaded 0.45-caliber pistol

Can of Crisco shortening

Newspapers (one per person)

Cigarette lighter (without fluid)

Extra shirt and pants for each survivor

20 × 20 ft. piece of heavy-duty canvas

A sectional air map made of plastic

One quart of 100-proof whiskey

A compass

Family-size chocolate bars (one per person)

Your task as a group is to list the above 12 items in order of importance for your survival. List the uses for each. You MUST come to agreement as a group.

Explanation

Mid-January is the coldest time of year in Northern Canada. The first problem the survivors face is the preservation of body heat and the protection against its loss. This problem can be solved by building a fire, minimizing movement and exertion, using as much insulation as possible, and constructing a shelter.

The participants have just crash-landed. Many individuals tend to overlook the enormous shock reaction this has on the human body, and the deaths of the pilot and co-pilot increase the shock. Decision-making under such circumstances is extremely difficult. Such a situation requires a strong emphasis on the use of reasoning for making decisions and for reducing fear and panic. Shock would be shown in the survivors by feelings of helplessness, loneliness, hopelessness, and fear. These feelings have brought about more fatalities than perhaps any other cause in survival situations. Certainly the state of shock means the movement of the survivors should be at a minimum, and that an attempt to calm them should be made.

Before taking off, a pilot has to file a flight plan that contains vital information such as the course, speed, estimated time of arrival, type of aircraft, and number of passengers. Search-and-rescue operations begin shortly after the failure of a plane to appear at its destination at the estimated time of arrival.

The 20 miles to the nearest town is a long walk under even ideal conditions, particularly if one is not used to walking such distances. In this situation, the walk is even more difficult due to shock, snow, dress, and water barriers. It would mean almost certain death from freezing and exhaustion. At temperatures of minus 25 to minus 40, the loss of body heat through exertion is a very serious matter.

Once the survivors have found ways to keep warm, their next task is to attract the attention of search planes. Thus, all the items the group has salvaged must be assessed for their value in signaling the group's whereabouts.

The ranking of the survivors' items was made by Mark Wanvig, a former instructor in survival training for the Reconnaissance School of the 101st Division of the U.S. Army. Mr. Wanvig currently conducts wilderness survival training programs in the Minneapolis, Minnesota area. This survival simulation game is used in military training classrooms.

Rankings (from best to worst)

1. Cigarette Lighter (without fluid)

The gravest danger facing the group is exposure to cold. The greatest need is for a source of warmth and the second greatest need is for signaling devices. This makes building a fire the first order of business. Without matches, something is needed to produce sparks, and even without fluid, a cigarette lighter can do that.

2. Ball of Steel Wool

To make a fire, the survivors need a means of catching the sparks made by the cigarette lighter. This is the best substance for catching a spark and supporting a flame, even if the steel wool is a little wet.

3. Extra Shirt and Pants for Each Survivor

Besides adding warmth to the body, clothes can also be used for shelter, signaling, bedding, bandages, string (when unraveled), and fuel for the fire.

4. Can of Crisco Shortening

This has many uses. A mirror-like signaling device can be made from the lid. Once polished with steel wool, the lid will reflect sunlight and generate 5 to 7 million candlepower. This is bright enough to be seen beyond the horizon. While this could be limited somewhat by the trees, a member of the group could climb a tree and use the mirrored lid to signal search planes. If they had no other means of signaling than this, they would have a better than 80% chance of being rescued within the first day.

There are other uses for this item. It can be rubbed on exposed skin for protection against the cold. When melted into oil, the shortening is helpful as fuel. When soaked into a piece of cloth, melted shortening will act like a candle. The empty can is useful in melting snow for drinking water. It is much safer to drink warmed water than to eat snow, since warm water will help retain body heat. Water is important because dehydration will affect decision-making. The can is also useful as a cup.

5. 20 × 20-foot Piece of Canvas

The cold makes shelter necessary, and canvas would protect against wind and snow (canvas is used in making tents). Spread on a frame made of trees, it could be used as a tent or a wind screen. It might also be used as a ground cover to keep the survivors dry. Its shape, when contrasted with the surrounding terrain, makes it a signaling device.

6. Small Ax

Survivors need a constant supply of wood in order to maintain the fire. The ax could be used for this as well as for clearing a sheltered campsite, cutting tree branches for ground insulation, and constructing a frame for the canvas tent.

7. Family Size Chocolate Bars (one per person)

Chocolate will provide some food energy. Since it contains mostly carbohydrates, it supplies the energy without making digestive demands on the body.

8. Newspapers (one per person)

These are useful in starting a fire. They can also be used as insulation under clothing when rolled up and placed around a person's arms and legs. A newspaper can also be used as a verbal signaling device when rolled into a megaphone shape. It could also provide reading material for recreation.

9. Loaded .45-caliber Pistol

The pistol provides a sound-signaling device. (The international distress signal is 3 shots fired in rapid succession.) There have been numerous cases of survivors going undetected because they were too weak to make a loud enough noise to attract attention. The butt of the pistol could be used as a hammer, and the powder from the shells will assist in fire building. By placing a small bit of cloth in a cartridge emptied of its bullet, one can start a fire by firing the gun at dry wood on the ground. The pistol also has some serious disadvantages. Anger, frustration, impatience, irritability, and lapses of rationality may increase as the group awaits rescue. The availability of a lethal weapon is a danger to the group under these conditions. Although a pistol could be used in hunting, it would take an expert marksman to kill an animal with it. Then the animal would have to be transported to the crash site, which could prove difficult or even impossible depending on its size.

10. Quart of 100 Proof Whiskey

The only uses of whiskey are as an aid in fire building and as a fuel for a torch (made by soaking a piece of clothing in the whiskey and attaching it to a tree branch). The empty bottle could be used for storing water. The danger of whiskey is that someone might drink it, thinking it would bring warmth. Alcohol takes on the temperature it is exposed to, and a drink of minus 30 degrees Fahrenheit whiskey would freeze a person's esophagus and stomach. Alcohol also dilates the blood vessels in the skin, so chilled blood is carried back to the heart, causing a rapid loss of body heat. Thus, an intoxicated person is more likely to get hypothermia than a sober person is.

11. Compass

Because a compass might encourage someone to try to walk to the nearest town, it is a dangerous item. Its only redeeming feature is that it could be used as a reflector of sunlight (due to its glass top).

12. Sectional Air Map Made of Plastic

This is also among the least desirable of the items because it will encourage individuals to try to walk to the nearest town. Its only useful feature is as a ground cover to keep someone dry.

References

1. McNeill, D. Hand and Mind; University of Chicago Press: Chicago, IL, USA, 1992.

2. McNeill, D. Gesture and Thought; University of Chicago Press: Chicago, IL, USA, 2005.

3. Butterworth, B.; Hadar, U. Gesture, speech, and computational stages: A reply to McNeill. Psychol. Rev. 1989, 96, 168–174.

4. Krauss, R.M.; Chen, Y.; Gottesman, R. Lexical Gestures and Lexical Access: A Process Model. In Language and Gesture; McNeill, D., Ed.; Cambridge University Press: Cambridge, UK, 2000; pp. 261–283.

5. Graham, J.A.; Argyle, M. A cross-cultural study of the communication of extra-verbal meaning by gestures. Int. J. Psychol. 1975, 10, 57–67.

6. Kelly, S.D.; Church, R.B. A comparison between children's and adults' ability to detect conceptual information conveyed through representational gestures. Child Dev. 1998, 69, 85–93.

7. Holler, J.; Shovelton, H.; Beattie, G. Do iconic gestures really contribute to the semantic information communicated in face-to-face interaction? J. Nonverbal Behav. 2009, 33, 73–88.

8. Hostetter, A.B. When do gestures communicate? A meta-analysis. Psychol. Bull. 2011, 137, 297–315.

9. Kendon, A. Gesture and Speech: Two Aspects of the Process of Utterance. In Relationship of Verbal and Nonverbal Communication; Key, M.R., Ed.; Mouton De Gruyter: The Hague, The Netherlands, 1980; pp. 207–227.

10. Kendon, A. Some Uses of Gesture. In Perspectives on Silence; Tannen, D., Saville-Troike, M., Eds.; Ablex: Norwood, NJ, USA, 1985; pp. 215–234.

11. Kendon, A. Gesture: Visible Action as Utterance; Cambridge University Press: Cambridge, UK, 2004.

12. Bavelas, J.B.; Chovil, N. Visible acts of meaning: An integrated message model of language in face-to-face dialogue. J. Lang. Soc. Psychol. 2000, 19, 163–194.

13. Clark, H.H. Using Language; Cambridge University Press: Cambridge, UK, 1996.

14. Furuyama, N. Gestural Interaction between the Instructor and the Learner in Origami Instruction. In Language and Gesture; McNeill, D., Ed.; Cambridge University Press: Cambridge, UK, 2000; pp. 99–117.

15. Özyürek, A. Do speakers design their cospeech gestures for their addressees? The effects of addressee location on representational gestures. J. Mem. Lang. 2002, 46, 688–704.

16. Alibali, M.W.; Heath, D.C.; Myers, H.J. Effects of visibility between speaker and listener on gesture production: Some gestures are meant to be seen. J. Mem. Lang. 2001, 44, 1–20.

17. Bavelas, J.B.; Kenwood, C.; Johnson, T.; Phillips, B. An experimental study of when and how speakers use gestures to communicate. Gesture 2002, 2, 1–18.

18. Mol, L.; Krahmer, E.; Maes, A.; Swerts, M. The communicative import of gestures: Evidence from a comparative analysis of human-human and human-machine interactions. Gesture 2009, 9, 97–126.

19. Gullberg, M. Handling discourse: Gestures, reference tracking, and communication strategies in early L2. Lang. Learn. 2006, 56, 155–196.

20. Bavelas, J.B.; Gerwing, J.; Sutton, C.; Prevost, D. Gesturing on the telephone: Independent effects of dialogue and visibility. J. Mem. Lang. 2008, 58, 495–520.

21. Gerwing, J.; Bavelas, J. Linguistic influences on gesture's form. Gesture 2004, 4, 157–195.

22. Holler, J.; Stevens, R. The effect of common ground on how speakers use gesture and speech to represent size information. J. Lang. Soc. Psychol. 2007, 26, 4–27.

23. Parrill, F. The Hands Are Part of the Package: Gesture, Common Ground, and Information Packaging. In Empirical and Experimental Methods in Cognitive/Functional Research; Newman, J., Rice, S., Eds.; CSLI: Stanford, CA, USA, 2010; pp. 285–302.

24. Holler, J.; Wilkin, K. Communicating common ground: How mutually shared knowledge influences speech and gesture in a narrative task. Lang. Cogn. Process. 2009, 24, 267–289.

25. Hostetter, A.B.; Alibali, M.W. Visible embodiment: Gestures as simulated action. Psychon. Bull. Rev. 2008, 15, 495–514.

26. Hostetter, A.B.; Alibali, M.W. Language, gesture, action! A test of the gesture as simulated action framework. J. Mem. Lang. 2010, 63, 245–257.

27. Sassenberg, U.; van der Meer, E. Do we really gesture more when it is more difficult? Cogn. Sci. 2010, 34, 643–664.

28. Hostetter, A.B.; Alibali, M.W.; Kita, S. I see it in my hands' eye: Representational gestures reflect conceptual demands. Lang. Cogn. Process. 2007, 22, 313–336.

29. Kita, S.; Davies, T.S. Competing conceptual representations trigger co-speech representational gestures. Lang. Cogn. Process. 2009, 24, 761–775.

© 2011 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Kelly, S.; Byrne, K.; Holler, J. Raising the Ante of Communication: Evidence for Enhanced Gesture Use in High Stakes Situations. Information 2011, 2, 579-593. https://doi.org/10.3390/info2040579
