Abstract

Parkinson’s disease (PD) is a progressive neurodegenerative disorder that impairs motor function, often causing tremors and difficulty with movement control. A promising diagnostic method involves analyzing hand-drawn patterns, such as spirals and waves, which show characteristic distortions in individuals with PD. This study compares three computational approaches for classifying individuals as Parkinsonian or healthy based on drawing-derived features: (1) Large Language Models (LLMs), (2) traditional machine learning (ML) algorithms, and (3) a fuzzy ontology-based method using fuzzy sets and Fuzzy-OWL2. Each method offers unique strengths: LLMs leverage pre-trained knowledge for subtle pattern detection, ML algorithms excel in feature extraction and predictive accuracy, and fuzzy ontologies provide interpretable, logic-based reasoning under uncertainty. Using three structured handwriting datasets of varying complexity, we assessed performance in terms of accuracy, interpretability, and generalization. Among the approaches, the fuzzy ontology-based method showed the strongest performance on complex tasks, achieving a high F1-score, while ML models demonstrated strong generalization and LLMs offered a reliable, interpretable baseline. These findings suggest that combining symbolic and statistical AI may improve drawing-based PD diagnosis.

1. Introduction

PD is a progressive neurodegenerative disorder that primarily affects movement. It is characterized by the degeneration of dopaminergic neurons in the substantia nigra region of the brain, leading to motor symptoms such as tremors, bradykinesia (slowness of movement), rigidity, and postural instability. In addition to motor impairments, many patients also experience non-motor symptoms including cognitive decline, sleep disturbances, and mood disorders. The exact cause of Parkinson’s remains unclear, though genetic and environmental factors are believed to contribute to its development [1]. PD reveals paradoxical effects on creativity. While motor impairments and cognitive decline are well-known features, a subset of patients demonstrates either newly emergent or significantly increased artistic behavior, especially in the visual arts. In some cases, artistic production begins for the first time in late adulthood, often after the initiation of dopaminergic therapy [2,3]. This “creative drive” is not simply compulsive behavior but a structured and meaningful expression of visual creativity, often described as fulfilling and pleasurable [2,4]. The phenomenon is frequently linked to dopamine agonists, which stimulate the mesolimbic pathways involved in motivation and reward [2,3].

Neurologically, increased artistic behavior is believed to result from disinhibition of the right hemisphere, particularly its visuospatial processing areas, due to dopamine stimulation or unilateral functional imbalance [4,5]. This aligns with the concept of paradoxical functional facilitation (PFF), where injury or degeneration in one area (e.g., frontal lobes or left hemisphere) may “release” latent capacities in another (e.g., visuospatial right hemisphere) [6,7]. Functional imaging studies have supported this model. Refs. [3,5] described a case where a patient transitioned from abstract to hyperrealistic painting even before motor symptoms appeared, suggesting early visual-cognitive alterations.

Artistic themes in PD patients tend to shift toward vivid, detailed, and often highly structured forms, even as motor skills decline. Remarkably, tremors and bradykinesia often disappear during painting sessions [3,8,9,10,11,12]. fMRI studies have shown that skilled artists, compared to novices, activate higher-order frontal areas, suggesting that PD patients who develop or maintain creativity may rely on compensatory executive or associative pathways [13].

Historical and iconographic examples suggest that Parkinsonian features may have been represented artistically long before the clinical definition of the disease. These depictions include self-portraits by Albrecht Dürer [14] and expressive dystonic imagery in the works of Pieter Bruegel [15]. Such visual traditions highlight how neurological conditions have been internalized and reflected through art across centuries.

Frontotemporal dementia (FTD) and Alzheimer’s disease (AD) also illustrate distinct relationships between neurodegeneration and creativity. While FTD may trigger emergent visual expression through right-hemisphere release, AD typically leads to progressive loss of artistic capacity. Other syndromes, such as corticobasal degeneration, can distort visual output in ways that mirror patients’ cognitive and motor decline. Collectively, these examples highlight how neurodegenerative disorders influence visuospatial and artistic behaviors, but they also emphasize the sensitivity of fine motor and visual tasks as windows into disease progression.

Importantly, tasks such as digitized handwriting, spiral drawing, and meander tracing capture similar visuospatial and motor control mechanisms in a structured, quantifiable way. With the advent of digitizing tablets and machine learning, these tasks have become valuable tools for detecting PD, enabling precise analysis of motor patterns that extend beyond clinical observation [5,6,7,16,17].

This paper presents a comprehensive comparative study of three distinct approaches to PD detection using hand-drawn pattern analysis:

- Large Language Models (LLMs): ChatGPT is used for classifying PD and healthy individuals based on structured handwriting features.

- Fuzzy Ontology-Based Reasoning: Implemented in Protégé using OWL and fuzzy logic, this method supports human-readable, rule-based decision-making.

- Traditional Supervised ML Models: Including Random Forest classifiers trained on dynamic and kinematic handwriting signal features.

Our study evaluates each approach across three custom-designed datasets with increasing real-world complexity: (1) a clean set of statistical handwriting features, (2) a fuzzy-annotated dataset incorporating expert linguistic rules, and (3) real-time captured spiral and meander drawings collected via digitizing tablets [18,19,20].

This work is driven by the need to bridge the gap between predictive accuracy and interpretability in clinical AI systems [20,21]. While LLMs like ChatGPT represent a novel class of general-purpose inference tools, their reliability in structured classification settings for clinical data remains underexplored. In contrast, fuzzy ontology systems offer transparent decision-making frameworks but often lack robustness and generalization. Traditional ML models sit between these extremes, balancing performance and interpretability through optimized data-driven learning. While they often achieve high accuracy on structured datasets, their transparency and adaptability in uncertain or noisy real-world environments may be limited [21].

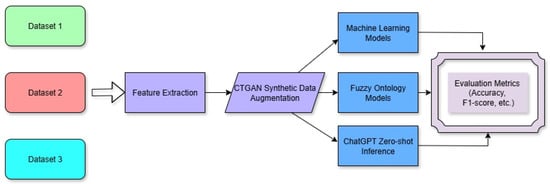

Our key contributions include a unified evaluation framework (Figure 1) for these three reasoning paradigms applied to PD handwriting data; the design of three tiered datasets representing escalating complexity and realism; and a quantitative comparison using standard metrics such as F1-score, accuracy, and confusion matrices.

Figure 1.

Schematic representation of the experimental pipeline. Handwriting datasets (spiral, wave, and statistical) were processed to extract signal and geometric features. CTGAN was applied for data augmentation. Three classification approaches—traditional ML, fuzzy ontology-based reasoning, and large language models (ChatGPT)—were independently applied to evaluate diagnostic performance using the same test set.

We hypothesize that:

- Supervised machine learning (ML) models are expected to achieve the highest classification accuracy, due to their ability to optimally learn from structured and clean feature sets.

- The fuzzy ontology-based system is expected to provide the greatest interpretability and robustness, particularly in scenarios involving uncertainty or incomplete information, thanks to its use of expert-defined symbolic rules.

- The large language model (LLM) is anticipated to serve as a competitive baseline, effectively bridging human-readable reasoning with numeric feature analysis, and offering moderate performance with high usability.

By testing these hypotheses across datasets of increasing complexity, this study aims to evaluate the accuracy, explainability, and adaptability of PD diagnosis systems.

In summary, this paper presents a comparative evaluation of three classification paradigms, traditional ML, fuzzy ontologies, and LLMs, for detecting PD from handwriting data. After introducing relevant background (Section 2), we describe the related work (Section 3), followed by the methodology and model implementation (Section 4). Section 5 presents experimental results, and Section 6 is dedicated to discussion and limitations, while Section 7 concludes with insights and future directions.

2. Background Knowledge

2.1. Parkinson’s Disease and Artistic Behavior

PD is a chronic and progressive neurodegenerative disorder characterized by motor symptoms such as tremor, rigidity, bradykinesia, and postural instability, largely due to degeneration of dopaminergic neurons in the substantia nigra [22]. Non-motor symptoms may include cognitive impairment, mood disturbances, and sleep dysfunction, complicating diagnosis and management.

Interestingly, a subset of PD patients demonstrates increased or newly emergent artistic expression, a paradoxical phenomenon often linked to dopaminergic therapy and right-hemisphere disinhibition [23]. Importantly, related art-based tasks such as spiral drawing and wave tracing capture fine motor coordination and visuospatial control, making them clinically relevant for evaluating subtle motor abnormalities. These structured handwriting tasks form the basis of the experimental datasets analyzed in this study.

2.2. Feature Extraction from Handwriting and Drawing Tasks

Handwriting and sketch-based tasks are widely used in PD research due to their ability to reveal subtle motor abnormalities. From these signals, several features are extracted to quantify movement quality and structure [24]. Key metrics include:

- Entropy: Measures complexity or randomness of motion.

- Smoothness: Reflects the fluidity of movement; lower values suggest tremor or rigidity.

- Kurtosis: Captures the sharpness or flatness of motion peaks.

- Wave Energy: Represents the total signal energy during a stroke.

- Average Pressure: Measures the pen tip’s applied force.

- Average Speed: Reflects overall drawing velocity.

- Segment Length: Tracks the length of individual stroke segments.

These features serve as input to machine learning models and fuzzy logic systems for PD classification.
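As a rough illustration of how descriptors of this kind might be computed from a one-dimensional drawing signal, the sketch below uses NumPy and SciPy. The exact feature definitions used to build the datasets are not given in this section, so the histogram-based entropy, the second-difference smoothness proxy, the toy sine-wave traces, and the function name `signal_features` are all assumptions made for illustration.

```python
import numpy as np
from scipy.stats import kurtosis


def signal_features(signal, n_bins=16):
    """Compute illustrative descriptors for a 1-D drawing signal.

    All definitions here are assumptions chosen for illustration; they are
    not necessarily the ones used to build the study's datasets.
    """
    x = np.asarray(signal, dtype=float)

    # Shannon entropy (in bits) of the amplitude histogram.
    counts, _ = np.histogram(x, bins=n_bins)
    p = counts[counts > 0] / counts.sum()
    entropy = float(-np.sum(p * np.log2(p)))

    # Smoothness proxy: negative mean absolute second difference,
    # so that lower values correspond to a rougher trace.
    smoothness = float(-np.mean(np.abs(np.diff(x, n=2))))

    return {
        "entropy": entropy,
        "smoothness": smoothness,
        "kurtosis": float(kurtosis(x)),        # excess (Fisher) kurtosis
        "wave_energy": float(np.sum(x ** 2)),  # total signal energy
    }


# Toy traces: a clean wave and the same wave with a fast, tremor-like ripple.
t = np.linspace(0.0, 1.0, 500)
smooth_trace = np.sin(2 * np.pi * 2 * t)
tremor_trace = smooth_trace + 0.2 * np.sin(2 * np.pi * 40 * t)

smooth_feats = signal_features(smooth_trace)
tremor_feats = signal_features(tremor_trace)
```

Under these assumed definitions, the trace with the added ripple receives a lower smoothness value than the clean trace, which matches the interpretation given in the list above.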

2.3. Supervised Machine Learning

Supervised machine learning (ML) is a fundamental approach in PD diagnosis, using labeled datasets to train predictive models that classify individuals based on extracted features [25]. Popular classifiers include support vector machines, decision trees, and ensemble methods such as Random Forest.

Random Forest, introduced by Breiman [26], is an ensemble method that builds multiple decision trees and averages their outputs to improve generalization and reduce overfitting. It is well-suited to biomedical applications due to its ability to handle high-dimensional and non-linear data. In this study, the Random Forest implementation from the scikit-learn library was used across all datasets [27].

2.4. Large Language Models (LLMs)

Large Language Models (LLMs), such as GPT-based models like ChatGPT, are transformer-based deep learning architectures trained on vast textual datasets. In healthcare, they have been applied to tasks such as clinical summarization, question answering, patient support, and research assistance [28]. While LLMs offer powerful generalization and human-like interaction, they also have limitations such as hallucination of facts, reduced accuracy in domain-specific tasks, and a lack of native support for structured signal data unless fine-tuned.

In this study, ChatGPT was used to classify PD samples through rule-based logic derived from structured handwriting features, offering a human-readable reasoning baseline.

2.5. Fuzzy Ontologies and Semantic Reasoning in Protégé

Fuzzy ontologies are knowledge representation tools that incorporate vagueness and partial truth values into logical reasoning systems. Unlike classical ontologies that rely on binary logic, fuzzy ontologies model gradations of membership—making them ideal for representing medical data with overlapping symptoms or uncertain thresholds [29].

In this work, fuzzy ontologies were developed using FuzzyOWL2 and deployed in the Protégé environment, with reasoning supported by the HermiT reasoner [30]. Semantic categories such as ParkinsonianSeverityHigh or PressureLow were defined using fuzzy rules and triangular membership functions. This approach allows for interpretable, rule-based classification that captures uncertainty in patient behavior and motor symptoms.

2.6. Evaluation Metrics and Statistical Testing

To assess both data distribution and model performance, several statistical and evaluation techniques were employed.

The Shapiro–Wilk test was used to evaluate the normality of feature distributions. It is suitable for small to medium-sized datasets and helps determine whether Pearson or Spearman correlation should be used in later analyses.

Welch’s t-test, a robust variant of the independent-samples t-test that does not assume equal variances, was applied to compare feature means between the synthetic training data and the real-world test data. A p-value below 0.05 indicates a statistically significant difference in means; this criterion was used to assess the realism of the synthetic data prior to model deployment.
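The two tests described above can be sketched with SciPy as follows. This is a minimal example on randomly generated stand-in values, not on the study's data; the variable names, sample sizes, and the 0.05 cut-off used to choose between Pearson and Spearman correlation are assumptions for illustration.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
# Stand-ins for one feature: synthetic training values and real test values.
synthetic = rng.normal(loc=0.50, scale=0.10, size=200)
real = rng.normal(loc=0.50, scale=0.12, size=30)

# Shapiro-Wilk: a small p-value is evidence against normality.
_, p_normal = stats.shapiro(real)
correlation_method = "pearson" if p_normal > 0.05 else "spearman"

# Welch's t-test: equal_var=False drops the equal-variance assumption.
t_stat, p_welch = stats.ttest_ind(synthetic, real, equal_var=False)
means_differ = bool(p_welch < 0.05)
```

In a per-feature analysis, the same two calls would simply be repeated for each feature column.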

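Model performance was evaluated using the F1-score (Equation (1)), which balances precision and recall and is especially informative for imbalanced datasets. It is defined as:

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN} \tag{1}$$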

In addition, confusion matrices were used to visualize classification performance by capturing true positives (TPs), false negatives (FNs), false positives (FPs), and true negatives (TNs) [31].
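For concreteness, the F1-score can be computed directly from confusion-matrix counts. The helper name `f1_from_counts` and the example counts below are hypothetical and are not results from this study.

```python
def f1_from_counts(tp, fp, fn):
    """F1-score from true positives, false positives, and false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: precision = recall = 12 / 15 = 0.8, so F1 = 0.8.
score = f1_from_counts(tp=12, fp=3, fn=3)
```

True negatives do not enter the formula, which is why the F1-score is less affected than accuracy when one class dominates.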

3. Related Work

3.1. PD and Artistic Behavior

Research has revealed that some individuals with PD exhibit changes in artistic abilities, often marked by an increase in creativity or artistic output after the onset of the disease or during dopamine replacement therapy. Ref. [32] discusses numerous PD patients who developed newfound artistic tendencies, attributing these changes to dopaminergic stimulation and the modulation of the mesolimbic system, which is associated with reward and creativity. Similarly, Ref. [33] reports increased artistic production in PD patients undergoing dopamine therapy, supporting the link between neurochemical modulation and visual creativity.

Refs. [34,35] explore how PD affects visual perception and aesthetic evaluation. Altered sensory processing, such as changes in color perception and spatial awareness, may lead patients to explore new artistic styles or reinterpret traditional motifs. This phenomenon is further documented by [5,36], who describe cases of de novo art creation in patients with advanced PD, suggesting that both the progression of disease and its treatment play integral roles.

Refs. [37,38] propose ontology-based systems to model the complex physiological and cognitive states in PD, emphasizing the integration of biomarkers, neuroimaging, and patient behavior for early diagnosis and monitoring. Such models could eventually include creative output as a diagnostic feature or therapeutic marker.

3.2. Art and Other Neurodegenerative Diseases

Alzheimer’s disease and frontotemporal dementia (FTD) also show a relationship with altered or emergent artistic expression. For instance, Refs. [39,40] document ‘emergent creativity’ in patients with semantic variant primary progressive aphasia, a form of FTD. This phenomenon involves new or intensified creative behavior, which some authors interpret as disinhibition of neural circuits related to executive control.

In Alzheimer’s, art therapy is often used to maintain cognitive function and emotional expression, as discussed in Art and Alzheimer Type Dementia (various authors). Ontology-driven and fuzzy logic-based systems, such as those by [41,42], help classify and interpret behavioral changes, including emotional and creative shifts, providing support in dementia care and diagnosis.

The relationship between PD and artistic behavior highlights how neurodegenerative processes can unlock or modify creative capacities. While much remains to be understood about the neurobiological underpinnings, interdisciplinary studies spanning neurology, psychology, and the arts suggest that both the disease itself and its treatment can influence aesthetic production and appreciation. This insight not only deepens our understanding of the human brain but also opens avenues for novel therapeutic interventions.

3.3. Intelligent Systems and Ontologies in Neurodegenerative Disease Research

Recent contributions from artificial intelligence (AI), fuzzy logic, and ontology-driven models significantly expand the potential for diagnosing and understanding neurodegenerative diseases, including Parkinson’s and Alzheimer’s. Systems such as AlzFuzzyOnto [43] and the expert system designed by [41] demonstrate how fuzzy-based ontologies and rule-based inference engines can assist in handling the imprecision inherent in clinical data, improving diagnostic support and decision-making for diseases like Parkinson’s, Alzheimer’s, and Huntington’s. Moreover, advanced ontologies such as Wear4PDmove [24] bridge wearable sensor data and patient health records, enabling real-time monitoring, behavior analysis, and alerting mechanisms tailored to PD patients. These AI-driven tools highlight the emerging role of personalized, semantically enriched knowledge systems in supporting both clinical care and research.

Also, recent advances in artificial intelligence (AI), fuzzy logic, and ontology-driven frameworks have introduced new possibilities in modeling and monitoring neurodegenerative and developmental disorders. Vouros et al., 2024 [42] proposed a hybrid approach, X-HCOME, which combines Large Language Models (LLMs) like ChatGPT with expert input to engineer ontologies for PD monitoring and alerting. This approach enables the semantic integration of wearable sensor data and electronic health records into Personal Health Knowledge Graphs (PHKGs), facilitating real-time reasoning over critical health events such as freezing of gait or medication omission [44]. In parallel, ML-based predictive modeling has demonstrated strong performance in identifying freezing of gait episodes in PD patients under ON and OFF medication states, using physiological and kinematic features to train XGBoost classifiers. Deep learning approaches, such as recurrent neural networks (RNNs), have also been successfully employed to detect temporal anomalies in neuropsychiatric conditions, supporting the adaptability of time-series models for monitoring irregular PD symptom patterns [45]. Complementing these works, a systematic review by Bouchouras and Kotis [46] on autism spectrum disorder (ASD) detection highlights transferable methodologies involving sensor fusion, IoT integration, and multi-modal AI workflows, further reinforcing the generalizability of such intelligent systems to complex neurological conditions. Collectively, these contributions showcase the evolving role of personalized, semantically driven AI systems in clinical neuroinformatics.

4. Materials and Methods

4.1. Datasets

To ensure a robust and comprehensive evaluation, we utilize three handwriting datasets that reflect increasing levels of real-world complexity. Two datasets (Dataset1Wave and Dataset2Spiral), containing wave and spiral drawings respectively, are sourced from the publicly available Parkinson’s disease collection curated by K. Mader on Kaggle (https://www.kaggle.com/datasets/kmader/parkinsons-drawings (accessed on 16 September 2025)). These datasets contain digitized hand-drawn patterns captured using tablets and are derived from studies by [47], who explored handwriting biometrics as a non-invasive marker for PD.

The third dataset (Dataset3Spiral) [48,49] is a feature-based compilation extracted and aggregated from these raw handwriting samples, enriched with statistical and kinematic descriptors. This dataset serves as a clean, structured foundation for both traditional ML pipelines and inference by language models. Together, these three datasets allow us to test and compare different reasoning paradigms under controlled and progressively more challenging data scenarios.

As previously described, the fuzzy ontologies were trained and evaluated using the same three handwriting-based datasets, each specifically designed to capture fine motor patterns relevant to PD diagnosis. Dataset-1 included spiral drawings, while Dataset-2 contained wave drawings. Each dataset had 36 training samples and 15 testing samples per class, split evenly between Parkinsonian and healthy subjects. These image datasets were preprocessed to extract dynamic features such as velocity, pressure variation, stroke length, and trajectory entropy, which were subsequently fuzzified and encoded into the ontologies. Dataset-3 consisted of raw pen motion signals in text format, collected during digitized drawing tasks. The data files included 15 samples from healthy controls and 25 from Parkinsonian subjects. Each file contained pen trajectory and stylus metadata in delimited (CSV) format with the following columns: X, Y, Z, Pressure, GripAngle, Timestamp, and Test ID. For this study, only records with Test ID = 0 were used, corresponding to the Static Spiral Test, in which subjects were instructed to trace a predefined spiral pattern. From these signal recordings, kinematic features such as average speed, segment length, and average pressure were computed and mapped to fuzzy categories within the FSPHD ontology. This consistent preprocessing and feature mapping enabled coherent reasoning across all ontologies despite variability in data modalities and formats.
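The Dataset-3 preprocessing described above can be sketched with the Python standard library alone. The snippet assumes comma-delimited records without a header row, in the column order listed above; the real files may use a different delimiter or units. The function name `kinematic_features`, the per-sample definition of segment length, and the four toy records are hypothetical.

```python
import csv
import io
import math

COLUMNS = ["X", "Y", "Z", "Pressure", "GripAngle", "Timestamp", "TestID"]


def kinematic_features(text, test_id=0, delimiter=","):
    """Average speed, mean segment length, and average pressure for one test."""
    rows = [
        dict(zip(COLUMNS, map(float, rec)))
        for rec in csv.reader(io.StringIO(text), delimiter=delimiter)
        if rec
    ]
    # Keep only the records of the requested test (0 = Static Spiral Test).
    pts = [r for r in rows if int(r["TestID"]) == test_id]
    if len(pts) < 2:
        raise ValueError("need at least two points for the selected test")

    # Euclidean distance between consecutive pen positions in the x-y plane.
    segments = [
        math.hypot(b["X"] - a["X"], b["Y"] - a["Y"])
        for a, b in zip(pts, pts[1:])
    ]
    duration = pts[-1]["Timestamp"] - pts[0]["Timestamp"]
    return {
        "average_speed": sum(segments) / duration if duration > 0 else 0.0,
        "segment_length": sum(segments) / len(segments),
        "average_pressure": sum(p["Pressure"] for p in pts) / len(pts),
    }


sample = "\n".join([
    "0,0,0,100,900,0,0",
    "3,4,0,200,900,10,0",
    "6,8,0,300,900,20,0",
    "9,9,0,999,900,30,1",  # belongs to another test and is ignored
])
features = kinematic_features(sample)
```

For the toy records, the two retained segments each have length 5 over a total of 20 time units, giving an average speed of 0.5, a mean segment length of 5, and an average pressure of 200.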

4.2. Data Recording

The data were recorded [49] using a digital tablet (Wacom Intuos, Wacom Co., Ltd., Kazo City, Saitama Prefecture, Japan) equipped with a pressure-sensitive stylus, allowing the precise capture of both spatial and dynamic features during the drawing task. Participants traced pre-designed spiral and wave templates at a self-paced speed, and the tablet simultaneously recorded high-resolution PNG images along with detailed motion data. Specifically, the tablet captured the (x, y, z) position coordinates of the pen tip, the applied pen pressure, and the timestamp of each point, enabling the extraction of dynamic features such as drawing speed, acceleration, and pressure variation. Participants were seated comfortably in a quiet, well-lit room and were instructed to use their dominant hand for the task, with no time constraints imposed. This setup ensured the preservation of subtle motor symptoms, such as tremor amplitude, bradykinesia, and micrographia, while maintaining consistency across all recordings. The structured recording environment and the rich dynamic data captured by the tablet provided a reliable and detailed foundation for subsequent feature analysis and classification tasks.

4.2.1. Data Augmentation

To further enhance the dataset and mitigate the risk of overfitting due to limited real-world samples, synthetic data generation was employed using deep generative modeling. Feature-based real data extracted from the wave images were first processed using Python 3.11.5 with the SDV (Synthetic Data Vault) library. Metadata for the real dataset (training folders) were automatically detected to specify the data type of each feature. A Conditional Tabular GAN (CTGAN) synthesizer, version 0.11.0, was then trained on the real dataset, with training parameters set to 12,000 epochs and custom learning rates for both the generator and discriminator networks. To mitigate the risk of data leakage, all synthetic data were generated exclusively from the training portion of the real dataset. The test set remained untouched throughout synthetic data modeling and evaluation. Additionally, descriptive statistics and visual analyses, including boxplots and group-wise summaries, were used to verify that the synthetic features aligned closely with those of real samples. Despite these precautions, synthetic data can still introduce artifacts or overly smooth patterns that are not present in genuine motor signals. We therefore acknowledge the potential for bias and distribution drift, especially given the relatively small size of the real-world dataset. This risk is addressed in the limitations of our findings.

After training, a total of 200,000 synthetic samples were generated, from which 1000 high-quality synthetic samples per class (Healthy and Parkinsonian) were selected. To validate the quality of the synthetic data, a Random Forest classifier was trained on the real dataset and evaluated on the synthetic samples. Performance metrics, including a classification report and confusion matrix, were generated to assess how closely the synthetic data mimicked the true class distributions. Only the true positive synthetic samples, those correctly classified by the real-data-trained model, were retained for further use. This strategy ensured that only the most realistic synthetic instances were incorporated into subsequent modeling stages, improving the robustness and generalizability of the classification system.
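The filtering step, in which only synthetic samples that a real-data-trained classifier labels correctly are kept, might look like the following scikit-learn sketch. The CTGAN generation itself is not reproduced here: the two-feature toy data produced by the hypothetical helper `toy_samples` stands in for both the real training features and the generator output, and the sample counts are arbitrary.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(42)


def toy_samples(n_per_class, noise):
    """Two-feature toy data: class 0 centred at 0, class 1 centred at 2."""
    X = np.vstack([
        rng.normal(0.0, noise, size=(n_per_class, 2)),
        rng.normal(2.0, noise, size=(n_per_class, 2)),
    ])
    y = np.repeat([0, 1], n_per_class)  # 0 = Healthy, 1 = Parkinsonian
    return X, y


X_real, y_real = toy_samples(40, noise=0.5)  # stand-in for real training features
X_syn, y_syn = toy_samples(500, noise=1.0)   # stand-in for generator output

# Train on real data only, then score the synthetic rows.
clf = RandomForestClassifier(n_estimators=100, random_state=0)
clf.fit(X_real, y_real)

# Keep only synthetic rows whose assigned label matches the prediction.
keep = clf.predict(X_syn) == y_syn
X_kept, y_kept = X_syn[keep], y_syn[keep]
```

The retained subset is by construction classified perfectly by the real-data-trained model, so any downstream evaluation should rely on held-out real samples rather than on these rows.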

4.2.2. Data Validation

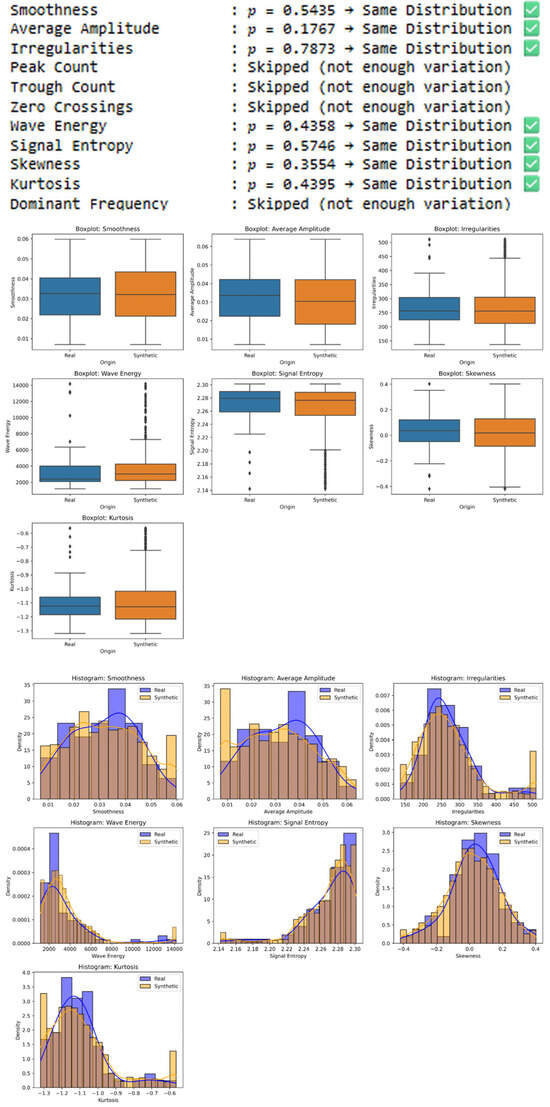

After validating the quality of the synthetic samples, a supervised classification experiment was conducted to differentiate between healthy and Parkinsonian subjects. The true positive synthetic dataset was used, with the target variable encoded as binary labels (0 for Healthy, 1 for Parkinsonian). All relevant features, excluding identifiers such as ‘Image’ and ‘Label’, were selected as predictors. The dataset was randomly split into a training set (70%) and a testing set (30%) using stratified sampling to preserve class balance. A Random Forest classifier was trained on the training set and evaluated on the testing set. Performance metrics, including a classification report and confusion matrix, were generated to assess the model’s accuracy and class-specific performance. Additionally, feature importance scores were computed to rank the contribution of each feature to the classification decision. To further explore the statistical properties of selected key features (Smoothness, Kurtosis, Signal Entropy, Wave Energy, Irregularities, and Skewness), Shapiro–Wilk tests were performed to assess normality (Figure 2). Depending on the normality result, either Pearson or Spearman correlation coefficients were calculated to examine the relationship between each feature and the disease label. This comprehensive evaluation (Figure 3) provided insights into both the predictive value and the statistical relevance of the extracted features for distinguishing between healthy and Parkinsonian subjects.
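A minimal scikit-learn sketch of this experiment is given below. It runs on randomly generated stand-in features rather than the study's data, and the number of trees, random seeds, and the choice to make only the first toy feature informative are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
feature_names = ["Smoothness", "Kurtosis", "SignalEntropy"]
n = 200
y = np.repeat([0, 1], n // 2)  # 0 = Healthy, 1 = Parkinsonian
X = rng.normal(size=(n, len(feature_names)))
X[:, 0] -= 1.5 * y  # make the first toy feature informative

# 70/30 split; stratify=y preserves the class balance in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=0
)

model = RandomForestClassifier(n_estimators=200, random_state=0)
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

report = classification_report(
    y_test, y_pred, target_names=["Healthy", "Parkinsonian"]
)
matrix = confusion_matrix(y_test, y_pred)  # rows: true class, columns: predicted

# Rank features by impurity-based importance, highest first.
ranking = sorted(
    zip(feature_names, model.feature_importances_),
    key=lambda item: item[1],
    reverse=True,
)
```

Printing `report` and `matrix` gives the per-class metrics, and `ranking` lists the features from most to least important for the fitted model.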

Figure 2.

Statistical comparison of synthetic training and real-world test samples in Dataset-1 using the Shapiro–Wilk and Welch’s t-test. Features with p-values > 0.05 are considered to follow the same distribution, supporting the statistical realism of the synthetic data. Features with insufficient variation were skipped.

Figure 3.

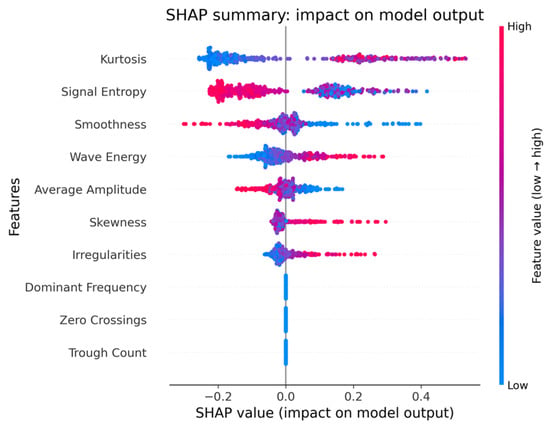

SHAP (SHapley Additive exPlanations) summary plot illustrating the impact of each handwriting feature on the Random Forest model’s output for Dataset-1. Higher SHAP values indicate greater influence on the model’s classification decision, with feature values color-coded from low (blue) to high (red).

4.3. ChatGPT

To assess the potential of large language models (LLMs) in biomedical signal classification, we employed ChatGPT-4 (OpenAI, 2024) to perform binary classification of PD versus healthy subjects, using structured numerical features derived from digitized handwriting data. Specifically, we extracted tabular features from spiral and wave drawings, as well as preprocessed statistical datasets. These included signal-based and geometric descriptors such as drawing smoothness, signal entropy, wave energy, average speed, pen pressure variability, and path length.

Each data sample was transformed into a standardized natural language prompt, embedding the numerical values in a consistent clinical format. Each case was input into an independent new chat session to avoid contextual carryover that could bias results. For example: “A patient’s wave drawing yields an entropy of 0.65, energy of 1.2, and smoothness index of 0.43. Based on these features, does the drawing belong to a Parkinson’s patient or a healthy individual?” ChatGPT was queried in a zero-shot setting—meaning it was prompted directly with structured inputs embedding numerical features, without fine-tuning on PD data or providing task-specific training examples beforehand—and its response was parsed into a binary class label (“Parkinson” or “Healthy”). To ensure consistency and validity, we applied this classification procedure to the same held-out real test sets used in the ML and ontology-based approaches. Classification performance was assessed using standard evaluation metrics, including accuracy, precision, recall, and F1-score. Unlike fuzzy ontology models, which use explicit and traceable rules, the ChatGPT-based approach relies on implicit reasoning. This limits interpretability and makes it difficult to analyze errors or identify decision boundaries. Nonetheless, the method offered a lightweight and human-readable approach to infer diagnostic labels from structured data, bridging numerical representation and natural language reasoning.
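The prompt construction and response parsing can be sketched in plain Python as follows. The exact prompt template and parsing rules used in the study are not specified beyond the example above, so the wording produced by `build_prompt`, the keyword matching in `parse_label`, and both function names are assumptions. The call to the model itself is deliberately omitted.

```python
def build_prompt(task, features):
    """Render numeric features as a single zero-shot question."""
    listed = ", ".join(f"{name} = {value:g}" for name, value in features.items())
    return (
        f"A patient's {task} drawing yields: {listed}. "
        "Based on these features, does the drawing belong to a "
        "Parkinson's patient or a healthy individual?"
    )


def parse_label(response):
    """Map a free-text answer to 'Parkinson', 'Healthy', or None if unclear."""
    text = response.lower()
    has_pd = "parkinson" in text
    has_healthy = "healthy" in text
    if has_pd == has_healthy:  # both or neither mentioned: ambiguous
        return None
    return "Parkinson" if has_pd else "Healthy"


prompt = build_prompt(
    "wave", {"entropy": 0.65, "energy": 1.2, "smoothness index": 0.43}
)
label = parse_label("The drawing most likely belongs to a Parkinson's patient.")
```

Returning `None` for answers that mention both classes or neither makes ambiguous responses visible instead of silently assigning them to one label.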

Recent studies have explored the potential of large language models (LLMs), particularly ChatGPT, in supporting medical and radiologic decision-making, as well as healthcare education. Rao et al. (2023) evaluated ChatGPT as an adjunct in radiologic decision-making and found it showed promise in assisting clinical workflows, albeit with limitations in accuracy and context understanding [50]. Similarly, Singhal et al. (2023) demonstrated that LLMs can encode substantial clinical knowledge, indicating their usefulness in various healthcare applications [51]. In the context of medical education, Kung et al. (2023) assessed ChatGPT’s performance on the USMLE and concluded that it could serve as a valuable educational tool for medical students [52]. Complementing these findings, Sallam (2023) conducted a systematic review highlighting ChatGPT’s utility across healthcare education, research, and practice, while also addressing concerns related to ethical use and reliability [28].

4.4. Fuzzy Ontology

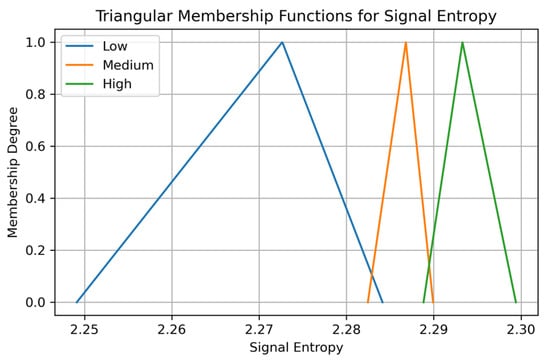

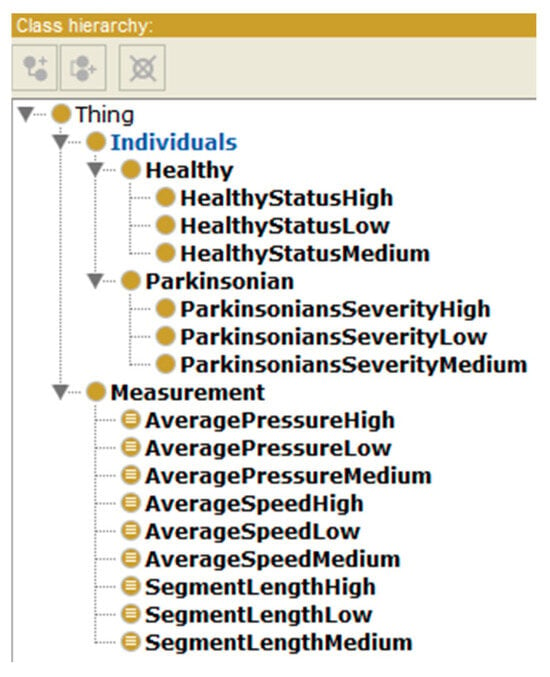

Three fuzzy ontologies, namely FWP, FSP, and FSPHD, were developed following a unified design philosophy to ensure interoperability, consistency in interpretation, and methodological comparability. At the core of each ontology lies a fuzzy modeling approach that encodes numerical signal features using triangular membership functions (Equation (2)), chosen for their simplicity, interpretability, and suitability for capturing the gradual transitions typical of human motor behavior. The fuzzy sets for each feature were generated programmatically using a percentile-based function designed to create tight fuzzy partitions with partially overlapping regions. Specifically, low, medium, and high fuzzy labels were constructed using percentiles spanning from the 20th to the 80th percentile of each feature’s distribution, thereby enabling meaningful semantic fuzzification across diverse data scales.

In terms of reasoning, all ontologies employed the HermiT reasoner integrated within the Protégé platform. HermiT was selected for its compliance with OWL 2 DL standards, robust support for consistency checking, and proven compatibility with fuzzy ontology plugins such as FuzzyOWL2, which allows the interpretation of fuzzy datatypes, modifiers, and rule-based inference over imprecise knowledge. Prior studies have demonstrated HermiT’s efficiency and correctness in complex semantic models involving fuzzy extensions and clinical data [53].

Finally, the ontologies maintain a common structural backbone. The classes Parkinsonian and Healthy sit directly under the top-level class Individuals, and each contains subclasses that represent condition severity, namely ParkinsonianSeverityHigh, Medium, and Low, as well as HealthyStatusHigh, Medium, and Low. This uniform class schema ensures alignment in the interpretability of inference results across different biometric domains and facilitates comparative evaluations of the underlying fuzzy logic rules (Figure 4).

Figure 4.

Triangular membership functions for the feature Signal Entropy in Dataset 2, partitioned into Low, Medium, and High fuzzy sets (20th–80th percentile method).
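The percentile-based construction of the Low, Medium, and High sets can be illustrated with a minimal sketch. The source states only that the partitions span the 20th to the 80th percentile and overlap partially; the intermediate breakpoints (35th, 50th, 65th percentiles), the function names, and the sample values below are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def triangular(x, a, b, c):
    """Triangular membership degree of x for a fuzzy set with bounds a <= b <= c."""
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def percentile_partitions(values):
    """Build overlapping Low/Medium/High triangles between the 20th and 80th percentiles.

    The intermediate breakpoints (35th, 50th, 65th) are an illustrative assumption.
    """
    p20, p35, p50, p65, p80 = np.percentile(values, [20, 35, 50, 65, 80])
    return {
        "Low": (p20, p35, p50),
        "Medium": (p35, p50, p65),
        "High": (p50, p65, p80),
    }


# Hypothetical feature values standing in for a real signal-entropy column.
entropy_values = np.linspace(0.0, 1.0, 101)
fuzzy_sets = percentile_partitions(entropy_values)

# Membership of one measurement in each fuzzy set.
degrees = {label: triangular(0.45, *abc) for label, abc in fuzzy_sets.items()}
```

With this layout a value between two peaks belongs partly to both neighboring sets, which is the partial overlap the text describes; values outside the 20th to 80th percentile range receive zero membership in every set under these assumed breakpoints.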

Triangular membership function definition, where parameters a, b, and c represent the lower, peak, and upper bounds of the fuzzy set.
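For reference, the standard textbook form of a triangular membership function that matches this parameter description is given below. It is assumed, not confirmed by the extracted text, that Equation (2) takes this form.

```latex
\mu(x; a, b, c) =
\begin{cases}
0, & x \le a, \\[4pt]
\dfrac{x - a}{b - a}, & a < x \le b, \\[8pt]
\dfrac{c - x}{c - b}, & b < x < c, \\[8pt]
0, & x \ge c,
\end{cases}
\qquad a < b < c .
```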

The fuzzy inference engine applied domain-informed rules combining multiple features. Representative examples include:

Rule 1: AveragePressureMedium(?m), AverageSpeedMedium(?m), SegmentLengthLow(?m), hasMeasurement(?x, ?m) → ParkinsoniansSeverityHigh(?x).

Rule 2: AveragePressureHigh(?m), AverageSpeedLow(?m), SegmentLengthHigh(?m), hasMeasurement(?x, ?m) → HealthyStatusHigh(?x).

Rule 3: AveragePressureLow(?m), AverageSpeedLow(?m), SegmentLengthHigh(?m), hasMeasurement(?x, ?m) → ParkinsoniansSeverityMedium(?x).

The full set of fuzzy rules and membership parameters is provided in the GitHub repository [https://github.com/adklts/ParkinsonPredictionMethodsHanddrawn/tree/main/Spiral(Waves) (accessed on 16 September 2025) version f88eafe], ensuring reproducibility and transparency.
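How the three representative rules might fire on a single measurement can be sketched as follows. The conjunction operator is not stated in the text, so the minimum t-norm used here is an assumption, as are the helper names and the membership degrees in the example; class names are copied from the rules as written.

```python
# Antecedents of Rules 1-3, copied from the text; the consequent is the dict key.
RULES = {
    "ParkinsoniansSeverityHigh": [
        "AveragePressureMedium", "AverageSpeedMedium", "SegmentLengthLow"],
    "HealthyStatusHigh": [
        "AveragePressureHigh", "AverageSpeedLow", "SegmentLengthHigh"],
    "ParkinsoniansSeverityMedium": [
        "AveragePressureLow", "AverageSpeedLow", "SegmentLengthHigh"],
}


def fire_rule(memberships, antecedents):
    """Firing degree of a conjunctive rule under the (assumed) minimum t-norm."""
    return min(memberships.get(name, 0.0) for name in antecedents)


def classify(memberships):
    """Return the consequent with the highest firing degree and all degrees."""
    degrees = {label: fire_rule(memberships, ants) for label, ants in RULES.items()}
    best = max(degrees, key=degrees.get)
    return best, degrees


# Hypothetical membership degrees for one Measurement individual.
measurement = {
    "AveragePressureMedium": 0.8,
    "AverageSpeedMedium": 0.6,
    "SegmentLengthLow": 0.7,
    "AveragePressureHigh": 0.2,
    "AverageSpeedLow": 0.4,
    "SegmentLengthHigh": 0.3,
    "AveragePressureLow": 0.0,
}
label, firing = classify(measurement)
```

Taking the minimum means a rule is only as strong as its weakest antecedent; other t-norms (for example the product) would give different degrees but the same overall mechanism.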

4.4.1. FWP Wave

The FWP ontology was developed to model and reason over fuzzy signal characteristics extracted from wave drawing tasks performed by Parkinsonian and healthy individuals. The ontology defines a structured class hierarchy under the root class Thing: the top-level class Individuals holds the diagnostic classes, while the central class Measurement captures feature-level signal representations through data properties. These include hasKurtosis, hasSignalEntropy, and hasSmoothness, all typed with the double datatype and linked to individuals representing drawing measurements. Fuzzy datatypes were defined for each signal dimension using triangular membership functions within the FuzzyOWL2 framework, including FuzzyKurtosisLow, Medium, and High, FuzzySignalEntropyLow, Medium, and High, and equivalent fuzzy labels for smoothness. Individuals in the ontology represent drawing samples, and each instance is connected via the object property hasMeasurement to a corresponding Measurement instance containing the numerical feature values. Fuzzy rules were implemented to capture expert knowledge; for example, a combination of high kurtosis, high signal entropy, and low smoothness predicts ParkinsonianSeverityMedium, while medium values across all dimensions result in HealthyStatusMedium. These rules were expressed in FuzzyOWL2 syntax and allow degrees of membership to propagate through the diagnostic hierarchy. This ontology structure supports transparent, explainable reasoning for clinical signal classification grounded in real-valued biometric features.

4.4.2. FSP SPIRAL

The FSP ontology was constructed to model PD classification using fuzzy signal descriptors extracted from spiral drawing tasks. It adopts a structured semantic framework centered on the Measurement class, which encodes feature-level data through the fuzzy-annotated properties hasSignalEntropy, hasSmoothness, and hasWaveEnergy, each defined over the double range. These signal descriptors are linguistically categorized via fuzzy datatypes with triangular membership functions, enabling classification into Low, Medium, and High levels for each feature. The ontology organizes diagnostic outcomes under the top-level class Individuals. Individual drawing instances are defined under the Individuals class and are linked to their associated signal data through the object property hasMeasurement, which connects each person’s spiral sample to a corresponding Measurement individual populated with real-valued signal features. The reasoning core is formed by an extensive set of fuzzy rules expressed in FuzzyOWL2 syntax, capturing domain knowledge through combinations of fuzzy features. For instance, rules such as “IF SignalEntropy is Low, Smoothness is Medium, and WaveEnergy is High, then this must be classified as ParkinsonianSeverityHigh” allow for nuanced, imprecise decision-making aligned with clinical expectations. Other rules map subtle variations to Healthy subclasses depending on the degree of entropy or smoothness observed. This approach supports soft decision boundaries, transparency, and explainability in modeling spiral-based Parkinsonian patterns.

4.4.3. FSPHD SPIRAL

The FSPHD ontology was constructed to semantically represent and reason over fuzzy biometric measurements derived from spiral drawings, with a focus on pressure and motion dynamics relevant to PD detection. The class structure is built upon two core components: Measurement and Individuals. The ontology models three key biometric features (AveragePressure, AverageSpeed, and SegmentLength) through corresponding data properties: hasAveragePressure, hasAverageSpeed, and hasSegmentLength. Each of these data properties is defined over a continuous double datatype and linguistically qualified using fuzzy datatypes such as FuzzyAveragePressureHigh, Medium, and Low, with similar constructs for speed and segment length. These fuzzy labels are defined using triangular membership functions to reflect the gradual transitions and uncertainty inherent in clinical signal data. Individuals in the ontology represent drawing cases, and each is linked via the hasMeasurement object property to a specific Measurement instance that holds real-valued observations (Figure 5).

Figure 5.

Ontology Class Structure for Fuzzy Reasoning Over Spiral Drawing Features Dataset 3.

A comprehensive set of fuzzy rules governs the inferencing mechanism, defining diagnostic interpretations based on various fuzzy combinations. For example, if a measurement represents AveragePressureMedium, AverageSpeedMedium, and SegmentLengthLow, the individual is inferred to belong to the ParkinsonianSeverityHigh class. Likewise, combinations such as AveragePressureLow, AverageSpeedLow, and SegmentLengthMedium suggest HealthyStatusMedium. These rules are declaratively encoded within the ontology using FuzzyOWL2 constructs and are processed by the HermiT reasoner integrated in Protégé, enabling automated classification through fuzzy inference. This ontology captures the complexity of pressure-hand movement correlations in spiral drawings while preserving interpretability through transparent rule-based semantics and modular feature modeling.

4.5. ML

For the ML-based evaluation approach [27,54], we employed a pipeline that combines synthetic training data with real-world testing data to assess generalization under realistic clinical variability. For each of the three datasets corresponding to the FWP, FSP, and FSPHD ontologies, we trained a Random Forest classifier implemented in Scikit-learn with 100 decision trees (n_estimators = 100), maximum depth left unconstrained (max_depth = None), and the default Gini impurity criterion for node splitting. Other hyperparameters followed Scikit-learn defaults (e.g., min_samples_split = 2, min_samples_leaf = 1, bootstrap = True). The model was trained on feature-engineered synthetic samples generated through controlled data augmentation strategies and subsequently evaluated on real data. Training was performed on datasets labeled as “Healthy” and “Parkinsonian,” with the labels mapped to binary classes (0 and 1) and features cleaned of non-numeric identifiers. The models were implemented in Python using key libraries such as Scikit-learn for classification and evaluation [1], Pandas for data handling [2], matplotlib and seaborn for visualization [55], and SHAP for explainable AI analysis [56]. After fitting the models on the synthetic feature sets, predictions were applied to external, real-world test data (extracted from digitized spiral and wave drawings or pressure-motion signals), allowing for robust evaluation of model performance in unseen clinical conditions. Diagnostic accuracy was assessed using metrics such as precision, recall, F1-score, and confusion matrices. To enhance interpretability, SHAP (SHapley Additive exPlanations) values were computed, revealing the contribution of individual features (e.g., smoothness, entropy, wave energy) to each model decision. Normality assumptions were verified using the Shapiro–Wilk test, and correlation analyses (Pearson or Spearman) were applied to measure statistical associations between selected features and disease labels. The final trained models were serialized using Joblib and applied consistently across all three ontology-based datasets to ensure parity in evaluation methodology.
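A minimal sketch of the train-on-synthetic, test-on-real setup with the stated hyperparameters is shown below. The data are randomly generated placeholders, and `make_samples`, the class shift values, and `random_state` are assumptions added for reproducibility of the illustration; they are not part of the reported pipeline.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix, f1_score, precision_score, recall_score

rng = np.random.default_rng(0)


def make_samples(n, shift):
    """Hypothetical three-feature dataset; class 1 (Parkinsonian) is shifted by `shift`."""
    y = rng.integers(0, 2, size=n)
    X = rng.normal(size=(n, 3)) + shift * y[:, None]
    return X, y


# Placeholders for the synthetic training set and the real test set.
X_synth, y_synth = make_samples(400, shift=1.5)
X_real, y_real = make_samples(60, shift=1.2)

# Hyperparameters as described in the text; random_state is an added assumption.
clf = RandomForestClassifier(
    n_estimators=100, max_depth=None, criterion="gini", random_state=0
)
clf.fit(X_synth, y_synth)
y_pred = clf.predict(X_real)

report = {
    "precision": precision_score(y_real, y_pred),
    "recall": recall_score(y_real, y_pred),
    "f1": f1_score(y_real, y_pred),
}
cm = confusion_matrix(y_real, y_pred, labels=[0, 1])
```

Keeping the real samples entirely out of training is what makes the test scores a measure of how well the synthetic distribution transfers to real drawings.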

5. Results

5.1. Dataset 1

5.1.1. Healthy vs. Parkinsonian: Feature Distribution and Separability

To investigate the key differences between Healthy and Parkinsonian subjects in Dataset-1, we focused on three primary features: Smoothness, Signal Entropy, and Kurtosis. These were selected based on their prominence in the fuzzy ontology rules and ML feature importance rankings. A variety of visualizations—including boxplots, violin plots, ECDF, KDE, and scatter plots—were used to assess the discriminative power of these features, supplemented by dimensionality reduction and statistical analysis.

In terms of Smoothness, visualizations such as boxplots and violin plots suggested a tendency toward lower values among Parkinsonian subjects compared to healthy individuals, reflecting increased irregularity in motor trajectories. This observation was supported by Kernel Density Estimate (KDE) curves, which indicated a shift in peak distribution, and by Empirical Cumulative Distribution Functions (ECDFs), which revealed a cumulative lag in Parkinsonian samples. While these patterns suggest group-level differences, further statistical testing, such as Mann–Whitney U tests or Welch’s t-tests, would be required to confirm the significance of these differences and quantify their magnitude through p-values or confidence intervals.

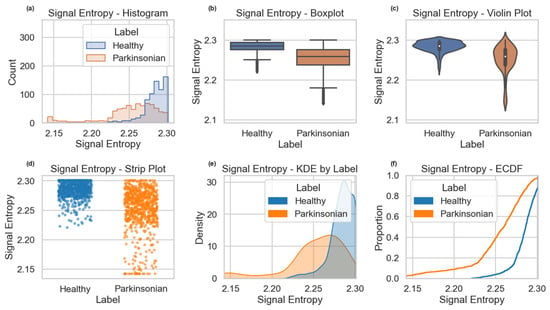

Analysis of signal entropy in Dataset-1 revealed statistically significant and visually apparent differences between the Healthy and Parkinsonian groups. The Shapiro–Wilk test indicated non-normality for this feature (p < 0.0001), justifying the use of non-parametric correlation. Spearman’s correlation indicated a moderately strong negative association between signal entropy and Parkinsonian status (r = −0.556, p < 0.0001), suggesting that lower entropy is characteristic of Parkinsonian handwriting patterns.
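Spearman's coefficient is the Pearson correlation computed on ranks, which is why it suits a non-normal feature paired with a binary label. The dependency-free sketch below illustrates the calculation; the entropy values and labels are invented for the example and are not taken from Dataset-1.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical entropy values; label 1 = Parkinsonian, 0 = Healthy.
entropy = [0.90, 0.80, 0.85, 0.40, 0.50, 0.45]
label = [0, 0, 0, 1, 1, 1]
rho = spearman(entropy, label)
```

In practice `scipy.stats.shapiro` and `scipy.stats.spearmanr` provide the normality test and the coefficient together with p-values; the manual version is shown only to make the rank-based definition explicit.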

Visualizations further supported this conclusion. The boxplot and violin plot clearly show a lower median and compressed distribution of signal entropy in the Parkinsonian group. The histogram suggests a leftward shift in entropy values, while the KDE (Kernel Density Estimate) plot reveals a bimodal distribution in the Healthy class versus a narrower peak in the Parkinsonian class. The ECDF (Empirical Cumulative Distribution Function) confirms a consistent cumulative lag in Parkinsonian samples, reflecting the overall entropy reduction. Together, these statistical and visual results support the interpretation that signal entropy, an indicator of variability and complexity in drawing motion, is reduced in individuals with PD.

For Signal Entropy (Figure 6), healthy samples exhibited higher and more narrowly distributed values, indicative of stable signal complexity. In contrast, Parkinsonian subjects showed lower entropy values with greater variance, pointing toward disrupted or less organized movement signals. Violin plots supported this trend by illustrating broader distributions and lower median entropy in Parkinsonian cases.

Figure 6.

Distributional comparison of Signal Entropy between Healthy and Parkinsonian samples in Dataset 1, illustrated via histogram (a), boxplot (b), violin plot (c), strip plot (d), KDE (e), and ECDF (f). Lower entropy values and distinct density shifts are evident in Parkinsonian cases.

Kurtosis analysis demonstrated distinct structural differences in the data distributions. Healthy participants had more negative kurtosis values, which denote flatter distributions with lighter tails (i.e., fewer extreme signal values). Parkinsonian participants exhibited kurtosis values closer to zero, indicating tail behavior nearer to that of a normal distribution.

These visual differences were supported by group-wise descriptive statistics (e.g., mean, standard deviation) and illustrated through boxplots for each feature. While no formal hypothesis tests were conducted, consistent trends across statistical summaries and visualizations aligned with the fuzzy set definitions used in the ontology.

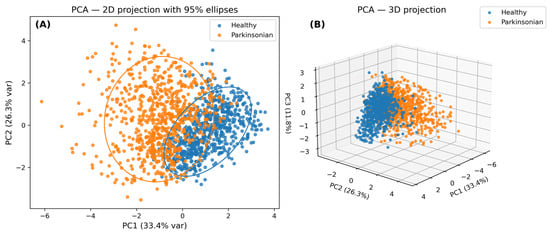

To evaluate the potential for feature-based group separation in high-dimensional space, we employed Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) (Figure 7). PCA’s 2D and 3D projections showed linear separability to a moderate degree, with Healthy and Parkinsonian groups forming partially overlapping clusters. The t-SNE results provided a more expressive non-linear separation, revealing distinct clusters in the embedded space and highlighting the latent structure of class boundaries.

Figure 7.

Principal component analysis (PCA) of Dataset-1 handwriting features. (A) 2D projection with 95% confidence ellipses highlighting the separation between Healthy (blue) and Parkinsonian (orange) groups. (B) 3D projection based on the top three principal components (PC1, PC2, and PC3), which together capture the majority of variance in the feature space. Although some overlap is observed, the plots reveal moderate clustering and separability, supporting the relevance of the extracted features (Smoothness, Signal Entropy, Kurtosis) for classification.
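The PCA projection underlying such plots can be sketched with a singular value decomposition of the centered feature matrix. The two Gaussian groups below are synthetic placeholders for the three handwriting features, and `pca_project` is a hypothetical helper; feature standardization, which is often applied first, is omitted here.

```python
import numpy as np


def pca_project(X, n_components=2):
    """Project rows of X onto the top principal components via SVD."""
    Xc = X - X.mean(axis=0)                      # center each feature
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = (S ** 2) / np.sum(S ** 2)        # variance ratio per component
    return Xc @ Vt[:n_components].T, explained[:n_components]


rng = np.random.default_rng(0)
healthy = rng.normal(loc=0.0, size=(50, 3))       # placeholder Healthy samples
parkinsonian = rng.normal(loc=1.0, size=(50, 3))  # placeholder Parkinsonian samples
X = np.vstack([healthy, parkinsonian])

scores, ratio = pca_project(X, n_components=2)
```

The rows of `scores` are the 2D coordinates that would be plotted, and `ratio` indicates how much of the total variance each plotted axis captures.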

These feature insights were further supported by a SHAP (SHapley Additive exPlanations) analysis derived from the Random Forest classifier. The SHAP summary plots identified Kurtosis, Signal Entropy, and Smoothness as the top three most influential features. This convergence across statistical, visual, and algorithmic domains strongly supports their relevance and robustness for distinguishing between Healthy and Parkinsonian movement patterns.

5.1.2. Model Performance Comparison (Dataset-1)

Three classification approaches were used to assess Dataset-1: a fuzzy ontology model, an ML model (Random Forest) trained on synthetic data and tested on real samples, and a ChatGPT-assisted classification, which utilized transformer-based feature learning. The following table summarizes the results (Table 1):

Table 1.

Performance comparison of three classification approaches on Dataset-1 using precision, recall, and F1-score. The fuzzy ontology model shows strong interpretability and domain alignment but slightly lower flexibility. The Random Forest model provides the best overall balance of performance and interpretability (via SHAP analysis). The ChatGPT-based classifier performs comparably to the ML model, highlighting its potential as a lightweight diagnostic support tool in structured biomedical tasks.

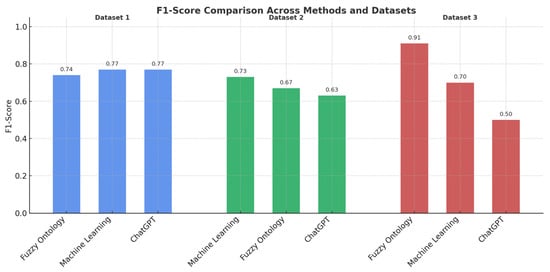

The fuzzy ontology approach produced an F1-score of 0.74, demonstrating good performance with a rule-based framework capable of explaining classification decisions. Its slightly lower performance compared to the ML and ChatGPT methods can be attributed to the strict thresholds imposed by fuzzy rules, which, while interpretable, may lack the flexibility of data-driven approaches in borderline cases.

The Random Forest model achieved the highest generalization capacity, with an F1-score of 0.77, and exhibited excellent balance between precision and recall. Importantly, the SHAP explanation mechanism revealed the internal structure of its decisions, reinforcing trust in the feature ranking and model behavior.

Using ChatGPT’s ability to process image-derived numerical features, we prompted the model to qualitatively compare features from the hand-drawn designs (e.g., smoothness, pressure variation). Although this does not constitute formal classification, the prompts allowed ChatGPT to make judgments that aligned with clinical categories in several cases. ChatGPT responses were manually labeled as correct or incorrect by expert raters based on alignment with the true labels, and performance metrics were computed accordingly. Based on this post hoc labeling of its responses, the resulting F1-score reached 0.77, comparable to the Random Forest classifier.

5.1.3. Summary Interpretation

Overall, the three classification methods demonstrated consistent and converging performance patterns, with all models highlighting Kurtosis, Signal Entropy, and Smoothness as key discriminators. Visual analysis, statistical testing, and SHAP explanations all reinforced this conclusion. Despite their differences in architecture and interpretability, the ontology-based model, ML classifier, and transformer-based approach each provided complementary insights, validating the discriminative capacity of the selected features and supporting the robustness of the methodology across paradigms.

5.2. Dataset-2

5.2.1. Comparative Analysis of Healthy vs. Parkinsonian Classification

The statistical analysis of Dataset-2 reveals distinct behavioral differences between the Healthy and Parkinsonian groups, based on three primary features: Smoothness, Wave Energy, and Signal Entropy. Visualizations through boxplots and distribution charts demonstrate that Parkinsonian subjects consistently exhibit lower values of Smoothness compared to their healthy counterparts, indicating a tendency toward more jagged and less fluid motion during the drawing task. In contrast, Wave Energy is markedly higher in Parkinsonian individuals, suggesting that their motor actions are more abrupt or forceful. Signal Entropy, which captures the complexity of the signal, shows a modest decrease in the Parkinsonian group, pointing to reduced variability in their movements. These differences are substantiated by the summary plots (KDE, ECDF, and Violin Plots), which show clear separation in the distributions. Moreover, a three-dimensional PCA projection confirms a reasonable degree of linear separability between the two classes, and the correlation heatmap further supports the inclusion of these three features by showing they are not heavily redundant, reinforcing their individual contributions to classification.

From an ML standpoint, the Random Forest classifier trained on synthetic data and tested on real samples demonstrates strong generalization capability. The SHAP analysis highlights Wave Energy, Signal Entropy, and Smoothness as the top three most influential features, aligning well with the prior statistical observations. The model achieved an accuracy of 0.733, with a macro average F1-score of 0.73. These results indicate that an ML pipeline utilizing synthetically augmented training data can yield reliable predictions when tested on unseen real-world instances.

In parallel, a fuzzy ontology-based approach was employed to evaluate the same dataset using rule-based inference. The rules were constructed around triangular fuzzy sets representing the linguistic values of each feature (e.g., Low, Medium, High). The ontology, supported by HermiT reasoning, successfully inferred the Parkinsonian or Healthy status based on combinations of these fuzzy memberships. The performance of this approach was promising, with a precision of 0.714, recall of 0.625, and an F1-score of 0.667. Although slightly lower than the ML model, the ontology method benefits from inherent interpretability and explainable reasoning chains, making it particularly valuable in scenarios requiring transparency and expert validation.
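The reported F1-score follows directly from the reported precision and recall, since F1 is their harmonic mean. A short check, with `f1_from` as a hypothetical helper name:

```python
def f1_from(precision, recall):
    """F1-score as the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for the fuzzy ontology on Dataset-2.
ontology_f1 = f1_from(0.714, 0.625)
print(round(ontology_f1, 3))  # 0.667
```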

The ChatGPT-based classifier, evaluated using only three selected features, demonstrated a moderate level of effectiveness. The model achieved a weighted F1-score of 0.63 and a classification accuracy of 0.63. While these figures fall below the ML and ontology-based results, the ChatGPT approach offers unique advantages in low-resource or interactive settings, providing fast, interpretable outcomes without complex infrastructure.

Overall, when comparing the three methods on Dataset-2, the ML model remains the most effective in terms of predictive performance, achieving a weighted F1-score of 0.733. The fuzzy ontology, while trailing slightly at 0.667, offers the benefit of semantic clarity and rule-based validation. The ChatGPT classifier, with an F1-score of 0.63, provides a lightweight and interpretable alternative. The convergence of results across these methods—especially regarding feature importance and class trends—indicates that the selected features are biologically and behaviorally relevant markers for Parkinsonian motion characteristics (Table 2).

Table 2.

Performance comparison of classification methods on Dataset-2, evaluated using precision, recall, and F1-score. The Random Forest model achieved the highest F1-score (0.73), balancing predictive accuracy with robustness to data variability. The fuzzy ontology approach showed moderate performance (F1 = 0.67), offering high interpretability through rule-based inference. The ChatGPT-based classifier performed comparably in trend but slightly lower overall (F1 = 0.63), making it suitable for lightweight or exploratory diagnostic tasks.

5.2.2. Interpretation

Across the three classification strategies evaluated for Dataset-2, the Random Forest ML model demonstrated the best overall performance, achieving an F1-Score of 0.73 and balanced precision (0.75) and recall (0.73). The Fuzzy Ontology approach performed moderately well with an F1-Score of 0.67, reflecting its utility in rule-based systems, though slightly less effective in handling misclassifications compared to the learned model. The ChatGPT-based classifier, while offering a lightweight inference mechanism, exhibited the lowest performance metrics in this dataset, with an F1-Score of 0.63, primarily limited by its generalist rule framework and lack of model adaptation to data variance.

5.3. Dataset 3

5.3.1. Analysis of Parkinsonian vs. Healthy Using Spiral Drawing Test Data

Dataset-3 consists of time-series motion signals captured via stylus during the Static Spiral Test, involving 15 healthy control subjects and 25 individuals with PD. Each recording includes seven signal dimensions: X, Y, Z, Pressure, GripAngle, Timestamp, and Test ID (filtered for ID = 0). From these raw inputs, four core features were extracted: Segment Length, Segment Time, Average Speed, and Average Pressure. Boxplot comparisons between the two groups revealed clear differences across several features. Healthy individuals applied greater and more consistent pen pressure, while Parkinsonian participants exhibited lower median values and greater variability, consistent with bradykinesia and reduced force output in PD. Segment Length and Speed also showed broader distributions among Parkinsonian subjects, reflecting less controlled and more variable movements. Principal Component Analysis (PCA) projections demonstrated partial separation between the two classes in the first two components, while k-means clustering (k = 2) revealed some grouping but with less distinction than in synthetic datasets. Feature importance analysis using a Random Forest classifier ranked Average Pressure as the most influential variable, followed by Segment Length and Speed. These rankings aligned with patterns observed in the visualizations. Although minor deviations were noted between synthetic and real data—especially in pressure and segment time distributions—the overall structure was sufficiently preserved to enable generalization. The fuzzy ontology model applied to Dataset-3 performed strongly, achieving precision, recall, and F1-score values of 0.91 (Table 3). Its reasoning mechanism, based on fuzzy logic and expert-defined thresholds, successfully captured subtle motor irregularities, demonstrating both semantic interpretability and robustness in classifying real-world handwriting data.
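The four features extracted from the raw stylus signals could be computed along the following lines. The text does not give their exact definitions, so the ones used here (path length in the X-Y plane, elapsed time, length divided by time, and mean pressure) are assumptions, as are the function name and the sample values.

```python
from math import hypot


def extract_features(samples):
    """Compute four summary features from one recording.

    samples: list of (x, y, pressure, timestamp) tuples in recording order.
    """
    # Path length: sum of distances between consecutive pen positions.
    length = sum(
        hypot(x1 - x0, y1 - y0)
        for (x0, y0, _, _), (x1, y1, _, _) in zip(samples, samples[1:])
    )
    duration = samples[-1][3] - samples[0][3]
    return {
        "segment_length": length,
        "segment_time": duration,
        "average_speed": length / duration if duration > 0 else 0.0,
        "average_pressure": sum(s[2] for s in samples) / len(samples),
    }


# Hypothetical three-point recording: (x, y, pressure, timestamp).
recording = [(0, 0, 100, 0), (3, 4, 200, 1), (6, 8, 300, 2)]
features = extract_features(recording)
```

Units depend on how the tablet reports coordinates, pressure, and time, so feature values are only comparable across recordings made with the same device settings.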

Table 3.

For Dataset-3, the ontology-based classification model exhibited the highest performance, with an F1-score of 0.91.

This performance not only demonstrates superiority but also underscores the robustness of fuzzy logic in handling features with subtle and gradual transitions. It confirms that fuzzy thresholds—defined using triangular membership functions—can effectively model motor patterns symptomatic of PD. In comparison, the ML model, which was trained on synthetic data and evaluated on real-world signals, achieved an F1-score of 0.70. Although it relied on only four extracted features, the model showed strong generalization, particularly given the inherent variability present in the real dataset. The rule-based system implemented using ChatGPT’s logic framework produced a lower F1-score of 0.50, suggesting that while LLMs offer interpretability, their performance may be constrained when applied to structured signal data without fine-tuning or domain-specific adaptation (Table 4).

Table 4.

Evaluation of classification methods on Dataset-3 using precision, recall, and F1-score. The fuzzy ontology-based approach achieved the highest performance across all metrics (F1 = 0.91), demonstrating its strength in semantically modeling motor features with interpretable fuzzy logic. The ML model showed reasonable generalization with an F1-score of 0.70, favoring Parkinsonian recall. The ChatGPT rules-based classifier performed the weakest (F1 = 0.50), indicating limited adaptability to real signal variation.

5.3.2. Interpretation

Among all methods applied to Dataset-3, the ontology-based classification model exhibited the highest performance, with an F1-score of 0.91. This was followed by the ML model (F1 = 0.70), and finally the ChatGPT rule-based system (F1 = 0.50). Contrary to our initial expectation that ML would consistently perform best, the fuzzy ontology approach outperformed it on real-world data, suggesting that domain-aligned reasoning may offer advantages in less structured scenarios.

The ontology’s strength lies in its ability to semantically encode domain expertise using fuzzy boundaries, which compensates for the variability and complexity inherent in motor task data. Its superior performance may stem from the ability of fuzzy rules to model subtle motor fluctuations through continuous membership functions, whereas the ML model was trained on synthetic features with limited variability, making it less adaptable to the nuanced patterns of Dataset-3. The rule-based logic system performed the weakest due to its inability to adaptively generalize over nuanced real-world patterns.

These results underscore the effectiveness of semantic modeling and hybrid fuzzy reasoning for tasks where interpretability, limited data, and medical insight are critical.

6. Discussion

This study set out to evaluate and compare three distinct approaches (ontology-based reasoning, data-driven ML, and ChatGPT-driven rule systems) for the binary classification of Parkinsonian vs. healthy movement patterns, using features extracted from digitized handwriting and drawing tasks. The ontology-based approach clearly outperformed both the ML and ChatGPT-based classifiers in terms of F1-score on Dataset-3 (0.91 vs. 0.70 and 0.50), while the Random Forest model achieved the highest F1-scores on Dataset-1 (0.77, matched by ChatGPT) and Dataset-2 (0.73). Across all datasets, the ontology offered not only competitive accuracy but also interpretability, a vital attribute in clinical decision-making contexts (Figure 8).

Figure 8.

Cross-Dataset Evaluation of AI-Driven and Knowledge-Based Classifiers (F1-Score Metric).

The ontology-driven models leveraged domain knowledge, encoded via fuzzy logic and membership functions, to classify nuanced motor behaviors. These semantic models proved robust across datasets that varied in feature type and recording protocol. Notably, for Dataset-3, where stylus pressure, speed, and segment metrics were used, the ontology achieved an F1-score of 0.91, far surpassing the ML model (F1 = 0.70) and the ChatGPT logic (F1 = 0.50). This suggests that fuzzy logic excels in scenarios where patterns are subtle, continuous, and medically informed, avoiding the brittle decision boundaries that purely data-driven models often impose. This performance advantage may stem from the ontology’s ability to model gradual transitions and domain-informed thresholds using fuzzy logic, which is particularly suitable for real-world signal variability. In contrast, the ML model, trained on synthetic data, may have struggled to generalize to subtle motor variations not captured in the training distribution.

In terms of clinical relevance, our findings can be contextualized alongside established diagnostic practices such as the Unified Parkinson’s Disease Rating Scale (UPDRS) [57] and clinician-administered handwriting and drawing tests, which remain the gold standard in evaluating motor symptoms. While these assessments are highly valuable, they are often subjective and may vary depending on the examiner’s expertise. The approaches tested in this study, particularly the fuzzy ontology models, have the potential to complement such workflows by providing interpretable, rule-based outputs that align with neurologists’ reasoning processes. For example, fuzzy rules that capture reduced signal entropy or lower drawing smoothness can be directly mapped to observable handwriting irregularities noted in UPDRS scoring. Unlike black-box models, fuzzy ontologies allow clinicians to trace diagnostic outcomes back to transparent, human-readable rules, thereby enhancing trust and facilitating integration into clinical decision-support systems. This synergy between data-driven feature analysis and expert reasoning frameworks suggests that ontology-based methods could serve as a bridge between automated diagnostics and established clinical practice, improving consistency and aiding decision-making in early PD detection.

ML classifiers, particularly Random Forests trained on synthetic data (e.g., generated with CTGAN), displayed moderate-to-high performance, with F1-scores ranging from 0.70 to 0.77 across datasets. Their success depended largely on the quality of feature engineering and the statistical alignment between synthetic and real distributions, as evidenced by feature importance plots and ECDF/KDE comparisons.

Beyond visual inspection, we also conducted statistical validation of feature distributions. Specifically, Shapiro–Wilk tests were used to assess normality, and Welch’s t-tests compared the means of real and synthetic feature distributions (Section 4.2.2, Figure 1). These tests indicated that, for many features, the null hypothesis of equal means could not be rejected (p > 0.05), supporting the statistical realism of the synthetic data. Together with boxplots, violin plots, ECDFs, and KDEs, these analyses provided converging evidence that the observed group-level differences were robust and not merely artifacts of visualization.

However, unlike the fuzzy ontology approach, traditional ML models lacked semantic interpretability and struggled with borderline classifications—particularly among healthy samples, which often exhibit greater inter-individual variability [58,59]. This aligns with prior findings that data-driven models can achieve high accuracy but may lack transparency and context-awareness in clinical reasoning tasks [59].

The ChatGPT reasoning system, while innovative as a form of explainable AI (XAI), achieved lower predictive performance, likely due to its rigidity in applying static rules and thresholds. Its limited adaptability suggests that such models benefit greatly from structured knowledge integration, highlighting again the potential synergy between natural language models and formal ontologies in future hybrid designs.

This limitation is more likely due to the use of a zero-shot prompting strategy without domain-specific fine-tuning or structured prompt engineering, a known limitation in LLM applications to clinical tasks [51,60]. Unlike rule-based systems, ChatGPT relies on statistical inference over language patterns, not static thresholds [51,61]. Interestingly, PCA and t-SNE projections revealed natural separability between the two classes, especially when synthetic augmentation helped better span the decision boundaries. SHAP and Random Forest feature ranking analyses repeatedly emphasized kurtosis, signal entropy, smoothness, and wave energy as the most discriminative features, echoing known motor abnormalities in PD such as increased signal regularity and reduced dynamic range of motion.

This study has several limitations:

- Limited sample size: The real-world datasets used for training and evaluation were relatively small. Even with CTGAN-generated synthetic data, the training size may be insufficient to fully capture the variability present in Parkinsonian motor patterns. This can affect the generalizability of the ML models.

- Reliance on synthetic data: While CTGAN was used to generate high-quality synthetic samples, synthetic augmentation may not perfectly replicate the subtle nuances of true clinical data. This introduces potential bias in model learning.

- Ontology scalability: Fuzzy ontology models rely on manually defined rules and thresholds. These rules may not scale well to broader datasets or new feature sets without significant human tuning.

- LLM constraints: ChatGPT was used in a zero-shot mode, without clinical fine-tuning. As such, its performance may underestimate what could be achieved with domain-adapted large language models.

Future research should aim to collect larger, more diverse datasets and evaluate hybrid models that combine semantic reasoning with adaptive learning mechanisms.
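Given the small sample sizes noted above, one common way to obtain more stable performance estimates when comparing models is stratified k-fold cross-validation. The sketch below is illustrative only: it uses a simulated dataset from `make_classification`, and the choice of five folds, the SVM comparator, and all hyperparameters are assumptions rather than settings from this study.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Simulated binary classification data (hypothetical stand-in for drawing features).
X, y = make_classification(
    n_samples=200, n_features=8, n_informative=4, random_state=0
)

# Stratified folds keep the class ratio roughly constant in every split.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

models = {
    "random_forest": RandomForestClassifier(n_estimators=200, random_state=0),
    # Scaling lives inside the pipeline so it is fitted on training folds only.
    "svm_rbf": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
}

scores = {
    name: cross_val_score(model, X, y, cv=cv, scoring="f1")
    for name, model in models.items()
}

for name, fold_scores in scores.items():
    print(f"{name}: F1 = {fold_scores.mean():.3f} +/- {fold_scores.std():.3f}")
```

When synthetic augmentation is used, the generator would need to be fitted on the training folds only, so that no information from held-out real samples leaks into the evaluation.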

7. Conclusions

These results suggest that fuzzy ontology-based classification may be well-suited for scenarios where interpretability, rule-based reasoning, and transparent decision logic are critical, particularly in clinical applications involving digital biomarkers for motor disorders. Across the datasets evaluated, the ontology-driven models consistently delivered high F1-scores, were less susceptible to dataset-specific overfitting, and provided the transparent decision logic needed for clinical interpretability, making them a compelling alternative to traditional black-box ML and static rule-based systems for motor disorder diagnosis. While promising, these findings are based on a limited number of datasets and should be validated in broader contexts.

ML models trained on high-quality synthetic data showed competitive performance, validating the value of data augmentation via generative models like CTGAN. However, their success remains conditional on the distributional fidelity between synthetic and real-world data. Rule-based systems, while explainable, require significant enhancement in adaptability and semantic richness to meet clinical standards.

In this study, ChatGPT was evaluated strictly in a zero-shot setting, without domain-specific fine-tuning or few-shot demonstrations. This design choice ensured fairness by keeping models in their baseline form but also represents a limitation, as more advanced prompting strategies could potentially improve performance. We explicitly acknowledge this constraint and highlight it as a direction for future work.

Looking ahead, future work should also explore neuro-symbolic integration, combining language models, such as ChatGPT, with structured ontological reasoning to benefit from both contextual awareness and domain-grounded inference. Additionally, longitudinal tracking of motor features and integration with multi-modal inputs (e.g., voice, gait, and neuroimaging) could yield richer models for disease staging and progression analysis.

To maintain its scope, the present study focused on representative baseline models: Random Forest for traditional machine learning, ChatGPT for large language models, and a fuzzy ontology framework for symbolic reasoning. Future work should expand this evaluation by testing additional alternatives such as support vector machines (SVMs), XGBoost, other LLM variants (e.g., DeepSeek), and extended fuzzy frameworks. Incorporating cross-validation and broader model comparisons would further strengthen robustness and generalizability. Finally, deploying these models in clinical settings with real-time feedback mechanisms can test their usability, trust, and impact on early Parkinson’s detection. In sum, this study not only benchmarks technical performance but also advances the methodological foundation for interpretable, robust, and semantically sound AI in digital health diagnostics.

Author Contributions

Conceptualization, A.K.; methodology, A.K.; software, A.K.; validation, A.K. and P.B.; formal analysis, A.K.; investigation, A.K.; resources, A.K.; data curation, A.K.; writing—original draft preparation, A.K.; writing—review and editing, A.K., G.B. and K.K.; visualization, A.K.; supervision, K.K.; project administration, A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable. Ethical review and approval were waived for this study because all datasets used were publicly available and fully anonymized. No new data was collected from human participants.

Informed Consent Statement

Not applicable. The study used pre-existing anonymized datasets and did not involve direct interaction with human subjects.

Data Availability Statement

The datasets used and generated during this study, including handwritten spiral, wave, and synthetic samples, are directly publicly accessible, so no request to the corresponding author is required.

Acknowledgments

During the preparation of this manuscript, the author used ChatGPT-4 (OpenAI, 2024), an AI language model, to assist with generating feature-level classification prompts and editing figure captions. The author reviewed and edited all outputs and takes full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AD | Alzheimer’s Disease |

| AI | Artificial Intelligence |

| ASD | Autism Spectrum Disorder |

| ChatGPT | Chat Generative Pre-Trained Transformer |

| CTGAN | Conditional Tabular Generative Adversarial Network |

| ECDF | Empirical Cumulative Distribution Function |

| FN | False Negative |

| FP | False Positive |

| FSP | Fuzzy Spiral Parkinson |

| FSPHD | Fuzzy Spiral Parkinson Hand Drawn |

| FTD | Frontotemporal Dementia |

| FWP | Fuzzy Wave Parkinson |

| KDE | Kernel Density Estimate |

| LLM | Large Language Model |

| ML | Machine Learning |

| OWL | Web Ontology Language |

| PCA | Principal Component Analysis |

| PD | Parkinson’s Disease |

| SHAP | SHapley Additive exPlanations |

| TN | True Negative |

| TP | True Positive |

| USMLE | United States Medical Licensing Examination |

| XAI | Explainable Artificial Intelligence |

References

- Poewe, W.; Seppi, K.; Tanner, C.M.; Halliday, G.M.; Brundin, P.; Volkmann, J.; Schrag, A.E.; Lang, A.E. Parkinson disease. Nat. Rev. Dis. Primers 2017, 3, 17013.

- Acosta, L.M.Y. Creativity and neurological disease. Curr. Neurol. Neurosci. Rep. 2014, 14, 464.

- Shimura, H.; Tanaka, R.; Urabe, T.; Tanaka, S.; Hattori, N. Art and Parkinson’s disease: A dramatic change in an artist’s style as an initial symptom. J. Neurol. 2012, 259, 879–881.

- Baloyannis, S.J. Painting as an open window to brain disorders. J. Neurol. Stroke 2020, 10, 101–103.

- Kleiner-Fisman, G.; Black, S.E.; Lang, A.E. Neurodegenerative Disease and the Evolution of Art: The Effects of Presumed Corticobasal Degeneration in a Professional Artist. Mov. Disord. 2003, 18, 294–302.

- Mendez, M.F. Dementia as a window to the neurology of art. Med. Hypotheses 2004, 63, 1–7.

- Mell, J.C.; Howard, S.M.; Miller, B.L. Art and the brain. Neurology 2003, 60, 1707–1710.

- Bogousslavsky, J. Creativity in Painting and Style in Brain-damaged Artists. Int. Rev. Neurobiol. 2006, 74, 135–146.

- Bogousslavsky, J. Artistic creativity, style and brain disorders. Eur. Neurol. 2005, 54, 103–111.

- Piechowski-Jozwiak, B.; Bogousslavsky, J. Neurological diseases in famous painters. Prog. Brain Res. 2013, 203, 255–275.

- Piechowski-Jozwiak, B.; Bogousslavsky, J. Dementia and Change of Style: Willem de Kooning-Obliteration of Disease Patterns? S. Karger AG: Basel, Switzerland, 2018.

- Piechowski-Jozwiak, B.; Bogousslavsky, J. Abstract Expressionists and Brain Disease; S. Karger AG: Basel, Switzerland, 2018.

- Solso, R.L. Brain Activities in a Skilled versus a Novice Artist: An fMRI Study. Leonardo 2001, 34, 31–34.

- Pelowski, M.; Spee, B.T.M.; Arato, J.; Dörflinger, F.; Ishizu, T.; Richard, A. Can we really ‘read’ art to see the changing brain? A review and empirical assessment of clinical case reports and published artworks for systematic evidence of quality and style changes linked to damage or neurodegenerative disease. Phys. Life Rev. 2022, 43, 32–95.

- Bäzner, H.; Riederer, C. Dystonia in Arts and Artists. In Treatment of Dystonia; Cambridge University Press: Cambridge, UK, 2018; pp. 92–96.

- Kotsavasiloglou, C.; Kostikis, N.; Hristu-Varsakelis, D.; Arnaoutoglou, M. Machine learning-based classification of simple drawing movements in Parkinson’s disease. Biomed. Signal Process. Control 2017, 31, 174–180.

- Zham, P.; Arjunan, S.P.; Raghav, S.; Kumar, D.K. Efficacy of Guided Spiral Drawing in the Classification of Parkinson’s Disease. IEEE J. Biomed. Health Inform. 2018, 22, 1648–1652.

- Gil-Martín, M.; Montero, J.M.; San-Segundo, R. Parkinson’s disease detection from drawing movements using convolutional neural networks. Electronics 2019, 8, 907.

- Impedovo, D.; Pirlo, G. Online Handwriting Analysis for the Assessment of Alzheimer’s Disease and Parkinson’s Disease: Overview and Experimental Investigation. Front. Pattern Recognit. Artif. Intell. 2019, 5, 113–128.

- Impedovo, D.; Pirlo, G. Dynamic Handwriting Analysis for the Assessment of Neurodegenerative Diseases: A Pattern Recognition Perspective. IEEE Rev. Biomed. Eng. 2018, 12, 209–220.

- Doshi-Velez, F.; Kim, B. Towards A Rigorous Science of Interpretable Machine Learning. arXiv 2017, arXiv:1702.08608. Available online: https://arxiv.org/abs/1702.08608 (accessed on 16 September 2025).

- Dorsey, E.R.; Elbaz, A.; Nichols, E.; Abbasi, N.; Abd-Allah, F.; Abdelalim, A.; Adsuar, J.C.; Ansha, M.G.; Brayne, C.; Choi, J.-Y.J.; et al. Global, regional, and national burden of Parkinson’s disease, 1990–2016: A systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 2018, 17, 939–953.

- Koletis, A.; Bitilis, P.; Zafeiropoulos, N.; Kotis, K. Can Semantics Uncover Hidden Relations between Neurodegenerative Diseases and Artistic Behaviors? Appl. Sci. 2023, 13, 4287.

- Chandra, J.; Muthupalaniappan, S.; Shang, Z.; Deng, R.; Lin, R.; Tolkova, I.; Butts, D.; Sul, D.; Marzouk, S.; Bose, S.; et al. Screening of parkinson’s disease using geometric features extracted from spiral drawings. Brain Sci. 2021, 11, 1297.

- Mei, J.; Desrosiers, C.; Frasnelli, J. Machine Learning for the Diagnosis of Parkinson’s Disease: A Review of Literature. Front. Aging Neurosci. 2021, 13, 633752.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. Available online: http://scikit-learn.sourceforge.net (accessed on 16 September 2025).

- Sallam, M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare 2023, 11, 887.

- Bobillo, F.; Straccia, U. Fuzzy ontology representation using OWL 2. Int. J. Approx. Reason. 2011, 52, 1073–1094.

- Mitra, P.; Wiederhold, G. An Ontology-Composition Algebra. In Handbook on Ontologies; Staab, S., Studer, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 93–113.

- Saito, T.; Rehmsmeier, M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 2015, 10, e0118432.

- Inzelberg, R. The awakening of artistic creativity and Parkinson’s disease. Behav. Neurosci. 2013, 127, 256–261.

- Canesi, M.; Rusconi, M.L.; Moroni, F.; Ranghetti, A.; Cereda, E.; Pezzoli, G. Creative Thinking, Professional Artists, and Parkinson’s Disease. J. Park. Dis. 2016, 6, 239–246.