Abstract

Machine learning in natural language processing (NLP) analyzes datasets to make future predictions, but developing accurate models requires large, high-quality, and balanced datasets. However, collecting such datasets, especially for low-resource languages, is time-consuming and costly. As a solution, data augmentation can be used to increase the dataset size by generating synthetic samples from existing data. This study examines the effect of translation-based data augmentation on sentiment analysis using small datasets in three diverse languages: French, German, and Japanese. We use two neural machine translation (NMT) services—Google Translate and DeepL—to generate augmented datasets through intermediate language translation. Sentiment analysis models based on Support Vector Machine (SVM) are trained on both original and augmented datasets and evaluated using accuracy, precision, recall, and F1 score. Our results demonstrate that translation augmentation significantly enhances model performance in both French and Japanese. For example, using Google Translate, model accuracy improved from 62.50% to 83.55% in Japanese (+21.05%) and from 87.66% to 90.26% in French (+2.6%). In contrast, the German dataset showed a minor improvement or decline, depending on the translator used. Google-based augmentation generally outperformed DeepL, which yielded smaller or negative gains. To evaluate cross-lingual generalization, models trained on one language were tested on datasets in the other two. Notably, a model trained on augmented German data improved its accuracy on French test data from 81.17% to 85.71% and on Japanese test data from 71.71% to 79.61%. Similarly, a model trained on augmented Japanese data improved accuracy on German test data by up to 3.4%. These findings highlight that translation-based augmentation can enhance sentiment classification and cross-language adaptability, particularly in low-resource and multilingual NLP settings.

1. Introduction

In machine learning, large, high-quality datasets are crucial for training and deploying effective models. Low-quality datasets, which are small or have an imbalanced class distribution, can degrade the performance and reliability of a model by increasing the risk of poor generalization and of unwanted bias toward specific classes. However, obtaining a sizable, high-quality, or balanced dataset for classification is challenging because data collection is costly and time-consuming. To address these challenges, data augmentation has been proposed as a solution.

In data augmentation, the training data is artificially increased [1] or, alternatively, the proportion of a minority class in an imbalanced dataset is increased [2]. However, the data must be inflated through reasonable modifications. For instance, if an image recognition model were trained on a dataset that only includes images of humans facing right, the model would only recognize humans who are facing right. To solve this issue, the dataset can be augmented with images of humans facing different directions by cropping and rotating the existing images, and these new images can then be used to train the model to recognize images of humans facing many directions, which is a reasonable way to inflate the dataset. For text-based datasets, data can be augmented using several methods, for example, changing the order of the words in texts, replacing words with synonyms, translating texts, or summarizing texts.

The importance of a large training dataset should not be underestimated in machine learning. Using a larger and higher-quality training dataset can lead to a more reliable machine learning model, which can make more accurate predictions or classifications. On the other hand, using a small training dataset makes it difficult to train a reliable machine learning model [3] because small data samples are insufficient to represent all possible data values for regression or classification tasks. Therefore, a decision made by a model trained on a smaller dataset comes with significant uncertainties [4]. Moreover, using a small dataset increases the risk of overfitting the model, which, in turn, leads to poor generalization capabilities. An overfitted model will have high accuracy when tested against the training data but will exhibit low accuracy when tested against test data or new data [5]. Therefore, it is crucial to utilize large training datasets to develop reliable and accurate machine learning models.

In a classification task, a balanced dataset distribution, in which each class has a roughly equal amount of data, is crucial. A balanced distribution leads to a model that is not biased toward any particular class. However, obtaining a balanced dataset can be challenging, as it is difficult to find samples for specific classes, which can result in imbalanced datasets. Imbalanced datasets pose a challenge for classification algorithms, since minority classes in imbalanced datasets tend to have higher misclassification costs than the majority classes [6], which means that models trained using imbalanced datasets frequently show bias toward majority classes while performing poorly on minority classes [7]. This results in a lower-performing model.

Although a challenging problem, finding high-quality datasets in sentiment analysis and ensuring a large and balanced distribution of different sentiment classes within the dataset is crucial for accurate and unbiased analysis. For example, in the business market, customer sentiment can significantly affect other customers’ intentions [8]. Therefore, it is essential to understand the customers’ opinions to develop the market. However, with either a small or unbalanced dataset, it can be challenging to understand the customer due to a lack of information. As a result, a dataset used to train a sentiment analysis model must be of high quality [9].

In this study, the effect of data augmentation is investigated to determine its impact on the performance of sentiment analysis models on three distinct language datasets: French, German, and Japanese. Previous scholars [2,10,11,12] in the data augmentation field primarily focused on sentiment analysis within single languages—mostly in English—and overlooked sentiment analysis across several languages. Sentiment analysis across various languages should be considered, as each language has its own unique structures, alphabet, and grammar. Additionally, it is relatively easy to find text-based datasets in English, since English is one of the most widely used languages. On the other hand, it can be challenging to obtain datasets in languages that are not commonly used. Therefore, data augmentation in various languages is crucial to solving this issue.

Translation-based augmentation was employed to expand the original French, German, and Japanese datasets by translating them into English through various intermediate languages, aiming to increase linguistic diversity. While Abonizio et al. [13] evaluated several text augmentation techniques, including back-translation, our study builds on this by systematically comparing Google Translate and DeepL for multilingual sentiment analysis across French, German, and Japanese datasets.

To assess generalization, models trained on one language were tested on datasets from other languages. This cross-lingual testing evaluates whether learned patterns can transfer across linguistic boundaries, which is especially helpful when labeled data is limited. Real-world uses include multilingual sentiment analysis in global business and market research.

This study aims to answer the following research questions:

- RQ1: Does translation-based data augmentation improve sentiment classification in low-resource languages (French, German, and Japanese)?

- RQ2: Can sentiment models trained on augmented data generalize across languages?

We propose the following hypotheses:

Hypothesis 1.

Translation-based augmentation will enhance sentiment classification performance in all three languages.

Hypothesis 2.

Models trained on augmented data will generalize across languages, but the level of improvement will vary due to linguistic and semantic differences.

Key contributions of this study include the following:

- A systematic comparison of Google Translate and DeepL for translation-based augmentation in three typologically diverse languages;

- A quantitative evaluation using Support Vector Machine (SVM) classifiers to measure classification performance and cross-lingual generalization;

- Insights into how language structure and translation quality influence augmentation effectiveness.

The remainder of this paper is organized as follows: Section 2 reviews related work, Section 3 describes the methodology and translation-based augmentation process, Section 4 presents experimental results and analysis, Section 5 concludes the study, and Section 6 outlines limitations and future research directions.

2. Related Work

This section reviews prior work on data augmentation techniques and their performance in natural language processing. Data augmentation is a machine learning technique designed for the synthetic generation of training datasets, which means that the original training dataset is artificially inflated through reasonable modifications with label-preserving transformations [1,14]. Bottou et al. [15] developed the first application of the data augmentation technique for handwritten digit classification in 1998. This technique increased the accuracy of a model from 68% to 82%, providing the first evidence that a larger training dataset leads to improved performance in machine learning algorithms and better results for a handwriting recognition system.

Following research on data augmentation for handwritten digit classification, the data augmentation technique has been applied in various areas, including image recognition, text mining, and numerous other domains. The data augmentation technique has been used successfully in image processing tasks for several years now [16,17,18] for object recognition, object detection, medical image analysis, and other applications. Conversely, data augmentation techniques in natural language processing (NLP) have yet to be successfully adopted [14]. However, in recent years, studies on data augmentation techniques in natural language processing have increased. The use of the method has grown for sentiment analysis models in market research, product development, political analysis, and other areas.

2.1. Data Augmentation Techniques

In natural language processing, data augmentation has been used to improve the quality of training datasets, to address problems caused by poor datasets, and to improve the model’s performance. Over the years, various methods have been proposed to augment datasets, including paraphrasing, transformation, and generation [13]. Paraphrasing and transformation techniques are among the most common approaches. The transformation augmentation technique transforms a sentence by a simple substitution operation; synonym replacement is one of the approaches used to do so [19]. This approach generates new sentences or text by replacing words with their synonyms [20]. During processing, words that are not stop words are replaced with a synonym selected at random from a list of synonyms [21]. Feng et al. [20] proposed a tailored text augmentation algorithm to improve the synonym replacement augmentation technique. It consists of probabilistic synonym replacement and zero masking of irrelevant words. Probabilistic synonym replacement replaces a word based on a probability that considers the relevance and discriminative power of the word with respect to emotion. Zero masking replaces irrelevant words with zero vectors instead of synonyms. For instance, in the sentence “the food is tasty”, the word “food”, which is an irrelevant word, will be replaced with a zero vector. The researchers’ goal was to generate effective synthetic sentences and balance the distribution of irrelevant words.
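
As a minimal sketch of plain synonym replacement (not the tailored algorithm of Feng et al. [20]), the following Python snippet replaces up to n non-stop words with a randomly chosen synonym. The stop-word set, the synonym dictionary, and the function name `synonym_replace` are illustrative assumptions; a practical system would draw synonyms from a lexical resource.

```python
import random

# Tiny illustrative resources (assumptions, not taken from the cited studies).
STOP_WORDS = {"the", "is", "a", "an", "and", "of", "to"}
SYNONYMS = {
    "tasty": ["delicious", "flavorful"],
    "food": ["meal", "dish"],
    "good": ["nice", "fine"],
}


def synonym_replace(sentence, n, rng=None):
    """Replace up to n non-stop words that have a known synonym."""
    rng = rng or random.Random()
    words = sentence.split()
    # Only words that are not stop words and have synonyms are candidates.
    candidates = [
        i for i, w in enumerate(words)
        if w.lower() not in STOP_WORDS and w.lower() in SYNONYMS
    ]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        words[i] = rng.choice(SYNONYMS[words[i].lower()])
    return " ".join(words)


augmented = synonym_replace("the food is tasty", n=1, rng=random.Random(0))
print(augmented)
```

Passing a seeded `random.Random` instance keeps the augmentation reproducible, which is useful when comparing models trained on the same augmented set.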

Pang et al. [2] proposed a new strategy in the synonym replacement augmentation technique. It is a balancing strategy based on word replacement for text sentiment classification. It involves two stages: oversampling and noise modification. In the oversampling stage, new samples are generated by replacing words in the representative data. This generates new samples in the minority class by replacing the feature words from the majority class with their antonyms and the feature words from the minority class with their synonyms. The noise modification stage detects any noisy data with incorrect sentiment polarity and replaces it with correct words, thereby relatively cleaning the dataset.

The paraphrasing technique is an augmentation technique that manipulates sentences by rephrasing, and translation is one such approach [13]. There are various translation methods, including back-translation, forward translation, multilingual translation, and random language translation, among others. Back-translation is a commonly used translation augmentation technique [13,14,22]. This technique involves translating from one language to another and then translating back to the original language. In this study, instead of back-translation, a different translation augmentation technique is used, which generates new data by translating from the original language to the target language through several intermediate languages. When augmenting datasets with the translation technique, it is crucial to increase the dataset’s diversity; for example, the text can be translated into several intermediate languages and back to the original language [23].

Abonizio et al. [13] explored several data augmentation techniques, including easy data augmentation (EDA), back-translation (BT), pre-trained data augmenter (PREDATOR), and BART, to improve sentiment analysis models using various machine learning approaches on two types of datasets: imbalanced and small datasets. These four techniques had different advantages and disadvantages. For advantages, PREDATOR and BART had a high possibility of improving the model’s performance, EDA is not an expensive technique to use, and BT improves the dataset quality stably. However, EDA might reduce the model’s performance, and the other three techniques are expensive methods or require costly technology. For instance, BT requires expensive translation APIs to translate text with quality. Despite these disadvantages, these techniques help improve the model’s performance.

2.2. Sentiment Analysis

Sentiment analysis is a text and opinion mining technique that extracts information from opinions and expressions in text format [24]. It is used in various applications, such as market research, product development, political analysis, and other diverse areas, to understand and evaluate the private states of individuals towards multiple aspects of a subject [25]. The main goal of sentiment analysis is to predict sentiment polarity by analyzing the words in sentences or documents [25]. Sentences or documents can be classified into two principal classes: subjective and objective. Subjective classes contain personal information, which includes opinions, beliefs, judgments, and views about specific subjects, whereas objective classes contain factual information, including facts and evidence related to particular subjects [26]. Sentiment can be divided into two types: positive and negative emotions. In a text, positive emotion can be described as “happy”, “joy”, “beautiful”, etc., while negative emotion can be described as “hate”, “anger”, and “sad”, among others [19]. However, in the real world, human expressions are complex, and it is challenging to categorize them simply as positive or negative sentiments. In recent years, several studies [24,25,27,28,29] have examined sentiment analysis to extract valuable insights from human expressions, for example, from customer reviews.

In sentiment analysis, the dataset undergoes data preprocessing and data labeling steps before training and evaluating the sentiment analysis model. Data preprocessing involves three stages: removing any special characters or punctuation, removing null data, and transforming words to normal form or converting words to lowercase [19], depending on the language’s characteristics. These stages are crucial steps in natural language processing, as they significantly impact the performance of the analysis. Data labeling is a vital process in sentiment analysis used to categorize datasets into positive and negative polarities. Nguyen et al. [19] used a review dataset rated on a scale of 1 to 10, where reviews rated below 5 were labeled as negative and those rated above 5 were labeled as positive. After preprocessing, the dataset was prepared for training and evaluation of the model.
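
The three preprocessing stages described above can be sketched as follows. This is only an illustration under stated assumptions: the function name `clean_reviews` is hypothetical, the regular expressions are one possible way to strip punctuation, and conversion to a normal form (for example, lemmatization) is left out because it depends on the language.

```python
import re


def clean_reviews(texts):
    """Drop null or empty reviews, lowercase, and remove punctuation."""
    cleaned = []
    for text in texts:
        # Stage 1: skip null or whitespace-only entries.
        if text is None or not text.strip():
            continue
        # Stage 2: lowercase (has no effect on scripts without letter case).
        text = text.lower()
        # Stage 3: replace punctuation and special characters with spaces;
        # \w is Unicode-aware, so accented letters and kana/kanji are kept.
        text = re.sub(r"[^\w\s]", " ", text)
        text = re.sub(r"\s+", " ", text).strip()
        if text:
            cleaned.append(text)
    return cleaned


print(clean_reviews(["Great product!!!", None, "   ", "Très bien, merci."]))
```

In a labeled dataset, the labels of dropped reviews would have to be removed in parallel so that texts and labels stay aligned.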

Various machine learning models can be used to analyze sentiment. These include Decision Trees, Naive Bayes, Logistic Regression, SVM, and Long Short-Term Memory (LSTM) networks. Gondhi et al. [30] used an LSTM with the word2vec embedding technique to analyze product reviews on the Amazon shopping website, and LSTM showed F1 scores of 93%; as the dataset was unbalanced, the F1 score was used to measure the performance of the model. Nguyen et al. [19] explored four machine learning models—Decision Tree, Naive Bayes, Logistic Regression, and SVM—to analyze customer reviews of online food service. Compared to other models, SVM showed the highest accuracy of 91.5%, which was 9% higher than that of Naive Bayes [19]. In this study, the SVM machine learning model was used.

Even with high accuracy in sentiment analysis, one remaining challenge is the machine learning model’s dependence on the quality of the dataset. The datasets were either imbalanced or small. Thus, researchers had to spend most of their time collecting data and finding a technique to improve its quality. Few researchers have utilized data augmentation to address these limitations by enhancing dataset quality.

2.3. Data Augmentation Techniques in Sentiment Analysis

The data augmentation technique initially saw little use in natural language processing [14]. However, the method has recently been applied in sentiment analysis to deal with low-quality datasets [2,11,12,13,20,22]. The increase in the use of data augmentation suggests that the benefits of this technique have been recognized, both in improving a machine learning model’s performance and in addressing the challenge of data sparsity. Research has been conducted using various data augmentation techniques in the field of natural language processing.

EDA, BT, PREDATOR, and BART generally improved the performance of machine learning models in predicting sentiment, especially for back-translation-boosted LSTM, GRU, CNN, RF, ERNIE, and BERT models, across both imbalanced and small datasets [13]. Notably, PREDATOR significantly enhanced the performance of LSTM. The tailored text augmentation technique proposed by Feng et al. [20] was applied to COVID-19 sentiment analysis, demonstrating effectiveness in improving the model’s performance and generalization capability. In addition, the word-replacement balancing strategy proposed by Pang et al. [2] improved the model’s performance by 3% compared to a raw, imbalanced dataset.

Catelli et al. [31] investigated cross-lingual transfer learning for sentiment analysis in low-resource languages, using Italian TripAdvisor reviews as a case study. The authors compared Italian-specific BERT models (BERTBASE Italian and BERTBASE Italian XXL) with multilingual BERT (mBERT) fine-tuned on both monolingual and mixed-language datasets, including high-resource English corpora. Their experiments demonstrated that, while language-specific BERT variants generally outperform mBERT when fine-tuned solely on the target language, cross-lingual fine-tuning of mBERT with both English and Italian data significantly improved performance, surpassing Italian-specific baselines. They further applied explainable AI techniques (SHAP) to interpret model predictions. These results highlight the effectiveness of transfer learning in leveraging high-resource languages to boost sentiment classification accuracy in low-resource settings, supporting its consideration alongside translation-based augmentation strategies.

Enhancing a low-quality dataset with data augmentation techniques improved the prediction accuracy of the models. However, it is essential to consider how and when to apply data augmentation, since applying it to every dataset may not improve the model’s performance. Sugiyama et al. [22] employed back-and-forth translation augmentation to increase sample sizes from original datasets of varying sizes, thereby evaluating performance on small and large datasets. Overall, the technique improved the performance of movie review sentiment analysis. However, as the original dataset size gets larger, the difference in total error rate between the augmented and original models decreases. This means that the benefit of augmentation shrinks as the original dataset grows. This result shows that the data augmentation technique does not work equally well on every dataset. Sometimes, it might even produce poor results when applied to a high-quality dataset.

2.4. Evaluation

In the NLP field, evaluating and comparing machine learning models is essential to determine their effectiveness. Commonly used evaluation metrics include accuracy, F score, precision, and recall [11,32]. Accuracy measures the model’s ability to classify data correctly [11]. Additionally, the Total Error Rate (TER) is another metric used to evaluate the performance of models. It is the complement of accuracy (i.e., one minus the accuracy), which allows for easy comparison of the performance of different models [10]. By accounting for the error rate, TER helps to differentiate model performance in sentiment analysis evaluation.
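
To make the relationship between these metrics concrete, the following sketch computes them for a binary task from the counts of true and false positives and negatives. The function name `binary_metrics` and the toy labels are illustrative assumptions; the formulas are the standard definitions, with TER taken as one minus accuracy as described above.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, F1, and TER for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "ter": 1.0 - accuracy,  # Total Error Rate = 1 - accuracy
    }


# Toy example: 1 = positive review, 0 = negative review.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
print(binary_metrics(y_true, y_pred))
```

In this toy example there are three true positives, three true negatives, one false positive, and one false negative, so accuracy, precision, recall, and F1 all equal 0.75 and TER equals 0.25.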

2.5. Machine Translation and Lexicon Resources

Although translation-based augmentation has shown significant improvements over models trained on the original datasets, previous researchers faced uncertainty about the quality of machine translation in the translation process. Machine translation is a convenient tool for translating one language into another [14]. Two of the most widely used machine translators are Google Translate and DeepL Translate. Google Translate is among the most used and most easily accessed machine translation tools. It changed its translation algorithm in 2017 from statistical machine translation to neural machine translation [33]. DeepL Translate was launched in 2017 with neural machine translation and showed better translation performance than expected [34]. Neural machine translation systems excel in maintaining word order, accurately inserting function words, enhancing morphological agreement, making more informed lexical choices, and improving translation fluency [34]. After the algorithm change, Google Translate translated discharge instructions into Spanish and Chinese with 92% and 81% accuracy, respectively, for Spanish- and Chinese-speaking patients; the new algorithm also produced fewer harmful inaccuracies than the old one [33]. DeepL Translate also performed effectively when translating German literature into English [35]. The Google Translate and DeepL Translate APIs are effective and can translate not only short phrases but also long passages [35]. DeepL is particularly strong for European languages [35]. In this study, both machine translation tools are used to assess the model’s performance in each language. Although they may not translate text with perfect grammar, they are capable of translating sentences reliably with high quality and safety [35,36].

Lexicon-based resources have also played a crucial role in sentiment analysis, especially for low-resource languages. The Affective Norms for English Words (ANEW) dataset, introduced by Bradley and Lang (1999), provides normative emotional ratings (valence, arousal, and dominance) for a selected set of affective words in English. This lexicon has been adapted for other languages, such as the ANGST dataset for German [37,38], and has been shown to enhance sentiment classification by focusing on words that carry emotional meaning rather than treating all tokens equally. These resources can be used to complement data-driven methods, particularly when combined with machine translation or augmentation techniques, by helping to preserve or highlight affective terms during transformation.

3. Methodology

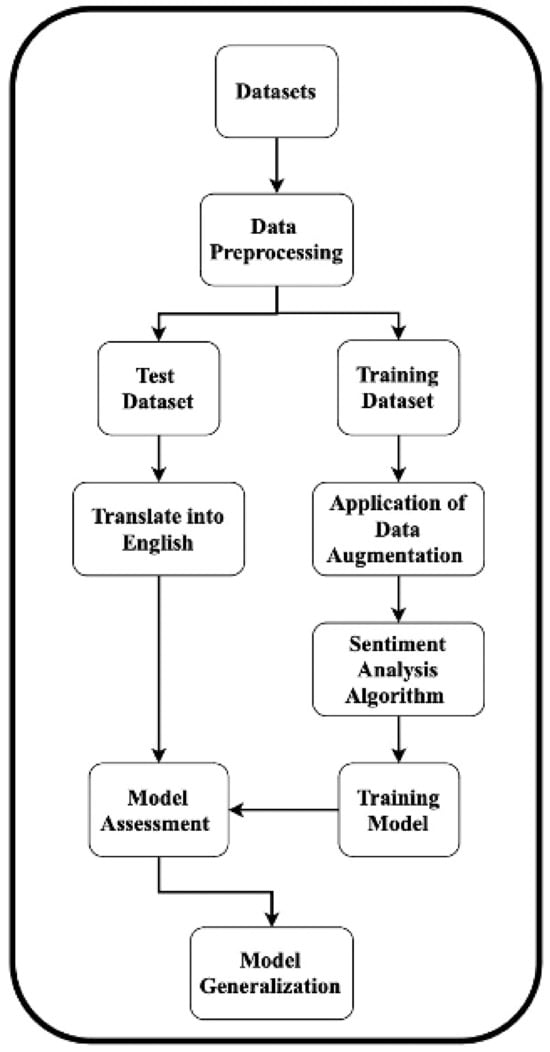

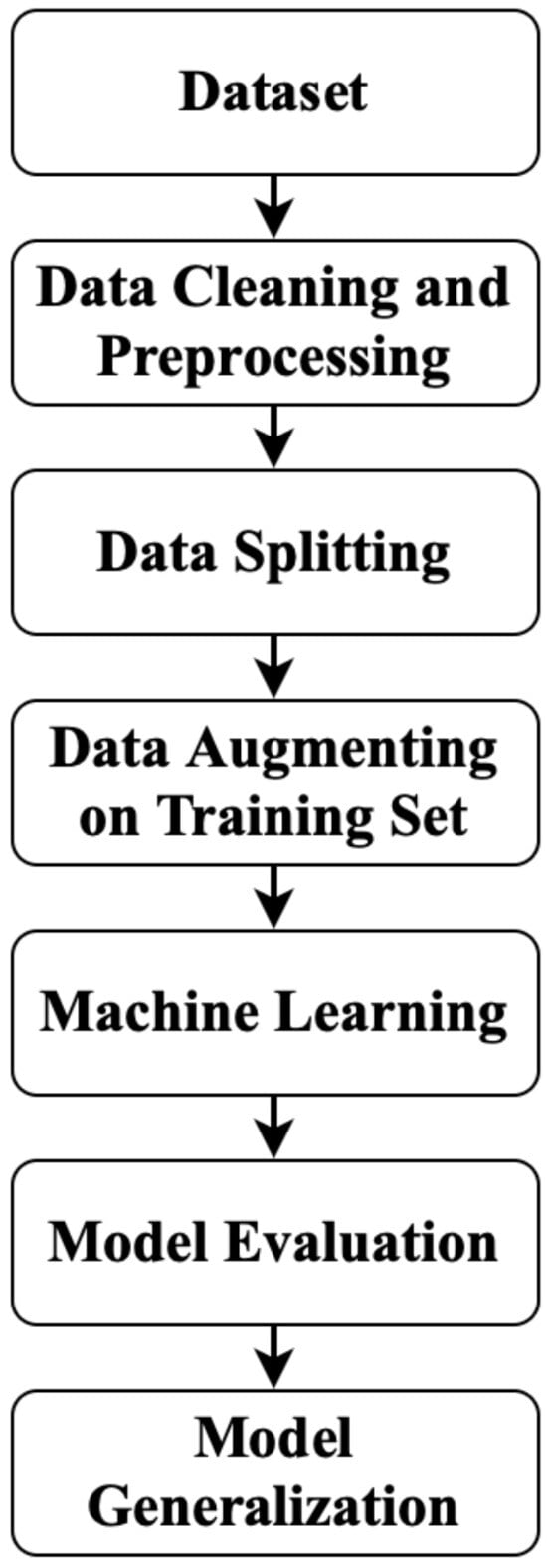

The methodology proceeds from dataset preparation to the analysis and generalization of the models, as shown in Figure 1. After dataset preparation, the datasets underwent preprocessing, including data cleaning, feature selection, and stopword removal. For compatibility reasons, the English stopword list from scikit-learn was applied to all datasets, including German and Japanese. Although this may not remove all non-informative words in non-English datasets, preliminary testing showed minimal performance differences when stopword removal was omitted entirely. Following this process, the sentiment analysis model was trained using the SVM machine learning algorithm, and its performance was evaluated using the evaluation metrics. Lastly, the model’s generalization capabilities were assessed.

Figure 1. Overall pipeline.

3.1. Description of Datasets

In this study, online shopping review datasets were chosen to implement sentiment analysis models with data augmentation techniques. The datasets were collected from the Multilingual Amazon Reviews Corpus, Amazon AWS. They include Amazon product reviews from the USA, Japan, Germany, France, Spain, and China written in English, Japanese, German, French, Spanish, and Chinese languages between 1 November 2015 and 1 November 2019. The dataset includes several features: reviewer ID, review text, review title, rating (on a scale of 1 to 5), product ID, and product category. There are numerous reviews in several product categories. For this study, reviews in the ‘beauty’ product category were selected. It is essential to choose a specific product category, as customer reviews can vary depending on the product type. For instance, in the technology category, a review might be “This is very fast and easy to set up”, whereas in the shoe category, a review might be “They are very comfortable when worn”. Thus, focusing on a specific product category allows us to gain unique insights and expressions from customer reviews.

Several language datasets, i.e., French, German, and Japanese, were used to implement the sentiment analysis model with data augmentation techniques. These languages were chosen due to their different language systems. French is part of the Romance language family, and Latin had a direct influence on it. German is from the Germanic language family, which includes English [6]. Unlike other languages, Japanese is an isolated language that does not belong to any language family [6]. Japanese uses three writing scripts—Kanji, Hiragana, and Katakana—while German and French use the Latin alphabet like English [6]. These three languages use different word orders. Japanese uses the subject–object–verb word order, and German and French use the subject–verb–object word order, more like English [6]. Furthermore, these languages differ in various aspects, such as culture, nouns, and grammar.

All reviews were rated on a scale of 1 to 5. To examine the distribution of positive and negative reviews, a binary variable was created. A review with a rating above three was labeled as positive, and a review with a rating below three was labeled as negative. Ratings of three were considered neutral and were removed because a neutral rating expresses neither positive nor negative sentiment.
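
The labeling rule can be sketched as follows. The function names and the toy reviews are hypothetical and only illustrate the rule stated above: ratings above three become positive (1), ratings below three become negative (0), and ratings of exactly three are dropped.

```python
def label_review(rating):
    """Map a 1-5 star rating to a binary label, or None for neutral."""
    if rating > 3:
        return 1  # positive
    if rating < 3:
        return 0  # negative
    return None   # neutral (rating == 3), removed from the dataset


def build_binary_dataset(reviews):
    """Keep only non-neutral reviews as (text, label) pairs."""
    labeled = []
    for text, rating in reviews:
        label = label_review(rating)
        if label is not None:
            labeled.append((text, label))
    return labeled


# Toy (text, rating) pairs; real reviews come from the review corpus.
sample = [
    ("Lovely scent, lasts all day", 5),
    ("It is okay", 3),
    ("Dried out my skin", 1),
    ("Good value", 4),
    ("Broke after a week", 2),
]
print(build_binary_dataset(sample))
```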

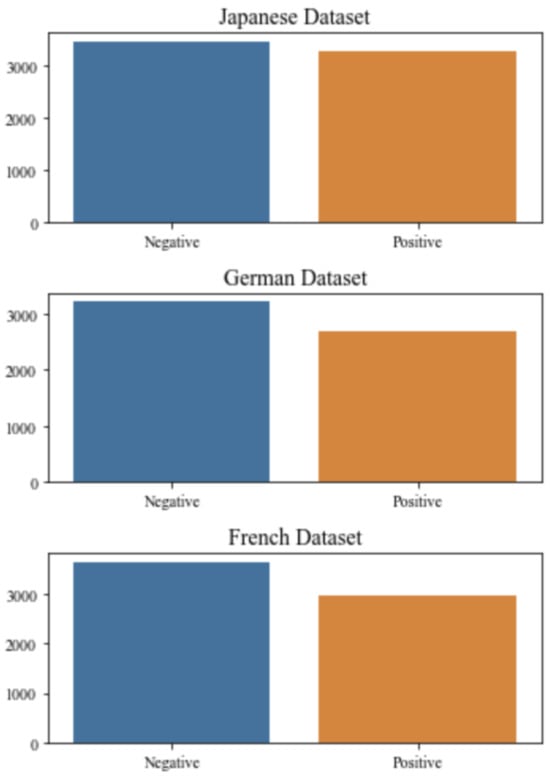

Figure 2 provides a visual representation of the distribution of positive and negative reviews within the three datasets. Although the numbers of positive and negative reviews differ slightly in each dataset, the distributions are balanced. To support this visual representation, Table 1 provides the number of positive and negative reviews in each of the three datasets. Since the datasets are small, it will be challenging for the sentiment analysis model to capture the critical characteristics of these datasets and, in turn, to achieve optimal and accurate prediction performance.

Figure 2. Sentiment barplot.

Table 1. The number of positive and negative samples in the three datasets.

The raw datasets were much larger and skewed: they included 679,077 German, 262,397 Japanese, and 254,044 French reviews. They were first filtered to create balanced subsets of positive and negative reviews suitable for binary sentiment classification, and translation-based data augmentation was then applied using Google Translate and DeepL. This sequence ensured that augmentation was performed only on balanced datasets, preserving the 1:1 ratio of positive to negative reviews, so that each final augmented set contained roughly equal numbers of positive and negative samples. The purpose of augmentation in this work was not just to enlarge the dataset but to increase data diversity while preserving balance, thereby boosting model robustness and generalization. All text was converted to lowercase, and punctuation was removed. The text was then tokenized using CountVectorizer from scikit-learn. For the German dataset, stopwords were removed using the English stopword list in CountVectorizer (stop_words='english'). No stemming or lemmatization was applied in order to preserve the original linguistic features of each language. Finally, the cleaned text was transformed into feature vectors for model training.
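
A minimal sketch of the vectorization and training step, assuming scikit-learn is installed, is given below. Only the use of CountVectorizer with stop_words='english' and of an SVM classifier comes from the description above; the linear kernel, the default hyperparameters, and the toy English reviews are assumptions made for illustration and are not the study's configuration or data.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Toy training data (assumption): 1 = positive, 0 = negative.
train_texts = [
    "Great product, love it",
    "Excellent quality, very happy",
    "Works great, highly recommend",
    "Love this cream, smells wonderful",
    "Terrible product, waste of money",
    "Bad quality, broke quickly",
    "Awful smell, very disappointed",
    "Poor packaging, would not recommend",
]
train_labels = [1, 1, 1, 1, 0, 0, 0, 0]

# CountVectorizer lowercases text, drops punctuation through its default
# token pattern, and removes English stop words; no stemming is applied.
# The linear kernel is an assumption; the kernel is not specified above.
model = make_pipeline(
    CountVectorizer(lowercase=True, stop_words="english"),
    SVC(kernel="linear"),
)
model.fit(train_texts, train_labels)

test_texts = ["Great quality, love it", "Terrible, very disappointed"]
predictions = model.predict(test_texts)
print(list(predictions))
```

Wrapping both steps in one pipeline ensures that the vocabulary is learned from the training texts only and is then reused unchanged for test texts, including test sets that originate from another language.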

3.2. Translation Data Augmentation Technique

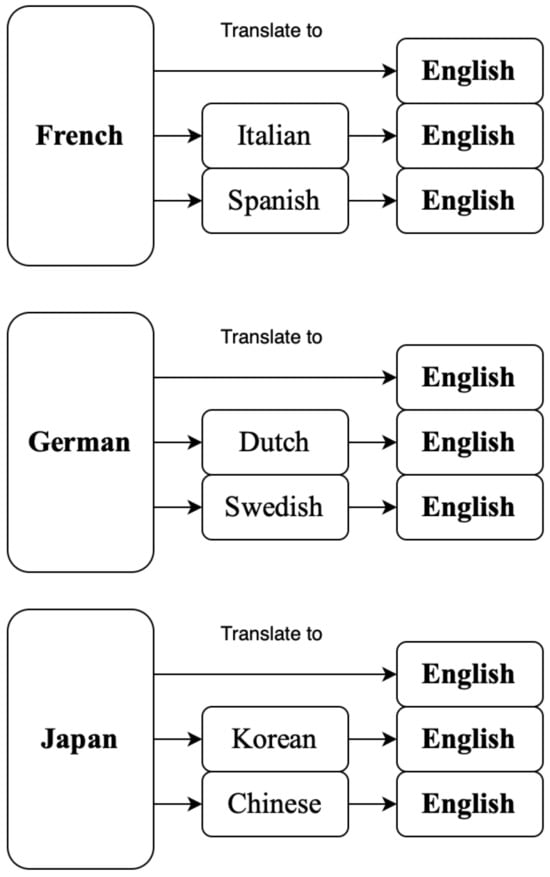

In this study, a translation augmentation technique was used to increase the training dataset by translating it into another language. While inflating the dataset, it was essential to increase the diversity of the translation. Thus, instead of translating French, German, and Japanese directly into English, they were translated through distinct intermediate languages into English, as shown in Figure 3.

Figure 3. Translation process.

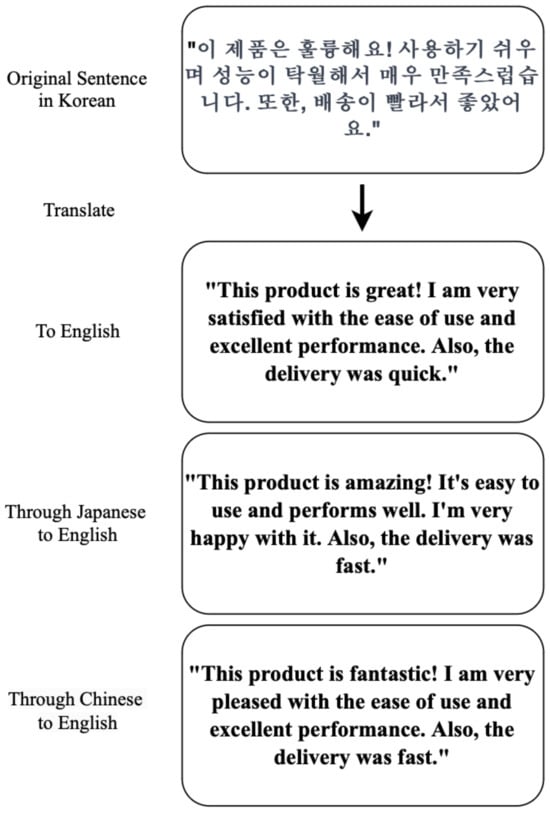

The selection of intermediate languages was based on similarities in grammar, alphabet, culture, and other aspects between the original and intermediate languages. This approach aimed to create diverse sentence structures and vocabulary in the resulting English translations. The intermediate languages used for each source language are listed in Table 2. Figure 4 demonstrates the translation process with a Korean product review as an example. The original sentence is translated directly into English, as well as through the intermediate languages Japanese and Chinese, before arriving at the final English version. Each route produces a semantically equivalent sentence but with differences in vocabulary and structure, thereby increasing linguistic diversity in the augmented dataset.

Table 2.

Intermediate languages used for translation-based augmentation.

Figure 4.

Example of translation process.

These languages were selected for their typological and cultural proximity to the original language, with the aim of enhancing lexical and syntactic diversity in the augmented English outputs. The review in Figure 4 was translated along three distinct paths: a direct translation to English; a translation from Korean to Japanese and then to English; and a translation from Korean to Chinese and then to English. These paths generated English sentences that convey the same meaning but differ in vocabulary and sentence structure, resulting in a diversity of expressions in the target language.
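The translation paths described above can be sketched in code. This is a hedged illustration only: `augment_via_pivots`, the `Translator` signature, and the stand-in `fake_translate` are hypothetical names introduced here, and no real Google Translate or DeepL API call is shown.

```python
from typing import Callable, Iterable, List

# Hypothetical signature: translate(text, source_lang, target_lang) -> str.
Translator = Callable[[str, str, str], str]


def augment_via_pivots(
    text: str,
    source_lang: str,
    pivot_langs: Iterable[str],
    translate: Translator,
    target_lang: str = "en",
) -> List[str]:
    """Return the direct translation plus one translation per pivot language."""
    variants = [translate(text, source_lang, target_lang)]
    for pivot in pivot_langs:
        intermediate = translate(text, source_lang, pivot)
        variants.append(translate(intermediate, pivot, target_lang))
    # Drop exact duplicates while keeping the original order.
    return list(dict.fromkeys(variants))


def fake_translate(text: str, source_lang: str, target_lang: str) -> str:
    """Stand-in translator that only tags the route; not a real MT call."""
    return f"{text}|{source_lang}>{target_lang}"


# Korean review routed directly and via Japanese and Chinese, as in the example.
paths = augment_via_pivots("review", "ko", ["ja", "zh"], fake_translate)
```

In an augmentation pipeline of this kind, every variant would inherit the sentiment label of the original review, so the class balance of the training set is unchanged.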

In late 2016, Google Translate began switching from phrase-based machine translation to neural machine translation (GNMT), with the rollout expanding in 2017 [35]. After this update, [35] reported 92% and 81% accuracy when translating discharge instructions from English into Spanish and Chinese, respectively, for Spanish- and Chinese-speaking patients. The new Google Translate algorithm also produced fewer harmful inaccuracies than the old algorithm. DeepL showed effective translation performance from German literature to English [4]. Google Translate and DeepL Translate are effective, and they can translate not only short phrases but also long passages [4]. Although they may not translate text with perfect grammar [4], they are capable of translating sentences reliably with high quality and safety [3,4]. Thus, Google Translate and DeepL Translate were chosen to translate the text datasets. It is important to note that both services used in this study, Google Translate and DeepL, are neural machine translation (NMT) services designed for sentence-level translation. They are not large language models (LLMs) like ChatGPT or DeepSeek. Unlike LLMs, which can perform a wide range of generative and reasoning tasks, NMT services are specialized for bidirectional text translation and were used here solely for translation-based data augmentation.

3.3. Implementation

After applying translation augmentation using the Google Translate and DeepL Translate APIs, six augmented training datasets were created: three using the Google Translate API and three using the DeepL Translate API. These augmented training datasets were used to train the sentiment analysis model to predict the polarity of product reviews. Support Vector Machine (SVM), which is commonly used in sentiment analysis, was chosen as the machine learning algorithm. Hyperparameter tuning for the SVM model was performed using GridSearchCV with 5-fold cross-validation. The hyperparameter grid included the regularization parameter C, the kernel type, and the kernel coefficient gamma (used with the rbf and sigmoid kernels). The best hyperparameters were chosen based on validation accuracy and applied to the test set.

All experiments were conducted in Google Colab (16 GB RAM, Python 3.8). While SVM training in scikit-learn is CPU-based, the environment also provided access to an NVIDIA Tesla T4 GPU, which was used for preprocessing and experimentation involving TensorFlow-based components. Training was performed using the scikit-learn and TensorFlow libraries. All datasets were randomly split into training and testing sets using an 80:20 ratio, with random_state=0 to ensure reproducibility. Stratified sampling was employed to preserve the class distribution, maintaining the balance between positive and negative samples in both subsets.
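A minimal sketch of the split-and-tune procedure described above is given below. The synthetic data and the concrete grid values are assumptions made for illustration (the text does not list the values searched); it is also assumed here that the grid covers `C`, `kernel`, and `gamma`, with `gamma` only applied to the rbf and sigmoid kernels.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

# Synthetic, roughly balanced stand-in for the vectorized reviews (not the study's data).
X, y = make_classification(
    n_samples=200, n_features=20, weights=[0.5, 0.5], random_state=0
)

# 80:20 stratified split with a fixed seed, as described in the text.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Assumed grid: the values are illustrative, not taken from the study.
param_grid = [
    {"kernel": ["linear"], "C": [0.1, 1, 10]},
    {"kernel": ["rbf", "sigmoid"], "C": [0.1, 1, 10], "gamma": ["scale", 0.01, 0.1]},
]

# 5-fold cross-validation, selecting the configuration with the best accuracy.
search = GridSearchCV(SVC(), param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

best_params = search.best_params_
test_accuracy = accuracy_score(y_test, search.predict(X_test))
```

Because `GridSearchCV` refits the best configuration on the full training split by default, `search.predict` evaluates the tuned model on the held-out 20%.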

The methodological process demonstrates the progression from dataset preparation to the analysis and comparison of the models, as shown in Figure 5.

Figure 5.

Train/test and generalization splits.

The training sets went through the implementation of translation data augmentation to inflate small training datasets. Additionally, the three test sets were translated directly into English to test the generalization of the model. After the preparation of the training and test sets, three models were developed using the three augmented training sets and tested on the test sets. Then, model generalization was evaluated. Figure 6 illustrates the assignment of specific models to the specific test datasets.

Figure 6.

Generalization process.
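The generalization procedure can be sketched as a loop that trains one model per source language and scores it on the English-translated test sets of the other languages. The toy sentences, labels, and the helper `cross_lingual_accuracy` are hypothetical; the linear-kernel SVM is chosen only to keep the example small.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics import accuracy_score
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Toy English-translated data per source language (hypothetical examples).
train_sets = {
    "fr": (["love this cream", "great scent", "awful texture", "bad smell"], [1, 1, 0, 0]),
    "de": (["great lotion love it", "love the scent", "bad quality", "awful product"], [1, 1, 0, 0]),
}
test_sets = {
    "fr": (["great cream", "awful smell"], [1, 0]),
    "de": (["love it", "bad product"], [1, 0]),
    "ja": (["great scent", "awful quality"], [1, 0]),
}


def cross_lingual_accuracy(train_sets, test_sets):
    """Train one model per language and score it on every other language."""
    scores = {}
    for train_lang, (texts, labels) in train_sets.items():
        model = make_pipeline(CountVectorizer(), SVC(kernel="linear"))
        model.fit(texts, labels)
        for test_lang, (test_texts, test_labels) in test_sets.items():
            if test_lang == train_lang:
                continue  # only cross-language pairs are of interest here
            preds = model.predict(test_texts)
            scores[(train_lang, test_lang)] = accuracy_score(test_labels, preds)
    return scores


scores = cross_lingual_accuracy(train_sets, test_sets)
```

The resulting dictionary is keyed by (training language, test language), which makes it straightforward to compare augmented and non-augmented training sets pair by pair.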

4. Results

In this section, the findings of the study are presented with respect to the investigation of the performance of sentiment analysis with translation data augmentation in three languages.

In the NLP field, evaluating and comparing machine learning models is important for determining their effectiveness, and the commonly used evaluation metrics are accuracy, F score, precision, and recall [16,26]. Accuracy measures the model's ability to classify correctly [16]. For each language, the performance of the machine learning models with data augmentation was assessed using these metrics. A confusion matrix is a table that summarizes classification results in terms of predicted and actual classes, as shown in Table 3, and is the basis for calculating accuracy, precision, recall, and F score.

Table 3.

Confusion matrix.

Accuracy (Equation (1)) measures how often the classifier is correct overall. Precision (Equation (2)) measures how often the classifier is correct when it predicts ‘yes’. Recall (Equation (3)) measures how often the classifier predicts ‘yes’ when the correct classification is actually ‘yes’. F score (Equation (4)) measures the accuracy of a binary classification by combining the precision and recall values.
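For reference, the standard definitions of these four metrics can be written in terms of the confusion-matrix counts. The symbols TP, TN, FP, and FN (true positives, true negatives, false positives, false negatives) are assumed here to match the cells of Table 3, and the F score is given in its usual balanced (F1) form.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1}\\
\text{Precision} &= \frac{TP}{TP + FP} \tag{2}\\
\text{Recall}    &= \frac{TP}{TP + FN} \tag{3}\\
F                &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}
\end{align}
```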

4.1. Performance of Data Augmentation

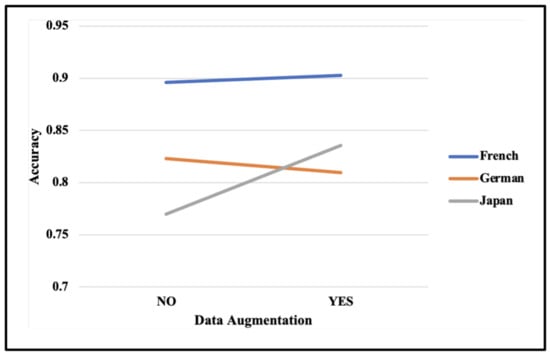

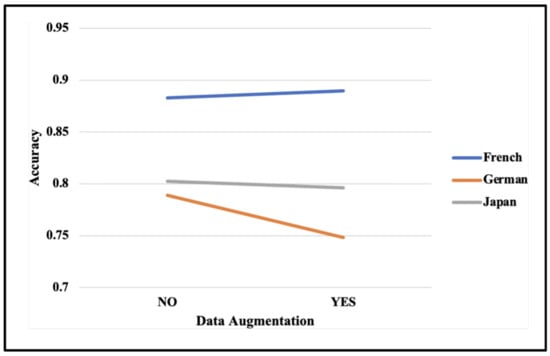

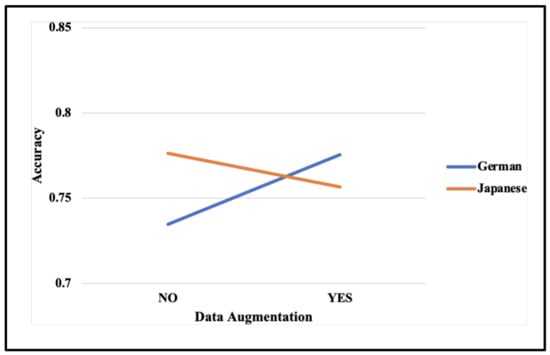

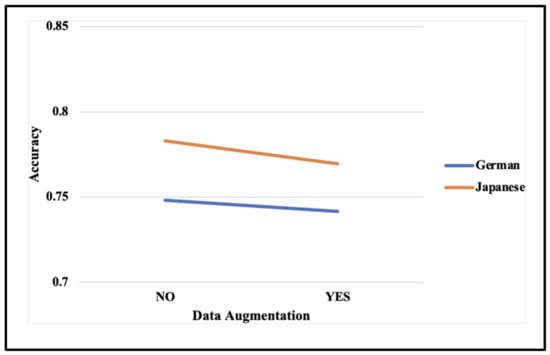

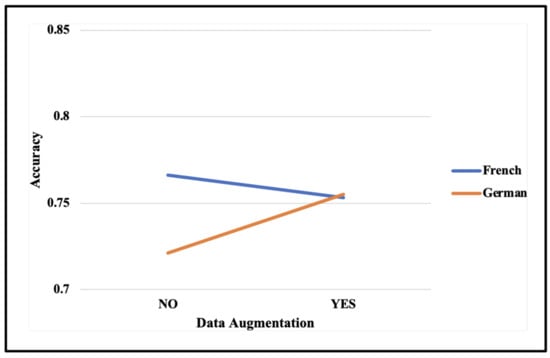

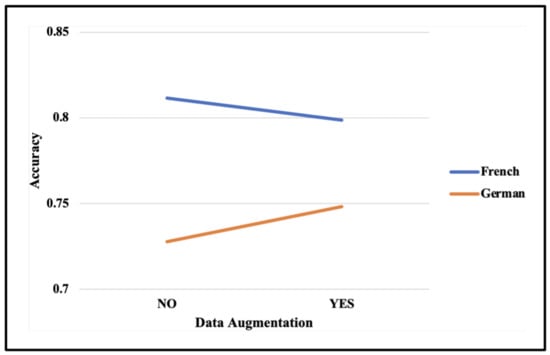

The results of the performance evaluation of the data augmentation technique’s effect on sentiment analysis across datasets in three distinct languages are presented in Table 4, Table 5 and Table 6. Figure 7 and Figure 8 provide graphical representations of accuracy based on the usage of the data augmentation technique using Google Translate and DeepL Translate.

Table 4.

Original sentiment analysis performance of the model by language.

Table 5.

Sentiment analysis performance of the model by language with only translation and without the data augmentation technique.

Table 6.

Model sentiment analysis performance by language with the translation data augmentation technique.

Figure 7.

Graph of accuracy when using data augmentation with Google Translate.

Figure 8.

Graph of accuracy when using data augmentation with DeepL Translator.

In Figure 7, the effect of using the translation augmentation technique with Google Translate on sentiment analysis accuracy across various language datasets is presented. The results show that the model’s accuracy increased in the French and Japanese datasets when the data augmentation technique was applied. In particular, the Japanese dataset exhibited a significant improvement of 6.58%. However, the augmentation technique failed to improve the performance of sentiment analysis in the German dataset. Furthermore, using DeepL Translate in the augmentation process did not yield improvements compared to using Google Translate, as shown in Figure 8. The model’s performance did not improve in the German and Japanese datasets. Although the French dataset showed an improvement, the gain was limited to only 0.65%. This investigation indicates that the translation augmentation technique does not consistently enhance sentiment analysis performance across all languages when working with relatively small datasets.

The sentiment analysis results for the three language datasets with no technique, with only translation, and with the data augmentation technique are shown in Table 4, Table 5 and Table 6. Translation data augmentation improved the performance of the model for sentiment analysis in French using both the Google Translate API and the DeepL Translate API. For sentiment analysis in Japanese, the translation data augmentation technique improved the performance of the model with the Google Translate API but not with the DeepL Translate API. Data augmentation could not improve the performance of sentiment analysis in German. Thus, the translation data augmentation technique does not improve the performance of sentiment analysis in every language, even though the datasets used in this study were small.

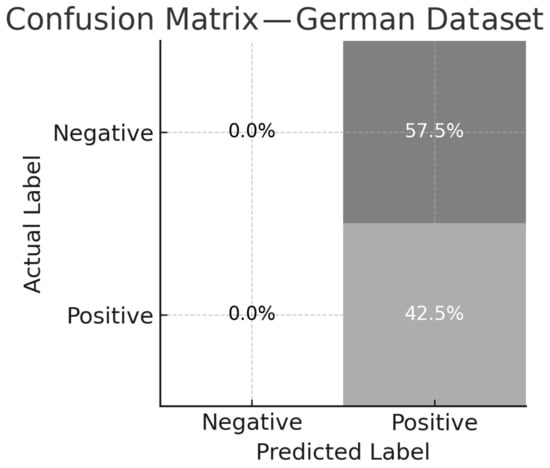

Confusion Matrix for the German Dataset

To further examine the relatively low performance of the model on the German dataset, we present the confusion matrix for the SVM classifier under the baseline configuration (no data augmentation). As shown in Figure 9, the model predicted all test samples as positive. This yielded a recall of 1.00 for the positive class but a precision of only 0.425 due to a large number of false positives.

Figure 9.

Confusion matrix for the German test set. Values represent percentages of all test samples. Darker shades indicate higher proportions, with white text used for readability in darker cells.

The overall accuracy was 42.5%, with an F1 score of 0.60 for the positive class. The area under the ROC curve (AUC) was 0.50, and the mean squared error (MSE) was 0.575, indicating limited discriminative ability and a bias toward the positive class.
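The reported figures are mutually consistent, which a short calculation can confirm. The sketch below assumes the confusion-matrix cells implied by the text (every sample predicted positive, 42.5% of samples actually positive); the helper `binary_metrics` is introduced here for illustration only.

```python
def binary_metrics(tp: float, fp: float, fn: float, tn: float) -> dict:
    """Compute accuracy, precision, recall, F1, and 0/1 MSE from confusion-matrix cells."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # With hard 0/1 predictions, squared error equals the misclassification rate.
        "mse": (fp + fn) / total,
    }


# Cells as percentages of all test samples, inferred from the reported figures:
# every sample predicted positive, 42.5% of them actually positive.
german_baseline = binary_metrics(tp=42.5, fp=57.5, fn=0.0, tn=0.0)
```

With these cells, precision and accuracy both equal 0.425, recall is 1.00, F1 rounds to 0.60, and the MSE is 0.575. A classifier that assigns the same label to every sample cannot rank positives above negatives, which is why its AUC is 0.50.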

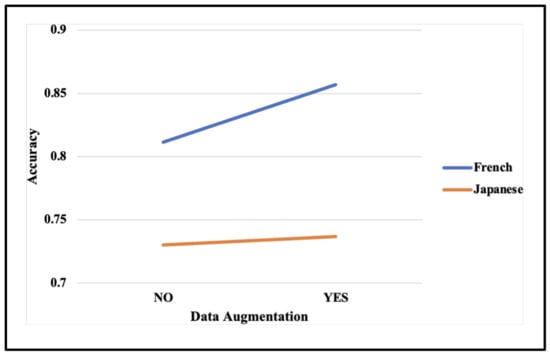

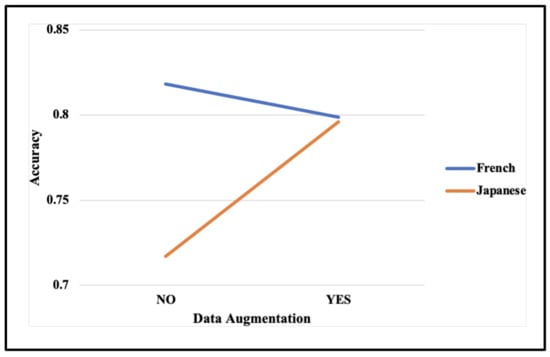

4.2. Generalization

The results of the generalization performance with only translation and with the application of the translation augmentation technique are shown in Table 7 and Table 8. Figure 10 and Figure 11 present graphical representations of the effect of data augmentation on sentiment analysis generalization. The models were trained using the French augmented training dataset, which incorporated Google Translate and DeepL Translate in the augmentation process, then tested on the German and Japanese test datasets. When the model was tested on the Japanese test dataset, no improvement was observed compared to the model trained on non-augmented French datasets using either Google Translate or DeepL Translate. However, when the model was tested on the German test dataset using Google Translate in the augmentation process, the accuracy improved by 4.08%.

Figure 10.

Graph of model’s generalization accuracy of using data augmentation with Google Translate: French training dataset.

Figure 11.

Graph of model’s generalization accuracy using data augmentation with DeepL Translator: French training dataset.

Table 7.

Model generalization performance by language with only translation and without the data augmentation technique.

Table 8.

Model generalization performance by language with the translation data augmentation technique.

Figure 12 and Figure 13 illustrate graphical representations of the effect of data augmentation on sentiment analysis generalization. The models were trained using the German augmented training dataset, which incorporated Google Translate and DeepL Translate in the augmentation process, then tested on the French and Japanese test datasets. When the model was tested on the Japanese test dataset, an improvement was observed when using either Google Translate or DeepL Translate in the augmentation process. Notably, using DeepL Translate in the augmentation process on the Japanese test dataset improved the model’s generalization by 7.9%, representing the most significant improvement in this study. In addition, the model improved generalization on the French test dataset with Google Translate, increasing accuracy by 4.54%. Overall, sentiment analysis models trained using the German augmented training dataset demonstrated the ability to generalize across the test datasets for the other two languages.

Figure 14 and Figure 15 illustrate graphical representations of the effect of data augmentation on sentiment analysis generalization. The models were trained using the Japanese augmented training dataset, which incorporated Google Translate and DeepL Translate in the augmentation process, then tested on the French and German test datasets.

Figure 12.

Graph of model’s generalization accuracy using augmentation with Google Translate: German training dataset.

Figure 13.

Graph of model’s generalization accuracy using data augmentation with DeepL Translator: German training dataset.

Figure 14.

Graph of model’s generalization accuracy using data augmentation with Google Translate: Japanese training dataset.

Figure 15.

Graph of model’s generalization accuracy using data augmentation with DeepL Translator: Japanese training dataset.

When the model was tested on the French test dataset, no improvement was observed when using either Google Translate or DeepL Translate. However, when the model was tested on the German test dataset, improvements in accuracy of 3.4% and 2.04% were achieved using Google Translate and DeepL Translate, respectively. Overall, the translation augmentation technique improved the generalization performance of models trained on the Japanese and German datasets. Specifically, the models trained with augmented Japanese and German training datasets performed better than those trained with non-augmented datasets when tested on each other’s test dataset, using either the Google Translate API or the DeepL Translate API. Furthermore, models trained with German and French training datasets showed similar patterns, with improved generalization when tested on each other’s test dataset using only the Google Translate API. These findings suggest that the particular pairing of training and test languages shapes the generalization capabilities of cross-lingual sentiment analysis models.

4.3. Discussion of Results

The results of this study show that the effectiveness of translation-based data augmentation for sentiment analysis varies greatly depending on the language and the machine translation (MT) service used. Notably, Google Translate consistently outperformed DeepL in improving model accuracy for French and Japanese datasets, while neither tool significantly enhanced performance for the German dataset. These differences can be linked to several interconnected factors.

First, language structure is essential. Japanese has a subject–object–verb (SOV) sentence structure and uses logographic scripts (Kanji) and syllabaries (Hiragana and Katakana), which make it linguistically different from English and German. Google Translate, benefiting from extensive training on various language pairs, may handle this linguistic complexity better than DeepL, which is optimized for European languages. Conversely, German, although structurally closer to English (subject–verb–object), includes compound nouns, case-inflected grammar, and rigid syntax, which can lead to more translation errors, especially when using intermediate languages during augmentation. These translation inconsistencies can reduce the quality of augmented data, particularly for sentiment-heavy expressions. In this context, choosing to use intermediate translation was intentional because it can boost linguistic diversity in low-resource and cross-lingual settings, thereby enriching the training data. However, this method naturally involves risks like translation noise, semantic drift, and quality issues, especially when multiple intermediate languages are used. These factors may explain the variability seen in our results. Future research will look into strategies such as adding selective human validation or spot-checking translated samples to maintain sentiment accuracy, along with automated quality metrics to measure translation consistency.

Second, the variability in translation quality across different MT tools likely affected the results. Google Translate is known for its broader language coverage and extensive training data, especially for non-European languages like Japanese, while DeepL is often considered better for translating European languages but may have less support for Japanese or less common translation pathways. Additionally, choosing intermediate languages during augmentation can introduce cascading translation errors, which tend to impact languages with less lexical overlap or different grammatical structures, such as German and Japanese.

Third, domain-specific vocabulary and dataset size may also have played a role. The dataset, based on Amazon beauty product reviews, may include informal or colloquial language that DeepL, often trained on formal text corpora, handles less effectively. Google Translate’s exposure to a wider range of text genres could explain its better performance in capturing contextual sentiment cues.

Overall, these findings show that translation-based augmentation is not always effective and that selecting the right translation tools and understanding language features are important. Future work should include translation quality metrics (e.g., BLEU and semantic similarity) and examine language pair-specific tuning to improve augmentation results.

Evaluation of Research Questions and Hypotheses

The findings address RQ1, which asked whether translation-based data augmentation improves sentiment classification in low-resource languages. The results show that Google Translate augmentation improved performance for French and Japanese, while DeepL had mixed outcomes and neither service significantly improved performance for German. Hypothesis 1 is partly supported: augmentation can enhance accuracy, but the effect depends on the language and the translation service.

For RQ2, which examined whether models trained on augmented data can generalize across languages, cross-lingual evaluation showed some improvement for distant pairs (e.g., German → Japanese), but gains were inconsistent and often reduced when languages had different grammatical structures. This partially supports Hypothesis 2, indicating that generalization is possible but not across all language pairs.

5. Conclusions

Sentiment analysis is one of the most complex tasks in natural language processing, particularly when dealing with poor-quality or limited datasets. To address this challenge, the data augmentation technique was introduced to increase dataset size and diversity. In this study, the effect of the data augmentation technique on sentiment analysis was investigated across three distinct languages: French, German, and Japanese. Sentiment analysis was conducted both with and without the translation augmentation technique, and the generalization capability was tested on test datasets in different languages.

Translation augmentation was employed using two machine translation services: the Google Translate API and the DeepL Translate API. Translation augmentation expanded the training dataset by translating it into target languages through various intermediate languages, depending on the original language. This translation process increased the diversity of the dataset. Using this technique, the performance of sentiment analysis models improved for the French and Japanese datasets; however, no improvement was observed for the German dataset. This suggests that the technique’s effectiveness in improving model accuracy depends on the language.

The generalization of sentiment analysis models was then investigated across distinct language test datasets. The sentiment models trained with the data augmentation technique using both Google Translate and DeepL Translate APIs for Japanese and German showed marked improvements in generalization on each other’s test datasets. Additionally, sentiment models trained with French and German datasets demonstrated improvements in generalization on each other’s test datasets when using the Google Translate API. These results suggest that sentiment analysis models trained on one language can be adapted to others if both languages are translated into the same target language. This adaptability has the potential to support cross-lingual sentiment analysis and help address the challenges of working with limited data for specific languages in real-world scenarios.

6. Limitations and Future Work

This study assessed the effectiveness of translation-based data augmentation for sentiment analysis using Amazon beauty product reviews in French, German, and Japanese. The results add to the natural language processing literature by providing empirical evidence on how augmentation performance varies across languages and translation tools. Notably, Google Translate consistently outperformed DeepL for French and Japanese, while neither tool improved results for German, highlighting the importance of considering language-specific features when choosing augmentation strategies.

Intermediate translation was intentionally chosen to increase linguistic diversity in the augmented datasets, a valuable property for low-resource and cross-lingual sentiment analysis. This approach aimed to expose the models to a broader variety of lexical and syntactic structures. However, it also introduces translation-induced noise, semantic drift, and quality inconsistencies, which may distort sentiment polarity across languages. These risks are especially pronounced when multiple intermediate languages are involved.

Future research should explore a broader range of language pairs and application areas, including additional e-commerce categories (such as technology and books) and other review settings (like hospitality and tourism), to assess the general applicability of augmentation effects. Incorporating selective human validation or spot-checking of translated samples could help ensure sentiment accuracy, especially for linguistically difficult pairs. Furthermore, the current limitations of neural machine translation (NMT) tools, including translation noise, semantic drift, and quality variations across languages, highlight the need for alternative or supplementary methods.

Promising directions include the following:

- Combining large language model (LLM)-based augmentation with semantic validation techniques to minimize translation artifacts and improve cross-lingual robustness;

- Directly comparing Transformer-based multilingual models like mBERT and XLM-R, which understand cross-lingual semantics without explicit translation, against translation-based approaches;

- Developing hybrid systems that integrate multilingual pre-training with targeted translation-based augmentation to leverage the strengths of both methods;

- Exploring LLM-based paraphrasing as a flexible alternative to rigid translation pipelines.

Author Contributions

Conceptualization, H.L. and S.A.; Methodology, S.A.; Software, H.L.; Validation, H.L.; Formal analysis, S.A. and H.L.; Data curation, H.L.; Writing—original draft, S.A.; Supervision, S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Taylor, L.; Nitschke, G. Improving deep learning with generic data augmentation. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bangalore, India, 18–21 November 2018; IEEE: New York, NY, USA, 2018; pp. 1542–1547.

- Pang, Z.; Li, H.; Wang, C.; Shi, J.; Zhou, J. A two-stage balancing strategy based on data augmentation for imbalanced text sentiment classification. J. Intell. Fuzzy Syst. 2021, 40, 10073–10086.

- Khan, A. Improved multi-lingual sentiment analysis and recognition using deep learning. J. Inf. Sci. 2025, 51, 284–291.

- Zhang, J.; Shields, M.D. On the quantification and efficient propagation of imprecise probabilities resulting from small datasets. Mech. Syst. Signal Process. 2018, 98, 465–483.

- Lever, J.; Krzywinski, M.; Altman, N. Points of significance: Model selection and overfitting. Nat. Methods 2016, 13, 703–705.

- Vuttipittayamongkol, P.; Elyan, E.; Petrovski, A. On the class overlap problem in imbalanced data classification. Knowl.-Based Syst. 2021, 212, 106631. Available online: https://www.sciencedirect.com/science/article/pii/S0950705120307607 (accessed on 21 August 2025).

- Das, S.; Datta, S.; Chaudhuri, B.B. Handling data irregularities in classification: Foundations, trends, and future challenges. Pattern Recognit. 2018, 81, 674–693.

- Guo, J.; Wang, X.; Wu, Y. Positive emotion bias: Role of emotional content from online customer reviews in purchase decisions. J. Retail. Consum. Serv. 2020, 52, 101891.

- Hasan, M.; Rahman, M.T.; Zillanee, A.H.; Alam, M.G.R.; Islam, M.F.U.; Chakrabarty, A. Multilingual sentiment analysis on social media: Harnessing deep learning for enhanced insights and decision support for foreign travelers. In Proceedings of the 2024 6th International Conference on Electrical Engineering and Information & Communication Technology (ICEEICT), Dhaka, Bangladesh, 2–4 May 2024; IEEE: New York, NY, USA, 2024.

- Body, T.; Tao, X.; Li, Y.; Li, L.; Zhong, N. Using back-and-forth translation to create artificial augmented textual data for sentiment analysis models. Expert Syst. Appl. 2021, 178, 115033.

- Wang, L.; Xu, X.; Liu, C.; Chen, Z. M-da: A multifeature text data-augmentation model for improving accuracy of chinese sentiment analysis. Sci. Program. 2022, 2022, 3264378.

- Bayer, M.; Kaufhold, M.-A.; Buchhold, B.; Keller, M.; Dallmeyer, J.; Reuter, C. Data augmentation in natural language processing: A novel text generation approach for long and short text classifiers. Int. J. Mach. Learn. Cybern. 2023, 14, 135–150.

- Abonizio, H.Q.; Paraiso, E.C.; Barbon, S. Toward text data augmentation for sentiment analysis. IEEE Trans. Artif. Intell. 2021, 3, 657–668.

- Pellicer, L.F.A.O.; Ferreira, T.M.; Costa, A.H.R. Data augmentation techniques in natural language processing. Appl. Soft Comput. 2023, 132, 109803.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Abayomi-Alli, O.O.; Damasevicius, R.; Misra, S.; Maskeliunas, R. Cassava disease recognition from low-quality images using enhanced data augmentation model and deep learning. Expert Syst. 2021, 38, e12746.

- Maeda, K.; Takada, S.; Haruyama, T.; Togo, R.; Ogawa, T.; Haseyama, M. Distress detection in subway tunnel images via data augmentation based on selective image cropping and patching. Sensors 2022, 22, 8932.

- Nanni, L.; Paci, M.; Brahnam, S.; Lumini, A. Comparison of different image data augmentation approaches. J. Imaging 2021, 7, 254.

- Nguyen, B.; Nguyen, V.-H.; Ho, T. Sentiment analysis of customer feedbacks in online food ordering services. Bus. Syst. Res. Int. J. Soc. Adv. Innov. Res. Econ. 2021, 12, 46–59.

- Feng, Z.; Zhou, H.; Zhu, Z.; Mao, K. Tailored text augmentation for sentiment analysis. Expert Syst. Appl. 2022, 205, 117605.

- Wei, J.; Zou, K. EDA: Easy data augmentation techniques for boosting performance on text classification tasks. arXiv 2019, arXiv:1901.11196.

- Sugiyama, A.; Yoshinaga, N. Data augmentation using back-translation for context-aware neural machine translation. In Proceedings of the Fourth Workshop on Discourse in Machine Translation (DiscoMT 2019), Hong Kong, China, 3 November 2019; pp. 35–44.

- Kryściński, W.; McCann, B.; Xiong, C.; Socher, R. Evaluating the factual consistency of abstractive text summarization. arXiv 2019, arXiv:1910.12840.

- Sadhasivam, J.; Kalivaradhan, R.B. Sentiment analysis of amazon products using ensemble machine learning algorithm. Int. J. Math. Eng. Manag. Sci. 2019, 4, 508.

- Thet, T.T.; Na, J.-C.; Khoo, C.S. Aspect-based sentiment analysis of movie reviews on discussion boards. J. Inf. Sci. 2010, 36, 823–848.

- Feldman, R. Techniques and applications for sentiment analysis. Commun. ACM 2013, 56, 82–89.

- Al-Smadi, M.; Qawasmeh, O.; Al-Ayyoub, M.; Jararweh, Y.; Gupta, B. Deep recurrent neural network vs. support vector machine for aspect-based sentiment analysis of arabic hotels’ reviews. J. Comput. Sci. 2018, 27, 386–393.

- Wen, Y.; Liang, Y.; Zhu, X. Sentiment analysis of hotel online reviews using the bert model and ernie model—Data from China. PLoS ONE 2023, 18, e0275382.

- Alkhushayni, S.; Alomari, Z.; Al-Zaleq, D. A sentiment analysis study of twitter users’ reactions to the COVID-19 vaccine. In Proceedings of the 2023 14th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 21–23 November 2023; pp. 1–6.

- Gondhi, N.K.; Sharma, E.; Alharbi, A.H.; Verma, R.; Shah, M.A. Efficient long short-term memory-based sentiment analysis of e-commerce reviews. Comput. Intell. Neurosci. 2022, 2022, 3464524.

- Catelli, R.; Bevilacqua, L.; Mariniello, N.; di Carlo, V.S.; Magaldi, M.; Fujita, H.; Esposito, M. Cross lingual transfer learning for sentiment analysis of italian tripadvisor reviews. Expert Syst. Appl. 2022, 209, 118246.

- Mehraliyev, F.; Chan, I.C.C.; Kirilenko, A.P. Sentiment analysis in hospitality and tourism: A thematic and methodological review. Int. J. Contemp. Hosp. Manag. 2022, 34, 46–77.

- Khoong, E.C.; Steinbrook, E.; Brown, C.; Fernandez, A. Assessing the use of google translate for spanish and chinese translations of emergency department discharge instructions. JAMA Intern. Med. 2019, 179, 580–582.

- Varela-Salinas, M.J.; Burbat, R. Google translate and deepl: Breaking taboos in translator training. observational study and analysis. Ibérica 2023, 45, 243–266.

- Zulfiqar, S.; Wahab, M.F.; Sarwar, M.I.; Lieberwirth, I. Is machine translation a reliable tool for reading german scientific databases and research articles? J. Chem. Inf. Model. 2018, 58, 2214–2223.

- Miller, J.M.; Harvey, E.M.; Bedrick, S.; Mohan, P.; Calhoun, E. Simple patient care instructions translate best: Safety guidelines for physician use of google translate. J. Clin. Outcomes Manag. 2018, 25, 18–27.

- Bradley, M.M.; Lang, P.J. Affective Norms for English Words (ANEW): Instruction Manual and Affective Ratings; Technical Report C-1; The Center for Research in Psychophysiology, University of Florida: Gainesville, FL, USA, 1999.

- Schmidtke, D.S.; Schröder, T.; Jacobs, A.M.; Conrad, M. ANGST: Affective norms for german sentiment terms, derived from the affective norms for english words. Behav. Res. Methods 2014, 46, 1108–1118.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).