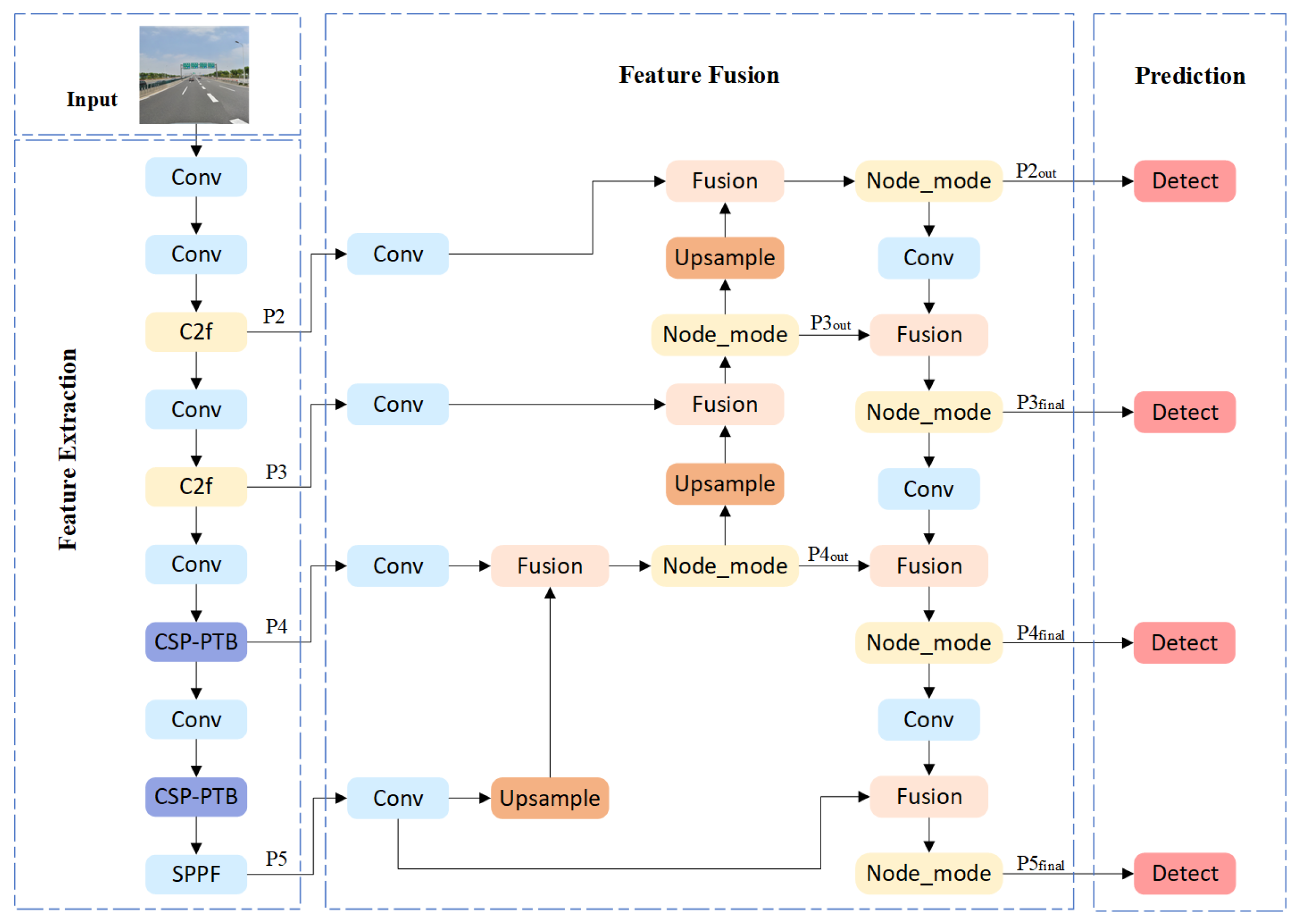

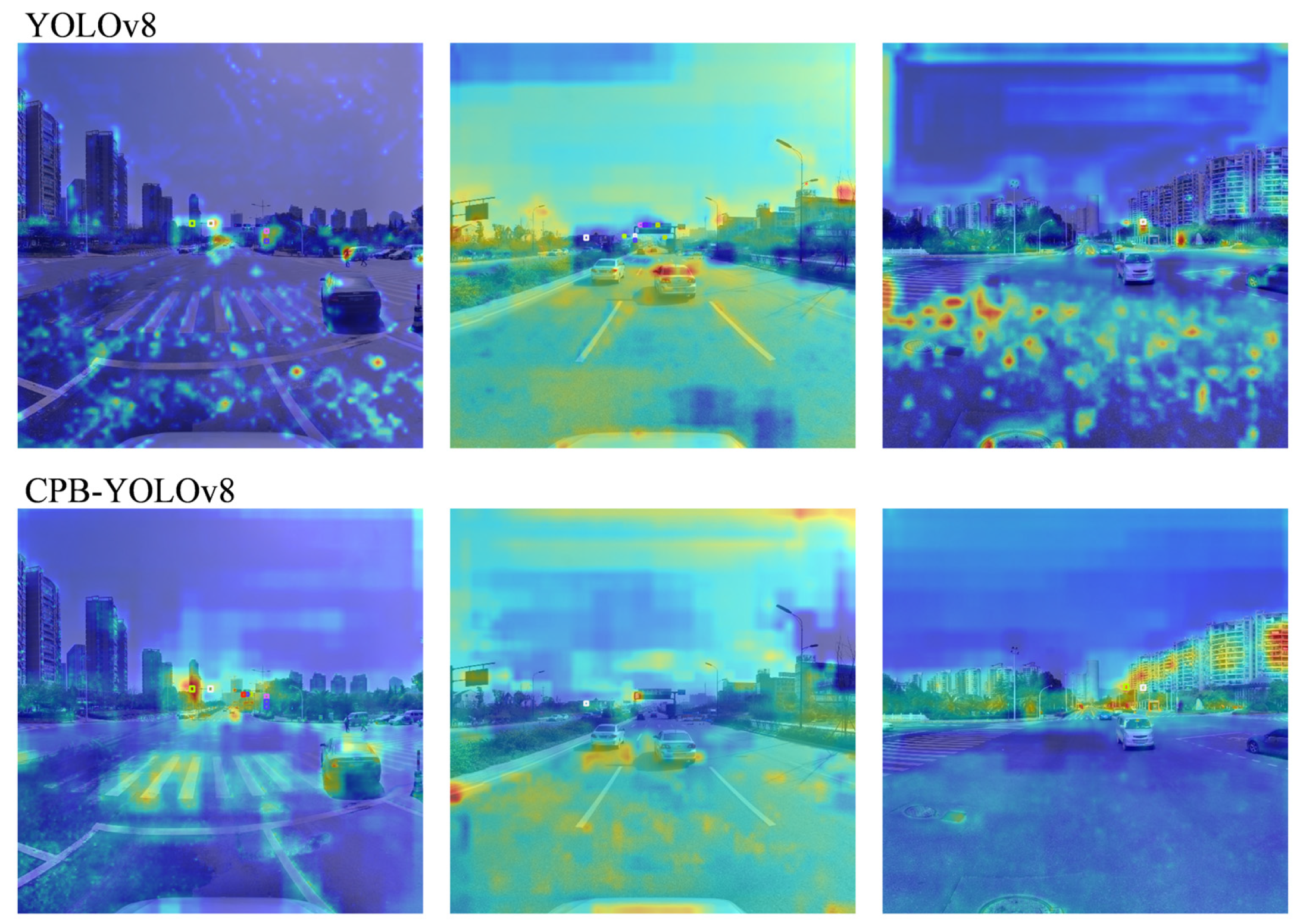

This section introduces the architectural enhancements of CPB-YOLOv8. The proposed model incorporates a dual-branch structure within the feature layers to enhance semantic expressiveness. It further employs a quad-tiered BiFPN within the fusion stage to orchestrate efficient cross-scale feature integration. Additionally, the Wise-IoU v3 loss function is adopted to bolster classification robustness. Collectively, these modifications significantly improve the model’s overall detection performance. Subsequent sections elaborate on the detailed framework design, parameter configuration, and implementation specifics of the constituent modules.

3.2. The Dual-Branch CSP-PTB Module

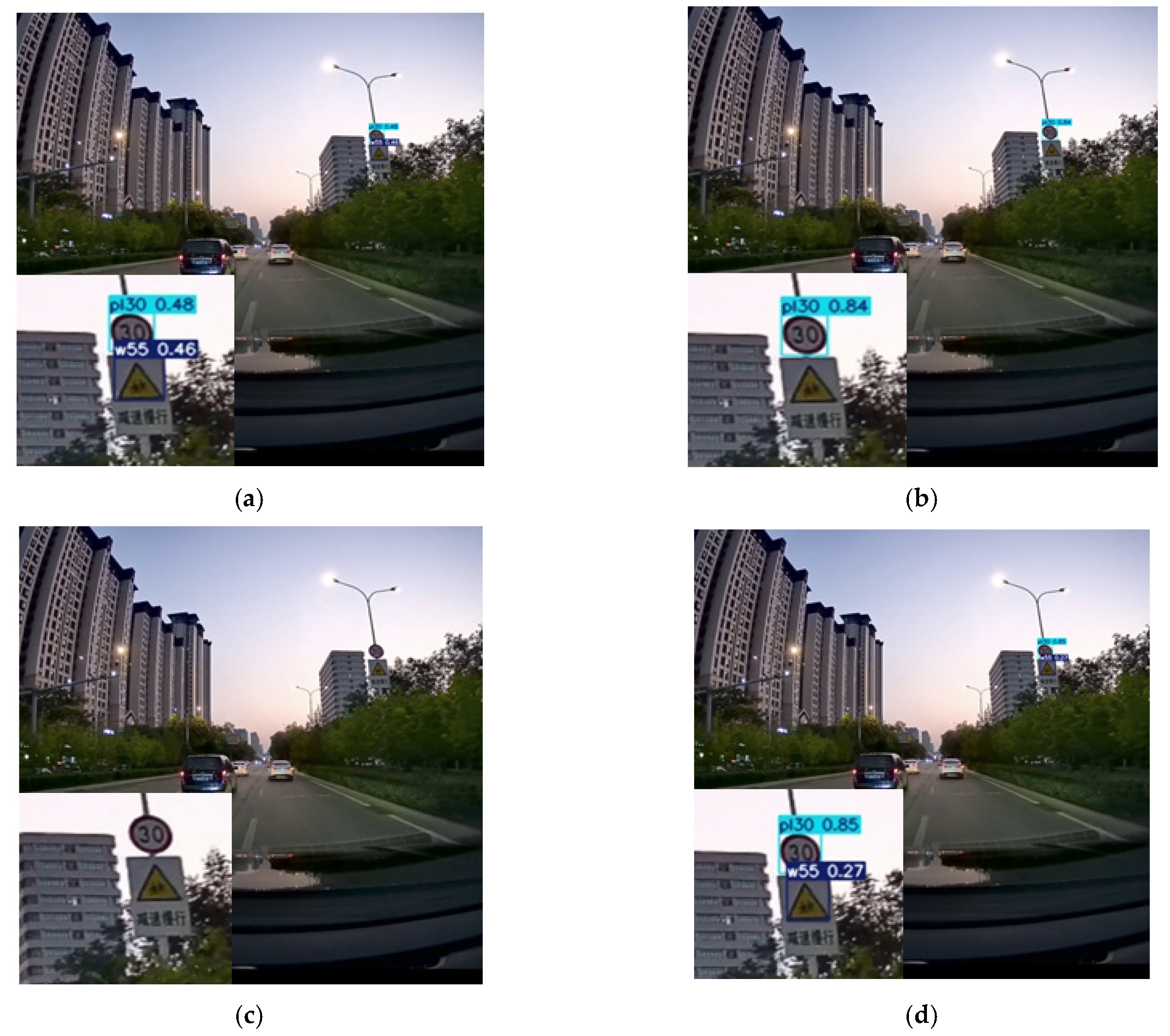

The detection of traffic signs poses unique challenges, such as tiny object sizes, complex backgrounds, and frequent occlusions. Standard convolutional backbones, like the C2f module in YOLOv8, excel at local feature extraction but struggle with modeling long-range global context, leading to false alarms from background clutter. While Vision Transformers offer powerful global modeling, their quadratic computational complexity often renders them unsuitable for real-time applications. To holistically address these competing demands, we propose the CSP-PTB. Its design philosophy is to achieve a synergistic balance between computational efficiency and representational power by integrating the complementary strengths of CNNs and Transformers through a novel dual-branch architecture with cross-stage partial connections. This module is specifically engineered to enhance the model’s capability to discern small traffic signs against distracting backgrounds.

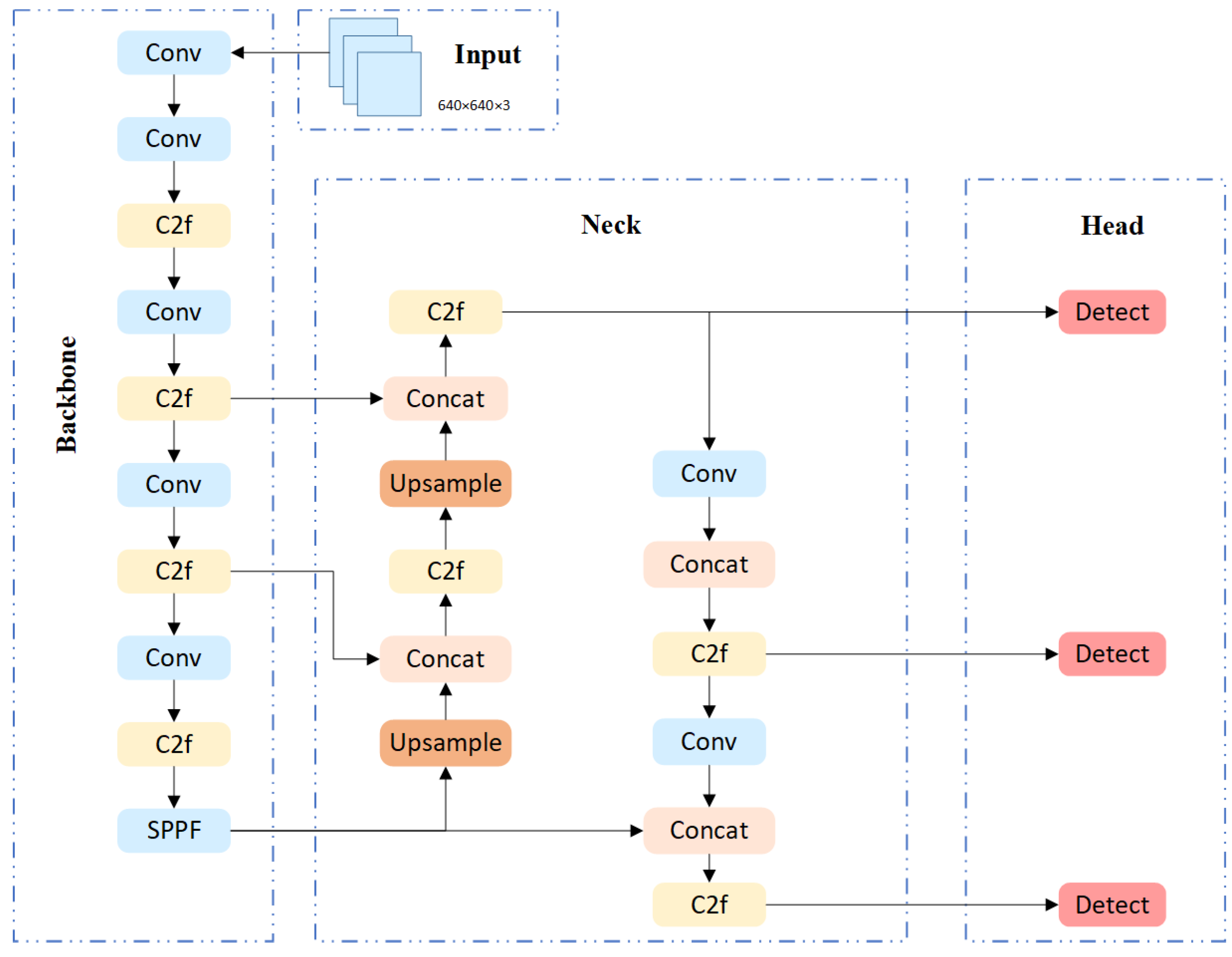

As illustrated in Figure 3a, the C2f module in the original YOLOv8 backbone employs three convolutional modules together with Bottleneck modules. Input features are divided equally, with one portion routed directly to subsequent stages while the other undergoes transformation and integration via bottleneck convolutional layers. While effective, C2f relies primarily on convolutional operations with a limited receptive field and therefore lacks robust global context modeling capabilities. In contrast, the Transformer architecture has demonstrated remarkable success in computer vision due to its powerful global context modeling [31]. However, its substantial computational cost often precludes its use in real-time detection systems. To address these limitations, we propose the CSP-PTB. This module integrates the local feature extraction strengths of CNNs with the global context modeling advantages of Transformers via a channel allocation strategy. CSP-PTB enhances model performance and feature extraction capability while maintaining computational efficiency suitable for lightweight deployment.

As illustrated in Figure 3, the CSP-PTB module processes input feature maps $X \in \mathbb{R}^{C \times H \times W}$ from preceding stages. A channel partitioning strategy splits $X$ into two components, $X_{\mathrm{CNN}} \in \mathbb{R}^{C_1 \times H \times W}$ and $X_{\mathrm{PTB}} \in \mathbb{R}^{C_2 \times H \times W}$, where $C_1 + C_2 = C$. The channel allocation ratio is governed by a hyperparameter. Within the CNN branch, stacked bottleneck convolutional layers, each consisting of sequential 3 × 3 and 1 × 1 convolutions, process $X_{\mathrm{CNN}}$ to generate output features $Y_{\mathrm{CNN}}$. This design preserves local structural information with minimal computational overhead. Simultaneously, the Transformer branch applies our novel PTB to $X_{\mathrm{PTB}}$, leveraging Multi-Head Self-Attention [32] with Convolutional Gated Linear Units (MHSA-CGLU) to capture global contextual relationships, yielding output features $Y_{\mathrm{PTB}}$.

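The normalized outputs from both branches undergo channel-wise concatenation:

$$Y = \mathrm{Concat}\left(Y_{\mathrm{CNN}},\; Y_{\mathrm{PTB}}\right)$$

where $Y_{\mathrm{CNN}}$ and $Y_{\mathrm{PTB}}$ denote the normalized outputs of the CNN branch and the PTB branch, respectively. This fused representation combines the CNN's local features and the PTB's global context. Through cross-stage partial connections, the CSP mechanism enables efficient propagation of complementary information to subsequent network layers, optimizing feature transmission efficacy.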
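The split, process, and concatenate flow described above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the name `ratio` (the fraction of channels sent to the Transformer branch), the per-channel normalization in `channel_norm`, and the identity placeholders standing in for the two branches are all hypothetical choices made for the example.

```python
import numpy as np

def split_channels(x, ratio):
    """Split a (C, H, W) feature map along the channel axis.

    `ratio` is a hypothetical name for the channel-allocation hyperparameter:
    the fraction of channels routed to the Transformer (PTB) branch.
    """
    c = x.shape[0]
    c_ptb = int(round(c * ratio))
    c_cnn = c - c_ptb
    return x[:c_cnn], x[c_cnn:]

def channel_norm(y, eps=1e-5):
    """Zero-mean, unit-variance normalization per channel (assumed stand-in)."""
    mean = y.mean(axis=(1, 2), keepdims=True)
    var = y.var(axis=(1, 2), keepdims=True)
    return (y - mean) / np.sqrt(var + eps)

def csp_ptb_forward(x, ratio, cnn_branch, ptb_branch):
    """Route each channel group through its branch, normalize, then concatenate."""
    x_cnn, x_ptb = split_channels(x, ratio)
    y_cnn = channel_norm(cnn_branch(x_cnn))
    y_ptb = channel_norm(ptb_branch(x_ptb))
    return np.concatenate([y_cnn, y_ptb], axis=0)

# Usage with identity placeholders in place of the real branches.
rng = np.random.default_rng(0)
x = rng.standard_normal((64, 20, 20))
y = csp_ptb_forward(x, ratio=0.25, cnn_branch=lambda t: t, ptb_branch=lambda t: t)
print(y.shape)  # (64, 20, 20)
```

Because only a fraction of the channels reaches the attention branch, the cost of self-attention scales with that smaller channel group rather than with the full feature map, which is the efficiency argument made for the channel allocation strategy.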

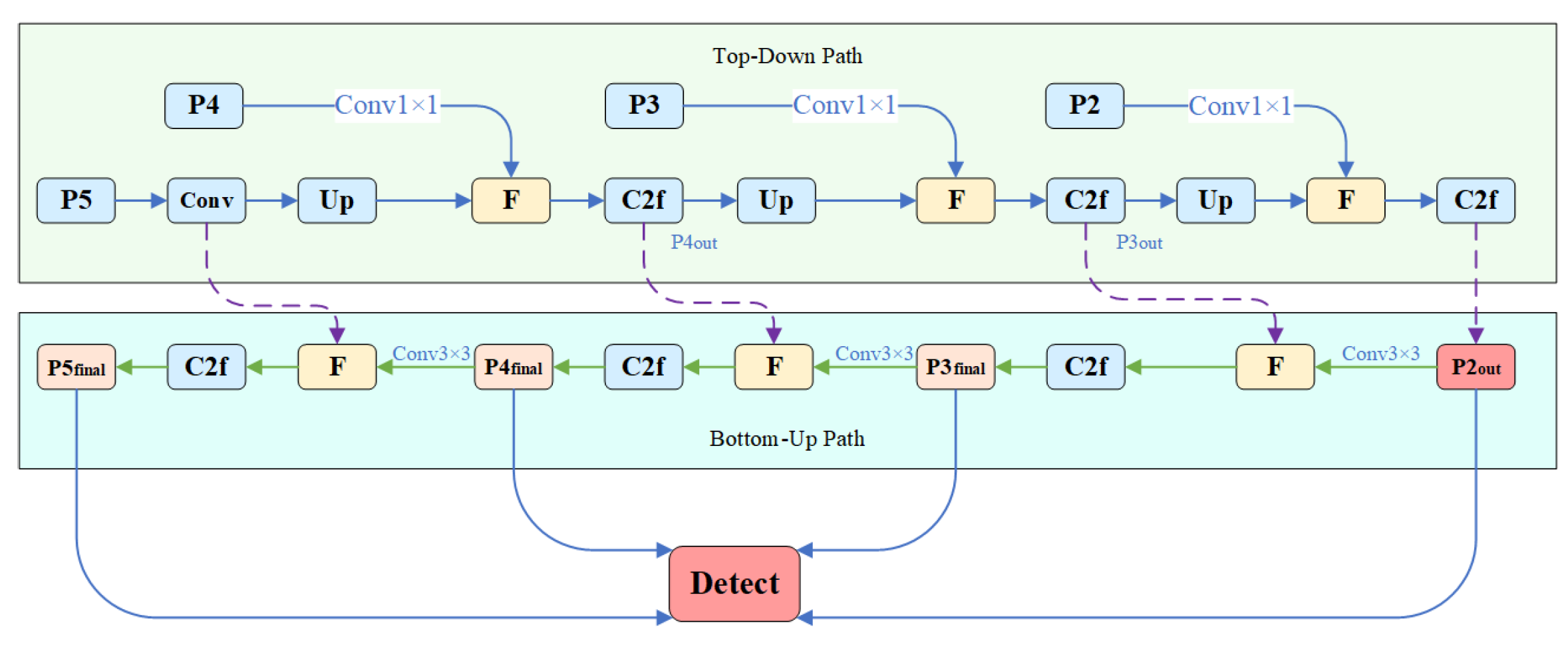

Implemented through the MHSA-CGLU module shown in Figure 3, the core of the PTB branch combines an MHSA mechanism with a CGLU. The MHSA mechanism, fundamental to Transformer architectures, enables each sequence element to dynamically integrate information from all other elements via weighted aggregation. It is implemented with parallel attention heads that use scaled dot-product attention to capture multidimensional sequence dependencies, as illustrated structurally in Figure 4. The input tensor, with $d$ channels and spatial size $H \times W$, is first reshaped into sequence form $X_s \in \mathbb{R}^{N \times d}$, where $N = H \times W$ denotes the sequence length. Linear transformations then generate the Query ($Q$), Key ($K$), and Value ($V$) matrices:

$$Q = X_s W^{Q}, \quad K = X_s W^{K}, \quad V = X_s W^{V}$$

with dimension constraints $d_k = d_v = d / h$ ($h$ = number of attention heads). For each group of $Q$/$K$/$V$ matrices, scaled dot-product attention is computed per head. Within an individual attention head, the $Q$ and $K$ matrices first undergo matrix multiplication. The resultant product is scaled by $1/\sqrt{d_k}$ to mitigate gradient vanishing in Softmax, optionally followed by masking. Attention weights are then derived via Softmax normalization, and the $V$ matrix is weighted and summed using these weights to generate the single-head output. Finally, the multi-head outputs are concatenated and linearly transformed, fusing multi-perspective correlation information to capture complex sequence dependencies.

The scaled dot-product attention calculation and the multi-head fusion process are as follows:

$$A_i = \mathrm{Softmax}\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right), \qquad \mathrm{head}_i = A_i V_i, \qquad i = 1, \dots, h$$

$$Z = \mathrm{Concat}\left(\mathrm{head}_1, \dots, \mathrm{head}_h\right) W^{O}$$

where $Q_i$, $K_i$, and $V_i$ are the query, key, and value matrices of the $i$-th of $h$ heads, $d_k$ is the per-head key dimension, $\mathrm{head}_i$ is the head output, $A_i$ is the attention score matrix of each head, and $Z$ is the output features. Within each attention head, the scaled dot-product operation quantifies elemental association strengths as "attention scores" in the $Q_i$ and $K_i$ subspaces. Following normalization via the Softmax function, the value vectors $V_i$ are weighted using these normalized attention scores. This enables a single attention head to selectively capture dependencies manifested through local and global feature interactions within the image's feature sequence. Distinct attention heads derive independent sets of $Q$, $K$, and $V$ vectors via parallel, independent linear transformations, implicitly projecting the input into distinct subspaces. This enables concurrent modeling of multi-dimensional sequence associations. Following the concatenation of these multi-head features, a linear projection $W^{O}$ reduces dimensionality. This integration of multi-view representations overcomes the representational bottleneck inherent to single-head attention, enabling the final output $Z$ to more comprehensively characterize sequence dependencies. The core MHSA architecture (single-head subspace association followed by multi-head feature fusion) thus efficiently captures complex, multi-dimensional sequence dependencies without substantially increasing computational overhead. Building upon this principle, we propose the MHSA-CGLU module and will experimentally evaluate its efficacy for feature extraction in traffic sign detection tasks.
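As a concrete reference for the steps just described (reshape to a sequence, project to Q/K/V, scale, apply Softmax, weight V, concatenate heads, project), the following NumPy sketch may help. It is a minimal illustration under assumptions: the function and argument names are hypothetical, the weights are random, masking is omitted, and biases are left out for brevity.

```python
import numpy as np

def softmax(s, axis=-1):
    """Numerically stable softmax along the given axis."""
    s = s - s.max(axis=axis, keepdims=True)
    e = np.exp(s)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (d, H, W) feature map; w_q, w_k, w_v, w_o: (d, d) projection matrices.

    Returns the output feature map (d, H, W) and the attention
    weights of shape (num_heads, N, N), with N = H * W.
    """
    d, height, width = x.shape
    assert d % num_heads == 0, "channels must divide evenly across heads"
    n = height * width
    d_k = d // num_heads
    seq = x.reshape(d, n).T                      # sequence form, (N, d)

    def to_heads(m):                             # (N, d) -> (heads, N, d_k)
        return m.reshape(n, num_heads, d_k).transpose(1, 0, 2)

    q, k, v = to_heads(seq @ w_q), to_heads(seq @ w_k), to_heads(seq @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_k)   # scaled dot product
    attn = softmax(scores, axis=-1)                    # each row sums to 1
    heads = attn @ v                                   # (heads, N, d_k)
    merged = heads.transpose(1, 0, 2).reshape(n, d)    # concatenate heads
    z = merged @ w_o                                   # output projection
    return z.T.reshape(d, height, width), attn

# Usage on a small random feature map.
rng = np.random.default_rng(0)
d = 32
x = rng.standard_normal((d, 8, 8))
w_q, w_k, w_v, w_o = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4))
z, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads=4)
print(z.shape, attn.shape)  # (32, 8, 8) (4, 64, 64)
```

Note that the attention matrix has $N \times N$ entries per head, so memory and compute grow quadratically with the number of spatial positions; this is the cost that motivates restricting attention to a subset of channels and to lower-resolution feature maps.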

The traditional Transformer structure uses a feed-forward network (FFN) consisting of a two-layer linear transformation with a nonlinear activation, which is mathematically expressed as:

$$\mathrm{FFN}(x) = \sigma\left(x W_1\right) W_2$$

where $\sigma$ is usually the GELU function, $W_1 \in \mathbb{R}^{d \times d_{\mathrm{ff}}}$, and $W_2 \in \mathbb{R}^{d_{\mathrm{ff}} \times d}$. Although computationally efficient, the standard MHSA and FFN operations neglect spatial locality, leading to suboptimal modeling of local features critical for traffic sign recognition. To address this limitation, we integrate TransNeXt's CGLU [33], which performs joint spatial-channel modeling via a dual-branch architecture shown in Figure 5. The linear branch processes the MHSA output features $Z$ via a channel-preserving linear transformation $L = Z W_L$ without activation functions, thereby retaining original feature integrity. Simultaneously, the gating branch employs a depthwise convolution $\mathrm{DWConv}(\cdot)$ to capture local structural details (e.g., sign edges), followed by a sigmoid activation that generates the spatially sensitive weighting coefficient $G = \mathrm{Sigmoid}\left(\mathrm{DWConv}(Z)\right)$, where each element $G_{i,j} \in (0, 1)$ corresponds to positional significance within the feature map. The final output, $Y = L \odot G$, dynamically enhances critical regions (e.g., sign contours/text with G ≈ 1) while suppressing background interference (e.g., tree occlusion with G ≈ 0).
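The gating behaviour described above can be illustrated with a small NumPy sketch. It follows the description in this section (a linear branch multiplied element-wise by a sigmoid gate computed from a depthwise convolution) rather than any reference implementation; the 3 × 3 kernel size, zero padding, the choice of the MHSA output as the gate input, and all function names are assumptions made for the example.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def depthwise_conv3x3(x, kernels):
    """Depthwise 3x3 convolution, stride 1, zero padding.

    x: (C, H, W); kernels: (C, 3, 3), one filter per channel.
    """
    c, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for dy in range(3):
        for dx in range(3):
            # Each channel is filtered only by its own kernel (no channel mixing).
            out += kernels[:, dy, dx, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out

def gated_unit(z, w_lin, dw_kernels):
    """z: (C, H, W) attention output; w_lin: (C, C); dw_kernels: (C, 3, 3).

    Linear branch: per-pixel channel mixing without activation.
    Gating branch: sigmoid of a depthwise convolution, values in [0, 1].
    """
    linear = np.einsum("oc,chw->ohw", w_lin, z)
    gate = sigmoid(depthwise_conv3x3(z, dw_kernels))
    return linear * gate, gate

# Usage on a small random feature map.
rng = np.random.default_rng(0)
z = rng.standard_normal((8, 6, 6))
w_lin = rng.standard_normal((8, 8)) / np.sqrt(8)
dw_kernels = rng.standard_normal((8, 3, 3)) / 3.0
y, gate = gated_unit(z, w_lin, dw_kernels)
print(y.shape)  # (8, 6, 6)
```

With all depthwise kernels set to zero the gate is exactly 0.5 everywhere, so the unit reduces to a scaled linear layer; any spatial selectivity therefore comes entirely from the learned depthwise filters.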

This design is a strategic choice for traffic sign detection (TSD). The depthwise convolution introduces a much-needed spatial prior, helping the model focus on localized structures like sign boundaries. The gating mechanism provides adaptive, content-dependent feature refinement, enhancing robustness to noise and complex backgrounds. Compared to a standard FFN, the CGLU offers a more powerful and efficient nonlinear transformation that is sensitive to spatial details, which is essential for accurately locating small objects. This improvement is quantitatively validated through ablation studies in Section 4.