Detection of Fake News in Romanian: LLM-Based Approaches to COVID-19 Misinformation

Abstract

1. Introduction

- Introduce a dataset of 627 manually curated and annotated articles for classification of false information that reflects the cultural and linguistic adaptation of COVID-19-related narratives in the Romanian context;

- Provide a complementary dataset of 7950 unannotated articles from the same domain and period, intended to support semi-supervised learning approaches;

- Assess the effectiveness of both Romanian-trained LLMs and multilingual LLMs in distinguishing real news from various forms of misinformation;

- Analyze detection difficulties across different misinformation types, particularly comparing structured narratives (e.g., vaccine misinformation) to unstructured narratives (e.g., conspiracy theories, political misinformation);

- Examine the impact of fine-tuning strategies on improving model performance, evaluating both supervised and semi-supervised learning approaches.

2. Related Work

3. Method

3.1. Defining Fake News

3.2. Dataset

- False or misleading claims contradicting scientific evidence or official health guidelines.

- Sensationalist or alarmist language designed to provoke emotional reactions rather than inform.

- Lack of credible sources, with writers often relying on anonymous experts or conspiracy theorists.

- Mimicry of journalistic conventions, using fabricated statistics, pseudo-scientific jargon, or misleading headlines to enhance credibility.

- Vaccination Narratives—These sub-narratives challenge the safety, efficacy, or legitimacy of vaccines;

- Accusations of Authoritarianism and Dystopia—Public health measures are recast as precursors to totalitarian control;

- Criticism of National and International Actors—Discredits political and institutional responses;

- Criticism of Restrictions—Opposes mitigation efforts such as lockdowns and mask mandates;

- Geopolitical Narratives—Link the pandemic to global rivalries and blame those rivalries for negative effects;

- Minimization of COVID-19 Impact—Downplays the pandemic’s severity;

- Health-Related Falsehoods and Cultural Claims;

- Conspiracy Theories—Suggest orchestrated manipulation by hidden elites;

- Satire—Though minimal in the dataset, some content was framed using irony or exaggeration, making intent and impact difficult to assess.

3.3. Baseline: Classical ML Based on Linguistic Features

3.4. Tokenization, Prompt Structure and Hyperparameters

- Start: include as much as possible from the start;

- End: include as much as possible from the end;

- 1/2-1/2: split the remaining space in half, one half from the beginning and one half from the end;

- 1/3-2/3: split the remaining space into three parts, one from the beginning and two from the end;

- 2/3-1/3: split the remaining space into three parts, two from the beginning and one from the end.
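The five pruning strategies listed above can be illustrated with a minimal sketch. It assumes the article has already been tokenized into a list of token ids and that `budget` is the number of tokens left for the article; the function name `prune_tokens`, the rounding rule (integer division for the head, remainder for the tail), and the strategy keys are illustrative assumptions, not the authors' implementation.

```python
from typing import List, Sequence

# Share of the budget taken from the beginning of the article, as (numerator, denominator).
# The keys mirror the strategy names used in the list above.
HEAD_SHARE = {
    "start": (1, 1),
    "end": (0, 1),
    "1/2-1/2": (1, 2),
    "1/3-2/3": (1, 3),
    "2/3-1/3": (2, 3),
}


def prune_tokens(tokens: Sequence[int], budget: int, strategy: str) -> List[int]:
    """Keep at most `budget` tokens, taken from the head and/or tail of `tokens`."""
    if strategy not in HEAD_SHARE:
        raise ValueError(f"unknown pruning strategy: {strategy!r}")
    tokens = list(tokens)
    if budget <= 0:
        return []
    if len(tokens) <= budget:
        return tokens  # nothing to prune
    numerator, denominator = HEAD_SHARE[strategy]
    head = budget * numerator // denominator  # tokens kept from the beginning
    tail = budget - head                      # tokens kept from the end
    return tokens[:head] + tokens[len(tokens) - tail:]


if __name__ == "__main__":
    article = list(range(12))  # stand-in for token ids
    for name in HEAD_SHARE:
        print(name, prune_tokens(article, 6, name))
```

With a 12-token input and a budget of 6, the `1/3-2/3` strategy keeps the first two and the last four tokens, which matches the description of one part from the beginning and two from the end.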

- For one-shot and three-shot variants: Each few-shot example could use up to 768 tokens, while the current article received the remainder of the allocated space.

- For the “all” setting: Each few-shot example was allocated a fixed length of 200 tokens, while the current article received the remainder of the available space.

- In the zero-shot setting:

  - [Task Introduction] [Classes w/o Class Description] [Task Description] [Output Example] [Input]

  - [Task Introduction] [Classes w/ Class Description] [Task Description] [Output Example] [Input]

- In the few-shot setting:

  - [Task Introduction] [Classes w/o Class Description] [Task Description] [Output Example] [Few-Shot Examples] [Input]

  - [Task Introduction] [Classes w/ Class Description] [Task Description] [Output Example] [Few-Shot Examples] [Input]
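The prompt layouts and the few-shot token allowances described above can be sketched as follows. This is a minimal illustration under stated assumptions: the names `build_prompt` and `article_budget`, the `context_limit` and `fixed_prompt_tokens` parameters, and the plain-text separators are hypothetical, since the source specifies only the order of the prompt blocks and the per-example limits (768 tokens for one-shot and three-shot, a fixed 200 tokens for "all").

```python
from typing import Dict, List, Optional, Sequence


def build_prompt(
    task_intro: str,
    classes: Sequence[str],
    task_description: str,
    output_example: str,
    article: str,
    class_descriptions: Optional[Dict[str, str]] = None,
    few_shot_examples: Sequence[str] = (),
) -> str:
    """Assemble the blocks in the order given by the templates above."""
    if class_descriptions:
        # "Classes w/ Class Description" variant
        class_block = "\n".join(
            f"- {name}: {class_descriptions.get(name, '')}" for name in classes
        )
    else:
        # "Classes w/o Class Description" variant
        class_block = "\n".join(f"- {name}" for name in classes)
    parts: List[str] = [task_intro, class_block, task_description, output_example]
    if few_shot_examples:
        parts.append("\n\n".join(few_shot_examples))  # few-shot setting only
    parts.append(article)  # the [Input] block always comes last
    return "\n\n".join(parts)


def article_budget(
    context_limit: int,
    fixed_prompt_tokens: int,
    shot_lengths: Sequence[int],
    setting: str,
) -> int:
    """Tokens left for the current article after the few-shot examples are placed."""
    if setting == "all":
        used = 200 * len(shot_lengths)  # fixed 200-token slot per example
    else:
        used = sum(min(n, 768) for n in shot_lengths)  # up to 768 tokens per example
    return max(context_limit - fixed_prompt_tokens - used, 0)
```

For example, with an assumed 4096-token context, 300 tokens of fixed prompt text, and three examples of 1000, 500, and 100 tokens, `article_budget(4096, 300, [1000, 500, 100], "three")` leaves 2428 tokens for the article.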

3.5. Models and Scoring

3.6. Zero-Shot, Few-Shot and Supervised Fine-Tuning

3.7. Semi-Supervised Learning

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| EDA | Easy Data Augmentation |
| LLM | Large Language Model |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| RNN | Recurrent Neural Network |
| SSL | Semi-Supervised Learning |
| SFT | Supervised Fine-Tuning |
| SVM | Support Vector Machine |
| WHO | World Health Organization |

Appendix A. Hyperparameter Ablation Study

| Hyperparameter | Value | Few-Shot F1 Weighted | SFT F1 Weighted | SSL F1 Weighted |
|---|---|---|---|---|
| Pruning Strategy | start | 65.74% | 64.06% | - |
| Pruning Strategy | end | 65.53% | 65.70% | - |
| Pruning Strategy | 1/3-2/3 | 66.59% | 64.24% | - |
| Pruning Strategy | 2/3-1/3 | 65.76% | 66.68% | - |
| Pruning Strategy | 1/2-1/2 | 66.88% | 64.10% | - |
| Class Description | yes | 66.83% | 68.76% | - |
| Class Description | no | 64.33% | 60.66% | - |
| Few-Shot Strategy | 0 | 26.29% | - | - |
| Few-Shot Strategy | 1 | 52.42% | - | - |
| Few-Shot Strategy | 3 | 42.18% | - | - |
| Few-Shot Strategy | all | 42.74% | - | - |
| Confidence Threshold | 0.80 | - | - | 68.68% |
| Confidence Threshold | 0.85 | - | - | 70.20% |
| Confidence Threshold | 0.90 | - | - | 74.66% |
| Confidence Threshold | 0.925 | - | - | 68.04% |
| Confidence Threshold | 0.95 | - | - | 67.12% |
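The Confidence Threshold rows in the table above refer to the semi-supervised setting, where a prediction on an unannotated article is typically kept as a pseudo-label only when the model is sufficiently confident. The sketch below shows that filtering step in isolation. It assumes per-class probabilities are already available as lists of floats and that the comparison is inclusive (`>=`); the function name `select_pseudo_labels` and both assumptions are illustrative, and the source does not state how confidence is computed in the authors' pipeline.

```python
from typing import List, Sequence, Tuple


def select_pseudo_labels(
    probabilities: Sequence[Sequence[float]],
    threshold: float = 0.90,
) -> List[Tuple[int, int]]:
    """Return (example_index, predicted_class) pairs whose top probability meets the threshold."""
    selected: List[Tuple[int, int]] = []
    for index, probs in enumerate(probabilities):
        # Predicted class = position of the highest probability.
        label = max(range(len(probs)), key=probs.__getitem__)
        if probs[label] >= threshold:
            selected.append((index, label))
    return selected


if __name__ == "__main__":
    unlabeled_probs = [
        [0.95, 0.03, 0.02],  # confident, kept
        [0.60, 0.30, 0.10],  # not confident, dropped
        [0.05, 0.05, 0.90],  # exactly at the threshold, kept
    ]
    print(select_pseudo_labels(unlabeled_probs, threshold=0.90))
```

A lower threshold admits more pseudo-labeled articles but with noisier labels, while a higher one keeps fewer, cleaner examples; the table reports the best SSL F1 Weighted score at 0.90.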


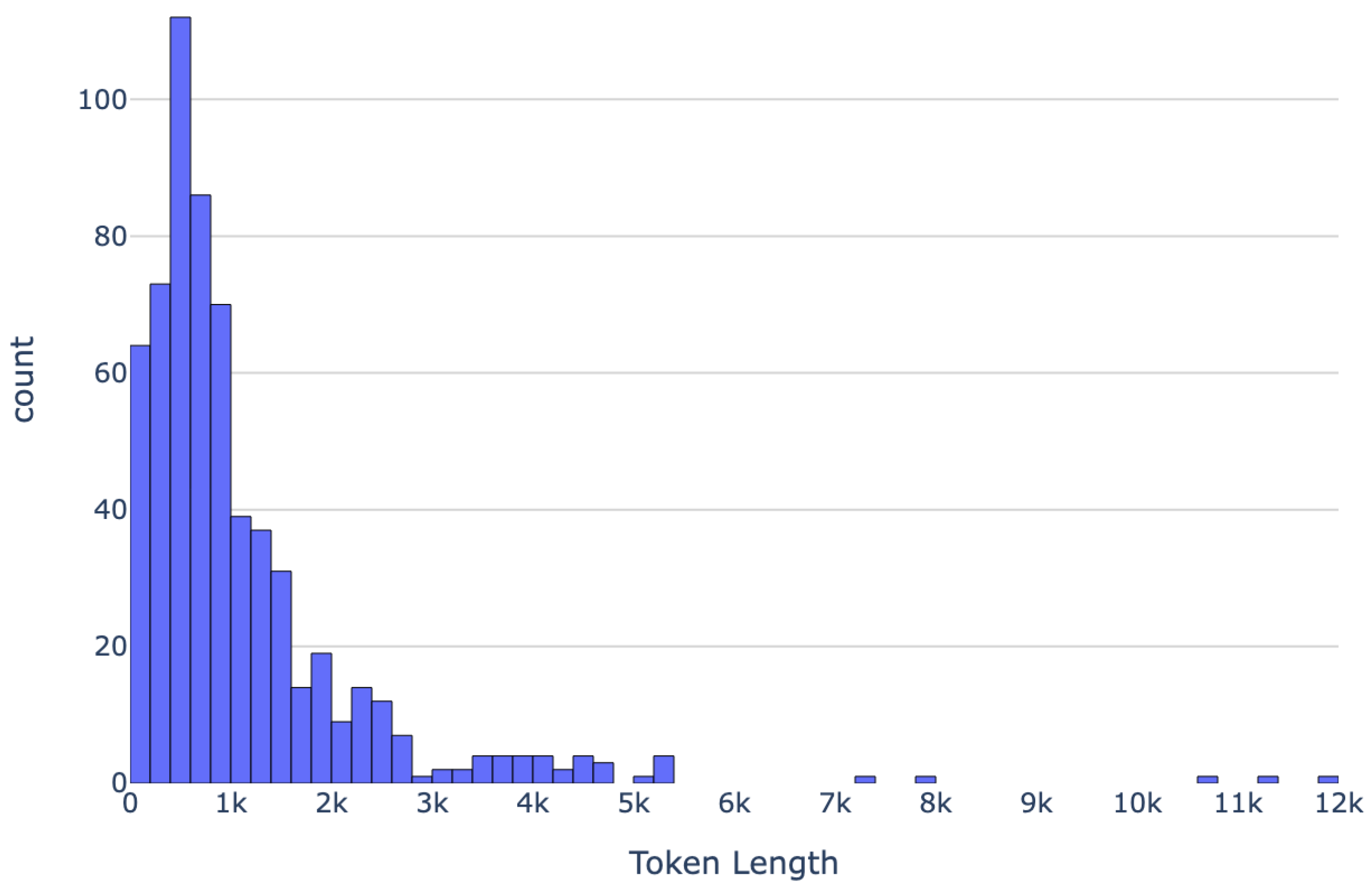

| Count | Mean | St. Dev | Min | 25% | 50% | 75% | Max |
|---|---|---|---|---|---|---|---|
| 290 | 1243.23 | 1409.20 | 41.00 | 509.50 | 840.50 | 1448.25 | 12,177.00 |

| Scenario | Super-Narrative | RoMistral | Llama 3.1 8B | RoLlama 3.1 8B | Llama 3.3 70B |

|---|---|---|---|---|---|

| Few-Shot | Vaccine-related narratives | 0.00% | 10.00% | 8.33% | 50.00% |

| Claims of authoritarianism and dystopia | 19.05% | 80.00% | 0.00% | 28.57% | |

| Conspiracy theories | 66.67% | 30.77% | 46.15% | 69.57% | |

| Criticism of restrictions | 50.00% | 53.33% | 50.00% | 66.66% | |

| Criticism of the national and international actors | 57.14% | 0.00% | 0.00% | 0.00% | |

| Downplaying COVID-19 | 0.00% | 61.54% | 44.44% | 62.50% | |

| Geo-politics | 57.14% | 44.44% | 0.00% | 57.14% | |

| Health-related narratives | 50.00% | 43.33% | 61.90% | 82.05% | |

| Satire | 66.67% | 26.67% | 26.67% | 70.00% | |

| Real news | 63.16% | 33.85% | 59.74% | 76.29% | |

| F1 Weighted | 62.24% | 39.36% | 48.21% | 70.49% | |

| F1 Macro | 45.34% | 38.39% | 29.72% | 56.28% | |

| F1 Micro | 58.73% | 38.89% | 46.03% | 68.25% | |

| SFT | Vaccine-related narratives | 80.00% | 80.00% | 80.00% | - |

| Claims of authoritarianism and dystopia | 0.00% | 40.00% | 33.33% | - | |

| Conspiracy theories | 62.50% | 42.86% | 53.33% | - | |

| Criticism of restrictions | 50.00% | 44.44% | 44.44% | - | |

| Criticism of the national and international actors | 0.00% | 0.00% | 0.00% | - | |

| Downplaying COVID-19 | 58.82% | 52.63% | 55.81% | - | |

| Geo-politics | 57.14% | 85.71% | 85.71% | - | |

| Health-related narratives | 80.00% | 85.71% | 72.73% | - | |

| Satire | 89.66% | 84.62% | 85.71% | - | |

| Real news | 80.37% | 81.08% | 77.67% | - | |

| F1 Weighted | 71.88% | 71.96% | 69.62% | - | |

| F1 Macro | 55.85% | 59.71% | 58.88% | - | |

| F1 Micro | 73.02% | 73.02% | 69.84% | - | |

| SSL | Vaccine-related narratives | 50.00% | 80.00% | 80.00% | - |

| Claims of authoritarianism and dystopia | 40.00% | 40.00% | 33.33% | - | |

| Conspiracy theories | 62.50% | 58.82% | 40.00% | - | |

| Criticism of restrictions | 40.00% | 60.00% | 44.44% | - | |

| Criticism of the national and international actors | 0.00% | 0.00% | 0.00% | - | |

| Downplaying COVID-19 | 60.60% | 63.15% | 58.82% | - | |

| Geo-politics | 80.00% | 75.00% | 75.00% | - | |

| Health-related narratives | 83.33% | 92.30% | 85.00% | - | |

| Satire | 91.67% | 91.67% | 91.67% | - | |

| Real news | 84.68% | 86.53% | 82.71% | - | |

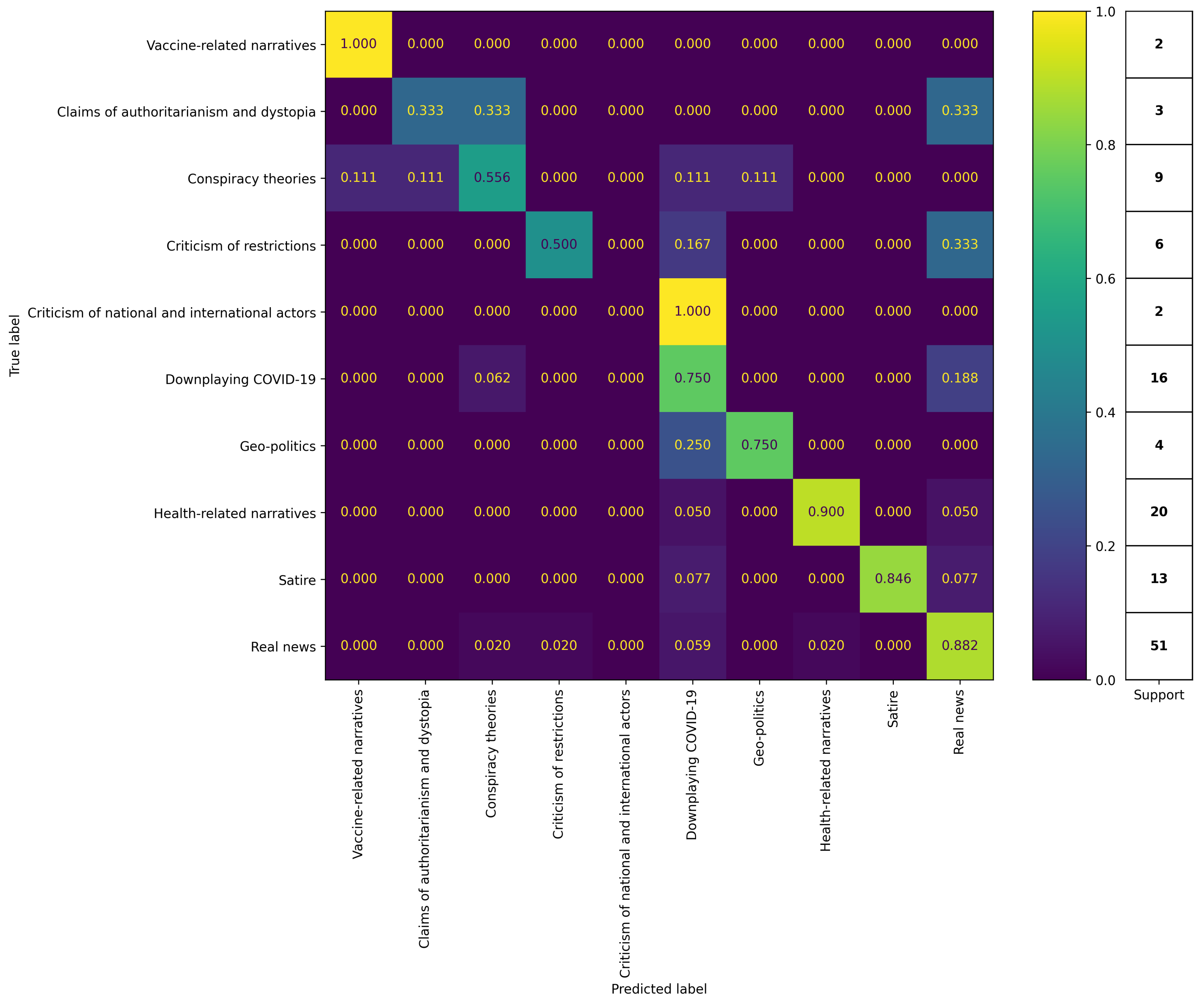

| F1 Weighted | 75.31% | 78.81% | 71.74% | - | |

| F1 Macro | 59.27% | 64.74% | 58.10% | - | |

| F1 Micro | 76.19% | 79.36% | 73.01% | - |

| Setting | Super-Narrative | SVM All | SVM + PCA | SVM + MW | SSL Llama 3.1 8B |
|---|---|---|---|---|---|
| Binary | Fake News | 74.70% | 75.13% | 70.73% | 90.54% |
| Binary | Real News | 51.16% | 10.91% | 45.45% | 86.54% |
| Binary | F1 Weighted | 65.17% | 49.13% | 60.50% | 88.92% |
| Binary | F1 Macro | 62.93% | 43.02% | 58.09% | 88.54% |
| Binary | F1 Micro | 66.66% | 61.11% | 61.90% | 88.88% |
| Multi-Class | Vaccine-related narratives | 0.00% | 0.00% | 33.33% | 80.00% |
| Multi-Class | Claims of authoritarianism and dystopia | 0.00% | 33.33% | 0.00% | 40.00% |
| Multi-Class | Conspiracy theories | 11.76% | 10.53% | 10.00% | 58.82% |
| Multi-Class | Criticism of restrictions | 0.00% | 0.00% | 0.00% | 60.00% |
| Multi-Class | Criticism of the national and international actors | 0.00% | 0.00% | 0.00% | 0.00% |
| Multi-Class | Downplaying COVID-19 | 23.53% | 23.53% | 40.00% | 63.15% |
| Multi-Class | Geo-politics | 0.00% | 0.00% | 22.22% | 75.00% |
| Multi-Class | Health-related narratives | 34.15% | 22.22% | 38.89% | 92.30% |
| Multi-Class | Satire | 78.26% | 80.00% | 78.57% | 91.67% |
| Multi-Class | Real news | 55.67% | 55.77% | 47.52% | 86.53% |
| Multi-Class | F1 Weighted | 39.86% | 38.89% | 40.54% | 78.81% |
| Multi-Class | F1 Macro | 20.34% | 22.54% | 27.05% | 64.74% |
| Multi-Class | F1 Micro | 38.10% | 38.89% | 40.48% | 79.36% |

| Scenario | Model | F1 Weighted | F1 Macro | F1 Micro |
|---|---|---|---|---|
| Few-Shot | RoMistral | 58.51% | 36.21% | 55.56% |
| Few-Shot | Llama 8B | 33.94% | 31.51% | 30.95% |
| Few-Shot | RoLlama 8B | 44.62% | 31.55% | 42.06% |
| Few-Shot | Llama 70B | 61.67% | 38.18% | 61.11% |
| SFT | RoMistral | 65.16% | 35.22% | 68.25% |
| SFT | Llama 8B | 65.59% | 46.09% | 69.05% |
| SFT | RoLlama 8B | 64.18% | 38.60% | 65.87% |
| SSL | RoMistral | 71.35% | 43.79% | 73.81% |
| SSL | Llama 8B | 73.73% | 50.96% | 76.19% |
| SSL | RoLlama 8B | 67.18% | 41.01% | 69.84% |
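The tables above report three F1 averages. As a reminder of how they differ, the sketch below computes them from scratch for single-label classification: macro averages the per-class F1 scores equally, weighted averages them by class support, and micro pools all decisions (which, for single-label multi-class data, equals accuracy). The function name `f1_report` and the toy labels are illustrative assumptions; the source does not say which library the authors used for scoring.

```python
from collections import Counter
from typing import Dict, Sequence


def f1_report(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute macro, weighted, and micro F1 for single-label classification."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)  # number of true examples per class
    pairs = list(zip(y_true, y_pred))
    per_class: Dict[int, float] = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denominator = 2 * tp + fp + fn
        per_class[label] = 2 * tp / denominator if denominator else 0.0
    total = len(y_true)
    return {
        "macro": sum(per_class.values()) / len(labels),
        "weighted": sum(per_class[c] * support[c] for c in labels) / total,
        "micro": sum(t == p for t, p in pairs) / total,
    }


if __name__ == "__main__":
    truth = [0, 0, 0, 1, 1, 2]
    preds = [0, 0, 1, 1, 1, 2]
    print(f1_report(truth, preds))
```

Because macro F1 gives every class the same weight, a class with zero F1 (such as "Criticism of the national and international actors" in most configurations) pulls it well below the weighted and micro scores, which is consistent with the gaps visible in the tables.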

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dima, A.; Ilis, E.; Florea, D.; Dascalu, M. Detection of Fake News in Romanian: LLM-Based Approaches to COVID-19 Misinformation. Information 2025, 16, 796. https://doi.org/10.3390/info16090796
