EDTF: A User-Centered Approach to Digital Educational Games Design and Development

Abstract

1. Introduction

2. The Design Challenges of Digital Serious Games

2.1. State of the Art on Design Frameworks

2.1.1. Overview of Existing Frameworks

2.1.2. Properties of DSG Design Frameworks

2.2. Empathic and Thinking Design: Theoretical Lens

2.3. Application of ED-DT Principles in Educational Game Design

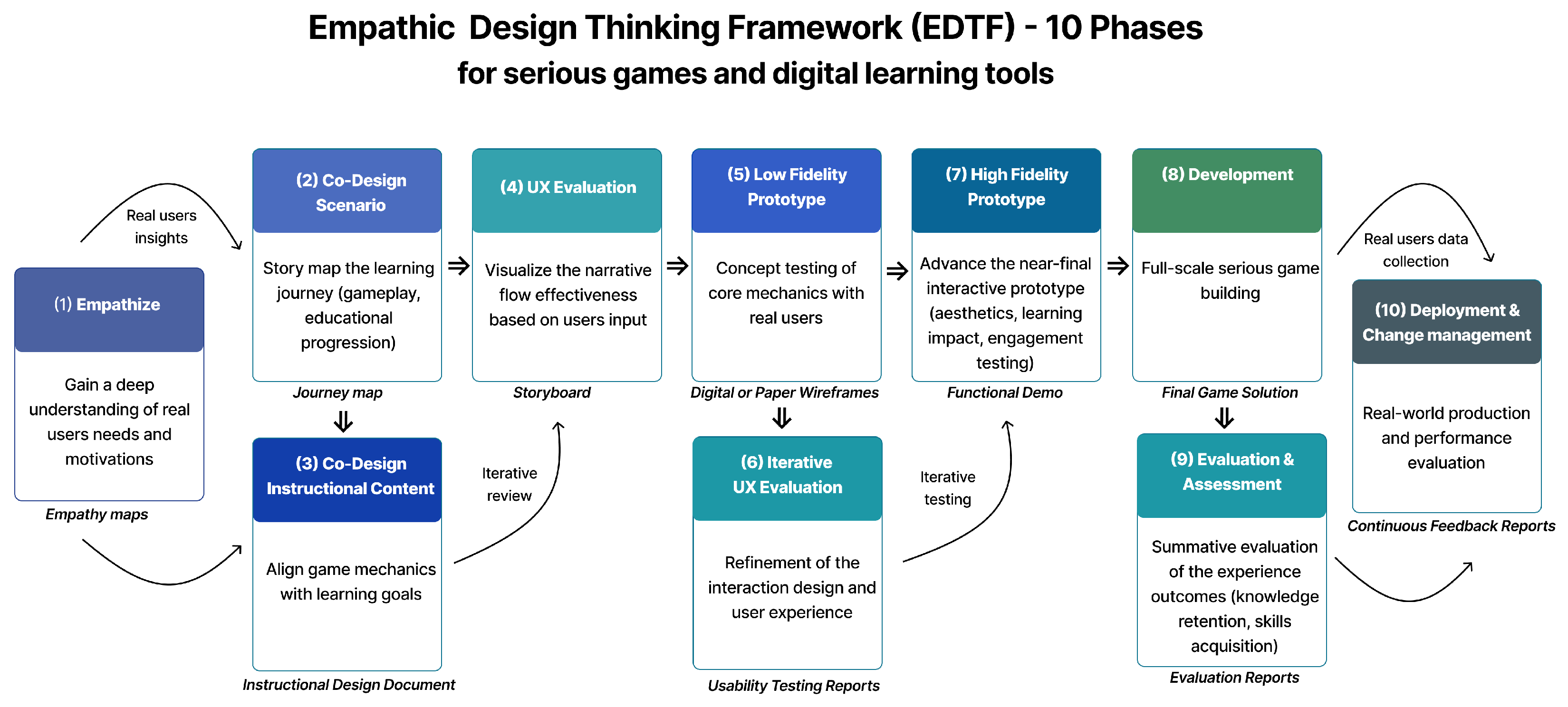

3. Empathic Design Thinking Framework (EDTF)

- (a) Emotion and User Experience as Core Drivers: The EDTF positions emotional resonance, educator and learner (player) perception, and contextual user needs as design anchors, not afterthoughts. While traditional models or frameworks often include players in playtesting or final usability stages, the EDTF embeds their affective needs from the first step via empathic research techniques such as shadowing, empathy mapping, journey maps, co-creation workshops, and value-centered interviews.

- (b) Systematic Integration of Empathic Design and Design Thinking Methods: Unlike models that rely on generalized “user-centered” claims, the EDTF outlines specific tools and methods from empathic design [47,57,58] and design thinking [48] in each phase. It supports structured problem framing, user segmentation, emotional mapping, prototyping, and affective feedback, with design checkpoints based on empathic insight.

- (c) Designed for Educational Constraints: The EDTF is tailored for resource-constrained educational contexts, acknowledging that small teams, limited funding, and institutional silos often inhibit full-cycle design. Therefore, the framework prioritizes lightweight, high-impact tools and participatory shortcuts (e.g., lean empathy mapping, emotional card sorting, stakeholder personas) that make empathic co-design feasible in practice.

3.1. EDTF 10 Phases

3.1.1. Phase 1: Empathize

- Context: Game conceptualization and foundational mechanics.
- UX Focus: Evaluating user behaviors, values, pain points, emotional responses, and motivations.
- Opportunity Potential: Uncover visionary potential to address real user challenges.
- Gaps/Limitations Addressed: Overcomes the lack of attention to users’ implicit motivations, emotional context, and experiential needs identified in prior approaches.
- Key Activities:

3.1.2. Phase 2: Co-Design Scenario

- Context: Structuring the learning journey and narrative.
- UX Focus: Aligning story progression with educational goals through collaborative mapping.
- Opportunity Potential: Encourage new perspectives in learning via interactive storytelling (paradigm shift).
- Gaps/Limitations Addressed: Addresses the limited integration of learner perspectives and educational alignment in narrative design found in existing frameworks.
- Key Activities:

3.1.3. Phase 3: Co-Design Instructional Content

- Context: Syncing gameplay mechanics with learning goals.
- UX Focus: Ensuring instructional strategies meet learner needs.
- Opportunity Potential: Innovate through learning technology integration (technically challenging).
- Gaps/Limitations Addressed: Overcomes insufficient alignment between game mechanics and pedagogical objectives common in prior frameworks.
- Key Activities:

3.1.4. Phase 4: UX Evaluation (Storyboard)

- Context: Ensuring visual and narrative coherence.
- UX Focus: Assessing storytelling clarity and user engagement.
- Opportunity Potential: Promote cross-functional insight (multidisciplinary collaboration).
- Gaps/Limitations Addressed: Addresses the lack of early narrative validation and of coordination between story and instructional design found in existing approaches.
- Key Activities:

3.1.5. Phase 5: Low-Fidelity Prototyping

- Context: Early-stage concept validation.
- UX Focus: Cost-effective usability testing.
- Opportunity Potential: Enable fast iteration and feedback (actionable).
- Gaps/Limitations Addressed: Resolves the issue of late usability testing by enabling early detection of design flaws and faster iteration cycles.
- Key Activities:

3.1.6. Phase 6: Iterative UX Evaluation

- Context: Ongoing interaction design refinement.
- UX Focus: Optimizing user experience through repeated validation.
- Opportunity Potential: Ensure adaptability through feedback (continuous improvement).
- Gaps/Limitations Addressed: Addresses the insufficient iterative testing and refinement common in existing design processes.
- Key Activities:

3.1.7. Phase 7: High-Fidelity Prototyping

- Context: Engagement testing and interaction validation.
- UX Focus: Fine-tune interactivity, aesthetic appeal, and educational impact.
- Opportunity Potential: Resolve technical hurdles pre-launch (technically challenging).
- Gaps/Limitations Addressed: Addresses the lack of realistic, integrated testing environments for engagement and learning effectiveness before full development.
- Key Activities:

3.1.8. Phase 8: Development

- Context: Transition from prototype to production.
- UX Focus: Ensure fidelity to tested user needs and educational intent.
- Opportunity Potential: Enable execution at scale (multidisciplinary execution).
- Gaps/Limitations Addressed: Addresses the difficulty of maintaining design integrity and quality control during full-scale production and handoff.
- Key Activities:

3.1.9. Phase 9: Evaluation and Assessment

- Context: Post-deployment learning outcome evaluation.
- UX Focus: Assess real-world educational and experiential impact.
- Opportunity Potential: Drive long-term effectiveness (far-reaching).
- Gaps/Limitations Addressed: Overcomes the lack of comprehensive impact evaluation and feedback loops after deployment in existing frameworks.
- Key Activities:

3.1.10. Phase 10: Deployment and Change Management

- Context: Real-world scaling and lifecycle support.
- UX Focus: Maintain and adapt the product based on user feedback.
- Opportunity Potential: Secure sustainability and adoption (actionable).
- Gaps/Limitations Addressed: Resolves the insufficient focus on ongoing maintenance, scalability, and user-driven evolution in current design approaches.
- Key Activities:
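Because every phase card follows the same schema (Context, UX Focus, Opportunity Potential, Gaps/Limitations Addressed, Key Activities), teams that track the framework in their own planning tools may find it convenient to encode the cards as plain records. The sketch below is one hypothetical encoding: `PhaseCard`, `PHASES`, and `phases_tagged` are illustrative names, not part of the EDTF, and only the context and the parenthetical opportunity labels are transcribed from the cards above (Phase 1 gives no parenthetical label, so it is stored as `None`).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PhaseCard:
    """Hypothetical record for one EDTF phase card (subset of its fields)."""

    number: int
    name: str
    context: str
    # Parenthetical label from "Opportunity Potential"; None when the card gives none.
    opportunity_tag: Optional[str]


PHASES = [
    PhaseCard(1, "Empathize", "Game conceptualization and foundational mechanics", None),
    PhaseCard(2, "Co-Design Scenario", "Structuring the learning journey and narrative", "paradigm shift"),
    PhaseCard(3, "Co-Design Instructional Content", "Syncing gameplay mechanics with learning goals", "technically challenging"),
    PhaseCard(4, "UX Evaluation (Storyboard)", "Ensuring visual and narrative coherence", "multidisciplinary collaboration"),
    PhaseCard(5, "Low-Fidelity Prototyping", "Early-stage concept validation", "actionable"),
    PhaseCard(6, "Iterative UX Evaluation", "Ongoing interaction design refinement", "continuous improvement"),
    PhaseCard(7, "High-Fidelity Prototyping", "Engagement testing and interaction validation", "technically challenging"),
    PhaseCard(8, "Development", "Transition from prototype to production", "multidisciplinary execution"),
    PhaseCard(9, "Evaluation and Assessment", "Post-deployment learning outcome evaluation", "far-reaching"),
    PhaseCard(10, "Deployment and Change Management", "Real-world scaling and lifecycle support", "actionable"),
]


def phases_tagged(tag: str) -> List[int]:
    """Return the numbers of the phases whose opportunity label equals `tag`."""
    return [card.number for card in PHASES if card.opportunity_tag == tag]


print(phases_tagged("technically challenging"))  # [3, 7]
```

A query like this makes it easy to see, for example, which phases the cards themselves flag as technically challenging, so that resourcing can be planned for them early.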

4. Evaluation Method

- RQ1: How robust, usable, and applicable is the EDTF?

- RQ2: What is the perceived value attributed to each of the EDTF phases?

4.1. Stage 1: Quantitative Evaluation Through Questionnaires

4.2. Stage 2: Qualitative Evaluation Through In-Depth Interviews

5. Results

5.1. Quantitative Evaluation Results

5.1.1. UMUX-Lite Results

5.1.2. System Usability Scale (SUS) Results

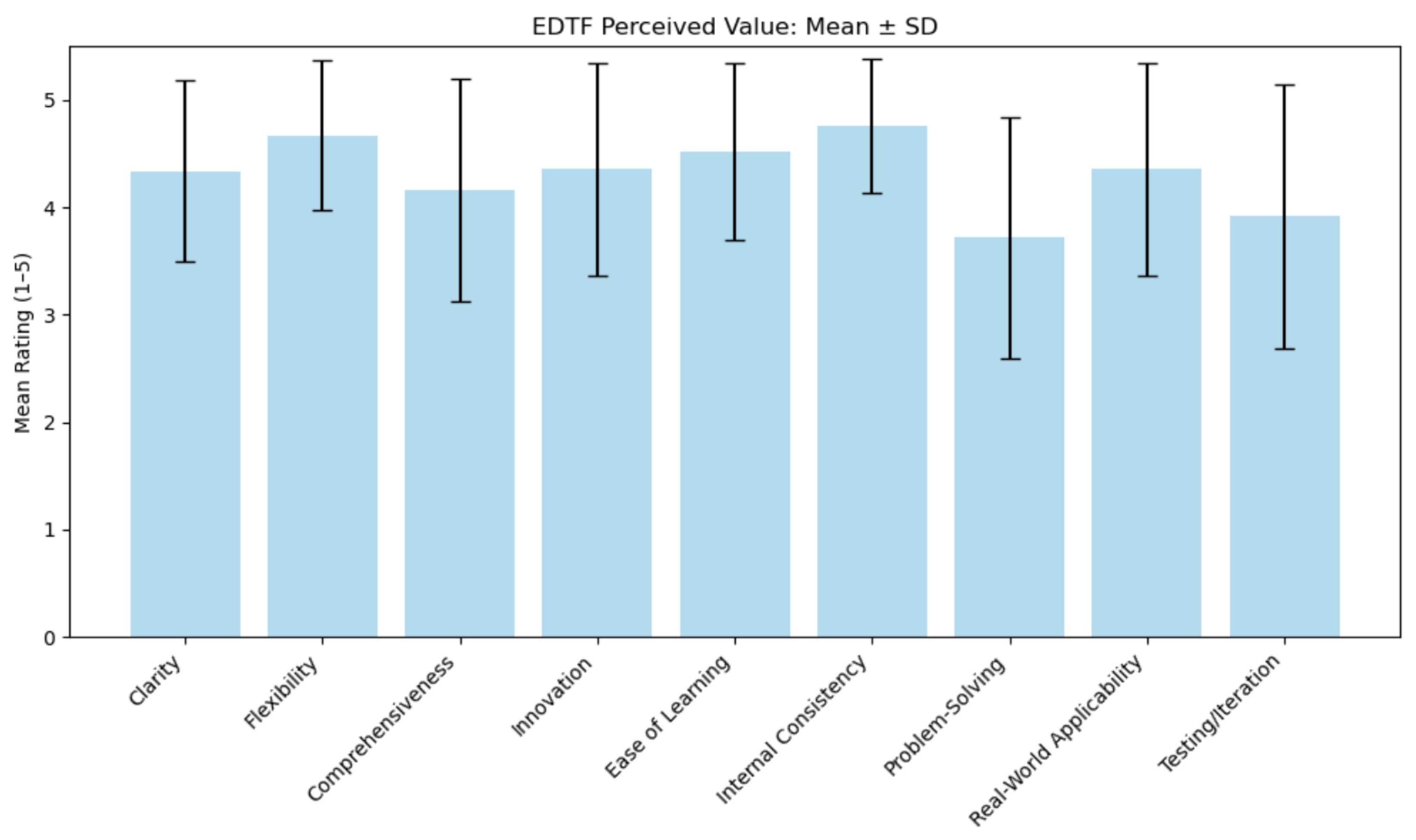

5.1.3. PEQ: General Evaluation Results

- Clarity: Respondents generally found the framework clearly explained and easy to understand (Mean = 4.34, SD = 0.84). Most participants agreed that the structure was logical and well-presented, though a few indicated they could benefit from more detailed guidance.

- Flexibility: Flexibility emerged as one of the strongest aspects of the framework (Mean = 4.67, SD = 0.70). Participants consistently felt the EDTF could be adapted to a wide variety of learning game types, audiences, and design contexts, highlighting its broad applicability.

- Comprehensiveness: Most respondents felt the framework effectively covered essential components of serious game development (Mean = 4.16, SD = 1.04). While the overall feedback was positive, some suggested there may be room to expand or clarify certain areas for a more complete process.

- Innovation: Innovation received a strong positive rating (Mean = 4.36, SD = 0.99), indicating that most experts saw the EDTF as offering fresh, unique, and useful perspectives in digital serious game design and development.

- Ease of Learning: Participants found the framework easy to learn and grasp (Mean = 4.52, SD = 0.82), especially after reviewing its phases. The overall consensus was strong, although a few users noted they would benefit from additional learning resources.

- Internal Consistency: Internal consistency was the highest-rated dimension (Mean = 4.76, SD = 0.62). Nearly all participants agreed that the framework’s components worked well together and formed a coherent whole. This was seen as a major strength, reinforcing its structured and integrated nature.

- Support for Problem-Solving: Feedback on this aspect was more varied (Mean = 3.72, SD = 1.12). While some found the framework helpful for addressing design challenges, others felt it could offer more targeted guidance.

- Real-World Applicability: Participants largely agreed that the framework could be effectively applied in real-world scenarios (Mean = 4.36, SD = 0.99). While most viewed it as practical, a few expressed the need for more real-life examples or case studies to better understand how it performs outside of theoretical settings.

- Support for Testing and Iteration: Opinions on testing and iteration were the most divided (Mean = 3.92, SD = 1.23). Some experts found the guidance sufficient, while others felt the framework needed more emphasis on iterative processes.
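The dimension-level statements above ("highest-rated", "more varied", "most divided") follow directly from the reported means and standard deviations. The short sketch below recomputes those rankings from the values transcribed above; the helper names are illustrative, and the 4.0 cut-off used by `below` (roughly "agree" on a 5-point scale) is an assumption for illustration, not a threshold used in the study.

```python
from typing import Dict, List, Tuple

# (mean, SD) for each PEQ dimension, transcribed from the results above.
PEQ: Dict[str, Tuple[float, float]] = {
    "Clarity": (4.34, 0.84),
    "Flexibility": (4.67, 0.70),
    "Comprehensiveness": (4.16, 1.04),
    "Innovation": (4.36, 0.99),
    "Ease of Learning": (4.52, 0.82),
    "Internal Consistency": (4.76, 0.62),
    "Support for Problem-Solving": (3.72, 1.12),
    "Real-World Applicability": (4.36, 0.99),
    "Support for Testing and Iteration": (3.92, 1.23),
}


def highest_mean(scores: Dict[str, Tuple[float, float]]) -> str:
    return max(scores, key=lambda dim: scores[dim][0])


def lowest_mean(scores: Dict[str, Tuple[float, float]]) -> str:
    return min(scores, key=lambda dim: scores[dim][0])


def most_divided(scores: Dict[str, Tuple[float, float]]) -> str:
    """Dimension with the largest SD, i.e., the least agreement among experts."""
    return max(scores, key=lambda dim: scores[dim][1])


def below(scores: Dict[str, Tuple[float, float]], threshold: float = 4.0) -> List[str]:
    """Dimensions whose mean falls under `threshold` (assumed cut-off)."""
    return [dim for dim, (m, _sd) in scores.items() if m < threshold]


print(highest_mean(PEQ))  # Internal Consistency
print(most_divided(PEQ))  # Support for Testing and Iteration
```

Keeping the mean and the SD side by side matters here: Support for Problem-Solving has the lowest mean, whereas Support for Testing and Iteration shows the widest disagreement.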

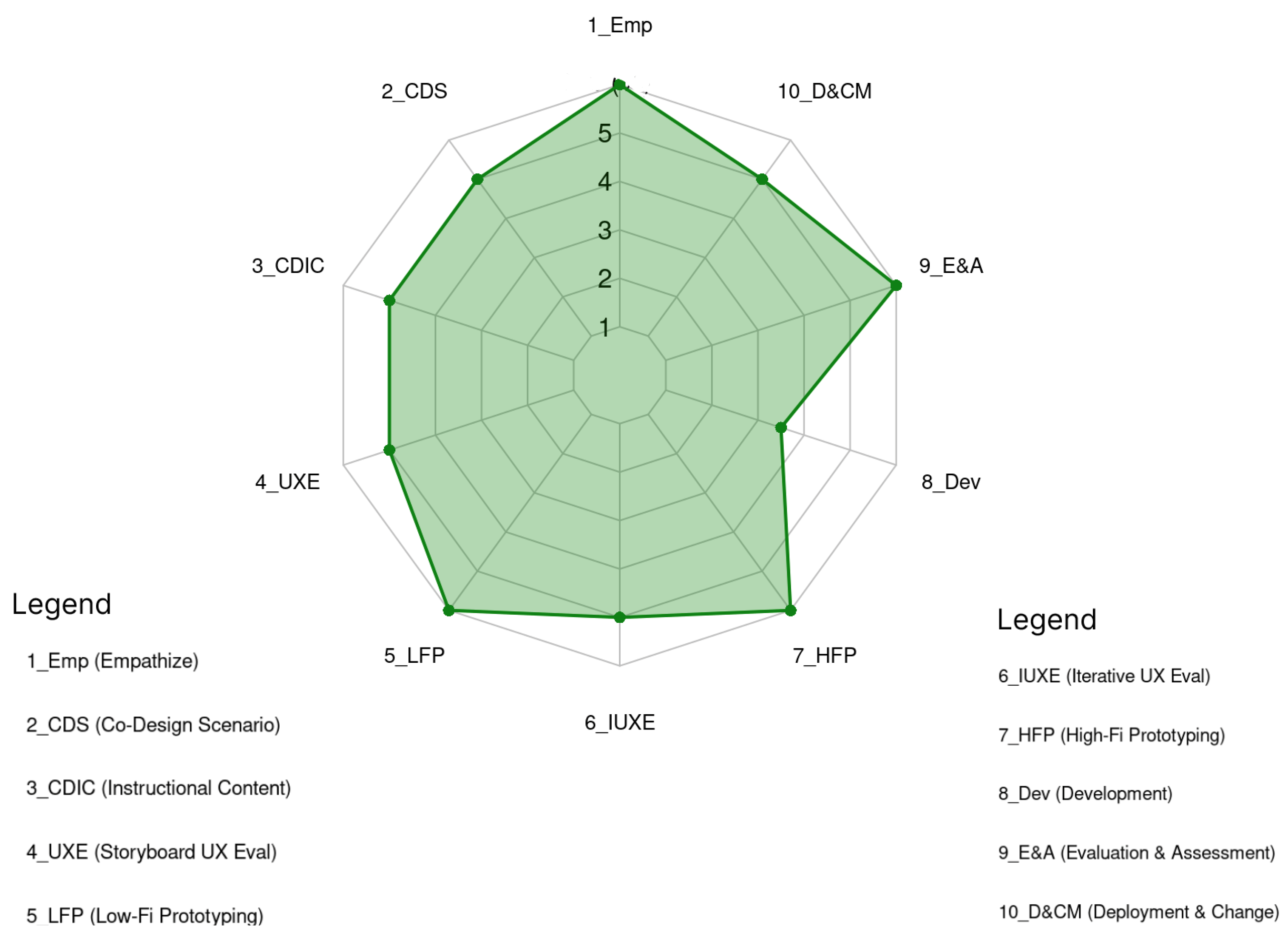

5.1.4. PEQ: Phase-by-Phase Evaluation Results

- Phase 1: Empathize (1_Emp) was unanimously recognized as the cornerstone of the framework. It anchors the process in real user needs, ensuring alignment across all subsequent phases. While its abstract nature may challenge beginners, its human-centered focus justifies its top rating.

- Phase 5: Low-Fidelity Prototyping (5_LFP) was applauded for turning ideas into testable forms early in the process, striking a practical balance between creativity and feasibility. Although resource-intensive for smaller teams, its value in supporting rapid, cost-effective iteration made it stand out.

- Phase 7: High-Fidelity Prototyping (7_HFP) played a pivotal role in validating user experience and learning effectiveness, bridging the gap between design and development. Despite being resource-heavy and technically demanding, it reduces downstream risk.

- Phase 9: Evaluation and Assessment (9_E&A) addressed a frequent shortcoming in other frameworks by emphasizing the measurement of learning outcomes. Experts appreciated its focus on educational goals and called for better tools to assess cognitive impact.

- Phase 2: Co-Design Scenario (2_CDS) helped align stakeholders early through learner journey mapping, though success heavily depends on facilitation skills. Experts recommended the use of templates to support teams with less experience.

- Phase 3: Co-Design Instructional Content (3_CDIC) ensured synergy between instructional content and gameplay. Its effectiveness, however, relied on strong interdisciplinary collaboration, which can be difficult to sustain in resource-limited settings.

- Phase 4: UX Evaluation – Storyboard (4_UXE) was appreciated for its ability to visualize mechanics and engage non-technical stakeholders. Practical and collaborative, it was seen more as a transitional tool than a primary driver of innovation.

- Phase 6: Iterative UX Evaluation (6_IUXE) was considered highly impactful for product refinement but laborious, often lacking clear protocols and requiring substantial user access and time.

- Phase 10: Deployment and Change Management (10_D&CM) was acknowledged for its importance in long-term adoption and sustainability. Experts noted that it is often underfunded and viewed as a “post-launch” phase rather than an integral part of the process. Calls were made for embedded feedback systems to improve its effectiveness.

- Phase 8: Development (8_Dev) received the lowest rating (3/5), primarily due to execution challenges. While technically indispensable, it was perceived as a bottleneck rather than an opportunity for innovation. Experts cited delays, underfunding, and collaboration breakdowns, often caused by siloed development efforts disconnected from earlier design phases. Better project management and integration were seen as necessary to improve its value and reliability within the process.

5.2. Qualitative Evaluation Results

5.2.1. Core Dimensions Evaluation

- Comprehensibility: Experts found the framework to be highly comprehensible, with 89% of participants feeling the structure was coherent. Its phased layout—from early ideation to final deployment—provided a clear and logical pathway. The progression through phases was especially appreciated for its alignment with typical development workflows. A minority (11%) suggested fine-tuning some of the terminology used in specific phases to further enhance clarity and understanding.

- Usefulness: Overall, 83% of experts recognized the framework’s tangible value in guiding the creation of digital educational games. Phases 7 to 9, which emphasize iterative testing and refinement, were particularly praised for promoting high-quality outcomes. Nonetheless, 17% of experts highlighted potential friction between the framework’s iteration cycles and the fast pace of agile development environments, pointing to possible scheduling difficulties under tight deadlines.

- Usability: Around 78% of participants indicated they could easily apply the EDTF in their workflows. However, some experts (22%) expressed concern that the testing-heavy stages could become resource-intensive, especially for smaller development teams operating with limited time or staff.

- Feasibility: The majority of experts (80%) believed the EDTF could be implemented successfully with adequate resources. However, participants also pointed out that effective use of the framework may require multidisciplinary capabilities—combining skills from design, pedagogy, technology, and user research—making it more challenging for teams lacking such diversity.

- Ease of Use: Lastly, ease of use emerged as one of the strongest areas, with a remarkable 94% of experts appreciating the clear, step-by-step format, noting that it made the framework intuitive and accessible. Only a small fraction (6%) mentioned that the final phases, often involving more complex technical development, could be difficult for teams less familiar with advanced tools or processes.

5.2.2. Reflections on EDTF Phases

“Understanding user pain points isn’t just a box to check—it’s the compass for everything that follows.”(P12)

“It forces subject-matter experts and designers to speak the same language early on.”(P9)

“Without this step, you risk creating a fun game that teaches nothing—or a lesson nobody wants to play.”(P7)

“Catching flaws on paper saves months of coding dead-ends.”(P17)

“Sketching things out helped us catch major misunderstandings before wasting development time.”(P2)

“This is where things get real—you stop designing for yourself and start designing for users.”(P10)

“You can’t test real learning without realistic interactions, and this is where that starts.”(P4)

“This is where interdisciplinary teams often fracture without strong project management.”(P13)

“Most frameworks stop at deployment, but measuring actual learning impact is revolutionary.”(P5)

“Launching is just the beginning—this phase ensures the game evolves with user needs.”(P16)

5.2.3. Expert Walkthrough: Challenges

5.2.4. Deep Dive into Phase 1: Empathize with End Users

- Detailed Flow

1. Participant Recruitment and Diversity Strategy

- Targeted segments: 8–12 participants per user group (e.g., high-school students, college instructors), covering both educators and learners. This range balances diversity of perspectives with practical constraints and aligns with established qualitative research guidelines, which suggest that 8–12 participants are typically sufficient to capture the breadth of user experiences and reach thematic saturation in heterogeneous groups [114].

- Incentives: Provided via partnerships with NGOs, schools, or universities (e.g., course credits or gift cards).
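The 8–12 range is justified by thematic saturation. One common way to monitor saturation while interviews are still running is a stopping rule: after a minimum base of interviews, stop recruiting once a run of consecutive interviews contributes no new theme. The sketch below is a hypothetical helper (`saturation_point`), not a procedure prescribed by the EDTF; the `base_size` and `run_length` defaults are illustrative assumptions, and the check is meant to be applied separately to each user group.

```python
from typing import List, Optional, Set


def saturation_point(
    interviews: List[Set[str]], base_size: int = 4, run_length: int = 2
) -> Optional[int]:
    """Return the 1-based interview count at which saturation is first reached.

    Assumed stopping rule: at least `base_size` interviews have been coded, and
    the last `run_length` interviews introduced no theme not already seen.
    Returns None if the rule is never satisfied.
    """
    seen: Set[str] = set()
    run = 0  # consecutive interviews that added no new theme
    for i, themes in enumerate(interviews, start=1):
        new_themes = themes - seen
        seen |= themes
        run = 0 if new_themes else run + 1
        if i >= base_size and run >= run_length:
            return i
    return None


# Illustrative coded themes for one user group (not study data).
group = [
    {"time pressure", "unclear rewards"},
    {"unclear rewards", "dense instructions"},
    {"dense instructions", "lack of feedback"},
    {"time pressure"},
    {"lack of feedback"},
    {"unclear rewards"},
]
print(saturation_point(group))  # 5
```

A returned `None` signals that the group has not yet saturated under the chosen rule, which argues for recruiting toward the upper end of the 8–12 range.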

2. Techniques for Empathizing

- Contextual Interviews [77]: Participants share recent learning challenges (e.g., “Tell me about the last time you struggled to understand a concept”). Interviews are adapted with real-time captioning and sign-language interpreters as needed.

- Empathy Mapping [76]: Collected insights are segmented using a template to identify patterns across user groups. For example, “Color-blind users rely on texture and shape” informs UI design in later phases by avoiding color-only cues.

- Emotional Mapping [14]: Emotional “heatmaps” highlight moments of frustration (e.g., dense instructions or unclear rewards), guiding emotional pacing in subsequent phases.
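An emotional "heatmap" can be produced by averaging participants' self-reported frustration at each moment of the learning or play experience and flagging the moments that exceed a threshold. The sketch below assumes frustration is rated on a 1–5 scale and uses a 3.5 threshold; both, along with the function name `frustration_hotspots`, are illustrative assumptions rather than parameters defined by the EDTF.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def frustration_hotspots(
    ratings: Iterable[Tuple[str, str, int]], threshold: float = 3.5
) -> List[Tuple[str, float]]:
    """Average frustration per moment and return moments at or above `threshold`.

    `ratings` holds (participant, moment, frustration) triples, with frustration
    on an assumed 1-5 scale. Results are sorted from most to least frustrating.
    """
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for _participant, moment, score in ratings:
        totals[moment] += score
        counts[moment] += 1
    means = {moment: totals[moment] / counts[moment] for moment in totals}
    hot = [(moment, avg) for moment, avg in means.items() if avg >= threshold]
    return sorted(hot, key=lambda pair: pair[1], reverse=True)


# Illustrative ratings echoing the examples above (not study data).
ratings = [
    ("P1", "tutorial", 2), ("P2", "tutorial", 1),
    ("P1", "dense instructions", 5), ("P2", "dense instructions", 4),
    ("P1", "unclear rewards", 4), ("P2", "unclear rewards", 3),
]
print(frustration_hotspots(ratings))
# [('dense instructions', 4.5), ('unclear rewards', 3.5)]
```

The flagged moments are the candidates for emotional pacing adjustments in the scenario and storyboard phases.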

3. Clarifying Data Saturation and Sufficiency

4. Interpreting Diverging Preferences in Outcome Analysis

5. Designing for Inclusion from the Start

- High-contrast visual preferences and text-to-speech support.

- Personas that reflect sensory and cognitive diversity.

- Adaptive mechanisms that evolve based on early user feedback.

6. Inclusive Design Strategies from Empathic Insights

- “Select Your World” Preferences [117]: Interface options like high-contrast mode, text-to-speech toggles, and simplified inputs are gathered during onboarding and inform ongoing design iterations.

- Accessibility-First Personas [118]: Personas include accessibility needs, technology literacy, and preferred learning styles (visual, auditory, or tactile). These personas remain living documents, continuously updated and used throughout co-design activities in all phases.
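Treating personas as living documents is easier when each persona is a structured record whose changes are logged. The sketch below is a hypothetical `Persona` record: its fields follow the persona contents listed above (accessibility needs, technology literacy, preferred learning styles) plus the "Select Your World" onboarding toggles, while the revision log in `update` is an assumed mechanism for keeping the persona current across co-design activities, not one specified by the EDTF.

```python
from dataclasses import dataclass, field, fields
from datetime import date
from typing import Dict, List, Set, Tuple


@dataclass
class Persona:
    """Hypothetical accessibility-first persona kept as a living document."""

    name: str
    accessibility_needs: Set[str]
    tech_literacy: str  # assumed coarse scale, e.g. "low", "medium", "high"
    learning_styles: Set[str]  # drawn from {"visual", "auditory", "tactile"}
    # Onboarding "Select Your World" toggles, e.g. {"high_contrast": True}
    world_preferences: Dict[str, bool] = field(default_factory=dict)
    history: List[Tuple[date, str]] = field(default_factory=list)

    def update(self, when: date, note: str, **changes) -> None:
        """Apply changes from a co-design session and record why they were made."""
        allowed = {f.name for f in fields(self)} - {"history"}
        unknown = set(changes) - allowed
        if unknown:  # validate everything before mutating anything
            raise AttributeError(f"unknown persona field(s): {sorted(unknown)}")
        for key, value in changes.items():
            setattr(self, key, value)
        self.history.append((when, note))


# Illustrative persona and update (not study data).
ana = Persona(
    "Ana", {"color blindness"}, "medium", {"visual"},
    {"high_contrast": True, "text_to_speech": False},
)
ana.update(date(2025, 3, 4), "Co-design workshop: relies on audio cues",
           learning_styles={"visual", "auditory"})
print(sorted(ana.learning_styles), len(ana.history))  # ['auditory', 'visual'] 1
```

Validating all requested changes before applying any of them keeps a persona from being left half-updated when a session note contains a typo in a field name.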

7. Downstream Influence on Later Phases

- Providing accurate user personas and learner journeys that guide Phase 2 co-design scenarios.

- Anchoring instructional content in Phase 3 to real learner challenges and preventing misalignment between gameplay and education.

- Enhancing Phase 4 storyboards with emotional and cognitive maps to visualize potential friction points early.

- Informing Phase 5 low-fidelity prototypes to reflect diverse user needs and support early design decisions.
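The downstream influences listed above amount to a small traceability map from Phase 1 outputs to the later phases that consume them. The sketch below encodes that map so a team can check which phase has no explicit empathic input recorded; the artifact labels paraphrase the list above, and the names `PHASE1_OUTPUTS`, `inputs_for`, and `phases_without_input` are hypothetical. Because the list only covers Phases 2–5, later phases appear "uncovered" in this encoding; that reflects the scope of the list, not a claim that Phase 1 does not inform them.

```python
from typing import Dict, Iterable, List, Optional, Set

# Phase 1 outputs mapped to the later phases that draw on them, as listed above.
PHASE1_OUTPUTS: Dict[str, Set[int]] = {
    "user personas": {2},
    "learner journeys": {2},
    "real learner challenges": {3},
    "emotional and cognitive maps": {4},
    "diverse user needs": {5},
}


def inputs_for(phase: int, outputs: Optional[Dict[str, Set[int]]] = None) -> List[str]:
    """Phase 1 outputs recorded as feeding `phase`, in alphabetical order."""
    outputs = PHASE1_OUTPUTS if outputs is None else outputs
    return sorted(name for name, phases in outputs.items() if phase in phases)


def phases_without_input(
    targets: Iterable[int], outputs: Optional[Dict[str, Set[int]]] = None
) -> List[int]:
    """Target phases for which no Phase 1 output has been recorded."""
    return [p for p in targets if not inputs_for(p, outputs)]


print(inputs_for(2))                      # ['learner journeys', 'user personas']
print(phases_without_input(range(2, 6)))  # []
```

In practice a team would extend the map as later phases adopt Phase 1 artifacts, for instance by linking personas to the evaluation instruments of Phase 9.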

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| DSG | Digital Serious Games |
| DSGDFW | Digital Serious Game Design Framework |
| DT | Design Thinking |
| ED | Empathic Design |
| EDTF | Empathic Design Thinking Framework |
| HCI | Human–Computer Interaction |
| PEQ | Perception Evaluation Questionnaire |
| SUS | System Usability Scale |
| VR | Virtual Reality |
| UI | User Interface |
| UX | User Experience |
| UMUX-Lite | Usability Metric for User Experience (Lite Version) |

Appendix A

Appendix A.1. Adapted UMUX-Lite

- Perceived Usefulness: I believe the Empathic Design Thinking Framework (EDTF) would support my needs when designing serious games.

- Perceived Ease of Use: I think the EDTF would be easy to use in a real-world game design context.
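UMUX-Lite responses are usually rescaled to a 0–100 score by subtracting 1 from each item, summing the two items, and dividing by the maximum possible sum. The original instrument uses a 7-point scale, which gives a divisor of 12; the scale used in this adaptation is not stated here, so the sketch below leaves the number of scale points as a parameter (`scale_points`), and `umux_lite` is an illustrative helper name.

```python
def umux_lite(usefulness: int, ease_of_use: int, scale_points: int = 7) -> float:
    """Rescale the two UMUX-Lite items to 0-100.

    Each item is answered on a 1..scale_points agreement scale. The default of
    7 points follows the original instrument; the adapted scale is an assumption.
    """
    for response in (usefulness, ease_of_use):
        if not 1 <= response <= scale_points:
            raise ValueError(f"response {response} outside 1..{scale_points}")
    max_total = 2 * (scale_points - 1)
    return (usefulness - 1 + ease_of_use - 1) / max_total * 100


print(umux_lite(6, 5))                  # 75.0
print(umux_lite(4, 5, scale_points=5))  # 87.5
```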

Appendix A.2. System Usability Scale (SUS)—Perceived Usability

- I think I would like to use the EDTF regularly in designing learning games.

- I found the EDTF unnecessarily complex. (reverse scored)

- I thought the EDTF was easy to understand.

- I think I would need support from someone experienced to use the EDTF. (reverse scored)

- I found the different components of the EDTF well integrated.

- I thought there was too much inconsistency in the EDTF. (reverse scored)

- I imagine most people would learn to use the EDTF quickly.

- I found the EDTF very cumbersome to navigate. (reverse scored)

- I feel confident that I could explain how the EDTF works to someone else.

- I would need to learn a lot before I could effectively apply the EDTF. (reverse scored)
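The ten SUS items above alternate between positively worded (odd-numbered) and reverse-scored (even-numbered) statements, which matches the standard SUS layout. Under the standard scoring, and assuming a 1–5 response scale, each odd item contributes `response - 1`, each even item contributes `5 - response`, and the 0–40 sum is multiplied by 2.5 to give a 0–100 score. The helper name `sus_score` is illustrative.

```python
from typing import List


def sus_score(responses: List[int]) -> float:
    """Standard SUS score (0-100) from ten responses on a 1-5 scale.

    Index 0 is item 1. Odd-numbered items (1, 3, 5, 7, 9) are positively
    worded and contribute response - 1; even-numbered items (2, 4, 6, 8, 10)
    are reverse scored and contribute 5 - response.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("responses must be on a 1-5 scale")
    total = sum((r - 1) if i % 2 == 0 else (5 - r) for i, r in enumerate(responses))
    return total * 2.5


print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

Scoring each respondent this way before averaging yields a per-participant SUS score on the same 0–100 scale used to interpret SUS results.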

Appendix A.3. Perception Evaluation Questionnaire (PEQ)

- Clarity: The structure and terminology of the EDTF are clearly explained and easy to understand.

- Flexibility: The EDTF appears adaptable to different types of learning games, audiences, and design contexts.

- Comprehensiveness: The EDTF covers all the essential aspects needed to design and develop a serious game.

- Innovation: The EDTF introduces new and innovative ideas to game design and development, with continuous focus on user experiences.

- Ease of Learning: It was easy for me to learn and make sense of how the EDTF works after reviewing its components.

- Internal Consistency: The components of the EDTF are logically connected and reinforce each other in a coherent way.

- Support for Problem-Solving: The framework provides helpful guidance for addressing common design challenges in serious game development.

- Real-World Applicability: I believe the EDTF could be effectively applied in real-world game design projects, not just theoretical ones.

- Support for Testing and Iteration: The EDTF provides clear support for testing and iterating design ideas throughout all stages of game development, not just at the end.

References

- Mitsea, E.; Drigas, A.; Skianis, C. A Systematic Review of Serious Games in the Era of Artificial Intelligence, Immersive Technologies, the Metaverse, and Neurotechnologies: Transformation Through Meta-Skills Training. Electronics 2025, 14, 649. [Google Scholar] [CrossRef]

- Qian, M.; Clark, K.R. Game-based Learning and 21st century skills: A review of recent research. Comput. Hum. Behav. 2016, 63, 50–58. [Google Scholar] [CrossRef]

- Bond, M.; Buntins, K.; Bedenlier, S.; Zawacki-Richter, O.; Kerres, M. Mapping research in student engagement and educational technology in higher education: A systematic evidence map. Int. J. Educ. Technol. High. Educ. 2020, 17, 2. [Google Scholar] [CrossRef]

- Gundersen, S.W.; Lampropoulos, G. Using Serious Games and Digital Games to Improve Students’ Computational Thinking and Programming Skills in K-12 Education: A Systematic Literature Review. Technologies 2025, 13, 113. [Google Scholar] [CrossRef]

- Salvador-Ullauri, L.; Acosta-Vargas, P.; Luján-Mora, S. Web-Based Serious Games and Accessibility: A Systematic Literature Review. Appl. Sci. 2020, 10, 7859. [Google Scholar] [CrossRef]

- Nylén-Eriksen, M.; Stojiljkovic, M.; Lillekroken, D.; Lindeflaten, K.; Hessevaagbakke, E.; Flølo, T.N.; Hovland, O.J.; Solberg, A.M.S.; Hansen, S.; Bjørnnes, A.K.; et al. Game-thinking; utilizing serious games and gamification in nursing education—A systematic review and meta-analysis. BMC Med. Educ. 2025, 25, 140. [Google Scholar] [CrossRef] [PubMed]

- Dimitriadou, A.; Djafarova, N.; Turetken, O.; Verkuyl, M.; Ferworn, A. Challenges in Serious Game Design and Development: Educators’ Experiences. Simul. Gaming 2021, 52, 132–152. [Google Scholar] [CrossRef]

- Brandl, L.C.; Kordts, B.; Schrader, A. Technological Challenges of Ambient Serious Games in Higher Education. In Proceedings of the Workshop “Making A Real Connection, Pro-Social Collaborative Play in Extended Realities—Trends, Challenges and Potentials” at the 22nd International Conference on Mobile and Ubiquitous Multimedia (MUM ’23), Vienna, Austria, 3–6 December 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Eyal, L.; Rabin, E.; Meirovitz, T. Pre-Service Teachers’ Attitudes toward Integrating Digital Games in Learning as Cognitive Tools for Developing Higher-Order Thinking and Lifelong Learning. Educ. Sci. 2023, 13, 1165. [Google Scholar] [CrossRef]

- Hébert, C.; Jenson, J.; Terzopoulos, T. “Access to Technology Is the Major Challenge”: Teacher Perspectives on Barriers to DGBL in K-12 Classrooms. E-Learn. Digit. Media 2021, 18, 307–324. [Google Scholar] [CrossRef]

- Lester, D.; Skulmoski, G.J.; Fisher, D.P.; Mehrotra, V.; Lim, I.; Lang, A.; Keogh, J.W.L. Drivers and Barriers to the Utilisation of Gamification and Game-Based Learning in Universities: A Systematic Review of Educators’ Perspectives. Br. J. Educ. Technol. 2023, 54, 1748–1770. [Google Scholar] [CrossRef]

- Tyack, A.; Mekler, E.D. Self-Determination Theory and HCI Games Research: Unfulfilled Promises and Unquestioned Paradigms. ACM Trans. Comput.-Hum. Interact. 2024, 31, 40. [Google Scholar] [CrossRef]

- Serrano-Laguna, Á.; Manero, B.; Freire, M.; Fernández-Manjón, B. A methodology for assessing the effectiveness of serious games and for inferring player learning outcomes. Multimed. Tools Appl. 2018, 77, 2849–2871. [Google Scholar] [CrossRef]

- Surma-Aho, A.; Hölttä-Otto, K. Conceptualization and operationalization of empathy in design research. Des. Stud. 2022, 78, 101075. [Google Scholar] [CrossRef]

- Jumisko-Pyykkö, S.; Viita-aho, T.; Tiilikainen, E.; Saarinen, E. Towards Systems Intelligent Approach in Empathic Design. In Proceedings of the 24th International Academic Mindtrek Conference (Academic Mindtrek ’21), Tampere, Finland, 1–3 June 2021; ACM: New York, NY, USA, 2021; pp. 197–209. [Google Scholar]

- Cooke, L.; Dusenberry, L.; Robinson, J. Gaming Design Thinking: Wicked Problems, Sufficient Solutions, and the Possibility Space of Games. Tech. Commun. Q. 2020, 29, 327–340. [Google Scholar] [CrossRef]

- Kumar, L.; Herger, M. Serious games vs entertainment games. In Gamification in Business; Herger, M., Ed.; Springer: Cham, Switzerland, 2021; pp. 125–139. [Google Scholar]

- Westera, W. The devil’s advocate: Identifying persistent problems in serious game design. Int. J. Serious Games 2022, 9, 115–124. [Google Scholar] [CrossRef]

- Barakat, N.H. A framework for integrating software design patterns with game design framework. In Proceedings of the 8th International Conference on Software and Information Engineering, Cairo, Egypt, 9–12 April 2019; pp. 47–50. [Google Scholar]

- Rooney, P. A theoretical framework for serious game design: Exploring pedagogy, play and fidelity and their implications for the design process. Int. J. Game-Based Learn. 2012, 2, 41–60. [Google Scholar] [CrossRef]

- Maxim, R.I.; Arnedo-Moreno, J. Identifying key principles and commonalities in digital serious game design frameworks: Scoping review. JMIR Serious Games 2025, 13, e54075. [Google Scholar] [CrossRef] [PubMed]

- Verschueren, S.; Buffel, C.; Vander Stichele, G. Developing theory-driven, evidence-based serious games for health: Framework based on research community insights. JMIR Serious Games 2019, 7, e11565. [Google Scholar] [CrossRef]

- Bunt, L.; Greeff, J.; Taylor, E. Enhancing serious game design: Expert-reviewed, stakeholder-centered framework. JMIR Serious Games 2024, 12, e48099. [Google Scholar] [CrossRef]

- Troiano, G.M.; Schouten, D.; Cassidy, M.; Tucker-Raymond, E.; Puttick, G.; Harteveld, C. All good things come in threes: Assessing student-designed games via triadic game design. In Proceedings of the 15th International Conference on the Foundations of Digital Games, Hyannis, MA, USA, 14–17 August 2020; pp. 1–4. [Google Scholar]

- Groff, J.; Clarke-Midura, J.; Owen, V.; Rosenheck, L. Better Learning in Games: A Balanced Design Lens for a New Generation of Learning Games; Technical Report; Learning Games Network, MIT Education Arcade: Cambridge, MA, USA, 2015. [Google Scholar]

- Zarraonandia, T.; Diaz, P.; Aedo, I.; Ruiz, M.R. Designing educational games through a conceptual model based on rules and scenarios. Multimed. Tools Appl. 2015, 74, 4535–4559. [Google Scholar] [CrossRef]

- Holmes, J.B.; Gee, E.R. A framework for understanding game-based teaching and learning. Horizon 2016, 24, 1–16. [Google Scholar] [CrossRef]

- Arnab, S.; Lim, T.; Carvalho, M.B.; Bellotti, F.; de Freitas, S.; Louchart, S.; De Gloria, A. Mapping learning and game mechanics for serious games analysis. Br. J. Educ. Technol. 2015, 46, 391–411. [Google Scholar] [CrossRef]

- Smith, K.; Shull, J.; Shen, Y.; Dean, A.; Heaney, P. A framework for designing smarter serious games. In Smart Innovation, Systems and Technologies; Springer International Publishing: Cham, Switzerland, 2018. [Google Scholar]

- To, A.; Fath, E.; Zhang, E.; Ali, S.; Kildunne, C.; Fan, A.; Hammer, J.; Kaufman, G. Tandem transformational game design: A game design process case study. In Proceedings of the International Academic Conference on Meaningful Play, East Lansing, MI, USA, 20–22 October 2016. [Google Scholar]

- Schell, J. The Art of Game Design: A Book of Lenses, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2020. [Google Scholar]

- Koster, R. A Theory of Fun for Game Design; O’Reilly Media, Inc.: Santa Rosa, CA, USA, 2013. [Google Scholar]

- Fullerton, T. Game Design Workshop: A Playcentric Approach to Creating Innovative Games, 4th ed.; CRC Press: Boca Raton, FL, USA, 2018. [Google Scholar]

- Kniestedt, I.; Gómez Maureira, M.A.; Lefter, I.; Lukosch, S.; Brazier, F.M. Dive deeper: Empirical analysis of game mechanics and perceived value in serious games. Proc. ACM Hum.-Comput. Interact. 2021, 5, 1–25. [Google Scholar] [CrossRef]

- Lukosch, H.; Kurapati, S.; Groen, D.; Verbraeck, A. Gender and cultural differences in game-based learning experiences. Electron. J. e-Learn. 2017, 15, 310–319. [Google Scholar]

- Tsikinas, S.; Xinogalos, S. Designing effective serious games for people with intellectual disabilities. In Proceedings of the EDUCON ’18, Santa Cruz de Tenerife, Spain, 18–20 April 2018. [Google Scholar]

- Xinogalos, S.; Satratzemi, M. Special Issue on New Challenges in Serious Game Design. Appl. Sci. 2023, 13, 7675. [Google Scholar] [CrossRef]

- Kletenik, D.; Adler, R.F. Who wins? A comparison of accessibility simulation games vs. classroom modules. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education V. 1, Toronto, ON, Canada, 15–18 March 2023; pp. 214–220. [Google Scholar]

- Liu, C.; Zhou, K.Z.; Sy, S.; Lodvikov, E.; Shan, J.; Kletenik, D.; Adler, R.F. Opening Digital Doors: Early Lessons in Software Accessibility for K-8 Students. In Proceedings of the 56th ACM Technical Symposium on Computer Science Education V. 1, Pittsburgh, PA, USA, 26 February–1 March 2025; pp. 722–728. [Google Scholar]

- Angeli, C.; Giannakos, M. Computational thinking education: Issues and challenges. Comput. Hum. Behav. 2020, 105, 106185. [Google Scholar] [CrossRef]

- Kletenik, D.; Adler, R.F. Motivated by inclusion: Understanding students’ empathy and motivation to design accessibly across a spectrum of disabilities. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1, Portland, OR, USA, 20–23 March 2024; pp. 680–686. [Google Scholar]

- Laurent, M.; Monnier, S.; Huguenin, A.; Monaco, P.B.; Jaccard, D. Design principles for serious games authoring tools. Int. J. Serious Games 2022, 9, 63–87. [Google Scholar] [CrossRef]

- Puttick, G.; Cassidy, M.; Tucker-Raymond, E.; Troiano, G.M.; Harteveld, C. “So, we kind of started from scratch, no pun intended”: What can students learn from designing games? J. Res. Sci. Teach. 2024, 61, 772–808. [Google Scholar] [CrossRef]

- Ruiz, A.; Giraldo, W.J.; Arciniegas, J.L. Participatory design method: Co-creating user interfaces for an educational interactive system. In Proceedings of the XIX International Conference on Human Computer Interaction, Palma, Spain, 12–14 September 2018; pp. 1–8. [Google Scholar]

- Dörner, R.; Göbe, S.; Effelsberg, W.; Wiemeyer, J. (Eds.) Serious Games Foundations, Concepts and Practice; Springer: Cham, Switzerland, 2016. [Google Scholar]

- Ávila-Pesántez, D.; Rivera, L.A.; Alban, M.S. Approaches for serious game design: A systematic literature review. Comput. Educ. J. 2017, 8.

- Berni, A.; Borgianni, Y. From the definition of user experience to a framework to classify its applications in design. Proc. Des. Soc. 2021, 1, 1627–1636.

- Brown, T. Change by Design, Revised and Updated: How Design Thinking Transforms Organizations and Inspires Innovation; HarperCollins: New York, NY, USA, 2019.

- Afroogh, S.; Esmalian, A.; Donaldson, J.P.; Mostafavi, A. Empathic Design in Engineering Education and Practice: An Approach for Achieving Inclusive and Effective Community Resilience. Sustainability 2021, 13, 4060.

- Maxim, R.I.; Arnedo-Moreno, J. Programming games as learning tools: Using empathic design principles for engaging experiences. In Proceedings of the GEM ’24, Turin, Italy, 5–7 June 2024; pp. 1–6.

- Scholten, H.; Granic, I. Use of the Principles of Design Thinking to Address Limitations of Digital Mental Health Interventions for Youth: Viewpoint. J. Med. Internet Res. 2019, 21, e11528.

- Stephan, C. The passive dimension of empathy and its relevance for design. Des. Stud. 2023, 86, 101179.

- Yu, Q.; Yu, K.; Lin, R. A meta-analysis of the effects of design thinking on student learning. Humanit. Soc. Sci. Commun. 2024, 11, 1–12.

- Chang-Arana, Á.M.; Piispanen, M.; Himberg, T.; Surma-Aho, A.; Alho, J.; Sams, M.; Hölttä-Otto, K. Empathic Accuracy in Design: Exploring Design Outcomes through Empathic Performance and Physiology. Des. Sci. 2020, 6, e16.

- Tu, J.; Wang, D.; Choong, L.; Abistado, A.; Suarez, A.; Hallifax, S.; Rogers, K.; Nacke, L. Rolling in Fun, Paying the Price: A Thematic Analysis on Purchase and Play in Tabletop Games. ACM Games Res. Pract. 2024, 3, 1–29.

- Campoverde-Durán, R.; Galán-Montesdeoca, J.; Perez-Muñoz, Á. UX and gamification, serious game development centered on the player experience. In Proceedings of the XXIII International Conference on Human Computer Interaction, Lleida, Spain, 4–6 September 2023; pp. 1–4.

- Muratovski, G. Research for Designers: A Guide to Methods and Practice; Sage: Thousand Oaks, CA, USA, 2021.

- Fulton, S.; Roberts, P. Empathic Design in Educational Technology: A Systematic Review of Methods and Impact. TechTrends 2022, 66, 741–755.

- Gibbons, S. Journey Mapping 101; Nielsen Norman Group: Fremont, CA, USA, 2018.

- Doorley, S.; Holcomb, S.; Klebahn, P.; Segovia, K.; Utley, J. Design Thinking Bootleg; Stanford University: Stanford, CA, USA, 2018.

- Quiñones, D.; Rusu, C. How to develop usability heuristics: A systematic literature review. Comput. Stand. Interfaces 2017, 53, 89–122.

- Wolcott, M.D.; Lobczowski, N.G. Using cognitive interviews and think-aloud protocols to understand thought processes. Curr. Pharm. Teach. Learn. 2021, 13, 181–188.

- Caroux, L.; Pujol, M. Player enjoyment in video games: A systematic review and meta-analysis of the effects of game design choices. Int. J. Hum.-Comput. Interact. 2024, 40, 4227–4238.

- Albert, B.; Tullis, T. Measuring the User Experience: Collecting, Analyzing, and Presenting UX Metrics; Morgan Kaufmann: Burlington, MA, USA, 2022.

- Chao, C.; Chen, Y.; Wu, H.; Wu, W.; Yi, Z.; Xu, L.; Fu, Z. An emotional design model for future smart product based on grounded theory. Systems 2023, 11, 377.

- Asgari, M.; Hurtut, T. A design language for prototyping and storyboarding data-driven stories. Appl. Sci. 2024, 14, 1387.

- Kalbach, J. Mapping Experiences: A Guide to Creating Value Through Journeys, Blueprints, and Diagrams; O’Reilly Media Inc.: Sebastopol, CA, USA, 2016.

- Koskinen, I.; Zimmerman, J.; Binder, T.; Redstrom, J.; Wensveen, S. Design research through practice: From the lab, field, and showroom. IEEE Trans. Prof. Commun. 2013, 56, 262–263.

- Wallace, J.; McCarthy, J.; Wright, P.C.; Olivier, P. Making design probes work. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 3441–3450.

- Galvão, L.; García, L.S.; Felipe, T.A. A systematic mapping study on participatory game design with children. In Proceedings of the XXII Brazilian Symposium on Human Factors in Computing Systems, Maceió, Brazil, 16–20 October 2023; pp. 1–12.

- Kafai, Y.B.; Burke, Q. Constructionist gaming: Understanding the benefits of making games for learning. Educ. Psychol. 2015, 50, 313–334.

- Voogt, J.M.; Pieters, J.M.; Handelzalts, A. Teacher collaboration in curriculum design teams: Effects, mechanisms, and conditions. In Teacher Learning Through Teacher Teams; Routledge: London, UK, 2018; pp. 7–26.

- Getenet, S. Using design-based research to bring partnership between researchers and practitioners. Educ. Res. 2019, 61, 482–494.

- Lu, J.; Schmidt, M.; Lee, M.; Huang, R. Usability research in educational technology: A state-of-the-art systematic review. Educ. Technol. Res. Dev. 2022, 70, 1951–1992.

- Ferreira, B.; Silva, W.; Oliveira, E.; Conte, T. Designing Personas with Empathy Map. In Proceedings of the SEKE, Pittsburgh, PA, USA, 6–8 July 2015; Volume 152.

- Feijoo, G.; Crujeiras, R.; Moreira, M. Gamestorming for the Conceptual Design of Products and Processes in the context of engineering education. Educ. Chem. Eng. 2018, 22, 44–52.

- Garcia, S.E. Practical UX research methodologies: Contextual inquiry. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–2.

- Pendleton, A.J. Introducing the Game Design Matrix: A Step-by-Step Process for Creating Serious Games. Master’s Thesis, Air Force Institute of Technology, Wright-Patterson AFB, OH, USA, 2020.

- Howard, Z.; Kjeldskov, J.; Skov, M.B. Interviewing for Journeys and Experience Landscapes: A Study of Contextual Inquiry and Experience Mapping. In Proceedings of the 26th Australian Computer-Human Interaction Conference (OzCHI), Sydney, Australia, 2–5 December 2014; ACM: New York, NY, USA, 2014; pp. 448–451.

- Reagan, A.J.; Mitchell, L.; Kiley, D.; Danforth, C.M.; Dodds, P.S. The Emotional Arcs of Stories Are Dominated by Six Basic Shapes. EPJ Data Sci. 2016, 5, 31.

- Ramos, D.; Holtmann, B.M.; Schell, D.; Schumacher, K. Tracking Emotional Shifts During Story Reception: The Relationship Between Narrative Structure and Emotional Flow. Secur. Soc. Off. Law J. 2023, 12, 17–39.

- McKenney, S.; Reeves, T. Conducting Educational Design Research; Routledge: London, UK, 2018.

- Salminen, J.; Wenyun Guan, K.; Jung, S.G.; Jansen, B. Use cases for design personas: A systematic review and new frontiers. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; pp. 1–21.

- Nofal, E. Participatory design workshops: Interdisciplinary encounters within a collaborative digital heritage project. Heritage 2023, 6, 2752–2766.

- Osterwalder, A.; Pigneur, Y.; Bernarda, G.; Smith, A. Value Proposition Design: How to Create Products and Services Customers Want; Wiley: Hoboken, NJ, USA, 2014.

- Feng, L.; Wei, W. An empirical study on user experience evaluation and identification of critical UX issues. Sustainability 2019, 11, 2432.

- Maxim, R. A Case Study of Mobile Game Based Learning Design for Gender Responsive STEM Education. World Acad. Sci. Eng. Technol. Int. J. Educ. Pedagog. Sci. 2021, 15, 189–192.

- Winardy, G.C.B.; Septiana, E. Role, play, and games: Comparison between role-playing games and role-play in education. Soc. Sci. Humanit. Open 2023, 8, 100527.

- Katsumata Shah, M.; Jactat, B.; Yasui, T.; Ismailov, M. Low-Fidelity Prototyping with Design Thinking in Higher Education Management in Japan: Impact on the Utility and Usability of a Student Exchange Program Brochure. Educ. Sci. 2023, 13, 53.

- Chen, K.; Zhang, H. Remote paper prototype testing. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; pp. 77–80.

- Hertzum, M. Concurrent or Retrospective Thinking Aloud in Usability Tests: A Meta-Analytic Review. ACM Trans. Comput.-Hum. Interact. 2024, 31, 1–29.

- Edwards, J.; Nguyen, A.; Lämsä, J.; Sobocinski, M.; Whitehead, R.; Dang, B.; Järvelä, S. A Wizard of Oz methodology for designing future learning spaces. High. Educ. Res. Dev. 2025, 44, 147–162.

- Hertzum, M. Usability Testing: Too Early? Too Often? Or Just Right? Interact. Comput. 2020, 32, 199–207.

- Sugiarti, Y.; Hanifah, S.R.; Anwas, E.O.M.; Anwar, S.; Permatasari, A.D.; Nurmiati, E. Usability Evaluation on Website Using the Cognitive Walkthrough Method. In Proceedings of the 2023 11th International Conference on Cyber and IT Service Management (CITSM), Makassar, Indonesia, 10–11 November 2023; pp. 1–8.

- Talero-Sarmiento, L.; Gonzalez-Capdevila, M.; Granollers, A.; Lamos-Diaz, H.; Pistili-Rodrigues, K. Towards a Refined Heuristic Evaluation: Incorporating Hierarchical Analysis for Weighted Usability Assessment. Big Data Cogn. Comput. 2024, 8, 69.

- Li, J.; Tigwell, G.W.; Shinohara, K. Accessibility of high-fidelity prototyping tools. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Online, 8–13 May 2021; pp. 1–17.

- IJsselsteijn, W.; de Kort, Y.A.W.; Midden, C.; Eggen, B.; van den Hoven, E.; Wagner, M. The Game Experience Questionnaire: Development of a Self-Report Measure to Capture Player Experiences of Computer Games; Technical Report; TU Eindhoven: Eindhoven, The Netherlands, 2013.

- Lim, J.; Mountstephens, J.; Teo, J.T. Emotion Recognition Using Eye-Tracking: Taxonomy, Review and Current Challenges. Sensors 2020, 20, 2384.

- Roncal-Belzunce, V.; Gutiérrez-Valencia, M.; Martínez-Velilla, N.; Ramírez-Vélez, R. System Usability Scale for Gamified E-Learning Courses: Cross-Cultural Adaptation and Measurement Properties of the Spanish Version. Int. J. Hum.-Comput. Interact. 2025, 1–11.

- Seyderhelm, A.J.; Blackmore, K.L. How hard is it really? Assessing game-task difficulty through real-time measures of performance and cognitive load. Simul. Gaming 2023, 54, 294–321.

- Ifenthaler, D.; Eseryel, D.; Ge, X. Assessment for game-based learning. In Assessment in Game-Based Learning; Springer: Berlin/Heidelberg, Germany, 2012; pp. 1–8.

- Patton, M.Q. Qualitative Research & Evaluation Methods, 4th ed.; Sage Publications: Thousand Oaks, CA, USA, 2014.

- Wang, Q.; Quek, C.L. Investigating Collaborative Reflection with Peers in an Online Learning Environment. In Proceedings of the Exploring the Material Conditions of Learning: The Computer Supported Collaborative Learning (CSCL) Conference 2015, Gothenburg, Sweden, 7–11 June 2015; Lindwall, O., Häkkinen, P., Koschmann, T., Tchounikine, P., Ludvigsen, S., Eds.; International Society of the Learning Sciences (ISLS): Irvine, CA, USA, 2015; Volume 1.

- Liedtka, J. Perspective: Linking design thinking with innovation outcomes through cognitive bias reduction. J. Prod. Innov. Manag. 2015, 32, 925–938.

- Lewis, J.R.; Utesch, B.S.; Maher, D.E. UMUX-LITE: When there’s no time for the SUS. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 2099–2102.

- Marikyan, D.; Papagiannidis, S.; Stewart, G. Technology acceptance research: Meta-analysis. J. Inf. Sci. 2023, 01655515231191177.

- Vlachogianni, P.; Tselios, N. Perceived usability evaluation of educational technology using the System Usability Scale (SUS): A systematic review. J. Res. Technol. Educ. 2022, 54, 392–409.

- Petri, G.; von Wangenheim, C.G.; Borgatto, A.F. MEEGA+: A method for the evaluation of educational games for computing education. IEEE Trans. Educ. 2018, 61, 304–311.

- Nardon, L.; Hari, A.; Aarma, K. Reflective Interviewing—Increasing Social Impact through Research. Int. J. Qual. Methods 2021, 20, 1–9.

- Thoring, K.; Mueller, R.; Badke-Schaub, P. Workshops as a research method: Guidelines for designing and evaluating artifacts through workshops. In Proceedings of the 53rd Hawaii International Conference on System Sciences, Maui, HI, USA, 7–10 January 2020.

- Nielsen, J. Usability 101: Introduction to Usability. 2012. Available online: https://www.nngroup.com/articles/usability-101-introduction-to-usability (accessed on 10 July 2025).

- Junior, R.; Silva, F. Redefining the MDA framework—The pursuit of a game design ontology. Information 2021, 12, 395.

- Fails, J.A.; Guha, M.L.; Druin, A. Methods and techniques for involving children in the design of new technology for children. Found. Trends® Hum.-Comput. Interact. 2013, 6, 85–166.

- Hennink, M.; Kaiser, B.N. Sample sizes for saturation in qualitative research: A systematic review of empirical tests. Soc. Sci. Med. 2022, 292, 114523.

- Creswell, J.W.; Poth, C.N. Qualitative Inquiry and Research Design: Choosing Among Five Approaches; Sage Publications: Thousand Oaks, CA, USA, 2016.

- Saunders, B.; Sim, J.; Kingstone, T.; Baker, S.; Waterfield, J.; Bartlam, B.; Burroughs, H.; Jinks, C. Saturation in qualitative research: Exploring its conceptualization and operationalization. Qual. Quant. 2018, 52, 1893–1907.

- Henka, A.; Zimmermann, G. Persona Based Accessibility Testing: Towards User-Centered Accessibility Evaluation. In Proceedings of the International Conference on Human-Computer Interaction, Crete, Greece, 22–27 June 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 226–231.

- Bennett, C.L.; Rosner, D.K. The promise of empathy: Design, disability, and knowing the “other”. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–13.

- Nadolny, L.; Halabi, A. Student participation and engagement in inquiry-based game design and learning. Comput. Hum. Behav. 2016, 54, 478–488.

- Wouters, P.; van Nimwegen, C.; van Oostendorp, H.; van der Spek, E.D. A meta-analysis of the cognitive and motivational effects of serious games. J. Educ. Psychol. 2013, 105, 249–265.

| ID | Gender | Role | Experience (Years) | Region | Primary Expertise/Tools |
|---|---|---|---|---|---|
| P1 | Male | Instr. Game Developer | 1–3 | North America | Game Dev: Unity, Unreal Engine |
| P2 | Male | Instr. Game Designer | 3–5 | Europe | Game Design: Unity, GameMaker |
| P3 | Female | Instr. Game Developer | >5 | North America | Game Dev: Unreal Engine |
| P4 | Male | Instr. Game Designer | 1–3 | Europe | Game Design: Unity |
| P5 | Male | Instr. Game Developer | 1–3 | Asia-Pacific | Game Dev: Unity |
| P6 | Female | HCI Expert | 3–5 | North America | HCI: Various UX Tools |
| P7 | Male | Instr. Game Developer | 3–5 | Latin America | Game Dev: Unity, Unreal Engine |
| P8 | Male | Instr. Game Designer | 1–3 | Europe | Game Design: GameMaker |
| P9 | Male | HCI Expert | >5 | North America | HCI: UX Research Tools |
| P10 | Male | Instr. Game Developer | 1–3 | Africa | Game Dev: Unity |
| P11 | Female | Instr. Game Designer | 1–3 | Middle East | Game Design: Unreal Engine |
| P12 | Male | Instr. Game Developer | 3–5 | Europe | Game Dev: Unity, Unreal Engine |
| P13 | Female | HCI Expert | 1–3 | Asia-Pacific | HCI: UX Research Tools |
| P14 | Male | Instr. Game Designer | >5 | North America | Game Design: Unity |
| P15 | Male | Instr. Game Developer | 1–3 | Europe | Game Dev: GameMaker |
| P16 | Male | Instr. Game Designer | 3–5 | North America | Game Design: Unity, Unreal Engine |
| P17 | Male | HCI Expert | >5 | Europe | HCI: Various UX Tools |
| P18 | Female | Instr. Game Developer | 1–3 | North America | Game Dev: Unity |

| # | Use Case Title | Brief Description |
|---|---|---|
| 1 | ChemSolve: Mystery Lab | Puzzle game to mix chemical substances and solve real-world problems. |
| 2 | Climate Crisis Lab | Strategy game for managing resources and policy decisions to combat climate change. |
| 3 | Anti-Fraud Challenge | Learn to identify online scams using AI-driven feedback in realistic scenarios. |
| 4 | Language Learning World | Mini-games for reading, writing, and listening, with teacher–student progress tracking. |
| 5 | Alphabet Explorer | Learn the alphabet through engaging visuals and interactions. |
| 6 | Preschool Fun | Games for young children to learn numbers, shapes, and colors. |
| 7 | University Prep Quiz | Game to test knowledge for university admission tests. |
| 8 | ChessF | Chess-based learning for strategy, logic, and pattern recognition. |
| 9 | Fruit or Vegetable? | Game for ages 4–6 to distinguish between fruits and vegetables. |
| 10 | Math Quest: Kingdom of Numbers | Fantasy math puzzle game that incorporates adaptive difficulty. |
| 11 | CyberShield: Threat Hunter | Simulation game for responding to cybersecurity threats. |
| 12 | Brain Boost: Lecture Dash | Timed challenge turning lectures into memory-based mini-quests. |
| 13 | CodeMaster | Teaches programming through exercises with scalable complexity. |
| 14 | STEM Adventure | Problem-solving game about math, physics, and coding. |
| 15 | Call Center Simulation | Trains staff in software use and company procedures. |
| 16 | Alphabet Memory Game | A simple memory matching game to learn the alphabet. |
| 17 | Python for Beginners | Engaging game to teach basic Python programming. |
| 18 | Financial Literacy Hero | Navigate budgeting, saving, and investing through life scenarios. |

| User Type | Recruitment Source | Target (n) | Inclusion Needs |
|---|---|---|---|
| High-school learners | Local schools | 6 | 2 with cognitive disabilities |
| College instructors | University partners | 4 | 2 with sensory disabilities |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maxim, R.I.; Arnedo-Moreno, J. EDTF: A User-Centered Approach to Digital Educational Games Design and Development. Information 2025, 16, 794. https://doi.org/10.3390/info16090794
