1. Introduction

Sustainable education transcends traditional pedagogy by fostering competencies such as systems thinking, collaborative problem-solving, and reflexivity [1]. Key principles include:

- Active Learning: Students engage in real-world tasks (e.g., collaborative assignments) to apply theoretical knowledge [2,3,4,5,6,7,8,9].

- Critical Thinking: Individual assignments and case studies encourage independent analysis [2,5,9].

- Collaboration: Group work mirrors real-world teamwork, a cornerstone of sustainability education [2,3,5,6,7,8,9].

The competences mentioned above can help students successfully take on the role of citizens who know how to deal with real-world challenges [6,10]. In this sense, sustainable education primarily aims to prepare students to face possible crises (disasters, fires, earthquakes, and tsunamis), to be aware of environmental issues (climate change, earth degradation, decay, erosion, and pollution), and to be familiar with social and economic issues, rather than to help students acquire knowledge of little practical use. Sustainable education also emphasizes the need for collaboration to address challenges [1,2,5,11].

For this purpose, educators design sustainable courses that help students not only develop valuable competencies but also practise them, thereby increasing their effectiveness in facing real-world challenges [12,13,14]. Accordingly, theoretical material, self-assessment exercises, collaborative assignments, written and online exams, quizzes, and other relevant activities serve as sustainable course activities that help students understand fundamental sustainability issues and equip them to thrive in a challenging world.

Moreover, designing a course around the principles of sustainable education creates measurable engagement patterns that facilitate performance prediction.

The rapid expansion of online education calls for innovative approaches to predicting student success, particularly in courses aligned with sustainable education principles. Theatre education naturally operationalizes collaboration, rehearsal cycles, and reflective critique, which are practices aligned with Education for Sustainable Development (ESD) competencies. Drama, digital narration, and theatre games can be adapted into learning activities built around real case studies that present a specific problem or a real situation. A recent literature review of digital drama and theatre for sustainability reports that twenty-seven empirical and theoretical studies (2014–2023) link digital theatre activities with students’ sustainability awareness and 21st-century skills. Most of this research took place in Europe and in higher education, while secondary and early childhood contexts remain under-represented [15].

Identifying achievers (students with final grades ≥ 7) in online theatre courses is paramount for:

- Optimizing instructional strategies to enhance engagement and retention.

- Validating the efficacy of sustainable pedagogy, which emphasizes active learning, collaboration, and critical thinking [2,5].

- Addressing equity gaps by enabling early interventions for at-risk students [16,17].

The research focuses on predicting achievers in online theatre courses because these courses incorporate collaborative problem-solving competencies aligned with sustainable education. Unlike conventional theatre courses, online theatre courses are implemented by educators and learning designers through an e-learning platform, which allows engagement metrics to be collected. Several studies have shown that implementing a theatre curriculum through an e-learning platform can achieve pedagogical and learning goals to a satisfactory extent [18,19,20,21,22]. These studies point out that up-to-date theatre techniques, such as digital narration and drama, can serve as e-learning activities with promising results. However, two studies underline that physical presence is irreplaceable, indicating that learning objectives are better met through conventional teaching [20,22]. In this sense, these studies suggest that hybrid theatre courses are educationally preferable. They do not diminish the value of the e-learning component of theatre courses, but they indicate that this component alone is not sufficient for full course delivery. These studies also view the online implementation of modern theatre techniques as a challenge, stressing the need for careful online learning design to meet the course’s objectives.

Considering the need for careful online learning design, our research attempts to predict achievers in an online theatre course designed to meet the needs of sustainable education. However, the research does not investigate in depth the relationship between the achievement of learning objectives and students’ performance. In this sense, our study does not evaluate the online theatre course’s success, and the course’s successful delivery is reflected only by grades. This is common practice when building risk or prediction models for students at risk or for achievers [15,16,17,23,24,25,26,27,28].

Moreover, although the literature reports prediction models for various online courses, it does not report such models for online theatre courses. This leaves room for a meaningful scientific contribution.

The added value of this study lies in its dual focus: (1) it applies machine learning techniques to predict achievers in a course explicitly designed around sustainable education principles, and (2) it identifies specific engagement indicators (e.g., collaborative assignments) that align with sustainable pedagogy. Prior works have examined predictive models in online learning, but none have contextualized these models within sustainable education frameworks [16,29]. This study addresses this gap by demonstrating how predictive analytics can enhance the design and delivery of sustainability-aligned courses. Specifically, the research predicts achievers and identifies the factors that affect their performance.

Several studies consider students’ attitudes towards online education and their level of e-learning efficacy as factors associated with their achievement [30,31]. The analysis in one such study [30] indicates that students enrolled in legal, political, and philological studies demonstrated higher levels of conscientious participation in remote classes. Conversely, psychology students exhibited lower levels of participation and rated remote learning less favourably. These findings suggest that the nature of the discipline may influence students’ engagement with, and attitudes toward, e-learning. These studies [30,31] also highlight the importance of emotional engagement in student performance. Nevertheless, our study does not focus on students’ emotional engagement, since it is not easily measurable. Instead, the study examines behavioural engagement factors (derived from students’ interaction with the learning activities), because learning analytics tools can provide measurable data on these factors. In addition, one important study has shown that students’ behavioural engagement metrics are associated with students at risk in online sustainable education courses [24]. However, risk and prediction models such as the one presented in that study have not been used to predict achievers. To address this gap, the study examines whether strong predictors of achievers fall within the domain of sustainable education. For this purpose, the research assumptions are:

Assumption 1: Students’ engagement data (reflecting their effort) is a strong predictor of achievers in online theatre courses.

Assumption 2: Sustainable education components (such as teamwork and problem-solving competencies) play a significant role in students’ high achievement in online theatre courses.

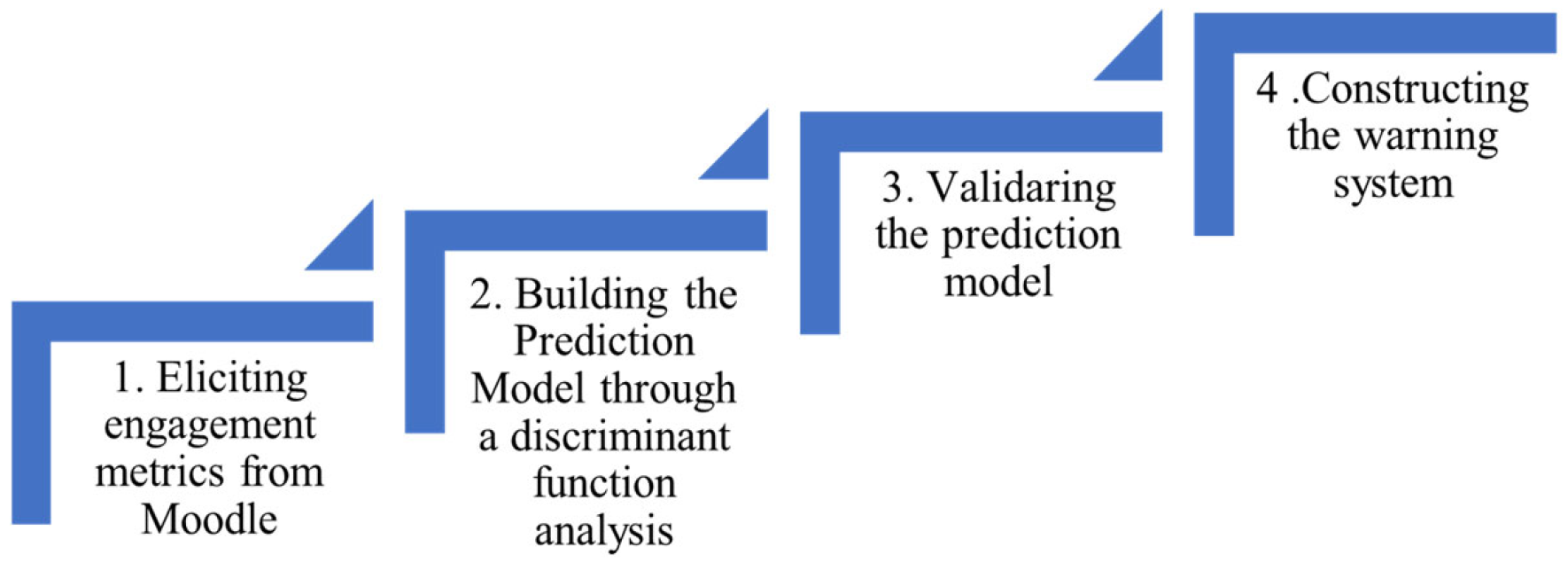

To serve this purpose, a Linear Discriminant Analysis (LDA) was conducted on students’ engagement data, collected from an online theatre course implemented on Moodle. LDA was chosen because it delivers the following results:

- It provides a forecast model for achievers and non-achievers.

- Through the linear discriminant functions, it distinguishes strong from weak predictors and therefore shows the contribution of each factor to students’ high or critical performance.

- It produces a model with high training potential, suggesting that the strong predictors indicated by the LDA model’s coefficients could serve as predictors in any course with a similar structure.

- It delivers a classification table for achievers and non-achievers, which allows a remedial intervention strategy to be developed (given that validated LDA prediction models can lead to early warning systems).
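A minimal, dependency-free sketch can illustrate how two-class LDA produces one linear classification function per class. It follows one common formulation: Fisher's classification functions are built from the pooled within-class covariance matrix, with log prior probabilities included in the constants. The sketch assumes exactly two features, such as total logins and a collaborative assignment grade. The function names and toy data are hypothetical and are not the study's data or code. In practice, a statistics package would normally be used.

```python
# Minimal two-class LDA sketch (Fisher's classification functions).
# Assumptions: exactly two features per student; hypothetical toy data.
from math import log


def mean_vec(rows):
    """Column means of a list of [feature1, feature2] rows."""
    n = len(rows)
    return [sum(r[j] for r in rows) / n for j in range(2)]


def pooled_cov(groups):
    """Pooled within-class 2x2 covariance matrix (divisor: N - k)."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    dof = 0
    for rows in groups:
        m = mean_vec(rows)
        for r in rows:
            d = [r[0] - m[0], r[1] - m[1]]
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
        dof += len(rows) - 1
    return [[v / dof for v in row] for row in s]


def inv2(a):
    """Inverse of a non-singular 2x2 matrix."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [[a[1][1] / det, -a[0][1] / det],
            [-a[1][0] / det, a[0][0] / det]]


def fit_lda(groups):
    """Return one (weights, constant) classification function per class."""
    s_inv = inv2(pooled_cov(groups))
    total = sum(len(g) for g in groups)
    funcs = []
    for rows in groups:
        m = mean_vec(rows)
        # weights = S^-1 * class mean
        w = [s_inv[i][0] * m[0] + s_inv[i][1] * m[1] for i in range(2)]
        # constant = -1/2 * mean' S^-1 mean + ln(prior)
        c = -0.5 * (w[0] * m[0] + w[1] * m[1]) + log(len(rows) / total)
        funcs.append((w, c))
    return funcs


def classify(funcs, x):
    """Index of the class whose classification function scores highest."""
    scores = [w[0] * x[0] + w[1] * x[1] + c for w, c in funcs]
    return scores.index(max(scores))


# Hypothetical [total logins, collaborative grade] per student.
achievers = [[20, 9], [24, 8], [18, 8], [22, 9]]
non_achievers = [[8, 4], [12, 5], [6, 5], [10, 4]]
funcs = fit_lda([achievers, non_achievers])
print(classify(funcs, [21, 8.5]))  # 0 -> achiever
```

Each class receives its own linear function, and a student is assigned to the class with the higher score. This has the same structure as the achiever and non-achiever functions reported in Eq. (2) and (3).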

4. Results

Linear Discriminant Analysis (LDA) was applied to the engagement data of our online theatre course to identify strong predictors of achievers. The resulting prediction model achieved strong performance across several evaluation metrics:

Accuracy: 90%. This means that 90% of all predictions, both positive and negative, were correct, which demonstrates strong overall performance.

Specificity: 80%. Specificity measures how well the model identifies true negatives: 80% of the negative cases were correctly classified, while 20% were misclassified as positives.

Correct Classification Rate (CCR): 88%. The CCR provides a balanced view of performance across classes and is often calculated as the average of sensitivity (true positive rate) and specificity. Under that definition, a CCR (88%) that exceeds the specificity (80%) implies that the sensitivity is higher than the specificity. The model is therefore stronger at identifying positive cases than negative ones, although overall classification performance remains high.

The LDA model performs well overall, achieving high accuracy and a high balanced classification rate. It is particularly effective at detecting positive cases and performs less well on negative cases, which suggests room for improvement in reducing false positives if necessary.
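If the CCR is taken as the average of sensitivity and specificity (balanced accuracy), as described above, the two reported values determine the sensitivity:

$$
\mathrm{CCR} = \frac{\mathrm{Sensitivity} + \mathrm{Specificity}}{2}
\quad\Longrightarrow\quad
\mathrm{Sensitivity} = 2 \times 0.88 - 0.80 = 0.96
$$

This agrees with the 96% sensitivity obtained from the classification table (Table 6).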

The model’s coefficients (derived from the statistically significant engagement metrics) are the coefficients of the linear discriminant functions used in the classification process.

Table 4 lists these coefficients.

Fisher’s discriminant functions:

Achievers:

$$f_{\text{Achievers}}(x) = 0.75 \times (\text{Total Logins}) + 0.85 \times (\text{Collaborative Assignment Grade}) + 32.456 \tag{2}$$

Non-Achievers:

$$f_{\text{Non-Achievers}}(x) = 0.45 \times (\text{Total Logins}) + 0.50 \times (\text{Collaborative Assignment Grade}) + 34.025 \tag{3}$$
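The following sketch shows how Eq. (2) and (3) are applied. It scores a student with both functions and assigns the class with the higher value. The function names are hypothetical. The text does not state the scale of the inputs (raw counts, grades, or standardized values), so the student values are purely illustrative.

```python
# Apply the two Fisher classification functions, Eq. (2) and Eq. (3).
# Assumption: inputs are on the (unstated) scale used to fit the model.


def f_achiever(total_logins: float, collab_grade: float) -> float:
    """Classification function for Achievers, Eq. (2)."""
    return 0.75 * total_logins + 0.85 * collab_grade + 32.456


def f_non_achiever(total_logins: float, collab_grade: float) -> float:
    """Classification function for Non-Achievers, Eq. (3)."""
    return 0.45 * total_logins + 0.50 * collab_grade + 34.025


def predict(total_logins: float, collab_grade: float) -> str:
    """Assign the class with the larger score (ties go to Non-Achiever)."""
    a = f_achiever(total_logins, collab_grade)
    n = f_non_achiever(total_logins, collab_grade)
    return "Achiever" if a > n else "Non-Achiever"


print(predict(20, 8))  # Achiever (54.256 vs 47.025)
print(predict(2, 1))   # Non-Achiever (34.806 vs 35.425)
```

Subtracting Eq. (3) from Eq. (2) gives an equivalent single rule: a student is classified as an achiever when 0.30 × Total Logins + 0.35 × Collaborative Assignment Grade > 1.569.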

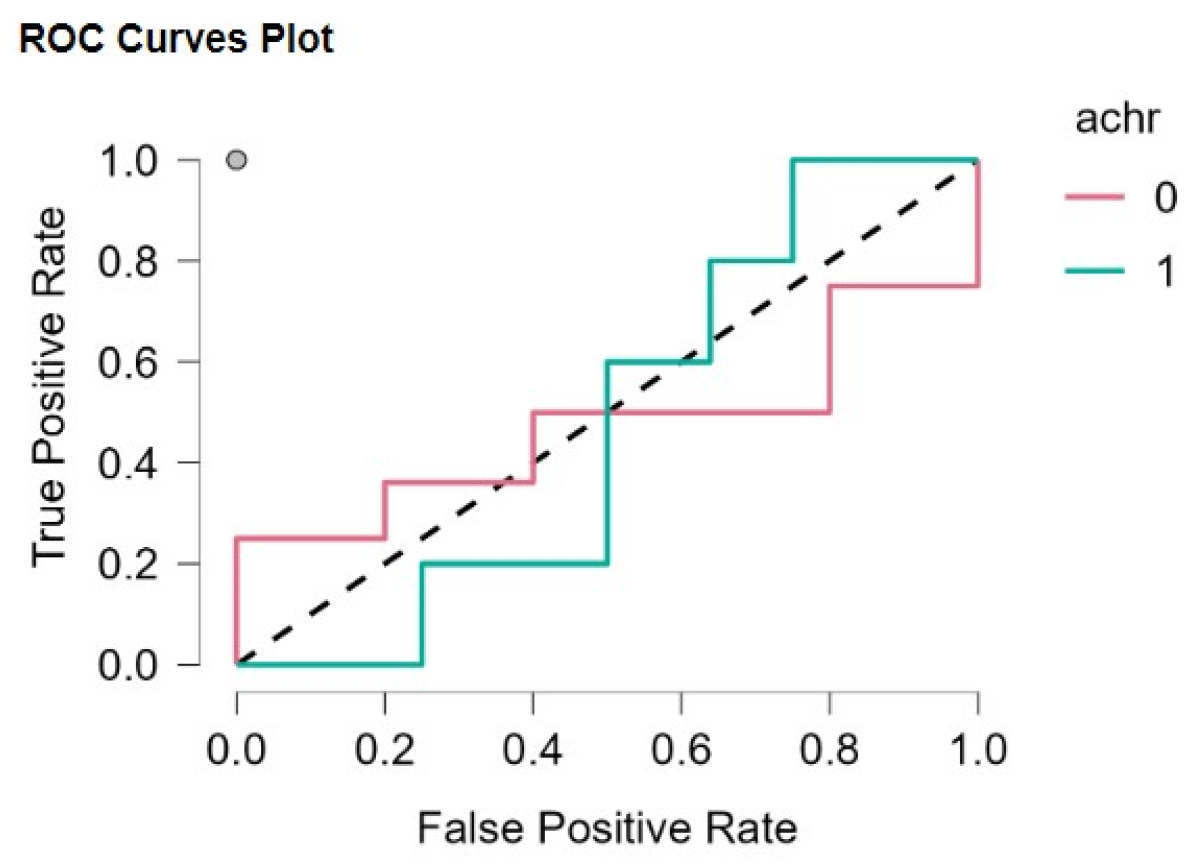

A receiver operating characteristic (ROC) analysis can illustrate how well the discriminant functions separate the classes. In our case, the ROC analysis yielded a high area under the curve (AUC), confirming strong predictive power.

Figure 2 shows the ROC curve.

Figure 3 illustrates a high true positive rate (sensitivity) even at low false positive rates. The eigenvalue and the canonical correlation coefficient also reveal important aspects of the model’s performance. As shown in Table 5, the high eigenvalue (λ = 2.60) and canonical correlation (Rc ≈ 0.85) confirm strong class separation, and both support the robustness of the model. The squared canonical correlation (Rc² ≈ 0.72), rather than Rc itself, gives the proportion of variance in the discriminant scores explained by group membership, which is about 72%.
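For a two-group LDA with a single discriminant function, the eigenvalue and the canonical correlation are related by a standard identity. This allows the two values in Table 5 to be cross-checked:

$$
R_c = \sqrt{\frac{\lambda}{1 + \lambda}} = \sqrt{\frac{2.60}{3.60}} \approx 0.85,
\qquad
R_c^{2} = \frac{\lambda}{1 + \lambda} \approx 0.72
$$

The reported Rc ≈ 0.85 is therefore consistent with λ = 2.60.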

Table 6 shows the classification outcome for one hundred randomly selected students (for simplicity, 50 achievers and 50 non-achievers).

The classification rates derived from the classification table (Table 6) are the following:

Classification rates:

- Achievers: 48/50 = 96% (sensitivity)

- Non-Achievers: 40/50 = 80% (specificity)

- Overall accuracy: (48 + 40)/100 = 88% (matches the Correct Classification Rate)
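The rates above can be recomputed from the Table 6 counts with a short sketch. Achievers are treated as the positive class, so the 2 misclassified achievers are false negatives and the 10 misclassified non-achievers are false positives. The function name is hypothetical. Precision is included only to show the cost of those 10 false positives.

```python
# Recompute classification rates from 2x2 confusion-matrix counts.
# Positive class = Achievers; counts taken from the text (Table 6).


def rates(tp, fn, tn, fp):
    """Return common classification rates for a 2x2 confusion matrix."""
    sensitivity = tp / (tp + fn)  # true positive rate
    specificity = tn / (tn + fp)  # true negative rate
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "balanced_accuracy": (sensitivity + specificity) / 2,
        # share of predicted achievers who really are achievers
        "precision": tp / (tp + fp),
    }


for name, value in rates(tp=48, fn=2, tn=40, fp=10).items():
    print(f"{name}: {value:.3f}")
```

The sample is balanced (50 achievers and 50 non-achievers), so overall accuracy and balanced accuracy coincide at 0.88. On an imbalanced sample the two would generally differ, which matters when the CCR is defined as balanced accuracy.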

The classification rates also indicate that our model performs better at classifying achievers. It is powerful at identifying achievers (96% sensitivity) but weaker for non-achievers (80% specificity). The AUC equals the probability that a randomly chosen achiever has a higher discriminant score than a randomly chosen non-achiever. Combined with the classification rates, the high AUC therefore indicates that the LDA model ranks achievers above non-achievers with high probability [50,51,52,53].
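The rank-based (Mann–Whitney) estimator makes the probabilistic reading of the AUC concrete. It compares every achiever's discriminant score with every non-achiever's score and counts the share of pairs in which the achiever scores higher, with ties counting as one half. The scores below are hypothetical, and the study's AUC value is not reproduced here.

```python
# Rank-based AUC: P(random achiever score > random non-achiever score).
# Ties count as one half. Scores are hypothetical discriminant scores.


def auc(pos_scores, neg_scores):
    """Mann-Whitney estimate of the area under the ROC curve."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


achiever_scores = [2.1, 1.7, 0.9, 1.4]
non_achiever_scores = [-1.2, 0.3, 1.0, -0.5]
print(auc(achiever_scores, non_achiever_scores))  # 0.9375 (15 of 16 pairs)
```

The double loop is O(n·m), which is adequate for a class-sized sample. For large samples, a sort-based rank computation gives the same value more efficiently.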

The verification of the LDA assumptions further supports the model’s robustness. LDA relies on three key assumptions [54,55,56]:

- Multivariate normality: features are normally distributed within each class.

- Homoscedasticity: covariance matrices are equal across classes.

- Low multicollinearity: features are not highly correlated.
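For the third assumption, a quick check with two predictors (as in Eq. (2) and (3)) is their Pearson correlation r. With exactly two predictors, each variance inflation factor (VIF) reduces to 1/(1 − r²). This is a generic sketch with hypothetical names and data. The text does not state which multicollinearity diagnostic or cut-off the study used.

```python
# Multicollinearity check for two predictors: Pearson r and VIF.
# Assumption: generic diagnostic, not necessarily the study's procedure.
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def vif_two_predictors(x, y):
    """VIF shared by both predictors when only two are in the model."""
    r = pearson(x, y)
    return 1.0 / (1.0 - r * r)


# Hypothetical per-student values.
logins = [12, 15, 9, 20, 14, 11]
collab_grade = [7, 6, 8, 7, 5, 9]
print(pearson(logins, collab_grade), vif_two_predictors(logins, collab_grade))
```

VIF values close to 1 indicate little shared variance. Commonly cited rules of thumb flag VIFs above about 5 or 10, but these cut-offs are conventions rather than fixed rules.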

Table 7 summarizes the verification of the LDA assumptions, and Table 8 presents the covariance matrices.

Table 8 is consistent with the outcome of Box’s M test (p = 0.12), which indicates no significant difference between the class covariance matrices and thus supports the homoscedasticity assumption. The standardization process reveals another important aspect of the model’s potential: standardization (z-score scaling) was applied, and Table 9 provides the feature summary before and after standardization.
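The following is a minimal sketch of z-score standardization. It assumes the sample standard deviation (n − 1 divisor), since the text does not specify which variant was used. The function names and data are hypothetical. When the model scores new students, the mean and standard deviation estimated on the training data should be reused rather than recomputed, so the sketch separates fitting from transforming.

```python
# z-score standardization: subtract the mean, divide by the standard deviation.
# Assumption: sample standard deviation (n - 1); hypothetical data.
from statistics import mean, stdev


def fit_scaler(values):
    """Estimate the mean and sample standard deviation of a feature."""
    return mean(values), stdev(values)


def zscores(values, center, scale):
    """Standardize values with a previously fitted center and scale."""
    return [(v - center) / scale for v in values]


train_logins = [8, 12, 20, 24]
center, scale = fit_scaler(train_logins)
print(zscores(train_logins, center, scale))
print(zscores([16], center, scale))  # [0.0]: 16 equals the training mean
```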

The key findings of the standardization process are:

1. Standardization improved separation:
   - The eigenvalue (λ) increased from 4.2 (raw data) to 5.51 (standardized).

2. Classification accuracy improved:
   - Raw data: 85% → standardized: 88% (a gain of 3 percentage points).

Table 10 summarizes the benefits of standardization.

Standardization therefore improved separation, accuracy, and specificity. It is also important to note that normalization was not applied. Standardization was preferred for LDA to preserve the features’ Gaussian properties.

In summary, the model performs well on every evaluation metric. Both the verification of the LDA assumptions and the improvements achieved in the standardization phase further support its robustness.

5. Discussion

Our study presents a robust prediction model for achievers (see Section 4), comparable to the one presented in a prior LDA-based study [29], and it surpasses logistic regression models in similar contexts [16]. Total logins and collaborative assignment grades are engagement metrics. Since these factors proved to be strong predictors of achievers, the first assumption is verified. In our study, engagement factors are associated with students’ performance, a finding that previous studies also report, especially for students at risk [33,34,57].

In parallel, the collaborative assignment’s grade, which proved to be among the strong predictors of students’ high achievement, is a core component of sustainable pedagogy, reflecting the students’ competency to develop problem-solving strategies and reinforce their critical thinking. In this sense, the second assumption is verified.

The results highlight two critical insights for educators:

One study focuses on LMS logins but omits collaborative tasks, achieving lower specificity: 75% vs. 80% in our model [16]. In parallel, another study uses time-on-task as a predictor but does not contextualize findings within sustainable pedagogy [29].

Moreover, in terms of ESD principles, theory resources (designed to help students cultivate critical thinking) were the predominant predictors of students’ high achievement in one study [24], but this is not the case in our work. Conversely, the collaborative assignment did not affect students’ achievement in that study, yet it proved to be a decisive predictor in ours. Therefore, in our study, a factor within the field of sustainable education was the predominant predictor of students’ achievement.

In terms of learning design, the results indicate that sustainable education components affect students’ high performance. Therefore, educators are encouraged to design courses around sustainable education principles and then attempt to predict achievers using models built on engagement metrics. It is also worth underlining that sustainable education components proved to be decisive predictors in an online theatre course. This encourages theatre educators to design their courses according to ESD principles and to adapt the course structure for implementation through an e-learning platform.

Regarding the educational value of the results, it is important to point out that videos watched did not emerge as a predictor of students’ high achievement. This indicates that digital resources did not play a significant role in the prediction process in our study. Digital media is a core component of modern theatre pedagogy [12,58], yet our prediction model did not highlight this key element. More studies are needed to generalize this finding, since prediction models are course-oriented. Moreover, our model works primarily for achievers, so it is not clear whether digital media could serve as predictors of students at risk. The literature does not report that this factor affects students’ critical performance [33,34]. However, since risk models are also course-oriented, the possibility that digital media could emerge as a risk factor in a specific theatre course cannot be ruled out. Additionally, other key theatre elements, such as digital narration and theatre games, were not associated with achievers in our study. Our model should, however, be applied to many theatre courses before this finding can be generalized.

However, the findings do not necessarily demonstrate that achievers attained the learning goals. Our research aims to identify and predict achievers, not to examine the success of the course delivery. In one sense, students who achieve a grade of seven or higher could be viewed as meeting the course’s pedagogical learning objectives. Nevertheless, the rate at which learning objectives were achieved is not presented in our study. Associating achievers’ predictors with learning objectives could be another expansion of our research.

Although studies suggest that hybrid theatre courses are educationally preferable [20,22], our study showed that online theatre courses can be used on their own to predict students’ high achievement. Since the predictors derive from behavioural engagement metrics, the prediction process can be carried out in any online course and is not affected by the way the learning goals have been implemented. Therefore, our method can be used in any online course, which indicates the scalability and interoperability of our framework.

One important limitation of our model, compared with similar prediction attempts in the literature, is that it forecasts achievers after course completion. This leaves less room for early intervention [8,9]. However, the discriminant scores can be calculated at any time, so our model could support early intervention. Nevertheless, it has not yet been assessed for early intervention purposes.

Another limitation of our study lies in the intrinsic drawbacks of most educational forecast models, which depend heavily on course structure and a specific set of predictors [16,17,23,25,26,27,28,35,42,44,47,48,49]. Nevertheless, in specific studies, the forecast model’s performance remained high across two runs despite the use of different predictors [16,17,26,27,28,35]. Hence, the classification dynamics of the forecast model were unaffected by the variability of predictors across runs. However, further studies are needed to strengthen this argument.

Moreover, the possible effect of students’ low digital literacy has not been investigated. It is therefore not clear whether digital literacy accounts for the low engagement of some students (reflected by logins and activity completion), especially in the case of students at risk. A pre-questionnaire (delivered before the course starts) could assess students’ digital literacy skills and be linked to risk factors and predictors, constituting another research expansion.

In parallel, demographic features are not included in our dataset. Our prediction model has therefore not been evaluated with respect to gender inclusivity. However, there are no significant studies in the literature that associate educational risk factors and predictors with demographic features [34]. The reported risk and prediction models are based on behavioural engagement metrics. Only studies examining student retention include demographic data among the risk factors, and they do not incorporate these data into a fully developed risk or prediction model.

6. Conclusions

The paper presented an integrated methodology to construct an efficient prediction model for achievers based on a risk management framework. An online theatre course revolving around sustainability issues (for example, disaster management) served this purpose. The paper also demonstrated how an online theatre course aligns with ESD principles. In parallel, our study indicated how engagement metrics can be elicited in such a course, and how these factors can be analyzed to predict achievers and students at risk. The paper highlighted the LDA’s potential in generating efficient prediction models and demonstrated its application in an online course to execute the prediction process. The contribution of our findings to the field of sustainable education is summarized in the points below:

(a) Online courses (such as theatre courses) can be designed to meet sustainable education standards, and (b) sustainable education components, such as collaborative assignments, proved to be strongly associated with students’ performance. Therefore, the study indicates that including collaborative assignments in the learning activities of sustainable education courses is of high educational merit.

In addition, our study contributes to the field of learning analytics because our framework can facilitate the collection of engagement metrics (by learning analytics tools) in any online course. Our study therefore shows that a calibrated risk management framework (such as the one presented here) can be easily combined with the learning analytics output of e-learning platforms. This fills a gap in similar research, where the risk identification process is presented in a fragmented way rather than as part of an integrated framework.

To ensure the course’s sustainability, our prediction model could be assessed in multiple theatre courses (with varying structures) to verify that it performs well when predictors vary. After the model has been validated in different courses, the remedial action (generating alert messages) will be determined. The model could then serve as a warning system for non-achievers. This work is in progress.

Moreover, it would be of high scientific merit to examine how the prediction model operates on groups’ engagement data, to assess whether it remains effective as new predictors emerge. It is essential to emphasize that our prediction model applies to any course delivered at any educational level, provided that educators or administrators upload a portion of the course to an e-learning platform to obtain engagement metrics. In this case, our model can also predict achievers in blended courses. Statistical experts or risk analysts could compare our LDA model’s outcome with the classification outcome of other risk identification methods (such as regression and classifiers) to assess its potential in predicting students at risk. Similarly, managers could use our LDA model to predict achievers in online training courses. However, our model does not apply to purely conventional teaching, since conventional teaching does not allow engagement metrics to be measured. For hybrid courses, identifying predictors associated with the conventional teaching component would be of high scientific merit. This could be another area for expanding our research.

A validated prediction model in any course based on sustainable education principles holds value for a range of stakeholders.

Primarily, teachers (at any educational level) could use the model to get an overall picture of their class and to identify students who need timely intervention and support. In our study, the discriminant functions were calculated after the first course run. However, educators can also calculate them at any point during the course to monitor students’ progress, identify students who need extra help, and develop an effective strategy for remedial action.

Additionally, by drawing on the predominant predictors, our model could help educational stakeholders identify areas for improvement in their learning design. The strong predictors indicate the elements of sustainable education and theatre pedagogy that are most impactful (contributing most to students’ high achievement). The weak predictors highlight educational and pedagogical elements that could be strengthened so that the learning design better meets students’ needs.

If demographic data and inclusivity characteristics were added as model coefficients, our model could benefit universities, colleges, and online academies by helping them monitor student retention and identify factors that contribute to student dropout. It is important to underline a limitation of LDA and of predictive analytics in general: these methods indicate strong and weak predictors, but they do not necessarily propose ways to minimize the effect of the weak predictors or to maximize the potential of the strong ones. Student retention could therefore be managed more effectively if our prediction model were combined with dynamic optimization methods.

After this process, our model could become the pillar on which educational decision makers design a generic educational policy. By incorporating socio-economic data into our model and integrating its outcome with other efficient optimization methods, it would be possible to predict whether a specific educational strategy would favour achievers. The same holds for students at risk.

The predominant predictors highlighted in our findings are meaningful engagement metrics associated with students’ high achievement. If our model is verified across multiple courses, a list of meaningful engagement metrics can be created. Educational stakeholders, e-learning system administrators, and course developers can then use this list to calibrate the engagement metrics provided by learning analytics tools, highlighting the most meaningful indicators and identifying areas for improvement. This research expansion could lead to improved Moodle learning analytics tools and, in turn, to better warning systems and more effective remedial action. Our team is currently verifying the model in at least two different courses to ensure its high performance when predictors vary. In parallel, our team is examining how inclusivity features, demographic characteristics, and socio-economic factors could serve as additional model coefficients to expand the range of its uses.

In a nutshell, our study presented a robust LDA prediction model for achievers, based on a calibrated risk management framework that can benefit many educational stakeholders. The improvements mentioned above (as research expansions) can increase the robustness and versatility of our model. Our team is working on these improvements and continuously evaluates the model to maintain its applicability.